Abstract

A High Altitude Platform Station (HAPS) can facilitate high-speed data communication over wide areas using high-power line-of-sight communication; however, it can significantly interfere with existing systems. Given spectrum sharing with existing systems, the HAPS transmission power must be adjusted to satisfy the interference requirement for incumbent protection. However, excessive transmission power reduction can lead to severe degradation of the HAPS coverage. To solve this problem, we propose a multi-agent Deep Q-learning (DQL)-based transmission power control algorithm to minimize the outage probability of the HAPS downlink while satisfying the interference requirement of an interfered system. In addition, a double DQL (DDQL) is developed to prevent the potential risk of action-value overestimation from the DQL. With a proper state, reward, and training process, all agents cooperatively learn a power control policy for achieving a near-optimal solution. The proposed DQL power control algorithm performs equal to or close to the optimal exhaustive search algorithm for varying positions of the interfered system. The proposed DQL and DDQL power control algorithms yield the same performance, which indicates that action-value overestimation does not adversely affect the quality of the learned policy.

1. Introduction

A High Altitude Platform Station (HAPS) is a network node operating in the stratosphere at an altitude of approximately 20 km. The International Telecommunication Union (ITU) defines a HAPS in Article 1.66A as “A station on an object at an altitude of 20 to 50 km and a specified, nominal, fixed point relative to the Earth”. Various studies have been performed on HAPS in recent years, and the commercial applications of HAPS have significantly increased [1]. In addition, the HAPS has potential as a significant component of wireless network architectures [2]. It is also an essential component of next-generation wireless networks, with considerable potential as a wireless access platform for future wireless communication systems [3,4,5].

Because the HAPS is located at high altitudes ranging from 20 to 50 km, the HAPS-to-ground propagation generally experiences lower path loss and a higher line-of-sight probability than typical ground-to-ground propagation. Thus, the HAPS can provide a high data rate for wide coverage; however, it is likely to interfere with various other terrestrial services, e.g., fixed, mobile, and radiolocation. The World Radiocommunication Conference 2019 (WRC-19) adopted a HAPS as the IMT Base Station (HIBS) in the frequency bands below 2.7 GHz previously identified for IMT by Resolution 247 [6], which addresses the potential interference of HAPS with an existing service. In such a situation, if the existing service is not safe from HAPS interference, the two systems cannot coexist. Therefore, the HAPS transmitter is requested to reduce its transmission power to satisfy the interference-to-noise ratio (INR) requirement for protecting the receiver of the existing service. However, if the HAPS transmission power is excessively reduced, the signal-to-interference-plus-noise ratio (SINR) of the HAPS downlink decreases; thus, the outage probability may exceed the desired level. Herein, a HAPS transmission power control algorithm is proposed that aims to minimize the outage probability of the HAPS downlink while satisfying the INR requirement for protecting incumbents.

1.1. Related Works

Studies have been performed on improving the performance of HAPS. In [7], resource allocation for an Orthogonal Frequency Division Multiple Access (OFDMA)-based HAPS system that uses multicasting in the downlink to maximize the number of user terminals by maximizing the radio resources was studied. The authors of [8] proposed a wireless channel allocation algorithm for a HAPS 5G massive multiple-input multiple-output (MIMO) communication system based on reinforcement learning. Combining Q-learning and backpropagation neural networks allows the algorithm to learn intelligently for varying channel load and block conditions. In [9], a criterion for determining the minimum distance in a mobile user access system was derived, and a channel allocation approach based on predicted changes in the number of users and the call volume was proposed.

Additionally, spectrum sharing studies on HAPS have been performed. In [10], a spectrum sharing study was conducted to share a fixed service using a HAPS with other services in the 31/28-GHz band. Interference mitigation techniques were introduced, e.g., increasing the minimum operational elevation angle or improving the antenna radiation pattern to facilitate sharing with other services. In addition, the possibility of dynamic channel allocation was analyzed. In [11], sharing between a HAPS and a fixed service in the 5.8-GHz band was investigated using a coexistence methodology based on a spectrum emission mask.

In contrast to previous studies in which HAPS communication improvement and spectrum sharing were dealt with separately, in the present study, a combination of spectrum sharing with other systems and HAPS downlink coverage improvement is considered. In this regard, this study is more advanced than previous HAPS-related studies.

Deep Q-learning (DQL) is a reinforcement learning algorithm that applies deep neural networks to reinforcement learning to solve complex problems in the real world. DQL is widely used in various fields, including UAV, drone, and HAPS. In [12], the optimal UAV-BS trajectory was presented using a DQL for optimal placement of UAVs, and the author of [13] used a DQL to determine the optimal link between two UAV nodes. In [14], a DQL is used to find the optimal flight parameters for the collision-free trajectory of the UAV. In [15], two-hop communication was considered to optimize the drone base station trajectory and improve network performance, and a DQL was used to solve the joint two-hop communication scenario. In [16], a DQL was used for multiple-HAPS coordination for communications area coverage. A Double Deep Q-learning (DDQL) is an algorithm developed to prevent the overestimation of a DQL and shows better performance than the DQL in various fields [17].

1.2. Contributions

The contributions of the present study are as follows. (1) For the first time, a multi-agent DQL was used to improve the HAPS outage performance and solve the problem of spectrum sharing with existing services. (2) We defined the power control optimization problem to minimize the outage probability of the HAPS downlink under the interference constraint for protecting the existing system. The state and reward for the training agent were designed to consider the objective function and constraints of the optimization problem. (3) Because the HAPS has a multicell structure, the number of power combinations increases exponentially as the number of cells and power levels increases. Thus, the optimal exhaustive search method requires an impractically long computation time to solve the multicell power optimization problem. The proposed DQL algorithm performs comparably to an optimal exhaustive search with a feasible computation time. (4) Even for varying positions of the interfered system, the proposed DQL produces a proper power control policy, maintaining stable performance. (5) Comparing the proposed DQL algorithm with the DDQL algorithm shows no performance degradation due to overestimation in the proposed DQL.

The remainder of this paper is organized as follows. Section 2 presents the system model, including the system deployment model, HAPS model, interfered system model, and path loss model. In Section 3, the downlink SINR and INR are calculated. In Section 4, a DQL-based HAPS power control algorithm is proposed. Section 5 presents the simulation results, and Section 6 concludes the paper.

2. System Model

2.1. System Deployment Model

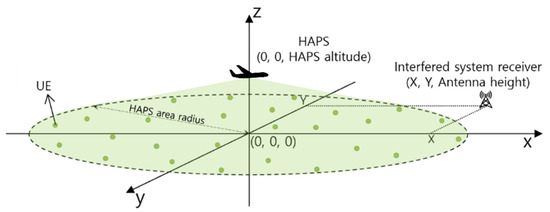

HAPS communication networks are assumed to consist of a single HAPS, multiple ground user equipment (UE) devices (referred to as UEs hereinafter), and a ground interfered receiver. The HAPS, UEs, and interfered receiver are distributed in the three-dimensional Cartesian coordinate system, as shown in Figure 1. The HAPS antenna is located above the origin at the HAPS altitude, and the interfered receiver antenna is located at horizontal position (X, Y) at its antenna height. The UEs, which share a common antenna height, are uniformly distributed within the circular HAPS area.

Figure 1.

System deployment model.

2.2. HAPS Model

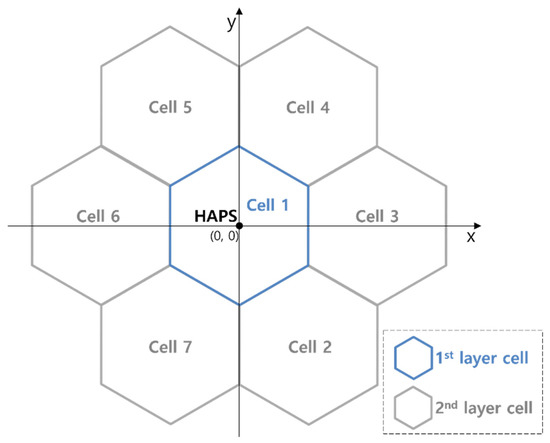

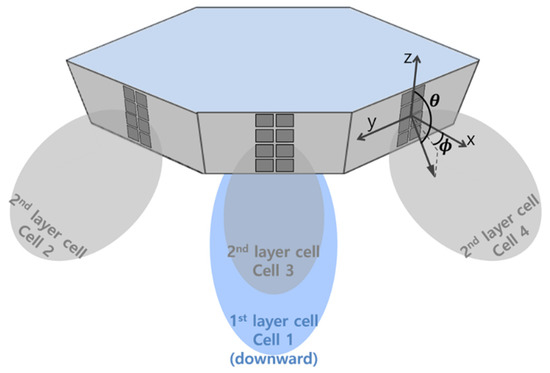

We modeled the HAPS cell deployment and system parameters with reference to the working document for a HAPS coexistence study performed in preparation for WRC-23 [18]. As shown in Figure 2, a single HAPS serves seven cells: one 1st layer cell and six 2nd layer cells. The six cells of the 2nd layer are arranged at intervals of 60° in the horizontal direction. Figure 3 presents a typical HAPS antenna design for seven-cell structures [4], where seven phased-array antennas conduct beamforming toward the ground to form seven cells, as shown in Figure 2. The 1st layer cell has an antenna tilt of 90°, i.e., perpendicular to the ground; the 2nd layer cells have an antenna tilt of 23°.

Figure 2.

HAPS seven-cell layout.

Figure 3.

Typical antenna structure for multi-cell HAPS communication.

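The antenna pattern of the HAPS was designed using the antenna gain formula presented in Recommendation ITU-R M.2101 [19]. The transmitting antenna gain is calculated as the sum of the gain of a single element and the beamforming gain of a multi-antenna array. The single element antenna gain is determined by the azimuth angle ($\varphi$) and the elevation angle ($\theta$) between the transmitter and receiver and is calculated as follows:

$$A_E(\varphi, \theta) = G_{E,\max} - \min\left\{-\left[A_{E,H}(\varphi) + A_{E,V}(\theta)\right],\ A_m\right\} \tag{1}$$

where $G_{E,\max}$ represents the maximum antenna gain of a single element, $A_{E,H}(\varphi)$ represents the horizontal radiation pattern calculated using Equation (2), and $A_{E,V}(\theta)$ represents the vertical radiation pattern calculated using Equation (3).

$$A_{E,H}(\varphi) = -\min\left[12\left(\frac{\varphi}{\varphi_{3\mathrm{dB}}}\right)^2,\ A_m\right] \tag{2}$$

Here, $\varphi_{3\mathrm{dB}}$ represents the horizontal 3 dB beamwidth of a single element, and $A_m$ represents the front-to-back ratio.

$$A_{E,V}(\theta) = -\min\left[12\left(\frac{\theta - 90^\circ}{\theta_{3\mathrm{dB}}}\right)^2,\ SLA_v\right] \tag{3}$$

Here, $\theta_{3\mathrm{dB}}$ represents the vertical 3 dB beamwidth of a single element, and $SLA_v$ represents the vertical side-lobe attenuation limit.

The transmitting antenna gain of the HAPS is calculated using the antenna arrangement and spacing, as well as the target beamforming direction. The gain for beam $i$ is calculated as follows:

$$A_{A,\mathrm{Beam}\,i}(\varphi, \theta) = A_E(\varphi, \theta) + 10\log_{10}\left(\left|\sum_{m=1}^{N_H}\sum_{n=1}^{N_V} w_{i,n,m}\, v_{n,m}\right|^2\right) \tag{4}$$

where $N_H$ and $N_V$ represent the number of antennas in the horizontal and vertical directions, respectively. $v_{n,m}$ is the superposition vector that overlaps the beams of the antenna elements, which is calculated using Equation (5), and $w_{i,n,m}$ is the weight that directs the antenna element in the beamforming direction, which is calculated using Equation (6).

$$v_{n,m} = \exp\left(j 2\pi\left[(n-1)\frac{d_V}{\lambda}\cos\theta + (m-1)\frac{d_H}{\lambda}\sin\theta\sin\varphi\right]\right) \tag{5}$$

Here, $d_H$ and $d_V$ represent the intervals between the horizontal and vertical antenna arrays, respectively, and $\lambda$ represents the wavelength.

$$w_{i,n,m} = \frac{1}{\sqrt{N_H N_V}}\exp\left(j 2\pi\left[(n-1)\frac{d_V}{\lambda}\sin\theta_{i,\mathrm{etilt}} - (m-1)\frac{d_H}{\lambda}\cos\theta_{i,\mathrm{etilt}}\sin\varphi_{i,\mathrm{escan}}\right]\right) \tag{6}$$

Here, $\theta_{i,\mathrm{etilt}}$ and $\varphi_{i,\mathrm{escan}}$ represent the $\theta$ and $\varphi$ of the main beam direction, respectively.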
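The element pattern and array beamforming gain described above can be illustrated with a short Python sketch that follows the general form of the Recommendation ITU-R M.2101 antenna model. The function names, argument names, and the numerical values in the usage note are assumptions made for illustration and are not taken from the HAPS parameter tables; element spacings are expressed in wavelengths.

```python
import cmath
import math


def element_gain_db(phi_deg, theta_deg, g_max_db, phi_3db, theta_3db, a_m_db, sla_v_db):
    """Single-element gain (dBi) in the form of the M.2101 element pattern."""
    a_h = -min(12.0 * (phi_deg / phi_3db) ** 2, a_m_db)               # horizontal cut
    a_v = -min(12.0 * ((theta_deg - 90.0) / theta_3db) ** 2, sla_v_db)  # vertical cut
    return g_max_db - min(-(a_h + a_v), a_m_db)


def array_gain_db(phi_deg, theta_deg, n_h, n_v, d_h, d_v, tilt_deg, scan_deg):
    """Beamforming gain (dB) of an n_h x n_v array steered to (tilt, scan)."""
    phi, theta = math.radians(phi_deg), math.radians(theta_deg)
    tilt, scan = math.radians(tilt_deg), math.radians(scan_deg)
    total = 0j
    for m in range(n_h):          # horizontal element index (m - 1 in the formula)
        for n in range(n_v):      # vertical element index (n - 1 in the formula)
            v = cmath.exp(2j * math.pi * (n * d_v * math.cos(theta)
                                          + m * d_h * math.sin(theta) * math.sin(phi)))
            w = cmath.exp(2j * math.pi * (n * d_v * math.sin(tilt)
                                          - m * d_h * math.cos(tilt) * math.sin(scan)))
            total += w * v / math.sqrt(n_h * n_v)
    # Floor the magnitude to avoid log10(0) in pattern nulls.
    return 10.0 * math.log10(max(abs(total) ** 2, 1e-12))


def composite_gain_db(phi_deg, theta_deg, element_kwargs, array_kwargs):
    """Transmit gain = single-element gain + array beamforming gain."""
    return (element_gain_db(phi_deg, theta_deg, **element_kwargs)
            + array_gain_db(phi_deg, theta_deg, **array_kwargs))
```

For example, `array_gain_db(0, 90, 4, 2, 0.5, 0.5, 0, 0)` evaluates an unsteered 4 × 2 array at boresight, where the beamforming gain equals 10·log10(8) dB under this model.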

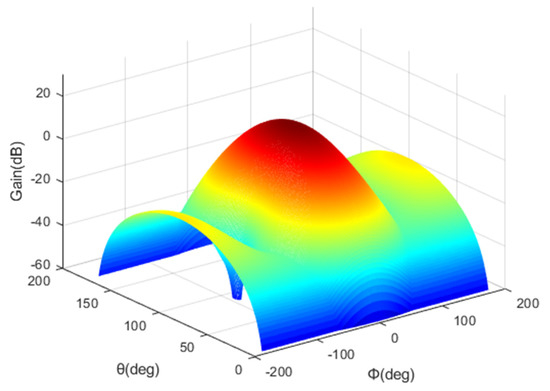

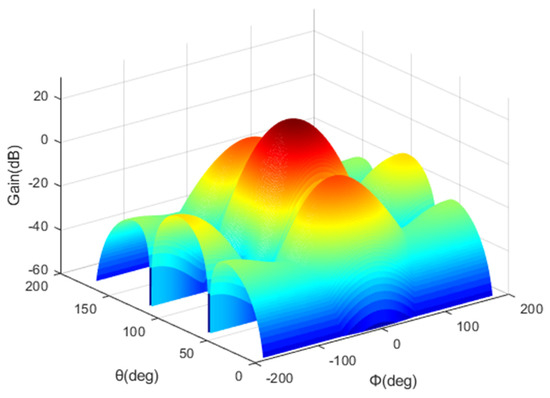

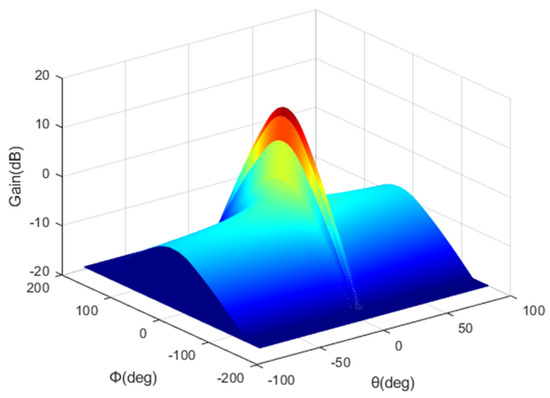

The 1st layer cell of the HAPS uses a 2 × 2 antenna array, and the 2nd layer cell uses a 4 × 2 antenna array. Figure 4 shows the antenna pattern of the 1st layer cell, and Figure 5 shows the antenna pattern of the 2nd layer cell.

Figure 4.

1st layer cell antenna pattern.

Figure 5.

2nd layer cell antenna pattern.

2.3. Interfered System Model

Various interfered systems, e.g., fixed, mobile, and radiolocation services, can be considered for the interference scenario involving a HAPS. We adopted a ground IMT base station (BS) for the interfered system, referring to the potential interference scenario [6]. The antenna pattern of the interfered system was applied by referring to Recommendation ITU-R F.1336 [20]. The receiving antenna gain is calculated as follows:

where represents the maximum gain in the azimuth plane; represents the relative reference antenna gain in the azimuth plane in the normalized direction of (, 0), which is calculated using Equation (8); and represents the relative reference antenna gain in the elevation plane in the normalized direction of (0, ), which is calculated using Equation (9). represents the horizontal gain compression ratio when the azimuth angle is shifted from 0° to , which is calculated using Equation (10).

Here, and are given by Equations (11) and (12), respectively; represents the 3 dB beamwidth in the azimuth plane; and is an azimuth pattern adjustment factor based on the leaked power. The relative minimum gain was calculated using Equation (13).

Returning to Equation (9), is given by Equation (14), and the 3-dB beamwidth in the elevation plane is calculated using Equation (15), where represents the maximum gain in the azimuth plane. In addition, is calculated using Equation (16), where is an elevation pattern adjustment factor based on the leaked power. was calculated using Equation (17), and the attenuation inclination factor was calculated using Equation (18). Figure 6 shows the antenna pattern of the interfered system calculated using Equation (7), which is the pattern for a typical terrestrial BS with a broad beamwidth in the azimuth plane but a narrow beamwidth in the elevation plane.

Figure 6.

Interfered system antenna pattern.

2.4. Path Loss Model

The path loss model of Recommendation ITU-R P.619 [21] was applied, following the working document for the HAPS coexistence study performed in preparation for WRC-23 [22]. The total path loss that occurs when the HAPS signal reaches the UE and the IMT BS is expressed as follows:

$$L = L_{fs} + A_{xp} + A_g + A_{bs} \tag{19}$$

where $L_{fs}$ represents the free-space path loss calculated using Equation (20), which occurs in a straight path from a transmitting antenna to a receiving antenna in a vacuum state, and $A_{xp}$, the depolarization attenuation, is assumed to be 3 dB. $A_g$ represents the attenuation loss due to atmospheric gases. $A_{bs}$ represents the beam-spreading loss, i.e., the loss due to the spread of the antenna beam. $A_g$ and $A_{bs}$ were calculated using the formulae in P.619.

$$L_{fs} = 92.45 + 20\log_{10}(f \cdot d) \tag{20}$$

Here, $f$ represents the carrier frequency (in GHz), and $d$ represents the distance (in km) between the transmitter and receiver.
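As a quick numerical check of the path loss terms described above, the following Python sketch evaluates the standard free-space path loss for a frequency in GHz and a distance in km, and then adds the remaining attenuation terms. The function names are hypothetical, and the gaseous and beam-spreading losses are passed in as inputs rather than computed, because their P.619 formulae are not reproduced here.

```python
import math


def free_space_path_loss_db(freq_ghz, dist_km):
    """Free-space path loss in dB for frequency in GHz and distance in km."""
    return 92.45 + 20.0 * math.log10(freq_ghz * dist_km)


def total_path_loss_db(freq_ghz, dist_km, gas_loss_db, beam_spreading_db, depol_db=3.0):
    """Total loss: free-space + depolarization (3 dB assumed) + gases + beam spreading."""
    return (free_space_path_loss_db(freq_ghz, dist_km)
            + depol_db + gas_loss_db + beam_spreading_db)
```

Doubling the distance adds about 6 dB of free-space loss, which is why the 2nd layer cells, with longer slant ranges, see a noticeably weaker signal than the cell directly below the HAPS.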

3. Calculation of Downlink SINR and INR

3.1. Calculation of Downlink SINR

The signal power that the UE receives from the HAPS transmission for each cell is calculated, as in Equation (21), from the HAPS transmission power for that cell, the transmitting antenna gain of that cell, the polarization gain, the receiving antenna gain, the ohmic loss, and the total path loss of Equation (19).

The UE receives signals from all cells and considers the remaining signals (except for the strongest signal) as interference. Equation (22) is used to calculate the signal and interference, and the receiver noise is calculated using Equation (23) from the Boltzmann constant, the noise temperature, the channel bandwidth, and the noise figure.

Finally, the downlink SINR is calculated as the ratio of the signal power to the sum of the interference and noise powers.
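The SINR procedure described above (strongest cell as the serving signal, all other cells as interference, thermal noise plus noise figure) can be sketched in Python as follows. The function names and the dBm-based interface are assumptions for illustration; the per-cell received powers are taken as given inputs.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K


def dbm_to_mw(p_dbm):
    return 10.0 ** (p_dbm / 10.0)


def mw_to_dbm(p_mw):
    return 10.0 * math.log10(p_mw)


def noise_power_dbm(temp_k, bandwidth_hz, noise_figure_db):
    """Receiver noise: 10*log10(k*T*B) converted to dBm, plus the noise figure."""
    return 10.0 * math.log10(BOLTZMANN * temp_k * bandwidth_hz) + 30.0 + noise_figure_db


def downlink_sinr_db(rx_powers_dbm, noise_dbm):
    """SINR of one UE given the power received from every cell (dBm)."""
    serving_idx = max(range(len(rx_powers_dbm)), key=rx_powers_dbm.__getitem__)
    interference_mw = sum(dbm_to_mw(p)
                          for i, p in enumerate(rx_powers_dbm) if i != serving_idx)
    denom_mw = interference_mw + dbm_to_mw(noise_dbm)
    return rx_powers_dbm[serving_idx] - mw_to_dbm(denom_mw)
```

With a single cell, the result reduces to the signal-to-noise ratio; with several cells of similar strength, interference rather than noise typically dominates the denominator.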

3.2. Calculation of INR

The interference power received by the interfered receiver from the HAPS transmitter serving each cell is calculated in the same manner as the received signal in Section 3.1, with the receiving antenna gain replaced by the antenna gain of the interfered receiver.

The aggregated interference power at the interfered receiver is the sum of the interference powers received from all cells.

Finally, after converting the aggregated interference into INR form in accordance with Equation (27) and comparing it with the protection criterion of the interfered receiver, it is possible to check whether the interfered receiver is protected from the interference of the HAPS.
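The protection check described above can be sketched in a few lines of Python: per-cell interference powers are summed in the linear domain, converted to an INR in dB, and compared with a threshold. The function names are hypothetical; the default threshold of −6 dB is the protection value used in the simulation section.

```python
import math


def aggregate_inr_db(interference_dbm_per_cell, noise_dbm):
    """INR (dB) from per-cell interference powers (dBm) and receiver noise (dBm)."""
    total_mw = sum(10.0 ** (p / 10.0) for p in interference_dbm_per_cell)
    return 10.0 * math.log10(total_mw) - noise_dbm


def is_protected(inr_db, inr_threshold_db=-6.0):
    """True when the aggregated INR does not exceed the protection criterion."""
    return inr_db <= inr_threshold_db
```

Because the sum is taken in linear units, two equal interferers raise the INR by about 3 dB relative to one of them alone, which matters when the receiver sits on a cell boundary.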

4. DQL-Based HAPS Transmission Power Control Algorithm

4.1. Problem Formulation

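To satisfy the INR requirement of the interfered system, the transmission power of the HAPS must be reduced. However, as the power of the HAPS is reduced, the SINR of the UE decreases, and the outage probability increases. Thus, the objective of this study was to find a HAPS transmission power set, i.e., one transmission power for each cell, that satisfies the INR requirement of the interfered system while minimizing the outage probability $p_{out}$. Denoting the power set by $\mathbf{P}$ and the INR protection criterion by $INR_{th}$, the optimization problem of the HAPS transmission power can be formulated as follows:

$$\min_{\mathbf{P}}\ p_{out}(\mathbf{P}) \quad \text{subject to} \quad INR(\mathbf{P}) \le INR_{th}$$

where $p_{out}(\mathbf{P})$ is determined by the number of UEs that do not satisfy the minimum required SINR for a given HAPS transmission power set $\mathbf{P}$.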
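As a minimal illustration of the objective, the outage probability can be computed as the fraction of UEs whose SINR falls below the minimum required SINR. Normalizing the outage count by the total number of UEs is an assumption of this sketch, as are the function and argument names.

```python
def outage_probability(ue_sinrs_db, sinr_min_db):
    """Fraction of UEs whose downlink SINR is below the required minimum."""
    if not ue_sinrs_db:
        raise ValueError("at least one UE SINR is required")
    n_out = sum(1 for s in ue_sinrs_db if s < sinr_min_db)
    return n_out / len(ue_sinrs_db)
```

For instance, with UE SINRs of −3, 0, 5, and 10 dB and a required SINR of 0 dB, only the first UE is in outage.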

4.2. Proposed Algorithm

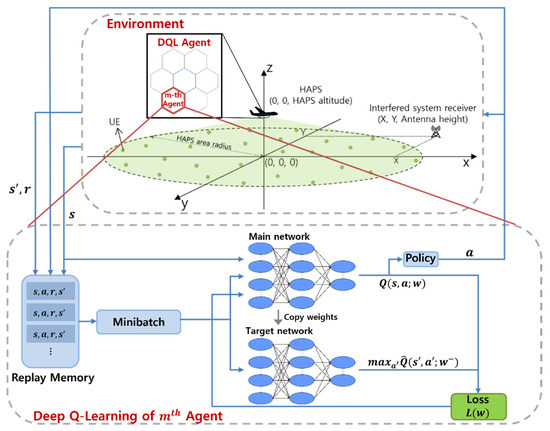

To control the HAPS transmission power, it is necessary to independently determine the power level of each cell. Accordingly, the total number of HAPS transmission power sets grows exponentially with the number of cells; for the seven-cell layout with five selectable power levels per cell, there are 5^7 = 78,125 power sets. Although an exhaustive search algorithm can be used to find optimal solutions, this incurs excessive complexity and a long computation time. To solve this problem, we propose a DQL-based power optimization algorithm that can find a near-optimal power set with low complexity. In the proposed DQL model, each agent functions as the power controller of a cell; accordingly, the number of agents equals the number of cells.
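The exhaustive search baseline mentioned above can be sketched as a brute-force loop over every power combination. The `evaluate` callback, which is assumed to return the INR in dB and the outage probability for a candidate power set, is a hypothetical stand-in for the system-level simulation.

```python
from itertools import product


def exhaustive_search(power_levels_per_cell, evaluate, inr_threshold_db):
    """Return the feasible power set with the lowest outage probability.

    power_levels_per_cell: one list of candidate powers (dBm) per cell.
    evaluate: hypothetical callback, power_set -> (inr_db, outage_probability).
    """
    best_set, best_outage = None, float("inf")
    n_evaluated = 0
    for power_set in product(*power_levels_per_cell):
        n_evaluated += 1
        inr_db, p_out = evaluate(power_set)
        # Keep only sets that protect the interfered receiver.
        if inr_db <= inr_threshold_db and p_out < best_outage:
            best_set, best_outage = power_set, p_out
    return best_set, best_outage, n_evaluated


# Seven cells with five levels each give 5**7 = 78125 candidate sets.
print(5 ** 7)
```

The number of evaluations is the product of the per-cell level counts, so each additional cell multiplies the search cost by the number of levels.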

The agent—the subject of learning—learns a deep neural network called Deep Q Network (DQN) and selects an action using this network. DQL is an improved Q-learning method. Q-learning is a method for selecting the best action in a specific state through the Q-table of a state-action pair. As the state–action space grows in Q-learning, creating a Q-table and finding the best policy become highly complex. In addition, the use of Q-learning is limited because learning in the Q-table format becomes more complex when multiple agents are used. In contrast, a DQL is a promising way to solve the curse of dimensionality by approximating a Q function using a deep neural network instead of a Q-table. The proposed algorithm uses a method in which each agent learns a policy based on its observation and action while treating all other agents as part of the environment to solve the multiple-agent problem.

The basic DQL parameters (state, action, and reward) are presented below. Each agent learns the policy independently using the training data at each timestep. The state of each agent comprises the interferences that the agent causes to UEs located at the centers of the other cells (six values for the seven-cell layout) and the agent's interference to the interfered receiver.

The action space of an agent is one of two power sets (unit: dBm): the agent of the 1st layer cell selects its action from one set, and the agents of the 2nd layer cells select their actions from the other. All agent actions are initialized to the minimum power value to minimize the interference to the interfered receiver at the beginning of the learning process. The reward is calculated as follows. First, because the interfered receiver must be safe from HAPS interference, an agent receives a fixed reward of −100 (a strongly negative value) when the INR exceeds the protection criterion. In contrast, when the INR satisfies the protection criterion, an agent receives a reward computed according to the lower 5% downlink SINR of each cell and the required SINR.
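The two-branch reward rule described above can be sketched as follows. Only the fixed penalty of −100 for an INR violation is taken from the text; the form of the feasible-branch reward (the mean margin between each cell's lower-5% SINR and the required SINR) is an assumption chosen for illustration, and the function and argument names are hypothetical.

```python
def reward(inr_db, cell_sinr_5pct_db, inr_threshold_db, sinr_required_db, penalty=-100.0):
    """Reward for one step.

    inr_db: aggregated INR at the interfered receiver (dB).
    cell_sinr_5pct_db: lower-5% downlink SINR of each cell (dB).
    """
    if inr_db > inr_threshold_db:
        # Protection criterion violated: fixed, strongly negative reward.
        return penalty
    # Assumed shaping: average SINR margin over the cells (not the paper's exact form).
    margins = [s - sinr_required_db for s in cell_sinr_5pct_db]
    return sum(margins) / len(margins)
```

Making the penalty much larger in magnitude than any achievable SINR margin ensures that an agent never prefers a power set that violates the interference constraint.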

Figure 7 shows the structure of the proposed DQL-based HAPS transmission power control algorithm. Each agent learns its DQN, and one DQN consists of the main network, target network, and replay memory. The main network estimates the Q-value $Q(s, a; \theta)$ corresponding to the state-action pair through a deep neural network with a weight $\theta$. The main network consists of an input layer composed of seven neurons, a hidden layer consisting of 24 neurons, and an output layer consisting of five neurons. It is a fully connected network. $\theta$ is updated at each step in the direction that minimizes the loss function $L(\theta)$. The target network calculates the target value $y = r + \gamma \max_{a'} Q(s', a'; \theta^{-})$, where $\gamma$ is the discount factor; $s'$ and $a'$ denote the state and action, respectively, in the next step; and $Q(s', a'; \theta^{-})$ is the Q-value estimated through the target network with weight $\theta^{-}$. The agent's transition tuple $(s, a, r, s')$ is stored in the replay memory, from which a minibatch (size of 512 tuples) is randomly sampled at each step. The minibatch data are used to compute the target value $y$. In a DQL, learning is stabilized, and the learning performance is improved through replay memory and a separate target network [23].

Figure 7.

DQL-based HAPS power control architecture.

Algorithm 1 describes the proposed DQL-based HAPS transmission power control algorithm. For DQN training, the replay memory size was set as 100,000, and the minibatch size was set as 512. Two further training parameters were set as 500 and 10, respectively. The Adam optimizer was used to minimize the loss function, and the learning rate and discount factor were 0.01 and 0.995, respectively. An ε-greedy policy was used to balance exploration and exploitation; ε was initially set as 1 and was reduced by 0.01 for every episode.
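The exploration schedule and replay memory described above can be sketched in plain Python. The class and function names are hypothetical, the lower bound of 0 on the exploration rate is an assumption (no floor is stated), and reading the value 100,000 as the replay memory capacity is an interpretation of the training settings.

```python
import random
from collections import deque


def epsilon_for_episode(episode, eps_start=1.0, decay=0.01, eps_min=0.0):
    """Linearly decayed exploration rate: starts at 1, drops by 0.01 per episode."""
    return max(eps_min, eps_start - decay * episode)


def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice over the Q-values of the selectable power levels."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                       # explore
    return max(range(len(q_values)), key=q_values.__getitem__)   # exploit


class ReplayMemory:
    """Fixed-capacity buffer of (state, action, reward, next_state) tuples."""

    def __init__(self, capacity=100_000):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size=512, rng=random):
        return rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))
```

Under this schedule the agent explores purely at random in the first episode and acts greedily from episode 100 onward, which is consistent with the large early variance reported in the learning curves.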

Algorithm 1. Training Process for the DQL-Based HAPS Power Control Algorithm

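A DDQL is a reinforcement learning algorithm developed to mitigate the performance degradation caused by overestimation in the DQL. The action-value can be overestimated by the maximization step in line 16 of Algorithm 1, because the same target network both selects and evaluates the next action. Therefore, the DDQL calculates the target value as $y = r + \gamma\, Q(s', \arg\max_{a'} Q(s', a'; \theta); \theta^{-})$, so that the main network selects the next action and the target network evaluates it. The DDQL-based HAPS power control algorithm proceeds in the same way as Algorithm 1 except for the calculation of the target value.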
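The difference between the two target calculations can be made concrete with a small Python sketch of the standard DQN and Double DQN targets. The function names are hypothetical, and the Q-values are passed in as plain lists rather than produced by neural networks.

```python
def dql_target(reward, next_q_target, gamma=0.995):
    """DQN target: the target network both selects and evaluates the next action."""
    return reward + gamma * max(next_q_target)


def ddql_target(reward, next_q_main, next_q_target, gamma=0.995):
    """Double DQN target: main network selects, target network evaluates."""
    best_action = max(range(len(next_q_main)), key=next_q_main.__getitem__)
    return reward + gamma * next_q_target[best_action]
```

For the same inputs, the DDQL target never exceeds the DQL target, since it evaluates one particular action instead of taking the maximum over all of them; when both networks agree on the best action, the two targets coincide.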

5. Simulation Results

5.1. Simulation Configuration

The simulation was conducted using MATLAB for three positions of the interfered receiver, and the learning order of the agents was set randomly. Subsequently, the simulation proceeded according to Algorithm 1. When all episodes were finished, the simulation ended, and the set composed of the power selected by each agent was taken as the simulation result. Finally, the performance of the simulation was verified by comparing this power set with the optimal power set obtained via an exhaustive search algorithm considering all cases. The total elapsed times of the DQL and exhaustive search were about 7500 s and 21,000 s, respectively. The total elapsed time of the exhaustive search increased exponentially with the rise of N, but that of the DQL did not. Therefore, the computational advantage of the DQL becomes more pronounced as the number of cells and power levels increases. In this simulation, a performance comparison with the DDQL was additionally performed to check for performance degradation due to overestimation in the DQL.

We applied the HAPS parameters and interfered system parameters, referring to the working document for the HAPS coexistence study performed in preparation for WRC-23 [18,24]. The simulation parameters of the two systems are presented in Table 1 and Table 2, respectively.

Table 1.

HAPS system parameters.

Table 2.

Interfered system (IMT BS) parameters.

5.2. Numerical Analysis

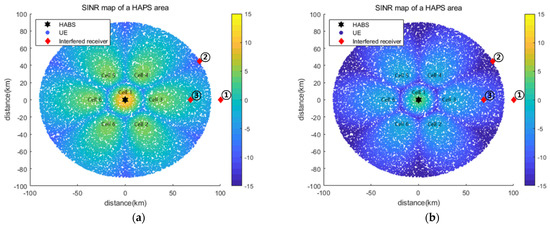

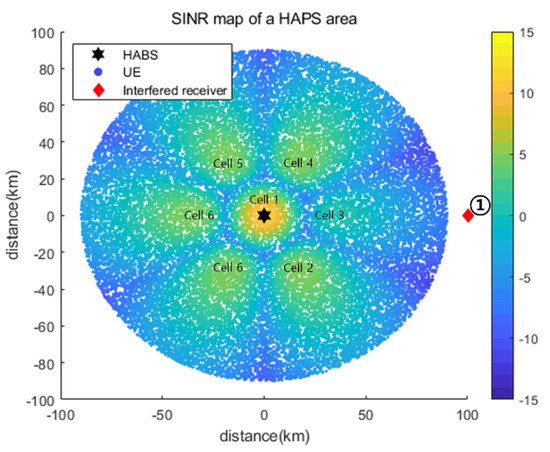

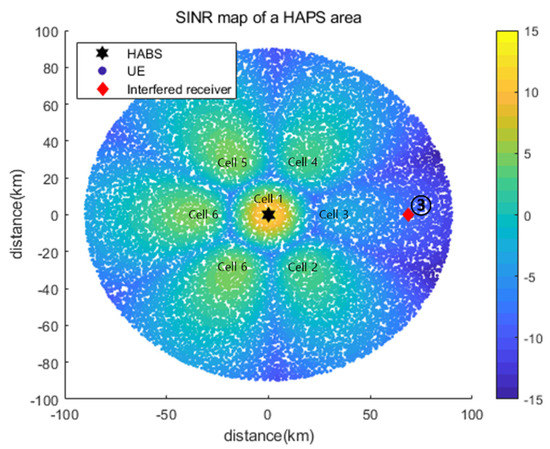

Figure 8 shows the SINR maps obtained using the maximum power and the minimum power for all cells, that is, with no power control. We considered three positions of the interfered receiver that do not satisfy the INR requirement of −6 dB when the maximum power is used. In addition, the three locations were chosen to represent typical interference conditions that reflect the operating characteristics of the proposed power control algorithm. Interfered receiver ① was located in the main beam direction of one cell and received the highest interference from that cell. Therefore, using the minimum power for that cell alone satisfied the INR requirement of −6 dB. Interfered receiver ② was placed on the boundary between two adjacent cells and thus received equal (and the strongest) interference from these two cells. Interfered receiver ③ was located in the main beam direction of one cell, as was interfered receiver ①. However, using the minimum power for that cell alone could not satisfy the INR requirement of −6 dB, and at least one other cell had to use less than the maximum power.

Figure 8.

(a) SINR map for ; (b) SINR map for .

Table 3 presents the INR and $p_{out}$ for the maximum and minimum powers with varying interfered receiver locations. The results confirm that the INR and $p_{out}$ had a tradeoff relationship. The same $p_{out}$ is shown regardless of the interfered receiver position because of the absence of power control. Next, we compared the simulation results of the optimal exhaustive search and the proposed DQL-based power control algorithm for the three positions of the interfered receiver.

Table 3.

INR and $p_{out}$ for the interfered receiver locations.

5.2.1. Simulation Results for Interfered Receiver ①

Figure 9 shows the SINR map based on the power set acquired using the proposed DQL-based power control algorithm for interfered receiver ①. Table 4 presents a performance comparison of the power sets obtained via an exhaustive search and via the proposed DQL, together with a comparison of the DQL and DDQL results. As shown, the DQL power set was equal to the optimal power set, providing the same INR and $p_{out}$ performance. Because the interfered receiver was located in the azimuth main beam direction of one cell, the power of that cell significantly affected the interfered receiver. Even though all other cells used the maximum power, their interference was negligible. Therefore, all the cells except for that cell used the maximum power for minimizing $p_{out}$, as shown in Table 4.

Figure 9.

SINR map based on the power set obtained using the proposed DQL-based power control algorithm for interfered receiver ①.

Table 4.

Performance comparison for interfered receiver ①.

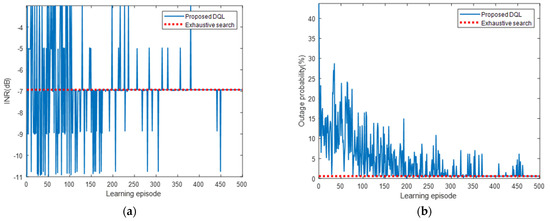

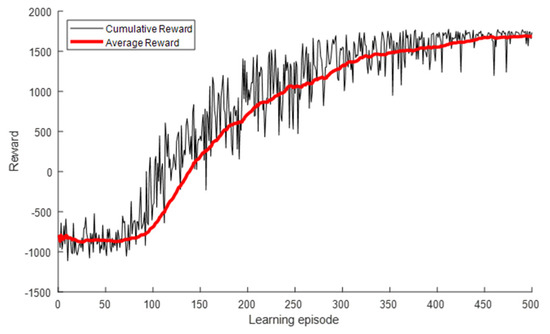

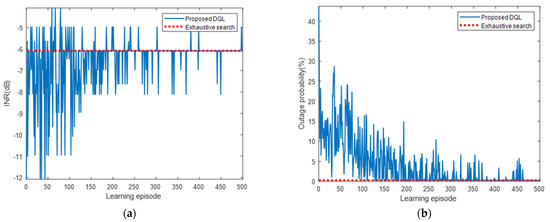

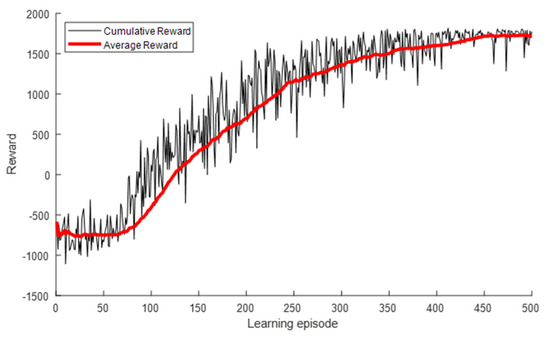

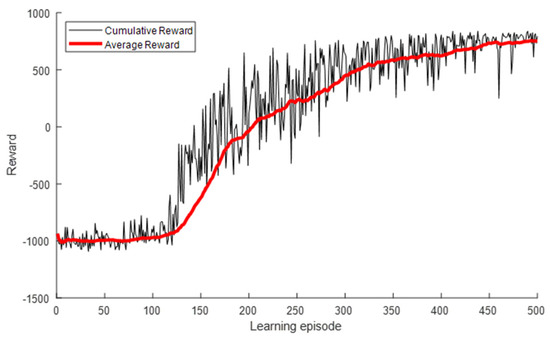

Figure 10 presents the INR and $p_{out}$ for each learning episode. As shown, the INR and $p_{out}$ converged to the optimal values of the exhaustive search algorithm as the number of learning episodes increased. The INR started at −11.01 dB, which was the value for the use of the minimum power, as shown in Table 3, and converged to the optimal value of −6.93 dB. Similarly, $p_{out}$ started at 43.7% and converged to 0.6%. A large variance due to frequent exploration was observed at the beginning of the learning, but it gradually decreased as the learning progressed. Figure 11 presents the cumulative and average rewards for each learning episode. As shown, the reward rapidly increased and then gradually converged at approximately 300 episodes, indicating that the proposed DQL training process allowed the agent to learn the power control algorithm quickly and stably.

Figure 10.

(a) INR and (b) $p_{out}$ for each learning episode for interfered receiver ①.

Figure 11.

Reward for each learning episode for interfered receiver ①.

We compared the learning results of the DQL and DDQL. Even when the DDQL was used, the results were the same as in Table 4, Figure 10, and Figure 11, which indicates that overestimation in the DQL did not degrade the learned policy. Thus, sufficient learning is possible with the DQL alone.

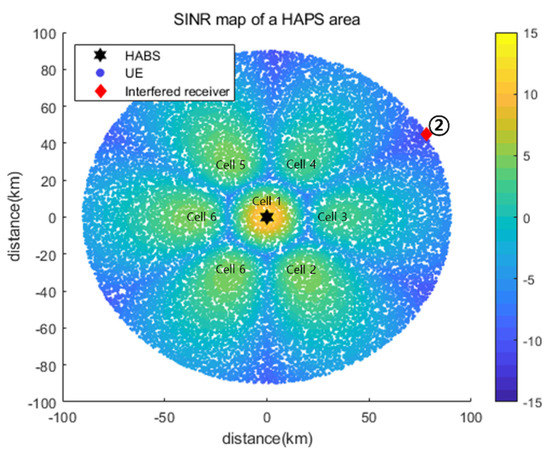

5.2.2. Simulation Results for Interfered Receiver ②

Figure 12 shows the SINR map based on the power set acquired using the proposed DQL-based power control algorithm for interfered receiver ②. Table 5 presents a performance comparison of the power sets obtained via an exhaustive search and via the proposed DQL, together with a comparison of the DQL and DDQL results. As shown, the DQL power set was equal to the optimal power set, providing the same INR and $p_{out}$ performance. The interfered receiver was located on the boundary between two adjacent cells and, thus, received equal (and the strongest) interference from these two cells. In addition, even though all the cells other than these two used the maximum power, their interference was marginal. Therefore, in the optimal power control, these two cells reduced their power as required to satisfy the INR requirement, whereas all the other cells used the maximum power for minimizing $p_{out}$, as shown in Table 5.

Figure 12.

SINR map based on the power set obtained using the proposed DQL-based power control algorithm for interfered receiver ②.

Table 5.

Performance comparison for interfered receiver ②.

As shown in Figure 13, the INR and $p_{out}$ converged to the optimal values of the exhaustive search algorithm. Similar to the case of receiver ①, as the learning progressed, the INR converged from −12.08 to −6.08 dB, and the $p_{out}$ converged from 43.7% to 0.2%. Figure 14 shows that the reward gradually converged at approximately 300 episodes, indicating that the proposed DQL training process allowed the agent to quickly and stably learn the power control algorithm. We compared the learning results of the DQL and DDQL. Even when the DDQL was used, the results were the same as in Table 5, Figure 13, and Figure 14, verifying that the desired learning is attainable with the DQL only.

Figure 13.

(a) INR and (b) $p_{out}$ for each learning episode for interfered receiver ②.

Figure 14.

Reward for each learning episode for interfered receiver ②.

5.2.3. Simulation Results for Interfered Receiver ③

Figure 15 shows the SINR map based on the power set obtained using the proposed DQL-based power control algorithm for interfered receiver ③. The interfered receiver was located in the azimuth main lobe direction of one cell. It was closer to the HAPS than the receiver considered in Section 5.2.1 and was more severely affected by that cell; the INR requirement was not satisfied even when that cell used the minimum power. Thus, in addition to that cell, the optimal power control adjusted the power of the next-strongest interfering cells, which caused the second-most interference. Table 6 presents a comparison of the power sets obtained using an exhaustive search and using the proposed DQL, together with a comparison of the DQL and DDQL results. Although the $p_{out}$ of the DQL power set was 0.6% higher than that of the optimal power set, it corresponded to the third-smallest value among the 78,125 values generated by the exhaustive search algorithm. In summary, the proposed power control algorithm achieved performance close to the optimal value.

Figure 15.

SINR map based on the power set obtained using the proposed DQL-based power control algorithm for interfered receiver ③.

Table 6.

Performance comparison for interfered receiver ③.

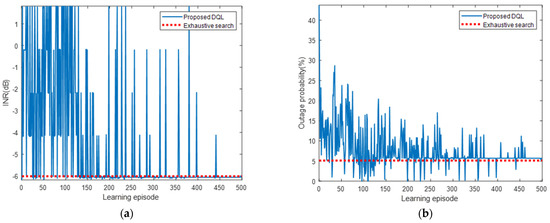

As shown in Figure 16, the INR and $p_{out}$ converged to values close to the optimal values of the exhaustive search algorithm, with slight gaps. Similar to the results presented in Section 5.2.1, as the learning progressed, the INR converged from −6.19 to −6.06 dB, and the $p_{out}$ converged from 43.7% to 5.7%. Figure 17 shows the cumulative and average rewards for each learning episode. The reward exhibited no noticeable improvement until approximately 130 episodes, after which it rapidly increased and then gradually converged at approximately 350 episodes. This is because, to satisfy the INR requirement, more agents had to take action, and the actions had to be more diverse. Nonetheless, the proposed DQL training process allowed the agent to learn the power control algorithm quickly and stably. We compared the learning results of the DQL and DDQL. Even when the DDQL was used, the results were the same as in Table 6, Figure 16, and Figure 17, verifying that the desired learning is attainable with the DQL only.

Figure 16.

(a) INR and (b) $p_{out}$ for each learning episode for interfered receiver ③.

Figure 17.

Reward for each learning episode for interfered receiver ③.

6. Conclusions

This paper proposed a DQL-based transmission power control algorithm for multicell HAPS communication that involved spectrum sharing with existing services. The proposed algorithm aimed to find a solution to the power control optimization problem for minimizing the outage probability of the HAPS downlink under the interference constraint to protect existing systems. We compared the solution with the optimal solution acquired using the exhaustive search algorithm. The simulation results confirmed that the proposed algorithm performed comparably to the optimal exhaustive search.

Future work will include a larger number of power levels and an extension to multiple-HAPS communication in spectrum sharing with multiple interfered systems. Because an increase in the number of power levels could reveal the limits of a value-based algorithm, a policy-based algorithm may be preferable in that setting. Given that multiple-HAPS communication could lead to the non-stationarity problem of multi-agent reinforcement learning, its solution would be worth studying.

Author Contributions

Conceptualization and methodology, S.J. and H.-S.J.; software, S.J. and W.Y.; validation, formal analysis, and investigation, S.J. and W.Y. and H.-S.J.; resources and data curation, H.K.C. and E.N.; writing—original draft preparation, S.J. and W.Y.; writing—review and editing, S.J., H.-S.J. and J.P.; visualization, W.Y. and H.K.C.; supervision, J.P.; project administration, H.-S.J. and J.P.; funding acquisition, J.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Agency for Defense Development (ADD).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Arum, S.C.; Grace, D.; Mitchell, P.D. A review of wireless communication using high-altitude platforms for extended coverage and capacity. Comput. Commun. 2020, 157, 232–256.

- Alam, M.S.; Kurt, G.K.; Yanikomeroglu, H.; Zhu, P.; Đào, N.D. High altitude platform station based super macro base station constellations. IEEE Commun. Mag. 2021, 59, 103–109.

- Kurt, G.K.; Khoshkholgh, M.G.; Alfattani, S.; Ibrahim, A.; Darwish, T.S.; Alam, M.S.; Yanikomeroglu, H.; Yongacoglu, A. A vision and framework for the high altitude platform station (HAPS) networks of the future. IEEE Commun. Surv. Tutor. 2021, 23, 729–779.

- Hsieh, F.; Jardel, F.; Visotsky, E.; Vook, F.; Ghosh, A.; Picha, B. UAV-based Multi-cell HAPS Communication: System Design and Performance Evaluation. In Proceedings of the GLOBECOM 2020-2020 IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020.

- Xing, Y.; Hsieh, F.; Ghosh, A.; Rappaport, T.S. High Altitude Platform Stations (HAPS): Architecture and System Performance. In Proceedings of the 2021 IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Online, 7–11 April 2021.

- International Telecommunications Union (ITU). World Radio Communication Conference 2019 (WRC-19) Final Acts; International Telecommunications Union: Geneva, Switzerland, 2020; p. 366.

- Ibrahim, A.; Alfa, A.S. Using Lagrangian relaxation for radio resource allocation in high altitude platforms. IEEE Trans. Wirel. Commun. 2015, 14, 5823–5835.

- Guan, M.; Wu, Z.; Cui, Y.; Cao, X.; Wang, L.; Ye, J.; Peng, B. An intelligent wireless channel allocation in HAPS 5G communication system based on reinforcement learning. EURASIP J. Wirel. Commun. Netw. 2019, 2019, 1–9.

- Guan, M.; Wang, L.; Chen, L. Channel allocation for hot spot areas in HAPS communication based on the prediction of mobile user characteristics. Intell. Autom. Soft Comput. 2016, 22, 613–620.

- Oodo, M.; Miura, R.; Hori, T.; Morisaki, T.; Kashiki, K.; Suzuki, M. Sharing and compatibility study between fixed service using high altitude platform stations (HAPS) and other services in the 31/28 GHz bands. Wirel. Pers. Commun. 2002, 23, 3–14.

- Mokayef, M.; Rahman, T.A.; Ngah, R.; Ahmed, M.Y. Spectrum sharing model for coexistence between high altitude platform system and fixed services at 5.8 GHz. Int. J. Multimed. Ubiquitous Eng. 2013, 8, 265–275.

- Lee, W.; Jeon, Y.; Kim, T.; Kim, Y.I. Deep Reinforcement Learning for UAV Trajectory Design Considering Mobile Ground Users. Sensors 2021, 21, 8239.

- Koushik, A.M.; Hu, F.; Kumar, S. Deep Q-Learning-Based Node Positioning for Throughput-Optimal Communications in Dynamic UAV Swarm Network. IEEE Trans. Cogn. Commun. Netw. 2019, 5, 554–566.

- Raja, G.; Anbalagan, S.; Narayanan, V.S.; Jayaram, S.; Ganapathisubramaniyan, A. Inter-UAV collision avoidance using Deep-Q-learning in flocking environment. In Proceedings of the 2019 IEEE 10th Annual Ubiquitous Computing, Electronics and Mobile Communication Conference, New York, NY, USA, 10–12 October 2019; pp. 1089–1095.

- Fotouhi, A.; Ding, M.; Hassan, M. Deep Q-Learning for Two-Hop Communications of Drone Base Stations. Sensors 2021, 21, 1960.

- Anicho, O.; Charlesworth, P.B.; Baicher, G.S.; Nagar, A.K. Reinforcement learning versus swarm intelligence for autonomous multi-HAPS coordination. SN Appl. Sci. 2021, 3, 1–11.

- Hasselt, H.V.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.

- International Telecommunications Union Radiocommunication Sector (ITU-R). Working Document towards a Preliminary Draft New Report ITU-R M.[HIBS-CHARACTERISTICS]/Working Document Related to WRC-23 Agenda Item 1.4; R19-WP5D Contribution 716 (Chapter 4-Annex 4.19); International Telecommunications Union: Geneva, Switzerland, 2021.

- International Telecommunications Union Radiocommunication Sector (ITU-R). Modelling and Simulation of IMT Networks and Systems for Use in Sharing and Compatibility Studies; Recommendation ITU-R M.2101-0; International Telecommunications Union: Geneva, Switzerland, 2017.

- International Telecommunications Union Radiocommunication Sector (ITU-R). Reference Radiation Patterns of Omnidirectional, Sectoral and Other Antennas for the Fixed and Mobile Service for Use in Sharing Studies in the Frequency Range from 400 MHz to about 70 GHz; Recommendation ITU-R F.1336-5; International Telecommunications Union: Geneva, Switzerland, 2019.

- International Telecommunications Union Radiocommunication Sector (ITU-R). Propagation Data Required for the Evaluation of Interference between Stations in Space and Those on the Surface of the Earth; Recommendation ITU-R P.619-5; International Telecommunications Union: Geneva, Switzerland, 2021.

- International Telecommunications Union Radiocommunication Sector (ITU-R). Working Document towards Sharing and Compatibility Studies of HIBS under WRC-23 Agenda Item 1.4—Sharing and Compatibility Studies of High-Altitude Platform Stations as IMT Base Stations (HIBS) on WRC-23 Agenda Item 1.4; R19-WP5D Contribution 716 (Chapter 4—Annex 4.20); International Telecommunications Union: Geneva, Switzerland, 2021.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- International Telecommunications Union Radiocommunication Sector (ITU-R). Characteristics of Terrestrial Component of IMT for Sharing and Compatibility Studies in Preparation for WRC-23; R19-WP5D Temporary Document 422 (Revision 2); International Telecommunications Union: Geneva, Switzerland, 2021.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).