Abstract

Mobile and wearable devices have enabled numerous applications, including activity tracking, wellness monitoring, and human–computer interaction, that measure and improve our daily lives. Many of these applications are made possible by leveraging the rich collection of low-power sensors found in many mobile and wearable devices to perform human activity recognition (HAR). Recently, deep learning has greatly pushed the boundaries of HAR on mobile and wearable devices. This paper systematically categorizes and summarizes existing work that introduces deep learning methods for wearables-based HAR and provides a comprehensive analysis of the current advancements, developing trends, and major challenges. We also present cutting-edge frontiers and future directions for deep learning-based HAR.

1. Introduction

Since the first Linux-based smartwatch was presented in 2000 at the IEEE International Solid-State Circuits Conference (ISSCC) by Steve Mann, who was later hailed as the “father of wearable computing”, the 21st century has witnessed rapid growth in wearables. For example, as of January 2020, 21% of adults in the United States, most of whom are not opposed to sharing data with medical researchers, owned a smartwatch [1].

In addition to being fashion accessories, wearables provide unprecedented opportunities for monitoring human physiological signals and for facilitating natural and seamless interaction between humans and machines. Wearables integrate low-power sensors that allow them to sense movement and other physiological signals such as heart rate, temperature, blood pressure, and electrodermal activity. The rapid proliferation of wearable technologies and advancements in sensing analytics have spurred the growth of human activity recognition (HAR). Figure 1 gives a general overview of wearables and their applications: HAR has drastically improved the quality of service in a broad range of applications spanning healthcare, entertainment, gaming, industry, and lifestyle, among others. Market analysts from Meticulous Research® [2] forecast that the global wearable devices market will grow at a compound annual growth rate of 11.3% from 2019, reaching $62.82 billion by 2025, with companies like Fitbit®, Garmin®, and Huawei Technologies® investing more capital into the area.
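To make the forecast concrete, the compound-growth relation value_2025 = value_2019 × (1 + CAGR)^years can be inverted to obtain the market size it implies for 2019. This is a minimal sketch that assumes six annual compounding periods between 2019 and 2025; the resulting base-year value (roughly $33 billion) is our back-calculation, not a figure reported by Meticulous Research.

```python
# Back-calculate the base-year market size implied by a CAGR forecast.
# Assumption: growth compounds once per year from 2019 to 2025 (six periods).
cagr = 0.113          # 11.3% compound annual growth rate
value_2025 = 62.82    # forecast market size, billions of US dollars
years = 2025 - 2019

implied_2019 = value_2025 / (1 + cagr) ** years
print(f"Implied 2019 market size: ${implied_2019:.2f} billion")
```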

Figure 1.

Wearable devices and their application. (a) Distribution of wearable applications [6]. (b) Typical wearable devices. (c) Distribution of wearable devices placed on common body areas [6].

In the past decade, deep learning (DL) has revolutionized traditional machine learning (ML) and brought about improved performance in many fields, including image recognition, object detection, speech recognition, and natural language processing. DL has improved the performance and robustness of HAR, speeding its adoption in a wide range of wearable sensor-based applications. There are two key reasons why DL is effective for many applications. First, DL methods are able to learn robust, application-specific features directly from raw data with little domain knowledge, whereas traditional ML approaches generally require features to be manually extracted or engineered, which usually demands expert domain knowledge and a large amount of human effort. Second, deep neural networks have been shown to be universal function approximators, capable of approximating almost any function given a large enough network and sufficient observations [3,4,5]. Owing to this expressive power, DL has seen substantial growth in HAR-based applications.

Despite promising results in DL, there are still many challenges and problems to overcome, leaving room for more research opportunities. We present a review on deep learning in HAR with wearable sensors and elaborate on ongoing challenges, obstacles, and future directions in this field.

Specifically, we focus on the recognition of physical activities, including locomotion, activities of daily living (ADL), exercise, and factory work. While DL has shown a lot of promise in other applications, such as ambient scene analysis, emotion recognition, or subject identification, we focus on HAR. Throughout this work, we present brief, high-level summaries of the major DL methods that have significantly impacted wearable HAR. For more details about specific algorithms or basic DL, we refer the reader to the original papers, textbooks, and tutorials [7,8]. Our contributions are summarized as follows.

- (i) First, we give an overview of the background of the human activity recognition research field, including the traditional and novel applications on which the research community is focusing, the sensors utilized in these applications, and widely-used publicly available datasets.

- (ii) Then, after briefly introducing the mainstream deep learning algorithms, we review the relevant papers over the years that apply deep learning to human activity recognition with wearables. We categorize the papers within our scope according to the algorithm (autoencoder, CNN, RNN, etc.). In addition, we compare different DL algorithms in terms of their accuracy on public datasets, their pros and cons, deployment, and high-level model selection criteria.

- (iii) We provide a comprehensive systematic review of the current issues, challenges, and opportunities in the HAR domain and the latest advancements toward solutions. Finally, we humbly do our best to shed light on possible future directions, in the hope of benefiting students and young researchers in this field.

2. Methodology

2.1. Research Question

In this work, we pose several major research questions: (1) What are the real-world applications of HAR, the mainstream sensors, and the major public datasets in this field? (2) What deep learning approaches are employed in HAR, and what are the pros and cons of each? (3) What challenges does the field face, and what opportunities and potential solutions are available? We review the state-of-the-art work in this field and present our answers to these questions.

This article is organized as follows: We compare this work with related existing review work in this field in Section 3. Section 4.1 introduces common applications for HAR. Section 4.2 summarizes the types of sensors commonly used in HAR. Section 4.3 summarizes major datasets that are commonly used to build HAR applications. Section 5 introduces the major works in DL that contribute to HAR. Section 6 discusses major challenges, trends, and opportunities for future work. We provide concluding remarks in Section 7.

2.2. Research Scope

In order to provide a comprehensive overview of the whole HAR field, we conducted a systematic review of human activity recognition. To ensure that our work satisfies the requirements of a high-quality systematic review, we followed the 27-item PRISMA review process [9] and ensured that our work satisfied each requirement. We searched Google Scholar with two groups of meta-keywords. (We began compiling papers for this review in November 2020 and, while preparing it, compiled a second round of papers in November 2021 to incorporate the latest works published in 2021.) Group (A): “human activity recognition”, “motion recognition”, “locomotion recognition”, “hand gesture recognition”, and “wearable”. Group (B): “deep learning”, “autoencoder” (alternatively “auto-encoder”), “deep belief network”, “convolutional neural network” (alternatively “convolution neural network”), “recurrent neural network”, “LSTM”, “generative adversarial network” (alternatively “GAN”), “reinforcement learning”, “attention”, “deep semi-supervised learning”, and “graph neural network”. We combined the keywords of (A) and (B) with an AND rule and, for each combination, obtained the top 200 search results ranked by relevance. We did not consider any patent or citation-only search result (i.e., results with no content available online).
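The AND rule over the two keyword groups amounts to a Cartesian product. The sketch below enumerates the combinations; encoding alternative spellings as OR clauses inside a single query is our assumption about how they could be expressed, not necessarily the exact query syntax the search used. Under this encoding there are 5 × 11 = 55 queries, so at most 55 × 200 = 11,000 raw hits before de-duplication.

```python
from itertools import product

# Group (A): activity-related keywords, as listed in the text.
group_a = ["human activity recognition", "motion recognition",
           "locomotion recognition", "hand gesture recognition", "wearable"]

# Group (B): method keywords; alternative spellings kept together (assumption).
group_b = [
    ["deep learning"],
    ["autoencoder", "auto-encoder"],
    ["deep belief network"],
    ["convolutional neural network", "convolution neural network"],
    ["recurrent neural network"],
    ["LSTM"],
    ["generative adversarial network", "GAN"],
    ["reinforcement learning"],
    ["attention"],
    ["deep semi-supervised learning"],
    ["graph neural network"],
]

def quoted_or(terms):
    """Quote each spelling and join alternatives into one OR clause."""
    clause = " OR ".join(f'"{t}"' for t in terms)
    return f"({clause})" if len(terms) > 1 else clause

# AND rule: one query per (A, B) pair.
queries = [f'"{a}" AND {quoted_or(b)}' for a, b in product(group_a, group_b)]

RESULTS_PER_QUERY = 200  # top results kept per combination, ranked by relevance
print(len(queries), len(queries) * RESULTS_PER_QUERY)
print(queries[1])
```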

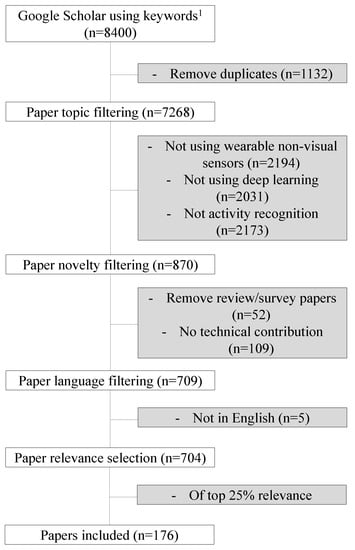

We applied several exclusion criteria to build the database of papers we reviewed. First, we omitted image- or video-based HAR works, such as [10], since there is a huge body of work in the computer vision community and its methods differ significantly from those of sensor-based HAR. Second, we removed papers using environmental sensors or systems assisted by environmental sensors, such as WiFi- and RFID-based HAR. Third, we removed papers with minor algorithmic advancements over prior works: we aim to present the technical progress and algorithmic achievements in HAR, so we avoid presenting works that do not stress the novelty of their methods. Finally, as wearable-based HAR has become an extremely popular field with numerous new papers, it is not surprising that many papers share rather similar approaches, and it is almost impossible, and less meaningful, to cover all of them. Therefore, we select, categorize, and summarize representative works to present in this review paper. We adhere to the goal of our work throughout the whole paper, that is, to give an overall introduction to new researchers entering this field and to present cutting-edge research challenges and opportunities.

Figure 2 shows the CONSORT diagram that outlines, step by step, how we filtered papers to arrive at the final 176 papers included in this review. We obtained 8400 papers in the first step by searching the keywords mentioned above on Google Scholar. Next, we removed papers that did not align with the topics of this review (i.e., works that do not utilize deep learning in wearable systems), leaving us with 870 papers. Among the papers removed in this step, 2194 utilized vision, 2031 did not use deep learning, and 2173 did not perform human activity recognition. Then, we removed 52 review papers, 109 papers that did not propose novel systems or algorithms, and five papers that were not in English, leaving us with 704 papers. Finally, we selected the top 25% most relevant papers, leaving us with the 176 papers reviewed in this work. We used the relevance ranking provided by Google Scholar to select the papers included in this systematic review.
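The screening counts reported above can be checked with a few lines of arithmetic. The counts come directly from the text; the variable names are ours, and rounding the 25% cut to the nearest integer is an assumption (here it is exact).

```python
# Paper counts from the screening steps described in the text.
identified = 8400   # Google Scholar hits for all keyword combinations
on_topic = 870      # after removing papers outside the review's scope
excluded = {"review papers": 52,
            "no novel system or algorithm": 109,
            "not in English": 5}

eligible = on_topic - sum(excluded.values())
included = round(eligible * 0.25)   # top 25% by relevance ranking

print(f"identified {identified} -> on topic {on_topic} "
      f"-> eligible {eligible} -> included {included}")
```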

Figure 2.

CONSORT diagram outlining how we selected the final papers included in this work.

However, we acknowledge that the review process conducted in this work has some limitations. Due to the overwhelming number of papers published in this field in recent years, it is almost impossible to include every paper on deep learning-based wearable human activity recognition in a single review. The selection of representative works to present in this paper is unavoidably subject to the risk of bias. Besides, we may have missed the very first paper initiating or adopting a certain method. Finally, due to the nature of human-related research and machine learning research, many factors could cause heterogeneity among study results, including heterogeneity in devices, in participant demographics, and even in algorithm implementation details.

2.3. Taxonomy of Human Activity Recognition

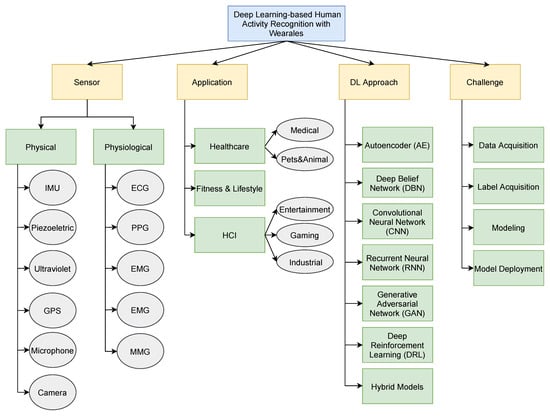

To give a straightforward picture of the hierarchy of HAR, we illustrate its taxonomy in Figure 3. We categorize existing HAR works along four dimensions: sensor, application, DL approach, and challenge. There are two main kinds of sensors: physical sensors and physiological sensors. Physical sensors include the Inertial Measurement Unit (IMU), piezoelectric sensors, GPS, wearable cameras, etc. Exemplary physiological sensors include electromyography (EMG) and photoplethysmography (PPG) sensors. In terms of applications, we categorize HAR systems into healthcare, fitness & lifestyle, and Human Computer Interaction (HCI). Regarding DL algorithms, we introduce six approaches: autoencoder (AE), Deep Belief Network (DBN), Convolutional Neural Network (CNN), Recurrent Neural Network (RNN, including Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs)), Generative Adversarial Network (GAN), and Deep Reinforcement Learning (DRL). Finally, we discuss the challenges our research community is facing and how state-of-the-art works are coping with them, as also shown in Figure 3.

Figure 3.

Taxonomy of Deep Learning-based Human Activity Recognition with Wearables.

3. Related Work

There are some existing review papers in the literature for deep learning approaches for sensor-based human activity recognition [11,12,13,14].

Nweke et al. highlighted the advancements in deep learning models by proposing a taxonomy of generative, discriminative, and hybrid methods and further explored their advantages and limitations, covering work up to 2018 [12]. Similarly, Wang et al. conducted a thorough analysis of different sensor modalities, deep learning models, and their respective applications up to 2017 [11]. However, in recent years, thanks to huge advancements in the availability and computational power of computing resources and in deep learning techniques, applied deep learning has been revolutionized and has reached all-time-high performance in sensor-based human activity recognition. Therefore, we aim to present the most recent advances and most exciting achievements of the community to our readers in a timely manner.

In another work, Chen et al. provided the community with a comprehensive review that analyzes in depth the challenges and opportunities for deep learning in sensor-based HAR and proposes a new taxonomy for the challenges facing activity recognition systems [13]. In contrast, we view our work as more of a gentle introduction to this field for students and novices: our literature review provides the community with a detailed analysis of the most recent state-of-the-art deep learning architectures (i.e., CNN, RNN, GAN, Deep Reinforcement Learning, and hybrid models) and their respective pros and cons on HAR benchmark datasets. At the same time, we distill the knowledge and experience from our past works in this field and present the challenges and opportunities from a different viewpoint. Another recent work, by Ramanujam et al., categorized deep learning architectures into CNN, LSTM, and hybrid methods and conducted an in-depth analysis of benchmark datasets [14]. Compared with their work, our paper pays more attention to the most recent cutting-edge deep learning methods applied to on-body sensor data for HAR, such as GAN and DRL. We also provide both new learners and experienced researchers with a rich resource on model comparison, model selection, and model deployment. In a nutshell, our review thoroughly analyzes the most up-to-date deep learning architectures applied to various wearable sensors, elaborates on their respective applications, and compares their performance on public datasets. Moreover, we attempt to cover the most recent advances in resolving the challenges and difficulties and shed light on possible research opportunities.

4. Human Activity Recognition Overview

4.1. Applications

In this section, we illustrate the major areas and applications of wearable devices in HAR. Figure 1a, taken from the wearable technology database [6], breaks down the application types of 582 commercial wearables registered since 2015. The database suggests that wearables are increasing in popularity and will impact people’s lives in several ways, in applications ranging from fitness and lifestyle to medicine and human–computer interaction.

4.1.1. Wearables in Fitness and Lifestyle

Physical activity involves activities such as sitting, walking, lying down, going up or down stairs, jogging, and running [15]. Regular physical activity is increasingly linked to a reduced risk of many chronic diseases, such as obesity, diabetes, and cardiovascular disease, and has been shown to improve mental health [16]. The data recorded by wearable devices during these activities contain rich information, such as the duration and intensity of activity, which further reveals an individual’s daily habits and health conditions [17]. For example, dedicated products such as Fitbit [18] can estimate and record energy expenditure on smart devices, which can serve as an important step in tracking personal activity and preventing chronic diseases [19]. Moreover, there is evidence of an association between modes of transport (motor vehicle, walking, cycling, and public transport) and obesity-related outcomes [20]. Awareness of daily locomotion and transportation patterns can provide physicians with the information needed to better understand patients’ conditions and can also encourage users to exercise more, promoting behavior change [21]. Therefore, fitness and lifestyle tracking is one of the most prolific areas of HAR applications, and wearables have the potential to advance it significantly [22,23,24,25,26,27,28,29,30].

Energy (or calorie) expenditure (EE) estimation has become an important reason why people track their personal activity. Self-reflection on and self-regulation of one’s own behavior and habits have been important factors in designing interventions that prevent chronic diseases such as obesity, diabetes, and cardiovascular disease.

4.1.2. Wearables in Healthcare and Rehabilitation

HAR has greatly improved the ability to diagnose and capture pertinent information in healthcare and rehabilitation. By tracking, storing, and sharing patient data with medical institutions, wearables have become instrumental for physicians in patient health assessment and monitoring. Specifically, several works have introduced systems and methods for monitoring and assessing Parkinson’s disease (PD) symptoms [31,32,33,34,35,36]. Pulmonary diseases, such as Chronic Obstructive Pulmonary Disease (COPD), asthma, and COVID-19, are among the leading causes of morbidity and mortality. Some recent works use wearables to detect cough, a major symptom of pulmonary diseases [37,38,39,40]. Other works have introduced methods for monitoring stroke in infants using wearable accelerometers [41] and for assessing depressive symptoms using wrist-worn sensors [42]. In addition, detecting muscular activity and hand motions with electromyography (EMG) sensors has been widely applied to improve prosthesis control for people with missing or damaged limbs [43,44,45,46].

4.1.3. Wearables in Human Computer Interaction (HCI)

Modern wearable technology in HCI has provided us with flexible and convenient methods to control and communicate with electronics, computers, and robots. For example, a wrist-worn wearable equipped with an inertial measurement unit (IMU) can easily detect wrist shaking [47,48,49], so a user can skip a song on a smart device by shaking the hand instead of bringing up the screen, locating a button, and pushing it. Furthermore, wearable devices have played an essential role in many HCI applications in entertainment systems and immersive technology. One example is augmented reality (AR) and virtual reality (VR), which have changed the way we interact with and view the world. Thanks to accurate activity, gesture, and motion detection from wearables, these applications can provide immersive experiences, for instance inducing feelings of cold or hot weather by varying the virtual environment, and can enable more realistic interaction between humans and virtual objects [43,44].

4.2. Wearable Sensors

Wearable sensors are the foundation of HAR systems. As shown in Figure 1b, a large number of off-the-shelf smart devices and prototypes are available or under development today, including smartphones, smartwatches, smart glasses, smart rings [50], smart gloves [51], smart armbands [52], smart necklaces [53,54,55], smart shoes [56], and E-tattoos [57]. These wearable devices cover the human body from head to toe; Figure 1c shows their general distribution over body areas, as reported by [6]. Advances in micro-electro-mechanical system (MEMS) technology (microscopic devices comprising a central unit, such as a microprocessor, and multiple components that interact with the surroundings, such as microsensors) have allowed wearables to become miniaturized and lightweight, reducing the burden of wearing them and improving adherence to wearable and Internet of Things (IoT) technologies. In this section, we introduce and discuss some of the most prevalent MEMS sensors used in wearables for HAR. Figure 3 also summarizes these wearable sensors.

4.2.1. Inertial Measurement Unit (IMU)

An inertial measurement unit (IMU) is an integrated sensor package comprising an accelerometer, a gyroscope, and sometimes a magnetometer. Specifically, an accelerometer detects linear motion and gravitational forces by measuring acceleration along 3 axes (x, y, and z), while a gyroscope measures the rate of rotation (roll, pitch, and yaw). The magnetometer detects and measures the Earth’s magnetic field. Since a magnetometer is often used to obtain posture and orientation with respect to the geomagnetic field, which is typically outside the scope of HAR, magnetometer data are not always included in HAR analyses. By contrast, accelerometers and gyroscopes are used in many HAR applications. We refer to an IMU package comprising a 3-axis accelerometer and a 3-axis gyroscope as a 6-axis IMU; it is often referred to as a 9-axis IMU if a 3-axis magnetometer is also integrated. Owing to mass manufacturing and the widespread use of smartphones and wearable devices in our daily lives, IMU data are becoming more ubiquitous and easier to collect. In many HAR applications, researchers carefully choose the sampling rate of the IMU sensors depending on the activity of interest, typically between 10 Hz and several hundred Hz. In [58], Chung et al. tested a range of sampling rates and identified the best one for their application. Higher sampling rates have been shown to allow the system to capture signals with higher precision and higher frequencies, leading to more accurate models at the cost of higher energy and resource consumption. For example, the projects presented in [59,60] sample at 4 kHz, well above the typical rate, to sense the vibrations generated by the interaction between a hand and a physical object.
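The resource cost of a higher sampling rate can be illustrated by the raw data volume an IMU produces, which grows linearly with both the sampling rate and the number of axes. This is a minimal sketch that assumes 16-bit (2-byte) readings per axis and ignores timestamps, packet overhead, and compression; energy consumption is not modeled. The function name `imu_data_rate` is hypothetical.

```python
def imu_data_rate(sampling_hz, axes=6, bytes_per_sample=2):
    """Raw IMU payload in bytes per second (hypothetical helper).

    axes: 6 for a 3-axis accelerometer + 3-axis gyroscope,
          9 if a 3-axis magnetometer is also integrated.
    bytes_per_sample: assumes 16-bit readings per axis.
    """
    return sampling_hz * axes * bytes_per_sample

# Typical HAR rates versus the 4 kHz used for vibration sensing.
for hz in (10, 100, 4000):
    print(f"{hz:>5} Hz: 6-axis {imu_data_rate(hz):>6} B/s, "
          f"9-axis {imu_data_rate(hz, axes=9):>6} B/s")
```

At 4 kHz, a 6-axis IMU under these assumptions produces 48,000 B/s, 40 times the payload at 100 Hz.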

4.2.2. Electrocardiography (ECG) and Photoplethysmography (PPG)

Electrocardiography (ECG) and photoplethysmography (PPG) are the most commonly used sensing modalities for heart rate monitoring. ECG, also called EKG, detects the heart’s electrical activity through electrodes attached to the body. The standard 12-lead ECG attaches ten non-intrusive electrodes to form 12 leads on the limbs and chest. ECG is primarily employed to detect and diagnose cardiovascular disease and abnormal cardiac rhythms. PPG relies on using a low-intensity infrared (IR) light sensor to measure blood flow caused by the expansion and contraction of heart chambers and blood vessels. Changes in blood flow are detected by the PPG sensor as changes in the intensity of light; filters are then applied to the signal to obtain an estimate of heart rate. Since ECG directly measures the electrical signals that control heart activity, it typically provides more accurate measurements for heart rate and often serves as a baseline for evaluating PPG sensors.
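As a rough illustration of how heart rate can be extracted from a PPG signal, the sketch below takes the dominant spectral peak inside a plausible heart-rate band, which acts as a crude band-pass filter. This frequency-domain approach is swapped in for brevity: the 0.7 to 3.5 Hz band, the function name `estimate_heart_rate_bpm`, and the synthetic signal are illustrative assumptions, and practical devices use more elaborate filtering and motion-artifact removal.

```python
import numpy as np

def estimate_heart_rate_bpm(ppg, fs, low_hz=0.7, high_hz=3.5):
    """Estimate heart rate (beats per minute) from a PPG segment.

    Picks the strongest frequency component between low_hz and high_hz
    (42-210 bpm by default); the band limits are illustrative assumptions.
    """
    x = np.asarray(ppg, dtype=float)
    x = x - x.mean()                              # remove the DC baseline
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    peak_hz = freqs[band][np.argmax(spectrum[band])]
    return 60.0 * peak_hz

# Synthetic 10 s PPG-like signal at 50 Hz: a 1.2 Hz pulse (72 bpm),
# slow baseline drift at 0.1 Hz, and Gaussian noise.
fs = 50
t = np.arange(500) / fs
rng = np.random.default_rng(0)
ppg = (np.sin(2 * np.pi * 1.2 * t)
       + 0.3 * np.sin(2 * np.pi * 0.1 * t)
       + 0.2 * rng.normal(size=t.size))
print(f"estimated heart rate: {estimate_heart_rate_bpm(ppg, fs):.1f} bpm")
```

The 10 s window gives a frequency resolution of 0.1 Hz (6 bpm); longer windows sharpen the estimate but respond more slowly to changes in heart rate.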

4.2.3. Electromyography (EMG)

Electromyography (EMG) measures the electrical activity produced by muscle movement and contractions. EMG was first introduced in clinical tests to assess and diagnose the functionality of muscles and motor neurons. There are two types of EMG sensors: Surface EMG (sEMG) and intramuscular EMG (iEMG). sEMG uses an array of electrodes placed on the skin to measure the electrical signals generated by muscles through the surface of the skin [61]. There are a number of wearable applications that detect and assess daily activities using sEMG [44,62]. In [63], researchers developed a neural network that distinguishes ten different hand motions using sEMG to advance the effectiveness of prosthetic hands. iEMG places electrodes directly into the muscle beneath the skin. Because of its invasive nature, non-invasive wearable HAR systems do not typically include iEMG.

4.2.4. Mechanomyography (MMG)

Mechanomyography (MMG) uses a microphone or accelerometer to measure low-frequency muscle contractions and vibrations, as opposed to EMG, which uses electrodes. For example, 4-channel MMG signals from the thigh can be used to detect knee motion patterns [64]. Detecting these knee motions is helpful for the development of power-assisted wearables for powered lower limb prostheses. The authors create a convolutional neural network and support vector machine (CNN-SVM) architecture comprising a seven-layer CNN to learn dominant features for specific knee movements. The authors then replace the fully connected layers with an SVM classifier trained with the extracted feature vectors to improve knee motion pattern recognition. Moreover, Meagher et al. [65] proposed developing an MMG device as a wearable sensor to detect mechanical muscle activity for rehabilitation after stroke.

Other wearable sensors used in HAR include (but are not limited to) piezoelectric sensors [66,67], which convert changes in pressure, acceleration, temperature, strain, or force into electrical charge; barometric pressure sensors [68] for atmospheric pressure; temperature sensors [69]; electroencephalography (EEG) for measuring brain activity [70]; respiration sensors for breathing monitoring [71]; ultraviolet (UV) sensors [72] for sun exposure assessment; GPS for location sensing; microphones for audio recording [39,73,74]; and wearable cameras for image or video recording [55]. It is also important to note that the wearable camera market has grown drastically over the last few years, with cameras such as GoPro becoming mainstream [75,76,77,78]. However, due to privacy concerns raised by participants about video recording, wearable cameras are not as prevalent as other sensors for longitudinal activity recognition. Additionally, HAR with image/video processing has been extensively studied in the computer vision community [79,80], and the methodologies commonly used there differ significantly from the techniques used for IMUs, EEG, PPG, etc. For these reasons, despite their significance in applications of deep learning methods, this work does not cover image and video sensing for HAR.

4.3. Major Datasets

We list the major datasets employed to train and evaluate various ML and DL techniques in Table 1, ranked based on the number of citations they received per year according to Google Scholar. As described in the earlier sections, most datasets are collected via IMU, GPS, or ECG. While most datasets are used to recognize physical activity or daily activities [81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99], there are also a few datasets dedicated to hand gestures [100,101], breathing patterns [102], and car assembly line activities [103], as well as those that monitor gait for patients with PD [104].

Table 1.

Major Public Datasets for Wearable-based HAR.

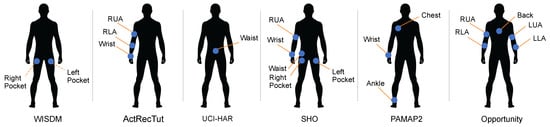

Most of the datasets listed above are publicly available. The University of California Riverside-Time Series Classification (UCR-TSC) archive is a collection of datasets collected from various sensing modalities [109]. The UCR-TSC archive initially included 16 datasets, growing to 85 datasets by 2015 and 128 by October 2018. Recently, researchers from the University of East Anglia collaborated with UCR to generate a new collection of datasets, which includes nine categories of HAR: BasicMotions, Cricket, Epilepsy, ERing, Handwriting, Libras, NATOPS, RacketSports, and UWaveGestureLibrary [106]. One of the most commonly used datasets is the OPPORTUNITY dataset [90]. This dataset contains data collected from 12 subjects using 15 wireless and wired networked sensor systems, with 72 sensors of ten modalities attached to the body or placed in the environment. Existing HAR papers mainly focus on data from the on-body sensors, including 7 IMUs and 12 additional 3D accelerometers, for classifying 18 kinds of activities. Researchers have proposed various algorithms to extract features from sensor signals and to perform activity classification using machine learning models such as k-nearest neighbors (KNN) and SVM [22,110,111,112,113,114,115,116,117,118]. Another widely-used dataset is PAMAP2 [91], which was collected from 9 subjects performing 18 different activities, ranging from jumping to house cleaning, with 3 IMUs (100 Hz sampling rate) and a heart rate monitor (9 Hz) attached to each subject. Other datasets such as Skoda [103] and WISDM [81] are also commonly used to train and evaluate HAR algorithms. In Figure 4, we present the placement of inertial sensors in 9 common datasets.

Figure 4.

Placement of inertial sensors in different datasets: WISDM; ActRecTut; UCI-HAR; SHO; PAMAP2; and Opportunity.

5. Deep Learning Approaches

In recent years, DL approaches have outperformed traditional ML approaches in a wide range of HAR tasks. There are three key factors behind deep learning’s success: increasingly available data, hardware acceleration, and algorithmic advancements. The growth of datasets publicly shared through the web has allowed developers and researchers to quickly develop robust and complex models. The development of GPUs and FPGAs has drastically shortened the training time of large, complex models. Finally, improvements in optimization and training techniques have also improved training speed. In this section, we describe and summarize HAR works based on six types of deep learning approaches. We also present an overview of deep learning approaches in Figure 3.

5.1. Autoencoder

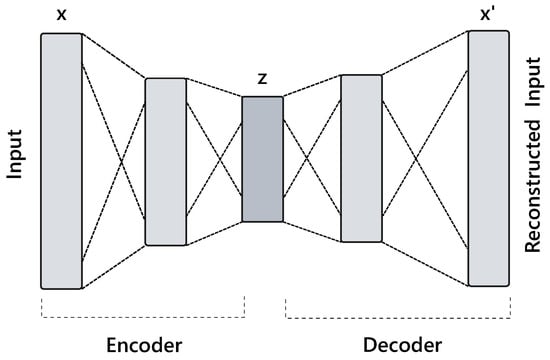

The autoencoder, originally called the “autoassociative learning module”, was first proposed in the 1980s as an unsupervised pre-training method for artificial neural networks (ANN) [119]. Autoencoders have been widely adopted as an unsupervised method for learning features. As such, the outputs of autoencoders are often used as inputs to other networks and algorithms to improve performance [120,121,122,123,124]. An autoencoder is generally composed of an encoder module and a decoder module. The encoder module encodes the input signals into a latent space, while the decoder module transforms signals from the latent space back into the original domain. As shown in Figure 5, the encoder and decoder modules are usually several dense layers (i.e., fully connected layers) of the form

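$$\mathbf{h} = f_{\theta}(\mathbf{x}) = \sigma(\mathbf{W}\mathbf{x} + \mathbf{b}), \qquad \hat{\mathbf{x}} = g_{\theta'}(\mathbf{h}) = \sigma(\mathbf{W}'\mathbf{h} + \mathbf{b}'),$$

where $\theta = \{\mathbf{W}, \mathbf{b}\}$ and $\theta' = \{\mathbf{W}', \mathbf{b}'\}$ are the learnable parameters of the encoder and decoder, and $\sigma$ is the non-linear activation function, such as Sigmoid, tanh, or rectified linear unit (ReLU). $\mathbf{W}$ and $\mathbf{W}'$ refer to the weights of the layers, while $\mathbf{b}$ and $\mathbf{b}'$ are the bias vectors. By minimizing a loss function applied to $\mathbf{x}$ and $\hat{\mathbf{x}}$, autoencoders aim at generating the final output by imitating the input. Autoencoders are efficient tools for finding optimal codes, $\mathbf{h}$, and performing dimensionality reduction. An autoencoder’s strength in dimensionality reduction has been applied to HAR in wearables [34,121,125,126,127,128,129,130,131], and it also functions as a powerful tool for denoising and information retrieval.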

Figure 5.

Illustration of an autoencoder network [132].
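The encoder and decoder layers above can be trained end to end with a mean squared error loss. The following is a minimal NumPy sketch, not the architecture of any cited work: it assumes a single tanh encoder layer, h = tanh(Wx + b), and a linear (identity) decoder output, x̂ = W′h + b′, which is a common choice for real-valued sensor features, trained by full-batch gradient descent on hypothetical synthetic data. The 2-D codes it produces are the kind of low-dimensional features that downstream classifiers consume.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 500 feature vectors with 6 correlated channels generated
# from a 2-D latent factor plus noise, standardized per channel.
latent = rng.normal(size=(500, 2))
X = latent @ rng.normal(size=(2, 6)) + 0.05 * rng.normal(size=(500, 6))
X = (X - X.mean(axis=0)) / X.std(axis=0)

n_in, n_code = X.shape[1], 2
W = rng.normal(scale=0.1, size=(n_in, n_code))      # encoder weights W
b = np.zeros(n_code)                                 # encoder bias b
W_dec = rng.normal(scale=0.1, size=(n_code, n_in))  # decoder weights W'
b_dec = np.zeros(n_in)                               # decoder bias b'

def encode(x):
    return np.tanh(x @ W + b)          # h = tanh(W x + b)

def decode(h):
    return h @ W_dec + b_dec           # x_hat = W' h + b' (identity output)

def mse(x, x_hat):
    return float(np.mean((x - x_hat) ** 2))

lr = 0.05
initial_loss = mse(X, decode(encode(X)))
for _ in range(3000):
    H = encode(X)
    X_hat = decode(H)
    grad_out = 2.0 * (X_hat - X) / X.size             # dLoss/dX_hat for MSE
    grad_W_dec = H.T @ grad_out
    grad_b_dec = grad_out.sum(axis=0)
    grad_pre = (grad_out @ W_dec.T) * (1.0 - H ** 2)  # back through tanh
    grad_W = X.T @ grad_pre
    grad_b = grad_pre.sum(axis=0)
    W -= lr * grad_W
    b -= lr * grad_b
    W_dec -= lr * grad_W_dec
    b_dec -= lr * grad_b_dec

final_loss = mse(X, decode(encode(X)))
codes = encode(X)   # 2-D codes that could feed a downstream classifier
print(f"MSE before: {initial_loss:.3f}, after: {final_loss:.3f}, codes: {codes.shape}")
```

In practice, a deep learning framework with automatic differentiation would replace the hand-written gradients; they are spelled out here only to keep the sketch dependency-free.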

As such, autoencoders are most commonly used for feature extraction and dimensionality reduction [120,122,123,124,125,126,133,134,135,136,137,138,139,140,141]. They are used either individually or in a stacked architecture of multiple autoencoders, and are typically trained with a mean squared error (MSE) loss, or with MSE plus a Kullback–Leibler (KL) divergence term. Li et al. present an architecture in which a sparse autoencoder and a denoising autoencoder are used to learn useful feature representations from accelerometer and gyroscope data, followed by classification with support vector machines [125]. Experiments are performed on a public HAR dataset [82] from the UCI repository, and the classification accuracy is compared with that obtained using Fast Fourier Transform (FFT) frequency-domain features and Principal Component Analysis (PCA). The results reveal that the stacked autoencoder achieves the highest accuracy, 92.16%, a 7% advantage over traditional methods with hand-crafted features. Jun and Choi [142] studied the classification of newborn and infant activities into four classes: sleeping, moving in agony, moving in normal condition, and movement by an external force. Using data from a body-worn accelerometer and a three-layer autoencoder combined with k-means clustering, they achieved 96% weighted accuracy in an unsupervised way. Autoencoders have also been explored for feature extraction in domain transfer learning [143], detecting unseen data [144], and recognizing null classes [145]. For example, Prabono et al. [146] propose a two-phase autoencoder-based domain adaptation approach for human activity recognition. In addition, Garcia et al. [147] proposed an effective multi-class algorithm consisting of an ensemble of autoencoders, each associated with a separate class. This modular structure makes the model more flexible when new classes are added: only new autoencoders need to be trained, rather than re-training the whole model.
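The decision rule behind an ensemble with one autoencoder per class can be sketched as follows: a sample is assigned to the class whose autoencoder reconstructs it with the smallest error. This is a hedged illustration of the general idea, not Garcia et al.'s implementation. To keep it short, each trained autoencoder is replaced by a hypothetical stand-in reconstructor that pulls the input halfway towards a class prototype; the prototypes and class names are made up.

```python
def make_stand_in_reconstructor(prototype):
    """Hypothetical stand-in for a trained class-specific autoencoder.

    A real autoencoder would encode and decode x; here x is simply moved halfway
    towards the class prototype, so inputs resembling that class reconstruct well.
    """
    def reconstruct(x):
        return [0.5 * xi + 0.5 * pi for xi, pi in zip(x, prototype)]
    return reconstruct

def reconstruction_error(x, x_rec):
    return sum((a - r) ** 2 for a, r in zip(x, x_rec)) / len(x)

def classify(x, autoencoders):
    """Return the label whose autoencoder reconstructs x with the lowest error."""
    return min(autoencoders,
               key=lambda label: reconstruction_error(x, autoencoders[label](x)))

autoencoders = {
    "walking": make_stand_in_reconstructor([1.0, 0.0, 1.0, 0.0]),
    "sitting": make_stand_in_reconstructor([0.0, 0.0, 0.0, 0.0]),
}
print(classify([0.9, 0.1, 0.8, 0.2], autoencoders))  # prints walking

# Adding a class only requires adding one more autoencoder; existing ones are untouched.
autoencoders["cycling"] = make_stand_in_reconstructor([0.0, 1.0, 0.0, 1.0])
print(classify([0.1, 0.9, 0.2, 0.8], autoencoders))  # prints cycling
```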

Furthermore, autoencoders are commonly used to sanitize and denoise raw sensor data [127,130,148]; noise is a known problem with wearable signals that impairs our ability to learn patterns in the data. Mohammed and Tashev [127] investigated the use of sensors integrated into common pieces of clothing for HAR. They found, however, that sensors attached to loose clothing are prone to large motion artifacts, leading to low mean signal-to-noise ratios (SNR). To remove these artifacts, the authors propose a deconvolutional sequence-to-sequence autoencoder (DSTSAE), whose weights are trained with a weighted form of the standard variational autoencoder (VAE) loss function. Experiments show that the DSTSAE outperforms traditional Kalman filters and improves the SNR from −12 dB to +18.2 dB, with the F1-score improved by 14.4% for recognizing gestures and by 55.3% for locomotion activities. Gao et al. [130] explore the use of stacked autoencoders to denoise raw sensor data from the UCI dataset [82] to improve HAR; LightGBM (LBG) is then used to classify activities from the denoised signals.
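The SNR values quoted above are on the decibel scale, $\mathrm{SNR}_{\mathrm{dB}} = 10\log_{10}(P_{\text{signal}}/P_{\text{noise}})$. The small sketch below computes it, assuming a clean reference signal is available (as in synthetic evaluations) and treating the difference between the observed and clean signals as noise. The sine signal and noise amplitudes are made up for illustration.

```python
import math

def snr_db(clean, observed):
    """SNR in dB: 10*log10(signal power / noise power), where noise = observed - clean."""
    signal_power = sum(c * c for c in clean) / len(clean)
    noise_power = sum((o - c) ** 2 for o, c in zip(observed, clean)) / len(clean)
    return 10.0 * math.log10(signal_power / noise_power)

clean = [math.sin(2 * math.pi * k / 50) for k in range(200)]              # 4 full periods
noisy = [c + (0.5 if k % 2 else -0.5) for k, c in enumerate(clean)]       # large artifact
denoised = [c + (0.05 if k % 2 else -0.05) for k, c in enumerate(clean)]  # residual noise

print(round(snr_db(clean, noisy), 1), round(snr_db(clean, denoised), 1))  # prints 3.0 23.0
```

Because power scales with the square of amplitude, reducing the noise amplitude tenfold raises the SNR by exactly 20 dB.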

Autoencoders are also commonly used to detect abnormal movements associated with conditions such as Parkinson’s disease and Autism Spectrum Disorder (ASD). Rad et al. [34] use an autoencoder to denoise and extract optimized features of different movements, and a one-class SVM to detect movement anomalies. To reduce overfitting of the autoencoder, the authors inject artificial noise into the training data to simulate different types of perturbations. Sigcha et al. [149] use a denoising autoencoder to detect freezing of gait (FOG) in Parkinson’s disease patients. The autoencoder is trained only on data labelled as normal movement; during testing, samples that differ significantly, in a statistical sense, from the training data are classified as abnormal FOG events.
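The train-on-normal-only strategy can be sketched with a reconstruction-error threshold. Using the mean plus three standard deviations of the errors on normal training data is one common rule of thumb and an assumption here, not necessarily the statistical test used in [149]; the error values are made up.

```python
import statistics

def fit_threshold(normal_errors, k=3.0):
    """Threshold = mean + k * (population) std of reconstruction errors on normal data."""
    return statistics.mean(normal_errors) + k * statistics.pstdev(normal_errors)

def flag_anomalies(errors, threshold):
    """True where a sample's reconstruction error exceeds the threshold."""
    return [e > threshold for e in errors]

# Hypothetical reconstruction errors from an autoencoder trained on normal gait only.
normal_errors = [0.020, 0.025, 0.018, 0.022, 0.030, 0.021, 0.024, 0.019]
threshold = fit_threshold(normal_errors)

# Large errors mean the autoencoder has not seen such patterns (e.g., a possible FOG episode).
test_errors = [0.023, 0.150, 0.027, 0.210]
print(flag_anomalies(test_errors, threshold))  # prints [False, True, False, True]
```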

As autoencoders map data into a nonlinear and low-dimensional latent space, they are well-suited for applications requiring privacy preservation. Malekzadeh et al. developed a novel replacement autoencoder that removes prominent features of sensitive activities, such as drinking, smoking, or using the restroom [121]. Specifically, the replacement autoencoder is trained to produce a non-sensitive output from a sensitive input via stochastic replacement while keeping characteristics of other less sensitive activities unchanged. Extensive experiments are performed on Opportunity [90], Skoda [103], and Hand-Gesture [100] datasets. The result shows that the proposed replacement autoencoder can retain the recognition accuracy of non-sensitive tasks using state-of-the-art techniques while simultaneously reducing detection capability for sensitive tasks.

Malekzadeh et al. introduce a framework called Guardian-Estimator-Neutralizer (GEN) that recognizes activities while preserving gender privacy [128], i.e., without disclosing a participant’s gender. The rationale behind GEN is to transform the data into a representation containing only non-sensitive features. The Guardian, a deep denoising autoencoder, transforms the data into a representation in an inference-specific space. The Estimator, a multitask convolutional neural network, guides the Guardian by estimating the sensitive and non-sensitive information in the transformed data. The Neutralizer is an optimizer that helps the Guardian converge to a near-optimal transformation function. Both the publicly available MobiAct dataset [150] and a new dataset, MotionSense, are used to evaluate the framework’s efficacy. Experimental results demonstrate that the framework maintains the usefulness of the transformed data for activity recognition while reducing gender classification accuracy from more than 90% on raw sensor data to 50% (random guessing). Similarly, the same authors proposed an anonymizing autoencoder [129] that classifies activities while reducing user identification accuracy. Unlike most works, where the output of the encoder is used as the features for classification, this work uses both the encoder and decoder outputs. Experiments on a self-collected accelerometer and gyroscope dataset showed excellent activity recognition performance (above 92%) while keeping user identification accuracy below 7%.

5.2. Deep Belief Network (DBN)

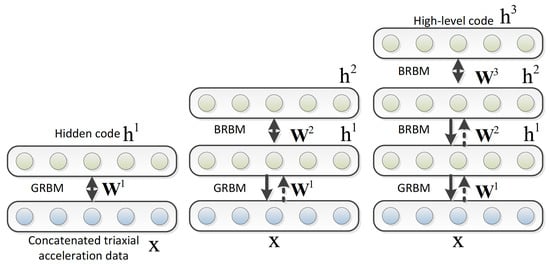

A DBN, as illustrated in Figure 6, is formed by stacking multiple simple unsupervised networks, where the hidden layer of each network serves as the visible layer of the next. Each sub-network is generally a restricted Boltzmann machine (RBM), an undirected, generative, energy-based model with a “visible” input layer, a hidden layer, and connections between the two layers but none within a layer. Accordingly, a DBN typically has connections between layers but not between units within a layer. This structure enables a fast, layer-wise unsupervised training procedure in which contrastive divergence (an approximation of the log-likelihood gradient obtained from a short Gibbs sampling chain) is applied to every pair of layers in the DBN sequentially, starting from the “lowest” pair.
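The contrastive divergence step can be sketched for a small binary RBM. The following is a minimal CD-1 implementation in pure Python (one Gibbs step per update); it uses sampled hidden states to produce the reconstruction and probabilities elsewhere, a common practical choice. The layer sizes, learning rate, number of epochs, and toy binary patterns are illustrative assumptions. In a DBN trained greedily, the hidden probabilities of one trained RBM would become the visible data for the next RBM.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class BinaryRBM:
    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.a = [0.0] * n_visible  # visible biases
        self.b = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.b[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.b))]

    def visible_probs(self, h):
        return [sigmoid(self.a[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.a))]

    def sample(self, probs):
        return [1.0 if self.rng.random() < p else 0.0 for p in probs]

    def cd1_update(self, v0, lr=0.1):
        """One CD-1 step: positive phase on the data, negative phase after one Gibbs step."""
        ph0 = self.hidden_probs(v0)
        h0 = self.sample(ph0)
        pv1 = self.visible_probs(h0)   # reconstruction (mean-field visible units)
        ph1 = self.hidden_probs(pv1)
        for i in range(len(v0)):
            for j in range(len(ph0)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - pv1[i] * ph1[j])
        for i in range(len(v0)):
            self.a[i] += lr * (v0[i] - pv1[i])
        for j in range(len(ph0)):
            self.b[j] += lr * (ph0[j] - ph1[j])

    def reconstruction_error(self, data):
        """Mean squared error between data and its deterministic up-down reconstruction."""
        err = 0.0
        for v in data:
            pv = self.visible_probs(self.hidden_probs(v))
            err += sum((vi - pi) ** 2 for vi, pi in zip(v, pv))
        return err / len(data)

data = [[1, 1, 0, 0, 0, 0], [0, 0, 1, 1, 0, 0], [0, 0, 0, 0, 1, 1]]
rbm = BinaryRBM(n_visible=6, n_hidden=3)
before = rbm.reconstruction_error(data)
for epoch in range(500):
    for v in data:
        rbm.cd1_update(v)
after = rbm.reconstruction_error(data)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
```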

Figure 6.

The greedy layer-wise training of DBNs. The first level is trained on triaxial acceleration data. Then, more RBMs are repeatedly stacked to form a deep activity recognition model [151].

The observation that DBNs can be trained greedily led to one of the first effective deep learning algorithms [152]. DBNs have been used in many real-world applications, such as drug discovery [153], natural language understanding [154], and fault diagnosis [155], and there have been many attempts to perform HAR with them. In early exploratory work from 2011 [156], a five-layer DBN was trained on acceleration data collected from mobile phones, improving accuracy by 1% to 32% over traditional ML methods with manually extracted features.

In later works, DBNs were applied to publicly available datasets [151,157,158,159]. In [157], two five-layer DBNs with different structures are applied to the Opportunity [90], USC-HAD [94], and DSA [87] datasets, and the results show improved HAR accuracy over traditional ML methods on all three. Specifically, the accuracies on the Opportunity, USC-HAD, and DSA datasets are 82.3% (a 1.6% improvement over traditional methods), 99.2% (13.9% improvement), and 99.1% (15.9% improvement), respectively. In addition, Alsheikh et al. [151] tested the activity recognition performance of DBNs under different parameter settings. Instead of using raw acceleration data as in [156], they trained the deep activity recognition models on spectrograms of the triaxial accelerometer data. They found that deep models with more layers outperform shallow ones, and that topologies whose layers have more neurons than the input layer are more advantageous, which indicates that overcomplete representations are essential for learning deep models. The accuracies of the tuned DBN were 98.23%, 91.5%, and 89.38% on the WISDM [81], Daphnet [104], and Skoda [103] benchmark datasets, respectively. In [158], an RBM is used to improve upon other sensor fusion methods, as neural networks can identify non-intuitive predictive features, largely from cross-sensor correlations, and thus offer more accurate estimation. The recognition accuracy of this architecture on the Skoda dataset reached 81%, around 6% higher than that of the best-performing traditional classifier (Random Forest).

In addition to public datasets, researchers have also applied DBNs to human activity and health-related recognition on self-collected datasets [31,160]. In [31], DBNs are employed in Parkinson’s disease diagnosis to explore whether they can cope with the unreliable labelling that results from naturalistic recording environments. The data were collected with two triaxial accelerometers, one worn on each wrist of the participant. The DBNs consist of two RBM layers: the first is a Gaussian-binary RBM (with Gaussian visible units) and the second is a binary-binary RBM (containing only binary units); please refer to [161] for details. In [160], an unsupervised five-layer DBM-DNN is applied to automatically detect eating episodes from raw audio signals collected by commercial Bluetooth headsets, and it improves classification even in the presence of ambient noise. The proposed DBM-DNN approach achieves 94% accuracy, significantly better than an SVM with 75.6% accuracy.

5.3. Convolutional Neural Network (CNN)

A CNN comprises convolutional layers that apply the convolution operation, pooling layers, fully connected layers, and an output layer (usually a softmax layer). Convolution with a shared kernel enables the network to learn shift-invariant features. Because each filter in a convolutional layer has a defined receptive field, a CNN is better at capturing local dependencies than a fully connected neural network. Although each kernel covers only a limited number of input neurons, stacking multiple layers gives neurons in higher layers larger, more global receptive fields. This pyramid structure lets a CNN aggregate low-level local features into high-level semantic representations, allowing it to learn excellent features, as shown in [162], which compares features extracted by a CNN with hand-crafted time- and frequency-domain features (Fast Fourier Transform and Discrete Cosine Transform).
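The growth of the receptive field with depth can be computed layer by layer: a layer with kernel size k adds (k - 1) times the product of the strides of all layers below it. The sketch below applies this standard recurrence to two hypothetical 1D layer stacks; the layer configurations are illustrative.

```python
def receptive_field(layers):
    """Receptive field (in input samples) of one output unit after stacking 1D layers.

    Each layer is (kernel_size, stride). Recurrence: r += (k - 1) * jump; jump *= stride,
    where jump is the distance, in input samples, between adjacent units of the layer.
    """
    r, jump = 1, 1
    for k, s in layers:
        r += (k - 1) * jump
        jump *= s
    return r

print(receptive_field([(3, 1), (3, 1), (3, 1)]))  # prints 7: three stacked 1 x 3 convolutions
print(receptive_field([(5, 1), (2, 2), (5, 1)]))  # prints 14: conv 1 x 5, max-pool 2, conv 1 x 5
```

The second stack shows why pooling enlarges receptive fields quickly: after the stride-2 pooling layer, each additional kernel tap spans two input samples.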

In most cases, a pooling layer follows each convolutional layer. A pooling layer compresses the learned representation and makes the model more robust to noise by discarding part of the convolutional layer’s output (max pooling, for instance, keeps only the largest activation in each window). Generally, a stack of convolutional and pooling layers, which reduce the feature dimensionality, is followed by a few fully connected layers before the output layer. A softmax classifier is usually selected as the final output layer, although some studies have explored traditional classifiers as the output layer of a CNN [64,118].

Most CNNs use univariate or multivariate sensor data as input. Besides raw or filtered sensor data, the magnitude of 3-axis acceleration is often used as input, as shown in [26]. Researchers have tried encoding time-series data into 2D images as input into the CNN. In [163], the Short-time Fourier transform (STFT) for time-series sensor data is calculated, and its power spectrum is used as the input to a CNN. Since time series data is generally one-dimensional, most CNNs adopt 1D-CNN kernels. Works that use frequency-domain inputs (e.g., spectrogram), which have an additional frequency dimension, will generally use 2D-CNN kernels [164]. The choice of 1D-CNN kernel size normally falls in the range of 1 × 3 to 1 × 5 (with exceptions in [22,63,64] where kernels of size 1 × 8, 2 × 101, and 1 × 20 are adopted).
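A minimal sketch of the 1D pipeline described above: compute the acceleration magnitude $\sqrt{a_x^2 + a_y^2 + a_z^2}$ (as in [26]), slide a 1 × 3 kernel over it (valid cross-correlation, which is what deep learning libraries implement as "convolution"), apply ReLU, and max-pool with size 2. The kernel weights and sample values are made up for illustration; a real CNN learns many kernels.

```python
import math

def magnitude(ax, ay, az):
    """Orientation-independent magnitude of 3-axis acceleration."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(ax, ay, az)]

def conv1d_valid(signal, kernel, bias=0.0):
    """Valid 1D cross-correlation: output length = len(signal) - len(kernel) + 1."""
    k = len(kernel)
    return [sum(signal[t + i] * kernel[i] for i in range(k)) + bias
            for t in range(len(signal) - k + 1)]

def relu(xs):
    return [max(0.0, x) for x in xs]

def max_pool1d(xs, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, size)]

ax = [0.0, 3.0, 0.0, 3.0, 0.0, 3.0, 0.0, 3.0]
ay = [0.0, 4.0, 0.0, 4.0, 0.0, 4.0, 0.0, 4.0]
az = [1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
mag = magnitude(ax, ay, az)                        # alternates 1 and 5
feat = relu(conv1d_valid(mag, [-1.0, 2.0, -1.0]))  # this kernel responds to peaks
pooled = max_pool1d(feat)                          # halves the temporal length
```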

To discover the relationship between the number of layers, the kernel size, and the complexity level of the tasks, we picked and summarized several typical studies in Table 2. A majority of the CNNs consist of five to nine layers [23,63,64,113,114,165,166,167,168], usually including two to three convolutional layers, two to three max-pooling layers, and one to two fully connected layers before the output layer (a softmax layer in most cases). Dong et al. [169] demonstrated performance improvements by combining handcrafted time- and frequency-domain features with features generated by a CNN, called HAR-Net, to classify six locomotion activities using accelerometer and gyroscope signals from a smartphone. Ravi et al. [170] used a shallow three-layer CNN, consisting of a convolutional layer, a fully connected layer, and a softmax layer, to perform on-device activity recognition on a resource-limited platform and showed its effectiveness and efficiency on public datasets. Zeng et al. [22] and Lee et al. [26] also used a small number of layers (four). The choice of loss function is an important decision in training CNNs: cross-entropy is most commonly used for classification tasks, and mean squared error for regression tasks. Most CNN models extract and learn features from each channel separately, whereas Huang et al. [167] were the first to propose a shallow CNN that considers cross-channel communication, in which the channels in the same layer interact with each other to obtain discriminative features of sensor data.
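For the classification case, the softmax output layer and the cross-entropy loss take only a few lines. This numerically stable sketch (subtracting the maximum logit before exponentiating) uses made-up logits for three hypothetical activity classes.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability; the result is unchanged
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_index):
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[target_index])

logits = [2.0, 0.5, -1.0]               # e.g., walking, sitting, standing
probs = softmax(logits)
loss_correct = cross_entropy(probs, 0)  # small loss: the true class has the highest score
loss_wrong = cross_entropy(probs, 2)    # large loss: the true class has the lowest score
```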

Table 2.

Summary of typical studies that use layer-by-layer CNN structure in HAR and their configurations. We aim to present the relationship of CNN kernels, layers, and targeted problems (application and sensors). Key: C—convolutional layer; P—max-pooling layer; FC—fully connected layer; S—softmax; S1—accelerometer; S2—gyroscope; S3—magnetometer; S4—EMG; S5—ECG

The number of sensors used in a HAR study can vary from one to as many as 23 [90]. In [23], a single accelerometer is used to collect data from three locations on the body: a clothing pocket, a trouser pocket, and the waist. The authors collected data from 100 subjects performing eight activities: falling, running, jumping, walking, walking quickly, step walking, walking upstairs, and walking downstairs. HAR applications can also involve multiple sensors of different types. To account for these different sensor types and activities, Grzeszick et al. [176] proposed a multi-branch CNN architecture. A multi-branch design adopts a parallel structure that trains separate kernels for each IMU sensor and concatenates the branch outputs at a late stage, after which one or more fully connected layers are applied to the flattened feature representation before the final output layer. For instance, a CNN-IMU architecture contains m parallel branches, one per IMU; each branch contains seven layers, and the branch outputs are concatenated and fed into a fully connected layer and a softmax output layer. Gao et al. [177] introduced a novel dual attention module, comprising channel and temporal attention, to improve the representation learning ability of a CNN model. Their method considerably outperformed a regular CNN on a number of public datasets, such as PAMAP2 [91], WISDM [81], UNIMIB SHAR [93], and Opportunity [90].
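The multi-branch idea can be sketched at the level of data flow: each IMU gets its own branch with its own kernels, and the branch outputs are concatenated late into the joint vector that a fully connected layer would receive. The single-layer branches with global max pooling below are a simplification assumed for brevity, not the seven-layer branches of the CNN-IMU architecture; all kernels and signals are illustrative.

```python
def conv1d_valid(signal, kernel):
    k = len(kernel)
    return [sum(signal[t + i] * kernel[i] for i in range(k))
            for t in range(len(signal) - k + 1)]

def branch(channels, kernels):
    """One IMU branch: convolve each channel with this branch's own kernels, then
    global max pooling followed by ReLU (equivalent to ReLU first, as ReLU is monotonic)."""
    features = []
    for signal in channels:
        for kernel in kernels:
            features.append(max(0.0, max(conv1d_valid(signal, kernel))))
    return features

def multi_branch_features(imus, branch_kernels):
    """Run one branch per IMU and concatenate their outputs (late fusion)."""
    fused = []
    for channels, kernels in zip(imus, branch_kernels):
        fused.extend(branch(channels, kernels))
    return fused

# Two hypothetical IMUs with 3 channels each; each branch has 2 kernels of its own.
imu_wrist = [[0, 1, 0, 1, 0], [1, 1, 1, 1, 1], [0, 0, 2, 0, 0]]
imu_ankle = [[2, 0, 2, 0, 2], [0, 0, 0, 0, 0], [1, 2, 3, 4, 5]]
kernels_wrist = [[-1, 2, -1], [1, 1, 1]]
kernels_ankle = [[1, 0, -1], [0.5, 0.5, 0.5]]
fused = multi_branch_features([imu_wrist, imu_ankle], [kernels_wrist, kernels_ankle])
print(len(fused))  # prints 12 = 2 IMUs x 3 channels x 2 kernels
```

Because each branch owns its kernels, an IMU can be added or removed by adding or removing one branch, and only the fully connected layer after the concatenation has to change its input size.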

Another advantage of DL is that features learned in one domain can be easily generalized or transferred to other domains. This matters because the same activity performed by different individuals can produce drastically different sensor readings. To address this challenge, Matsui et al. [163] adapted their activity recognition model to each individual by adding a few hidden layers and customizing their weights using a small amount of individual data, which improved recognition performance by 3%.

5.4. Recurrent Neural Network (RNN)

The idea of using temporal information dates back to 1991, when it was applied to recognize a finger alphabet of 42 symbols [178], and to 1995, when it was used to classify 66 different hand shapes with about 98% accuracy [179]. Since then, recurrent neural networks (RNNs) with time series input have been widely applied to classify human activities or estimate hand gestures [180,181,182,183,184,185,186,187].

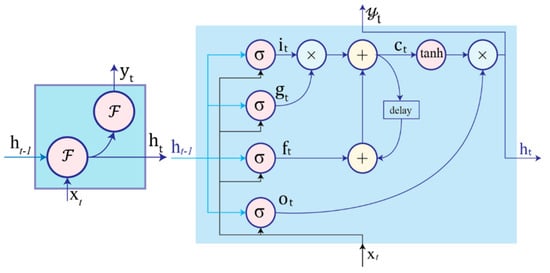

Unlike feed-forward neural networks, an RNN processes input data recurrently. Its connections form a directed graph along the temporal sequence, so an RNN exhibits dynamic behavior and can model temporal and sequential relationships through a hidden layer with recurrent connections. A typical RNN structure is shown in Figure 7: given the current input, $x_t$, and the previous hidden state, $h_{t-1}$, the network generates the current hidden state, $h_t$, and output, $y_t$, as follows:

$$h_t = \sigma\left(W_{hh} h_{t-1} + W_{xh} x_t + b_h\right), \qquad y_t = \sigma\left(W_{hy} h_t + b_y\right)$$

where $W_{hh}$, $W_{xh}$, and $W_{hy}$ are the weights for the hidden-to-hidden recurrent connection, the input-to-hidden connection, and the hidden-to-output connection, respectively, and $b_h$ and $b_y$ are the bias terms for the hidden and output states. Each node applies an element-wise non-linear activation function $\sigma$, such as the sigmoid, hyperbolic tangent (tanh), or rectified linear unit (ReLU).
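The recurrence $h_t = \sigma(W_{hh} h_{t-1} + W_{xh} x_t + b_h)$, $y_t = \sigma(W_{hy} h_t + b_y)$ can be unrolled over a sequence in a few lines. This sketch uses a single hidden unit (scalar weights) and tanh as $\sigma$ to stay short; the weight values are arbitrary assumptions.

```python
import math

def rnn_forward(xs, W_hh, W_xh, W_hy, b_h=0.0, b_y=0.0, h0=0.0):
    """Unroll h_t = tanh(W_hh*h_{t-1} + W_xh*x_t + b_h) and y_t = tanh(W_hy*h_t + b_y).

    Scalar (one-unit) version; real models use weight matrices and vector states.
    """
    h = h0
    hs, ys = [], []
    for x in xs:
        h = math.tanh(W_hh * h + W_xh * x + b_h)  # the same weights are reused at every step
        hs.append(h)
        ys.append(math.tanh(W_hy * h + b_y))
    return hs, ys

# An impulse at t = 0 persists (while decaying) in later hidden states via W_hh.
hs, ys = rnn_forward([1.0, 0.0, 0.0, 0.0], W_hh=0.5, W_xh=1.0, W_hy=2.0)
```

The decay of the impulse across steps also hints at the vanishing-gradient issue discussed below: with |W_hh| < 1, the influence of early inputs shrinks geometrically.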

Figure 7.

Schematic diagram of an RNN node and LSTM cell [202]. Left: RNN node, where $h_{t-1}$ is the previous hidden state, $x_t$ is the current input sample, $h_t$ is the current hidden state, $y_t$ is the current output, and $\sigma$ is the activation function. Right: LSTM cell with internal recurrence $c_t$ and outer recurrence $h_t$.

In addition, many researchers have worked extensively to improve the performance of RNN models for human activity recognition and have proposed various RNN-based models, including the Independently Recurrent Neural Network (IndRNN) [188], Continuous Time RNN (CTRNN) [189], Personalized RNN (PerRNN) [190], and Colliding Bodies Optimization RNN (CBO-RNN) [191]. Unlike previous models with one-dimensional time-series input, Lv et al. [192] build a CNN + RNN model that stacks multisensor data in each channel for fusion before feeding it into the CNN layer. Ketykó et al. [193] use an RNN to address the domain adaptation problem caused by inter-session, sensor placement, and inter-subject variations.

HAR benefits from longer context information, i.e., longer temporal intervals. However, backpropagating gradients through long sequences can cause vanishing or exploding gradients [194]. To address these challenges, long short-term memory (LSTM)-based RNNs [195] and gated recurrent units (GRUs) [196] were introduced to model temporal sequences and their long-range dependencies. The GRU introduces reset and update gates to control the flow of inputs to a cell [197,198,199,200,201]. The LSTM has been shown capable of memorizing and modelling long-term dependencies in data, and LSTMs have therefore taken a dominant role in time-series and textual data analysis, making substantial contributions to human activity recognition, speech recognition, handwriting recognition, natural language processing, video analysis, etc. As illustrated in Figure 7 [202], an LSTM cell is composed of: (1) the input gate, $i_t$, which controls the flow of new information; (2) the forget gate, $f_t$, which determines whether to forget content according to the internal state; (3) the output gate, $o_t$, which controls the output information flow; (4) the input modulation gate, $g_t$, which serves as the main input; (5) the internal state, $c_t$, which carries the cell’s internal recurrence; and (6) the hidden state, $h_t$, which contains information from samples previously encountered within the context window. The relationships between these variables are given in Equation (2) [202].
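For reference, one standard formulation of an LSTM cell that uses the six quantities listed above is given below. It is written in our own notation ($W_\ast$ input weights, $U_\ast$ recurrent weights, $b_\ast$ biases, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication) and may differ in minor details from the exact form of Equation (2) in [202].

```latex
\begin{aligned}
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
g_t &= \tanh\left(W_g x_t + U_g h_{t-1} + b_g\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
```

The additive update of $c_t$ is what lets gradients flow across many time steps: when $f_t \approx 1$, the internal state is carried forward almost unchanged.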

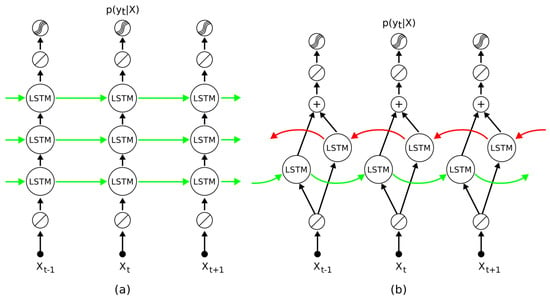

As shown in Figure 8, the input time series data is segmented into windows and fed into the LSTM model. For each time step, the model computes class prediction scores, which are then merged via late-fusion and used to calculate class membership probabilities through the softmax layer. Previous studies have shown that LSTMs have high performance in wearable HAR [199,202,203]. Researchers in [204] rigorously examine the impact of hyperparameters in LSTM with the fANOVA framework across three representative datasets, containing movement data captured by wearable sensors. The authors assessed thousands of settings with random hyperparameters and provided guidelines for practitioners seeking to apply deep learning to their own problem scenarios [204]. Bidirectional LSTMs, having both past and future recurrent connections, were used in [205,206] to classify activities.
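The segmentation and late-fusion steps can be sketched as follows. The window length, the step (50% overlap), and the per-time-step scores are illustrative assumptions; averaging the per-step scores before the softmax is one simple form of late fusion.

```python
import math

def sliding_windows(series, window, step):
    """Split a sequence into fixed-length windows; step < window gives overlap."""
    return [series[start:start + window]
            for start in range(0, len(series) - window + 1, step)]

def late_fusion(step_scores):
    """Average per-time-step class scores over a window, then apply softmax."""
    n_classes = len(step_scores[0])
    mean = [sum(s[c] for s in step_scores) / len(step_scores) for c in range(n_classes)]
    m = max(mean)
    exps = [math.exp(v - m) for v in mean]
    total = sum(exps)
    return [e / total for e in exps]

signal = list(range(10))  # stand-in for 10 consecutive sensor samples
windows = sliding_windows(signal, window=4, step=2)
# windows -> [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]

# Hypothetical per-time-step scores for 2 classes, produced by an LSTM over one window.
scores = [[1.0, 0.0], [2.0, 0.5], [1.5, 1.0], [0.5, 0.5]]
probs = late_fusion(scores)
```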

Figure 8.

The structure of LSTM and bi-directional LSTM model [204]. (a) LSTM network hidden layers containing LSTM cells and a final softmax layer at the top. (b) Bi-directional LSTM network with two parallel tracks in both future (green) and past (red) directions.

Researchers have also explored other architectures involving LSTMs to improve benchmarks on HAR datasets. Residual networks are much easier to train because the addition operator lets gradients pass through more directly; residual connections do not impede gradients and can help refine the output of layers. For example, [200] proposes a harmonic loss function, and [207] combines LSTM with batch normalization to achieve 92% accuracy with raw accelerometer and gyroscope data. Ref. [208] proposes a hybrid CNN and LSTM model (DeepConvLSTM) for activity recognition using multimodal wearable sensor data; DeepConvLSTM performed significantly better in distinguishing closely related activities, such as “Open/Close Door” and “Open/Close Drawer”. Moreover, a multitask LSTM is developed in [209] that first extracts features with shared weights and then classifies activities and estimates intensity in separate branches. Qin et al. proposed a deep learning algorithm that combines CNN and LSTM networks [210], achieving 98.1% accuracy on the SHL transportation mode classification dataset with CNN-extracted and hand-crafted features as input. Similarly, other researchers [211,212,213,214,215,216,217,218,219] have developed CNN-LSTM models for various application scenarios, taking advantage of the feature extraction ability of CNNs and the time-series reasoning ability of LSTMs. Interestingly, using a combined CNN and LSTM model, researchers in [219] attempt to eliminate sampling rate variability, missing data, and misaligned timestamps through data augmentation when using multiple on-body sensors. Researchers in [220] explored the effect of motion sensor placement and found that the chest position is ideal for physical activity identification.

Raw IMU and EMG time series data are commonly used as inputs to RNNs [193,221,222,223,224,225]. Several major datasets have been used to train and evaluate RNN models, including Sussex-Huawei Locomotion-Transportation (SHL) [188,198], PAMAP2 [192,226], and Opportunity [203]. Besides raw time series data [199], custom features are also commonly used as inputs to RNNs. Ref. [197] showed that training an RNN with raw data and with simple custom features yielded similar performance for gesture recognition (96.89% vs. 93.38%).

However, long time series may contain many sources of noise and irrelevant information. The attention mechanism was originally proposed in neural machine translation to address the inability of RNNs to remember long-term relationships. The attention module mimics human visual attention to build direct mappings between the words or phrases that carry the same meaning in two languages, eliminating interference from unrelated parts of the input when predicting the output. This is similar to what we as humans do when we translate a sentence or see a picture for the first time: we tend to focus on the most prominent and central parts. An RNN encoder attention module is centred around a vector of importance weights, which is computed by a trainable feedforward network and combined with the RNN outputs at all time steps through a dot product. The feedforward network takes all the intermediate RNN outputs as input to learn a weight for each time step. Ref. [201] combines attention with a 1D CNN and gated recurrent units (GRUs), achieving HAR performance of 96.5% ± 1.0%, 93.1% ± 2.2%, and 89.3% ± 1.3% on the Heterogeneous [86], Skoda [103], and PAMAP2 [91] datasets, respectively. Ref. [226] applies temporal attention and sensor attention to an LSTM to improve overall activity recognition accuracy by adaptively focusing on important time windows and sensor modalities.
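The attention module can be sketched in three steps: a small feedforward network scores each RNN output, a softmax turns the scores into importance weights, and the weighted sum (dot product of the weights with the outputs) forms a context vector. The one-layer scorer $e_t = v^\top \tanh(W h_t)$ follows a common additive form and is an assumption here, as are all weights and the toy RNN outputs.

```python
import math

def attention_pool(outputs, W, v):
    """Score each time step with v . tanh(W h_t), softmax the scores, return the weighted sum."""
    scores = []
    for h in outputs:
        hidden = [math.tanh(sum(w * x for w, x in zip(row, h))) for row in W]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]  # importance weight per time step, sums to 1
    context = [sum(a * h[d] for a, h in zip(alphas, outputs))
               for d in range(len(outputs[0]))]
    return alphas, context

# Toy RNN outputs for 3 time steps (2-D hidden states); the 2nd step is the salient one.
outputs = [[0.1, 0.0], [0.9, 0.8], [0.0, 0.1]]
W = [[1.0, 1.0], [1.0, -1.0]]
v = [2.0, 0.0]
alphas, context = attention_pool(outputs, W, v)
```

In a trained model, `W` and `v` are learned jointly with the RNN, so the network itself decides which time steps deserve the largest weights.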

In recent years, block-based modularized DL networks, such as GoogLeNet with its Inception modules and ResNet with its residual blocks, have been gaining traction, and the HAR community is actively exploring their application. In [227], the authors combined GoogLeNet’s Inception module with a GRU layer to build a HAR model, which showed performance improvements on three public datasets (Opportunity, PAMAP2, and Smartphones). Qian et al. [228] developed a model with a statistical module that learns moment statistics of all orders as features, an LSTM-based spatial module that learns correlations among sensor placements, and an LSTM + CNN temporal module that learns sequential dependencies along the time scale.

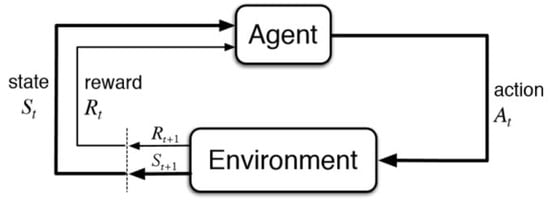

5.5. Deep Reinforcement Learning (DRL)

AE, DBN, CNN, and RNN fall within the realm of supervised or unsupervised learning. Reinforcement learning (RL) is another paradigm, in which an agent attempts to learn optimal policies for making decisions in an environment. At each time step, the agent takes an action and then receives a reward from the environment, and the state of the environment changes according to the action. The goal of the agent is to learn a (near) optimal policy, i.e., a mapping from states to actions (or to probabilities over actions), through interaction with the environment in order to maximize a cumulative long-term reward. Two entities (agent and environment) and three key elements (action, state, and reward) collectively form the RL paradigm, whose structure is shown in Figure 9.
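The cumulative long-term reward is commonly formalized as the discounted return $G_t = \sum_{k \ge 0} \gamma^k r_{t+k+1}$ with a discount factor $0 \le \gamma \le 1$. A short sketch computing it backwards for a made-up reward sequence (the reward scheme and discount values are assumptions):

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t for every step t, computed backwards: G_t = r_{t+1} + gamma * G_{t+1}.

    rewards[t] is the reward received after the action taken at step t.
    """
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns

# e.g., reward 1 for a correctly recognized activity and 0 otherwise (hypothetical)
print(discounted_returns([1.0, 0.0, 1.0], gamma=0.5))  # prints [1.25, 0.5, 1.0]
```

A smaller $\gamma$ makes the agent short-sighted; with $\gamma = 0$ each return equals the immediate reward.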

Figure 9.

A typical structure of a reinforcement learning network [229].

In the domain of HAR, [230] uses DRL to predict arm movements with 98.33% accuracy. Ref. [231] developed a reinforcement learning model that imitates the walking pattern of a lower-limb amputee on a musculoskeletal model, achieving 98.02% locomotion mode recognition accuracy. High locomotion recognition accuracy is critical because it helps lower-limb amputees avoid secondary impairments during rehabilitation. In [232], Bhat et al. propose an online HAR learning framework that uses reinforcement learning with a policy gradient algorithm for faster convergence, achieving 97.7% accuracy in recognizing six activities.

5.6. Generative Adversarial Network (GAN)

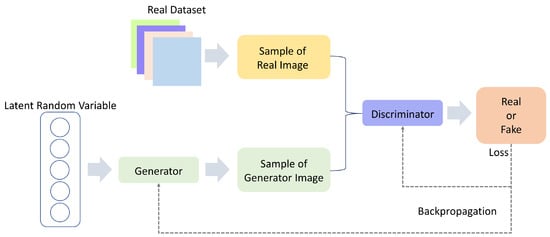

Originally proposed to generate credible fake images that resemble those in the training set, the GAN is a type of deep generative model that can create new samples after learning from real data [233]. It comprises two networks, the generator ($G$) and the discriminator ($D$), which compete against each other in a zero-sum game framework, as shown in Figure 10. During training, the generator takes a random vector $z$ as input and transforms it into plausible synthetic samples $G(z)$, challenging the discriminator to distinguish original samples $x$ from fake samples $G(z)$. In this process, the generator strives to make the discriminator’s output probability on fake samples, $D(G(z))$, approach one, whereas the discriminator tries to push $D(G(z))$ as close to zero as possible (and $D(x)$ towards one). The two adversaries are optimized by seeking a Nash equilibrium of this zero-sum game, that is, a point at which neither player can improve its payoff by unilaterally changing its strategy; in a zero-sum game, one player’s gain is exactly the other’s loss. However, it is not theoretically guaranteed that GAN zero-sum games reach a Nash equilibrium [234].
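The zero-sum game is usually written as the minimax objective $\min_G \max_D V(D, G)$ with $V(D, G) = \mathbb{E}_{x}[\log D(x)] + \mathbb{E}_{z}[\log(1 - D(G(z)))]$. The sketch below estimates $V$ from batches of discriminator outputs (made-up values) and illustrates the known fact that when the generator matches the data distribution, the optimal discriminator outputs 1/2 everywhere and $V = -\log 4$.

```python
import math

def gan_value(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs on real samples x.
    d_fake: discriminator outputs on generated samples G(z).
    The discriminator tries to maximize V; the generator tries to minimize it.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

confident_d = gan_value(d_real=[0.9, 0.95, 0.85], d_fake=[0.1, 0.05, 0.2])  # D separates well
fooled_d = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])                  # D cannot tell
print(round(fooled_d, 4), round(-math.log(4), 4))  # prints -1.3863 -1.3863
```

In practice, many implementations train the generator to maximize $\log D(G(z))$ instead of minimizing $\log(1 - D(G(z)))$, because the latter gives weak gradients early in training.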

Figure 10.

The structure of generative adversarial network.

GAN models have shown remarkable performance in generating high-quality synthetic data with rich details [235,236]. In the field of HAR, GANs have been applied as a semi-supervised learning approach to handle unlabeled or partially labelled data, improving performance by learning representations from the unlabeled data that the network later uses to generalize to unseen data distributions [237]. GANs have since shown the ability to generate balanced and realistic synthetic sensor data. Wang et al. [238] used GANs with a customized network to generate synthetic data from the public HAR dataset HASC2010corpus [239]. Similarly, Alharbi et al. [240] generated and assessed synthetic data using CNN or LSTM models as the generator and CNN layers as the discriminator; on two public datasets, Sussex-Huawei Locomotion (SHL) and the Smoking Activity Dataset (SAD), the results demonstrated synthetic data of high quality and diversity. Moreover, by oversampling with synthetic sensor data, researchers have augmented originally imbalanced training sets, alleviating the imbalance and achieving better performance. Refs. [241,242] generated verisimilar data for different activities, and Shi et al. [243] used the Boulic kinematic model, which captures three-dimensional positioning trends, to synthesize personified walking data. Because of their ability to generate new data, GANs have been widely applied to transfer learning in HAR to counter the dramatic performance drop when a pre-trained model is tested on unseen data from new users. In transfer learning, the knowledge learned in the source domain (subject) is transferred to the target domain to reduce the performance gap of the model in the target domain. For example, [244] used a GAN to perform cross-subject transfer learning for HAR because collecting data for each new user is infeasible. GAN-based cross-subject transfer learning outperformed methods without GANs on the Opportunity benchmark dataset in [244] and outperformed unsupervised learning on the UCI and USC-HAD datasets [245]. Furthermore, [246] demonstrated superior transfer learning performance under cross-body, cross-user, and cross-sensor conditions.
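The class-balancing step can be sketched as computing how many synthetic samples a trained generator would need to produce for each minority class so that every class matches the majority class. The labels and counts are made up, and the generator itself is not shown.

```python
from collections import Counter

def synthetic_samples_needed(labels):
    """Number of synthetic samples per class needed to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {label: target - n for label, n in counts.items()}

labels = ["walk"] * 500 + ["run"] * 120 + ["fall"] * 30
print(synthetic_samples_needed(labels))  # prints {'walk': 0, 'run': 380, 'fall': 470}
```

Fully equalizing the classes is only one option; with very small classes such as "fall" above, a generator trained on 30 real examples may not produce diverse enough data for 470 synthetic ones, so partial balancing is often preferred.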

However, much more effort is needed in generating verisimilar data to alleviate the burden and cost of collecting sufficient user data. Additionally, it is typically challenging to obtain well-trained GAN models owing to the wide variability in amplitude, frequency, and period of the signals obtained from different types of activities.

5.7. Hybrid Models

To combine the strengths of different methods, researchers have proposed hybrid models. Combining a CNN with an LSTM gives the model the ability to extract both local features and long-term dependencies in sequential data, which is especially useful for HAR time series. For example, Challa et al. [247] proposed a hybrid of CNN and bidirectional long short-term memory (BiLSTM), achieving accuracies of 96.37%, 96.05%, and 94.29% on the UCI-HAR, WISDM [81], and PAMAP2 [91] datasets, respectively. Dua et al. [248] proposed a CNN combined with a GRU and obtained accuracies of 96.20%, 97.21%, and 95.27% on the UCI-HAR, WISDM [81], and PAMAP2 [91] datasets, respectively. To give a straightforward view of the benefits of hybrid models, we list several papers using CNN only, LSTM only, CNN + GRU, and CNN + LSTM in Table 3 and Table 4. In addition, Zhang et al. [249] combined reinforcement learning with an LSTM model to improve adaptability to different kinds of sensors, including EEG (EID dataset), RFID (RSSI dataset) [250], and wearable IMU (PAMAP2 dataset) [91]. Ref. [251] employed a CNN for feature extraction and a reinforced selective attention model to automatically choose the most characteristic information from multiple channels.

Table 3.

Comparison of models on UCI-HAR dataset.

Table 4.

Comparison of models on PAMAP2 dataset.

5.8. Summary and Selection of Suitable Methods

Over the last decade, DL methods have gradually come to dominate a number of artificial intelligence areas, including sensor-based human activity recognition, owing to their automatic feature extraction, strong expressive power, and high performance. When sufficient data are available, DL methods are increasingly the first choice. Given the variety of DL approaches discussed above, choosing one wisely requires a full understanding of their pros and cons. To this end, we briefly analyze the characteristics of each approach and attempt to give readers high-level guidance on choosing a DL approach according to their needs and requirements.

The most salient characteristic of the auto-encoder is that it does not require any annotation. Therefore, it is widely adopted in the paradigm of unsupervised learning. Owing to its exceptional capability in dimension reduction and noise suppression, it is often used to extract low-dimensional feature representations from raw input. However, auto-encoders do not necessarily learn the characteristics that are correct and relevant to the problem at hand. Sensor-based auto-encoders also generally offer little insight into what they have learned, making it difficult to know which parameters to adjust during training. Deep belief networks (DBNs) are generative models generally used for solving unsupervised tasks by learning low-dimensional features. Today, DBNs are chosen far less often than other DL approaches because of their tedious training process and the increasing training difficulty as the network goes deeper [7].
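The two properties highlighted above, no annotation and noise suppression, can be seen in a minimal denoising auto-encoder. The sketch below assumes a single tanh hidden layer, a linear decoder, full-batch gradient descent, and synthetic sine-wave windows standing in for unlabeled sensor data; the layer size and learning rate are illustrative, not tuned values from the literature.

```python
import numpy as np

def train_denoising_autoencoder(X, hidden=4, noise_std=0.1, lr=0.05, epochs=2000, seed=0):
    """Fit a one-hidden-layer denoising auto-encoder on unlabeled windows X (N, D).
    The input is corrupted with Gaussian noise, but the loss compares the
    reconstruction with the CLEAN input, so no labels are needed."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    W1 = rng.normal(0, 0.1, (d, hidden)); b1 = np.zeros(hidden)
    W2 = rng.normal(0, 0.1, (hidden, d)); b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        X_noisy = X + rng.normal(0, noise_std, X.shape)   # corruption step
        H = np.tanh(X_noisy @ W1 + b1)                    # low-dimensional code
        X_hat = H @ W2 + b2                               # linear decoder
        err = X_hat - X                                   # reconstruction error vs clean input
        losses.append(float(np.mean(err ** 2)))
        # backpropagate the mean-squared reconstruction error
        d_out = 2.0 * err / err.size
        dW2 = H.T @ d_out; db2 = d_out.sum(axis=0)
        d_pre = (d_out @ W2.T) * (1.0 - H ** 2)           # through tanh
        dW1 = X_noisy.T @ d_pre; db1 = d_pre.sum(axis=0)
        W1 -= lr * dW1; b1 -= lr * db1
        W2 -= lr * dW2; b2 -= lr * db2
    return (W1, b1, W2, b2), losses

def encode(X, params):
    """Map windows to the learned low-dimensional representation."""
    W1, b1, _, _ = params
    return np.tanh(X @ W1 + b1)

# Usage: 200 synthetic 32-sample windows with random amplitude and phase.
rng = np.random.default_rng(1)
t = np.linspace(0, 1, 32)
amp = rng.uniform(0.5, 1.5, (200, 1))
phase = rng.uniform(0, 2 * np.pi, (200, 1))
X = amp * np.sin(2 * np.pi * 3 * t + phase)
params, losses = train_denoising_autoencoder(X)
codes = encode(X, params)   # (200, 4) features for a downstream classifier
```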

CNN architectures are powerful at extracting hierarchical features owing to their layer-by-layer hierarchical structure. Compared with other approaches such as RNNs and GANs, CNNs are relatively easy to implement. Besides, as CNNs are among the most studied DL approaches in image processing and computer vision, a large range of CNN variants is available to transfer to sensor-based HAR applications. When sensor data are represented as two-dimensional input, we can start directly from models pre-trained on a large image dataset (e.g., ImageNet) to speed up convergence and achieve better performance. Therefore, the CNN approach offers more flexibility in the choice of network architecture (e.g., GoogLeNet, MobileNet, ResNet) than other DL approaches. However, CNN architectures require fixed-size input, in contrast to RNNs, which accept input of flexible length. In addition, compared with unsupervised learning methods such as auto-encoders and DBNs, CNNs require a large amount of annotated data, which usually demands expensive labelling resources and human effort to prepare the dataset.

The biggest advantage of RNNs and LSTMs is that they model time series data (nearly all sensor data) and temporal relationships very well. Additionally, RNNs and LSTMs accept input of flexible length. The factors that prevent RNNs and LSTMs from becoming the de facto method in DL-based HAR are that they are difficult to train in multiple respects: they require long training times and are very susceptible to vanishing/exploding gradients. It is also difficult to train them to efficiently model long time series.
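The fixed-size requirement of CNNs is usually met by cutting the continuous sensor stream into overlapping windows of equal length, whereas an RNN could consume the variable-length stream directly. A minimal sketch of this segmentation step follows; the window length of 128 samples and the 50% overlap (step of 64) are illustrative choices, not prescribed values.

```python
import numpy as np

def sliding_windows(signal, window, step):
    """Cut a (T, C) multichannel stream into overlapping fixed-size windows.
    Returns an array of shape (num_windows, window, C); trailing samples
    that do not fill a complete window are dropped."""
    signal = np.asarray(signal)
    if signal.shape[0] < window:
        return np.empty((0, window) + signal.shape[1:])
    starts = range(0, signal.shape[0] - window + 1, step)
    return np.stack([signal[s:s + window] for s in starts])

# Usage: a 300-sample, 3-axis accelerometer recording (synthetic values).
stream = np.random.default_rng(0).normal(size=(300, 3))
windows = sliding_windows(stream, window=128, step=64)
print(windows.shape)  # (3, 128, 3): windows start at samples 0, 64, and 128
```

Every window now has the same shape, so the batch can be fed to a CNN; the last 44 samples are discarded, which is the usual price of fixed-size segmentation.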

GAN, as a generative model, can be used as a data augmentation method. Because it has a strong expressive capability to learn and imitate the latent distribution of the target data, it outperforms traditional data augmentation methods [36]. Owing to this inherent data augmentation ability, GAN has the advantage of alleviating data demands in the early stages. However, GANs are often considered hard to train because the generator and the discriminator are trained alternately. Many variants of GAN and special training techniques have been proposed to tackle this convergence issue [257,258,259].
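For reference, the alternating training mentioned above stems from the standard GAN objective, in which a discriminator D and a generator G, fed with noise z drawn from a prior p_z, play a two-player minimax game. The notation below is the generic formulation, not that of any specific HAR work cited here:

```latex
\min_{G}\max_{D}\; V(D,G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

In each iteration, the discriminator's parameters are updated to increase V while G is held fixed, and then the generator's parameters are updated to decrease V while D is held fixed (in practice, the generator often maximizes log D(G(z)) instead, which gives stronger gradients early in training). Because the two updates pull in opposite directions, the objective need not decrease monotonically, which is one reason GAN training is difficult to stabilize.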

Reinforcement learning is a relatively new approach that is being explored for select areas of HAR, such as modelling muscle and arm movements [230,231]. Unlike supervised learning, reinforcement learning does not require explicit labels. Additionally, owing to its online nature, reinforcement learning agents can be trained while deployed in a real system. However, reinforcement learning agents are often difficult and time-consuming to train. Moreover, in the realm of DL-based HAR, the reward of the agent has to be given by a human, as in the case of [230,232]. In other words, even though people do not have to give explicit labels, humans are still required to provide something akin to a label (the reward) to train the agent.
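The "reward instead of label" point can be made concrete with the simplest reinforcement learning setting, an epsilon-greedy contextual bandit. This is a minimal sketch, not the method of [230,232]: the contexts, activity names, and the simulated human who rewards a correct guess are all hypothetical. The agent never sees the true activity; it only receives a scalar reward for the activity it reports.

```python
import random

def train_label_bandit(contexts, true_activity, actions, steps=3000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns which activity to report for each context.
    The 'human' is simulated: reward 1 if the reported activity matches
    true_activity[context], else 0. The agent never reads true_activity directly."""
    rng = random.Random(seed)
    q = {(c, a): 0.0 for c in contexts for a in actions}   # estimated reward per pair
    n = {(c, a): 0 for c in contexts for a in actions}     # visit counts
    for _ in range(steps):
        c = rng.choice(contexts)
        if rng.random() < epsilon:
            a = rng.choice(actions)                          # explore
        else:
            a = max(actions, key=lambda act: q[(c, act)])    # exploit current estimate
        reward = 1.0 if a == true_activity[c] else 0.0       # simulated human feedback
        n[(c, a)] += 1
        q[(c, a)] += (reward - q[(c, a)]) / n[(c, a)]        # incremental mean of rewards
    return {c: max(actions, key=lambda act: q[(c, act)]) for c in contexts}

# Usage with hypothetical sensor-derived contexts and activity classes.
contexts = ["high_variance", "periodic", "low_variance"]
actions = ["walking", "running", "sitting"]
truth = {"high_variance": "running", "periodic": "walking", "low_variance": "sitting"}
policy = train_label_bandit(contexts, truth, actions)
print(policy)
```

Note that the simulated reward function still encodes the ground truth, which mirrors the observation above: the human supplies something akin to a label.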

When choosing a DL approach, we have a list of factors to consider, including the complexity of the target problem, the dataset size, the availability and size of annotations, data quality, the available computing resources, and the training-time requirements. Firstly, we have to evaluate the problem complexity to decide upon promising avenues of machine learning methods. For example, if the problem is simple enough to resolve with the provided sensor modality, it is very likely that manual feature engineering and a traditional machine learning method can provide satisfactory results, in which case no DL method is needed. Secondly, before choosing DL, we should make sure the dataset is large enough to support a DL method. The lack of a sufficiently large corpus of high-quality labelled data is a major reason why DL methods fail to produce the expected results. When a DL model is trained on a limited dataset, it is prone to overfitting and its generalizability suffers, so a very deep network may not be a good choice. One option is to use a shallow neural network or a traditional ML approach. Another option is to use specific algorithms to make the most out of the data; for example, data augmentation methods such as GAN can be readily implemented. Thirdly, another determining factor is the availability and size of annotations. When a large corpus of unlabeled sensor data is at hand, a semi-supervised learning scheme is a promising direction, which will be discussed later in this work. Besides the availability of sensor data, the data quality also influences the network design. If the sensor is vulnerable to environmental noise, resulting in a low signal-to-noise ratio (SNR), some type of denoising structure (e.g., a denoising auto-encoder) and a deeper model can be considered to increase the noise resilience of the DL model.
Finally, a full evaluation of the available computing resources and the expected model training time is essential for developers and researchers to choose a suitable DL approach.
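The checklist above can be condensed into a small helper, as a rough sketch only. The function `suggest_approach` and its threshold `min_labeled_for_dl` are hypothetical: the default of 10,000 labelled windows is an arbitrary placeholder, not a value from the literature, and the computing-budget factor is left out because it depends on the target hardware.

```python
def suggest_approach(simple_problem, n_labeled, n_unlabeled, low_snr,
                     min_labeled_for_dl=10_000):
    """Hypothetical encoding of the selection checklist.
    min_labeled_for_dl is a placeholder threshold, not a published value."""
    if simple_problem:
        # step 1: simple problems rarely need DL at all
        return ["hand-crafted features + traditional ML"]
    suggestions = []
    if n_labeled < min_labeled_for_dl:
        # step 2: small datasets risk overfitting a very deep network
        suggestions.append("shallow network or traditional ML")
        suggestions.append("data augmentation (e.g., GAN)")
    else:
        suggestions.append("deep model (CNN, LSTM, or hybrid)")
    if n_unlabeled > n_labeled:
        # step 3: abundant unlabeled data favours semi-supervised learning
        suggestions.append("semi-supervised learning")
    if low_snr:
        # data quality: noisy sensors call for denoising structures
        suggestions.append("denoising structure (e.g., denoising auto-encoder) and/or deeper model")
    return suggestions

print(suggest_approach(False, n_labeled=500, n_unlabeled=50_000, low_snr=True))
```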

6. Challenges and Opportunities

Though HAR has seen rapid growth, there are still a number of challenges that, if addressed, could further improve the status quo, leading to increased adoption of novel HAR techniques in existing and future wearables. In this section, we discuss these challenges and opportunities in HAR. Note that the issues discussed here apply to HAR in general, not only to DL-based HAR. Under our research question (challenges and opportunities), we discuss and analyze the following four questions, which correspond to four major constituents of machine learning.

- What are the challenges in data acquisition? How do we resolve them?

- What are the challenges in label acquisition? What are the current methods?

- What are the challenges in modeling? What are potential solutions?

- What are the challenges in model deployment? What are potential opportunities?

6.1. Challenges in Data Acquisition

Data is the cornerstone of artificial intelligence: a model can only perform as well as the quality of its training data allows. To build generalizable models, careful attention should be paid to data collection, ensuring that the participants are representative of the population of interest. Moreover, determining a sufficient training dataset size is important in HAR. Currently, there is no well-defined method for determining the sample size of training data. However, one approach, shown by Yang et al. [260], is to demonstrate that the error rate converges as a function of training data size. Acquiring a massive amount of high-quality data at a low cost is critical in every domain. In HAR, collecting raw data is labor-intensive given the large number of different wearables. Therefore, developing innovative approaches to high-quality data augmentation is imperative for the growth of HAR research.
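The convergence check described above can be sketched as a learning curve: train on increasingly large subsets, record the test error, and stop collecting data once the curve flattens. This is an illustration in the spirit of that idea, not the procedure of Yang et al. [260]; the nearest-centroid classifier is a cheap stand-in for a real model, the data are synthetic Gaussian clusters, and the tolerance `tol` is a hypothetical choice.

```python
import numpy as np

def nearest_centroid_error(X_tr, y_tr, X_te, y_te):
    """Test error of a nearest-centroid classifier (a cheap stand-in model)."""
    classes = np.unique(y_tr)
    centroids = np.stack([X_tr[y_tr == k].mean(axis=0) for k in classes])
    dists = ((X_te[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    pred = classes[dists.argmin(axis=1)]
    return float(np.mean(pred != y_te))

def learning_curve(X_tr, y_tr, X_te, y_te, sizes, seed=0):
    """Error rate as a function of training-set size (nested random subsets)."""
    order = np.random.default_rng(seed).permutation(len(y_tr))
    return [nearest_centroid_error(X_tr[order[:s]], y_tr[order[:s]], X_te, y_te)
            for s in sizes]

def has_converged(errors, tol=0.01):
    """True if the last increase in data changed the error by less than tol."""
    return len(errors) >= 2 and abs(errors[-1] - errors[-2]) < tol

# Usage on synthetic 3-class, 6-feature data (stand-in for extracted features).
rng = np.random.default_rng(42)
means = rng.normal(0, 2, (3, 6))
y = rng.integers(0, 3, 1200)
X = means[y] + rng.normal(0, 1, (1200, 6))
errors = learning_curve(X[:1000], y[:1000], X[1000:], y[1000:], sizes=[10, 50, 200, 1000])
print(errors, has_converged(errors))
```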

6.1.1. The Need for More Data

Data collection requires a considerable amount of effort in HAR. In particular, when researchers propose their own hardware, collecting data from users is unavoidable. Data augmentation is commonly used to generate synthetic training data when data are scarce; for example, synthetic noise is applied to real data to obtain new training samples. In general, training on a dataset augmented with synthetic samples yields higher classification accuracy than training on the original dataset alone [36,56,261,262]. Giorgi et al. augmented their dataset by varying each signal sample with a translation drawn from a small uniform distribution and showed improvements in accuracy using this augmented dataset [56]. Ismail Fawaz et al. [262] used Dynamic Time Warping (DTW) to augment data and evaluated it on the UCR archive [105]. Deep learning methods are also used to augment datasets to improve performance [238,263,264]. Alzantot et al. [264] and Wang et al. [238] employed GANs to synthesize sensor data from existing sensor data. Ramponi et al. [263] designed a conditional GAN-based framework that generates new irregularly sampled time series to augment imbalanced datasets. Several works extracted 3D motion information from videos and transferred this knowledge to synthesize virtual on-body IMU sensor data [265,266]. In this way, they realized cross-modal IMU sensor data generation using traditional computer vision and graphics methods. Opportunity: We have listed some of the most recent works focusing on cross-modal sensor data synthesis. However, few researchers (if any) have used a deep generative model to build a video-sensor multi-modal system. More broadly, many works use cross-modal deep generative models (such as GANs) for data synthesis, e.g., from video to audio [267] and from text to image and vice versa [268,269]. Therefore, taking advantage of cutting-edge deep generative models may help address the scarcity of wearable sensor data [270].
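The simplest augmentations mentioned above, synthetic noise and translation, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the exact procedure of [56]: "jitter" adds zero-mean Gaussian noise to every sample, and "translation" is interpreted here as one random per-channel offset drawn from a small uniform distribution. The magnitudes `sigma` and `max_shift` are hypothetical and would need tuning to the sensor's units.

```python
import numpy as np

def jitter(window, sigma, rng):
    """Synthetic-noise augmentation: add zero-mean Gaussian noise to every sample."""
    return window + rng.normal(0.0, sigma, window.shape)

def translate(window, max_shift, rng):
    """Translation augmentation (our interpretation): add one offset per channel,
    drawn from U(-max_shift, max_shift), to the whole window."""
    offset = rng.uniform(-max_shift, max_shift, size=(1, window.shape[1]))
    return window + offset

def augment(windows, labels, copies=2, sigma=0.05, max_shift=0.1, seed=0):
    """Return the original windows plus `copies` perturbed versions of each.
    Labels are simply repeated, since these perturbations preserve the activity."""
    rng = np.random.default_rng(seed)
    new_x, new_y = [windows], [labels]
    for _ in range(copies):
        new_x.append(np.stack([translate(jitter(w, sigma, rng), max_shift, rng)
                               for w in windows]))
        new_y.append(labels)
    return np.concatenate(new_x), np.concatenate(new_y)

# Usage: 10 synthetic windows of 128 samples x 3 axes with 3 activity labels.
X = np.random.default_rng(1).normal(size=(10, 128, 3))
y = np.arange(10) % 3
X_aug, y_aug = augment(X, y, copies=2)
print(X_aug.shape, y_aug.shape)  # (30, 128, 3) (30,)
```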
Another avenue of research is to utilize transfer learning: borrowing well-trained models from domains with high-performing classifiers (e.g., images) and adapting them using a few samples of sensor data.

6.1.2. Data Quality and Missing Data

The quality of models is highly dependent on the quality of the training data. Many real-world collection scenarios introduce different sources of noise that degrade data quality, such as electromagnetic interference or uncertainty in task scheduling on devices that perform sampling [271]. In addition to improving hardware systems, multiple algorithms have been proposed to clean or impute poor-quality data. Data imputation is one of the most common methods to replace poor-quality data or to fill in missing data when sampling rates fluctuate greatly. For example, Cao et al. introduced a bidirectional recurrent neural network to impute time series data on the UCI localization dataset [272]. Luo et al. utilized a GAN to infer missing time series data [273]. Saeed et al. proposed an adversarial autoencoder (AAE) framework to perform data imputation [132]. Opportunity: To address this challenge, more research is needed into automated methods for evaluating and quantifying data quality, so that poor-quality data can be better identified, removed, and/or corrected. Additionally, it has been experimentally shown that deep neural networks can learn well even when trained on noisy data, provided that both the networks and the dataset are large enough [274]. This motivates HAR researchers to also focus on other important areas, such as how to efficiently deploy larger models in real systems (Section 6.4) and how to generate more data (Section 6.1.1), which could potentially help solve this problem.
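Before turning to learned imputation models such as those above, a common baseline is to fill gaps by linear interpolation over time and to resample irregular readings onto a uniform grid. The sketch below is that simple baseline, not the RNN-, GAN-, or AAE-based methods of [272], [273], or [132]; it assumes timestamps in seconds, sorted in increasing order, with missing readings marked as NaN. Note that `np.interp` holds the nearest observed value constant beyond the first and last observations rather than extrapolating.

```python
import numpy as np

def impute_linear(timestamps, values):
    """Fill NaN gaps in a 1-D sensor channel by linear interpolation over time.
    Timestamps must be increasing; at least one value must be observed."""
    t = np.asarray(timestamps, dtype=float)
    v = np.asarray(values, dtype=float)
    observed = ~np.isnan(v)
    filled = v.copy()
    filled[~observed] = np.interp(t[~observed], t[observed], v[observed])
    return filled

def resample_uniform(timestamps, values, rate_hz):
    """Map irregularly sampled (and possibly gappy) readings onto a uniform grid."""
    t = np.asarray(timestamps, dtype=float)
    grid = np.arange(t[0], t[-1] + 1e-9, 1.0 / rate_hz)
    return grid, np.interp(grid, t, impute_linear(t, values))

# Usage: a gap at t=1 is interpolated; the trailing gap repeats the last value.
print(impute_linear([0, 1, 2, 3], [1.0, np.nan, 3.0, np.nan]))  # [1. 2. 3. 3.]
grid, vals = resample_uniform([0.0, 0.03, 0.1], [0.0, 3.0, 10.0], rate_hz=20)
print(grid, vals)  # grid [0, 0.05, 0.1], values approximately [0, 5, 10]
```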

6.1.3. Privacy Protection