Abstract

Detecting and classifying skin cancer is a difficult task because of the variation in skin textures and injuries. Manually detecting skin lesions from dermoscopy images is difficult and time-consuming. Recent advancements in the internet of things (IoT) and artificial intelligence for medical applications have demonstrated improvements in both accuracy and computational time. In this paper, a new method for multiclass skin lesion classification using best deep learning feature fusion and an extreme learning machine is proposed. The proposed method includes five primary steps: image acquisition and contrast enhancement; deep learning feature extraction using transfer learning; best feature selection using hybrid whale optimization and an entropy-mutual information (EMI) approach; fusion of the selected features using a modified canonical correlation based approach; and, finally, extreme learning machine based classification. The feature selection step improves the system's computational efficiency and accuracy. Experiments are carried out on two publicly available datasets, HAM10000 and ISIC2018, on which the method achieves accuracies of 93.40% and 94.36%, respectively. Compared with state-of-the-art (SOTA) techniques, the proposed method achieves higher accuracy. Furthermore, it is computationally efficient.

1. Introduction

Skin cancer is caused by the abnormal growth of skin cells, and the number of skin cancer cases has increased dramatically in recent years [1]. This increase is attributed to the depletion of the ozone layer, which protects against ultraviolet rays [2]. Squamous cell carcinoma, actinic keratosis (solar keratosis), melanoma, and basal cell carcinoma are all types of skin cancer [3]. Melanoma is the most dangerous of these types. It begins in the melanocytes, and melanoma lesions often resemble a mole and are brown or black in color [4]. Although melanoma accounts for only 7% of skin cancer cases, it causes 75% of skin cancer deaths [5].

In 2017, 3590 deaths were reported in the USA out of 95,360 skin cancer cases, of which 87,110 were melanoma. In 2018, 13,460 deaths were reported out of 99,550 cases, of which 91,270 were melanoma. In 2019, 104,350 cases of skin cancer were reported in the USA alone: 62,320 in men and 42,030 in women. Of these, 96,480 were melanoma (57,220 in men and 39,260 in women), and 7320 deaths were due to melanoma [6]. More than 15,000 people in the USA die every year from skin cancer. In the next few decades, the mortality rate due to melanoma could increase [7].

Because of the differences in skin textures and injuries, detecting skin cancer is a difficult task. Dermatologists therefore employ a noninvasive technique known as dermoscopy to detect skin lesions at an early stage [8]. In dermoscopy, a gel is first applied to the affected area, and a magnified image is then acquired with a magnifying tool. This magnified image gives a better view of the structure of the lesion area. The detection accuracy depends on the experience of the dermatologist [9]; one study shows that it can vary between 75% and 84% [10]. Moreover, manual identification of skin lesions using dermoscopy is time-consuming and error-prone, even for experienced dermatologists [11]. Therefore, researchers have introduced various computer-aided diagnosis (CAD) techniques based on machine learning and deep CNN features [12].

Dermatologists can use CAD systems to identify skin lesions more quickly and accurately. The key steps of a CAD system are skin image dataset acquisition, feature extraction and selection, and classification [13]. In the last few years, deep features have proven far more effective for skin lesion detection and classification than traditional feature extraction techniques [14]. Deep features are typically extracted from the fully connected layers of a CNN model and then used for classification [15]. Unlike traditional texture, color, and shape features, deep features capture both local and global information about an image: local information is extracted by the convolutional layers, while global information is captured by the 1D layers (global average pooling and fully connected) [16]. In traditional methods, shape (e.g., HOG), color, and texture (e.g., LBP) features are extracted separately [17].

Several researchers have faced the problem of redundant features, which mislead multiclass lesion classification. It is therefore essential to develop a feature selection technique that selects the most appropriate features for the final classification [18]. Recently introduced feature selection techniques include grasshopper optimization and binary whale optimization, among others [19]. Compared with binary classification, multiclass classification is more complex because skin lesions of different classes look similar. Moreover, high intraclass variation is another problem for multiclass classification.

In this work, a new framework is proposed based on hybrid whale optimization for deep feature selection, with the selected features later fused using a modified canonical correlation approach. A contrast stretching method is proposed to improve the visibility of the lesion region, and the deep learning model is trained on the contrast-enhanced images instead of the original dermoscopic images. Further, two feature selection techniques are used to improve accuracy and reduce computational time.

2. Related Work

Several techniques have been developed for the robust segmentation and classification of skin lesions [8]. These techniques rely on computer vision-based machine learning [20], including supervised learning and deep learning methods for robust detection, segmentation, and classification of skin lesions [21]. Dorj et al. [22] extracted deep features from a pre-trained AlexNet model and fed them to an SVM for classification, obtaining promising results for lesion classification. Ren et al. [23] presented a fusion mechanism for skin lesion segmentation in which spatial attention and channel attention modules extracted information from the channels of skin images; the resulting serial network fusion achieved competitive segmentation accuracy. In [24], automated skin lesion segmentation and classification were performed using a deep CNN-based mutual bootstrapping network, in which each of the two tasks used the information learned by the other. This method was evaluated on challenging skin lesion datasets and achieved robust segmentation and classification performance. In [25], a deep learning technique was deployed to detect and classify melanoma from dermoscopic images: a deep residual network extracted deep features, Fisher vectors were used for image encoding, and an SVM with a chi-square kernel classified the encoded images. The method achieved robust performance on the challenging ISBI 2016 dataset. Khan et al. [26] proposed a deep learning-based methodology for efficient segmentation and classification of skin lesions. Segmentation was performed using a mask region-based convolutional neural network (Mask R-CNN), a feature pyramid network with ResNet50 was used for feature extraction, and lesions were classified with a SoftMax classifier. The method was evaluated on the HAM10000 dataset and achieved competitive performance.

A novel deep learning-based cascaded architecture was presented in [27] for skin lesion recognition, in which knowledge diffusion was used to perform both lesion analysis and lesion segmentation. Data augmentation was performed to reduce class imbalance, and the technique was compared with state-of-the-art (SOTA) techniques for skin lesion classification and segmentation. In [28], a superpixel-based method was adopted for efficient segmentation and recognition of skin lesions; image registration and segmentation techniques were fused for feature derivation in order to achieve better lesion segmentation results. Deep learning-based techniques [29] achieve robust performance in skin lesion segmentation, detection, and classification. Sikkandar et al. [30] proposed a method combining GrabCut and a neuro-fuzzy algorithm for skin lesion segmentation and recognition: preprocessing was performed with a top-hat filter, segmentation with GrabCut, feature extraction with the Inception deep CNN model, and classification with a neuro-fuzzy classifier. The technique was applied to the ISIC dataset and achieved robust lesion segmentation and classification performance. The researchers in [31] applied transfer learning to the AlexNet network for skin lesion classification on the PH2 dataset and achieved an accuracy of 98.61%.

The methods described above follow standard steps for lesion classification: preprocessing of lesion images, segmentation of skin lesions, extraction of deep learning features from the segmented lesions, and, finally, classification using supervised learning methods. Features extracted using pre-trained deep learning models yield higher accuracy than features extracted using traditional techniques. The key limitation of these methods is the inclusion of redundant features, which not only reduces accuracy but also increases computational time. Furthermore, most of them concentrate on the binary classification problem. In this paper, we propose a new framework for multiclass classification using deep learning and fusion of the best selected features.

3. Datasets

Two datasets are used in the experiments: HAM10000 and ISIC2018. Details of both datasets are given below.

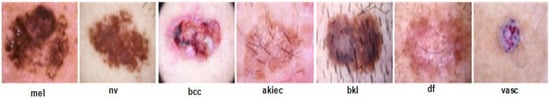

HAM10000 Dataset: The HAM10000 (“Human Against Machine with 10,000 training images”) dataset is one of the largest publicly available datasets for detecting pigmented skin lesions; it contains 10,015 dermoscopy images and is accessible through the ISIC repository [32]. The images are grouped into seven classes: melanocytic nevus (nv = 6705), actinic keratosis (akiec = 327), dermatofibroma (df = 115), basal cell carcinoma (bcc = 514), vascular lesion (vasc = 142), benign keratosis (bkl = 1099), and melanoma (mel = 1113) [33]. The dataset contains 54% male and 45% female skin lesion images. It is a complex dataset in which many images exhibit low inter-class and high intra-class variation; classifying these lesions is therefore difficult, and the risk of misclassification is high. A few sample images are shown in Figure 1.

Figure 1.

Sample images of the HAM10000 dataset: melanoma (mel), melanocytic nevus (nv), basal cell carcinoma (bcc), actinic keratosis/intraepithelial carcinoma (akiec), benign keratosis (bkl), dermatofibroma (df), and vascular lesion (vasc).

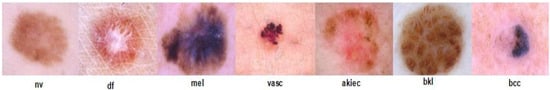

ISIC 2018 Dataset: The ISIC 2018 dataset was published by the International Skin Imaging Collaboration (ISIC) as a large-scale collection of over 12,500 dermoscopy images. It supports three tasks: lesion segmentation, attribute detection, and disease classification [34]. For the classification task, it contains more than 10,000 images from seven classes [35]. Sample images are shown in Figure 2. The ISIC 2018 challenge poses two main problems: first, some classes contain only a limited number of images; and, second, the number of images is imbalanced across classes, which makes correct classification difficult.

Figure 2.

Sample images of ISIC 2018 dataset.

4. Proposed Methodology

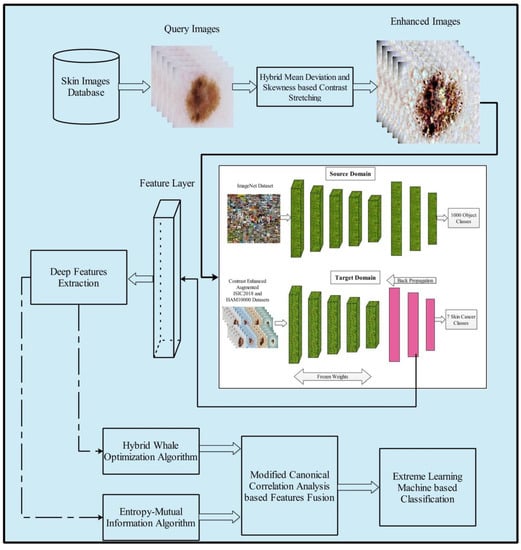

This section presents the proposed method for multiclass skin cancer classification, which uses deep learning and fusion of the best selected features. The architecture of the proposed method is illustrated in Figure 3. It consists of five primary steps: image acquisition and contrast enhancement; deep learning feature extraction using transfer learning; selection of the best features using hybrid whale optimization and the entropy-mutual information (EMI) approach; fusion of the selected features using a modified canonical correlation based approach; and, finally, ELM-based classification. Each step is detailed in the following subsections.

Figure 3.

Proposed multiclass skin lesion classification using optimal deep learning features fusion.

4.1. Contrast Enhancement

Contrast enhancement is an important step in medical imaging for improving the local contrast of an image. In medical image processing, contrast stretching is usually applied for accurate skin lesion detection; in this step, however, it is applied to enable more useful feature extraction. Many techniques in the literature address global contrast enhancement, but very few focus on local contrast stretching. In this work, a hybrid contrast stretching technique is proposed based on the absolute mean deviation and a skewness function. This method increases the contrast of the skin lesion region and makes the image more useful for further processing. Mathematically, this process is defined as follows:

Consider a dermoscopic image database and an input image from it with a fixed spatial dimension and a given total number of pixels. The absolute mean deviation (AMD) and skewness of the image pixels are formulated through Equations (1) and (2).

where the quantities denote the AMD of the image, the average mean of the dataset, and the standard deviation, respectively. Using these values, the final transformed image is obtained as follows:

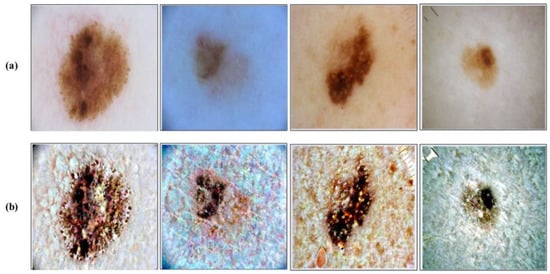

where the output is the final transformed image, computed from the image pixels. This process is applied to all images of the selected datasets before the deep learning models are trained. A few sample results are shown in Figure 4, which clearly illustrates that the lesion region is easier to identify after the proposed transformation.

Figure 4.

Contrast enhancement results. (a) Original dermoscopic images; (b) Proposed contrast enhancement.
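Equations (1) and (2) are not reproduced in this copy of the text. As a minimal sketch, assuming the standard textbook definitions of absolute mean deviation (mean absolute deviation from the mean) and population skewness, the two statistics could be computed per image as below. The function names are hypothetical, the optional `mean` argument reflects the text's mention of a dataset-level mean, and the final transformation that combines these statistics is not attempted because its form is not recoverable here.

```python
import numpy as np


def absolute_mean_deviation(image, mean=None):
    """Mean of |pixel - mean| over all pixels.

    If `mean` is None the image's own mean is used; pass a dataset-level
    mean instead to follow the text, which refers to the dataset mean.
    """
    x = np.asarray(image, dtype=np.float64).ravel()
    mu = x.mean() if mean is None else float(mean)
    return float(np.mean(np.abs(x - mu)))


def skewness(image):
    """Population skewness: mean of the cubed standardized pixel values."""
    x = np.asarray(image, dtype=np.float64).ravel()
    mu, sigma = x.mean(), x.std()
    if sigma == 0.0:
        return 0.0  # a constant image has no asymmetry
    return float(np.mean(((x - mu) / sigma) ** 3))


if __name__ == "__main__":
    img = np.array([[0, 0], [0, 4]])
    print(absolute_mean_deviation(img))  # 1.5
    print(skewness(img))
```

A positive skewness, as in the toy image above, indicates a long tail of bright pixels; how the paper combines this with AMD is left to its Equation (3).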

4.2. Convolutional Neural Network

In medical imaging, CNNs have shown promising performance for both segmentation and classification tasks. A CNN architecture generally consists of several layers, such as input, convolutional, activation (ReLU), pooling, fully connected, and SoftMax layers.

The first layer of a CNN is the input layer, whose size is height × width × channels, where the last dimension denotes the number of channels. This layer passes all image pixels to the next layer, from which both low-level and high-level features are subsequently extracted.

The second layer is a convolutional layer, which extracts feature information from the images using the convolution operation, a dot product between the kernel and each local image patch. This layer is defined as follows:

where the left-hand side denotes the output of the convolutional layer. This output is a matrix containing both positive and negative values. Negative values are not required for the next step, so an activation layer, called the ReLU layer, is applied. This layer sets negative values to zero and keeps positive values unchanged. Mathematically, it is defined as follows:

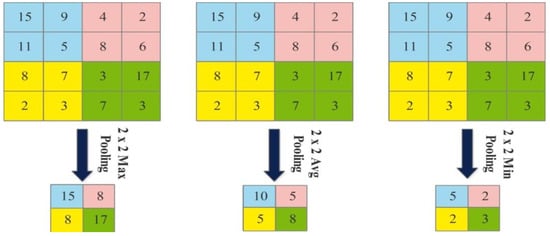

The next layer is the pooling layer, which reduces the spatial size of the feature maps produced by the convolutional layer. This layer is usually applied between two convolutional layers. The pooling operations are illustrated in Figure 5, and the output is defined mathematically as follows:

Figure 5.

An example of max pooling, average pooling, and min pooling.
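To make the convolution, ReLU, and pooling operations above concrete, here is a minimal single-channel NumPy sketch. It assumes a "valid" (no padding) convolution implemented as cross-correlation, as most CNN frameworks do, and a window size and stride of 2 for pooling; all function names are illustrative and are not taken from the paper's MATLAB implementation.

```python
import numpy as np


def conv2d_valid(x, kernel, bias=0.0):
    """Single-channel 'valid' convolution as used in CNNs (cross-correlation:
    the kernel is not flipped). Each output value is the dot product of the
    kernel with one image patch, plus a bias."""
    x = np.asarray(x, dtype=np.float64)
    k = np.asarray(kernel, dtype=np.float64)
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k) + bias
    return out


def relu(x):
    """Set negative values to zero and keep positive values unchanged."""
    return np.maximum(x, 0.0)


def pool2d(x, size=2, stride=2, mode="max"):
    """Max, average, or min pooling over size x size windows."""
    reduce = {"max": np.max, "avg": np.mean, "min": np.min}[mode]
    x = np.asarray(x, dtype=np.float64)
    oh = (x.shape[0] - size) // stride + 1
    ow = (x.shape[1] - size) // stride + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            r, c = i * stride, j * stride
            out[i, j] = reduce(x[r:r + size, c:c + size])
    return out
```

For the 4 × 4 input `np.arange(16).reshape(4, 4)`, max pooling returns `[[5, 7], [13, 15]]`, average pooling `[[2.5, 4.5], [10.5, 12.5]]`, and min pooling `[[0, 2], [8, 10]]`, matching the three operations named in the figure caption.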

In the fully connected (FC) layer, every neuron is connected to all activations of the preceding layer, so its activations can be calculated by a matrix multiplication followed by a bias offset. Finally, the output of this layer is passed to the SoftMax classifier. Mathematically, it is defined as:

The SoftMax function accepts positive, zero, or negative real values as input. Each element of the input vector is exponentiated and then divided by the sum of all the exponentials, so the outputs are non-negative and sum to one, forming a probability distribution over the classes.
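A fully connected layer followed by SoftMax can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the framework's code; subtracting the maximum score before exponentiating is a standard numerical-stability choice that the text does not mention.

```python
import numpy as np


def fully_connected(x, weights, bias):
    """Every output neuron sees every input activation: W @ x + b."""
    w = np.asarray(weights, dtype=np.float64)
    return w @ np.asarray(x, dtype=np.float64) + bias


def softmax(z):
    """Exponentiate each score and normalize so the outputs sum to 1.

    Subtracting max(z) first avoids overflow and does not change the result,
    because softmax is invariant to adding a constant to every score."""
    z = np.asarray(z, dtype=np.float64)
    e = np.exp(z - z.max())
    return e / e.sum()


if __name__ == "__main__":
    scores = fully_connected([1.0, -2.0], [[0.5, 0.0], [0.0, 1.0], [1.0, 1.0]], 0.0)
    probs = softmax(scores)
    print(probs, probs.sum())
```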

4.3. Deep Learning Features Extraction

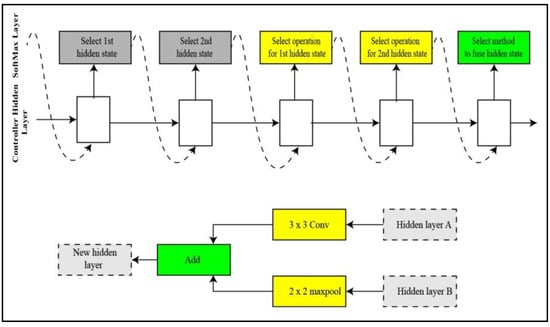

NASNet Large: NASNet Large is a deep network designed through neural architecture search (NAS) [36] and trained on more than a million images from the ImageNet database [37]. Its input image size is 331-by-331. In NAS, a recurrent neural network (RNN) controller creates child networks with different structures. Each child network is trained and its accuracy is measured on held-out data, and the controller is updated using these accuracies so that it generates better architectures. The controller structure is shown in Figure 6. The controller predictions are grouped into blocks: within each block, five distinct SoftMax classifiers make five predictions, one at each of five steps, corresponding to the discrete choices of the block.

Figure 6.

Illustration of controller process for NasNet large.

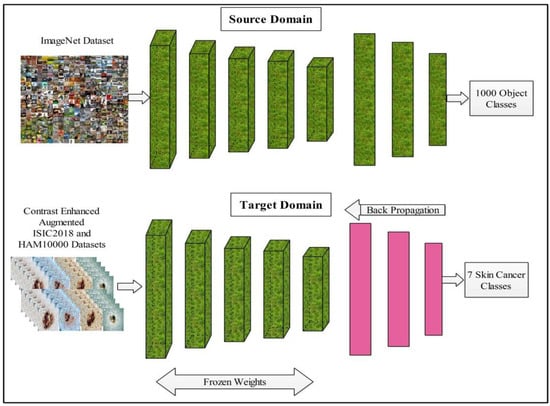

For fine-tuning, we first remove the last three layers of the model and add a new layer according to the number of dataset classes. The fine-tuned model is then trained through transfer learning with the following hyperparameters: a learning rate of 0.001, 100 epochs, a mini-batch size of 64, and stochastic gradient descent (SGD) as the optimizer. The transfer learning process is illustrated in Figure 7. After the fine-tuned model is trained on the skin datasets, features are extracted from its average pooling layer and used for further processing, such as feature selection.

Figure 7.

Transfer learning based model learning and features extraction.
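Features taken from the average pooling layer form one vector per image: global average pooling collapses each channel of the last feature map into its spatial mean, so the vector length equals the channel count. The sketch below assumes a channels-last (batch, height, width, channels) array obtained from the trained network by some means not shown; it does not load NASNet Large or reproduce the MATLAB training setup, and the function name is hypothetical.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Average each channel over its spatial positions.

    Input shape: (batch, height, width, channels); output: (batch, channels).
    Each row is the deep feature vector of one image."""
    fm = np.asarray(feature_maps, dtype=np.float64)
    if fm.ndim != 4:
        raise ValueError("expected a (batch, height, width, channels) array")
    return fm.mean(axis=(1, 2))
```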

4.4. Optimal Feature Selection

The selection of the best features is an important step that improves classification accuracy and reduces computational time [38]. In this work, two methods are applied to select the best features; the first is a proposed hybrid whale optimization (HWO) algorithm. HWO Algorithm: The WOA was originally introduced by Mirjalili [39]. It is executed in two phases: (i) encircling prey and (ii) searching for prey, which correspond to an exploitation phase and an exploration phase, respectively. Mathematically, these phases are formulated as:

where the terms denote the position vector, the best solution obtained so far, two coefficient vectors, a random vector with values in [0, 1], a parameter that decreases linearly from 2 to 0 over the iterations, and the absolute value, respectively. The search agents update their positions according to the best known solution, and the coefficient vectors determine where each whale is placed relative to that solution. The shrinking behavior is updated through the following equation:

Next, the distance between each whale and the best solution is computed to determine the position of a neighboring search agent.

where one term is a constant with a value of 1 and the other is a random number between −1 and 1. The final shrinking process is formulated through the following equation:

where the random term takes a value between 0 and 1. In the exploration phase, a randomly selected search agent guides the search.

where the first term is a randomly selected search agent and the second denotes the selected agents (features). The extreme learning machine (ELM) classifier [40] is used to compute the classification error, and the following fitness function is employed to balance performance across the classes:

where the error term is the classification error computed by the ELM. The best solutions are selected and stored, and then further refined using sorting and an AMD-based function. AMD is computed through Equation (1), and the final selection function is defined as follows:
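The encircling, spiral, and exploration updates described in this subsection follow the original WOA of Mirjalili [39]. The sketch below implements that original algorithm for continuous minimization; it is not the paper's hybrid variant (HWO), whose hybridization, fitness function, and AMD-based refinement are not reproduced. The search bounds, population size, iteration count, and the sphere test function are illustrative assumptions. For feature selection, each position would additionally have to be mapped to a binary feature mask (for example, by thresholding) and scored by the ELM classification error; that mapping is also an assumption and is omitted.

```python
import numpy as np


def woa_minimize(fitness, dim, n_agents=30, max_iter=200, lb=-10.0, ub=10.0,
                 b=1.0, seed=0):
    """Original whale optimization algorithm (not the hybrid HWO variant)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, size=(n_agents, dim))
    scores = np.array([fitness(x) for x in X])
    best, best_score = X[scores.argmin()].copy(), float(scores.min())

    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter          # decreases linearly from 2 to 0
        for i in range(n_agents):
            A = 2.0 * a * rng.random() - a    # coefficient A in [-a, a]
            C = 2.0 * rng.random()            # coefficient C in [0, 2]
            if rng.random() < 0.5:
                if abs(A) < 1.0:
                    # Exploitation: shrinking encirclement of the best solution.
                    D = np.abs(C * best - X[i])
                    X[i] = best - A * D
                else:
                    # Exploration: move relative to a randomly chosen agent.
                    x_rand = X[rng.integers(n_agents)].copy()
                    D = np.abs(C * x_rand - X[i])
                    X[i] = x_rand - A * D
            else:
                # Spiral update around the best solution; b = 1, ell in [-1, 1].
                ell = rng.uniform(-1.0, 1.0)
                D_best = np.abs(best - X[i])
                X[i] = D_best * np.exp(b * ell) * np.cos(2.0 * np.pi * ell) + best
            X[i] = np.clip(X[i], lb, ub)
            score = fitness(X[i])
            if score < best_score:
                best, best_score = X[i].copy(), float(score)
    return best, best_score


if __name__ == "__main__":
    best, score = woa_minimize(lambda x: float(np.sum(x ** 2)), dim=5)
    print(score)
```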

EMI Selection Technique: Another technique, named Fuzzy Entropy Mutual Information (EMI), is proposed to handle feature uncertainty. The fuzzy entropy is first computed from the original feature vector and then embedded into the mutual information equation for the final selection. Fuzzy entropy is defined as follows:

Using this equation, the joint entropy of two variables is computed as follows:

Next, the information shared between the two variables, known as mutual information, is computed.

The resultant vector is finally fused with the HWOA-based selected feature vector using modified canonical correlation analysis (MdCAA).
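The entropy, joint-entropy, and mutual-information chain of the EMI technique can be illustrated with a simplified sketch. It substitutes Shannon entropy on equal-width histogram bins for the paper's fuzzy entropy, whose formula is not reproduced here, and ranks each deep feature by its mutual information with the class labels; the bin count and all function names are assumptions.

```python
import numpy as np


def entropy(values):
    """Shannon entropy (bits) of a discrete variable."""
    _, counts = np.unique(values, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def joint_entropy(a, b):
    """Shannon entropy (bits) of the pair (a, b)."""
    pairs = np.stack([np.asarray(a), np.asarray(b)], axis=1)
    _, counts = np.unique(pairs, axis=0, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def mutual_information(a, b):
    """I(a; b) = H(a) + H(b) - H(a, b)."""
    return entropy(a) + entropy(b) - joint_entropy(a, b)


def discretize(feature, n_bins=10):
    """Equal-width binning so continuous deep features become discrete."""
    edges = np.histogram_bin_edges(feature, bins=n_bins)
    return np.digitize(feature, edges[1:-1])


def rank_features_by_mi(F, y, n_bins=10):
    """Return feature indices sorted by mutual information with the labels,
    together with the per-feature scores."""
    scores = np.array([mutual_information(discretize(F[:, j], n_bins), y)
                       for j in range(F.shape[1])])
    return np.argsort(scores)[::-1], scores
```

Keeping the top-ranked columns of `F` would then give a reduced feature set; how many to keep is not specified in the text.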

4.5. Optimal Feature Fusion

The best selected features of HWO and EMI are finally fused in one matrix using modified canonical correlation analysis. Mathematically, the original CCA is formulated as follows:

where j, k, and l represent the dimensions of the sample spaces and n denotes the number of observations. CCA aims to find projection directions that maximize the correlation among the projections of the three feature sets, where X, Y, and Z represent the sample matrices, with j, k, and l rows (feature dimensions), respectively, and n columns (observations). Formally, in CCA we solve:

where the between-set covariance matrices relate each pair of feature sets, and the within-set covariance matrices describe each of the three feature sets. When the within-set covariance matrices are non-singular, CCA can be obtained by solving a generalized eigenvalue problem.

Let the three projection direction matrices be formed from the vectors corresponding to the d largest generalized eigenvalues. From the three modalities, the fused feature is obtained as follows:

where the result is the fused vector, which is then sorted in descending order, and redundant features are removed using a comparison-based approach. The resulting features are finally classified using the ELM classifier.
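As a rough illustration of CCA-based fusion, the sketch below implements standard two-set CCA, not the modified three-set variant described above: it centres both feature matrices, solves the CCA problem through whitening and a singular value decomposition (equivalent to the generalized eigenvalue formulation), and concatenates the projected features. The small ridge term `reg`, the concatenation-based fusion, and all names are assumptions for illustration.

```python
import numpy as np


def _inv_sqrt(S):
    """Inverse square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T


def cca_fuse(X, Y, d, reg=1e-6):
    """Standard two-set CCA followed by concatenation of the projections.

    X: (n, p) and Y: (n, q), rows are observations. Returns the fused
    (n, 2d) matrix and the top-d canonical correlations (descending)."""
    X = np.asarray(X, dtype=np.float64)
    Y = np.asarray(Y, dtype=np.float64)
    n = X.shape[0]
    Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
    # Within-set covariances (ridge keeps them non-singular) and between-set covariance.
    Sxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])
    Syy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    Sxy = Xc.T @ Yc / (n - 1)
    # Whitening turns the generalized eigenproblem into an ordinary SVD.
    Kx, Ky = _inv_sqrt(Sxx), _inv_sqrt(Syy)
    U, s, Vt = np.linalg.svd(Kx @ Sxy @ Ky)
    Wx = Kx @ U[:, :d]        # projection directions for X
    Wy = Ky @ Vt.T[:, :d]     # projection directions for Y
    fused = np.hstack([Xc @ Wx, Yc @ Wy])
    return fused, s[:d]
```

Concatenation is one common way to combine the projections; summing them is another, and the text does not say which the modified approach uses.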

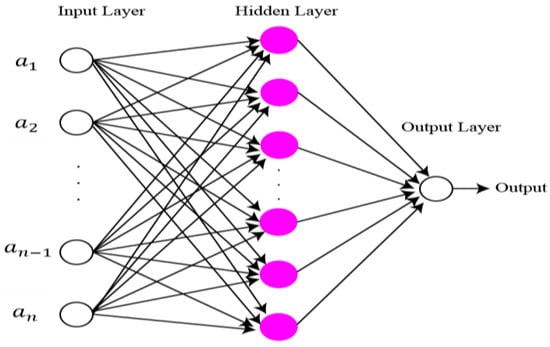

4.6. Extreme Learning Machine

The final features are classified using the extreme learning machine (ELM) [41], whose structure is illustrated in Figure 8. Compared with classical gradient-based neural networks (GBNN), ELM learns faster and generalizes better. In ELM, the input weights and hidden biases are assigned randomly, whereas the output weights are calculated using a least-squares technique. ELM is represented mathematically as follows:

Figure 8.

Structure of ELM classifier.

Suppose the ELM is given S distinct samples from the fused feature set. In the ideal case, the ELM approximates the desired outputs of these samples with zero error, which can be denoted as

where the terms denote the input samples and the output samples, L is the number of hidden-layer nodes, and the remaining terms are the output weights, the activation function, and the input weights and input biases, respectively. The radial basis function is used as the ELM activation function. The system in Equation (35) can be written in matrix form as:

where the matrices represent the output weights, the target output, and the hidden-layer output, respectively.

In practice, the number of hidden-layer nodes is smaller than the number of training samples (S), so the hidden-layer output matrix is not square and cannot be inverted directly, and the ELM cannot approximate the target output with zero error. Therefore, the Moore-Penrose (MP) generalized inverse is used to obtain a least-squares approximation and to express the output weights.

where the term denotes the Moore-Penrose generalized inverse of the hidden-layer output matrix.
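The training procedure above amounts to a random hidden layer plus one pseudo-inverse. The sketch below is a minimal RBF-based ELM in NumPy under stated assumptions: Gaussian hidden nodes whose centres are drawn from random training samples, widths drawn uniformly from [0.1, 1], and one-hot targets. The class name and these choices are hypothetical and are not the configuration used in the paper.

```python
import numpy as np


class RBFExtremeLearningMachine:
    """Single-hidden-layer ELM with Gaussian RBF hidden nodes.

    Hidden-node centres and widths are random and never trained; only the
    output weights are solved, via the Moore-Penrose pseudo-inverse."""

    def __init__(self, n_hidden=50, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        # Squared distance of every sample to every centre: shape (S, L).
        d2 = ((X[:, None, :] - self.centers[None, :, :]) ** 2).sum(axis=2)
        return np.exp(-self.widths * d2)

    def fit(self, X, y):
        X = np.asarray(X, dtype=np.float64)
        self.classes_, y_idx = np.unique(y, return_inverse=True)
        T = np.eye(len(self.classes_))[y_idx]                  # one-hot targets
        idx = self.rng.choice(len(X), size=self.n_hidden,
                              replace=len(X) < self.n_hidden)
        self.centers = X[idx]                                   # random centres
        self.widths = self.rng.uniform(0.1, 1.0, self.n_hidden)  # random widths
        H = self._hidden(X)                                     # hidden-layer output matrix
        self.beta = np.linalg.pinv(H) @ T                       # least-squares output weights
        return self

    def predict(self, X):
        H = self._hidden(np.asarray(X, dtype=np.float64))
        return self.classes_[np.argmax(H @ self.beta, axis=1)]
```

Because only `beta` is solved, training costs a single pseudo-inverse of the S × L hidden-layer matrix, which is the source of ELM's speed advantage over gradient-based training.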

5. Results and Analysis

This section presents the results of the proposed multiclass skin lesion classification framework. Two publicly available datasets, HAM10000 [32] and ISIC2018 [42], are used for the experiments; both consist of seven skin lesion classes. A data augmentation process is employed in this work to handle class imbalance, so balanced datasets are used in the experiments. The training parameters include a learning rate of 0.001, a mini-batch size of 64, and a maximum of 500 epochs. Both datasets are split 50:50 into training and testing sets, and 10-fold cross-validation is used. Several classifiers are evaluated: multiclass SVM (MC-SVM), fine KNN (F-KNN), decision trees (DT), Naïve Bayes, ensemble tree (EBT), and a single-hidden-layer extreme learning machine (ELM). The performance of each classifier is measured using accuracy, precision, false discovery rate (FDR), and computational time. The simulations are performed in MATLAB R2020b, and an 8 GB graphics processing unit (GPU) is used for processing.
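The precision and FDR values reported in the result tables sum to 100% (for example, 84.10% and 15.90%), which is consistent with FDR = 1 − precision. A sketch of how these metrics could be computed from a confusion matrix follows; macro-averaging precision over classes is an assumption, since the averaging scheme is not stated. Averaging per-class FDR gives the same value, because the mean of (1 − precision) equals 1 − mean precision.

```python
import numpy as np


def classification_metrics(confusion):
    """Accuracy, macro-averaged precision, and FDR from a confusion matrix.

    Rows are true classes and columns are predicted classes."""
    cm = np.asarray(confusion, dtype=np.float64)
    accuracy = np.trace(cm) / cm.sum()
    predicted = cm.sum(axis=0)                    # samples predicted per class
    with np.errstate(divide="ignore", invalid="ignore"):
        per_class = np.where(predicted > 0, np.diag(cm) / predicted, 0.0)
    precision = per_class.mean()                  # macro average over classes
    fdr = 1.0 - precision                         # false discovery rate
    return accuracy, precision, fdr
```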

Experiments: The following experiments are performed to validate the proposed framework:

- Classification using the original deep features;
- Classification using HWOA-based best feature selection;
- Classification using EMI-based best feature selection;
- Classification using the fusion of the best selected features.

5.1. Results on HAM10000 Dataset

Table 1 presents the classification results on the HAM10000 dataset using the original deep features extracted from the fine-tuned NasNet Large network. The features are extracted from the global average pooling layer and then classified. On this layer, the length of extracted deep features is . The highest accuracy in this table, 84.90%, is achieved by the ELM classifier, with precision and FDR values of 84.10% and 15.90%, respectively, and a computational time of 214.5536 (s). The other classifiers, MC-SVM, F-KNN, DT, Naïve Bayes, and EBT, achieve accuracies of 84.72%, 80.90%, 79.63%, 81.50%, and 82.96%, respectively. ELM outperforms these classifiers in accuracy and precision and is also computationally more efficient.

Table 1.

Classification accuracy using originally extracted deep features for HAM10000.

After the experiments with the original deep features, two feature selection techniques, HWOA and EMI, are applied. Table 2 presents the classification results of the HWOA-based best feature selection algorithm. The highest accuracy in this table is 85.10%, with precision and FDR values of 84.86% and 15.14%, respectively. The computational time of ELM is 147.6329 (s), which is lower than that of the other classifiers, such as MC-SVM and F-KNN. Compared with the original deep features (Table 1), HWOA-based feature selection yields a minor improvement in accuracy but a significant reduction in computational time.

Table 2.

Classification accuracy using HWOA-based feature selection for the HAM10000 dataset.

Table 3 presents the classification accuracy using the EMI-based best feature selection approach. The best accuracy in this table is 84.90%, with a computational time of 90.3560 (s). Compared with Table 1 and Table 2, this selection method keeps the accuracy at a similar level but substantially reduces the computational time. These three experiments show that selecting the best features yields at most a slight improvement in accuracy but a large reduction in computational time.

Table 3.

Classification accuracy using EMI-based feature selection for HAM10000 dataset.

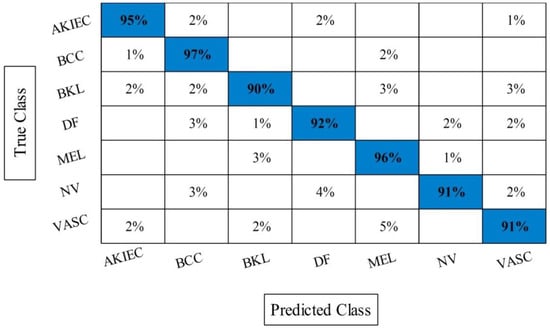

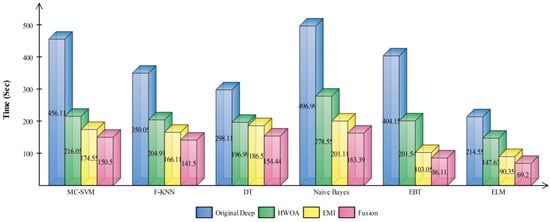

The best features selected through HWOA and EMI are finally fused using the proposed fusion approach. Table 4 presents the results of feature fusion. The best accuracy in this table is 93.40%, which is higher than the accuracies given in Table 1, Table 2 and Table 3; the fusion process improves the accuracy by approximately 8 percentage points. The precision and FDR values are 93.10% and 6.90%, respectively. The computational time is also reduced to 69.2036 (s), compared with the previous best time of 90.3560 (s) for the EMI-based feature selection approach. The remaining classifiers (MC-SVM, F-KNN, DT, Naïve Bayes, and ensemble trees) also show improved performance after the fusion process. The accuracy of ELM is further verified by the confusion matrix in Figure 9, in which the diagonal values represent the correct prediction rates. Figure 10 shows the computational time of all four experiments on the HAM10000 dataset; it is clear that the fusion process is computationally less expensive than the original deep features, HWOA, and EMI. The fusion process removes features that do not meet the criterion given in Equation (33). Moreover, ELM performs better overall than the other classifiers.

Table 4.

Classification accuracy using selected features fusion approach for HAM10000 dataset.

Figure 9.

Confusion matrix of ELM after fusion of the best selected features for HAM10000.

Figure 10.

Computational time comparison among intermediate steps for HAM10000.

5.2. Results on ISIC2018 Dataset

This section presents the classification results on the ISIC2018 dataset, where several experiments are performed to validate the proposed framework. Table 5 presents the classification results using the original deep features. The ELM classifier achieved the highest accuracy of 85.96%, with a precision of 85.52%. The remaining classifiers achieved accuracies of 83.90%, 81.40%, 82.56%, 83.50%, and 82.16%, respectively. The computational time of each classifier is also recorded, and the minimum time is 286.1009 (s). To reduce the computational time and improve accuracy, the hybrid WOA is applied; its results are given in Table 6. The best accuracy in this table is 87.86%, with a computational time of 184.5294 (s), and the other classifiers achieve accuracies of 85.14%, 82.96%, 84.60%, 85.36%, and 83.90%, respectively. These results show that HWOA improves accuracy and reduces computational time. To reduce the computational time further, the EMI feature selection technique is applied; its results are given in Table 7, which shows that the accuracy remains nearly unchanged while the computational time is further reduced.

Table 5.

Classification accuracy using originally extracted deep features for ISIC2018 dataset.

Table 6.

Classification accuracy using HWOA-based feature selection for ISIC2018 dataset.

Table 7.

Classification accuracy using EMI-based feature selection for ISIC2018 dataset.

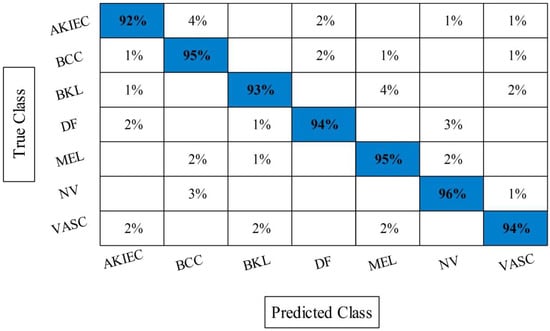

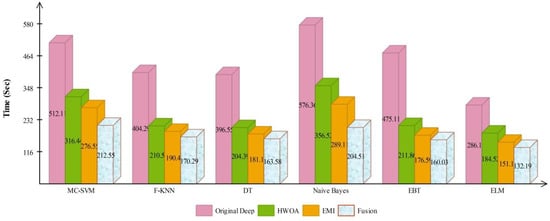

Because HWOA- and EMI-based feature selection alone do not substantially improve accuracy over existing techniques, a fusion technique is proposed. Table 8 presents the results of feature fusion. The best accuracy in this table is 94.36%, which is higher than the accuracies given in Table 5, Table 6 and Table 7; the fusion process improves the accuracy by approximately 7 percentage points. The computational time is also reduced after fusion, reaching 132.1990 (s), compared with 184.5294 (s) reported for the HWOA-based feature selection in Table 6. The accuracy of the other classifiers also improves (91.84%, 90.60%, 92.56%, 92.80%, and 90.46%, respectively). Figure 11 shows the confusion matrix of the ELM classifier for the ISIC2018 dataset, which verifies the ELM accuracy. Figure 12 shows the computational time of all four experiments on the ISIC2018 dataset; it is clear that the fusion process is computationally less expensive. Moreover, the ELM classifier is computationally less expensive than the other classifiers listed in Table 8.

Table 8.

Classification accuracy using selected features fusion approach for ISIC2018 dataset.

Figure 11.

Confusion matrix of the ELM classifier after fusion of the best selected features for the ISIC2018 dataset.

Figure 12.

Computational time comparison across the intermediate steps for the ISIC2018 dataset.

5.3. Discussion

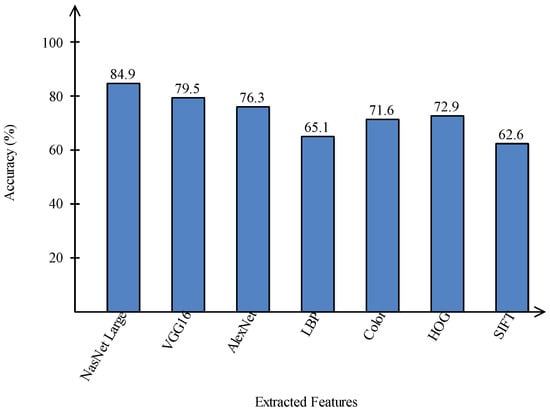

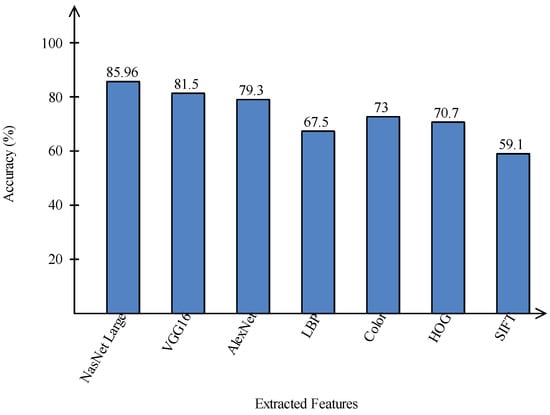

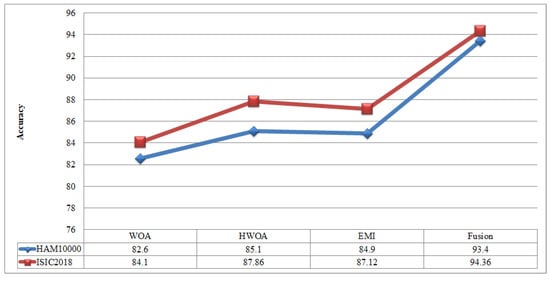

Deep features are compared with traditional handcrafted features in Figure 13 and Figure 14. These figures show that the deep features (NASNet-Large, VGG16, and AlexNet) give better results than the traditional features (HOG, LBP, SIFT, and color) on the selected datasets. Among the traditional features, HOG and color outperform SIFT and LBP. In addition, Figure 15 compares the original WOA with the HWOA-based, EMI-based, and fusion results.
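As a reference point for the handcrafted descriptors in this comparison, the following sketch computes the basic 8-neighbour, radius-1 LBP histogram. The LBP variant, radius, and histogram settings used in the experiments are not given here; the `lbp_histogram` function and its choices are illustrative assumptions.

```python
import numpy as np


def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour LBP (radius 1) histogram of a 2-D grayscale image.

    Each interior pixel is compared with its 8 neighbours; every neighbour
    that is >= the centre sets one bit of an 8-bit code. The normalized
    256-bin histogram of these codes is returned as the feature vector.
    """
    g = gray.astype(np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()
```

A perfectly flat image maps every pixel to code 255, which illustrates why such texture codes carry little information in low-contrast lesion regions.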

Figure 13.

Comparison of deep learning and traditional features in terms of accuracy on HAM10000 dataset.

Figure 14.

Comparison of deep learning and traditional features in terms of accuracy on ISIC2018 dataset.

Figure 15.

Comparison of the original WOA with HWOA, EMI, and fusion techniques.

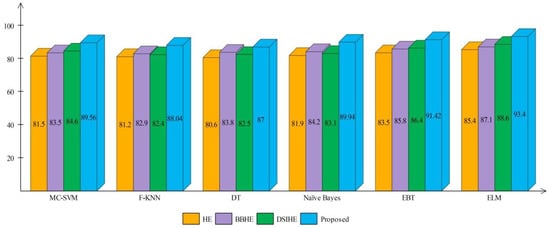

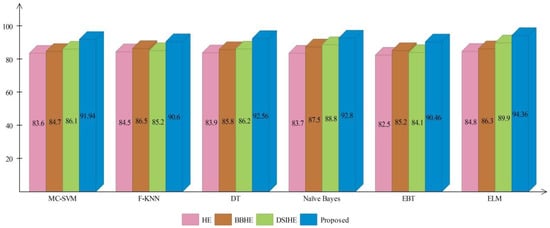

A comparative analysis of contrast enhancement techniques was also performed. In place of the proposed enhancement technique, three well-known techniques, HE [43], BBHE [44], and DSIHE [45], were each inserted into the proposed framework. Figure 16 and Figure 17 plot the resulting performance. These figures show that the proposed contrast enhancement technique produces the best results, while DSIHE gives the second-best accuracy.
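For readers unfamiliar with the baselines, the following sketch implements global HE and a bi-histogram variant for 8-bit grayscale images. Splitting at the mean gives BBHE as described by Kim [44]; splitting at the median approximates the equal-area split of DSIHE [45]. The function names and the uint8 grayscale input are assumptions, and the proposed enhancement function is not reproduced here.

```python
import numpy as np


def _equalize_range(values: np.ndarray, lo: int, hi: int) -> np.ndarray:
    """Map uint8 gray levels onto [lo, hi] using their own CDF."""
    hist = np.bincount(values, minlength=256).astype(np.float64)
    cdf = np.cumsum(hist) / hist.sum()
    return np.round(lo + (hi - lo) * cdf[values]).astype(np.uint8)


def histogram_equalization(img: np.ndarray) -> np.ndarray:
    """Global HE of a uint8 grayscale image."""
    return _equalize_range(img.ravel(), 0, 255).reshape(img.shape)


def bi_histogram_equalization(img: np.ndarray, split: str = "mean") -> np.ndarray:
    """BBHE (split at the mean) or DSIHE-style (split at the median) HE.

    Pixels <= the split level are equalized onto [0, level] and the rest onto
    [level + 1, 255], so the two sub-histograms never cross and the overall
    brightness stays close to that of the input.
    """
    flat = img.ravel()
    level = int(flat.mean() if split == "mean" else np.median(flat))
    out = np.empty_like(flat)
    low = flat <= level
    if low.any():
        out[low] = _equalize_range(flat[low], 0, level)
    if (~low).any():
        out[~low] = _equalize_range(flat[~low], level + 1, 255)
    return out.reshape(img.shape)
```

Global HE stretches the whole histogram and can shift mean brightness, whereas the split variants equalize each half separately; this is the trade-off the comparison in Figures 16 and 17 probes.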

Figure 16.

Comparison of different contrast enhancement techniques for HAM10000 dataset.

Figure 17.

Comparison of different contrast enhancement techniques for ISIC2018 dataset.

Finally, the proposed method is compared with state-of-the-art (SOTA) techniques in Table 9. Huang et al. [46] used the HAM10000 dataset and achieved an accuracy of 85.80%. Thurnhofer-Hemsi et al. [47] achieved an accuracy of 87.70% on HAM10000. Chaturvedi et al. [48] improved the accuracy on HAM10000 to 92.83%. More recently, Khan et al. [26] introduced a deep learning framework that obtained an accuracy of 86.50% on the imbalanced HAM10000 dataset. The authors of [49,50] introduced deep learning-based frameworks that achieved accuracies of 92.40% and 93.4%, respectively. The proposed method obtains 93.40% on the HAM10000 dataset and 94.36% on the ISIC2018 dataset, matching or exceeding the compared SOTA techniques.

Table 9.

Comparison of the proposed method accuracy with SOTA techniques.

The key strength of this work is the selection of optimal features, and fusing these optimal features further improves the results. The key limitation is the relatively high computational time caused by the number of steps in the pipeline. In future work, a lesion segmentation step will be added and used directly in the deep feature extraction process. Moreover, the ISIC2019 and ISIC2020 datasets will be used in the experiments.
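Because optimal feature selection is identified as the key strength, a minimal sketch of a binary whale optimization wrapper may clarify the search it performs. It follows the position updates of the original WOA [39], used in wrapper form as in [19]; the hybrid modifications of HWOA are not reproduced. The nearest-centroid fitness, the fixed half/half split, the sigmoid transfer function, and the weight `alpha` are assumptions chosen for brevity.

```python
import numpy as np


def nearest_centroid_error(xtr, ytr, xte, yte):
    """Hold-out error rate of a nearest-centroid classifier (fitness helper)."""
    classes = np.unique(ytr)
    centroids = np.stack([xtr[ytr == c].mean(axis=0) for c in classes])
    dists = ((xte[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    pred = classes[dists.argmin(axis=1)]
    return float((pred != yte).mean())


def binary_woa_select(x, y, n_whales=10, n_iter=30, alpha=0.99, seed=0):
    """Binary whale optimization wrapper for feature selection.

    Fitness = alpha * error + (1 - alpha) * (selected / total features),
    evaluated on a fixed half/half split. Returns (best_mask, best_fitness).
    """
    rng = np.random.default_rng(seed)
    n, d = x.shape
    idx = rng.permutation(n)
    tr, te = idx[: n // 2], idx[n // 2:]

    def fitness(mask):
        if not mask.any():
            return np.inf  # an empty feature subset is invalid
        err = nearest_centroid_error(x[tr][:, mask], y[tr], x[te][:, mask], y[te])
        return alpha * err + (1 - alpha) * mask.sum() / d

    def binarize(p):
        # Sigmoid transfer: each feature is selected with probability sigmoid(p).
        return rng.random(p.shape) < 1.0 / (1.0 + np.exp(-p))

    pos = rng.uniform(-1, 1, size=(n_whales, d))  # continuous whale positions
    masks = binarize(pos)
    scores = np.array([fitness(m) for m in masks])
    k = int(scores.argmin())
    best_pos, best_mask, best_score = pos[k].copy(), masks[k].copy(), scores[k]

    b = 1.0  # spiral shape constant
    for t in range(n_iter):
        a = 2.0 - 2.0 * t / n_iter  # decreases linearly from 2 towards 0
        for i in range(n_whales):
            r1, r2, p = rng.random(3)
            big_a, big_c = 2 * a * r1 - a, 2 * r2
            if p < 0.5:
                # |A| < 1: encircle the leader; otherwise explore around a random whale.
                ref = best_pos if abs(big_a) < 1 else pos[rng.integers(n_whales)].copy()
                pos[i] = ref - big_a * np.abs(big_c * ref - pos[i])
            else:
                # Bubble-net spiral update around the leader.
                spiral = rng.uniform(-1, 1)
                dist = np.abs(best_pos - pos[i])
                pos[i] = dist * np.exp(b * spiral) * np.cos(2 * np.pi * spiral) + best_pos
            mask = binarize(pos[i])
            score = fitness(mask)
            if score < best_score:
                best_pos, best_mask, best_score = pos[i].copy(), mask, score
    return best_mask, float(best_score)
```

Each fitness call retrains the wrapped classifier, which is one reason wrapper-based selection adds to the computational time noted above as a limitation.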

6. Conclusions

This paper proposes an automated deep learning-based framework for multiclass skin lesion classification. The proposed method comprises several important steps: contrast enhancement of skin lesions using a new function; training of deep models on the enhanced images via transfer learning (TL); best feature selection via two techniques, HWOA and EMI; fusion of the selected features via a modified CCA approach; and, finally, ELM-based classification. The experiments demonstrate that the proposed method outperforms the compared methods on the chosen datasets. Overall, it is concluded that:

- The contrast enhancement step improves the quality of the lesion region, which allows more relevant features to be extracted later;

- The selection of optimal features improves the classification accuracy and reduces the computational time;

- The fusion process further improves classification accuracy and removes some redundant features through its correlation-based comparison of the feature sets.
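The final ELM classification stage can be summarized by a minimal sketch of a single-hidden-layer ELM [40]: hidden weights are random and never trained, and only the output weights are solved in closed form, here with the ridge term of the regularized formulation [41]. The hidden-layer size, sigmoid activation, and `reg` value are illustrative assumptions, not the configuration used in the experiments.

```python
import numpy as np


class ExtremeLearningMachine:
    """Single-hidden-layer ELM classifier.

    Output weights solve beta = (H^T H + reg * I)^(-1) H^T T, where H is the
    hidden-layer output matrix and T holds one-hot class targets.
    """

    def __init__(self, n_hidden=200, reg=1e-3, seed=0):
        self.n_hidden, self.reg = n_hidden, reg
        self.rng = np.random.default_rng(seed)

    def _hidden(self, x):
        # Sigmoid activation of the fixed random projection.
        return 1.0 / (1.0 + np.exp(-(x @ self.w + self.b)))

    def fit(self, x, y):
        self.classes = np.unique(y)
        targets = (y[:, None] == self.classes[None, :]).astype(np.float64)
        self.w = self.rng.normal(size=(x.shape[1], self.n_hidden))
        self.b = self.rng.normal(size=self.n_hidden)
        h = self._hidden(x)
        # Closed-form ridge least squares: no iterative training is needed.
        gram = h.T @ h + self.reg * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(gram, h.T @ targets)
        return self

    def predict(self, x):
        scores = self._hidden(x) @ self.beta
        return self.classes[scores.argmax(axis=1)]
```

Because training reduces to one linear solve, an ELM is typically much faster to fit than iteratively trained classifiers, which is consistent with the timing comparison reported for the ELM above.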

In future work, recent deep learning models will be considered and their middle layers refined [51,52,53]. Data augmentation is an important step that can be improved with more up-to-date methods [54,55]. Moreover, recent optimization techniques will be explored [56,57].

Author Contributions

Conceptualization, F.A. and M.S.; methodology, F.A., J.C. and M.A.K.; software, F.A., J.C. and M.A.K.; validation, F.A., M.S. and M.A.K.; formal analysis, M.S. and U.T.; investigation, M.S. and U.T.; resources, M.S. and U.T.; data curation, M.A.K. and H.-S.Y.; writing—original draft preparation, F.A. and M.A.K.; writing—review and editing, U.T., J.C. and H.-S.Y.; visualization, M.A.K. and H.-S.Y.; supervision, M.S., J.C. and M.A.K.; project administration, H.-S.Y., J.C. and U.T.; funding acquisition, H.-S.Y. and J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (Ministry of Science and ICT; MSIT) under Grant RF-2018R1A5A7059549.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Strzelecki, M.H.; Strąkowska, M.; Kozłowski, M.; Urbańczyk, T.; Wielowieyska-Szybińska, D.; Kociołek, M. Skin Lesion Detection Algorithms in Whole Body Images. Sensors 2021, 21, 6639.

2. Dong, Y.; Wang, L.; Cheng, S.; Li, Y. FAC-Net: Feedback Attention Network Based on Context Encoder Network for Skin Lesion Segmentation. Sensors 2021, 21, 5172.

3. Kumar, M.; Alshehri, M.; AlGhamdi, R.; Sharma, P.; Deep, V. A DE-ANN Inspired Skin Cancer Detection Approach Using Fuzzy C-Means Clustering. Mob. Netw. Appl. 2020, 25, 1319–1329.

4. Akram, T.; Sharif, M.; Saba, T.; Javed, K.; Lali, I.U.; Tanik, U.J.; Rehman, A. Construction of saliency map and hybrid set of features for efficient segmentation and classification of skin lesion. Microsc. Res. Tech. 2019, 82, 741–763.

5. Saba, T.; Rehman, A.; Marie-Sainte, S.L. Region Extraction and Classification of Skin Cancer: A Heterogeneous framework of Deep CNN Features Fusion and Reduction. J. Med. Syst. 2019, 43, 289.

6. Khan, M.A.; Khan, M.A.; Ahmed, F.; Mittal, M.; Goyal, L.M.; Hemanth, D.J.; Satapathy, S.C. Gastrointestinal diseases segmentation and classification based on duo-deep architectures. Pattern Recognit. Lett. 2019, 131, 193–204.

7. Nehal, K.S.; Bichakjian, C.K. Update on keratinocyte carcinomas. N. Engl. J. Med. 2018, 379, 363–374.

8. Gouabou, A.F.; Damoiseaux, J.-L.; Monnier, J.; Iguernaissi, R.; Moudafi, A.; Merad, D. Ensemble Method of Convolutional Neural Networks with Directed Acyclic Graph Using Dermoscopic Images: Melanoma Detection Application. Sensors 2021, 21, 3999.

9. Bafounta, M.-L.; Beauchet, A.; Aegerter, P.; Saiag, P. Is dermoscopy (epiluminescence microscopy) useful for the diagnosis of melanoma: Results of a meta-analysis using techniques adapted to the evaluation of diagnostic tests. Arch. Dermatol. 2001, 137, 1343–1350.

10. Argenziano, G.; Soyer, H.P.; Chimenti, S.; Talamini, R.; Corona, R.; Sera, F.; Binder, M.; Cerroni, L.; De Rosa, G.; Ferrara, G.; et al. Dermoscopy of pigmented skin lesions: Results of a consensus meeting via the Internet. J. Am. Acad. Dermatol. 2003, 48, 679–693.

11. Sharif, M.; Akram, T.; Kadry, S.; Hsu, C.-H. A two-stream deep neural network-based intelligent system for complex skin cancer types classification. Int. J. Intell. Syst. 2021.

12. Alhaisoni, M.; Tariq, U.; Hussain, N.; Majid, A.; Damaševičius, R.; Maskeliūnas, R. COVID-19 Case Recognition from Chest CT Images by Deep Learning, Entropy-Controlled Firefly Optimization, and Parallel Feature Fusion. Sensors 2021, 21, 7286.

13. Khan, M.A.; Muhammad, K.; Sharif, M.; Akram, T.; Kadry, S. Intelligent fusion-assisted skin lesion localization and classification for smart healthcare. Neural Comput. Appl. 2021, 1–16.

14. Adegun, A.; Viriri, S. Deep learning techniques for skin lesion analysis and melanoma cancer detection: A survey of state-of-the-art. Artif. Intell. Rev. 2020, 54, 811–841.

15. Dey, S.; Roychoudhury, R.; Malakar, S.; Sarkar, R. An optimized fuzzy ensemble of convolutional neural networks for detecting tuberculosis from Chest X-ray images. Appl. Soft Comput. 2021, 114, 108094.

16. Khamparia, A.; Singh, P.K.; Rani, P.; Samanta, D.; Khanna, A.; Bhushan, B. An internet of health things-driven deep learning framework for detection and classification of skin cancer using transfer learning. Trans. Emerg. Telecommun. Technol. 2020, 32, e3963.

17. Akram, T.; Sharif, M.; Shahzad, A.; Aurangzeb, K.; Alhussein, M.; Haider, S.I.; Altamrah, A. An implementation of normal distribution based segmentation and entropy controlled features selection for skin lesion detection and classification. BMC Cancer 2018, 18, 638.

18. Wang, Z. Gesture recognition by model matching of slope difference distribution features. Measurement 2021, 181, 109590.

19. Mafarja, M.; Mirjalili, S. Whale optimization approaches for wrapper feature selection. Appl. Soft Comput. 2018, 62, 441–453.

20. Zafar, K.; Gilani, S.O.; Waris, A.; Ahmed, A.; Jamil, M.; Khan, M.N.; Sohail Kashif, A. Skin Lesion Segmentation from Dermoscopic Images Using Convolutional Neural Network. Sensors 2020, 20, 1601.

21. Song, L.; Lin, J.P.; Wang, Z.J.; Wang, H. An End-to-End Multi-Task Deep Learning Framework for Skin Lesion Analysis. IEEE J. Biomed. Health Inform. 2020, 24, 2912–2921.

22. Aziz, S.; Bilal, M.; Khan, M.U.; Amjad, F. Deep Learning-based Automatic Morphological Classification of Leukocytes Using Blood Smears. In Proceedings of the 2020 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Istanbul, Turkey, 14–15 April 2020; pp. 1–5.

23. Ren, Y.; Yu, L.; Tian, S.; Cheng, J.; Guo, Z.; Zhang, Y. Serial attention network for skin lesion segmentation. J. Ambient. Intell. Humaniz. Comput. 2021, 1–12.

24. Xie, Y.; Zhang, J.; Xia, Y.; Shen, C. A Mutual Bootstrapping Model for Automated Skin Lesion Segmentation and Classification. IEEE Trans. Med. Imaging 2020, 39, 2482–2493.

25. Yu, Z.; Jiang, X.; Zhou, F.; Qin, J.; Ni, D.; Chen, S.; Lei, B.; Wang, T. Melanoma Recognition in Dermoscopy Images via Aggregated Deep Convolutional Features. IEEE Trans. Biomed. Eng. 2018, 66, 1006–1016.

26. Khan, M.A.; Zhang, Y.-D.; Sharif, M.; Akram, T. Pixels to Classes: Intelligent Learning Framework for Multiclass Skin Lesion Localization and Classification. Comput. Electr. Eng. 2021, 90, 106956.

27. Jin, Q.; Cui, H.; Sun, C.; Meng, Z.; Su, R. Cascade knowledge diffusion network for skin lesion diagnosis and segmentation. Appl. Soft Comput. 2020, 99, 106881.

28. Navarro, F.; Escudero-Vinolo, M.; Bescos, J. Accurate Segmentation and Registration of Skin Lesion Images to Evaluate Lesion Change. IEEE J. Biomed. Health Inform. 2018, 23, 501–508.

29. Al-Masni, M.; Kim, D.-H.; Kim, T.-S. Multiple skin lesions diagnostics via integrated deep convolutional networks for segmentation and classification. Comput. Methods Programs Biomed. 2020, 190, 105351.

30. Sikkandar, M.Y.; Alrasheadi, B.A.; Prakash, N.B.; Hemalakshmi, G.R.; Mohanarathinam, A.; Shankar, K. Deep learning based an automated skin lesion segmentation and intelligent classification model. J. Ambient Intell. Humaniz. Comput. 2020, 12, 3245–3255.

31. Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Skin Cancer Classification Using Deep Learning and Transfer Learning. In Proceedings of the 2018 9th Cairo International Biomedical Engineering Conference (CIBEC), Cairo, Egypt, 20–22 December 2018; pp. 90–93.

32. Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

33. Yao, P.; Shen, S.; Xu, M.; Liu, P.; Zhang, F.; Xing, J.; Shao, P.; Kaffenberger, B.; Xu, R.X. Single Model Deep Learning on Imbalanced Small Datasets for Skin Lesion Classification. arXiv 2021, arXiv:2102.01284.

34. Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Helba, B.; Kalloo, A.; Liopyris, K.; Marchetti, M. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (ISIC). arXiv 2019, arXiv:1902.03368.

35. Li, Y.; Shen, L. Skin Lesion Analysis towards Melanoma Detection Using Deep Learning Network. Sensors 2018, 18, 556.

36. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

37. Li, F.-F. ImageNet: Crowdsourcing, Benchmarking & Other Cool Things. CMU VASC Semin. 2010, 16, 18–25.

38. Arshad, M.; Khan, M.A.; Tariq, U.; Armghan, A.; Alenezi, F.; Javed, M.Y.; Aslam, S.M.; Kadry, S. A Computer-Aided Diagnosis System Using Deep Learning for Multiclass Skin Lesion Classification. Comput. Intell. Neurosci. 2021, 2021, 9619079.

39. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

40. Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

41. Huang, G.-B.; Zhou, H.; Ding, X.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 513–529.

42. Milton, M.A.A. Automated skin lesion classification using ensemble of deep neural networks in ISIC 2018: Skin lesion analysis towards melanoma detection challenge. arXiv 2019, arXiv:1901.10802.

43. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

44. Kim, Y.-T. Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans. Consum. Electron. 1997, 43, 1–8.

45. Wang, Y.; Chen, Q.; Zhang, B. Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans. Consum. Electron. 1999, 45, 68–75.

46. Huang, H.; Hsu, B.W.; Lee, C.; Tseng, V.S. Development of a light-weight deep learning model for cloud applications and remote diagnosis of skin cancers. J. Dermatol. 2020, 48, 310–316.

47. Thurnhofer-Hemsi, K.; Domínguez, E. A Convolutional Neural Network Framework for Accurate Skin Cancer Detection. Neural Process. Lett. 2020, 53, 3073–3093.

48. Chaturvedi, S.S.; Tembhurne, J.V.; Diwan, T. A multi-class skin Cancer classification using deep convolutional neural networks. Multimed. Tools Appl. 2020, 79, 28477–28498.

49. Almaraz-Damian, J.-A.; Ponomaryov, V.; Sadovnychiy, S.; Castillejos-Fernandez, H. Melanoma and Nevus Skin Lesion Classification Using Handcraft and Deep Learning Feature Fusion via Mutual Information Measures. Entropy 2020, 22, 484.

50. Zhang, J.; Xie, Y.; Xia, Y.; Shen, C. Attention Residual Learning for Skin Lesion Classification. IEEE Trans. Med. Imaging 2019, 38, 2092–2103.

51. Afza, F.; Sharif, M.; Kadry, S.; Manogaran, G.; Saba, T.; Ashraf, I.; Damaševičius, R. A framework of human action recognition using length control features fusion and weighted entropy-variances based feature selection. Image Vis. Comput. 2021, 106, 104090.

52. Rashid, M.; Sharif, M.; Javed, K.; Akram, T. Classification of gastrointestinal diseases of stomach from WCE using improved saliency-based method and discriminant features selection. Multimed. Tools Appl. 2019, 78, 27743–27770.

53. Akram, T.; Zhang, Y.-D.; Sharif, M. Attributes based skin lesion detection and recognition: A mask RCNN and transfer learning-based deep learning framework. Pattern Recognit. Lett. 2021, 143, 58–66.

54. Akram, T.; Gul, S.; Shahzad, A.; Altaf, M.; Naqvi, S.S.R.; Damaševičius, R.; Maskeliūnas, R. A novel framework for rapid diagnosis of COVID-19 on computed tomography scans. Pattern Anal. Appl. 2021, 1–14.

55. Zhang, Y.-D.; Khan, S.A.; Attique, M.; Rehman, A.; Seo, S. A resource conscious human action recognition framework using 26-layered deep convolutional neural network. Multimed. Tools Appl. 2021, 80, 35827–35849.

56. Nawaz, M.; Nazir, T.; Javed, A.; Tariq, U.; Yong, H.-S.; Cha, J. An Efficient Deep Learning Approach to Automatic Glaucoma Detection Using Optic Disc and Optic Cup Localization. Sensors 2022, 22, 434.

57. Alqahtani, A.; Khan, A.; Alsubai, S.; Binbusayyis, A.; Ch, M.; Yong, H.-S.; Cha, J. Cucumber Leaf Diseases Recognition Using Multi Level Deep Entropy-ELM Feature Selection. Appl. Sci. 2022, 12, 593.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).