Mobile Contactless Fingerprint Recognition: Implementation, Performance and Usability Aspects

Abstract

1. Introduction

- We present the first fully automated four-finger capturing and preprocessing scheme with integrated quality assessment in the form of an Android app, and we describe every implementation step of the preprocessing pipeline.

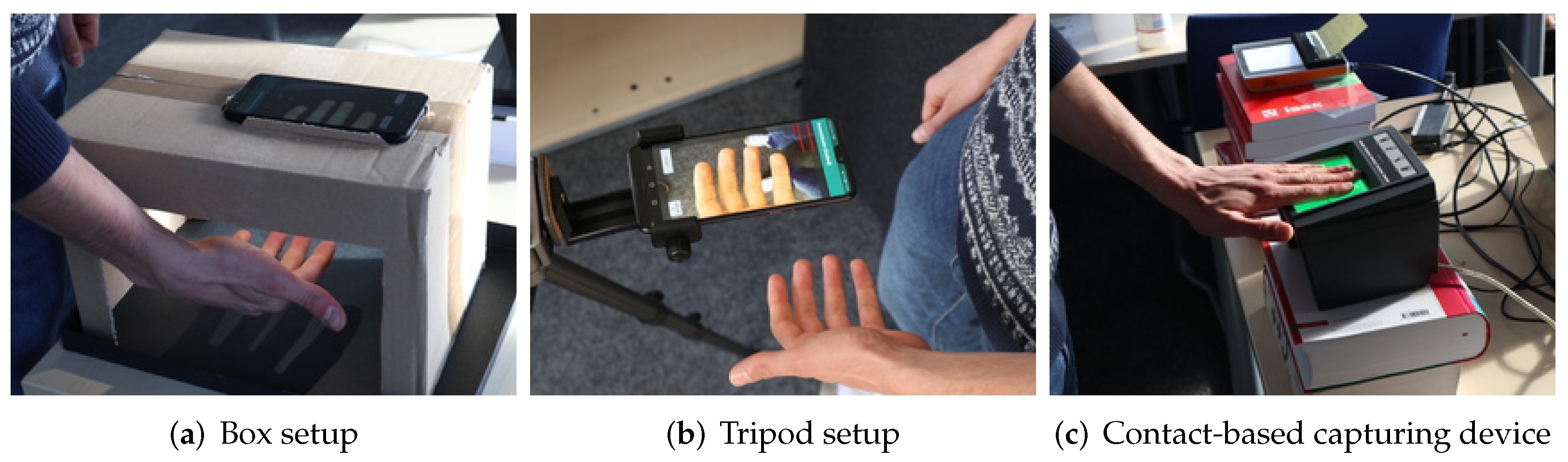

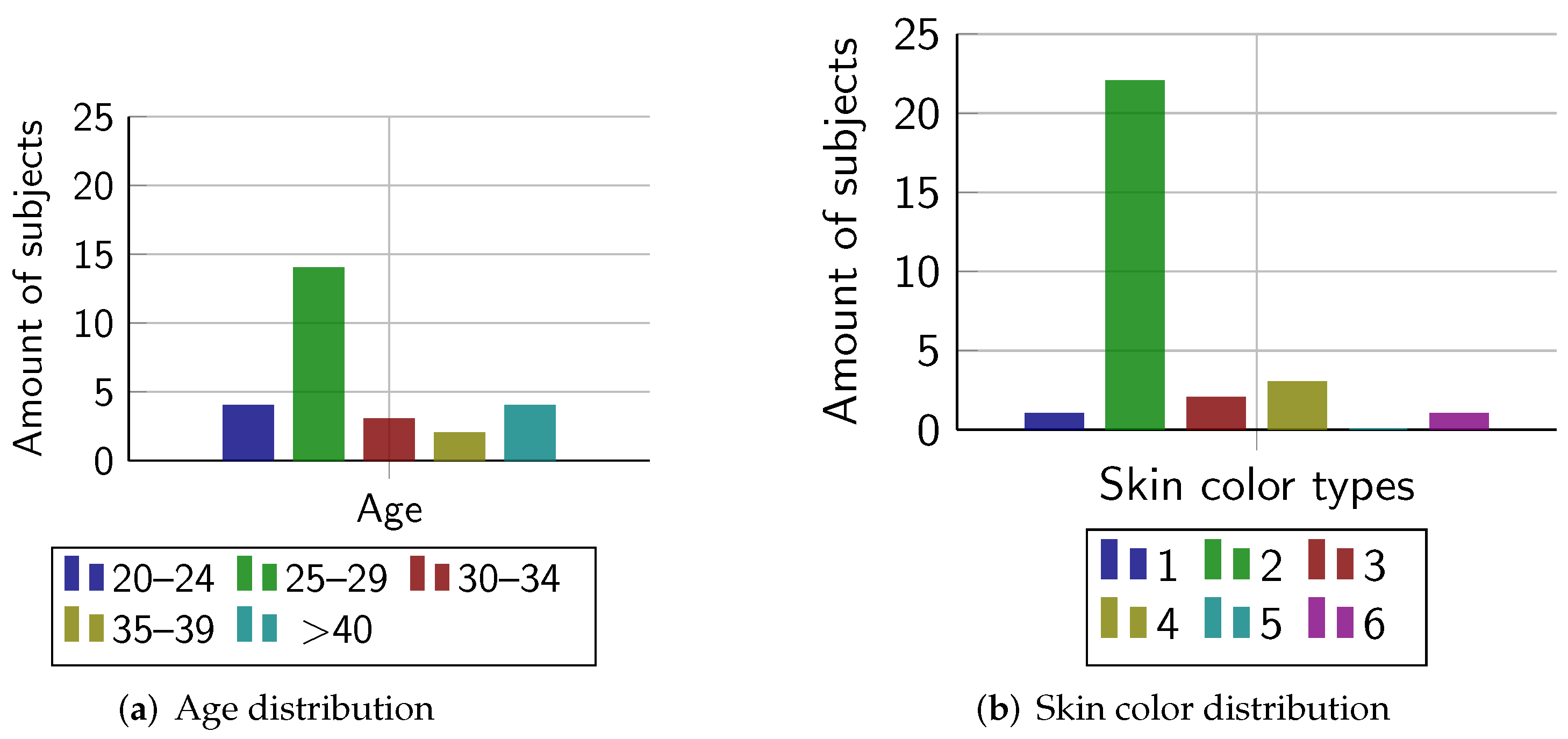

- To benchmark our proposed system, we acquired a database under real-life conditions: 29 subjects were captured with two contactless capturing devices in different environmental situations. Contact-based samples were also acquired as a baseline.

- We further evaluate the biometric performance on the acquired database and measure the interoperability between the capturing device types.

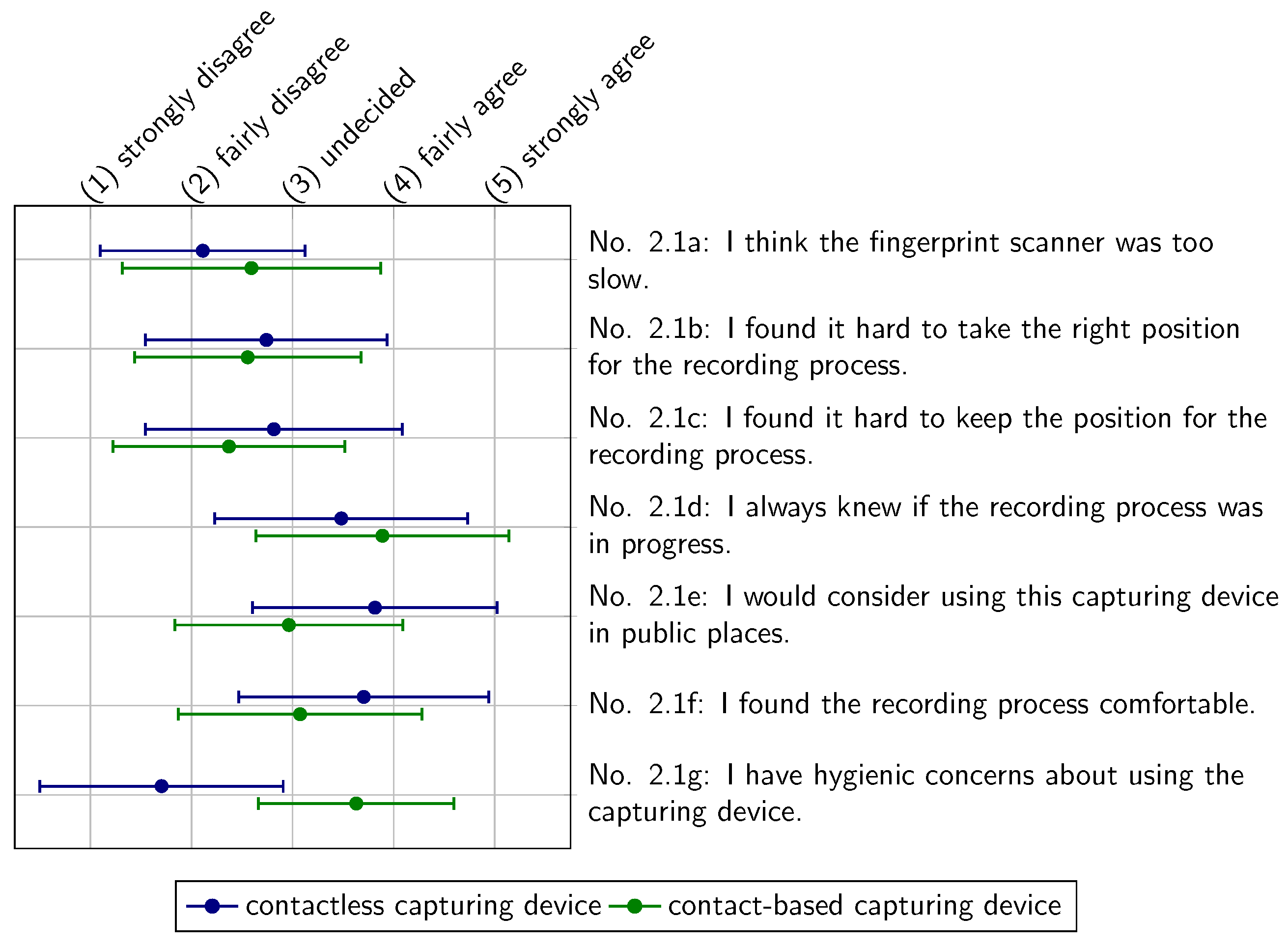

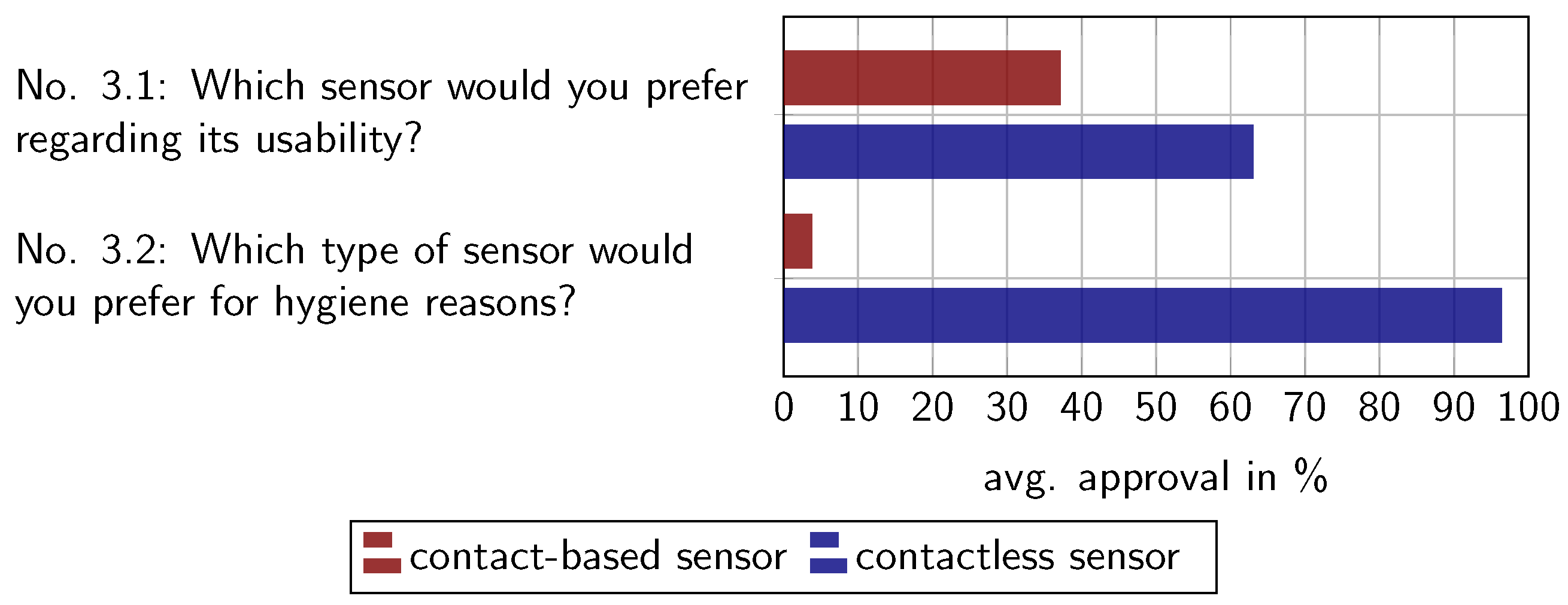

- We provide a first comparative study of the usability of contactless and contact-based fingerprint recognition schemes. The study was conducted after the capture sessions and reports the users’ experiences in terms of hygiene and convenience.

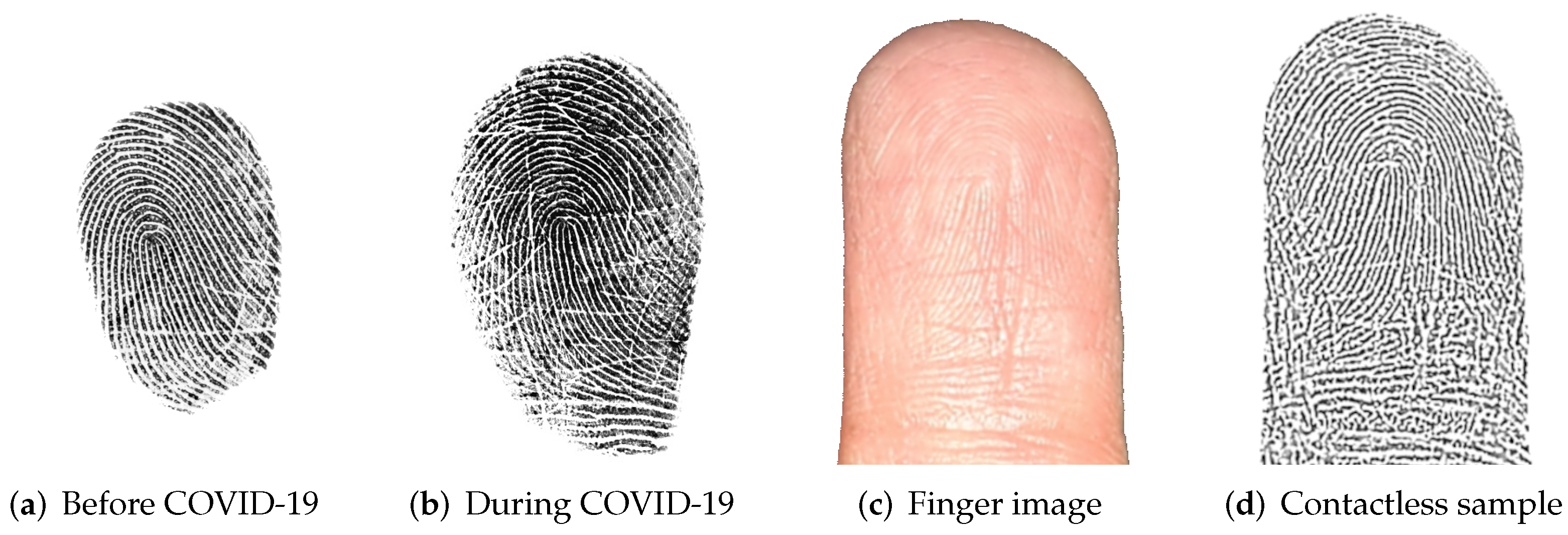

- Based on our experimental results, we discuss the impact of the current COVID-19 pandemic on fingerprint recognition in terms of biometric performance and user acceptance. Furthermore, we summarize implementation aspects that we consider beneficial for mobile contactless fingerprint recognition.

2. Related Work

| Authors | Year | Device Type | Mobile/Stationary | Multi-Finger Capturing | Automatic Capturing | Free Finger Positioning | Quality Assessment | On-Device Processing | Usability Evaluation |
|---|---|---|---|---|---|---|---|---|---|
| Hiew et al. [3] | 2007 | P | S | N | N | N | N | N | N |
| Piuri and Scotti [6] | 2008 | W | S | N | N | N | N | N | N |
| Wang et al. [4] | 2009 | P | S | N | N | N | N | N | N |
| Kumar and Zhou [7] | 2011 | W | S | N | N | N | N | N | N |
| Noh et al. [8] | 2011 | P | S | Y | Y | N | N | N | Y |
| Derawi et al. [9] | 2012 | S | S | N | N | N | N | N | N |
| Stein et al. [10] | 2013 | S | M | N | Y | Y | N | Y | N |
| Raghavendra et al. [11] | 2014 | P | S | N | N | Y | N | N | N |
| Tiwari and Gupta [12] | 2015 | S | M | N | N | Y | N | N | N |
| Sankaran et al. [13] | 2015 | S | M | N | Y | N | N | N | N |
| Carney et al. [14] | 2017 | S | M | Y | Y | N | N | Y | N |
| Deb et al. [15] | 2018 | S | M | N | Y | Y | Y | Y | N |
| Weissenfeld et al. [16] | 2018 | P | M | Y | Y | Y | N | Y | Y |
| Birajadar et al. [17] | 2019 | S | M | N | Y | N | N | N | N |
| Attrish et al. [5] | 2021 | P | S | N | N | N | N | Y | N |
| Kauba et al. [18] | 2021 | S | M | Y | Y | Y | N | Y | N |
| Our method | 2021 | S | M | Y | Y | Y | Y | Y | Y |

3. Mobile Contactless Recognition Pipeline

- An Android app, running on a smartphone, that continuously captures finger images as candidates for the final fingerprints and gives feedback to the user.

- Free positioning of the four inner-hand fingers, without guidelines or a frame.

- An integrated quality assessment that selects the best-suited finger image from the list of candidates.

- A fully automated processing pipeline that turns the selected candidate into fingerprint images ready for the recognition workflow.
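The interplay of continuous capturing, quality assessment, and candidate selection listed above can be illustrated with a short loop. This is a minimal Python sketch, not the app's implementation (which runs on Android): the callback `assess_quality`, the frame budget `max_frames`, and the scoring convention (`None` for a rejected frame, otherwise a score where higher is better) are hypothetical.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

Frame = TypeVar("Frame")


def select_best_candidate(
    frames: Iterable[Frame],
    assess_quality: Callable[[Frame], Optional[float]],
    max_frames: int = 30,
) -> Optional[Tuple[Frame, float]]:
    """Return (frame, score) for the best frame among the first `max_frames`.

    `assess_quality` is a hypothetical callback: it returns None when a frame
    fails a quality check (segmentation, sharpness, size) and a score otherwise.
    """
    best: Optional[Tuple[Frame, float]] = None
    for i, frame in enumerate(frames):
        if i >= max_frames:
            break  # stop after a fixed budget of captured frames
        score = assess_quality(frame)
        if score is None:
            continue  # rejected candidate: keep capturing
        if best is None or score > best[1]:
            best = (frame, score)  # keep only the best candidate seen so far
    return best


# Usage with stand-in frames and precomputed (hypothetical) quality scores.
frames = ["blurred", "ok", "sharp"]
scores = {"blurred": None, "ok": 0.4, "sharp": 0.9}
print(select_best_candidate(frames, scores.get))  # ('sharp', 0.9)
```

Keeping only the best candidate seen so far means memory use does not grow with the number of captured frames; whether the real app stops after a fixed budget or after the first acceptable frame is not stated in this section.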

3.1. Capturing

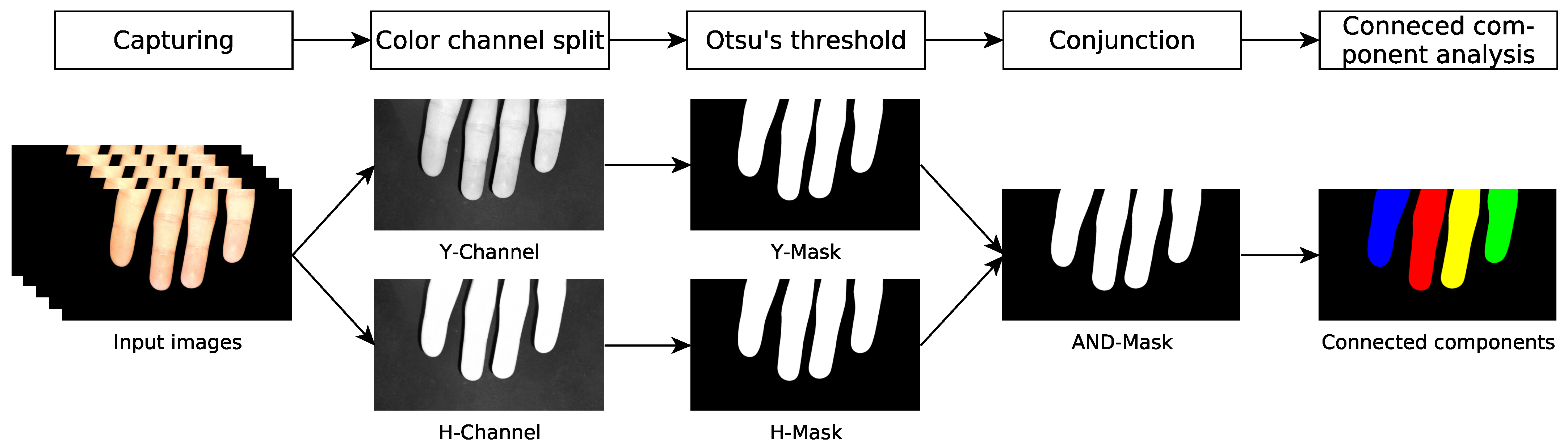

3.2. Segmentation of the Hand Area

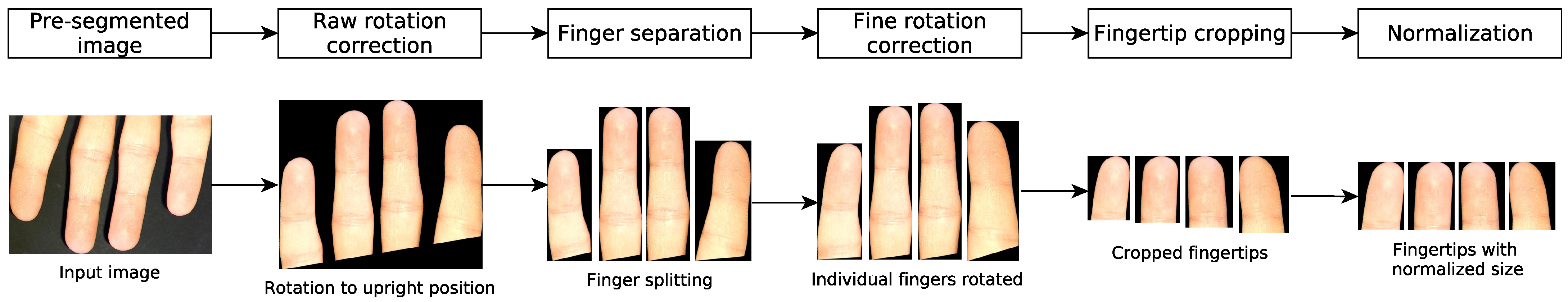

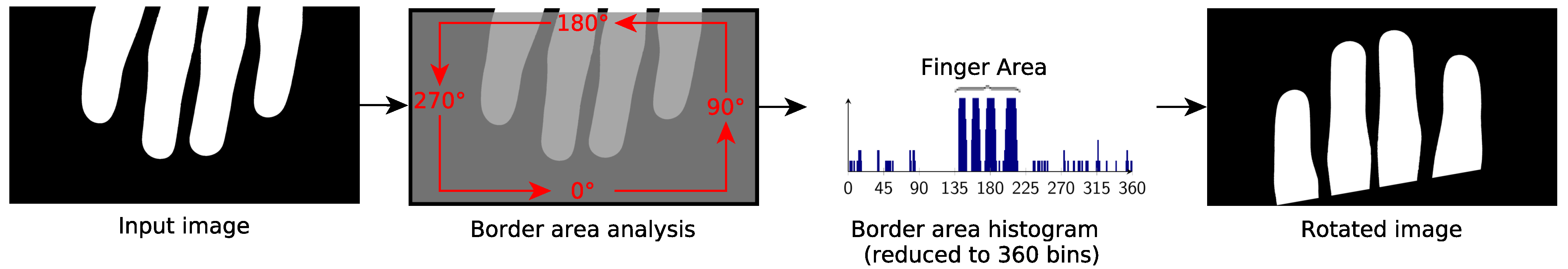

3.3. Rotation Correction, Fingertip Detection, and Normalization

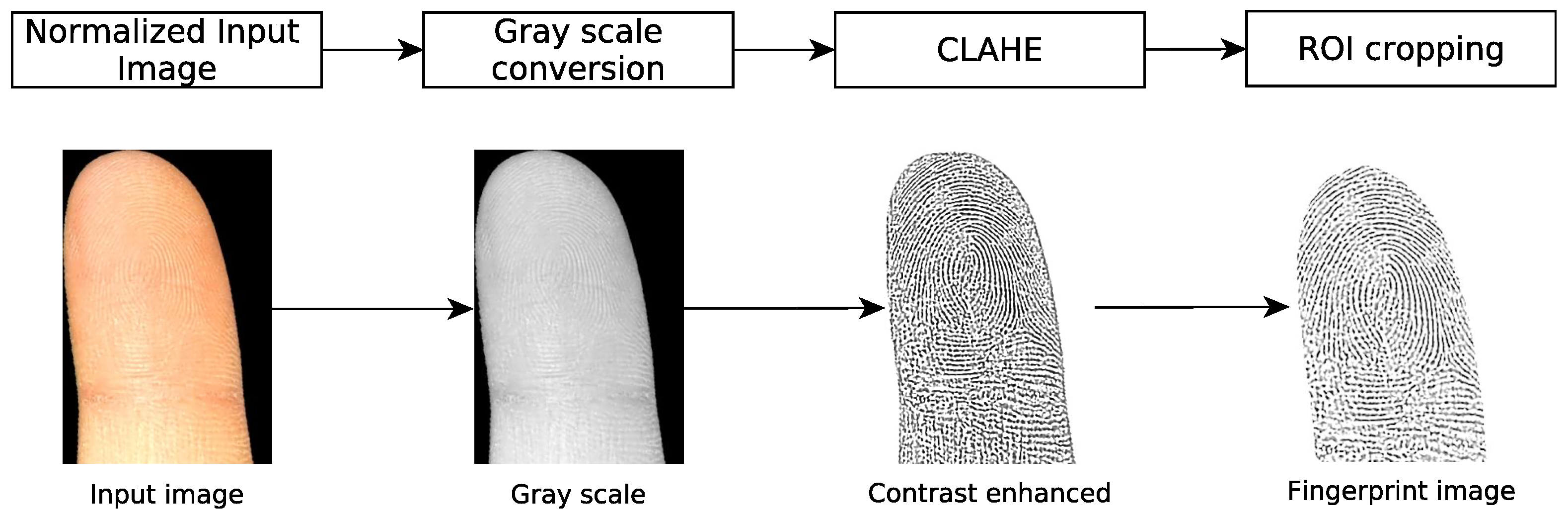

3.4. Fingerprint Processing

3.5. Quality Assessment

- Segmentation: Analysis of the dominant components in the binary mask. The number of dominant contours as well as their shape, size, and position are analyzed, together with their positions relative to each other.

- Capturing: Evaluation of the fingerprint sharpness. A Sobel filter is applied to a 32 × 32 pixel square at the center of the processed grayscale fingerprint image, and a histogram analysis then assesses the sharpness of this region.

- Rotation, cropping: Assessment of the fingerprint size. The size of the fingerprint image after the cropping stage indicates whether the fingerprint image is of sufficient quality.
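The capturing and cropping checks can be sketched in pure Python as follows. The sketch assumes a grayscale image given as a list of rows of 8-bit values. The 32 × 32 center window and the Sobel filter come from the text above; the histogram binning (`bins`), the definition of a "strong edge" bin (`strong_from_bin`), and all thresholds (`min_sharpness`, `min_width`, `min_height`) are hypothetical placeholders, since the exact criteria are not given here.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]  # grayscale image as rows of 8-bit pixel values

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))
MAX_MAG = 8 * 255  # largest possible |Gx| + |Gy| for 8-bit input


def center_crop(img: Image, size: int = 32) -> List[List[float]]:
    """Cut a size x size square out of the image center."""
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError("image is smaller than the analysis window")
    top, left = (h - size) // 2, (w - size) // 2
    return [list(row[left:left + size]) for row in img[top:top + size]]


def sobel_magnitudes(patch: Image) -> List[float]:
    """Approximate gradient magnitude |Gx| + |Gy| at every interior pixel."""
    mags = []
    for y in range(1, len(patch) - 1):
        for x in range(1, len(patch[0]) - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    v = patch[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * v
                    gy += SOBEL_Y[dy][dx] * v
            mags.append(abs(gx) + abs(gy))
    return mags


def sharpness_score(img: Image, bins: int = 16, strong_from_bin: int = 2) -> float:
    """Share of center pixels whose edge strength falls into the upper histogram bins."""
    mags = sobel_magnitudes(center_crop(img))
    hist = [0] * bins
    for m in mags:
        hist[min(int(m * bins / MAX_MAG), bins - 1)] += 1
    return sum(hist[strong_from_bin:]) / len(mags)


def passes_quality_checks(img: Image, min_sharpness: float = 0.3,
                          min_width: int = 200, min_height: int = 300) -> bool:
    """Hypothetical gate combining the size check (after cropping) and the sharpness check."""
    h, w = len(img), len(img[0])
    if w < min_width or h < min_height:
        return False  # fingerprint too small after cropping
    return sharpness_score(img) >= min_sharpness
```

A uniform gray patch has no edges and scores 0.0, whereas a ridge-like stripe pattern puts every center pixel into a strong-edge bin and scores 1.0.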

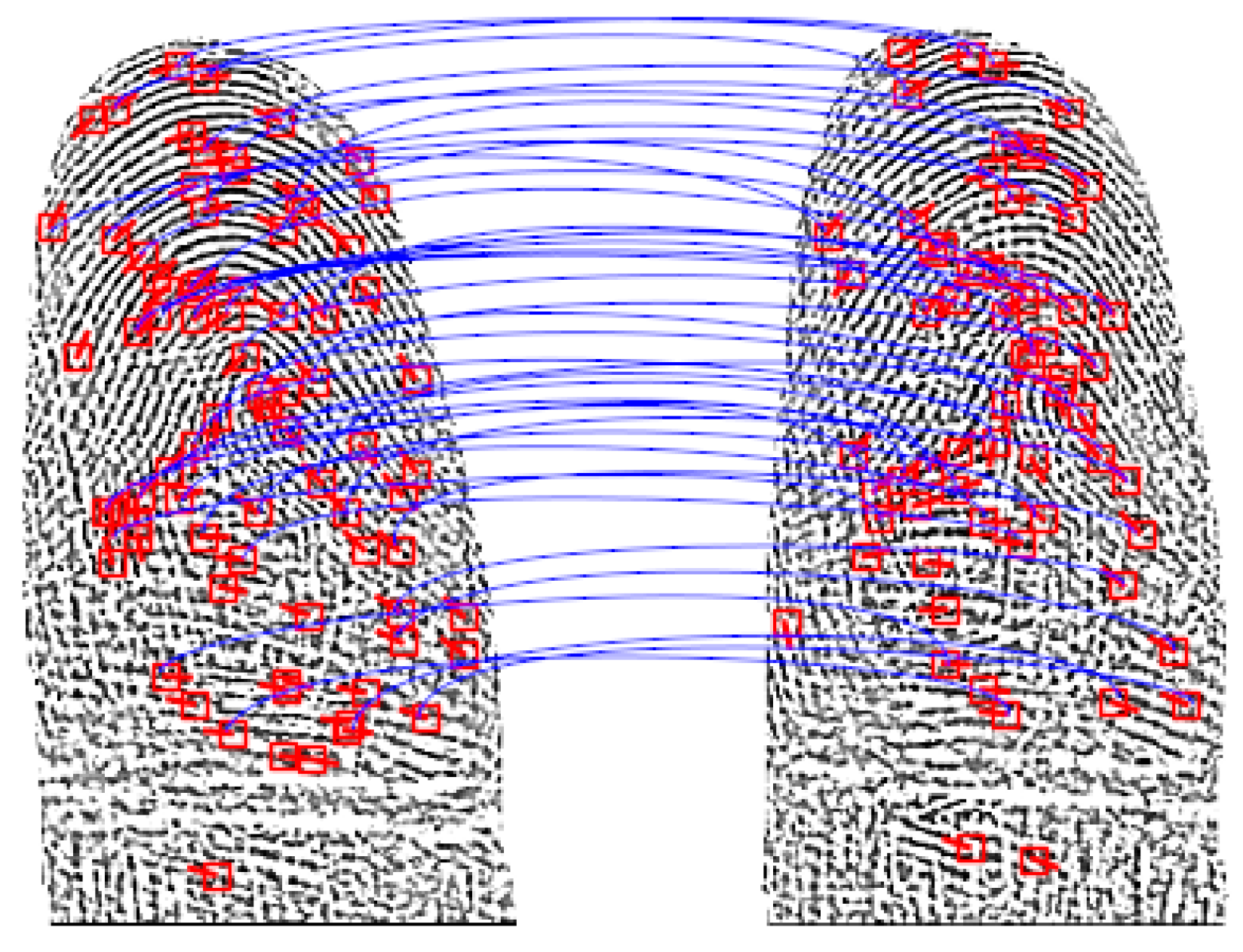

3.6. Feature Extraction and Comparison

4. Experimental Setup

4.1. Database Acquisition

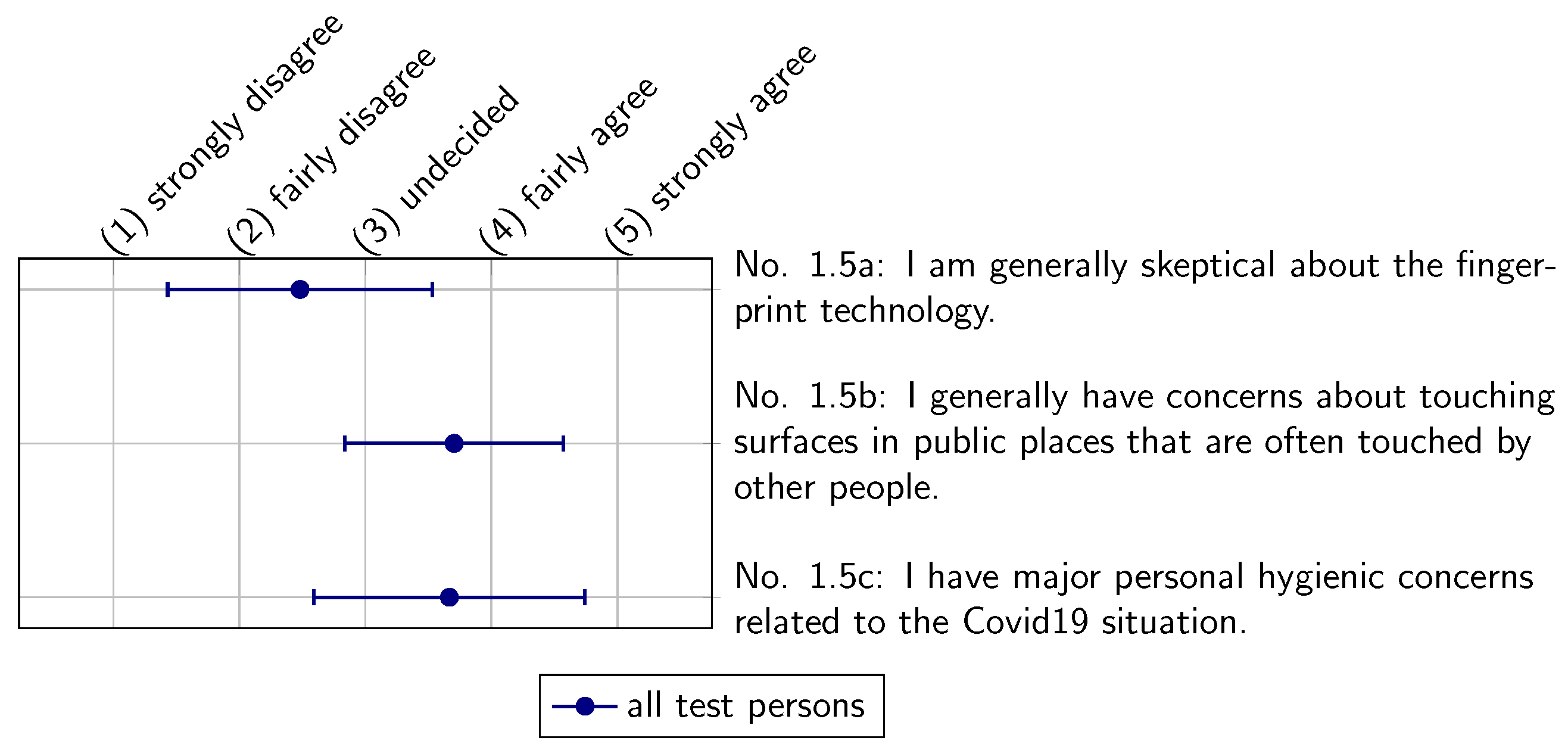

4.2. Usability Study Design

5. Results

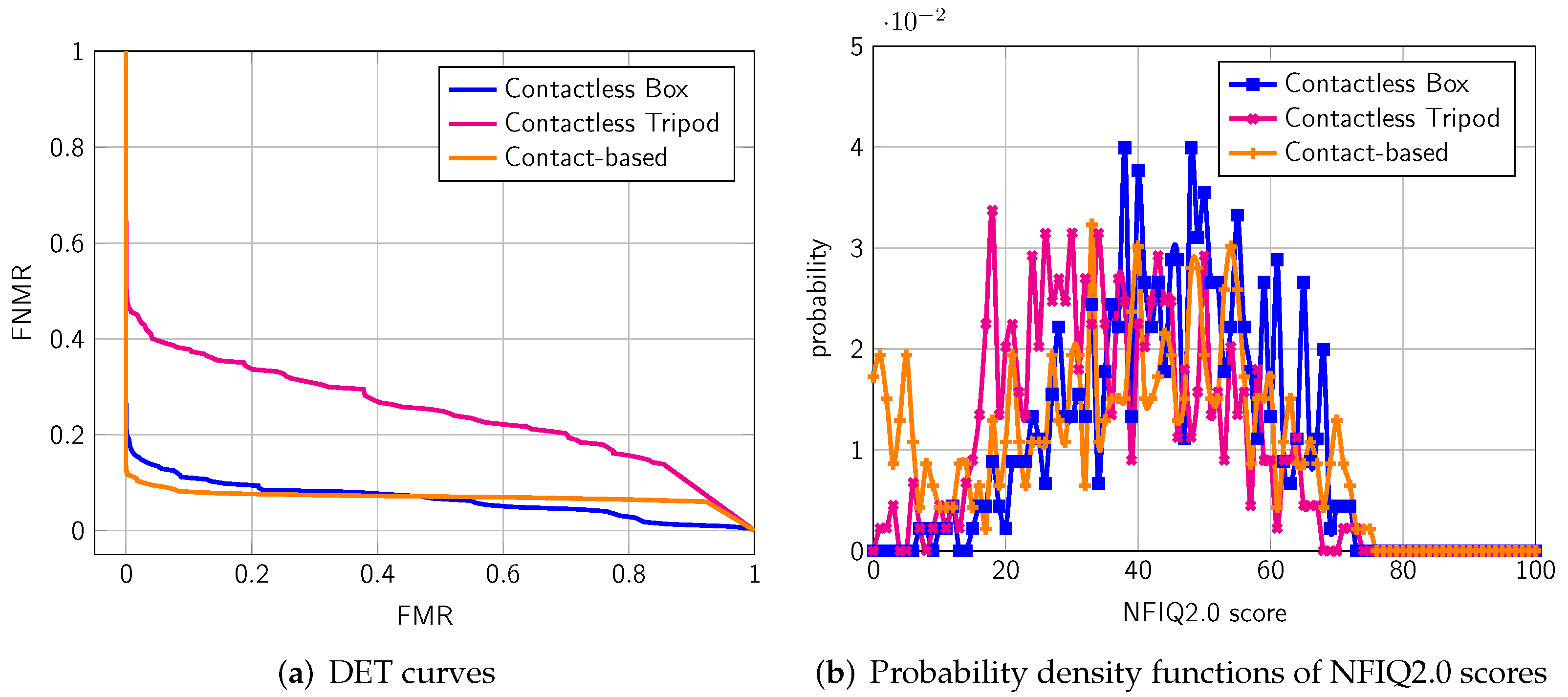

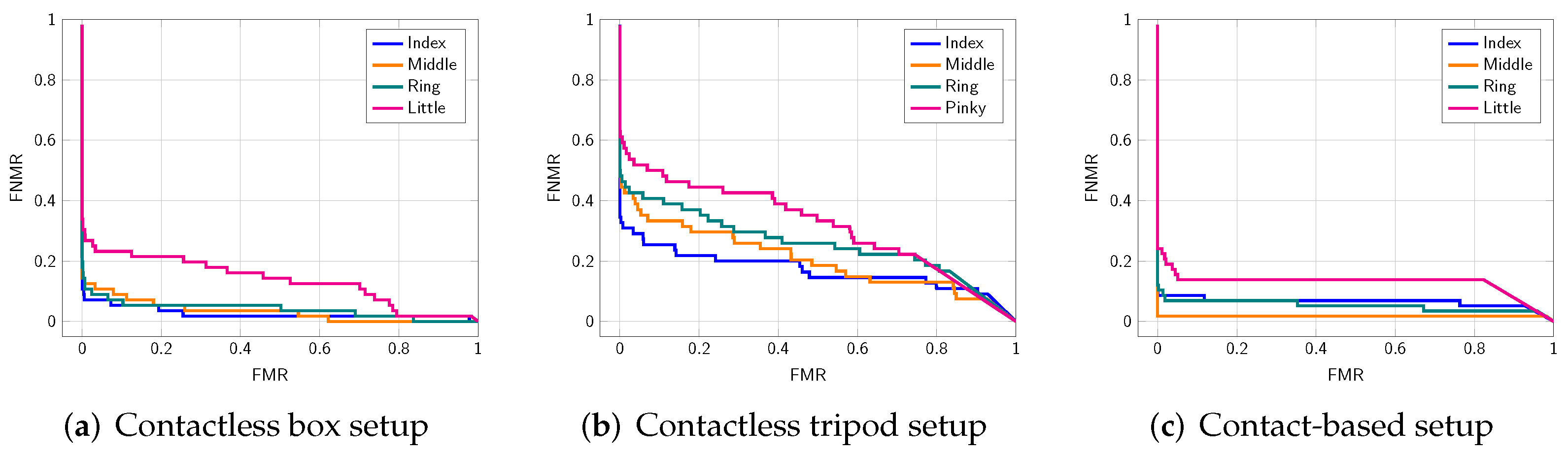

5.1. Biometric Performance

5.2. Usability Study

6. Impact of the COVID-19 Pandemic on Fingerprint Recognition

6.1. Impact of Hand Disinfection on Biometric Performance

6.2. User Acceptance

7. Implementation Aspects

7.1. Four-Finger Capturing

- Faster and more accurate recognition process: Because the fingers cover a larger proportion of the image, focusing algorithms work more precisely. This results in fewer misfocusing and segmentation issues.

- Improved biometric performance: Capturing four fingerprints directly in a single attempt is well suited to biometric fusion. As shown in Table 6, this lowers the equal error rate (EER) without any additional capturing and with very little additional processing.
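The fusion rule behind Table 6 is not described in this section. As an illustration only, the sketch below assumes score-level fusion with the mean (sum) rule over per-finger similarity scores already normalized to [0, 1]; the function name `fuse_scores`, the example scores, and the threshold are hypothetical. It shows why fusion helps: a single failed finger no longer decides the outcome.

```python
from typing import Sequence


def fuse_scores(finger_scores: Sequence[float], rule: str = "mean") -> float:
    """Fuse the comparison scores of the fingers captured in one attempt.

    Assumes every score is a similarity already normalized to [0, 1].
    """
    if not finger_scores:
        raise ValueError("need at least one finger score")
    if rule == "mean":  # sum rule, divided by the number of fingers
        return sum(finger_scores) / len(finger_scores)
    if rule == "max":
        return max(finger_scores)
    raise ValueError(f"unknown fusion rule: {rule!r}")


# A mated comparison in which one poorly captured finger fails:
mated = [0.75, 0.5, 0.75, 0.0]
# A non-mated comparison in which one finger gets an unluckily high score:
non_mated = [0.0, 0.25, 0.0, 0.75]
threshold = 0.4  # hypothetical decision threshold
print(fuse_scores(mated) >= threshold, fuse_scores(non_mated) >= threshold)  # True False
```

Judged finger by finger at the same threshold, the fourth finger would cause a false non-match in the first case and a false match in the second; the fused scores (0.5 and 0.25) avoid both errors.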

7.2. Automatic Capturing and On-Device Processing

7.3. Environmental Influences

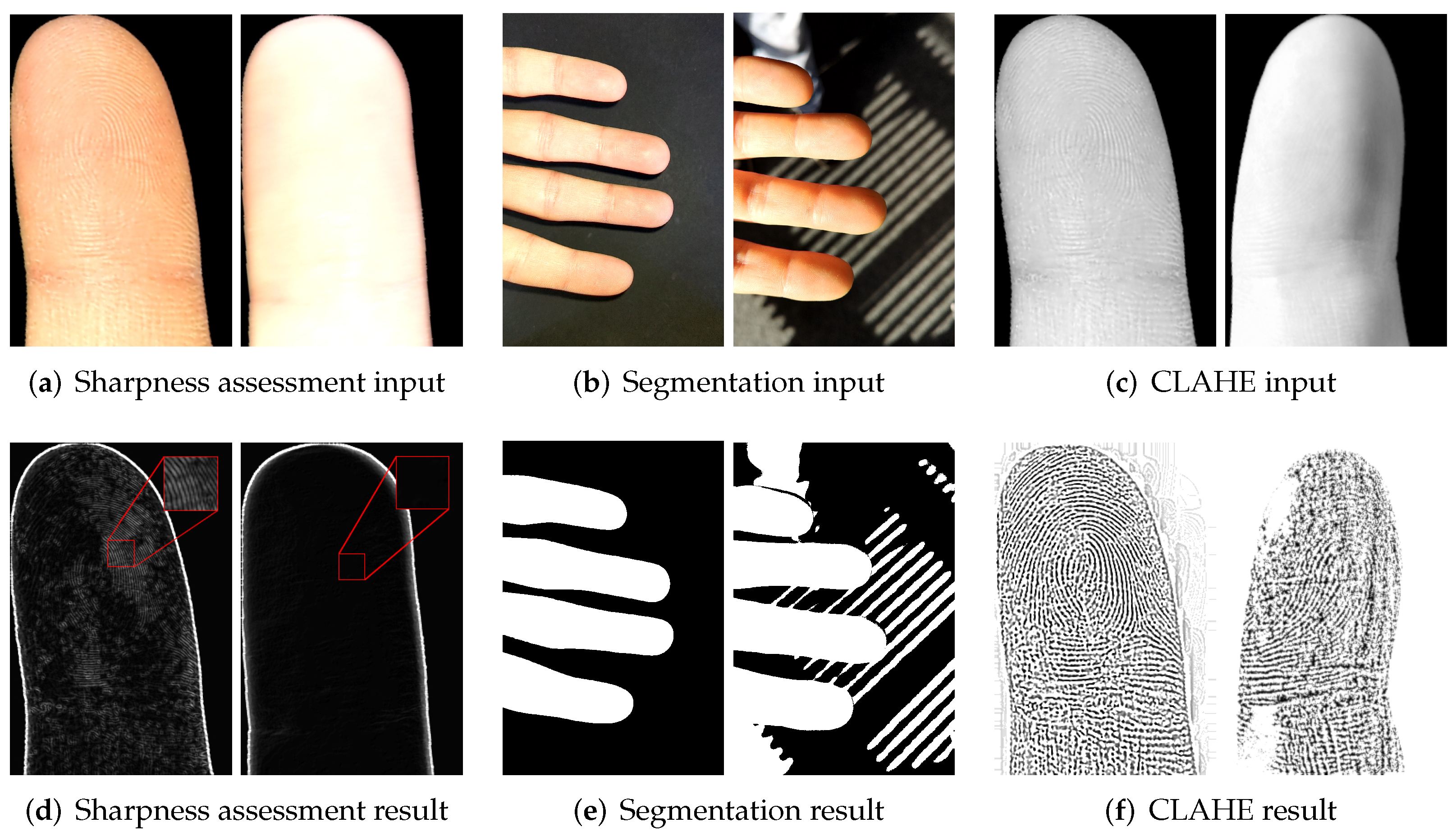

- Focusing on the hand area needs to be very accurate and fast in order to provide sharp finger images: a focus point that is missed by a few millimeters causes a blurred, unusable image. Figure 17a,d illustrate the difference between a sharp and a slightly unfocused finger image with the help of a Sobel filter. Additionally, the focus has to follow the hand movement in order to achieve a continuous stream of sharp images. The autofocus of our tested devices tended to fail under challenging illumination, which was not the case in the constrained environment.

- Segmentation, rotation, and finger separation rely on a binary mask in which the hand area is clearly separated from the background. Figure 17b,e show examples of a successful and an unsuccessful segmentation. Impurities in the segmentation mask lead to connected areas between the fingertips and to artifacts at the border region of the image, which cause inaccurate detection and separation of the fingertips and incorrect rotation results. Because of heterogeneous backgrounds, this happens more often in unconstrained setups.

- Finger image enhancement with the CLAHE (contrast-limited adaptive histogram equalization) algorithm normalizes dark and bright areas of the finger image. Figure 17c,e show that this also works on samples of high contrast. Nevertheless, challenging images may become more blurred.
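A check in the spirit of the segmentation criterion from Section 3.5 can catch the merged-fingertip failure described above by counting connected components in the binary mask. This is a minimal sketch assuming a fingertip region in which exactly four separate finger blobs are expected; the 4-connectivity, the `min_area_ratio` that defines a "dominant" component, and the `max_noise_blobs` limit are hypothetical choices rather than the criteria used in this work.

```python
from collections import deque
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # binary mask: 1 = finger/hand, 0 = background


def connected_components(mask: Mask) -> List[List[Tuple[int, int]]]:
    """Return the 4-connected foreground components, found by breadth-first search."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    components = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(pixels)
    return components


def fingertip_mask_ok(mask: Mask, expected: int = 4,
                      min_area_ratio: float = 0.01, max_noise_blobs: int = 5) -> bool:
    """Accept the mask only if it splits into `expected` dominant blobs and little noise."""
    components = connected_components(mask)
    min_area = min_area_ratio * len(mask) * len(mask[0])
    dominant = [c for c in components if len(c) >= min_area]
    noise_blobs = len(components) - len(dominant)
    # Merged fingertips reduce the number of dominant blobs; impurities add noise blobs.
    return len(dominant) == expected and noise_blobs <= max_noise_blobs


# Four separate vertical "fingers" in a 20 x 20 toy mask.
mask = [[1 if x in (1, 2, 6, 7, 11, 12, 16, 17) else 0 for x in range(20)] for _ in range(20)]
print(fingertip_mask_ok(mask))  # True
bridged = [row[:] for row in mask]
bridged[5][1:8] = [1] * 7  # an impurity connecting the first two fingers
print(fingertip_mask_ok(bridged))  # False
```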

7.4. Feature Extraction Strategies

7.5. Visual Instruction

7.6. Robust Capturing of Different Skin Colors and Finger Characteristics

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Note | Text |
|---|---|
| 1 | Source code will be made available at https://gitlab.com/jannispriesnitz/mtfr (accessed on 8 December 2021). |
| 2 | Due to privacy regulations, it is not possible to make the database collected in this work publicly available. |
| 3 | The original algorithm uses minutiae quadruplets, i.e., it additionally considers the minutiae type (e.g., ridge ending or bifurcation). As the minutiae extractors used here only extract minutiae triplets, the algorithm was modified to ignore the type information. |
| 4 | In this experiment, only comparisons against the same finger ID of a different subject are counted as impostor comparisons (potential false matches). |

References

- [1] Okereafor, K.; Ekong, I.; Okon Markson, I.; Enwere, K. Fingerprint Biometric System Hygiene and the Risk of COVID-19 Transmission. JMIR Biomed. Eng. 2020, 5, e19623.

- [2] Chen, Y.; Parziale, G.; Diaz-Santana, E.; Jain, A.K. 3D touchless fingerprints: Compatibility with legacy rolled images. In Proceedings of the 2006 Biometrics Symposium: Special Session on Research at the Biometric Consortium Conference, Baltimore, MD, USA, 21 August–19 September 2006; pp. 1–6.

- [3] Hiew, B.Y.; Teoh, A.B.J.; Pang, Y.H. Digital camera based fingerprint recognition. In Proceedings of the International Conference on Telecommunications and Malaysia International Conference on Communications, Penang, Malaysia, 14–17 May 2007; pp. 676–681.

- [4] Wang, L.; El-Maksoud, R.H.A.; Sasian, J.M.; Kuhn, W.P.; Gee, K.; Valencia, V.S. A novel contactless aliveness-testing (CAT) fingerprint sensor. In Proceedings of the Novel Optical Systems Design and Optimization XII, San Diego, CA, USA, 3–4 August 2009; Volume 7429, p. 742915.

- [5] Attrish, A.; Bharat, N.; Anand, V.; Kanhangad, V. A Contactless Fingerprint Recognition System. arXiv 2021, arXiv:2108.09048.

- [6] Piuri, V.; Scotti, F. Fingerprint Biometrics via Low-cost Sensors and Webcams. In Proceedings of the Second International Conference on Biometrics: Theory, Applications and Systems (BTAS), Washington, DC, USA, 28 September–1 October 2008; pp. 1–6.

- [7] Kumar, A.; Zhou, Y. Contactless fingerprint identification using level zero features. In Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Colorado Springs, CO, USA, 20–25 June 2011; pp. 114–119.

- [8] Noh, D.; Choi, H.; Kim, J. Touchless sensor capturing five fingerprint images by one rotating camera. Opt. Eng. 2011, 50, 113202.

- [9] Derawi, M.O.; Yang, B.; Busch, C. Fingerprint Recognition with Embedded Cameras on Mobile Phones. In Proceedings of the Security and Privacy in Mobile Information and Communication Systems (ICST), Frankfurt, Germany, 25–27 June 2012; pp. 136–147.

- [10] Stein, C.; Bouatou, V.; Busch, C. Video-based fingerphoto recognition with anti-spoofing techniques with smartphone cameras. In Proceedings of the International Conference of the Biometric Special Interest Group (BIOSIG), Darmstadt, Germany, 5–6 September 2013; pp. 1–12.

- [11] Raghavendra, R.; Raja, K.B.; Surbiryala, J.; Busch, C. A low-cost multimodal biometric sensor to capture finger vein and fingerprint. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–7.

- [12] Tiwari, K.; Gupta, P. A touch-less fingerphoto recognition system for mobile hand-held devices. In Proceedings of the International Conference on Biometrics (ICB), Phuket, Thailand, 19–22 May 2015; pp. 151–156.

- [13] Sankaran, A.; Malhotra, A.; Mittal, A.; Vatsa, M.; Singh, R. On smartphone camera based fingerphoto authentication. In Proceedings of the 7th International Conference on Biometrics Theory, Applications and Systems (BTAS), Arlington, VA, USA, 8–11 September 2015; pp. 1–7.

- [14] Carney, L.A.; Kane, J.; Mather, J.F.; Othman, A.; Simpson, A.G.; Tavanai, A.; Tyson, R.A.; Xue, Y. A Multi-Finger Touchless Fingerprinting System: Mobile Fingerphoto and Legacy Database Interoperability. In Proceedings of the 4th International Conference on Biomedical and Bioinformatics Engineering (ICBBE), Seoul, Korea, 12–14 November 2017; pp. 139–147.

- [15] Deb, D.; Chugh, T.; Engelsma, J.; Cao, K.; Nain, N.; Kendall, J.; Jain, A.K. Matching Fingerphotos to Slap Fingerprint Images. arXiv 2018, arXiv:1804.08122.

- [16] Weissenfeld, A.; Strobl, B.; Daubner, F. Contactless finger and face capturing on a secure handheld embedded device. In Proceedings of the 2018 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 19–23 March 2018; pp. 1321–1326.

- [17] Birajadar, P.; Haria, M.; Kulkarni, P.; Gupta, S.; Joshi, P.; Singh, B.; Gadre, V. Towards smartphone-based touchless fingerprint recognition. Sādhanā 2019, 44, 161.

- [18] Kauba, C.; Söllinger, D.; Kirchgasser, S.; Weissenfeld, A.; Fernández Domínguez, G.; Strobl, B.; Uhl, A. Towards Using Police Officers’ Business Smartphones for Contactless Fingerprint Acquisition and Enabling Fingerprint Comparison against Contact-Based Datasets. Sensors 2021, 21, 2248.

- [19] Priesnitz, J.; Rathgeb, C.; Buchmann, N.; Busch, C. An Overview of Touchless 2D Fingerprint Recognition. EURASIP J. Image Video Process. 2021, 2021, 25.

- [20] Yin, X.; Zhu, Y.; Hu, J. A Survey on 2D and 3D Contactless Fingerprint Biometrics: A Taxonomy, Review, and Future Directions. IEEE Open J. Comput. Soc. 2021, 2, 370–381.

- [21] Hiew, B.Y.; Teoh, A.B.J.; Ngo, D.C.L. Automatic Digital Camera Based Fingerprint Image Preprocessing. In Proceedings of the International Conference on Computer Graphics, Imaging and Visualisation (CGIV), Sydney, Australia, 26–28 July 2006; pp. 182–189.

- [22] Sisodia, D.S.; Vandana, T.; Choudhary, M. A conglomerate technique for finger print recognition using phone camera captured images. In Proceedings of the International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), Chennai, India, 21–22 September 2017; pp. 2740–2746.

- [23] Wang, K.; Cui, H.; Cao, Y.; Xing, X.; Zhang, R. A Preprocessing Algorithm for Touchless Fingerprint Images. In Biometric Recognition; Springer: Cham, Switzerland, 2016; pp. 224–234.

- [24] Malhotra, A.; Sankaran, A.; Mittal, A.; Vatsa, M.; Singh, R. Fingerphoto authentication using smartphone camera captured under varying environmental conditions. In Human Recognition in Unconstrained Environments; Elsevier: Amsterdam, The Netherlands, 2017; pp. 119–144.

- [25] Raghavendra, R.; Busch, C.; Yang, B. Scaling-robust fingerprint verification with smartphone camera in real-life scenarios. In Proceedings of the Sixth International Conference on Biometrics: Theory, Applications and Systems (BTAS), Arlington, VA, USA, 29 September–2 October 2013; pp. 1–8.

- [26] Stein, C.; Nickel, C.; Busch, C. Fingerphoto recognition with smartphone cameras. In Proceedings of the International Conference of Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 6–7 September 2012; pp. 1–12.

- [27] NIST. NFIQ2.0: NIST Fingerprint Image Quality 2.0. Available online: https://github.com/usnistgov/NFIQ2 (accessed on 9 January 2022).

- [28] Priesnitz, J.; Rathgeb, C.; Buchmann, N.; Busch, C. Touchless Fingerprint Sample Quality: Prerequisites for the Applicability of NFIQ2.0. In Proceedings of the International Conference of the Biometrics Special Interest Group (BIOSIG), Online, 16–18 September 2020; pp. 1–5.

- [29] Lin, C.; Kumar, A. Contactless and Partial 3D Fingerprint Recognition using Multi-view Deep Representation. Pattern Recognit. 2018, 83, 314–327.

- [30] ISO/IEC 19794-4:2011; Information Technology – Biometric Data Interchange Formats – Part 4: Finger Image Data; Standard, International Organization for Standardization: Geneva, Switzerland, 2011.

- [31] ISO/IEC 19795-1:2021; Information Technology – Biometric Performance Testing and Reporting – Part 1: Principles and Framework; ISO/IEC: Geneva, Switzerland, 2021.

- [32] Fitzpatrick, T.B. The Validity and Practicality of Sun-Reactive Skin Types I Through VI. Arch. Dermatol. 1988, 124, 869–871.

- [33] Furman, S.M.; Stanton, B.C.; Theofanos, M.F.; Libert, J.M.; Grantham, J.D. Contactless Fingerprint Devices Usability Test; Technical Report NIST IR 8171; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2017.

- [34] Porst, R. Fragebogen: Ein Arbeitsbuch, 4th ed.; Studienskripten zur Soziologie; Springer VS: Wiesbaden, Germany, 2014; OCLC: 870294421.

- [35] Rohrmann, B. Empirische Studien zur Entwicklung von Antwortskalen für die sozialwissenschaftliche Forschung. Z. Für Sozialpsychologie 1978, 9, 222–245.

- [36] Tang, Y.; Gao, F.; Feng, J.; Liu, Y. FingerNet: An unified deep network for fingerprint minutiae extraction. In Proceedings of the International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 108–116.

- [37] Važan, R. SourceAFIS—Opensource Fingerprint Matcher. 2019. Available online: https://sourceafis.machinezoo.com/ (accessed on 9 January 2022).

- [38] Ortega-Garcia, J.; Fierrez-Aguilar, J.; Simon, D.; Gonzalez, J.; Faundez-Zanuy, M.; Espinosa, V.; Satue, A.; Hernaez, I.; Igarza, J.; Vivaracho, C.; et al. MCYT baseline corpus: A bimodal biometric database. IEE Proc. Vis. Image Signal Process. 2003, 150, 395–401.

- [39] Cappelli, R.; Ferrara, M.; Franco, A.; Maltoni, D. Fingerprint Verification Competition 2006. Biom. Technol. Today 2007, 15, 7–9.

- [40] Kumar, A. The Hong Kong Polytechnic University Contactless 2D to Contact-Based 2D Fingerprint Images Database Version 1.0. 2017. Available online: http://www4.comp.polyu.edu.hk/csajaykr/fingerprint.htm (accessed on 9 January 2022).

- [41] Mann, H.B.; Whitney, D.R. On a Test of Whether one of Two Random Variables is Stochastically Larger than the Other. Ann. Math. Stat. 1947, 18, 50–60.

- [42] Olsen, M.A.; Dusio, M.; Busch, C. Fingerprint skin moisture impact on biometric performance. In Proceedings of the 3rd International Workshop on Biometrics and Forensics (IWBF 2015), Gjovik, Norway, 3–4 March 2015; pp. 1–6.

- [43] O’Connell, K.A.; Enos, C.W.; Prodanovic, E. Case Report: Handwashing-Induced Dermatitis During the COVID-19 Pandemic. Am. Fam. Physician 2020, 102, 327–328.

- [44] Tan, S.W.; Oh, C.C. Contact Dermatitis from Hand Hygiene Practices in the COVID-19 Pandemic. Ann. Acad. Med. Singap. 2020, 49, 674–676.

- [45] Otter, J.A.; Donskey, C.; Yezli, S.; Douthwaite, S.; Goldenberg, S.D.; Weber, D.J. Transmission of SARS and MERS coronaviruses and influenza virus in healthcare settings: The possible role of dry surface contamination. J. Hosp. Infect. 2016, 92, 235–250.

- [46] European Union. Commission Implementing Decision (EU) 2019/329 of 25 February 2019 laying down the specifications for the quality, resolution and use of fingerprints and facial image for biometric verification and identification in the Entry/Exit System (EES). Off. J. Eur. Union 2019, 57, 18–28.

- [47] VeriFinger SDK; Neurotechnology: Vilnius, Lithuania, 2010.

- [48] Vyas, R.; Kumar, A. A Collaborative Approach using Ridge-Valley Minutiae for More Accurate Contactless Fingerprint Identification. arXiv 2019, arXiv:1909.06045.

| Device | Google Pixel 4 | Huawei P20 Pro |
|---|---|---|
| Chipset | Snapdragon 855 | Kirin 970 |
| CPU | Octa-core | |
| RAM | 6 GB | |
| Camera | 12.2 MP, f/1.7, 27 mm | 40 MP, f/1.8, 27 mm |
| Flash mode | Always on | |
| Avg. system load | ∼84% | ∼73% |

| Type | Setup | Device | Subjects Captured | Rounds | Samples |
|---|---|---|---|---|---|
| Contactless | box | Google Pixel 4 | 28 | 2 | 448 |
| Contactless | tripod | Huawei P20 Pro | 28 | 2 | 448 |
| Contact-based | - | Crossmatch Guardian 100 | 29 | 2 | 464 |
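The sample counts in the table above appear consistent with eight fingers per subject and round (for example, the four inner-hand fingers of both hands), which matches the 8-finger fusion setting; the per-round finger count is an inference from the numbers, not stated in the table:

```latex
\begin{aligned}
\text{samples} &= \text{subjects} \times \text{rounds} \times \text{fingers per round} \\
\text{contactless (box, tripod)}: \quad 28 \times 2 \times 8 &= 448 \\
\text{contact-based}: \quad 29 \times 2 \times 8 &= 464
\end{aligned}
```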

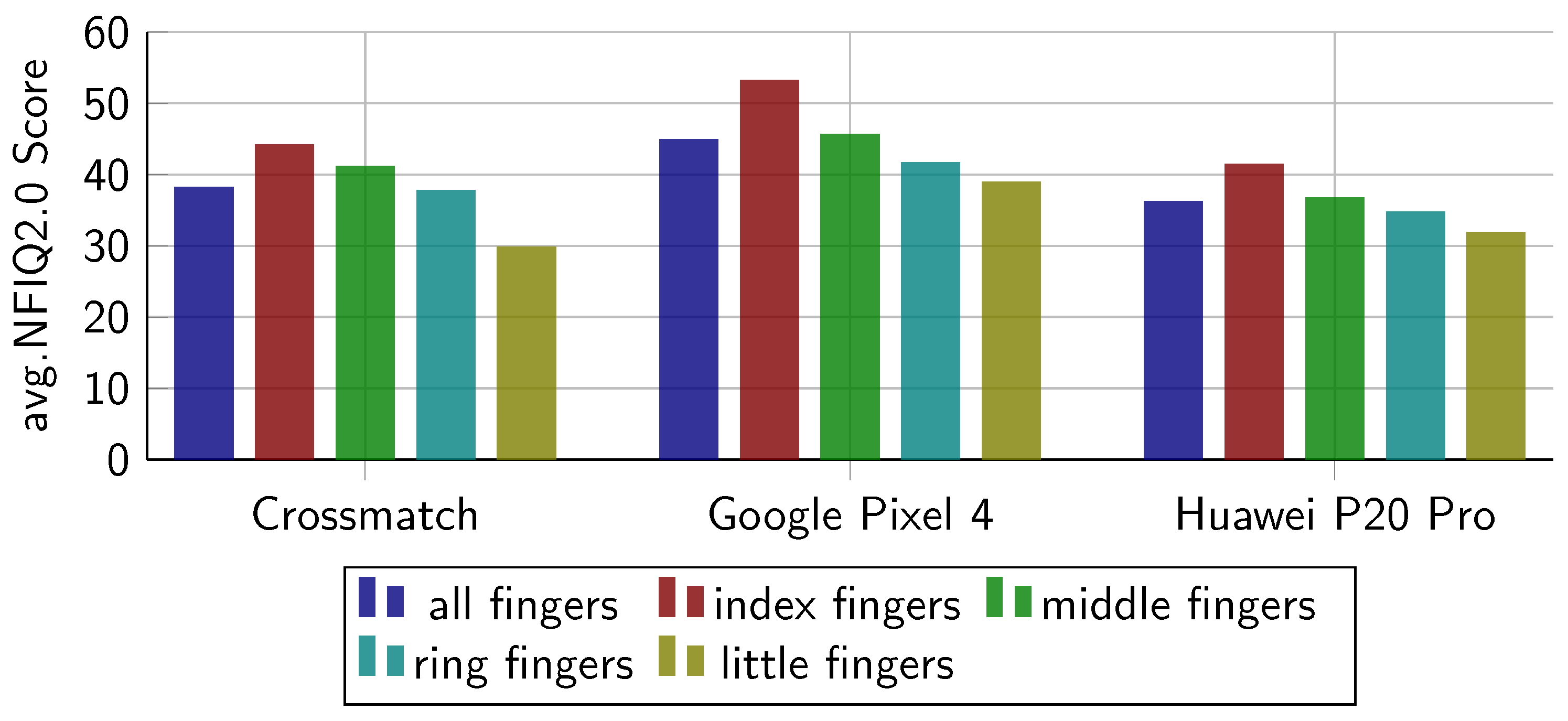

| Capturing Device | Subset | Avg. NFIQ2.0 Score | EER (%) |
|---|---|---|---|
| Contactless box | All fingers | 44.80 (±13.51) | 10.71 |
| Contactless tripod | All fingers | 36.15 (±14.45) | 30.41 |
| Contact-based | All fingers | 38.15 (±19.33) | 8.19 |
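The EER values reported in these tables correspond to the operating point at which the false match rate (FMR) equals the false non-match rate (FNMR). A minimal sketch of how such a value can be approximated from lists of mated and non-mated similarity scores is shown below: it sweeps the decision threshold over the observed scores and averages FMR and FNMR where the two are closest. The function name and the toy scores are hypothetical, and the evaluation in this work may have used a different interpolation.

```python
from typing import Sequence, Tuple


def equal_error_rate(mated: Sequence[float], non_mated: Sequence[float]) -> Tuple[float, float]:
    """Approximate the EER from similarity scores (accept if score >= threshold).

    Returns (eer, threshold), where eer is the mean of FMR and FNMR at the
    observed threshold at which the two error rates are closest.
    """
    if not mated or not non_mated:
        raise ValueError("need both mated and non-mated scores")
    best = None  # (gap between FMR and FNMR, EER estimate, threshold)
    for t in sorted(set(mated) | set(non_mated)):
        fnmr = sum(s < t for s in mated) / len(mated)  # mated pairs wrongly rejected
        fmr = sum(s >= t for s in non_mated) / len(non_mated)  # non-mated pairs wrongly accepted
        gap = abs(fmr - fnmr)
        if best is None or gap < best[0]:
            best = (gap, (fmr + fnmr) / 2, t)
    return best[1], best[2]


mated_scores = [0.4, 0.6, 0.8, 0.9]
non_mated_scores = [0.1, 0.3, 0.5, 0.7]
print(equal_error_rate(mated_scores, non_mated_scores))  # (0.25, 0.6)
```

With perfectly separated score distributions the sweep finds a threshold with FMR = FNMR = 0, which is how an EER of 0.00% (as for the 8-finger fusion) arises.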

| Capturing Device | Fingers | Avg. NFIQ2.0 Score | EER (%) |
|---|---|---|---|
| Contactless box | Index fingers | 53.16 (±11.27) | 7.14 |
| Contactless box | Middle fingers | 45.59 (±11.06) | 8.91 |
| Contactless box | Ring fingers | 41.57 (±12.89) | 7.14 |
| Contactless box | Little fingers | 38.88 (±14.21) | 21.43 |
| Contactless tripod | Index fingers | 41.38 (±14.29) | 21.81 |
| Contactless tripod | Middle fingers | 36.68 (±13.01) | 28.58 |
| Contactless tripod | Ring fingers | 34.68 (±14.28) | 29.62 |
| Contactless tripod | Little fingers | 31.79 (±14.63) | 38.98 |
| Contact-based | Index fingers | 44.06 (±17.53) | 8.62 |
| Contact-based | Middle fingers | 41.08 (±19.71) | 1.72 |
| Contact-based | Ring fingers | 37.68 (±17.08) | 6.90 |
| Contact-based | Little fingers | 29.78 (±19.94) | 13.79 |

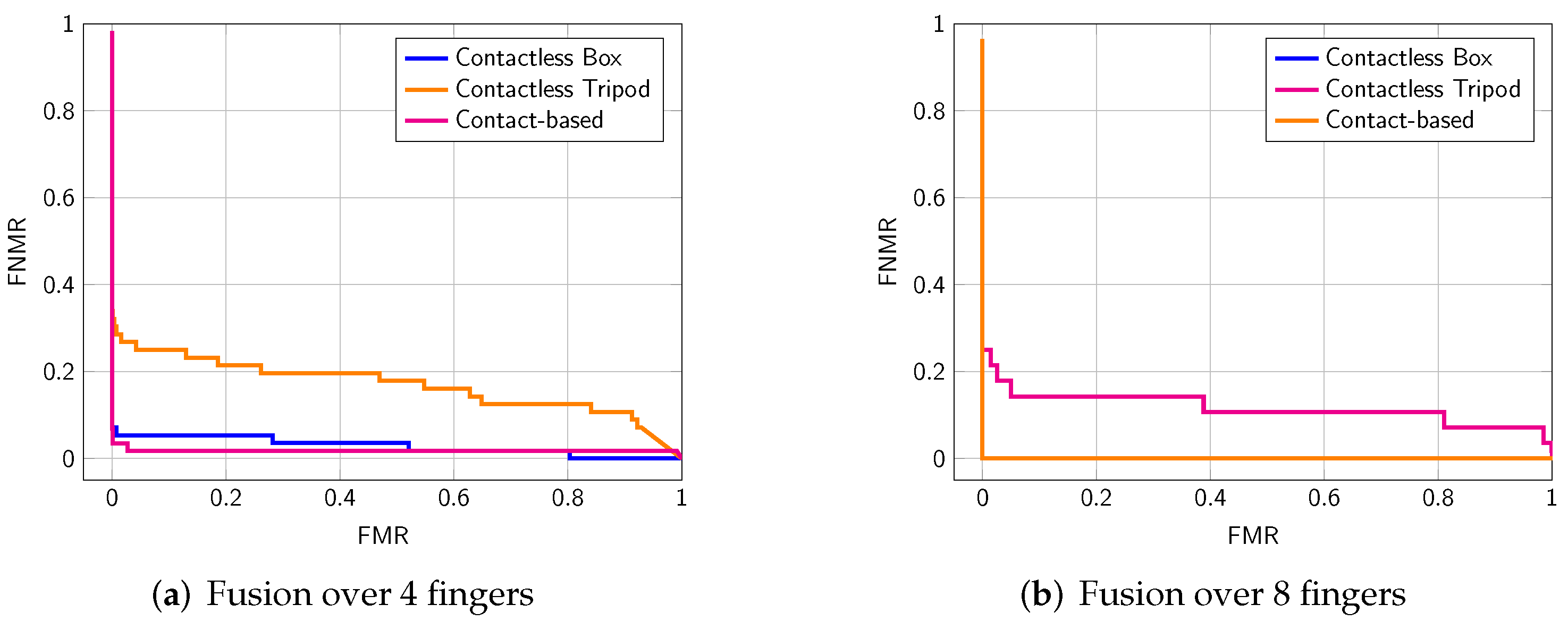

| Capturing Device | Fusion Approach | EER (%) |
|---|---|---|
| Contactless box | 4 fingers | 5.36 |
| Contactless box | 8 fingers | 0.00 |
| Contactless tripod | 4 fingers | 21.42 |
| Contactless tripod | 8 fingers | 14.29 |
| Contact-based | 4 fingers | 2.22 |
| Contact-based | 8 fingers | 0.00 |

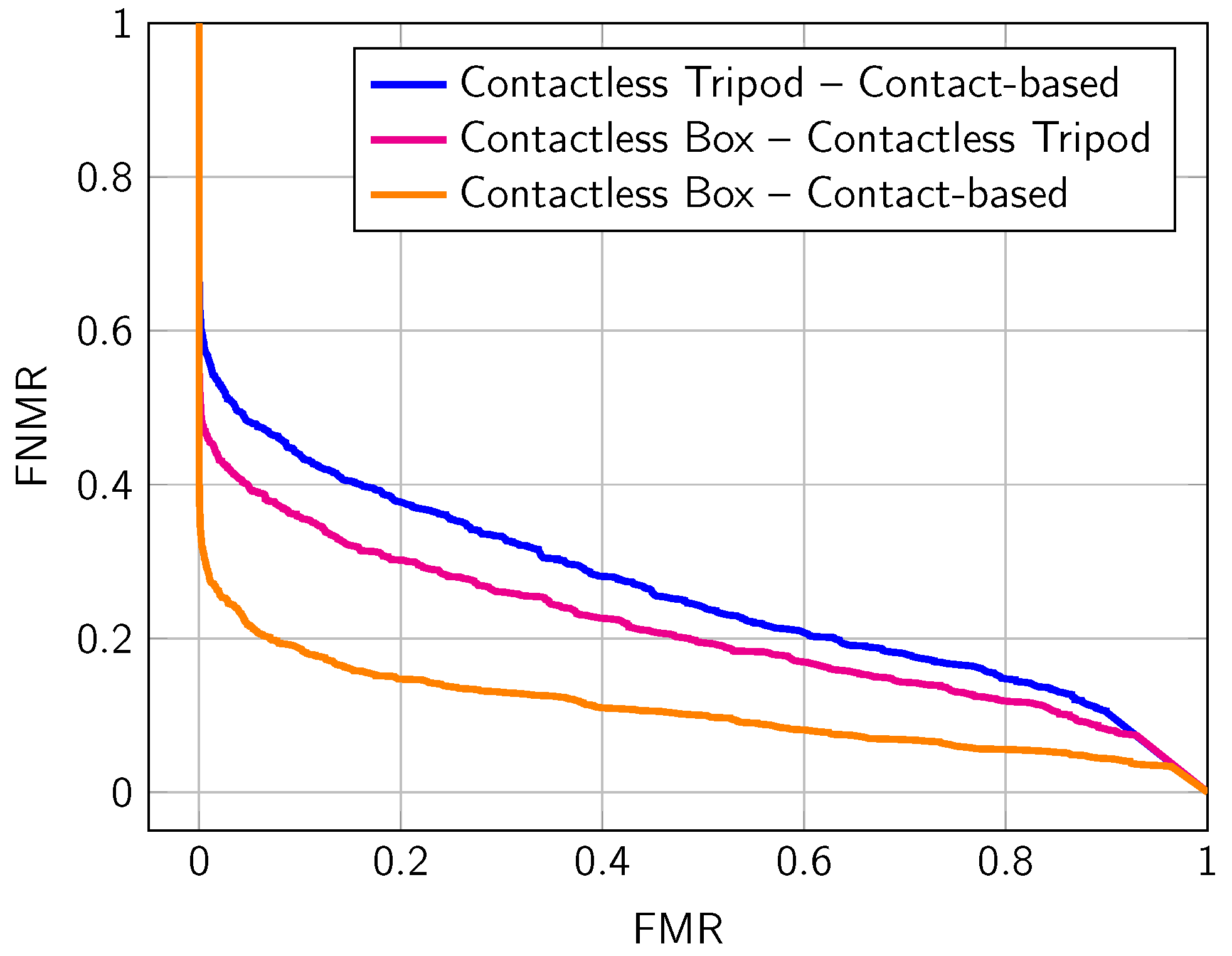

| Capturing Device A | Capturing Device B | EER (%) |
|---|---|---|
| Contactless box | Contactless tripod | 27.27 |
| Contactless box | Contact-based | 15.71 |
| Contactless tripod | Contact-based | 32.02 |

| Database | Subset | Avg. NFIQ2.0 Score | EER (%) |
|---|---|---|---|
| MCYT | dp | 37.58 (±15.17) | 0.48 |
| MCYT | pb | 33.02 (±13.99) | 1.35 |
| FVC06 | DB2-A | 36.07 (±9.07) | 0.15 |
| PolyU | Contactless session 1 | 47.71 (±10.86) | 3.91 |
| PolyU | Contactless session 2 | 47.08 (±13.21) | 3.17 |
| Our database | Contact-based | 38.15 (±19.33) | 8.19 |
| Our database | Contactless box | 44.80 (±13.51) | 10.71 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Priesnitz, J.; Huesmann, R.; Rathgeb, C.; Buchmann, N.; Busch, C. Mobile Contactless Fingerprint Recognition: Implementation, Performance and Usability Aspects. Sensors 2022, 22, 792. https://doi.org/10.3390/s22030792
