Embedded Sensing System for Recognizing Citrus Flowers Using Cascaded Fusion YOLOv4-CF + FPGA

Abstract

1. Introduction

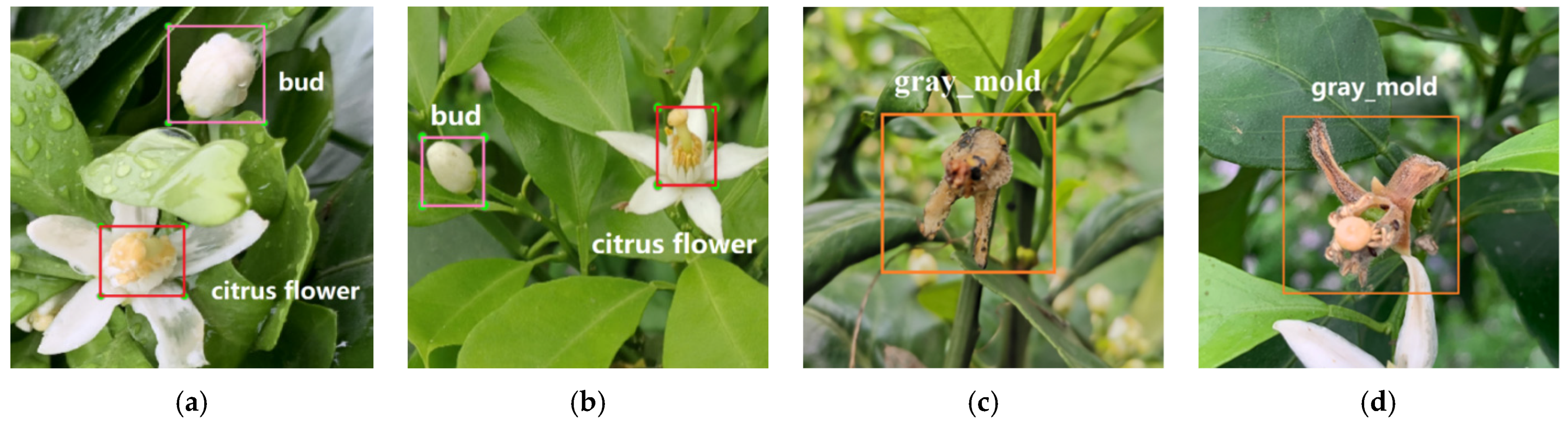

- A lightweight object recognition model using cascade fusion YOLOv4-CF is proposed, which recognizes multi-type objects in their natural environments, such as citrus buds, citrus flowers, and gray mold.

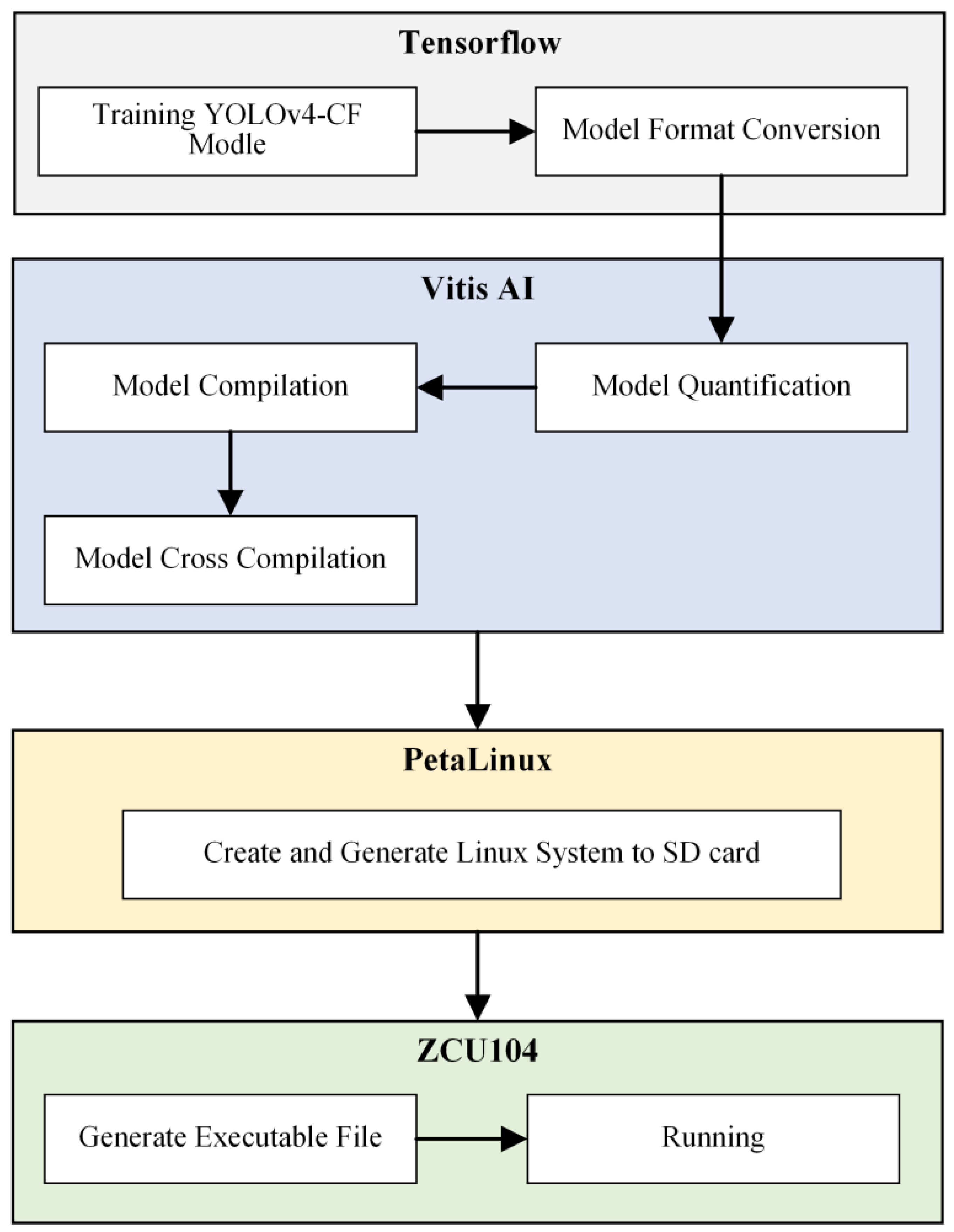

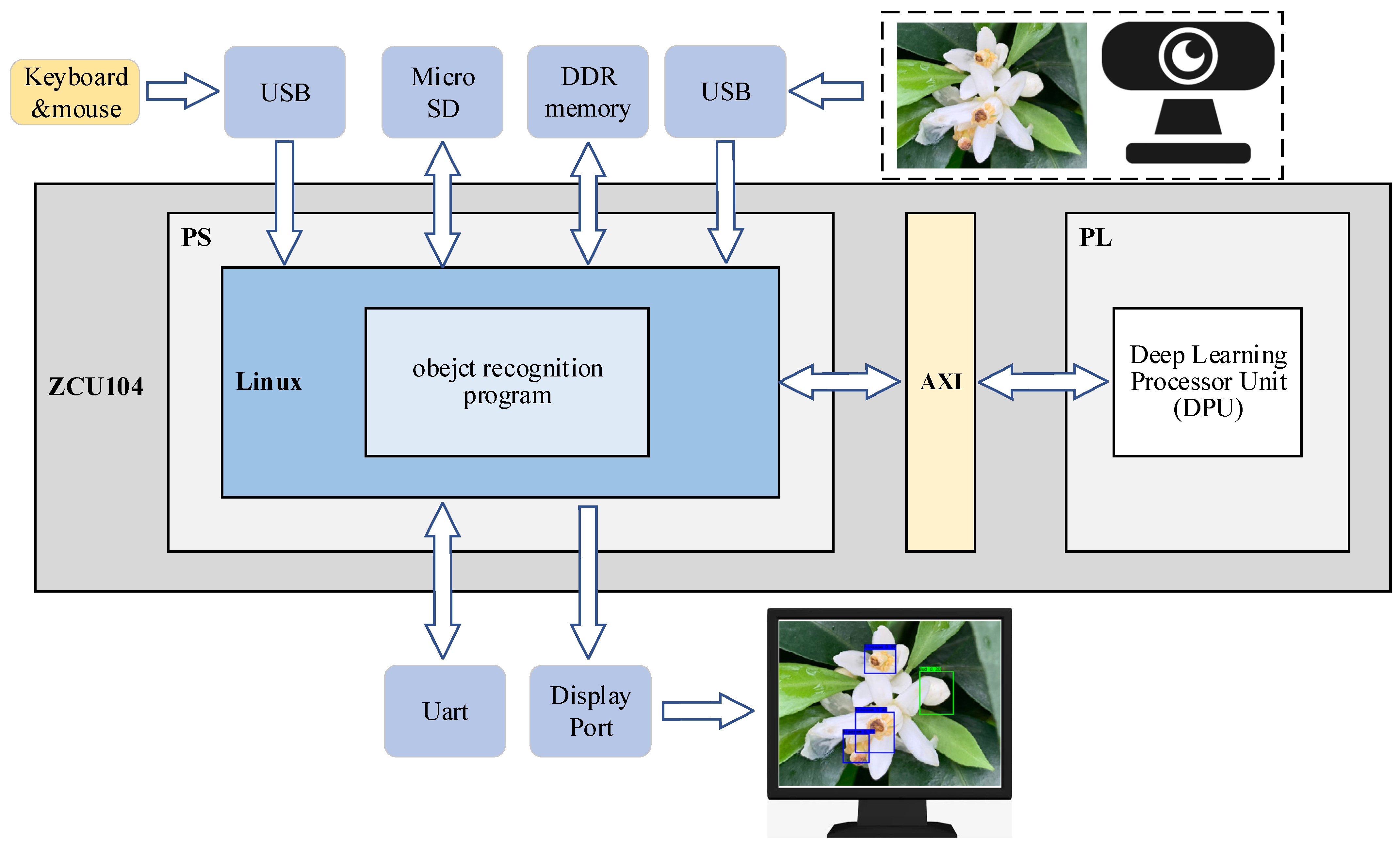

- A method for deploying convolutional neural networks to an FPGA embedded sensing system is provided.

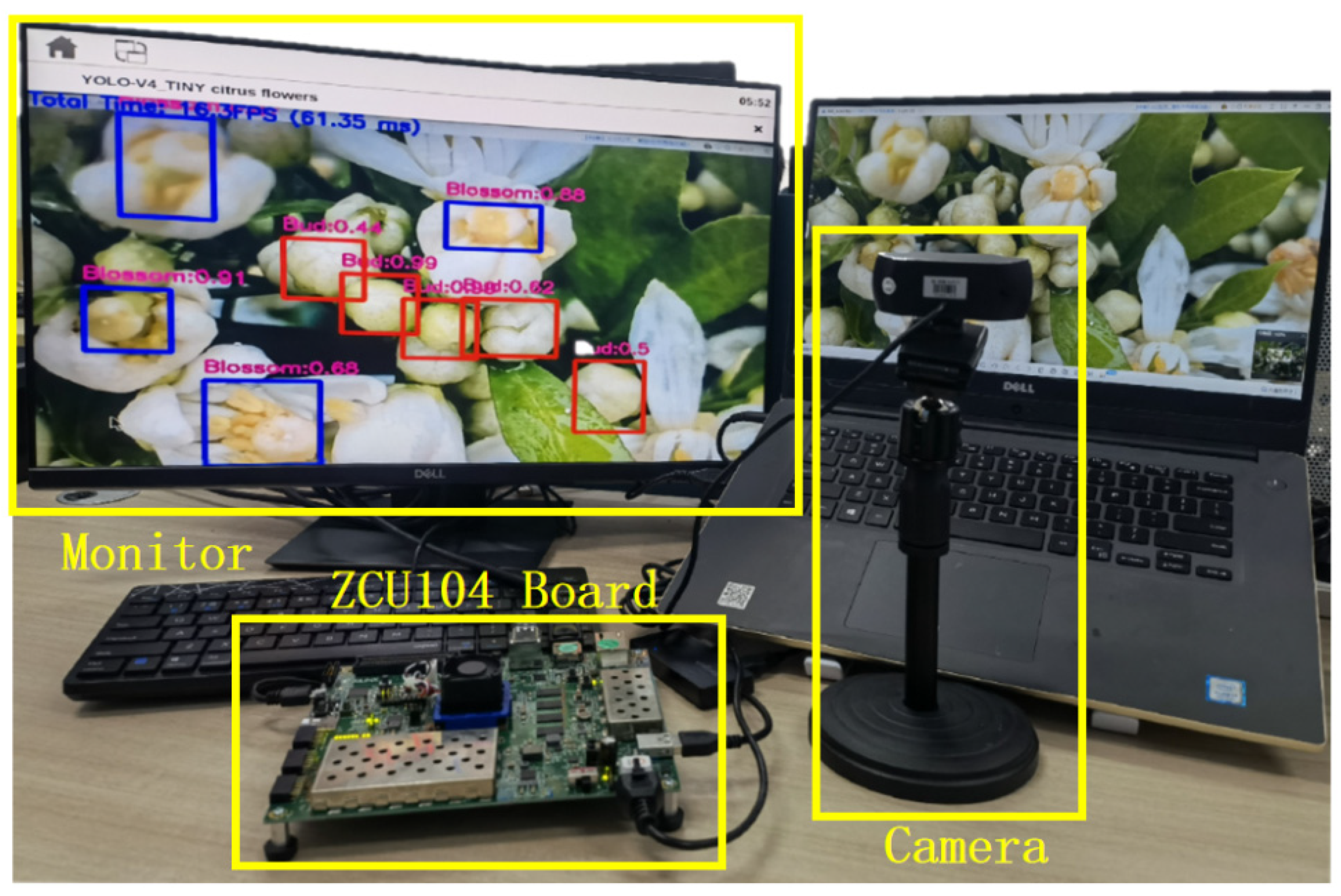

- An embedded sensing system for recognizing citrus flowers is designed by quantitatively applying the proposed YOLOv4-CF model to an FPGA platform.

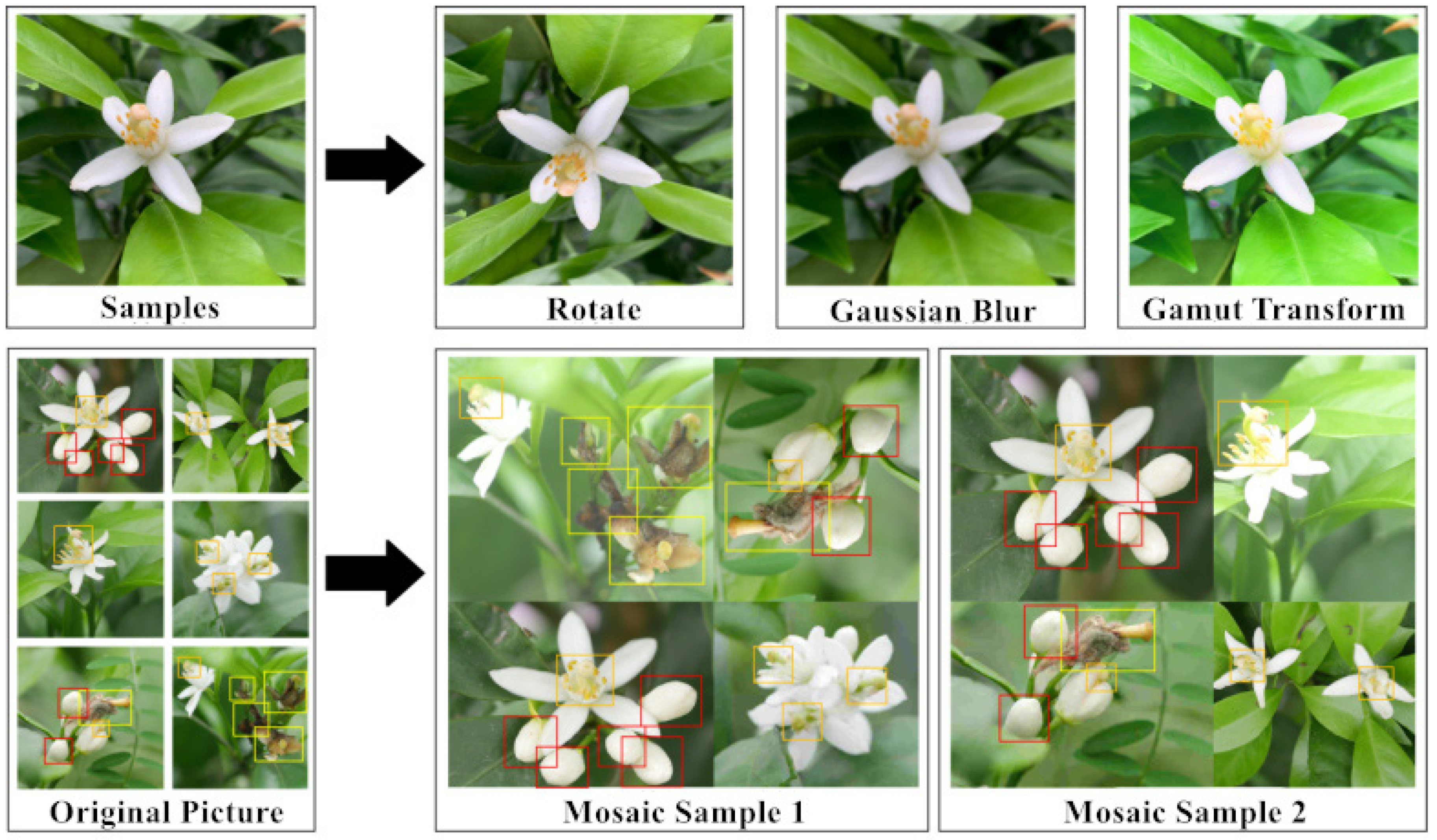
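
The quantized deployment named in the last contribution can be illustrated with a generic post-training scheme. The following is only a minimal sketch, assuming symmetric per-tensor int8 quantization; the scheme actually used for the FPGA deployment is not given in this text, and the function names and example weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization (an assumed, generic scheme)."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9]  # made-up values, not from the paper
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 codes:", q)
print("scale:", scale, "max abs error:", max_err)
```

Storing one byte per weight instead of four is what makes a roughly fourfold reduction in model size plausible, at the cost of a rounding error of at most half a quantization step per weight.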

2. Experimental Data and Processing Methods

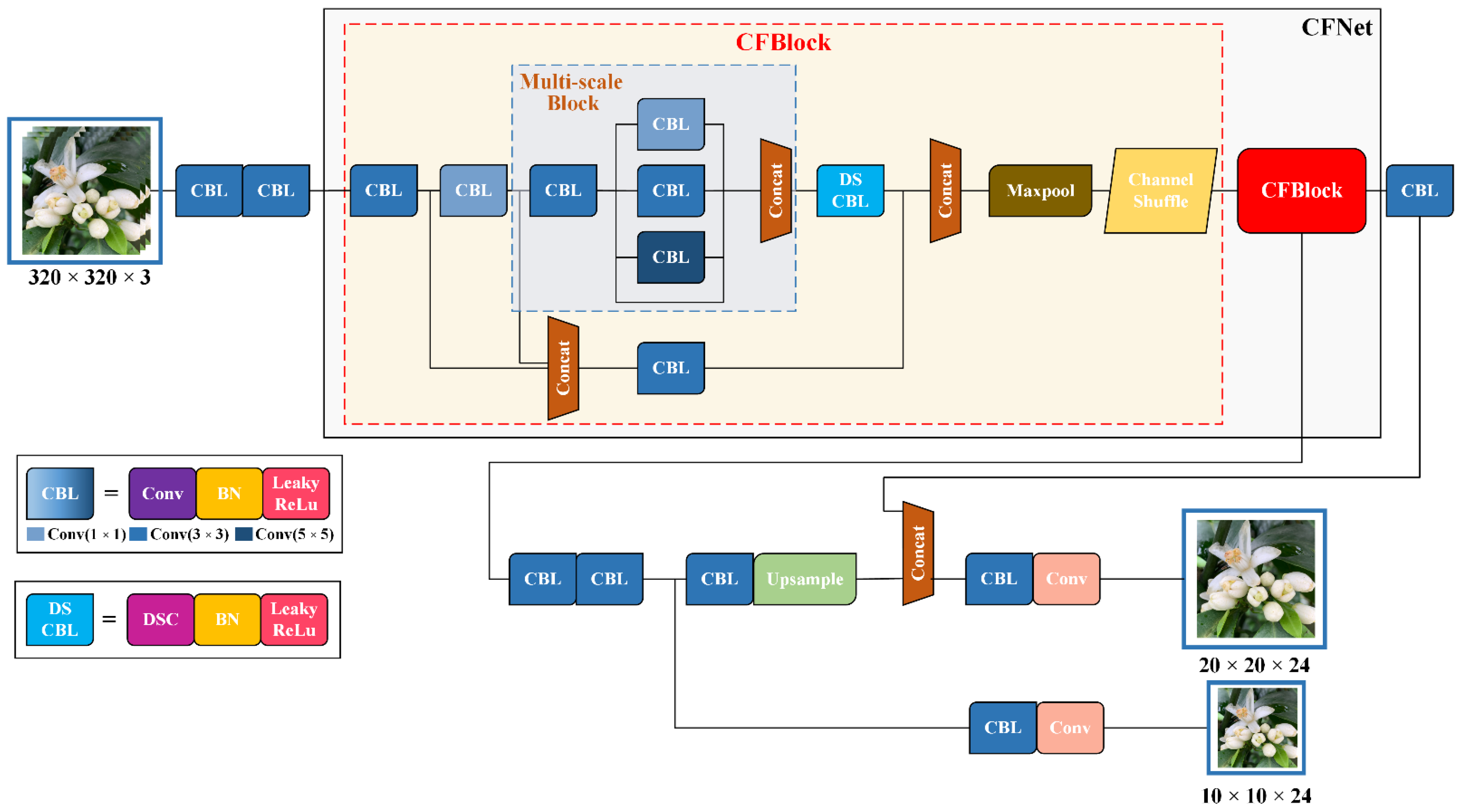

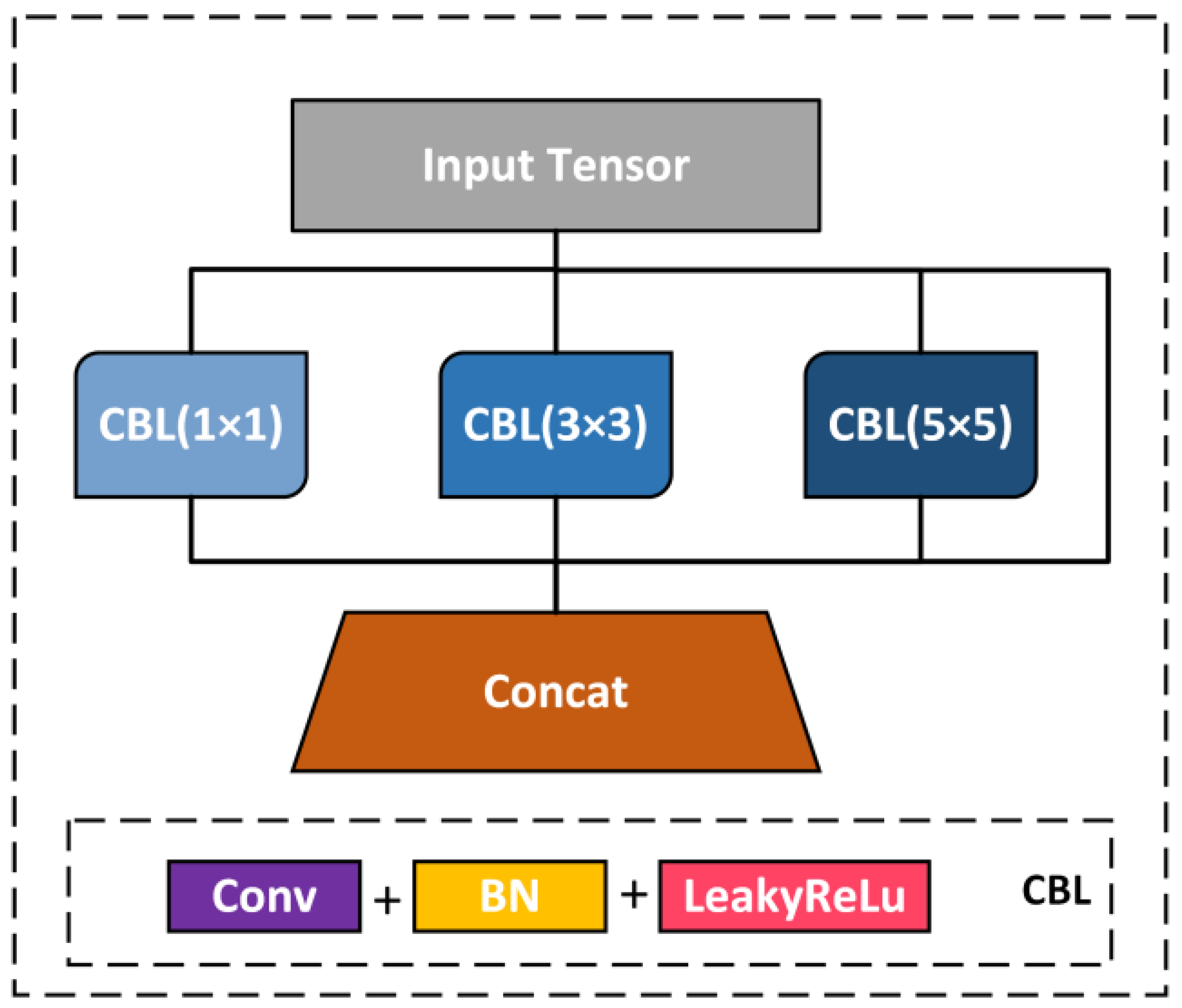

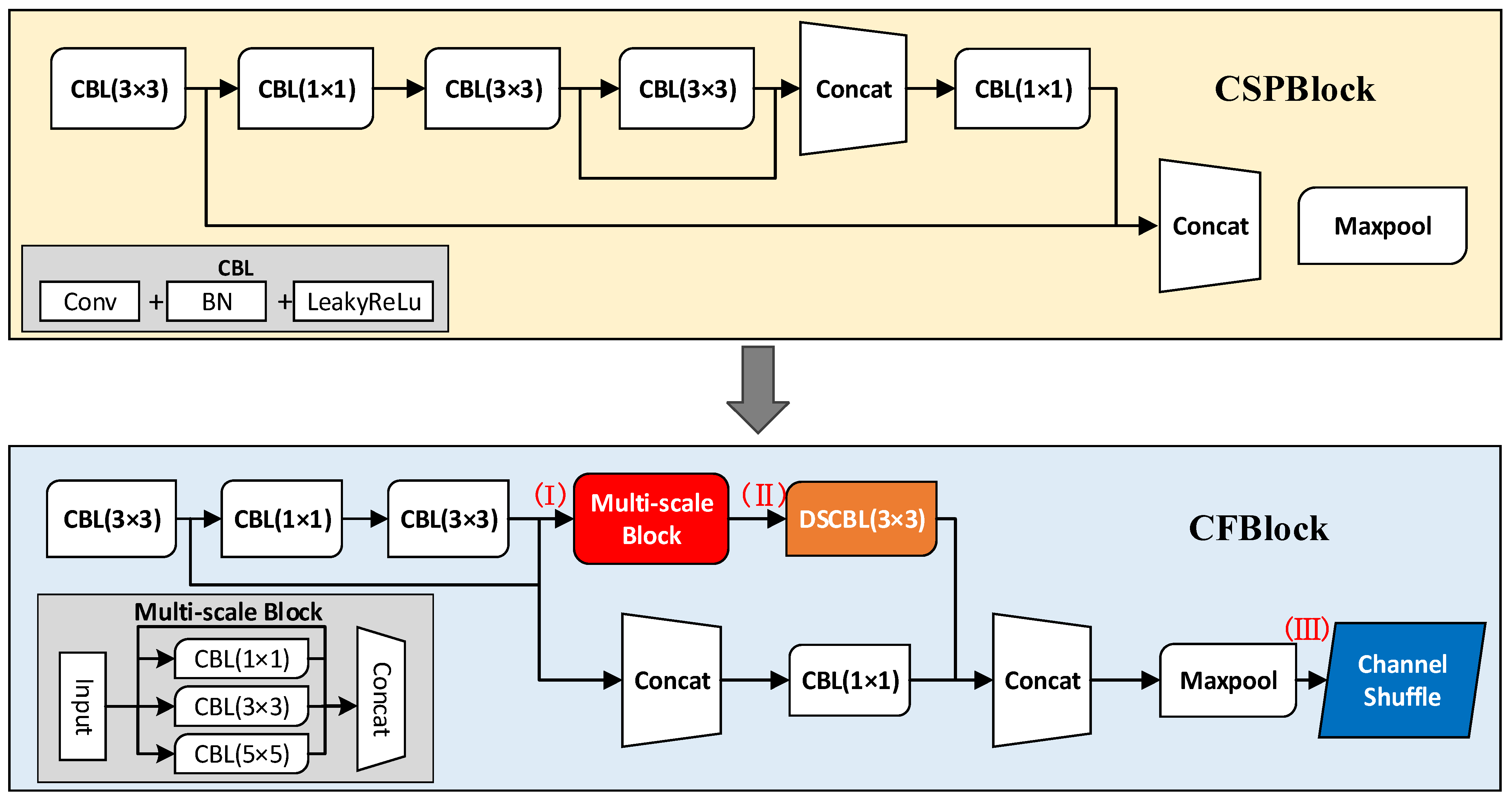

3. YOLOv4-CF Accurate Recognition Model

- Multi-Scale Block

- Depthwise Separable Convolution Block

- Channel Shuffle
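
Two of the building blocks listed above have simple, well-known definitions that can be sketched without a deep learning framework. The sketch below assumes the standard formulations from the cited Xception and ShuffleNet papers, not necessarily the exact layer configuration of YOLOv4-CF; the function names and the example layer sizes are hypothetical, and biases are ignored.

```python
def channel_shuffle_order(num_channels, groups):
    """Return, for each output position, the input channel index after a shuffle."""
    if num_channels % groups != 0:
        raise ValueError("num_channels must be divisible by groups")
    per_group = num_channels // groups
    # View the channels as a (groups, per_group) grid, transpose it, and flatten.
    return [g * per_group + i for i in range(per_group) for g in range(groups)]


def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard k x k convolution (no bias)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv (no bias)."""
    return kernel * kernel * c_in + c_in * c_out


print(channel_shuffle_order(6, 2))             # interleaves the two groups
print(standard_conv_params(3, 64, 128))        # hypothetical layer: 3x3, 64 -> 128
print(depthwise_separable_params(3, 64, 128))  # same layer, separable form
```

The shuffle lets information cross between channel groups at no parameter cost, while the separable form replaces one large weight tensor with two much smaller ones.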

4. FPGA Embedded Platform and Recognition Model Migration and Deployment

5. Results and Analysis

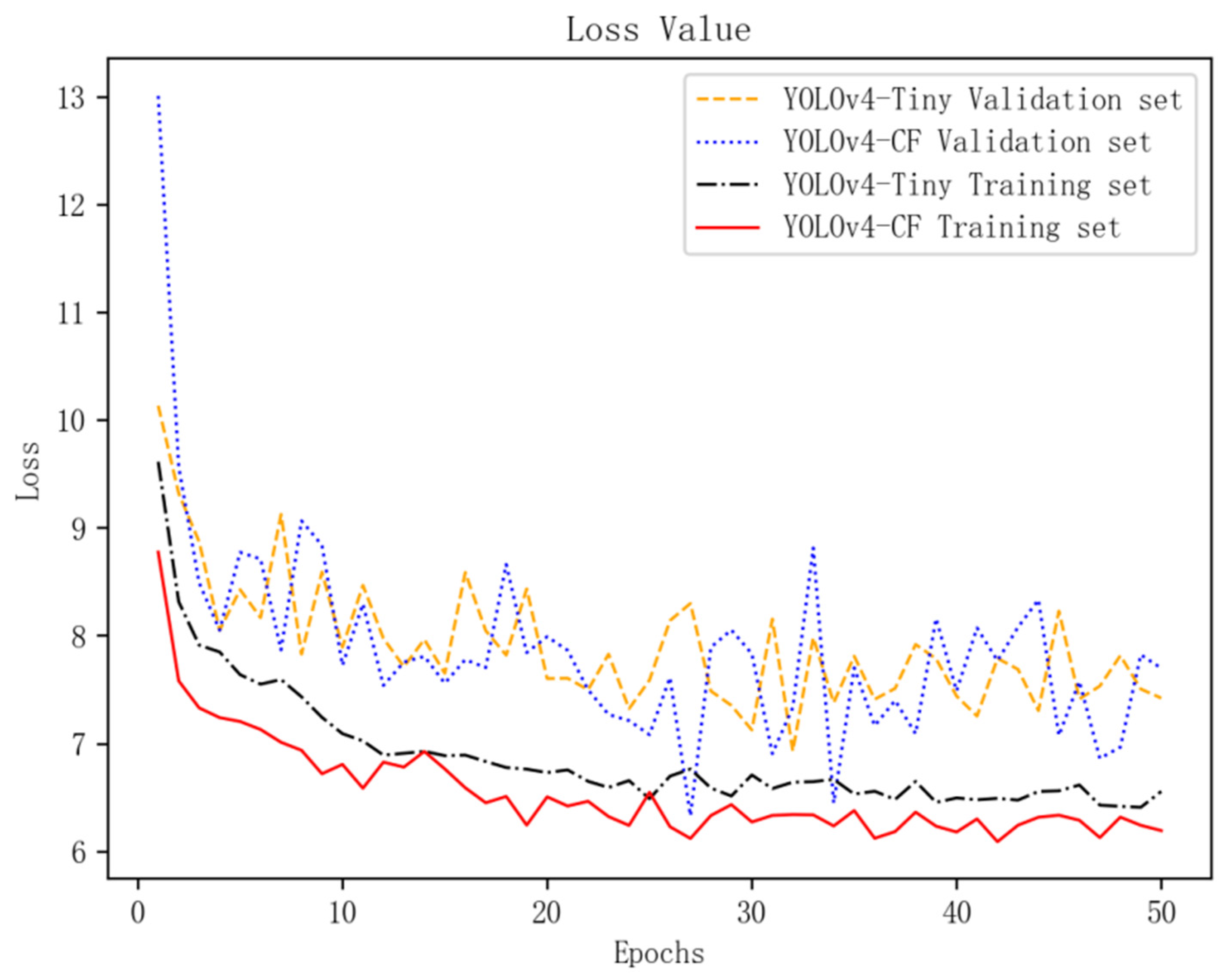

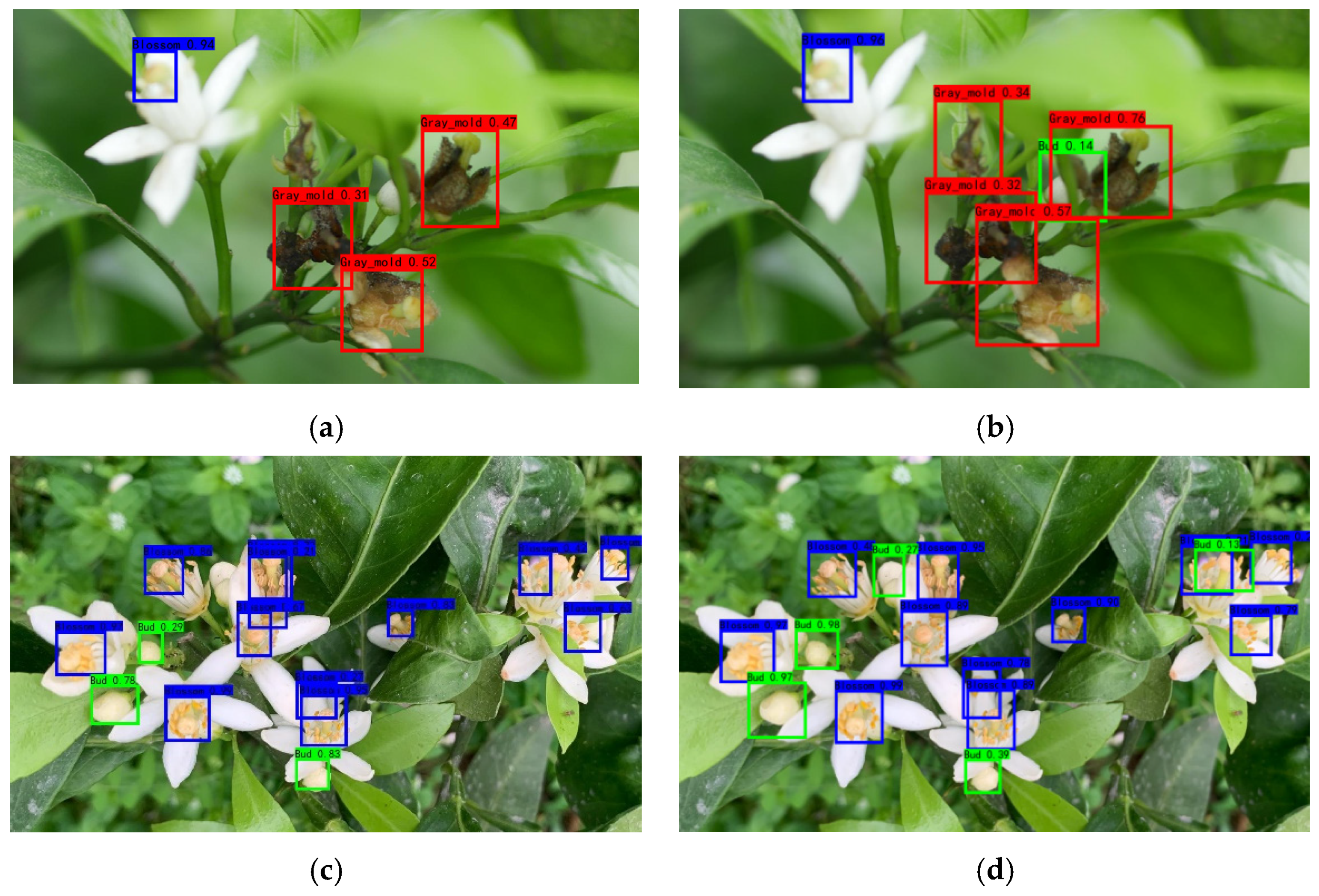

5.1. Analysis of the YOLOv4-CF Model Training Results

5.2. Performance Analysis of the Improved Strategy of the YOLOv4-CF Model

5.3. Resulting Analysis of the YOLOv4-CF + FPGA Model

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lu, Z.X.; Zhu, P.Y.; Gurr, G.M.; Zheng, X.S.; Read, D.M.Y.; Heong, K.L.; Yang, Y.J.; Xu, H.X. Mechanisms for flowering plants to benefit arthropod natural enemies of insect pests: Prospects for enhanced use in agriculture. Insect Sci. 2014, 21, 1–12.

- Herz, A.; Cahenzli, F.; Penvern, S.; Pfiffner, L.; Tasin, M.; Sigsgaard, L. Managing floral resources in apple orchards for pest control: Ideas, experiences and future directions. Insects 2019, 10, 247.

- Zhao, C.; Wen, C.; Lin, S.; Guo, W.; Long, C. Tomato florescence recognition and detection method based on cascaded neural network. Trans. Chin. Soc. Agric. Eng. 2020, 36, 143–152.

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Dorj, U.-O.; Lee, M.; Lee, K.-K.; Jeong, G. A novel technique for tangerine yield prediction using flower detection algorithm. Int. J. Pattern Recogn. 2013, 27, 1354007.

- Dias, P.A.; Tabb, A.; Medeiros, H. Multispecies fruit flower detection using a refined semantic segmentation network. IEEE Robot. Autom. Lett. 2018, 3, 3003–3010.

- Sun, K.; Wang, X.; Liu, S.; Liu, C. Apple, peach, and pear flower detection using semantic segmentation network and shape constraint level set. Comput. Electron. Agric. 2021, 185, 106150.

- Liu, H.; Chen, L.; Mu, L.; Gao, Z.; Cui, Y. A Recognition Method of Kiwifruit Flowers Based on K-means Clustering. J. Agric. Mech. Res. 2020, 42, 22–26.

- Cui, M.; Chen, S.; Li, M. Research on strawberry flower recognition algorithm based on image processing. Dig. Technol. Appl. 2019, 37, 109–111.

- Zheng, K.; Fang, C.; Yuan, S.; Chuang, F.; Li, G. Application research of Mask R-CNN model in the identification of eggplant flower blooming period. Comput. Eng. Appl. 2021, 15, 1–11.

- Lin, P.; Lee, W.S.; Chen, Y.M.; Peres, N.; Fraisse, C. A deep-level region-based visual representation architecture for detecting strawberry flowers in an outdoor field. Precis. Agric. 2020, 21, 387–402.

- Xiong, J.; Liu, B.; Zhong, Z.; Chen, S.; Zheng, Z. Litchi flower and leaf segmentation and recognition based on deep semantic segmentation. Trans. Chin. Soc. Agric. Mach. 2021, 52, 252–258.

- Deng, Y.; Wu, H.; Zhu, H. Recognition and counting of citrus flowers based on instance segmentation. Trans. Chin. Soc. Agric. Eng. 2020, 36, 200–207.

- Wang, Z.; Underwood, J.; Walsh, K.B. Machine vision assessment of mango orchard flowering. Comput. Electron. Agric. 2018, 151, 501–511.

- Wang, C.; Zhou, J.; Xu, C.-Y.; Bai, X. A deep object detection method for pineapple fruit and flower recognition in cluttered background. In Proceedings of the International Conference on Pattern Recognition and Artificial Intelligence, Zhongshan, China, 19–23 October 2020; pp. 218–227.

- Palacios, F.; Bueno, G.; Salido, J.; Diago, M.P.; Hernández, I.; Tardaguila, J. Automated grapevine flower detection and quantification method based on computer vision and deep learning from on-the-go imaging using a mobile sensing platform under field conditions. Comput. Electron. Agric. 2020, 178, 105796.

- Williamson, B.; Tudzynski, B.; Tudzynski, P.; Van Kan, J.A.L. Botrytis cinerea: The cause of grey mould disease. Mol. Plant Pathol. 2007, 8, 561–580.

- Zhu, L.; Wang, X.; Huang, F.; Hou, X.; Xiao, Z.; Pu, Z.; Huang, Z.; Li, H. Investigation of surface defect of Citrus fruits caused by Botrytis-Molded petals. J. Fruit Sci. 2012, 29, 1074–1077+1158.

- Que, L.; Zhang, T.; Guo, H.; Jia, C.; Gong, Y.; Chang, L.; Zhou, J. A lightweight pedestrian detection engine with two-stage low-complexity detection network and adaptive region focusing technique. Sensors 2021, 21, 5851.

- Pérez, I.; Figueroa, M. A heterogeneous hardware accelerator for image classification in embedded systems. Sensors 2021, 21, 2637.

- Luo, Y.; Chen, Y. FPGA-Based Acceleration on Additive Manufacturing Defects Inspection. Sensors 2021, 21, 2123.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Berlin, Germany, 2018; pp. 116–131.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–21 June 2018; pp. 6848–6856.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Inverted residuals and linear bottlenecks: Mobile networks for classification, detection and segmentation. arXiv 2018, arXiv:1801.04381.

- Zhu, J.; Wang, L.; Liu, H.; Tian, S.; Deng, Q.; Li, J. An efficient task assignment framework to accelerate DPU-based convolutional neural network inference on FPGAs. IEEE Access 2020, 8, 83224–83237.

- Vandendriessche, J.; Wouters, N.; da Silva, B.; Lamrini, M.; Chkouri, M.Y.; Touhafi, A. Environmental sound recognition on embedded systems: From FPGAs to TPUs. Electronics 2021, 10, 2622.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1314–1324.

- Huang, G.; Liu, Z.; Maaten, L.V.N.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

| Tag Name | Training Set | Test Set | Total |
|---|---|---|---|
| bud | 1005 | 249 | 1254 |
| citrus flower | 1223 | 315 | 1538 |
| gray mold | 615 | 141 | 765 |

| No. | Platform | System | Configuration | Operating Environment |
|---|---|---|---|---|
| 1 | Server | Windows 10 | Intel Core i7-9700 @ 3.00 GHz eight-core CPU, 16 GB RAM, Nvidia GeForce RTX 2060 (6 GB) GPU | TensorFlow 2.2.0 and Keras 2.3.1, with the CUDA 10.1 parallel computing framework and the cuDNN 7.6.5 deep neural network acceleration library |
| 2 | PC | Windows 10 | Intel Core i7-8550U @ 1.80 GHz four-core CPU, 16 GB RAM, Nvidia GeForce MX 450 (4 GB) GPU | TensorFlow 2.2.0 and Keras 2.3.1, with the CUDA 10.1 parallel computing framework and the cuDNN 7.6.5 deep neural network acceleration library |
| 3 | FPGA | Linux | Xilinx Zynq UltraScale+ MPSoC EV (ZCU104) SoC | Compilation environment uses OpenCV and the Xilinx AI runtimes |

| Training | Model | mAP@0.5/% | AP (Bud)/% | AP (Flower)/% | AP (Gray Mold)/% | F1/% | Model Size/MB |
|---|---|---|---|---|---|---|---|
| Initial training | YOLOv4-Tiny | 91.33 | 90.10 | 94.75 | 89.16 | 82.67 | 22.79 |
| Initial training | YOLOv4-CF(I) | 92.94 | 91.33 | 94.56 | 92.94 | 84.67 | 23.31 |
| Initial training | YOLOv4-CF(II) | 93.91 | 91.76 | 95.32 | 94.65 | 86.33 | 23.44 |
| Initial training | YOLOv4-CF | 94.42 | 92.17 | 95.67 | 95.42 | 86.33 | 23.44 |
| Transfer training | YOLOv4-Tiny | 93.61 | 92.17 | 95.28 | 93.39 | 86.00 | 22.79 |
| Transfer training | YOLOv4-CF(I) | 93.61 | 92.20 | 95.22 | 93.42 | 86.67 | 23.31 |
| Transfer training | YOLOv4-CF(II) | 94.36 | 92.05 | 95.57 | 95.46 | 88.00 | 23.44 |
| Transfer training | YOLOv4-CF | 95.03 | 92.89 | 96.22 | 95.97 | 89.00 | 23.44 |
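
The mAP column in the table above can be cross-checked against the three per-class AP columns. The sketch below assumes the usual definition of mAP as the unweighted mean of the per-class AP values; the values are copied from the table, and the dictionary layout and function name are only illustrative.

```python
# (training, model) -> (reported mAP, (AP bud, AP flower, AP gray mold)), all in percent
rows = {
    ("initial", "YOLOv4-Tiny"): (91.33, (90.10, 94.75, 89.16)),
    ("initial", "YOLOv4-CF(I)"): (92.94, (91.33, 94.56, 92.94)),
    ("initial", "YOLOv4-CF(II)"): (93.91, (91.76, 95.32, 94.65)),
    ("initial", "YOLOv4-CF"): (94.42, (92.17, 95.67, 95.42)),
    ("transfer", "YOLOv4-Tiny"): (93.61, (92.17, 95.28, 93.39)),
    ("transfer", "YOLOv4-CF(I)"): (93.61, (92.20, 95.22, 93.42)),
    ("transfer", "YOLOv4-CF(II)"): (94.36, (92.05, 95.57, 95.46)),
    ("transfer", "YOLOv4-CF"): (95.03, (92.89, 96.22, 95.97)),
}


def mean_ap(aps):
    """Unweighted mean of per-class average precision values."""
    return sum(aps) / len(aps)


for (training, model), (reported, aps) in rows.items():
    computed = mean_ap(aps)
    # Differences of about 0.01 are expected because the table is rounded.
    print(f"{training:9s} {model:14s} reported={reported:.2f} computed={computed:.2f}")
```

Every reported mAP agrees with the mean of its row to within rounding, which supports reading the three middle columns as bud, flower, and gray mold AP in that order.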

| Method | mAP (%) | Test Time (ms) | Model Size (MB) |
|---|---|---|---|
| MobileNet-v3 [32] | 85.76 | 23.93 | 18.43 |
| GhostNet [33] | 88.63 | 27.42 | 11.50 |
| DenseNet121 [34] | 92.74 | 42.12 | 39.11 |
| CSPDarkNet53_Tiny [22] | 94.42 | 18.83 | 22.79 |
| CFNet (ours) | 95.03 | 17.93 | 23.44 |

| Platform | Precision (Bud)/% | Precision (Flower)/% | Precision (Gray Mold)/% | Average Precision/% | Model Size/MB | Recognition Speed/ms |
|---|---|---|---|---|---|---|
| Server | 91.47 | 94.65 | 96.20 | 94.11 | 23.44 | 69.22 (CPU), 33.28 (GPU) |
| FPGA | 89.22 | 93.54 | 95.73 | 92.83 | 5.96 | 58.74 |
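
The recognition times and model sizes in the table above translate directly into throughput and a compression ratio. This is simple arithmetic on the reported numbers, assuming one image is processed per recognition; the variable and function names are illustrative.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second if each frame takes latency_ms milliseconds."""
    return 1000.0 / latency_ms


# Recognition speed in ms per image, copied from the table.
latencies_ms = {"Server (CPU)": 69.22, "Server (GPU)": 33.28, "FPGA": 58.74}

for platform, ms in latencies_ms.items():
    print(f"{platform:12s} {ms:6.2f} ms -> {fps_from_latency_ms(ms):5.2f} FPS")

# Model size in MB on the server versus after deployment to the FPGA.
size_ratio = 23.44 / 5.96
accuracy_drop = 94.11 - 92.83  # percentage points of average precision
print(f"size ratio: {size_ratio:.2f}x, accuracy drop: {accuracy_drop:.2f} points")
```

The deployed model is a little under a quarter of the server model's size, while the average precision falls by about 1.3 percentage points.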

| Platform | Configuration | Speed/FPS | Power/W |
|---|---|---|---|
| Server | CPU/Core i7-9700 (3.0 GHz, eight-core) | 15 | 75 |
| Server | GPU/RTX 2060 (6 GB) | 29 | 96 |
| PC | CPU/Core i7-8550U (1.8 GHz, quad-core) | 10 | 38 |
| PC | GPU/MX150 (4 GB) | 16 | 56 |
| FPGA | ZCU104 | 17 | 20 |
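
One way to compare the platforms in the table above is frames per second per watt. This metric is not reported in the table itself; the sketch below derives it from the speed and power columns, and the labels are illustrative.

```python
# (label, speed in FPS, power in W), copied from the table.
platforms = [
    ("Server CPU (i7-9700)", 15, 75),
    ("Server GPU (RTX 2060)", 29, 96),
    ("PC CPU (i7-8550U)", 10, 38),
    ("PC GPU (MX150)", 16, 56),
    ("FPGA (ZCU104)", 17, 20),
]


def fps_per_watt(fps, watts):
    """Throughput delivered per watt of power drawn."""
    return fps / watts


for label, fps, watts in platforms:
    print(f"{label:22s} {fps_per_watt(fps, watts):.3f} FPS/W")

best = max(platforms, key=lambda p: fps_per_watt(p[1], p[2]))
print("most efficient:", best[0])
```

By this measure the FPGA delivers 0.85 FPS/W, well above the other four configurations, even though the server GPU has the highest raw frame rate.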

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lyu, S.; Zhao, Y.; Li, R.; Li, Z.; Fan, R.; Li, Q. Embedded Sensing System for Recognizing Citrus Flowers Using Cascaded Fusion YOLOv4-CF + FPGA. Sensors 2022, 22, 1255. https://doi.org/10.3390/s22031255
