Chest X-ray Classification for the Detection of COVID-19 Using Deep Learning Techniques

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

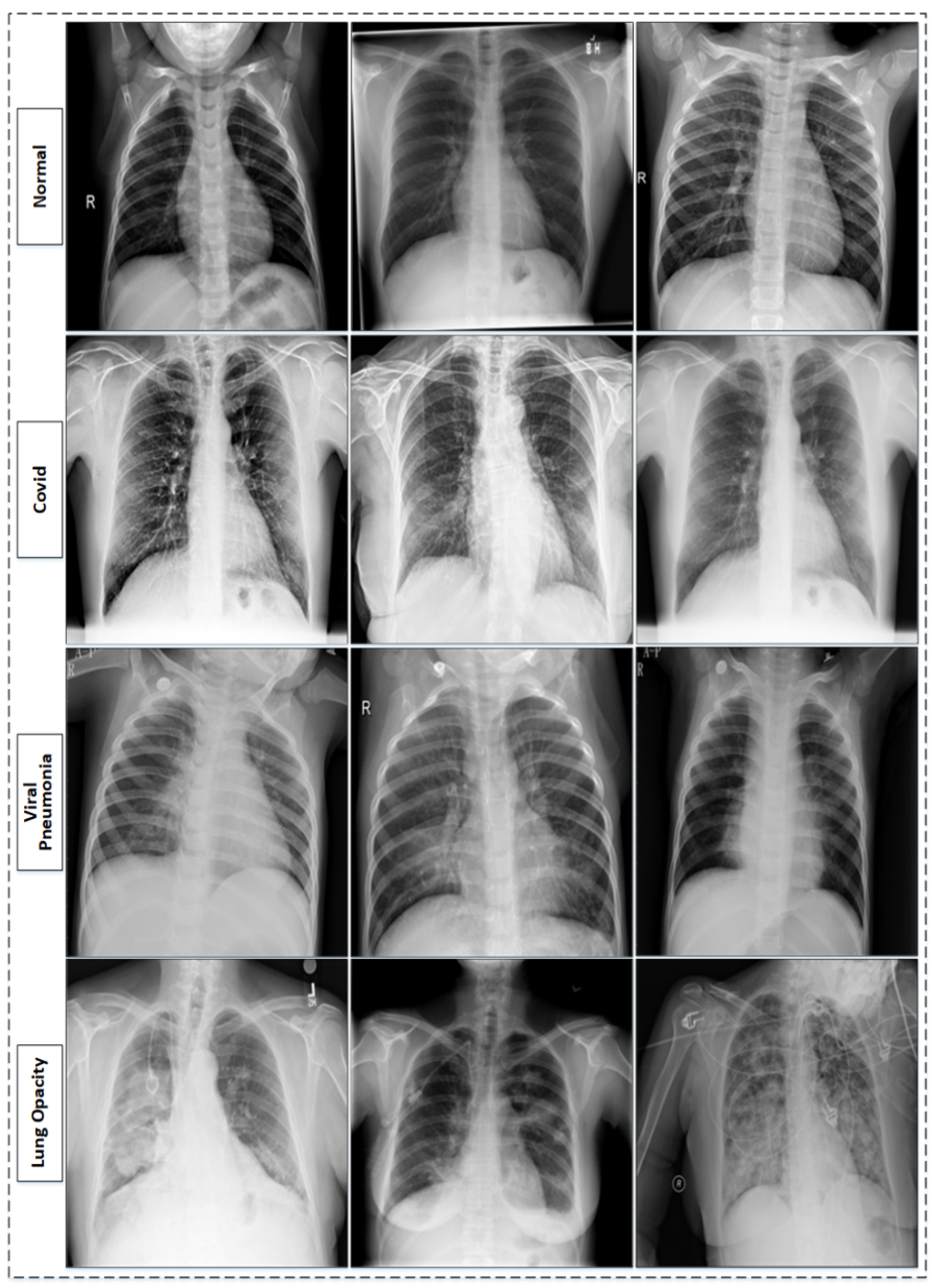

3.1. Dataset

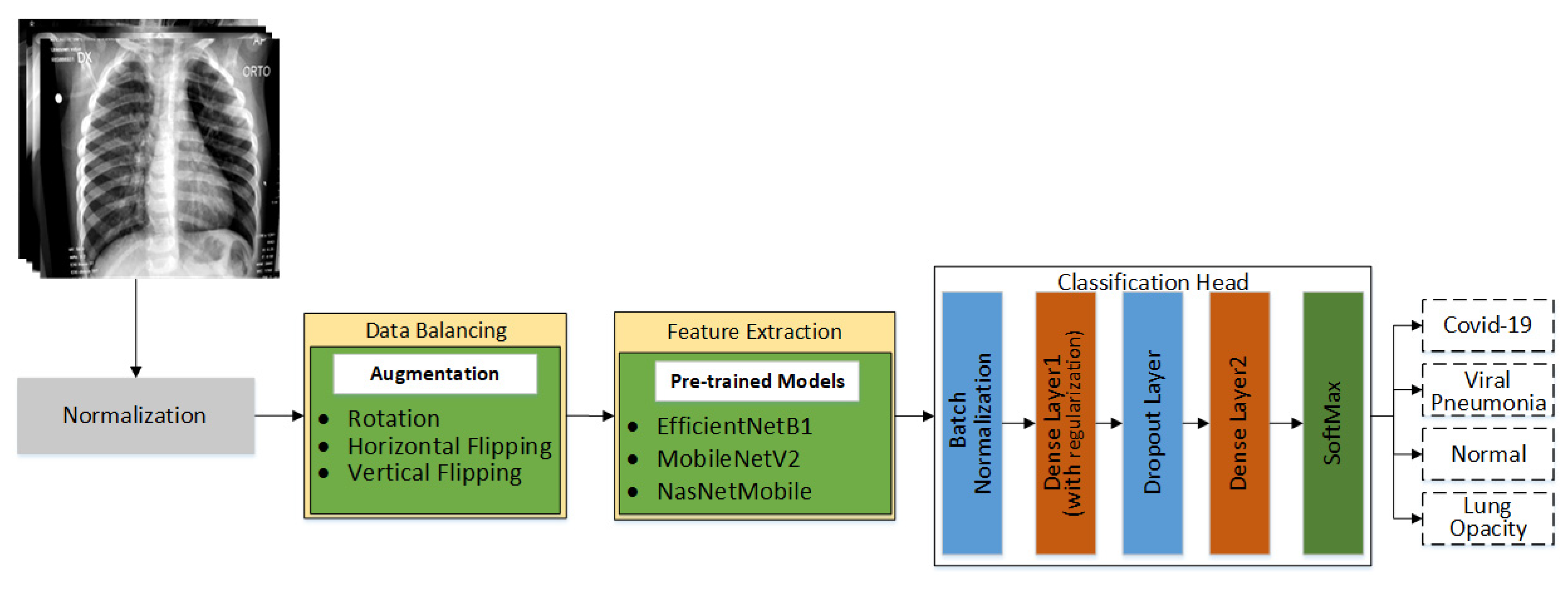

3.2. Proposed Methodology

3.2.1. Data Normalization and Augmentation

3.2.2. Feature Extraction and Classification of Chest X-rays

3.2.3. Experimental Setup

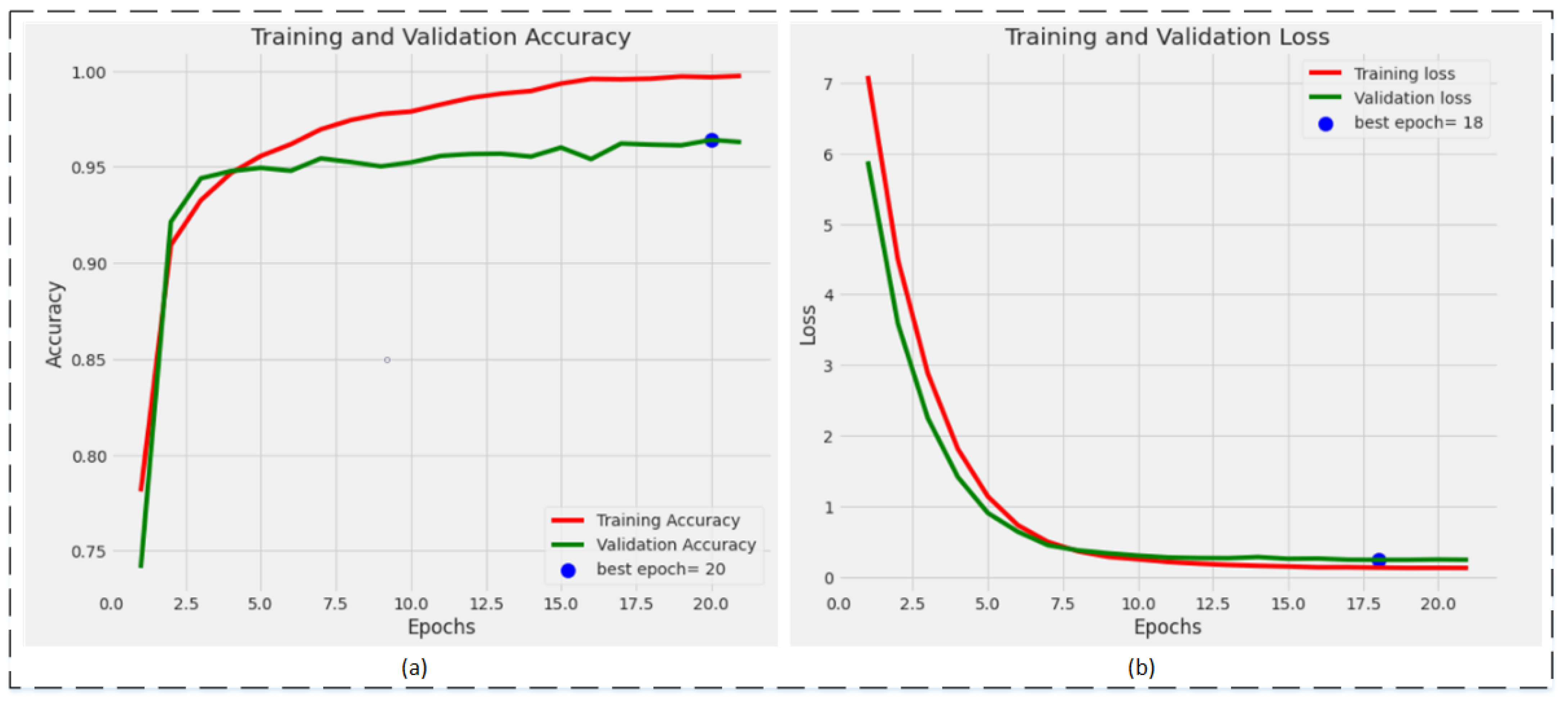

4. Results

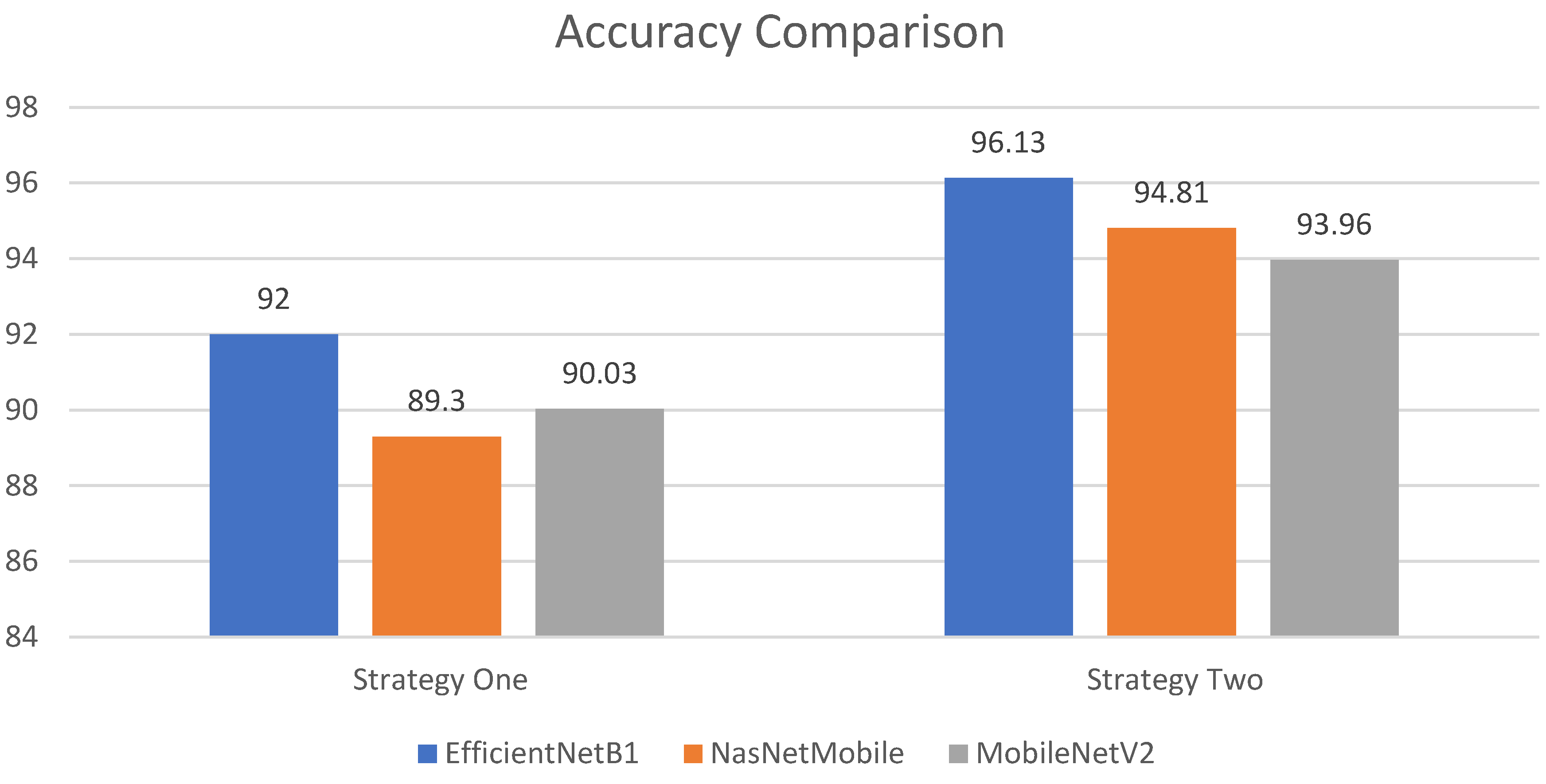

4.1. Classification Results

4.1.1. Analysis and Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, W.; Xu, Y.; Gao, R.; Lu, R.; Han, K.; Wu, G.; Tan, W. Detection of SARS-CoV-2 in different types of clinical specimens. JAMA 2020, 323, 1843–1844.

- Paules, C.I.; Marston, H.D.; Fauci, A.S. Coronavirus infections—More than just the common cold. JAMA 2020, 323, 707–708.

- Available online: https://www.worldometers.info/coronavirus/coronavirus-death-toll/ (accessed on 8 December 2021).

- Iqbal, H.M.; Romero-Castillo, K.D.; Bilal, M.; Parra-Saldivar, R. The emergence of novel-coronavirus and its replication cycle-an overview. J. Pure Appl. Microbiol. 2020, 14, 13–16.

- Ji, T.; Liu, Z.; Wang, G.; Guo, X.; Lai, C.; Chen, H.; Huang, S.; Xia, S.; Chen, B.; Jia, H.; et al. Detection of COVID-19: A review of the current literature and future perspectives. Biosens. Bioelectron. 2020, 166, 112455.

- Obaro, S.K.; Madhi, S.A. Bacterial pneumonia vaccines and childhood pneumonia: Are we winning, refining, or redefining? Lancet Infect. Dis. 2006, 6, 150–161.

- Ibrahim, A.U.; Ozsoz, M.; Serte, S.; Al-Turjman, F.; Yakoi, P.S. Pneumonia Classification Using Deep Learning from Chest X-ray Images during COVID-19. In Cognitive Computation; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–13.

- Marrie, T.J.; Durant, H.; Yates, L. Community-acquired pneumonia requiring hospitalization: 5-year prospective study. Rev. Infect. Dis. 1989, 11, 586–599.

- Zhou, P.; Yang, X.-L.; Wang, X.-G.; Hu, B.; Zhang, L.; Zhang, W.; Si, H.-R.; Zhu, Y.; Li, B.; Huang, C.-L.; et al. A pneumonia outbreak associated with a new coronavirus of probable bat origin. Nature 2020, 579, 270–273.

- Rehman, Z.U.; Khan, M.A.; Ahmed, F.; Damaševičius, R.; Naqvi, S.R.; Nisar, W.; Javed, K. Recognizing apple leaf diseases using a novel parallel real-time processing framework based on MASK RCNN and transfer learning: An application for smart agriculture. IET Image Process. 2021, 15, 2157–2168.

- Khan, M.A.; Khan, M.A.; Ahmed, F.; Mittal, M.; Goyal, L.M.; Hemanth, D.J.; Satapathy, S.C. Gastrointestinal diseases segmentation and classification based on duo-deep architectures. Pattern Recognit. Lett. 2020, 131, 193–204.

- Corizzo, R.; Dauphin, Y.; Bellinger, C.; Zdravevski, E.; Japkowicz, N. Explainable image analysis for decision support in medical healthcare. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Orlando, FL, USA, 15–18 December 2021; IEEE: New York, NY, USA, 2021; pp. 4667–4674.

- Rehman, M.U.; Ahmed, F.; Khan, M.A.; Tariq, U.; Alfouzan, F.A.; Alzahrani, N.M.; Ahmad, J. Dynamic Hand Gesture Recognition Using 3D-CNN and LSTM Networks. Comput. Mater. Contin. 2022, 70, 4675–4690.

- Petrovska, B.; Zdravevski, E.; Lameski, P.; Corizzo, R.; Štajduhar, I.; Lerga, J. Deep learning for feature extraction in remote sensing: A case-study of aerial scene classification. Sensors 2020, 20, 3906.

- Liu, L.; Mou, L.; Zhu, X.X.; Mandal, M. Skin Lesion Segmentation based on improved U-net. In Proceedings of the 2019 IEEE Canadian Conference of Electrical and Computer Engineering (CCECE), Edmonton, AB, Canada, 5–8 May 2019; IEEE: New York, NY, USA, 2019; pp. 1–4.

- Ünlü, E.I.; Çinar, A. Segmentation of Benign and Malign lesions on skin images using U-Net. In Proceedings of the 2021 International Conference on Innovation and Intelligence for Informatics, Computing and Technologies (3ICT), Zallaq, Bahrain, 29–30 September 2021; IEEE: New York, NY, USA, 2021; pp. 165–169.

- Ullah, F.; Ansari, S.U.; Hanif, M.; Ayari, M.A.; Chowdhury, M.E.H.; Khandakar, A.A.; Khan, M.S. Brain MR Image Enhancement for Tumor Segmentation Using 3D U-Net. Sensors 2021, 21, 7528.

- Mahmud, T.; Rahman, M.A.; Fattah, S.A. CovXNet: A multi-dilation convolutional neural network for automatic COVID-19 and other pneumonia detection from chest X-ray images with transferable multi-receptive feature optimization. Comput. Biol. Med. 2020, 122, 103869.

- Umair, M.; Khan, M.S.; Ahmed, F.; Baothman, F.; Alqahtani, F.; Alian, M.; Ahmad, J. Detection of COVID-19 Using Transfer Learning and Grad-CAM Visualization on Indigenously Collected X-ray Dataset. Sensors 2021, 21, 5813.

- Li, L.; Qin, L.; Xu, Z.; Yin, Y.; Wang, X.; Kong, B.; Bai, J.; Lu, Y.; Fang, Z.; Song, Q. Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology 2020, 296, 200905.

- Abbas, A.; Abdelsamea, M.M.; Gaber, M.M. Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network. Appl. Intell. 2021, 51, 854–864.

- Wang, S.; Kang, B.; Ma, J.; Zeng, X.; Xiao, M.; Guo, J.; Cai, M.; Yang, J.; Li, Y.; Meng, X.; et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). Eur. Radiol. 2021, 31, 6096–6104.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Shankar, K.; Perumal, E. A novel hand-crafted with deep learning features based fusion model for COVID-19 diagnosis and classification using chest X-ray images. Complex Intell. Syst. 2021, 7, 1277–1293.

- Panwar, H.; Gupta, P.K.; Siddiqui, M.K.; Morales-Menendez, R.; Singh, V. Application of deep learning for fast detection of COVID-19 in X-rays using nCOVnet. Chaos Solitons Fractals 2020, 138, 109944.

- Zheng, C.; Deng, X.; Fu, Q.; Zhou, Q.; Feng, J.; Ma, H.; Liu, W.; Wang, X. Deep Learning-Based Detection for COVID-19 from Chest CT Using Weak Label. medRxiv 2020.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Xu, X.; Jiang, X.; Ma, C.; Du, P.; Li, X.; Lv, S.; Yu, L.; Ni, Q.; Chen, Y.; Su, J.; et al. A deep learning system to screen novel coronavirus disease 2019 pneumonia. Engineering 2020, 6, 1122–1129.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

- Hussain, E.; Hasan, M.; Rahman, M.A.; Lee, I.; Tamanna, T.; Parvez, M.Z. CoroDet: A deep learning based classification for COVID-19 detection using chest X-ray images. Chaos Solitons Fractals 2021, 142, 110495.

- Khan, A.I.; Shah, J.L.; Bhat, M.M. CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest X-ray images. Comput. Methods Programs Biomed. 2020, 196, 105581.

- Chowdhury, M.E.; Rahman, T.; Khandakar, A.; Mazhar, R.; Kadir, M.A.; Mahbub, Z.B.; Islam, K.R.; Khan, M.S.; Iqbal, A.; al Emadi, N.; et al. Can AI help in screening viral and COVID-19 pneumonia? IEEE Access 2020, 8, 132665–132676.

- Ozturk, T.; Talo, M.; Yildirim, E.A.; Baloglu, U.B.; Yildirim, O.; Acharya, U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020, 121, 103792.

- Akter, S.; Shamrat, F.M.; Chakraborty, S.; Karim, A.; Azam, S. COVID-19 detection using deep learning algorithm on chest X-ray images. Biology 2021, 10, 1174.

- COVID-19 Radiography Database-Kaggle. Available online: https://www.kaggle.com/tawsifurrahman/covid19-radiography-database (accessed on 20 November 2021).

- BIMCV-COVID-19, Datasets Related to COVID-19’s Pathology Course. 2020. Available online: https://bimcv.cipf.es/bimcv-projects/bimcv-covid19/#1590858128006-9e640421-6711 (accessed on 20 November 2021).

- COVID-19-Image-Repository. 2020. Available online: https://github.com/ml-workgroup/covid-19-image-repository/tree/master/png (accessed on 20 November 2021).

- Chen, R.; Liang, W.; Jiang, M.; Guan, W.; Zhan, C.; Wang, T.; Tang, C.; Sang, L.; Liu, J.; Ni, Z.; et al. Risk factors of fatal outcome in hospitalized subjects with coronavirus disease 2019 from a nationwide analysis in China. Chest 2020, 158, 97–105.

- Weng, Z.; Chen, Q.; Li, S.; Li, H.; Zhang, Q.; Lu, S.; Wu, L.; Xiong, L.; Mi, B.; Liu, D.; et al. ANDC: An Early Warning Score to Predict Mortality Risk for Patients with Coronavirus Disease 2019. J. Transl. Med. 2020, 18, 328.

- Liu, J.; Liu, Y.; Xiang, P.; Pu, L.; Xiong, H.; Li, C.; Zhang, M.; Tan, J.; Xu, Y.; Song, R.; et al. Neutrophil-to-lymphocyte Ratio Predicts Severe Illness Patients with 2019 Novel Coronavirus in the Early Stage. medRxiv 2020.

- Huang, I.; Pranata, R. Lymphopenia in severe coronavirus disease-2019 (COVID-19): Systematic review and meta-analysis. J. Intens. Care 2020, 8, 36.

- COVID-CXNet. 2020. Available online: https://github.com/armiro/COVID-CXNet (accessed on 20 November 2021).

- Kaggle. RSNA Pneumonia Detection Challenge. Available online: https://www.kaggle.com/c/rsna-pneumonia-detection-challenge/data (accessed on 20 November 2021).

- Mooney, P. Chest X-ray Images (Pneumonia). 2018. Available online: https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia (accessed on 20 November 2021).

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Citeseer: Princeton, NJ, USA, 2009; Volume 7.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 248–255.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. arXiv 2017, arXiv:1707.07012v3.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: New York, NY, USA, 2018; pp. 4510–4520.

- Zia, M.; Ahmed, F.; Khan, M.A.; Tariq, U.; Jamal, S.S.; Ahmad, J.; Hussain, I. Classification of Citrus Plant Diseases Using Deep Transfer Learning. Comput. Mater. Contin. 2022, 70, 1401–1417.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Rahman, T.; Khandakar, A.; Qiblawey, Y.; Tahir, A.; Kiranyaz, S.; Kashem, S.B.A.; Islam, M.T.; al Maadeed, S.; Zughaier, S.M.; Khan, M.S.; et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 2021, 132, 104319.

| Data Composition | BIMCV-COVID19+ [36] | German Medical School [37] | SIRM, GitHub, Kaggle, and Twitter [38,39,40,41] | GitHub [42] | RSNA [43] | Kaggle [44] | Total |
|---|---|---|---|---|---|---|---|
| COVID-19 | 2473 | 183 | 560 | 400 | | | 3616 |
| Lung Opacity | | | | | 6012 | | 6012 |
| Normal | | | | | 8851 | 1341 | 10,192 |
| Pneumonia | | | | | | 1345 | 1345 |
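
The class totals in the table above are clearly imbalanced, with far more Normal images than Pneumonia images. As a minimal sketch, the snippet below computes each class's share of the dataset and a set of inverse-frequency class weights. The weighting formula is a common heuristic and is an assumption here; the table does not say whether the authors used class weighting. The names `CLASS_COUNTS`, `class_shares`, and `balanced_class_weights` are hypothetical.

```python
# Image counts per class, copied from the "Total" column of the dataset table.
CLASS_COUNTS = {
    "COVID-19": 3616,
    "Lung Opacity": 6012,
    "Normal": 10192,
    "Pneumonia": 1345,
}


def class_shares(counts):
    """Return each class's fraction of the whole dataset."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def balanced_class_weights(counts):
    """Inverse-frequency weights: total / (num_classes * class_count).

    Assumption: a common heuristic for imbalanced data, not a step
    taken from the paper.
    """
    total = sum(counts.values())
    num_classes = len(counts)
    return {name: total / (num_classes * n) for name, n in counts.items()}


if __name__ == "__main__":
    shares = class_shares(CLASS_COUNTS)
    weights = balanced_class_weights(CLASS_COUNTS)
    for name in CLASS_COUNTS:
        print(f"{name:<13} share={shares[name]:.3f} weight={weights[name]:.2f}")
```

With these weights, rare classes receive a weight above 1 and frequent classes a weight below 1, so each class contributes equally to a weighted loss in aggregate.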

| Stage | Operator | Resolution | Channel | Layers |
|---|---|---|---|---|
| 1 | Conv3 × 3 | 224 × 224 | 32 | 1 |
| 2 | MBConv1, k3 × 3 | 112 × 112 | 16 | 1 |
| 3 | MBConv6, k3 × 3 | 112 × 112 | 24 | 2 |
| 4 | MBConv6, k5 × 5 | 56 × 56 | 40 | 2 |
| 5 | MBConv6, k3 × 3 | 28 × 28 | 80 | 3 |
| 6 | MBConv6, k5 × 5 | 14 × 14 | 112 | 3 |
| 7 | MBConv6, k5 × 5 | 14 × 14 | 192 | 4 |
| 8 | MBConv6, k3 × 3 | 7 × 7 | 320 | 1 |
| 9 | Conv1 × 1 & Pooling & FC | 7 × 7 | 1280 | 1 |
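
The stage table above can be read as a small data structure. The sketch below encodes its rows and derives two summary figures: the total number of layers, and how far each stage's listed resolution is reduced relative to the 224 × 224 input. The `Stage` type and the helper names are hypothetical and exist only for illustration; the snippet does not build a trainable network.

```python
from typing import List, NamedTuple


class Stage(NamedTuple):
    operator: str
    resolution: int  # height and width listed for the stage (square maps)
    channels: int
    layers: int


# Rows copied from the architecture table.
STAGES: List[Stage] = [
    Stage("Conv3x3", 224, 32, 1),
    Stage("MBConv1, k3x3", 112, 16, 1),
    Stage("MBConv6, k3x3", 112, 24, 2),
    Stage("MBConv6, k5x5", 56, 40, 2),
    Stage("MBConv6, k3x3", 28, 80, 3),
    Stage("MBConv6, k5x5", 14, 112, 3),
    Stage("MBConv6, k5x5", 14, 192, 4),
    Stage("MBConv6, k3x3", 7, 320, 1),
    Stage("Conv1x1 & Pooling & FC", 7, 1280, 1),
]


def total_layers(stages: List[Stage]) -> int:
    """Sum the 'Layers' column over all stages."""
    return sum(s.layers for s in stages)


def mbconv_layers(stages: List[Stage]) -> int:
    """Count only the layers that belong to MBConv stages."""
    return sum(s.layers for s in stages if s.operator.startswith("MBConv"))


def downsampling_factors(stages: List[Stage]) -> List[int]:
    """Resolution of the first stage divided by each stage's resolution."""
    base = stages[0].resolution
    return [base // s.resolution for s in stages]


if __name__ == "__main__":
    print("total layers:", total_layers(STAGES))
    print("MBConv layers:", mbconv_layers(STAGES))
    print("downsampling:", downsampling_factors(STAGES))
```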

| Deep Learning Model | Accuracy | Precision | Sensitivity | F1 Score |
|---|---|---|---|---|
| EfficientNetB1 | 92% | 91.75% | 94.50% | 92.75% |
| NasNetMobile | 89.30% | 89.25% | 91.75% | 91% |
| MobileNetV2 | 90.03% | 92.25% | 92% | 91.75% |

| Deep Learning Model | Accuracy | Precision | Sensitivity | F1 Score |
|---|---|---|---|---|
| EfficientNetB1 | 96.13% | 97.25% | 96.50% | 97.50% |
| NasNetMobile | 94.81% | 95.50% | 95% | 95.25% |
| MobileNetV2 | 93.96% | 94.50% | 95% | 94.50% |
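
For readers who want to see how the four evaluation parameters in the tables above are usually derived, the following sketch computes accuracy, precision, sensitivity, and F1 score from a multi-class confusion matrix using the standard definitions. The confusion matrix `EXAMPLE_CM` is hypothetical and is not taken from the paper, and the macro-averaging across classes is an assumption, since the tables do not state how per-class values were combined.

```python
from typing import List, Tuple

Matrix = List[List[int]]

# Hypothetical 4-class confusion matrix (rows = true class, columns = predicted).
EXAMPLE_CM: Matrix = [
    [90, 4, 5, 1],
    [3, 85, 10, 2],
    [2, 8, 88, 2],
    [1, 2, 2, 95],
]


def accuracy(cm: Matrix) -> float:
    """Correct predictions (the diagonal) divided by all predictions."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_metrics(cm: Matrix, k: int) -> Tuple[float, float, float]:
    """Return (precision, sensitivity, F1) for class index k."""
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # true class k, predicted as another class
    fp = sum(row[k] for row in cm) - tp  # another class, predicted as k
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return precision, sensitivity, f1


def macro_average(cm: Matrix) -> Tuple[float, float, float]:
    """Unweighted mean of per-class precision, sensitivity, and F1."""
    n = len(cm)
    rows = [per_class_metrics(cm, k) for k in range(n)]
    return tuple(sum(r[i] for r in rows) / n for i in range(3))


if __name__ == "__main__":
    p, s, f1 = macro_average(EXAMPLE_CM)
    print(f"accuracy={accuracy(EXAMPLE_CM):.4f}")
    print(f"precision={p:.4f} sensitivity={s:.4f} f1={f1:.4f}")
```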

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khan, E.; Rehman, M.Z.U.; Ahmed, F.; Alfouzan, F.A.; Alzahrani, N.M.; Ahmad, J. Chest X-ray Classification for the Detection of COVID-19 Using Deep Learning Techniques. Sensors 2022, 22, 1211. https://doi.org/10.3390/s22031211
