1. Introduction

The application of computer-aided design and analysis in domains such as signal processing and simulation has gradually increased. Manual product inspection requires considerable labor, and human error can produce inaccurate test results that affect the quality of manufactured products. Therefore, the use of automated optical inspection has increased. With advances in hardware and software, deep learning models have been combined with optical inspection systems to relieve the bottleneck of defect detection in manufacturing, and the technology used to detect metal surface defects has surpassed the limits of the human eye. Image classification through deep learning can improve the accuracy of image-based detection [1,2]. In addition, advances in graphics processing units have considerably increased the computing power of hardware. The You Only Look Once (YOLO) algorithm [3] and deep learning frameworks such as TensorFlow [4] and PyTorch [5] have been used for defect detection. Combined developments in convolution kernels (filters), pooling, and activation functions for image classification have promoted advances in deep learning technology. Many studies have employed convolutional neural networks (CNNs) to classify images [6,7,8]. CNNs have deep architectures and are relatively easy to train [9,10]. Such networks have been used to inspect products and detect defects in images effectively [11].

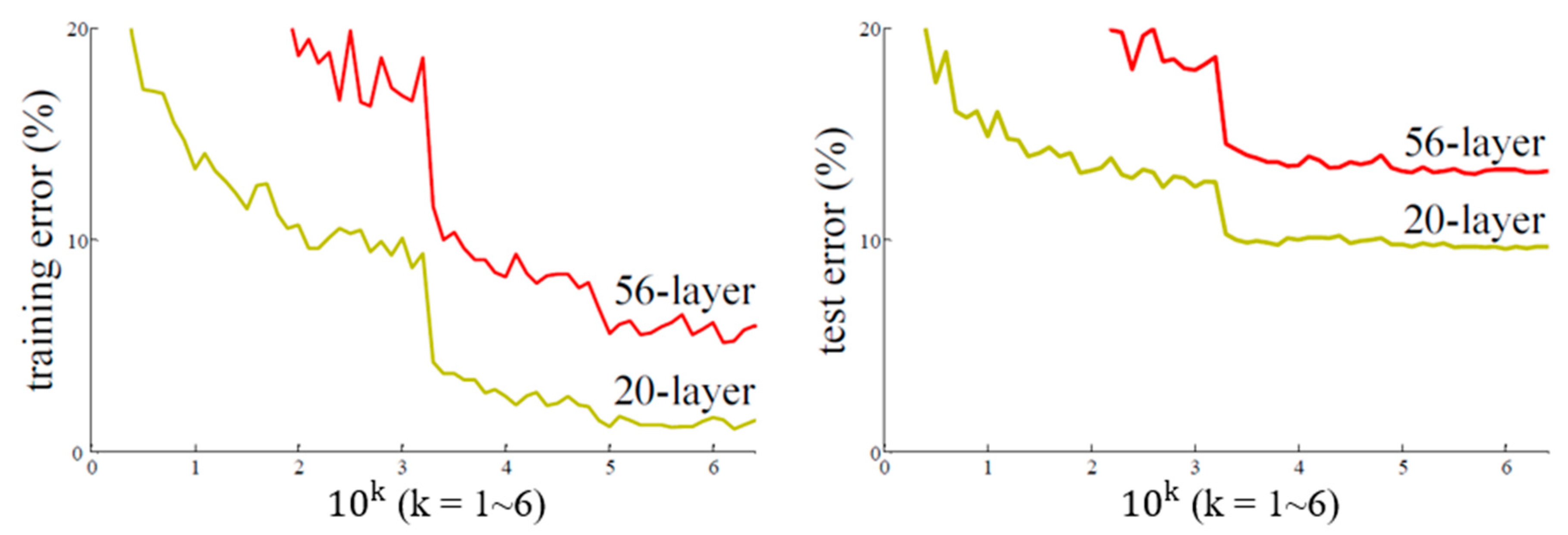

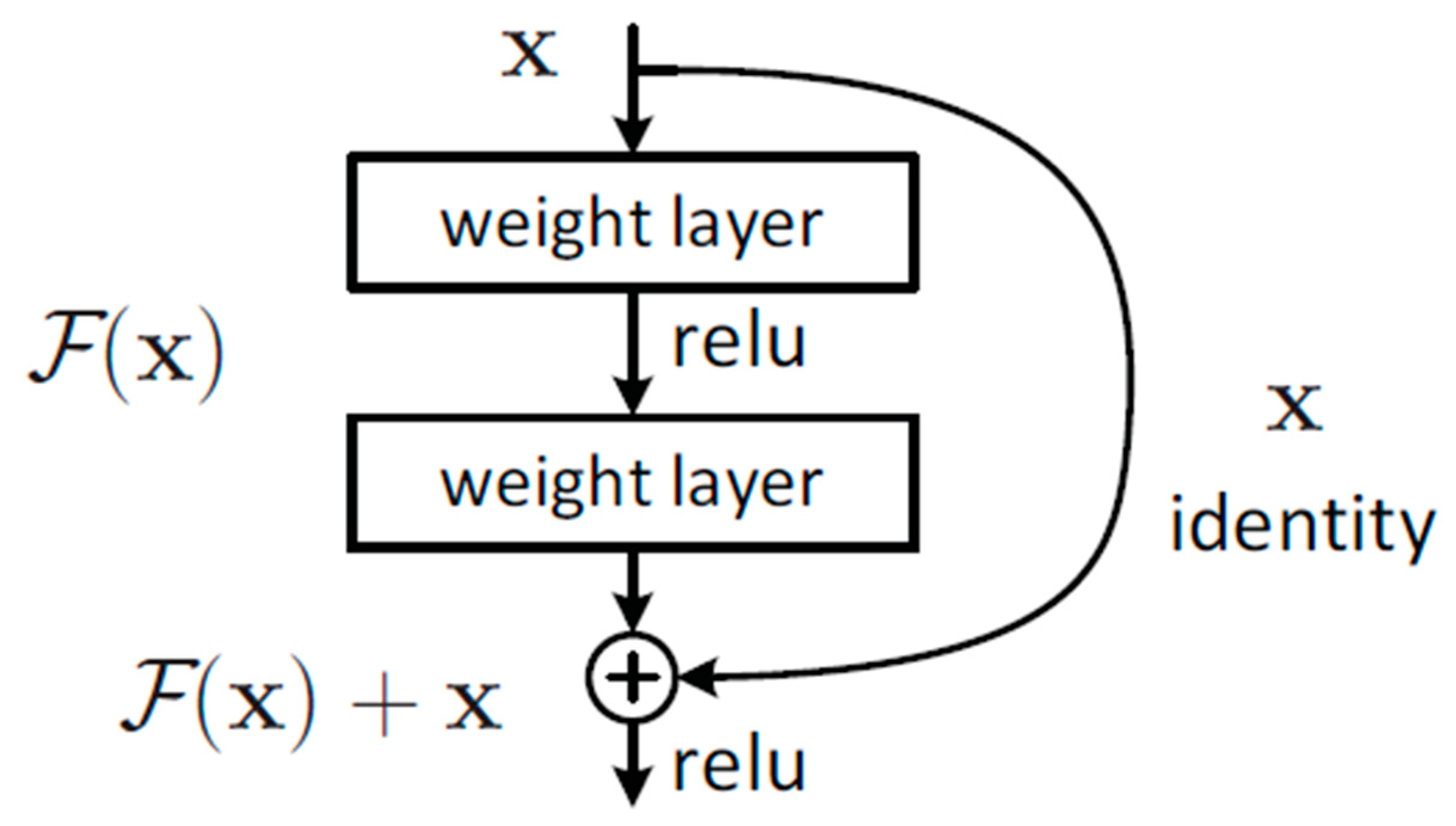

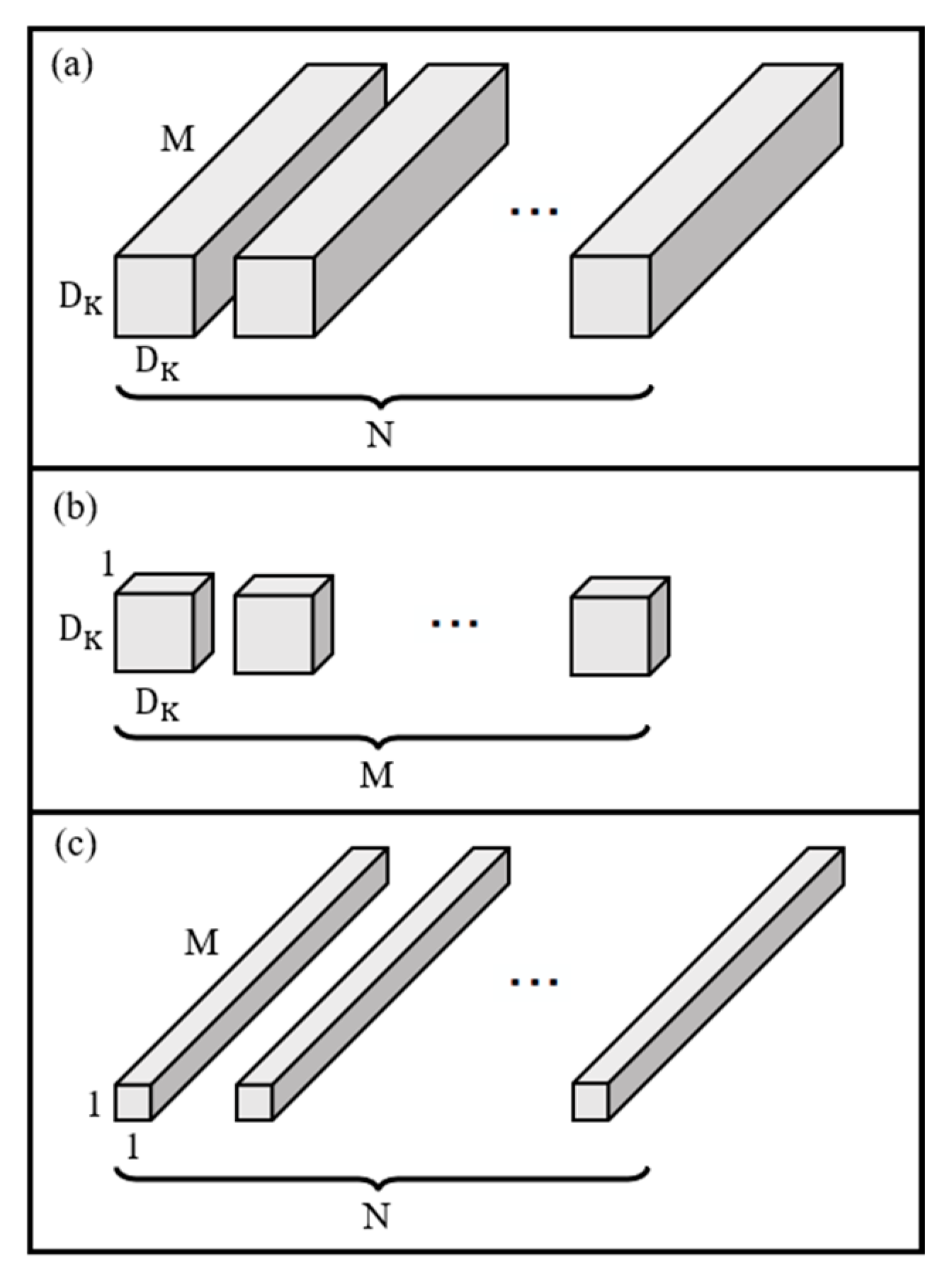

Theoretically, the number of hidden layers in a neural network (NN) strongly influences its performance. With more layers, a network can extract more complex feature patterns and therefore achieve superior results. However, the accuracy of a network peaks at a certain number of layers and may even decrease thereafter. ResNet [12] addresses this problem through residual learning with shortcut connections, which suppress the accuracy drop caused by stacking many layers in deep networks. When large kernels are used for feature extraction in convolution operations, numerous parameters are required. MobileNet [13] reduces this cost with depthwise separable convolution, which factorizes a standard convolution into two steps. A depthwise convolution first filters each input channel separately with its own single-channel kernel, so it does not change the depth of the input features and produces output feature maps with the same number of channels as the input feature maps. A pointwise (1 × 1) convolution then combines the channels and can increase or reduce the dimensionality of the feature maps. This factorization reduces computational complexity and accelerates calculation while maintaining high accuracy.
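To make the parameter savings of depthwise separable convolution concrete, the following minimal sketch counts the weights of a standard convolution and of its depthwise + pointwise factorization. It assumes square k × k kernels and ignores bias terms; the function names and the example sizes (k = 3, 32 input channels, 64 output channels) are illustrative choices, not values taken from MobileNet [13].

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias terms ignored)."""
    # Every output channel has its own k x k kernel spanning all input channels.
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in  # one single-channel k x k kernel per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution mixes channels and sets output depth
    return depthwise + pointwise


k, c_in, c_out = 3, 32, 64
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(std, sep, round(sep / std, 4))  # 18432 2336 0.1267

# The cost ratio always equals 1 / c_out + 1 / k**2.
assert abs(sep / std - (1 / c_out + 1 / k**2)) < 1e-12
```

With 3 × 3 kernels the factorized layer needs roughly one-eighth of the weights of the standard layer, and the ratio shrinks further as the output depth grows.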

A deep learning approach was developed for an optical inspection system that detects surface defects on extruded aluminum [14]. A simple camera records the extruded profiles during production, and an NN distinguishes immaculate surfaces from surfaces with various common defects. Metal defects vary in size, shape, and texture, and defects of different types can appear highly similar to an NN. In [15], an automatic segmentation and quantification method based on a customized deep learning architecture was proposed to detect defects in images of titanium-coated metal surfaces. In [16], a U-Net convolutional network was developed to segment biomedical images, with appropriate preprocessing and postprocessing steps; specifically, a median filter was applied to the input images to eliminate impulse noise. The detection and segmentation performance of the model was evaluated on standard benchmarks, and it achieved an accuracy of 93.46%.
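Median filtering, the preprocessing step mentioned for [16], replaces each pixel with the median of its neighborhood; this removes isolated impulse (salt-and-pepper) noise while preserving edges better than averaging does. The pure-Python sketch below assumes a 3 × 3 window and edge replication at the borders; the window size and border handling actually used in [16] are not stated here. In practice, a library routine such as `scipy.ndimage.median_filter` would normally be used instead.

```python
from statistics import median


def median_filter_3x3(image: list[list[float]]) -> list[list[float]]:
    """Apply a 3 x 3 median filter to a 2-D grayscale image given as nested lists.

    Border pixels are handled by clamping coordinates (edge replication).
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = []
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)  # clamp row index to the image
                    cc = min(max(c + dc, 0), cols - 1)  # clamp column index
                    window.append(image[rr][cc])
            # A single outlier never becomes the median of nine values,
            # so isolated impulse-noise pixels are replaced by their neighbors' value.
            out[r][c] = median(window)
    return out


# A flat 5 x 5 patch with one impulse-noise pixel in the middle.
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
clean = median_filter_3x3(noisy)
print(clean[2][2])  # 10
```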

In [17], a 26-layer CNN was developed to detect surface defects on roller bearing components, and its performance was compared with that of MobileNet, VGG-19 [18], and ResNet-50. VGG-19 achieved a mean average precision (mAP) of 83.86%, but its processing time was long (83.3 ms). MobileNet had the shortest processing time but the lowest mAP because of its small number of parameters and computations. The 26-layer CNN achieved a better balance between mAP and processing efficiency than the other three models: its mAP was nearly equal to that of ResNet-50, which had the highest mAP, and it required less detection time than ResNet-50 or VGG-19. In [19], an entropy calculation method was used in a self-designed DarkNet-53 NN model to select the most suitable kernel size for the convolutional layer. The model recognized components with high accuracy and required only a short training time.
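The mAP values quoted for [17] average the per-class average precision (AP). The sketch below computes non-interpolated AP from detections that have already been matched to ground truth and sorted by confidence (the overlap-based matching step is assumed to be done). Benchmarks such as PASCAL VOC and COCO use interpolated variants, and the exact variant used in [17] is not specified here; the class names and labels are hypothetical.

```python
def average_precision(ranked_labels: list[int], num_positives: int) -> float:
    """Non-interpolated AP for one class.

    ranked_labels: 1 for a true positive, 0 for a false positive, sorted by
    descending detection confidence. num_positives: ground-truth objects of the class.
    """
    hits = 0
    precision_sum = 0.0
    for rank, label in enumerate(ranked_labels, start=1):
        if label:
            hits += 1
            precision_sum += hits / rank  # precision at the rank where recall increases
    return precision_sum / num_positives if num_positives else 0.0


def mean_average_precision(per_class: dict[str, tuple[list[int], int]]) -> float:
    """mAP: the unweighted mean of the per-class AP values."""
    aps = [average_precision(labels, n) for labels, n in per_class.values()]
    return sum(aps) / len(aps)


# Hypothetical detections for two hypothetical defect classes.
per_class = {
    "scratch": ([1, 0, 1, 1, 0], 3),  # AP = (1 + 2/3 + 3/4) / 3
    "dent": ([1, 1, 0], 2),           # AP = (1 + 1) / 2 = 1.0
}
print(round(mean_average_precision(per_class), 4))  # 0.9028
```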

In [20], two types of residual fully connected NNs (RFCNNs) were developed: RFCN-ResNet and RFCN-DenseNet. Their performance in classifying 24 types of tumors was compared with that of XGBoost and the AutoKeras automated machine learning (AutoML) framework. RFCN-ResNet and RFCN-DenseNet enhance feature propagation and encourage feature reuse within the RFCN architecture. In one comparison, they achieved accuracies of 95.9% and 95.7%, respectively, outperforming XGBoost and AutoKeras by 4.8% and 4.9%, respectively. In another comparison, RFCN-ResNet and RFCN-DenseNet achieved accuracies of 95.9% and 96.5%, respectively, and outperformed XGBoost and AutoKeras by 6.1% and 5.5%, respectively. These results indicate that RFCN-ResNet and RFCN-DenseNet considerably outperform XGBoost and AutoKeras in modeling genomic data.

In [21], AutoKeras and a self-designed model were used to analyze water quality. Compared with AutoKeras, the developed model was 1.8% and 1% more accurate in classifying two-class and multiclass water data, respectively. However, AutoKeras was more efficient than the developed model and required no manual effort.

The authors of [22] argued that random trials are more efficient than grid-search trials for hyperparameter optimization. Their Gaussian process analysis indicated that only a few hyperparameters are crucial for a given dataset and that different hyperparameters are crucial for different datasets. Thus, a grid search, which spends many of its trials repeating values along unimportant dimensions, is a poor choice for configuring algorithms for new datasets.
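The argument of [22] can be illustrated with a toy experiment: with the same budget of nine trials, a 3 × 3 grid tries only three distinct values of each hyperparameter, whereas random search tries nine. When only one hyperparameter (here, the learning rate) actually affects the result, random search therefore probes the important dimension more densely. The search space, the objective `toy_validation_error`, and all numbers below are hypothetical.

```python
import itertools
import random


def grid_search_trials(space: dict[str, tuple[float, float]], points_per_dim: int) -> list[dict[str, float]]:
    """All combinations of evenly spaced values for each hyperparameter."""
    axes = [
        [low + i * (high - low) / (points_per_dim - 1) for i in range(points_per_dim)]
        for low, high in space.values()
    ]
    return [dict(zip(space, combo)) for combo in itertools.product(*axes)]


def random_search_trials(space: dict[str, tuple[float, float]], n_trials: int, seed: int = 0) -> list[dict[str, float]]:
    """Independent uniform samples of every hyperparameter."""
    rng = random.Random(seed)
    return [{name: rng.uniform(low, high) for name, (low, high) in space.items()} for _ in range(n_trials)]


def toy_validation_error(trial: dict[str, float]) -> float:
    """Hypothetical objective in which only the learning rate matters."""
    return (trial["log10_lr"] + 3.7) ** 2


space = {"log10_lr": (-5.0, -1.0), "dropout": (0.0, 0.5)}
grid = grid_search_trials(space, points_per_dim=3)  # 3 x 3 = 9 trials
rand = random_search_trials(space, n_trials=9)       # same budget of 9 trials

print(len({t["log10_lr"] for t in grid}))  # 3 distinct learning rates
print(len({t["log10_lr"] for t in rand}))  # 9 distinct learning rates
# Compare the best (lowest) error each strategy found.
print(min(map(toy_validation_error, grid)), min(map(toy_validation_error, rand)))
```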

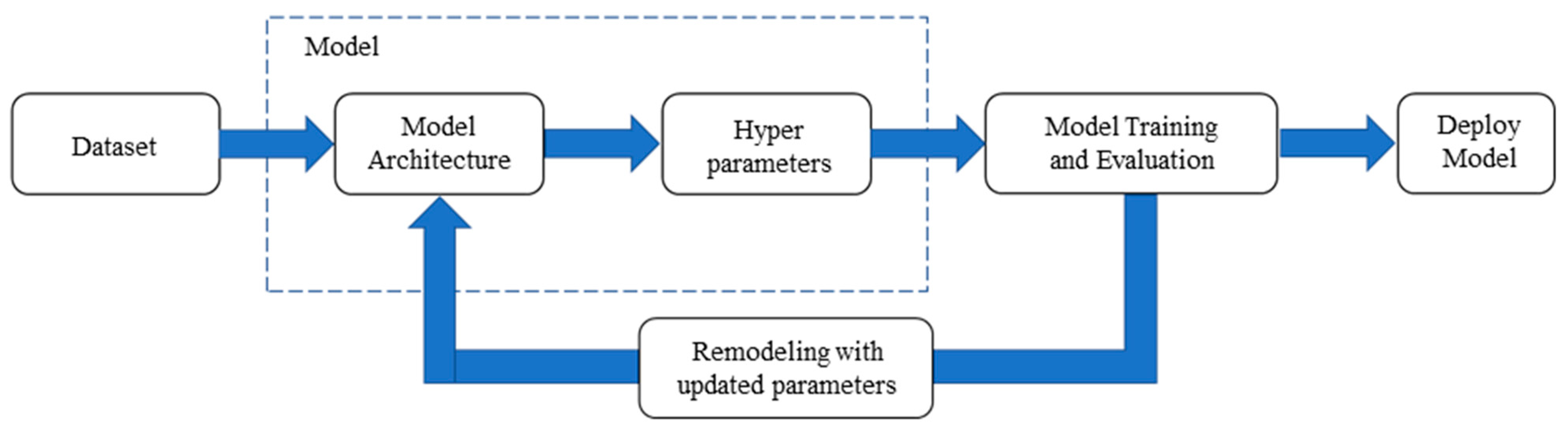

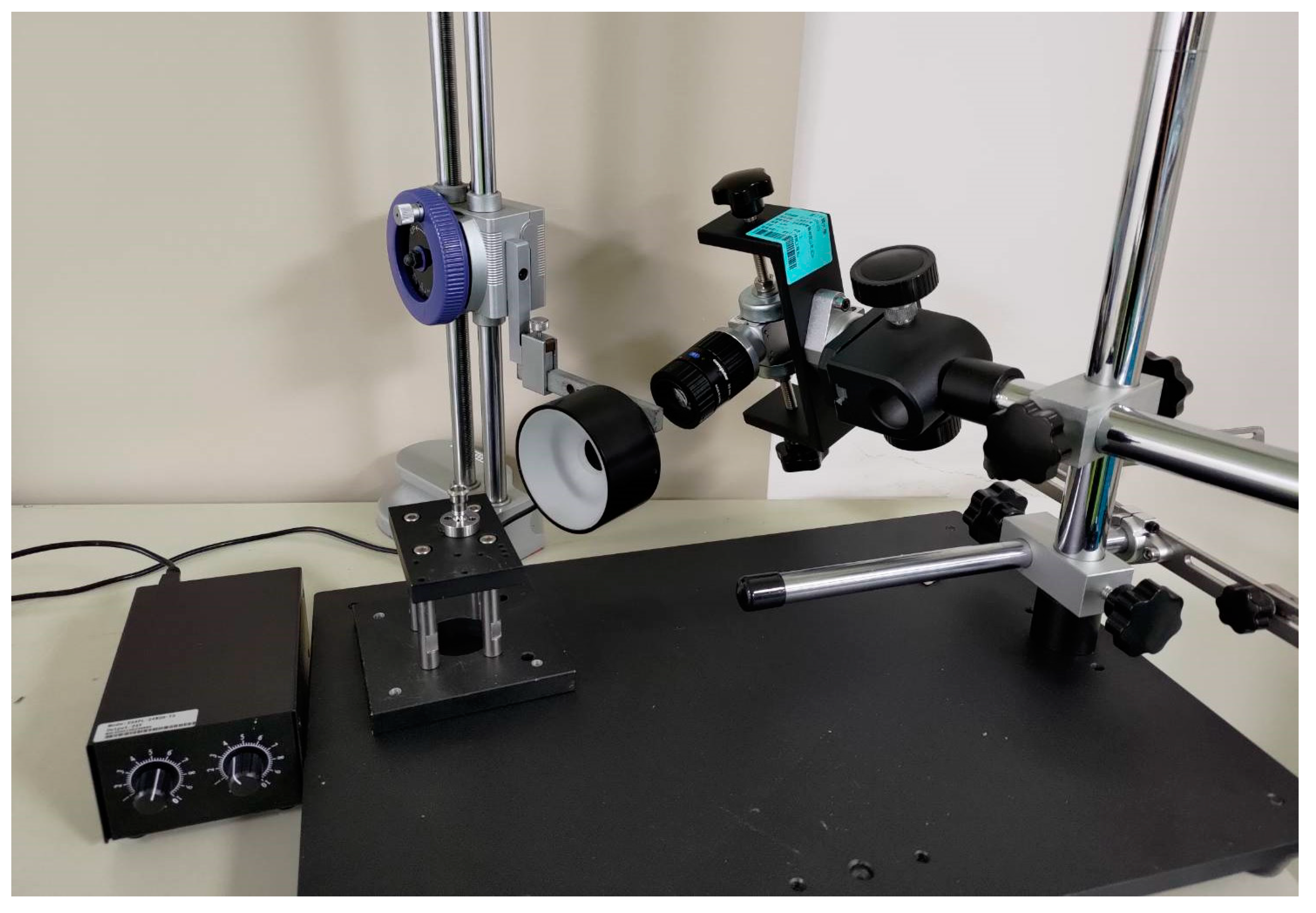

In smart manufacturing, quickly adapting to new, complex manufacturing processes and designing appropriate and efficient optimization networks have become crucial. In the present study, industrial machine vision and deep learning were combined to construct an AutoML model that detects defects on metal surfaces, with the aim of reducing costs in smart manufacturing. The proposed model can be used to develop highly adaptable visual inspection techniques that overcome the bottlenecks of current image-processing techniques and thereby advance smart manufacturing.

5. Conclusions

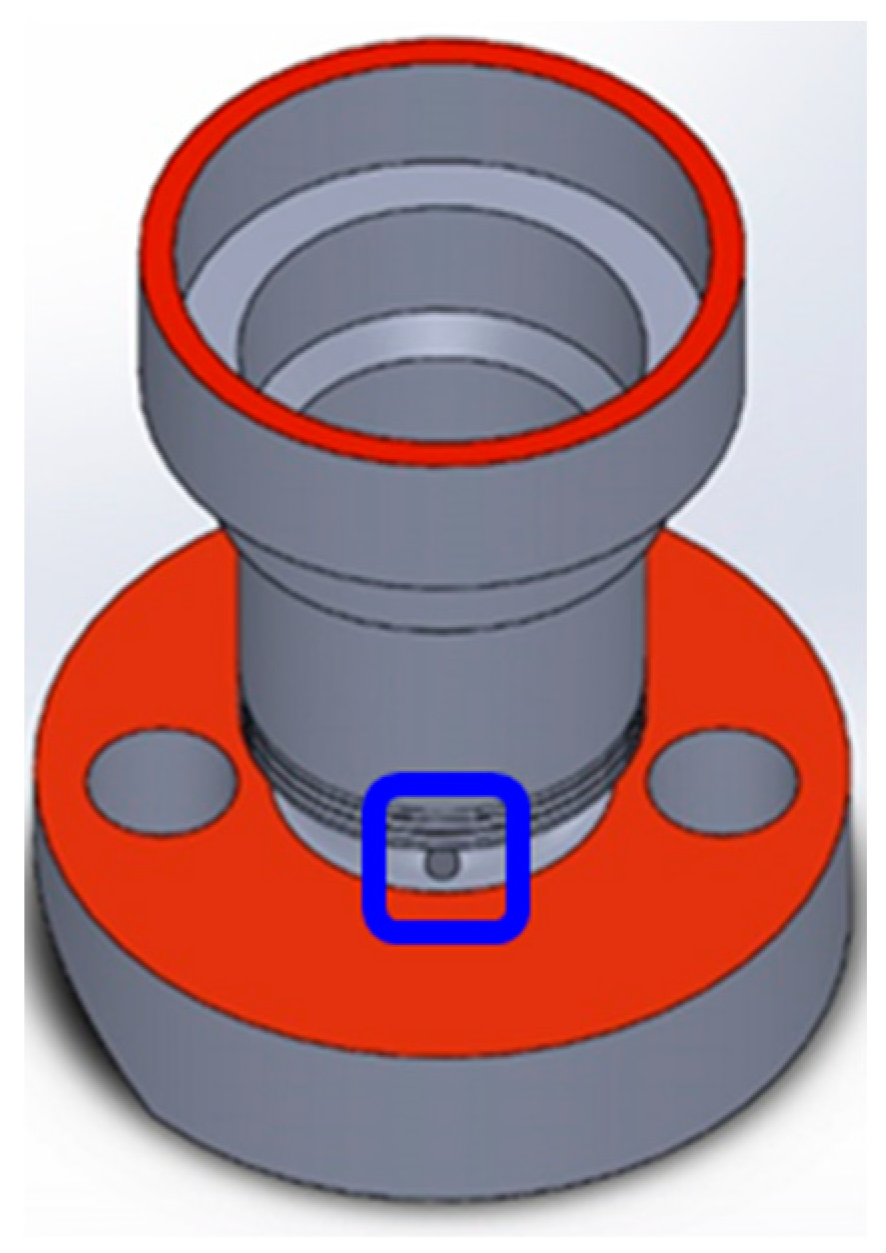

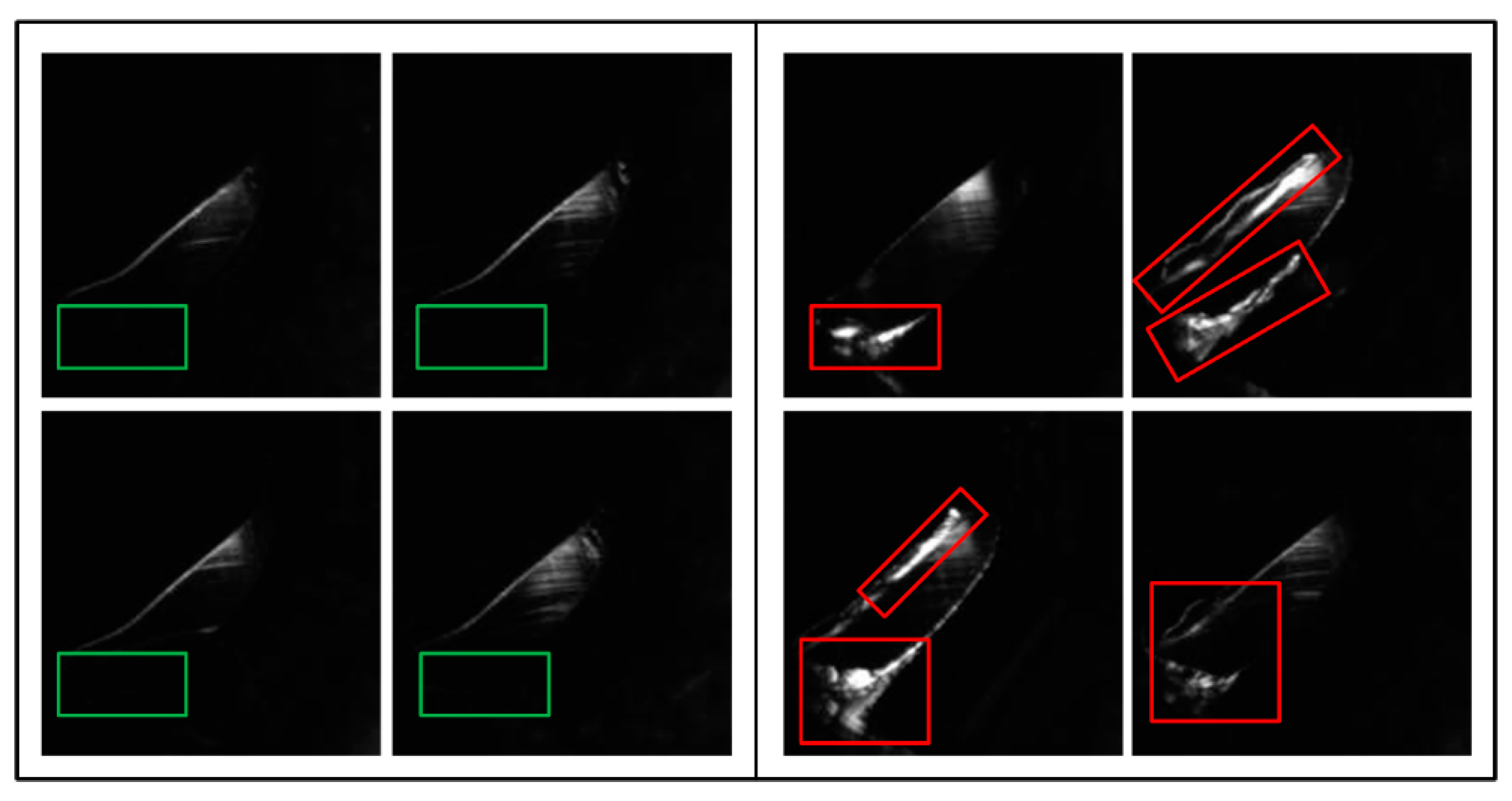

In this study, we detected a burr around the chamfered hole of 304 stainless steel parts produced through CNC machining. Because such a burr can scratch the wires that pass through this hole, the defect should be detected through imaging; thus, images of the hole were used as the training data in this study. CNN models were constructed with three approaches: transfer learning (TL), our designed AutoML model, and the AutoKeras model. Experiments were conducted with all three approaches on the same training dataset.

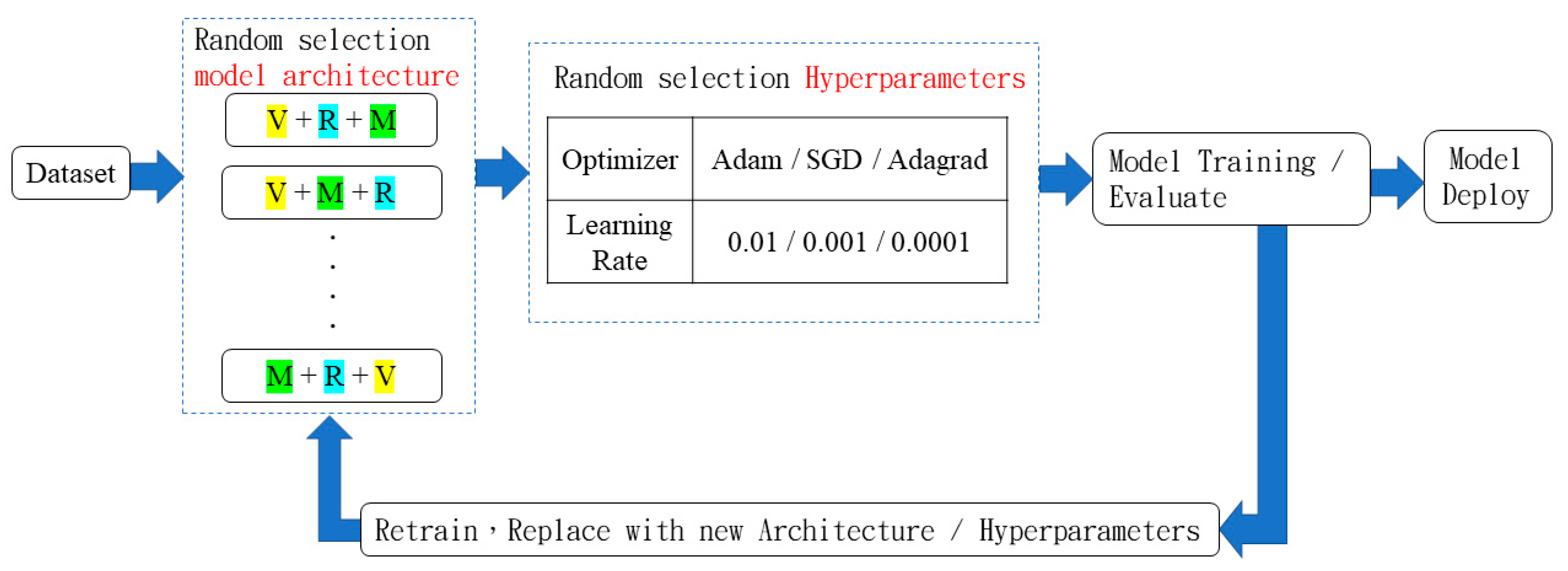

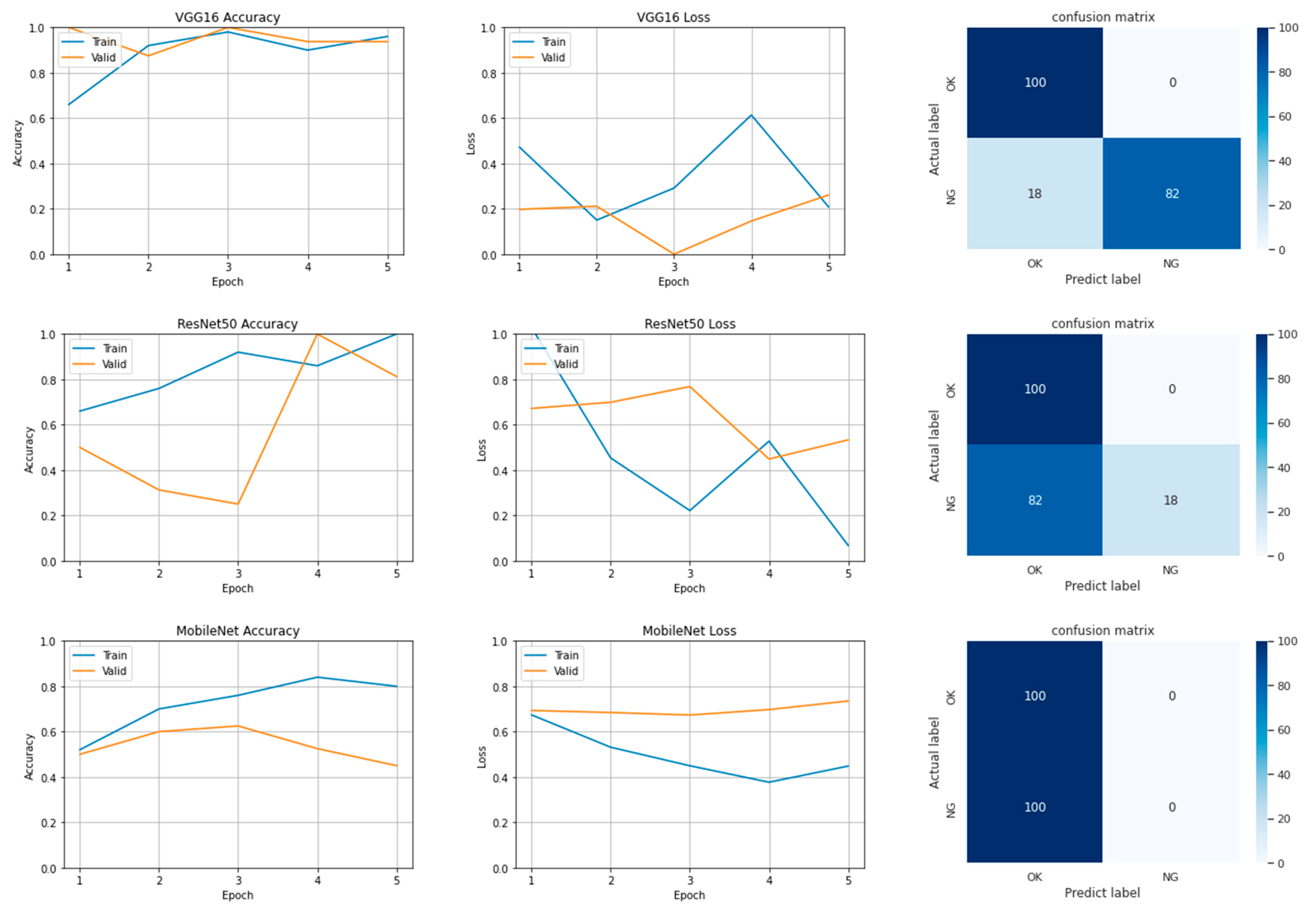

The TL models were trained with the following fixed hyperparameters: the Adam optimizer, a batch size of 10, 5 epochs, and a learning rate of 0.0001. Three pretrained networks were used: VGG-16, ResNet-50, and MobileNet v1. VGG-16 achieved the highest accuracy of the three on the dataset used in this study, with high training and validation accuracies. Although the training accuracy of ResNet-50 eventually reached 100%, its validation accuracy was low, and its prediction accuracy fluctuated considerably at epoch 3. A network with more layers might be expected to converge more stably; however, as the number of epochs increased, the prediction accuracy remained unstable, presumably because the defect to be detected is not complicated. The training and validation accuracies of MobileNet v1 were considerably lower than those of the other tested models.

The designed AutoML model used random search to select a combination of modules from which to construct an optimal model architecture. It then obtained the hyperparameters after training and applied a retraining mechanism, so that a new architecture could be selected and retrained whenever the training accuracy was low. The designed AutoML model trained six models and achieved a final training accuracy of 95.5%. The AutoKeras model required a longer training time but built its neural architecture search model in a shorter time. The accuracy and the two-layer architecture of the convolutional model selected by AutoKeras suggest that the dataset used was simple and did not require a complex model.
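The search-and-retrain procedure described above can be sketched as a simple loop. This is a minimal, framework-agnostic illustration under stated assumptions, not the authors' implementation: the module names in `MODULES`, the depth range, the 0.95 accuracy threshold, and the stub `fake_train_and_evaluate` (which stands in for actually building and training a CNN) are all hypothetical. Only the random selection of modules and the retraining of a new architecture when accuracy is low come from the text; the default budget of six models merely mirrors the six models reported above.

```python
import random
from dataclasses import dataclass
from typing import Callable

# Hypothetical module vocabulary, named after the three backbones in the study.
MODULES = ("vgg_block", "residual_block", "depthwise_separable_block")


@dataclass
class Candidate:
    modules: tuple[str, ...]
    accuracy: float


def sample_architecture(rng: random.Random, min_depth: int = 2, max_depth: int = 5) -> tuple[str, ...]:
    """Randomly choose a depth, then a module for each layer position."""
    depth = rng.randint(min_depth, max_depth)
    return tuple(rng.choice(MODULES) for _ in range(depth))


def search_with_retraining(
    train_and_evaluate: Callable[[tuple[str, ...]], float],
    max_models: int = 6,
    target_accuracy: float = 0.95,
    seed: int = 0,
) -> tuple[Candidate, list[Candidate]]:
    """Sample and train architectures until one reaches the target or the budget runs out."""
    rng = random.Random(seed)
    history: list[Candidate] = []
    for _ in range(max_models):
        arch = sample_architecture(rng)
        history.append(Candidate(arch, train_and_evaluate(arch)))
        if history[-1].accuracy >= target_accuracy:
            break  # accuracy is acceptable, so no new architecture is needed
    best = max(history, key=lambda c: c.accuracy)
    return best, history


def fake_train_and_evaluate(arch: tuple[str, ...]) -> float:
    """Hypothetical stand-in for real training: deeper candidates score higher."""
    return 0.80 + 0.04 * len(arch)


best, history = search_with_retraining(fake_train_and_evaluate)
print(len(history), best.modules, round(best.accuracy, 2))
```

In a real pipeline, `train_and_evaluate` would assemble the network from the sampled modules, train it, and return its validation accuracy; hyperparameters could be sampled in the same loop.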

The VGG-16, ResNet-50, and MobileNet v1 models all offer established architectures that mitigate gradient vanishing. Following the TL approach, deploying these models can provide a preliminary estimate of prediction accuracy within a few trials. Our designed AutoML model, whose core layer modules are obtained by combining the modules of VGG-16, ResNet-50, and MobileNet v1, can effectively improve defect detection and reduce the associated training costs. Because it can select a better candidate CNN architecture, our model has considerable advantages for deploying proofs of concept in defect detection. The results of this study can serve as a reference for the development of new diagnostic technology for cutting-edge smart manufacturing.