An Efficient Pest Detection Framework with a Medium-Scale Benchmark to Increase the Agricultural Productivity

Abstract

1. Introduction

- We develop a medium-scale pest (insect) detection dataset of diverse images captured in challenging environments, where the insects are visually very similar to the background. The dataset covers five pest classes, allowing a network to be trained to detect and recognize these species efficiently.

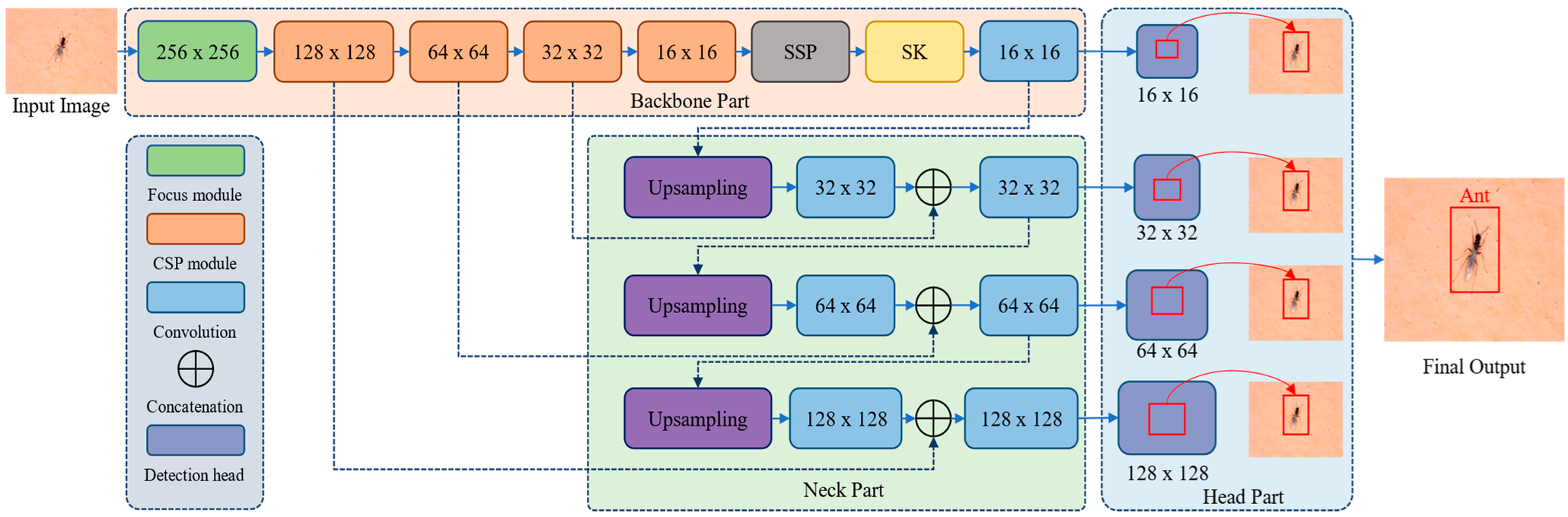

- We propose a modified YOLOv5s model that extends the CSP module, improves the SK Attention module, and modifies the multiscale feature extraction mechanism, so that pests are detected efficiently at a reduced computational cost.

- We compare the proposed model with various versions of YOLOv5 and other state-of-the-art detectors on our self-collected dataset, where it achieves the best detection accuracy while keeping the computational cost low.
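The specific changes made to the SK Attention module are not described in the sections shown here, so the following sketch only illustrates the fuse-and-select step of the original Selective Kernel (SK) attention from SKNet, in dependency-free Python on toy lists. The function and variable names are hypothetical, batch normalization is omitted, and this is not the authors' modified module.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]      # rows x columns
FeatureMap = List[List[float]]  # channels x flattened spatial positions


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Dense matrix-vector product."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def sk_select(branches: List[FeatureMap], w_fc: Matrix,
              w_branch: List[Matrix]) -> Tuple[FeatureMap, List[List[float]]]:
    """Fuse-and-select step of standard Selective Kernel (SK) attention.

    branches: outputs of parallel convolutions (e.g. 3x3 and 5x5), same shape.
    w_fc: d x C matrix compressing the C channel statistics into z.
    w_branch: one C x d matrix per branch mapping z to per-channel logits.
    """
    channels = len(branches[0])
    # Fuse: element-wise sum of the branches, then global average pooling per channel.
    s = []
    for c in range(channels):
        fused = [sum(vals) for vals in zip(*(b[c] for b in branches))]
        s.append(sum(fused) / len(fused))
    # Compact descriptor z with ReLU (batch normalization omitted in this sketch).
    z = [max(0.0, x) for x in matvec(w_fc, s)]
    # Per-branch logits, then a softmax across branches for every channel.
    logits = [matvec(w, z) for w in w_branch]
    weights = []
    for c in range(channels):
        peak = max(lg[c] for lg in logits)
        exps = [math.exp(lg[c] - peak) for lg in logits]
        total = sum(exps)
        weights.append([e / total for e in exps])
    # Select: channel-wise weighted sum of the branch outputs.
    selected = [
        [sum(weights[c][k] * branches[k][c][i] for k in range(len(branches)))
         for i in range(len(branches[0][c]))]
        for c in range(channels)
    ]
    return selected, weights


# Toy example: two branches, two channels, two spatial positions each.
branch_3x3 = [[1.0, 2.0], [0.0, 4.0]]
branch_5x5 = [[3.0, 2.0], [2.0, 0.0]]
w_fc = [[0.5, 0.5]]         # C = 2 -> d = 1
equal = [[1.0], [1.0]]      # identical branch weights -> equal attention
out, attn = sk_select([branch_3x3, branch_5x5], w_fc, [equal, equal])
print(attn)  # [[0.5, 0.5], [0.5, 0.5]]
print(out)   # [[2.0, 2.0], [1.0, 2.0]]
```

With identical branch matrices the softmax gives each kernel size the same weight, so the output is the plain average of the two branches; with learned matrices, each channel can lean towards the receptive field that suits it.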

2. Related Work

3. The Proposed Method

3.1. Extended CSP Module

3.2. Modified SK Attention Module

3.3. Multiscale Feature Detection

3.4. Pseudocode Algorithm

| Algorithm 1: Pseudocode of the proposed model |
|---|
| Input: Dataset samples S = {(X1, Y1), (X2, Y2), …, (Xn, Yn)}. S is split into a training set (TrainX, TrainY), a validation set (ValX, ValY), and a testing set (TestX, TestY), where X denotes the pest images and Y the corresponding image labels. |
| T denotes the number of training epochs. |
| Output: converged model |
| Load (TrainX, TrainY) and (ValX, ValY); |
| Augment (TrainX, TrainY); |
| Begin: |
| Initialize weights and biases. |
| For m = 1, 2, 3, …, T: |
| Extract features using the CSP module. |
| Input the features to the SK Attention Module. |
| Generate the attention map using the SK Attention Module. |
| Feed the features extracted by the SK Attention Module to the Multiscale Feature Detection module. |
| Weight the multiscale feature maps and calculate the output of the Multiscale Feature Detection module. |
| Model fit (Optimizer, (TrainX, TrainY)) → M(m) |
| Model evaluate (M(m), (ValX, ValY)) → mAP(m) |
| End For |
| Save the optimal model, i.e., the one with the maximum mAP over the T epochs. |
| End |
| Load the testing set; |
| Load the optimal model in terms of object detection performance. |
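The training and model-selection part of Algorithm 1 can be sketched in framework-agnostic Python: run T epochs, compute the validation mAP after each one, and keep a snapshot of the best model. `fit_one_epoch` and `evaluate_map` are hypothetical placeholders for the YOLOv5 training and validation steps (augmentation, the CSP / SK Attention / multiscale forward pass, and the optimizer are all hidden behind them); they are not functions from any library.

```python
import copy
from typing import Callable, Optional, Tuple, TypeVar

ModelT = TypeVar("ModelT")


def train_with_best_map(model: ModelT,
                        fit_one_epoch: Callable[[ModelT], None],
                        evaluate_map: Callable[[ModelT], float],
                        epochs: int) -> Tuple[Optional[ModelT], int, float]:
    """Run `epochs` training epochs and keep a copy of the model with the highest validation mAP."""
    best_model: Optional[ModelT] = None
    best_epoch, best_map = 0, float("-inf")
    for m in range(1, epochs + 1):
        fit_one_epoch(model)            # Model fit(Optimizer, (TrainX, TrainY)) -> M(m)
        map_m = evaluate_map(model)     # Model evaluate(M(m), (ValX, ValY)) -> mAP(m)
        if map_m > best_map:            # new best epoch: snapshot the current weights
            best_model = copy.deepcopy(model)
            best_epoch, best_map = m, map_m
    return best_model, best_epoch, best_map


# Toy usage: the "model" is a dict and the validation scores are dummy values.
dummy_scores = iter([0.1, 0.3, 0.5, 0.4])
toy_model = {"step": 0}
best, best_epoch, best_map = train_with_best_map(
    toy_model,
    fit_one_epoch=lambda mdl: mdl.update(step=mdl["step"] + 1),
    evaluate_map=lambda mdl: next(dummy_scores),
    epochs=4,
)
print(best, best_epoch, best_map)  # {'step': 3} 3 0.5
```

Copying the model with `copy.deepcopy` keeps the saved checkpoint from changing as training continues; with a real PyTorch model one would more typically save `model.state_dict()` to disk at the best epoch.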

4. Dataset Collection

5. Experiments and Results

5.1. Experimental Setup

5.2. Convergence Results of the Proposed Model

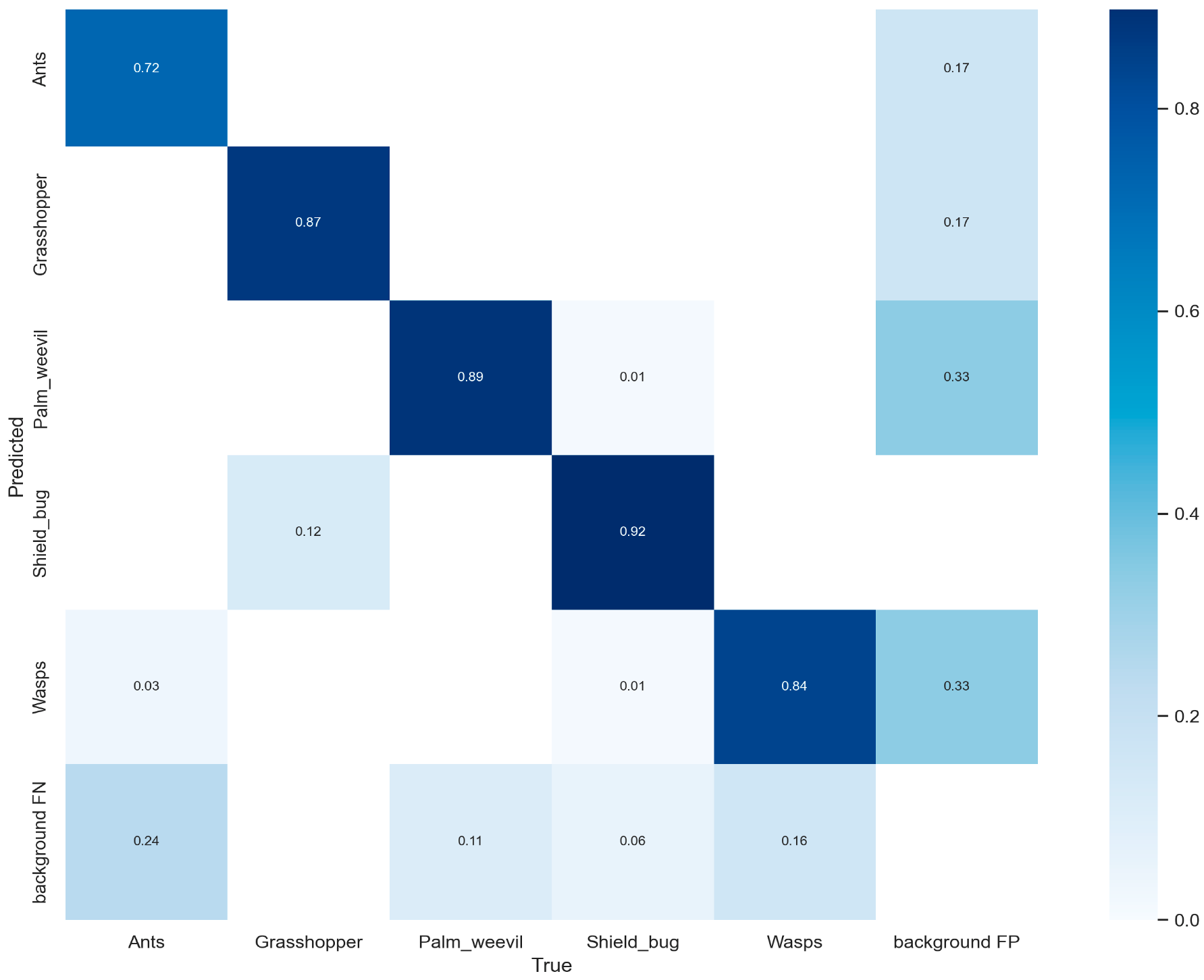

5.3. Comparison of the Proposed Model with Various YOLOv5 Versions and State-of-the-Art Models

5.4. Model Complexity Analysis

5.5. Visual Results of the Proposed Model

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class | Number of Images |
|---|---|
| Ants | 392 |
| Grasshopper | 315 |
| Palm_weevil | 148 |
| Shield_bug | 392 |
| Wasps | 318 |
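A quick tally of the class counts above gives the size of the dataset and shows how imbalanced it is. The snippet only does arithmetic on the numbers in the table and assumes each image is counted under exactly one class; the variable names are illustrative.

```python
# Image counts per class, copied from the dataset table.
counts = {
    "Ants": 392,
    "Grasshopper": 315,
    "Palm_weevil": 148,
    "Shield_bug": 392,
    "Wasps": 318,
}

total = sum(counts.values())
# Percentage of images per class, rounded to one decimal place.
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
# Ratio between the largest and the smallest class.
imbalance = max(counts.values()) / min(counts.values())

print(total)                # 1565
print(shares)               # {'Ants': 25.0, 'Grasshopper': 20.1, 'Palm_weevil': 9.5, 'Shield_bug': 25.0, 'Wasps': 20.3}
print(round(imbalance, 2))  # 2.65
```

Palm_weevil makes up only about 9.5% of the images, while the two largest classes (Ants and Shield_bug) each have roughly 2.65 times as many.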

| Models | Classes | Precision | Recall | mAP |
|---|---|---|---|---|
| Faster R-CNN | All | 0.92 | 0.89 | 0.924 |
| | Ants | 0.73 | 0.74 | 0.76 |
| | Grasshopper | 0.98 | 0.99 | 1 |
| | Palm_weevil | 0.99 | 0.88 | 0.98 |
| | Shield_bug | 0.96 | 0.97 | 1 |
| | Wasps | 0.94 | 0.86 | 0.91 |
| YOLOv3 | All | 0.82 | 0.87 | 0.86 |
| | Ants | 0.59 | 0.75 | 0.64 |
| | Grasshopper | 0.86 | 0.84 | 0.91 |
| | Palm_weevil | 0.91 | 0.93 | 0.95 |
| | Shield_bug | 0.88 | 0.91 | 0.93 |
| | Wasps | 0.87 | 0.9 | 0.88 |
| YOLOv4 | All | 0.85 | 0.87 | 0.89 |
| | Ants | 0.65 | 0.76 | 0.71 |
| | Grasshopper | 0.87 | 0.83 | 0.93 |
| | Palm_weevil | 0.93 | 0.92 | 0.96 |
| | Shield_bug | 0.9 | 0.94 | 0.95 |
| | Wasps | 0.88 | 0.91 | 0.89 |
| YOLOv5n | All | 0.87 | 0.878 | 0.895 |
| | Ants | 0.573 | 0.74 | 0.677 |
| | Grasshopper | 0.923 | 0.875 | 0.944 |
| | Palm_weevil | 1 | 0.983 | 0.995 |
| | Shield_bug | 0.933 | 0.972 | 0.978 |
| | Wasps | 0.922 | 0.821 | 0.881 |
| YOLOv5s | All | 0.906 | 0.835 | 0.901 |
| | Ants | 0.797 | 0.679 | 0.781 |
| | Grasshopper | 0.904 | 0.875 | 0.88 |
| | Palm_weevil | 0.965 | 1 | 0.995 |
| | Shield_bug | 0.973 | 0.915 | 0.977 |
| | Wasps | 0.888 | 0.706 | 0.871 |
| YOLOv5m | All | 0.936 | 0.845 | 0.907 |
| | Ants | 0.861 | 0.655 | 0.731 |
| | Grasshopper | 0.948 | 1 | 0.995 |
| | Palm_weevil | 1 | 0.816 | 0.995 |
| | Shield_bug | 0.943 | 0.934 | 0.97 |
| | Wasps | 0.93 | 0.821 | 0.846 |
| YOLOv5l | All | 0.849 | 0.89 | 0.917 |
| | Ants | 0.723 | 0.721 | 0.756 |
| | Grasshopper | 1 | 0.933 | 0.995 |
| | Palm_weevil | 0.758 | 1 | 0.984 |
| | Shield_bug | 0.885 | 0.958 | 0.965 |
| | Wasps | 0.881 | 0.839 | 0.886 |
| YOLOv5x | All | 0.912 | 0.882 | 0.921 |
| | Ants | 0.697 | 0.724 | 0.764 |
| | Grasshopper | 0.969 | 1 | 0.995 |
| | Palm_weevil | 1 | 0.866 | 0.975 |
| | Shield_bug | 0.972 | 0.978 | 0.992 |
| | Wasps | 0.922 | 0.841 | 0.89 |
| Our model | All | 0.938 | 0.896 | 0.934 |
| | Ants | 0.79 | 0.76 | 0.80 |
| | Grasshopper | 0.98 | 1 | 0.996 |
| | Palm_weevil | 1 | 0.886 | 0.98 |
| | Shield_bug | 0.975 | 0.978 | 0.993 |
| | Wasps | 0.947 | 0.856 | 0.9 |
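The overall ("All") mAP of each model closely matches the unweighted mean of its five per-class values, which is the usual definition of mean average precision over classes. The short check below recomputes it for two rows of the results table; it only averages the reported numbers and does not compute AP from raw detections. `statistics.fmean` requires Python 3.8 or later.

```python
from statistics import fmean

# Per-class mAP values copied from the results table,
# in the order Ants, Grasshopper, Palm_weevil, Shield_bug, Wasps.
per_class_map = {
    "YOLOv5n": [0.677, 0.944, 0.995, 0.978, 0.881],
    "Our model": [0.80, 0.996, 0.98, 0.993, 0.9],
}

# Unweighted mean over the five classes, rounded like the table.
overall = {model: round(fmean(vals), 3) for model, vals in per_class_map.items()}
print(overall)  # {'YOLOv5n': 0.895, 'Our model': 0.934}
```

Because every class counts equally in this average, the weakest class (Ants, for every model in the table) pulls the overall mAP down noticeably, which is where the proposed model gains the most over the YOLOv5 variants.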

| Model | GFLOPs | Model size (MB) | FPS (CPU) |
|---|---|---|---|
| YOLOv5n | 4.2 | 3.65 | 31.02 |
| YOLOv5s | 16 | 14.1 | 21.25 |
| YOLOv5m | 48.3 | 40.2 | 10.16 |
| YOLOv5l | 108.3 | 88.5 | 6.62 |
| YOLOv5x | 204.7 | 165 | 3.90 |
| The proposed model | 4.8 | 13 | 28.60 |
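Since FPS is a throughput, its reciprocal gives the average CPU time per image, which makes the speed differences easier to read. The sketch converts a few rows of the complexity table and computes the GFLOPs reduction relative to the YOLOv5s baseline; it assumes FPS was measured one image at a time, which the table does not state, and `latency_ms` is an illustrative helper name.

```python
# Values copied from the complexity table.
models = {
    "YOLOv5n": {"gflops": 4.2, "fps": 31.02},
    "YOLOv5s": {"gflops": 16.0, "fps": 21.25},
    "Proposed": {"gflops": 4.8, "fps": 28.60},
}


def latency_ms(fps: float) -> float:
    """Average per-image latency (milliseconds) implied by a throughput in frames per second."""
    return 1000.0 / fps


for name, m in models.items():
    print(f"{name}: {latency_ms(m['fps']):.1f} ms/image")
# YOLOv5n: 32.2 ms/image
# YOLOv5s: 47.1 ms/image
# Proposed: 35.0 ms/image

# Relative reduction in GFLOPs of the proposed model versus YOLOv5s.
saving = 1 - models["Proposed"]["gflops"] / models["YOLOv5s"]["gflops"]
print(f"GFLOPs reduction vs YOLOv5s: {saving:.0%}")  # 70%
```

On these numbers, the proposed model needs about 35 ms per image on the CPU versus about 47 ms for YOLOv5s, with roughly 70% fewer GFLOPs; YOLOv5n remains slightly faster (about 32 ms per image) at a lower GFLOPs count.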

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aladhadh, S.; Habib, S.; Islam, M.; Aloraini, M.; Aladhadh, M.; Al-Rawashdeh, H.S. An Efficient Pest Detection Framework with a Medium-Scale Benchmark to Increase the Agricultural Productivity. Sensors 2022, 22, 9749. https://doi.org/10.3390/s22249749
