Cascading Alignment for Unsupervised Domain-Adaptive DETR with Improved DeNoising Anchor Boxes

Abstract

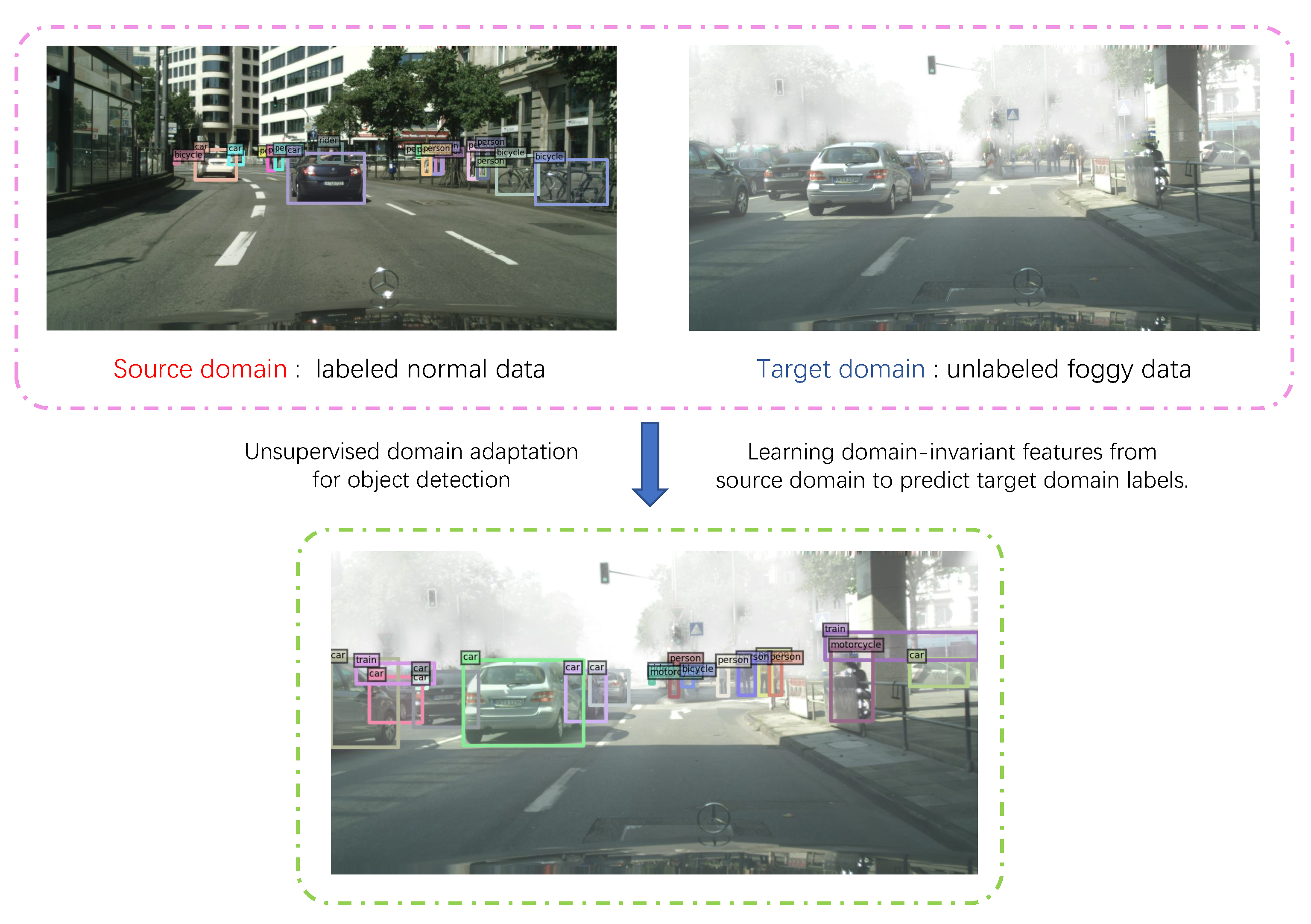

1. Introduction

- We observe that a weak discriminator is a primary reason why alignment of feature distribution on the backbone yields only modest gains and propose AEDD. It directly scopes the backbone to alleviate the domain gaps and guide the ascension of the cross-domain performance of the transformer encoder and decoder.

- A novel weak-restraints loss is proposed to regularize further the category-level token produced by the transformer decoder and boost its discriminability for robust object detection.

- Extensive experiments on challenging domain adaptation scenarios verify the effectiveness of our method with end-to-end training.

2. Related Work

2.1. Object Detection

2.2. Pipeline of DINO

2.3. Domain Adaptation for Object Detection

3. Methods

3.1. Framework Overview

3.2. Attention-Enhanced Double Discriminators

3.3. Weak Restraints on Category-Level Token

3.4. Total Loss

4. Experiments

4.1. Datasets

- Cityscapes [31] has a subset called leftImg8bit, which contains 2975 images for training and 500 images for evaluation with high-quality pixel-level annotations from 50 different cities; consistent with previous work [40], the tightest rectangles of object mask will be used to obtain bounding-box annotation of 8 different object categories for training and evaluation.

- Foggy Cityscapes [32] is a synthetic foggy dataset which simulates fog on real scenes which automatically inherit the semantic annotations of their real, clear counterparts from Cityscapes. In particular, the experiment uses = 0.02, which corresponds approximately to the meteorological optical range of 150 m, to remain in line with previous work.

- Sim10k [44] is a synthetic dataset consisting of 10,000 images produced from the game Grand Theft Auto V, and is excellent for evaluating synthetic to real adaptation.

4.2. Implementation Details

4.3. Comparisons with State-of-the-Art Methods

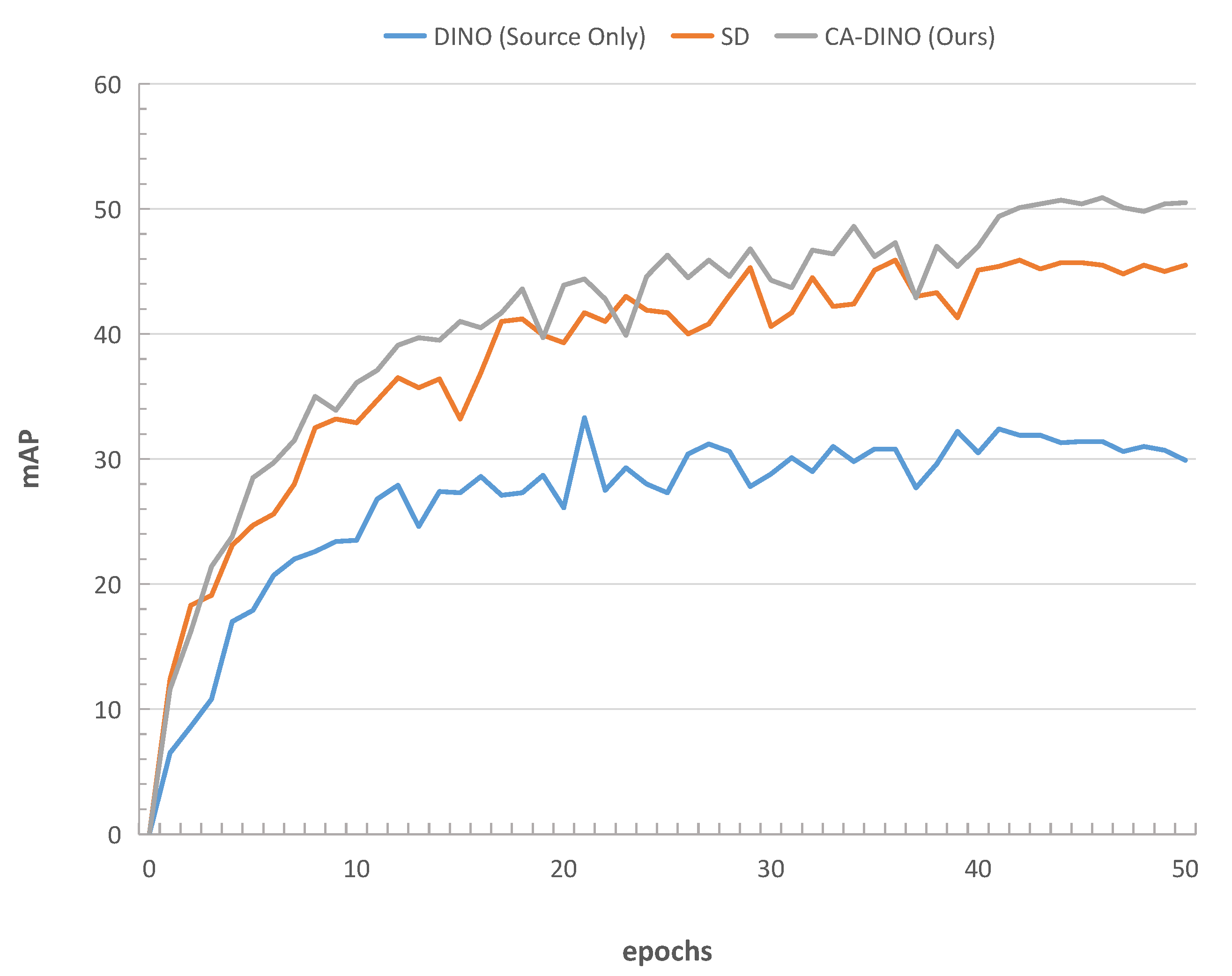

4.3.1. Normal to Foggy

4.3.2. Synthetic to Real

4.4. Ablation Study

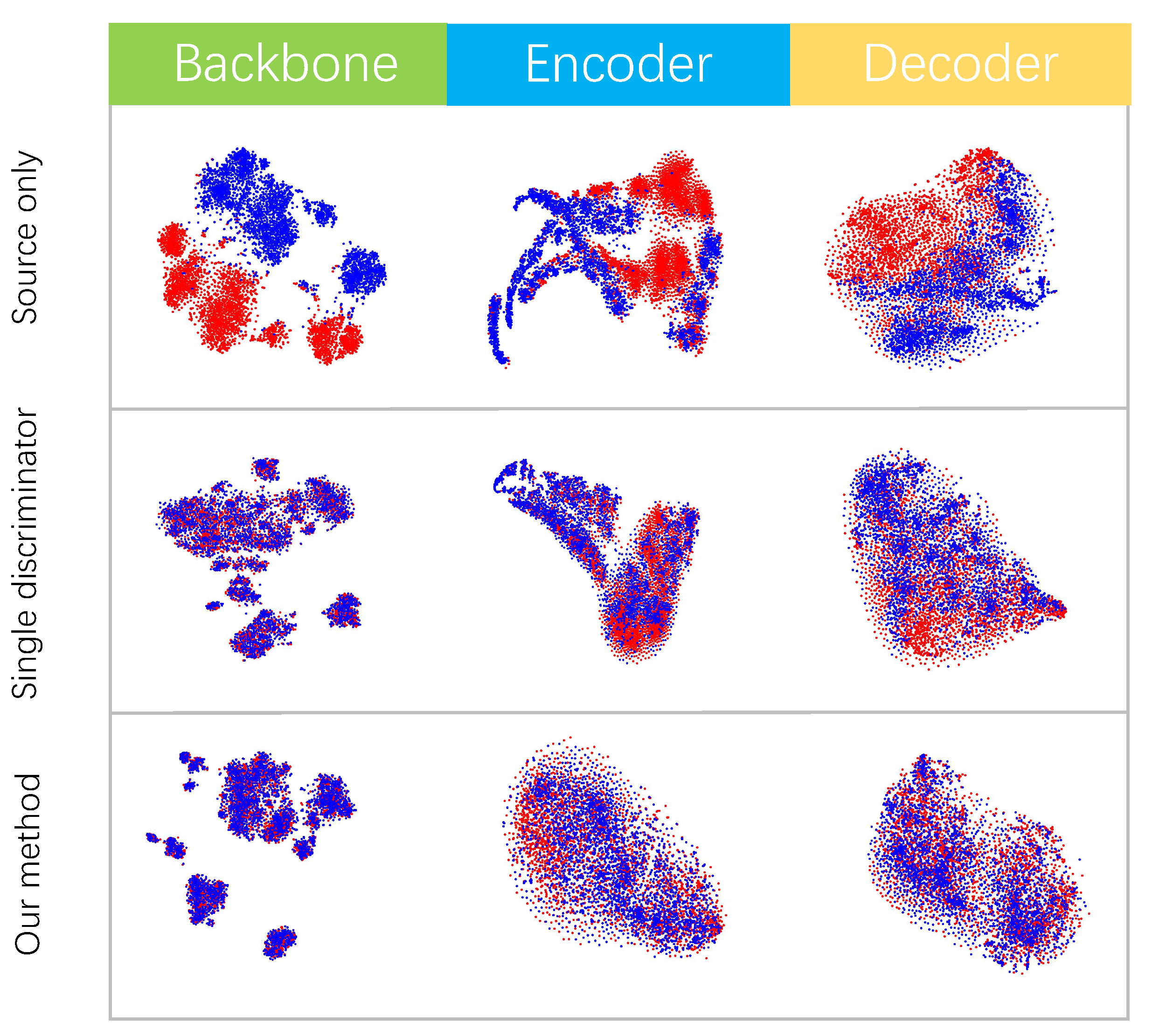

4.5. Visualization and Discussion

| Method | Date | Detector | Person | Rider | Car | Truck | Bus | Train | Mcycle | Bicycle | mAP |

|---|---|---|---|---|---|---|---|---|---|---|---|

| DAF [24] | 2018 | Faster RCNN | 29.2 | 40.4 | 43.4 | 19.7 | 38.3 | 28.5 | 23.7 | 32.7 | 32.0 |

| SWDA [23] | 2019 | Faster RCNN | 31.8 | 44.3 | 48.9 | 21.0 | 43.8 | 28.0 | 28.9 | 35.8 | 35.3 |

| SCDA [22] | 2019 | Faster RCNN | 33.8 | 42.1 | 52.1 | 26.8 | 42.5 | 26.5 | 29.2 | 34.5 | 35.9 |

| MTOR [21] | 2019 | Faster RCNN | 30.6 | 41.4 | 44.0 | 21.9 | 38.6 | 40.6 | 28.3 | 35.6 | 35.1 |

| MCAR [52] | 2020 | Faster RCNN | 32.0 | 42.1 | 43.9 | 31.3 | 44.1 | 43.4 | 37.4 | 36.6 | 38.8 |

| GPA [53] | 2020 | Faster RCNN | 32.9 | 46.7 | 54.1 | 24.7 | 45.7 | 41.1 | 32.4 | 38.7 | 39.5 |

| UMT [54] | 2021 | Faster RCNN | 33.0 | 46.7 | 48.6 | 34.1 | 56.5 | 46.8 | 30.4 | 37.3 | 41.7 |

| D-adapt [39] | 2022 | Faster RCNN | 40.8 | 47.1 | 57.5 | 33.5 | 46.9 | 41.4 | 33.6 | 43.0 | 43.0 |

| SA-YOLO [25] | 2022 | YOLOv5 | 36.2 | 41.8 | 50.2 | 29.9 | 45.6 | 29.5 | 30.4 | 35.2 | 37.4 |

| EPM [27] | 2020 | FCOS | 44.2 | 46.6 | 58.5 | 24.8 | 45.2 | 29.1 | 28.6 | 34.6 | 39.0 |

| KTNet [55] | 2021 | FCOS | 46.4 | 43.2 | 60.6 | 25.8 | 41.2 | 40.4 | 30.7 | 38.8 | 40.9 |

| SFA [40] | 2021 | Deformable DETR | 46.5 | 48.6 | 62.6 | 25.1 | 46.2 | 29.4 | 28.3 | 44.0 | 41.3 |

| OAA + OTA [56] | 2022 | Deformable DETR | 48.7 | 51.5 | 63.6 | 31.1 | 47.6 | 47.8 | 38.0 | 45.9 | 46.8 |

| CA-DINO (Ours) | 2022 | DINO | 54.5 | 55.6 | 69.1 | 36.2 | 57.8 | 42.8 | 38.3 | 50.1 | 50.5 |

| Method | Date | Detector | Car AP |

|---|---|---|---|

| DAF [24] | 2018 | Faster RCNN | 41.9 |

| SWDA [23] | 2019 | Faster RCNN | 44.6 |

| SCDA [22] | 2019 | Faster RCNN | 45.1 |

| MTOR [21] | 2019 | Faster RCNN | 46.6 |

| CR-DA [57] | 2020 | Faster RCNN | 43.1 |

| CR-SW [57] | 2020 | Faster RCNN | 46.2 |

| GPA [53] | 2020 | Faster RCNN | 47.6 |

| D-adapt [39] | 2022 | Faster RCNN | 49.3 |

| SA-YOLO [25] | 2022 | YOLOv5 | 42.6 |

| EPM [27] | 2020 | FCOS | 47.3 |

| KTNet [55] | 2021 | FCOS | 50.7 |

| SFA [40] | 2021 | Deformable DETR | 52.6 |

| CA-DINO(Ours) | 2022 | DINO | 54.7 |

| Method | Person | Rider | Car | Truck | Bus | Train | Mcycle | Bicycle | mAP |

|---|---|---|---|---|---|---|---|---|---|

| DINO [18] | 38.2 | 38.2 | 45.2 | 18.2 | 31.9 | 6.0 | 22.3 | 37.9 | 29.9 |

| +WROT | 43.0 | 46.6 | 58.4 | 18.7 | 32.2 | 11.3 | 23.3 | 38.3 | 34.0 |

| +SD +WROT | 51.1 | 52.6 | 64.0 | 26.4 | 51.1 | 36.0 | 35.5 | 47.4 | 45.5 |

| +cam-SD +WROT | 51.8 | 55.0 | 64.5 | 32.6 | 51.7 | 37.8 | 31.8 | 49.0 | 46.8 |

| +sam-SD +WROT | 52.0 | 52.9 | 63.8 | 27.1 | 51.2 | 43.9 | 32.5 | 48.0 | 46.4 |

| +AESD +WROT | 51.7 | 54.7 | 67.5 | 29.7 | 52.0 | 44.0 | 40.3 | 49.1 | 48.6 |

| +AEDD | 55.0 | 55.0 | 68.6 | 32.1 | 58.5 | 34.2 | 37.9 | 50.8 | 49.0 |

| +AEDD +WROT | 54.5 | 55.6 | 69.1 | 36.2 | 57.8 | 42.8 | 38.3 | 50.1 | 50.5 |

| oracle | 58.4 | 54.8 | 77.2 | 36.9 | 56.5 | 39.4 | 40.8 | 51.2 | 51.9 |

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhou, Y.; Wen, S.; Wang, D.; Meng, J.; Mu, J.; Irampaye, R. MobileYOLO: Real-Time Object Detection Algorithm in Autonomous Driving Scenarios. Sensors 2022, 22, 3349. [Google Scholar] [CrossRef] [PubMed]

- Ahmad, T.; Cavazza, M.; Matsuo, Y.; Prendinger, H. Detecting Human Actions in Drone Images Using YoloV5 and Stochastic Gradient Boosting. Sensors 2022, 22, 7020. [Google Scholar] [CrossRef]

- Wen, L.; Du, D.; Zhu, P.; Hu, Q.; Wang, Q.; Bo, L.; Lyu, S. Detection, tracking, and counting meets drones in crowds: A benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7812–7821. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159. [Google Scholar]

- Liu, S.; Li, F.; Zhang, H.; Yang, X.; Qi, X.; Su, H.; Zhu, J.; Zhang, L. DAB-DETR: Dynamic anchor boxes are better queries for DETR. arXiv 2022, arXiv:2201.12329. [Google Scholar]

- Li, F.; Zhang, H.; Liu, S.; Guo, J.; Ni, L.M.; Zhang, L. Dn-detr: Accelerate detr training by introducing query denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 13619–13627. [Google Scholar]

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755. [Google Scholar]

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef]

- Cai, Q.; Pan, Y.; Ngo, C.W.; Tian, X.; Duan, L.; Yao, T. Exploring object relation in mean teacher for cross-domain detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11457–11466. [Google Scholar]

- Zhu, X.; Pang, J.; Yang, C.; Shi, J.; Lin, D. Adapting object detectors via selective cross-domain alignment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 687–696. [Google Scholar]

- Saito, K.; Ushiku, Y.; Harada, T.; Saenko, K. Strong-weak distribution alignment for adaptive object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6956–6965. [Google Scholar]

- Chen, Y.; Li, W.; Sakaridis, C.; Dai, D.; Van Gool, L. Domain adaptive faster r-cnn for object detection in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3339–3348. [Google Scholar]

- Liang, H.; Tong, Y.; Zhang, Q. Spatial Alignment for Unsupervised Domain Adaptive Single-Stage Object Detection. Sensors 2022, 22, 3253. [Google Scholar] [CrossRef] [PubMed]

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636. [Google Scholar]

- Hsu, C.C.; Tsai, Y.H.; Lin, Y.Y.; Yang, M.H. Every pixel matters: Center-aware feature alignment for domain adaptive object detector. In Proceedings of the European Conference on Computer Vision. Springer, Glasgow, UK, 23–28 August 2020; pp. 733–748. [Google Scholar]

- Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 1180–1189. [Google Scholar]

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223. [Google Scholar]

- Sakaridis, C.; Dai, D.; Van Gool, L. Semantic foggy scene understanding with synthetic data. Int. J. Comput. Vis. 2018, 126, 973–992. [Google Scholar] [CrossRef]

- Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855. [Google Scholar]

- Dai, X.; Chen, Y.; Yang, J.; Zhang, P.; Yuan, L.; Zhang, L. Dynamic detr: End-to-end object detection with dynamic attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2988–2997. [Google Scholar]

- Meng, D.; Chen, X.; Fan, Z.; Zeng, G.; Li, H.; Yuan, Y.; Sun, L.; Wang, J. Conditional detr for fast training convergence. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3651–3660. [Google Scholar]

- Dai, Z.; Cai, B.; Lin, Y.; Chen, J. Up-detr: Unsupervised pre-training for object detection with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1601–1610. [Google Scholar]

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Jiang, J.; Chen, B.; Wang, J.; Long, M. Decoupled Adaptation for Cross-Domain Object Detection. arXiv 2021, arXiv:2110.02578. [Google Scholar]

- Wang, W.; Cao, Y.; Zhang, J.; He, F.; Zha, Z.J.; Wen, Y.; Tao, D. Exploring sequence feature alignment for domain adaptive detection transformers. In Proceedings of the 29th ACM International Conference on Multimedia, Vitual, 20–24 October 2021; pp. 1730–1738. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 2030–2096. [Google Scholar]

- Sun, B.; Saenko, K. Deep coral: Correlation alignment for deep domain adaptation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 443–450. [Google Scholar]

- Johnson-Roberson, M.; Barto, C.; Mehta, R.; Sridhar, S.N.; Rosaen, K.; Vasudevan, R. Driving in the matrix: Can virtual worlds replace human-generated annotations for real world tasks? arXiv 2016, arXiv:1610.01983. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101. [Google Scholar]

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929. [Google Scholar]

- Huang, P.; Han, J.; Liu, N.; Ren, J.; Zhang, D. Scribble-supervised video object segmentation. IEEE/CAA J. Autom. Sin. 2021, 9, 339–353. [Google Scholar] [CrossRef]

- Zhang, D.; Huang, G.; Zhang, Q.; Han, J.; Han, J.; Yu, Y. Cross-modality deep feature learning for brain tumor segmentation. Pattern Recognit. 2021, 110, 107562. [Google Scholar] [CrossRef]

- Hoyer, L.; Dai, D.; Van Gool, L. Daformer: Improving network architectures and training strategies for domain-adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 9924–9935. [Google Scholar]

- Zhao, Z.; Guo, Y.; Shen, H.; Ye, J. Adaptive object detection with dual multi-label prediction. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 54–69. [Google Scholar]

- Xu, M.H.; Wang, H.; Ni, B.B.; Tian, Q.; Zhang, W.J. Cross-domain detection via graph-induced prototype alignment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12355–12364. [Google Scholar]

- Deng, J.; Li, W.; Chen, Y.; Duan, L. Unbiased mean teacher for cross-domain object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4091–4101. [Google Scholar]

- Tian, K.; Zhang, C.; Wang, Y.; Xiang, S.; Pan, C. Knowledge mining and transferring for domain adaptive object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9133–9142. [Google Scholar]

- Gong, K.; Li, S.; Li, S.; Zhang, R.; Liu, C.H.; Chen, Q. Improving Transferability for Domain Adaptive Detection Transformers. arXiv 2022, arXiv:2204.14195. [Google Scholar]

- Xu, C.D.; Zhao, X.R.; Jin, X.; Wei, X.S. Exploring categorical regularization for domain adaptive object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11724–11733. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Geng, H.; Jiang, J.; Shen, J.; Hou, M. Cascading Alignment for Unsupervised Domain-Adaptive DETR with Improved DeNoising Anchor Boxes. Sensors 2022, 22, 9629. https://doi.org/10.3390/s22249629

Geng H, Jiang J, Shen J, Hou M. Cascading Alignment for Unsupervised Domain-Adaptive DETR with Improved DeNoising Anchor Boxes. Sensors. 2022; 22(24):9629. https://doi.org/10.3390/s22249629

Chicago/Turabian StyleGeng, Huantong, Jun Jiang, Junye Shen, and Mengmeng Hou. 2022. "Cascading Alignment for Unsupervised Domain-Adaptive DETR with Improved DeNoising Anchor Boxes" Sensors 22, no. 24: 9629. https://doi.org/10.3390/s22249629

APA StyleGeng, H., Jiang, J., Shen, J., & Hou, M. (2022). Cascading Alignment for Unsupervised Domain-Adaptive DETR with Improved DeNoising Anchor Boxes. Sensors, 22(24), 9629. https://doi.org/10.3390/s22249629