Abstract

Several behavioural problems exist in office environments, including resource use, sedentary behaviour, cognitive/multitasking, and social media. These behavioural problems have been solved through subjective or objective techniques. Within objective techniques, behavioural modelling in smart environments (SEs) can allow the adequate provision of services to users of SEs with inputs from user modelling. The effectiveness of current behavioural models relative to user-specific preferences is unclear. This study introduces a new approach to behavioural modelling in smart environments by illustrating how human behaviours can be effectively modelled from user models in SEs. To achieve this aim, a new behavioural model, the Positive Behaviour Change (PBC) Model, was developed and evaluated based on the guidelines from the Design Science Research Methodology. The PBC Model emphasises the importance of using user-specific information within the user model for behavioural modelling. The PBC model comprised the SE, the user model, the behaviour model, classification, and intervention components. The model was evaluated using a naturalistic-summative evaluation through experimentation using office workers. The study contributed to the knowledge base of behavioural modelling by providing a new dimension to behavioural modelling by incorporating the user model. The results from the experiment revealed that behavioural patterns could be extracted from user models, behaviours can be classified and quantified, and changes can be detected in behaviours, which will aid the proper identification of the intervention to provide for users with or without behavioural problems in smart environments.

1. Introduction

The introduction and implementation of the Internet of Things (IoT) have allowed connectedness between physical and virtual entities. IoT is a medium where daily devices become smarter, processing becomes more intelligent, and everyday communication becomes clearer [1]. IoT is a large-scale architecture for the information society, allowing state-of-the-art services by connecting virtual and physical things built on existing, developing and connectable information and communication technologies [2]. The IoT concept has been known for its impact in providing many solutions in several domains [1]. From Gartner’s report, more than 25 billion devices will be connected through the IoT by the end of 2021 [3], and there will be an increase in 2022 [4]. In the year 2020, with the outbreak of COVID-19, the Internet of Behaviour (IoB) emerged as office workers resumed work after the lockdown. IoT devices increased in office environments to monitor workers’ compliance with COVID-19 protocols [5]. IoT paved the way for providing solutions to several behavioural problems by utilising data to modify workers’ behaviours.

A systematic review of two studies revealed that 54% of adults in Slovakia have behavioural problems with healthy food consumption and physical activity [6]. Three studies in Slovakia showed that 38% of the total population has behavioural issues with vegetable consumption and smoking. The presence of alcohol misuse and smoking was identified in adults, with a prevalence ratio of 2.89. These statistics were significantly higher in the US, as 10% of young adults (18–25) identified behavioural problems, such as alcohol misuse, in 2017. Petersen [7] reported that 49% of young adults in South Africa were exposed to alcohol abuse before the age of 18, leading to behavioural problems in driving, eating, and violence. There is a need to monitor the behaviour of individuals to identify such issues because of their high incidence and lifelong effects on people, and these are often reflected in many domains, for example, health, resource usage, and office work productivity.

Behavioural modelling has been used to provide solutions to behavioural problems. Behavioural modelling refers to the observation of an individual in certain situations for good and poor behaviours, which generally involves behavioural data collection [8]. The description and manipulation of human behaviour are fundamental to many domains because they help to identify and understand good behaviours and to ensure their sustainability over time. They also identify those behaviours eliciting poor behavioural patterns in people and how these poor behavioural patterns can be modified through discovering, investigating, monitoring, and changing human behavioural patterns [9]. Behavioural modelling occurs in two aspects: the reinforcement of good behaviours, if good behavioural patterns are observed, through the development of sustainable behaviours over a long period; and the provision of adequate interventions for modification, if poor behavioural patterns are observed. These two aspects justify behavioural modelling and can be applied to several domains, for example, the office domain, which is the focus of this study.

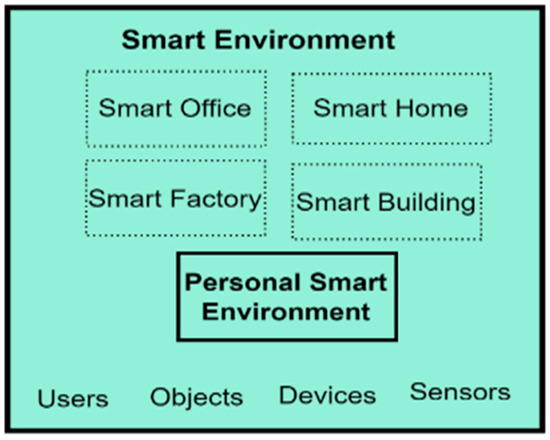

In behavioural modelling using an SE, there are essential concepts that require proper understanding. These are activity, behaviour, good behaviour, and poor behaviour. Fatima [10] defined activity as an action or task performed by a user at a time. An SE is an environment equipped with smart devices such as sensors and sensor networks interconnected through the IoT to provide its users with assistance, maintenance, and services [11]. SEs can be implemented in any form, depending on the purpose for which they were designed. This can be a smart home, smart office, smart city, or a personal smart environment, a group of services accessible within an active space of linked devices, owned and administered by a single user.

Guez [12] defined behaviour as the means by which individuals act, conduct, or express themselves. Pate [13] described behaviour as a reaction to an object that is directly or indirectly observable. Direct observation refers to studying an entity in a physical environment, while indirect observation refers to making decisions and describing a result. These definitions describe behaviour with respect to objects, people, and social norms or how an individual handles and relates to other individuals and entities within an environment. This study will adopt the definitions by [14,15]. Wallace [16] defined behaviour as the recognisable activities of an individual that can involve action and reaction to stimulation. Soto-Mendoza [17] defined behaviour as a recurring series of activities relative to the frequency of recurrence over an extended period.

Good behaviours are behaviours that are generally accepted by individuals or society. They refer to the acceptable conduct of an individual’s emotional, physical, and cognitive processes in a context or environment. A collection of good behaviours generates good behavioural patterns. Good behavioural patterns are equivalent to normal behaviour patterns as they refer to behaviours consistent with a person. The behaviours can vary for an individual, context, location, time, and societal norms [18]. Poor behavioural patterns are studied in contrast to good behaviour patterns and, in most cases, lead to behavioural problems. Behavioural problems in people have created many difficulties in health, home, and offices. However, this study will explore low productivity as a significant behavioural problem in offices that needs behavioural modelling. Within office environments, multitasking/interruption and social media are the major factors that affect people’s work productivity. These are behavioural problems because many individuals simultaneously engage in many activities, leading to slow task progress. Workers tend to focus on one task but are interrupted by another task or a colleague. As Pataki-Bitto [19] explained, workplace interruptions have many effects and types. The results can be time loss, error, emotional drain, or mental workload.

These behavioural problems have been previously solved through traditional monitoring of individuals via self-reporting [20] and behavioural modelling using smart environments. This study will focus on the latter because of its capability to minimise biases common to the former. There are many studies on behavioural modelling using smart homes, and most of these studies are data-driven in nature. For example, Lazzari [21] modelled energy consumption behaviours through a smart meter from a smart environment by incorporating XGBoost for classification and ANN for consumption prediction. Alexiou et al. [22] modelled the sedentary behaviour of workers in a smart office using SVM, Naïve Bayes, CNN, and DNN algorithms. Jalal et al. [23] modelled the movement behaviour of occupants in a smart environment through signal denoising and linear SVM for classification. From all these behavioural studies, user modelling remains an open area for research because current behavioural modelling studies do not consider important user aspects. A user model acts as an information source about a user and comprises different assumptions about the behavioural aspects of the user. In the context of behavioural problems, a good user model provides an adequate understanding of human activities and provides insights into why an individual engages in an activity in a particular way, leading to the construction of an effective behavioural model.

In terms of intervention provision in smart environments, Ramallo-González et al. [24] developed educational interventions to minimise excessive energy consumption in a personalised and timed manner through the EnergyPlus software 22.2.0. LeBaron [25] developed the BESI-C system to collect behavioural and physiological data from a smart home and to provide interventions to minimise distress and improve self-efficacy in pain management for cancer patients. Two limitations were observed from these interventions in smart environments: (i) they do not design and deliver messages using preferences, likes, abilities, and disabilities specified within a user model, and (ii) they do not classify observed changes in behaviour before presenting the intervention. These limitations are the focus of the study.

The study will incorporate a new approach to behavioural modelling and intervention through the introduction of a novel behavioural model (PBC model). The PBC model considers, as input, important user aspects within a user model. Additionally, the PBC model classifies the observed changes before intervention provision. Based on the identified limitations, the questions that the study aims to answer are: RQ1: How can a behavioural model be developed to support positive behaviour change in a personal smart environment? RQ2: How can a user model be extracted from a dataset? RQ3: How can behaviours be modelled from user models? RQ4: How can a behavioural model classify human behaviours in personal smart environments? How can behaviour change be detected in personal smart environments? RQ5: How effective is the model in representing behaviours?

The study answered these research questions through the Design Science Research Methodology. The PBC Model was assessed through a naturalistic evaluation, which started with personal smart environment setup, daily activity engagements, data collection, data preprocessing, feature extraction, activity modelling, behavioural modelling, change detection, and change analysis. The significant contributions of this research are the formulation of the PBC model and the incorporation of the user model in behavioural modelling. The study is structured into the following sections: Section 2 will discuss related work in behavioural modelling using SEs. Section 3 will discuss the methodology; the associated theories will be discussed in Section 4. Section 5 will discuss the PBC model, Section 6 will discuss suitable application areas for the PBC model, while Section 7 describes the evaluation of the PBC model. The change-based interventions will be presented via a future publication.

2. Related Work

The section will highlight existing works in user and behavioural models, sequential pattern mining, classification, change detection, and work productivity. These aspects are the techniques that will be incorporated into the study and are indicated in subsequent sections.

2.1. User Modelling

A user model is a source of information with many assumptions about the important behaviour of a system user [26]. In SEs, Gregor et al. [27] classified users into three categories in the disability spectrum. These are fit older people with no disability, older fragile people with few disabilities, and older people with disabilities that impair their functioning capabilities. These people depend on others to function well. User capabilities in this context refer to memory, learning, sensory, physical, elderly experience, and the environment. Additionally, as people age, there is a change in ability, including a decrease in sensory, cognitive, and physical functions, making user modelling a critical issue [27]. However, this categorisation is limited to the elderly; there is a need for a categorisation that will cater for all age groups.

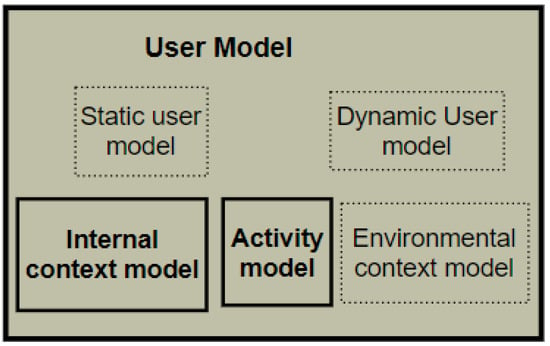

The proliferation of smart technologies has allowed the incorporation of the internet into our daily activities. User modelling in this era includes the description of the user’s context [28], such as observing a user’s attitude. With IoT, users can be understood differently through smart devices. Users’ internal states can be read, analysed, understood, and stored for numerous purposes [29]. As a result, user modelling goals have shifted from passive recognition to active creation because of the accessible collection of cognitive and behavioural information [30]. In the context of behavioural problems and monitoring, “a user model provides an adequate understanding of how users engage in behaviour, reasons for engaging in a behaviour in a specific way; and facilitates the building of an effective behavioural model.” For this study, the Generic User Model, which contains five sub-models, was proposed in a previous paper [31]. These sub-models are: (1) the static user model, for unchanging information, for example, personal and role information; (2) the dynamic user model for changing information such as preferences, interests, likes, and dislikes; (3) the internal context information for health aspects of a user, such as physiology, emotion, cognitive and psychological aspects; (4) the activity model for relevant information that can describe a user or worker’s activities; and (5) the environmental context model for describing the physical features of a person’s environment and everything external to the person, such as the objects, sensors, and devices present. The current study will incorporate the user model presented in [32] in modelling human behaviour for the adequate interpretation of behaviour.
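To make the structure of such a user model concrete, the sub-models enumerated above can be sketched as plain data classes. This is only an illustrative sketch: every class name, field name, and the `activity_sequence` helper are assumptions introduced here and are not taken from the Generic User Model in [31].

```python
from dataclasses import dataclass, field

# All class and field names below are hypothetical illustrations.

@dataclass
class StaticUserModel:
    """Unchanging information, e.g. personal and role information."""
    name: str
    role: str

@dataclass
class DynamicUserModel:
    """Changing information such as preferences, likes, and dislikes."""
    preferences: dict = field(default_factory=dict)
    likes: list = field(default_factory=list)
    dislikes: list = field(default_factory=list)

@dataclass
class InternalContext:
    """Health-related aspects such as physiology and emotion."""
    physiology: dict = field(default_factory=dict)
    emotion: str = "unknown"

@dataclass
class ActivityRecord:
    """One activity performed by the user at a given time."""
    activity: str
    start_minute: int       # minutes since midnight
    duration_minutes: int

@dataclass
class EnvironmentalContext:
    """Objects, sensors, and devices external to the user."""
    devices: list = field(default_factory=list)
    sensors: list = field(default_factory=list)

@dataclass
class GenericUserModel:
    static: StaticUserModel
    dynamic: DynamicUserModel = field(default_factory=DynamicUserModel)
    internal: InternalContext = field(default_factory=InternalContext)
    activities: list = field(default_factory=list)
    environment: EnvironmentalContext = field(default_factory=EnvironmentalContext)

    def activity_sequence(self):
        """Return activity names ordered by start time."""
        ordered = sorted(self.activities, key=lambda a: a.start_minute)
        return [a.activity for a in ordered]
```

An ordered activity sequence of this kind is the sort of input that a sequential pattern miner could consume when behaviours are derived from the user model.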

2.2. Behavioural Modelling

Behavioural models are developed to describe human behaviours to assess performance and operation in a specific context [33]. Behavioural modelling helps to improve the functionality of SEs from a non-interactive environment to an interactive and real-time environment based on typical human control factors, thereby enabling decision-making when necessary. This includes people in the control circle because people are self-deterministic and love to be in charge of everything around them [34].

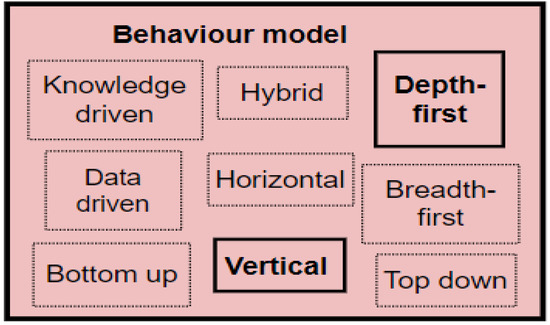

In modelling human behaviour within SEs, a large amount of data is needed to deduce, reason, model, and predict people’s behaviours. Human behavioural modelling is challenging because no complete physical model is available to describe possible behaviours. This is where data, knowledge, and hybrid modelling techniques come in. However, no single method or model fits all cases [34]. A behavioural model can be developed top-down by establishing associations between behaviours and tasks using knowledge-driven techniques. Alternatively, a behavioural model can follow a bottom-up approach using data-driven techniques, or combine both through hybrid modelling.

Regardless of the technique used, the primary goal is to generate the required outcomes [35] appropriately. These outcomes are the identification of good and poor behaviours and the actions/activities that create these outcomes. Therefore, if a behavioural model can recognise and predict human behaviour from a user model, then the patterns in the behavioural model should be classifiable for possible intervention provision. Different scholars have applied several techniques, but there are behavioural factors to consider for modelling. These factors are the contexts and sequential associations among human activities. Contextual factors include temporal associations and location, while activity associations refer to how people relate one activity to another [36]. These aspects are detailed in user modelling [31].

Tsai [37] listed three crucial steps for modelling human behaviours in SEs. These steps are knowledge discovery, activity extraction, and change classification. These three aspects must be incorporated to generate an effective behavioural model. Since human behaviour revolves around daily activities, knowledge discovery of activities becomes crucial, and this can be achieved through data, knowledge, or hybrid approaches. Irrespective of the learning technique, the goal is to differentiate good behavioural patterns from poor ones through activity extraction. Activity extraction deals with associating knowledge about how a user interacts with several activities in an SE to infer behavioural patterns.

Chen, Hoey, and Nugent [38] described a complex procedure for identifying people’s activities using SEs. The steps are the deployment of sensors in the environment for monitoring workers’ behaviour; the collection, storage, and processing of sensed information for activity representation at a suitable level; the creation of computational models from sensed data; and the development and use of algorithms to infer activities. However, two issues arose from this procedure: (i) the absence of behavioural modelling inferences from the user model and (ii) the lack of behavioural classification for proper intervention/service provision. Langensiepen, Lotfi, and Puteh [39] followed this procedure to provide a model for office workers through data collection, data mining for recognising different worker features, and the use of recognised worker’s activities for building a worker profile, which was used to summarise worker activities and to adjust environmental conditions to suit a worker. However, the worker’s model did not allow workers to change environmental conditions individually. Additionally, the worker’s model did not enable workers to adjust their resource usage in the environment.

The behavioural modelling approach given by [37] is similar to the three behavioural modelling steps stipulated by [40]. Still, the significant difference between the two processes is the techniques used in each stage. Yu, Du, Yi, Wang, and Guo [41] specified three essential steps: data capture, modelling/analysis, and evaluation, which can be conducted using sensing devices, social media, and cameras. Modelling and analysis involve making certain assumptions about the data captured and applying these assumptions to understand human behaviour through an inference algorithm. However, a significant issue of this step is an inadequate theoretical demonstration that the model can understand human behaviour with the available data. Lastly, there was no performance evaluation of the model through universally accepted benchmarks; for example, metrics relating to efficient space and time can be used to evaluate a behavioural model [40]. This study will combine these approaches in modelling behaviour by developing and evaluating a behavioural model, specifically, the incorporation of sequential pattern mining for extracting behavioural models from user models in a subsequent section.

2.3. Sequential Pattern Mining

Sequential pattern mining involves searching and extracting patterns from a database. Sequential pattern mining algorithms have been classified based on their search techniques, which can be breadth-first or depth-first [42], and their database format, which can be vertical or horizontal. Breadth-first algorithms use a level-wise approach by scanning a database to identify frequent sequences with a single item, then to find sequences with two items, and so on until there are no more frequent sequences. The search starts from the root node and moves level by level until it reaches the leaf nodes [42]. Examples include Generalized Sequential Patterns (GSP), Prefix-Tree for Sequential Patterns (PSP), and the Apriori algorithm. Alibasa [43] used the GSP algorithm to mine frequent digital context patterns from digital technology usage logs for the mood prediction of workers. Depth-first algorithms identify recurring single patterns in a search space by starting with single items and recursively extending the initial frequent single patterns to create larger patterns. When a pattern cannot be extended, the algorithm backtracks to identify other patterns using other sequences. Depth-first algorithms start their search from the first node and proceed to the next node in the same path until they reach the end node of the path [42]. Examples include Sequential Pattern Discovery using Equivalence classes (SPADE), Prefix-projected Sequential pattern mining (PrefixSpan), Sequential Pattern Mining (SPAM), Last Position Induction (LAPIN), and the co-occurrence map variants CM-SPAM and CM-SPADE.

Based on the database format, algorithms in the horizontal family use a format where each row has a sequence identifier and an itemset list. For example, the Apriori and PrefixSpan algorithms use the horizontal format for their mining operations. The vertical family algorithms use a vertical database format where sequence identifiers store patterns and the location of the pattern in memory. Such examples include SPADE, Prism, and CM-SPADE [44]. CM-SPADE was used in this research because it combines a vertical database format with a depth-first search technique, which allows fast processing. The CM-SPADE algorithm is an extension of SPADE: SPADE uses an equivalence class and sublattice decomposition to split the search space into several fragments. Each fragment then fits into the memory and is processed independently [45]. CM-SPADE retains the basic implementation of SPADE and adds a co-occurrence map (CMAP) structure, which maintains co-occurrence information for a database and filters out infrequent candidates early to speed up the mining process. Additional parameters to the CM-SPADE algorithm are the support and window size values. These parameters are used to mine frequent item sets [46]. CM-SPADE was reported to be faster than previous sequential pattern mining algorithms. The strengths of CM-SPADE are the early pruning of candidate patterns, better performance because of its low memory requirement, a more focused search space, and less candidate pattern generation than the breadth-first algorithms. In SEs, Suryadevara [47] demonstrated the effectiveness of the CM-SPADE algorithm in extracting residents’ behavioural patterns relative to their movement. The incorporation of CM-SPADE in this study is discussed in Section 8.4.
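The combination of a vertical database format, depth-first extension, and co-occurrence pruning can be illustrated with a small sketch. It is a simplified illustration in the spirit of CM-SPADE and not the published algorithm: it assumes single-item events, ignores the window size parameter, and prunes only on the last item of a pattern. The function names and the activity labels are hypothetical.

```python
from bisect import bisect_right
from collections import defaultdict

def build_vertical(sequences):
    """Vertical format: item -> {sequence id -> sorted positions of the item}."""
    vertical = defaultdict(lambda: defaultdict(list))
    for sid, seq in enumerate(sequences):
        for pos, item in enumerate(seq):
            vertical[item][sid].append(pos)
    return vertical

def build_cmap(sequences, min_support):
    """Co-occurrence map: item a -> items that follow a in >= min_support sequences."""
    seen = defaultdict(set)
    for sid, seq in enumerate(sequences):
        for i, a in enumerate(seq):
            for b in set(seq[i + 1:]):
                seen[(a, b)].add(sid)
    cmap = defaultdict(set)
    for (a, b), sids in seen.items():
        if len(sids) >= min_support:
            cmap[a].add(b)
    return cmap

def mine(sequences, min_support):
    """Return {pattern tuple: support} for all frequent sequential patterns."""
    vertical = build_vertical(sequences)
    cmap = build_cmap(sequences, min_support)
    frequent = {}

    def extend(pattern, ends):
        # ends maps sequence id -> earliest position where `pattern` can end
        frequent[pattern] = len(ends)
        # Early pruning: only try items known to follow the last item often enough
        for item in sorted(cmap[pattern[-1]]):
            new_ends = {}
            for sid, end in ends.items():
                positions = vertical[item].get(sid, [])
                k = bisect_right(positions, end)  # first occurrence after `end`
                if k < len(positions):
                    new_ends[sid] = positions[k]
            if len(new_ends) >= min_support:
                extend(pattern + (item,), new_ends)  # depth-first recursion

    for item in sorted(vertical):
        ends = {sid: pos[0] for sid, pos in vertical[item].items()}
        if len(ends) >= min_support:
            extend((item,), ends)
    return frequent

# Hypothetical daily activity sequences for one worker
days = [
    ["email", "code", "break", "code"],
    ["email", "code", "social", "code"],
    ["code", "break", "email"],
]
patterns = mine(days, min_support=2)
```

With a minimum support of 2, this toy input yields patterns such as `("email", "code", "code")`, while `("social",)` is discarded because it occurs on one day only.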

2.4. Classification

Behavioural pattern classification is often conducted via data-driven models. Bakar, Ghayvat, Hasanm, and Mukhopadhyay [48] classified data-driven models into supervised and unsupervised models. Supervised data models use the controlled learning approach, where labelled training data are made available for modelling. Examples include linear regression, Random Forest, Neural networks, and SVM [49]. These algorithms work by taking a known input dataset with identified outputs using trained algorithms to generate a reliable output prediction for a different dataset [50]. For the current study, Random Forest was selected as a suitable classifier for behavioural patterns.

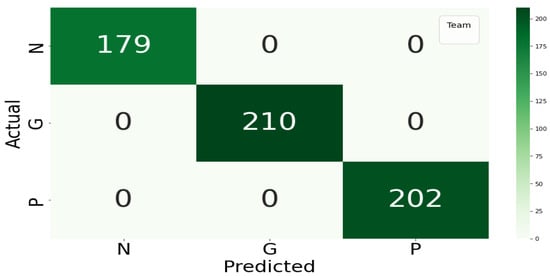

The Random Forest algorithm belongs to supervised machine learning techniques. Random Forest is a hierarchical assembly of classifiers with the decision tree structure. Random Forest classifies datasets using a simple fixed probability to choose the most important feature during a classification task [51]. Random Forest reduces overfitting by merging several overfit evaluators to become ensemble learners. The behavioural pattern classification in this study is a multi-class classification problem where a behavioural pattern is classified into one of good, poor, or neutral. The application of Random Forest for behavioural pattern classification was motivated by the work of Khadse [52], where the authors incorporated the Random Forest algorithm for multi-class classification of an IoT sensor dataset with an accuracy of 91%. Furthermore, Alibasa et al. [43] incorporated Random Forest to classify digital behavioural patterns into mood classes with more than 75% accuracy.
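The ensemble idea described above (bootstrap samples, a random feature subset at each split, and a majority vote over trees) can be sketched in a few lines of plain Python. This is a minimal teaching sketch under stated assumptions, not the classifier configuration used in the study: the two features, the toy data, the tree depth, and all function names are hypothetical.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def build_tree(rows, labels, rng, n_features, depth=0, max_depth=5):
    """Grow one decision tree; leaves are labels, nodes are (feature, threshold, left, right)."""
    if len(set(labels)) == 1 or depth == max_depth:
        return majority(labels)
    best = None
    # Random feature subset at every split
    for f in rng.sample(range(len(rows[0])), n_features):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if left and right:
                score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
                if best is None or score < best[0]:
                    best = (score, f, t)
    if best is None:
        return majority(labels)
    _, f, t = best
    lo = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    hi = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in lo], [y for _, y in lo], rng, n_features, depth + 1, max_depth),
            build_tree([r for r, _ in hi], [y for _, y in hi], rng, n_features, depth + 1, max_depth))

def predict_tree(node, row):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node

def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    n_features = max(1, int(len(rows[0]) ** 0.5))
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # bootstrap sample
        forest.append(build_tree([rows[i] for i in idx], [labels[i] for i in idx], rng, n_features))
    return forest

def predict(forest, row):
    """Majority vote over all trees."""
    return majority([predict_tree(tree, row) for tree in forest])

# Hypothetical features: (focused minutes per hour, interruptions per hour)
X = [(50, 1), (55, 2), (60, 0), (30, 5), (28, 6), (32, 4), (5, 12), (8, 10), (10, 11)]
y = ["good"] * 3 + ["neutral"] * 3 + ["poor"] * 3
forest = fit_forest(X, y)
print(predict(forest, (60, 0)), predict(forest, (5, 12)))
```

In practice a maintained library implementation would normally be preferred; the sketch only shows why averaging many decorrelated trees dampens the overfitting of any single tree.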

2.5. Change Detection

Change detection has been carried out via machine learning techniques, which can be offline or online. Offline change detection involves the use of historical data for change detection, and because of the requirement for a large dataset, a lengthy training period is required [53]. Offline techniques work by extracting models that describe the data and using these models to set thresholds for normal behaviour. The underlying assumption is that a dataset of a particular length is available. The goal is to ascertain a change in the series only after the data samples have been collected [15]. A significant limitation of offline techniques is dimensionality. As the data dimension increases, efficiency drops. Most real-world problems require that the change be detected in real time, immediately or before a change occurs. Therefore, there is a need for unsupervised techniques. Examples of offline change detectors include Kernel Principal Component Analysis, PELT search, outlier Dirichlet mixture model, binary segmentation, dynamic programming, one-class SVM, affinity propagation, k-means clustering, and the window-based search method [54].

Online change detection uses incremental learning, in which only data points within a window are used. In online detection, change is detected as new data streams continuously arrive. Online detectors run synchronously on a data stream by processing and identifying a change as soon as it occurs. As new data points arrive, online change detectors incorporate the new data points through knowledge retention rates for balancing previous and fresh data. With the new data points, new windows are generated and used. New window generation is usually accomplished by calculating the distribution’s probability/thresholds for the current run and updating the probability after another data stream arrives. Online change detectors can capture changes in real time because the probabilities/thresholds, which differentiate normal from change, can be frequently updated. When a change occurs, the probability reduces to zero [55]. Another way of detecting a change through online detectors is by comparing the most recent previous set and the next future set to decide whether there is a change [56].
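The window comparison described in the last sentence can be sketched as follows. This is an assumed, simplified detector for illustration only: it compares the mean of the newest window with the mean of the window just before it, scaled by the spread of the earlier window. The function name, the window size, and the threshold are hypothetical and are not taken from [55] or [56].

```python
from collections import deque
from statistics import mean, pstdev

def detect_changes(stream, window=5, threshold=3.0):
    """Return the indices at which the newest window departs from the previous one."""
    reference = deque(maxlen=window)  # most recent previous set
    current = deque(maxlen=window)    # newest set
    changes = []
    for i, x in enumerate(stream):
        if len(current) == window:
            reference.append(current[0])  # oldest current point ages into the reference
        current.append(x)
        if len(reference) == window:
            spread = pstdev(reference) or 1e-9  # guard against a constant reference
            if abs(mean(current) - mean(reference)) / spread > threshold:
                changes.append(i)
                # Restart both windows so the new regime becomes the baseline
                reference.clear()
                current.clear()
    return changes

# Hypothetical productivity scores arriving one at a time
scores = [10, 11, 10, 9, 10, 11, 30, 31, 29, 30, 31, 30]
print(detect_changes(scores, window=3, threshold=3.0))  # prints [6]
```

Only the points inside the two windows are kept in memory, which reflects the incremental character of online detection.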

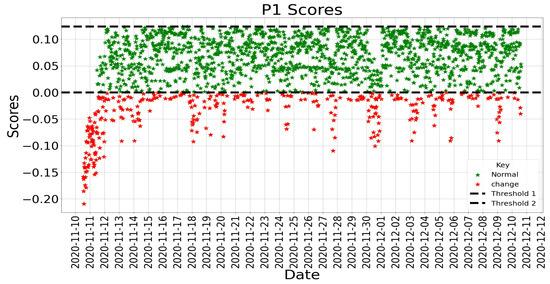

Online change detectors have received much attention and have been adopted because of their ability to save computation cost, time, and storage [15], thereby making online learners suitable for change detection. However, they are required to be computationally fast and are challenging to modify. Additionally, noise or unrelated data hinder online change detectors during training [53]. Online techniques include Self-Organising Maps (SOM) [57], bilateral PCA [58], Incremental Local Outlier Factor (LOF) [59], global LOF with local subspace outlier detection, Markov Chain modelling [60], K-means clustering [61], Single-Layer Feed-Forward Neural Network (SLFN) [57], Bayesian change point detection [62], Kernel Density Estimation (KDE) PCA [63], Generalised Likelihood Ratio Test (GLRT) [64], one-class support vector machine [65], and Isolation Forest [66]. This study used the Isolation Forest to detect productivity changes based on strength.

Isolation Forest is an ensemble approach used to identify changes/anomalies. It starts by selecting a random feature and then a random split value between the highest and the lowest values of that feature to partition the samples. This process continues until the samples are isolated. An Isolation Forest is built from several isolation trees, each partitioned over several features. The number of divisions required to separate a sample is the path length from a root to a leaf [67]. Isolation Forest was established on Extra Trees [68], where each separation is random. A sample close to the root (i.e., short path length) can be distinguished effortlessly and is easier to separate than samples closer to the leaves. Changes/anomalies are therefore expected to have a smaller mean path length than normal samples. A deep sample close to the leaves will have a score close to 0. A shallow sample close to the root will have a score close to 1 [69]. The selection of the Isolation Forest algorithm for detecting changes in productivity was motivated by [70]. Isolation Forest works well for low-dimension data, even with unimportant attributes and contexts where anomalies or changes are absent in the training set [67]. Based on these capabilities, Shiotani and Yamaguchi [70] demonstrated the ability of the Isolation Forest to detect changes in patients’ physical condition (heart rate and dietary intake) in a smart care facility.
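The relationship between path length and score can be illustrated with a compact sketch. It assumes the commonly used scoring rule from the original Isolation Forest formulation, s = 2^(-E[h(x)] / c(n)), where c(n) normalises by the average path length of an unsuccessful binary search tree lookup. For brevity the sketch grows only the branch of each random tree that contains the scored point, which gives the same path length as building the whole tree. The data values and function names are hypothetical.

```python
import math
import random

EULER_GAMMA = 0.5772156649

def c(n):
    """Average path length used to normalise scores for a sample of size n."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n

def path_length(x, sample, rng, depth, limit):
    """Number of random splits needed to isolate x within `sample`."""
    if len(sample) <= 1 or depth >= limit:
        return depth + c(len(sample))
    f = rng.randrange(len(x))              # random feature
    lo = min(p[f] for p in sample)
    hi = max(p[f] for p in sample)
    if lo == hi:                           # cannot split further on this feature
        return depth + c(len(sample))
    split = rng.uniform(lo, hi)            # random split value between min and max
    same_side = [p for p in sample if (p[f] < split) == (x[f] < split)]
    return path_length(x, same_side, rng, depth + 1, limit)

def anomaly_score(x, data, n_trees=100, sample_size=64, seed=0):
    """Score near 1 suggests a change/anomaly; lower scores suggest normal samples."""
    rng = random.Random(seed)
    n = min(sample_size, len(data))        # assumes at least two data points
    limit = math.ceil(math.log2(n))
    total = 0.0
    for _ in range(n_trees):
        total += path_length(x, rng.sample(data, n), rng, 0, limit)
    return 2.0 ** (-(total / n_trees) / c(n))

# Hypothetical (productivity score, interruptions) pairs with one unusual day
data = [(50 + i % 7, 5 + i % 3) for i in range(60)] + [(5, 20)]
unusual = anomaly_score((5, 20), data)
typical = anomaly_score((52, 6), data)
print(unusual > typical)  # prints True
```

The unusual day is separated after very few splits, so its mean path length is short and its score is pushed towards 1, in line with the description above.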

2.6. Productivity

Work productivity refers to the output produced with some input or resources. Often, productivity is defined as the ratio of output to input or resources used [71]. However, not all tasks are equal because some tasks do not require many cognitive resources and can be completed within a short time, while others may require much cognitive processing and may be completed in hours or days.

Within HCI, office work productivity has been studied by identifying factors affecting productivity. Specifically, social media usage, distractions, and interruptions have been identified and studied in detail. Borst, Taatgen, and van Rijn [72] studied work productivity by developing a computational cognitive model of task interruption and resumption by focusing on problem-state bottlenecks. The model was based on the memory-for-goals model, which states that when the central cognition queries the memory, the memory outputs the most active item at that instant. The memory-for-goals model was extended by concentrating on the associated content of the problem state for each task through the memory-for-problem-states model. Czerwinski, Horvitz, and Wilhite [73] identified task complexity, the number of interruptions, task type, and length of absence as factors affecting workers’ return to tasks, while Mark, Czerwinski, and Iqbal [74] revealed that blocking online distractions was more beneficial to workers with low work control (i.e., self-control), low conscientious personality, and the absence of perseverance. In contrast to these studies, Kim et al. [75] studied workers’ productivity in work and nonwork contexts and found mundane tasks and chores to be related to distraction and attention, as insufficient attention was associated with work productivity and intensity. The authors also identified several factors that affect productivity for the design of self-tracking applications.

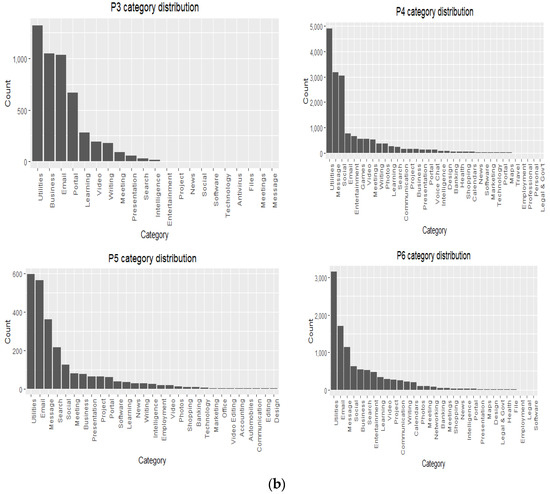

Office work productivity has been assessed through three methods: subjective, indirect, and direct methods. Subjective assessment involves the use of feedback from workers through interviews and surveys. Interviews are used for detailed study purposes and can only be effective when participants are few. A survey is used to gather data by sending questionnaires to many workers. This can be paper-based or through an online link sent via email or social network sites connected to an online survey database [76]. Sun, Lian, and Lan [77] used a subjective method to evaluate workers’ productivity through the self-evaluation of work performance and a fatigue scale to assess workers’ performance and productivity under ambient conditions. Chokka, Bougie, Rampakakis, and Proulx [32] also used the Work Limitations Questionnaire developed by [78] to evaluate workers’ productivity. Workers’ productivity evaluation can also be undertaken through indirect methods such as workers’ absenteeism, number of grievances, number of hours worked, etc. These are usually assessed by another individual, usually a manager [79]. Data for indirect evaluation are obtained from the workers’ records, which usually include total time worked on a daily or weekly basis, output for a particular work duration, etc. [80]. Direct methods for productivity assessment are based on tracking workers’ digital device or software usage patterns [81] using tools such as ScreenLife [82], RescueTime [83], TimeAware [84], and meTime [85]. These applications enable the remote monitoring of workers’ activity through desktop/mobile applications installed on workers’ devices and phones. They continuously monitor workers’ activities by logging their activity data. From this list of applications, RescueTime was chosen for this research because it offers more functionality than the others.

The increase in the popularity of these tools has led to quantified self and lifelogging communities, where technology is incorporated into data collection on a particular aspect of an individual’s life [86], especially work productivity. The use of these tools indicates that productivity is a multi-dimensional concept. Users evaluate their productivity in six dimensions: work product, attitude to work, benefit, impact, workers’ state, and compound task. Across these dimensions, the quantity and quality of conceptual and concrete achievements were mostly considered work products [81]. These tools are limited to tracking simple activities, such as computer, phone, and other digital device usage. They cannot track workers’ mobility patterns. This study followed a new direction in monitoring workers’ activities and estimating productivity using the PBC model in a personal SE that supports office tasks. Productivity was quantified by assigning a weight to the class that each behavioural pattern belongs to, multiplying the weight by the sequence length, and dividing by the sum of the weights.
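
The quantification described above can be read as a weighted mean of pattern lengths. The following is a minimal sketch of that reading, not the study's implementation: the class weights in `CLASS_WEIGHTS`, the function name `productivity_score`, and the choice to sum the weights over patterns (rather than over classes) are all assumptions made for illustration.

```python
from typing import Iterable, Tuple

# Hypothetical class weights; the actual values are not stated in this section.
CLASS_WEIGHTS = {"good": 3.0, "neutral": 2.0, "poor": 1.0}


def productivity_score(patterns: Iterable[Tuple[str, int]]) -> float:
    """Return sum(w_i * len_i) / sum(w_i) over (class label, sequence length) pairs."""
    weighted_total = 0.0
    weight_sum = 0.0
    for label, length in patterns:
        weight = CLASS_WEIGHTS[label]
        weighted_total += weight * length  # weight multiplied by sequence length
        weight_sum += weight               # assumed: weights are summed per pattern
    if weight_sum == 0:
        raise ValueError("at least one pattern with a non-zero weight is required")
    return weighted_total / weight_sum


# Three behavioural patterns with their class labels and sequence lengths.
example = [("good", 4), ("poor", 2), ("neutral", 3)]
score = productivity_score(example)  # (3*4 + 1*2 + 2*3) / (3 + 1 + 2)
```

Under these assumed weights, longer patterns in the "good" class pull the score up more strongly than equally long patterns in the "poor" class.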

3. Research Design

The research aimed to develop and evaluate a new behavioural model for use in smart environments. The research followed a quantitative approach through experimentation to evaluate the model. The research design comprises the methodology and methods that were used to answer the research questions and achieve the overall objective of this research. These are explained in the next two sub-sections.

3.1. Methodology

The study incorporated the Design Science Research (DSR) methodology to design the PBC model. DSR involves scientific study and the creation of artefacts as people develop and use them to solve common practical problems [87]. In DSR, artefacts can be constructs, models, instantiations, and methods. Human behavioural studies focus on human behaviour in a context by developing and evaluating theories that seek to explain how and why an individual behaves to reflect the presence of problems. The goal of DSR is to create novel artefacts to solve problems and to ensure that the artefacts can extend the knowledge base of the problem domain [88].

Humans perform their activities in an environment. There is a continuous interaction between humans and their environments. When people behave, they interact with objects in their environment. These objects can be simple tools, technical devices, or systems. Therefore, people cannot be separated from their environments because they depend on the objects in the environment for task execution. The incorporation of DSR to model construction in human behaviour helps to guarantee the synergy between humans and their environment (as a usage context). DSR ensures that the model becomes useful for people and the environment for which it is created [89]. This study developed and evaluated a model as an artefact that can be used for promoting positive behaviours in SEs across several domains. The research falls into model development and evaluation, a core aspect of DSR, in which there are guidelines and activities for conducting model development. The important DSR activities in model development include problem identification, solution objective definition, model design and development, solution demonstration, solution assessment, and communication [89].

A strength of the DSR methodology is the presence of three cycles that enhance a researcher’s understanding of how to conduct high-quality DSR. The cycles are the relevance cycle, rigour cycle, and design cycle. These cycles evolved from the initial perceptions of DSR as either artefact oriented, which focuses on developing new products [90], or design-theory oriented, which focuses on developing new theory as an essential output of DSR [91]. Gregor and Hevner [88] suggested including both approaches and maintaining a good balance between them. Therefore, any research utilising DSR should provide, as outputs, an artefact and a theory behind the development of the artefact, using the three DSR cycles.

The relevance cycle focuses on understanding the problem and its domain, the requirements to solve the problem, and the opportunities in a specific environment to solve the problem. The domain is made up of people, organisational systems, and technical systems, which are used to recognise opportunities that can be used to solve the problem. When the problem and opportunities are recognised, the requirements for the relevance cycle can be easily defined, leading to the conversion of problems into requirements and objectives of the artefact. DSR also provides the criteria to accept a solution through evaluation by initially identifying the expected functionalities of the artefact and how the artefact in a domain can be improved [92]. The evaluation output will dictate whether further iterations should be performed within the relevance cycle or stopped.

This study incorporated the relevance cycle through the proper problem statement definition of behavioural problems (Section 1), requirements engineering, and identifying opportunities to solve the problem (Section 2.2). The relevance cycle ensures that the personal SE is identified as an application domain. The personal SE is made up of continuous cooperation between organisational systems, people, and technical systems. The organisational systems in this context refer to the organisation’s structure. The organisational system can be functional, divisional, flat, or matrix. The people are the users of the SE. Technical systems refer to various systems or objects present in the SE that enable an individual to perform daily activities. The technical systems can be software, hardware, and IoT devices. The problem and opportunities for solving the problem are identified in the relevance cycle. For this study, the identified problem was behavioural problems. The solution opportunity identified in this research was behavioural modelling through personal SEs. The recognised problem and opportunities are used to describe the requirements needed for the relevance cycle. The requirements for the solution are the artefact’s ability to identify behavioural patterns and classify them into good, poor, or neutral. Additionally, the acceptance criteria for evaluating an artefact were created. If the model can identify and classify behaviours, then the artefact can be considered successful. The criterion became the output of the relevance cycle.

The rigour cycle guarantees a meaningful association between past knowledge and new research by justifying its innovation and contribution [93]. Hevner et al. [94] recommended in-depth reviews of the existing literature to ensure that the contributed artefacts are not duplicates of previous artefacts. Therefore, the rigour cycle is based on the proper selection and incorporation of existing methods, literature, and theories to form a portion of the domain knowledge, develop a new artefact and assess the artefact. The newly created artefact will help expand the knowledge base of the problem domain, which is a significant goal of DSR [88]. The study used the Social Cognitive Theory and the SmartWork model. These are existing behavioural theories and are discussed in Section 4.

The design cycle is the centre of DSR research [92]. According to Apiola and Sutinen [95], it is a construct–evaluate cycle for solution development because the design cycle iterates in artefact building and evaluation [93]. These iterations can happen more frequently than the rigour or relevance cycles. Major activities in the design cycle are artefact construction and artefact evaluation to check whether the requirements are met. The design cycle uses the outputs from the relevance and rigour cycles as input. These outputs are requirements, evaluation techniques, methods, and theories. However, maintaining a balance between rigour and relevance cycles becomes an important issue. Balance maintenance will help the researcher focus on the research direction. Balance maintenance can be undertaken by ensuring that the problem definition at the relevance cycle fuels the discovery of existing knowledge, theory, or models at the rigour cycle. Therefore, existing theories must refer to the identified problem.

The design cycle involves designing and building an artefact and evaluating it against the criteria stipulated in the relevance cycle. The evaluation can be carried out several times, using each evaluation result as feedback to refine the artefact. Across the three DSR cycles, the study ensured a balance by using the knowledge base relevant to the problem domain. The study used requirement analysis and existing theories to develop a behavioural model in Section 5. The study also evaluated the behavioural model through a naturalistic-summative evaluation in Section 7.

3.2. Methods

The following methods were used for the research.

3.2.1. Literature Review

A literature review revealed current user problems and the solutions available through smart environments. The review identified gaps in the existing solutions, which enabled proper problem identification and motivation, as stipulated by the relevance and design cycles of DSR. The outputs of this method are a problem statement and the design of the PBC Model, with the main goal of answering RQ1.

3.2.2. Experimentation

An experiment was conducted to answer RQ2, RQ3, RQ4, and RQ5. These RQs focused on how well the PBC Model identified the user model, behavioural patterns, and behaviour classification. The experiment evaluated the PBC model, as specified by the design cycle of DSR. The study experimented in a manner that allowed the capturing of behaviours from personal smart environment users. The environment included Rescuetime as a soft sensor and Fitbit as a hard sensor to capture users’ daily work activities and their heart rates while working. The details of the experiment were specified within the discussion on naturalistic evaluation.

3.3. Sampling Criteria

The study included the following criteria for volunteers to participate in the study.

- Must be at least 20 years old;

- Must be computer literate;

- Must be users of a desktop computer and a smartphone;

- Must not have any impairment or special needs;

- Must have essential experience in computer and internet usage;

- Must be non-teaching staff;

- Must not belong to a population with special needs.

The data collection method for the research followed a quantitative method through experimentation. The experiment and procedures for the data collection and analysis are described in Section 7.

4. Theoretical Background

With the incorporation of the rigour cycle of DSR, the following theories were consulted and used in the formulation of the new behavioural model.

4.1. The Social Cognitive Theory

The Social Cognitive Theory (SCT) states that an individual’s behaviour is determined by the interaction among current behaviours, personal factors (affective and cognitive influences), and the environment, a relationship called “Triadic Reciprocal Causation” [96]. The SCT specifies three determinants that interact with and affect behaviour: personal (cognitive, affective, and biological events), behavioural, and environmental factors [97]. A typical interaction is when personal factors control how people reinforce and model behaviours from others in an environment; these behaviours, in turn, control behaviours that people display in specific situations.

Environmental factors within the SCT came from the Social Learning and Imitation Theory (SLI) [98], which states that people are stimulated to behave in response to different responses, cues, rewards, and drivers from the environment. A very direct and recent precursor of SCT is the Social Learning Theory by [14], which describes how people learn through social methods of observing, replicating, and displaying the behaviours of others from the environment. The major effect of the environment on behaviour is social influence through behavioural mimicking in the environment. Learning as a social process signifies the primary goal of SCT and suggests that knowledge and skill acquisition comes from observational learning from role models within the environment. The mastery of new knowledge and skills is of higher interest than the object or the result of the learning process [97]. Therefore, the existence of humans depends on the repetition of behaviours from others in an environment with no impediments and when self-efficacy is high. Saleem, Feng, and Luqman [99] used the SCT to reveal that excessive social network use elicits cognitive–emotional preoccupation, which leads to poorer job performance in office workers. They also revealed that cognitive–emotional preoccupation increases task, process, and relationship conflict in offices as stressors from excessive use of social networks.

4.2. The SmartWork Model

Kocsis et al. [100] introduced the SmartWork model to model and assess aged workers’ health, emotion/stress, cognition, work tasks, and workability. These aspects were modelled from the remote monitoring of workers’ activities so that employers could evaluate workers’ cognitive and functional decline for decision-making. The proposed SmartWork model comprises an unobtrusive sensing framework, a worker-centric AI block, and a smart services block. The unobtrusive sensing framework is equivalent to the SE concept, with the functional purpose of collecting data relating to the worker’s physiological, lifestyle, and contextual aspects. The worker-centric AI block is equivalent to the user model, comprising a workability model, computational models, a self-adaptation model, and a dynamic simulation tool.

The workability model was designed to extract workability from the heterogeneous data within the sensing framework. The workability estimate determines whether a person is entirely disabled, partially disabled, or fit for work. The computational models were designed to model a worker’s functional, cognitive, workability, and work task models. The self-adaptation model was developed to enhance a worker’s self-adaptation and management from the computational models. The dynamic simulation is a tool to allow training, decision support, intervention and on-the-fly resilient work management, work stress coping, and workers’ training. The AI decision support allows efficient decision-making based on the workability model. It aids work completion and team optimisation through nonrigid work practices. Risk assessment allows the specific assessment of risks that are related to training and health. It also allows adequate decision support and intervention provisions for employers or top managers. The smart services were designed to deliver smart services through continuously reporting workers’ health and lifestyle.

Despite the high potential of the SmartWork model, several limitations were observed: (i) The narrow scope on older workers limits the model. A good model should be functional for all age groups in any SE. (ii) The worker-centric AI block was designed to model worker-specific parameters, but there was inadequate capturing of worker-specific parameters into the suitable models. For example, the worker’s functional, cognitive, workability, and work task aspects were captured within the computational models of the worker-centric AI block.

These limitations were resolved by adequately structuring user-specific parameters into appropriate user model components, as undertaken in our previous work [31]. Other limitations are (i) the inclusion of the AI decision support and risk prediction models in the worker-centric AI block is inadequate. These models are services to be provided to top managers or employees to help them determine a worker’s state before assigning tasks to them. Therefore, better structuring of top managers’ services is recommended but out of the scope of this research. (ii) In terms of implementation, there was an absence of behavioural modelling, implying that the service provision was based on user models. Additionally, the absence of behavioural modelling implies no behavioural classification before service delivery. These limitations can be resolved by incorporating the behaviour model and classification as core components. (iii) The SmartWork model focused on modelling workers’ workability through score allocation but not on improving workability or productivity through services. (iv) The service component of the SmartWork model focuses on providing reports to the manager or the worker to determine the worker’s workability and to foster a manager’s decision-making concerning the assignment of a new task to a worker.

These limitations can be resolved by including change-based interventions to improve individuals’ behaviours. The limitations in the SmartWork model led to the formulation of the PBC model, which is discussed in Section 5.

5. The Positive Behaviour Change Model

The SCT and the SmartWork model provided a theoretical grounding for developing a new model. The SmartWork model is the only extensive model in SEs that caters for aged workers’ workability, and it is similar to the proposed behavioural model in this research. However, the two models differ in how the following aspects are structured: (i) Workers’ health was modelled within the worker-centric AI block of the SmartWork model [100]. This study modelled health as an internal context within user modelling based on a previous study [31]. (ii) Cognition was modelled within the worker-centric AI block of the SmartWork model [101]. In this study, human cognition was modelled using the internal context within user modelling based on a previous study [31]. (iii) Emotion and stress were modelled within the worker-centric AI block of the SmartWork model [101]. This study modelled emotion and stress using the internal context within user modelling based on a previous study [31]. (iv) Work tasks were modelled within the worker-centric AI block of the SmartWork model [101] through work task requirements and task history. In this study, a work task was modelled as an activity, and activity modelling existed as a separate contextual entity within the developed Generic User Model [31]. (v) In the SmartWork model, services were provided as separate components and structured according to the purpose they were designed to meet. These services include care provision, teamwork management, and guidance provision [101]. In the current study, services are equivalent to the interventions provided to users in an SE. Intervention exists as a significant component within the PBC model, and its goal is to improve people’s behaviours in any SE.

From the limitations specified in Section 4.2, and the dissimilarities highlighted above, the Positive Behaviour Change (PBC) model was designed to promote positive behaviours in SEs, with the following assumptions: (i) User modelling is essential for behavioural modelling. A user model provides specific information describing users’ static, dynamic, internal, and external attributes and their activities in their environment. (ii) Within the user model, there is a need for an objective assessment of activity models. (iii) There is a need for behavioural modelling from the user model, which will comprise behavioural patterns. (iv) Behaviour/behavioural patterns must be classified to identify the appropriate intervention type. (v) Identified good behaviours need to be sustained, and identified poor behaviours need to be changed.

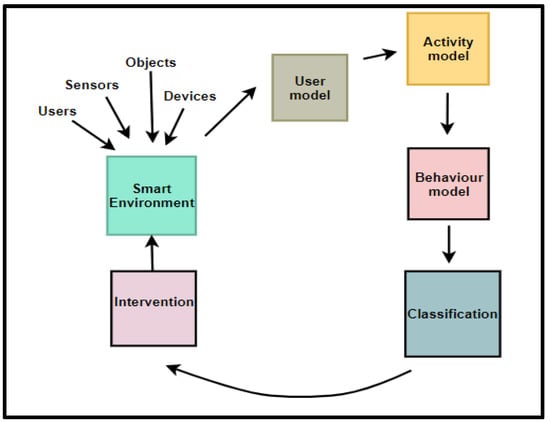

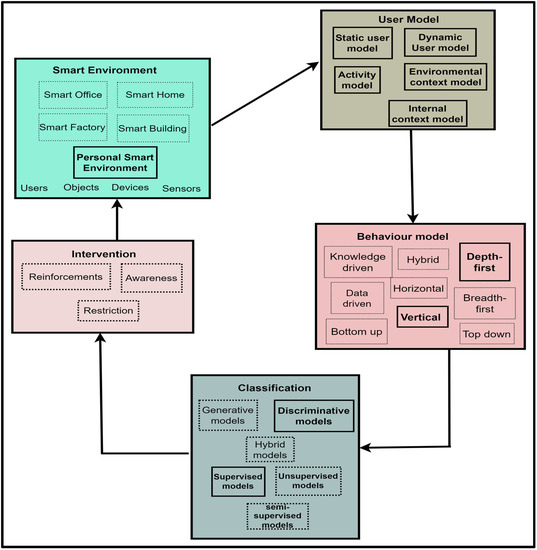

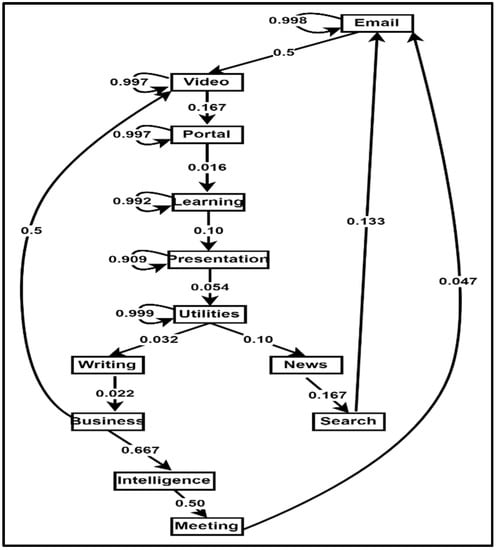

From these assumptions, this study proposes the PBC model, which comprises six components, as shown in Figure 1.

Figure 1.

The Positive Behaviour Change Model v.1.

Communication is one of the activities in any research incorporating the DSR methodology. Therefore, the PBC model v.1 was communicated via a poster presentation. Feedback was received from reviewers. The feedback identified behaviour pattern extraction as a duplicate component because it is a core activity within the behavioural model. Therefore, behavioural pattern extraction was removed. The refined PBC model v.2 is given in Figure 2.

Figure 2.

The Positive Behaviour Change (PBC) Model.

Figure 2 presents the condensed view of the PBC model, which focuses on modelling user behaviour and providing appropriate interventions to effect and sustain positive behaviours in SEs.

5.1. PBC Model Components

The components of the PBC Model are explained in subsequent sections.

5.1.1. Smart Environment

The SE can be a personal SE, a smart home, a smart office, or a building set up to provide personalised assistive services for its users through IoT and unobtrusive monitoring objects. The SE was included as a component in the PBC model because the SCT emphasises the importance of an individual’s environment on behaviour by positing that humans are active agents that influence the environment and are also influenced by the environment [96].

Additionally, the SmartWork model emphasises the inclusion of the SE through the unobtrusive sensing block, which comprises all smart devices that aid people’s task engagement and capture users’ lifestyle, physiology, and context information. The SE is a concept and an avenue for service delivery to humans. For evaluation purposes, this study used personal SEs, which allow official tasks to be carried out by workers. A personal SE was chosen because of its minimised procurement cost and easy setup in any natural environment. Furthermore, the performance of personal SEs is not tied to fixed spaces, making their services available wherever the user is located. This location independence is possible because the services developed for personal SEs are accessible via smartphones, making them available everywhere [102]. For this study, the digital activity data generated from the personal SE were used for building user models. The expanded SE component of the PBC model is shown in Figure 3.

Figure 3.

The Expanded SE Component of the PBC Model.

5.1.2. User Model

The user model provides information about users of SEs. The following sources motivated the inclusion of a user model in the PBC model. (i) The SCT emphasises the effect of personal factors, such as cognitive, affective, and biological events, as determinants of behaviour [96]. (ii) The SmartWork model captures the health, emotion, stress, and cognitive aspects of workers within the worker-centric AI block. (iii) In SEs, Vlachostergiou et al. [103] emphasised the significance of the internal context of a user in producing observable behaviour.

These are essential aspects of user modelling, but other aspects must be captured. In this study, the user model consists of several sub-models: the SUM, DUM, internal context, activity context, and environmental context models. The details of these sub-models have been explained in a previous publication [31]. For this study, the specific outputs from the user model are the internal context and activity models. Users’ behaviours and behavioural deviations are built from the activity model, while the internal context provides an understanding of users’ health and feelings during behavioural expression. The expanded user model of the PBC model v.2 is illustrated in Figure 4.

Figure 4.

The Expanded User Model.

5.1.3. Behavioural Model

The behavioural model recognises behavioural patterns from the identified activity sets within the activity model. The behavioural model is included because Patrono [104] emphasises the importance of behavioural models in SEs for the early identification of risky behaviours. Section 2.2 identified knowledge-driven, data-driven, and hybrid approaches to behavioural modelling in SEs when the machine learning/data mining route is followed. When the sequential pattern mining route is followed, the techniques can instead be breadth-first, depth-first, vertical, or horizontal approaches. The study followed the sequential pattern mining route in modelling behaviours. The expanded behavioural model of the PBC model is shown in Figure 5, while the behavioural modelling conducted in this research is indicated in Section 8.4.

Figure 5.

The Expanded Behavioural Model component.
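
To make the sequential pattern route concrete, the sketch below counts contiguous activity subsequences of a fixed length across sessions and keeps those that meet a minimum support. This is a deliberately simplified stand-in for sequential pattern mining, not the algorithm used in the study; the function name `frequent_patterns`, the session data, and the activity labels are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def frequent_patterns(
    sessions: Sequence[Sequence[str]], length: int, min_support: int
) -> Dict[Tuple[str, ...], int]:
    """Return contiguous patterns of `length` found in at least `min_support` sessions."""
    support: Counter = Counter()
    for session in sessions:
        # Collect each distinct pattern once per session so that support
        # counts sessions, not raw occurrences.
        seen = set()
        for start in range(len(session) - length + 1):
            seen.add(tuple(session[start:start + length]))
        support.update(seen)
    return {pattern: count for pattern, count in support.items() if count >= min_support}


# Hypothetical activity sets, one list of application categories per work session.
sessions = [
    ["email", "editor", "browser", "editor"],
    ["email", "editor", "social", "editor"],
    ["social", "email", "editor", "browser"],
]
patterns = frequent_patterns(sessions, length=2, min_support=2)
```

With this toy data, only `("email", "editor")` and `("editor", "browser")` reach the support threshold. A full sequential pattern miner would additionally allow gaps between items and grow patterns level by level.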

5.1.4. Classification

The Classification component is a module that classifies recognised behavioural patterns as received from the behavioural model. The last step specified by Suryadevara [37] was the inclusion of classification as a component. Previous models have focused on correcting deviations or changes from good behaviours through behavioural change detection techniques without proper knowledge of what a good or poor behaviour is, leading to minimal effects on people. In this study, a classification component was included because of the importance of classifying a behavioural pattern.

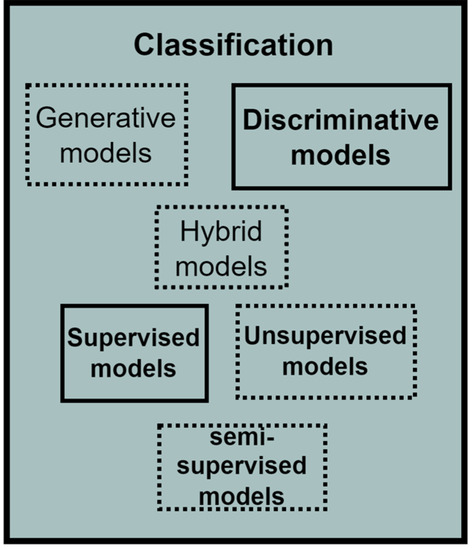

The classification component consists of several classifiers in the machine learning domain, which are usually data-driven. Depending on the probability distribution estimation, the classifiers within the data-driven category can be generative, discriminative, or hybrid. The data-driven techniques can also be supervised, unsupervised, or semi-supervised depending on the availability of label or class information in which the data or patterns are to be classified. The study incorporated a Random Forest classifier, which uses a discriminative approach in probability estimation and a supervised approach because of its requirement for labelled data. The expanded classification component of the PBC model is given in Figure 6, while the classification conducted in this study is explained in Section 8.5.

Figure 6.

The Expanded Classification Component.

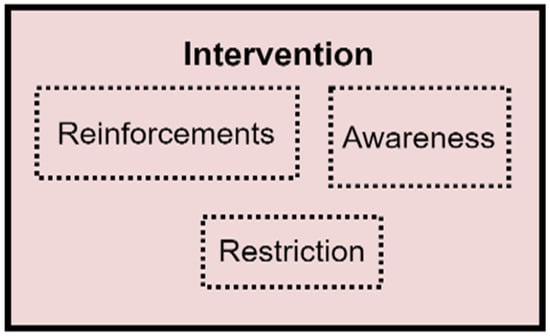

5.1.5. Interventions

The Intervention component implements interventions to be provided for the user, depending on the value of the quantified attribute evaluated and the change observed. Three interventions were identified to be appropriate for improving people’s behaviours. These are reinforcement, awareness, and restriction. However, these intervention options are not within the scope of this study, and they will be investigated in a future study. The expanded Intervention component of the PBC model is indicated in Figure 7.

Figure 7.

The expanded Intervention component.

From the expanded PBC model components in Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7, the Extended PBC model is represented in Figure 8 below.

Figure 8.

The Extended PBC Model.

Figure 8 represents the Extended PBC model. Thick continuous lines represent the PBC model components incorporated in this research. Dotted lines indicate the unused subcomponents, which can be used for future behavioural modelling studies, depending on the specific aspect of SE users to be observed. Users of the PBC Model should note the following points.

- The components of the PBC model are not limited to the techniques specified within its components. Therefore, adopters can use more advanced techniques that are suitable for the problem domain being studied.

- In using the PBC Model, users can apply a divide-and-conquer technique, whereby the problem is divided into sub-problems according to the PBC Model’s components and sub-solutions are provided for the sub-problems. These sub-solutions can be combined to form the overall solution to the problem under study.

Therefore, RQ1 was answered through the development of the PBC model, comprising a smart environment, user model, behavioural model, classification, and intervention as core components required for behavioural modelling.

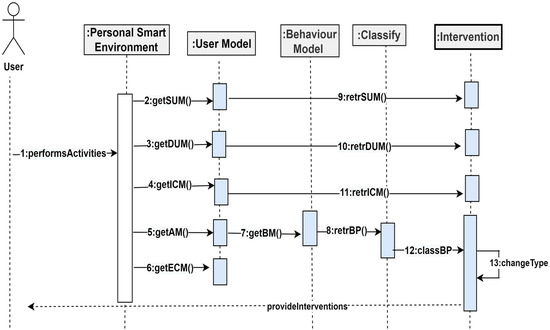

5.2. Model Interactions

The components of the PBC model will interact with each other. These interactions are depicted in Figure 9 below.

Figure 9.

Component Interactions.

Figure 9 shows the interactions among the components of the PBC model. A user in a smart environment performs activities, which are captured by the sensors in the smart environment together with the user’s personal details, role, preferences, likes, dislikes, personality, and cognitive, psychological, health, and environmental attributes. These attributes are modelled by the functions getSUM(), getDUM(), getICM(), getAM(), and getECM() to extract the static user model, dynamic user model, internal context model, activity model, and environmental context model, respectively. From the activity model, the function retrBP() constructs behavioural patterns from the activity sets. The function classBP() then classifies the behavioural patterns, which are used for change detection through the function changeType(). The intervention component determines the kind of change and returns change-based interventions to the user within the smart environment.
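
The flow from activity model to intervention can be sketched as a small pipeline. The function names below (`get_am`, `retr_bp`, `class_bp`, `change_type`, `choose_intervention`) are Python analogues of getAM(), retrBP(), classBP(), and changeType(); their bodies, the application-to-class mapping, and the rule that maps a detected change to reinforcement, awareness, or restriction are assumptions made purely for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from applications to behaviour classes.
APP_CLASS = {"editor": "good", "email": "neutral", "social": "poor"}
RANK = {"poor": 0, "neutral": 1, "good": 2}


def get_am(events: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group (day, application) events into one activity set per day."""
    model: Dict[str, List[str]] = {}
    for day, app in events:
        model.setdefault(day, []).append(app)
    return model


def retr_bp(activity_model: Dict[str, List[str]]) -> Dict[str, Tuple[str, ...]]:
    """Treat each day's ordered activities as one behavioural pattern."""
    return {day: tuple(apps) for day, apps in activity_model.items()}


def class_bp(patterns: Dict[str, Tuple[str, ...]]) -> Dict[str, str]:
    """Label each pattern by its most frequent class; ties go to the poorer class."""
    labels = {}
    for day, pattern in patterns.items():
        classes = [APP_CLASS[app] for app in pattern]
        labels[day] = max(RANK, key=lambda c: (classes.count(c), -RANK[c]))
    return labels


def change_type(previous: str, current: str) -> str:
    """Compare two class labels and name the direction of change."""
    if RANK[current] > RANK[previous]:
        return "improvement"
    if RANK[current] < RANK[previous]:
        return "decline"
    return "none"


def choose_intervention(current: str, change: str) -> str:
    """Assumed rule: restrict poor declines, reinforce good behaviour, else raise awareness."""
    if change == "decline" and current == "poor":
        return "restriction"
    if current == "good":
        return "reinforcement"
    return "awareness"


events = [("mon", "editor"), ("mon", "editor"), ("mon", "email"),
          ("tue", "social"), ("tue", "social"), ("tue", "editor")]
labels = class_bp(retr_bp(get_am(events)))
change = change_type(labels["mon"], labels["tue"])
intervention = choose_intervention(labels["tue"], change)
```

Keeping each stage as a separate function mirrors the component boundaries of the model, so any single stage can be swapped for a more capable technique without touching the others.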

6. Intended Use

The PBC model was developed to monitor and promote positive behaviours in any SE. The intended usage and typical use cases are discussed in subsequent sections.

6.1. Work Behaviour Monitoring for Productivity

Section 1 highlighted multitasking/interruption, social media use, and low productivity as specific behavioural problems in offices. Therefore, workers must be monitored for these behaviours using smart devices in office environments. Attaran [105] emphasized providing stable workplace services with positive user experiences associated with people’s work. These user experiences can be obtained through worker engagement that will aid in the timely completion of tasks and faster achievement of work outcomes to increase productivity. These goals can be achieved by incorporating the PBC model into smart workspaces through constant modelling and monitoring to ensure the timely completion of tasks and by minimizing unnecessary engagement in behaviours that can reduce productivity. Using the PBC model will help workers be cognizant of productive and non-productive work behaviours. This section will not provide typical use cases for this intended use because the domain was used to evaluate the PBC model in Section 7.3.

6.2. Patient Monitoring in Smart Healthcare

Smart healthcare is an essential aspect of health that drives good healthcare delivery. Smart healthcare integrates information from different areas of patients’ lives to present an all-inclusive view of patients’ health in real-time [106]. Good healthcare services and resources can be delivered through the PBC model to achieve meaningful health improvement. Specifically, patients admitted to a smart healthcare facility can be continuously monitored for vital signs and for compliance with behaviours that promote quick recovery. Such behaviours include medication adherence, timely breakfast, lunch, and dinner, adequate sleep, and physical activity where applicable. Identifying sudden deterioration in these aspects can help healthcare workers determine and recommend appropriate services. This section will not provide a typical use case for this intended use because the domain is similar to health monitoring in smart homes or offices, which is discussed in Section 6.3.

6.3. Health Monitoring in Smart Homes or Offices

Taiwo and Ezugwu [107] advocated for a smart healthcare support system in homes to capture and record user health parameters. Within the smart health care support system, pulse, weight, blood pressure, glucose level, and body temperature can be the parameters for proper health modelling. With these capabilities incorporated into a smart home/office, the PBC model can monitor the health of different categories of people at home or in the office. Therefore, the overall health of an individual can be modelled. Additionally, young adults battling behavioural problems such as smoking, drug use, and physical inactivity can be monitored. Older adults with age-related health conditions such as dementia can be modelled and monitored. If there are deviations in health, a family member or a health caregiver can be contacted. A timely reminder can be sent for medication taking based on the information within the user model. The reminder can also motivate individuals for timely engagement in positive behaviours to promote their health at home or in the office. The modelling of health behaviours through the PBC model can provide immediate actions through reflections on behaviours that promote or deteriorate good health and well-being. The following use case illustrates how the PBC model can be used for health monitoring at home.

- Model: PBC model

- Actor: User

- Environment: Smart home

- Scenario: A user living in a smart home has temperature, door and window, and motion sensors. Additionally, he uses a glucose sensor for glucose monitoring. There is a simple mobile app that allows him to enter his glucose levels after each test and his daily food intake. The mobile app is connected via Wi-Fi to the central display unit at home, where the user can visualise his food intake patterns.

- PBC model description: The smart environment component of the PBC model shall supply the temperature, window and door opening, and motion data, while the glucose data will be supplied through the glucose sensors attached to the body. The user will input the glucose and food intake data into the glucose-tracking mobile app on his smartphone. A typical food intake for a day can be bread, noodles, rice, pizza, salad, pasta, and vegetables, but there may be other food intake data for previous days. The user model component of the PBC model will extract the SUM, DUM, and activity model from the food intake data with their calorie estimation. Additionally, the blood glucose levels will be extracted from the glucose mobile app, serving as an internal context (health) model. The behavioural model component of the PBC model shall model user behaviours from the activity model. For example, rice–pizza–bread can be a typical behavioural pattern with calorie estimation. The classification component shall model the glucose levels using standard estimates of blood glucose to determine normal and abnormal blood glucose levels. Therefore, the output of the classification component will be a list of normal and abnormal blood glucose values, with their associated behavioural patterns (food intake patterns) and calorie estimates. The blood glucose model and the associated behavioural patterns will be used by the intervention component of the PBC model to determine the kind of blood glucose intervention to provide to the user within the smart environment.
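
As a minimal illustration of the classification step described in this use case, the sketch below labels daily glucose readings as normal or abnormal and attaches the associated food intake pattern and calorie estimate. It is not the study's implementation: the `DayRecord` structure, the `classify_glucose` function, the sample values, and the `low`/`high` bounds are all hypothetical and are placeholders only, not clinical thresholds.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DayRecord:
    """One day of user-entered data (hypothetical structure)."""
    foods: List[str]       # ordered food intake, i.e. the activity model
    calories: int          # estimated calories for the day
    glucose_mg_dl: float   # glucose reading entered in the mobile app


def classify_glucose(
    records: List[DayRecord],
    low: float = 70.0,     # assumed lower bound, illustrative only
    high: float = 140.0,   # assumed upper bound, illustrative only
) -> List[Tuple[str, int, float, str]]:
    """Return (food pattern, calories, glucose, label) for each record."""
    results = []
    for record in records:
        in_range = low <= record.glucose_mg_dl <= high
        label = "normal" if in_range else "abnormal"
        pattern = "-".join(record.foods)  # e.g. "rice-pizza-bread"
        results.append((pattern, record.calories, record.glucose_mg_dl, label))
    return results


# Made-up sample data for two days.
records = [
    DayRecord(["rice", "pizza", "bread"], 2400, 182.0),
    DayRecord(["salad", "pasta", "vegetables"], 1600, 104.0),
]

for row in classify_glucose(records):
    print(row)
```

The labelled list pairs each glucose value with its behavioural pattern, which is the form of output the intervention component is described as consuming.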

6.4. Resource Use Monitoring in Smart Buildings

Lazzari [21] suggested using behaviour models to extract resource consumption patterns in homes or offices. Resources such as electricity and water are vital components of human life, yet their cost is increasing and their availability is decreasing. The PBC model can model and monitor people’s behaviours while using these two essential resources. Resource-use behaviour can be modelled by first gathering resource use data from smart meters installed in an SE, then extracting consumption patterns from the data, and finally deriving appliance usage from the consumption data. These aspects constitute the specific behaviour model that the PBC model can provide. It is necessary to check for over-consumption of these resources and to communicate appliance usage information to users to promote better consumption. Furthermore, the PBC model can be incorporated into resource use modelling by checking for negligence when users are away from the building; for example, checking whether switches or taps are turned off to avoid waste. The user model within the PBC model can be used to control environmental conditions, such that the devices adjust to user preferences and manage resource usage through threshold checking. The following use case illustrates how the PBC model can be used for resource use monitoring in a smart building, which can be a home or an office.

- Model: PBC model

- Actor: User

- Environment: Smart home

- Scenario: A user living in a smart home has motion, humidity, and temperature sensors, a smart kettle, a microwave, a smart meter, a sensor attached to a cooker, and a smartwatch. Sensors are attached to all appliances in the house. The smart home has a smart display, which is an interface for interacting with its inhabitants. The user supplies her personal information through the smart display. The interface shows the user’s personal health and profiles, all stored inside the smart home database. The smart meter is connected to all appliances to capture their energy consumption. The user performs activities such as cooking, cleaning, ironing, watching movies, and listening to music. On cold days, the user uses heaters to warm up her environment and to maintain a balanced body temperature.

- PBC model description: The user model component of the PBC model shall extract SUM from personal details, DUM from previously observed attributes from the database, internal context from the smartwatch through the smartwatch’s mobile app, activity from appliance usage, and environmental context models from the environmental attributes captured by sensors and stored in a database. User activities can be modelled from appliance usage. A typical activity model can comprise an iron, cooker, TV, and other previous activities. The behavioural model component of the PBC model shall extract behavioural patterns from the activity model. For example, cooker–refrigerator–iron–TV and TV–cooking–ironing can be typical behavioural patterns, with appliance usage information to monitor energy consumption. The classification component shall model energy consumption data using behavioural patterns to determine normal and high consumption. This classification will be used by the intervention component of the PBC model to determine the kind of energy conservation intervention to provide to the user.
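
The pattern extraction and classification steps of this use case can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the study's implementation: the event format (appliance name, energy in kWh), the function names `daily_pattern` and `classify_days`, the sample readings, and the `high_kwh` threshold are all hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Event = Tuple[str, float]  # (appliance name, energy used in kWh), assumed format


def daily_pattern(events: List[Event]) -> str:
    """Build a behavioural pattern from ordered appliance events,
    dropping consecutive repeats of the same appliance."""
    sequence: List[str] = []
    for appliance, _kwh in events:
        if not sequence or sequence[-1] != appliance:
            sequence.append(appliance)
    return "-".join(sequence)


def classify_days(
    days: List[List[Event]],
    high_kwh: float = 10.0,  # assumed daily threshold, illustrative only
) -> List[Tuple[str, float, str]]:
    """Return (pattern, total kWh, label) per day using threshold checking."""
    labelled = []
    for events in days:
        total = round(sum(kwh for _appliance, kwh in events), 2)
        label = "high" if total > high_kwh else "normal"
        labelled.append((daily_pattern(events), total, label))
    return labelled


# Made-up smart meter data for three days.
days = [
    [("cooker", 2.0), ("refrigerator", 1.5), ("iron", 1.0), ("TV", 0.5)],
    [("TV", 0.5), ("cooker", 2.5), ("iron", 1.2), ("heater", 8.0)],
    [("cooker", 2.0), ("refrigerator", 1.5), ("iron", 1.0), ("TV", 0.5)],
]

labelled = classify_days(days)
pattern_counts = Counter(pattern for pattern, _total, _label in labelled)

for row in labelled:
    print(row)
print(pattern_counts.most_common(1))
```

Counting how often each pattern occurs gives a simple view of the user's typical behaviour, while the per-day label indicates which patterns coincide with high consumption.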

6.5. Cultural Heritage

Cultural heritage institutions specialise in preserving a society’s tangible and intangible assets. These can be in the form of culture, values, and traditions. The advances in IoT drive recent advances in cultural heritage institutions by developing creative ways to present cultural content to visitors [108]. With the implementation of IoT for smart cultural heritage homes, more personalised and flexible experiences that consider visitor profile (user model) and behaviour are in high demand concerning environmental factors and the content to be presented to visitors. The PBC model is at the centre of the requirements because of the essential components for understanding the visitors and their contexts. Incorporating the PBC model will maximise cultural user experiences based on visitors’ preferences, interests, and capabilities. Other domains in which the PBC model can be used are students’ performance modelling in smart learning environments and consumer behaviour modelling in smart shopping environments. The following use case describes how the PBC model can be used in the cultural heritage domain.

- Model: PBC Model

- Actor: Visitor

- Environment: Smart Museum

- Scenario: A smart museum has exhibition displays, a touch screen, cameras, motion and temperature sensors, and a beacon (a location sensor). A visitor has a Bluetooth-enabled smartphone. For a regular visitor to the smart museum, face and location information will be tracked through sensors. On the first visit, visitors will supply personal information, preferences, and personality and cognitive-related information through the touch screen mounted on the museum wall. Visitors move to several sections of the smart museum to view different exhibitions through the exhibition displays.

- PBC model description: The user model of the PBC Model shall extract SUM from personal details and DUM from previously observed preferences and likes. Internal context can be extracted through the personality and cognitive information supplied by the visitor. The user model component of the PBC model will extract the activity model from the location sensor. For example, a typical activity model can consist of art, culture, history, science, and war. The behavioural model component of the PBC model shall extract behavioural patterns from the activity model. For example, an extracted behavioural pattern can be war–science–culture–art. There can be a typical behavioural pattern with higher frequency for a visitor. This pattern can be used by the classification component to detect changes in exhibition views, such that the content of the displays can be updated in real-time to reflect the change for the next exhibition to be viewed or for future visits. Furthermore, a visitor close to a location sensor connected to a smartphone via Bluetooth can receive exhibition messages so that the visitor can be aware of the various exhibitions available and advice regarding navigating through the museum.
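
One simple way to picture the change detection described in this use case is to compare a visitor's latest visit with the pattern that occurs most often in the visit history. The sketch below assumes this frequency-based approach, which is an illustration and not necessarily the technique used by the PBC model; the function names, the visit data, and the requirement that the history is non-empty are all assumptions.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def typical_pattern(history: List[Sequence[str]]) -> Tuple[str, ...]:
    """Return the most frequent visit pattern in a non-empty history."""
    counts = Counter(tuple(visit) for visit in history)
    return counts.most_common(1)[0][0]


def detect_change(
    history: List[Sequence[str]], latest: Sequence[str]
) -> Tuple[bool, List[str]]:
    """Report whether the latest visit differs from the typical pattern,
    and which exhibition sections are new relative to it."""
    typical = typical_pattern(history)
    changed = tuple(latest) != typical
    new_sections = [section for section in latest if section not in typical]
    return changed, new_sections


# Made-up visit history derived from location sensor data.
history = [
    ["war", "science", "culture", "art"],
    ["art", "history"],
    ["war", "science", "culture", "art"],
]
latest = ["war", "science", "history", "art"]

changed, new_sections = detect_change(history, latest)
print(changed, new_sections)
```

A detected change, together with the list of new sections, could then be used to decide which display content to update for the next exhibition or for future visits.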

This study used productivity modelling and monitoring to evaluate the PBC model, as discussed in subsequent sections.

7. Materials and Methods

The study focused on behavioural modelling and monitoring in SEs, specifically using office environments that support smart objects. The evaluation aimed to demonstrate the PBC model’s ability to model human behaviours through pattern recognition and the classification of the identified behavioural patterns. Therefore, the study evaluated the PBC model through an experiment using office environments with personal smart devices installed, that is, personal smart office environments (PSOEs). PSOEs were chosen for the following reasons: (i) There is only one full smart environment in the research institution. (ii) The participants cannot be moved from their natural working environment to the university’s SE, as this would introduce biases into the study design and responses. (iii) Full SEs cannot be implemented in each participant’s office due to cost implications. (iv) PSOEs can be set up in each participant’s natural office at minimal cost using simple sensors. The experiment is described in subsequent sections.

7.1. Evaluation Objective

The evaluation objective of this study was to instantiate the PBC model as an artefact produced from DSR and to evaluate the core components of the PBC model in a naturalistic environment.

7.2. Evaluation Process

The evaluation steps specified in the Framework for Evaluation in Design Science (FEDS) by Venable et al. [109] were adopted to identify the evaluation goals, select suitable evaluation technique(s), decide on the properties to evaluate, and plan each evaluation episode. These steps are summarised in Table 1.

Table 1. Evaluation Process.

Table 1 shows the evaluation process that the study followed. Step 1 focused on identifying the evaluation goal, which mapped to rigour evaluation, where the evaluation ensures that the artefact is usable in a realistic environment (office environment). In step 2, the human risk and effectiveness strategy was chosen because it helps to evaluate an artefact’s effectiveness through a naturalistic evaluation. In step 3, the properties of the artefact to evaluate were selected. These are user model creation, behavioural pattern extraction, behaviour classification, and change detection. In step 4, the plan for the selected evaluation was decided. For the naturalistic–summative evaluation, experimentation with office workers was carried out. The details of the experiment are discussed in Section 7.3.

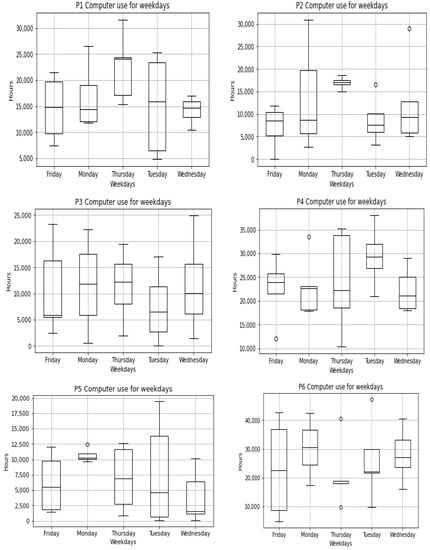

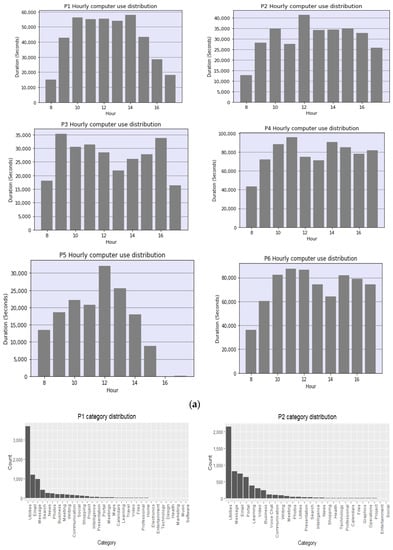

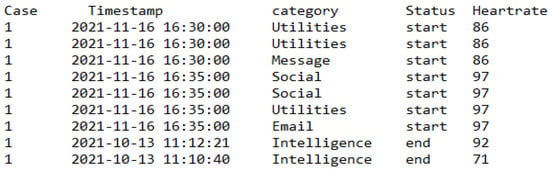

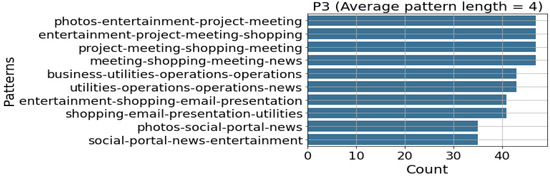

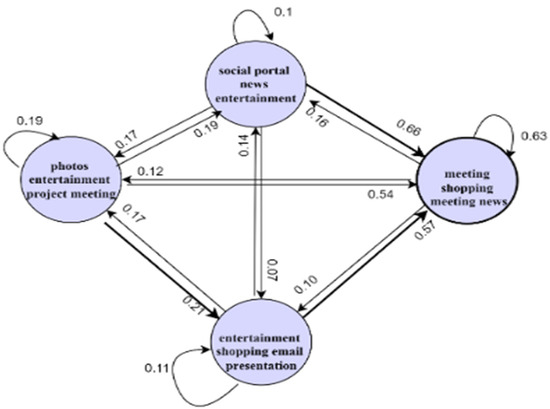

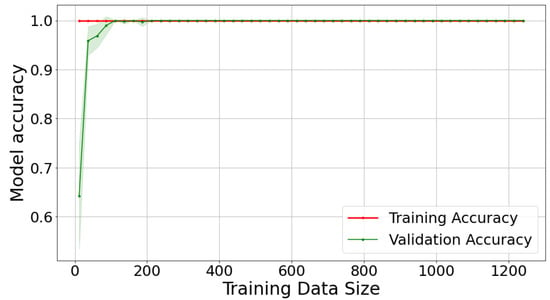

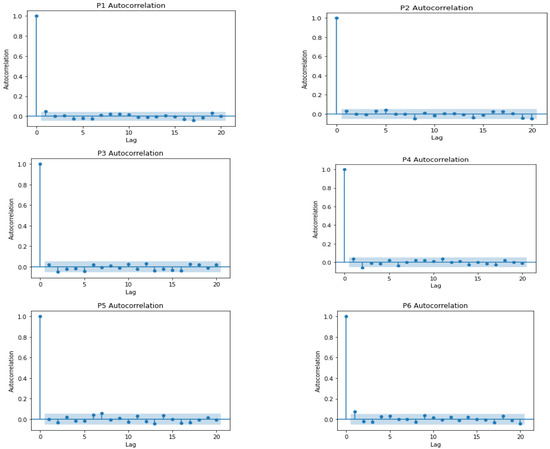

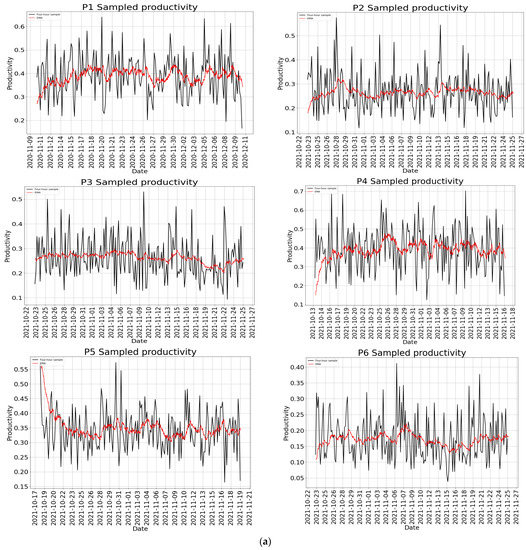

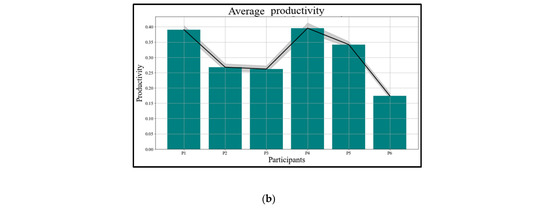

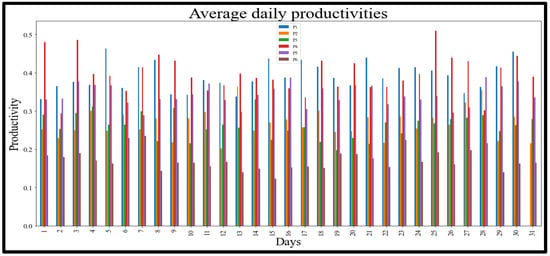

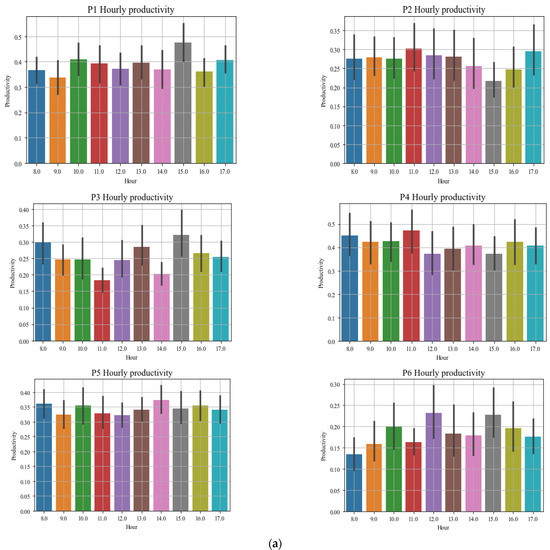

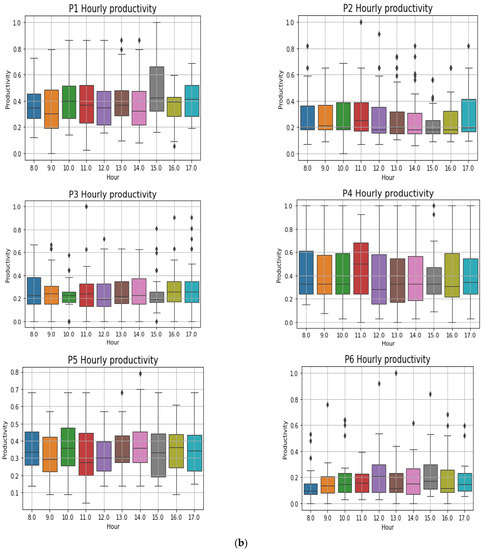

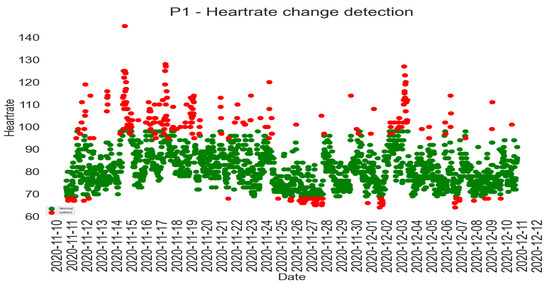

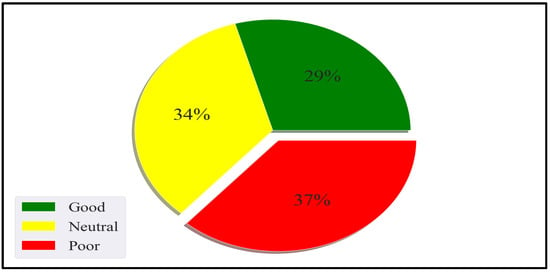

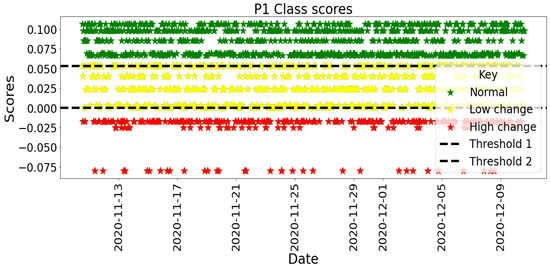

7.3. Naturalistic-Summative Evaluation

The naturalistic–summative evaluation design incorporated the instantiation of the PBC model through the implementation of personal SEs in offices to collect participants’ daily digital work data while they were at work. The evaluation was designed to allow non-intrusive data collection, i.e., digital activities were logged while participants worked, without diverting their attention or disturbing them. The activity dataset included desktop/mobile phone and internet use, social media use, email, and interruption/multitasking activities. Additionally, the study collected participants’ heart rate data as a vital sign and an internal context to reflect their health status while engaging in their office tasks. These datasets were collected for 4 weeks per participant, with data collection spanning November 2020 to April 2021.

7.3.1. Participants

Data acquisition for the study involved collecting digital activity data, which served as primary data. Before data collection commenced, Nelson Mandela University’s Ethics Committee approved the data collection (H20-SCI-CSS-006). Invitations were sent to the Physical and Support Staff (PASS) of Nelson Mandela University, South Africa. The invitation included the eligibility criteria stated in Section 3.3 for consideration and participation.