Challenges in Implementing Low-Latency Holographic-Type Communication Systems

Abstract

1. Introduction

- Systematized review of HTC system implementation challenges and comparison of already implemented HTC systems;

- Development of a conceptual framework for a smart HTC system.

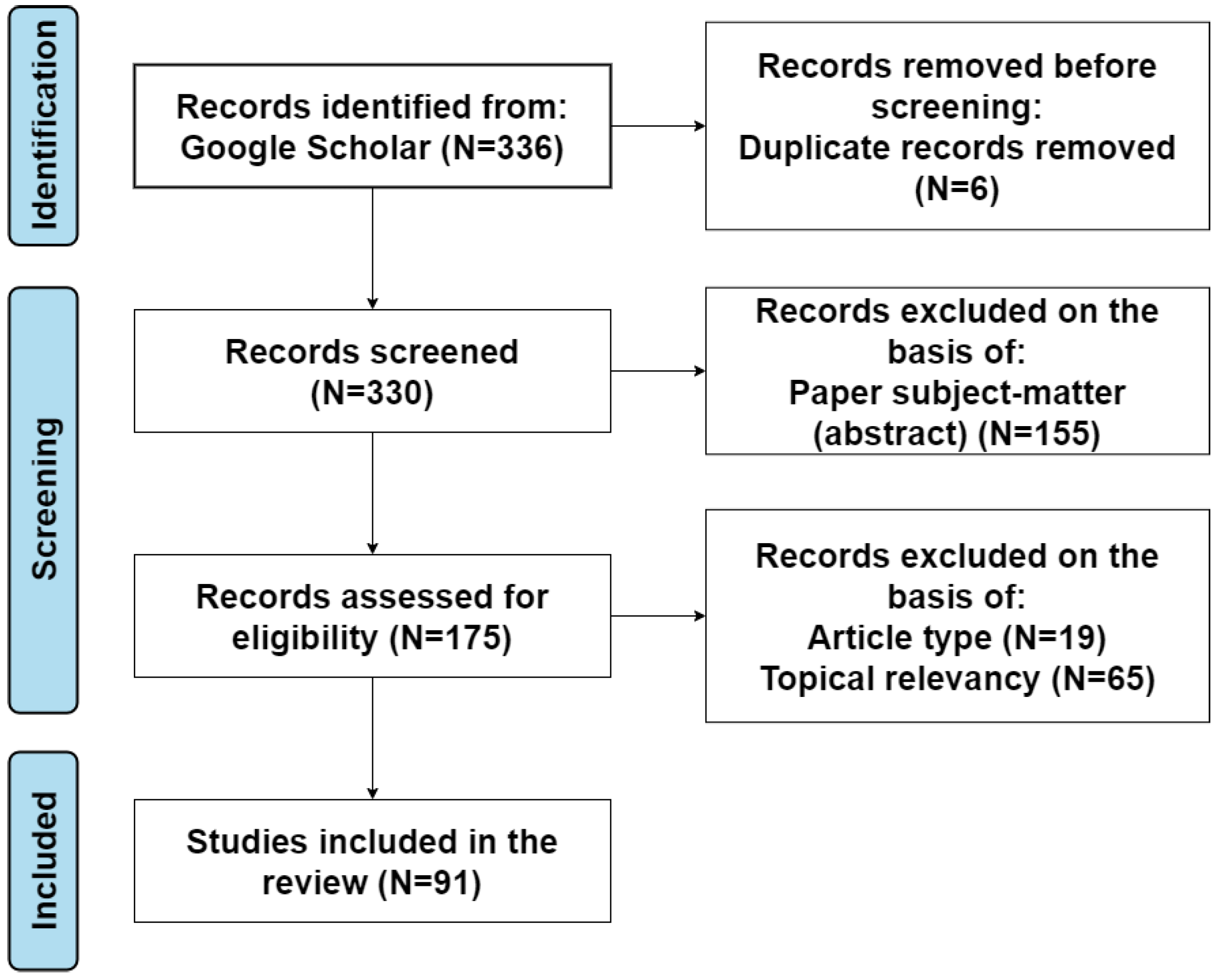

1.1. Literature Review and Selection Methodology

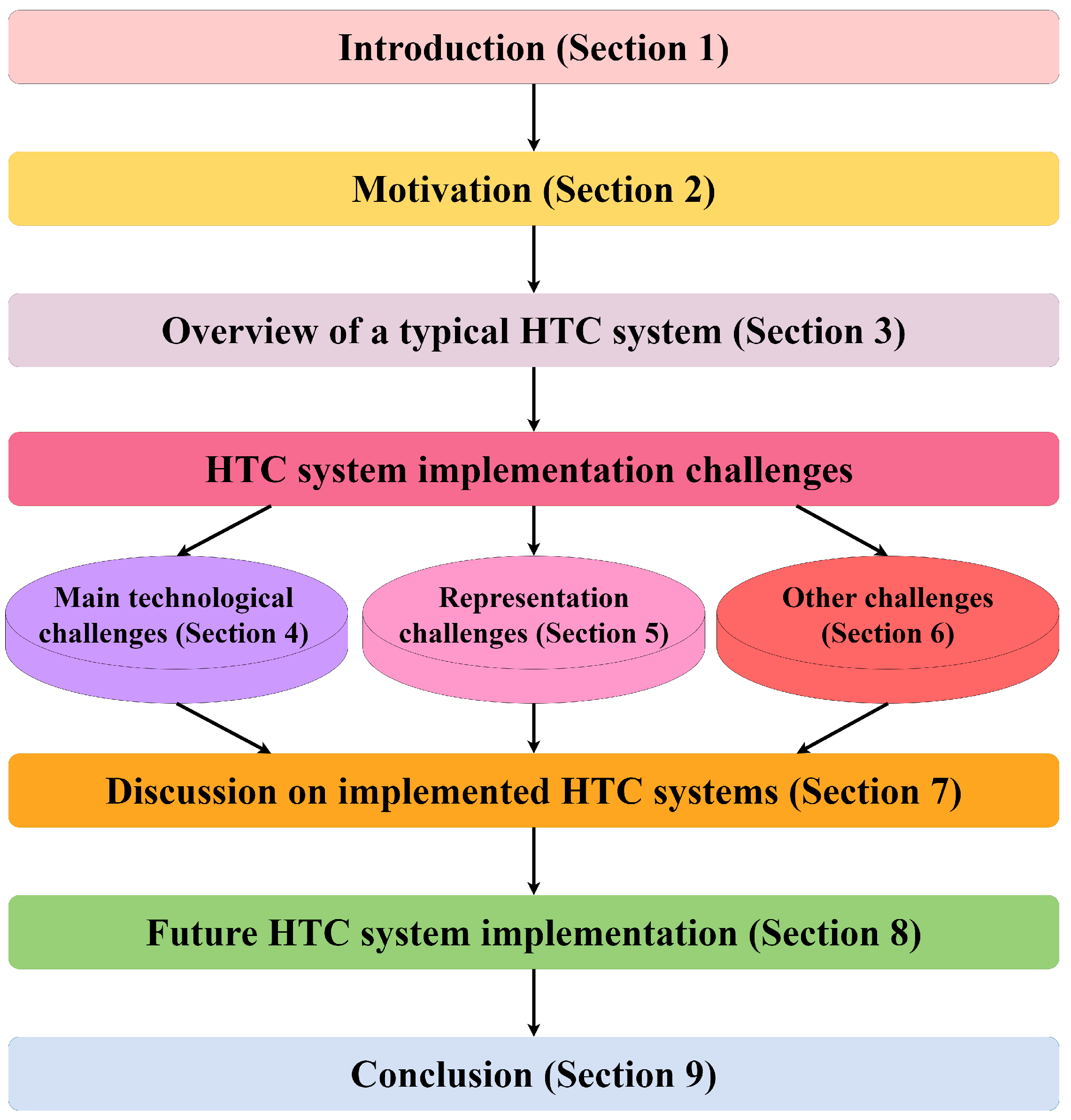

1.2. Paper Structure

2. Motivation

| Reference | Year | Topic | Main Research Aspects |
|---|---|---|---|
| [3] | 2019 | Current state of collaborative MR technologies | A review of collaborative MR technologies according to their application area, types of display devices, collaboration setup, and user interaction and experience aspects. |
| [8] | 2019 | Revisiting collaboration through MR | A review of MR collaboration according to time and space dimensions, participants’ role symmetry, artificiality, collaboration focus, and collaboration scenario. Review of foreseeable directions for improving the collaborative experience. |
| [9] | 2019 | A survey of AR/MR-based co-design in manufacturing | A review of state-of-the-art AR/MR-based co-design systems in manufacturing in terms of remote and co-located collaboration, real-time multi-user collaboration, and independent-view collaboration. Review of research challenges and future trends. |
| [13] | 2020 | A survey of collaborative work in AR | A review of papers along the dimensions of space, time, role symmetry, technology symmetry, and input and output modalities. |
| [14] | 2020 | A review of extended reality (XR) in spatial sciences | Review of research challenges and future directions in the XR domain in terms of technology (display devices, tracking, control devices and paradigms for 3D user interfaces, visual realism, level of detail and graphics processing, bottlenecks in reconstruction, and the role of artificial intelligence (AI) in automation), design (visualization and interaction design), and human factors. |
| [15] | 2020 | A review of MR | A review following an MR application framework that comprises a layer for the main system components, a layer for architectural issues of component integration, an application layer, and a user interface layer. |
| [1] | 2021 | A review of AR/MR remote collaboration on physical tasks | A review of AR/MR remote collaboration papers from the following aspects: collection and classification research, use of 3D scene reconstruction environments and live panoramas, periodicals and conducted research, local and remote user interfaces, features of user interfaces, architecture and shared non-verbal cues, applications, and toolkits. Review of limitations and open research issues in the field. |
| [4] | 2021 | A review of Microsoft HoloLens applications | A review of Microsoft HoloLens applications divided by application area, visualization technology (AR and MR), and functionality (visualization, interaction, and immersion). |
| [7] | 2021 | A survey of synchronous AR, VR, and MR systems for remote collaboration | A review of recent developments in synchronous remote collaboration systems in terms of environment (meeting, design, and remote expert), avatars (cartoon, realistic, full body, head and hands, upper body, reconstructed model, video, AR annotations, hands only, and audio avatar), and interaction (media sharing, shared 3D object manipulation, 2D drawing, mid-air 3D drawing, AR annotations, AR viewpoint sharing, hands and gestures, shared gaze awareness, and conveying facial expressions). |
| [10] | 2021 | A survey of spatially faithful telepresence | A review of the status of natural perception support, mobility and viewpoint serving, transmission and display of volumetric video, viewpoint-on-demand, photorealistic vs. virtual-world approaches, glasses-based vs. screen displays, and the role of XR in 3D telepresence. |
| [11] | 2021 | A review of the existing methods and systems for remote collaboration | A review of the following remote collaboration aspects: electronic devices (video cameras, projectors, display devices, HMDs, smart devices, and robotic devices); display technologies (2D video stream, 3D view, 360° panorama, AR, VR, and MR); and communication cues (annotations, pointing, gestures, and gaze). |
| [12] | 2021 | A review of the current state of holography and 3D displays | A review of recent accomplishments in the field of holographic 3D displays, holographic data transmission, and rendering hardware. |
| [17] | 2021 | “Telelife” and the challenges of remote living | Research on the “Telelife” challenges of remote interfaces, smart homes, learning, collaborating, privacy, security, accessibility, adoption, and ethics. Review of the grand technical challenges facing “Telelife” implementation. |
| [16] | 2022 | Scalable XR in the dimensions of collaboration, visualization, and interaction | A review of scalable XR according to its collaboration support features, consistent and accessible visualization, and intuitive interaction techniques. Review of future research directions in terms of general research topics, scalability between different devices, scalability between different degrees of virtuality, and scalability between different numbers of collaborators. |
| This paper | | A review of HTC system implementation challenges | A review of the challenges to HTC system implementation, systematized in three groups: main technological challenges (input/output (I/O) challenges, data processing challenges, data transmission challenges, and HTC system scalability challenges), representation challenges (avatar embodiment, gesture support, gaze support, and emotion support challenges), and other challenges (HTC system evaluation challenges and security and privacy challenges). Comparison of already existing HTC systems. Proposal of a conceptual framework for future HTC system implementation. |

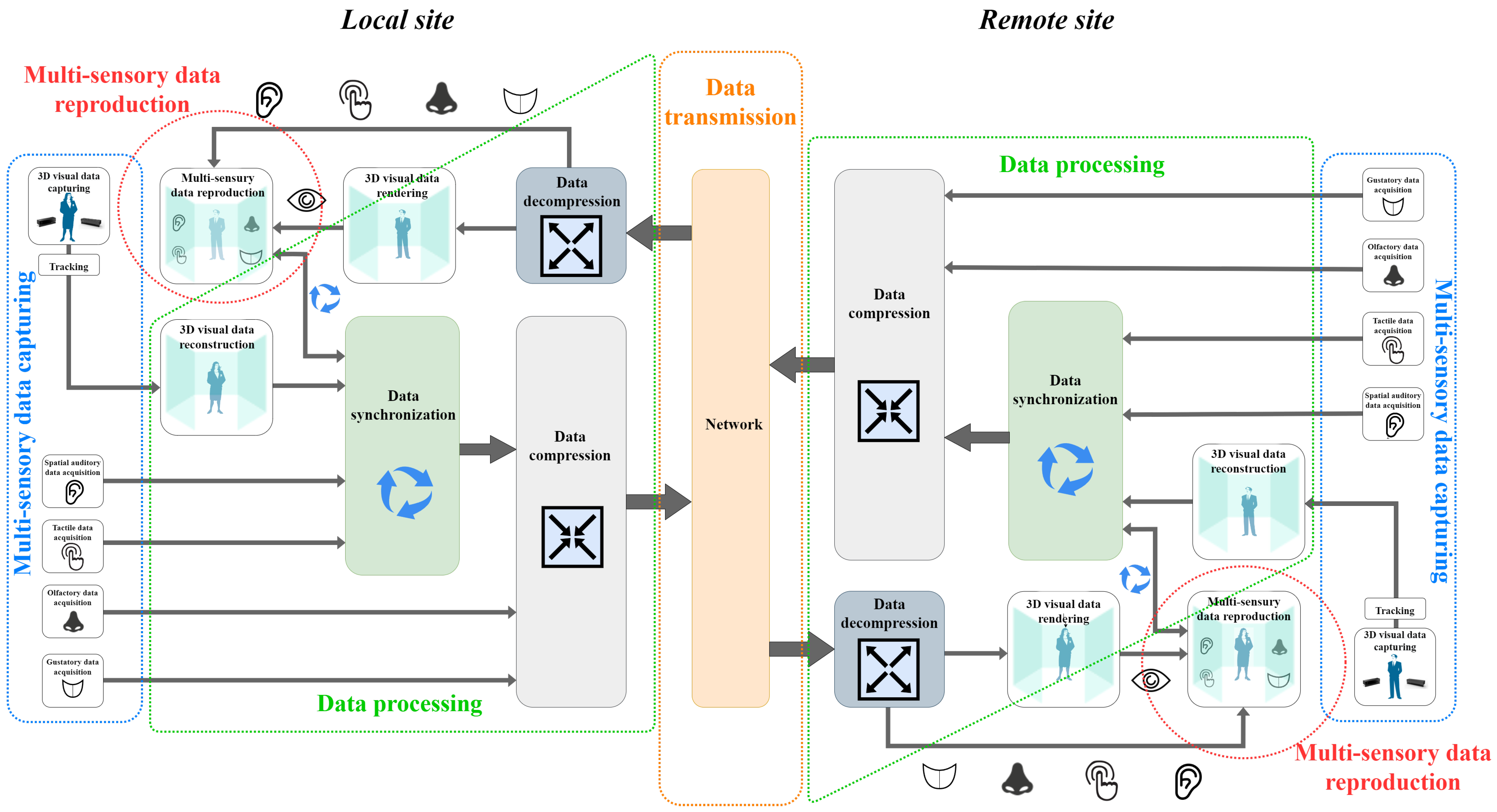

3. Overview of a Basic HTC System

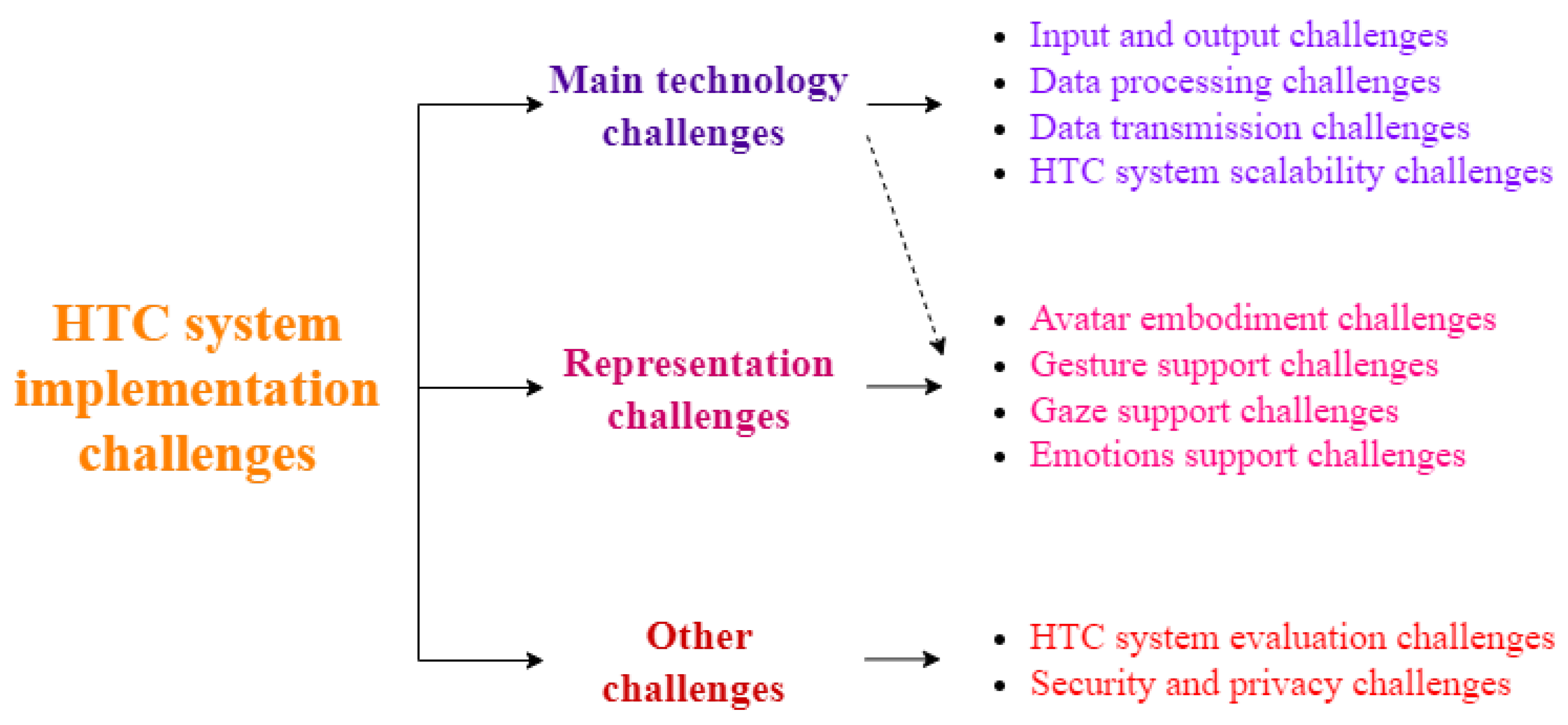

4. Main Technological Challenges in Implementing HTC

4.1. I/O Technologies

| Group of Challenges | Challenges |
|---|---|
| Visual input | Depth cameras: low precision, low resolution, distance range limitations, added noise, and narrow FoV [21]; |
| | Multi-camera setup: need for calibration, alignment errors, lower reconstruction precision, time expense, and cumbersome installation; |
| | 360° capturing: limited user movement [64]; |
| | Computational and transmission overload at higher frame rates. |
| Visual output | HMDs: lower resolution and narrower FoV than the human visual system [45]; |
| | Limited battery life, use by only a single user at a time, weight, inconvenience, invasiveness, low availability, high setup cost, and high demands on computational hardware [40]; |
| | Convergence–accommodation conflict, discomfort, nausea, dizziness, oculomotor symptoms, and disorientation [12,42,44]; |
| | Social acceptability of HMDs, tracking, isolation and exclusion, shared experience and shared space, and ethical implications of public MR [43]; |
| | Light field displays (LFDs) and volumetric displays: limited panel volume, limited depth cues, and large amounts of data [12,40,41]; |
| | Simultaneous provision of a highly immersive experience with very high resolution across a very large FoV and the possibility of real-time interaction [44]. |
| Other types of I/O technologies | Need for multi-sensory interaction [17,49,68,71,72]; |
| | Need for video and audio consistency (need for spatial audio) [17,67,68]; |
| | Integration of tactility in HTC systems and difficulty of providing bidirectional tactile sensation and haptic feedback [52,69,70]; |
| | Limited incorporation of smell and taste, and high cost of integrating sensory reality pods. |
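The large amount of data and the computational and transmission overload at higher frame rates noted in the table can be made concrete with back-of-the-envelope arithmetic. The sketch below is a minimal illustration under stated assumptions: one fused point cloud of one million points per frame, each point stored as three 32-bit float coordinates plus 8-bit RGB color (15 bytes). These figures are hypothetical and are not taken from any of the reviewed systems.

```python
# Rough, uncompressed data-rate estimate for a volumetric (point-cloud) capture.
# All parameter values below are illustrative assumptions, not measurements.


def raw_point_cloud_bitrate(points_per_frame: int,
                            bytes_per_point: int,
                            frames_per_second: float) -> float:
    """Return the uncompressed bitrate in bits per second."""
    return points_per_frame * bytes_per_point * 8 * frames_per_second


# Assumed point layout: x, y, z as 32-bit floats (12 bytes) + 8-bit R, G, B (3 bytes).
BYTES_PER_POINT = 3 * 4 + 3 * 1

if __name__ == "__main__":
    for fps in (15, 30, 60):
        bps = raw_point_cloud_bitrate(1_000_000, BYTES_PER_POINT, fps)
        print(f"{fps:>2} fps -> {bps / 1e9:.2f} Gbit/s")
```

Under these assumptions, an uncompressed stream needs about 1.8, 3.6, and 7.2 Gbit/s at 15, 30, and 60 fps, which illustrates why efficient compression appears among the data processing challenges.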

4.2. Data Processing
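Among the data processing challenges summarized in the table under Section 4.4 is the trade-off between compression latency and network latency [12,23,25,26,27,31]: stronger compression shrinks the payload but usually costs more encoding and decoding time. The sketch below, a minimal model rather than the method of any cited work, sums encode time, serialization time on the link, propagation delay, and decode time, and picks the fastest encoder setting that fits a 100 ms end-to-end budget (the upper end of the 50–100 ms range cited in that table). The `CodecSetting` names and all numeric values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class CodecSetting:
    """A hypothetical encoder operating point (all numbers are placeholders)."""
    name: str
    compression_ratio: float  # raw size / compressed size
    encode_ms: float
    decode_ms: float


def frame_latency_ms(setting: CodecSetting, raw_frame_bits: float,
                     link_bps: float, propagation_ms: float) -> float:
    """Per-frame latency: encode + serialization on the link + propagation + decode."""
    compressed_bits = raw_frame_bits / setting.compression_ratio
    transmit_ms = compressed_bits / link_bps * 1000.0
    return setting.encode_ms + transmit_ms + propagation_ms + setting.decode_ms


def pick_setting(settings: Sequence[CodecSetting], raw_frame_bits: float,
                 link_bps: float, propagation_ms: float,
                 budget_ms: float) -> Optional[Tuple[CodecSetting, float]]:
    """Return the lowest-latency setting that fits the budget, or None if none fits."""
    candidates = [(frame_latency_ms(s, raw_frame_bits, link_bps, propagation_ms), s)
                  for s in settings]
    feasible = [(lat, s) for lat, s in candidates if lat <= budget_ms]
    if not feasible:
        return None
    latency, best = min(feasible, key=lambda pair: pair[0])
    return best, latency


SETTINGS = [
    CodecSetting("fast", compression_ratio=10, encode_ms=5, decode_ms=5),
    CodecSetting("balanced", compression_ratio=30, encode_ms=20, decode_ms=10),
    CodecSetting("max", compression_ratio=60, encode_ms=60, decode_ms=30),
]
RAW_FRAME_BITS = 1_000_000 * 15 * 8  # assumed: 1M points x 15 bytes per point

if __name__ == "__main__":
    for link_bps in (1e9, 100e6):
        choice = pick_setting(SETTINGS, RAW_FRAME_BITS, link_bps,
                              propagation_ms=10, budget_ms=100)
        if choice is None:
            print(f"{link_bps / 1e6:.0f} Mbit/s: no setting meets the budget")
        else:
            setting, latency = choice
            print(f"{link_bps / 1e6:.0f} Mbit/s: {setting.name} ({latency:.1f} ms)")
```

With these made-up numbers, the light "fast" setting wins on a 1 Gbit/s link (about 32 ms), while on a 100 Mbit/s link the "balanced" setting wins (about 80 ms) because transmission time now dominates. The best operating point therefore depends on the network, which is the essence of the trade-off.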

4.3. Data Transmission of Holographic Data
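Adaptive streaming [35,46] is listed among the transmission challenges in the table under Section 4.4. A common rate-based heuristic, in the spirit of DASH, is to estimate the available throughput from recent segment downloads and request the highest-quality representation that fits under a safety margin. The sketch below is a generic illustration, not the scheme proposed in the cited works; the level-of-detail bitrate ladder, the 0.8 safety factor, and the five-sample window are assumptions.

```python
from statistics import harmonic_mean
from typing import Sequence


def estimate_throughput_bps(samples_bps: Sequence[float], window: int = 5) -> float:
    """Harmonic mean of the most recent throughput samples.

    The harmonic mean is pulled down by slow samples, which keeps the
    estimate conservative when throughput fluctuates.
    """
    recent = list(samples_bps)[-window:]
    if not recent:
        raise ValueError("need at least one throughput sample")
    return harmonic_mean(recent)


def select_representation(bitrates_bps: Sequence[float], throughput_bps: float,
                          safety: float = 0.8) -> float:
    """Pick the highest bitrate <= safety * throughput, else the lowest available."""
    budget = safety * throughput_bps
    affordable = [b for b in sorted(bitrates_bps) if b <= budget]
    return affordable[-1] if affordable else min(bitrates_bps)


# Hypothetical level-of-detail ladder for a point-cloud stream (bits per second).
LADDER_BPS = [50e6, 150e6, 400e6, 900e6]

if __name__ == "__main__":
    history = [600e6, 500e6, 700e6]  # made-up per-segment throughput measurements
    estimate = estimate_throughput_bps(history)
    chosen = select_representation(LADDER_BPS, estimate)
    print(f"estimate {estimate / 1e6:.1f} Mbit/s -> {chosen / 1e6:.0f} Mbit/s level")
```

Here the estimate is roughly 589 Mbit/s, so with the 0.8 margin the 400 Mbit/s level is requested. Viewpoint prediction [26] could refine this further by spending bits only on the parts of the scene the user is likely to see.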

4.4. System Scalability

| Group of Challenges | Challenges |
|---|---|
| Data processing | Processing of large amounts of data, high-quality reconstruction (in multi-camera setups), and efficient compression/decompression within a reasonable amount of time; |
| | Requirement for high computational power; |
| | Trade-off between compression latency and network latency [12,23,25,26,27,31]; |
| | Taking advantage of network edge computing [26,38,39]; |
| | Multi-sensor stream synchronization of local-site signals [27]; |
| | Synchronization of streams coming from multiple sensors [37,39]. |
| Transmission | Ultra-high bandwidth, efficient modulation techniques, and higher frequency bands; |
| | Application of adaptive streaming [35,46]; |
| | Semantic knowledge and viewpoint prediction [26]; |
| | Low-latency transmission: less than 15 ms motion-to-photon latency and less than 50–100 ms end-to-end latency [64,76,77,78,79,80,81,82,83]; |
| | Development of an optimized mechanism for holographic data transmission [27]; |
| | Network structure optimization [27,91,92,93]. |
| Scalability | Increasing technology requirements: higher bandwidth, assurance of low latency, and synchronization across many users; |
| | Need for centralized control [46]; |
| | Scalability between different devices, between different numbers of collaborators, and between different degrees of virtuality [16]. |
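The scalability challenges above, together with the need for centralized control [46], can be illustrated by counting streams per participant. The sketch below compares a full peer-to-peer mesh with a simple central forwarding relay, assuming every user sends one volumetric stream at a fixed bitrate; it does not model the point-cloud aggregation or level-of-detail adaptation that a multipoint control unit such as [46] may perform. The function names and the 100 Mbit/s figure are hypothetical.

```python
from typing import Tuple


def per_client_load(n_users: int, stream_bps: float,
                    topology: str) -> Tuple[float, float, int]:
    """Return (uplink_bps, downlink_bps, streams_to_decode) for one participant."""
    if n_users < 2:
        raise ValueError("a session needs at least two participants")
    peers = n_users - 1
    if topology == "mesh":
        # Every participant sends its stream to each peer and receives each peer's stream.
        return peers * stream_bps, peers * stream_bps, peers
    if topology == "relay":
        # Every participant uploads once to a central server, which forwards the others.
        return stream_bps, peers * stream_bps, peers
    raise ValueError(f"unknown topology: {topology!r}")


def relay_server_egress_bps(n_users: int, stream_bps: float) -> float:
    """Total bitrate a forwarding server sends out: N clients x (N - 1) streams each."""
    return n_users * (n_users - 1) * stream_bps


if __name__ == "__main__":
    stream = 100e6  # assumed per-user volumetric stream after compression
    for n in (2, 5, 10):
        up_m, down_m, _ = per_client_load(n, stream, "mesh")
        up_r, down_r, _ = per_client_load(n, stream, "relay")
        egress = relay_server_egress_bps(n, stream)
        print(f"N={n:>2}: mesh up/down {up_m / 1e6:.0f}/{down_m / 1e6:.0f} Mbit/s, "
              f"relay up/down {up_r / 1e6:.0f}/{down_r / 1e6:.0f} Mbit/s, "
              f"server egress {egress / 1e9:.1f} Gbit/s")
```

In this model a relay keeps each client's uplink constant, but downlink, decoding load, and server egress still grow with the number of users (the last one quadratically), which suggests why server-side aggregation and adaptation are attractive for multi-user HTC sessions.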

5. Representation Challenges

5.1. Avatar Embodiment

5.2. Gesture Support

5.3. Gaze Support

5.4. Emotion Support

6. Other Challenges

6.1. Evaluation (Subjective and Objective)

6.2. Security, Privacy, and Ethics

7. Discussion of Implemented HTC Systems

8. Future Direction—A Framework for a New Intelligent and Capable HTC System

8.1. User Site

8.2. Server Site

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| 5G | Fifth-generation mobile network |
| AI | Artificial intelligence |
| AR | Augmented reality |
| CMR | Collaborative mixed reality |
| DASH | Dynamic adaptive streaming over HTTP |
| FoV | Field of view |
| HMD | Head-mounted display |
| HTC | Holographic-type communications |
| I/O | Input/output |
| IETF | Internet Engineering Task Force |
| IF | Influencing factor |
| ITU | International Telecommunication Union |
| LFD | Light field display |
| ML | Machine learning |
| MPEG | Moving Picture Experts Group |
| MR | Mixed reality |
| PCC | Point-cloud compression |
| PME | Physical meeting environment |
| PUN | Photon Unity Networking |
| QoE | Quality of experience |
| QoS | Quality of service |
| QUIC | Quick UDP Internet connections |
| RADI | Realistic adaptive dynamic integration |
| RTSP | Real-time streaming protocol |
| TCP | Transmission control protocol |
| ToF | Time of flight |
| UDP | User datagram protocol |
| VME | Virtual meeting environment |
| VR | Virtual reality |
| WebRTC | Web real-time communication |
| XR | Extended reality |

References

- Wang, P.; Bai, X.; Billinghurst, M.; Zhang, S.; Zhang, X.; Wang, S.; He, W.; Yan, Y.; Ji, H. AR/MR remote collaboration on physical tasks: A review. Robot. Comput.-Integr. Manuf. 2021, 72, 102071. [Google Scholar] [CrossRef]

- El Essaili, A.; Thorson, S.; Jude, A.; Ewert, J.C.; Tyudina, N.; Caltenco, H.; Litwic, L.; Burman, B. Holographic communication in 5g networks. Ericsson Technol. Rev. 2022, 2022, 2–11. [Google Scholar] [CrossRef]

- de Belen, R.A.J.; Nguyen, H.; Filonik, D.; Del Favero, D.; Bednarz, T. A systematic review of the current state of collaborative mixed reality technologies: 2013–2018. AIMS Electron. Electr. Eng. 2019, 3, 181–223. [Google Scholar] [CrossRef]

- Park, S.; Bokijonov, S.; Choi, Y. Review of microsoft hololens applications over the past five years. Appl. Sci. 2021, 11, 7259. [Google Scholar] [CrossRef]

- Orts-Escolano, S.; Rhemann, C.; Fanello, S.; Chang, W.; Kowdle, A.; Degtyarev, Y.; Kim, D.; Davidson, P.L.; Khamis, S.; Dou, M.; et al. Holoportation: Virtual 3d Teleportation in Real-Time. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Bend, OR, USA, 29 October–2 November 2016; pp. 741–754. [Google Scholar]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Syst. Rev. 2021, 10, 89. [Google Scholar] [CrossRef]

- Schäfer, A.; Reis, G.; Stricker, D. A Survey on Synchronous Augmented, Virtual and Mixed Reality Remote Collaboration Systems. ACM Comput. Surv. (CSUR) 2021. [Google Scholar] [CrossRef]

- Ens, B.; Lanir, J.; Tang, A.; Bateman, S.; Lee, G.; Piumsomboon, T.; Billinghurst, M. Revisiting collaboration through mixed reality: The evolution of groupware. Int. J. Hum.-Comput. Stud. 2019, 131, 81–98. [Google Scholar] [CrossRef]

- Wang, P.; Zhang, S.; Billinghurst, M.; Bai, X.; He, W.; Wang, S.; Sun, M.; Zhang, X. A comprehensive survey of AR/MR-based co-design in manufacturing. Eng. Comput. 2020, 36, 1715–1738. [Google Scholar] [CrossRef]

- Valli, S.; Hakkarainen, M.; Siltanen, P. Advances in Spatially Faithful (3D) Telepresence. In Augmented Reality and Its Application; IntechOpen: London, UK, 2021. [Google Scholar]

- Druta, R.; Druta, C.; Negirla, P.; Silea, I. A review on methods and systems for remote collaboration. Appl. Sci. 2021, 11, 10035. [Google Scholar] [CrossRef]

- Blanche, P.A. Holography, and the future of 3D display. Light Adv. Manuf. 2021, 2, 446–459. [Google Scholar] [CrossRef]

- Sereno, M.; Wang, X.; Besançon, L.; Mcguffin, M.J.; Isenberg, T. Collaborative work in augmented reality: A survey. IEEE Trans. Vis. Comput. Graph. 2020, 28, 2530–2549. [Google Scholar] [CrossRef] [PubMed]

- Çöltekin, A.; Lochhead, I.; Madden, M.; Christophe, S.; Devaux, A.; Pettit, C.; Lock, O.; Shukla, S.; Herman, L.; Stachoň, Z.; et al. Extended reality in spatial sciences: A review of research challenges and future directions. ISPRS Int. J. Geo-Inf. 2020, 9, 439. [Google Scholar] [CrossRef]

- Rokhsaritalemi, S.; Sadeghi-Niaraki, A.; Choi, S.M. A review on mixed reality: Current trends, challenges and prospects. Appl. Sci. 2020, 10, 636. [Google Scholar] [CrossRef]

- Memmesheimer, V.M.; Ebert, A. Scalable extended reality: A future research agenda. Big Data Cogn. Comput. 2022, 6, 12. [Google Scholar] [CrossRef]

- Orlosky, J.; Sra, M.; Bektaş, K.; Peng, H.; Kim, J.; Kos’myna, N.; Hollerer, T.; Steed, A.; Kiyokawa, K.; Akşit, K. Telelife: The future of remote living. arXiv 2021, arXiv:2107.02965. [Google Scholar] [CrossRef]

- Intel RealSense. Compare Cameras. Available online: https://www.intelrealsense.com/compare-depth-cameras/ (accessed on 27 September 2022).

- Microsoft. Azure Kinect and Kinect Windows v2 Comparison. Available online: https://learn.microsoft.com/en-us/azure/kinect-dk/windows-comparison (accessed on 27 September 2022).

- Tölgyessy, M.; Dekan, M.; Chovanec, L.; Hubinskỳ, P. Evaluation of the azure Kinect and its comparison to Kinect V1 and Kinect V2. Sensors 2021, 21, 413. [Google Scholar] [CrossRef]

- Hackernoon. 3 Common Types of 3D Sensors: Stereo, Structured Light, and ToF. Available online: https://hackernoon.com/3-common-types-of-3d-sensors-stereo-structured-light-and-tof-194033f0 (accessed on 27 September 2022).

- He, Y.; Chen, S. Recent advances in 3D data acquisition and processing by time-of-flight camera. IEEE Access 2019, 7, 12495–12510. [Google Scholar] [CrossRef]

- Fujihashi, T.; Koike-Akino, T.; Chen, S.; Watanabe, T. Wireless 3D Point Cloud Delivery Using Deep Graph Neural Networks. In Proceedings of the ICC 2021-IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6. [Google Scholar]

- Manolova, A.; Tonchev, K.; Poulkov, V.; Dixir, S.; Lindgren, P. Context-aware holographic communication based on semantic knowledge extraction. Wirel. Pers. Commun. 2021, 120, 2307–2319. [Google Scholar] [CrossRef]

- Fujihashi, T.; Koike-Akino, T.; Watanabe, T.; Orlik, P.V. HoloCast+: Hybrid digital-analog transmission for graceful point cloud delivery with graph Fourier transform. IEEE Trans. Multimed. 2021, 24, 2179–2191. [Google Scholar] [CrossRef]

- van der Hooft, J.; Vega, M.T.; Wauters, T.; Timmerer, C.; Begen, A.C.; De Turck, F.; Schatz, R. From capturing to rendering: Volumetric media delivery with six degrees of freedom. IEEE Commun. Mag. 2020, 58, 49–55. [Google Scholar] [CrossRef]

- Clemm, A.; Vega, M.T.; Ravuri, H.K.; Wauters, T.; De Turck, F. Toward truly immersive holographic-type communication: Challenges and solutions. IEEE Commun. Mag. 2020, 58, 93–99. [Google Scholar] [CrossRef]

- Cao, C.; Preda, M.; Zaharia, T. 3D Point Cloud Compression: A Survey. In Proceedings of the 24th International Conference on 3D Web Technology, Los Angeles, CA, USA, 26–28 July 2019; pp. 1–9. [Google Scholar]

- Huang, T.; Liu, Y. 3d Point Cloud Geometry Compression on Deep Learning. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 890–898. [Google Scholar]

- Liu, H.; Yuan, H.; Liu, Q.; Hou, J.; Liu, J. A comprehensive study and comparison of core technologies for MPEG 3-D point cloud compression. IEEE Trans. Broadcast. 2019, 66, 701–717. [Google Scholar] [CrossRef]

- Schwarz, S.; Preda, M.; Baroncini, V.; Budagavi, M.; Cesar, P.; Chou, P.A.; Cohen, R.A.; Krivokuća, M.; Lasserre, S.; Li, Z.; et al. Emerging MPEG standards for point cloud compression. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 9, 133–148. [Google Scholar] [CrossRef]

- Graziosi, D.; Nakagami, O.; Kuma, S.; Zaghetto, A.; Suzuki, T.; Tabatabai, A. An overview of ongoing point cloud compression standardization activities: Video-based (V-PCC) and geometry-based (G-PCC). APSIPA Trans. Signal Inf. Process. 2020, 9, e13. [Google Scholar] [CrossRef]

- Wang, J.; Ding, D.; Li, Z.; Ma, Z. Multiscale Point Cloud Geometry Compression. In Proceedings of the 2021 Data Compression Conference (DCC), Snowbird, UT, USA, 23–26 March 2021; pp. 73–82. [Google Scholar]

- Wang, J.; Zhu, H.; Liu, H.; Ma, Z. Lossy point cloud geometry compression via end-to-end learning. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 4909–4923. [Google Scholar] [CrossRef]

- Zhu, W.; Ma, Z.; Xu, Y.; Li, L.; Li, Z. View-dependent dynamic point cloud compression. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 765–781. [Google Scholar] [CrossRef]

- Yu, S.; Sun, S.; Yan, W.; Liu, G.; Li, X. A Method Based on Curvature and Hierarchical Strategy for Dynamic Point Cloud Compression in Augmented and Virtual Reality System. Sensors 2022, 22, 1262. [Google Scholar] [CrossRef]

- Anmulwar, S.; Wang, N.; Pack, A.; Huynh, V.S.H.; Yang, J.; Tafazolli, R. Frame Synchronisation for Multi-Source Holograhphic Teleportation Applications-An Edge Computing Based Approach. In Proceedings of the 2021 IEEE 32nd Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Helsinki, Finland, 13–16 September 2021; pp. 1–6. [Google Scholar]

- Qian, P.; Huynh, V.S.H.; Wang, N.; Anmulwar, S.; Mi, D.; Tafazolli, R.R. Remote Production for Live Holographic Teleportation Applications in 5G Networks. IEEE Trans. Broadcast. 2022, 68, 451–463. [Google Scholar] [CrossRef]

- Selinis, I.; Wang, N.; Da, B.; Yu, D.; Tafazolli, R. On the Internet-Scale Streaming of Holographic-Type Content with Assured User Quality of Experiences. In Proceedings of the 2020 IFIP Networking Conference (Networking), Paris, France, 22–25 June 2020; pp. 136–144. [Google Scholar]

- Pan, X.; Xu, X.; Dev, S.; Campbell, A.G. 3D Displays: Their Evolution, Inherent Challenges and Future Perspectives. In Proceedings of the Future Technologies Conference, Jeju, Korea, 22–24 April 2021; pp. 397–415. [Google Scholar]

- Kara, P.A.; Tamboli, R.R.; Doronin, O.; Cserkaszky, A.; Barsi, A.; Nagy, Z.; Martini, M.G.; Simon, A. The key performance indicators of projection-based light field visualization. J. Inf. Disp. 2019, 20, 81–93. [Google Scholar] [CrossRef]

- Saredakis, D.; Szpak, A.; Birckhead, B.; Keage, H.A.; Rizzo, A.; Loetscher, T. Factors associated with virtual reality sickness in head-mounted displays: A systematic review and meta-analysis. Front. Hum. Neurosci. 2020, 14, 96. [Google Scholar] [CrossRef]

- Gugenheimer, J.; Mai, C.; McGill, M.; Williamson, J.; Steinicke, F.; Perlin, K. Challenges Using Head-Mounted Displays in Shared and Social Spaces. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–8. [Google Scholar]

- Chang, C.; Bang, K.; Wetzstein, G.; Lee, B.; Gao, L. Toward the next-generation VR/AR optics: A review of holographic near-eye displays from a human-centric perspective. Optica 2020, 7, 1563–1578. [Google Scholar] [CrossRef] [PubMed]

- VRcompare. VRcompare—The Internet’s Largest VR & AR Headset Database. Available online: https://vr-compare.com/ (accessed on 7 October 2022).

- Cernigliaro, G.; Martos, M.; Montagud, M.; Ansari, A.; Fernandez, S. PC-MCU: Point Cloud Multipoint Control Unit for Multi-User Holoconferencing Systems. In Proceedings of the 30th ACM Workshop on Network and Operating Systems Support for Digital Audio and Video, Istanbul, Turkey, 10–11 June 2020; pp. 47–53. [Google Scholar]

- Pakanen, M.; Alavesa, P.; van Berkel, N.; Koskela, T.; Ojala, T. “Nice to see you virtually”: Thoughtful design and evaluation of virtual avatar of the other user in AR and VR based telexistence systems. Entertain. Comput. 2022, 40, 100457. [Google Scholar] [CrossRef]

- Korban, M.; Li, X. A Survey on Applications of Digital Human Avatars toward Virtual Co-presence. arXiv 2022, arXiv:2201.04168. [Google Scholar]

- Yu, K.; Gorbachev, G.; Eck, U.; Pankratz, F.; Navab, N.; Roth, D. Avatars for teleconsultation: Effects of avatar embodiment techniques on user perception in 3D asymmetric telepresence. IEEE Trans. Vis. Comput. Graph. 2021, 27, 4129–4139. [Google Scholar] [CrossRef]

- Gamelin, G.; Chellali, A.; Cheikh, S.; Ricca, A.; Dumas, C.; Otmane, S. Point-cloud avatars to improve spatial communication in immersive collaborative virtual environments. Pers. Ubiquitous Comput. 2021, 25, 467–484. [Google Scholar] [CrossRef]

- Lee, L.H.; Braud, T.; Zhou, P.; Wang, L.; Xu, D.; Lin, Z.; Kumar, A.; Bermejo, C.; Hui, P. All one needs to know about metaverse: A complete survey on technological singularity, virtual ecosystem, and research agenda. arXiv 2021, arXiv:2110.05352. [Google Scholar]

- Wang, Y.; Wang, P.; Luo, Z.; Yan, Y. A novel AR remote collaborative platform for sharing 2.5 D gestures and gaze. Int. J. Adv. Manuf. Technol. 2022, 119, 6413–6421. [Google Scholar] [CrossRef]

- Jing, A.; May, K.W.; Naeem, M.; Lee, G.; Billinghurst, M. EyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–7. [Google Scholar]

- Bai, H.; Sasikumar, P.; Yang, J.; Billinghurst, M. A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 21 April 2020; pp. 1–13. [Google Scholar]

- Ayodele, E.; Zaidi, S.A.R.; Zhang, Z.; Scott, J.; McLernon, D. A Review of Deep Learning Approaches in Glove-Based Gesture Classification. In Machine Learning, Big Data, and IoT for Medical Informatics; Elsevier: Amsterdam, The Netherlands, 2021; pp. 143–164. [Google Scholar]

- De Guzman, J.A.; Thilakarathna, K.; Seneviratne, A. Security and privacy approaches in mixed reality: A literature survey. ACM Comput. Surv. (CSUR) 2019, 52, 1–37. [Google Scholar] [CrossRef]

- Happa, J.; Glencross, M.; Steed, A. Cyber security threats and challenges in collaborative mixed-reality. Front. ICT 2019, 6, 5. [Google Scholar] [CrossRef]

- Mazurczyk, W.; Bisson, P.; Jover, R.P.; Nakao, K.; Cabaj, K. Challenges and novel solutions for 5G network security, privacy and trust. IEEE Wirel. Commun. 2020, 27, 6–7. [Google Scholar] [CrossRef]

- Vijayakumar, S.; Corcoran, P.; Flynn, R.; Murray, N. AI-Derived Quality of Experience Prediction Based on Physiological Signals for Immersive Multimedia Experiences: Research Proposal. In Proceedings of the 13th ACM Multimedia Systems Conference, Athlone, Ireland, 14–17 June 2022; pp. 403–407. [Google Scholar]

- Akhtar, Z.; Siddique, K.; Rattani, A.; Lutfi, S.L.; Falk, T.H. Why is multimedia quality of experience assessment a challenging problem? IEEE Access 2019, 7, 117897–117915. [Google Scholar] [CrossRef]

- Kougioumtzidis, G.; Poulkov, V.; Zaharis, Z.D.; Lazaridis, P.I. A Survey on Multimedia Services QoE Assessment and Machine Learning-Based Prediction. IEEE Access 2022, 10, 19507–19538. [Google Scholar] [CrossRef]

- Brunnström, K.; Beker, S.A.; De Moor, K.; Dooms, A.; Egger, S.; Garcia, M.N.; Hossfeld, T.; Jumisko-Pyykkö, S.; Keimel, C.; Larabi, M.C.; et al. Qualinet White Paper on Definitions of Quality of Experience. 2013. Available online: https://hal.archives-ouvertes.fr/hal-00977812/document (accessed on 18 October 2022).

- ITU. Vocabulary for Performance, Quality of Service and Quality of Experience. Recommendation ITU-T P.10/G.100, 2017. Available online: https://www.itu.int/rec/T-REC-P.10 (accessed on 8 September 2022).

- Rhee, T.; Thompson, S.; Medeiros, D.; Dos Anjos, R.; Chalmers, A. Augmented virtual teleportation for high-fidelity telecollaboration. IEEE Trans. Vis. Comput. Graph. 2020, 26, 1923–1933. [Google Scholar] [CrossRef] [PubMed]

- Holografika. Pioneering 3D Light Field Displays. Available online: https://holografika.com/ (accessed on 18 October 2022).

- Lawrence, J.; Goldman, D.B.; Achar, S.; Blascovich, G.M.; Desloge, J.G.; Fortes, T.; Gomez, E.M.; Häberling, S.; Hoppe, H.; Huibers, A.; et al. Project Starline: A high-fidelity telepresence system. ACM Trans. Graph. 2021, 40, 242. [Google Scholar] [CrossRef]

- Yoon, L.; Yang, D.; Chung, C.; Lee, S.H. A Full Body Avatar-Based Telepresence System for Dissimilar Spaces. arXiv 2021, arXiv:2103.04380. [Google Scholar]

- Montagud, M.; Li, J.; Cernigliaro, G.; El Ali, A.; Fernández, S.; Cesar, P. Towards socialVR: Evaluating a novel technology for watching videos together. Virtual Real. 2022, 26, 1–21. [Google Scholar] [CrossRef]

- Ozioko, O.; Dahiya, R. Smart tactile gloves for haptic interaction, communication, and rehabilitation. Adv. Intell. Syst. 2022, 4, 2100091. [Google Scholar] [CrossRef]

- Wang, J.; Qi, Y. A Multi-User Collaborative AR System for Industrial Applications. Sensors 2022, 22, 1319. [Google Scholar] [CrossRef]

- Regenbrecht, H.; Park, N.; Duncan, S.; Mills, S.; Lutz, R.; Lloyd-Jones, L.; Ott, C.; Thompson, B.; Whaanga, D.; Lindeman, R.W.; et al. Ātea Presence—Enabling Virtual Storytelling, Presence, and Tele-Co-Presence in an Indigenous Setting. IEEE Technol. Soc. Mag. 2022, 41, 32–42. [Google Scholar] [CrossRef]

- Kim, D.; Jo, D. Effects on Co-Presence of a Virtual Human: A Comparison of Display and Interaction Types. Electronics 2022, 11, 367. [Google Scholar] [CrossRef]

- Sensiks. Sensory Reality Pods & Platform. Available online: https://www.sensiks.com/ (accessed on 19 October 2022).

- Agiwal, M.; Roy, A.; Saxena, N. Next generation 5G wireless networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2016, 18, 1617–1655. [Google Scholar] [CrossRef]

- van der Hooft, J.; Wauters, T.; De Turck, F.; Timmerer, C.; Hellwagner, H. Towards 6dof http Adaptive Streaming through Point Cloud Compression. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2405–2413. [Google Scholar]

- Blackwell, C.J.; Khan, J.; Chen, X. 54-6: Holographic 3D Telepresence System with Light Field 3D Displays and Depth Cameras over a LAN. In Proceedings of the SID Symposium Digest of Technical Papers; Wiley Online Library: Hoboken, NJ, USA, 2021; Volume 52, pp. 761–763. [Google Scholar]

- Roth, D.; Yu, K.; Pankratz, F.; Gorbachev, G.; Keller, A.; Lazarovici, M.; Wilhelm, D.; Weidert, S.; Navab, N.; Eck, U. Real-Time Mixed Reality Teleconsultation for Intensive Care Units in Pandemic Situations. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Lisbon, Portugal, 27 March–1 April 2021; pp. 693–694. [Google Scholar]

- Langa, S.F.; Montagud, M.; Cernigliaro, G.; Rivera, D.R. Multiparty Holomeetings: Toward a New Era of Low-Cost Volumetric Holographic Meetings in Virtual Reality. IEEE Access 2022, 10, 81856–81876. [Google Scholar] [CrossRef]

- Kachach, R.; Perez, P.; Villegas, A.; Gonzalez-Sosa, E. Virtual Tour: An Immersive Low Cost Telepresence System. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 504–506. [Google Scholar]

- Fadzli, F.E.; Ismail, A.W. A Robust Real-Time 3D Reconstruction Method for Mixed Reality Telepresence. Int. J. Innov. Comput. 2020, 10. [Google Scholar] [CrossRef]

- Vellingiri, S.; White-Swift, J.; Vania, G.; Dourty, B.; Okamoto, S.; Yamanaka, N.; Prabhakaran, B. Experience with a Trans-Pacific Collaborative Mixed Reality Plant Walk. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 238–245. [Google Scholar]

- Li, R. Towards a New Internet for the Year 2030 and Beyond. In Proceedings of the Third Annual ITU IMT-2020/5G Workshop and Demo Day, Geneva, Switzerland, 18 July 2018; pp. 1–21. [Google Scholar]

- Qualcomm, Ltd. VR And AR Pushing Connectivity Limits; Qualcomm, Ltd.: San Diego, CA, USA, 2018. [Google Scholar]

- Jansen, J.; Subramanyam, S.; Bouqueau, R.; Cernigliaro, G.; Cabré, M.M.; Pérez, F.; Cesar, P. A Pipeline for Multiparty Volumetric Video Conferencing: Transmission of Point Clouds over Low Latency DASH. In Proceedings of the 11th ACM Multimedia Systems Conference, Istanbul, Turkey, 8–11 June 2020; pp. 341–344.

- Gasques, D.; Johnson, J.G.; Sharkey, T.; Feng, Y.; Wang, R.; Xu, Z.R.; Zavala, E.; Zhang, Y.; Xie, W.; Zhang, X.; et al. ARTEMIS: A Collaborative Mixed-Reality System for Immersive Surgical Telementoring. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–14.

- Quin, T.; Limbu, B.; Beerens, M.; Specht, M. HoloLearn: Using Holograms to Support Naturalistic Interaction in Virtual Classrooms. In Proceedings of the 1st International Workshop on Multimodal Immersive Learning Systems, MILeS 2021, Virtual, 20–24 September 2021.

- Gunkel, S.N.; Hindriks, R.; Assal, K.M.E.; Stokking, H.M.; Dijkstra-Soudarissanane, S.; Haar, F.T.; Niamut, O. VRComm: An End-to-End Web System for Real-Time Photorealistic Social VR Communication. In Proceedings of the 12th ACM Multimedia Systems Conference, Istanbul, Turkey, 28 September–1 October 2021; pp. 65–79.

- Kim, H.i.; Kim, T.; Song, E.; Oh, S.Y.; Kim, D.; Woo, W. Multi-Scale Mixed Reality Collaboration for Digital Twin. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 4–8 October 2021; pp. 435–436.

- Fadzli, F.; Kamson, M.; Ismail, A.; Aladin, M. 3D Telepresence for Remote Collaboration in Extended Reality (xR) Application. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Chennai, India, 16–17 September 2020; Volume 979, p. 012005.

- Olin, P.A.; Issa, A.M.; Feuchtner, T.; Grønbæk, K. Designing for Heterogeneous Cross-Device Collaboration and Social Interaction in Virtual Reality. In Proceedings of the 32nd Australian Conference on Human-Computer Interaction, Sydney, NSW, Australia, 2–4 December 2020; pp. 112–127.

- Ericsson. The Spectacular Rise of Holographic Communication; Ericsson: Stockholm, Sweden, 2022.

- ITU. Representative Use Cases and Key Network Requirements for Network 2030; ITU: Geneva, Switzerland, 2020.

- Huawei. Communications Network 2030; Huawei: Shenzhen, China, 2021.

- Lee, Y.; Yoo, B. XR collaboration beyond virtual reality: Work in the real world. J. Comput. Des. Eng. 2021, 8, 756–772.

- Kim, H.; Young, J.; Medeiros, D.; Thompson, S.; Rhee, T. TeleGate: Immersive Multi-User Collaboration for Mixed Reality 360 Video. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Lisbon, Portugal, 27 March–1 April 2021; pp. 532–533.

- Jasche, F.; Kirchhübel, J.; Ludwig, T.; Tolmie, P. BeamLite: Diminishing Ecological Fractures of Remote Collaboration through Mixed Reality Environments. In Proceedings of the 10th International Conference on Communities & Technologies: Wicked Problems in the Age of Tech (C&T’21), Seattle, WA, USA, 20–25 June 2021; pp. 200–211.

- Weinmann, M.; Stotko, P.; Krumpen, S.; Klein, R. Immersive VR-Based Live Telepresence for Remote Collaboration and Teleoperation. Available online: https://www.dgpf.de/src/tagung/jt2020/proceedings/proceedings/papers/50_DGPF2020_Weinmann_et_al.pdf (accessed on 18 October 2022).

- He, Z.; Du, R.; Perlin, K. CollaboVR: A Reconfigurable Framework for Creative Collaboration in Virtual Reality. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 9–13 November 2020; pp. 542–554.

- Jones, B.; Zhang, Y.; Wong, P.N.; Rintel, S. Belonging there: VROOM-ing into the uncanny valley of XR telepresence. Proc. ACM Hum.-Comput. Interact. 2021, 5, 1–31.

- Hoppe, A.H.; van de Camp, F.; Stiefelhagen, R. ShiSha: Enabling shared perspective with face-to-face collaboration using redirected avatars in virtual reality. Proc. ACM Hum.-Comput. Interact. 2021, 4, 1–22.

- Rekimoto, J.; Uragaki, K.; Yamada, K. Behind-the-Mask: A Face-Through Head-Mounted Display. In Proceedings of the 2018 International Conference on Advanced Visual Interfaces, Castiglione della Pescaia, Italy, 29 May–1 June 2018; pp. 1–5.

- Zhao, Y.; Xu, Q.; Chen, W.; Du, C.; Xing, J.; Huang, X.; Yang, R. Mask-Off: Synthesizing Face Images in the Presence of Head-Mounted Displays. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 267–276.

- Song, G.; Cai, J.; Cham, T.J.; Zheng, J.; Zhang, J.; Fuchs, H. Real-Time 3D Face-Eye Performance Capture of a Person Wearing VR Headset. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 923–931.

- Nijholt, A. Capturing Obstructed Nonverbal Cues in Augmented Reality Interactions: A Short Survey. In Proceedings of the International Conference on Industrial Instrumentation and Control, Kolkata, India, 15–17 October 2022; pp. 1–9.

- Chen, S.Y.; Lai, Y.K.; Xia, S.; Rosin, P.; Gao, L. 3D face reconstruction and gaze tracking in the HMD for virtual interaction. IEEE Trans. Multimed. 2022.

- Lombardi, S.; Saragih, J.; Simon, T.; Sheikh, Y. Deep appearance models for face rendering. ACM Trans. Graph. (ToG) 2018, 37, 1–13.

- IETF. RFC 2386: A Framework for QoS-Based Routing in the Internet; IETF: Fremont, CA, USA, 1998.

- Chin, S.Y.; Quinton, B.R. Dynamic Object Comprehension: A Framework For Evaluating Artificial Visual Perception. arXiv 2022, arXiv:2202.08490.

- Wang, P.; Bai, X.; Billinghurst, M.; Zhang, S.; Wei, S.; Xu, G.; He, W.; Zhang, X.; Zhang, J. 3DGAM: Using 3D gesture and CAD models for training on mixed reality remote collaboration. Multimed. Tools Appl. 2021, 80, 31059–31084.

- Han, B.; Kim, G.J. AudienceMR: Extending the Local Space for Large-Scale Audience with Mixed Reality for Enhanced Remote Lecturer Experience. Appl. Sci. 2021, 11, 9022.

- Loper, M.; Mahmood, N.; Romero, J.; Pons-Moll, G.; Black, M.J. SMPL: A skinned multi-person linear model. ACM Trans. Graph. (TOG) 2015, 34, 1–16.

- Osman, A.A.; Bolkart, T.; Black, M.J. STAR: Sparse Trained Articulated Human Body Regressor. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 598–613.

| Group of Challenges | Challenges |
|---|---|
| Avatar Embodiment | High sense of presence and realism, detailed avatar faces and facial expressions, completeness of avatar bodies and their position, fidelity of movements and gestures, locomotion synchronization between the avatar and the user, sense of body ownership and recognition of users’ own movements, and avatar placement and cooperation in the interaction space [51]; |
| | Need for higher visual and behavioral avatar fidelity [24,47,49,50,54,66,67,68,77,96,99,100]. |
| Gesture Support | Dynamic and accurate body tracking and replication of gestures and body movements by virtual avatars (human-to-avatar synchronization) [67]; |
| | Calibration and synchronization between different users’ coordinate systems. |
| Gaze Support | Dynamic and accurate eye tracking; |
| | Gaze direction estimation and visualization [53]. |
| Emotion Support | Dynamic face capturing/tracking and lip-to-voice synchronization; |
| | HMD face occlusions [101,102,103,104,105,106]. |
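
The challenge of calibrating and synchronizing different users’ coordinate systems, listed in the table above, can be illustrated with a minimal sketch. It assumes, as a simplification, that every user’s tracking frame is gravity-aligned (y axis up), so two frames differ only by a rotation about the vertical axis (yaw) and a translation, and that the same two physical anchor points (for example, floor markers) are measured in both frames. The function names and the two-anchor method are hypothetical illustrations, not the procedure of any surveyed system.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_about_y(p: Vec3, yaw: float) -> Vec3:
    """Rotate point p about the vertical (y) axis, acting on its x-z components.

    The rotation increases the planar angle atan2(z, x) by `yaw`.
    """
    x, y, z = p
    c, s = math.cos(yaw), math.sin(yaw)
    return (x * c - z * s, y, x * s + z * c)


def calibrate_b_to_a(a1: Vec3, a2: Vec3, b1: Vec3, b2: Vec3) -> Tuple[float, Vec3]:
    """Estimate (yaw, translation) mapping user B's frame into user A's frame.

    Hypothetical setup: both frames are gravity-aligned, and a1/a2 and b1/b2
    are the same two physical anchors as measured by users A and B.
    """
    if math.hypot(b2[0] - b1[0], b2[2] - b1[2]) < 1e-6:
        raise ValueError("anchors need a horizontal separation")
    # Heading of the anchor-to-anchor vector in each frame's horizontal plane.
    heading_a = math.atan2(a2[2] - a1[2], a2[0] - a1[0])
    heading_b = math.atan2(b2[2] - b1[2], b2[0] - b1[0])
    yaw = heading_a - heading_b
    # Translation that moves the rotated first anchor onto its A-frame position.
    rb1 = rotate_about_y(b1, yaw)
    t = (a1[0] - rb1[0], a1[1] - rb1[1], a1[2] - rb1[2])
    return yaw, t


def b_to_a(p: Vec3, yaw: float, t: Vec3) -> Vec3:
    """Express a point tracked in B's frame (e.g., a hand joint) in A's frame."""
    r = rotate_about_y(p, yaw)
    return (r[0] + t[0], r[1] + t[1], r[2] + t[2])


if __name__ == "__main__":
    yaw, t = calibrate_b_to_a((0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
                              (2.0, 0.0, 3.0), (2.0, 0.0, 4.0))
    # The second anchor, seen by B, should land close to (1.0, 0.0, 0.0) in A's frame.
    print(b_to_a((2.0, 0.0, 4.0), yaw, t))
```

Once the yaw and translation are known, tracked joints or avatar poses from user B can be re-expressed in user A’s frame before rendering. With noisy tracking, more than two anchors and a least-squares rigid fit (for example, the Kabsch or Umeyama method) would generally be preferable to this exact two-point solution.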

| Group of Challenges | Challenges |
|---|---|
| Evaluation | QoS alone is not enough to assess the overall system performance experienced by end users; |
| | QoE: a tangle of many different factors impacting the overall system performance [60,61]; |
| | Evaluation with a very large sample of participants, with or without prior training, performing different tasks under variable conditions; |
| | Evaluation of various subjective sense modalities, such as appearance realism of avatars and/or environments, behavioral realism, feeling of presence and immersion, system usability, ease of use, perceived time for task performance, rate of successfully performed tasks, etc.; |
| | New evaluation metrics compatible with the 3D data format [108]; |
| | Developing ML/AI QoE assessment models [59,61]. |
| Security and Privacy | Security and privacy enhancement is often neglected in HTC systems [56]; |
| | Need for securing the more informative 3D data representing human bodies and environments (size, shape, surface texture, object placement and orientation, etc.); |
| | Need for securing acquired sensitive user data (biometric data such as face shape, iris or retina data, hand geometry, and fingerprints, or behavioral data such as specific facial expressions, body movements, or fine-grained user movements [51]); |
| | Need for protecting application-specific data from privacy leakage; |
| | Improving security at the network level while dealing with large amounts of data [58]; |
| | Increasing user trust in immersive applications, especially for purposes other than plain communication. |
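
The evaluation challenges above (QoE assessment and user studies with very large participant samples) can be made concrete with a minimal sketch of one widely used summary for subjective ratings: the mean opinion score (MOS) with a confidence interval. The function name and the ratings are hypothetical, and the normal-approximation interval (z = 1.96, roughly 95%) is a simplifying assumption; with small panels a Student-t quantile would be more appropriate. The sketch illustrates that the interval half-width shrinks roughly with the square root of the number of raters.

```python
import math
import statistics
from typing import Sequence, Tuple


def mos_with_ci(ratings: Sequence[float], z: float = 1.96) -> Tuple[float, float]:
    """Return the mean opinion score and the half-width of an approximate CI.

    Uses the normal approximation (z = 1.96 for roughly 95% coverage).
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    mos = statistics.fmean(ratings)
    sd = statistics.stdev(ratings)  # sample standard deviation (n - 1 denominator)
    return mos, z * sd / math.sqrt(len(ratings))


if __name__ == "__main__":
    small_panel = [4, 5, 3, 4, 4, 5, 3, 4]  # hypothetical 5-point ratings
    large_panel = small_panel * 8           # same spread, eight times the raters
    for name, panel in (("8 raters", small_panel), ("64 raters", large_panel)):
        mos, hw = mos_with_ci(panel)
        print(f"{name}: MOS {mos:.2f} ± {hw:.2f}")
```

With the same spread of ratings, going from 8 to 64 hypothetical raters narrows the half-width from about 0.52 to about 0.17 points on the 5-point scale, which is one reason subjective HTC evaluations benefit from large participant samples.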

| Reference | Input | Networking | Output |
|---|---|---|---|
| [47] | Xsens suits, HTC Vive with hand controllers | PUN | A tablet device, ODG R7 smart glasses, and HTC Vive |
| [49] | Microsoft Azure Kinect, Microsoft HoloLens 2 eye and hand tracking, SALSA LipSync, HTC Vive Pro Eye camera, HTC lip-sync facial tracker, and HTC Vive trackers | RTSP | HTC Vive Pro Eye HMD and Microsoft HoloLens 2 |
| [50] | HTC Vive head tracking, Vive controllers, four OptiTrack Prime 13 cameras, and Microsoft Kinect V2 | Unity Multiplayer and Networking API | HTC Vive and CAVE display |
| [52] | Leap Motion, aGlass gaze tracking, and cameras | Not discussed | HTC HMD, aGlass, and projector |
| [53] | HoloLens 2 eye tracking, 360° Ricoh Theta camera, and Vive Pro Eye VR HMD | Not discussed | VR HMD |
| [64] | 360° Ricoh Theta V, Samsung 360 Round, Insta360 Pro 2, HMD tracking, and Oculus Touch controllers | LAN using UDP over a 1 Gbps Cat6 Ethernet cable | Oculus Rift CV1 and a video see-through HMD (Vive Pro with a ZED Mini camera attached to its front) |
| [66] | Stereo cameras, monochrome tracking cameras, RGB-D capture pods, and microphone array | WebRTC | Head-tracked autostereoscopic display |
| [67] | RGB-D ZED Mini camera, Vive Pro HMD tracking, Vive trackers, and Hi5 VR Gloves | PUN | HTC Vive Pro headset |
| [68] | Microsoft Kinect v2 | RabbitMQ | VR HMD |
| [70] | CAD model processing and scene creation | FFmpeg library for video transmission | Tablets |
| [71] | RGB-D cameras, immersive trackable HMD | Not discussed | Immersive trackable HMD |
| [72] | Depth sensor and microphone array | Not discussed | HTC Vive, large TV display |
| [76] | Microsoft Azure Kinect, microphones, and ZED stereo cameras | Gbit Ethernet LAN, WebRTC | Looking Glass light field display (LFD) |
| [77] | Microsoft Azure Kinect, HMD eye tracking, and Vive trackers | RTSP | Microsoft HoloLens 2 and HTC Vive Pro Eye |
| [78] | Microsoft Azure Kinect | DASH or socket-based connections, managed by an orchestrator | Oculus Quest 2 HMD |
| [79] | Ricoh Theta V 360° camera | 4G or 5G connection, RTSP | Samsung VR HMD, Samsung Galaxy S8 smartphone |
| [80] | Intel RealSense D415, VR HMD eye tracking, and Leap Motion | 10 Gb Ethernet connection with the Draco library | HTC Vive Pro Eye and Magic Leap One |
| [81] | Microsoft Kinect | WAN through 100 Gbps optical links | Oculus Rift |
| [84] | Intel RealSense D415 and Intel RealSense D435 cameras | MPEG-DASH | Oculus Rift |
| [85] | Microsoft Azure Kinect, Intel RealSense cameras, an OptiTrack optical marker system, IMU-equipped gloves, and wireless pen | Network Library for Unity implementing UDP and TCP transmission, WebRTC | Microsoft HoloLens v1 and HTC Vive Pro |
| [86] | Intel RealSense, Microsoft Azure Kinect | WebRTC | Browser-based platform |
| [87] | Microsoft Kinect v2, Microsoft Azure Kinect, and Intel RealSense | WebRTC | VR headset |
| [88] | Apple iPhone 12 Pro, hand trackers | PUN | Microsoft HoloLens 2 and Oculus Quest 2 |
| [89] | Intel RealSense D435, Intel RealSense T265, Leap Motion, and Oculus Touch device | PUN | Project North Star HMD and Oculus Rift |
| [90] | HTC Vive head tracking, Leap Motion, and Huawei smartphone | Photon Networking Engine | HTC Vive and Huawei smartphone |
| [94] | Intel RealSense camera, Leap Motion, VR controllers (Vive, Oculus), and motion trackers (OptiTrack and Kinect) | Not discussed | VR HMD and AR tablet |
| [95] | MR 360° camera, HTC Vive controller | Not discussed | Large semi-encompassing immersive display |
| [96] | AR and VR headset tracking, Vive controllers, and Microsoft HoloLens hand tracking | Not discussed | Microsoft HoloLens, HTC Vive |
| [97] | RGB-D sensors in mobile phones and Microsoft Kinect | Not discussed | VR HMD |
| [98] | Touch controllers | UDP network | Oculus Rift CV1 |
| [99] | 360° camera, Windows MR handheld motion controllers, and HP Windows MR/VR headset sensors | Not discussed | Microsoft HoloLens and HP Windows MR/VR headset |
| [100] | HTC Vive Pro head tracking and Vive controllers | 1 Gbit Ethernet connection | HTC Vive Pro |
| [109] | Logitech web camera and Leap Motion | Unity 3D, WampServer, and Microsoft’s Mixed Reality Toolkit | Microsoft HoloLens and HTC Vive Pro Eye |
| [110] | ZED Mini depth camera | Not discussed | Samsung Odyssey+ |

| Reference | Scalability | Avatar Embodiment | Non-Verbal Cues | User Study | Latency Evaluation | Security and Privacy |
|---|---|---|---|---|---|---|
| [47] | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ |
| [49] | ✗ | ✓ (both types of avatars) | ✓ (limited finer gestures and gaze) | ✓ | ✓ (566.30 ms for pre-designed avatars and 502.31 ms for photorealistic avatars) | ✗ |
| [50] | ✓ | ✓ (both types of avatars) | ✓ (gestures) | ✓ | ✗ | ✗ |
| [52] | ✗ | ✗ | ✓ (gestures and gaze) | ✓ | ✗ | ✓ |
| [53] | ✗ | ✓ (pre-designed avatars) | ✓ (eye gaze) | ✓ | ✗ | ✗ |
| [64] | ✗ | ✓ (pre-designed avatar) | ✓ (gestures and gaze) | ✓ | ✓ (1.2 s) | ✗ |
| [66] | ✗ | ✓ (realistic avatar) | ✓ (eye contact, hand gestures, and body language) | ✓ | ✓ (105.8 ms, measured on the off-to-on transition of an LED) | ✗ |
| [67] | ✗ | ✓ (pre-designed avatar) | ✓ (gestures and pose) | ✓ | ✗ | ✗ |
| [68] | ✗ | ✓ (realistic avatar) | ✗ | ✓ | ✗ | ✗ |
| [70] | ✓ | ✗ | ✗ | ✓ | ✗ | ✓ |
| [71] | ✓ | ✓ (realistic avatar) | ✗ | ✗ | ✓ (0.5 ms) | ✗ |
| [72] | ✗ | ✓ (pre-designed avatar) | ✓ (database-based facial expressions, gestures, and gaze) | ✓ | ✗ | ✗ |
| [76] | ✗ | ✓ | ✗ | ✗ | ✓ (210–230 ms at 29–30 fps in duplex mode) | ✗ |
| [77] | ✓ | ✓ (both types of avatars) | ✓ (animated eye movements) | ✗ | ✓ (300–400 ms) | ✗ |
| [78] | ✓ (up to 6 users) | ✓ (realistic avatar) | ✗ | ✓ | ✓ (180.5 ms and 251.2 ms for 2 and 4 point clouds, respectively) | ✗ |
| [79] | ✗ | ✗ | ✓ (gaze) | ✓ | ✓ (2K at 6 Mbps: 400 ms; 4K: about 1 s) | ✗ |
| [80] | ✗ | ✗ (pre-designed avatar) | ✓ (gaze, gestures, and head position) | ✓ | ✓ (about 300 ms; less than 10 ms for the cues) | ✗ |
| [81] | ✗ | ✓ (realistic avatar) | ✓ (gestures) | ✓ | ✓ | ✗ |
| [84] | Not discussed | ✓ (realistic avatar) | ✗ | ✗ | ✓ | ✗ |
| [85] | ✗ | ✓ (pre-designed avatar) | ✓ (gestures) | ✓ | ✗ | ✗ |
| [86] | ✓ | ✓ (realistic avatars) | ✗ | ✗ | ✗ | ✗ |
| [87] | ✓ | ✓ (realistic avatar) | ✗ | ✗ | ✓ (depth transmission evaluation) | ✗ |
| [88] | ✓ | ✓ (pre-designed avatar) | ✓ (head and hand poses) | ✗ | ✗ | ✗ |
| [89] | ✗ | ✓ (realistic avatar) | ✓ (gestures) | ✓ | ✓ | ✓ |
| [90] | ✓ | ✓ (pre-designed avatar) | ✓ (gestures) | ✓ | ✗ | ✗ |
| [94] | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| [95] | ✓ | ✓ (realistic avatar) | ✓ (gestures, facial expressions, and body language) | ✓ | ✗ | ✗ |
| [96] | ✓ | ✓ (pre-designed avatar) | ✓ (gestures) | ✓ | ✗ | ✗ |
| [97] | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| [98] | ✓ | ✓ (pre-designed avatar) | Not discussed | ✓ | ✓ (about 10 ms) | ✗ |
| [99] | ✗ | ✓ (pre-designed avatar) | ✓ (gestures, head gaze, mouth flapping, and periodic blinking) | ✗ | ✗ | ✗ |
| [100] | ✗ | ✓ (pre-designed avatar) | ✓ (gestures and head gaze) | ✓ | ✗ | ✗ |
| [109] | ✗ | ✗ | ✓ (gestures) | ✓ | ✗ | ✗ |
| [110] | ✗ | ✓ (pre-designed avatars) | ✗ | ✓ | ✗ | ✗ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Petkova, R.; Poulkov, V.; Manolova, A.; Tonchev, K. Challenges in Implementing Low-Latency Holographic-Type Communication Systems. Sensors 2022, 22, 9617. https://doi.org/10.3390/s22249617
