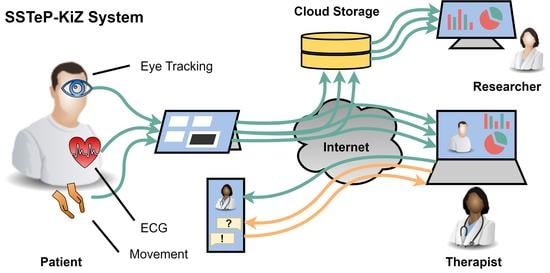

The SSTeP-KiZ System—Secure Real-Time Communication Based on Open Web Standards for Multimodal Sensor-Assisted Tele-Psychotherapy

Abstract

1. Introduction

- The synchronous recording of multiple wearable sensors at the patient’s home, namely ECG, eye tracking, egocentric video, and movement sensors.

- Real-time online streaming and processing of sensor data with an insightful visualization for the therapist.

- Questionnaire features to measure therapy progress, with gamification features that foster intrinsic motivation to fill the questionnaires out.

- A secure implementation of data storing and streaming.

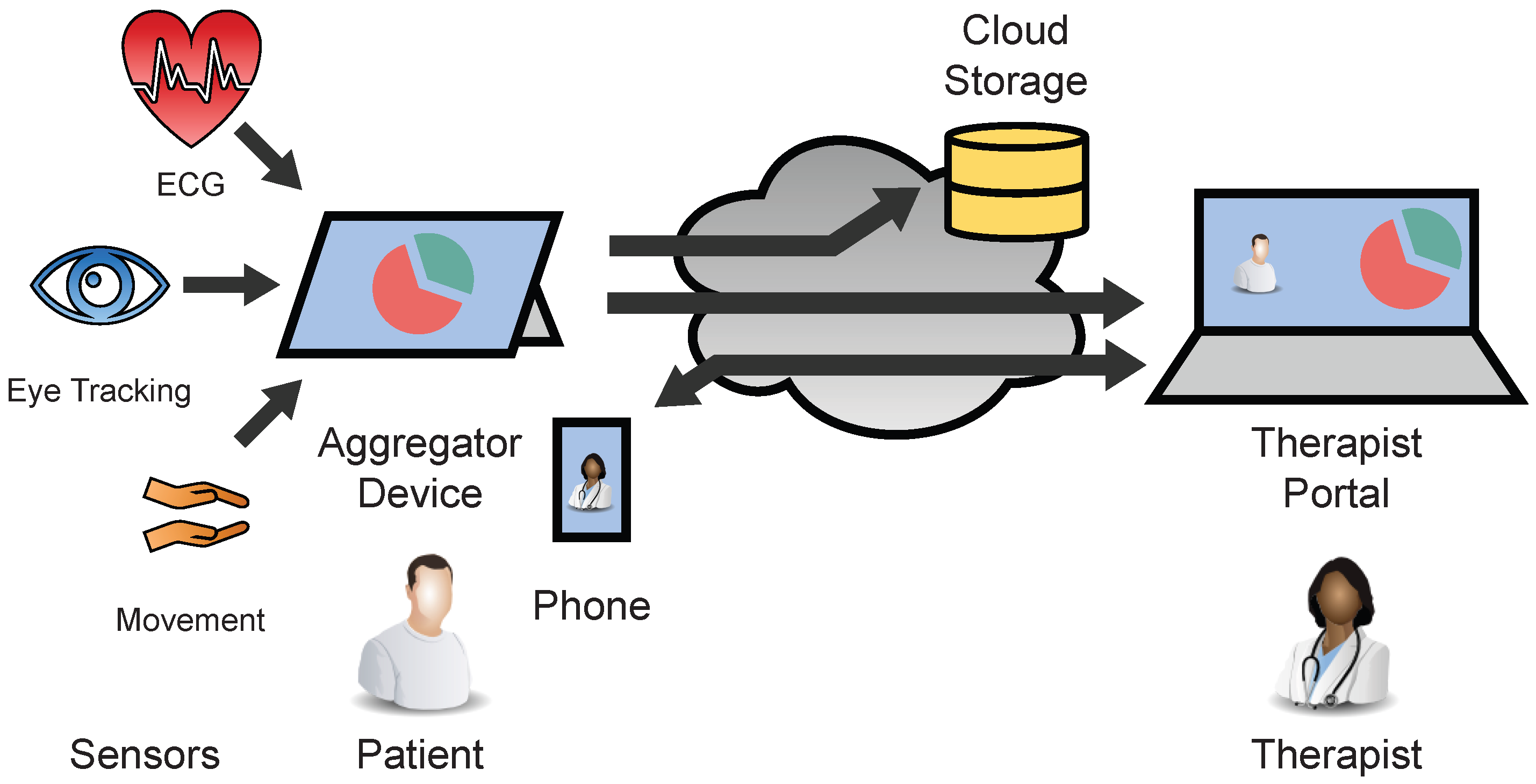

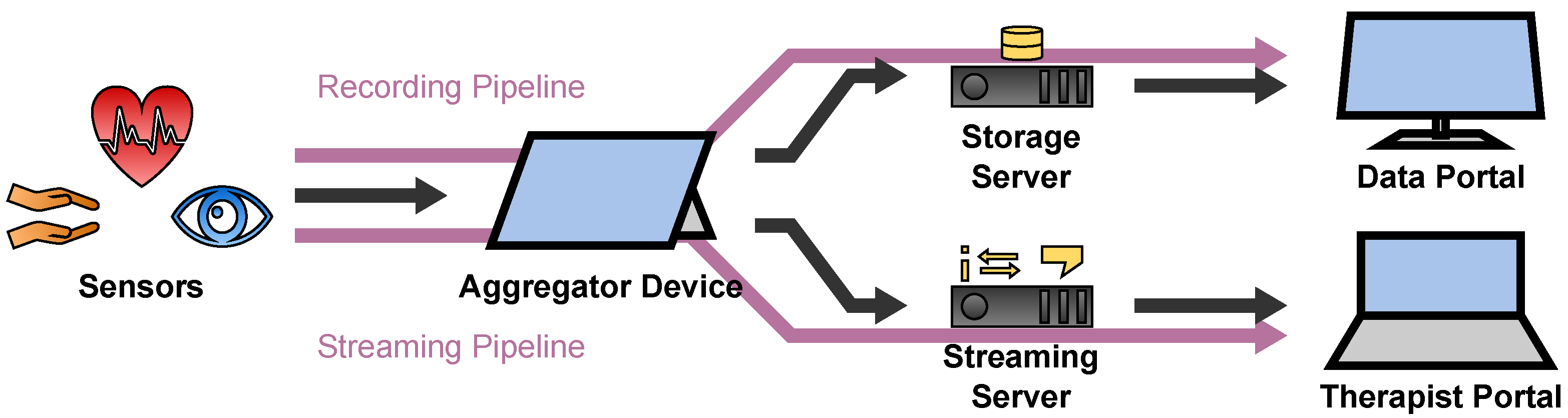

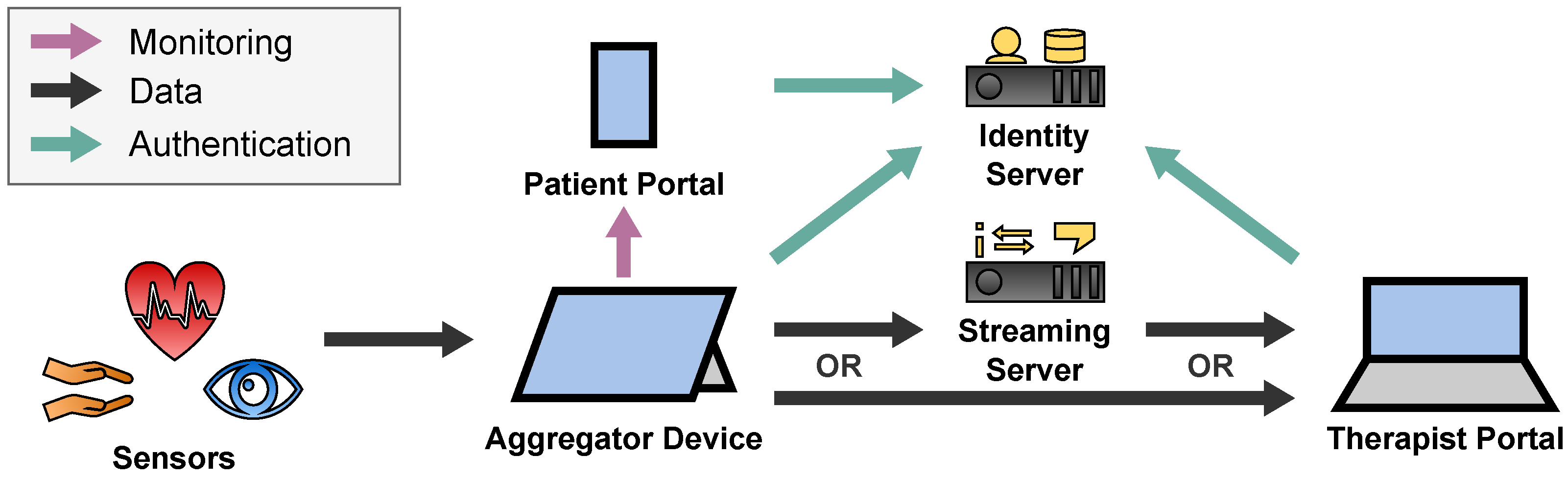

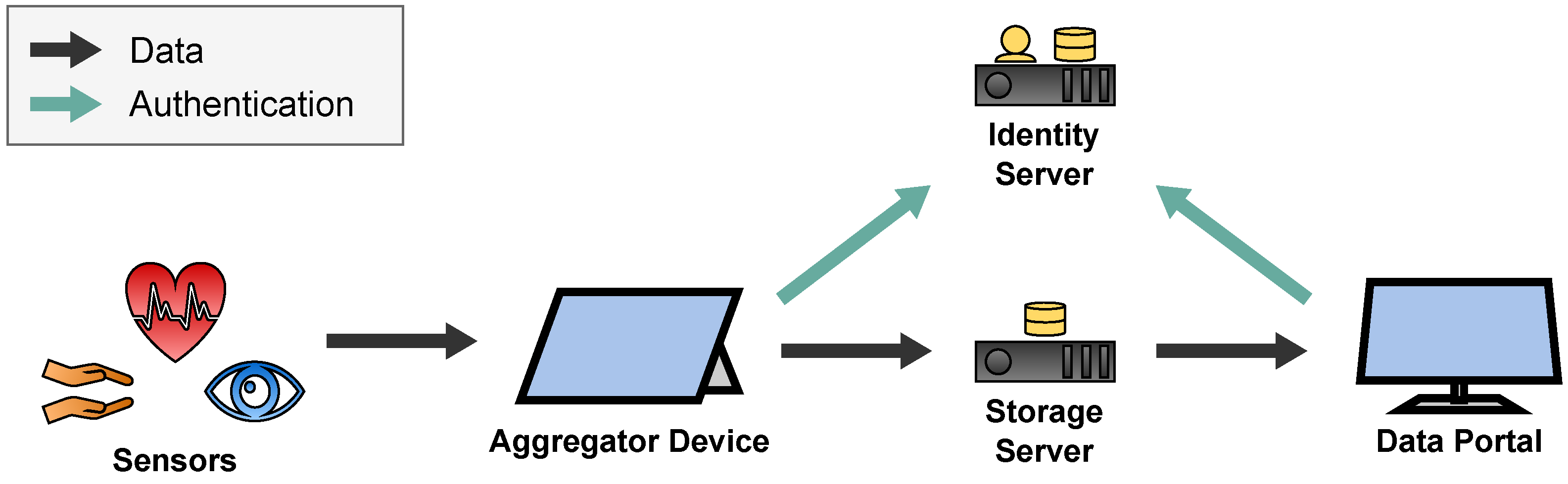

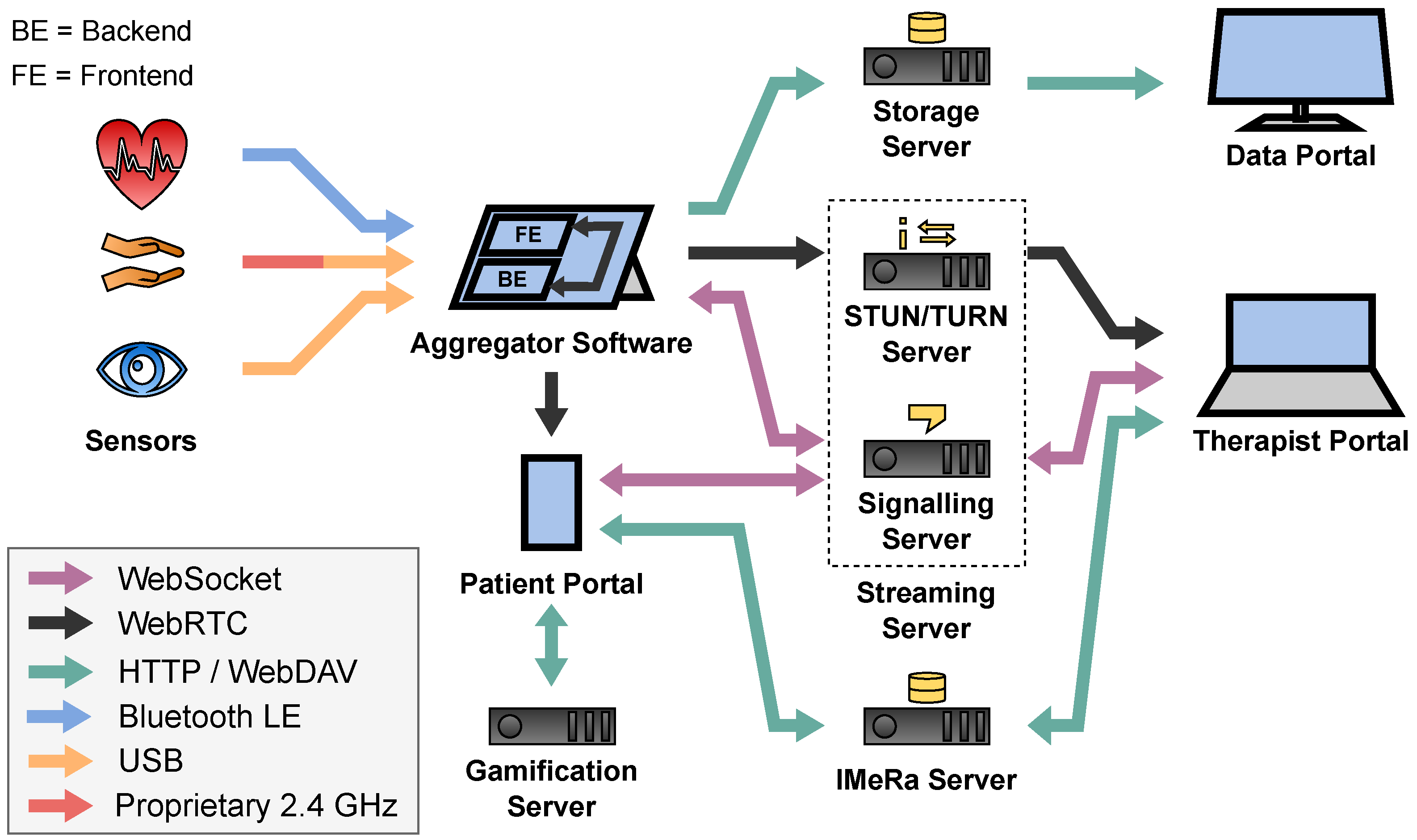

2. Architecture

- The transport architecture for the real-time streaming and the recording of the sensor data including the used sensors and how they transfer their data.

- The Questionnaire architecture where therapists can create questionnaires, send them to the patients and retrieve their responses including gamification elements to motivate the patients.

- The administration and security architecture where administrators can manage the overall system from one place.

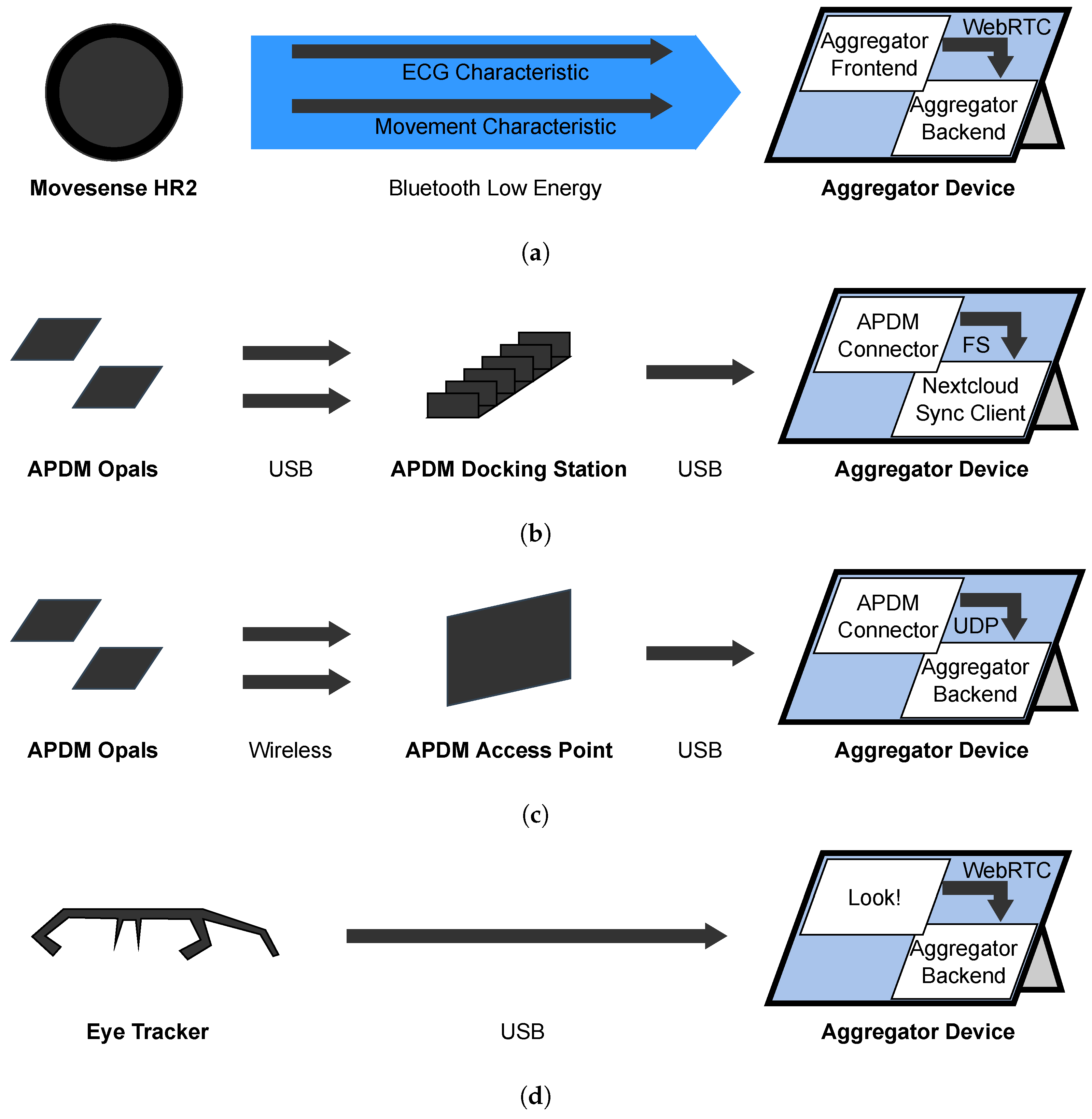

2.1. Sensor Transport Overview

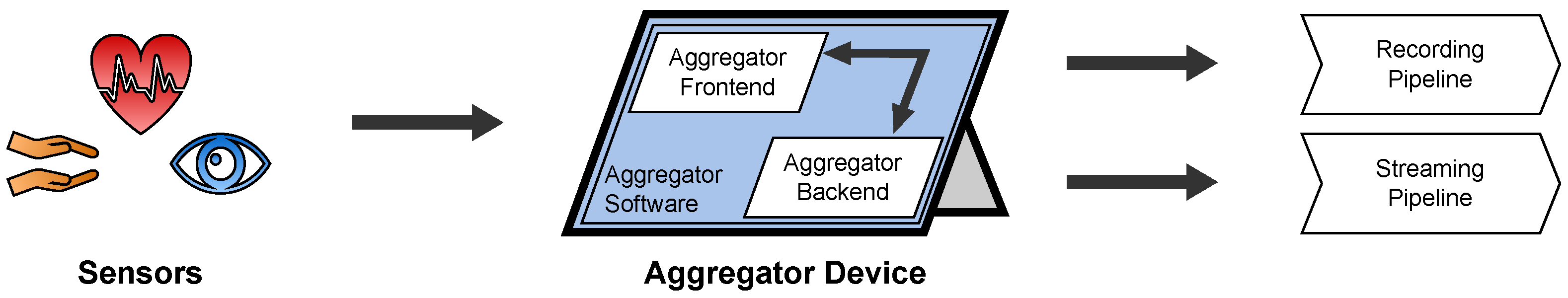

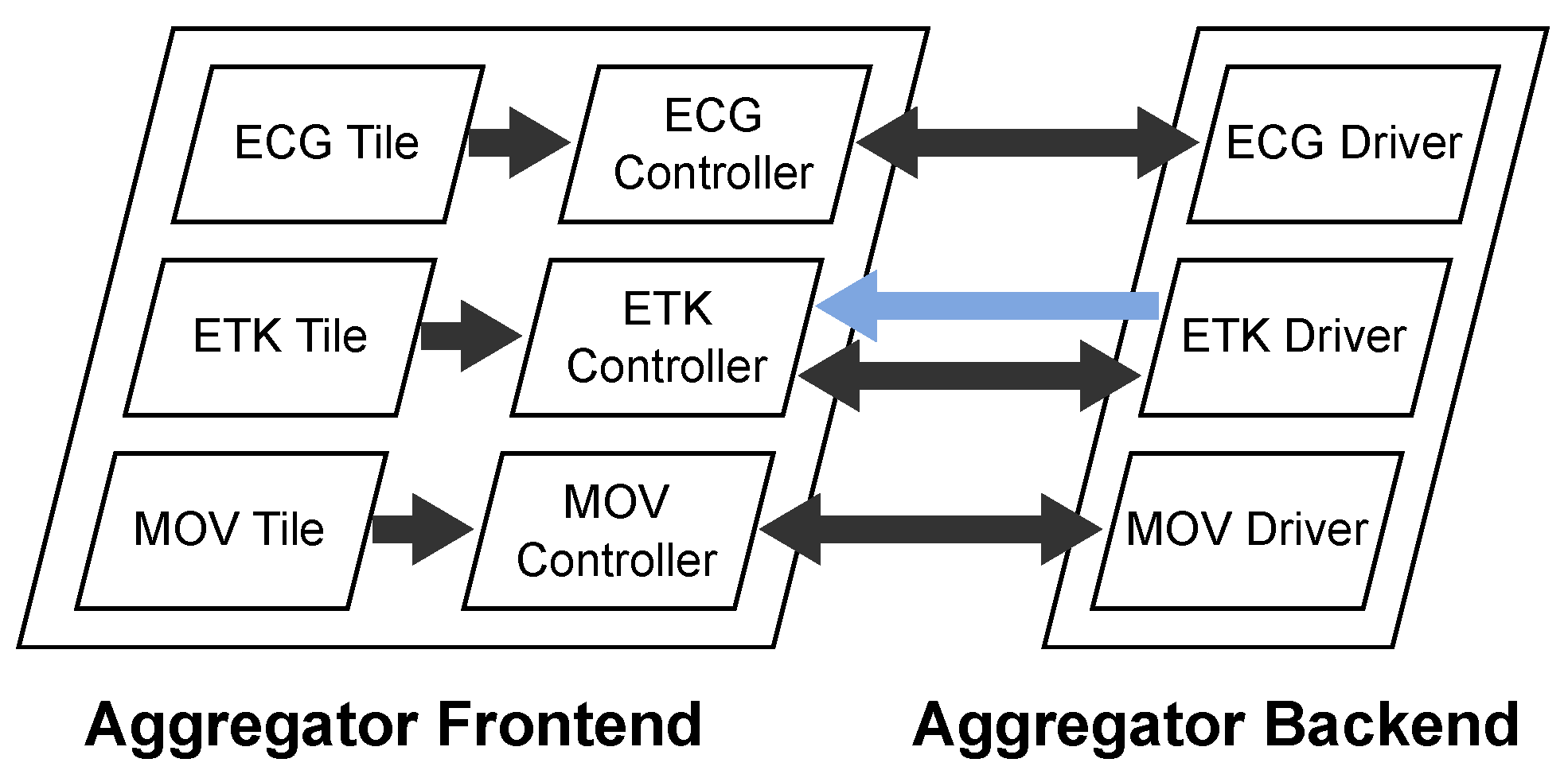

2.2. Aggregator

2.2.1. Overview

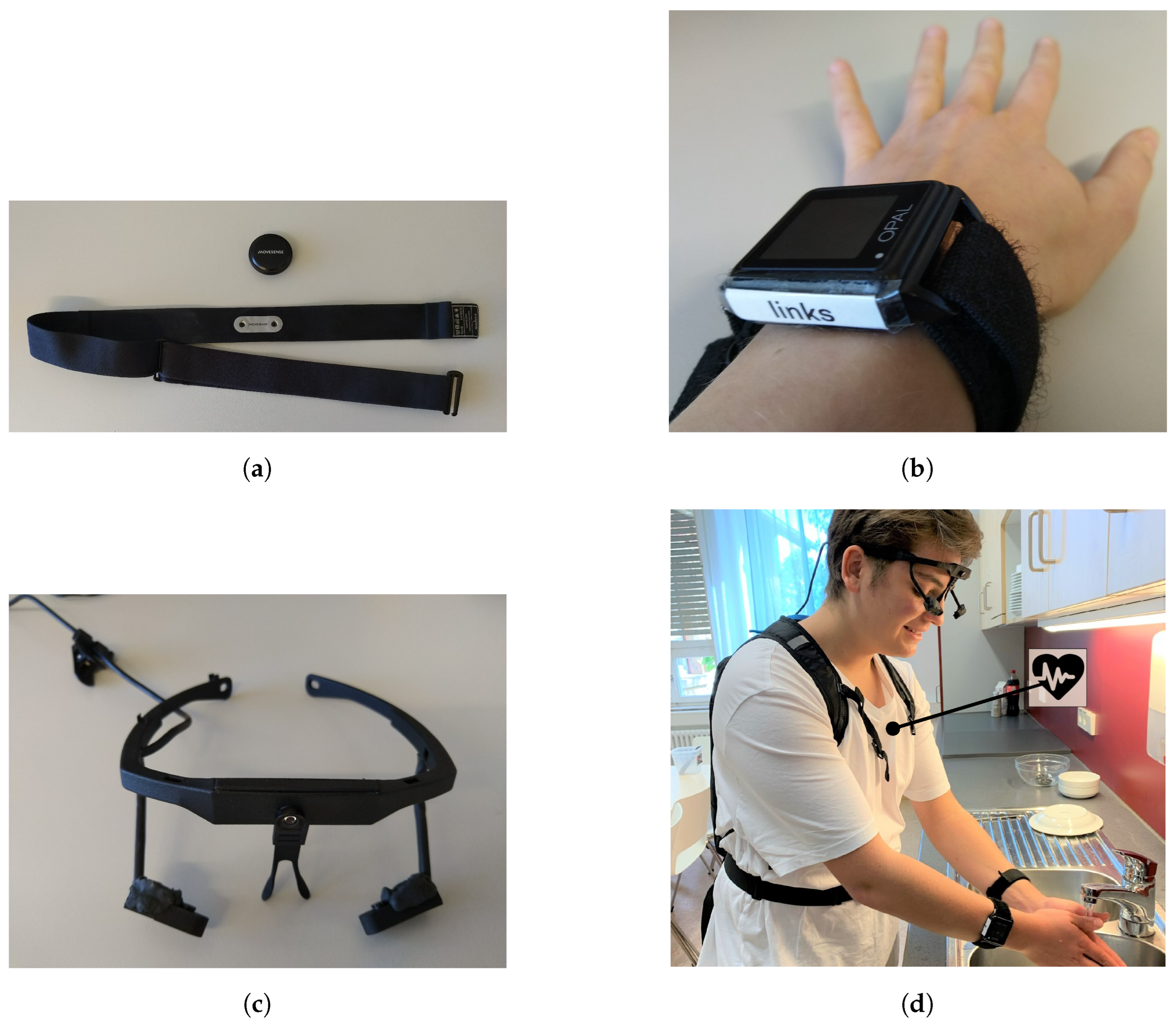

2.2.2. Hardware

- We enable BitLocker to encrypt the entire internal storage and to verify OS integrity, which prevents booting a potentially manipulated OS.

- We lock the boot order to prevent booting any OS other than the Windows installation.

- We restrict the UEFI firmware to boot only operating systems signed by Microsoft.

- We protect access to the UEFI firmware with a strong individual password to prevent unauthorized changes of the boot configuration.

- To prevent unauthorized logins, the tablets have only two Windows accounts: an Administrator account and a non-administrator patient account.

- The passwords of both accounts are strong (20 characters consisting of uppercase letters, lowercase letters, special characters, and digits) and individual for each device and patient.

- For a better user experience, patients can log in to their accounts with a 6-digit PIN or via Windows Hello face recognition.

- We prevent brute-force attacks with the following measures: after ten incorrect PIN entries, patients must use their password; after ten failed password attempts, the device is locked for 30 min.

- Patients are required to use the tablet only at home, which adds a layer of physical access control.

- Before a tablet is handed over to another patient, we format its disk entirely, clear the TPM’s key storage and reinstall the device with new UEFI and Administrator passwords.

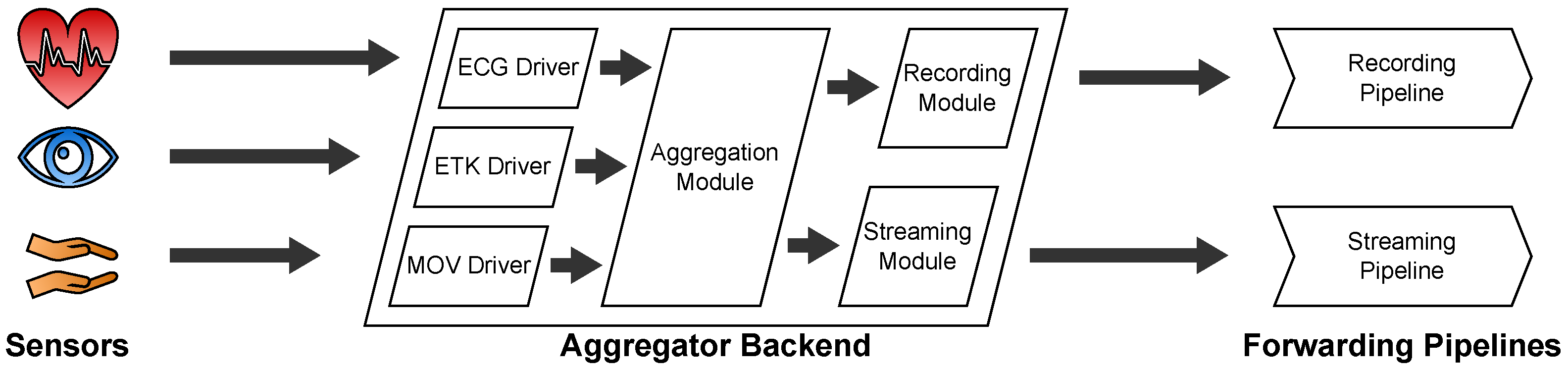

2.2.3. Backend

- If recording is on, it forwards the data to the Recording Module. The Recording Module stores the data on the internal storage of the Aggregator Device where the data continue their way through the Recording Pipeline.

- If there is an active streaming connection to a therapist, it forwards the data to the Streaming Module. The Streaming Module awaits incoming streaming connection requests from therapists via a WebSocket connection which is implemented with the Socket.IO [11] library. From there, the data continue their way through the Streaming Pipeline.
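The forwarding rule in the two items above can be sketched in a few lines of TypeScript. This is a minimal illustration under stated assumptions, not the project's actual code: the names `SensorData`, `Sink`, and `SensorRouter` are hypothetical, and the Socket.IO handling of incoming connection requests is left out entirely.

```typescript
// Hypothetical shape of one unit of sensor data (not taken from the source).
interface SensorData {
  sensor: string;
  timestampMs: number;
  payload: unknown;
}

// Hypothetical common interface for the Recording and Streaming Modules.
interface Sink {
  handle(data: SensorData): void;
}

class SensorRouter {
  // True while a recording is running.
  recording = false;
  // True while a therapist holds an active streaming connection.
  streaming = false;

  private readonly recorder: Sink;
  private readonly streamer: Sink;

  constructor(recorder: Sink, streamer: Sink) {
    this.recorder = recorder;
    this.streamer = streamer;
  }

  // Forward incoming data to every module that is currently active.
  // The two conditions are independent, so data may go to both or neither.
  route(data: SensorData): void {
    if (this.recording) {
      this.recorder.handle(data);
    }
    if (this.streaming) {
      this.streamer.handle(data);
    }
  }
}
```

Keeping the two flags independent reflects that recording and streaming are separate pipelines: a session can be recorded without a therapist watching, and vice versa.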

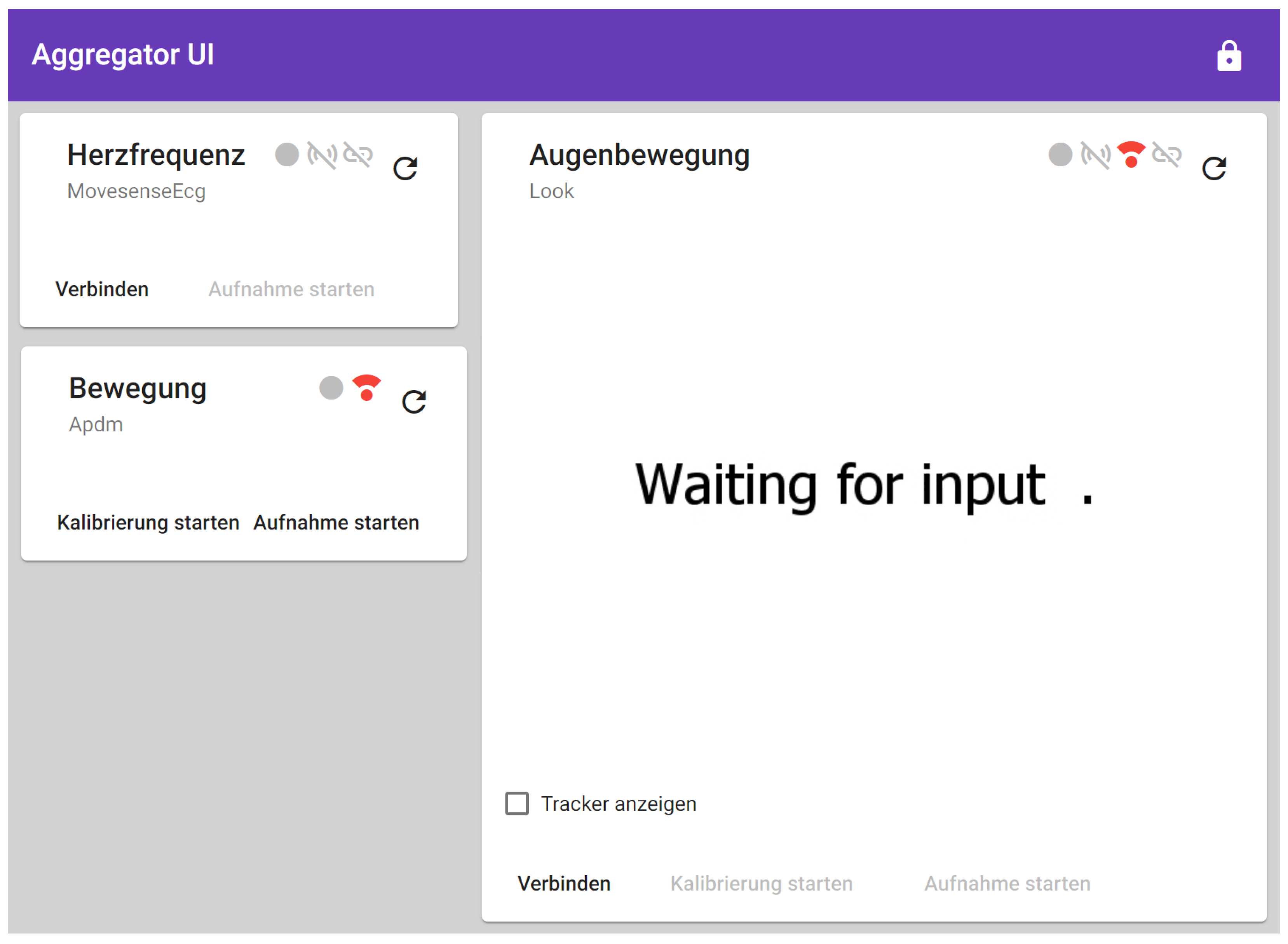

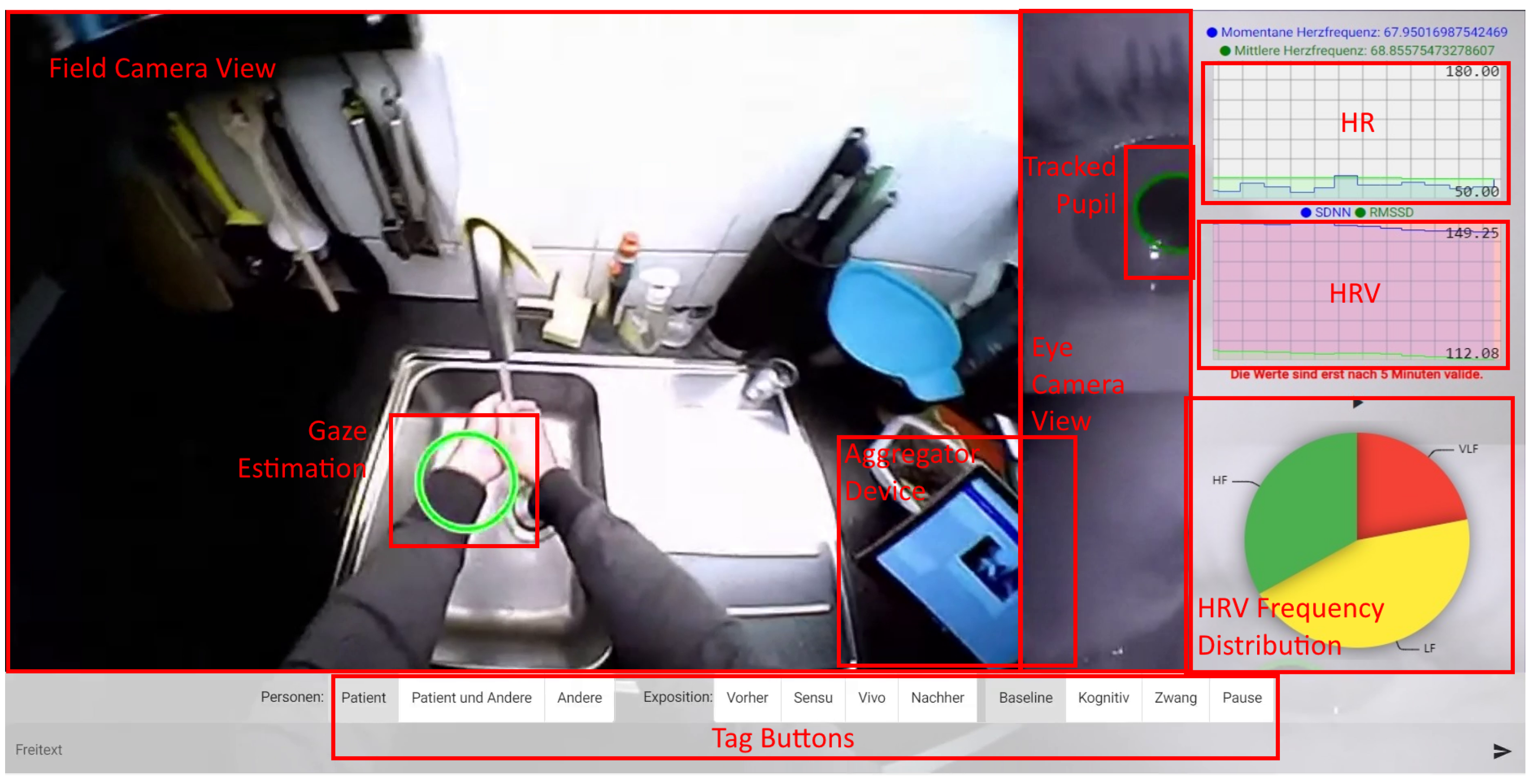

2.2.4. Frontend

2.3. Sensors

2.3.1. Movesense HR Sensor—Utilization

- The acceleration with a 3-axis accelerometer configured with a sampling rate of 52 Hz.

- The angular velocity with a 3-axis gyroscope configured with a sampling rate of 52 Hz.

- The magnetic field with a 3-axis magnetometer configured with a sampling rate of 52 Hz.

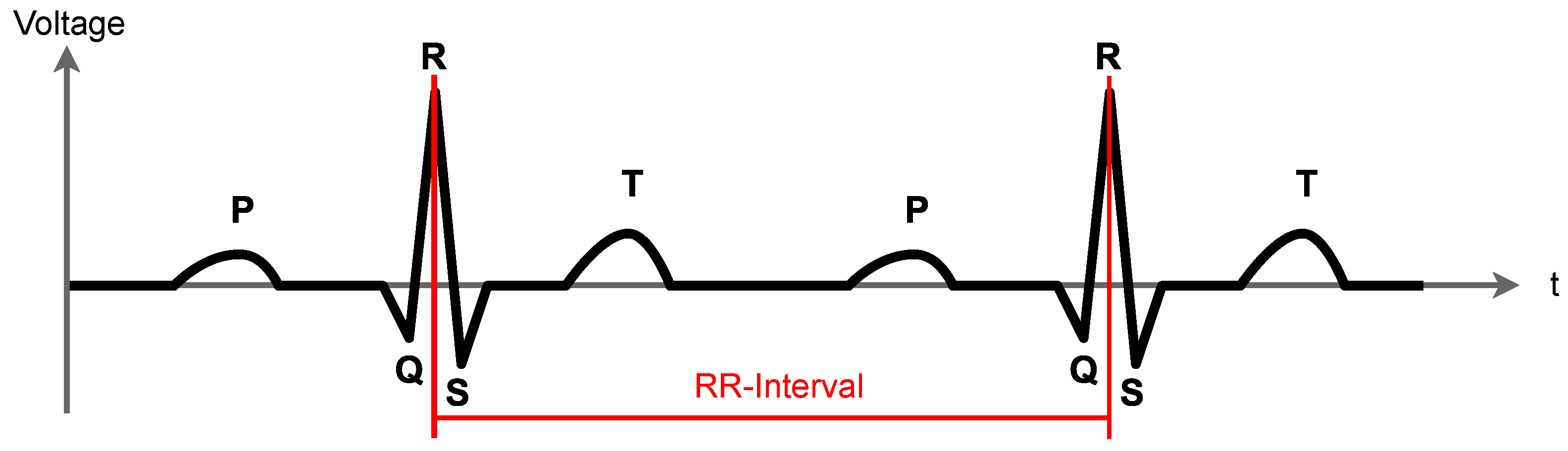

- The heart’s electrical activity with a single channel ECG configured with a sampling rate of 250 Hz.

- High HRV: patient is relaxed.

- Low HRV: patient is stressed.
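To make the HRV notion concrete, the sketch below computes RMSSD, a widely used time-domain HRV measure, together with the mean heart rate from a series of RR intervals. Which HRV metric the system actually uses is not stated here, so RMSSD is an assumption chosen for illustration; the function names are hypothetical.

```typescript
// RMSSD: root mean square of successive differences between RR intervals.
// Input: intervals between consecutive heartbeats in milliseconds.
function rmssd(rrIntervalsMs: number[]): number {
  if (rrIntervalsMs.length < 2) {
    throw new Error("at least two RR intervals are required");
  }
  let sumOfSquares = 0;
  for (let i = 1; i < rrIntervalsMs.length; i++) {
    const diff = rrIntervalsMs[i] - rrIntervalsMs[i - 1];
    sumOfSquares += diff * diff;
  }
  // There are (length - 1) successive differences.
  return Math.sqrt(sumOfSquares / (rrIntervalsMs.length - 1));
}

// Mean heart rate in beats per minute from the same RR intervals.
function meanHeartRateBpm(rrIntervalsMs: number[]): number {
  if (rrIntervalsMs.length === 0) {
    throw new Error("at least one RR interval is required");
  }
  const sum = rrIntervalsMs.reduce((acc, rr) => acc + rr, 0);
  return 60000 / (sum / rrIntervalsMs.length);
}
```

A larger RMSSD means the beat-to-beat intervals vary more, which corresponds to the "high HRV" case above; a value near zero corresponds to the "low HRV" case.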

2.3.2. Movesense HR Sensor—Implementation

- The ECG characteristic with the 16-bit UUID 0x2BDD, which contains a set of measurements encoded as values concatenated in the following order, as depicted in Figure 10:

  - The relative timestamp as a 32-bit unsigned integer. It indicates how many milliseconds after startup of the sensor the last sample in the array was measured.

  - The array of voltage samples as n 16-bit signed integers. They encode a sequence of ECG voltages in millivolts (mV).

- The movement characteristic with the 16-bit UUID 0x2BE2, which contains a set of measurements encoded as values concatenated in the following order, as depicted in Figure 11:

  - The relative timestamp as a 32-bit unsigned integer. It indicates how many milliseconds after startup of the sensor the last sample was measured.

  - The array of n movement samples, each containing values in the following order:

    - (a) The acceleration along the x, y, and z axes, concatenated as 16-bit integer values in centimeters per second squared (cm/s²).

    - (b) The angular velocity around the x, y, and z axes, concatenated as 16-bit integer values in decidegrees per second (d°/s).

    - (c) The magnetic field along the x, y, and z axes, concatenated as 16-bit integer values in centigauss (cgauss).
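The two characteristic layouts above can be decoded as sketched below. This is an illustrative sketch, not the firmware's or the Aggregator's actual code. It assumes little-endian byte order (the usual convention for BLE GATT values) and signed movement values, neither of which is stated in the text. Because only the timestamp of the last sample is transmitted, the earlier timestamps are reconstructed from the configured sampling rate. All type and function names are hypothetical.

```typescript
interface EcgPacket {
  timestampsMs: number[]; // one reconstructed timestamp per sample
  samples: number[];      // raw 16-bit values exactly as transmitted
}

interface MovementSample {
  acceleration: [number, number, number];    // m/s^2 (converted from cm/s^2)
  angularVelocity: [number, number, number]; // deg/s (converted from decidegrees/s)
  magneticField: [number, number, number];   // gauss (converted from centigauss)
}

interface MovementPacket {
  timestampsMs: number[];
  samples: MovementSample[];
}

// Assumption: little-endian encoding.
const LITTLE_ENDIAN = true;

// The packet carries the timestamp of its LAST sample, so earlier samples
// are placed one sampling period apart before it.
function sampleTimestamps(lastMs: number, count: number, rateHz: number): number[] {
  const periodMs = 1000 / rateHz;
  return Array.from({ length: count }, (_, i) => lastMs - (count - 1 - i) * periodMs);
}

function decodeEcg(value: DataView, rateHz: number = 250): EcgPacket {
  const lastMs = value.getUint32(0, LITTLE_ENDIAN);
  // Everything after the 4-byte timestamp is a run of 2-byte samples;
  // an incomplete trailing byte is ignored.
  const count = Math.floor((value.byteLength - 4) / 2);
  const samples: number[] = [];
  for (let i = 0; i < count; i++) {
    samples.push(value.getInt16(4 + 2 * i, LITTLE_ENDIAN));
  }
  return { timestampsMs: sampleTimestamps(lastMs, count, rateHz), samples };
}

function decodeMovement(value: DataView, rateHz: number = 52): MovementPacket {
  const lastMs = value.getUint32(0, LITTLE_ENDIAN);
  const bytesPerSample = 18; // 9 values of 2 bytes each
  const count = Math.floor((value.byteLength - 4) / bytesPerSample);
  const samples: MovementSample[] = [];
  for (let i = 0; i < count; i++) {
    const base = 4 + i * bytesPerSample;
    const read = (k: number): number => value.getInt16(base + 2 * k, LITTLE_ENDIAN);
    samples.push({
      acceleration: [read(0) / 100, read(1) / 100, read(2) / 100],
      angularVelocity: [read(3) / 10, read(4) / 10, read(5) / 10],
      magneticField: [read(6) / 100, read(7) / 100, read(8) / 100],
    });
  }
  return { timestampsMs: sampleTimestamps(lastMs, count, rateHz), samples };
}
```

With the Web Bluetooth API, a `DataView` of this kind is what a characteristic's `value` provides on each notification, so the functions could be called directly from a `characteristicvaluechanged` handler.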

2.3.3. APDM Movement Sensors—Utilization

- The acceleration with a 3-axis accelerometer at a sampling rate of 128 Hz.

- The angular velocity with a 3-axis gyroscope at a sampling rate of 128 Hz.

- The magnetic field with a 3-axis magnetometer at a sampling rate of 128 Hz.

2.3.4. APDM Movement Sensors—Implementation

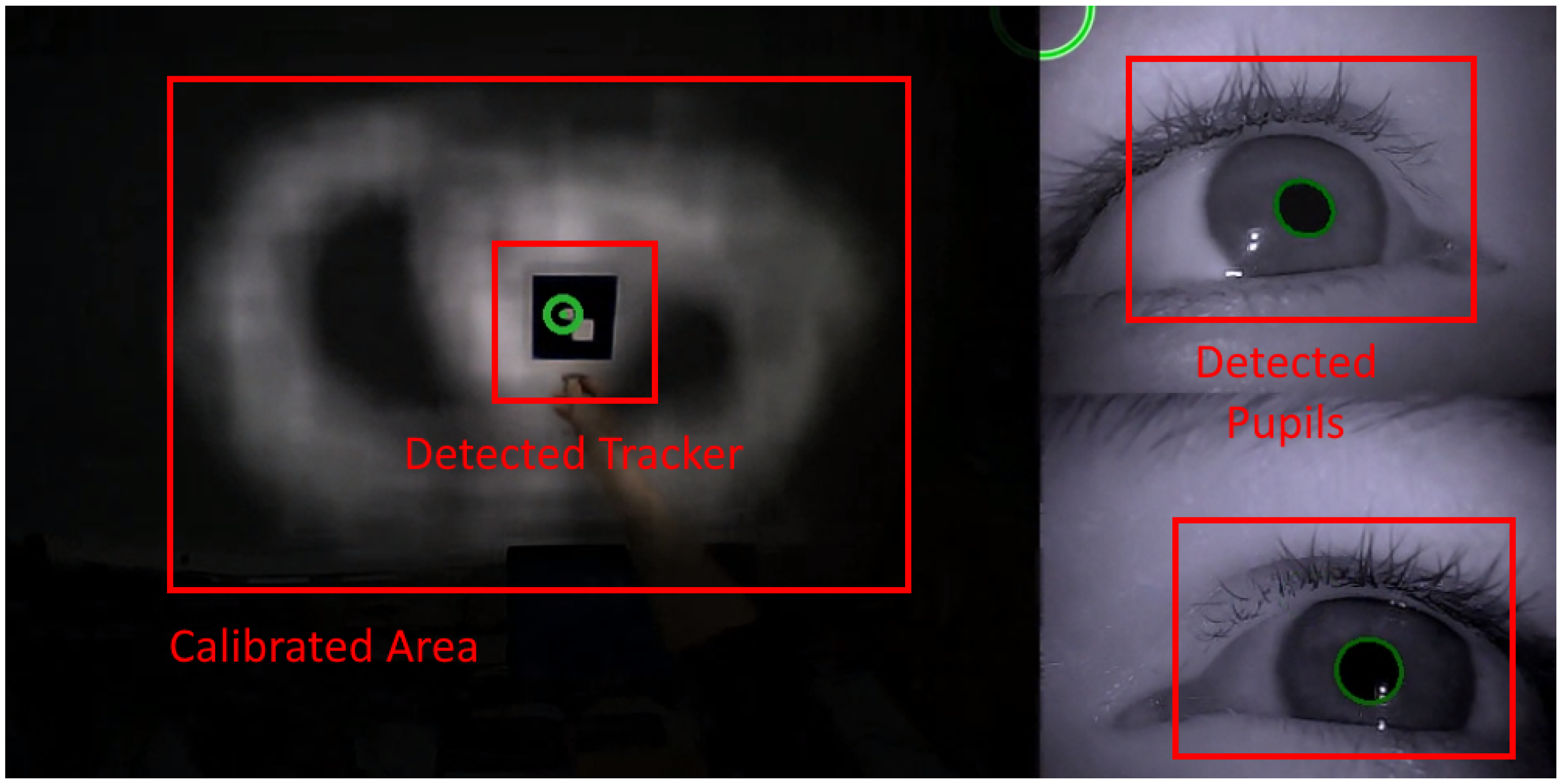

2.3.5. Look! Eye Tracker—Utilization

2.3.6. Look! Eye Tracker—Implementation

- Two infrared eye cameras with 30 frames per second and a resolution of 320 by 240 pixels.

- One field camera with 30 frames per second and a resolution of 640 by 480 pixels.

2.3.7. Look! Eye Tracker—Calibration

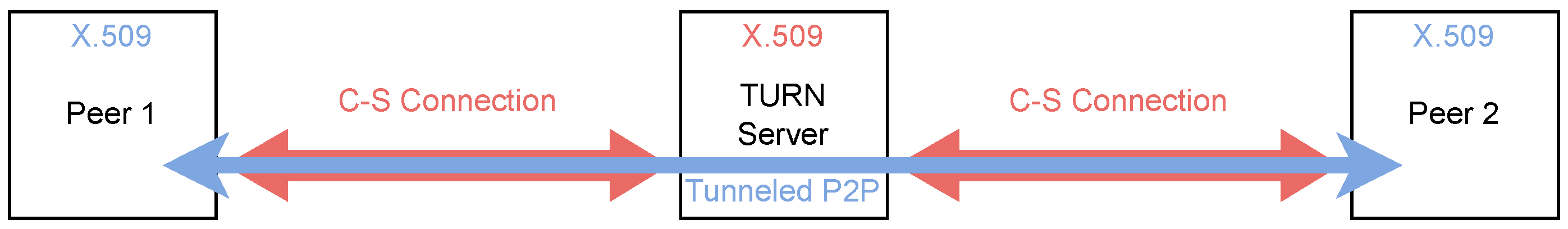

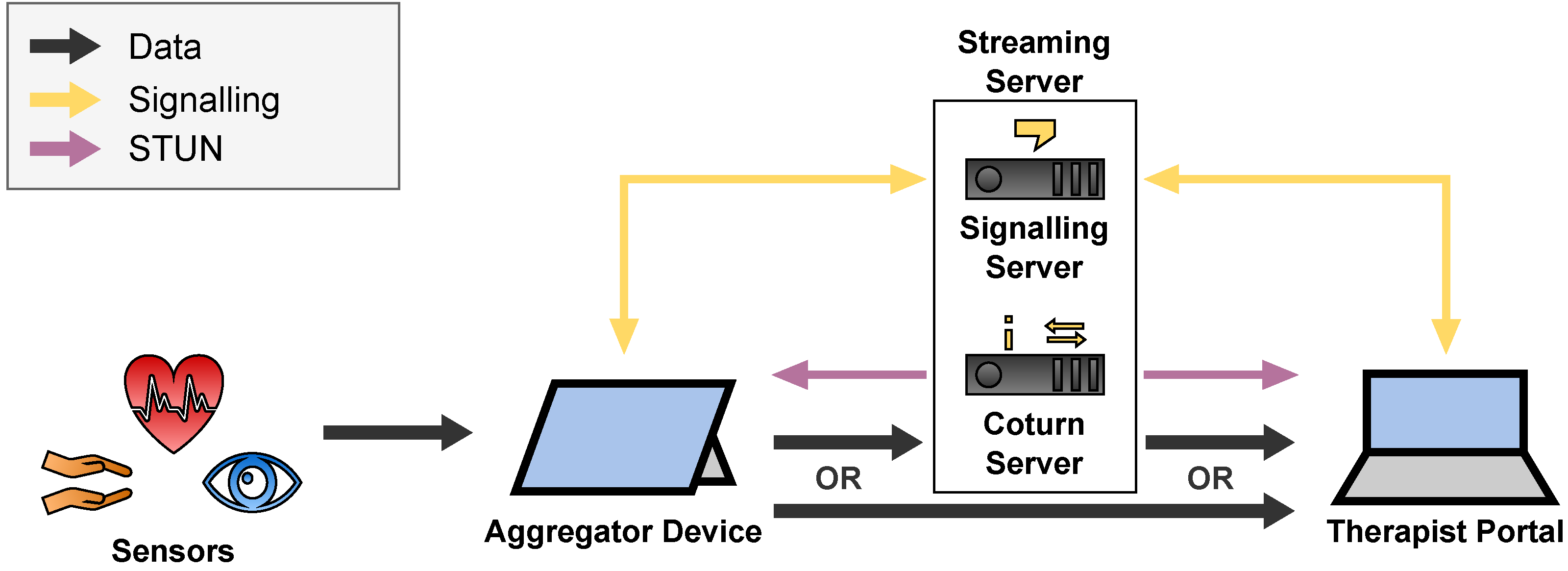

2.4. Streaming Server and Architecture

2.4.1. Overview

2.4.2. Streaming Components

- A Signalling Server to which the clients hold a WebSocket connection to exchange session descriptions and ICE candidates.

- A STUN Server which the clients use to gather the ICE candidates and to detect NATs.

- A TURN Server via which the clients can relay their traffic around NATs and firewall restrictions or to hide their IP addresses.
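On the client side, the STUN and TURN servers are typically made known through the peer connection configuration. The sketch below builds such a configuration as a plain object that mirrors the `iceServers` and `iceTransportPolicy` fields of the standard WebRTC `RTCConfiguration` dictionary. The host name, the credentials, the use of the default ports 3478 and 5349, and the function name `buildPeerConfig` are assumptions for illustration and are not taken from the project.

```typescript
// Minimal local copies of the relevant WebRTC dictionary shapes, so that the
// sketch does not depend on browser type definitions.
interface IceServer {
  urls: string[];
  username?: string;
  credential?: string;
}

interface PeerConfig {
  iceServers: IceServer[];
  iceTransportPolicy: "all" | "relay";
}

function buildPeerConfig(
  host: string,          // hypothetical host running both STUN and TURN
  turnUser: string,      // hypothetical TURN credentials
  turnPassword: string,
  hideIpAddress: boolean,
): PeerConfig {
  return {
    iceServers: [
      // STUN: lets the client discover its public address behind a NAT.
      { urls: [`stun:${host}:3478`] },
      // TURN over TLS: relays traffic when no direct path is possible.
      { urls: [`turns:${host}:5349`], username: turnUser, credential: turnPassword },
    ],
    // "relay" restricts ICE to relayed candidates, so the peer only ever
    // sees the TURN server's address instead of the client's own.
    iceTransportPolicy: hideIpAddress ? "relay" : "all",
  };
}
```

In a browser, an object of this shape can be passed to the `RTCPeerConnection` constructor. Choosing `"relay"` trades some latency for not revealing the client's IP address to the other peer.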

2.4.3. Security

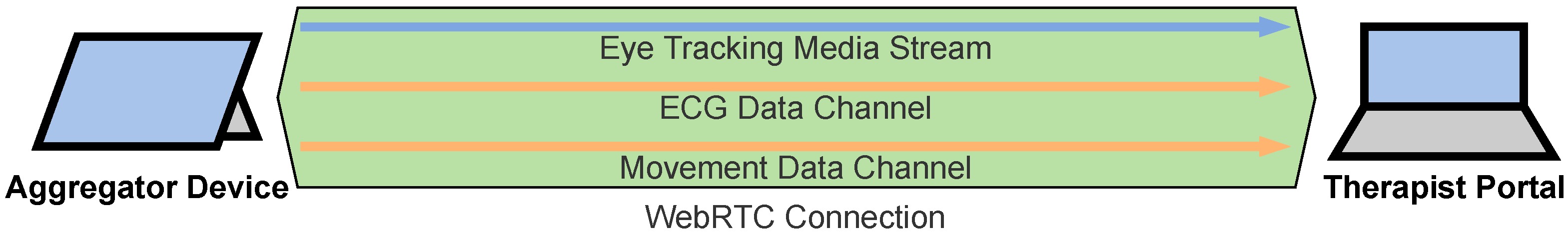

2.4.4. Sensor Data Channels

- The eye tracking video is streamed as a Media Stream using RTP over UDP. The Therapist Portal displays this video directly.

- The ECG evaluations are streamed JSON-encoded over SCTP through a Data Channel. The Data Channel has a maximum packet lifetime of 1 s and does not enforce ordered delivery, since the ECG values are timestamped and the correct order can be reconstructed. The Therapist Portal visualizes the HR and HRV values as diagrams.

- The movement values are streamed JSON-encoded over SCTP through a Data Channel. The Data Channel has a maximum packet lifetime of 1 s and does not enforce ordered delivery, since the movement values are timestamped and the correct order can be reconstructed. The Therapist Portal does not visualize these values yet, since the raw values would not be helpful for the therapists; we expect that machine learning evaluations will help us detect anomalies and display them to the therapist in the future.
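The Data Channel settings described above map onto two standard WebRTC options, `ordered` and `maxPacketLifeTime` (given in milliseconds). The sketch below shows these options and how a receiver could restore the order from the timestamps. The message field name `timestampMs` and the helper `parseAndSort` are hypothetical, since the actual JSON schema is not given here.

```typescript
// Mirrors the relevant fields of the WebRTC RTCDataChannelInit dictionary.
interface SensorChannelOptions {
  ordered: boolean;
  maxPacketLifeTime: number; // milliseconds
}

// Unordered delivery, and no retransmission attempts after 1 s.
const SENSOR_CHANNEL_OPTIONS: SensorChannelOptions = {
  ordered: false,
  maxPacketLifeTime: 1000,
};

// Assumption: every JSON message carries a numeric timestamp field.
interface TimestampedMessage {
  timestampMs: number;
}

// Parse a batch of received JSON messages and restore chronological order.
function parseAndSort<T extends TimestampedMessage>(rawMessages: string[]): T[] {
  return rawMessages
    .map((raw) => JSON.parse(raw) as T)
    .sort((a, b) => a.timestampMs - b.timestampMs);
}
```

In a browser, the options object would be passed as the second argument to `RTCPeerConnection.createDataChannel`. Dropping stale packets instead of retransmitting them indefinitely keeps the live view current, which matters more for real-time visualization than completeness.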

2.4.5. Therapist Portal

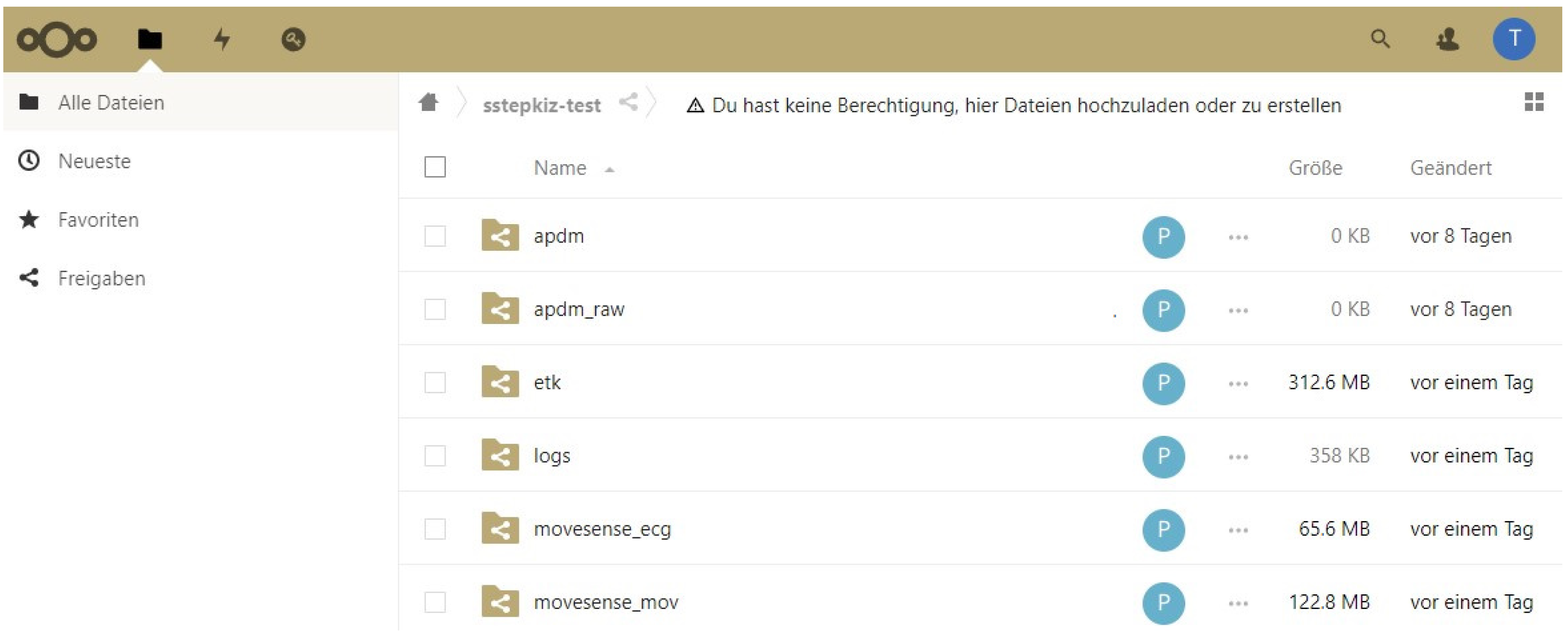

2.5. Recording Architecture

2.5.1. Overview

2.5.2. Storage Server

2.5.3. Security

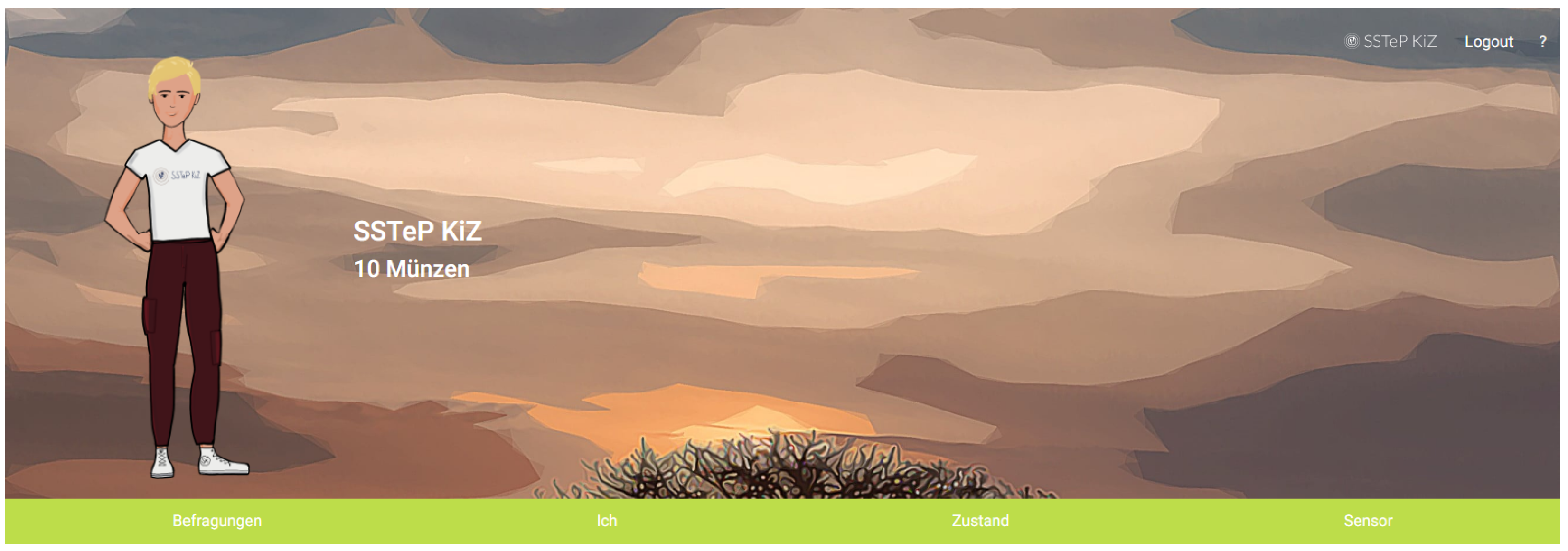

2.6. Questionnaire Architecture

2.6.1. Overview

- Therapists create questionnaires in the Therapist Portal.

- The Therapist Portal uploads these questionnaires to the IMeRa Server which stores structured study data of the medical center.

- Patients answer questionnaires in the Patient Portal.

- To do so, the Patient Portal downloads the questions from the IMeRa Server and uploads the answers.

- Therapists evaluate answers in the Therapist Portal.

- To do so, the Therapist Portal downloads the answers from the IMeRa Server.

2.6.2. IMeRa Server

2.6.3. Patient Portal

2.6.4. Gamification

2.7. Administration and Security

2.7.1. Identity Server

- Configure specific scopes for the Signalling Server, IMeRa Server, and Gamification Server, which act as OAuth Resource Servers,

- Register the Therapist Portal, Patient Portal, Aggregator Software, and the Administrator Portal as OAuth Clients, and

- Manage the patients’ and therapists’ user accounts.

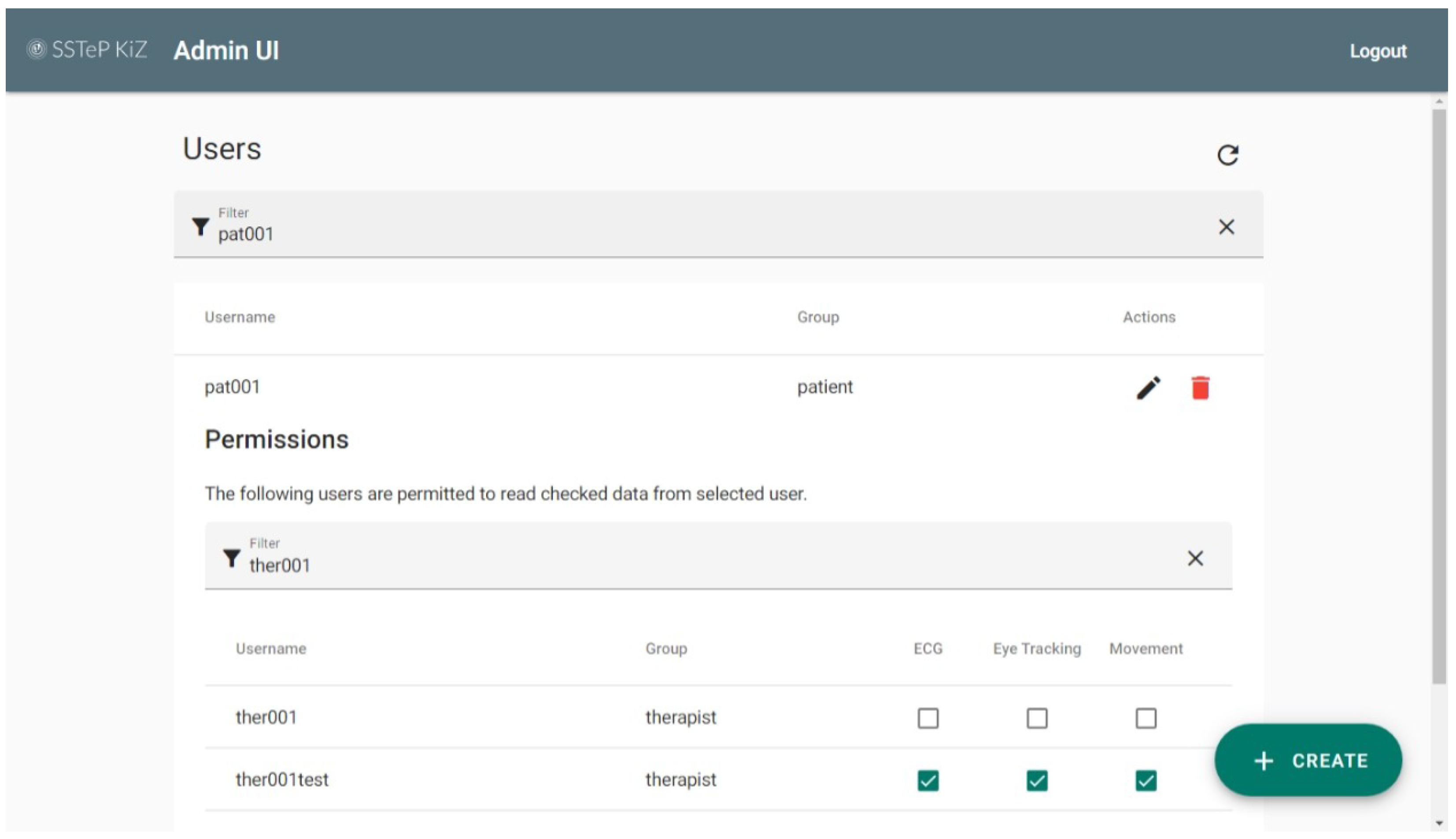

2.7.2. Administration Portal

- The user account on the Authorization Server.

- The user’s profile on the Gamification Server.

- The user’s profile on the IMeRa platform.

- The user’s streaming permissions on the Signalling Server.

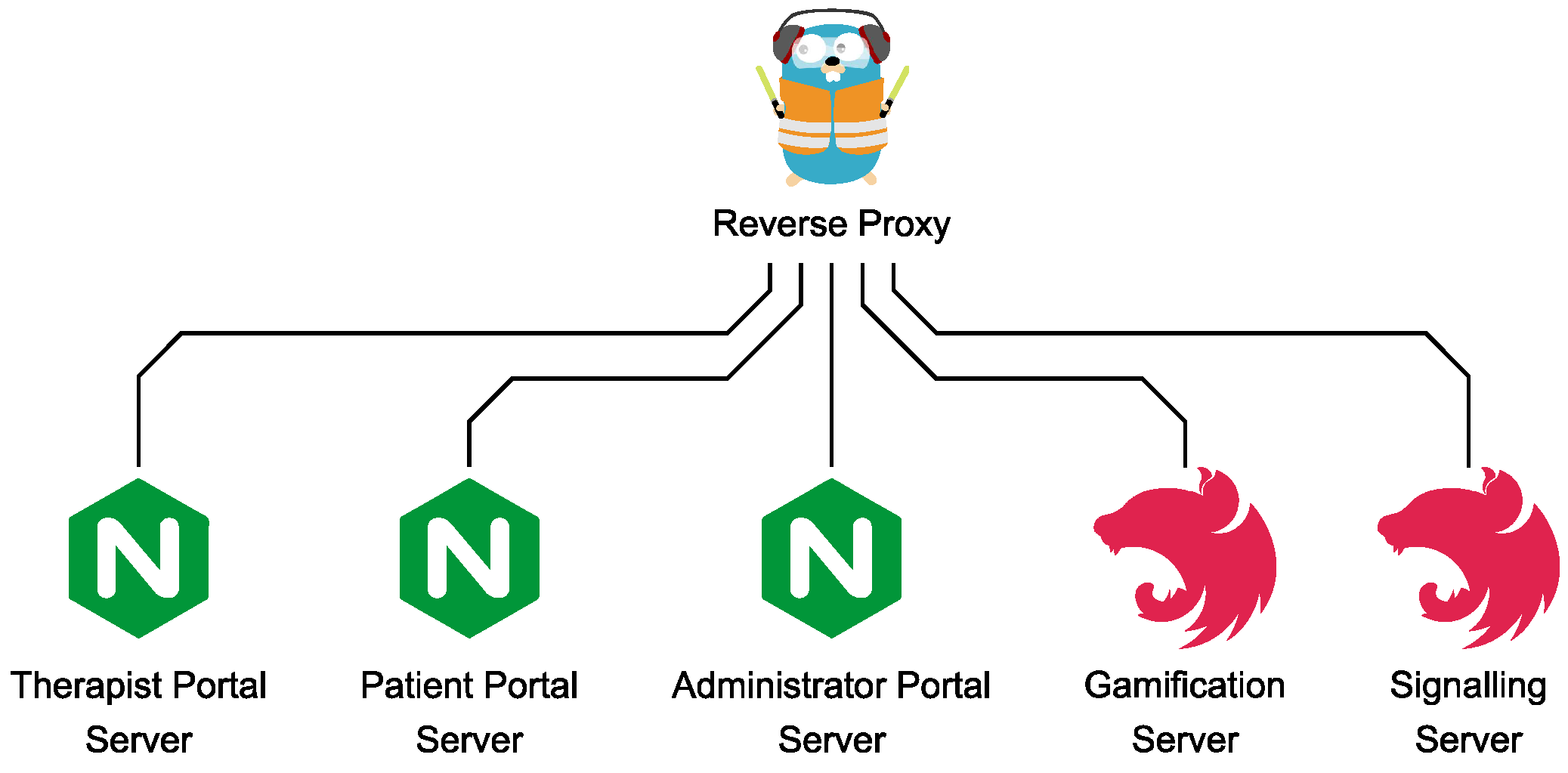

2.7.3. Server Administration

2.7.4. Reverse Proxy

2.7.5. Client Administration

3. Evaluations

3.1. Storage Requirements

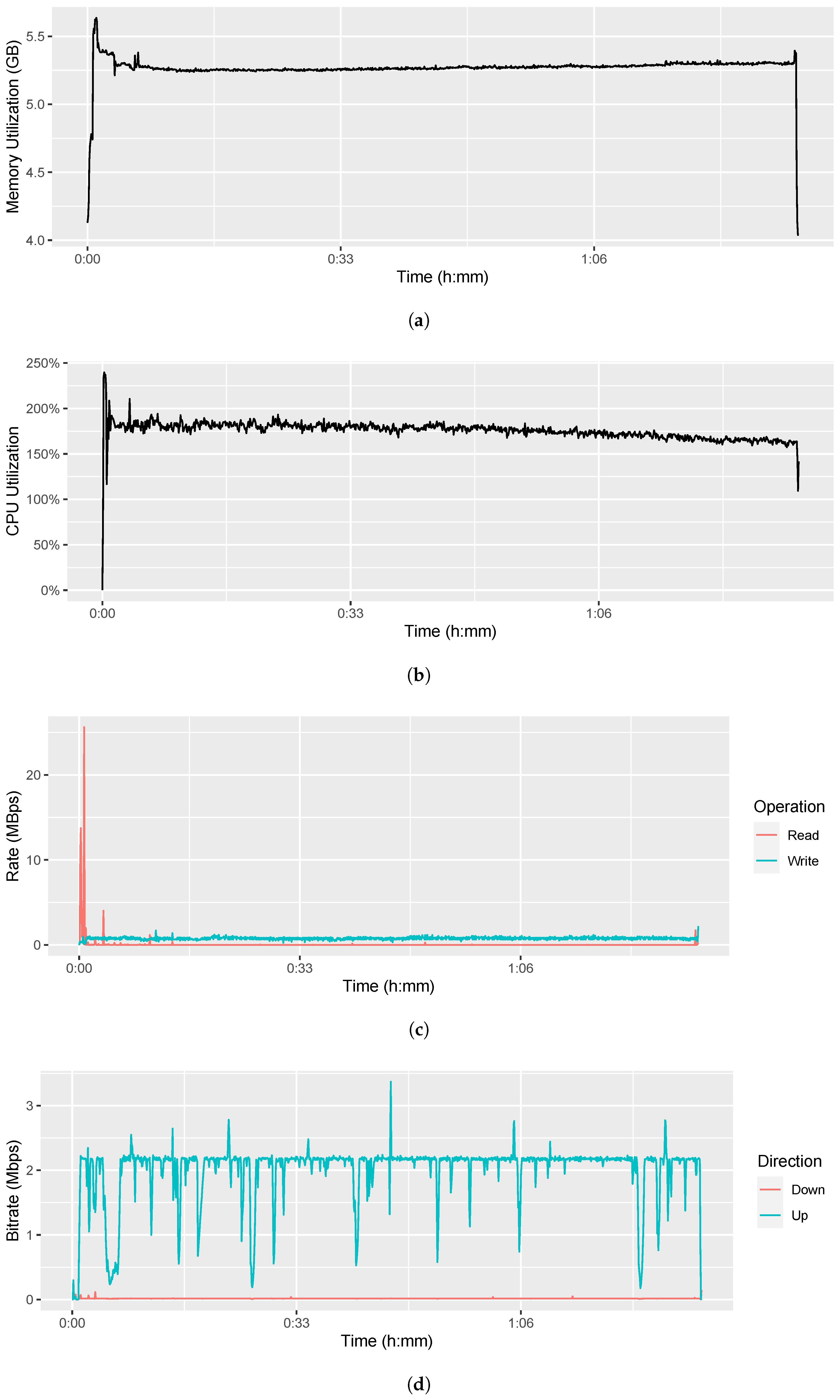

3.2. Aggregator System Performance

3.2.1. Memory Utilization

3.2.2. CPU Utilization

3.2.3. Energy Consumption

3.2.4. I/O Disk Utilization

3.3. Bandwidth Consumption

3.3.1. Bandwidth Consumption without Limitations

3.3.2. Bandwidth Consumption with Limitations

4. Summary

4.1. Application

4.2. Legal Aspects

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| ACME | Automatic Certificate Management Environment |

| API | Application Programming Interface |

| AS | Authorization Server |

| AT | Access Token |

| BLE | Bluetooth Low Energy |

| bpm | Beats per minute |

| CA | Certificate Authority |

| CSV | Comma-Separated Values |

| DTLS | Datagram Transport Layer Security |

| ECG | Electrocardiogram |

| GAP | Generic Access Profile |

| GATT | Generic ATTribute profile |

| HDF | Hierarchical Data Format |

| HR | Heart Rate |

| HRV | Heart Rate Variability |

| HTTP | HyperText Transfer Protocol |

| HTTPS | HyperText Transfer Protocol Secure |

| JSON | JavaScript Object Notation |

| ICE | Interactive Connectivity Establishment |

| IP | Internet Protocol |

| mTLS | Mutual Transport Layer Security |

| NAT | Network Address Translation |

| OCD | Obsessive-Compulsive Disorder |

| OS | Operating System |

| P2P | Peer-to-Peer |

| PKI | Public Key Infrastructure |

| PR | Protected Resource |

| REST | REpresentational State Transfer |

| RO | Resource Owner |

| RS | Resource Server |

| RTP | Real-Time Transport Protocol |

| SCTP | Stream Control Transmission Protocol |

| SDP | Session Description Protocol |

| SIG | Special Interest Group |

| SSO | Single Sign-On |

| STUN | Session Traversal Utilities for NAT |

| TCP | Transmission Control Protocol |

| TLS | Transport Layer Security |

| TSV | Tab-Separated Values |

| TURN | Traversal Using Relays around NAT |

| UDP | User Datagram Protocol |

| UI | User Interface |

| USB | Universal Serial Bus |

| UUID | Universally Unique IDentifier |

| VM | Virtual Machine |

| WebDAV | Web Distributed Authoring and Versioning |

| WebRTC | Web Real-Time Communication |

| WWW | World Wide Web |

Appendix A. Technologies

Appendix A.1. Bluetooth Low Energy (BLE)

Appendix A.2. Transport Layer Protocols

Appendix A.2.1. User Datagram Protocol (UDP)

Appendix A.2.2. Transmission Control Protocol (TCP)

Appendix A.2.3. Stream Control Transmission Protocol (SCTP)

Appendix A.3. Transport Layer Security Protocols

- Authenticity: the identity of the sender of a message is verifiable.

- Confidentiality: only communication partners can read the sent messages.

- Integrity: modifications of messages in transfer are detectable.

Appendix A.3.1. Digital Signatures

Appendix A.3.2. Certificate-Based Authentication

Appendix A.3.3. Transport Layer Security (TLS)

Appendix A.3.4. Datagram Transport Layer Security (DTLS)

Appendix A.4. HTTP Communication

Appendix A.5. Real-Time Communication

Appendix A.5.1. Network Address Translation (NAT)

Appendix A.5.2. Interactive Connectivity Establishment (ICE)

Appendix A.5.3. Session Description Protocol (SDP)

Appendix A.5.4. Web Real-Time Communication (WebRTC)

- Data Channels for text-based and binary data transfer via configurable SCTP (see Appendix A.2.3) connections.

- Media Streams for real-time audio and video streaming with the Real-Time Transport Protocol (RTP) via UDP, as specified in RFC 3550 [71]. WebRTC automatically adapts the bit rate to the available bandwidth by adjusting the media quality.

Appendix A.6. OAuth

Appendix A.6.1. Authentication and Authorization

- Authentication is when an entity proves its identity.

- Authorization is when an entity proves that it has permission to access specific resources.

Appendix A.6.2. OAuth Components

- Resource Owner (RO): the user who owns Protected Resources (PR), e.g., files.

- Client: the application that the RO authorizes to access its PRs.

- Authorization Server (AS): the server which verifies the identity of an RO and issues a signed Access Token (AT) to the Client once the RO has granted permission.

- Resource Server (RS): stores the RO’s PRs and verifies ATs of requesting Clients to be valid and sufficient to access the PRs.

Appendix A.6.3. Access Token Validation

- Verify that the issuer of the AT is a trusted AS.

- Verify that the AT is signed by the trusted AS.

- Verify that the RS is the audience stated in the AT.

- Verify that the scope of the AT is sufficient to access the requested resources.
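The four checks above can be sketched as a single validation function. This is a simplified illustration, not the validation code of any particular server: it assumes the AT is a JWT whose claims have already been parsed, that the scopes are carried in a space-separated `scope` claim, and that the cryptographic signature check was performed by a JWT library whose result is passed in as `signatureValid`. The names `ResourceServerPolicy` and `validateAccessToken` are hypothetical.

```typescript
// Subset of JWT claims relevant to the four checks.
interface AccessTokenClaims {
  iss: string;            // issuer
  aud: string | string[]; // audience(s)
  scope: string;          // assumption: space-separated list of scopes
}

// What a Resource Server expects of an acceptable token (hypothetical type).
interface ResourceServerPolicy {
  trustedIssuer: string;
  audience: string;
  requiredScopes: string[];
}

// Returns a list of failed checks; an empty list means the AT is accepted.
function validateAccessToken(
  claims: AccessTokenClaims,
  signatureValid: boolean, // result of the library's signature verification
  policy: ResourceServerPolicy,
): string[] {
  const errors: string[] = [];

  // 1. The issuer must be the trusted AS.
  if (claims.iss !== policy.trustedIssuer) {
    errors.push("untrusted issuer");
  }
  // 2. The AT must be signed by that AS.
  if (!signatureValid) {
    errors.push("invalid signature");
  }
  // 3. The RS must be among the audiences stated in the AT.
  const audiences = Array.isArray(claims.aud) ? claims.aud : [claims.aud];
  if (audiences.indexOf(policy.audience) === -1) {
    errors.push("wrong audience");
  }
  // 4. The granted scopes must cover everything the request needs.
  const granted = new Set(claims.scope.split(" ").filter((s) => s.length > 0));
  const missing = policy.requiredScopes.filter((s) => !granted.has(s));
  if (missing.length > 0) {
    errors.push(`missing scope: ${missing.join(", ")}`);
  }
  return errors;
}
```

Collecting all failures instead of stopping at the first one is a design choice that eases debugging; a production server would typically also check the expiry time, which is omitted here because it is not among the four checks listed.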

Appendix A.6.4. OpenID Connect

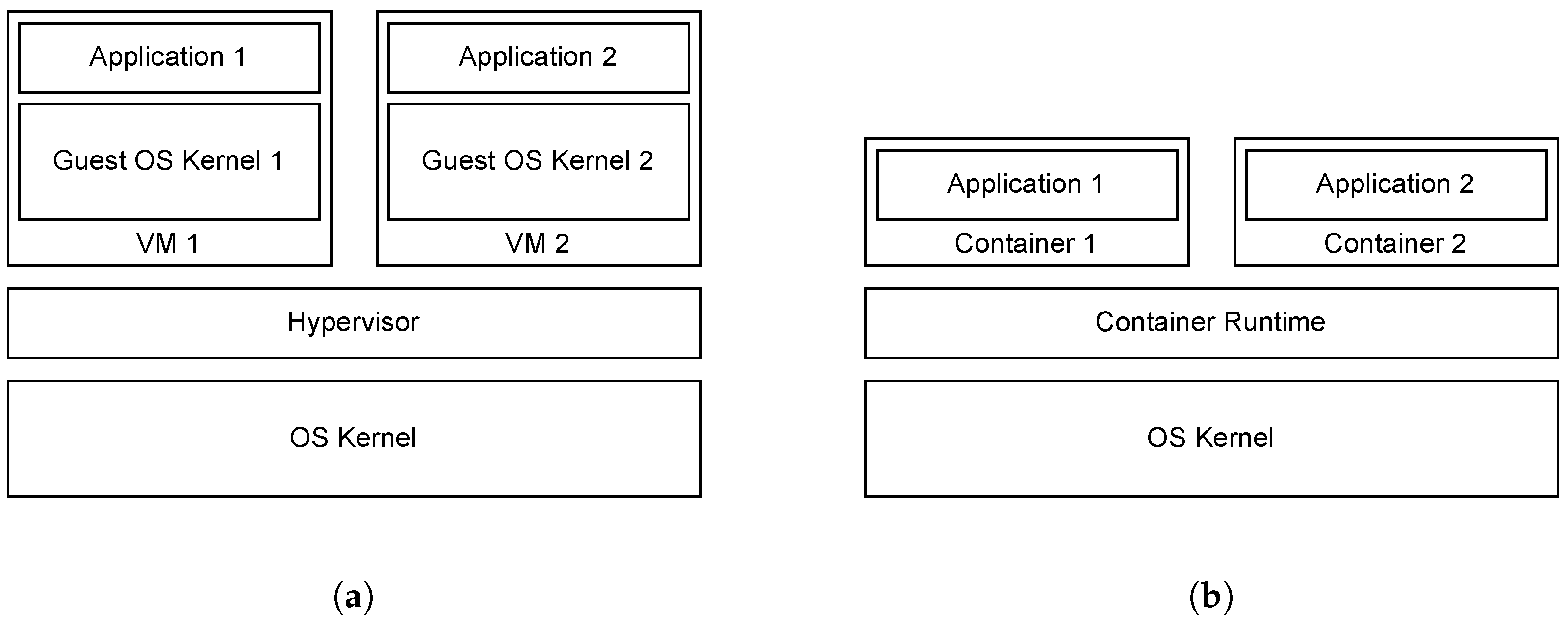

Appendix A.7. Service Administration

Appendix A.7.1. Virtualization

Appendix A.7.2. Containerization

References

- Lin, T.; Heckman, T.G.; Anderson, T. The efficacy of synchronous teletherapy versus in-person therapy: A meta-analysis of randomized clinical trials. Clin. Psychol. Sci. Pract. 2022, 29, 167–178.

- Békés, V.; van Doorn, K.A.; Zilcha-Mano, S.; Prout, T.; Hoffman, L. Psychotherapists’ Acceptance of Telepsychotherapy: A Machine Learning Approach. Eur. Psychiatry 2022, 65, S168.

- Békés, V.; van Doorn, K.A.; Bothe, B. Assessing patients’ attitudes towards telepsychotherapy: The development of the unified theory of acceptance and use of technology–patient version. Clin. Psychol. Psychother. 2022.

- Swartz, H.A. Telepsychotherapy: The New Normal. Am. J. Psychother. 2021, 74, 2–3.

- Abramowitz, J.S.; Taylor, S.; McKay, D. Obsessive-compulsive disorder. Lancet 2009, 374, 491–499.

- Krebs, G.; Heyman, I. Obsessive-compulsive disorder in children and adolescents. Arch. Dis. Child. 2014, 100, 495–499.

- Hollmann, K.; Allgaier, K.; Hohnecker, C.S.; Lautenbacher, H.; Bizu, V.; Nickola, M.; Wewetzer, G.; Wewetzer, C.; Ivarsson, T.; Skokauskas, N.; et al. Internet-based cognitive behavioral therapy in children and adolescents with obsessive compulsive disorder: A feasibility study. J. Neural Transm. 2021, 128, 1445–1459.

- Vidyo Connect. Available online: https://www.vidyo.com/video-conferencing-solutions/product/desktop-video-calling (accessed on 10 November 2022).

- Node.js. Available online: https://nodejs.org/ (accessed on 10 November 2022).

- Nest.js. Available online: https://nestjs.com/ (accessed on 10 November 2022).

- Socket.io. Available online: https://socket.io/ (accessed on 10 November 2022).

- Angular. Available online: https://angular.io/ (accessed on 10 November 2022).

- Kwon, O.; Jeong, J.; Kim, H.B.; Kwon, I.H.; Park, S.Y.; Kim, J.E.; Choi, Y. Electrocardiogram Sampling Frequency Range Acceptable for Heart Rate Variability Analysis. Healthcare Inform. Res. 2018, 24, 198–206.

- Castaldo, R.; Montesinos, L.; Melillo, P.; James, C.; Pecchia, L. Ultra-short term HRV features as surrogates of short term HRV: A case study on mental stress detection in real life. BMC Med Inform. Decis. Mak. 2019, 19.

- Grant, R.; Ruiz-Henríquez, O. Web Bluetooth; Draft Community Group Report; W3C: Cambridge, MA, USA, 2022.

- APDM Wearable Sensors. Available online: https://apdm.com/wearable-sensors/ (accessed on 10 November 2022).

- Thierfelder, A.; Primbs, J.; Severitt, B.; Hohnecker, C.S.; Kühnhausen, J.; Alt, A.K.; Pascher, A.; Wörz, U.; Passon, H.; Seemann, J.; et al. Multimodal Sensor-Based Identification of Stress and Compulsive Actions in Children with Obsessive-Compulsive Disorder for Telemedical Treatment. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Glasgow, UK, 11–15 July 2022.

- Ilg, W.; Seemann, J.; Giese, M.; Traschütz, A.; Schöls, L.; Timmann, D.; Synofzik, M. Real-life gait assessment in degenerative cerebellar ataxia: Toward ecologically valid biomarkers. Neurology 2020, 95, e1199–e1210.

- Thierfelder, A.; Seemann, J.; John, N.; Harmuth, F.; Giese, M.; Schüle, R.; Schöls, L.; Timmann, D.; Synofzik, M.; Ilg, W. Real-Life Turning Movements Capture Subtle Longitudinal and Preataxic Changes in Cerebellar Ataxia. Mov. Disord. 2022, 37, 1047–1058.

- Kübler, T.C. Look! Blickschulungsbrille-Technical Documentation; Technical Report; Look! ET: Tübingen, Germany, 2021.

- Coturn. Available online: https://github.com/coturn/coturn/ (accessed on 10 November 2022).

- Rescorla, E.; Modadugu, N. Datagram Transport Layer Security Version 1.2. RFC 6347, RFC Editor. 2012. Available online: http://www.rfc-editor.org/rfc/rfc6347.txt (accessed on 10 November 2022).

- Cooley, D. Commercial National Security Algorithm (CNSA) Suite Profile for TLS and DTLS 1.2 and 1.3. RFC 9151, RFC Editor. 2022. Available online: http://www.rfc-editor.org/rfc/rfc9151.txt (accessed on 10 November 2022).

- Technische Richtlinie TR-02102-2 Kryptographische Verfahren: Empfehlungen und Schlüssellängen; Technical Report; Bundesamt für Sicherheit in der Informationstechnik: Bonn, Germany, 2022.

- NGINX. Available online: https://nginx.com/ (accessed on 10 November 2022).

- Nextcloud. Available online: http://nextcloud.com/ (accessed on 10 November 2022).

- van Berkel, N.; Goncalves, J.; Hosio, S.; Kostakos, V. Gamification of Mobile Experience Sampling Improves Data Quality and Quantity. Proc. ACM Interact. Mobile Wearable Ubiquitous Technol. 2017, 1, 1–21.

- IMeRa. Available online: https://www2.medizin.uni-tuebingen.de/nfmi/imera/imera_start.html (accessed on 10 November 2022).

- Spring. Available online: https://spring.io/ (accessed on 10 November 2022).

- Keycloak. Available online: http://keycloak.org/ (accessed on 10 November 2022).

- Jones, M.; Jones, J.; Lundberg, E.; Hodges, J.; Kumar, A. Web Authentication: An API for accessing Public Key Credentials—Level 2. W3C recommendation, W3C. 2021. Available online: https://www.w3.org/TR/2021/REC-webauthn-2-20210408/ (accessed on 10 November 2022).

- Docker. Available online: https://www.docker.com/ (accessed on 10 November 2022).

- Docker Compose. Available online: https://docs.docker.com/compose/ (accessed on 10 November 2022).

- Traefik Proxy. Available online: https://traefik.io/traefik/ (accessed on 10 November 2022).

- NetLimiter. Available online: https://www.netlimiter.com/ (accessed on 10 November 2022).

- SSTeP-KiZ Source Code. Available online: https://github.com/uni-tue-kn/sstepkiz (accessed on 10 November 2022).

- Movesense ECG Firmware. Available online: https://github.com/JonasPrimbs/movesense-ble-ecg-firmware (accessed on 10 November 2022).

- IEEE Std 802.15.4-2011 (Revision of IEEE Std 802.15.4-2006); IEEE Standard for Local and metropolitan area networks–Part 15.4: Low-Rate Wireless Personal Area Networks (LR-WPANs); IEEE: Piscataway, NJ, USA, 2011; pp. 1–314.

- Bluetooth SIG. Generic Access Profile; Technical Report; Bluetooth SIG: Kirkland, WA, USA, 2021.

- Bluetooth SIG. Heart Rate Service; Technical Report; Bluetooth SIG: Kirkland, WA, USA, 2011.

- Postel, J. User Datagram Protocol. RFC 768, RFC Editor. 1980. Available online: http://www.rfc-editor.org/rfc/rfc768.txt (accessed on 10 November 2022).

- Postel, J. Transmission Control Protocol. RFC 793, RFC Editor. 1981. Available online: http://www.rfc-editor.org/rfc/rfc793.txt (accessed on 10 November 2022).

- Braden, R. Requirements for Internet Hosts-Communication Layers. RFC 1122, RFC Editor. 1989. Available online: http://www.rfc-editor.org/rfc/rfc1122.txt (accessed on 10 November 2022).

- Ramakrishnan, K.; Floyd, S.; Black, D. The Addition of Explicit Congestion Notification (ECN) to IP. RFC 3168, RFC Editor. 2001. Available online: http://www.rfc-editor.org/rfc/rfc3168.txt (accessed on 10 November 2022).

- Gont, F.; Yourtchenko, A. On the Implementation of the TCP Urgent Mechanism. RFC 6093, RFC Editor. 2011. Available online: http://www.rfc-editor.org/rfc/rfc6093.txt (accessed on 10 November 2022).

- Gont, F.; Bellovin, S. Defending against Sequence Number Attacks. RFC 6528, RFC Editor. 2012. Available online: http://www.rfc-editor.org/rfc/rfc6528.txt (accessed on 10 November 2022).

- Stewart, R.; Tüxen, M.; Nielsen, K. Stream Control Transmission Protocol. RFC 9260, RFC Editor. 2022. Available online: http://www.rfc-editor.org/rfc/rfc9260.txt (accessed on 10 November 2022).

- Stewart, R.; Xie, Q.; Morneault, K.; Sharp, C.; Schwarzbauer, H.; Taylor, T.; Rytina, I.; Kalla, M.; Zhang, L.; Paxson, V. Stream Control Transmission Protocol. RFC 2960, RFC Editor. 2000. Available online: http://www.rfc-editor.org/rfc/rfc2960.txt (accessed on 10 November 2022).

- Stewart, R. Stream Control Transmission Protocol. RFC 4960, RFC Editor. 2007. Available online: http://www.rfc-editor.org/rfc/rfc4960.txt (accessed on 10 November 2022).

- Barnes, R.; Hoffman-Andrews, J.; McCarney, D.; Kasten, J. Automatic Certificate Management Environment (ACME). RFC 8555, RFC Editor. 2019. Available online: http://www.rfc-editor.org/rfc/rfc8555.txt (accessed on 10 November 2022).

- Cooper, D.; Santesson, S.; Farrell, S.; Boeyen, S.; Housley, R.; Polk, W. Internet X.509 Public Key Infrastructure Certificate and Certificate Revocation List (CRL) Profile. RFC 5280, RFC Editor. 2008. Available online: http://www.rfc-editor.org/rfc/rfc5280.txt (accessed on 10 November 2022).

- Housley, R.; Ford, W.; Polk, T.; Solo, D. Internet X.509 Public Key Infrastructure Certificate and CRL Profile. RFC 2459, RFC Editor. 1999. Available online: http://www.rfc-editor.org/rfc/rfc2459.txt (accessed on 10 November 2022).

- Housley, R.; Polk, W.; Ford, W.; Solo, D. Internet X.509 Public Key Infrastructure Certificate and Certificate Revocation List (CRL) Profile. RFC 3280, RFC Editor. 2002. Available online: http://www.rfc-editor.org/rfc/rfc3280.txt (accessed on 10 November 2022).

- Yee, P. Updates to the Internet X.509 Public Key Infrastructure Certificate and Certificate Revocation List (CRL) Profile. RFC 6818, RFC Editor. 2013. Available online: http://www.rfc-editor.org/rfc/rfc6818.txt (accessed on 10 November 2022).

- Melnikov, A.; Chuang, W. Internationalized Email Addresses in X.509 Certificates. RFC 8398, RFC Editor. 2018. Available online: http://www.rfc-editor.org/rfc/rfc8398.txt (accessed on 10 November 2022).

- Housley, R. Internationalization Updates to RFC 5280. RFC 8399, RFC Editor. 2018. Available online: http://www.rfc-editor.org/rfc/rfc8399.txt (accessed on 10 November 2022).

- Rescorla, E. The Transport Layer Security (TLS) Protocol Version 1.3. RFC 8446, RFC Editor. 2018. Available online: http://www.rfc-editor.org/rfc/rfc8446.txt (accessed on 10 November 2022).

- Rescorla, E.; Tschofenig, H.; Modadugu, N. The Datagram Transport Layer Security (DTLS) Protocol Version 1.3. RFC 9147, RFC Editor. 2022. Available online: http://www.rfc-editor.org/rfc/rfc9147.txt (accessed on 10 November 2022).

- Fielding, R.T.; Gettys, J.; Mogul, J.C.; Nielsen, H.F.; Masinter, L.; Leach, P.J.; Berners-Lee, T. Hypertext Transfer Protocol–HTTP/1.1. RFC 2616, RFC Editor. 1999. Available online: http://www.rfc-editor.org/rfc/rfc2616.txt (accessed on 10 November 2022).

- Thomson, M.; Benfield, C. HTTP/2. RFC 9113, RFC Editor. 2022. Available online: http://www.rfc-editor.org/rfc/rfc9113.txt (accessed on 10 November 2022).

- Rescorla, E. HTTP Over TLS. RFC 2818, RFC Editor. 2000. Available online: http://www.rfc-editor.org/rfc/rfc2818.txt (accessed on 10 November 2022).

- Dusseault, L. HTTP Extensions for Web Distributed Authoring and Versioning (WebDAV). RFC 4918, RFC Editor. 2007. Available online: https://www.rfc-editor.org/rfc/rfc4918.txt (accessed on 10 November 2022).

- Fette, I.; Melnikov, A. The WebSocket Protocol. RFC 6455, RFC Editor. 2011. Available online: http://www.rfc-editor.org/rfc/rfc6455.txt (accessed on 10 November 2022).

- Srisuresh, P.; Holdrege, M. IP Network Address Translator (NAT) Terminology and Considerations. RFC 2663, RFC Editor. 1999. Available online: http://www.rfc-editor.org/rfc/rfc2663.txt (accessed on 10 November 2022).

- Keranen, A.; Holmberg, C.; Rosenberg, J. Interactive Connectivity Establishment (ICE): A Protocol for Network Address Translator (NAT) Traversal. RFC 8445, RFC Editor. 2018. Available online: http://www.rfc-editor.org/rfc/rfc8445.txt (accessed on 10 November 2022).

- Petit-Huguenin, M.; Salgueiro, G.; Rosenberg, J.; Wing, D.; Mahy, R.; Matthews, P. Session Traversal Utilities for NAT (STUN). RFC 8489, RFC Editor. 2020. Available online: http://www.rfc-editor.org/rfc/rfc8489.txt (accessed on 10 November 2022).

- Reddy, T.; Johnston, A.; Matthews, P.; Rosenberg, J. Traversal Using Relays around NAT (TURN): Relay Extensions to Session Traversal Utilities for NAT (STUN). RFC 8656, RFC Editor. 2020. Available online: http://www.rfc-editor.org/rfc/rfc8656.txt (accessed on 10 November 2022).

- Begen, A.; Kyzivat, P.; Perkins, C.; Handley, M. SDP: Session Description Protocol. RFC 8866, RFC Editor. 2021. Available online: http://www.rfc-editor.org/rfc/rfc8866.txt (accessed on 10 November 2022).

- Petit-Huguenin, M.; Nandakumar, S.; Holmberg, C.; Keränen, A.; Shpount, R. Session Description Protocol (SDP) Offer/Answer Procedures for Interactive Connectivity Establishment (ICE). RFC 8839, RFC Editor. 2021. Available online: http://www.rfc-editor.org/rfc/rfc8839.txt (accessed on 10 November 2022).

- Boström, H.; Jennings, C.; Bruaroey, J.I. WebRTC 1.0: Real-Time Communication Between Browsers. W3C recommendation, W3C. 2021. Available online: https://www.w3.org/TR/2021/REC-webrtc-20210126/ (accessed on 10 November 2022).

- Schulzrinne, H.; Casner, S.; Frederick, R.; Jacobson, V. RTP: A Transport Protocol for Real-Time Applications. RFC 3550, RFC Editor. 2003. Available online: http://www.rfc-editor.org/rfc/rfc3550.txt (accessed on 10 November 2022).

- Hardt, D. The OAuth 2.0 Authorization Framework. RFC 6749, RFC Editor. 2012. Available online: http://www.rfc-editor.org/rfc/rfc6749.txt (accessed on 10 November 2022).

- Jones, M.; Bradley, J.; Sakimura, N. JSON Web Token (JWT). RFC 7519, RFC Editor. 2015. Available online: http://www.rfc-editor.org/rfc/rfc7519.txt (accessed on 10 November 2022).

| Sensor | Measurement | Type | Sample Rate | Resolution | Connectivity |
|---|---|---|---|---|---|
| eye tracker | Left Eye | video | 30 Hz | 640 × 480 b/w | USB |
| | Right Eye | | 30 Hz | 640 × 480 b/w | |
| | Field | | 30 Hz | 640 × 480 color | |
| APDM Opal | Acceleration | 3-axes | 20–128 Hz | g– g | Proprietary GHz and USB |
| | Angular Velocity | | 20–128 Hz | deg/s | |
| | Magnetic Field | | 20–128 Hz | gauss | |
| Movesense HR2 | ECG | 1-channel | 125–512 Hz | | Bluetooth / |
| | Acceleration | 3-axes | –208 Hz | g– g | |
| | Angular Velocity | | –208 Hz | deg/s | |
| | Magnetic Field | | –208 Hz | gauss | |

| Server | Location | Services |

|---|---|---|

| IMeRa | Medical Datacenter Tübingen | IMeRa Server and DB |

| Keycloak | Medical Datacenter Tübingen | Keycloak Server and DB |

| Nextcloud | Datacenter Frankfurt | Nextcloud Server and DB, coturn |

| Services | University Datacenter Tübingen | Traefik, Coturn, Signalling, Gamification Server, and Admin, Therapist, and Patient Portal’s Nginx |

| Sensor | Requirements | Percentage |

|---|---|---|

| Eye Tracking (video) | MBph | % |

| Eye Tracking (gaze estimation) | MBph | % |

| APDM Movement | MBph | % |

| Movesense Movement | MBph | % |

| Movesense ECG | MBph | % |

| Total | 2710.1 MBph | 100% |

| Application | CPU Utilization |

|---|---|

| Look Eye Tracking | % |

| Aggregator Frontend | % |

| Aggregator Backend | % |

| Others | % |

| Windows Defender | % |

| Windows System | % |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Primbs, J.; Ilg, W.; Thierfelder, A.; Severitt, B.; Hohnecker, C.S.; Alt, A.K.; Pascher, A.; Wörz, U.; Lautenbacher, H.; Hollmann, K.; Barth, G.M.; Renner, T.; Menth, M. The SSTeP-KiZ System—Secure Real-Time Communication Based on Open Web Standards for Multimodal Sensor-Assisted Tele-Psychotherapy. Sensors 2022, 22, 9589. https://doi.org/10.3390/s22249589