Robot-Assisted Rehabilitation Architecture Supported by a Distributed Data Acquisition System

Abstract

1. Introduction

- Limiting viral transmission: Since the SARS-CoV-2 outbreak, the use of robots in hospitals has grown significantly, mostly to limit the spread of viruses [4]. Robots cannot be infected, and the risk of disease transmission via human–robot–human contact is minimal. This makes them valuable for pandemic control, where they can be used for cleaning, transportation, and telemedicine [5].

- Patient monitoring and pressure relief: Using onboard and/or external sensors, robots can monitor patients and highlight improvement or deterioration in their health; the most advanced robots can even perform some diagnostics. In addition, robots can free clinicians from laborious and repetitive tasks.

2. Related Works

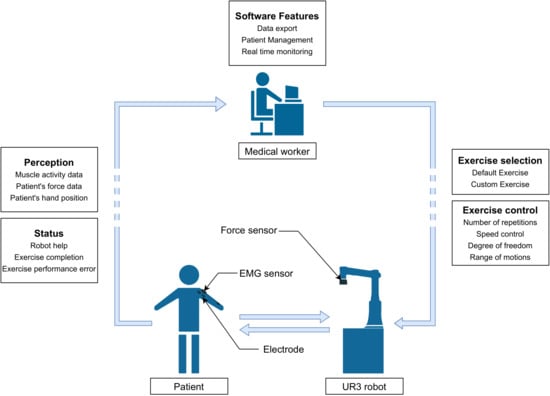

3. Rehabilitation System Architecture

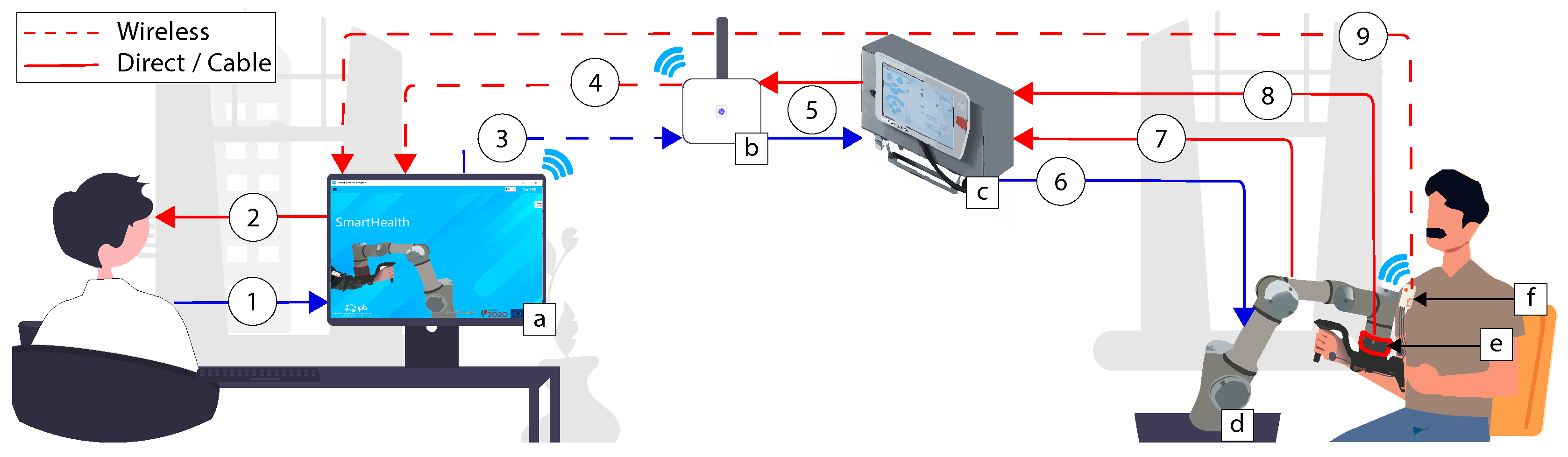

- (a) SmartHealth software: The software can be installed on any computer running the Windows operating system. Inside the software, several real-time loops are responsible for reading data, displaying it, and taking action;

- (b) Router: For wireless communication, a router linked via Ethernet cable to the UR3 robotic arm is necessary. The physiotherapist needs only to connect the computer to the router network. The router acts as a bridge that transmits information between the robot and the computer. Alternatively, a direct Ethernet connection can be used, which removes the need for the router;

- (c) Control box and teach pendant: The teach pendant is normally used to communicate with the robot through the UR programming interface. In this architecture, the teach pendant is not needed, but the control box is still required;

- (d) UR3: The Universal Robots UR3 is the robotic arm responsible for performing upper limb rehabilitation and driving the patient’s hand;

- (e) Robotiq FT300: An external 6-DOF force and torque sensor that monitors the force applied by the patient during the rehabilitation sessions;

- (f) EMG sensor: The EMG sensor allows real-time monitoring and transfer of the patient’s muscular activity via Bluetooth.

- (1): The physiotherapist assigns the rehabilitation method to be performed and introduces the patient’s characteristics and specificities;

- (2): Patient and exercise monitoring via the graphical software interface;

- (3): Robot command. The command sent can be a moving command, composed of a specific position/speed in , followed by other parameters such as arm acceleration, arm speed, and blending with the previous set-point. It can be a data request, for example, the actual robot position, force, torque, etc.;

- (4): The data received following the requested command in (3). It can be an acknowledgment of the requested command, force, torque, position, or joint angle data;

- (5): Bi-directional data transmission between the router and robotic arm control box;

- (6): The continuation of the data sequence transmitted in (3);

- (7) and (8): The origin of the data transmitted to (4);

- (9): Bi-directional data transmission, consisting of bytes sent to and received from the Shimmer EMG sensor.
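The moving command described in item (3) can be sketched as follows. This is a minimal illustration, not the SmartHealth implementation: it assumes the command is expressed as a URScript `movel` call and sent over TCP/IP to the controller's secondary client interface (port 30002). The source does not state which interface or script function is used, and the helper names `format_movel` and `send_command`, as well as all numeric values, are hypothetical.

```python
import socket
from typing import Sequence


def format_movel(pose: Sequence[float], accel: float, speed: float,
                 blend: float = 0.0) -> str:
    """Build a URScript movel command for a Cartesian target pose.

    pose  -- [x, y, z, rx, ry, rz]: position in metres, rotation vector in radians
    accel -- tool acceleration in m/s^2
    speed -- tool speed in m/s
    blend -- blend radius with the neighbouring set-point, in metres
    """
    if len(pose) != 6:
        raise ValueError("pose must have six components")
    pose_text = ", ".join(f"{value:.4f}" for value in pose)
    # URScript commands are newline-terminated text.
    return f"movel(p[{pose_text}], a={accel:.3f}, v={speed:.3f}, r={blend:.3f})\n"


def send_command(host: str, command: str, port: int = 30002,
                 timeout: float = 2.0) -> None:
    """Send one command string to the robot controller (assumed interface)."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(command.encode("ascii"))


# Hypothetical target pose and motion parameters, for illustration only.
command = format_movel([0.30, -0.10, 0.25, 0.0, 3.1416, 0.0],
                       accel=0.5, speed=0.1, blend=0.01)
```

With a robot reachable on the network, `send_command("<robot-ip>", command)` would transmit the string; the formatting step is kept separate so that it can be checked without hardware.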

3.1. SmartHealth Software

3.2. Control Flowchart

3.3. Safety Strategy

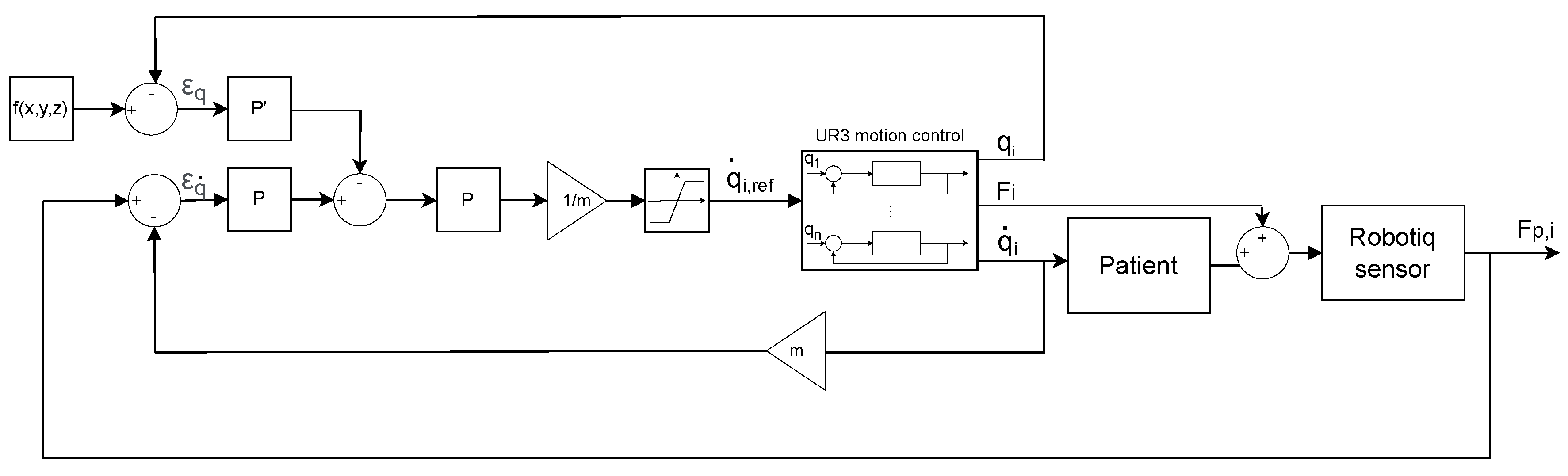

3.4. Control Strategy

3.5. Shimmer EMG Device

4. Results and Discussion

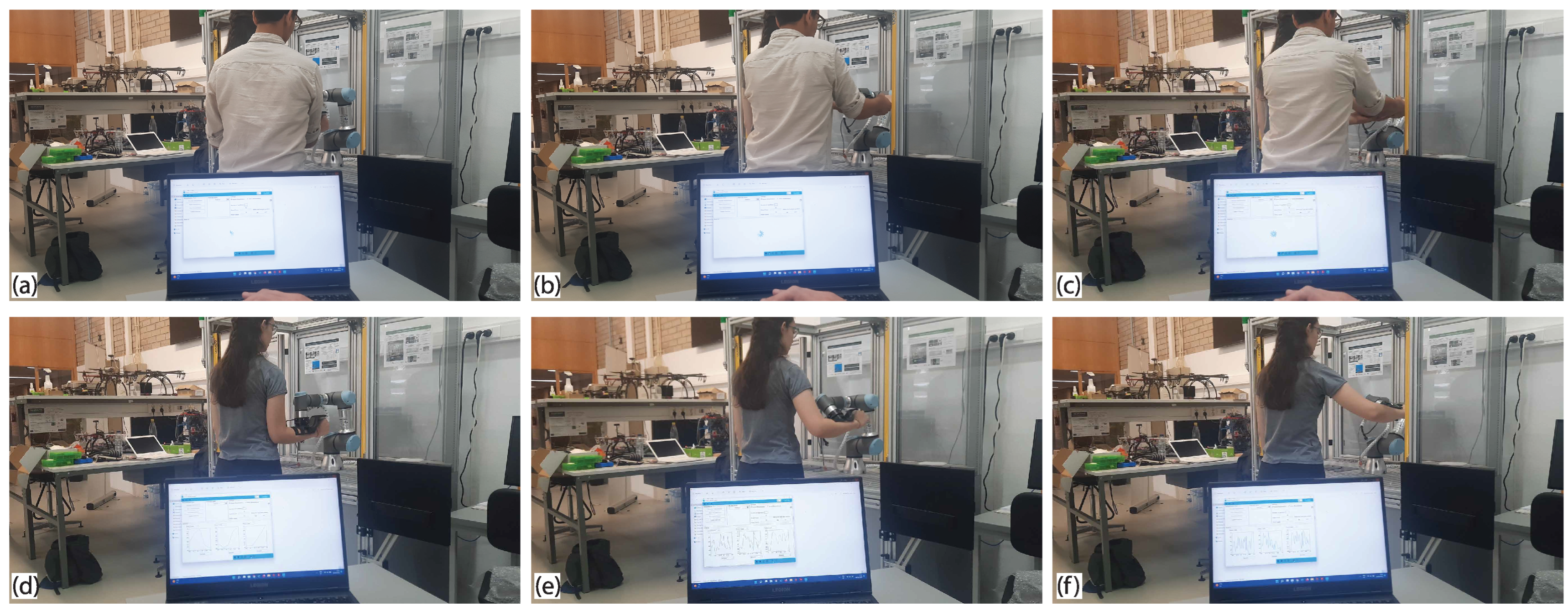

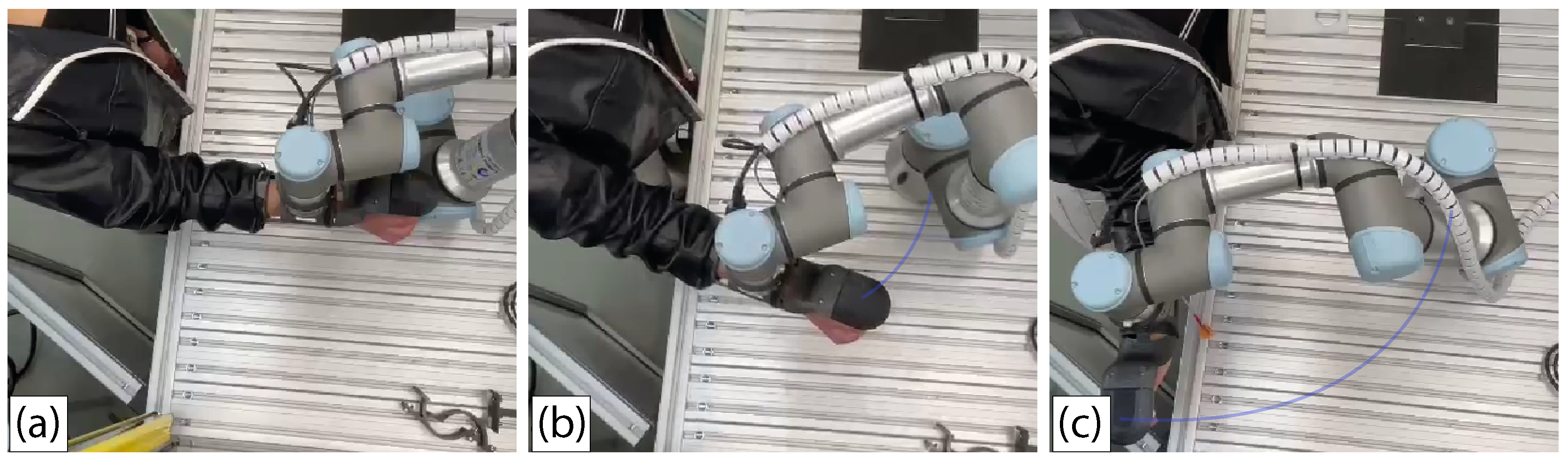

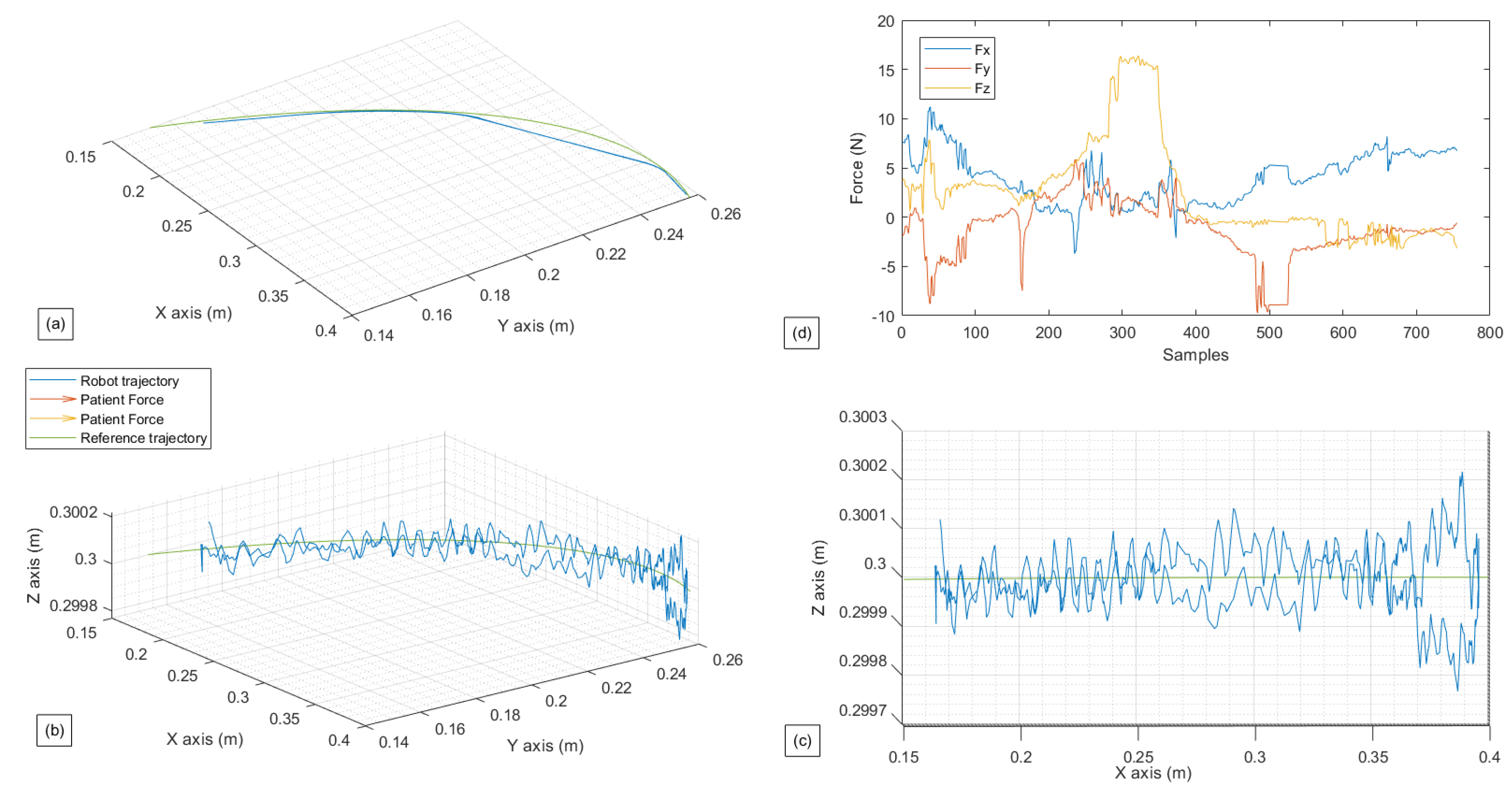

- The first exercise is a Lateral Shoulder Rotation exercise in passive mode, in which the patient is expected to exert little or no force;

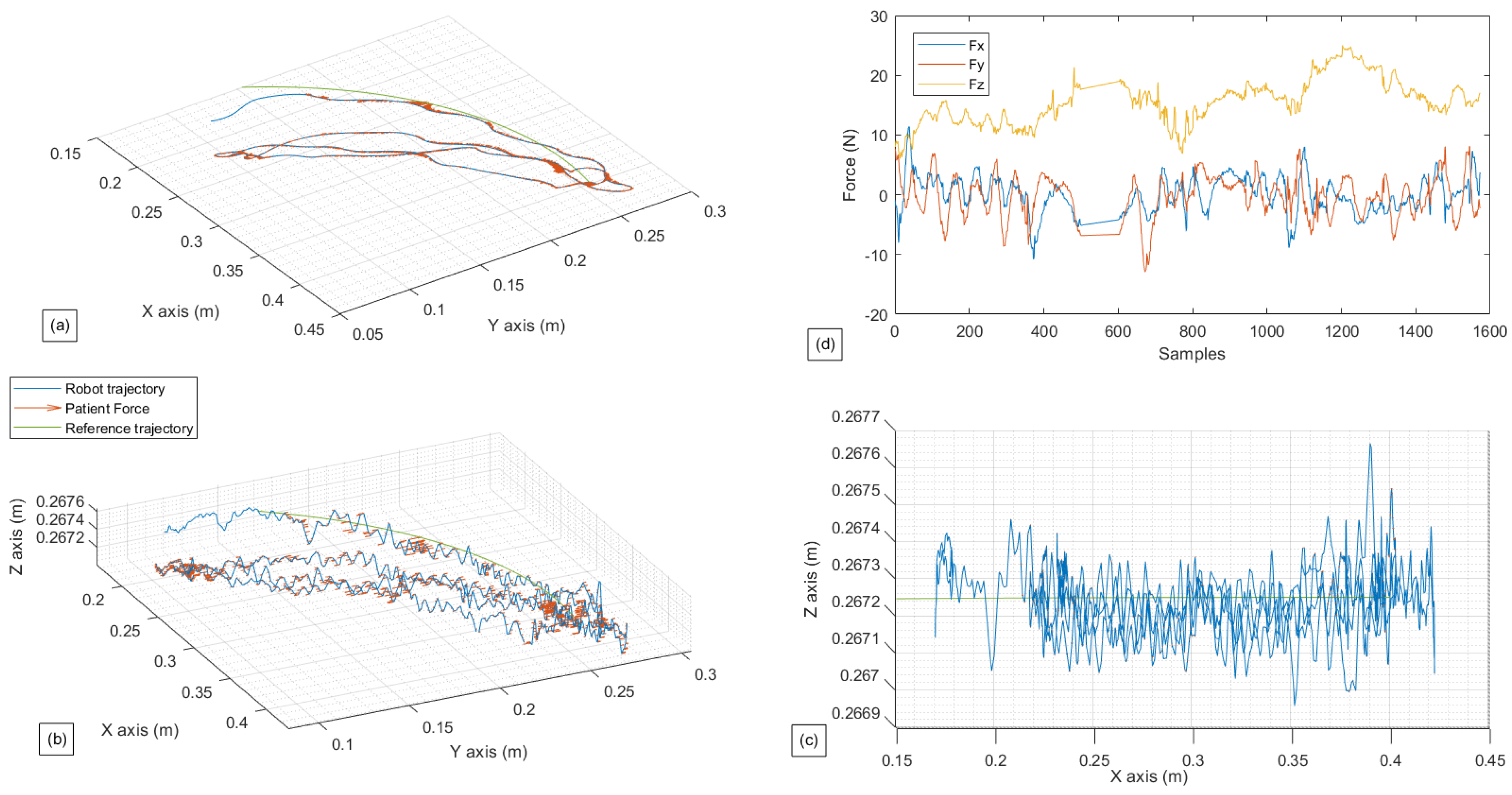

- The second exercise is a Lateral Shoulder Rotation exercise in a restricted active-assisted mode, where the robot movement is restricted to a 2D planar environment and ();

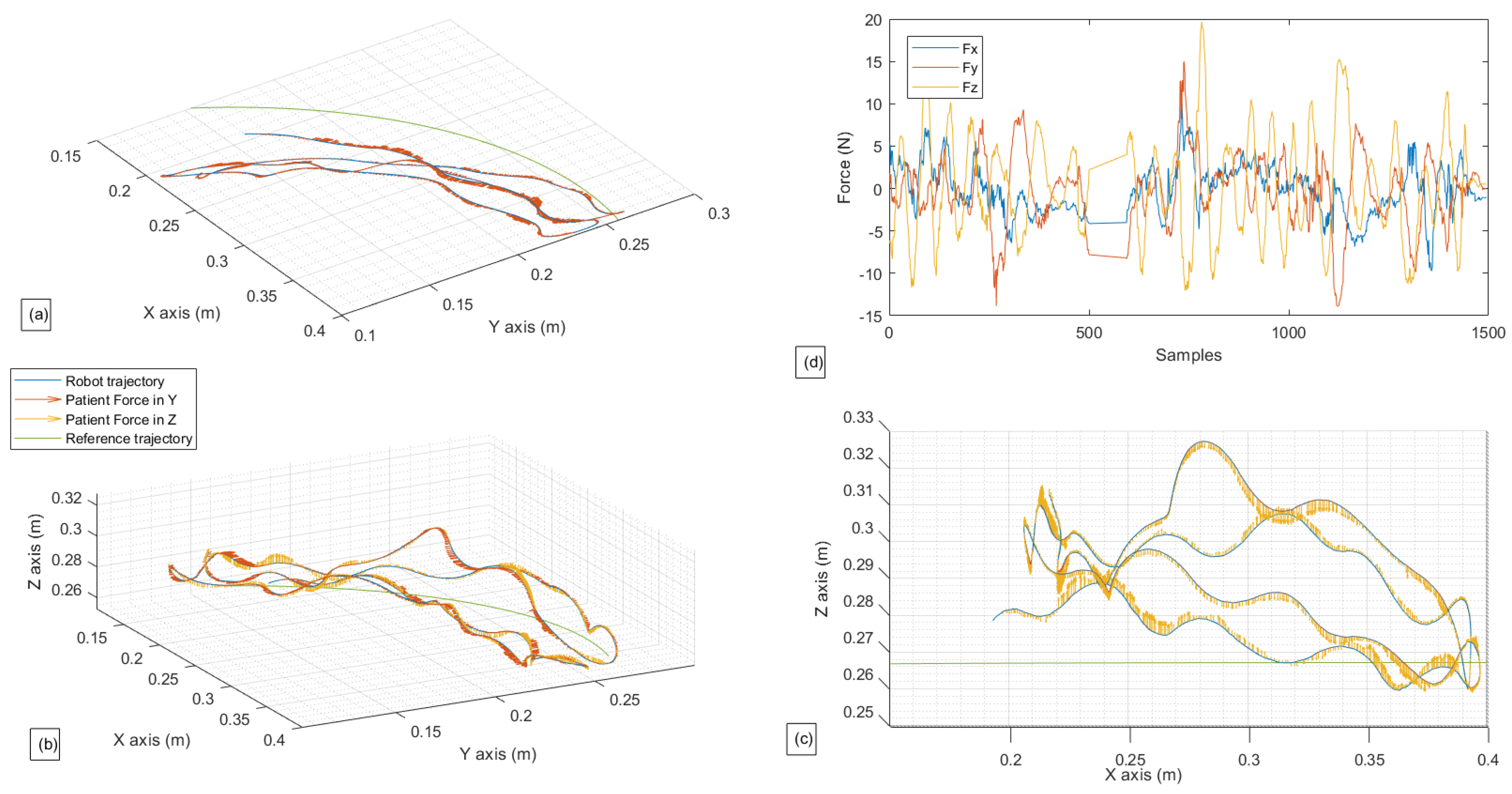

- The third exercise is a Lateral Shoulder Rotation exercise in a free active-assisted mode, where the robot’s movements are totally free in , with restriction applied only in and ;

- The fourth exercise is an ADL that consists of mimicking the action of taking a cup, drinking from it, and putting it back. This exercise is made possible by the software’s “Custom exercise” functionality, which allows any exercise to be created manually by simply moving the robotic arm; its movements are then recorded and reproduced.
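The record-and-reproduce idea behind the “Custom exercise” functionality can be illustrated with a short sketch. It is not the SmartHealth code: the class `CustomExercise`, the `min_step` filter that skips nearly identical poses, and the sample poses are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# A tool pose: x, y, z in metres followed by rx, ry, rz in radians.
Pose = Tuple[float, float, float, float, float, float]


@dataclass
class CustomExercise:
    """Stores poses captured while the arm is moved by hand, then replays them."""
    min_step: float = 0.005  # minimum travel (m) between stored waypoints
    waypoints: List[Pose] = field(default_factory=list)

    def record(self, pose: Pose) -> bool:
        """Store the pose if the tool moved at least min_step since the last one."""
        if self.waypoints:
            last = self.waypoints[-1]
            moved = sum((a - b) ** 2 for a, b in zip(pose[:3], last[:3])) ** 0.5
            if moved < self.min_step:
                return False
        self.waypoints.append(pose)
        return True

    def replay(self, repetitions: int = 1) -> List[Pose]:
        """Return the poses to send to the robot, repeated as requested."""
        return list(self.waypoints) * repetitions


# Hypothetical hand-guided motion along the x axis.
exercise = CustomExercise(min_step=0.01)
for x in (0.300, 0.302, 0.315, 0.330):
    exercise.record((x, 0.0, 0.2, 0.0, 3.14, 0.0))

trajectory = exercise.replay(repetitions=2)
```

Filtering by travelled distance keeps the stored trajectory compact when the arm is held still during recording; a real system would likely also store timestamps so that the original speed can be reproduced.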

4.1. Experimental Settings

4.2. Experimental Tests

5. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DOA | Degree of Autonomy |
| EMG | Electromyography |
| ADL | Activity of Daily Living |
| ROM | Range of Motion |
| HRC | Human–Robot Collaboration |
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| DOF | Degree of Freedom |
| IoT | Internet of Things |
| GUI | Graphical User Interface |
| MQTT | Message Queuing Telemetry Transport |
| cmd | command |
| TCP | Tool Center Point |
| TCP/IP | Transmission Control Protocol/Internet Protocol |
| CSV | Comma-Separated Values |

References

1. Chellal, A.A.; Lima, J.; Fernandes, F.P.; Gonçalves, J.; Pacheco, M.F.; Monteiro, F.C. Overview of robotic based system for rehabilitation and healthcare. In International Conference on Optimization, Learning Algorithms and Applications; Springer: Bragança, Portugal, 2021; pp. 515–530.

2. Li, M.; Zhang, J.; Zuo, G.; Feng, G.; Zhang, X. Assist-as-needed control strategy of bilateral upper limb rehabilitation robot based on GMM. Machines 2022, 10, 76.

3. Laut, J.; Porfiri, M.; Raghavan, P. The present and future of robotic technology in rehabilitation. Curr. Phys. Med. Rehabil. Rep. 2016, 4, 312–319.

4. Zanchettin, A.M.; Facciotti, F. A collaborative robotic solution to partly automate SARS-CoV-2 serological tests in small facilities. SLAS Technol. 2022, 27, 100–106.

5. Shen, Y.; Guo, D.; Long, F.; Mateos, L.A.; Ding, H.; Xiu, Z.; Tan, H. Robots under COVID-19 pandemic: A comprehensive survey. IEEE Access 2020, 9, 1590–1615.

6. Song, W.K.; Lee, H.Y.; Kim, J.S.; Yoon, Y.S.; Bien, Z. KARES: Intelligent rehabilitation robotic system for the disabled and the elderly. In Proceedings of the 20th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Hong Kong, China, 1 November 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 2682–2685.

7. Bejczy, A.K. Towards development of robotic aid for rehabilitation of locomotion-impaired subjects. In Proceedings of the First Workshop on Robot Motion and Control (RoMoCo’99), Kiekrz, Poland, 28–29 June 1999; IEEE: Piscataway, NJ, USA, 1999; pp. 9–16.

8. Krebs, H.I.; Volpe, B.T.; Williams, D.; Celestino, J.; Charles, S.K.; Lynch, D.; Hogan, N. Robot-aided neurorehabilitation: A robot for wrist rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2007, 15, 327–335.

9. Kim, B.; Deshpande, A.D. Controls for the shoulder mechanism of an upper-body exoskeleton for promoting scapulohumeral rhythm. In Proceedings of the 2015 IEEE International Conference on Rehabilitation Robotics, Singapore, 11–14 August 2015; IEEE: Piscataway, NJ, USA, 2017; pp. 538–542.

10. Al-Rahmani, N.A.; Mohan, D.M.; Awad, M.I.; Wasti, S.A.; Hussain, I.; Khalaf, K. Lower-Limb Robotic Assistance Devices for Drop Foot: A Review. IEEE Access 2022, 10, 51964–51994.

11. Kim, K.T.; Choi, Y.; Cho, J.H.; Lee, S. Feasibility and Usability Study of a Robot-Assisted Complex Upper and Lower Limb Rehabilitation System in Patients with Stroke. Preprints 2022, 2022030311.

12. Yoon, J.; Novandy, B.; Yoon, C.H.; Park, K.J. A 6-DOF gait rehabilitation robot with upper and lower limb connections that allows walking velocity updates on various terrains. IEEE/ASME Trans. Mechatron. 2010, 15, 201–215.

13. Díaz, I.; Catalan, J.M.; Badesa, F.J.; Justo, X.; Lledo, L.D.; Ugartemendia, A.; Gil, J.J.; Díez, J.; García-Aracil, N. Development of a robotic device for post-stroke home tele-rehabilitation. Adv. Mech. Eng. 2018, 10, 1–8.

14. Wu, J.; Gao, J.; Song, R.; Li, R.; Li, Y.; Jiang, L. The design and control of a 3DOF lower limb rehabilitation robot. Mechatronics 2016, 33, 13–22.

15. Wang, H.; Lin, M.; Jin, Z.; Yan, H.; Liu, G.; Liu, S.; Hu, X. A 4-DOF workspace lower limb rehabilitation robot: Mechanism design, human joint analysis and trajectory planning. Appl. Sci. 2020, 10, 4542.

16. Wu, K.Y.; Su, Y.Y.; Yu, Y.L.; Lin, C.H.; Lan, C.C. A 5-degrees-of-freedom lightweight elbow-wrist exoskeleton for forearm fine-motion rehabilitation. IEEE/ASME Trans. Mechatron. 2019, 24, 2684–2695.

17. Lin, C.H.; Lien, W.M.; Wang, W.W.; Chen, S.H.; Lo, C.H.; Lin, S.Y.; Fu, L.C.; Lai, J.S. NTUH-II robot arm with dynamic torque gain adjustment method for frozen shoulder rehabilitation. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 3555–3560.

18. Fernandes, L.D.; Lima, J.L.; Leitão, P.; Nakano, A.Y. Using a collaborative robot to the upper limb rehabilitation. In Iberian Robotics Conference; Springer: Cham, Switzerland, 2019; pp. 429–440.

19. Fernandes, L.; Brito, T.; Piardi, L.; Lima, J.; Leitão, P. A Real Framework to Apply Collaborative Robots in Upper Limb Rehabilitation. In Proceedings of the 13th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2020), Valletta, Malta, 24–26 February 2020; SCITEPRESS: Setúbal, Portugal, 2020; pp. 176–183.

20. Zimmermann, Y.; Forino, A.; Riener, R.; Hutter, M. ANYexo: A versatile and dynamic upper-limb rehabilitation robot. IEEE Robot. Autom. Lett. 2019, 4, 3649–3656.

21. Zimmermann, Y.; Küçüktabak, E.-B.; Farshidian, F.; Riener, R.; Hutter, M. Towards Dynamic Transparency: Robust Interaction Force Tracking Using Multi-Sensory Control on an Arm Exoskeleton. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 7417–7424.

22. Kwakkel, G.; Wagenaar, R.C.; Kollen, B.J.; Lankhorst, G.J. Predicting disability in stroke—A critical review of the literature. Age Ageing 1996, 25, 479–489.

23. WHO. Health Organization: WHO Guidelines on Health-Related Rehabilitation; WHO: Geneva, Switzerland, 2014.

24. Siciliano, B.; Khatib, O. (Eds.) Springer Handbook of Robotics; Springer: Berlin, Germany, 2016.

25. Kebria, P.M.; Al-Wais, S.; Abdi, H.; Nahavandi, S. Kinematic and dynamic modelling of UR5 manipulator. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 004229–004234.

26. Universal Robots: UR3 Service Manual “Original instructions”, 3rd ed.; 2019. Available online: https://s3-eu-west-1.amazonaws.com/ur-support-site/15735/UR3_Service_Manual_en.pdf (accessed on 13 June 2022).

27. Scimmi, L.S.; Melchiorre, M.; Mauro, S.; Pastorelli, S.P. Implementing a vision-based collision avoidance algorithm on a UR3 Robot. In Proceedings of the 9th 2019 23rd International Conference on Mechatronics Technology (ICMT), Fisciano, Italy, 23–26 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

28. Mybotshop: Sales and Service Partner in the Field of Teaching and Research Robotics. Available online: https://www.mybotshop.de/Universal-Robots-UR3-UR3e_1 (accessed on 16 November 2022).

29. Haidegger, T. Autonomy for surgical robots: Concepts and paradigms. IEEE Trans. Med. Robot. Bionics 2019, 1, 65–76.

30. Miao, M.; Gao, X.; Zhu, W. A construction method of lower limb rehabilitation robot with remote control system. Appl. Sci. 2021, 11, 867.

31. Gattupalli, S.; Lioulemes, A.; Gieser, S.N.; Sassaman, P.; Athitsos, V.; Makedon, F. Magni: A real-time robot-aided game-based tele-rehabilitation system. In International Conference on Universal Access in Human-Computer Interaction; Springer: Cham, Switzerland, 2016; pp. 344–354.

32. Bouteraa, Y.; Ben Abdallah, I.; Ibrahim, A.; Ahanger, T.A. Development of an IoT-based solution incorporating biofeedback and fuzzy logic control for elbow rehabilitation. Appl. Sci. 2020, 10, 7793.

33. Bouteraa, Y.; Ben Abdallah, I.; Alnowaiser, K.; Islam, M.R.; Ibrahim, A.; Gebali, F. Design and Development of a Smart IoT-Based Robotic Solution for Wrist Rehabilitation. Micromachines 2022, 13, 973.

34. Meng, Q.; Zhang, H.; Yu, H. An internet of things framework based on upper limb rehabilitation robots for rehabilitation. In International Conference on Intelligent and Interactive Systems and Applications; Springer: Cham, Switzerland, 2018; pp. 752–759.

35. Zhao, Y.; Liang, C.; Gu, Z.; Zheng, Y.; Wu, Q. A new design scheme for intelligent upper limb rehabilitation training robot. Int. J. Environ. Res. Public Health 2020, 17, 2948.

36. Kosar, T.; Lu, Z.; Mernik, M.; Horvat, M.; Črepinšek, M. A Case Study on the Design and Implementation of a Platform for Hand Rehabilitation. Appl. Sci. 2021, 11, 389.

37. Lai, Y.; Sutjipto, S.; Clout, M.D.; Carmichael, M.G.; Paul, G. GAVRe2: Towards data-driven upper-limb rehabilitation with adaptive-feedback gamification. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 164–169.

38. Chellal, A.A.; Lima, J.; Gonçalves, J.; Fernandes, F.P.; Pacheco, M.F.; Monteiro, F.C.; Valente, A. SmartHealth: A Robotic Control Software for Upper Limb Rehabilitation. In APCA International Conference on Automatic Control and Soft Computing; Springer: Cham, Switzerland, 2002; pp. 667–676.

39. Hocine, N.; Gouaïch, A.; Cerri, S.A.; Mottet, D.; Froger, J.; Laffont, I. Adaptation in serious games for upper-limb rehabilitation: An approach to improve training outcomes. User Model. User-Adapt. Interact. 2015, 25, 65–98.

40. Kyrkjebø, E.; Laastad, M.J.; Stavdahl, Ø. Feasibility of the UR5 industrial robot for robotic rehabilitation of the upper limbs after stroke. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

41. Brito, T.; Queiroz, J.; Piardi, L.; Fernandes, L.A.; Lima, J.; Leitão, P. A machine learning approach for collaborative robot smart manufacturing inspection for quality control systems. Procedia Manuf. 2020, 51, 11–18.

42. Franco, T.; Sestrem, L.; Henriques, P.R.; Alves, P.; Varanda Pereira, M.J.; Brandão, D.; Silva, A. Motion Sensors for Knee Angle Recognition in Muscle Rehabilitation Solutions. Sensors 2022, 22, 7605.

43. Burns, A.; Doheny, E.P.; Greene, B.R.; Foran, T.; Leahy, D.; O’Donovan, K.; McGrath, M.J. SHIMMER™: An extensible platform for physiological signal capture. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 3759–3762.

44. Realtime Technologies. Shimmer: Shimmer User Manual; Revision 3; Realtime Technologies: Dublin, Ireland, 2017.

45. Bandy, W.D.; Sanders, B. Therapeutic Exercise for Physical Therapist Assistants; Lippincott Williams & Wilkins: Baltimore, MD, USA, 2008.

46. Randazzo, L.; Iturrate, I.; Perdikis, S.; Millán, J.D.R. mano: A wearable hand exoskeleton for activities of daily living and neurorehabilitation. IEEE Robot. Autom. Lett. 2017, 3, 500–507.

| Feature | Symbol | Value | Unit |
|---|---|---|---|
| Force Measurement Range | | ±300 | N |
| Moments Measurement Range | | ±30 | N·m |
| Data Output Rate | F | 100 | Hz |
| Weight | m | 0.3 | kg |
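As a small worked example of how the values in the table above could be used, the sketch below derives the sample period from the output rate and checks a reading against the measurement ranges. It assumes the table describes the force and torque sensor introduced in Section 3, that each limit applies per axis, and that a reading is ordered as three forces followed by three moments; the function names and sample readings are hypothetical.

```python
from typing import Sequence

FORCE_LIMIT_N = 300.0    # force measurement range, ±300 N (from the table)
MOMENT_LIMIT_NM = 30.0   # moment measurement range, ±30 N·m (from the table)
OUTPUT_RATE_HZ = 100.0   # data output rate (from the table)


def sample_period_ms(rate_hz: float = OUTPUT_RATE_HZ) -> float:
    """Time between two consecutive sensor samples, in milliseconds."""
    return 1000.0 / rate_hz


def within_range(wrench: Sequence[float]) -> bool:
    """Check a reading [Fx, Fy, Fz, Mx, My, Mz] against the per-axis limits.

    Applying the limits per axis is an assumption of this sketch.
    """
    if len(wrench) != 6:
        raise ValueError("wrench must have six components")
    forces, moments = wrench[:3], wrench[3:]
    return (all(abs(f) <= FORCE_LIMIT_N for f in forces)
            and all(abs(m) <= MOMENT_LIMIT_NM for m in moments))


period = sample_period_ms()                                  # 10.0 ms at 100 Hz
valid = within_range([12.0, -5.0, 40.0, 0.5, -1.2, 0.1])     # inside both ranges
saturated = within_range([0.0, 0.0, 350.0, 0.0, 0.0, 0.0])   # Fz exceeds 300 N
```

At 100 Hz a new sample arrives every 10 ms, which bounds how quickly any logic built on these readings can react to a change in the measured force.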

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chellal, A.A.; Lima, J.; Gonçalves, J.; Fernandes, F.P.; Pacheco, F.; Monteiro, F.; Brito, T.; Soares, S. Robot-Assisted Rehabilitation Architecture Supported by a Distributed Data Acquisition System. Sensors 2022, 22, 9532. https://doi.org/10.3390/s22239532
