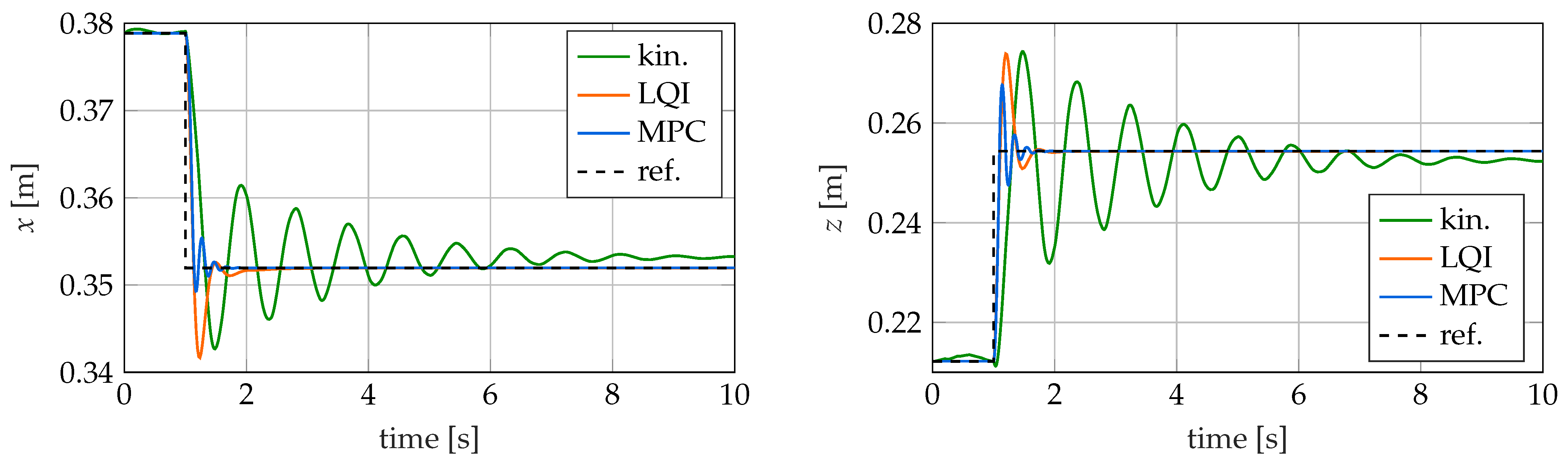

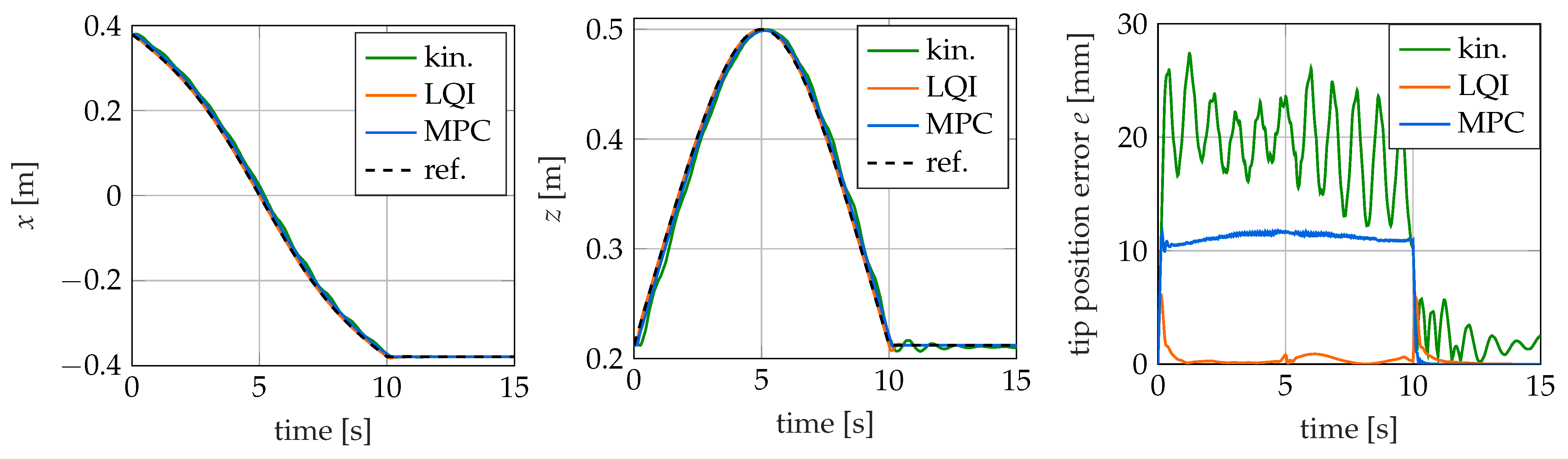

In the following, the three examined control methods are briefly presented.

3.1. Kinematic Control

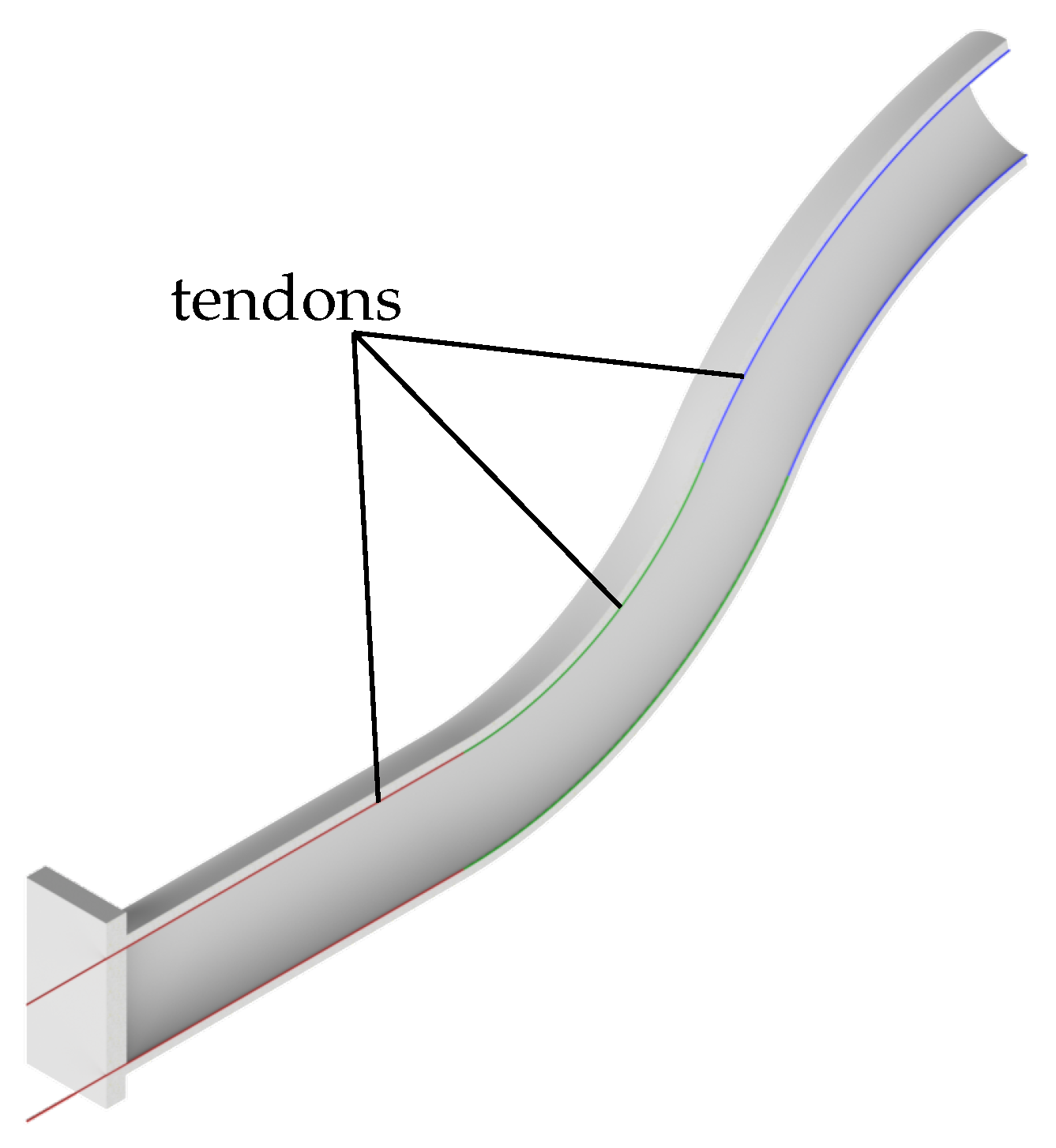

The first considered controller uses a model-free, kinematic approach based on [4,5]. Here, a shallow neural network is used to learn the global inverse kinematics of a soft robot. The learned mapping can then be used to determine the necessary control values for a desired configuration of the robot. The dynamics of the system are neglected.

In general, the direct kinematics of the soft robot can be denoted as

with the vector of the control variables

and the resulting steady-state tip position

. To determine the inverse kinematics, the direct kinematics from Equation (

19) have to be inverted. Since the considered soft robot is kinematically redundant, there exists no unique global solution for this; the function

is surjective. However, the inverse differential kinematics

can be used to obtain a locally unique solution [

41]. Here,

is the Jacobian-matrix of

with respect to the control variables

. The variations of the tip position

and the control variables

are denoted

and

, respectively. Following [

5], Equation (

20) can be discretized such that

Here,

is the vector of control variables that reaches the positions

starting from the current position

with the corresponding control variables

. In general, Equation (

21) only holds if the difference between the control variables

and

is infinitesimally small. In practice, [

41] shows that the equation also provides usable approximate solutions for larger distances.

For the kinematic control, Equation (

21) must be solved for the control variable vector

. This results in

with

as the Moore–Penrose pseudoinverse of the Jacobian matrix

. The mapping function of

,

and

to

is denoted as

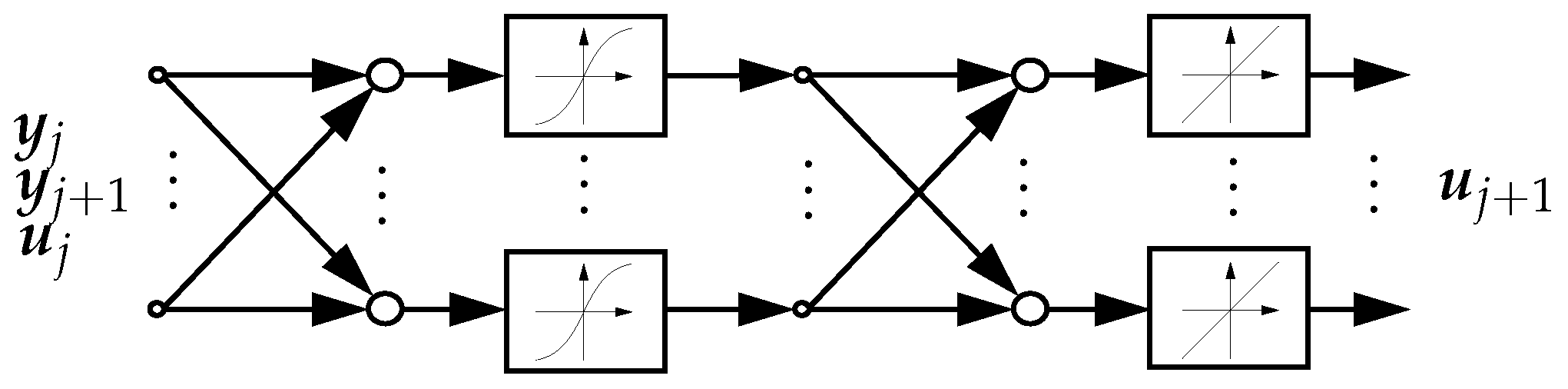

in the following. It represents a locally valid inversion of the kinematics of the soft robot. For the soft robot model considered in this contribution, the function of the local inverse kinematics

cannot be determined analytically. Therefore, in the following, it is approximated with a neural network with one hidden layer. The structure of the neural network is shown in

Figure 4. The input layer has seven neurons. The hidden layer has 30 neurons and uses the tangent hyperbolicus as activation function. The output layer has three neurons and uses a linear activation function. The training is performed with Bayesian regularization.
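The pseudoinverse step that the network is trained to imitate can be illustrated with a small numerical sketch. It assumes a two-dimensional tip position and three control variables (which would match the seven inputs and three outputs of the network), a Jacobian with full row rank, and purely hypothetical numbers; the names `pinv_full_row_rank` and `kinematic_step` are illustrative and do not come from the paper.

```python
def pinv_full_row_rank(J):
    """Moore-Penrose pseudoinverse J^T (J J^T)^-1 of a 2 x n matrix with full row rank."""
    n = len(J[0])
    # Gram matrix G = J J^T (2 x 2, symmetric)
    g00 = sum(J[0][k] * J[0][k] for k in range(n))
    g01 = sum(J[0][k] * J[1][k] for k in range(n))
    g11 = sum(J[1][k] * J[1][k] for k in range(n))
    det = g00 * g11 - g01 * g01
    if abs(det) < 1e-12:
        raise ValueError("Jacobian does not have full row rank")
    # Entries of the inverse of G
    i00, i01, i11 = g11 / det, -g01 / det, g00 / det
    # J^+ = J^T G^-1 (n x 2)
    return [[J[0][k] * i00 + J[1][k] * i01,
             J[0][k] * i01 + J[1][k] * i11] for k in range(n)]


def kinematic_step(u_i, r_i, r_next, J):
    """Control variables for the next tip position: u_i + J^+ (r_next - r_i)."""
    J_pinv = pinv_full_row_rank(J)
    dr = [r_next[0] - r_i[0], r_next[1] - r_i[1]]
    return [u + row[0] * dr[0] + row[1] * dr[1] for u, row in zip(u_i, J_pinv)]


# Hypothetical local Jacobian and positions, for illustration only
J = [[1.0, 0.0, 1.0],
     [0.0, 1.0, 1.0]]
u_next = kinematic_step([0.0, 0.0, 0.0], [0.0, 0.0], [0.3, 0.6], J)
```

Among all control changes that produce the requested tip displacement to first order, the pseudoinverse selects the one with the smallest Euclidean norm, which is what makes the local solution unique despite the redundancy.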

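For the training of the neural network, a sufficient number of value pairs of the mapping $(\mathbf{r}_i, \mathbf{u}_i, \mathbf{r}_{i+1}) \mapsto \mathbf{u}_{i+1}$ from the current tip position, the current control variables and the next tip position to the next control variables is required. To determine the required training data, the simulation model described in Section 2.1 is used. For the generation of one pair of values, the vector of control variables $\mathbf{u}_i$ and the vector of varied control variables $\mathbf{u}_{i+1}$ are chosen randomly such that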

with

and

with

. Then, the robot is excited with the vector of control variables $\mathbf{u}_i$, and the resulting initial position $\mathbf{r}_i$ is determined after the transient processes have decayed. Subsequently, the control variables are changed to the new vector of control variables $\mathbf{u}_{i+1}$, which results in the corresponding position $\mathbf{r}_{i+1}$. In this way, a complete pair of values of the mapping is determined. This procedure is repeated 10,000 times to collect the training data for the neural network. This takes about

on a PC with an “Intel Core i7-6700K” processor. The resulting workspace of the soft robot is shown in Figure 5 and is reasonable and realistic for this type of robot.
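The sampling procedure described above can be sketched as follows. This is only an illustration: `steady_state_tip` is a hypothetical stand-in for the simulation model of Section 2.1, the bounds `u_max` and `du_max` are placeholder values rather than the ranges used in the paper, and clipping the varied control variables to the admissible range is an added assumption.

```python
import math
import random


def steady_state_tip(u):
    """Hypothetical stand-in for the simulation model: steady-state 2D tip position."""
    bend = 0.5 * (u[0] - u[1]) + 0.25 * u[2]
    return [math.sin(bend), math.cos(bend)]


def generate_samples(n_samples, u_max, du_max, seed=0):
    """Return (input, target) pairs: input = [r_i, u_i, r_next] (7 values), target = u_next (3 values)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        # Random initial control variables
        u_i = [rng.uniform(0.0, u_max) for _ in range(3)]
        # Randomly varied control variables, clipped to the admissible range (assumption)
        u_next = [min(u_max, max(0.0, u + rng.uniform(-du_max, du_max))) for u in u_i]
        # Steady-state positions before and after the change of the control variables
        r_i = steady_state_tip(u_i)
        r_next = steady_state_tip(u_next)
        samples.append((r_i + u_i + r_next, u_next))
    return samples


samples = generate_samples(100, u_max=1.0, du_max=0.2)
```

Each sample pairs a seven-element network input with a three-element target, which matches the layer sizes stated for the network.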

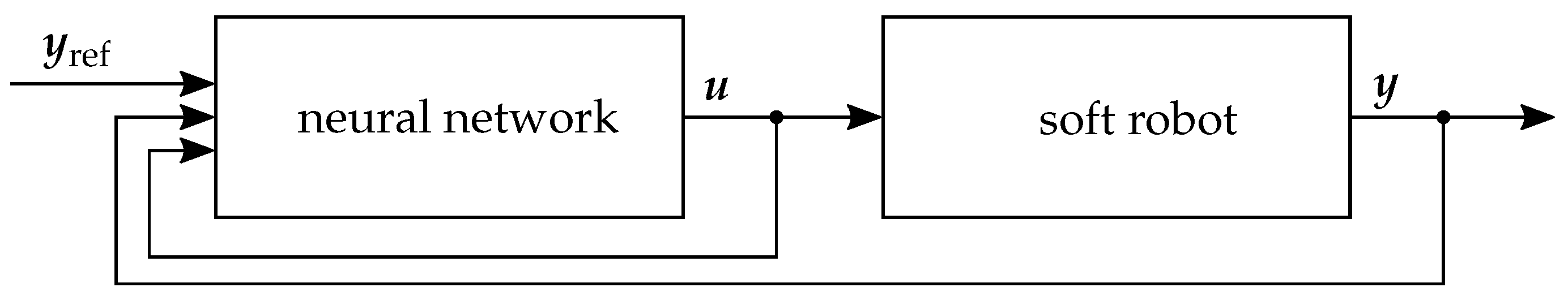

The trained neural network can now be used as a controller for the soft robot, as shown in Figure 6. The inputs of the neural network are the current tip position, the current control variables and the desired tip position. The outputs of the neural network are the control variables that are required to achieve the desired tip position. The controller runs with a sample frequency of

. The reason for this comparatively low frequency is that the kinematic controller neglects the dynamics of the soft robot. With each change in the control output $\mathbf{u}$, the dynamics of the system are excited; however, the kinematic controller assumes that the steady-state position is reached instantaneously. With the low sample frequency, the transients can at least partly decay before the measurements for the next control step are taken, which improves the performance and the stability of the controller. On the other hand, if the sample frequency of the controller is chosen even lower, the robot can only move very slowly.
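To make the controller structure concrete, the following sketch evaluates a network with seven inputs, 30 hyperbolic-tangent hidden neurons and three linear outputs in one control step. The weights are random placeholders, not trained values; the function names and the split of the seven inputs into 2 + 3 + 2 values are assumptions for illustration.

```python
import math
import random


def make_network(n_in=7, n_hidden=30, n_out=3, seed=0):
    """Create placeholder weights (untrained) for a network with one hidden layer."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [0.0] * n_out
    return W1, b1, W2, b2


def forward(net, x):
    """Forward pass: tanh hidden layer followed by a linear output layer."""
    W1, b1, W2, b2 = net
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W2, b2)]


def controller_step(net, r_current, u_current, r_desired):
    """One control step: stack the seven inputs and return three new control variables."""
    return forward(net, list(r_current) + list(u_current) + list(r_desired))


net = make_network()
u_new = controller_step(net, [0.1, 0.2], [0.5, 0.5, 0.5], [0.15, 0.25])
```

In a closed loop, `controller_step` would be called once per sample period with the measured tip position and the previously applied control variables.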

3.2. Linear Quadratic Control with Gain Scheduling

The second controller considered is a linear quadratic controller with integral action (LQI controller) and gain scheduling. This is an approach from optimal control that has not been applied to soft robotics so far. In general, for the control of linear time-invariant systems of the form

$$\dot{\mathbf{x}} = \mathbf{A}\mathbf{x} + \mathbf{B}\mathbf{u}, \qquad \mathbf{y} = \mathbf{C}\mathbf{x}$$

LQI controllers can be used [42]. Here, $\mathbf{x}$ is the state vector, $\mathbf{u}$ the input vector and $\mathbf{y}$ the output vector. The dynamic behavior of the linear system and the relationship between the input vector $\mathbf{u}$, the states $\mathbf{x}$ and the output $\mathbf{y}$ are described by the matrices $\mathbf{A}$, $\mathbf{B}$ and $\mathbf{C}$. As LQI controllers are extensively discussed in the literature, e.g., [43,44], they are not described in further detail here.

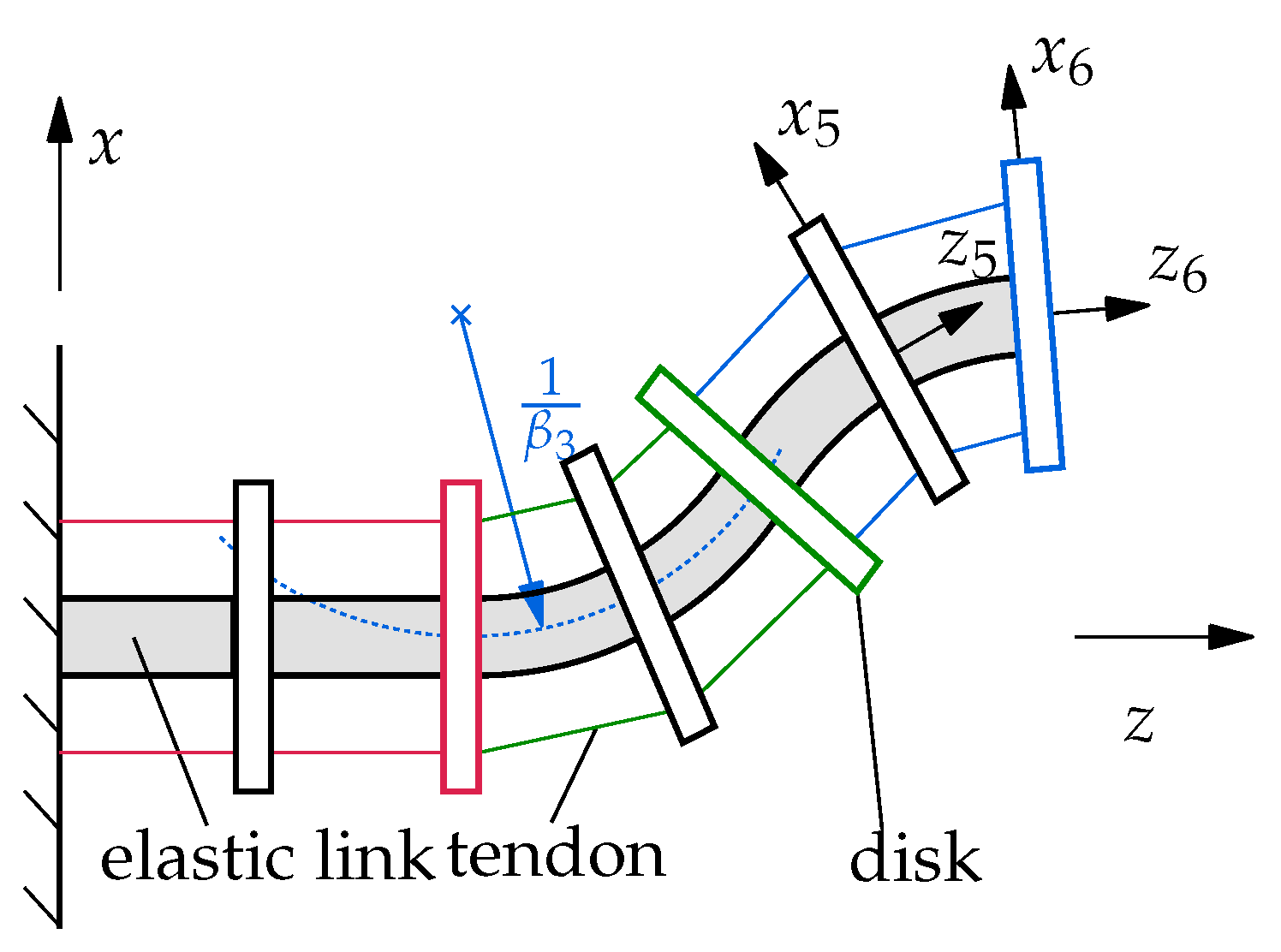

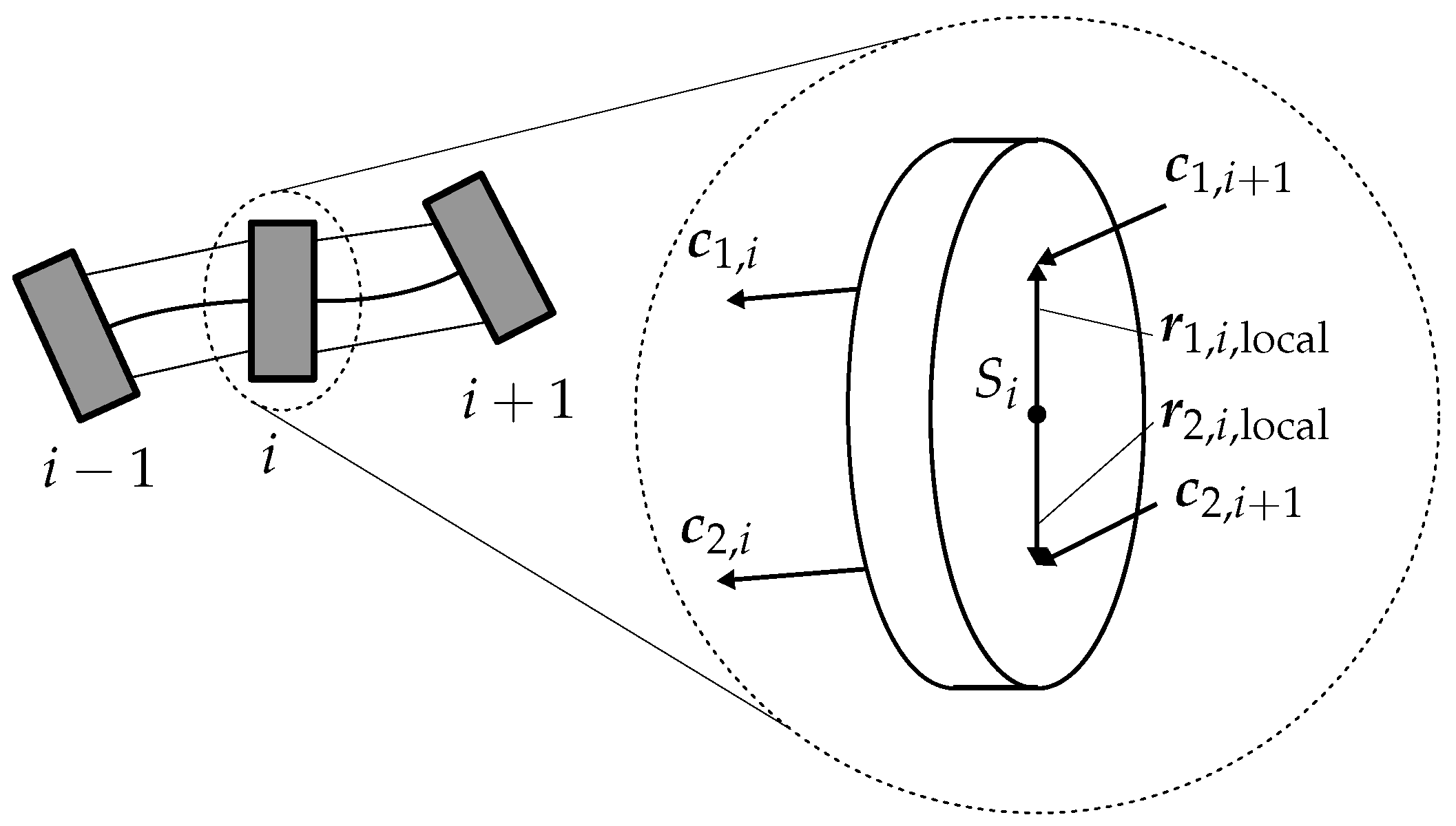

The controller considered so far requires a linear model of the system to be controlled for the design. However, the soft robot used in this contribution has nonlinear behavior, which can be described as

$$\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}, \mathbf{u}), \qquad \mathbf{y} = \mathbf{h}(\mathbf{x})$$

In order to derive an LQI controller for such a nonlinear system, it has to be linearized [45]. In this paper, the nonlinear system is therefore linearized around 2288 operating points (OP), which are regularly distributed in the workspace. The operating points are chosen such that the velocities of the soft robot are zero at these points. They are shown in Figure 7. Each operating point consists of a state vector $\mathbf{x}_{\mathrm{OP}}$, a control variable vector $\mathbf{u}_{\mathrm{OP}}$ and the corresponding system output $\mathbf{y}_{\mathrm{OP}}$. However, as long as no external forces are considered, as in this contribution, three parameters are sufficient to describe an operating point because the steady-state configuration of the soft robot then only depends on the three tendon forces. Here, the curvatures of the first, third and fifth segments, $\kappa_1$, $\kappa_3$ and $\kappa_5$, are chosen. These can be collected in the scheduling vector $[\kappa_1, \kappa_3, \kappa_5]^{\mathsf{T}}$.

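Given the state function $\mathbf{f}$, the output function $\mathbf{h}$ and the operating point, the matrices of the state space representation of the linearized system can be determined. These are calculated, as shown by [45], among others, as

$$\mathbf{A} = \left.\frac{\partial \mathbf{f}}{\partial \mathbf{x}}\right|_{\mathrm{OP}}, \qquad \mathbf{B} = \left.\frac{\partial \mathbf{f}}{\partial \mathbf{u}}\right|_{\mathrm{OP}}, \qquad \mathbf{C} = \left.\frac{\partial \mathbf{h}}{\partial \mathbf{x}}\right|_{\mathrm{OP}}$$

This results in the linearized system

$$\Delta\dot{\mathbf{x}} = \mathbf{A}\,\Delta\mathbf{x} + \mathbf{B}\,\Delta\mathbf{u}, \qquad \Delta\mathbf{y} = \mathbf{C}\,\Delta\mathbf{x}$$

with $\Delta\mathbf{x} = \mathbf{x} - \mathbf{x}_{\mathrm{OP}}$, $\Delta\mathbf{u} = \mathbf{u} - \mathbf{u}_{\mathrm{OP}}$ and $\Delta\mathbf{y} = \mathbf{y} - \mathbf{y}_{\mathrm{OP}}$.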
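When analytic derivatives are inconvenient, the linearization at an operating point can also be approximated numerically. The sketch below uses central finite differences on a hypothetical two-state toy system (a damped pendulum), not on the soft robot model; the names `f`, `h`, `jacobian` and `linearize` are illustrative assumptions, and the paper does not state how its Jacobians were obtained.

```python
import math


def jacobian(func, z, eps=1e-6):
    """Central finite-difference Jacobian of func at the point z."""
    cols = []
    for j in range(len(z)):
        z_plus, z_minus = list(z), list(z)
        z_plus[j] += eps
        z_minus[j] -= eps
        f_plus, f_minus = func(z_plus), func(z_minus)
        cols.append([(a - b) / (2.0 * eps) for a, b in zip(f_plus, f_minus)])
    # cols[j][i] holds d func_i / d z_j; transpose to obtain rows indexed by i
    return [list(row) for row in zip(*cols)]


def linearize(f, h, x_op, u_op):
    """Return A = df/dx, B = df/du and C = dh/dx evaluated at the operating point."""
    A = jacobian(lambda x: f(x, u_op), x_op)
    B = jacobian(lambda u: f(x_op, u), u_op)
    C = jacobian(h, x_op)
    return A, B, C


# Hypothetical toy system: damped pendulum with torque input
def f(x, u):
    return [x[1], -math.sin(x[0]) - 0.5 * x[1] + u[0]]


def h(x):
    return [x[0]]


# Operating point at rest (zero velocity), as required for the operating points in the text
A, B, C = linearize(f, h, [0.0, 0.0], [0.0])
```

For this toy system the result is close to A = [[0, 1], [-1, -0.5]], B = [[0], [1]] and C = [[1, 0]], which agrees with the analytic derivatives.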

For each of the linearized systems at the operating points, a separate LQI controller is now designed. For the control of the soft robot, in each time step, a trilinear interpolation is performed between the eight controllers spanning the cuboid in the three-dimensional parameter space in which the current scheduling vector lies. In Figure 8, the block diagram of the LQI controller with gain scheduling is shown. The control law results in

Here, the gain matrix of the controller is subdivided into the gain of the integrated control error for the integral behavior and the gain matrix for the proportional and derivative behavior of the controller.
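The trilinear interpolation between the eight neighboring controllers can be sketched as below. The data layout (a dictionary of gain matrices indexed by grid position), the grid values and the function name `trilinear_gain` are assumptions made for illustration; the gain values are synthetic and chosen so that the result is easy to check.

```python
def trilinear_gain(grid_axes, gains, s):
    """Interpolate gain matrices stored on a regular 3D grid.

    grid_axes: three sorted lists of grid coordinates (one per scheduling parameter)
    gains:     dict mapping index triples (i, j, k) to gain matrices (lists of lists)
    s:         scheduling vector with three entries inside the grid
    """
    idx, frac = [], []
    for axis, value in zip(grid_axes, s):
        # Find the cell with axis[i] <= value <= axis[i + 1]
        i = 0
        while i < len(axis) - 2 and value > axis[i + 1]:
            i += 1
        idx.append(i)
        t = (value - axis[i]) / (axis[i + 1] - axis[i])
        frac.append(min(1.0, max(0.0, t)))
    rows, cols = len(gains[(0, 0, 0)]), len(gains[(0, 0, 0)][0])
    K = [[0.0] * cols for _ in range(rows)]
    # Weighted sum over the eight corners of the enclosing cuboid
    for di in (0, 1):
        for dj in (0, 1):
            for dk in (0, 1):
                w = ((frac[0] if di else 1.0 - frac[0])
                     * (frac[1] if dj else 1.0 - frac[1])
                     * (frac[2] if dk else 1.0 - frac[2]))
                corner = gains[(idx[0] + di, idx[1] + dj, idx[2] + dk)]
                for r in range(rows):
                    for c in range(cols):
                        K[r][c] += w * corner[r][c]
    return K


# Synthetic 1 x 1 gains that vary linearly over the grid
axes = [[0.0, 1.0, 2.0]] * 3
gains = {(i, j, k): [[axes[0][i] + 2.0 * axes[1][j] + 3.0 * axes[2][k]]]
         for i in range(3) for j in range(3) for k in range(3)}
K = trilinear_gain(axes, gains, [0.5, 1.25, 1.75])
```

Because the eight weights are non-negative and sum to one, the interpolated gain varies continuously when the scheduling vector crosses from one cuboid into the next.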

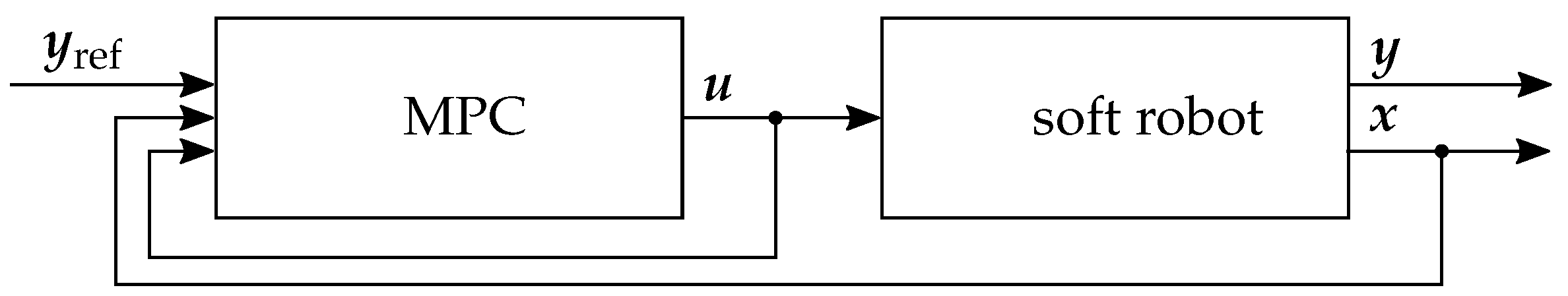

3.3. Model Predictive Control

The third control method considered is a model predictive controller (MPC). The block diagram of this controller is shown in Figure 9. This discrete, model-based method is used, e.g., by [25] for the control of a soft robot. The MPC solves an optimization problem in each time step of the control in order to determine the best possible control output $\mathbf{u}$. For this purpose, in every time step, the controller optimizes the control output for the next $c$ time steps (control horizon) such that the control error and control effort over the next $p$ time steps (prediction horizon) are minimized. The optimization problem is described by

with the cost function

where

Here,

,

and

are weighting matrices to specify the influence of the different cost terms. In total, $c \cdot m$ variables have to be computed in an optimization, where $m$ is the number of control variables. The parameters for the optimization are obtained by combining the control variables of the individual time points in the control horizon in the vector

Here, $\mathbf{u}_n$ is the control variable vector that applies to the time step with index $n$ in the control horizon. To solve the optimization problem, in every time step, the nonlinear soft robot model integrated into the controller has to be evaluated several times.

In this paper, a sample time of , a prediction horizon of steps and a control horizon of steps are chosen. Usually, better control results can be achieved with a shorter sample time and longer prediction and control horizons. However, the computational costs also increase because the optimization problem becomes harder to solve.
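The receding-horizon principle can be illustrated with a deliberately small sketch. It is not the controller of this paper: the plant is a hypothetical scalar nonlinear system, the cost contains only a tracking term and an input term with made-up weights, the horizons and sample time are placeholder values, and a simple derivative-free coordinate search stands in for the SQP solver. The internal model is integrated with the explicit Euler method.

```python
def plant_step(x, u, dt):
    """One explicit Euler step of a hypothetical scalar plant dx/dt = -x - 0.5*x**3 + u."""
    return x + dt * (-x - 0.5 * x ** 3 + u)


def predict_cost(U, x0, ref, p, dt, w_u):
    """Cost over the prediction horizon p; the last entry of U is held after the control horizon."""
    x, cost = x0, 0.0
    for k in range(p):
        u = U[min(k, len(U) - 1)]
        x = plant_step(x, u, dt)
        cost += (x - ref) ** 2
    return cost + w_u * sum(u * u for u in U)


def coordinate_search(cost, U0, lower, upper, step=1.0, tol=1e-3):
    """Derivative-free minimization: try +/- step per variable, halve the step if nothing improves."""
    U, best = list(U0), cost(U0)
    while step > tol:
        improved = False
        for n in range(len(U)):
            for delta in (step, -step):
                trial = list(U)
                trial[n] = min(upper, max(lower, U[n] + delta))
                value = cost(trial)
                if value < best:
                    U, best, improved = trial, value, True
        if not improved:
            step *= 0.5
    return U


def run_mpc(x0, ref, n_steps, p=10, c=2, dt=0.1, w_u=0.001):
    """Closed loop: optimize c * m decision variables (m = 1 here), apply only the first move."""
    x, U = x0, [0.0] * c
    history = [x]
    for _ in range(n_steps):
        # Warm start from the previous solution
        U = coordinate_search(lambda V: predict_cost(V, x, ref, p, dt, w_u), U, 0.0, 3.0)
        x = plant_step(x, U[0], dt)
        history.append(x)
    return history


history = run_mpc(x0=0.0, ref=1.0, n_steps=60)
```

Every call of `predict_cost` simulates the model over the whole prediction horizon, so the number of model evaluations per control step grows with both horizons, which reflects the trade-off between control quality and computational cost mentioned above.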

The controller is implemented in Simulink with the Model Predictive Control Toolbox. The internal model is integrated with the explicit Euler method with step size

. The optimization problem is solved with the MATLAB function fmincon, which uses the SQP method for the minimization [46]. With the chosen implementation of the MPC in Simulink, a time delay of one control step (

) is induced. However, with a different implementation of the MPC, this could be avoided.