PU-MFA: Point Cloud Up-Sampling via Multi-Scale Features Attention

Abstract

1. Introduction

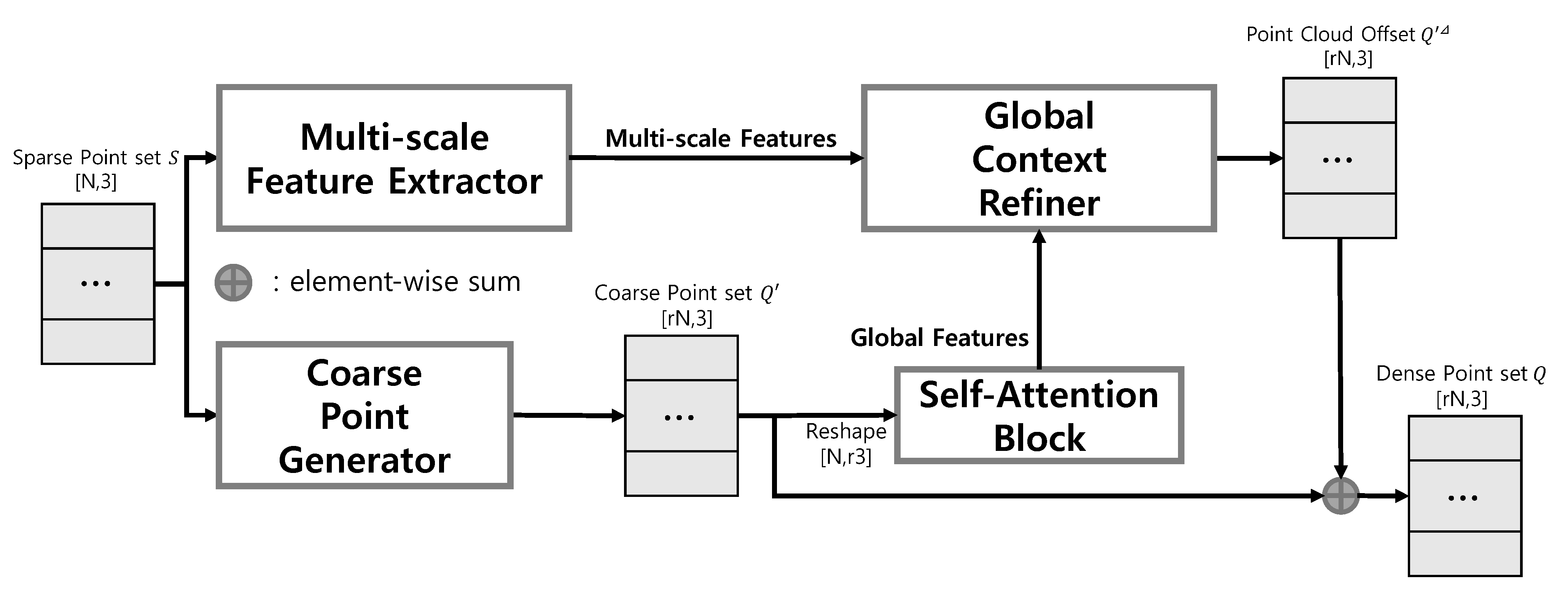

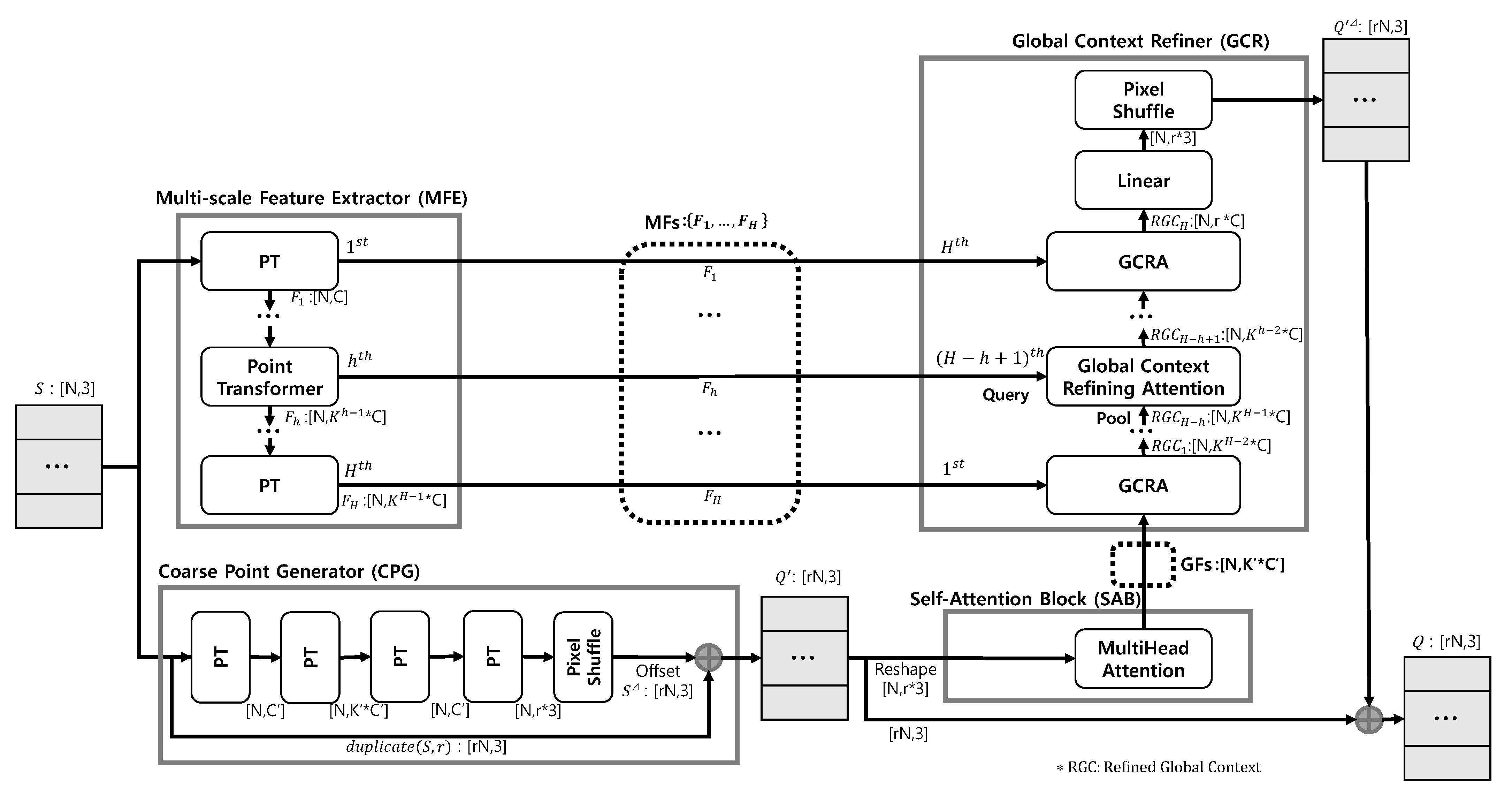

- This paper proposes a U-Net-structured point cloud up-sampling method that adaptively applies Multi-scale Features (MFs) to Global Features (GFs).

- Global Context Refining Attention (GCRA), a structure that effectively combines MFs with an attention mechanism, is proposed. To the best of the authors’ knowledge, this is the first use of a Multi-Head Cross-Attention (MCA) mechanism in point cloud up-sampling.

- The effect of MFs is demonstrated in the ablation studies by visualizing the attention map of GCRA.
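To make the MCA idea in the contributions above concrete, the following is a minimal NumPy sketch of generic multi-head cross-attention, in which queries come from one feature set and keys and values from another. It is not the authors' GCRA implementation: the choice of GFs as the query stream and MFs as the key/value stream, the function name `multi_head_cross_attention`, the random weights, and all sizes are assumptions made only for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # stabilise before exponentiating
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_cross_attention(query_feats, context_feats,
                               w_q, w_k, w_v, w_o, num_heads):
    """query_feats: (N, C); context_feats: (M, C); every weight: (C, C)."""
    n, c = query_feats.shape
    m = context_feats.shape[0]
    d = c // num_heads  # channels per head
    # Project, then split channels into heads: (heads, points, d).
    q = (query_feats @ w_q).reshape(n, num_heads, d).transpose(1, 0, 2)
    k = (context_feats @ w_k).reshape(m, num_heads, d).transpose(1, 0, 2)
    v = (context_feats @ w_v).reshape(m, num_heads, d).transpose(1, 0, 2)
    # Scaled dot-product attention per head: (heads, N, M).
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d))
    # Weighted sum of values, then merge heads back to (N, C).
    out = (attn @ v).transpose(1, 0, 2).reshape(n, c)
    return out @ w_o, attn

rng = np.random.default_rng(0)
N, M, C, HEADS = 8, 8, 16, 4           # illustrative sizes, not from the paper
gf = rng.standard_normal((N, C))       # hypothetical global-feature stream (queries)
mf = rng.standard_normal((M, C))       # hypothetical multi-scale feature (keys/values)
weights = [rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(4)]
refined, attn_map = multi_head_cross_attention(gf, mf, *weights, num_heads=HEADS)
```

The returned `attn_map` has one N×M matrix per head, which is the kind of quantity an attention-map visualization would plot.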

2. Related Work

2.1. Optimization-Based Point Cloud Up-Sampling

2.2. Learning-Based Point Cloud Up-Sampling

3. Problem Description

4. Method

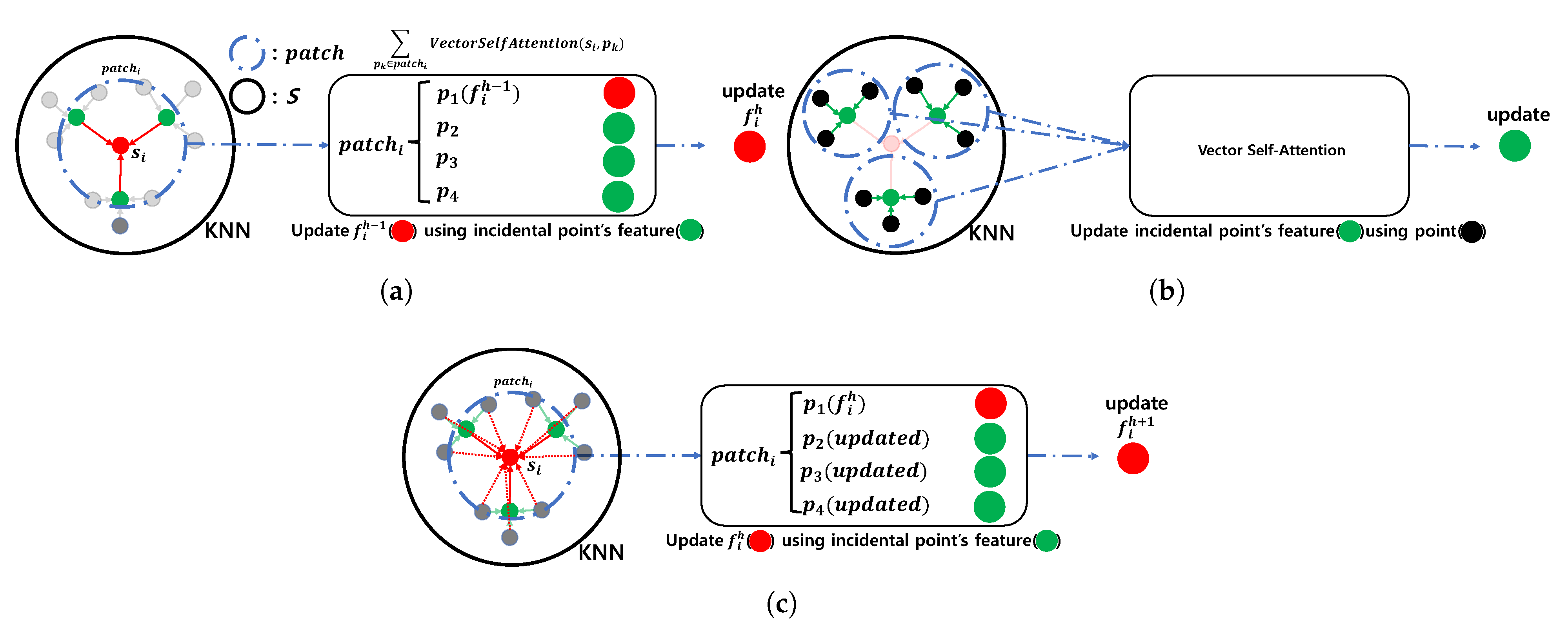

4.1. Multi-Scale Feature Extractor

Point Transformer

4.2. Global Context Refiner

Global Context Refining Attention

4.3. Coarse Point Generator

4.4. Self-Attention Block

5. Experimental Settings

5.1. Datasets

5.2. Loss Function

5.3. Metric

5.4. Comparison Methods

5.5. Implementation Details

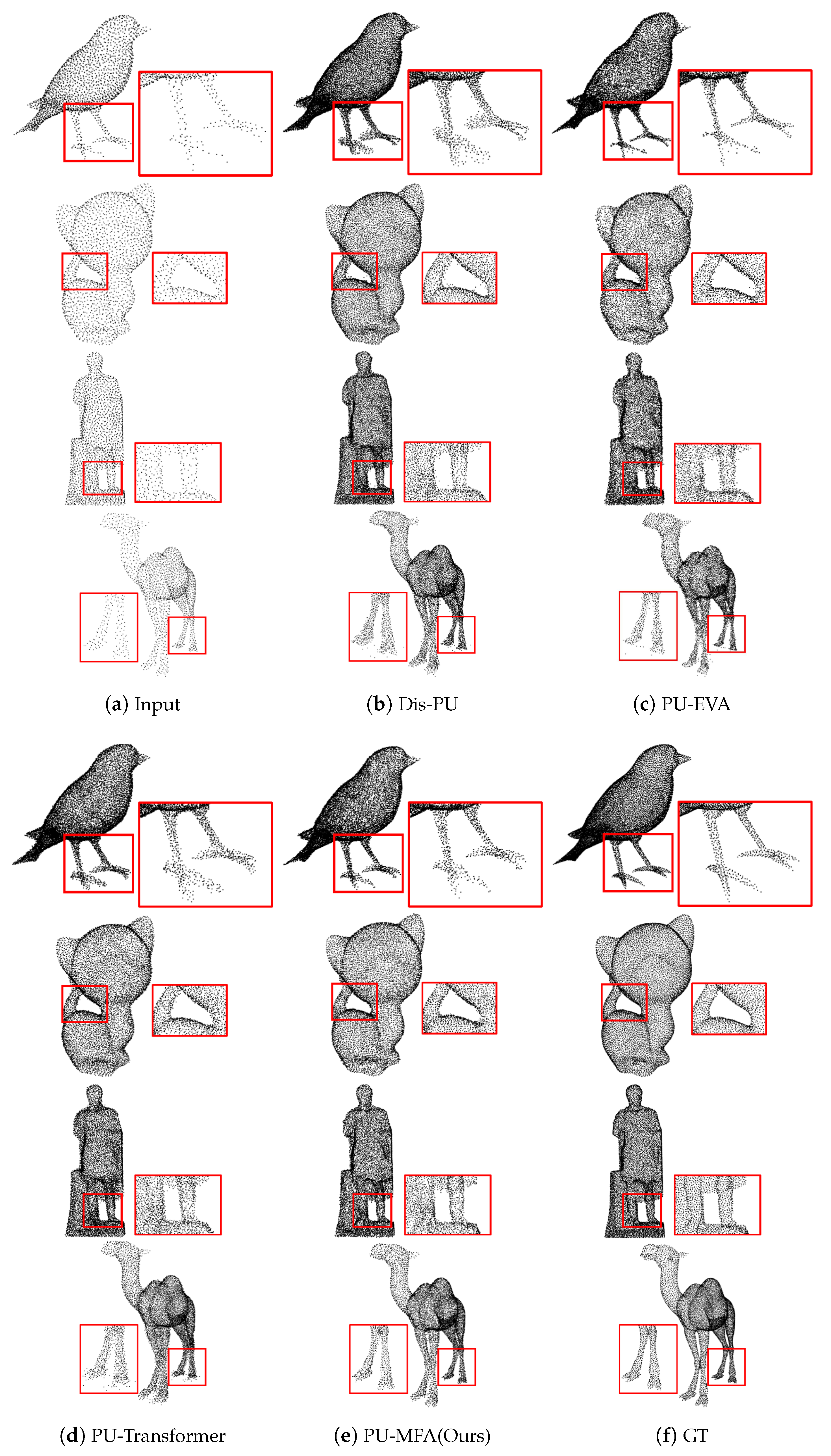

6. Experimental Results

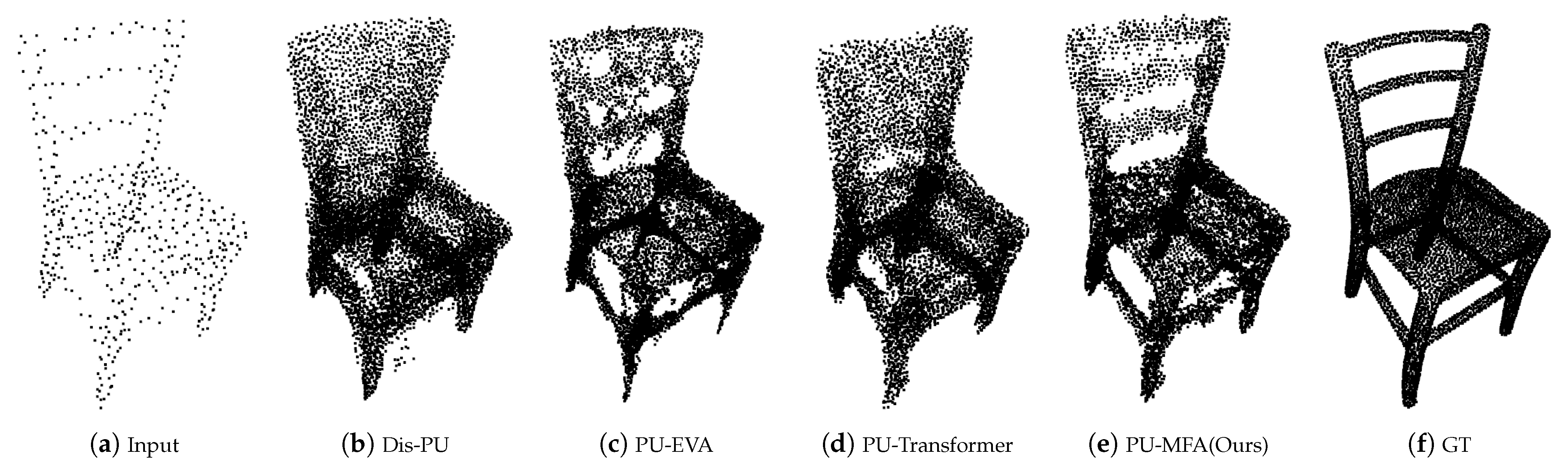

6.1. Results on 3D Synthetic Datasets

6.2. Results on Real-Scanned Datasets

6.3. Ablation Study

6.3.1. Effect of Components

6.3.2. Multi-Scale Features Attention Analysis

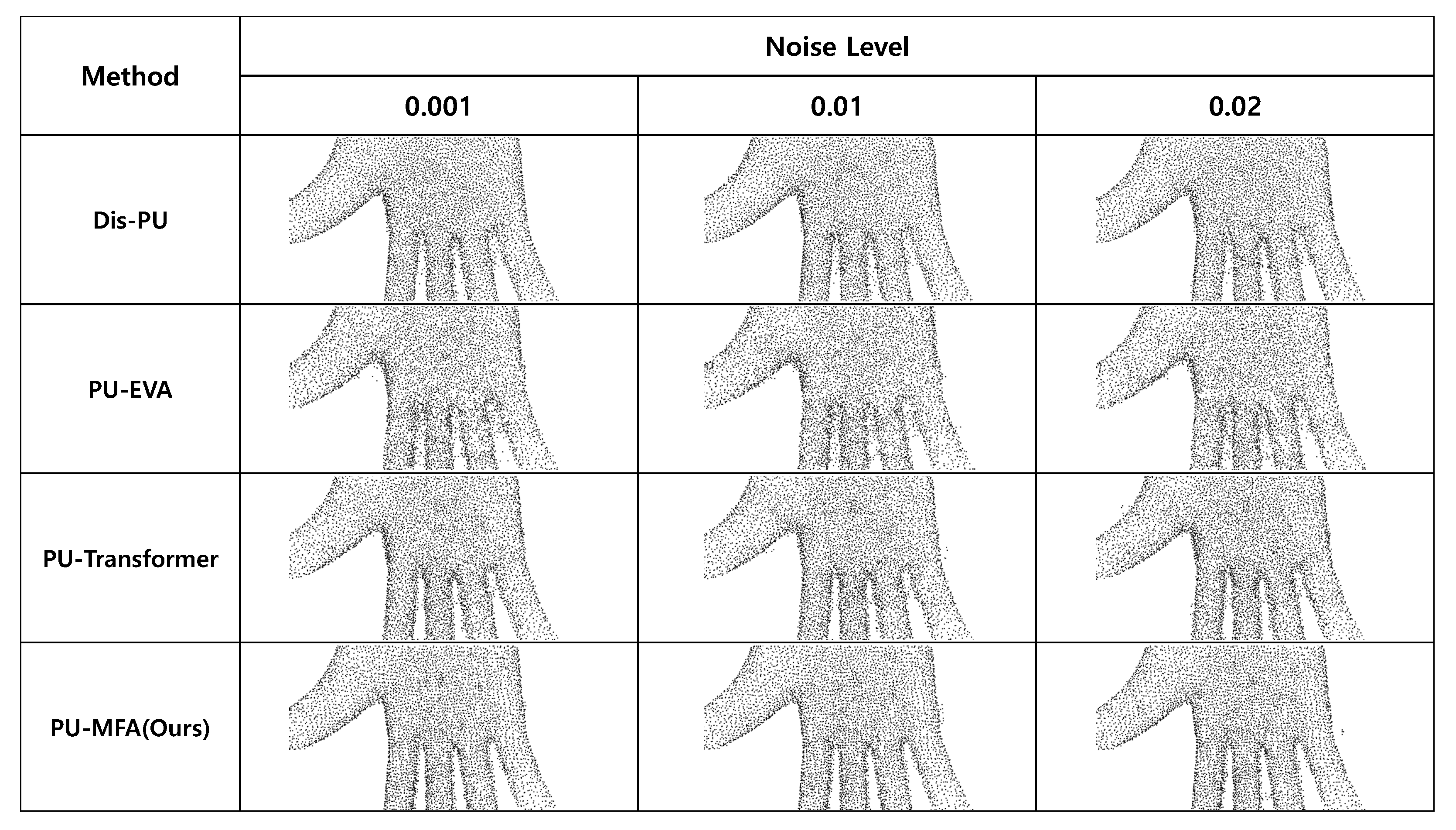

6.3.3. Effect of Noise

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Symbol | Description |
|---|---|
| S | Sparse point set |
| | Element of S |
| | Offset of S |
| | Element of |
| D | Ground truth point set |
| | Element of D |
| | Coarse point set |
| | Element of |
| | Offset of |
| | Element of |
| Q | Dense point set |
| | Element of Q |
| N | Input patch size |
| r | Up-sampling ratio |
| H | Depth of layer |
| | Set of point-wise features extracted from Point Transformer |
| | Point-wise feature extracted from Point Transformer |
| C | Channel |
| | Expansion rate |
| | Patch created through KNN based on |
| | Neighbor size of KNN |

| Method | ×4 (2048→8192) CD | ×4 HD | ×4 P2F | ×4 #Params (M) | ×16 (512→8192) CD | ×16 HD | ×16 P2F | ×16 #Params (M) |
|---|---|---|---|---|---|---|---|---|
| Dis-PU | 0.2703 | 5.501 | 4.346 | 2.115 | 1.341 | 28.47 | 20.68 | 2.115 |
| PU-EVA | 0.2969 | 4.839 | 5.103 | 2.198 | 0.8662 | 14.54 | 15.54 | 2.198 |
| PU-Transformer | 0.2671 | 3.112 | 4.202 | 2.202 | 1.034 | 21.61 | 17.56 | 2.202 |
| PU-MFA (Ours) | 0.2326 | 1.094 | 2.545 | 2.172 | 0.5010 | 5.414 | 9.111 | 2.172 |
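The CD and HD columns in the comparison table refer to the Chamfer distance and the Hausdorff distance between an up-sampled point set and its ground truth. The sketch below shows one common symmetric formulation of each, using unsquared Euclidean distances. The exact variant, normalization, and scaling behind the reported numbers are not stated here, so this should be read as an illustration of the metrics rather than the evaluation code; the function names and the toy point sets are hypothetical.

```python
import numpy as np

def pairwise_dist(a, b):
    """Euclidean distance between every point of a (N, 3) and b (M, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def chamfer_distance(a, b):
    # Mean nearest-neighbour distance in both directions (assumed variant).
    d = pairwise_dist(a, b)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

def hausdorff_distance(a, b):
    # Worst-case nearest-neighbour distance in either direction.
    d = pairwise_dist(a, b)
    return max(d.min(axis=1).max(), d.min(axis=0).max())

# Toy example: the prediction misses one ground-truth point.
pred = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0]])
cd = chamfer_distance(pred, gt)
hd = hausdorff_distance(pred, gt)
```

In the toy example every predicted point coincides with a ground-truth point, so only the missed point contributes: it adds its nearest-neighbour distance averaged over the three ground-truth points to CD, and sets HD on its own. This is why HD is more sensitive to isolated outliers than CD.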

| Method | Time per Batch (sec/batch) |
|---|---|
| Dis-PU | 0.02659 |
| PU-EVA | 0.02360 |
| PU-Transformer | 0.02244 |
| PU-MFA (Ours) | 0.02331 |

| Case | Contribution: MHA | Contribution: MFE | Contribution: MFs | Metric: CD | Metric: HD | Metric: P2F |
|---|---|---|---|---|---|---|
| 1 | | | | 0.3349 | 4.461 | 4.926 |
| 2 | √ | | | 0.2473 | 1.101 | 2.829 |
| 3 | √ | √ | | 0.2500 | 2.735 | 2.737 |
| 4 | √ | √ | √ | 0.2362 | 1.094 | 2.545 |

Various noise levels test at ×4 up-sampling (CD), by noise level:

| Method | 0 | 0.001 | 0.005 | 0.01 | 0.015 | 0.02 |
|---|---|---|---|---|---|---|
| Dis-PU | 0.2703 | 0.2751 | 0.2975 | 0.3257 | 0.3466 | 0.3706 |
| PU-EVA | 0.2969 | 0.2991 | 0.3084 | 0.3167 | 0.3203 | 0.3268 |
| PU-Transformer | 0.2671 | 0.2717 | 0.2905 | 0.3134 | 0.3331 | 0.3585 |
| PU-MFA (Ours) | 0.2326 | 0.2376 | 0.2547 | 0.2764 | 0.2989 | 0.3195 |
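One way to read the noise table is to separate absolute error from relative degradation. The short sketch below does this arithmetic for the two end points of the table (noise level 0 and noise level 0.02). The CD values are copied from the table; the helper name `relative_increase` and the choice to compare only the end points are illustrative assumptions.

```python
# CD per method as (noise level 0, noise level 0.02), copied from the noise table.
cd_by_method = {
    "Dis-PU": (0.2703, 0.3706),
    "PU-EVA": (0.2969, 0.3268),
    "PU-Transformer": (0.2671, 0.3585),
    "PU-MFA (Ours)": (0.2326, 0.3195),
}

def relative_increase(clean, noisy):
    """Fractional growth of CD from the clean input to the noisy input."""
    return (noisy - clean) / clean

increase = {name: relative_increase(*vals) for name, vals in cd_by_method.items()}

# Method with the lowest absolute CD at the highest noise level.
best_at_max_noise = min(cd_by_method, key=lambda name: cd_by_method[name][1])
# Method whose CD grows the least in relative terms.
most_stable = min(increase, key=increase.get)

for name, frac in increase.items():
    print(f"{name}: CD grows by {frac:.1%} from noise 0 to 0.02")
```

On these numbers the two views differ: PU-MFA has the lowest absolute CD at both end points, while PU-EVA shows the smallest relative growth in CD.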


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, H.; Lim, S. PU-MFA: Point Cloud Up-Sampling via Multi-Scale Features Attention. Sensors 2022, 22, 9308. https://doi.org/10.3390/s22239308
