Abstract

With recent advancements in artificial intelligence (AI) and next-generation communication technologies, the demand for Internet-based applications and intelligent digital services is increasing, leading to a significant rise in cyber-attacks such as Distributed Denial-of-Service (DDoS). AI-based DoS detection systems promise good detection accuracy with fewer false alarms, but their performance depends strongly on the quality of the data used to train the model. Several earlier works have proposed methods for selecting optimal feature subsets to improve model generalization and speed up learning. However, the existing literature has not identified a common optimal feature set for the three main AI methods: machine learning, deep learning, and unsupervised learning. Current works either consider a limited variety of feature selection (FS) methods or evaluate performance with only one type of AI model. Therefore, in this study, we extensively investigated and evaluated the performance of 15 individual FS methods from three major categories (filter-based, wrapper-based, and embedded) as well as one ensemble feature selection (EnFS) technique. Furthermore, we evaluate the quality of each individual feature subset using supervised and unsupervised learning methods in order to extract a common best-performing feature subset. In our experiments, the EnFS method outperforms the individual FS methods and provides a universal best feature set for all kinds of AI models.

1. Introduction

Internet Service Providers (ISPs) have experienced a significant increase in network traffic in recent years. This increase, driven mainly by Internet-based applications and digital services, often leads to a rise in cyber attacks [1]. Attackers look for network vulnerabilities and loopholes to gain unauthorized access to Internet infrastructure and services and then launch their attacks.

One of the most common cyber attacks on today's Internet is Distributed Denial of Service (DDoS), which floods a network with unwanted messages so that the target server cannot serve genuine requests from legitimate clients. The recent increase in DDoS attacks has created an urgent need for safeguards against them. Artificial Intelligence (AI)-based Intrusion Detection Systems (IDSs) have been proposed to guard against such attacks. However, an AI-based IDS requires historical data to learn from before it can detect future attacks, and the accuracy of the detection model depends on the quality and complexity of that data. Historical data typically contain many features that are irrelevant to the target variable or redundant with other features. Such features increase the training time of the model and degrade its performance. To address this issue, Feature Selection (FS) methods are used in conjunction with an IDS to remove unnecessary features from the dataset, improving the model's generalization and reducing execution time. Choosing an optimal feature set that classifies the target efficiently and accurately is therefore one of the most critical aspects of an AI-based IDS. Beyond extracting the best features, FS also increases model interpretability and reduces the chance of overfitting [2]. FS is needed for efficient machine learning across four types of data: (i) conventional data with flat features, (ii) structured features, (iii) heterogeneous data, and (iv) streaming data. However, different FS methods extract different feature sets from the same dataset, and these sets yield different detection accuracies for different supervised and unsupervised AI models.

In this paper, we investigate and evaluate the performance of an Ensemble Feature Selection (EnFS) approach for both supervised and unsupervised learning models in order to extract a common optimal feature set. The EnFS method combines the features selected by individual FS methods to choose the best feature subset from the dataset [3], and it improves both performance and robustness [4]. For our EnFS, we used three primary types of FS techniques: (i) filter-based, (ii) wrapper-based, and (iii) embedded. Filter-based FS methods use properties of the data to select features according to a particular measure. In wrapper-based FS methods, a model is first trained on a subset of features; then, guided by a search criterion and the trained model's results, features are added or removed and the process repeats. Embedded FS methods combine the filter and wrapper approaches: the learning algorithm itself picks the optimal feature subset as part of training. In this work, we studied fifteen FS methods from these three categories and used their extracted feature sets to train supervised models, including Machine Learning (ML) and Deep Learning (DL) models, as well as unsupervised models, to identify the best FS method.
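To make the three categories concrete, the sketch below runs one representative of each on synthetic data with scikit-learn: a chi-square filter (`SelectKBest`), a wrapper (`RFE` around a logistic regression), and an embedded selector (`SelectFromModel` around an extra-trees model). The synthetic dataset, the chosen estimators, and `k = 20` are illustrative assumptions, not the exact configuration used in this work.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, chi2
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for a preprocessed traffic dataset (assumption: 42 features, binary label)
X, y = make_classification(n_samples=500, n_features=42, n_informative=8, random_state=0)
X = MinMaxScaler().fit_transform(X)  # chi2 requires non-negative feature values
k = 20  # number of features to keep for selectors that take a subset size


def support_indices(selector):
    """Return the column indices a fitted selector keeps, as plain ints."""
    return {int(i) for i in np.flatnonzero(selector.get_support())}


# Filter: score each feature with a statistic computed from the data alone
filter_sel = SelectKBest(chi2, k=k).fit(X, y)

# Wrapper: repeatedly fit a model and eliminate the weakest remaining feature
wrapper_sel = RFE(LogisticRegression(max_iter=1000), n_features_to_select=k).fit(X, y)

# Embedded: selection falls out of model training (tree-based feature importances)
embedded_sel = SelectFromModel(ExtraTreesClassifier(n_estimators=100, random_state=0)).fit(X, y)

subsets = {
    "filter_chi2": support_indices(filter_sel),
    "wrapper_rfe": support_indices(wrapper_sel),
    "embedded_extratrees": support_indices(embedded_sel),
}
for name, cols in subsets.items():
    print(name, len(cols), sorted(cols))
```

The filter and wrapper return exactly `k` features because they are told to; the embedded selector instead keeps every feature whose importance exceeds the mean importance (the `SelectFromModel` default for tree models), so its subset size is data-dependent.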

Ensemble methods are built on several techniques: (i) Majority Voting, (ii) Bagging and Pasting, (iii) Boosting, (iv) Random Forest, and (v) Stacking [5]. In this paper, we used the Majority Voting (MV) technique for our ensemble method. Each of several feature selection methods selects its dominant features, and each selection counts as one vote for that feature. The votes for each feature are then summed, and the optimal features are chosen by plurality voting. We then compared detection model performance using each of the fifteen individual feature sets, the optimal feature set obtained from EnFS, and the original feature set of our dataset. Our ensemble-based feature set outperforms the individual feature sets for both supervised (ML and DL) and unsupervised models. Existing works primarily apply ensemble methods either to supervised models such as Bayesian Networks, Regression Trees [6], Support Vector Machines [7], and K-Nearest Neighbors [8], or, separately, to unsupervised models such as One-Class SVMs (OCSVM) [9,10], Isolation Forest, and Local Outlier Factor [11]. However, the application of all three primary FS categories to both supervised (machine learning and deep learning) and unsupervised models has not been reported in the literature. We previously investigated the performance of different FS methods using only supervised models [12]. In this paper, we extend that preliminary work with unsupervised models and enhance the supervised evaluation by incorporating more detection mechanisms. The main research contributions of this work are as follows:

- Implement a wide variety of individual feature selection methods from three major categories: filter-based, wrapper-based, and embedded. We also fine-tune the hyper-parameters of the feature selection methods using grid search. Finally, we compare the performance of the individual feature selection methods using state-of-the-art machine learning, deep learning, and unsupervised learning models.

- Ensemble the feature sets extracted by the individual feature selection methods using majority voting. Since a given feature subset performs differently across classification models, we combine the subsets to find a better common feature subset for all major types of attack detection algorithms.

- Evaluate the performance of the ensemble feature selection method using machine learning, deep learning, and unsupervised learning, and compare it with the individual feature selection methods to extract an optimal feature set.

The rest of the paper is organized as follows. Section 2 reviews existing intrusion detection systems and related work in machine learning, deep learning, and unsupervised learning. Section 3 describes the research methodology, including data handling, feature selection, classification model configuration, and the experimental environment. Section 4 presents the experimental results and compares the performance of the individual FS methods with that of the EnFS method. Lastly, Section 5 concludes the article and outlines potential research directions.

2. Literature Review

Machine learning is a method by which machines learn to perform tasks without being explicitly programmed for each one, so that they can learn and improve without external intervention. One way to make such learning more effective is to give the model better inputs, namely features extracted by FS methods, during training. Feature selection is therefore a crucial preprocessing step before classification or regression: it eliminates unnecessary and insignificant attributes from the dataset with the aim of improving model performance.

2.1. Supervised Techniques

Tsai et al. [13] reviewed 55 papers on ML-based intrusion detection and compared the existing works based on the classifiers (single, hybrid, and ensemble) and datasets they used. Mukkamala et al. [14] compared two algorithms, Artificial Neural Network (ANN) and Support Vector Machine (SVM), and also experimented with an ensemble classification model consisting of ANN and SVM. Their work showed that the ensemble classifier outperformed the individual classifiers in intrusion detection. Chebrolu et al. [6] proposed a lightweight intrusion detection technique using ensemble learning and two FS methods. Amiri et al. [7] investigated both linear and non-linear measures for estimating feature goodness in FS algorithms. They proposed two FS methods, Linear Correlation-based Feature Selection (LCFS) and Modified Mutual Information-based Feature Selection (MMIFS), and evaluated them based on the classification results of a Least Squares Support Vector Machine (LSSVM) trained on the KDD’99 dataset. Gomes et al. [15] discussed upcoming trends in ensemble learning, identified open-source tools, and proposed a taxonomy of ensemble methods for data stream classification. Sagi and Rokach [16] reviewed ensemble learning approaches, tools, and techniques; their survey focused on big data compatibility, model transformation, and integration with Deep Neural Networks (DNN), and recommended corresponding popular algorithms. Gao et al. [17] developed an adaptive ensemble voting algorithm to improve detection accuracy on the NSL-KDD dataset and compared their proposed Multitree algorithm with existing algorithms. Pham et al. [18] designed an improved IDS using an ensemble feature selection technique that achieves high detection accuracy on the NSL-KDD dataset; they used bagging and boosting to extract the optimal feature set from the original dataset. Das et al. [8] proposed an efficient, high-accuracy DDoS detection system based on an ensemble framework of four supervised machine learning classifiers trained on the NSL-KDD dataset. The authors of [19] present an end-to-end model for cyberattack detection and classification using deep learning-based recurrent models. Their model extracts hidden-layer features from the recurrent models and then applies kernel-based principal component analysis (KPCA) to choose the best features. After the best features from the recurrent models have been combined, an ensemble meta-classifier classifies the data.

Chandrashekhar and Sahin [20] provided a detailed study of the three primary FS categories: filter-based, wrapper-based, and embedded. They analyzed the performance of different feature selection techniques for both supervised and unsupervised learning. Sheikhpour et al. [21] explored semi-supervised FS methods, which use both labeled and unlabeled data, and provided two hierarchically structured taxonomies for them. Khalid et al. [22] surveyed feature selection and extraction techniques that reduce data dimensionality so that a model can learn better from the dataset; they reviewed existing work on dimensionality reduction in machine learning and discussed the applicability of each technique against research criteria. Luis et al. [23] proposed an evaluation technique that estimates a correctness score for a given feature selection algorithm (FSA) together with a corresponding explanation. Their method scores an algorithm based on relevance, irrelevance, redundancy, and dataset size and compares it with related algorithms, with all simulations run in the same experimental environment. Their study shows that how FSAs perform on these criteria depends strongly on the data being analyzed. Adams and Beling [24] demonstrated that FS methods developed for Gaussian Mixture Models (GMM) can be adapted for Hidden Markov Models (HMM) and vice versa. Lin et al. [25] described an efficient intrusion detection mechanism that combines the advantages of SVM, DT, and Simulated Annealing (SA), evaluated on the KDD’99 dataset. In their model, SA selects the best features from the dataset and optimizes the parameters of the SVM and DT. Their model achieved the highest accuracy with the fewest features compared with existing works. Osanaiye et al. [26] proposed an ensemble-based multi-filter FS technique that identifies essential features for detecting DDoS attacks in cloud computing. Their ensemble framework combined four filter methods: information gain, gain ratio, chi-squared, and ReliefF, and the features it extracted achieved higher accuracy on the NSL-KDD dataset. Das et al. [27] provided an ensemble framework that produces optimal feature sets for ML algorithms on the NSL-KDD dataset and compared its performance with existing techniques. Dash and Liu [28] extracted features from the dataset using several feature selection techniques and used them to train ML algorithms such as Naive Bayes (NB), SVM, and DT for efficient attack detection.

2.2. Unsupervised Techniques

Unsupervised learning is mostly used for exploratory analysis and dimensionality reduction. It can discover structure in data and is well suited to anomaly detection. IDSs based on unsupervised learning have been actively researched in recent years. Yousef et al. [9] reported that the One-Class SVM (OCSVM) achieved the highest overall performance on the standard Reuters dataset and that it is less computationally costly than neural networks. Wang et al. [29] studied a hybrid model that combines STIDE and Markov chain kernels with OCSVM. Their method improved classification results and overcame drawbacks these kernels have when used standalone, such as over-simplicity, overfitting, the requirement for purely normal training data, and reliance on a threshold. Mhamdi et al. [10] argued that SVM trained on unlabeled or imbalanced data has high potential for identifying DDoS attacks; their results show that OCSVM outperforms DL techniques when combined with autoencoders. Erfani et al. [30] conducted similar research on a high-dimensional dataset using Deep Belief Networks (DBN) combined with OCSVM, which performed markedly better than deep autoencoders. Vasudevan et al. [31] proposed a hierarchical approach for constructing a mathematical model that combines unsupervised (Local Outlier Factor (LOF) and OCSVM) and supervised learning techniques. Lazarevic et al. [32] evaluated LOF, K-Nearest Neighbors (KNN), Principal Component Analysis (PCA), and unsupervised SVM for intrusion detection on the KDD’99 dataset. In their study of OCSVM, Amer et al. [33] showed that SVM-based algorithms perform reasonably well for unsupervised anomaly detection, making OCSVM in particular a suitable candidate for unsupervised anomaly detection in practice.
LOF is a common density-based method, but it is not appropriate for large-scale, high-dimensional datasets because of its high time complexity. Alshawabkeh et al. [34] accelerated LOF on a GPU for faster intrusion detection and reported a 100-fold speedup in computation time compared with the CPU implementation. Karev et al. [35] used Isolation Forest (ISOF) for anomaly detection on HTTP sessions. Cheng et al. [11] proposed a two-layer ensemble model combining LOF and ISOF for skewed datasets and evaluated it in terms of pruning, efficacy, and accuracy; the combined model outperformed LOF and ISOF applied separately. Xiaoling et al. [36] proposed SPIF, which combines Isolation Forest with Spark and parallelizes well. Extending ensemble learning to feature selection, Elghazel et al. [37] proposed Random Cluster Ensemble (RCE), which estimates out-of-bag feature importance from an ensemble of partitions. The component clusterings of the ensemble are built by combining bagging and random subspaces. They further tested RCE with a Recursive Feature Elimination (RFE) strategy on nineteen benchmark datasets and found that it outperformed RCE without RFE.

The above discussion makes evident the importance of extracting the best feature set from the original data. However, current works have several limitations, as summarized in the comparative analysis in Table 1. Firstly, only a limited number of FS algorithms have been considered for selecting the best features. Secondly, the literature lacks variety in FS method types: most works used only filter-based, wrapper-based, or embedded FS methods. Although a couple of works consider all three major FS categories, the total number of FS methods they evaluate is significantly lower, and they used only supervised learning models for performance evaluation. Lastly, most works consider only one type of detection model when comparing different FS methods. However, it is inefficient to design an optimal FS method separately for each model type, such as machine learning, deep learning, and unsupervised learning. In this work, we extend the existing works by incorporating a significantly larger set of individual FS methods from the three major categories. In addition, we evaluate the individual FS methods using a wide variety of supervised and unsupervised AI models. Finally, we apply an ensemble technique to the individual FS methods to extract a common optimal feature set for all types of prediction models.

Table 1.

Comparative Summary of Existing Works.

3. Methodology

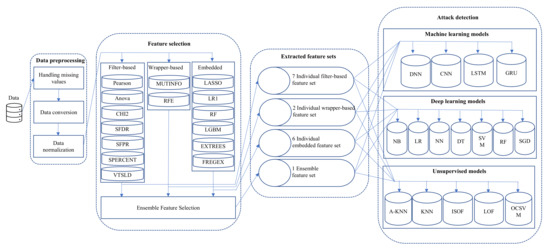

In this section, we describe our methodology, depicted in Figure 1. It consists of three main components: data preprocessing, individual and ensemble feature selection, and attack detection using machine learning, deep learning, and unsupervised learning. We used the UNSW-NB15 dataset for our experiment, as discussed in Section 3.1. The dataset requires some preprocessing before it can be used to train our attack detection models, as explained in Section 3.2. Section 3.3 then describes the feature selection component of our methodology, and Sections 3.4 and 3.5 summarize the models used in the experiment and their evaluation metrics, respectively. Finally, Section 3.6 describes the configuration of our experimental environment.

Figure 1.

High-level framework of proposed methodology.

3.1. Dataset

We used the UNSW-NB15 dataset [40], created by the Australian Centre for Cyber Security (ACCS) in its Cyber Range Lab; the IXIA Perfect Storm tool was used to generate the raw network traffic for the dataset. Our experiment focused specifically on DDoS attack classification, so data for all other attack types were removed from the dataset before our investigation. We used two different dataset configurations for our supervised and unsupervised classification models. For the supervised learning models, the training and testing sets are almost balanced and contain 112,001 and 69,996 data instances, respectively. For unsupervised learning, the training set consisted of 99% benign and 1% malicious data, and 1000 samples drawn at random from the testing set were used to verify model performance. The overall class distribution of our experimental data is summarized in Table 2.
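The unsupervised configuration (a 99% benign / 1% malicious training set plus 1000 random test samples) can be sketched with pandas as below. It assumes a DataFrame with a binary `label` column (0 = benign, 1 = attack); the helper names and the training-set size `n_total` are hypothetical, since the number of unsupervised training samples is not stated here.

```python
import numpy as np
import pandas as pd


def make_unsupervised_train(df, label_col="label", n_total=10_000, attack_frac=0.01, seed=0):
    """Hypothetical helper: sample a training set that is 99% benign and 1% attack."""
    n_attack = int(round(n_total * attack_frac))
    n_benign = n_total - n_attack
    benign = df[df[label_col] == 0].sample(n=n_benign, random_state=seed)
    attack = df[df[label_col] == 1].sample(n=n_attack, random_state=seed)
    # Shuffle so the attack rows are not grouped together at the end
    mixed = pd.concat([benign, attack]).sample(frac=1.0, random_state=seed)
    return mixed.reset_index(drop=True)


def make_unsupervised_test(df, n=1000, seed=0):
    """Hypothetical helper: draw n random rows from the held-out test split."""
    return df.sample(n=n, random_state=seed).reset_index(drop=True)


# Usage on a toy frame (15,000 benign rows, 5,000 attack rows)
rng = np.random.default_rng(0)
toy = pd.DataFrame({"feature": rng.random(20_000), "label": [0] * 15_000 + [1] * 5_000})
train = make_unsupervised_train(toy)
test = make_unsupervised_test(toy)
print(len(train), int(train["label"].sum()), len(test))
```

Fixing `random_state` makes the sampled subsets reproducible across runs, which matters when many detectors are compared on the same split.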

Table 2.

Dataset Summary.

3.2. Data Preprocessing

Data preprocessing is one of the essential steps in designing an efficient machine learning model; it includes data cleaning, conversion, scaling, and feature extraction. First, rows with missing, null, or infinite values are dropped from the dataset. Then, categorical features are converted into numerical values so that the machine learning models can process them. We performed this conversion with the Label Encoding technique from the Scikit-Learn [41] library, which replaces each category value with an integer from a sequence. Finally, we apply data normalization (feature scaling) to bring all input features to a standard scale. This step is crucial because otherwise a machine learning model may give more importance to features with larger value ranges than to those with smaller ranges, leading to wrong inferences. We therefore applied Min-Max scaling, which maps each feature to a given range (e.g., 0 to 1).
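The three preprocessing steps can be sketched with pandas and scikit-learn's `LabelEncoder` and `MinMaxScaler`. The function name `preprocess`, the `label` column name, and the toy rows are assumptions for illustration (the column names `proto`, `dur`, and `sbytes` mimic UNSW-NB15 fields, but the values are invented).

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import LabelEncoder, MinMaxScaler


def preprocess(df, categorical_cols, label_col="label"):
    """Clean, label-encode, and min-max scale a traffic DataFrame (sketch)."""
    # 1. Cleaning: treat +/-inf as missing, then drop every row with a missing value
    df = df.replace([np.inf, -np.inf], np.nan).dropna().reset_index(drop=True)

    # 2. Conversion: replace each category with an integer code (sorted category order)
    for col in categorical_cols:
        df[col] = LabelEncoder().fit_transform(df[col].astype(str))

    # 3. Scaling: map every feature column to the range [0, 1]
    feature_cols = [c for c in df.columns if c != label_col]
    X = MinMaxScaler().fit_transform(df[feature_cols].astype(float))
    y = df[label_col].to_numpy()
    return X, y, feature_cols


raw = pd.DataFrame({
    "proto": ["tcp", "udp", "tcp", "icmp"],
    "dur": [0.0, np.inf, 2.0, 4.0],
    "sbytes": [10.0, 20.0, np.nan, 40.0],
    "label": [0, 1, 0, 1],
})
X, y, cols = preprocess(raw, categorical_cols=["proto"])
print(cols)
print(X)
print(y)
```

For brevity the sketch fits the encoder and scaler on a single frame. With separate training and testing sets, one would typically fit them on the training split only and reuse the fitted objects on the test split, so that test statistics do not leak into training.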

3.3. Ensemble Feature Selection

A dataset consists of P columns (features) that describe Q rows, i.e., data instances in the problem domain, and a target variable y. The process of selecting the subset of these features that is most essential for classifying the target variable is called Feature Selection (FS). Each FS algorithm selects a different feature subset, which yields a different accuracy for the machine learning model. We therefore used an ensemble-based feature selection method that combines the feature subsets extracted by multiple individual FS methods into an optimal feature subset based on feature ranking [42].

We selected a wide variety of feature selection methods from three major categories (filter-based, wrapper-based, and embedded), for a total of 15 individual methods. Seven are filter-based: Pearson’s correlation (PEARSON), Chi-Square (CHI2), SelectFDR (SFDR), ANOVA, Select Percentile (SPERCENT), SelectFPR (SFPR), and Variance Threshold (VTSLD). Two are wrapper-based: Recursive Feature Elimination (RFE) and Mutual Information (MUTINFO). The remaining six are embedded: Logistic Regression (LRL1), Extra Trees (EXTREES), LASSO Regression (LASSO), Random Forests (RF), univariate linear regression based on F-statistics (FREGEX), and LightGBM (LGBM). Each feature selection technique chooses the feature set it finds most appropriate for classifying the target. For methods that take the subset length as a hyper-parameter, we grid-searched the number of best features over 10, 15, and 20; the value 20 produced the best results. We then combined the feature sets chosen by the individual FS methods into an ensemble feature set using the majority voting (MV) method: a feature is selected by MV if it received votes from more than 50% of the FS methods.
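The majority-voting rule can be sketched in a few lines of plain Python. Each selector contributes at most one vote per feature, and a feature is kept only if its vote count strictly exceeds half the number of selectors. The function name `majority_vote` and the toy selector and feature names are hypothetical.

```python
from collections import Counter


def majority_vote(subsets, min_fraction=0.5):
    """Keep features selected by MORE than `min_fraction` of the selectors.

    `subsets` maps a selector name to the features it selected.
    """
    n_selectors = len(subsets)
    # One vote per selector per feature (set() guards against duplicate entries)
    votes = Counter(f for chosen in subsets.values() for f in set(chosen))
    threshold = min_fraction * n_selectors
    selected = sorted(f for f, count in votes.items() if count > threshold)
    return selected, votes


# Toy example with three hypothetical selectors
toy = {"sel_a": ["f1", "f2", "f3"], "sel_b": ["f1", "f2"], "sel_c": ["f1", "f4"]}
selected, votes = majority_vote(toy)
print(selected)  # ['f1', 'f2']: 3 and 2 votes both exceed 3 * 0.5 = 1.5
```

With the 15 selectors used in this study, the threshold is 15 × 0.5 = 7.5, so a feature needs at least eight votes, which matches the rule stated in Section 4.2. Returning the full `votes` counter also gives the per-feature counts reported alongside the ensemble feature set.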

3.4. Attack Detection Models

We implemented several models from the supervised (machine learning and deep learning) and unsupervised categories to evaluate the performance of EnFS and the individual FS methods. In total, seven machine learning, four deep learning, and five unsupervised learning models were used to measure and compare performance across the individual feature sets, the ensemble feature set, and the original feature set. As supervised machine learning models, we used Naive Bayes (NB), Logistic Regression (LR), Neural Network (NN), Decision Tree (DT), Random Forest (RF), Support Vector Machine (SVM), and Stochastic Gradient Descent (SGD). In addition, four deep learning models were used as supervised attack classifiers: Deep Neural Network (DNN), Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), and Gated Recurrent Unit (GRU). Finally, DDoS attacks were detected using the unsupervised models One-Class Support Vector Machine (OCSVM), Isolation Forest (ISOF), K Nearest Neighbors (KNN), Average KNN (A_KNN), and Local Outlier Factor (LOF). The hyper-parameters of all classification models are summarized in Table 3, which contains three sections covering the parameters of the Supervised Learning (Machine learning), Supervised Learning (Deep learning), and Unsupervised Learning models used in our experiment. We evaluated a large number of combinations of detection models and feature sets to assess the feature selection methods. Table 4 summarizes the total number of classification models by model category and feature selection type. A total of 119 machine learning models were considered across five feature categories. Similarly, we considered a total of 68 deep learning and 85 unsupervised learning models for further analysis.
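The model-by-feature-set evaluation can be sketched as a nested loop over model factories and feature subsets. This sketch covers only five of the seven ML models (NN and SVM are omitted for brevity), uses placeholder hyper-parameters rather than the Table 3 settings, and runs on synthetic data; `evaluate_grid`, `MODELS`, and the feature-set names are hypothetical.

```python
import time

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression, SGDClassifier
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

# Model factories: a fresh, unfitted model is built for every (model, feature set) pair.
# Hyper-parameters here are placeholders, not the settings listed in Table 3.
MODELS = {
    "NB": lambda: GaussianNB(),
    "LR": lambda: LogisticRegression(max_iter=1000),
    "DT": lambda: DecisionTreeClassifier(random_state=0),
    "RF": lambda: RandomForestClassifier(n_estimators=100, random_state=0),
    "SGD": lambda: SGDClassifier(random_state=0),
}


def evaluate_grid(models, feature_sets, X_tr, y_tr, X_te, y_te):
    """Train every model on every feature subset; record accuracy, F1, and fit time."""
    rows = []
    for fs_name, cols in feature_sets.items():
        for model_name, make_model in models.items():
            model = make_model()
            start = time.perf_counter()
            model.fit(X_tr[:, cols], y_tr)
            fit_seconds = time.perf_counter() - start
            pred = model.predict(X_te[:, cols])
            rows.append({
                "feature_set": fs_name,
                "model": model_name,
                "accuracy": accuracy_score(y_te, pred),
                "f1": f1_score(y_te, pred),
                "time_s": fit_seconds,
            })
    return rows


X, y = make_classification(n_samples=1000, n_features=42, n_informative=8, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)
feature_sets = {"ORIGINAL": list(range(42)), "TOY_SUBSET": list(range(10))}
results = evaluate_grid(MODELS, feature_sets, X_tr, y_tr, X_te, y_te)
best = max(results, key=lambda r: r["accuracy"])
print(len(results), best["feature_set"], best["model"], round(best["accuracy"], 3))
```

With all 7 ML models and all 17 feature sets, the same loop would produce the 7 × 17 = 119 combinations counted above. Using factories instead of shared model instances ensures that no combination reuses weights fitted on a different feature set.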

Table 3.

Hyper-parameters setting for classification models.

Table 4.

Total number of models considered for performance evaluation.

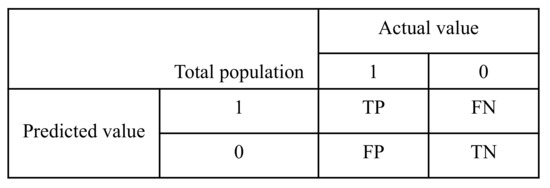

3.5. Evaluation Metrics

We used several evaluation metrics, namely Accuracy, Precision, Recall, F1-score, and total training time (Time) in seconds, to estimate the performance of our classification models. These metrics are defined in terms of the four entries of the confusion (error) matrix shown in Figure 2: True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN).

Figure 2.

Confusion Matrix.

Here, accuracy is the proportion of all samples (attack and benign) that the model classifies correctly. Precision is the proportion of samples flagged as DoS attacks that are actually attacks, and recall is the proportion of actual DoS samples in the dataset that the model correctly detects. Finally, the F1-score is the harmonic mean of precision and recall.
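These definitions translate directly into code. In this sketch the attack class is treated as positive, and returning 0.0 when a denominator is zero is an assumed convention (scikit-learn's `zero_division` parameter exposes the same choice). The function name and the example counts are hypothetical, not results from this study.

```python
def metrics_from_counts(tp, fp, tn, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts (attack = positive)."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total                    # correct predictions over all samples
    precision = tp / (tp + fp) if tp + fp else 0.0  # flagged attacks that are real attacks
    recall = tp / (tp + fn) if tp + fn else 0.0     # real attacks that were flagged
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for illustration only
print(metrics_from_counts(tp=80, fp=20, tn=90, fn=10))
```

For these counts, accuracy is 170/200 = 0.85, precision is 80/100 = 0.8, recall is 80/90, and F1 equals 2TP / (2TP + FP + FN) = 160/190, which is an equivalent closed form of the harmonic mean.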

3.6. Software and Hardware Preliminaries

We conducted the experiments in Python with the scikit-learn [41] and TensorFlow-Keras [43] libraries on computers with an Intel(R) Core i3-8130U CPU @ 2.20 GHz, 8 GB of memory, and a 64-bit Windows operating system.

4. Results and Discussion

In this section, we analyze and discuss our experimental results. The experiment is divided into three main phases: (i) individual feature selection, (ii) ensemble feature selection, and (iii) performance evaluation using supervised and unsupervised models, discussed below.

4.1. Individual Feature Selection

In this phase, we applied a wide variety of feature selection methods from the three major categories to extract features from the original dataset. The dataset contains a total of 42 features, not all of which contribute significantly to detecting the attack, so it is necessary to select a feature subset that is highly correlated with the target variable. Hence, we experimented with different feature selection methods for a better comparative analysis. Some feature selection methods, such as Pearson correlation and chi2, take the length of the expected feature subset as a parameter. For those methods, we used a grid search over subset sizes of 10, 15, and 20 to choose the optimal length, and 20 was the best-performing value. The result for each individual feature selection algorithm is summarized in Table 5. According to our experimental results, some feature selection algorithms return comparatively small feature subsets; for example, SPERCENT and EXTREES extracted only 9 and 8 features, respectively. However, the quality of these feature subsets can only be validated by using them for the attack classification task. Therefore, we used each feature subset to train a wide variety of machine learning models so that we could evaluate their performance more thoroughly.
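The subset-size search can be sketched with scikit-learn's `GridSearchCV` over a pipeline. The scoring classifier (a decision tree), the 3-fold cross-validation, and the synthetic data are assumptions for illustration; the text does not specify which model or validation scheme was used to score each candidate size.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the 42-feature dataset
X, y = make_classification(n_samples=600, n_features=42, n_informative=10, random_state=0)

# Scaling sits inside the pipeline so each CV fold is scaled on its own training part;
# MinMaxScaler also keeps the training inputs non-negative, which chi2 requires.
pipe = make_pipeline(
    MinMaxScaler(),
    SelectKBest(chi2),
    DecisionTreeClassifier(random_state=0),
)
search = GridSearchCV(pipe, param_grid={"selectkbest__k": [10, 15, 20]}, cv=3, scoring="accuracy")
search.fit(X, y)

best_k = search.best_params_["selectkbest__k"]
print("best subset size:", best_k)
for k, score in zip(search.cv_results_["param_selectkbest__k"], search.cv_results_["mean_test_score"]):
    print(k, round(score, 3))
```

`make_pipeline` names each step after its lowercased class name, which is why the grid key is `selectkbest__k`. Swapping `chi2` for another scoring function (for example `f_classif` for ANOVA) applies the same search to other filter methods.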

Table 5.

Selected features by individual FS method.

4.2. Ensemble Feature Selection

After extracting all individual feature subsets, we applied an ensemble technique to find the features selected by most of the candidate feature selection methods. A feature is chosen for the ensemble feature set when it is selected by at least half of the individual feature selection methods. Since our ensemble technique is a filter-based approach, we engaged more selectors in the election process for better judgment. Because our feature selection phase employed 15 different methods, a feature is included in the ensemble feature set when eight or more individual feature selection methods vote for it. Table 6 lists the ensemble feature set with the total vote count for each feature. The ensemble method reduced the original feature set length by more than 60%. Table 5 shows that some feature selection methods return smaller feature subsets than our ensemble approach. However, actual performance should be measured by considering the feature set together with the corresponding accuracy and execution time. In the next section, we evaluate the performance of the individual feature sets using different models.

Table 6.

Ensemble feature set selected by most of the FS methods.

4.3. Performance Evaluation

We implemented three major types of AI models (machine learning, deep learning, and unsupervised learning) to evaluate the feature selection methods and identify a best-performing feature set common to all of them. Each detection model was trained using 17 different feature sets: 15 individual feature sets, one ensemble feature set, and the original feature set. This yields the large number of model and feature-set combinations summarized in Table 4. For example, we investigated 119 machine learning models (each of seven models trained and evaluated on 17 feature sets) to determine the best-performing model and its corresponding feature set. Likewise, we analyzed 68 deep learning models (four models with 17 feature sets) and 85 unsupervised learning models (five models with 17 feature sets). All experimental results reported in Table 7, Table 8, Table 9, and Table 10 are evaluated by classification accuracy, F1-score, precision, recall, and total training time in seconds (Time).

Table 7.

Individual FS method best performance summary in machine learning.

Table 8.

Individual machine learning model best performance summary.

Table 9.

Individual FS method best performance summary in deep learning.

Table 10.

Individual deep learning model best performance summary.

Table 7 summarizes the best-performing machine learning model for each individual feature set, where each row represents the performance of a particular FS method and its corresponding machine learning model. According to our experimental results, most of the feature sets perform best when combined with the neural network (NN) architecture: 47.1% (8 out of 17). Neural networks generally have more generalization capability than tree-based algorithms such as decision tree (DT) or random forest (RF) when trained on larger datasets. However, it is comparatively difficult to find the right hyper-parameters and architecture for an NN model to avoid overfitting. In addition, training an NN model takes significantly longer than training DT or RF because an NN has many more trainable parameters. The random forest (RF) algorithm also performs very well, being the best model for 6 individual feature sets (35.3%). The remaining 17.6% (3 out of 17) of the feature sets provide their best performance when trained with the decision tree (DT) algorithm. Since the RF algorithm combines multiple decision trees to make the final prediction, it generally performs better than DT but takes more training time. Across all individual feature sets, the average training time of the NN model is 117.29 s, the highest among the three best classifiers, while DT takes the lowest average training time of 1.02 s and RF takes 11.09 s.
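The percentages above follow directly from the per-model counts over the 17 feature sets. The short check below (the function name is hypothetical) reproduces them from the counts stated in the text and also relates the reported average training times of NN and RF.

```python
def best_model_shares(counts):
    """Percentage of feature sets (rounded to 0.1) for which each model was best."""
    total = sum(counts.values())
    return {model: round(100 * n / total, 1) for model, n in counts.items()}


# Counts reported in the text: NN best for 8, RF for 6, DT for 3 of the 17 feature sets.
shares = best_model_shares({"NN": 8, "RF": 6, "DT": 3})
print(shares)  # {'NN': 47.1, 'RF': 35.3, 'DT': 17.6}

# Average training times reported in the text (seconds).
avg_time = {"NN": 117.29, "RF": 11.09, "DT": 1.02}
print(round(avg_time["NN"] / avg_time["RF"], 1))  # 10.6
```

On average, the NN model is therefore roughly ten times slower to train than RF in these experiments.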

The ensemble feature set (EN) trained with the neural network (NN) model shows the best performance, with an accuracy of 87.2%. The CHI2 feature set achieves the same accuracy of 87.2%, but its model training time (180.5 s) is more than double the training time with the EN feature set (78.32 s). The EN feature set therefore provides the best overall performance in terms of accuracy and significantly lower execution time. Our experimental results also show that the performance of a machine learning model depends highly on the feature set used for training. For example, the NN model performs best when trained with the EN feature set, but the same model achieves only 71% accuracy with the feature set extracted by the LASSO method. Table 8 summarizes the best-performing feature set for each machine learning model. Most of the models (4 out of 7) achieve their best accuracy with the SFPR feature set, although their accuracy is below 80%, which is significantly lower than the best accuracy (87.2%). This result shows that selecting a suitable machine learning model together with an efficient feature set is essential for the best performance.
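The comparison between EN and CHI2 (equal accuracy, different training time) can be expressed as a simple selection rule. The rule and its 0.05-point accuracy tolerance are assumptions made for illustration; the paper does not specify a formal tie-breaking procedure. The numbers are the ones reported above.

```python
# (feature set, model, accuracy in %, training time in s), values as reported in the text.
candidates = [
    ("EN", "NN", 87.2, 78.32),
    ("CHI2", "NN", 87.2, 180.5),
]


def pick_best(rows, tol=0.05):
    """Among rows within `tol` accuracy points of the top accuracy, return the fastest."""
    top_accuracy = max(row[2] for row in rows)
    near_top = [row for row in rows if top_accuracy - row[2] <= tol]
    return min(near_top, key=lambda row: row[3])


best = pick_best(candidates)
print(best)  # ('EN', 'NN', 87.2, 78.32)
print(round(180.5 / 78.32, 2))  # 2.3
```

Accuracy is compared first, so a clearly more accurate but slower combination would still win; training time only breaks near-ties.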

Table 9 shows the best-performing deep learning model for each feature set; the EN feature set performs best, with an accuracy of 86.8% using the LSTM model. LSTM is one of the best-performing models across all individual feature sets, but its execution time is comparatively larger than that of the other three models. On the other hand, the DNN takes less execution time, but its average prediction accuracy is below 80%. LSTM has considerably more trainable parameters than DNN, which results in longer training time and better accuracy for LSTM. GRU and CNN performed very similarly in terms of the number of feature sets for which each was the best model. Their detection accuracies were also close: 82% for GRU and 83% for CNN. However, the average execution time of GRU was almost 67% higher than that of CNN. Table 10 summarizes the best performance (in terms of accuracy) of each deep learning model with its respective feature set. For deep learning models, the EN feature set performs better in most cases, increasing the detection accuracy by more than 4% compared with the individual feature sets.
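To illustrate why recurrent layers carry more trainable parameters than dense layers, the sketch below computes per-layer parameter counts using the formulas that Keras uses for `Dense`, `LSTM`, and `GRU` layers (GRU with its default `reset_after=True`). The input size of 13 (the size of the EN feature set) and the 64 hidden units are hypothetical; the actual architectures used in this study are not given here.

```python
def dense_params(d_in, units):
    # Weight matrix (d_in x units) plus one bias per unit.
    return d_in * units + units


def lstm_params(d_in, units):
    # Four gates (input, forget, cell candidate, output), each with
    # input weights, recurrent weights and a bias vector.
    return 4 * (d_in * units + units * units + units)


def gru_params(d_in, units, reset_after=True):
    # Three gates (update, reset, candidate); with reset_after=True
    # Keras keeps two bias vectors per gate.
    biases = 2 * units if reset_after else units
    return 3 * (d_in * units + units * units + biases)


d_in, units = 13, 64  # hypothetical layer sizes
print(dense_params(d_in, units), lstm_params(d_in, units), gru_params(d_in, units))
# 896 19968 15168
```

For the same width, a single LSTM layer has over 20 times the parameters of a dense layer and about a third more than a GRU layer, which is consistent with the longer LSTM training times reported above.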

Table 11 describes the performance of the best-performing unsupervised learning model for each feature selection method. In particular, the A-KNN model with the EN feature set gives the best accuracy of 76%. Table 12 shows the best individual performance of each unsupervised model. The accuracy is the same (76%) for both A-KNN and KNN, but the execution time is shorter for A-KNN. The feature set used by KNN has 20 features, while the ensemble feature set used by A-KNN is comparatively smaller, with 13 features. As a result, the ensemble feature set improved execution time without any loss of accuracy. Compared with the individual feature selection methods, the EN feature set provided at least 8% higher accuracy.
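The link between feature-set length and KNN execution time can be seen in a brute-force nearest-neighbour outlier detector, where every distance computation scales with the number of features. The sketch below assumes that A-KNN denotes the average-distance variant of the KNN outlier detector (as offered, for example, by PyOD's `KNN` detector with `method="mean"`); this interpretation and the function name `knn_outlier_scores` are assumptions, not the paper's stated implementation.

```python
import math


def knn_outlier_scores(points, k=2, method="largest"):
    """Brute-force k-NN outlier scores (higher = more anomalous).

    method="largest": distance to the k-th nearest neighbour (classic KNN detector).
    method="mean":    mean distance to the k nearest neighbours (average KNN).
    """
    if method not in ("largest", "mean"):
        raise ValueError("method must be 'largest' or 'mean'")
    scores = []
    for i, p in enumerate(points):
        # Each math.dist call is O(d) in the number of features d,
        # so a brute-force scan costs O(n * d) per point.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        scores.append(nearest[-1] if method == "largest" else sum(nearest) / len(nearest))
    return scores


# Toy 2-D data (hypothetical): four clustered points and one outlier.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5)]
for method in ("largest", "mean"):
    scores = knn_outlier_scores(points, k=2, method=method)
    print(method, scores.index(max(scores)))  # the outlier at index 4 scores highest
```

Under this brute-force scheme, shrinking each sample from 20 to 13 features reduces the cost of every distance computation proportionally, which is one plausible source of the shorter A-KNN execution time.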

Table 11.

Individual FS method best performance summary for unsupervised learning.

Table 12.

Individual unsupervised learning model best performance summary.

Table 13 compares existing works with our research. All results referred to in the comparison table are reported on the UNSW-NB15 dataset. Compared to existing works, we performed an extensive analysis of DDoS detection on the same dataset for three main categories of AI models: machine learning (ML), deep learning (DL), and unsupervised learning (UL). Most existing works implement only one type of detection model, without any feature selection, whereas we compared the performance of the ensemble feature selection technique for three diverse types of models. Extracting important features is necessary for better generalization and running time. That is why we extracted a common best-performing feature set for three different types of detection models, giving better accuracy and reduced execution time. For example, [38] used both ML and DL methods for performance evaluation, but our proposed DL model achieved 10% higher detection accuracy. For ML models, our accuracy was lower, but very close, with a gap of only 3%. In [44], the authors considered only DL models for DDoS detection and achieved an accuracy of 86%, while our proposed model outperformed it by 1% with an accuracy of 87%. Compared to the machine learning model in [45], our model accuracy was lower by 4%. However, we could not compare running times, although running time is essential for evaluating performance.

Table 13.

Comparison with the existing works.

5. Conclusions

Our research explains the importance of selecting an optimal feature set to classify DDoS attacks using AI-based techniques. Existing works analyzed and compared the performance of different FS methods using machine learning, deep learning, or unsupervised learning, typically limited to one type of AI model. In this work, we performed a comprehensive analysis of a substantial number of FS methods using all three main types of AI methods. Our experiment studied 15 individual feature sets, one ensemble feature set, and the original feature set to identify an optimal set of features. A majority voting technique combines the individual results of the different FS methods: it selects the features voted for by more than 50% of the candidate FS methods (at least eight of the 15). The extracted feature sets were used to train seven ML algorithms, four DL models, and five unsupervised models to evaluate the performance of the individual FS methods and EnFS. Based on the results, we conclude that our EnFS technique outperforms the individual FS methods in all categories (ML, DL, and UL). However, our results also show that an optimal feature set alone is insufficient for the best performance; the right combination of optimal feature set and classification model is critical for the best classification result. Our methodology has some limitations. For example, it should be validated with other well-known cyber-attack datasets such as NSL-KDD and CICIDS. Furthermore, we tuned the hyper-parameters of the feature selection methods using grid search, which could be replaced by dynamic selection for better results. We therefore plan to extend our experiments to other well-known datasets in the cyber-security domain as part of our future work. Validating the approach on multiple datasets would be more effective for choosing the right combination of feature selection and detection models.
Furthermore, as part of our ongoing research, we may incorporate adversarial machine learning (AML) in the future to keep the entire system secure against adversaries through the safe use of ML techniques in adversarial settings.

Author Contributions

Conceptualization, S.S.; methodology, S.S.; software, S.S.; validation, S.S.; formal analysis, S.S.; investigation, S.S.; writing—original draft preparation, S.S., A.T.P. and A.S.; writing—review and editing, S.S., A.T.P. and A.S.; supervision, A.H.; project administration, A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

UNSW-NB15 dataset at https://research.unsw.edu.au/projects/unsw-nb15-dataset (accessed on 23 October 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tunggal, A. Why Is Cybersecurity Important? UpGuard. 2021. Available online: https://www.upguard.com/blog/cybersecurity-important (accessed on 15 November 2021).

- Brownlee, J. Feature Selection to Improve Accuracy and Decrease Training Time. Available online: https://machinelearningmastery.com/feature-selection-to-improve-accuracy-and-decrease-training-time/ (accessed on 15 November 2021).

- Hoque, N.; Singh, M.; Bhattacharyya, D. EFS-MI: An ensemble feature selection method for classification. Complex Intell. Syst. 2018, 4, 105–118.

- Pes, B. Ensemble feature selection for high-dimensional data: A stability analysis across multiple domains. Neural Comput. Appl. 2020, 32, 5951–5973.

- Li, J.; Cheng, K.; Wang, S.; Morstatter, F.; Trevino, R.; Tang, J.; Liu, H. Feature selection: A data perspective. ACM Comput. Surv. (CSUR) 2017, 50, 1–45.

- Chebrolu, S.; Abraham, A.; Thomas, J. Feature deduction and ensemble design of intrusion detection systems. Comput. Secur. 2005, 24, 295–307.

- Amiri, F.; Yousefi, M.; Lucas, C.; Shakery, A.; Yazdani, N. Mutual information-based feature selection for intrusion detection systems. J. Netw. Comput. Appl. 2011, 34, 1184–1199.

- Das, S.; Mahfouz, A.; Venugopal, D.; Shiva, S. DDoS intrusion detection through machine learning ensemble. In Proceedings of the 2019 IEEE 19th International Conference on Software Quality, Reliability and Security Companion (QRS-C), Sofia, Bulgaria, 22–26 July 2019; pp. 471–477.

- Manevitz, L.; Yousef, M. One-class SVMs for document classification. J. Mach. Learn. Res. 2001, 2, 139–154.

- Tang, T.; Mhamdi, L.; Zaidi, S.; El-moussa, F.; McLernon, D.; Ghogho, M. A deep learning approach combining auto-encoder with one-class SVM for DDoS attack detection in SDNs. In Proceedings of the International Conference on Communications and Networking, Chongqing, China, 15–17 November 2019.

- Cheng, Z.; Zou, C.; Dong, J. Outlier detection using isolation forest and local outlier factor. In Proceedings of the Conference on Research in Adaptive and Convergent Systems, Chongqing, China, 24–27 September 2019; pp. 161–168.

- Saha, S.; Priyoti, A.; Sharma, A.; Haque, A. Towards an Optimal Feature Selection Method for AI-Based DDoS Detection System. In Proceedings of the 2022 IEEE 19th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 8–11 January 2022; pp. 425–428.

- Tsai, C.; Hsu, Y.; Lin, C.; Lin, W. Intrusion detection by machine learning: A review. Expert Syst. Appl. 2009, 36, 11994–12000.

- Mukkamala, S.; Sung, A.; Abraham, A. Intrusion detection using ensemble of soft computing paradigms. In Intelligent Systems Design and Applications; Springer: Berlin/Heidelberg, Germany, 2003; pp. 239–248.

- Gomes, H.; Barddal, J.; Enembreck, F.; Bifet, A. A survey on ensemble learning for data stream classification. ACM Comput. Surv. (CSUR) 2017, 50, 1–36.

- Sagi, O.; Rokach, L. Ensemble learning: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1249.

- Gao, X.; Shan, C.; Hu, C.; Niu, Z.; Liu, Z. An adaptive ensemble machine learning model for intrusion detection. IEEE Access 2019, 7, 82512–82521.

- Pham, N.; Foo, E.; Suriadi, S.; Jeffrey, H.; Lahza, H. Improving performance of intrusion detection system using ensemble methods and feature selection. In Proceedings of the Australasian Computer Science Week Multiconference, Brisbane, QLD, Australia, 29 January–2 February 2018; pp. 1–6.

- Ravi, V.; Chaganti, R.; Alazab, M. Recurrent deep learning-based feature fusion ensemble meta-classifier approach for intelligent network intrusion detection system. Comput. Electr. Eng. 2022, 102, 108156.

- Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

- Sheikhpour, R.; Sarram, M.; Gharaghani, S.; Chahooki, M. A survey on semi-supervised feature selection methods. Pattern Recognit. 2017, 64, 141–158.

- Khalid, S.; Khalil, T.; Nasreen, S. A survey of feature selection and feature extraction techniques in machine learning. In Proceedings of the 2014 Science and Information Conference, London, UK, 27–29 August 2014; pp. 372–378.

- Molina, L.; Belanche, L.; Nebot, À. Feature selection algorithms: A survey and experimental evaluation. In Proceedings of the 2002 IEEE International Conference on Data Mining, Maebashi City, Japan, 9–12 December 2002; pp. 306–313.

- Adams, S.; Beling, P. A survey of feature selection methods for Gaussian mixture models and hidden Markov models. Artif. Intell. Rev. 2019, 52, 1739–1779.

- Lin, S.; Ying, K.; Lee, C.; Lee, Z. An intelligent algorithm with feature selection and decision rules applied to anomaly intrusion detection. Appl. Soft Comput. 2012, 12, 3285–3290.

- Osanaiye, O.; Cai, H.; Choo, K.; Dehghantanha, A.; Xu, Z.; Dlodlo, M. Ensemble-based multi-filter feature selection method for DDoS detection in cloud computing. EURASIP J. Wirel. Commun. Netw. 2016, 2016, 1–10.

- Das, S.; Venugopal, D.; Shiva, S.; Sheldon, F. Empirical evaluation of the ensemble framework for feature selection in DDoS attack. In Proceedings of the 2020 International Conference on Edge Computing and Scalable Cloud (EdgeCom), New York, NY, USA, 1–3 August 2020; pp. 56–61.

- Dash, M.; Liu, H. Feature selection for classification. Intell. Data Anal. 1997, 1, 131–156.

- Wang, Y.; Wong, J.; Miner, A. Anomaly intrusion detection using one class SVM. In Proceedings of the Fifth Annual IEEE SMC Information Assurance Workshop, West Point, NY, USA, 10–11 June 2004; pp. 358–364.

- Erfani, S.; Rajasegarar, S.; Karunasekera, S.; Leckie, C. High-dimensional and large-scale anomaly detection using a linear one-class SVM with deep learning. Pattern Recognit. 2016, 58, 121–134.

- Vasudevan, A.; Selvakumar, S. Local outlier factor and stronger one class classifier based hierarchical model for detection of attacks in network intrusion detection dataset. Front. Comput. Sci. 2016, 10, 755–766.

- Lazarevic, A.; Ertoz, L.; Kumar, V.; Ozgur, A.; Srivastava, J. A comparative study of anomaly detection schemes in network intrusion detection. In Proceedings of the 2003 SIAM International Conference on Data Mining, San Francisco, CA, USA, 1–3 May 2003; pp. 25–36.

- Amer, M.; Goldstein, M.; Abdennadher, S. Enhancing one-class support vector machines for unsupervised anomaly detection. In Proceedings of the ACM SIGKDD Workshop on Outlier Detection and Description, Chicago, IL, USA, 10–14 August 2013; pp. 8–15.

- Alshawabkeh, M.; Jang, B.; Kaeli, D. Accelerating the local outlier factor algorithm on a GPU for intrusion detection systems. In Proceedings of the 3rd Workshop on General-Purpose Computation on Graphics Processing Units, Pittsburgh, PA, USA, 14 March 2010; pp. 104–110.

- Karev, D.; McCubbin, C.; Vaulin, R. Cyber threat hunting through the use of an isolation forest. In Proceedings of the 18th International Conference on Computer Systems and Technologies, Ruse, Bulgaria, 23–24 June 2017; pp. 163–170.

- Tao, X.; Peng, Y.; Zhao, F.; Zhao, P.; Wang, Y. A parallel algorithm for network traffic anomaly detection based on Isolation Forest. Int. J. Distrib. Sens. Netw. 2018, 14, 1550147718814471.

- Elghazel, H.; Aussem, A. Unsupervised feature selection with ensemble learning. Mach. Learn. 2015, 98, 157–180.

- Vinayakumar, R.; Alazab, M.; Soman, K.; Poornachandran, P.; Al-Nemrat, A.; Venkatraman, S. Deep learning approach for intelligent intrusion detection system. IEEE Access 2019, 7, 41525–41550.

- Vinayakumar, R.; Soman, K.; Poornachandran, P. Evaluating effectiveness of shallow and deep networks to intrusion detection system. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Manipal, Karnataka, India, 13–16 September 2017; pp. 1282–1289.

- Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Alotaibi, B.; Alotaibi, M. Consensus and majority vote feature selection methods and a detection technique for web phishing. J. Ambient. Intell. Humaniz. Comput. 2020, 12, 717–727.

- Ketkar, N. Introduction to keras. In Deep Learning With Python; Apress: Berkeley, CA, USA, 2017; pp. 97–111.

- Moustafa, N.; Slay, J. The significant features of the UNSW-NB15 and the KDD99 data sets for network intrusion detection systems. In Proceedings of the 2015 4th International Workshop on Building Analysis Datasets and Gathering Experience Returns For Security (BADGERS), Kyoto, Japan, 5 November 2015; pp. 25–31.

- Kasongo, S.; Sun, Y. Performance analysis of intrusion detection systems using a feature selection method on the UNSW-NB15 dataset. J. Big Data 2020, 7, 1–20.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).