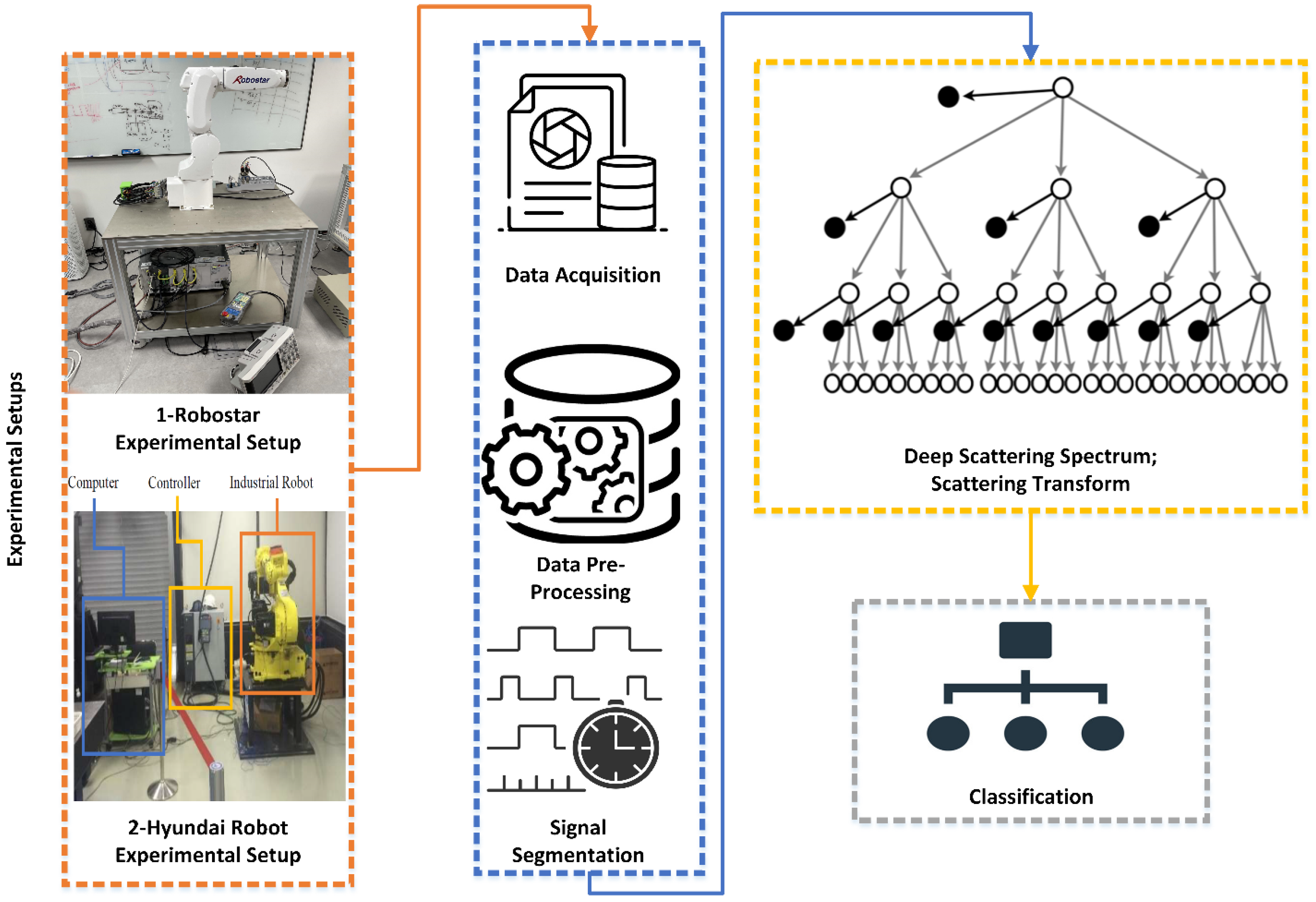

Deep Scattering Spectrum Germaneness for Fault Detection and Diagnosis for Component-Level Prognostics and Health Management (PHM)

Abstract

1. Introduction

2. Materials and Methods

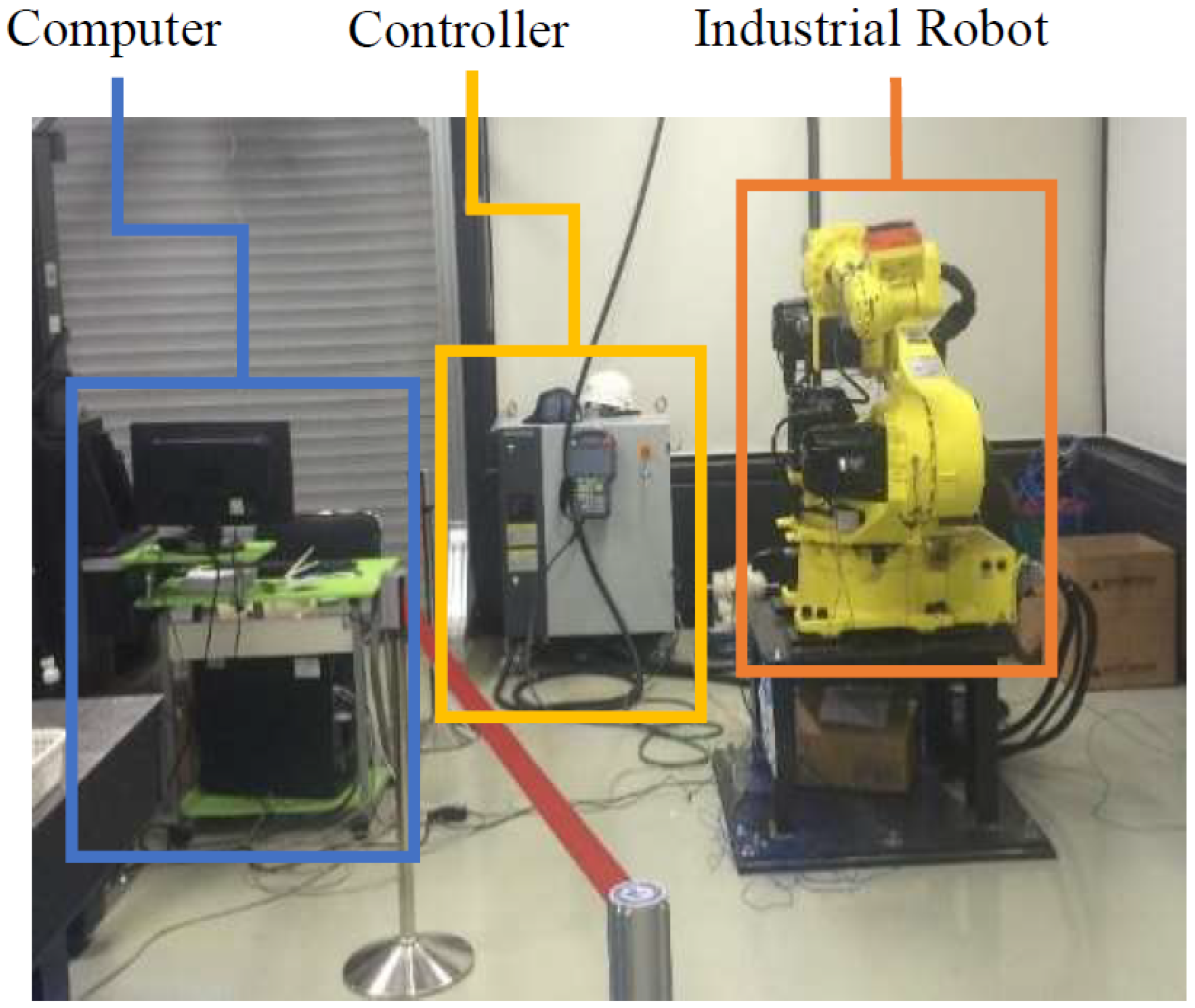

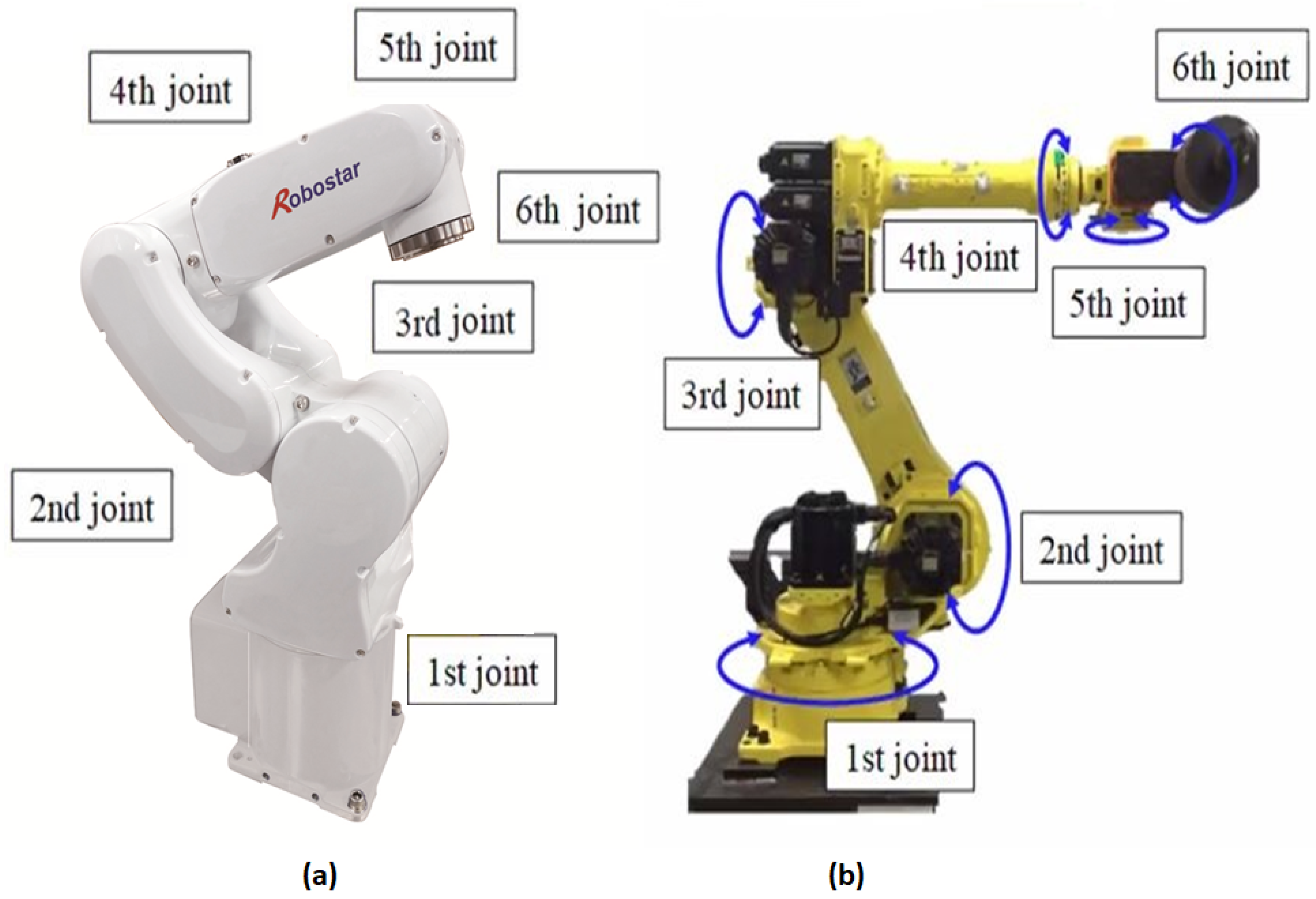

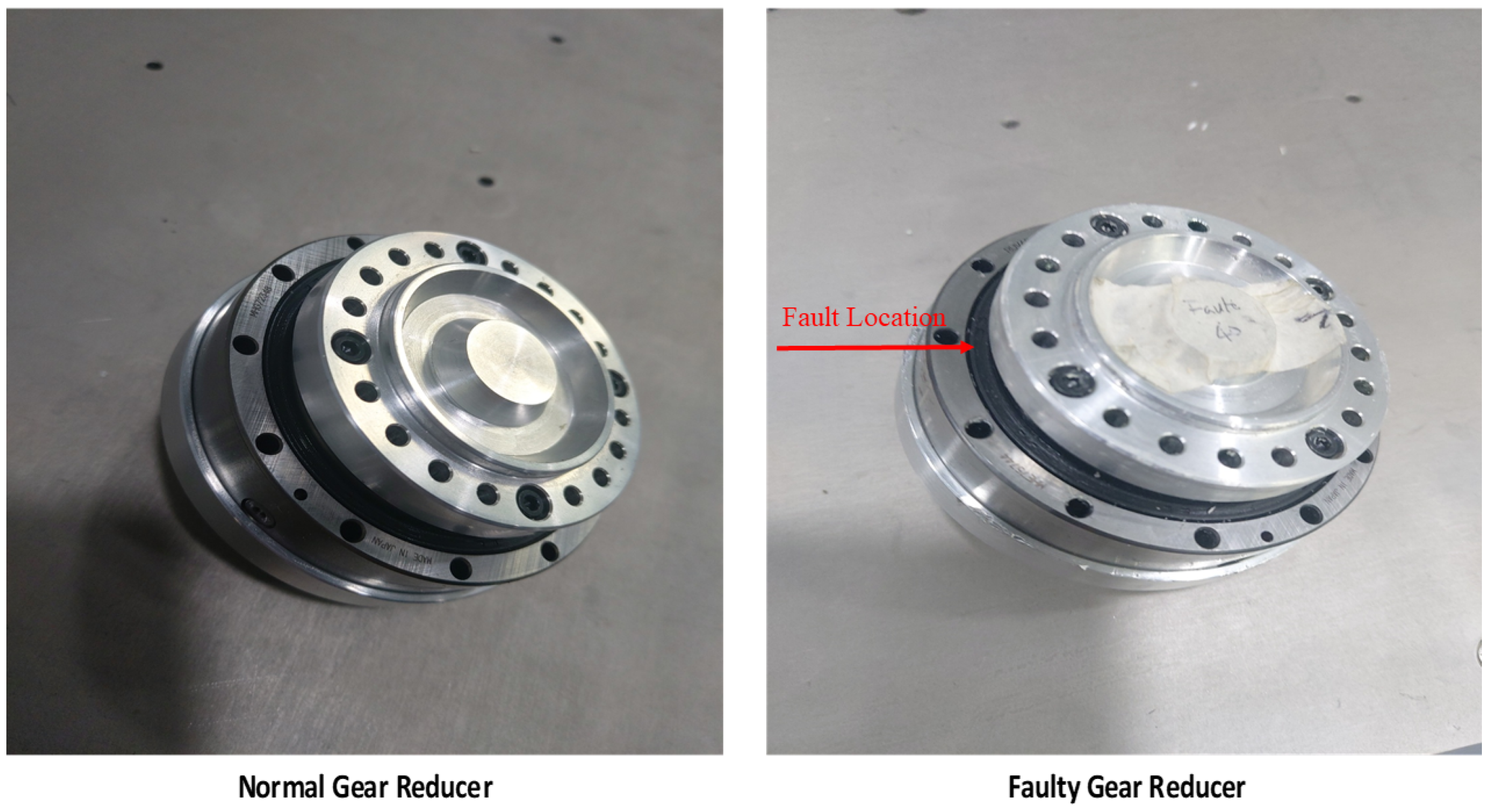

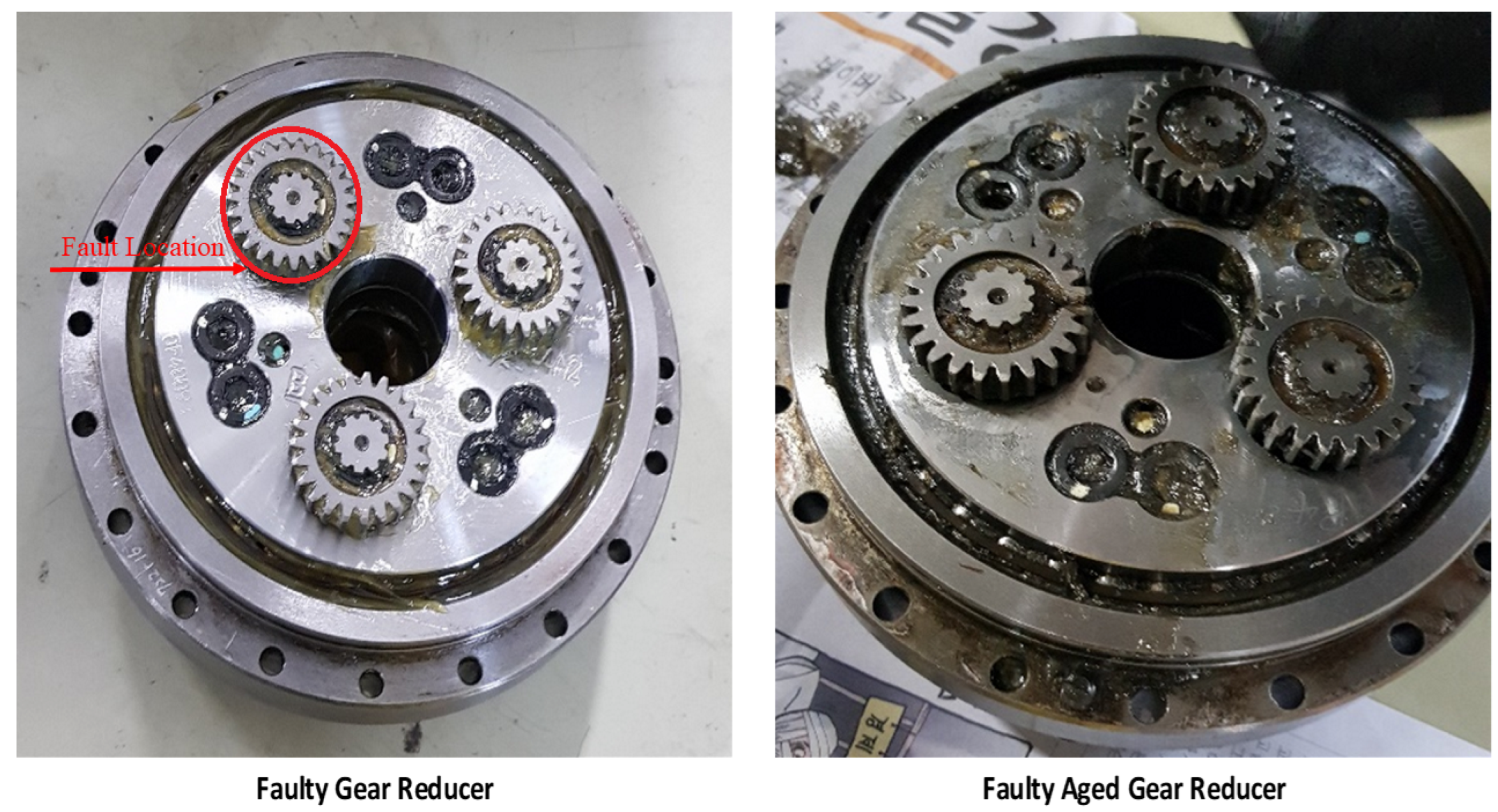

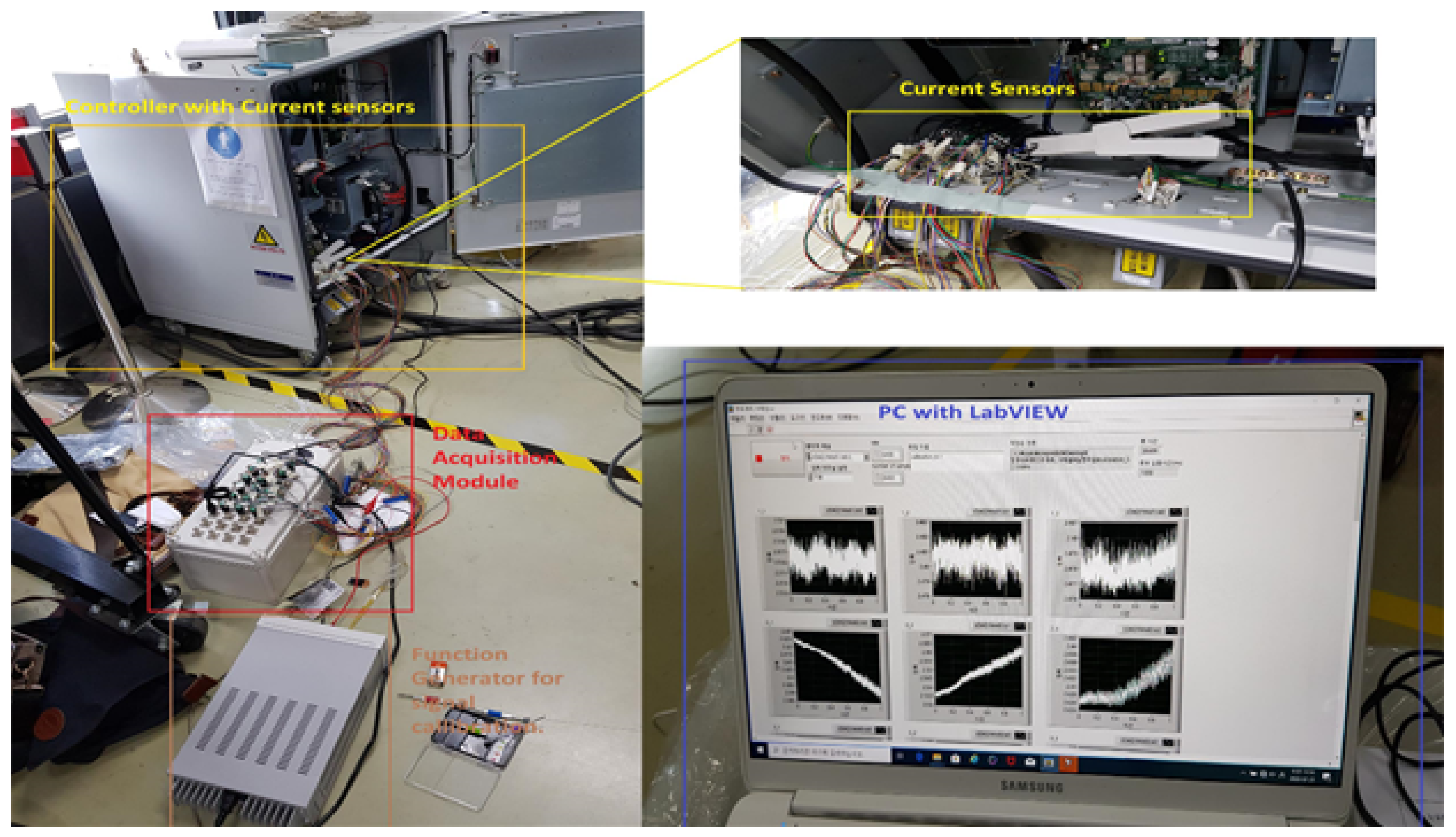

2.1. Experimental Setups

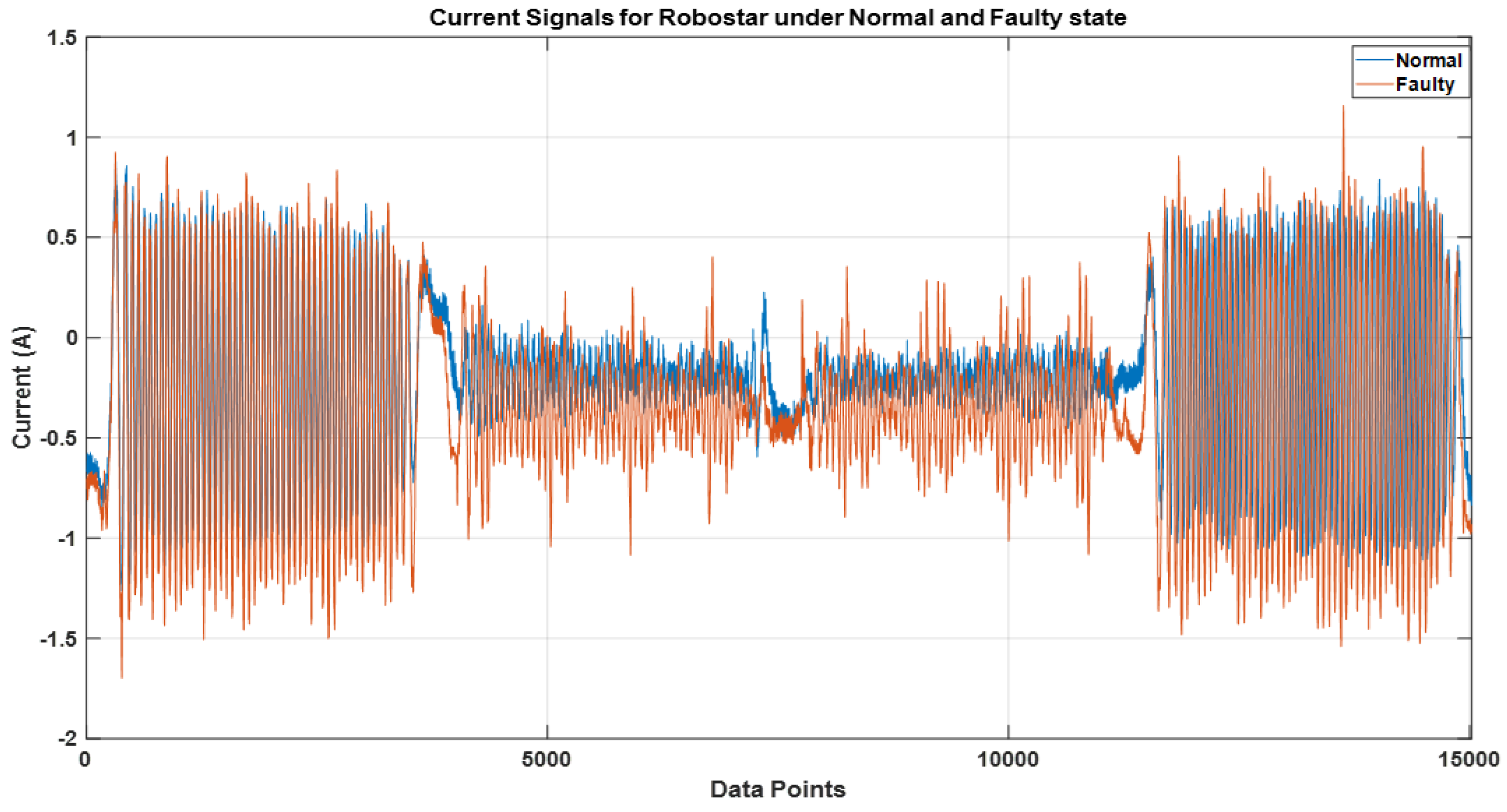

2.2. Data Acquisition

2.3. Data Pre-Processing

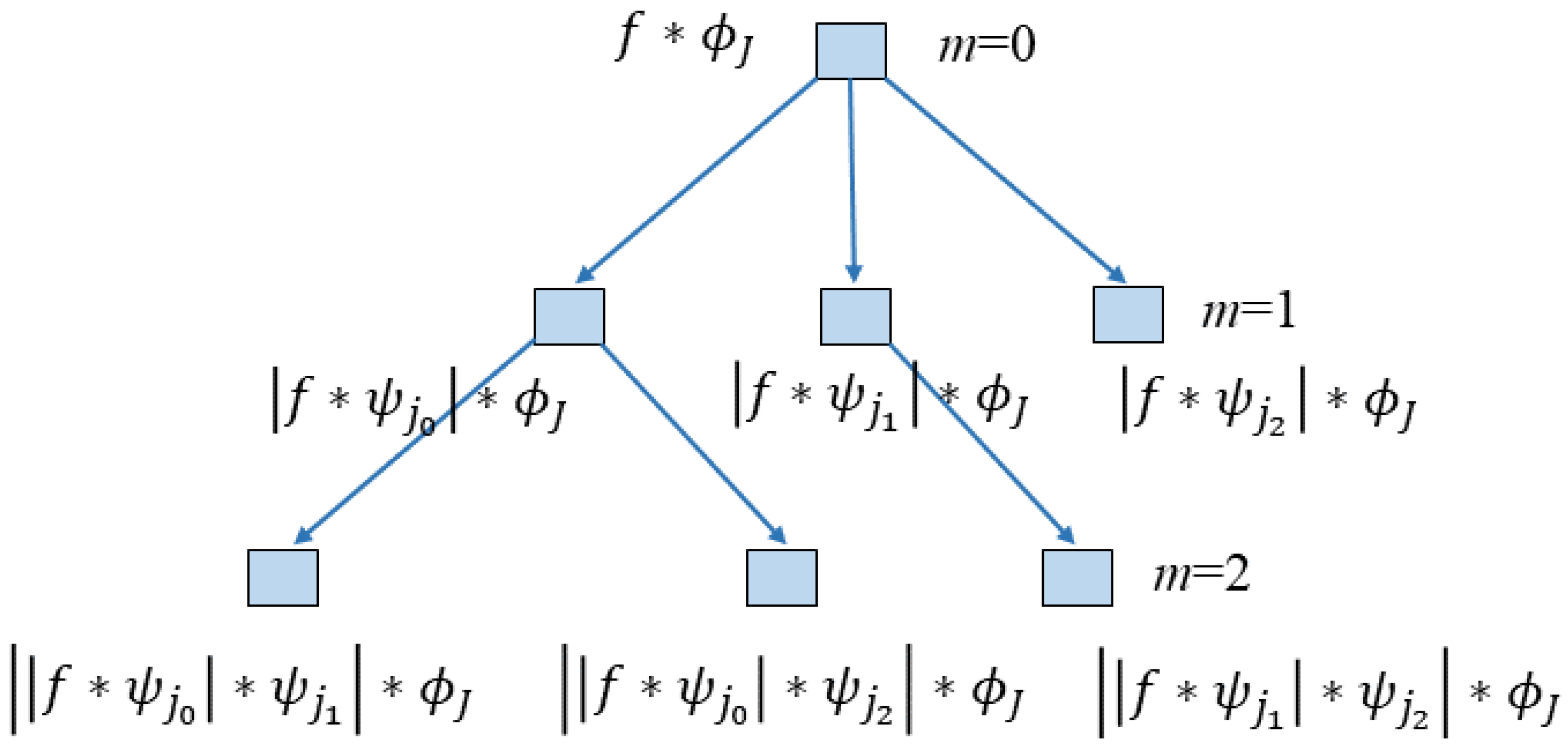

2.4. Deep Scattering Spectrum

3. Results and Discussion

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

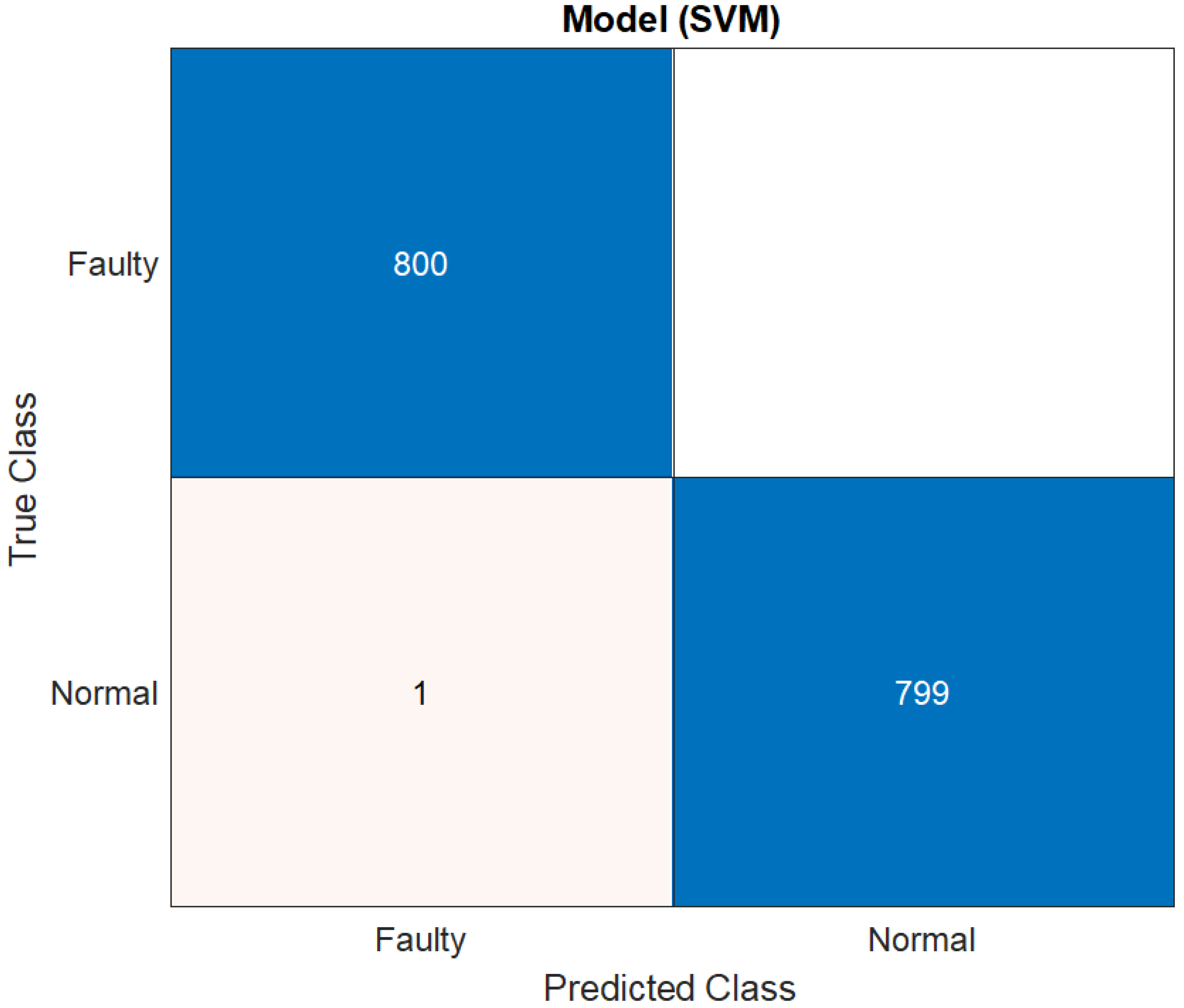

Robostar Fault Detection: Metrics

| Classifier | Number of Classes | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F-Score (%) |
|---|---|---|---|---|---|---|
| SVM | 2 | 99.9 | 99.806 | 99.943 | 99.829 | 99.796 |
| Decision Tree | 2 | 99 | 98.906 | 99.043 | 98.929 | 98.896 |
| Ensemble | 2 | 99.6 | 99.506 | 99.643 | 99.529 | 99.496 |
| KNN | 2 | 99.4 | 99.306 | 99.443 | 99.329 | 99.296 |
| Discriminant Analysis | 2 | 97.7 | 97.606 | 97.743 | 97.629 | 97.596 |
| Naïve Bayes | 2 | 82 | 81.906 | 82.043 | 81.929 | 81.896 |
| Average Performance Score | | 96.26 | 96.17 | 96.30 | 96.19 | 96.16 |
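
The "Average Performance Score" row above appears to be the unweighted mean of each metric column over the six classifiers. The following is a minimal Python sketch that checks this reading. The names `METRICS`, `ROBOSTAR`, and `column_means` are illustrative and not taken from the article, and the unweighted-mean interpretation is an assumption based only on the numbers in the table.

```python
from statistics import mean

# Metric order used in every tuple below (column order of the table).
METRICS = ("accuracy", "sensitivity", "specificity", "precision", "f_score")

# Per-classifier scores (%) copied from the Robostar table.
ROBOSTAR = {
    "SVM": (99.9, 99.806, 99.943, 99.829, 99.796),
    "Decision Tree": (99.0, 98.906, 99.043, 98.929, 98.896),
    "Ensemble": (99.6, 99.506, 99.643, 99.529, 99.496),
    "KNN": (99.4, 99.306, 99.443, 99.329, 99.296),
    "Discriminant Analysis": (97.7, 97.606, 97.743, 97.629, 97.596),
    "Naive Bayes": (82.0, 81.906, 82.043, 81.929, 81.896),
}


def column_means(table):
    """Return the unweighted mean of each metric across all classifiers."""
    columns = zip(*table.values())  # transpose rows into metric columns
    return {name: mean(col) for name, col in zip(METRICS, columns)}


averages = column_means(ROBOSTAR)
for name, value in averages.items():
    print(f"{name}: {value:.3f}")
```

Under this assumption, each computed mean agrees with the corresponding entry in the table's average row to within 0.01. The same function can be applied to the scores of any other results table laid out in the same column order.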

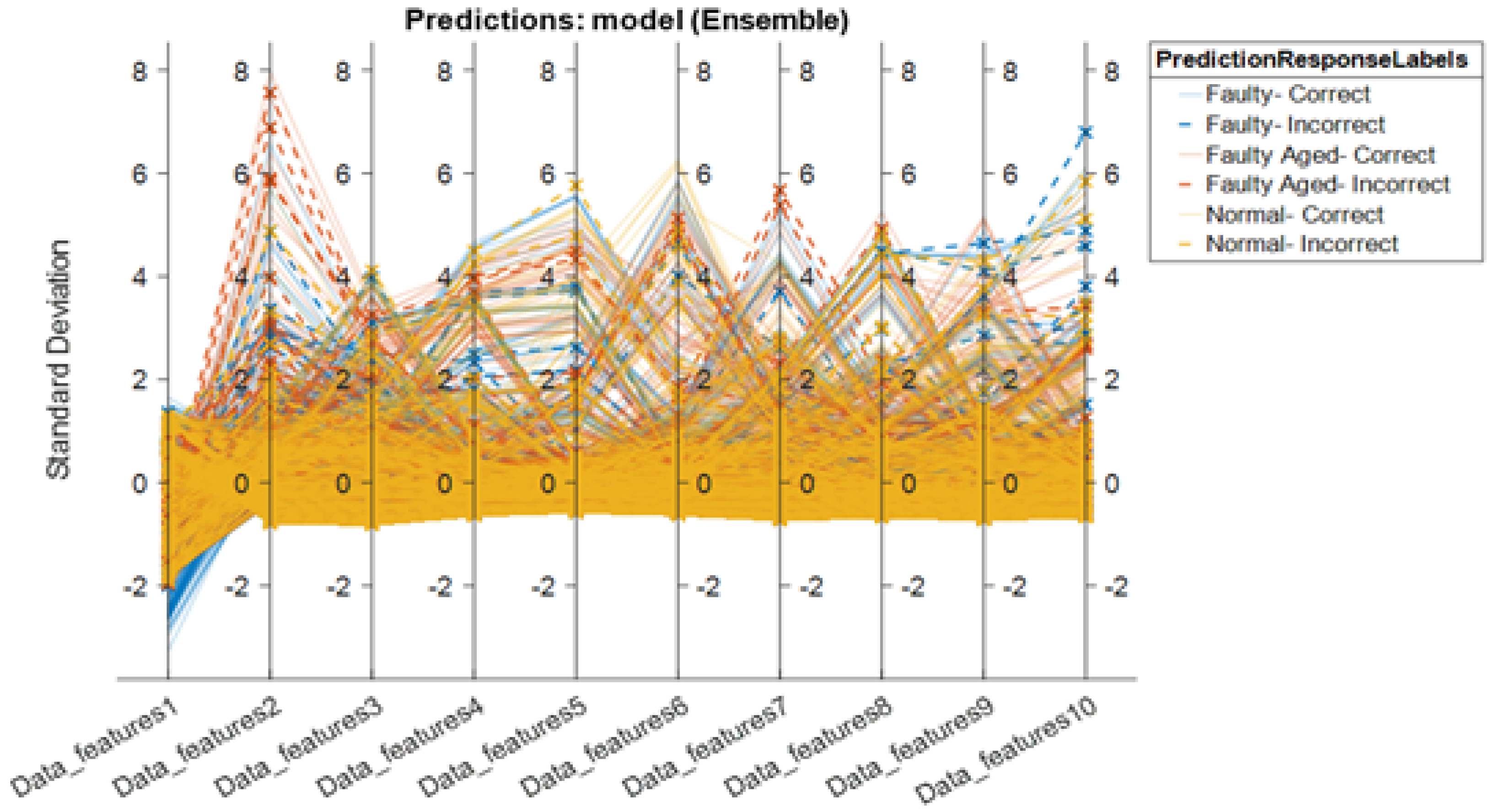

Hyundai Robot Fault Detection: Metrics

| Classifier | Number of Classes | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F-Score (%) |
|---|---|---|---|---|---|---|
| Ensemble | 3 | 88.1 | 88.006 | 88.143 | 88.029 | 87.996 |
| Discriminant Analysis | 3 | 85.6 | 85.506 | 85.643 | 85.529 | 85.496 |
| SVM | 3 | 83.2 | 83.106 | 83.243 | 83.129 | 83.096 |
| KNN | 3 | 80.3 | 80.206 | 80.343 | 80.229 | 80.196 |
| Decision Tree | 3 | 68.3 | 68.206 | 68.343 | 68.229 | 68.196 |
| Naïve Bayes | 3 | 48.9 | 48.806 | 48.943 | 48.829 | 48.796 |
| Average Performance Score | | 75.733 | 75.639 | 75.776 | 75.662 | 75.629 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rohan, A. Deep Scattering Spectrum Germaneness for Fault Detection and Diagnosis for Component-Level Prognostics and Health Management (PHM). Sensors 2022, 22, 9064. https://doi.org/10.3390/s22239064
