A Study on Fast and Low-Complexity Algorithms for Versatile Video Coding

Abstract

1. Introduction

2. Overview and Complexity Analysis of VVC/H.266 Standard

2.1. VVC/H.266 Standard

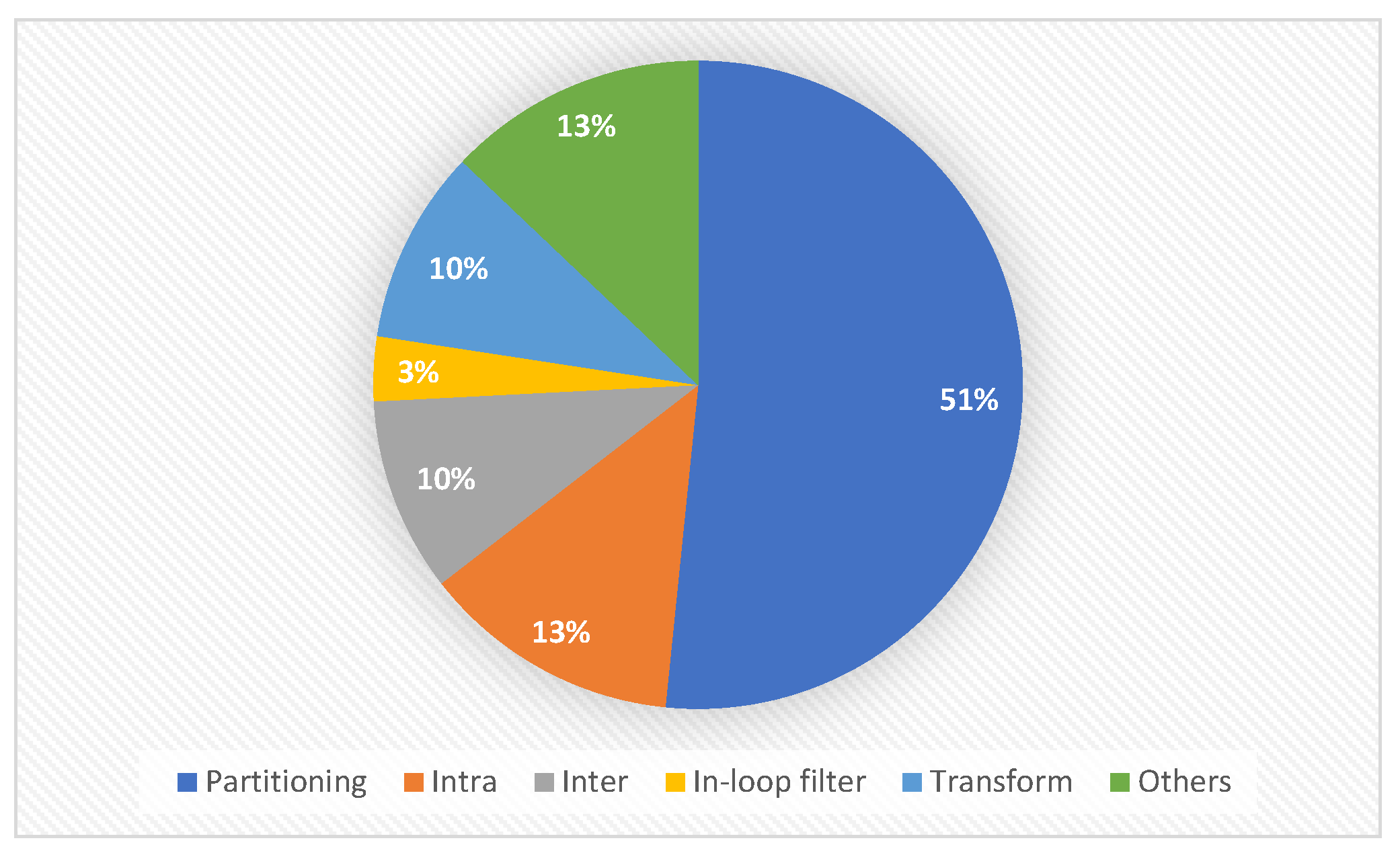

2.2. Complexity Analysis

3. Fast and Low-Complexity Coding for VVC/H.266

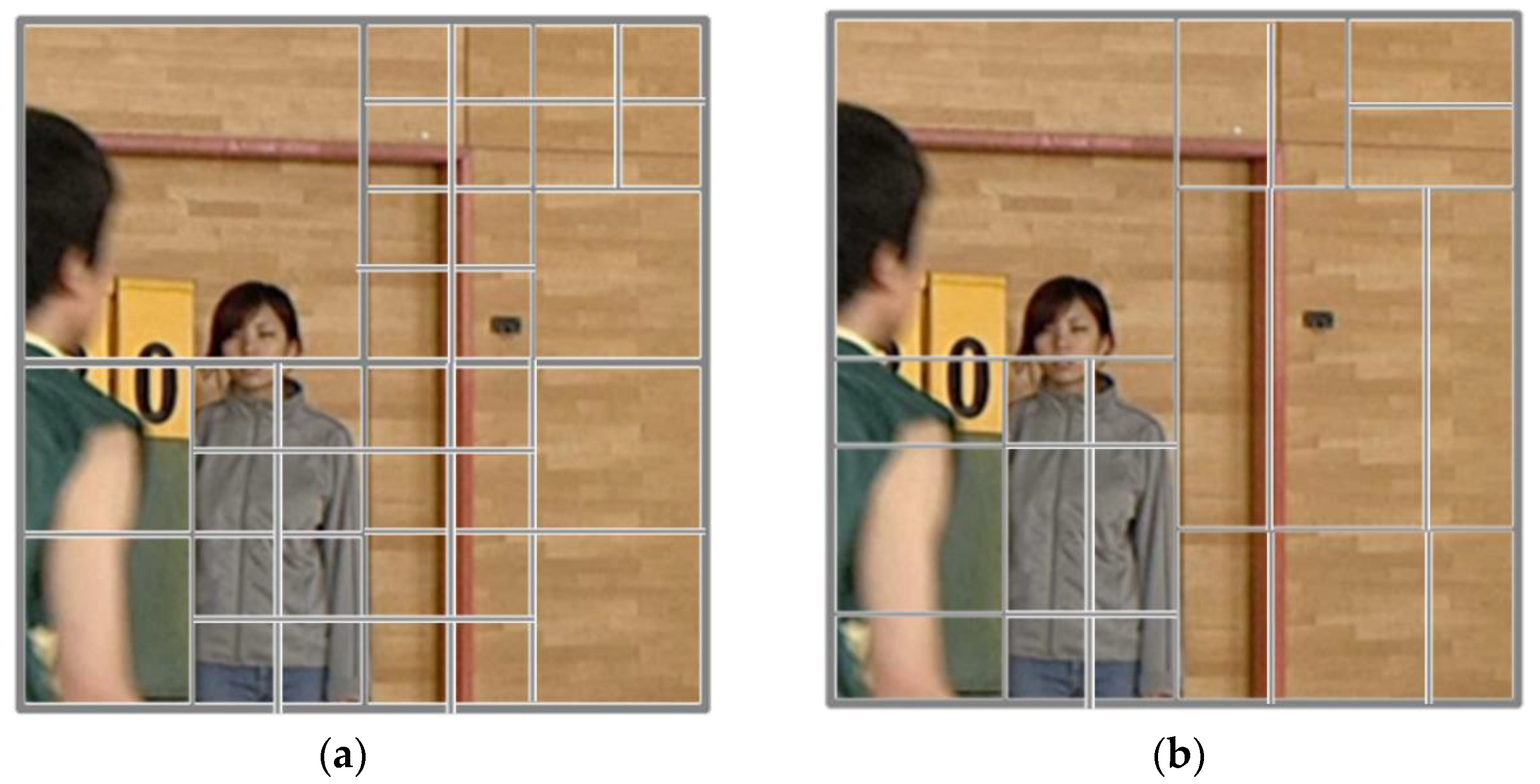

3.1. VVC/H.266 Block Partitioning

3.2. Fast Method on Early Split Mode Decision

3.3. Fast Method Applied to Early CU Depth Decision

3.4. Fast Method for Coding Tools

3.5. Platform Dependent Low-Complexity Methods

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Acronym | Description | Acronym | Description |
|---|---|---|---|
| AI | All intra | LB | Low delay with B-slices |
| AME | Affine motion estimation | LGBM | Light gradient boosting machine |
| ASM | Angular second moment | LP | Low delay with P-slices |
| ASSD | Average sum of squared differences | MPEG | Moving picture experts group |
| AVC | Advanced video coding | MRL | Multiple reference line |
| BDBR | Bjøntegaard delta bitrate | MTS | Multiple transform selection |
| BT | Binary tree | MTT | Multi-type tree |
| CNN | Convolutional neural network | PLT | Palette mode |
| CTC | Common test condition | QP | Quantization parameter |
| CTU | Coding tree unit | QT | Quadtree |
| CU | Coding unit | RA | Random access |
| DBF | Deblocking filter | RD | Rate distortion |
| GPM | Geometric partitioning mode | RDO | Rate distortion optimization |
| HBT | Horizontal binary tree | RFC | Random forest classifier |
| HEVC | High efficiency video coding | RMD | Rough mode decision |
| HTT | Horizontal ternary tree | SCC | Screen content coding |
| HVS | Human visual system | SW | Software |
| IBC | Intra block copy | TT | Ternary tree |
| ISP | Intra sub-partition | UHD | Ultra-high-definition |
| JCT-VC | Joint collaborative team on video coding | VBT | Vertical binary tree |
| JND | Just noticeable difference | VCEG | Video coding experts group |
| JRMD | Rough mode decision-based cost | VTT | Vertical ternary tree |
| JVET | Joint video experts team (formerly joint video exploration team) | VVC | Versatile video coding |

References

1. MARKETSANDMARKETS. Available online: https://www.marketsandmarkets.com/Market-Reports/augmented-reality-virtual-reality-market-1185.html (accessed on 1 September 2022).

2. CISCO. Available online: http://www.cisco.com/c/en/us/solutions/collateral/service-provider/visual-networking-index-vni/complete-white-paper-c11-481360.html (accessed on 1 September 2022).

3. ISO/IEC 11172-2; Information Technology—Coding of Moving Pictures and Associated Audio for Digital Storage Media at up to About 1,5 Mbit/s—Part 2: Video. ISO/IEC JTC 1: Geneva, Switzerland, 1993.

4. Recommendation ITU-T H.262 and ISO/IEC 13818-2; Information Technology—Generic Coding of Moving Pictures and Associated Audio Information: Video. ITU-T: Geneva, Switzerland; ISO/IEC JTC 1: Geneva, Switzerland, 1995.

5. Recommendation ITU-T H.264 and ISO/IEC 14496-10 (AVC); Advanced Video Coding for Generic Audio-Visual Services. ITU-T: Geneva, Switzerland; ISO/IEC JTC 1: Geneva, Switzerland, 2003.

6. STATISTA. Available online: https://www.statista.com/statistics/710673/worldwide-video-codecs-containers-share-online/ (accessed on 1 September 2022).

7. Recommendation ITU-T H.265 and ISO/IEC 23008-2 (HEVC); High Efficiency Video Coding. ITU-T: Geneva, Switzerland; ISO/IEC JTC 1: Geneva, Switzerland, 2013.

8. Ronan, P.; Eric, T.; Mickaël, R. Hybrid broadband/broadcast ATSC 3.0 SHVC distribution chain. In Proceedings of the 2018 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Valencia, Spain, 6–8 June 2018; pp. 1–5.

9. Haskell, B.G.; Puri, A.; Netravali, A.N. Digital Video: An Introduction to MPEG-2; Springer Science & Business Media: Berlin, Germany, 1996.

10. Wiegand, T.; Sullivan, G.J.; Bjontegaard, G.; Luthra, A. Overview of the H.264/AVC video coding standard. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 560–576.

11. Sullivan, G.J.; Ohm, J.R.; Han, W.J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

12. Bross, B.; Chen, J.; Ohm, J.R.; Sullivan, G.J.; Wang, Y.K. Developments in international video coding standardization after AVC, with an overview of versatile video coding (VVC). Proc. IEEE 2021, 109, 1463–1493.

13. Recommendation ITU-T H.266 and ISO/IEC 23090-3 (VVC); Versatile Video Coding. ITU-T: Geneva, Switzerland; ISO/IEC JTC 1: Geneva, Switzerland, 2020.

14. An, J.; Huang, H.; Zhang, K.; Huang, Y.-W.; Lei, S. Quadtree Plus Binary Tree Structure Integration with JEM Tools; doc. JVET-B0023; Joint Video Exploration Team: Geneva, Switzerland, 2016.

15. Li, X.; Chuang, H.-C.; Chen, J.; Karczewicz, M.; Zhang, L.; Zhao, X.; Said, A. Multi-Type-Tree; doc. JVET-D0117; Joint Video Exploration Team: Geneva, Switzerland, 2016.

16. Zhao, L.; Zhao, X.; Li, X.; Liu, S. CE3-Related: Unification of Angular Intra Prediction for Square and Non-Square Blocks; doc. JVET-L0279; Joint Video Experts Team: Geneva, Switzerland, 2018.

17. Van der Auwera, G.; Heo, J.; Filippov, A. CE3: Summary Report on Intra Prediction and Mode Coding; doc. JVET-L0023; Joint Video Experts Team: Geneva, Switzerland, 2018.

18. Bross, B.; Keydel, P.; Schwarz, H.; Marpe, D.; Wiegand, T.; Zhao, L.; Zhao, X.; Li, X.; Liu, S.; Chang, Y.-J.; et al. CE3: Multiple Reference Line Intra Prediction (Test 1.1.1, 1.1.2, 1.1.3 and 1.1.4); doc. JVET-L0283; Joint Video Experts Team: Geneva, Switzerland, 2018.

19. Helle, P.; Pfaff, J.; Schäfer, M.; Rischke, R.; Schwarz, H.; Marpe, D.; Wiegand, T. Intra picture prediction for video coding with neural networks. In Proceedings of the 2019 Data Compression Conference (DCC), Snowbird, UT, USA, 26–29 March 2019; pp. 448–457.

20. Li, J.; Wang, M.; Zhang, L.; Zhang, K.; Wang, S.; Wang, S.; Gao, W. Sub-sampled cross-component prediction for chroma component coding. In Proceedings of the 2020 Data Compression Conference (DCC), Snowbird, UT, USA, 24–27 March 2020; pp. 203–212.

21. De-Luxán-Hernández, S.; George, V.; Ma, J.; Nguyen, T.; Schwarz, H.; Marpe, D.; Wiegand, T. An intra subpartition coding mode for VVC. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1203–1207.

22. Zhang, L.; Zhang, K.; Liu, H.; Wang, Y.; Zhao, P.; Hong, D. CE4: History-Based Motion Vector Prediction (Test 4.4.7); doc. JVET-L0266; Joint Video Experts Team: Geneva, Switzerland, 2018.

23. Jeong, S.; Park, M.W.; Piao, Y.; Park, M.; Choi, K. CE4: Ultimate Motion Vector Expression (Test 4.5.4); doc. JVET-L0054; Joint Video Experts Team: Geneva, Switzerland, 2018.

24. Chen, H.; Yang, H.; Chen, J. Symmetrical Mode for Biprediction; doc. JVET-J0063; Joint Video Experts Team: Geneva, Switzerland, 2018.

25. Chen, J.; Chien, W.-J.; Hu, N.; Seregin, V.; Karczewicz, M.; Li, X. Enhanced Motion Vector Difference Coding; doc. JVET-D0123; Joint Video Exploration Team: Geneva, Switzerland, 2016.

26. Gao, H.; Esenlik, S.; Alshina, E.; Steinbach, E. Geometric partitioning mode in versatile video coding: Algorithm review and analysis. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 3603–3617.

27. Su, Y.-C.; Chen, C.-Y.; Huang, Y.-W.; Lei, S.-M.; He, Y.; Luo, J.; Xiu, X.; Ye, Y. CE4-Related: Generalized Bi-Prediction Improvements Combined from JVET-L0197 and JVET-L0296; doc. JVET-L0646; Joint Video Experts Team: Geneva, Switzerland, 2018.

28. Chiang, M.-S.; Hsu, C.-W.; Huang, Y.-W.; Lei, S.-M. CE10.1.1: Multi-hypothesis Prediction for Improving AMVP Mode, Skip or Merge Mode, and Intra Mode; doc. JVET-L0100; Joint Video Experts Team: Geneva, Switzerland, 2018.

29. Li, L.; Li, H.; Liu, D.; Li, Z.; Yang, H.; Lin, S.; Wu, F. An efficient four-parameter affine motion model for video coding. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 1934–1948.

30. Chen, H.; Yang, H.; Chen, J. CE4: Separate List for Sub-Block Merge Candidates (Test 4.2.8); doc. JVET-L0369; Joint Video Experts Team: Geneva, Switzerland, 2018.

31. Sethuraman, S. CE9: Results of DMVR Related Tests CE9.2.1 and CE9.2.2; doc. JVET-M0147; Joint Video Experts Team: Geneva, Switzerland, 2019.

32. Alshin, A.; Alshina, E. Bi-directional optical flow for future video codec. In Proceedings of the 2016 Data Compression Conference (DCC), Snowbird, UT, USA, 30 March–1 April 2016.

33. He, Y.; Luo, J. CE4-2.1: Prediction Refinement with Optical Flow for Affine Mode; doc. JVET-O0070; Joint Video Experts Team: Geneva, Switzerland, 2019.

34. Choi, K.; Piao, Y.; Kim, C. CE6: AMT with Reduced Transform Types (Test 1.5); doc. JVET-K0171; Joint Video Experts Team: Geneva, Switzerland, 2018.

35. Zhao, Y.; Yang, H.; Chen, J. CE6: Spatially Varying Transform (Test 6.1.12.1); doc. JVET-K0139; Joint Video Experts Team: Geneva, Switzerland, 2018.

36. Koo, M.; Salehifar, M.; Lim, J.; Kim, S.-H. Low frequency nonseparable transform (LFNST). In Proceedings of the 2019 Picture Coding Symposium (PCS), Ningbo, China, 12–15 November 2019.

37. Schwarz, H.; Nguyen, T.; Marpe, D.; Wiegand, T. CE7: Transform Coefficient Coding and Dependent Quantization (Tests 7.1.2, 7.2.1); doc. JVET-K0071; Joint Video Experts Team: Geneva, Switzerland, 2018.

38. Karczewicz, M.; Hu, N.; Taquet, J.; Chen, C.; Misra, K.; Andersson, K.; Yin, P.; Lu, T.; François, E.; Chen, J. VVC In-Loop Filters. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3907–3925.

39. VVC Reference Software. Available online: https://vcgit.hhi.fraunhofer.de/jvet/VVCSoftware_VTM/-/tags/ (accessed on 1 September 2022).

40. Bossen, F.; Boyce, J.; Suehring, K.; Li, X.; Seregin, V. JVET Common Test Conditions and Software Reference Configurations for SDR Video; doc. JVET-N1010; Joint Video Experts Team: Geneva, Switzerland, 2019.

41. HEVC Reference Software. Available online: https://vcgit.hhi.fraunhofer.de/jct-vc/HM/-/tags/ (accessed on 1 September 2022).

42. Chen, W.; Chen, Y.; Chernyak, R.; Choi, K.; Hashimoto, R.; Huang, Y.; Jang, H.; Liao, R.; Liu, S. JVET AHG Report: Tool Reporting Procedure (AHG13); doc. JVET-T0013; Joint Video Experts Team: Geneva, Switzerland, 2020.

43. Bjøntegaard, G. Improvement of BD-PSNR Model; doc. VCEG-AI11; ITU-T SG16/Q6: Geneva, Switzerland, 2008.

44. Park, S.-H.; Kang, J.-W. Context-based ternary tree decision method in versatile video coding for fast intra coding. IEEE Access 2019, 7, 172597–172605.

45. Park, S.-H.; Kang, J.-W. Fast multi-type tree partitioning for versatile video coding using a lightweight neural network. IEEE Trans. Multimed. 2020, 23, 4388–4399.

46. Zhao, J.; Cui, T.; Zhang, Q. Fast CU partition decision strategy based on human visual system perceptual quality. IEEE Access 2021, 9, 123635–123647.

47. Zhang, Q.; Guo, R.; Jiang, B.; Su, R. Fast CU decision-making algorithm based on DenseNet network for VVC. IEEE Access 2021, 9, 119289–119297.

48. Saldanha, M.; Sanchez, G.; Marcon, C.; Agostini, L. Configurable Fast Block Partitioning for VVC Intra Coding Using Light Gradient Boosting Machine. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3947–3960.

49. Zhang, Q.; Wang, Y.; Huang, L.; Jiang, B. Fast CU partition and intra mode decision method for H.266/VVC. IEEE Access 2020, 8, 117539–117550.

50. Zhang, Q.; Zhao, Y.; Jiang, B.; Huang, L.; Wei, T. Fast CU partition decision method based on texture characteristics for H.266/VVC. IEEE Access 2020, 8, 203516–203524.

51. Zhang, Q.; Zhao, Y.; Jiang, B.; Wu, Q. Fast CU Partition Decision Method Based on Bayes and Improved De-Blocking Filter for H.266/VVC. IEEE Access 2021, 9, 70382–70391.

52. Fan, Y.; Chen, J.; Sun, H.; Katto, J.; Jing, M. A fast QTMT partition decision strategy for VVC intra prediction. IEEE Access 2020, 8, 107900–107911.

53. Yang, H.; Shen, L.; Dong, X.; Ding, Q.; An, P.; Jiang, G. Low complexity CTU partition structure decision and fast intra mode decision for versatile video coding. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1668–1682.

54. Tang, N.; Cao, J.; Liang, F.; Wang, J.; Liu, H.; Wang, X.; Du, X. Fast CTU partition decision algorithm for VVC intra and inter coding. In Proceedings of the IEEE Asia Pacific Conference on Circuits and Systems (APCCAS), Bangkok, Thailand, 11–14 November 2019; pp. 361–364.

55. Liu, Z.; Qian, H.; Zhang, M. A Fast Multi-tree Partition Algorithm Based on Spatial-temporal Correlation for VVC. In Proceedings of the 2022 Data Compression Conference (DCC), Snowbird, UT, USA, 22–25 March 2022.

56. Li, T.; Xu, M.; Tang, R.; Chen, Y.; Xing, Q. DeepQTMT: A deep learning approach for fast QTMT-based CU partition of intra-mode VVC. IEEE Trans. Image Process. 2021, 30, 5377–5390.

57. Chen, M.; Lee, C.; Tsai, Y.; Yang, C.; Yeh, C.; Kau, L.; Chang, C. A fast QTMT partition decision strategy for VVC intra prediction. IEEE Access 2022, 10, 42141–42150.

58. Yeo, W.; Kim, B. CNN-based Fast Split Mode Decision Algorithm for Versatile Video Coding (VVC) Inter Prediction. J. Multimed. Inf. Syst. 2021, 8, 147–158.

59. Pan, Z.; Zhang, P.; Peng, B.; Ling, N.; Lei, J. A CNN-based fast inter coding method for VVC. IEEE Signal Process. Lett. 2021, 28, 1260–1264.

60. Dong, X.; Shen, L.; Yu, M.; Yang, H. Fast intra mode decision algorithm for versatile video coding. IEEE Trans. Multimed. 2021, 24, 400–414.

61. Tan, E.; Aramvith, S.; Onoye, T. Low complexity mode selection for H.266/VVC intra coding. ICT Express 2021, 8, 83–90.

62. Park, J.; Kim, B.; Jeon, B. Fast VVC Intra Subpartition based on Position of Reference Pixels. In Proceedings of the 2022 International Conference on Electronics, Information, and Communication (ICEIC), Jeju, Republic of Korea, 6–9 February 2022.

63. Tsang, S.H.; Kwong, N.W.; Chan, Y.L. FastSCCNet: Fast Mode Decision in VVC Screen Content Coding via Fully Convolutional Network. In Proceedings of the 2020 IEEE International Conference on Visual Communications and Image Processing (VCIP), Macau, China, 4 December 2020; pp. 177–180.

64. Park, S.-H.; Kang, J.-W. Fast affine motion estimation for versatile video coding (VVC) encoding. IEEE Access 2019, 7, 158075–158084.

65. Zhang, M.; Deng, S.; Liu, Z. A fast geometric prediction merge mode decision algorithm based on CU gradient for VVC. In Proceedings of the 2022 Data Compression Conference (DCC), Snowbird, UT, USA, 22–25 March 2022.

66. Guan, X.; Sun, X. VVC Fast ME Algorithm Based on Spatial Texture Features and Time Correlation. In Proceedings of the 2021 International Conference on Digital Society and Intelligent Systems (DSInS), Chengdu, China, 3–4 December 2021.

67. Fu, T.; Zhang, H.; Mu, F.; Chen, H. Two-stage fast multiple transform selection algorithm for VVC intra coding. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 61–66.

68. Choi, K.; Le, T.; Choi, Y.; Lee, J. Low-Complexity Intra Coding in Versatile Video Coding. IEEE Trans. Consum. Electron. 2022, 68, 119–126.

69. Kammoun, A.; Hamidouche, W.; Philippe, P.; Déforges, O.; Belghith, F.; Masmoudi, N.; Jean-François, N. Forward-inverse 2D hardware implementation of approximate transform core for the VVC standard. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4340–4354.

70. Hamidouche, W.; Philippe, P.; Fezza, S.; Haddou, M.; Pescador, F.; Menard, D. Hardware-Friendly Multiple Transform Selection Module for the VVC Standard. IEEE Trans. Consum. Electron. 2022, 68, 96–106.

71. Wieckowski, A.; Brandenburg, J.; Hinz, T.; Bartnik, C.; George, V.; Hege, G.; Helmrich, C.; Henkel, A.; Lehmann, C.; Stoffers, C.; et al. VVenC: An open and optimized VVC encoder implementation. In Proceedings of the 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shenzhen, China, 5–9 July 2021.

72. Wieckowski, A.; Bross, B.; Marpe, D. Fast partitioning strategies for VVC and their implementation in an Open Optimized Encoder. In Proceedings of the 2021 Picture Coding Symposium (PCS), Bristol, UK, 29 June–2 July 2021.

73. Brandenburg, J.; Wieckowski, A.; Henkel, A.; Bross, B.; Marpe, D. Pareto-Optimized Coding Configurations for VVenC, a Fast and Efficient VVC Encoder. In Proceedings of the 2021 IEEE 23rd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 6–8 October 2021.

| Paper | Tech Area | Key Feature | Anchor | Scenario | TS (%) | BDBR (%) |
|---|---|---|---|---|---|---|
| [44] | Intra partition, Fast split mode decision | Bayesian probability approach, Adaptive TT skipping method | VTM4.0 | AI | −34 | 1.02 |
| [45] | Intra partition, Fast split mode decision | CNN model, Adaptive TT skipping method | VTM4.0 | AI | −27 | 0.44 |
| [46] | Intra partition, Fast split mode decision | JND model, Adaptive split mode skipping method | VTM7.0 | AI | −48 | 0.79 |
| [47] | Intra partition, Fast split mode decision | CNN model, Split mode estimation | VTM10.0 | AI | −46 | 1.86 |
| [48] | Intra partition, Fast split mode decision | CNN model, Split mode estimation | VTM10.0 | AI | −54 | 1.42 |
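
The time columns in these tables (labelled T (%) or TS (%)) report how the encoding time of each fast method changes relative to its VTM or HM anchor, with negative values meaning a reduction. The sketch below assumes the common per-sequence definition TS = (T_test − T_anchor) / T_anchor × 100; the cited papers may average over sequences differently (for example arithmetic versus geometric mean), so it illustrates the metric rather than reproducing any reported figure. The function names and timings are hypothetical.

```python
from typing import List, Tuple


def time_saving_percent(t_anchor: float, t_test: float) -> float:
    """Encoding-time change of the test encoder relative to the anchor, in percent.

    Negative values mean the test encoder finished faster than the anchor."""
    if t_anchor <= 0:
        raise ValueError("anchor encoding time must be positive")
    return (t_test - t_anchor) / t_anchor * 100.0


def speedup_factor(ts_percent: float) -> float:
    """Convert a time change in percent into an anchor-to-test speed ratio."""
    remaining = 1.0 + ts_percent / 100.0  # fraction of the anchor time still spent
    if remaining <= 0:
        raise ValueError("time change must be greater than -100%")
    return 1.0 / remaining


# Hypothetical (anchor, test) encoding times in seconds for two sequences.
times: List[Tuple[float, float]] = [(1000.0, 460.0), (800.0, 440.0)]
per_sequence = [time_saving_percent(a, t) for a, t in times]
average = sum(per_sequence) / len(per_sequence)  # arithmetic mean, one common choice
print([round(v, 1) for v in per_sequence], round(average, 1), round(speedup_factor(average), 2))
```

Under this definition a TS of −50% corresponds to a 2× speedup, which is a convenient way to compare the percentage entries with the speedup-style figures in the software-implementation rows.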

| Paper | Tech Area | Key Feature | Anchor | Scenario | TS (%) | BDBR (%) |
|---|---|---|---|---|---|---|
| [49] | Intra partition, Fast depth decision, Fast split mode decision | Forest classifier model, Canny operator-based texture analysis | VTM4.0 | AI | −54 | 0.93 |
| [50] | Intra partition, Fast depth decision, Deblocking filter | JRMD and intra-mode analysis, SAD-based texture analysis | VTM7.0 | AI | −48.58 | 0.91 |
| [51] | Intra partition, Fast depth decision, Fast split mode decision | JRMD-based depth analysis, DBF texture information analysis | VTM11.0 | AI | −56.08 | 1.3 |
| [52] | Intra partition, Fast depth decision, Fast split mode decision, Intra-mode selection | SAD and Sobel operator-based texture analysis | VTM7.0 | AI | −49.27 | 1.63 |
| [53] | Intra partition, Inter partition, Fast depth decision, Fast split mode decision | Texture information analysis, Trained model, Gradient descent-based search | VTM2.0 | AI | −62 | 1.93 |
| [54] | Inter partition, Fast depth decision, Fast split mode decision | Canny operator-based texture analysis, Temporal correlation analysis | VTM4.0 | AI, RA | −36, −31 | 0.71, 1.34 |
| [55] | Intra partition, Fast depth decision | Temporal correlation analysis | VTM11.2 | RA | −22 | 1.34 |
| [56] | Intra partition, Fast depth decision | CNN model, Split mode and depth estimation | VTM7.0 | AI | −46 | 1.32 |
| [57] | Inter partition, Inter-mode decision, Fast depth decision, Fast split mode decision | Forest classifier model, Human visual system analysis | VTM7.0 | AI | −41 | 1.14 |
| [58] | Inter partition, Inter-mode decision, Fast depth decision, Fast split mode decision | CNN model, Split mode and depth estimation | VTM11.0 | RA | −12 | 1.01 |
| [59] | Intra partition, Fast depth decision, Fast split mode decision | CNN model, Split mode and depth estimation | VTM6.0 | RA | −31 | 3.18 |

| Paper | Tech Area | Key Feature | Anchor | Scenario | TS (%) | BDBR (%) |
|---|---|---|---|---|---|---|
| [60] | Intra-prediction, Fast depth decision | Learning-based classifier, Intra-prediction estimation | VTM10.0 | AI | −53 | 0.93 |
| [61] | Intra-mode | SATD-based intra-mode estimation | VTM5.0 | AI | −21 | 0.88 |
| [62] | Intra-prediction, ISP | ISP and MRL analysis | VTM14.0 | AI | −4 | 0.04 |
| [63] | Intra-prediction, IBC, PLT | CNN model, Local block analysis | VTM9.2 | AI | −30 | 2.42 |
| [64] | Inter-prediction, AME | Statistical analysis | VTM3.0 | RA | −37 | 0.1 |
| [65] | Inter-prediction, GPM | Sobel operator-based analysis, Direction analysis | VTM8.0 | RA | −14 | 0.14 |
| [66] | Inter-prediction, AME | Prewitt operator-based analysis, Histogram analysis | VTM11.0 | RA | −15.5 | 0.55 |
| [67] | Transform, MTS | DCT cost analysis | VTM3.0 | AI | −23 | 0.16 |
| [68] | Framework | Down/upsampling, Tool on/off analysis | VTM12.0 | AI | −69 | −4.6 |
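
Gradient operators recur in the key features above: Sobel in [52] and [65], Canny in [49] and [54], and Prewitt in [66]. The sketch below shows only the general idea of gradient-based split pruning for a CU. The thresholds, the split-mode labels, and the rule that maps the dominant gradient direction to skipped split modes are hypothetical choices made for illustration and are not taken from any of the cited papers.

```python
from typing import List, Set, Tuple

# Hypothetical tuning constants; each cited method derives its own decision rules.
HOMOGENEOUS_THRESHOLD = 8.0  # mean |gradient| per sample below which a CU counts as flat
DIRECTION_RATIO = 2.0        # how strongly one gradient direction must dominate

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to change along x
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to change along y


def sobel_energy(block: List[List[int]]) -> Tuple[int, int, int]:
    """Sum of |Gx| and |Gy| over the interior samples of a luma block, plus the sample count."""
    h, w = len(block), len(block[0])
    gx_sum = gy_sum = 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    s = block[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * s
                    gy += SOBEL_Y[dy][dx] * s
            gx_sum += abs(gx)
            gy_sum += abs(gy)
    return gx_sum, gy_sum, max(h - 2, 0) * max(w - 2, 0)


def candidate_splits(block: List[List[int]]) -> Set[str]:
    """Split modes left for full RD evaluation under a simple texture rule."""
    gx_sum, gy_sum, n = sobel_energy(block)
    if n == 0 or (gx_sum + gy_sum) / n < HOMOGENEOUS_THRESHOLD:
        return {"NO_SPLIT"}  # flat block: terminate partitioning early
    modes = {"NO_SPLIT", "QT", "HBT", "VBT", "HTT", "VTT"}
    if gx_sum > DIRECTION_RATIO * gy_sum:
        modes -= {"HBT", "HTT"}  # vertical structures dominate: keep vertical splits
    elif gy_sum > DIRECTION_RATIO * gx_sum:
        modes -= {"VBT", "VTT"}  # horizontal structures dominate: keep horizontal splits
    return modes


# Hypothetical 8x8 CU with a vertical edge in the middle.
cu = [[0 if x < 4 else 255 for x in range(8)] for _ in range(8)]
print(sorted(candidate_splits(cu)))
```

Skipping a mode here only removes it from the rate-distortion search, which is where the time saving comes from; a wrong skip costs coding efficiency, which is the time-versus-BDBR trade-off visible in the tables.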

| Paper | Tech Area | Key Feature | Anchor | Scenario | Performance | BDBR (%) |
|---|---|---|---|---|---|---|
| [69] | Transform, Hardware implementation | Low-cost DCT-II implementation, Approximate DST-VII, DCT-VIII | VTM3.0 | AI | 12% of ALMs, 22% of registers, and 30% of DSP blocks | 0.15 |
| [70] | Transform, Hardware implementation | Low-cost DCT-II implementation, Approximate DST-VII, DCT-VIII | VTM3.0 | AI, RA | 5.37%, 68%, 84%, and 92% of multiplication savings with respect to transform sizes N = 8, 16, 32, and 64 | 0.09, 0.01 |
| [71] | Software implementation | Five predefined presets offering different encoding speed/compression efficiency trade-offs | VTM12.0 | RA | 30× faster | 12 |
| [72] | Software implementation, Partition | Split mode and depth estimation | VTM12.0 | RA | 42% encoding speedup | 1.3 |
| [73] | Software implementation, Tool combination | Pareto set, Pre-grouping tools and options | HM16.22 | RA | 25% encoding speedup | −38 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Choi, K. A Study on Fast and Low-Complexity Algorithms for Versatile Video Coding. Sensors 2022, 22, 8990. https://doi.org/10.3390/s22228990
