A Combined mmWave Tracking and Classification Framework Using a Camera for Labeling and Supervised Learning

Abstract

1. Introduction

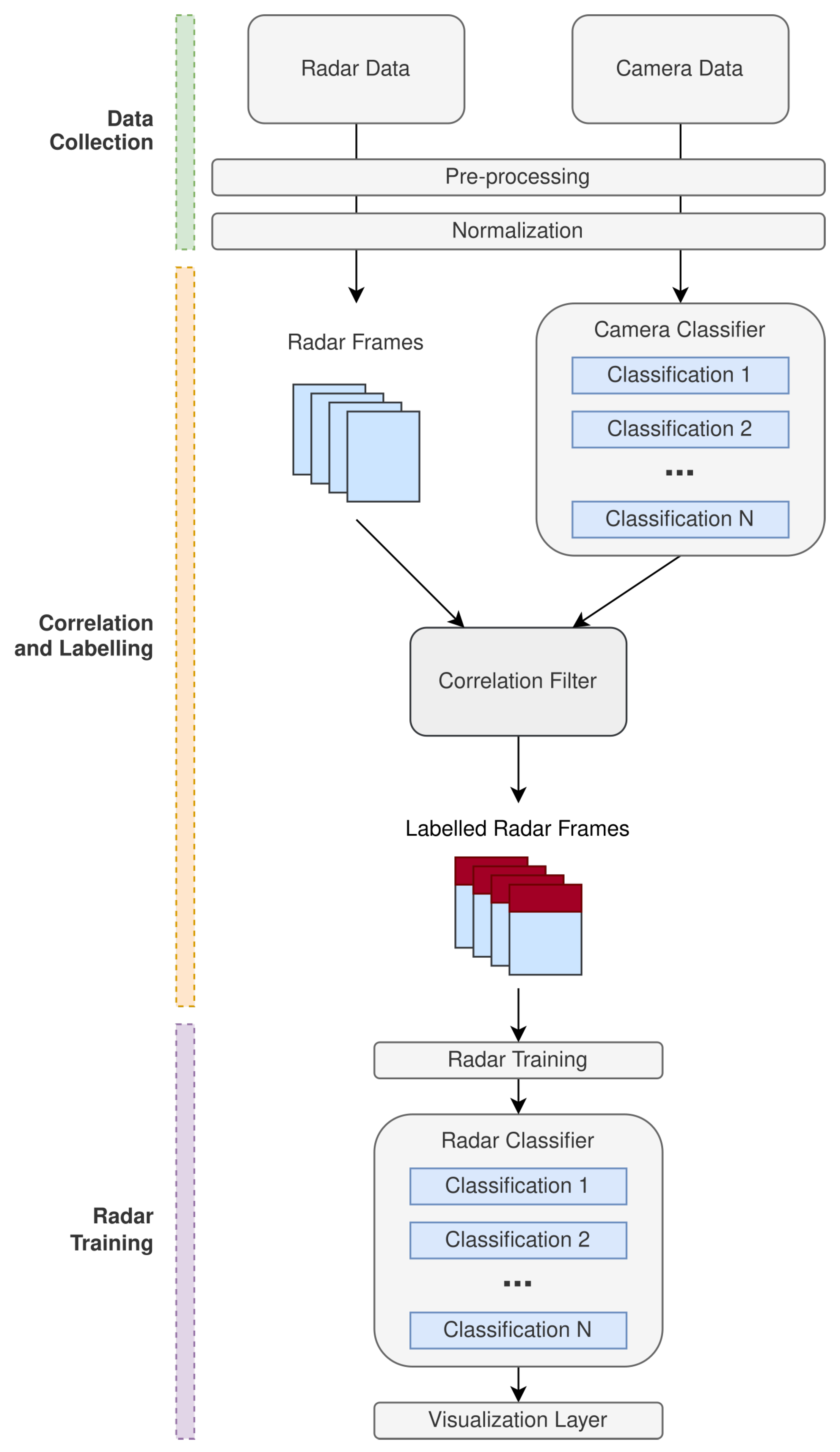

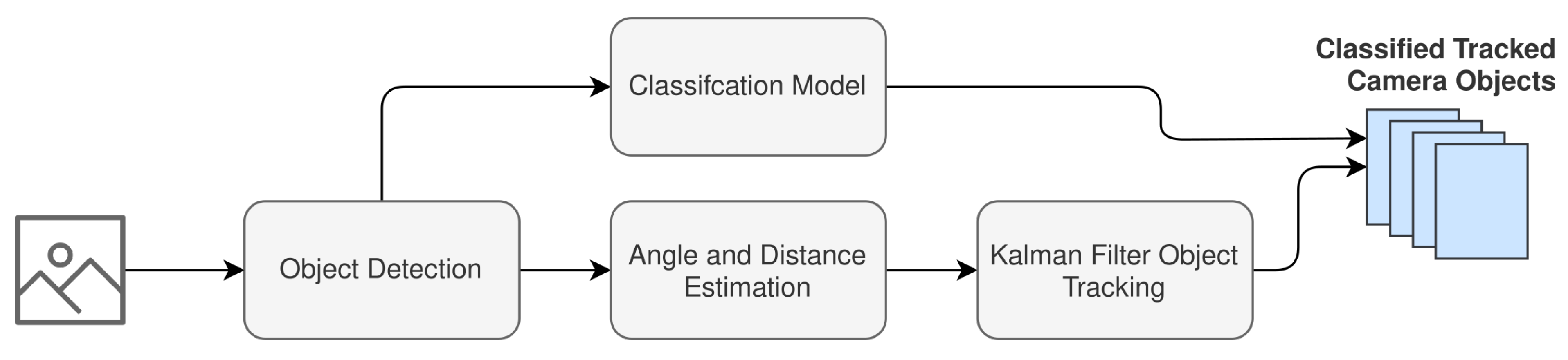

- System architecture.

- Fusion depth.

- Fusion process.

- Fusion algorithm.

- The radar-training-with-camera-labeling framework that we present is general by design: it is not tied to a specific classification problem in either the radar or the camera domain. To our knowledge, this task-agnostic framework is the first of its kind for mmWave radar. Existing approaches are specific either to object detection or to the particular classification problem their authors set out to solve.

- The framework presented in Section 2.1 is the first of its kind to propose an approach for every stage of the processing chain needed to obtain a radar classifier. Existing approaches typically present only a means of labeling camera data, usually specific to the task at hand, and of applying those labels to raw or pre-processed radar data. Our framework also meets that objective, but it goes further and shows how the labeled radar data can be used in a teacher-student approach to train a standalone radar classifier.

- To demonstrate the feasibility of the proposed framework, we also present a practical implementation of it. In this example implementation, a pre-trained camera classifier labels raw mmWave data for human activity recognition while mmWave multiple-object tracking is performed. The correlation technique we devised is unique: it is a looser form of camera-radar calibration that removes the need for tight coupling between raw radar points and points in the vision domain.
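The idea of a loose correlation between radar tracks and camera detections can be illustrated with a minimal sketch. It assumes, purely for illustration, that the camera and radar are co-located and share a boresight, that the camera behaves as an ideal pinhole with a known horizontal field of view, and that association uses only the bearing angle, with a greedy nearest-neighbor match inside an angular gate. The names `RadarTrack`, `CameraDetection` and `correlate`, and all default parameter values, are hypothetical; this is not the implementation used in this work.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarTrack:
    track_id: int
    x: float  # lateral offset in metres (positive to the right of boresight)
    y: float  # distance along boresight in metres


@dataclass
class CameraDetection:
    label: str  # activity label from the pre-trained camera classifier
    cx: float   # bounding-box centre column in pixels


def camera_bearing_deg(cx: float, image_width: int, hfov_deg: float) -> float:
    """Bearing of a pixel column under an ideal pinhole model (0 deg = image centre)."""
    focal_px = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return math.degrees(math.atan2(cx - image_width / 2, focal_px))


def radar_bearing_deg(track: RadarTrack) -> float:
    """Bearing of a radar track relative to boresight (0 deg = straight ahead)."""
    return math.degrees(math.atan2(track.x, track.y))


def correlate(tracks, detections, image_width=640, hfov_deg=60.0, gate_deg=5.0):
    """Greedily pair radar tracks with camera detections by bearing difference.

    Returns {track_id: camera_label}; tracks with no detection inside the
    angular gate stay unlabeled.
    """
    candidates = []
    for track in tracks:
        for j, det in enumerate(detections):
            diff = abs(radar_bearing_deg(track)
                       - camera_bearing_deg(det.cx, image_width, hfov_deg))
            if diff <= gate_deg:
                candidates.append((diff, track.track_id, j))
    candidates.sort()  # closest bearing matches first
    used_tracks, used_dets, labels = set(), set(), {}
    for _, track_id, j in candidates:
        if track_id in used_tracks or j in used_dets:
            continue  # each track and each detection is used at most once
        used_tracks.add(track_id)
        used_dets.add(j)
        labels[track_id] = detections[j].label
    return labels


if __name__ == "__main__":
    tracks = [RadarTrack(1, 0.0, 3.0), RadarTrack(2, 1.0, 3.0)]
    dets = [CameraDetection("walking", 505.0), CameraDetection("running", 320.0)]
    print(correlate(tracks, dets))  # {1: 'running', 2: 'walking'}
```

Matching on bearing alone is what keeps the coupling loose in this sketch: no per-point projection of radar returns into the image is required, only an approximate angular agreement between a track and a detection.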

1.1. Review of Related Literature

- Radar point filtering: noise and undesirable data are identified and filtered out of the radar data. The work of [17] presents a calibration approach that filters out undesired data points based on their speed and angular velocity.

- Error calibration: the processes used to overcome errors in the calibrated data. Many methods have been devised to address calibration error; one approach, presented in [18], uses an Extended Kalman Filter to model the measurement errors present in the independent sensors.
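As a minimal sketch of speed-based radar point filtering in the spirit of the first item above: the `RadarPoint` structure and the thresholds `v_min` and `v_max` are hypothetical, and the angular-velocity criterion mentioned for [17] is deliberately omitted because it requires associating points across frames.

```python
from dataclasses import dataclass


@dataclass
class RadarPoint:
    x: float        # lateral position in metres
    y: float        # range along boresight in metres
    doppler: float  # radial velocity in m/s (sign gives direction)


def filter_by_speed(points, v_min=0.1, v_max=10.0):
    """Keep points whose radial speed lies within [v_min, v_max] m/s.

    Points slower than v_min are treated as static clutter; points faster
    than v_max are treated as implausible for the targets of interest.
    """
    return [p for p in points if v_min <= abs(p.doppler) <= v_max]


if __name__ == "__main__":
    frame = [RadarPoint(0.0, 1.0, 0.0),    # static clutter
             RadarPoint(0.5, 2.0, -1.5),   # person moving towards the radar
             RadarPoint(1.0, 3.0, 25.0),   # implausibly fast for a person
             RadarPoint(0.2, 4.0, 0.5)]    # slow walker
    print(len(filter_by_speed(frame)))  # 2
```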

2. Materials and Methods

2.1. Radar Training with Camera Labeling and a Supervision Methodology

2.1.1. Problem Space

2.1.2. Proposed Approach

- Data collection.

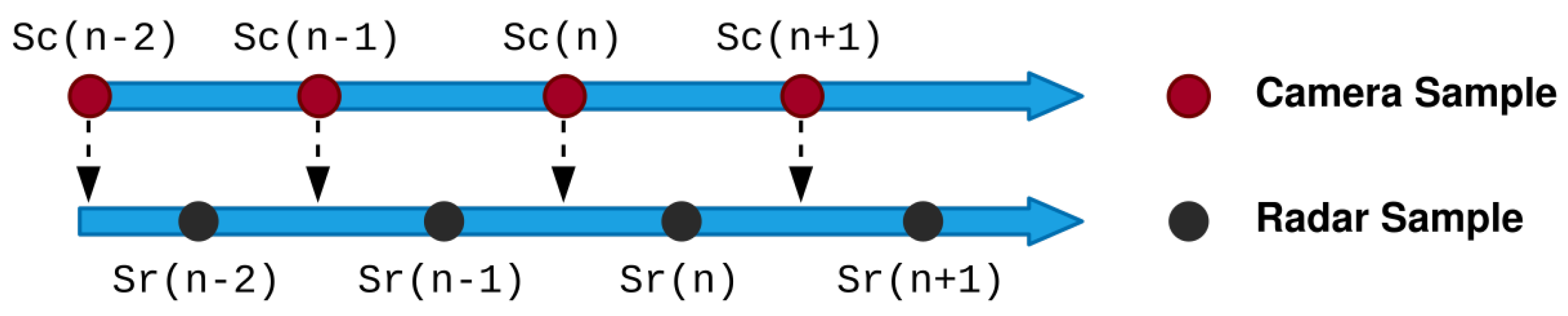

- Correlation and labeling.

- Radar training.
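The three stages above can be sketched end to end under several illustrative assumptions: each radar track yields one feature vector per frame, the correlation stage has already produced a per-frame mapping from track to camera label, training samples are fixed-length windows labeled by majority vote of the camera (teacher) labels, and a nearest-centroid classifier stands in for the radar (student) network. All function names and the window length are hypothetical.

```python
from collections import Counter, defaultdict


def build_dataset(frames, window=3):
    """Turn teacher (camera) labels into a radar training set.

    frames: iterable of (radar_features, camera_labels) per time step, where
      radar_features maps track_id -> feature vector (list of floats) and
      camera_labels maps track_id -> label produced by the camera classifier.
    Returns (X, y): one flattened window of radar features per sample, labeled
    with the most common camera label in that window.
    """
    feats, labels = defaultdict(list), defaultdict(list)
    X, y = [], []
    for radar_features, camera_labels in frames:
        for track_id, vec in radar_features.items():
            if track_id not in camera_labels:
                # No teacher label: drop the partial window so windows stay contiguous.
                feats[track_id].clear()
                labels[track_id].clear()
                continue
            feats[track_id].append(vec)
            labels[track_id].append(camera_labels[track_id])
            if len(feats[track_id]) == window:
                X.append([v for frame_vec in feats[track_id] for v in frame_vec])
                y.append(Counter(labels[track_id]).most_common(1)[0][0])
                feats[track_id].clear()
                labels[track_id].clear()
    return X, y


def train_nearest_centroid(X, y):
    """Stand-in 'student': one mean feature vector per class."""
    sums, counts = {}, Counter(y)
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, x):
    """Radar-only inference: return the class whose centroid is nearest to x."""
    return min(centroids,
               key=lambda c: sum((a - b) ** 2 for a, b in zip(centroids[c], x)))
```

Once trained, `predict` needs only radar features, which is the property the framework relies on: the camera is required during data collection and labeling, not at inference time.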

2.2. System Design and Implementation

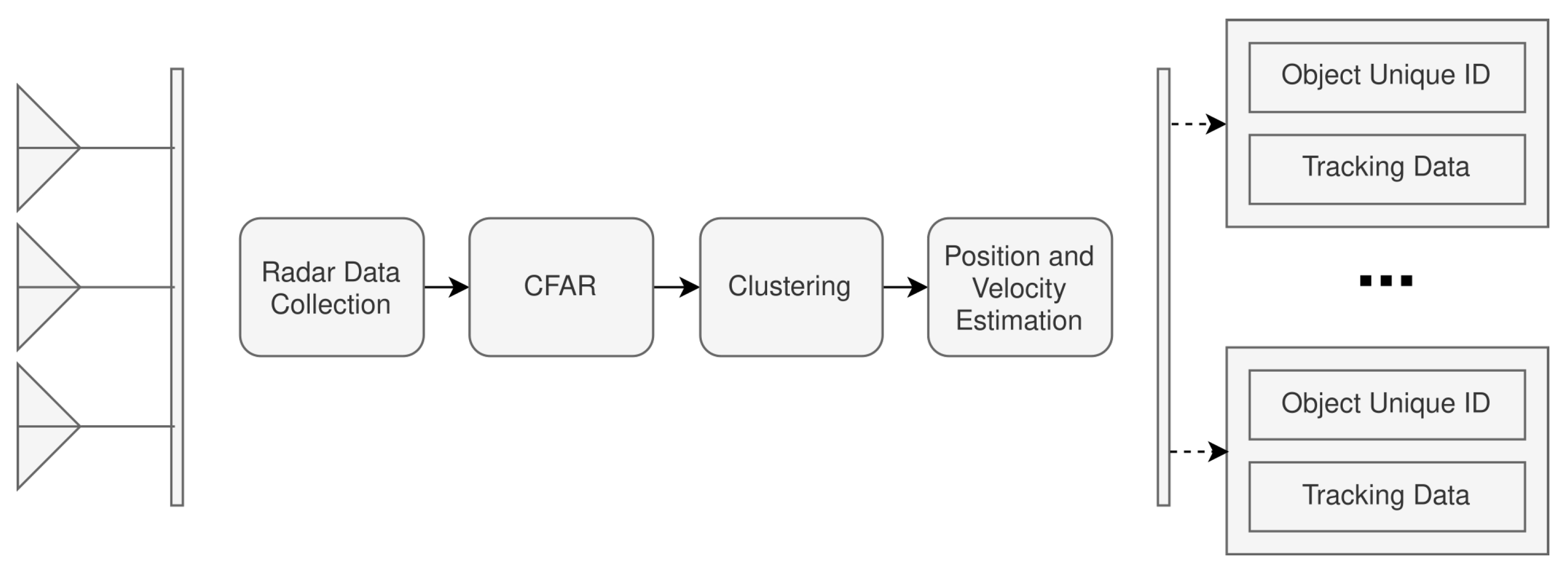

- Radar Pipeline.

- Camera Pipeline.

- Fused Pipeline.

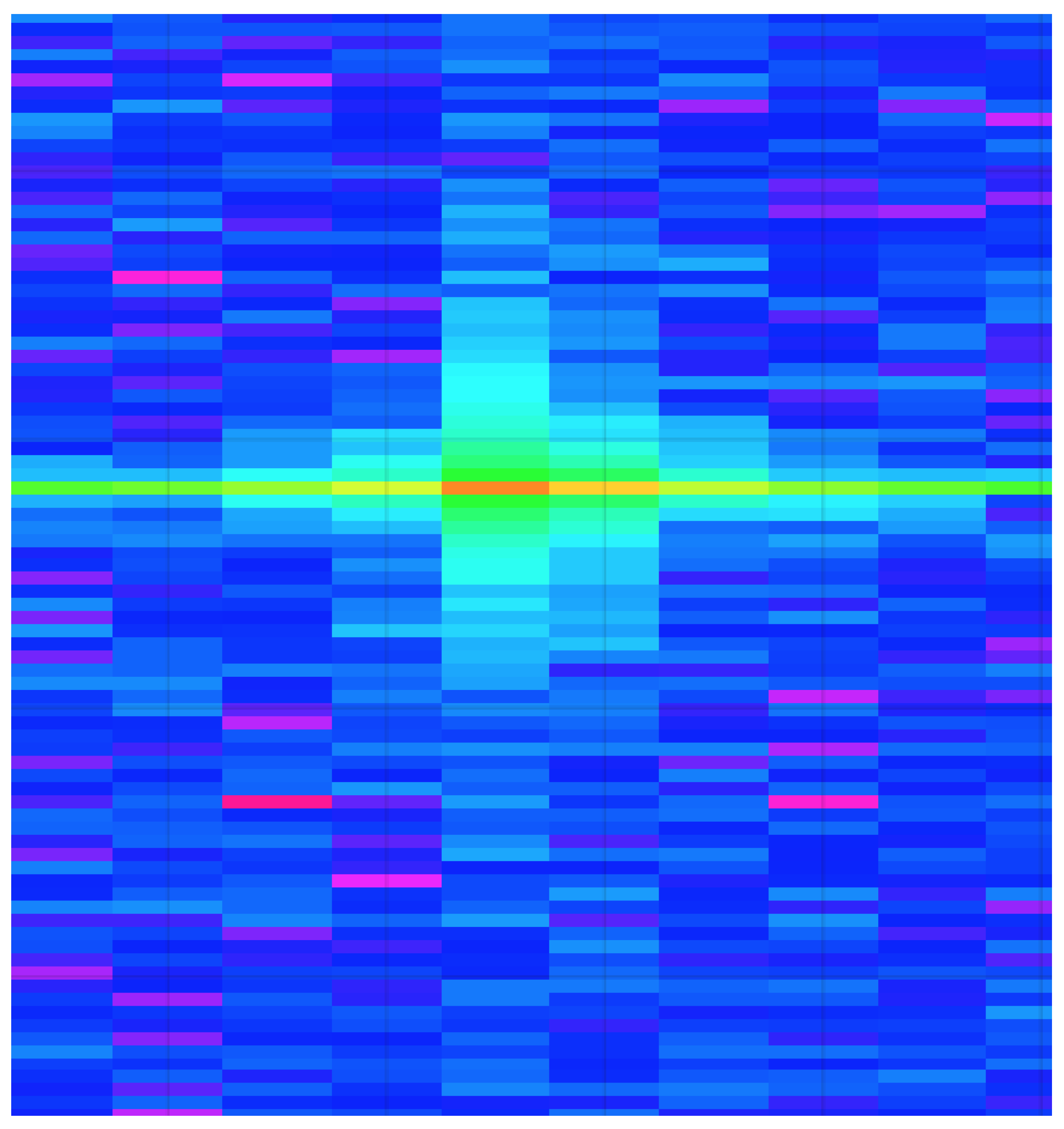

2.2.1. Radar Pipeline

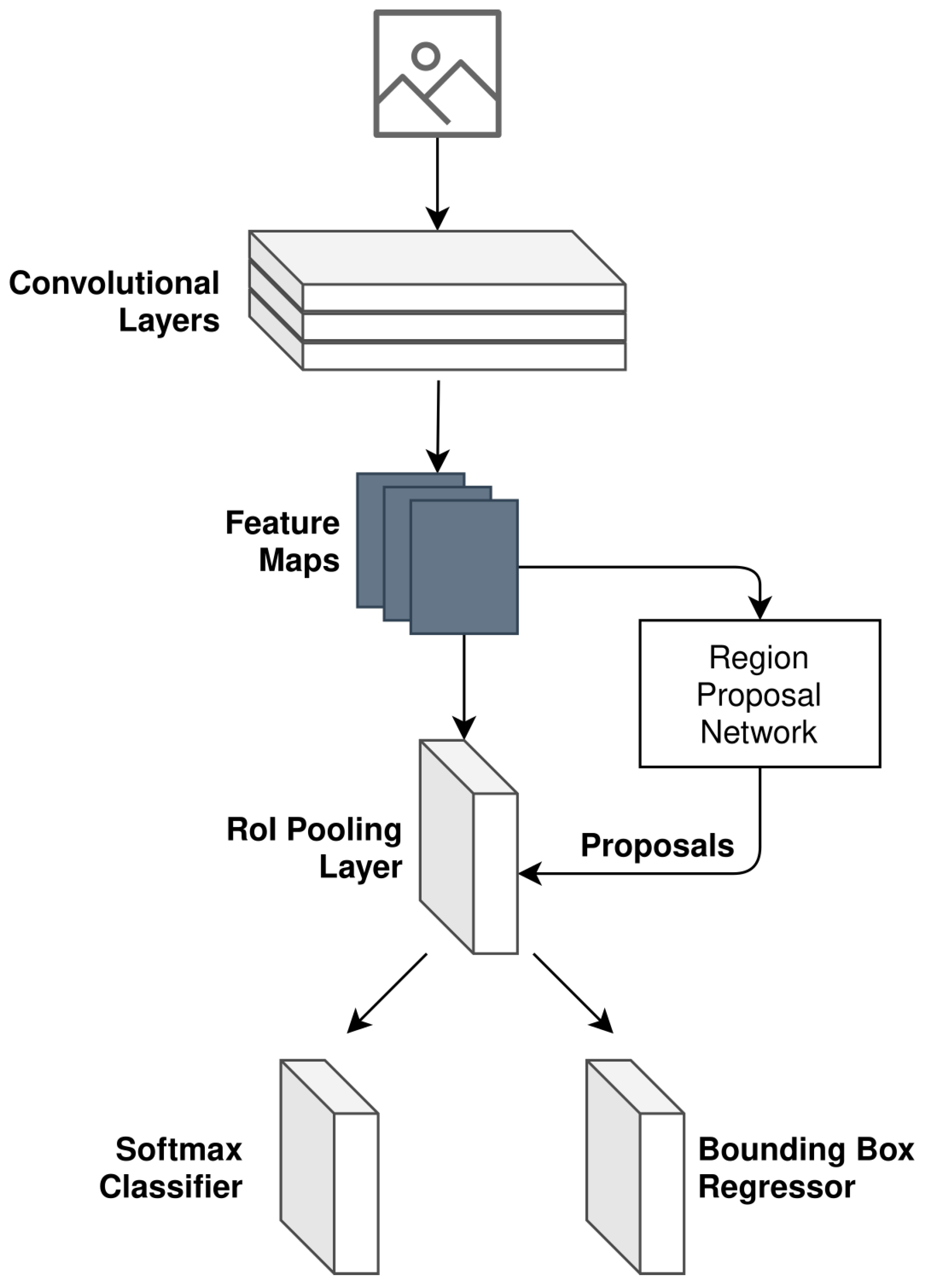

2.2.2. Camera Pipeline

- Formulate a 2D skeleton for each detected object in the field of view.

- Classify the human activity that is occurring using the 2D skeleton.

- Walking.

- Running.

- Falling.
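Before a learned skeleton classifier is available, the second camera step could be prototyped with a simple rule. The following is a toy sketch, not the classifier used in this work: it assumes one hip-centre keypoint per frame from the 2D skeleton, normalizes displacement by skeleton height so that speeds are in body-heights per second, uses illustrative thresholds, and returns "unknown" when no rule fires.

```python
def classify_skeleton_sequence(hips, heights, fps=30.0,
                               walk_thresh=0.3, run_thresh=1.2, fall_thresh=1.0):
    """Toy rule-based activity classifier over a short 2D-skeleton sequence.

    hips: per-frame (x, y) hip-centre pixel coordinates (image y grows downward).
    heights: per-frame skeleton height in pixels, used to normalise speeds.
    Speeds are in body-heights per second; all thresholds are illustrative.
    """
    if len(hips) < 2 or len(hips) != len(heights):
        return "unknown"
    duration = (len(hips) - 1) / fps                    # seconds spanned by the sequence
    scale = sum(heights) / len(heights)                 # mean skeleton height in pixels
    vx = (hips[-1][0] - hips[0][0]) / scale / duration
    vy = (hips[-1][1] - hips[0][1]) / scale / duration  # positive = moving down
    if vy > fall_thresh:
        return "falling"
    if abs(vx) > run_thresh:
        return "running"
    if abs(vx) > walk_thresh:
        return "walking"
    return "unknown"
```

For example, a hip centre that moves 100 px sideways over one second at 30 fps, for a 200 px tall skeleton, gives 0.5 body-heights per second and is labeled "walking".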

2.2.3. Fused Pipeline

3. Results

- No targets in the field of view.

- A single target in the field of view.

- Multiple targets in the field of view.

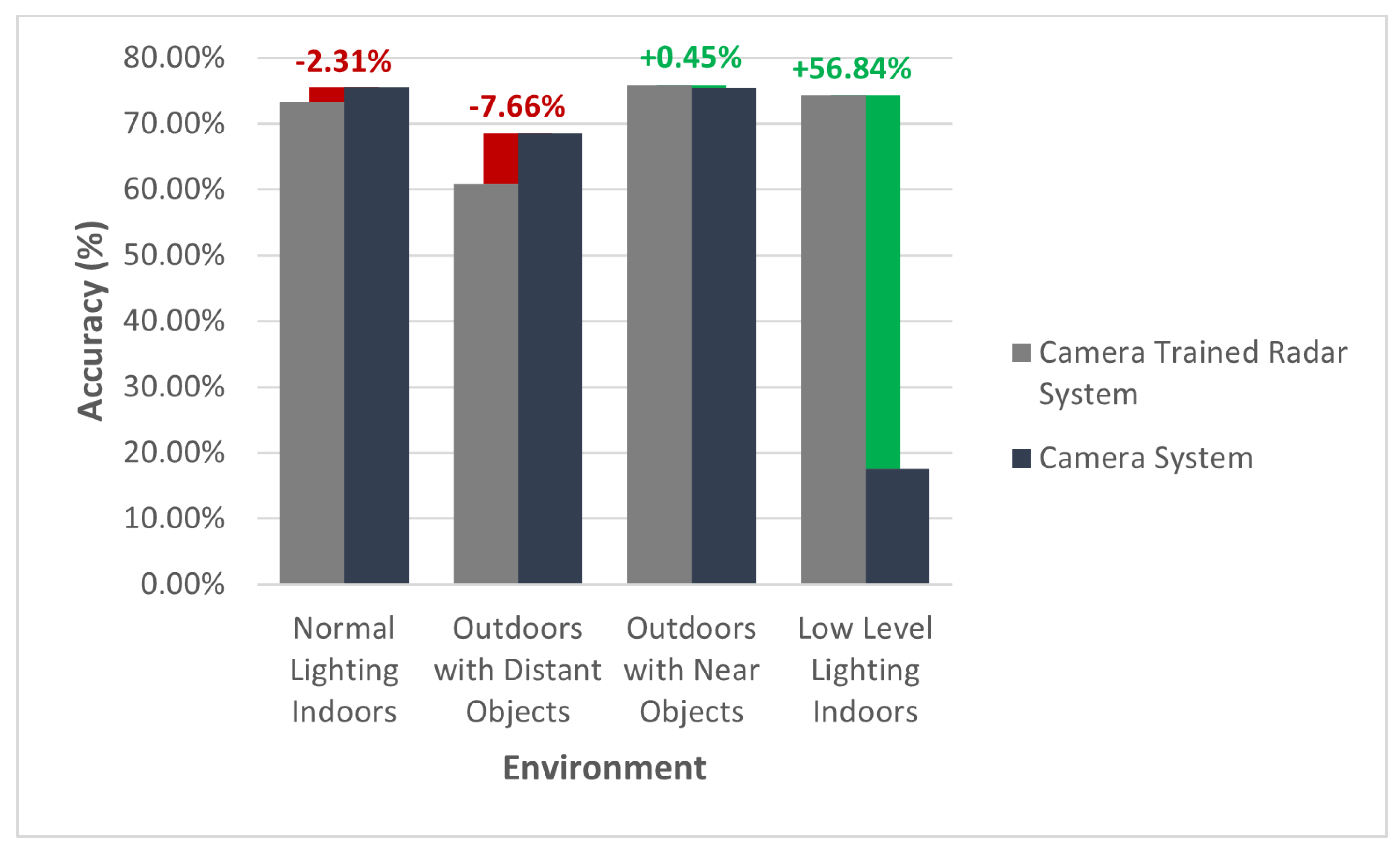

- Camera-Trained Radar Classifier: a radar classifier trained on camera-labeled data using the framework proposed in this paper.

- Trained Standalone Camera System: the camera classifier used to label the frames on which the camera-trained standalone radar classifier is trained.

- Manually Labeled Radar Classifier: a radar classifier of the same design as the camera-trained standalone radar classifier, but trained on manually labeled radar data.
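Comparing these three systems requires a common metric. A minimal sketch, assuming each system outputs one activity label per evaluation sample and that a manually labeled ground truth is available for the same samples; the helper names are hypothetical.

```python
from collections import Counter


def accuracy(predictions, ground_truth):
    """Percentage of samples whose predicted label matches the ground truth."""
    if not ground_truth or len(predictions) != len(ground_truth):
        raise ValueError("need non-empty, equal-length label sequences")
    correct = sum(p == g for p, g in zip(predictions, ground_truth))
    return 100.0 * correct / len(ground_truth)


def confusion_counts(predictions, ground_truth):
    """Counter keyed by (true_label, predicted_label)."""
    return Counter(zip(ground_truth, predictions))


def compare_systems(system_predictions, ground_truth):
    """Accuracy for each named system evaluated on the same ground truth."""
    return {name: accuracy(preds, ground_truth)
            for name, preds in system_predictions.items()}
```

The confusion counts show which activities a system mixes up (for example, walking predicted as running), which a single accuracy figure hides.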

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADC | Analog-to-Digital Converter |
| ANN | Artificial Neural Network |
| CFAR | Constant False Alarm Rate |
| CNN | Convolutional Neural Network |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |
| FFT | Fast Fourier Transform |
| FMCW | Frequency-Modulated Continuous-Wave |
| mmWave | Millimeter Wave |
| Msps | Mega-samples per second |
| R-CNN | Region-based Convolutional Neural Network |

References

- Wang, B.; Qi, Z.; Ma, G.; Cheng, S. Vehicle detection based on information fusion of radar and machine vision. Automot. Eng. 2015, 37, 674–678.

- Zhou, Y.; Dong, Y.; Hou, F.; Wu, J. Review on Millimeter-Wave Radar and Camera Fusion Technology. Sustainability 2022, 14, 5114.

- Alessandretti, G.; Broggi, A.; Cerri, P. Vehicle and Guard Rail Detection Using Radar and Vision Data Fusion. IEEE Trans. Intell. Transp. Syst. 2007, 8, 95–105.

- Zhao, L. Multi-sensor information fusion technology and its applications. Infrared 2021, 42, 21.

- Cao, C.; Gao, J.; Liu, Y.C. Research on Space Fusion Method of Millimeter Wave Radar and Vision Sensor. Procedia Comput. Sci. 2020, 166, 68–72.

- Song, C.; Son, G.; Kim, H.; Gu, D.; Lee, J.H.; Kim, Y. A Novel Method of Spatial Calibration for Camera and 2D Radar Based on Registration. In Proceedings of the 2017 6th IIAI International Congress on Advanced Applied Informatics (IIAI-AAI), Hamamatsu, Japan, 9–13 July 2017; pp. 1055–1056.

- Junlong, H. Unified Calibration Method for Millimeter-Wave Radar and Machine Vision. Int. J. Eng. Res. Technol. 2018, V7, 10.

- Liu, X.; Cai, Z. Advanced obstacles detection and tracking by fusing millimeter wave radar and image sensor data. In Proceedings of the ICCAS 2010, Gyeonggi-do, Korea, 27–30 October 2010; pp. 1115–1120.

- Wang, T.; Xin, J.; Zheng, N. A Method Integrating Human Visual Attention and Consciousness of Radar and Vision Fusion for Autonomous Vehicle Navigation. In Proceedings of the 2011 IEEE Fourth International Conference on Space Mission Challenges for Information Technology, Palo Alto, CA, USA, 2–4 August 2011; pp. 192–197.

- Ji, Z.; Prokhorov, D. Radar-vision fusion for object classification. In Proceedings of the 2008 11th International Conference on Information Fusion, Cologne, Germany, 30 June–3 July 2008; pp. 1–7.

- Zhang, X.; Zhou, M.; Qiu, P.; Huang, Y.; Li, J. Radar and vision fusion for the real-time obstacle detection and identification. Ind. Robot. Int. J. Robot. Res. Appl. 2019, 46, 391–395.

- Lim, T.Y.; Markowitz, S.A.; Do, M.N. RaDICaL: A Synchronized FMCW Radar, Depth, IMU and RGB Camera Data Dataset With Low-Level FMCW Radar Signals. IEEE J. Sel. Top. Signal Process. 2021, 15, 941–953.

- Wei, Z.; Zhang, F.; Chang, S.; Liu, Y.; Wu, H.; Feng, Z. MmWave Radar and Vision Fusion for Object Detection in Autonomous Driving: A Review. Sensors 2022, 22, 2542.

- Kato, T.; Ninomiya, Y.; Masaki, I. An obstacle detection method by fusion of radar and motion stereo. IEEE Trans. Intell. Transp. Syst. 2002, 3, 182–188.

- Guo, X.p.; Du, J.s.; Gao, J.; Wang, W. Pedestrian Detection Based on Fusion of Millimeter Wave Radar and Vision. In Proceedings of the 2018 International Conference on Artificial Intelligence and Pattern Recognition, Beijing, China, 18–20 August 2018; pp. 38–42.

- Streubel, R.; Yang, B. Fusion of stereo camera and MIMO-FMCW radar for pedestrian tracking in indoor environments. In Proceedings of the 2016 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016; pp. 565–572.

- Huang, W.; Zhang, Z.; Li, W.; Tian, J. Moving Object Tracking Based on Millimeter-wave Radar and Vision Sensor. J. Appl. Sci. Eng. 2018, 21, 609–614.

- Richter, E.; Schubert, R.; Wanielik, G. Radar and vision based data fusion - Advanced filtering techniques for a multi object vehicle tracking system. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 120–125.

- Lim, T.Y.; Ansari, A.; Major, B.; Fontijne, D.; Hamilton, M.; Gowaikar, R.; Subramanian, S. Radar and camera early fusion for vehicle detection in advanced driver assistance systems. In Proceedings of the Machine Learning for Autonomous Driving Workshop at the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 2, p. 7.

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A Deep Learning-based Radar and Camera Sensor Fusion Architecture for Object Detection. In Proceedings of the 2019 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 15–17 October 2019; pp. 1–7.

- Winterling, T.; Lombacher, J.; Hahn, M.; Dickmann, J.; Wöhler, C. Optimizing labelling on radar-based grid maps using active learning. In Proceedings of the 2017 18th International Radar Symposium (IRS), Prague, Czech, 28–30 June 2017; pp. 1–6.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Fang, H.S.; Xie, S.; Tai, Y.W.; Lu, C. RMPE: Regional Multi-person Pose Estimation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2353–2362.

| Architecture Type | Description | Benefits & Limitations |
|---|---|---|
| Centralized | The raw data from the camera and the mmWave radar are obtained independently and sent to a central processor for processing. | Benefits: low information loss, original data preserved, simple structure, and a high processing rate. Limitations: independent sensor units, large communication bandwidth required, high computing power needed by the central unit, and a single point of failure. |
| Distributed | The radar and the camera each process their own data independently and send the post-processed data to a central fusion unit, which then fuses it. | Benefits: reduced transmission time, reduced load on the fusion center, higher reliability, and low communication bandwidth. Limitations: the data collection units must also be able to process data, and the central processor operates on post-processed data, which reduces flexibility. |
| Hybrid | Some sensors follow the centralized approach and others follow the distributed approach, both as defined above. Measurements from all sensors are combined into a hybrid measurement, which is then used to update the final data. | Benefits: the advantages of both the centralized and distributed approaches are retained, along with flexibility in satisfying varying requirements. Limitations: complex data structures, increased computational and communication load, and demanding design requirements. |

| Fusion Depth | Description |
|---|---|
| Low level | Fusion at the data level: the raw data from each sensor are combined into a synthetic dataset representing a raw fused state, ready for further processing. |
| Medium level | Fusion that takes place once primitive features have been derived independently for each sensor; these features are fused to form a feature superset. |
| High level | An advanced form of fusion that takes place once independent outcomes have been derived for each sensor; the fused result expresses the combined sensor-specific outcomes. |
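The difference between medium-level and high-level fusion can be made concrete with a small sketch. Feature-level fusion is shown as simple concatenation into a feature superset, and decision-level fusion as a weighted average of per-sensor class probabilities; the weighting scheme and the `radar_weight` parameter are illustrative assumptions, and low-level fusion is not shown because it operates on raw sensor samples.

```python
def medium_level_fusion(radar_features, camera_features):
    """Feature-level fusion: concatenate per-sensor features into one superset."""
    return list(radar_features) + list(camera_features)


def high_level_fusion(radar_probs, camera_probs, radar_weight=0.5):
    """Decision-level fusion: weighted average of per-sensor class probabilities.

    Returns the winning class and the fused probability for every class.
    """
    classes = set(radar_probs) | set(camera_probs)
    fused = {c: radar_weight * radar_probs.get(c, 0.0)
                + (1.0 - radar_weight) * camera_probs.get(c, 0.0)
             for c in classes}
    return max(fused, key=fused.get), fused
```

With equal weights, a radar output of {walking: 0.6, running: 0.4} and a camera output of {walking: 0.2, running: 0.8} fuse to 0.4 and 0.6, so "running" wins; raising `radar_weight` to 0.8 flips the decision to "walking".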

| Activity | Distribution |
|---|---|
| Running | 26.69% |
| Walking | 25.02% |
| Falling | 23.34% |
| Unknown | 24.95% |
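A class distribution such as the one above can be computed directly from the per-sample activity labels. A minimal sketch, assuming the labels are available as a flat list; the helper names are hypothetical, and the imbalance ratio (largest share divided by smallest share) is one simple way to summarize balance.

```python
from collections import Counter


def class_distribution(labels):
    """Share of each activity label in a dataset, as percentages rounded to 2 dp."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: round(100.0 * n / total, 2) for label, n in counts.items()}


def imbalance_ratio(distribution):
    """Largest class share divided by smallest class share (1.0 = perfectly balanced)."""
    return max(distribution.values()) / min(distribution.values())
```

Applied to the percentages in the table, the ratio is 26.69 / 23.34, roughly 1.14, so no activity class is heavily over- or under-represented.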

| Environment | Trained Similarity |
|---|---|
| Normal lighting indoors | 97.69% ↓ |
| Outdoors with distant objects | 92.34% ↓ |
| Outdoors with near objects | 99.55% ↑ |
| Low level lighting indoors | 43.16% ↑ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pearce, A.; Zhang, J.A.; Xu, R. A Combined mmWave Tracking and Classification Framework Using a Camera for Labeling and Supervised Learning. Sensors 2022, 22, 8859. https://doi.org/10.3390/s22228859
