LiDAR and Deep Learning-Based Standing Tree Detection for Firebreaks Applications

Abstract

1. Introduction

1.1. Background

1.2. Related Work

- (1)

- It proposes a dataset for stumpage detection produced based on the KITTI dataset. It is also demonstrated that LiDAR point cloud data are a valid data source for standing wood detection.

- (2)

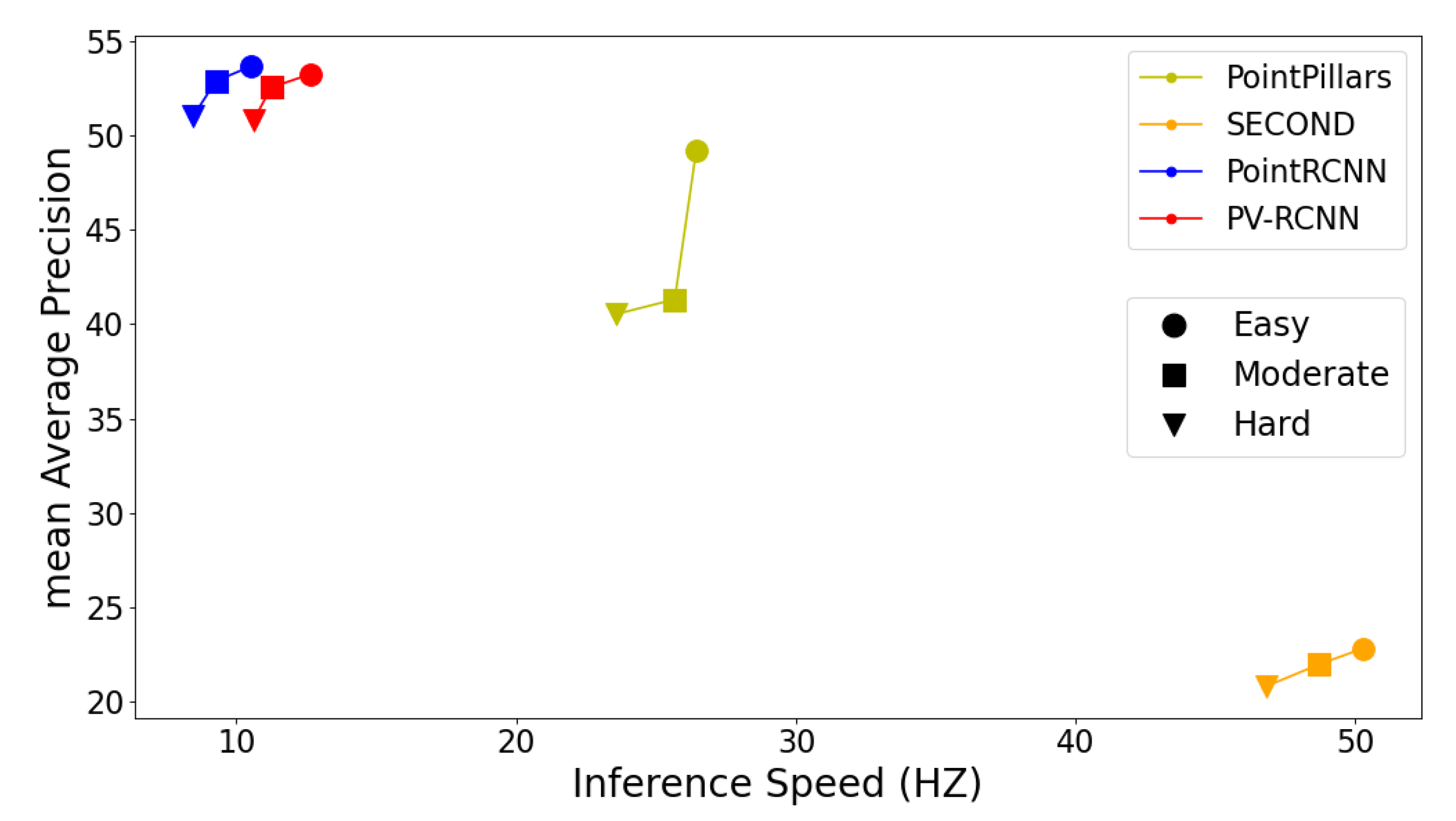

- A deep learning approach in combination with LiDAR is developed to achieve standing timber detection, and analyze the detection accuracy and effectiveness of four-point cloud object detection networks, including the PointPillars, PointRCNN, PVRCNN, and SECOND, on the above dataset.

2. Materials and Methods

2.1. Data Acquisition and Processing

2.2. Methods

2.2.1. PointPillars

2.2.2. PointRCNN

2.2.3. SECOND

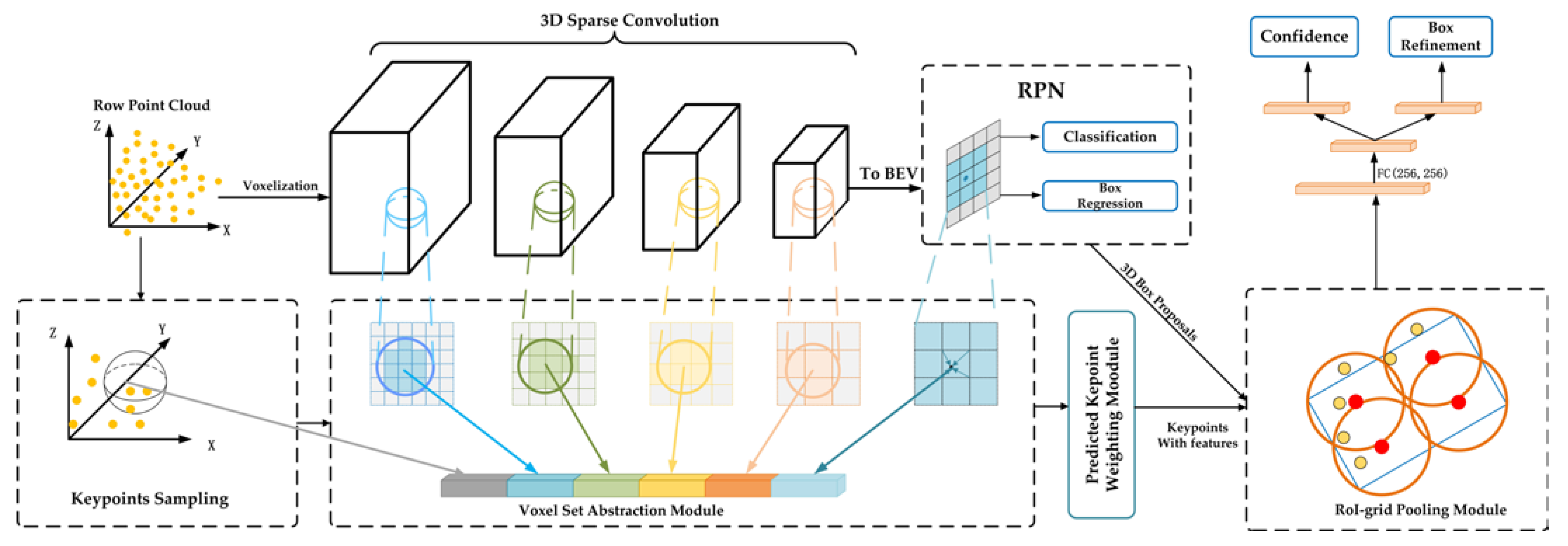

2.2.4. PV-RCNN

2.3. Training Strategy

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Network | Layer 1 | Layer 2 | Layer 3 |
|---|---|---|---|
| PointPillars | Feature net: converts the point cloud to a sparse pseudo-image | Backbone: 2D convolutions for a high-level representation | Detection head: detects and regresses 3D bounding boxes |
| SECOND | Feature extractor: extracts voxel features | Sparse convolution: convolution over the sparse point cloud | RPN: detects and regresses target 3D bounding boxes |
| PointRCNN | Point-wise feature vector: extracts point-wise features | Foreground point segmentation: two convolutional layers classify the points | 3D box generation: detects and regresses target 3D bounding boxes |
| PV-RCNN | Voxelization: voxelizes the point cloud data | Sparse convolution: 3D convolution over the point cloud | RPN: detects and regresses target 3D bounding boxes |
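The first stage of the grid-based networks in the table groups raw points into cells before any convolution is applied. The following is a minimal sketch of that grouping idea only, not the networks' actual encoders. The function name `group_points`, the cell size, and the toy points are hypothetical and chosen purely for illustration.

```python
from collections import defaultdict
from math import floor


def group_points(points, cell_size, use_height=False):
    """Group (x, y, z) points into grid cells.

    use_height=False yields vertical columns (pillars, the PointPillars idea);
    use_height=True yields 3D voxels (the SECOND / PV-RCNN idea).
    """
    cells = defaultdict(list)
    for x, y, z in points:
        key = (floor(x / cell_size), floor(y / cell_size))
        if use_height:
            key += (floor(z / cell_size),)
        cells[key].append((x, y, z))
    return dict(cells)


# Hypothetical toy points: two returns from one trunk, one from a neighbour.
points = [(1.0, 1.1, 0.2), (1.1, 1.0, 2.5), (4.2, 0.3, 1.0)]
pillars = group_points(points, cell_size=0.5)
voxels = group_points(points, cell_size=0.5, use_height=True)
```

In this toy case the two trunk returns share one pillar because pillars ignore height, whereas voxelization separates them along the vertical axis.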

| Training parameter | PointPillars | PointRCNN | SECOND | PV-RCNN |
|---|---|---|---|---|
| Epoch | 160 | 70 | 160 | 80 |
| Initial learning rate | 0.0002 | 0.0002 | 0.0003 | 0.001 |
| Minimum learning rate | 0.0002 | 2.05 × 10⁻⁸ | 0.0003 | 1.00 × 10⁻⁷ |
| Maximum learning rate | 0.0002 | 0.002 | 0.00294 | 0.01 |
| Batch size | 2 | 4 | 4 | 2 |
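Where the initial, minimum, and maximum learning rates in the table differ, the pattern is consistent with a schedule that warms up to a peak and then decays, although the scheduler itself is not named here. The sketch below assumes a cosine one-cycle policy; the function name `one_cycle_lr`, the warm-up fraction of 0.4, and the use of epochs as steps are all assumptions made for illustration.

```python
from math import cos, pi


def one_cycle_lr(step, total_steps, lr_init, lr_max, lr_min, warmup_frac=0.4):
    """Cosine one-cycle schedule: lr_init -> lr_max -> lr_min.

    warmup_frac is an assumed value, not taken from the table.
    """
    warmup_steps = int(total_steps * warmup_frac)
    if step < warmup_steps:
        start, end = lr_init, lr_max
        t = step / warmup_steps
    else:
        start, end = lr_max, lr_min
        t = (step - warmup_steps) / (total_steps - warmup_steps)
    # Cosine interpolation from start (t = 0) to end (t = 1).
    return end + (start - end) * (1 + cos(pi * t)) / 2


# PV-RCNN column of the table, treating each of the 80 epochs as one step.
pv_rcnn = [one_cycle_lr(s, 80, 0.001, 0.01, 1e-7) for s in range(81)]
```

With all three rates equal, as in the PointPillars column, the same function returns a constant learning rate.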

| Difficulty level | Hard | Moderate | Easy |
|---|---|---|---|
| Minimum bounding box height (pixels) | >25 | >25 | >40 |
| Occlusion | Hard to detect | Partially visible | Fully visible |
| Maximum truncation | <50% | <30% | <15% |
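The thresholds in the table can be read as a simple rule for assigning a labelled object to a difficulty level. The sketch below is one possible reading, not the evaluation code used in the study. The integer occlusion codes (0 = fully visible, 1 = partially visible, 2 = hard to detect) are an assumption borrowed from the KITTI label convention, and the function name `difficulty` is hypothetical.

```python
# Thresholds copied from the table:
# (level, minimum height in pixels, maximum occlusion code, maximum truncation).
# Occlusion codes are an assumption following the KITTI label convention:
# 0 = fully visible, 1 = partially visible, 2 = hard to detect.
LEVELS = [
    ("Easy", 40, 0, 0.15),
    ("Moderate", 25, 1, 0.30),
    ("Hard", 25, 2, 0.50),
]


def difficulty(height_px, occlusion, truncation):
    """Return the strictest level an object satisfies, or None if it fits none."""
    for name, min_height, max_occlusion, max_truncation in LEVELS:
        if (height_px > min_height
                and occlusion <= max_occlusion
                and truncation < max_truncation):
            return name
    return None


# Hypothetical objects: a close, unobstructed tree and a distant, partly hidden one.
near_tree = difficulty(height_px=60, occlusion=0, truncation=0.05)
far_tree = difficulty(height_px=30, occlusion=1, truncation=0.20)
```

Checking the levels from strictest to loosest means an object that satisfies the Easy criteria is never reported as Moderate or Hard.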

| Method | Modality | Tree (Easy) | Tree (Moderate) | Tree (Hard) | Pedestrian (Easy) | Pedestrian (Moderate) | Pedestrian (Hard) |
|---|---|---|---|---|---|---|---|
| PointPillars | LiDAR | 49.24% | 41.32% | 40.54% | 52.08% | 43.53% | 41.49% |
| PointRCNN | LiDAR | 53.68% | 52.90% | 51.05% | 49.43% | 41.78% | 38.63% |
| SECOND | LiDAR | 22.80% | 21.96% | 20.78% | 51.07% | 42.56% | 37.29% |
| PV-RCNN | LiDAR | 53.28% | 52.56% | 50.82% | 54.29% | 47.19% | 43.49% |

| Model | Easy | Moderate | Hard |
|---|---|---|---|
| PointPillars | 0.038 s | 0.039 s | 0.042 s |
| SECOND | 0.020 s | 0.021 s | 0.021 s |
| PointRCNN | 0.095 s | 0.108 s | 0.118 s |
| PV-RCNN | 0.079 s | 0.089 s | 0.094 s |
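Per-frame inference times are easier to compare once converted to throughput. The short calculation below does this for the values in the table, assuming the PV-RCNN row is also in seconds. The helper name `frames_per_second` is hypothetical, and averaging over the three difficulty levels is a simplification.

```python
# Per-frame inference times in seconds, copied from the table
# in the order (Easy, Moderate, Hard).
INFERENCE_S = {
    "PointPillars": (0.038, 0.039, 0.042),
    "SECOND": (0.020, 0.021, 0.021),
    "PointRCNN": (0.095, 0.108, 0.118),
    "PV-RCNN": (0.079, 0.089, 0.094),
}


def frames_per_second(times):
    """Convert per-frame times to throughput: frame count divided by total time."""
    return len(times) / sum(times)


fps = {name: frames_per_second(times) for name, times in INFERENCE_S.items()}
fastest = max(fps, key=fps.get)
slowest = min(fps, key=fps.get)
```

On these numbers SECOND is the fastest network and PointRCNN the slowest, the reverse of their ranking on tree detection accuracy in the accuracy table.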

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Z.; Wang, X.; Zhu, J.; Cheng, P.; Huang, Y. LiDAR and Deep Learning-Based Standing Tree Detection for Firebreaks Applications. Sensors 2022, 22, 8858. https://doi.org/10.3390/s22228858
