Review and Evaluation of Eye Movement Event Detection Algorithms

Abstract

1. Introduction

2. Eye Movement Events

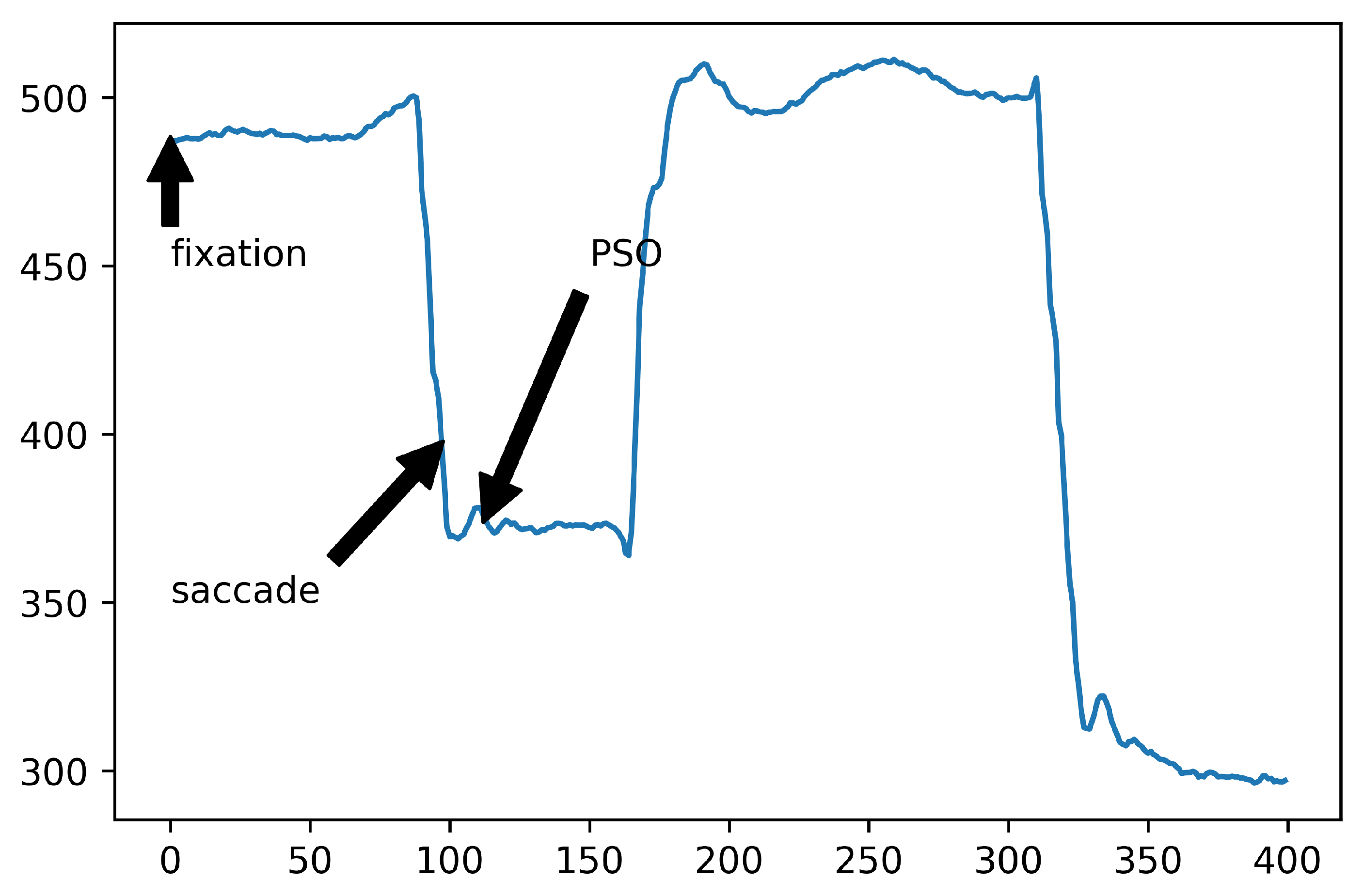

2.1. Fixations

2.2. Saccades

2.3. Smooth Pursuits

2.4. Post-Saccadic Oscillations

2.5. Glissades

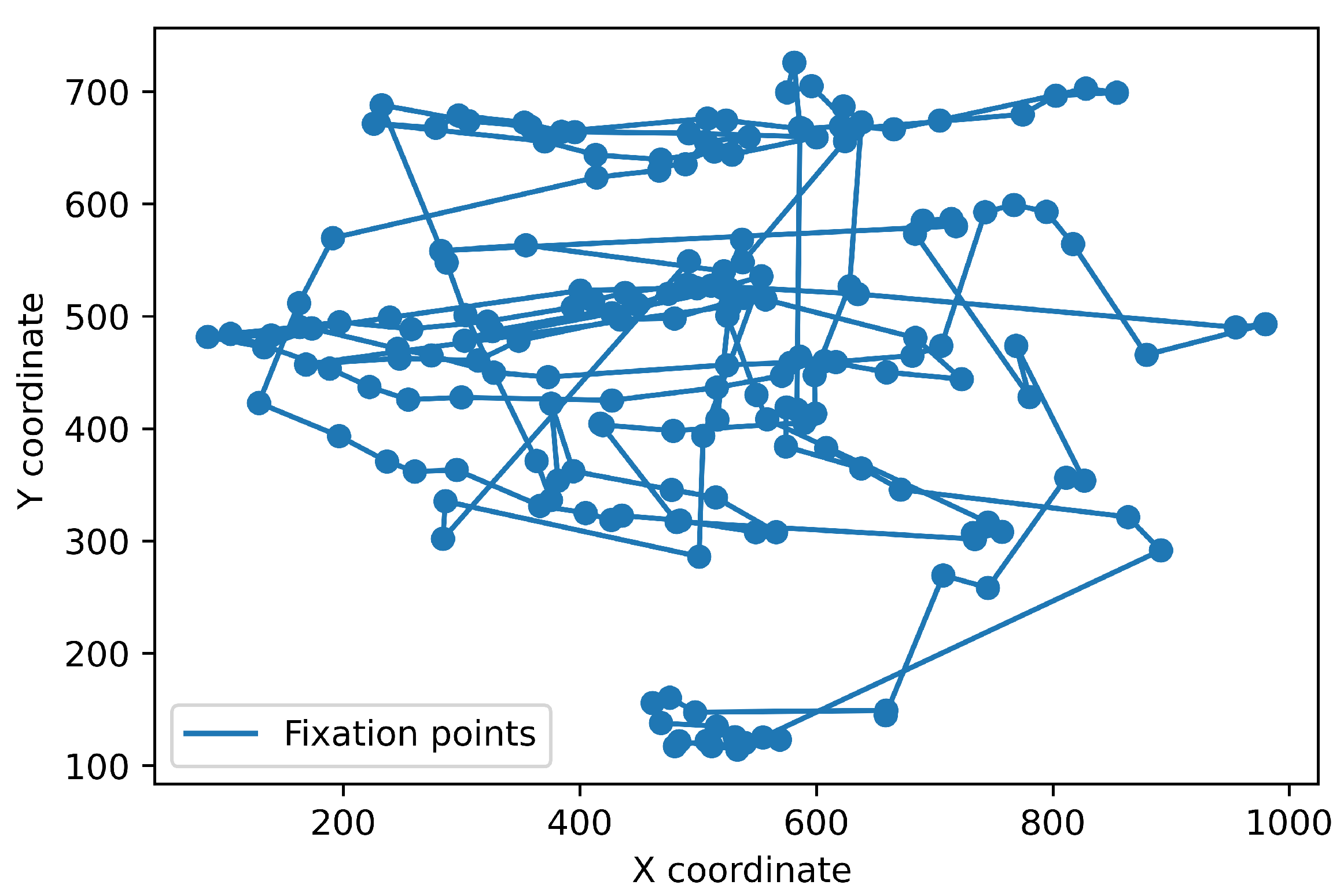

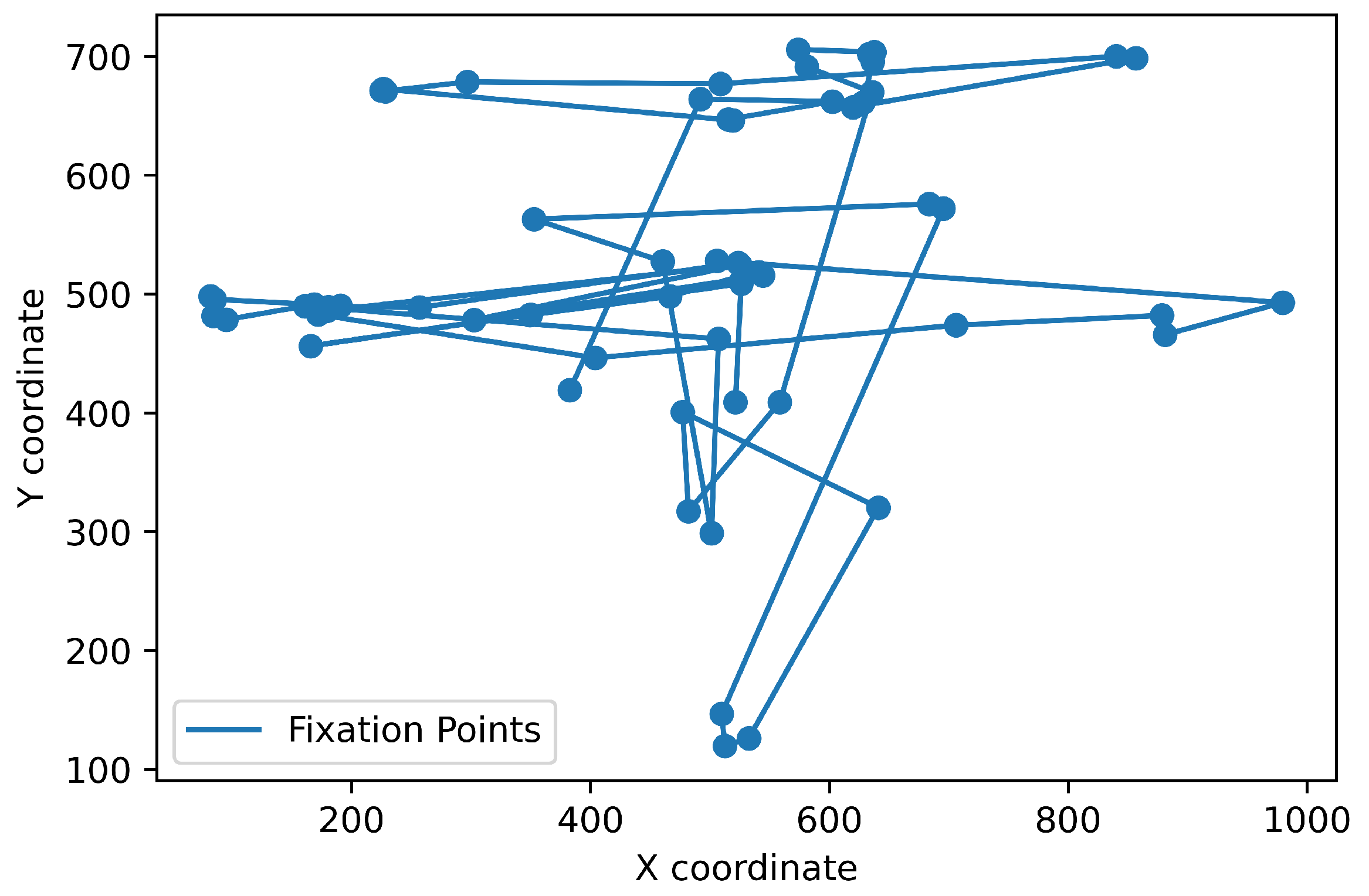

3. Dataset

4. Classic Event Detection Methods

4.1. Manual Human Classification

4.2. Dispersion Threshold-Based Event Detection Methods

4.3. Velocity Threshold-Based Methods

4.4. Fixation and Saccade Detection with the Presence of Smooth Pursuit

4.5. Automated Velocity Threshold Data Driven Event Classification Method

5. Machine Learning-Based Event Detection Methods

5.1. Event Classification Using Random Forest Classifier

5.2. Using Convolutional Neural Networks

5.3. Using Recurrent Neural Networks

6. Comparison

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Klaib, A.F.; Alsrehin, N.O.; Melhem, W.Y.; Bashtawi, H.O.; Magableh, A.A. Eye tracking algorithms, techniques, tools and applications with an emphasis on machine learning and Internet of Things technologies. Expert Syst. Appl. 2021, 166, 114037.

- Punde, P.A.; Jadhav, M.E.; Manza, R.R. A study of eye tracking technology and its applications. In Proceedings of the 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM), Aurangabad, India, 5–6 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 86–90.

- Naqvi, R.A.; Arsalan, M.; Park, K.R. Fuzzy system-based target selection for a NIR camera-based gaze tracker. Sensors 2017, 17, 862.

- Naqvi, R.A.; Arsalan, M.; Batchuluun, G.; Yoon, H.S.; Park, K.R. Deep learning-based gaze detection system for automobile drivers using a NIR camera sensor. Sensors 2018, 18, 456.

- Braunagel, C.; Geisler, D.; Stolzmann, W.; Rosenstiel, W.; Kasneci, E. On the necessity of adaptive eye movement classification in conditionally automated driving scenarios. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016.

- Kasneci, E.; Kübler, T.C.; Kasneci, G.; Rosenstiel, W.; Bogdan, M. Online Classification of Eye Tracking Data for Automated Analysis of Traffic Hazard Perception. In Proceedings of the ICANN, Sofia, Bulgaria, 10–13 September 2013.

- Larsson, L. Event Detection in Eye-Tracking Data for Use in Applications with Dynamic Stimuli. Ph.D. Thesis, Department of Biomedical Engineering, Faculty of Engineering LTH, Lund University, Lund, Sweden, 4 March 2016.

- Hartridge, H.; Thomson, L. Methods of investigating eye movements. Br. J. Ophthalmol. 1948, 32, 581.

- Monty, R.A. An advanced eye-movement measuring and recording system. Am. Psychol. 1975, 30, 331–335.

- Zemblys, R.; Niehorster, D.C.; Holmqvist, K. gazeNet: End-to-end eye-movement event detection with deep neural networks. Behav. Res. Methods 2019, 51, 840–864.

- Salvucci, D.D.; Goldberg, J.H. Identifying fixations and saccades in eye-tracking protocols. In Proceedings of the 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 71–78.

- Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H.; Van de Weijer, J. Eye Tracking: A Comprehensive Guide to Methods and Measures; OUP: Oxford, UK, 2011.

- Bahill, A.T.; Clark, M.R.; Stark, L. The main sequence, a tool for studying human eye movements. Math. Biosci. 1975, 24, 191–204.

- Matin, E. Saccadic suppression: A review and an analysis. Psychol. Bull. 1974, 81, 899.

- Krekelberg, B. Saccadic suppression. Curr. Biol. 2010, 20, R228–R229.

- Wyatt, H.J.; Pola, J. Smooth pursuit eye movements under open-loop and closed-loop conditions. Vis. Res. 1983, 23, 1121–1131.

- Deubel, H.; Bridgeman, B. Fourth Purkinje image signals reveal eye-lens deviations and retinal image distortions during saccades. Vis. Res. 1995, 35, 529–538.

- Nyström, M.; Hooge, I.; Holmqvist, K. Post-saccadic oscillations in eye movement data recorded with pupil-based eye trackers reflect motion of the pupil inside the iris. Vis. Res. 2013, 92, 59–66.

- Flierman, N.A.; Ignashchenkova, A.; Negrello, M.; Thier, P.; De Zeeuw, C.I.; Badura, A. Glissades are altered by lesions to the oculomotor vermis but not by saccadic adaptation. Front. Behav. Neurosci. 2019, 13, 194.

- Nyström, M.; Holmqvist, K. An adaptive algorithm for fixation, saccade and glissade detection in eyetracking data. Behav. Res. Methods 2010, 42, 188–204.

- Kapoula, Z.; Robinson, D.; Hain, T. Motion of the eye immediately after a saccade. Exp. Brain Res. 1986, 61, 386–394.

- Weber, R.B.; Daroff, R.B. Corrective movements following refixation saccades: Type and control system analysis. Vis. Res. 1972, 12, 467–475.

- Andersson, R.; Sandgren, O. ELAN Analysis Companion (EAC): A software tool for time-course analysis of ELAN-annotated data. J. Eye Mov. Res. 2016, 9, 1–8.

- Andersson, R.; Larsson, L.; Holmqvist, K.; Stridh, M.; Nyström, M. One algorithm to rule them all? An evaluation and discussion of ten eye movement event-detection algorithms. Behav. Res. Methods 2017, 49, 616–637.

- Dalveren, G.G.M.; Cagiltay, N.E. Evaluation of ten open-source eye-movement classification algorithms in simulated surgical scenarios. IEEE Access 2019, 7, 161794–161804.

- Hooge, I.T.; Niehorster, D.C.; Nyström, M.; Andersson, R.; Hessels, R.S. Is human classification by experienced untrained observers a gold standard in fixation detection? Behav. Res. Methods 2018, 50, 1864–1881.

- Kasprowski, P.; Harezlak, K.; Kasprowska, S. Development of diagnostic performance & visual processing in different types of radiological expertise. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, Warsaw, Poland, 14–17 June 2018; pp. 1–6.

- Negi, S.; Mitra, R. Fixation duration and the learning process: An eye tracking study with subtitled videos. J. Eye Mov. Res. 2020, 15.

- Yan, Y.; Yuan, H.; Wang, X.; Xu, T.; Liu, H. Study on driver’s fixation variation at entrance and inside sections of tunnel on highway. Adv. Mech. Eng. 2015, 7, 273427.

- Stark, L. Scanpaths revisited: Cognitive models, direct active looking. In Eye Movements: Cognition and Visual Perception; Routledge: Abingdon, UK, 1981; pp. 193–226.

- Widdel, H. Operational Problems in Analysing Eye Movements. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1984; Volume 22, pp. 21–29.

- Shic, F.; Scassellati, B.; Chawarska, K. The incomplete fixation measure. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; pp. 111–114.

- Hareżlak, K.; Kasprowski, P. Evaluating quality of dispersion based fixation detection algorithm. In Information Sciences and Systems 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 97–104.

- Blignaut, P. Fixation identification: The optimum threshold for a dispersion algorithm. Attention Perception Psychophys. 2009, 71, 881–895.

- Sen, T.; Megaw, T. The effects of task variables and prolonged performance on saccadic eye movement parameters. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1984; Volume 22, pp. 103–111.

- Erkelens, C.J.; Vogels, I.M. The initial direction and landing position of saccades. In Studies in Visual Information Processing; Elsevier: Amsterdam, The Netherlands, 1995; Volume 6, pp. 133–144.

- Komogortsev, O.V.; Karpov, A. Automated classification and scoring of smooth pursuit eye movements in the presence of fixations and saccades. Behav. Res. Methods 2013, 45, 203–215.

- Lopez, J.S.A. Off-the-Shelf Gaze Interaction. Ph.D. Thesis, IT University of Copenhagen, Copenhagen, Denmark, 2009.

- Duchowski, A.T. Eye Tracking Methodology: Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2017.

- Zemblys, R. Eye-movement event detection meets machine learning. Biomed. Eng. 2017, 2016, 20.

- Zemblys, R.; Niehorster, D.C.; Komogortsev, O.; Holmqvist, K. Using machine learning to detect events in eye-tracking data. Behav. Res. Methods 2018, 50, 160–181.

- Hoppe, S.; Bulling, A. End-to-end eye movement detection using convolutional neural networks. arXiv 2016, arXiv:1609.02452.

- Startsev, M.; Agtzidis, I.; Dorr, M. 1D CNN with BLSTM for automated classification of fixations, saccades and smooth pursuits. Behav. Res. Methods 2019, 51, 556–572.

- Galley, N.; Betz, D.; Biniossek, C. Fixation Durations-Why Are They so Highly Variable? [Das Ende von Rational Choice? Zur Leistungsfähigkeit der Rational-Choice-Theorie]. Ph.D. Thesis, GESIS – Leibniz-Institut für Sozialwissenschaften, Köln, Germany, 2015.

| RA\MN | Fixation | Saccade | PSO |
|---|---|---|---|
| Fixation | 4111 | 4 | 54 |
| Saccade | 28 | 444 | 10 |
| PSO | 26 | 14 | 297 |
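The per-class scores and the agreement statistic used in the comparison can be derived directly from a confusion matrix such as the one above. The following is a minimal sketch, assuming that rows hold coder RA's labels, columns hold coder MN's labels, and MN is treated as the reference; the function names `per_class_metrics` and `cohens_kappa` are illustrative and not taken from the article.

```python
# Confusion matrix between the two human coders.
# ASSUMPTION: rows = coder RA (treated as the "prediction"),
# columns = coder MN (treated as the reference).
LABELS = ["Fixation", "Saccade", "PSO"]
CONFUSION = [
    [4111, 4, 54],
    [28, 444, 10],
    [26, 14, 297],
]


def per_class_metrics(matrix):
    """Return a (precision, recall, f1) tuple for each class."""
    n = len(matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    metrics = []
    for i in range(n):
        true_positives = matrix[i][i]
        precision = true_positives / row_sums[i]  # share of RA's labels confirmed by MN
        recall = true_positives / col_sums[i]     # share of MN's labels recovered by RA
        f1 = 2 * precision * recall / (precision + recall)
        metrics.append((precision, recall, f1))
    return metrics


def cohens_kappa(matrix):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    observed = sum(matrix[i][i] for i in range(n)) / total
    expected = sum(row_sums[i] * col_sums[i] for i in range(n)) / total**2
    return (observed - expected) / (1 - expected)


for label, (precision, recall, f1) in zip(LABELS, per_class_metrics(CONFUSION)):
    print(f"{label}: precision={precision:.0%} recall={recall:.0%} f1={f1:.0%}")
print(f"Cohen's kappa = {cohens_kappa(CONFUSION):.3f}")
```

Under these assumptions the rounded precision (99%, 92%, 88%), recall (99%, 96%, 82%) and F1 (99%, 94%, 85%) agree with the Coder RA column of the comparison table, and the kappa of about 0.905 is in line with the 0.90 reported there.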

| Classes | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Fixation | 97% | 99% | 97% | 98% |
| Saccade | 92% | 87% | 91% | 89% |
| PSO | 76% | 64% | 76% | 69% |

| Classes | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Fixation | 99% | 98% | 99% | 99% |
| Saccade | 89% | 93% | 89% | 91% |
| PSO | 75% | 83% | 75% | 79% |

| Performance Metrics | I-VT [11] | I-DT [11] | RF | CNN | Coder MN [26] | Coder RA [26] |
|---|---|---|---|---|---|---|
| Fixation Accuracy | 92% | 95% | 97% | 99% | 99% | 99% |
| Saccade Accuracy | 87% | 93% | 92% | 89% | 92% | 96% |
| PSO Accuracy | - | - | 76% | 75% | 88% | 82% |
| Fixation F1-Score | 94% | 96% | 99% | 99% | 99% | 99% |
| Saccade F1-Score | 60% | 66% | 87% | 91% | 94% | 94% |
| PSO F1-Score | - | - | 64% | 79% | 85% | 85% |
| Fixation Recall | 92% | 95% | 97% | 99% | 99% | 99% |
| Saccade Recall | 87% | 93% | 92% | 89% | 92% | 96% |
| PSO Recall | - | - | 76% | 75% | 88% | 82% |
| Fixation Precision | 96% | 98% | 99% | 98% | 99% | 99% |
| Saccade Precision | 46% | 51% | 87% | 93% | 96% | 92% |
| PSO Precision | - | - | 64% | 83% | 82% | 88% |
| Cohen’s Kappa | 0.5 | 0.6 | 0.83 | 0.88 | 1 | 0.90 |

| Algorithms | Strengths | Weaknesses |
|---|---|---|
| Human Coders [26] | Manual coding is still a common method for evaluating event detection algorithms, and manually classified data are used as training data for machine learning algorithms. | Time-consuming. Different coders may apply different subjective selection rules and therefore produce different results, because parameters and threshold values are set manually by the coder. |
| I-VT [11] | Simple to implement and understand. Uses a single velocity threshold to identify events from raw input data. Performs very well for fixation and saccade identification in a single identification step. Requires few computational resources. | Although it is simple, I-VT is rarely used in real implementations. It is sensitive to noisy signals with many outliers. Finding the optimum threshold is challenging because there is no standard optimum value. Identifies fixations and saccades only. |
| I-DT [11] | The first automated event detection algorithm. Performs fixation and saccade identification with human-level performance. I-DT is frequently available in commercial software. | Performance is affected by the choice of threshold values. Choosing a dispersion calculation method is challenging because different methods yield different dispersion values. Designed for fixation and saccade identification only. |
| RF | No threshold value is needed. Performs multi-class classification, so it may be used for various events. It is a fully automated event classification method. Performs fixation and saccade identification with human-level performance. | Requires a significant amount of correctly annotated data for training. In our implementation, only velocity features were used to classify events as fixation, saccade, or PSO. The weaker PSO result was due to misclassification between saccades and PSOs, which are similar in terms of velocity. |
| CNN | Like RF, CNN also addresses the problems of threshold-based detection methods. Performs single-step end-to-end detection without human intervention. Performs at human level for fixation identification. | Requires even more correctly annotated training data than the RF algorithm. We used only velocity parameters to identify events from input data. Smooth pursuit was not considered because the velocity parameter that we used is not sufficient to distinguish smooth pursuit from fixation, as both are low-velocity movement types. Other parameters, such as direction or movement patterns, should be used to identify smooth pursuit. CNN achieved lower saccade accuracy than RF and I-DT. |
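The two threshold-based detectors summarised in the table can be sketched in a few lines. The following is a minimal illustration in the spirit of the I-VT and I-DT descriptions in [11], not the implementation evaluated in this article. It assumes gaze coordinates in degrees of visual angle and a fixed sampling rate; the function names and the threshold values (30 deg/s, 0.5 deg, 4 samples) are hypothetical and chosen only for the toy signal. The dispersion measure used here, (max x − min x) + (max y − min y), is one of several possible choices.

```python
from math import hypot


def ivt(xs, ys, sampling_rate_hz, velocity_threshold):
    """I-VT sketch: label each sample by its point-to-point velocity."""
    if len(xs) < 2:
        return ["fixation"] * len(xs)
    labels = []
    for i in range(len(xs)):
        j = max(i, 1)  # the first sample reuses the velocity of the first pair
        velocity = hypot(xs[j] - xs[j - 1], ys[j] - ys[j - 1]) * sampling_rate_hz
        labels.append("saccade" if velocity > velocity_threshold else "fixation")
    return labels


def dispersion(xs, ys):
    """One possible dispersion measure: horizontal plus vertical extent."""
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt(xs, ys, min_samples, dispersion_threshold):
    """I-DT sketch: return (start, end) index pairs, end exclusive, of fixations."""
    fixations = []
    start, n = 0, len(xs)
    while start + min_samples <= n:
        end = start + min_samples
        if dispersion(xs[start:end], ys[start:end]) <= dispersion_threshold:
            # Grow the window while the next sample keeps dispersion in bounds.
            while end < n and dispersion(xs[start:end + 1], ys[start:end + 1]) <= dispersion_threshold:
                end += 1
            fixations.append((start, end))
            start = end
        else:
            start += 1  # slide the window forward by one sample
    return fixations


# Toy signal (ASSUMED data): fixation near 0 deg, a saccade, fixation near 10 deg.
GAZE_X = [0.0, 0.1, 0.0, 0.1, 0.0, 3.0, 7.0, 10.0, 10.1, 10.0, 10.1, 10.0]
GAZE_Y = [0.0] * len(GAZE_X)

print(ivt(GAZE_X, GAZE_Y, sampling_rate_hz=100, velocity_threshold=30.0))
print(idt(GAZE_X, GAZE_Y, min_samples=4, dispersion_threshold=0.5))
```

On this toy signal the two methods disagree about the sample at index 7, the first one at the new position: the velocity rule assigns it to the saccade, while the dispersion window includes it in the second fixation. This is a small instance of the threshold sensitivity noted in the table.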

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Birawo, B.; Kasprowski, P. Review and Evaluation of Eye Movement Event Detection Algorithms. Sensors 2022, 22, 8810. https://doi.org/10.3390/s22228810
