Sensor Fusion for Social Navigation on a Mobile Robot Based on Fast Marching Square and Gaussian Mixture Model

Abstract

1. Introduction

- Multiple sensors are fused for mapping and social navigation: a 2D LiDAR, a 3D LiDAR and an RGBD camera.

- Three-dimensional information is captured to extract a geometric and topological map, which will serve as the basis for path planning and navigation.

- A navigation strategy using the fast marching square method is designed, considering static and dynamic objects.

- A behavior for traversing narrow passages is designed to avoid collisions for robots with large dimensions.

- People are detected and modeled using Gaussian functions that account for social distance, distinguishing between individuals and groups. The Gaussian model is used in its full continuous form, with no discretization step required, and the use of fast marching square makes it straightforward to add the Gaussians to the model.

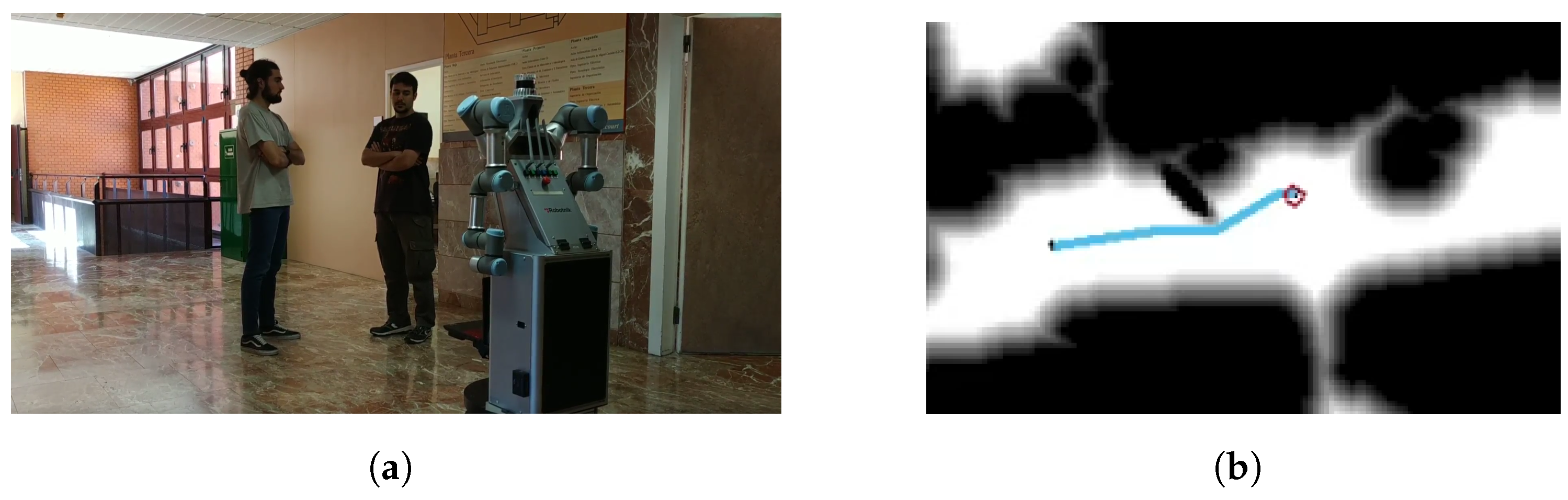

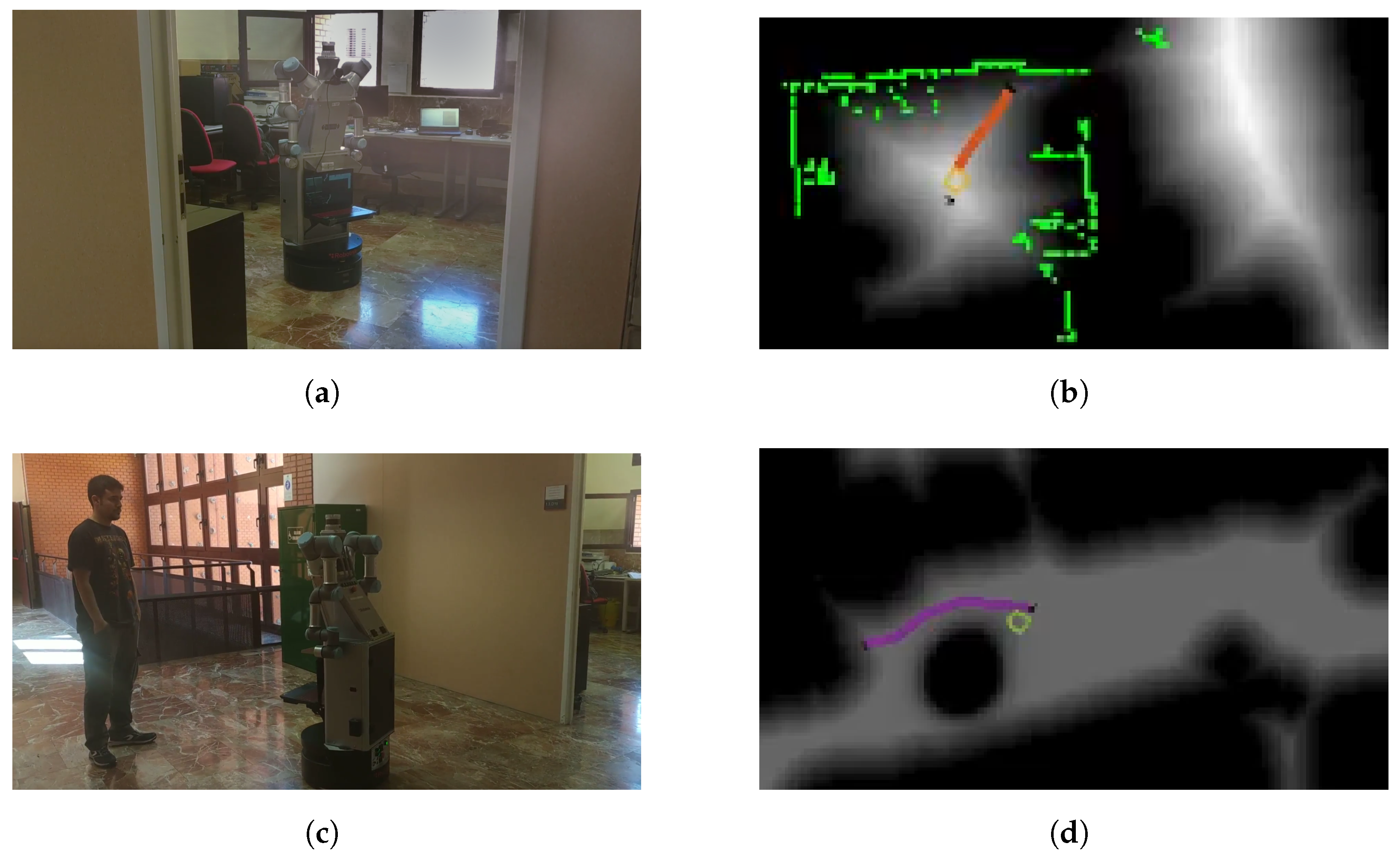

- The method is tested on a real scenario in which a domestic robot coexists with people.
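The navigation strategy named in the contributions above can be illustrated with a small two-pass sketch in the spirit of fast marching square: a first pass builds a velocity map that slows the robot near obstacles, and a second pass propagates a wave from the goal over that map so that a path can be extracted by descending the arrival times. This is a minimal sketch under stated assumptions, not the authors' implementation: Dijkstra's algorithm on a 4-connected grid stands in for the fast marching solver, the first pass uses a brute-force distance computation, and the names `velocity_map`, `arrival_times`, `extract_path` and the `saturation` parameter are hypothetical.

```python
import heapq
import math

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def velocity_map(grid, saturation=3.0):
    """First pass: speed in [0, 1] that grows with distance to the nearest obstacle."""
    rows, cols = len(grid), len(grid[0])
    obstacles = [(r, c) for r in range(rows) for c in range(cols) if grid[r][c] == 1]
    vel = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                continue  # obstacle cells keep speed 0
            d = min((math.hypot(r - orow, c - ocol) for orow, ocol in obstacles),
                    default=saturation)
            vel[r][c] = min(d, saturation) / saturation
    return vel

def arrival_times(vel, goal):
    """Second pass: wave expanded from the goal (Dijkstra used as a stand-in solver)."""
    rows, cols = len(vel), len(vel[0])
    times = [[math.inf] * cols for _ in range(rows)]
    times[goal[0]][goal[1]] = 0.0
    heap = [(0.0, goal)]
    while heap:
        t, (r, c) = heapq.heappop(heap)
        if t > times[r][c]:
            continue  # stale heap entry
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and vel[nr][nc] > 0.0:
                nt = t + 1.0 / vel[nr][nc]  # slow cells take longer to cross
                if nt < times[nr][nc]:
                    times[nr][nc] = nt
                    heapq.heappush(heap, (nt, (nr, nc)))
    return times

def extract_path(times, start):
    """Follow decreasing arrival times from start until the goal (time 0) is reached."""
    rows, cols = len(times), len(times[0])
    if math.isinf(times[start[0]][start[1]]):
        return []  # start is unreachable
    r, c = start
    path = [start]
    while times[r][c] > 0.0:
        candidates = [(r + dr, c + dc) for dr, dc in NEIGHBOURS
                      if 0 <= r + dr < rows and 0 <= c + dc < cols]
        r, c = min(candidates, key=lambda p: times[p[0]][p[1]])
        path.append((r, c))
    return path

# Toy map: 1 = obstacle, 0 = free. A wall separates start and goal.
grid = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
vel = velocity_map(grid)
times = arrival_times(vel, goal=(2, 2))
path = extract_path(times, start=(0, 2))
print(path)
```

Because the second wave travels more slowly through cells close to obstacles, the extracted path tends to keep clearance from walls, which is the property that makes this family of planners attractive for smooth and safe navigation.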

2. Materials and Methods

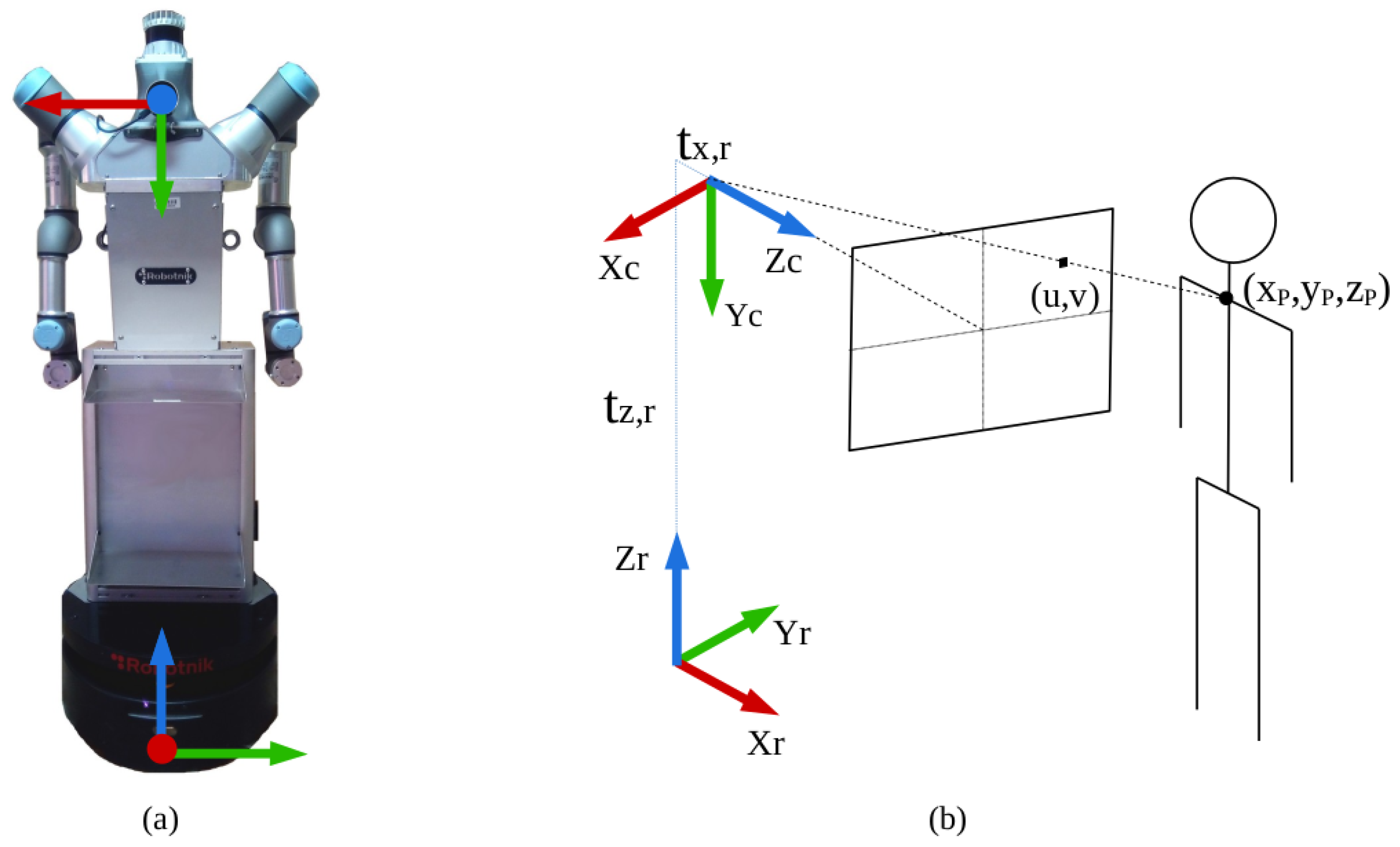

2.1. Robotic Platform

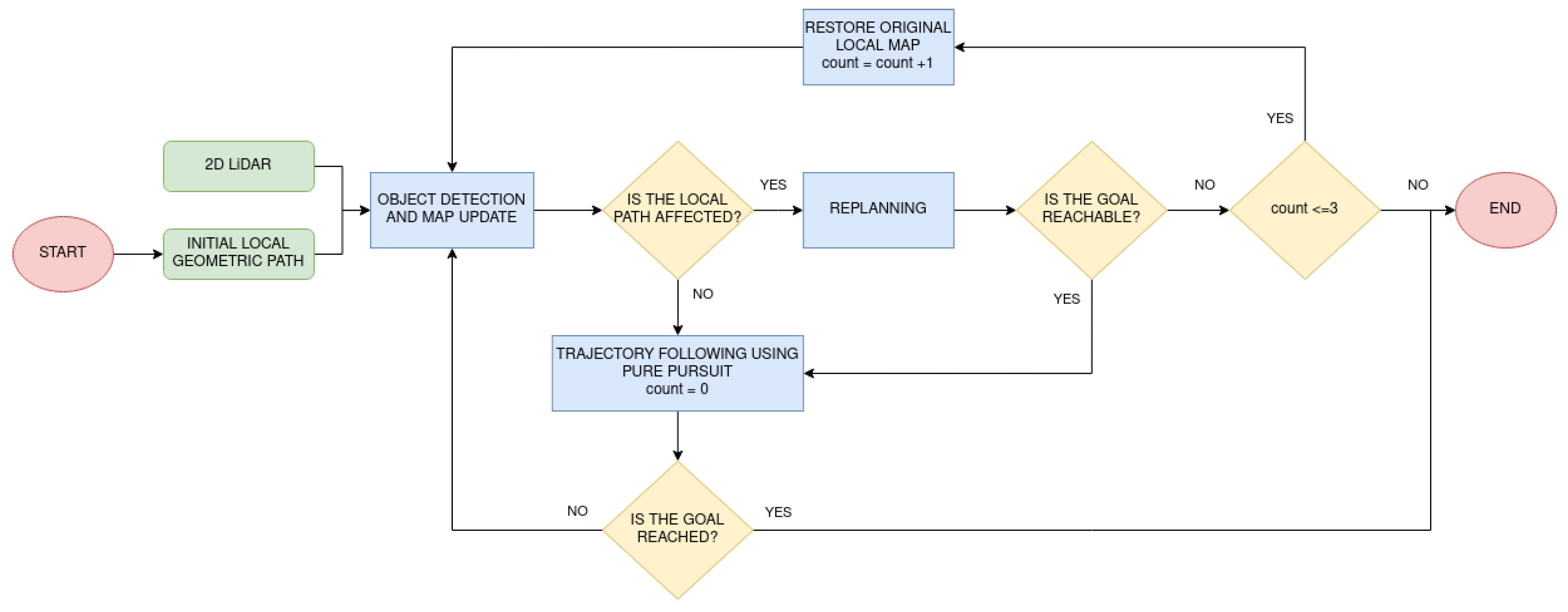

2.2. Navigation System

- Mapping: the robot needs to construct an environment representation based on sensor information. For this application, a multi-map system is proposed, consisting of two layers: geometric and topological.

- Localization: precise localization is required to know where the robot is placed. In this work, it is performed at the geometric map level.

- Path planning: since this module is highly influenced by the selected mapping procedure, it also operates at both the geometric and topological levels. The planner finds a global topological plan and local geometric paths.

- Plan execution: once the three above-mentioned modules are available, the robot needs to follow the calculated instructions, so this module runs in real time. This implies taking into account unknown static and dynamic objects that conflict with previous knowledge. Additionally, given the large dimensions of the robotic platform, specific behaviors need to be defined to avoid collisions in narrow zones. Finally, this module includes the social navigation perspective by detecting people and modeling their personal space.
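The global topological plan mentioned in the path planning module can be pictured as a shortest-path search over a graph whose nodes are regions of the map. The sketch below is illustrative only: it assumes Dijkstra's algorithm as the search method, and the room names, edge costs and the function name `topological_plan` are hypothetical. Each pair of consecutive nodes in the resulting route would then be connected by a local geometric path.

```python
import heapq

def topological_plan(graph, start, goal):
    """Return (cost, route) of the cheapest node sequence from start to goal."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, route = heapq.heappop(queue)
        if node == goal:
            return cost, route
        if node in visited:
            continue
        visited.add(node)
        for neighbour, weight in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + weight, neighbour, route + [neighbour]))
    return float("inf"), []  # goal not reachable

# Hypothetical topological layer: nodes are rooms, weights are traversal costs.
graph = {
    "kitchen": {"corridor": 4.0},
    "corridor": {"kitchen": 4.0, "living_room": 3.0, "bedroom": 6.0},
    "living_room": {"corridor": 3.0, "bedroom": 2.0},
    "bedroom": {"corridor": 6.0, "living_room": 2.0},
}
cost, route = topological_plan(graph, "kitchen", "bedroom")
print(cost, route)
```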

2.2.1. Mapping Based on 3D Information

2.2.2. Localization

2.2.3. Path Planning

2.2.4. Plan Execution

2.2.5. Modeling People for Social Navigation Strategies

People Detection

3D Pose Estimation

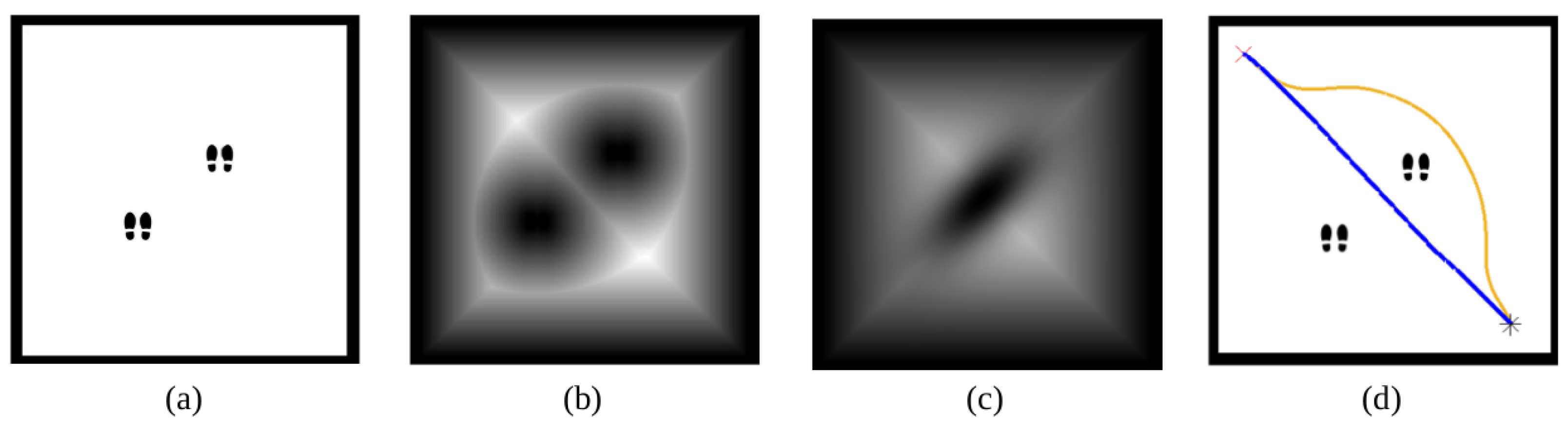

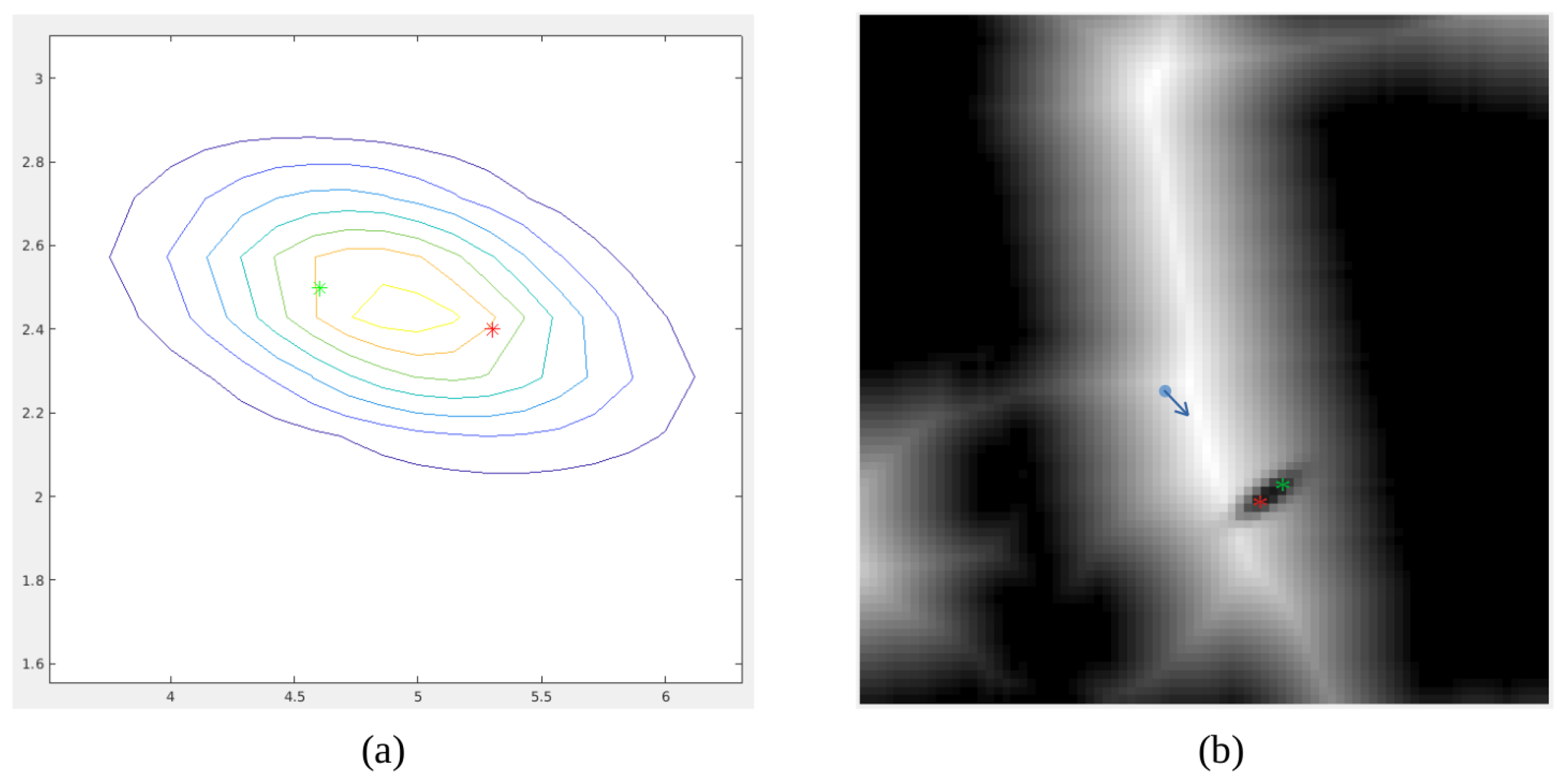

Modeling Personal Space

- Public distance: This distance is defined for values over 210 cm. At this distance, communication requires a raised voice and eye contact is minimal.

- Social distance: It is maintained during more formal interactions. Its value is between 122 and 210 cm and prevents all kinds of contact, except visual and auditory.

- Personal distance: This distance is maintained during interactions with people who are trusted more than in the other two cases, for example, friends. Its value is 46–122 cm, which makes interaction easier.

- Intimate distance: The value of this distance is 0–46 cm. It is commonly reserved for close relationships, given that a clear invasion of the other person's personal space occurs. Due to the proximity, vision is blurred and other sensory signals are used, such as touch.
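The four ranges above can be turned into a simple lookup. The following sketch uses only the thresholds listed in the text (46, 122 and 210 cm); how the exact boundary values are assigned (for example, 46 cm counted as personal and 210 cm as social) is an assumption, and the function name `proxemic_zone` is hypothetical.

```python
def proxemic_zone(distance_cm):
    """Map a robot-to-person distance in centimetres to a proxemic zone name."""
    if distance_cm < 0:
        raise ValueError("distance must be non-negative")
    if distance_cm < 46:
        return "intimate"
    if distance_cm < 122:
        return "personal"
    if distance_cm <= 210:  # public distance is defined for values over 210 cm
        return "social"
    return "public"

for d in (30, 100, 150, 300):
    print(d, proxemic_zone(d))
```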

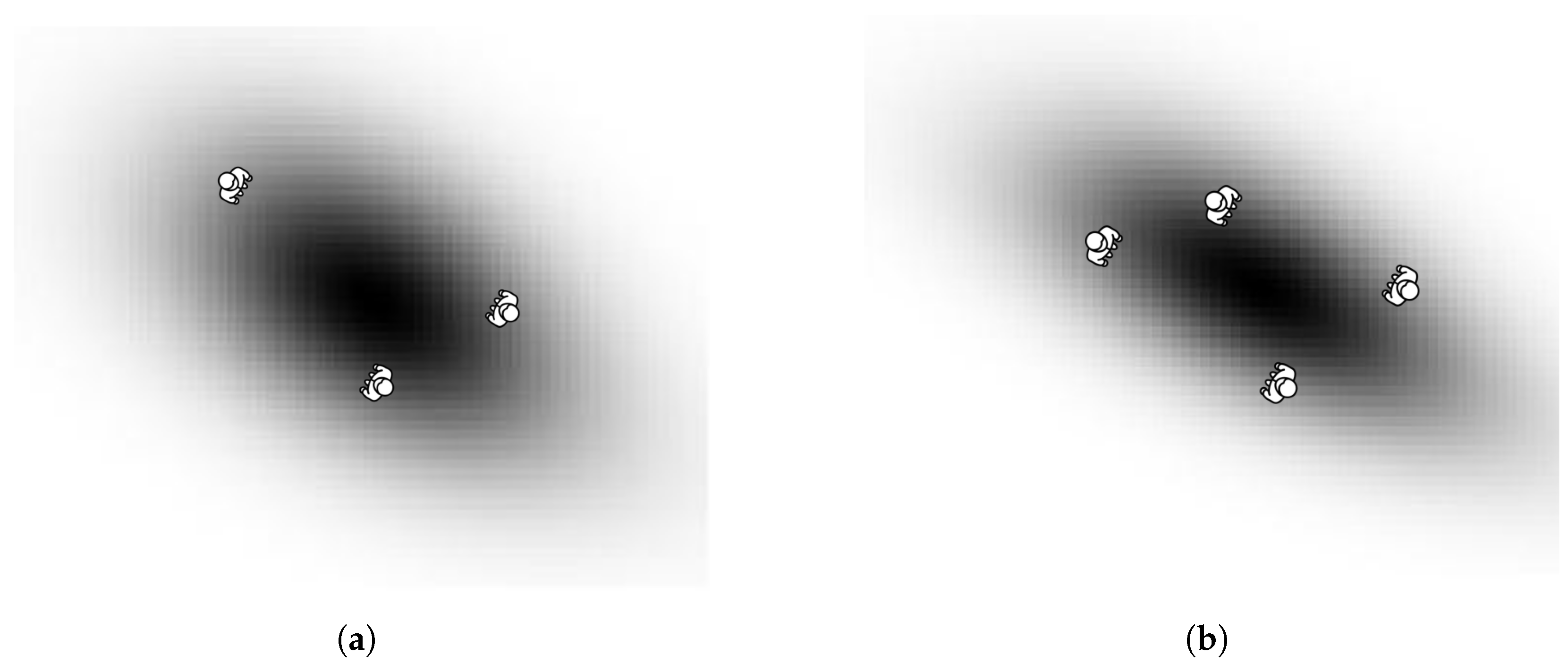

One Person Case

Group of People Case

Inclusion of the Gaussian Models to the Velocity Map
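As stated in the contributions, people are modeled with Gaussian functions and fast marching square facilitates adding them to the model. One way to picture this, as a rough sketch rather than the authors' formulation, is to evaluate an oriented 2D Gaussian analytically at each cell centre of the velocity map and reduce the allowed speed accordingly. The standard deviations, the use of the maximum over people to combine several Gaussians, the multiplicative update and the names `person_gaussian` and `add_people` are all assumptions made for illustration.

```python
import math

def person_gaussian(x, y, px, py, heading, sigma_front=1.2, sigma_side=0.6):
    """Oriented 2D Gaussian in (0, 1], equal to 1 at the person's position."""
    dx, dy = x - px, y - py
    # Express the offset in the person's frame (along and across the heading).
    along = dx * math.cos(heading) + dy * math.sin(heading)
    across = -dx * math.sin(heading) + dy * math.cos(heading)
    return math.exp(-0.5 * ((along / sigma_front) ** 2 + (across / sigma_side) ** 2))

def add_people(velocity, people, resolution):
    """Scale each cell's speed by (1 - strongest Gaussian) evaluated at the cell centre."""
    result = []
    for r, row in enumerate(velocity):
        new_row = []
        for c, v in enumerate(row):
            x, y = (c + 0.5) * resolution, (r + 0.5) * resolution
            g = max((person_gaussian(x, y, *p) for p in people), default=0.0)
            new_row.append(v * (1.0 - g))
        result.append(new_row)
    return result

# 5 x 5 free map with 0.5 m cells and one person facing the +x direction.
velocity = [[1.0] * 5 for _ in range(5)]
people = [(1.25, 1.25, 0.0)]  # (x in m, y in m, heading in rad), hypothetical values
social_velocity = add_people(velocity, people, resolution=0.5)
for row in social_velocity:
    print([round(v, 2) for v in row])
```

With these illustrative parameters the speed drops to zero at the person's position and stays lower along the heading direction than to the side, so a planner that propagates its wave over this map would prefer to pass beside the person at a distance.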

3. Results

3.1. Mapping an Indoor Scenario

3.2. Single-Sensor Navigation Strategy

3.3. People Detection and Modeling Performance

3.4. Multi-Sensor-Based Social Navigation

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mora, A.; Prados, A.; Mendez, A.; Barber, R.; Garrido, S. Sensor Fusion for Social Navigation on a Mobile Robot Based on Fast Marching Square and Gaussian Mixture Model. Sensors 2022, 22, 8728. https://doi.org/10.3390/s22228728
