MSA-MOT: Multi-Stage Association for 3D Multimodality Multi-Object Tracking

Abstract

1. Introduction

- We propose MSA-MOT, a novel 3D MOT method for complex scenes that improves the association scheme through multi-stage association and thereby achieves precise tracking over long periods of time.

- In the multi-stage association method, the proposed hierarchical matching module successively associates high- and low-reliability detections, alleviating the long-standing problem of incorrect association. In addition, a customized track management module manages tracklets using the information provided by the matching module, which effectively reduces the severe identity switches that occur during tracking.

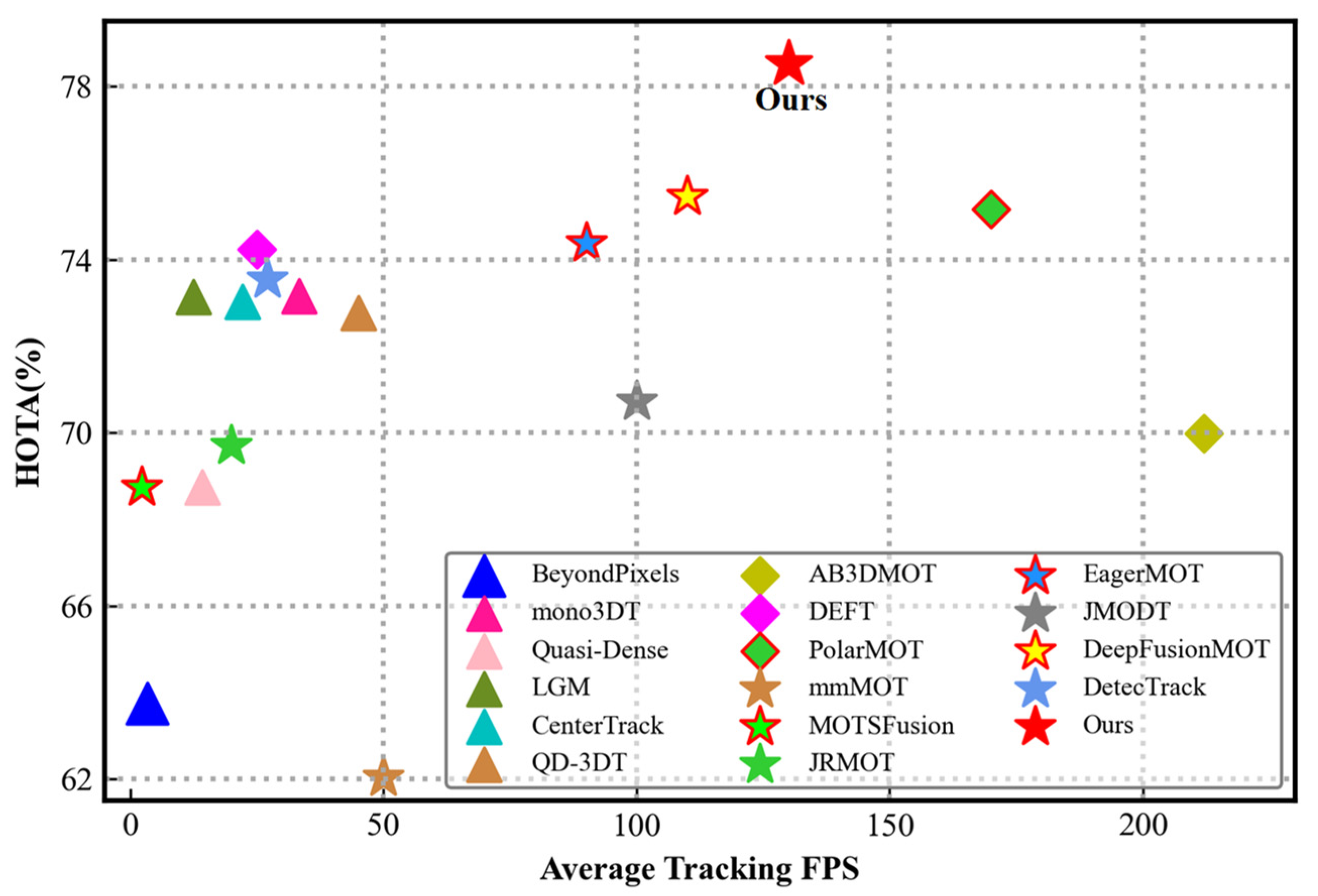

- Extensive experiments on the challenging KITTI benchmark show that MSA-MOT achieves state-of-the-art performance (78.52% HOTA, 97.11% sAMOTA, and 130 FPS), demonstrating the effectiveness of the proposed multi-stage association method.
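The second contribution describes associating high-reliability detections before low-reliability ones. A minimal, hypothetical sketch of that two-tier idea, in the style of ByteTrack's cascaded matching [44], is given here; it is not the four-stage MSA-MOT procedure of Section 3.2, whose details are not reproduced in this text. The score threshold `score_thr`, the Euclidean centre-distance cost, the gate `max_dist`, and the function names are illustrative assumptions. Each tier solves the linear assignment problem (the problem addressed by Kuhn's Hungarian method [26]) with SciPy's `linear_sum_assignment`.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match(cost, max_cost):
    """Optimal one-to-one assignment followed by gating.

    Returns (matches, unmatched_rows, unmatched_cols); matches holds
    (row, col) pairs whose cost does not exceed max_cost.
    """
    n_rows, n_cols = cost.shape
    if n_rows == 0 or n_cols == 0:
        return [], list(range(n_rows)), list(range(n_cols))
    rows, cols = linear_sum_assignment(cost)
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= max_cost]
    used_r = {r for r, _ in matches}
    used_c = {c for _, c in matches}
    return (matches,
            [r for r in range(n_rows) if r not in used_r],
            [c for c in range(n_cols) if c not in used_c])


def two_tier_associate(track_centers, det_centers, det_scores,
                       score_thr=0.5, max_dist=2.0):
    """Hypothetical two-tier association (not the paper's exact method).

    track_centers: (T, 3) predicted track centres
    det_centers:   (D, 3) detection centres
    det_scores:    (D,)   detection confidences
    Returns (matches, unmatched_tracks, unmatched_dets) in original indices.
    """
    tracks = np.asarray(track_centers, dtype=float).reshape(-1, 3)
    dets = np.asarray(det_centers, dtype=float).reshape(-1, 3)
    scores = np.asarray(det_scores, dtype=float)

    high = np.flatnonzero(scores >= score_thr)  # high-reliability detections
    low = np.flatnonzero(scores < score_thr)    # low-reliability detections

    def dist(t_idx, d_idx):
        # Pairwise Euclidean distance between track and detection centres.
        return np.linalg.norm(tracks[t_idx][:, None, :] - dets[d_idx][None, :, :], axis=-1)

    all_tracks = np.arange(len(tracks))
    # Tier 1: every track competes for the high-reliability detections.
    m1, free_t, free_h = match(dist(all_tracks, high), max_dist)
    matches = [(int(all_tracks[t]), int(high[d])) for t, d in m1]
    free_tracks = all_tracks[free_t]

    # Tier 2: only the leftover tracks may take low-reliability detections.
    m2, free_t2, free_l = match(dist(free_tracks, low), max_dist)
    matches += [(int(free_tracks[t]), int(low[d])) for t, d in m2]

    unmatched_tracks = [int(free_tracks[t]) for t in free_t2]
    unmatched_dets = sorted([int(high[d]) for d in free_h] + [int(low[d]) for d in free_l])
    return sorted(matches), unmatched_tracks, unmatched_dets
```

For example, with tracks at x = 0, 10, and 20 and detections at x = 0.3 (score 0.9), 10.5 (score 0.3), and 40 (score 0.8), the confident detection near x = 0 is matched in the first tier, the low-score detection near x = 10 is recovered in the second tier, and the track at x = 20 and the distant detection at x = 40 stay unmatched. Because the second tier only sees tracks left over from the first, a low-score detection cannot take a track away from a confident one.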

2. Related Work

2.1. 2D MOT

2.2. Single-Modality 3D MOT

2.3. Multimodality 3D MOT

3. Methods

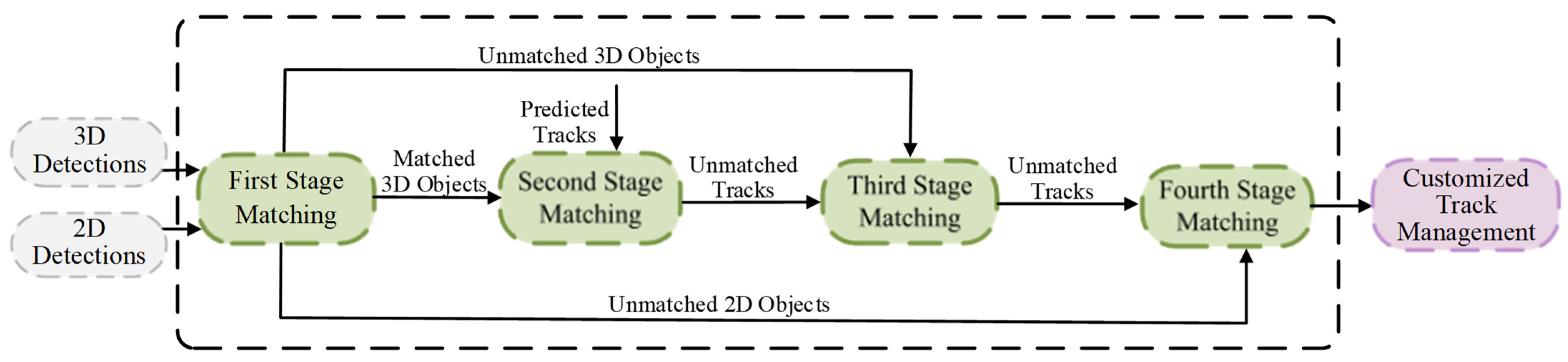

3.1. Overall Framework

3.2. Hierarchical Matching Module

3.2.1. First Stage of Matching

3.2.2. Second Stage of Matching

3.2.3. Third Stage of Matching

3.2.4. Fourth Stage of Matching

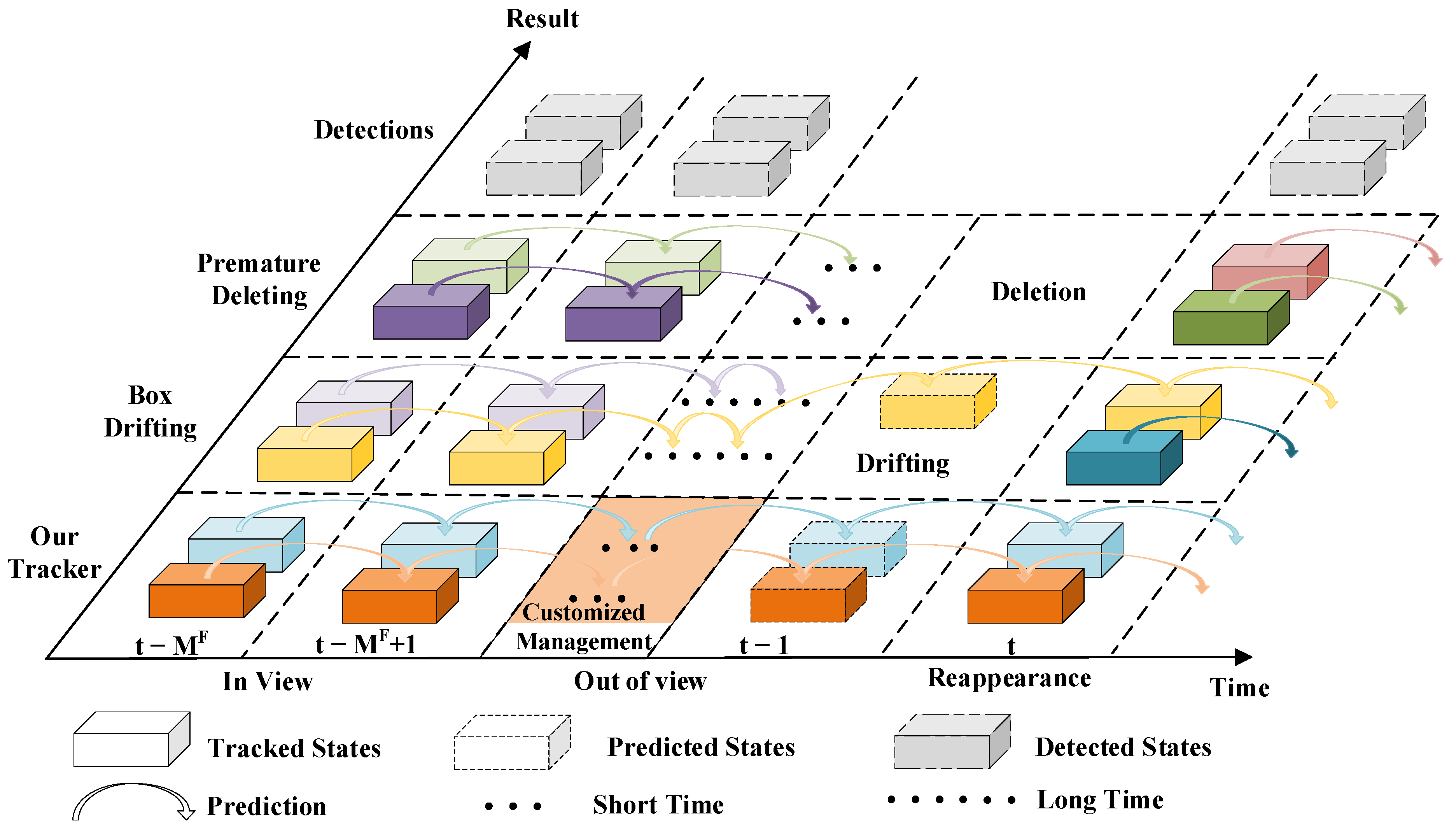

3.3. Customized Track Management Module

4. Experiments

4.1. Dataset

4.2. Evaluation Metrics
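The tables report HOTA, MOTA, AMOTA, and sAMOTA. The body of this subsection is not present here, so the definitions below are the standard ones from the cited metric papers (CLEAR MOT [47], HOTA [46]) and from the AB3DMOT evaluation protocol [7], restated for reference rather than taken from this article. FN, FP, and IDSW are false negatives, false positives, and identity switches summed over a sequence, GT is the number of ground-truth objects, and r ranges over L evenly spaced recall thresholds; quantities with subscript r are evaluated at the detection-score threshold that yields recall r.

$$
\begin{aligned}
\mathrm{MOTA} &= 1 - \frac{\mathrm{FN} + \mathrm{FP} + \mathrm{IDSW}}{\mathrm{GT}},\\
\mathrm{sMOTA}_r &= \max\left(0,\; 1 - \frac{\mathrm{IDSW}_r + \mathrm{FP}_r + \mathrm{FN}_r - (1 - r)\,\mathrm{GT}}{r\,\mathrm{GT}}\right),\\
\mathrm{AMOTA} &= \frac{1}{L} \sum_{r \in \{\frac{1}{L}, \frac{2}{L}, \ldots, 1\}} \mathrm{MOTA}_r,
\qquad
\mathrm{sAMOTA} = \frac{1}{L} \sum_{r \in \{\frac{1}{L}, \frac{2}{L}, \ldots, 1\}} \mathrm{sMOTA}_r,\\
\mathrm{HOTA} &= \frac{1}{19} \sum_{\alpha \in \{0.05,\, 0.10,\, \ldots,\, 0.95\}} \sqrt{\mathrm{DetA}_\alpha \cdot \mathrm{AssA}_\alpha}.
\end{aligned}
$$

At recall r at least (1 − r)·GT objects are necessarily missed, so MOTA_r cannot exceed r and AMOTA is bounded by roughly 50%; the (1 − r)·GT correction in sMOTA_r removes that unavoidable term, which is why sAMOTA values close to 100% appear alongside AMOTA values near 50% in the tables.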

4.3. Implementation Details

4.4. Comparison with the State-of-the-Art Methods

4.4.1. Quantitative Comparison

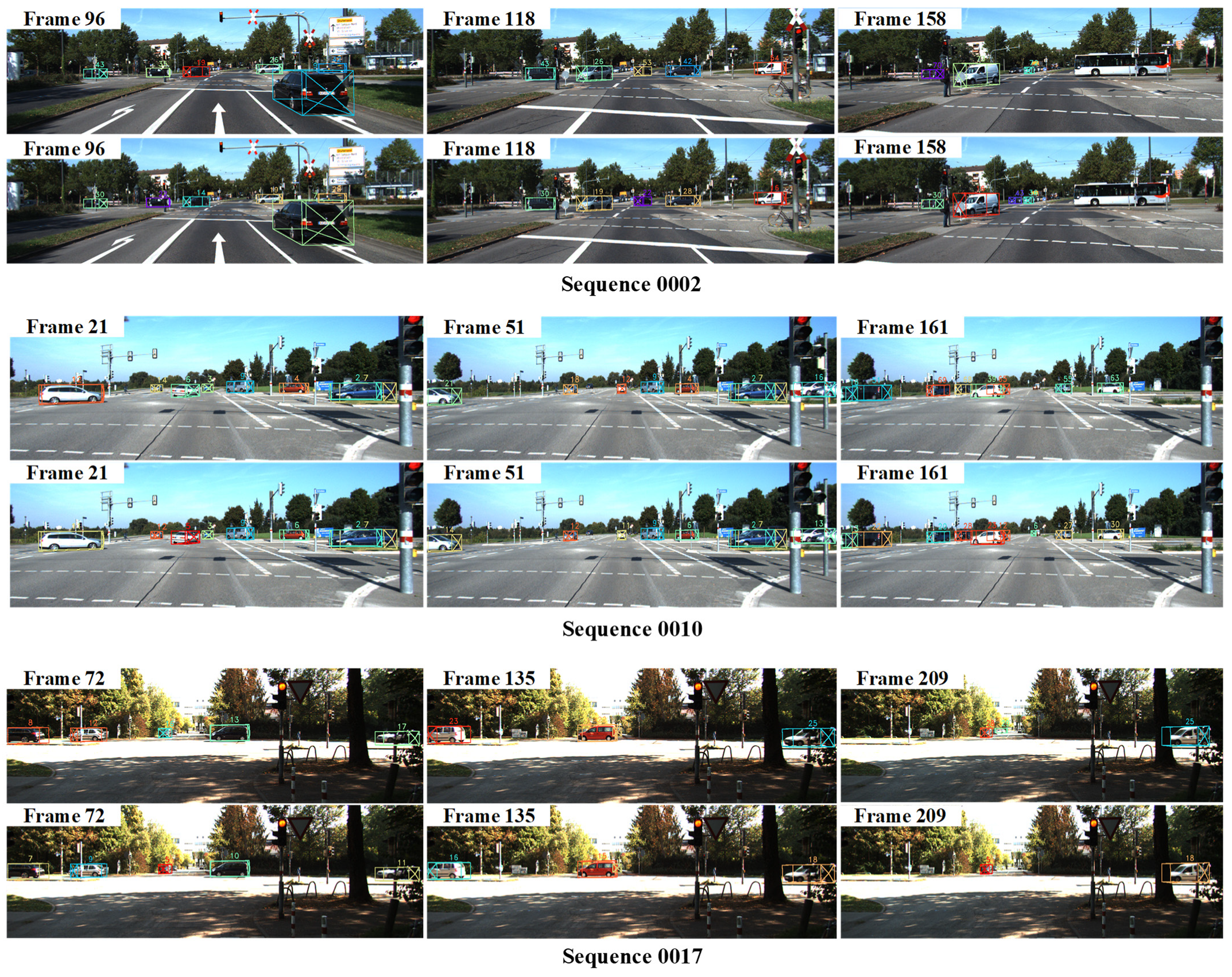

4.4.2. Qualitative Comparison

4.5. Ablation Experiments

4.5.1. Component-Wise Analysis

4.5.2. Hierarchical Matching Module

4.5.3. Track Management Module

4.6. Exploration Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Nie, J.; He, Z.; Yang, Y.; Gao, M.; Dong, Z. Learning Localization-aware Target Confidence for Siamese Visual Tracking. IEEE Trans. Multimed. 2022; early access.

2. Nie, J.; Wu, H.; He, Z.; Gao, M.; Dong, Z. Spreading Fine-grained Prior Knowledge for Accurate Tracking. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6186–6199.

3. Wojke, N.; Bewley, A.; Paulus, D. Simple Online and Realtime Tracking with a Deep Association Metric. In Proceedings of the IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 3645–3649.

4. Bae, S.-H.; Yoon, K.-J. Confidence-based data association and discriminative deep appearance learning for robust online multi-object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 595–610.

5. Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple Online and Realtime Tracking. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

6. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3D point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4338–4364.

7. Weng, X.; Wang, J.; Held, D.; Kitani, K. 3D Multi-Object Tracking: A Baseline and New Evaluation Metrics. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; pp. 10359–10366.

8. Pöschmann, J.; Pfeifer, T.; Protzel, P. Factor Graph Based 3D Multi-Object Tracking in Point Clouds. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; pp. 10343–10350.

9. Hu, H.-N.; Yang, Y.-H.; Fischer, T.; Darrell, T.; Yu, F.; Sun, M. Monocular quasi-dense 3D object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2022; early access.

10. Luo, C.; Yang, X.; Yuille, A. Exploring Simple 3D Multi-Object Tracking for Autonomous Driving. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10488–10497.

11. Huang, K.; Hao, Q. Joint Multi-Object Detection and Tracking with Camera-LiDAR Fusion for Autonomous Driving. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Prague, Czech Republic, 28–30 September 2021; pp. 6983–6989.

12. Shenoi, A.; Patel, M.; Gwak, J.; Goebel, P.; Sadeghian, A.; Rezatofighi, H.; Martín-Martín, R.; Savarese, S. JRMOT: A Real-Time 3D Multi-Object Tracker and a New Large-Scale Dataset. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; pp. 10335–10342.

13. Chiu, H.-k.; Li, J.; Ambruş, R.; Bohg, J. Probabilistic 3D Multi-Modal, Multi-Object Tracking for Autonomous Driving. In Proceedings of the IEEE International Conference on Robotics and Automation, Xi’an, China, 30 May–5 June 2021; pp. 14227–14233.

14. Gautam, S.; Meyer, G.P.; Vallespi-Gonzalez, C.; Becker, B.C. SDVTracker: Real-Time Multi-Sensor Association and Tracking for Self-Driving Vehicles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3012–3021.

15. Zhang, W.; Zhou, H.; Sun, S.; Wang, Z.; Shi, J.; Loy, C.C. Robust Multi-Modality Multi-Object Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 2365–2374.

16. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

17. Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. FairMOT: On the Fairness of Detection and Re-Identification in Multiple Object Tracking. Int. J. Comput. Vis. 2021, 129, 3069–3087.

18. Wu, H.; Nie, J.; He, Z.; Zhu, Z.; Gao, M. One-Shot Multiple Object Tracking in UAV Videos Using Task-Specific Fine-Grained Features. Remote Sens. 2022, 14, 3853.

19. Wu, H.; Nie, J.; Zhu, Z.; He, Z.; Gao, M. Leveraging temporal-aware fine-grained features for robust multiple object tracking. J. Supercomput. 2022; early access.

20. Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Fluids Eng. 1960, 82, 35–45.

21. Wu, J.; Cao, J.; Song, L.; Wang, Y.; Yang, M.; Yuan, J. Track to Detect and Segment: An Online Multi-Object Tracker. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12352–12361.

22. Pang, B.; Li, Y.; Zhang, Y.; Li, M.; Lu, C. TubeTK: Adopting Tubes to Track Multi-Object in a One-Step Training Model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6308–6318.

23. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection from Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

24. Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection from Point Cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

25. Liu, Z.; Tang, H.; Amini, A.; Yang, X.; Mao, H.; Rus, D.; Han, S. BEVFusion: Multi-Task Multi-Sensor Fusion with Unified Bird’s-Eye View Representation. arXiv 2022, arXiv:2205.13542.

26. Kuhn, H.W. The Hungarian Method for the assignment problem. Nav. Res. Logist. 2005, 52, 7–21.

27. Chiu, H.-k.; Prioletti, A.; Li, J.; Bohg, J. Probabilistic 3D multi-object tracking for autonomous driving. arXiv 2020, arXiv:2001.05673.

28. Mark, H.L.; Tunnell, D. Qualitative near-infrared reflectance analysis using Mahalanobis distances. Anal. Chem. 1985, 57, 1449–1456.

29. Zhai, G.; Kong, X.; Cui, J.; Liu, Y.; Yang, Z. FlowMOT: 3D multi-object tracking by scene flow association. arXiv 2020, arXiv:2012.07541.

30. Zhou, X.; Koltun, V.; Krähenbühl, P. Tracking Objects as Points. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 474–490.

31. Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3D Object Detection and Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11784–11793.

32. Wu, H.; Han, W.; Wen, C.; Li, X.; Wang, C. 3D multi-object tracking in point clouds based on prediction confidence-guided data association. IEEE Trans. Intell. Transp. Syst. 2021, 23, 5668–5677.

33. Kim, A.; Brasó, G.; Ošep, A.; Leal-Taixé, L. PolarMOT: How Far Can Geometric Relations Take Us in 3D Multi-Object Tracking? arXiv 2022, arXiv:2208.01957.

34. Scheel, A.; Knill, C.; Reuter, S.; Dietmayer, K. Multi-Sensor Multi-Object Tracking of Vehicles using High-Resolution Radars. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 558–565.

35. Scheel, A.; Dietmayer, K. Tracking multiple vehicles using a variational radar model. IEEE Trans. Intell. Transp. Syst. 2018, 20, 3721–3736.

36. Zhang, L.; Mao, D.; Niu, J.; Wu, Q.J.; Ji, Y. Continuous tracking of targets for stereoscopic HFSWR based on IMM filtering combined with ELM. Remote Sens. 2020, 12, 272.

37. Marinello, N.; Proesmans, M.; Van Gool, L. TripletTrack: 3D Object Tracking Using Triplet Embeddings and LSTM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, New Orleans, LA, USA, 19–20 June 2022; pp. 4500–4510.

38. Weng, X.; Wang, Y.; Man, Y.; Kitani, K.M. GNN3DMOT: Graph Neural Network for 3D Multi-Object Tracking with 2D-3D Multi-Feature Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6499–6508.

39. Zeng, Y.; Ma, C.; Zhu, M.; Fan, Z.; Yang, X. Cross-Modal 3D Object Detection and Tracking for Auto-Driving. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Prague, Czech Republic, 27 September–1 October 2021; pp. 3850–3857.

40. Koh, J.; Kim, J.; Yoo, J.H.; Kim, Y.; Kum, D.; Choi, J.W. Joint 3D Object Detection and Tracking Using Spatio-Temporal Representation of Camera Image and Lidar Point Clouds. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 22 February–1 March 2022; pp. 1210–1218.

41. Nabati, R.; Harris, L.; Qi, H. CFTrack: Center-based Radar and Camera Fusion for 3D Multi-Object Tracking. In Proceedings of the IEEE Intelligent Vehicles Symposium Workshops, Nagoya, Japan, 11–17 July 2021; pp. 243–248.

42. Kim, A.; Ošep, A.; Leal-Taixé, L. EagerMOT: 3D Multi-Object Tracking via Sensor Fusion. In Proceedings of the IEEE International Conference on Robotics and Automation, Xi’an, China, 30 May–5 June 2021; pp. 11315–11321.

43. Wang, X.; Fu, C.; Li, Z.; Lai, Y.; He, J. DeepFusionMOT: A 3D Multi-Object Tracking Framework Based on Camera-LiDAR Fusion with Deep Association. IEEE Robot. Autom. Lett. 2022, 7, 8260–8267.

44. Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-object tracking by associating every detection box. arXiv 2021, arXiv:2110.06864.

45. Pirsiavash, H.; Ramanan, D.; Fowlkes, C.C. Globally-optimal Greedy Algorithms for Tracking a Variable Number of Objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 21–23 June 2011; pp. 1201–1208.

46. Luiten, J.; Osep, A.; Dendorfer, P. HOTA: A higher order metric for evaluating multi-object tracking. Int. J. Comput. Vis. 2021, 129, 548–578.

47. Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP J. Image Video Process. 2008, 1–10.

48. Shi, W.; Rajkumar, R. Point-GNN: Graph Neural Network for 3D Object Detection in a Point Cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1711–1719.

49. Ren, J.; Chen, X.; Liu, J.; Sun, W.; Pang, J.; Yan, Q.; Tai, Y.-W.; Xu, L. Accurate Single Stage Detector using Recurrent Rolling Convolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5420–5428.

50. Sharma, S.; Ansari, J.A.; Murthy, J.K.; Krishna, K.M. Beyond Pixels: Leveraging Geometry and Shape Cues for Online Multi-Object Tracking. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–26 May 2018; pp. 3508–3515.

51. Hu, H.-N.; Cai, Q.-Z.; Wang, D.; Lin, J.; Sun, M.; Krahenbuhl, P.; Darrell, T.; Yu, F. Joint Monocular 3D Vehicle Detection and Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 5390–5399.

52. Luiten, J.; Fischer, T.; Leibe, B. Track to reconstruct and reconstruct to track. IEEE Robot. Autom. Lett. 2020, 5, 1803–1810.

53. Pang, J.; Qiu, L.; Li, X.; Chen, H.; Li, Q.; Darrell, T.; Yu, F. Quasi-Dense Similarity Learning for Multiple Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 164–173.

54. Wang, G.; Gu, R.; Liu, Z.; Hu, W.; Song, M.; Hwang, J.-N. Track without Appearance: Learn Box and Tracklet Embedding with Local and Global Motion Patterns for Vehicle Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 9876–9886.

55. Pang, Z.; Li, Z.; Wang, N. SimpleTrack: Understanding and Rethinking 3D Multi-object Tracking. arXiv 2021, arXiv:2111.09621.

56. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

| Method | Publication | Input | HOTA (%) | AssA (%) | MOTA (%) | IDSW | FPS |
|---|---|---|---|---|---|---|---|
| BeyondPixels [50] | ICRA’18 | 2D + 3D | 63.75 | 56.40 | 82.68 | 934 | 3.3 |
| mmMOT [15] | ICCV’19 | 2D + 3D | 62.05 | 54.02 | 83.23 | 733 | 50 |
| mono3DT [51] | ICCV’19 | 2D | 73.16 | 74.18 | 84.28 | 379 | 33.3 |
| AB3DMOT [7] | IROS’20 | 3D | 69.99 | 69.33 | 83.61 | 113 | 212 |
| MOTSFusion [52] # | RA-L’20 | 2D + 3D | 68.74 | 66.16 | 84.24 | 415 | 2.3 |
| JRMOT [12] | IROS’20 | 2D + 3D | 69.61 | 66.89 | 76.95 | 271 | 20 |
| CenterTrack [30] | ECCV’20 | 2D | 73.02 | 71.20 | 88.83 | 254 | 22.2 |
| Quasi-Dense [53] | CVPR’21 | 2D | 68.45 | 65.49 | 84.93 | 313 | 14.3 |
| LGM [54] | ICCV’21 | 2D | 73.14 | 72.31 | 87.60 | 448 | 12.5 |
| JMODT [11] | IROS’21 | 2D + 3D | 70.73 | 68.76 | 85.35 | 350 | 100 |
| EagerMOT [42] # | ICRA’21 | 2D + 3D | 74.39 | 74.16 | 87.82 | 239 | 90 |
| TripletTrack [37] | CVPRW’22 | 2D | 73.58 | 74.66 | 84.32 | 322 | - |
| QD-3DT [9] | TPAMI’22 | 2D | 72.77 | 72.19 | 85.94 | 206 | 45 |
| PolarMOT [33] # | ECCV’22 | 3D | 75.16 | 76.95 | 85.0 | 462 | 170 |
| DeepFusionMOT [43] # | RA-L’22 | 2D + 3D | 75.46 | 80.06 | 84.64 | 84 | 110 |
| DetecTrack [40] | AAAI’22 | 2D + 3D | 73.54 | 75.25 | 85.52 | - | 27 |
| MSA-MOT | Ours | 2D + 3D | 78.52 | 82.56 | 88.01 | 91 | 130 |

| Method | Publication | Modality | sAMOTA (%) | AMOTA (%) | MOTA (%) | IDS |
|---|---|---|---|---|---|---|
| FANTrack | IV’19 | 3D + 2D | 82.97 | 40.03 | 74.30 | 35 |
| mmMOT | ICCV’19 | 3D + 2D | 70.61 | 33.08 | 74.07 | 10 |
| AB3DMOT | IROS’20 | 3D | 93.28 | 45.43 | 86.24 | 0 |
| GNN3DMOT | CVPR’20 | 3D + 2D | 93.68 | 45.27 | 84.70 | 0 |
| EagerMOT # | ICRA’21 | 3D + 2D | 94.94 | 48.84 | 96.61 | 2 |
| PC-TCNN | IJCAI’21 | 3D | 95.44 | 47.64 | - | 1 |
| PolarMOT # | ECCV’22 | 3D | 94.32 | - | 93.93 | 31 |
| DetecTrack | AAAI’22 | 3D + 2D | 96.49 | 48.87 | 91.46 | - |
| DeepFusionMOT # | RA-L’22 | 3D + 2D | 91.80 | 44.62 | 91.30 | 1 |
| MSA-MOT | Ours | 3D + 2D | 97.11 | 50.10 | 96.83 | 0 |

| Method | MSM | CTM | HOTA (%) | DetA (%) | AssA (%) | MOTA (%) | IDSW |
|---|---|---|---|---|---|---|---|
| EagerMOT | | | 78.04 | 76.80 | 79.51 | 87.25 | 91 |
| Ours | √ | | 79.03 | 77.39 | 80.90 | 88.23 | 66 |
| | √ | √ | 79.73 | 77.50 | 82.09 | 88.49 | 46 |

| Affinity | HOTA (%), Ours | HOTA (%), Eager | AssA (%), Ours | AssA (%), Eager | MOTA (%), Ours | MOTA (%), Eager | IDSW, Ours | IDSW, Eager |
|---|---|---|---|---|---|---|---|---|
| 3D-IoU | 78.83 | 77.16 | 80.34 | 77.76 | 88.00 | 86.67 | 206 | 234 |
| 3D-GIoU | 79.41 | 77.82 | 81.50 | 79.08 | 88.45 | 87.09 | 95 | 126 |
| Ours | 79.73 | 78.12 | 82.09 | 79.67 | 88.49 | 87.27 | 46 | 79 |
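The affinity ablation contrasts 3D-IoU and 3D-GIoU with the authors' own affinity, whose definition is not recoverable from this text. As background only, the two baseline affinities can be computed as below for the simplified case of axis-aligned boxes. KITTI boxes carry a yaw angle, so a faithful implementation would need rotated-box (polygon) intersection, which this sketch deliberately omits; the `(cx, cy, cz, l, w, h)` box layout and the function name are assumptions.

```python
import numpy as np


def iou_giou_3d_aabb(box_a, box_b):
    """3D IoU and GIoU for two axis-aligned boxes.

    Each box is (cx, cy, cz, l, w, h): centre followed by full extents
    along x, y, z. Yaw is ignored (simplifying assumption).
    Returns (iou, giou) as Python floats.
    """
    a = np.asarray(box_a, dtype=float)
    b = np.asarray(box_b, dtype=float)
    a_min, a_max = a[:3] - a[3:] / 2, a[:3] + a[3:] / 2
    b_min, b_max = b[:3] - b[3:] / 2, b[:3] + b[3:] / 2

    # Overlap along each axis, clipped at zero when the boxes are apart.
    overlap = np.clip(np.minimum(a_max, b_max) - np.maximum(a_min, b_min), 0.0, None)
    inter = np.prod(overlap)
    union = np.prod(a[3:]) + np.prod(b[3:]) - inter
    iou = inter / union

    # Volume of the smallest axis-aligned box that contains both boxes.
    enclose = np.prod(np.maximum(a_max, b_max) - np.minimum(a_min, b_min))
    giou = iou - (enclose - union) / enclose
    return float(iou), float(giou)
```

For two 2 × 2 × 2 boxes whose centres are 4 units apart along x, IoU is 0 while GIoU is -1/3. Unlike IoU, GIoU keeps decreasing as non-overlapping boxes move further apart, so it can still rank candidate track-detection pairs when there is no overlap at all.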

| Frames | HOTA (%) | AssA (%) | MOTA (%) | IDSW |
|---|---|---|---|---|
| 5 | 79.06 | 80.89 | 88.40 | 56 |
| 8 | 79.24 | 81.23 | 88.48 | 50 |
| 11 | 79.73 | 82.09 | 88.49 | 46 |
| 14 | 79.65 | 82.06 | 88.48 | 46 |
| 17 | 79.64 | 82.02 | 88.45 | 46 |

| Method | Criteria | sAMOTA (%), Car | sAMOTA (%), Pedestrian | AMOTA (%), Car | AMOTA (%), Pedestrian | MOTA (%), Car | MOTA (%), Pedestrian |
|---|---|---|---|---|---|---|---|
| AB3DMOT | IoU threshold = 0.25 | 93.28 | 75.85 | 45.43 | 31.04 | 86.24 | 70.90 |
| | IoU threshold = 0.5 | 90.38 | 70.95 | 42.79 | 27.31 | 84.02 | 65.06 |
| | IoU threshold = 0.7 | 69.81 | - | 27.26 | - | 57.06 | - |
| EagerMOT | IoU threshold = 0.25 | 94.94 | 92.95 | 48.84 | 45.96 | 96.61 | 93.14 |
| | IoU threshold = 0.5 | 95.42 | 90.57 | 48.93 | 43.79 | 94.67 | 90.66 |
| | IoU threshold = 0.7 | 85.13 | 64.49 | 39.06 | 21.91 | 84.04 | 64.67 |
| MSA-MOT | IoU threshold = 0.25 | 97.11 | 93.61 | 50.10 | 46.31 | 96.83 | 94.63 |
| | IoU threshold = 0.5 | 96.99 | 91.92 | 49.85 | 44.01 | 94.84 | 91.29 |
| | IoU threshold = 0.7 | 86.85 | 66.77 | 39.90 | 23.58 | 84.16 | 64.60 |

| 3D Detector | sAMOTA (%), Car | MOTA (%), Car | IDS, Car | sAMOTA (%), Bicycle | MOTA (%), Bicycle | IDS, Bicycle |
|---|---|---|---|---|---|---|
| Point-GNN | 97.21 | 96.68 | 0 | 94.11 | 94.47 | 17 |
| PointRCNN | 97.18 | 95.44 | 0 | 81.37 | 81.95 | 2 |
| PV-RCNN | 94.56 | 95.54 | 0 | 94.63 | 95.18 | 6 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Z.; Nie, J.; Wu, H.; He, Z.; Gao, M. MSA-MOT: Multi-Stage Association for 3D Multimodality Multi-Object Tracking. Sensors 2022, 22, 8650. https://doi.org/10.3390/s22228650

Zhu Z, Nie J, Wu H, He Z, Gao M. MSA-MOT: Multi-Stage Association for 3D Multimodality Multi-Object Tracking. Sensors. 2022; 22(22):8650. https://doi.org/10.3390/s22228650

Chicago/Turabian Style: Zhu, Ziming, Jiahao Nie, Han Wu, Zhiwei He, and Mingyu Gao. 2022. "MSA-MOT: Multi-Stage Association for 3D Multimodality Multi-Object Tracking" Sensors 22, no. 22: 8650. https://doi.org/10.3390/s22228650

APA Style: Zhu, Z., Nie, J., Wu, H., He, Z., & Gao, M. (2022). MSA-MOT: Multi-Stage Association for 3D Multimodality Multi-Object Tracking. Sensors, 22(22), 8650. https://doi.org/10.3390/s22228650