Abstract

Unmanned underwater operations using remotely operated vehicles or unmanned surface vehicles have increased in recent years because they ensure human safety and improve work efficiency. Optical cameras and multi-beam sonars are generally used as imaging sensors in underwater environments. However, the obtained underwater images are difficult to understand intuitively, owing to noise and distortion. In this study, we developed an optical and sonar image fusion system that integrates the color and distance information from two different images. The enhanced optical and sonar images were fused using calibrated transformation matrices, and the underwater image quality measure (UIQM) and underwater color image quality evaluation (UCIQE) were used as metrics to evaluate the performance of the proposed system. Compared with the original underwater images, image fusion increased the mean UIQM and UCIQE by 94% and 27%, respectively. The contrast-to-noise ratio of the sonar images increased sixfold after applying the median filter and gamma correction. The fused image in sonar image coordinates showed good qualitative spatial agreement, and the average intersection over union (IoU) between the optical and sonar pixels in the fused images was 75%. The optical-sonar fusion system will help to visualize and understand underwater situations with color and distance information during unmanned operations.

1. Introduction

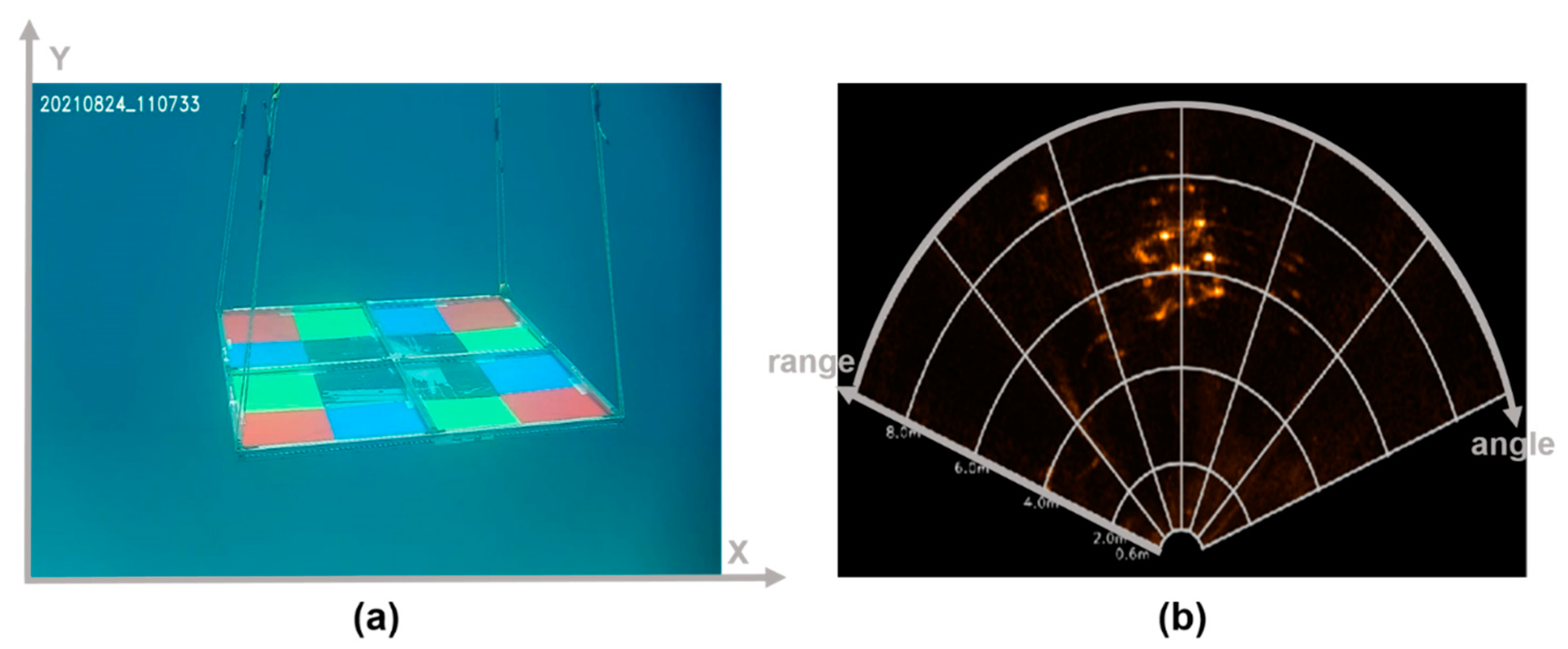

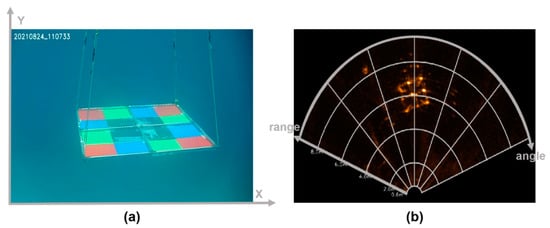

Recently, the need for unmanned vehicles, such as remotely operated vehicles and autonomous underwater vehicles, has increased because they improve safety and efficiency in tasks such as surveying underwater structures and acquiring seabed data. For unmanned vehicles, imaging sensors are essential for visualizing underwater situations, and underwater optical cameras and multi-beam imaging sonars are the most commonly used. As shown in Figure 1, optical and sonar images are expressed in Cartesian and fan-shaped image coordinates, respectively. An optical camera provides an intuitive view of underwater situations using the red, green, and blue (RGB) color components of the measured light. Because the light reaching the camera undergoes physical distortions, such as attenuation and reflection by water and floating particles, underwater optical images suffer from color casting and low visibility [1]. Multi-beam sonars provide images in fan-shaped image coordinates defined by the beam direction (angle, θ) and the distance (range, r), which is calculated from the time of flight of the sonic beam. Sonic beams propagate well through water, but sonar images are difficult to interpret because of their low signal-to-noise ratio [2]. Several studies have been conducted to improve the quality of underwater optical and sonar images; Table 1 summarizes single-image enhancement techniques for both image types.

Figure 1.

Two image coordinate systems, (a) Cartesian and (b) fan-shaped image coordinate systems of optical and multi-beam sonar sensors.

To compensate for wavelength-dependent color casting and low visibility, three representative approaches to single-image enhancement have been reported: conventional image processing-based, image formation model (IFM)-based, and deep learning-based enhancement. Conventional image processing algorithms, such as contrast-limited adaptive histogram equalization (CLAHE), homomorphic filtering, empirical mode decomposition, and multi-step processing techniques, have been applied to underwater images for color compensation, histogram equalization, and boundary enhancement. However, these algorithms do not consider the spatial variation of the degradation over the field of view [3,4,5,6]. The IFM can be simplified as shown in Equation (1), where $I$ is the measured light intensity of a pixel $x$ in the image, $J$ is the restored light intensity, $t$ is the light transmission map, and $A$ is the background light:

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

To restore $J$ from $I$ based on Equation (1), the transmission map $t$ and the background light $A$ must be estimated; both depend on the path of the measured light and on the physical characteristics of the underwater environment. The dark channel prior (DCP) and gradient domain transform restore underwater images using physical prior knowledge of light or a domain transform to estimate the transmission map [7,8,9]. IFM-based enhancement is effective in removing haze from underwater images; however, there is a tradeoff between computational complexity and enhancement performance. Recently, deep learning methods, such as convolutional neural networks (CNNs) and generative adversarial networks (GANs), have been applied to transmission map estimation and white balancing [10,11,12]. The trained generator networks of the underwater GAN (UGAN) and the fast underwater image-enhancement GAN (FUnIE-GAN) map an underwater image to a cleaner image. Although deep learning avoids the uncertainty caused by inaccurate prior knowledge and the computational complexity of accurate IFMs, its performance depends on the construction of robust training datasets.
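To make the inversion of Equation (1) concrete, the following NumPy sketch uses the classic dark channel prior formulation to estimate $t$ and $A$, and then restores $J = (I - A)/\max(t, t_{\min}) + A$. It is an illustration, not the specific method of [7,8,9]: the patch size, ω = 0.95, the lower bound $t_{\min} = 0.1$, and averaging the brightest dark-channel pixels to estimate $A$ are all assumptions. The plain DCP is biased underwater because red light is strongly attenuated, which is the issue the red channel model [7] targets.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Minimum over R, G, B, then over a patch x patch neighbourhood (edge-padded)."""
    if patch % 2 == 0:
        raise ValueError("patch must be odd")
    min_rgb = img.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def estimate_background_light(img, dark, top_fraction=0.001):
    """Assumed estimate of A: mean colour of the pixels with the largest dark-channel values."""
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]
    return img.reshape(-1, 3)[idx].mean(axis=0)


def estimate_transmission(img, A, omega=0.95, patch=15):
    """DCP transmission estimate: t = 1 - omega * dark(I / A)."""
    return 1.0 - omega * dark_channel(img / np.maximum(A, 1e-6), patch)


def restore(img, t, A, t_min=0.1):
    """Invert Eq. (1): J = (I - A) / max(t, t_min) + A, with t broadcast over RGB."""
    t = np.clip(t, t_min, 1.0)[..., None]
    return (img - A) / t + A
```

Clipping $t$ from below prevents division by very small transmissions, which would otherwise amplify noise in distant, hazy regions.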

Single-image enhancement techniques for multi-beam sonar images have been proposed to remove noise and increase image contrast. The quality of sonar images is typically improved using filters or deep learning for noise removal and contrast enhancement. The median filter, which replaces each pixel with the median of the pixel values in its kernel, is conventionally applied to remove random noise from sonar images [13]. Gabor filters improve contrast and reduce noise in underwater sonar images [14]. A new adaptive cultural algorithm (NACA) optimizes the filtering parameters for denoising sonar images [15]. In addition, CNNs are actively applied for noise reduction, crosstalk removal, and resolution enhancement [16,17,18].

There have been some reports on fusion techniques for multiple imaging sensors. Two different datasets measured from acoustic and stereo cameras were fused by extrinsic calibration and feature matching [19,20]. The data measured from two 3D imaging sensors, an acoustic camera and stereo camera, were aligned and fused using point-to-point correspondence [19]. Opti-acoustic stereo imaging is performed by matching the structural features of the edges and specific points in the data measured from multiple sensors [20].

Table 1.

Summary of single-image enhancement techniques for optical and multi-beam sonar images.

| Image Type | Enhancement Method [Reference] | Description |
|---|---|---|
| Optical image | Empirical mode decomposition [3] | Decompose the color spectrum components of underwater images, and improve the images by applying different weights to the color spectrum components |
| | CLAHE-mix [4] | Apply CLAHE to the image in the RGB and HSV color models, and combine the two contrast-enhanced images using the Euclidean norm |
| | Image fusion [5] | Apply three successive steps: white balancing, contrast and edge enhancement, and fusion |
| | CLAHE-HF [6] | Enhance the contrast of underwater images by CLAHE, and reduce noise by homomorphic filtering (HF) |
| | Red channel restoration model [7] | Apply a red channel model, a variation of DCP, to restore the most strongly attenuated red channel of the underwater image |
| | Underwater IFM-based algorithm [8] | Recover the original image using a transmission map determined from the directly transmitted, forward-scattered, and backward-scattered light |
| | DCP and depth transmission map [9] | Fuse the DCP and a depth map, which are derived from the difference between the bright and dark channels and from the wavelength-dependent difference in light absorption, respectively |
| | UGAN [10] | Train an underwater GAN (UGAN) on paired clean and underwater images to learn the differences between them, and generate enhanced underwater images using the trained UGAN |
| | CNNs for estimation of transmission and global ambient light [11] | Train two parallel CNN branches to estimate the blue channel transmission map and the global ambient light |
| | FUnIE-GAN [12] | Train a fast underwater image enhancement GAN (FUnIE-GAN) to learn the global content, color, texture, and style information of underwater images |
| Sonar image | Median filter [13] | Reduce noise in sonar images with a median filter |
| | Gabor filter [14] | Enhance edge signals in sonar images with a Gabor filter |
| | NACA [15] | Apply an adaptive initialization algorithm to obtain a better initial clustering center, and a quantum-inspired shuffled frog leaping algorithm to update cultural individuals |
| | CNN-based autoencoder [16] | Train an autoencoder on 13,650 multi-beam sonar images for resolution enhancement and denoising |
| | GAN-based algorithm [17] | Train a GAN on pairs of high- and low-resolution sonar images for resolution enhancement |
| | YOLO [18] | Train a you only look once (YOLO) network on a sonar image dataset with crosstalk noise, and then remove the detected crosstalk noise |

In this study, we developed an underwater optical-sonar fusion system that simultaneously records optical and multi-beam sonar images, enhances both images, and then fuses the RGB color of the enhanced optical image with the distance information of the enhanced sonar image. For optical image enhancement, we chose the image fusion method based on reported qualitative and quantitative comparisons of single-image enhancement techniques [21,22]. For sonar image enhancement, the median filter and gamma correction were applied to reduce noise and enhance contrast, because they are conventionally used on sonar images [23]. For image fusion, we performed geometric calibration with an RGB phantom and estimated the transformation matrices between the optical and sonar image coordinates.

2. Materials and Methods

2.1. Underwater Optical-Sonar Fusion System

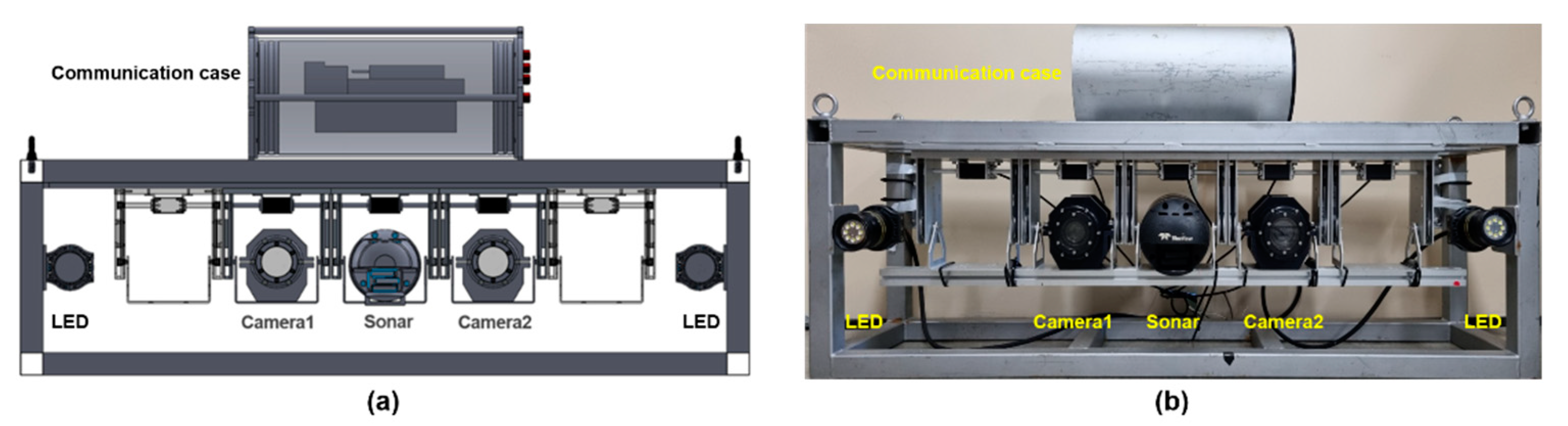

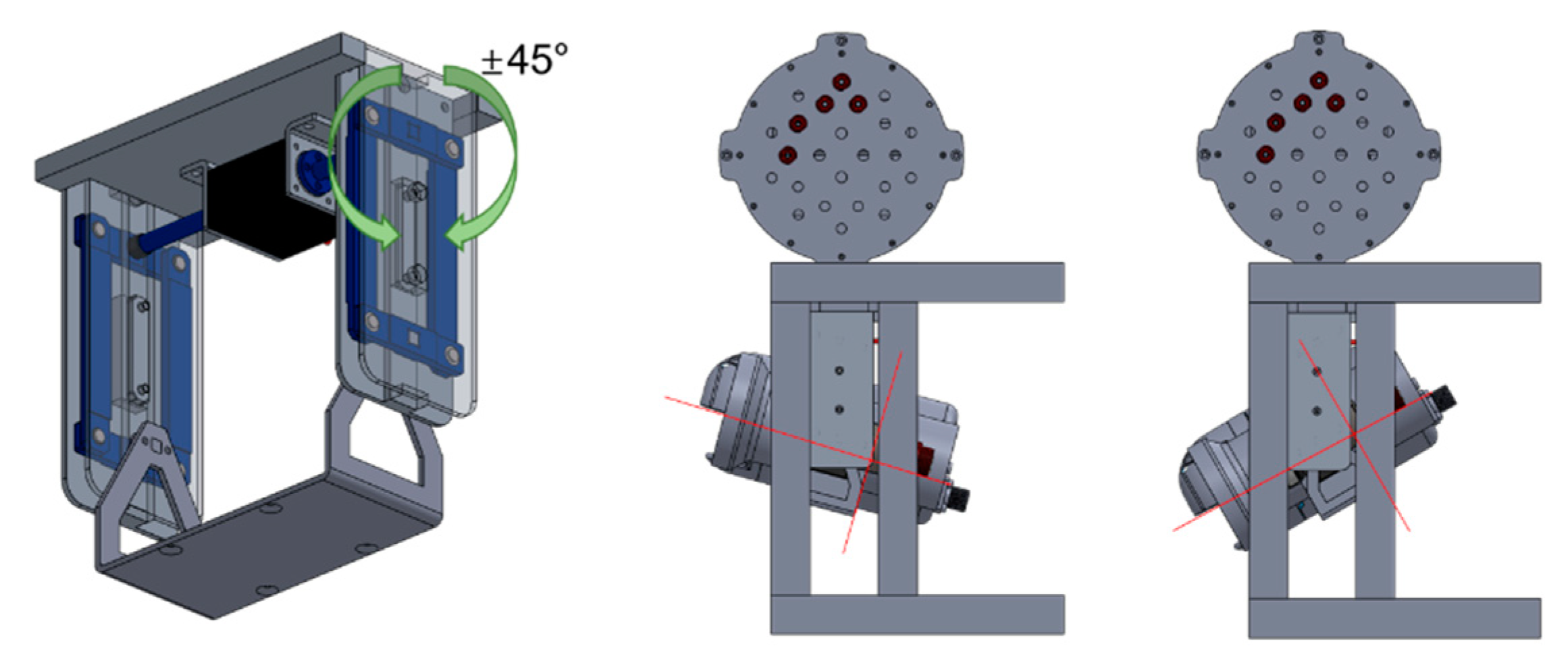

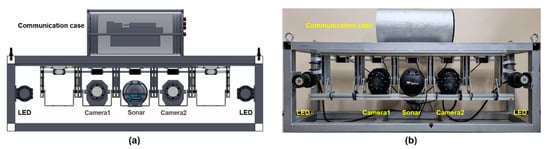

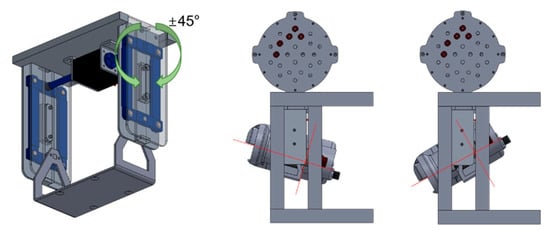

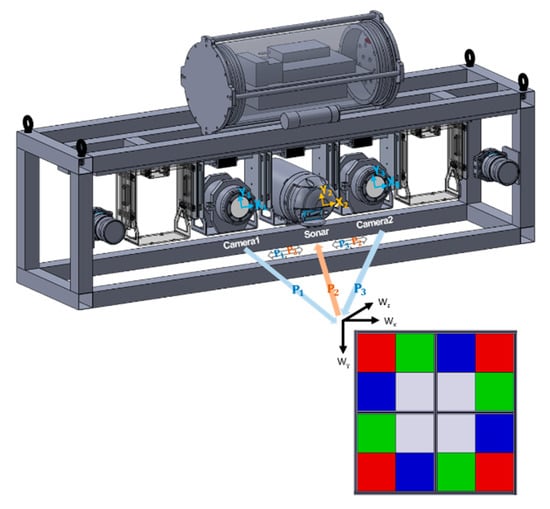

We developed an optical-sonar fusion system comprising two underwater cameras (Otaq, Eagle IPZ/4000, Lancaster, UK), a multi-beam sonar (Teledyne Marine, BlueView M900-2250, Daytona Beach, FL, USA), two light-emitting diode (LED) lights (DeepSea Power & Light, LED SeaLite, San Diego, CA, USA), and a communication box, as shown in Figure 2. The communication box is a watertight aluminum case with power and data communication cables. Table 2 summarizes the specifications of the imaging sensors and LED lights. Each imaging sensor was attached to a movable bracket equipped with a servo motor (Cehai Tech, D30, Qingdao, China) that tilts the sensor. As shown in Figure 3, the bracket allows ±45° tilting, driven by a servo motor with up to 30 kgf·cm of torque and a 270° rotation range. The optical-sonar fusion system weighs approximately 80 kg in air and 30 kg in water.

Figure 2.

Optical-sonar fusion system, (a) schematic design and (b) real hardware comprising two underwater cameras and one multi-beam sonar.

Table 2.

Specifications of two imaging sensors and the light in the optical-sonar fusion system.

Figure 3.

Schematic of the bracket equipped with a servo motor for tilting imaging sensors.

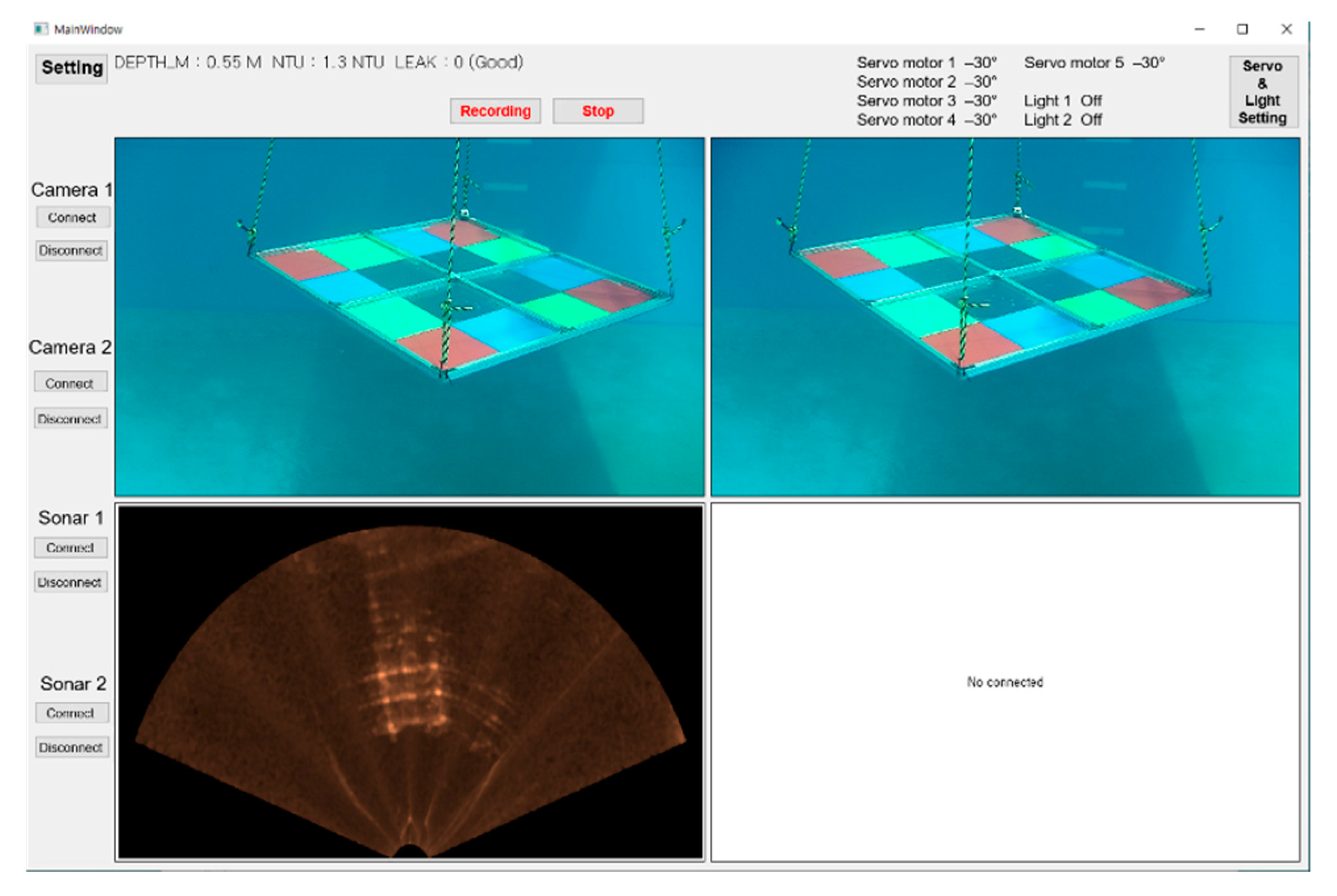

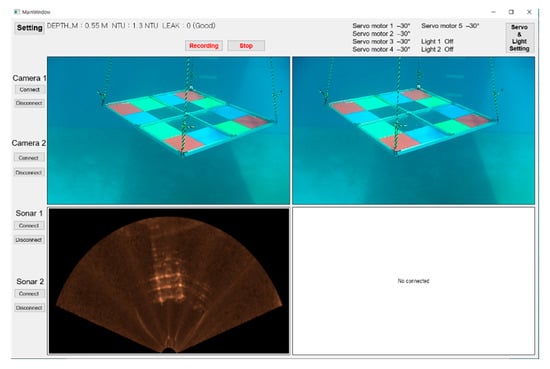

Figure 4 shows the graphical user interface (GUI) software, which supports real-time visualization and simultaneous acquisition of optical and sonar images, switching the lights on and off, single-image enhancement, and optical-sonar fusion.

Figure 4.

Software to control the display and acquisition of optical and sonar images, light operation, and enhancing and fusing both images.

2.2. Enhancement of Underwater Optical and Sonar Images

In this study, we applied image fusion, which comprises three successive image processing steps (white balancing; contrast and sharpness enhancement; and fusion of the two enhanced images), to improve the color tone and sharpness of the underwater optical images [5]. The first step, white balancing, compensates the red and green channel values of each pixel via Equations (2) and (3) to reduce the differences among the average values of the R, G, and B channels. The weights for the red and green compensation were experimentally determined in the ranges of 1.8 to 2.3 and 1.3 to 1.8, respectively.
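Equations (2) and (3) are not reproduced here. As a hedged illustration, the sketch below uses the red-channel compensation rule of Ancuti et al. [5], $I_{rc} = I_r + \alpha(\bar{I}_g - \bar{I}_r)(1 - I_r)I_g$, and mirrors it for the green channel using the blue channel. That green-channel form, the function name, and the default weights α = 2.0 and β = 1.5 (picked from the ranges above) are assumptions, not the authors' exact equations.

```python
import numpy as np


def compensate_red_green(img: np.ndarray, alpha: float = 2.0, beta: float = 1.5) -> np.ndarray:
    """Hypothetical red/green compensation for a float RGB image in [0, 1], shape (H, W, 3)."""
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    r_mean, g_mean, b_mean = r.mean(), g.mean(), b.mean()
    # Red compensation in the form of Ancuti et al. [5]: boosts red where green is strong.
    r_c = r + alpha * (g_mean - r_mean) * (1.0 - r) * g
    # Green compensation: assumed analogue that pulls green towards the blue mean.
    g_c = g + beta * (b_mean - g_mean) * (1.0 - g) * b
    return np.clip(np.stack([r_c, g_c, b], axis=-1), 0.0, 1.0)
```

The $(1 - I_r)$ factor limits the correction for pixels whose red value is already high, so bright regions are not over-compensated.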

In the second step, CLAHE and the unsharp masking principle (UMP) were adopted to enhance the contrast and sharpness, respectively, of the white-balanced image. CLAHE performs contrast-limited histogram equalization on multiple sub-patches (tiles) of an image and combines the equalized tiles. In Equation (4), UMP sharpens the white-balanced image by adding a weighted difference between the image and its Gaussian-filtered version.
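The following sketch implements a standard unsharp mask, $S = I + \lambda(I - G_\sigma * I)$, with a separable Gaussian blur written in NumPy. The σ and λ (`amount`) values are hypothetical, and Ancuti et al. [5] use a normalized variant of the difference term, so this illustrates the principle rather than reproducing Equation (4). CLAHE is omitted; in practice it is usually taken from a library such as OpenCV (`cv2.createCLAHE`).

```python
import numpy as np


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian kernel with radius ~3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array with reflected borders (output keeps the input shape)."""
    k = gaussian_kernel1d(sigma)
    pad = len(k) // 2
    p = np.pad(channel, pad, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, p, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def unsharp_mask(img: np.ndarray, sigma: float = 2.0, amount: float = 1.0) -> np.ndarray:
    """S = I + amount * (I - G_sigma * I), per channel, clipped to [0, 1]."""
    blurred = np.stack([gaussian_blur(img[..., c], sigma) for c in range(img.shape[2])], axis=-1)
    return np.clip(img + amount * (img - blurred), 0.0, 1.0)
```

Flat regions are left unchanged because the blurred image equals the original there; only edges, where the difference term is nonzero, are amplified.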

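Finally, the enhanced image $I_F$ was obtained in Equation (5) as the pixel-wise weighted sum of the CLAHE-enhanced image $I_{\text{CLAHE}}$ and the UMP-sharpened image $I_{\text{UMP}}$. The weights $W_1$ and $W_2$ are determined from the normalized Laplacian contrast and saturation factors of each input:

$$I_F = W_1\,I_{\text{CLAHE}} + W_2\,I_{\text{UMP}} \tag{5}$$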
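A simplified, single-scale version of this weighted sum is sketched below. Each input gets a weight map equal to its absolute Laplacian (contrast) plus its per-pixel saturation, and the maps are normalized so that they sum to one at every pixel. Using the RGB mean as luminance, the 4-neighbour Laplacian, and the function names are assumptions; Ancuti et al. [5] also use a saliency weight and blend with Laplacian pyramids to avoid halo artifacts, which this sketch omits.

```python
import numpy as np


def laplacian_contrast(img: np.ndarray) -> np.ndarray:
    """|4-neighbour Laplacian| of the luminance (here: mean of R, G, B)."""
    lum = img.mean(axis=2)
    p = np.pad(lum, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * p[1:-1, 1:-1]
    return np.abs(lap)


def saturation_weight(img: np.ndarray) -> np.ndarray:
    """Per-pixel standard deviation of R, G, B around the luminance."""
    lum = img.mean(axis=2, keepdims=True)
    return np.sqrt(((img - lum) ** 2).mean(axis=2))


def fuse_single_scale(inputs, eps=1e-6):
    """Pixel-wise weighted sum of the inputs with weights normalised to 1 at every pixel."""
    raw = [laplacian_contrast(x) + saturation_weight(x) + eps for x in inputs]
    total = np.sum(raw, axis=0)
    weights = [w / total for w in raw]
    fused = sum(w[..., None] * x for w, x in zip(weights, inputs))
    return fused, weights
```

Because the normalized weights are convex at each pixel, the fused value always lies between the input values; for example, `fuse_single_scale([clahe_img, ump_img])` with two hypothetical arrays returns the fused image and both weight maps.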

To reduce noise and enhance the contrast of the multi-beam sonar images, we applied a median filter and gamma correction. The median filter selects the median of the pixel values in a 5 × 5 patch and replaces the center pixel of the patch with that value, so pixel values that are much higher or lower than those of their neighbors are removed. Gamma correction, Equation (6), maps each input sonar pixel value $I_{\text{in}}$, normalized to [0, 1], to an output value $I_{\text{out}}$ through a nonlinear exponent $\gamma$, which was set to 0.2 in this study:

$$I_{\text{out}} = I_{\text{in}}^{\,\gamma} \tag{6}$$
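The two sonar steps can be sketched in NumPy as follows, assuming an 8-bit sonar image that is normalized to [0, 1] before gamma correction; the edge padding at the borders and the function names are assumptions. With γ = 0.2 < 1, dark pixels are brightened strongly (for example, 0.5 maps to 0.5^0.2 ≈ 0.87), which raises weak echoes relative to the zero-valued background.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(img: np.ndarray, size: int = 5) -> np.ndarray:
    """Replace each pixel with the median of its size x size neighbourhood (edge-padded)."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    return np.median(sliding_window_view(padded, (size, size)), axis=(-2, -1))


def gamma_correct(img: np.ndarray, gamma: float = 0.2) -> np.ndarray:
    """I_out = I_in ** gamma for intensities normalised to [0, 1]."""
    return np.power(np.clip(img, 0.0, 1.0), gamma)


def enhance_sonar(raw_uint8: np.ndarray, size: int = 5, gamma: float = 0.2) -> np.ndarray:
    """Median filtering followed by gamma correction, as in the sonar enhancement step."""
    x = raw_uint8.astype(np.float64) / 255.0
    return gamma_correct(median_filter(x, size), gamma)
```

Filtering before gamma correction matters: applying γ = 0.2 first would also brighten isolated noise pixels before the median filter could suppress them.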

2.3. Calibration and Fusion of Underwater Optical-Sonar Fusion System

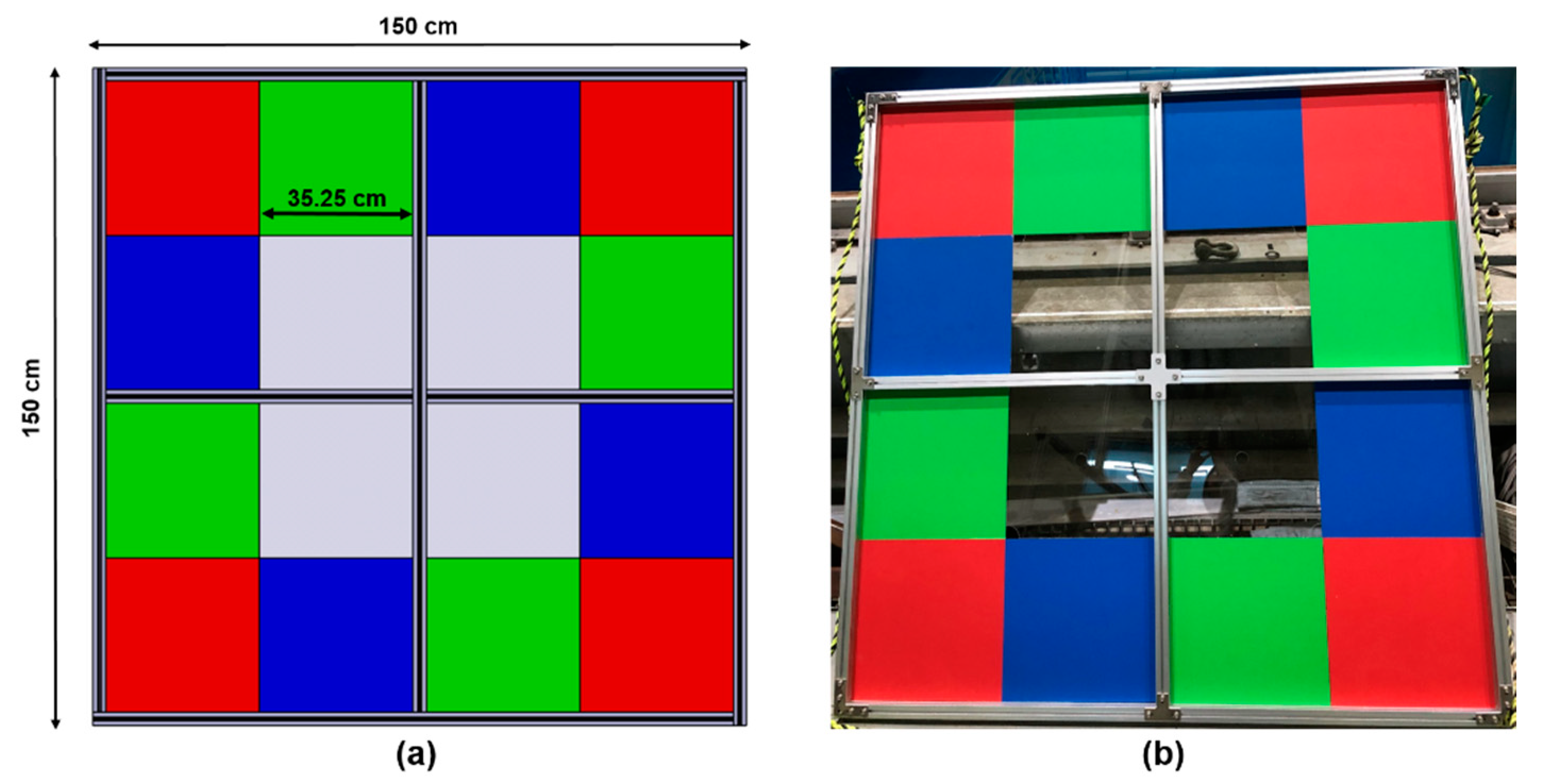

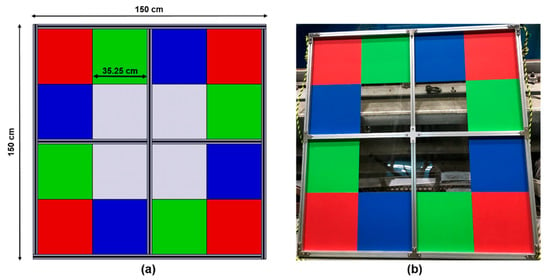

To fuse images expressed in two different coordinate systems, the two imaging sensors must first be calibrated geometrically. For geometric calibration, we designed an RGB phantom that can be captured by both the optical and sonar sensors, as shown in Figure 5. The RGB phantom is 1.5 m × 1.5 m (width × height), and colored (RGB) aluminum plates and transparent acrylic plates are arranged to form various color and shape patterns. Each plate is 35.25 cm × 35.25 cm, and the RGB phantom weighs 40 kg in air.

Figure 5.

Calibration phantom, (a) schematic and (b) real RGB phantom for geometric calibration of underwater optical-sonar fusion system.

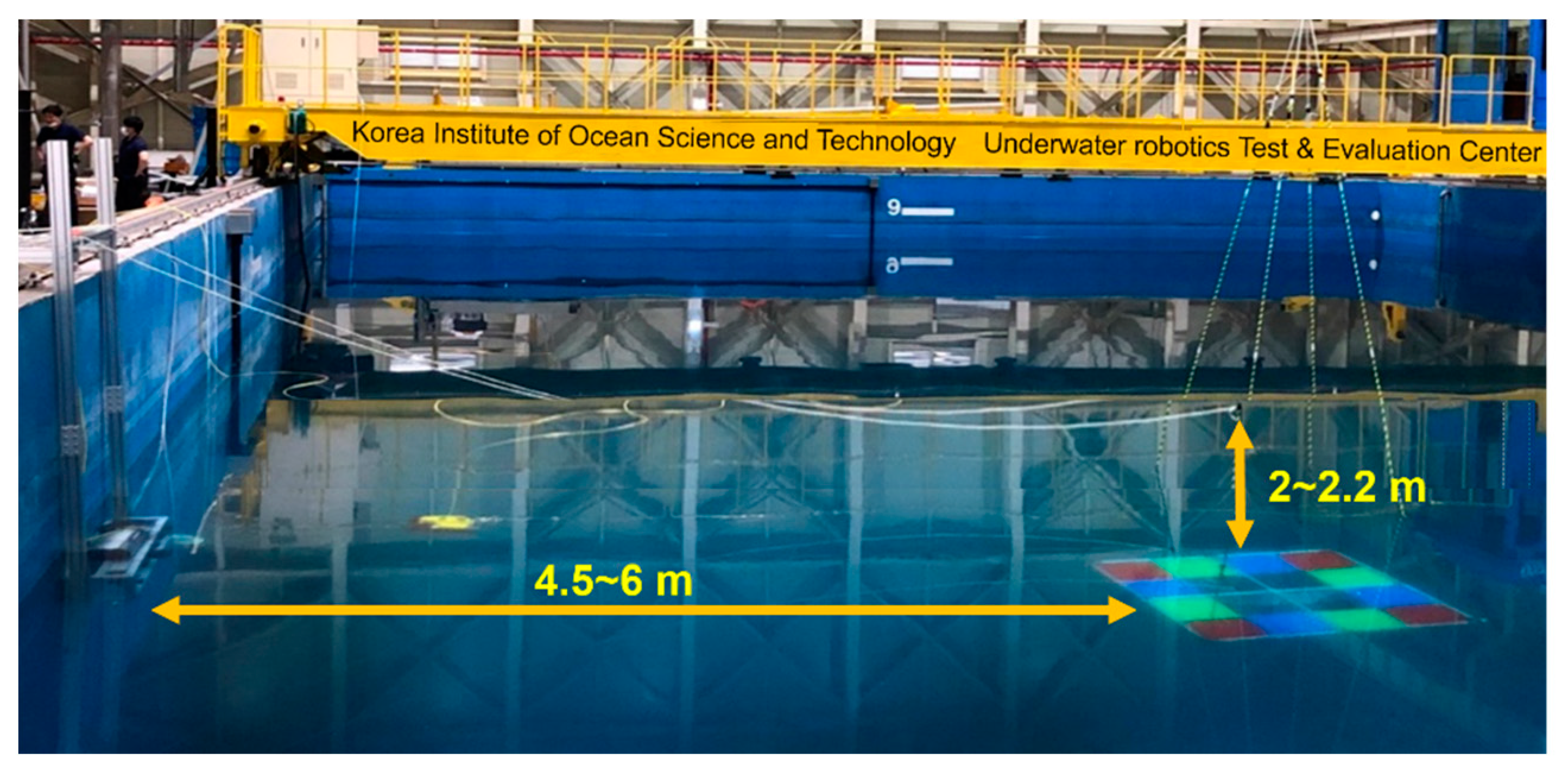

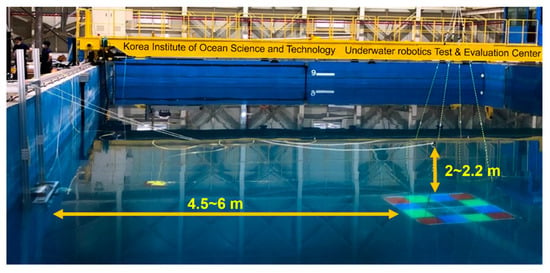

Figure 6 shows the experimental setup in the water tank (Underwater Test and Evaluation Center, Pohang, Korea). The water tank is 20 m wide and 35 m long, with a maximum depth of 9.6 m. The optical-sonar fusion system was installed on the wall of the water tank, 0.5 m below the water surface, and simultaneous optical and sonar images of the RGB phantom were acquired. The phantom was first placed at a distance of 4.5 m and a depth of 2 m. It was then placed at a depth of 2.2 m from the water surface and moved to distances of 5, 5.5, and 6 m from the fusion system. At each distance and depth, the phantom was rotated to the left and right by 15°, 30°, and 45°. Table 3 summarizes the 28 phantom locations used to acquire calibration data. Because the calibration images were acquired with the phantom at various locations in the field of view, the calibrated transformation matrices can reflect the varying geometric relationships between corresponding pixels in the sonar and optical image coordinates.

Figure 6.

Experimental setup of the RGB phantom and optical-sonar fusion system for acquisition of calibration data.

Table 3.

Different setup locations of the RGB phantom for acquisition of geometric calibration data.

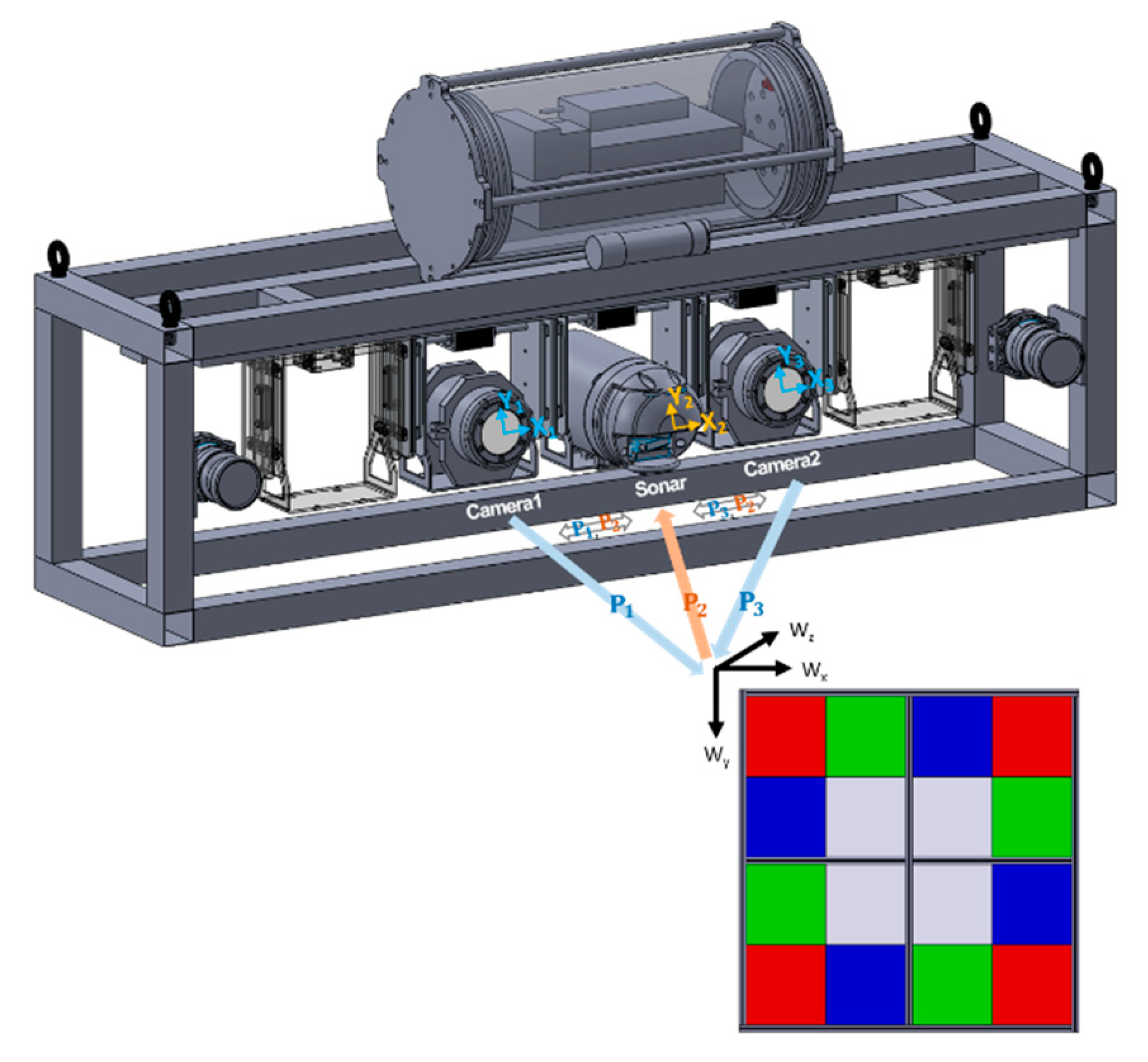

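Using the calibration data, we estimated the transformation matrices between the world coordinates and the image coordinates of the two optical cameras and the sonar, as shown in Figure 7. Equation (7) represents the transformation from world to image coordinates [24]:

$$s\begin{bmatrix}u\\ v\\ 1\end{bmatrix} = K\,[\,R \mid t\,]\begin{bmatrix}X\\ Y\\ Z\\ 1\end{bmatrix} \tag{7}$$

where $(X, Y, Z)$ is a point in world coordinates, $(u, v)$ is its image point, and $s$ is a projective scale factor.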

Figure 7.

World coordinates and the optical and sonar image coordinates.

Each transformation matrix in Equation (7) comprises an extrinsic parameter matrix and an intrinsic parameter matrix. The extrinsic matrix, which describes the pose of the optical camera or sonar in world coordinates, is represented by a rotation matrix ($R$) and a translation vector ($t$). The intrinsic matrix ($K$) expresses the characteristics of the image sensor, such as the focal length, principal point, and skew coefficient, based on the pinhole camera model. Unlike optical images, sonar images are expressed in a fan-shaped coordinate system defined by the steering angles of the multiple sonic beams and the distances measured from the time of flight of the returned beams. Thus, to transform optical image coordinates to sonar image coordinates, we performed three successive coordinate conversions, as in Equation (8): (i) from optical image coordinates to world coordinates, (ii) from world coordinates to sonar Cartesian coordinates, and (iii) from sonar Cartesian coordinates to fan-shaped sonar image coordinates (θ, r).
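Equation (8) is not reproduced here, so the sketch below illustrates the three-step chain under explicit assumptions. (i) Because a single optical image carries no depth, an optical pixel is back-projected along its viewing ray and intersected with a known world plane (for example, the phantom plane). (ii) The world point is mapped to sonar Cartesian coordinates with the sonar extrinsics $R_s$, $t_s$. (iii) The result is converted to fan-shaped (θ, r), assuming the sonar x axis points sideways and the y axis along the boresight. The function names and axis conventions are hypothetical; mapping (θ, r) to fan-image pixels additionally depends on the sonar's range and angular resolution and is omitted.

```python
import numpy as np


def project_pinhole(K, R, t, X_world):
    """Eq. (7)-style projection s [u, v, 1]^T = K (R X + t); X_world has shape (N, 3)."""
    X_cam = X_world @ R.T + t
    uvw = X_cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]


def backproject_to_plane(K, R, t, uv, plane_normal, plane_offset):
    """Step (i), assumed: intersect each pixel's viewing ray with the world plane n . X = d."""
    centre = -R.T @ t                                  # camera centre in world coordinates
    uv1 = np.hstack([uv, np.ones((len(uv), 1))])
    rays = (np.linalg.inv(K) @ uv1.T).T @ R            # rows are R^T K^-1 [u, v, 1]^T
    lam = (plane_offset - centre @ plane_normal) / (rays @ plane_normal)
    return centre + lam[:, None] * rays


def world_to_sonar_polar(R_s, t_s, X_world):
    """Steps (ii)-(iii): world -> sonar Cartesian -> fan-shaped (theta, r).

    Assumed axes: x sideways, y along the boresight, z elevation (lost in a 2-D sonar image).
    """
    X_s = X_world @ R_s.T + t_s
    x, y, z = X_s[:, 0], X_s[:, 1], X_s[:, 2]
    r = np.sqrt(x ** 2 + y ** 2 + z ** 2)   # slant range, as measured by time of flight
    theta = np.arctan2(x, y)                # beam angle from the boresight
    return theta, r
```

Chaining the functions, `world_to_sonar_polar(R_s, t_s, backproject_to_plane(K, R, t, uv, n, d))` maps optical pixels `uv` to sonar (θ, r), provided the plane assumption holds for the imaged object.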

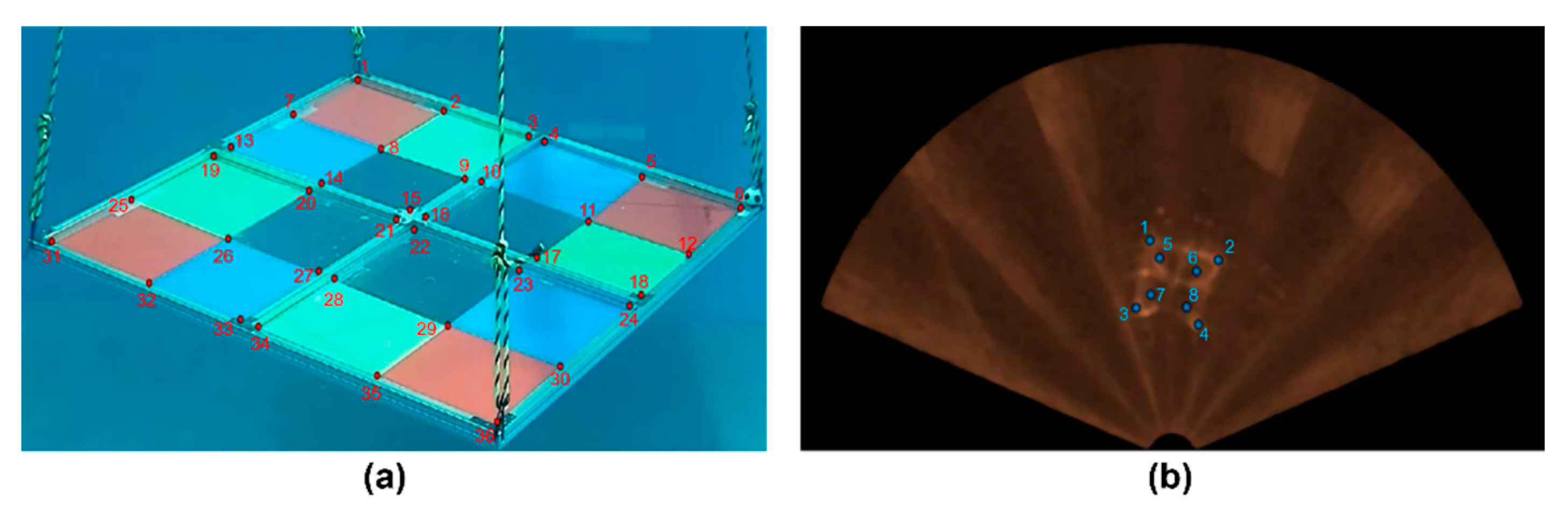

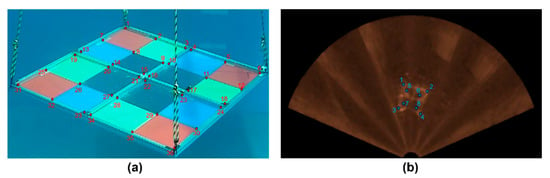

The 28 simultaneously acquired pairs of optical and sonar calibration images were used to estimate the transformation matrices in the Cartesian coordinate system. As shown in Figure 8, we manually extracted 36 corner points from each optical image and 8 corner points from each sonar image. Color differences between the plates of the RGB phantom create corners in the optical images, whereas material differences between the aluminum and acrylic plates create corners in the sonar images.
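The estimation procedure itself is not stated above. One standard option for the optical cameras is the direct linear transform (DLT) [24], sketched below as an assumption: each 3D-2D corner correspondence contributes two linear equations in the 12 entries of the 3 × 4 matrix $P = K[R \mid t]$, and $P$ is taken as the right singular vector with the smallest singular value. At least six points are needed, and they must not all lie on one plane, so corners from several phantom placements would have to be pooled. The sketch omits the coordinate normalization and nonlinear refinement usually applied in practice, and it does not model the sonar, whose fan-shaped geometry is not a pinhole projection.

```python
import numpy as np


def estimate_projection_dlt(X_world, uv):
    """DLT estimate of a 3x4 projection matrix P (up to scale) from N >= 6 correspondences."""
    if len(X_world) < 6:
        raise ValueError("DLT needs at least 6 non-coplanar points")
    rows = []
    for (X, Y, Z), (u, v) in zip(X_world, uv):
        Xh = np.array([X, Y, Z, 1.0])
        zero = np.zeros(4)
        rows.append(np.concatenate([Xh, zero, -u * Xh]))   # p1 . X - u (p3 . X) = 0
        rows.append(np.concatenate([zero, Xh, -v * Xh]))   # p2 . X - v (p3 . X) = 0
    A = np.vstack(rows)
    _, _, Vt = np.linalg.svd(A)
    P = Vt[-1].reshape(3, 4)   # right singular vector of the smallest singular value
    return P / np.linalg.norm(P)


def reproject(P, X_world):
    """Project world points with P and dehomogenise to pixel coordinates."""
    Xh = np.hstack([X_world, np.ones((len(X_world), 1))])
    uvw = Xh @ P.T
    return uvw[:, :2] / uvw[:, 2:3]
```

The reprojection error of the corner points is a convenient check of the estimated matrix, since $P$ is only determined up to scale.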

Figure 8.

Corner detection numbering, (a) thirty-six optical and (b) eight sonar corner image points for estimating coordinate transformation matrices.

3. Results

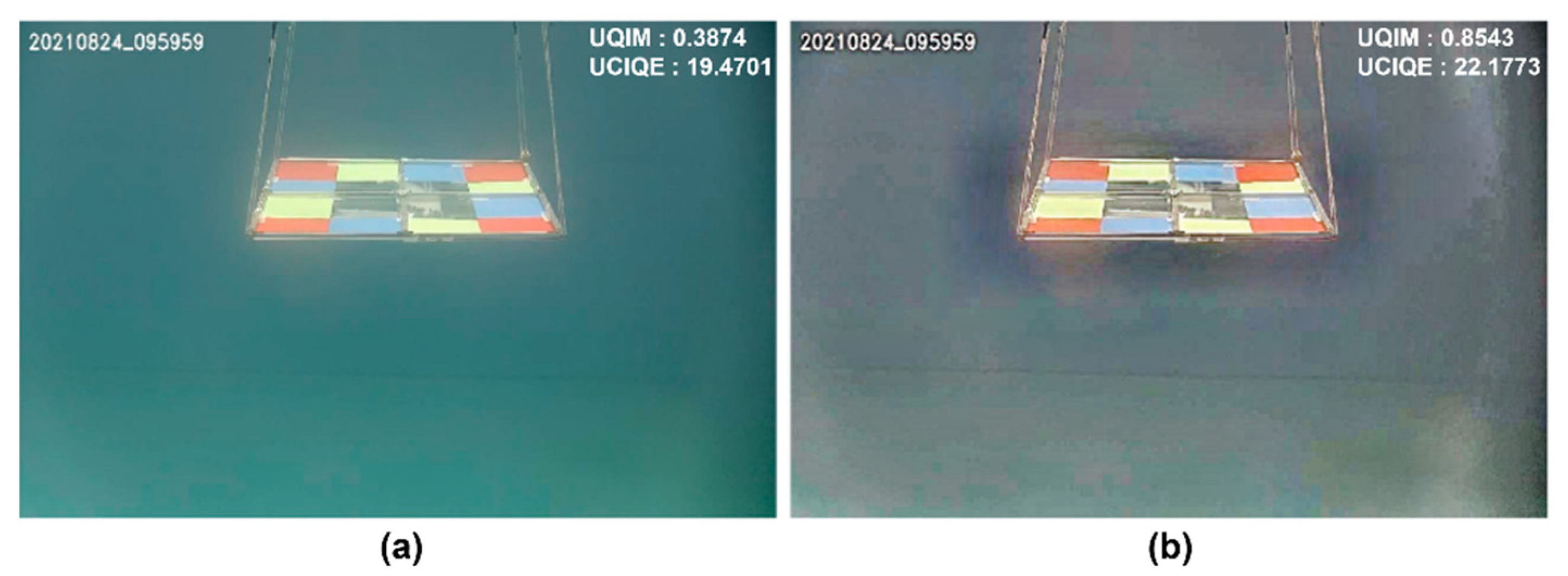

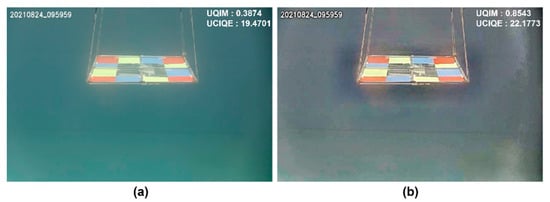

Figure 9 qualitatively compares the original underwater optical image with the image enhanced by image fusion. The greenish background of the original image (Figure 9a) was mitigated in the enhanced image (Figure 9b), and the overall color tone and the edges of the RGB phantom were improved. In addition, the underwater image quality measure (UIQM) and the underwater color image quality evaluation (UCIQE) were computed to evaluate the optical color image quality quantitatively [25,26]. In Equation (9), UIQM is defined as the weighted sum of colorfulness (UICM), sharpness (UISM), and contrast (UIConM) measures. In Equation (10), UCIQE is defined as the weighted sum of the standard deviation of chroma ($\sigma_c$), the contrast of luminance ($\mathrm{con}_l$), and the mean saturation ($\mu_s$):

$$\mathrm{UIQM} = c_1\,\mathrm{UICM} + c_2\,\mathrm{UISM} + c_3\,\mathrm{UIConM} \tag{9}$$

$$\mathrm{UCIQE} = c_1\,\sigma_c + c_2\,\mathrm{con}_l + c_3\,\mu_s \tag{10}$$

where $c_1$, $c_2$, and $c_3$ are the weighting coefficients defined in [25] and [26], respectively. The UIQM values were 0.39 and 0.85 before and after image fusion enhancement, respectively, and the UCIQE values were 19.47 and 22.18 before and after enhancement, respectively.

Figure 9.

Single optical image enhancement, (a) original underwater optical image and (b) its enhanced image by image fusion.

The performance of image fusion was evaluated by computing the mean and standard deviation (SD) of UIQM and UCIQE for the 56 underwater images in the 28 calibration image pairs acquired from the two cameras. Before enhancement, UIQM and UCIQE were 0.56 ± 0.13 and 19.96 ± 1.15 (mean ± SD), respectively. After image fusion enhancement, they increased to 1.1 ± 0.17 and 25.53 ± 2.35, respectively. Compared with the original underwater images, image fusion increased the mean UIQM and UCIQE by 94% and 27%, respectively.

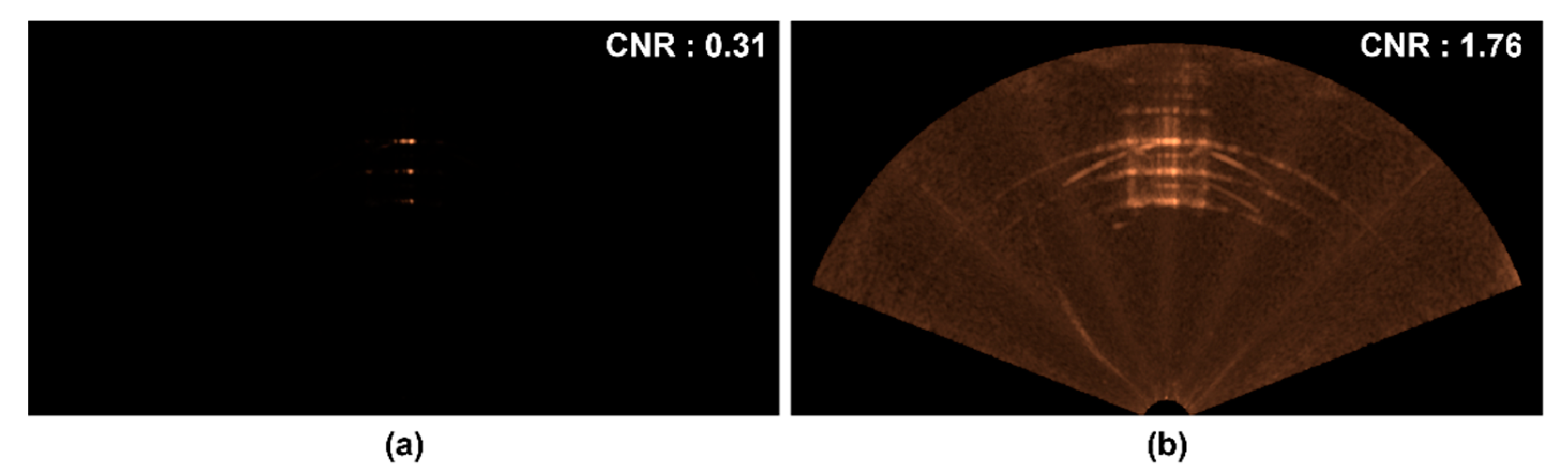

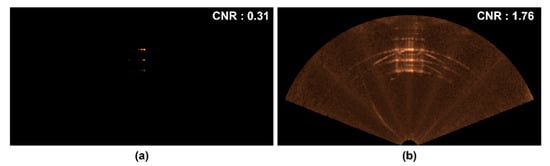

Figure 10 shows the original sonar image and the image enhanced by the median filter and gamma correction. The enhanced image distinguishes the RGB phantom from the background more clearly than the original sonar image. In addition, we calculated the contrast-to-noise ratio (CNR) using Equation (11) to evaluate the quality of the sonar images [27]:

$$\mathrm{CNR} = \frac{\lvert \mu_P - \mu_B \rvert}{\sigma_B} \tag{11}$$

where $\mu_B$ and $\mu_P$ are the average pixel values of the regions of interest (ROIs) drawn on the background and on the RGB phantom in the sonar image, respectively, and the noise term $\sigma_B$ is the SD of the background ROI. The CNR values before and after enhancement were 0.31 and 1.76, respectively. For the 28 original sonar images, the CNR was 0.33 ± 0.05 (mean ± SD); after applying the median filter and gamma correction, the mean CNR increased approximately sixfold, to 2.03 ± 0.12.
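Equation (11) translates directly into a few lines of NumPy, assuming the two ROIs are given as boolean masks of the same shape as the sonar image; the function and argument names are illustrative, and the SD uses NumPy's default population formula (`ddof=0`).

```python
import numpy as np


def cnr(img: np.ndarray, phantom_mask: np.ndarray, background_mask: np.ndarray) -> float:
    """Contrast-to-noise ratio |mu_P - mu_B| / sigma_B from two boolean ROI masks."""
    mu_p = img[phantom_mask].mean()
    mu_b = img[background_mask].mean()
    sigma_b = img[background_mask].std()   # population SD (ddof=0)
    return float(abs(mu_p - mu_b) / sigma_b)
```

Because $\sigma_B$ is in the denominator, the median filter raises the CNR by lowering background noise, while gamma correction raises it by widening the gap between the phantom and background means.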

Figure 10.

Single sonar image enhancement, (a) original sonar image and (b) its enhanced image by median filter (5 × 5) and gamma correction (γ = 0.2).

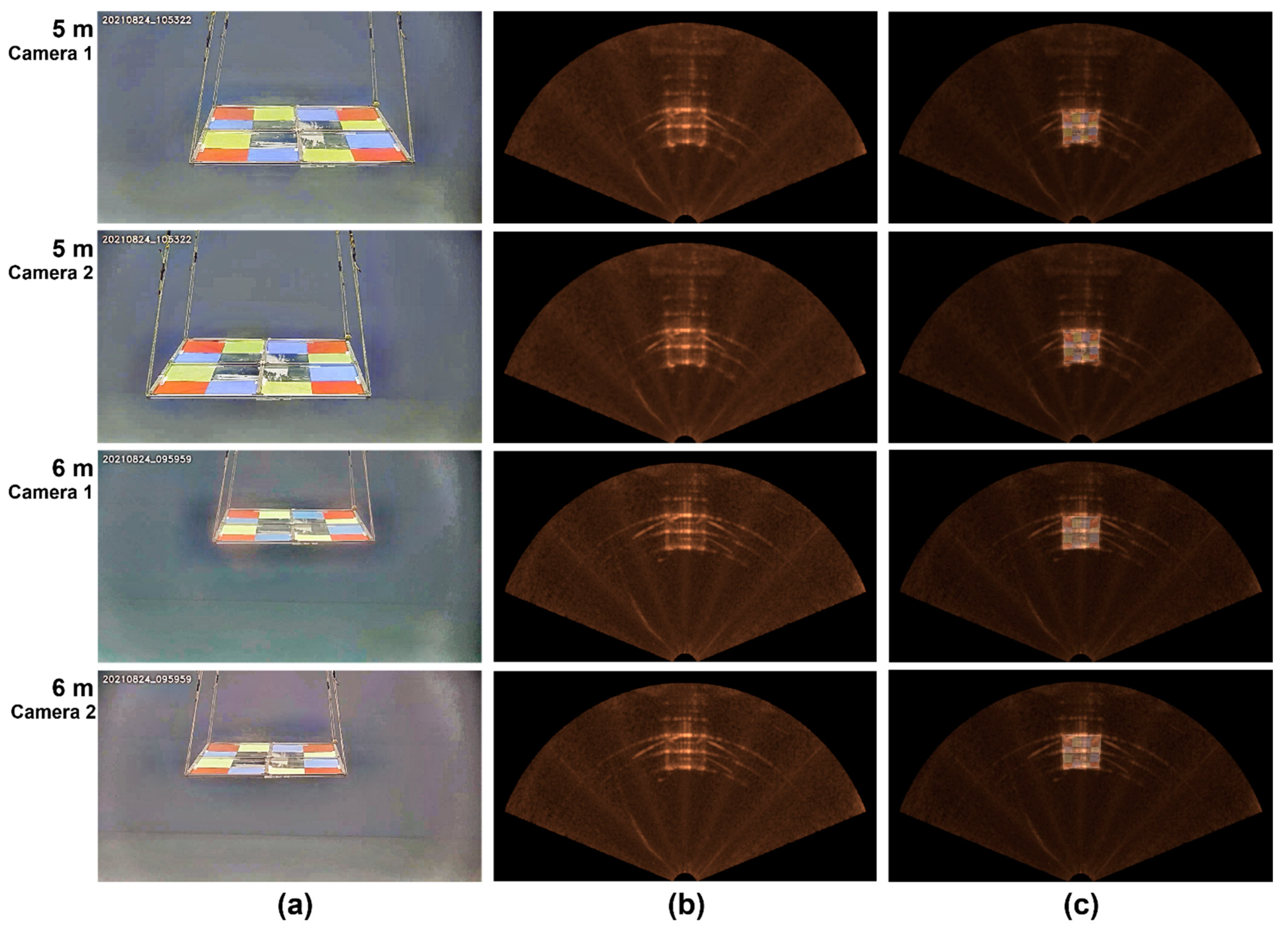

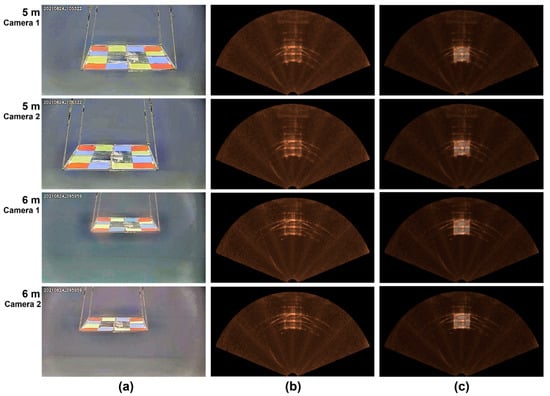

Figure 11 shows the fused optical-sonar images obtained with the calibrated transformation matrices after single-image enhancement. The optical and sonar images were acquired simultaneously with the RGB phantom at distances of 5 and 6 m from the optical-sonar fusion system. The fused images showed good spatial agreement between the optical and sonar images, and the fused image in the fan-shaped coordinates provides both the RGB color and the distance of the object of interest. To evaluate the spatial agreement quantitatively, we calculated the intersection over union (IoU) using Equation (12):

$$\mathrm{IoU} = \frac{\lvert S \cap O \rvert}{\lvert S \cup O \rvert} \tag{12}$$

where $S$ and $O$ are the sets of sonar and optical pixels, respectively, corresponding to the RGB phantom region in the fused image, so that IoU is the ratio of their intersection area to their union area. As listed in Table 4, the average IoU was 69% at 5 m and 81% at 6 m.
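Equation (12) can be computed from two boolean masks of the phantom region in the fused image, one from the sonar pixels and one from the projected optical pixels. The sketch below assumes such masks are already available; how they are segmented is not specified here, and the function name is illustrative.

```python
import numpy as np


def iou(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Intersection over union of two boolean masks of equal shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        raise ValueError("both masks are empty")
    return float(np.logical_and(a, b).sum() / union)
```

For example, two 4 × 4 squares offset by two columns overlap in 8 pixels out of a 24-pixel union, giving an IoU of 1/3.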

Figure 11.

Enhanced and fused optical and sonar images taken at distances of 5 and 6 m from the fusion system, (a) enhanced optical images (top and bottom rows at each distance are images from cameras 1 and 2, respectively), (b) enhanced sonar images, (c) enhanced optical color images overlaid on the sonar images.

Table 4.

IoU values by distance and camera.

4. Conclusions

We developed an optical-sonar fusion system with two underwater cameras and a multi-beam sonar and proposed a geometric calibration method for these two different types of imaging sensors. In addition, we studied single-image enhancement techniques and the fusion of data from optical cameras and multi-beam sonars, which are the main imaging sensors used for underwater tasks. To compensate for the color casting and low visibility of the optical images, we adopted image fusion comprising three steps: white balancing, contrast and edge enhancement, and fusion of the enhanced images. Noise reduction and contrast enhancement of the sonar images were performed using a median filter and gamma correction. These single-image enhancement techniques increased the visibility of the objects of interest in both images, and the evaluation metrics (UIQM and UCIQE for the optical images, CNR for the sonar images) were higher than those of the original underwater images. The enhanced optical and sonar images were fused using calibrated transformation matrices between the different image coordinate systems. The fused image in sonar image coordinates showed good qualitative spatial agreement, and the average IoU between the optical and sonar pixels in the fused images was 75%. The calibration and fusion methods proposed in this study can be applied to other sonar systems, such as synthetic aperture sonar, by estimating the transformation matrix between the two image coordinate systems from paired corner points extracted from simultaneously measured sonar and optical images [28,29]. The single-image enhancement techniques and the optical-sonar fusion system will help to visualize and understand underwater situations with color and distance information during unmanned operations.

Author Contributions

Conceptualization, H.-G.K. and S.M.K.; methodology, H.-G.K., J.S. and S.M.K.; software, H.-G.K. and S.M.K.; validation, H.-G.K., J.S. and S.M.K.; formal analysis, H.-G.K. and S.M.K.; investigation, H.-G.K., J.S. and S.M.K.; resources, J.S. and S.M.K.; data curation, H.-G.K. and S.M.K.; writing—original draft preparation, H.-G.K.; writing—review and editing, H.-G.K., J.S. and S.M.K.; visualization, H.-G.K., J.S. and S.M.K.; supervision, S.M.K.; project administration, S.M.K.; funding acquisition, S.M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was a part of the project titled ‘Development of unmanned remote construction aided system for harbor infrastructure’, (20210658) funded by the Ministry of Oceans and Fisheries, Korea, a part of the project titled ‘Research on key IoT technologies for real time ocean data sensing’, (PEA0032) funded by the Korea Institute of Ocean Science and Technology and a part of the National Research Foundation of Korea (Development of Underwater Stereo Camera Stereoscopic Visualization Technology, NRF-2021R1A2C2006682) with funding from the Ministry of Science and ICT in 2022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mobley, C.D. Light and Water: Radiative Transfer in Natural Waters; Academic Press: Cambridge, MA, USA, 1994.

2. Blondel, P. The Handbook of Sidescan Sonar; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

3. Çelebi, A.T.; Ertürk, S. Visual enhancement of underwater images using empirical mode decomposition. Expert Syst. Appl. 2012, 39, 800–805.

4. Hitam, M.S.; Awalludin, E.A.; Yussof, W.N.J.H.W.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the 2013 International Conference on Computer Applications Technology (ICCAT), Sousse, Tunisia, 20–22 January 2013; pp. 1–5.

5. Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 2017, 27, 379–393.

6. Luo, M.; Fang, Y.; Ge, Y. An effective underwater image enhancement method based on CLAHE-HF. J. Phys. Conf. Ser. 2019, 1237, 032009.

7. Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

8. Park, E.; Sim, J.Y. Underwater image restoration using geodesic color distance and complete image formation model. IEEE Access 2020, 8, 157918–157930.

9. Yu, H.; Li, X.; Lou, Q.; Lei, C.; Liu, Z. Underwater image enhancement based on DCP and depth transmission map. Multimed. Tools Appl. 2020, 79, 20373–20390.

10. Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 7159–7165.

11. Wang, K.; Hu, Y.; Chen, J.; Wu, X.; Zhao, X.; Li, Y. Underwater image restoration based on a parallel convolutional neural network. Remote Sens. 2019, 11, 1591.

12. Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

13. Johannsson, H.; Kaess, M.; Englot, B.; Hover, F.; Leonard, J. Imaging sonar-aided navigation for autonomous underwater harbor surveillance. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 4396–4403.

14. Chen, J.; Gong, Z.; Li, H.; Xie, S. A detection method based on sonar image for underwater pipeline tracker. In Proceedings of the 2011 Second International Conference on Mechanic Automation and Control Engineering, Inner Mongolia, China, 15–17 July 2011; pp. 3766–3769.

15. Wang, X.; Li, Q.; Yin, J.; Han, X.; Hao, W. An adaptive denoising and detection approach for underwater sonar image. Remote Sens. 2019, 11, 396.

16. Kim, J.; Song, S.; Yu, S.C. Denoising auto-encoder based image enhancement for high resolution sonar image. In Proceedings of the 2017 IEEE Underwater Technology (UT), Busan, Korea, 21–24 February 2017; pp. 1–5.

17. Sung, M.; Kim, J.; Yu, S.C. Image-based super resolution of underwater sonar images using generative adversarial network. In Proceedings of the TENCON 2018–2018 IEEE Region 10 Conference, Jeju, Korea, 28–31 October 2018; pp. 457–461.

18. Sung, M.; Cho, H.; Kim, T.; Joe, H.; Yu, S.C. Crosstalk removal in forward scan sonar image using deep learning for object detection. IEEE Sens. J. 2019, 19, 9929–9944.

19. Lagudi, A.; Bianco, G.; Muzzupappa, M.; Bruno, F. An alignment method for the integration of underwater 3D data captured by a stereovision system and an acoustic camera. Sensors 2016, 16, 536.

20. Babaee, M.; Negahdaripour, S. 3-D object modeling from 2-D occluding contour correspondences by opti-acoustic stereo imaging. Comput. Vis. Image Underst. 2015, 132, 56–74.

21. Kim, S.M. Single image-based enhancement techniques for underwater optical imaging. J. Ocean Eng. Technol. 2020, 34, 442–453.

22. Kim, H.G.; Seo, J.M.; Kim, S.M. Comparison of GAN Deep Learning Methods for Underwater Optical Image Enhancement. J. Ocean Eng. Technol. 2022, 36, 32–40.

23. Shin, Y.S.; Cho, Y.; Lee, Y.; Choi, H.T.; Kim, A. Comparative Study of Sonar Image Processing for Underwater Navigation. J. Ocean Eng. Technol. 2016, 30, 214–220.

24. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2013.

25. Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

26. Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

27. Bechara, B.; McMahan, C.A.; Moore, W.S.; Noujeim, M.; Geha, H.; Teixeira, F.B. Contrast-to-noise ratio difference in small field of view cone beam computed tomography machines. J. Oral Sci. 2012, 54, 227–232.

28. Zhang, X.; Yang, P. An improved imaging algorithm for multi receiver SAS system with wide-bandwidth signal. Remote Sens. 2021, 13, 5008.

29. Bülow, H.; Birk, A. Synthetic aperture sonar (SAS) without navigation: Scan registration as basis for near field synthetic imaging in 2D. Sensors 2020, 20, 4440.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).