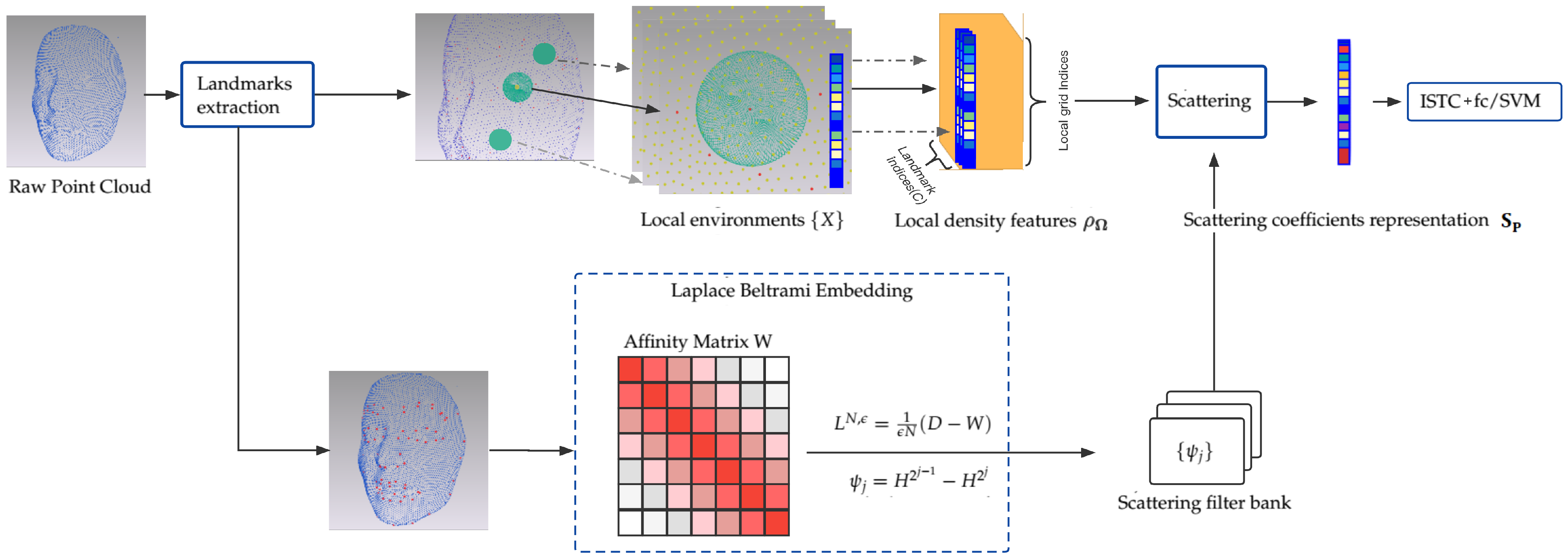

The overall framework is illustrated in Figure 1. First, manually annotated landmark points (22 for the Bosphorus dataset and 83 for the 3D-BUFE dataset) are obtained from the raw point clouds. Our method then treats them as the starting point for two parallel routes. In the first route (the upper route in Figure 1), we treat each landmark as the origin of a discretized Gaussian kernel with adaptive width and run a KNN search to determine its neighbors, whose Euclidean radial distances contribute inversely to the smoothed local spatial representation. This function is essentially one form of the classical kernel density estimation [30] scheme, which remains simple while regularizing the scattered position representation into a continuous form without losing resolution. Although this description is extrinsic, in that it is sensitive to local permutation of the indexing/order, the manifold scattering transform helps to regularize the underlying overall geometry of the expression manifold; see Section 3.1 for details of this spatial density descriptor.

The second route (the lower route in Figure 1) seeks a common structure from which the expression can be identified in the raw point cloud representation. This structure should be devoted to representing identity-unrelated parts. In 3D FER, a self-evident condition is that both expressions and other identity-unrelated behaviors mostly affect local regions, through stretching, local rotation, and other diffeomorphisms. Following this intuition, we find that diagonalizing the affinity matrix of the landmark set and then computing the corresponding diffusion structures (similar to graph network embedding solutions [31,32], which imitate a discrete approximation of the Laplace-Beltrami operator (LBO) on a manifold) has a good chance of achieving this goal. Note that because the landmark set is quite small, the eigenvectors of a heat kernel H are easy to obtain and should be sufficient to represent the coarse geometry of an expressive face. Specifically, the raw point cloud face scan is treated as a small graph built from the landmark set; in our examples, the graph of 22/83 landmarks from a Bosphorus/3D-BUFE sample is spectrally decomposed into eigenvalues and eigenfunctions, and the top K components are then truncated and used to parameterize the scattering filter bank and the corresponding network. The details of this parameterization can be found in Section 3.2.

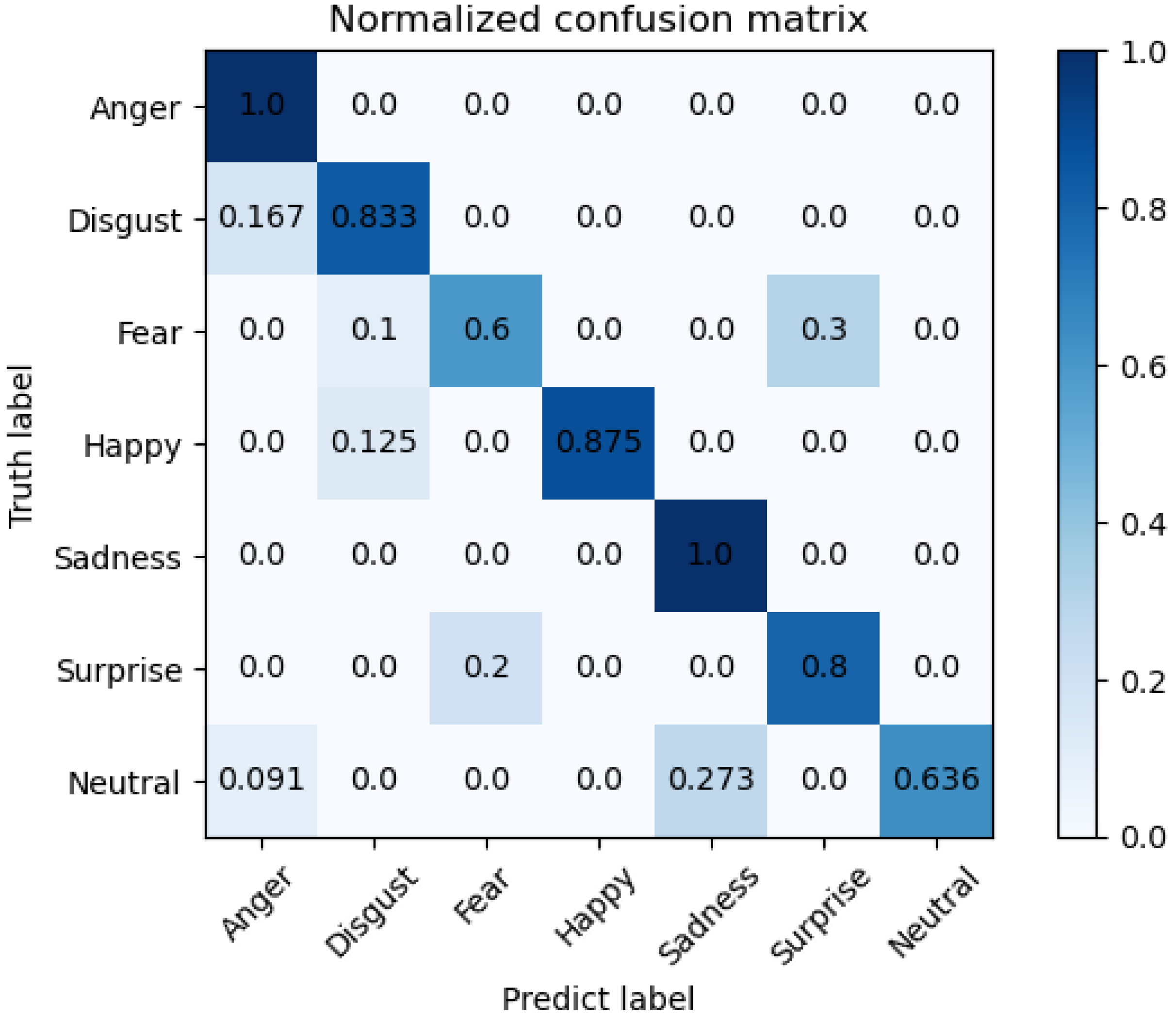

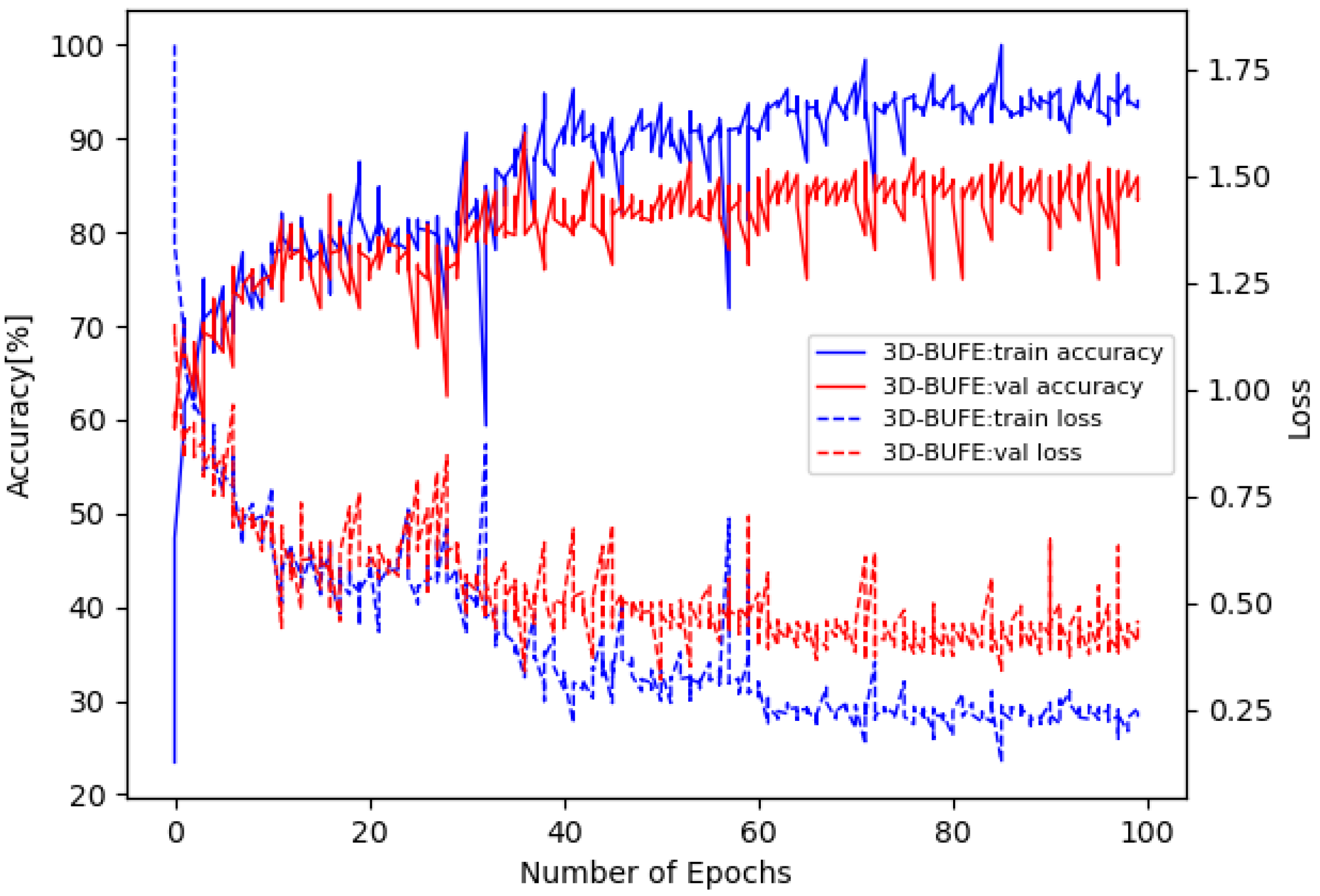

Finally, the scattering coefficients are passed to prevalent classifiers, e.g., an SVM or neural networks, with certain components of the signal selected to enhance the recognition accuracy. Because the scattering coefficient representation is high-dimensional, it may lead to overfitting during training. Therefore, a sparse learning structure [33] is inserted to provide non-linearity and enhance the sparsity of the features, which are then fed to a fully connected layer for classification. An improvement in accuracy can be observed afterward, and we compare the performance with that of an SVM with an RBF (radial basis function) kernel as the classifier. The details of these experiments are reported in Section 4.

3.1. Local Density Descriptor

First, the high dimensionality of the raw point cloud tends to weaken Euclidean convolution and other deep learning methods that hold prior assumptions about the properties of the signal, such as smoothness and compactness. Moreover, unlike the body meshes utilized in [27], face scan samples have more complex local geometries and irregular overall variance distributions, which increases the probability of overfitting or gradient explosion in the training phase. On the other hand, an isometry-invariant local descriptor combined with a mesh reconstruction scheme can hinder the development of a real-time-capable approach. We therefore apply a lighter local feature extraction approach, which describes the expression manifolds by the occurrence probability density of the local point clusters. The localities are then aggregated by computing the eigenvectors of the landmark points and constructing the corresponding semi-group diffusion heat maps, which overcomes the prevalent sampling non-uniformity.

We suppose a face scan from which C landmark points are extracted to form a landmark set; this set is then embedded into a small graph whose vertices are indexed by the landmark points and whose affinity matrix W is symmetric.
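As a minimal sketch of this graph construction, the snippet below builds a symmetric affinity matrix W and the corresponding degrees from a handful of landmark coordinates. The Gaussian form of the affinity, the width `sigma`, the toy coordinates, and the function names are illustrative assumptions; the text only requires W to be symmetric.

```python
import math

def gaussian_affinity(landmarks, sigma=1.0):
    """Symmetric affinity W[i][j] = exp(-||p_i - p_j||^2 / sigma^2) (assumed form)."""
    n = len(landmarks)
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            d2 = sum((a - b) ** 2 for a, b in zip(landmarks[i], landmarks[j]))
            w[i][j] = w[j][i] = math.exp(-d2 / sigma ** 2)
    return w

def degrees(w):
    """Diagonal of the degree matrix D: the row sums of W."""
    return [sum(row) for row in w]

# Four hypothetical landmark positions standing in for the C landmarks of a scan.
landmarks = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 2.0)]
W = gaussian_affinity(landmarks, sigma=1.0)
D = degrees(W)
```

Because the graph has only C nodes (22 or 83 here), dense matrices of this size are cheap to build and to decompose.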

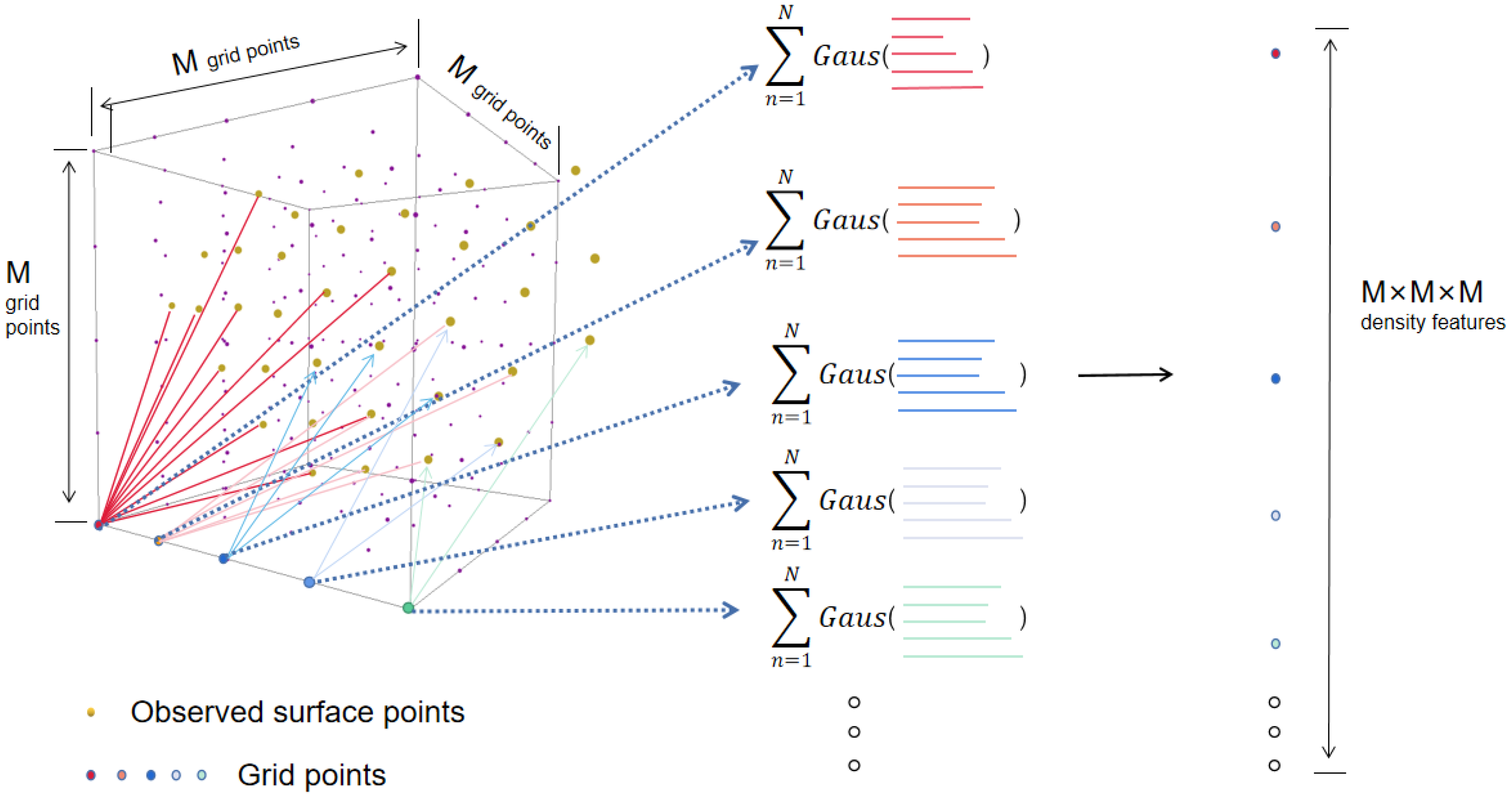

Obtaining a local feature starts with the construction of local reference frames centered at each landmark point, each of which can be seen as a local “atomic environment” (see Figure 2). Within each environment, a small face patch around the landmark point shares a generic expression-related pattern across subjects. A kernel function is aligned to the distribution of the N observed points in each local reference environment, so that the local probability density of observing a point at the grid positions within the environment becomes a smoothed and discriminative feature: a sum of Gaussian functions evaluated on the local regular reference lattice. This kernel maps the Euclidean distances from the scattered points to a probability distribution and slices each face scan into C local receptive fields; normalizing each density function then defines a global piece-wise density representation.

The above approach encodes a raw point cloud face into a more regular, continuous probability density representation. The local fields are invariant to permutations of the input order, and every characteristic vector has the same length, which enables windowed operations. Moreover, the local point sets can be of arbitrary size, and non-uniform sampling affects the results as an additive bias to the signal at each grid point.
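The local density descriptor can be illustrated with a small sketch: for one landmark, the nearest points are found by a KNN search, a sum of Gaussians is evaluated on a regular lattice centred on the landmark, and the result is normalized. The width rule (distance to the farthest neighbour), the lattice size, the toy point cloud, and all function names are assumptions made for illustration and are not taken from the original method.

```python
import math

def knn(center, points, k):
    """Return the k points closest to center (Euclidean distance)."""
    return sorted(points, key=lambda p: math.dist(p, center))[:k]

def lattice(center, half_width, steps):
    """Regular steps^3 grid centred on a landmark (the local reference lattice)."""
    axis = [-half_width + 2.0 * half_width * i / (steps - 1) for i in range(steps)]
    cx, cy, cz = center
    return [(cx + dx, cy + dy, cz + dz) for dx in axis for dy in axis for dz in axis]

def local_density(center, points, k=4, half_width=1.0, steps=3):
    """Normalized sum-of-Gaussians density sampled on the local lattice."""
    neighbours = knn(center, points, k)
    # Assumed adaptive width: distance from the landmark to its farthest neighbour.
    sigma = max(math.dist(p, center) for p in neighbours) or 1.0
    dens = [
        sum(math.exp(-math.dist(g, p) ** 2 / (2.0 * sigma ** 2)) for p in neighbours)
        for g in lattice(center, half_width, steps)
    ]
    total = sum(dens)
    return [d / total for d in dens]

# Hypothetical scattered points around a landmark placed at the origin.
cloud = [(0.1, 0.0, 0.2), (0.3, -0.2, 0.1), (-0.4, 0.5, 0.0),
         (0.9, 0.8, -0.7), (-1.2, 0.3, 0.6), (2.0, 2.0, 2.0)]
feature = local_density((0.0, 0.0, 0.0), cloud)
shuffled = local_density((0.0, 0.0, 0.0), list(reversed(cloud)))
```

The descriptor length depends only on the lattice (`steps ** 3` values), not on how many points fall in the patch, and reordering the input leaves it unchanged, which matches the two properties stated above.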

The isometry within each local area can be treated individually by adopting the coarse graph embedding induced by each sample’s sparse landmark set, which also determines the direction to which the induced wavelet filters are parameterized.

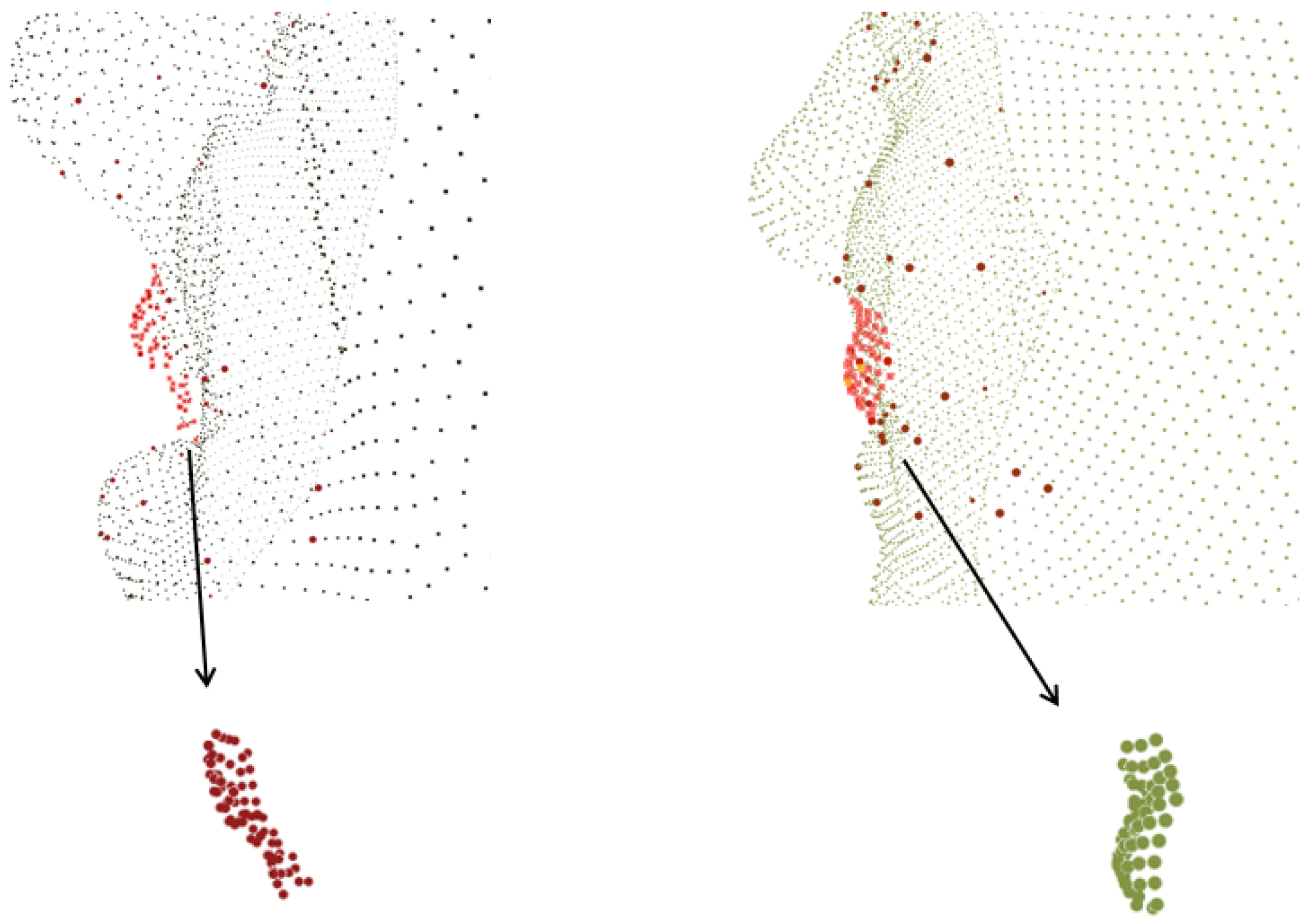

With the above property, each lattice descriptor abstracts the shape variations with respect to diffeomorphism, while global isometry influences the result only to a limited degree; see Figure 3 for a visualization. Note that the local descriptor can easily be replaced by other kinds of descriptors. For instance, the 3D HoG descriptor [34] explicitly converts the raw point cloud data back to a depth map and then computes statistical features on a fixed-angle plane to form the representation. However, this kind of operation inevitably loses the fineness of the raw scan, as the depth map lives on a regular 2D domain. Curvature descriptors and SIFT feature descriptors likewise rely on transforming 3D point clouds into surface representations with reduced depth information. In contrast, our descriptor captures the density feature within a solid-structured base space, where variations along each axis are preserved.

3.2. Manifold Scattering Transform on Face Point Clouds

With the above local spatial features in hand, we can build a global representation with spectral embedding. Rather than embedding all the points into a single graph or manifold, we exploit a prior property of 3D FER that helps reduce the computational complexity. First, the description of local geometry is likely to be affected by global as well as local rotations, whereas applying a rotation-invariant descriptor (as in [35]) or a harmonic descriptor that eliminates isometry (as in most spectral embedding methods) loses too much information. In the example from 3D-BUFE shown in Figure 4, a neighborhood cluster is found and marked with red points; to preserve the finest possible geometric features, our local generic function can be aligned to an underlying surface defined by spectral embedding, as expressions behave locally as planar stretching and perpendicular haunch-up combined with rigid rotation. This delicate and significant variation can thus be decoupled from the global rotation caused by pose variation.

In addition, we note that disturbance induced by translation does not affect this intrinsic representation, as the above embedding is invariant to global isometry, which includes translation. The arbitrary variation introduced by each subject’s inherent shape becomes harder to handle when it is entangled with the representation. With these considerations, we need to construct a general structure that is stable to global isometry and order permutation while still supporting extended convolution-like operations that align the local signals and compare them as discriminative features for recognition.

A scattering transform is a hierarchical framework with a geometric group structure, obtained by constructing pre-defined dyadic wavelet layers; it has been extended to the manifold scattering transform with spectral embedding and diffusion maps [27,36]. With respect to 3D FER, the raw point cloud face can be represented as a signal whose length n is the number of local reference grid points, which in practice gives a high-dimensional representation. A more common approach is to embed such a generic function and then implement spectral convolution along a defined spectral path, e.g., diffusion maps or a random walk scheme [31].

Alternatively, a direct way to consume point cloud data was proposed in [27], which constructs a heat semi-group process along an operation path and defines the convolution through it, with the signal f taken from exterior feature descriptors such as SHOT [20].
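To make the heat semi-group convolution concrete, the sketch below assumes the usual spectral form of heat diffusion on a graph: a signal f is expanded in the eigenvectors of a graph Laplacian, and each coefficient is damped by exp(-t * eigenvalue). The normalized Laplacian, the choice of the K smallest eigenvalues as the "top" components, the toy path graph, and the dependency-free Jacobi eigensolver are all assumptions made for illustration; in practice a library eigensolver would normally be used.

```python
import math

def normalized_laplacian(w):
    """L = I - D^(-1/2) W D^(-1/2) for a symmetric affinity matrix w."""
    n = len(w)
    deg = [sum(row) for row in w]
    return [[(1.0 if i == j else 0.0) - w[i][j] / math.sqrt(deg[i] * deg[j])
             for j in range(n)] for i in range(n)]

def jacobi_eigh(a, sweeps=100, tol=1e-24):
    """Eigenvalues and eigenvectors (columns of v) of a small symmetric matrix."""
    n = len(a)
    a = [row[:] for row in a]
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle that zeroes the (p, q) entry.
                theta = 0.5 * math.atan2(2.0 * a[p][q], a[q][q] - a[p][p])
                c, s = math.cos(theta), math.sin(theta)
                for k in range(n):  # rotate columns p and q of a and v
                    a[k][p], a[k][q] = c * a[k][p] - s * a[k][q], s * a[k][p] + c * a[k][q]
                    v[k][p], v[k][q] = c * v[k][p] - s * v[k][q], s * v[k][p] + c * v[k][q]
                for k in range(n):  # rotate rows p and q of a
                    a[p][k], a[q][k] = c * a[p][k] - s * a[q][k], s * a[p][k] + c * a[q][k]
    return [a[i][i] for i in range(n)], v

def heat_filter(f, evals, evecs, t, k):
    """Heat diffusion of f for time t, truncated to the k smallest eigenvalues."""
    n = len(f)
    keep = sorted(range(n), key=lambda i: evals[i])[:k]
    out = [0.0] * n
    for idx in keep:
        phi = [evecs[r][idx] for r in range(n)]
        coeff = math.exp(-t * evals[idx]) * sum(x * y for x, y in zip(f, phi))
        out = [o + coeff * y for o, y in zip(out, phi)]
    return out

# Hypothetical 3-node path graph standing in for the landmark graph.
W = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
evals, evecs = jacobi_eigh(normalized_laplacian(W))
f = [1.0, 0.0, -1.0]
smoothed = heat_filter(f, evals, evecs, t=1.0, k=2)
```

With t = 0 and all components kept, the filter returns f unchanged; larger t suppresses the high-frequency components more strongly, which is the smoothing behaviour the heat process relies on.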

However, our task here is neither about classifying exterior signals on fixed manifolds nor about classifying manifolds alone; rather, it is about classifying hybrid representations with a specified underlying geometry. Based on this observation, we abandon the exterior generic function and instead use the local density feature function from Section 3.1 to obtain the convolved density feature functions.

Because this configuration can be further associated with the negative Laplace-Beltrami operator under a constrained initial condition, a heat process operator on the manifold is provided by the heat equation.

Note that we do not simply apply the approximation algorithm from Section 3.2 in [27] to approximate the Laplace-Beltrami operator; rather, we use the landmark set to compute the spectral decomposition, which only serves as a skeleton that aligns samples to a generic coarse underlying geometry while eliminating the influence of extrinsic isometry. Specifically, following regular spectral embedding methods, we denote by D and W the diagonal degree matrix and the affinity matrix of the landmark point set, with N being the length of the landmark sequence, together with an estimated width parameter. The discrete approximation of the operator is then built from D and W. Most importantly, the wavelet transform can be constructed and specifically parameterized from this operator, together with a global low-pass filter. The diffusion time scale t indexes the geometric changes along the increasing width of the receptive fields and allows the wavelets to capture multi-scale information within each scale.

Then, with the defined wavelets, the first-order scattering moments are computed for every scaling step and every higher-order moment index; an absolute-value nonlinearity applied to the coefficients provides the desired invariance within this layer.

Iterating the above procedure yields the second-order output.

Finally, the qth zero-order moments are obtained by integration over the manifold. Concatenating these orders of moments gives the overall representation of one sample, which can be input into trained classifiers such as an SVM or neural networks to accomplish expression classification.
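The moment computation described in this section can be sketched under explicit assumptions. The snippet below assumes the usual dyadic diffusion-wavelet construction (W_0 = I - P, W_j = P^(2^(j-1)) - P^(2^j), with low-pass P^(2^J)) built from a lazy, symmetrically normalized diffusion operator P, and it replaces integration over the manifold by a plain sum over graph nodes. The operator P, the scale and moment ranges, the toy graph, and all function names are illustrative and do not reproduce the exact parameterization used in this work.

```python
import math

def lazy_diffusion(w):
    """P = 0.5 * (I + D^(-1/2) W D^(-1/2)), an assumed diffusion operator."""
    n = len(w)
    deg = [sum(row) for row in w]
    return [[0.5 * ((1.0 if i == j else 0.0) + w[i][j] / math.sqrt(deg[i] * deg[j]))
             for j in range(n)] for i in range(n)]

def matvec(m, x):
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def diffuse(p, x, steps):
    """Apply P to x the given number of times."""
    for _ in range(steps):
        x = matvec(p, x)
    return x

def wavelet_bank(p, x, J):
    """Return [W_0 x, ..., W_J x] for the dyadic diffusion wavelets."""
    prev, cur = list(x), matvec(p, x)            # P^0 x and P^1 x
    out = [[a - b for a, b in zip(prev, cur)]]   # W_0 x = x - P x
    steps = 1
    for _ in range(1, J + 1):
        prev, cur = cur, diffuse(p, cur, steps)  # P^(2^(j-1)) x and P^(2^j) x
        out.append([a - b for a, b in zip(prev, cur)])
        steps *= 2
    return out

def moment(x, q):
    """q-th moment of |x|, summed over the graph nodes."""
    return sum(abs(v) ** q for v in x)

def scattering_moments(p, x, J=2, Q=2):
    qs = range(1, Q + 1)
    feats = [moment(x, q) for q in qs]                      # zero order
    first = wavelet_bank(p, x, J)
    for u in first:                                         # first order
        feats += [moment(u, q) for q in qs]
    for j1, u in enumerate(first):                          # second order, j1 < j2
        second = wavelet_bank(p, [abs(v) for v in u], J)
        for j2 in range(j1 + 1, J + 1):
            feats += [moment(second[j2], q) for q in qs]
    return feats

# Hypothetical weighted path graph with one feature value per node.
W = [[0.0, 1.0, 0.0, 0.0], [1.0, 0.0, 0.5, 0.0],
     [0.0, 0.5, 0.0, 2.0], [0.0, 0.0, 2.0, 0.0]]
P = lazy_diffusion(W)
x = [1.0, -2.0, 0.5, 3.0]
features = scattering_moments(P, x, J=2, Q=2)
```

The resulting `features` list is the concatenation of zero-, first-, and second-order moments for one signal; in the setting of this paper, one such vector per sample would be passed to the classifier.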