Improvement of AD-Census Algorithm Based on Stereo Vision

Abstract

1. Introduction

2. Focused Problems

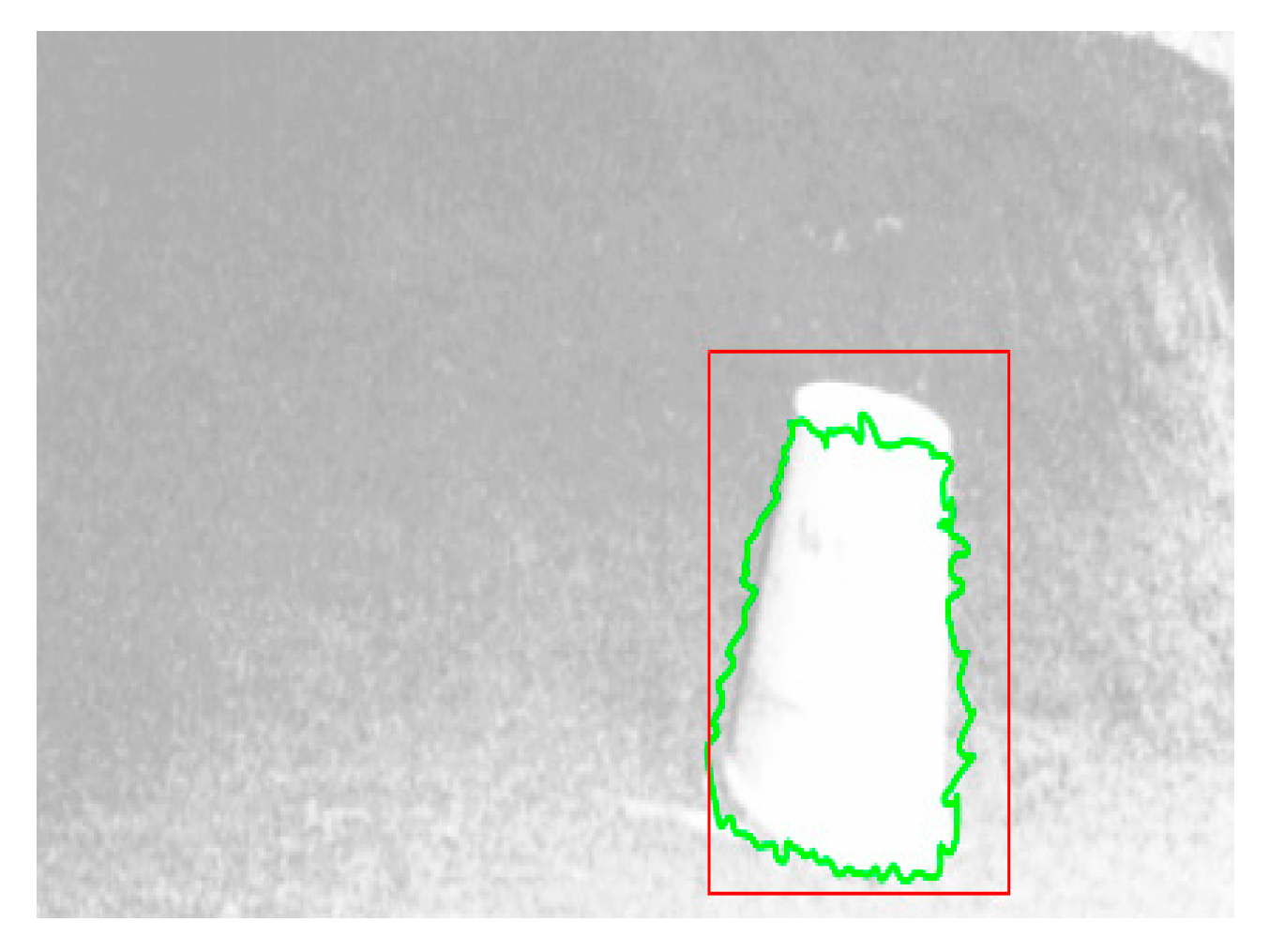

2.1. AD Algorithm

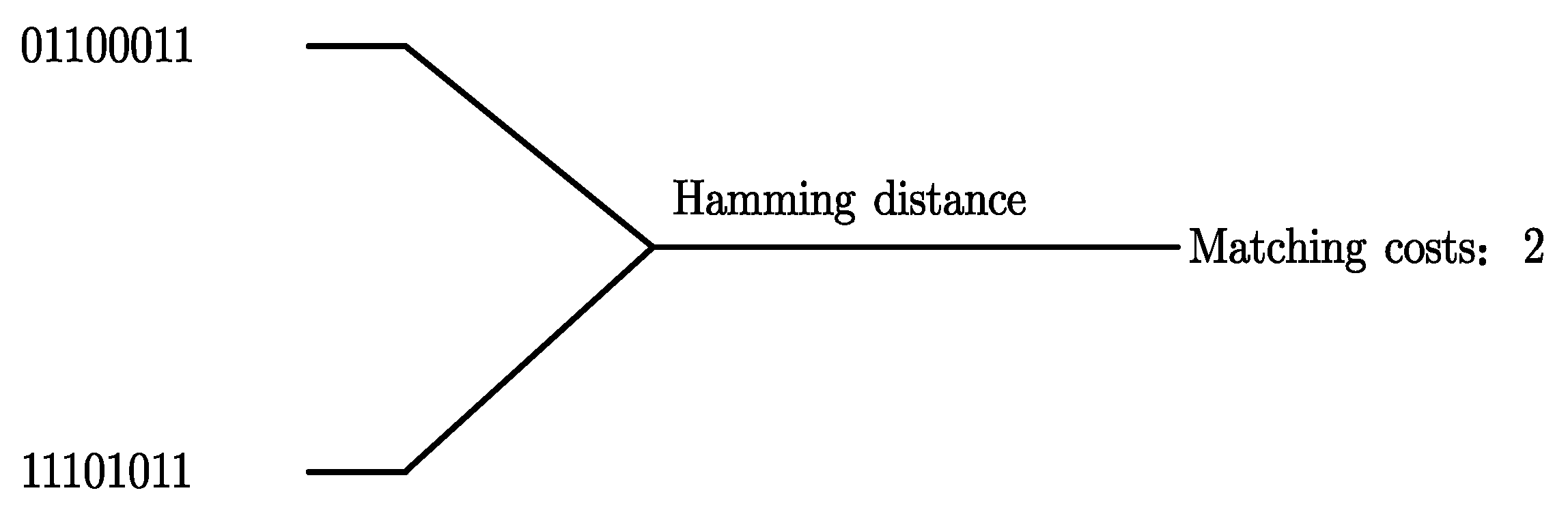

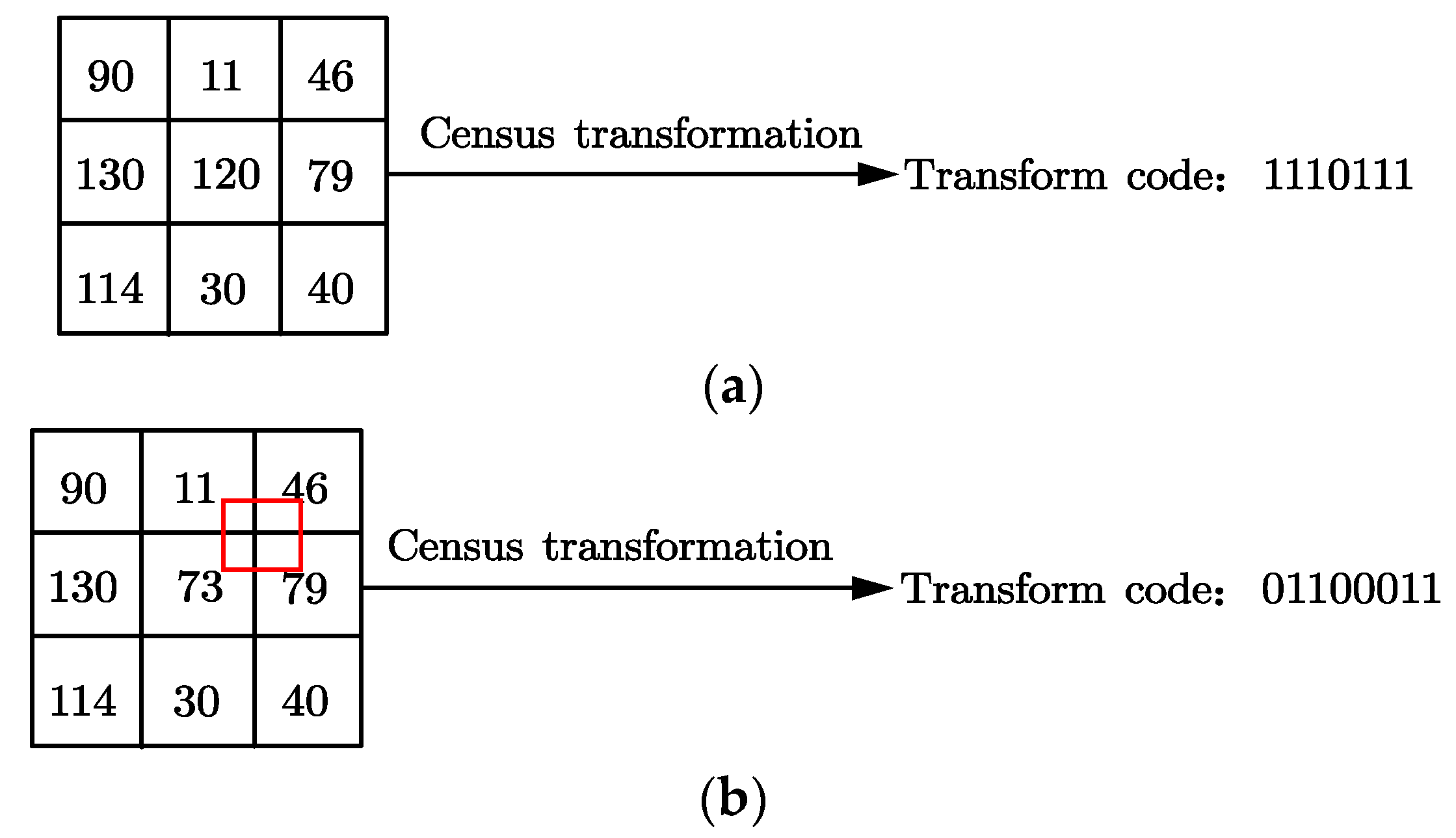

2.2. Census Algorithm

3. Improved AD-Census Algorithm

3.1. Noise Reduction

3.2. Adaptive Window

3.3. Improved AD-Census Algorithm

- (1)

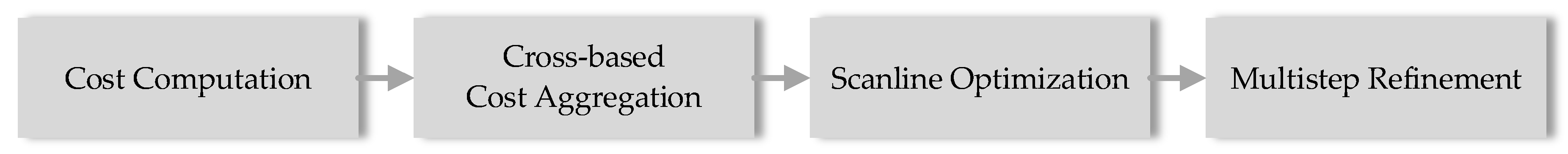

- Cost computation. The similarity between the left and right images is calculated and then evaluated. The AD algorithm and the Census algorithm are used to calculate the matching cost, respectively. The results of the two algorithms are fused to form the AD-Census cost. In the cost computation with the Census algorithm, the central value of each pixel point is replaced with the average value to achieve noise reduction and improve the matching accuracy.

- (2) Cross-based cost aggregation. Cost aggregation uses the same method as the original AD-Census algorithm, cross-based cost aggregation (CBCA), with two changes. First, only two iterations are performed instead of the four used in the original algorithm, and the growth directions also differ from CBCA: the first iteration grows horizontally and then vertically within the window, and the second iteration grows vertically and then horizontally. Second, the smaller of the two aggregated costs is taken as the final aggregated cost, whereas the original algorithm uses the result of its last iteration. In this way, the mismatching rate in regions of disparity discontinuity can be effectively reduced.

- (3) Scanline optimization. After cost aggregation, the aggregated costs are further optimized along image scanlines, and the most suitable disparity value is then selected for each pixel to form the disparity map.

- (4) Multistep refinement. Errors introduced in the first three steps are detected and eliminated, which improves the accuracy of the final disparity map.

Figure 4 shows the flow chart of the improved AD-Census algorithm.

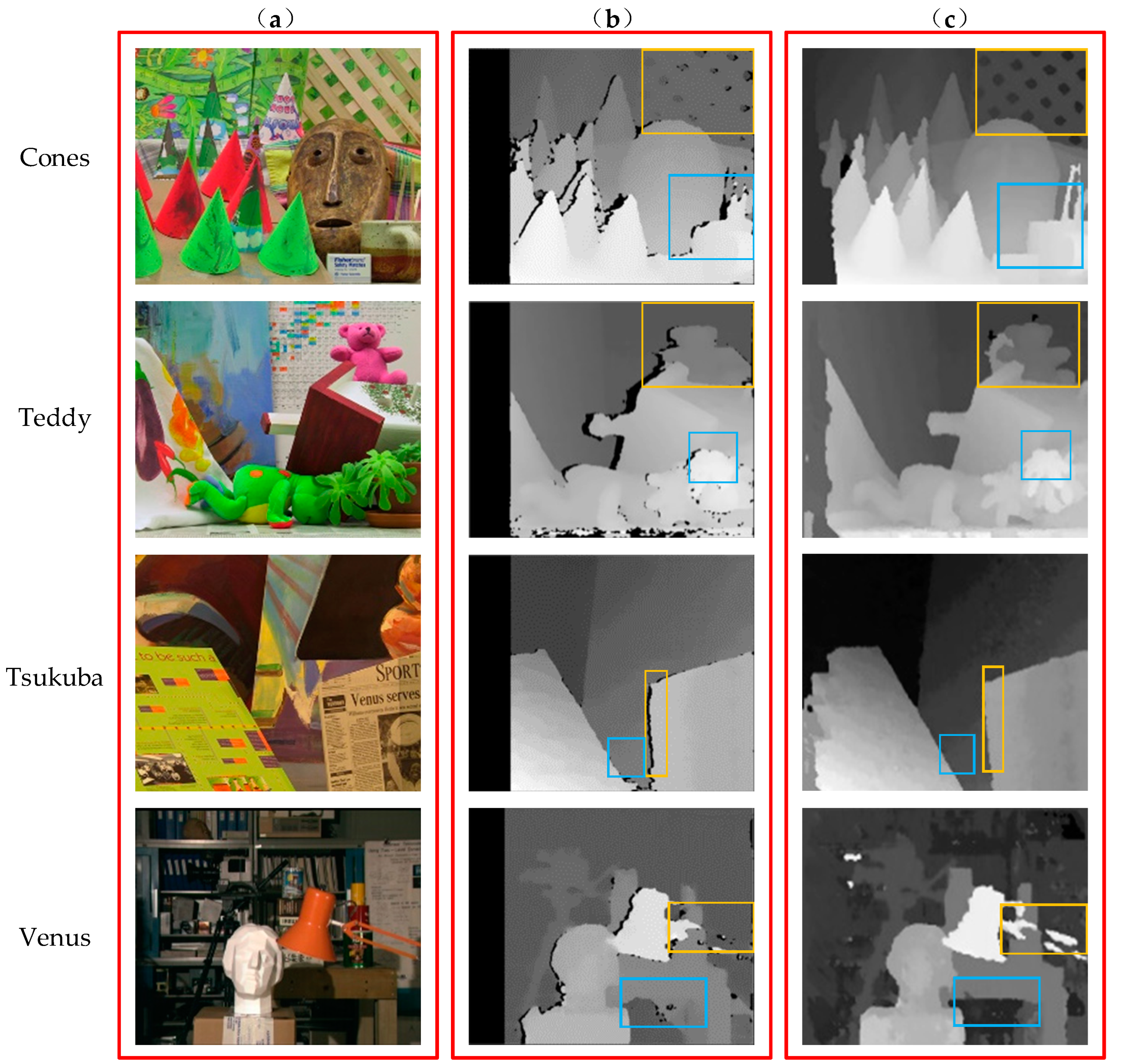
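The cost computation of step (1) can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: all function names are hypothetical, the Census window is a small 3 × 3 window, the "average value" is taken to be the mean of the Census window, and the fusion ρ(c, λ) = 1 − exp(−c/λ) with λ_AD = 10 and λ_Census = 30 follows the original AD-Census formulation and is assumed to carry over unchanged.

```python
import math

# Images are plain lists of rows. RGB tuples feed the AD term and grayscale
# values feed the Census term (an assumption made to keep the sketch small).


def ad_cost(left_rgb, right_rgb, x, y, d):
    """AD term: mean absolute RGB difference between left (x, y) and right (x - d, y)."""
    lp, rp = left_rgb[y][x], right_rgb[y][x - d]
    return sum(abs(a - b) for a, b in zip(lp, rp)) / 3.0


def mean_census_bits(gray, x, y, half=1):
    """Census bit string of the (2*half+1)^2 window centred on (x, y).

    Each pixel is compared with the window mean instead of the raw centre
    pixel, which dampens the effect of a noisy centre value on every bit.
    """
    window = [gray[y + j][x + i]
              for j in range(-half, half + 1)
              for i in range(-half, half + 1)]
    mean = sum(window) / len(window)
    return [1 if v < mean else 0 for v in window]


def census_cost(left_gray, right_gray, x, y, d, half=1):
    """Census term: Hamming distance between the two mean-centred bit strings."""
    a = mean_census_bits(left_gray, x, y, half)
    b = mean_census_bits(right_gray, x - d, y, half)
    return sum(p != q for p, q in zip(a, b))


def rho(c, lam):
    """Robust function mapping a cost into [0, 1) so AD and Census are comparable."""
    return 1.0 - math.exp(-c / lam)


def ad_census_cost(left_rgb, right_rgb, left_gray, right_gray, x, y, d,
                   lam_ad=10.0, lam_census=30.0, half=1):
    """Fused AD-Census cost for left pixel (x, y) at disparity d.

    The caller must keep every window inside both images (no border handling).
    """
    return (rho(census_cost(left_gray, right_gray, x, y, d, half), lam_census)
            + rho(ad_cost(left_rgb, right_rgb, x, y, d), lam_ad))
```

For a right image that is the left image shifted by one pixel, `ad_census_cost` returns 0.0 at d = 1 and a positive value at d = 0.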
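The two-pass aggregation of step (2) can be illustrated on a single disparity slice of the cost volume. The sketch assumes the simple arm rule of CBCA (one intensity threshold `tau` and a maximum arm length `max_len`, with hypothetical values 20 and 5; the original AD-Census uses a more elaborate rule) and assumes the aggregated cost is normalized by the number of pixels in the support region. The horizontal-then-vertical pass, the vertical-then-horizontal pass and the element-wise minimum follow the description of step (2).

```python
def cross_arms(gray, tau=20, max_len=5):
    """Return (left, right, up, down) arm lengths for every pixel.

    An arm keeps growing while the next pixel differs from the anchor pixel by
    less than tau and the arm is shorter than max_len (simplified CBCA rule).
    """
    h, w = len(gray), len(gray[0])
    arms = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            lengths = []
            for dx, dy in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                n = 0
                while n < max_len:
                    qx, qy = x + dx * (n + 1), y + dy * (n + 1)
                    if not (0 <= qx < w and 0 <= qy < h):
                        break
                    if abs(gray[qy][qx] - gray[y][x]) >= tau:
                        break
                    n += 1
                lengths.append(n)
            arms[y][x] = tuple(lengths)
    return arms


def _segment(arm, x, y, horizontal):
    """Pixels (row, col) on the horizontal or vertical arm segment through (x, y)."""
    left, right, up, down = arm
    if horizontal:
        return [(y, i) for i in range(x - left, x + right + 1)]
    return [(j, x) for j in range(y - up, y + down + 1)]


def aggregate_pass(cost, arms, horizontal_first):
    """One aggregation pass over a single disparity slice cost[y][x].

    Costs are first summed along one arm direction, then those partial sums are
    summed along the other direction, and the total is divided by the pixel count.
    """
    h, w = len(cost), len(cost[0])
    part = [[0.0] * w for _ in range(h)]
    count = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            cells = _segment(arms[y][x], x, y, horizontal_first)
            part[y][x] = sum(cost[j][i] for j, i in cells)
            count[y][x] = len(cells)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            cells = _segment(arms[y][x], x, y, not horizontal_first)
            total = sum(part[j][i] for j, i in cells)
            n = sum(count[j][i] for j, i in cells)
            out[y][x] = total / n
    return out


def aggregate_two_pass(cost, arms):
    """Run both growth orders and keep the smaller aggregated cost per pixel."""
    hv = aggregate_pass(cost, arms, horizontal_first=True)
    vh = aggregate_pass(cost, arms, horizontal_first=False)
    return [[min(a, b) for a, b in zip(r1, r2)] for r1, r2 in zip(hv, vh)]
```

In a full pipeline, `aggregate_two_pass` would be called once per disparity level with the same arms, since the arms depend only on the reference image.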
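Scanline optimization as in step (3) is commonly implemented with the semi-global matching recurrence L_r(p, d) = C(p, d) + min(L_r(p − r, d), L_r(p − r, d ± 1) + P1, min_k L_r(p − r, k) + P2) − min_k L_r(p − r, k). The sketch below applies it along a single left-to-right scanline with fixed, assumed penalties P1 and P2; the original AD-Census combines several scanline directions and adapts the penalties to local colour differences, so this is a simplification. A winner-takes-all step then picks the disparity with the lowest cost for each pixel.

```python
def scanline_left_to_right(row_costs, p1=1.0, p2=3.0):
    """Semi-global style path costs along one image row, scanned left to right.

    row_costs[x][d] is the aggregated cost of pixel x at disparity d.
    """
    n_disp = len(row_costs[0])
    path = [list(row_costs[0])]  # the first pixel has no predecessor
    for x in range(1, len(row_costs)):
        prev = path[-1]
        prev_min = min(prev)
        current = []
        for d in range(n_disp):
            best = prev[d]  # same disparity as the previous pixel: no penalty
            if d > 0:
                best = min(best, prev[d - 1] + p1)  # disparity change of one
            if d < n_disp - 1:
                best = min(best, prev[d + 1] + p1)
            best = min(best, prev_min + p2)  # any larger jump
            # Subtracting prev_min keeps the values bounded along the path.
            current.append(row_costs[x][d] + best - prev_min)
        path.append(current)
    return path


def winner_takes_all(costs):
    """Index of the lowest cost for every pixel (ties go to the smaller disparity)."""
    return [min(range(len(c)), key=c.__getitem__) for c in costs]
```

With raw costs `[[0, 5, 5], [0, 5, 5], [5, 4.9, 5]]`, winner-takes-all alone picks d = 1 for the third pixel by a small margin, while after `scanline_left_to_right` the smoothness penalty makes it pick d = 0, consistent with its neighbours.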
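One refinement operation used by the original AD-Census algorithm is a left-right consistency check that flags outliers; the text does not list which checks the improved algorithm applies in step (4), so the following is only an illustrative sketch. It assumes that a disparity map has also been computed for the right image, uses an exact-match tolerance (`tol = 0`, an assumption), and replaces the more elaborate voting and interpolation steps of the original with a simple hypothetical background fill.

```python
def left_right_check(disp_left, disp_right, tol=0):
    """Flag pixels whose left-image disparity is confirmed by the right map.

    Pixel (x, y) with disparity d in the left map corresponds to (x - d, y) in
    the right map; it is kept only if the right map gives the same d (within tol).
    """
    h, w = len(disp_left), len(disp_left[0])
    valid = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            d = disp_left[y][x]
            xr = x - d
            if 0 <= xr < w and abs(disp_right[y][xr] - d) <= tol:
                valid[y][x] = True
    return valid


def fill_invalid(disp, valid):
    """Replace each invalid pixel with the smaller of the nearest valid
    disparities to its left and right on the same row.

    The smaller disparity is preferred because flagged pixels are often
    occluded background, which lies farther away than the occluding object.
    """
    out = [row[:] for row in disp]
    for y, row in enumerate(disp):
        w = len(row)
        for x in range(w):
            if valid[y][x]:
                continue
            candidates = []
            for step in (-1, 1):
                i = x + step
                while 0 <= i < w and not valid[y][i]:
                    i += step
                if 0 <= i < w:
                    candidates.append(row[i])
            if candidates:
                out[y][x] = min(candidates)
    return out
```

For the single-row maps `[[2, 1, 4, 2]]` (left) and `[[1, 2, 1, 1]]` (right), the first and third pixels fail the check and are filled, giving `[[1, 1, 1, 2]]`.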

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Image Set/s

| Algorithm | Cones | Teddy | Tsukuba | Venus |
|---|---|---|---|---|
| Traditional AD-Census algorithm | 3.149 | 2.854 | 1.912 | 2.755 |
| Improved AD-Census algorithm | 3.024 | 2.578 | 1.802 | 2.736 |

MSE

| Algorithm | Cones | Teddy | Tsukuba | Venus |
|---|---|---|---|---|
| Traditional AD-Census algorithm | 7989.979 | 10,155.730 | 4812.042 | 4309.310 |
| Improved AD-Census algorithm | 6403.497 | 7030.245 | 2929.029 | 3446.573 |

PSNR (dB)

| Algorithm | Cones | Teddy | Tsukuba | Venus |
|---|---|---|---|---|
| Traditional AD-Census algorithm | 27.755 | 27.874 | 27.888 | 27.802 |
| Improved AD-Census algorithm | 28.080 | 28.005 | 27.979 | 27.965 |

SSIM

| Algorithm | Cones | Teddy | Tsukuba | Venus |
|---|---|---|---|---|
| Traditional AD-Census algorithm | 0.138 | 0.231 | 0.239 | 0.173 |
| Improved AD-Census algorithm | 0.240 | 0.324 | 0.348 | 0.241 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Gu, M.; Zhu, Y.; Chen, G.; Xu, Z.; Guo, Y. Improvement of AD-Census Algorithm Based on Stereo Vision. Sensors 2022, 22, 6933. https://doi.org/10.3390/s22186933


Chicago/Turabian Style: Wang, Yina, Mengjiao Gu, Yufeng Zhu, Gang Chen, Zhaodong Xu, and Yingqing Guo. 2022. "Improvement of AD-Census Algorithm Based on Stereo Vision." Sensors 22, no. 18: 6933. https://doi.org/10.3390/s22186933

APA Style: Wang, Y., Gu, M., Zhu, Y., Chen, G., Xu, Z., & Guo, Y. (2022). Improvement of AD-Census Algorithm Based on Stereo Vision. Sensors, 22(18), 6933. https://doi.org/10.3390/s22186933