Fast Underwater Optical Beacon Finding and High Accuracy Visual Ranging Method Based on Deep Learning

Abstract

1. Introduction

- The water pressure on the AUV increases with its navigation depth and volume. Therefore, it is necessary to reduce the size of the AUV and improve the ranging accuracy of the monocular camera. This places high demands on the long-distance identification of small optical beacons, which existing target detection algorithms struggle to meet;

- As the AUV working distance increases and the optical beacons become smaller, each light source of the beacon occupies only a few pixels in the image, which makes it very difficult to locate the pixel coordinates of the light source centroid;

- Traditional Perspective-n-Point (PnP) algorithms used to calculate the optical beacon pose have low accuracy for long-distance targets.
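Why the light sources shrink to a few pixels follows from the pinhole camera model: an object of size D at range Z images to roughly f·D/Z pixels, where f is the focal length expressed in pixels. The sketch below evaluates this relation; the 6 mm lens, 3 µm pixel pitch and 20 mm LED are hypothetical values chosen for illustration, not the paper's hardware, and the model ignores refraction at the camera housing port as well as blur from underwater scattering.

```python
def focal_length_px(focal_length_mm, pixel_pitch_um):
    """Convert a lens focal length (mm) to pixels using the sensor pixel pitch (um)."""
    return focal_length_mm * 1000.0 / pixel_pitch_um


def image_size_px(object_size_mm, distance_mm, f_px):
    """Pinhole-model image size, in pixels, of an object of the given size at the given range."""
    return f_px * object_size_mm / distance_mm


if __name__ == "__main__":
    f_px = focal_length_px(6.0, 3.0)  # hypothetical 6 mm lens with 3 um pixels -> 2000 px
    for distance_m in (2, 5, 10):
        size = image_size_px(20.0, distance_m * 1000.0, f_px)  # hypothetical 20 mm LED
        print(f"{distance_m:>2} m: {size:.1f} px")
```

With these assumed values the LED spans 20.0 px at 2 m, 8.0 px at 5 m and only 4.0 px at 10 m, which is the regime where centroid localization becomes difficult.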

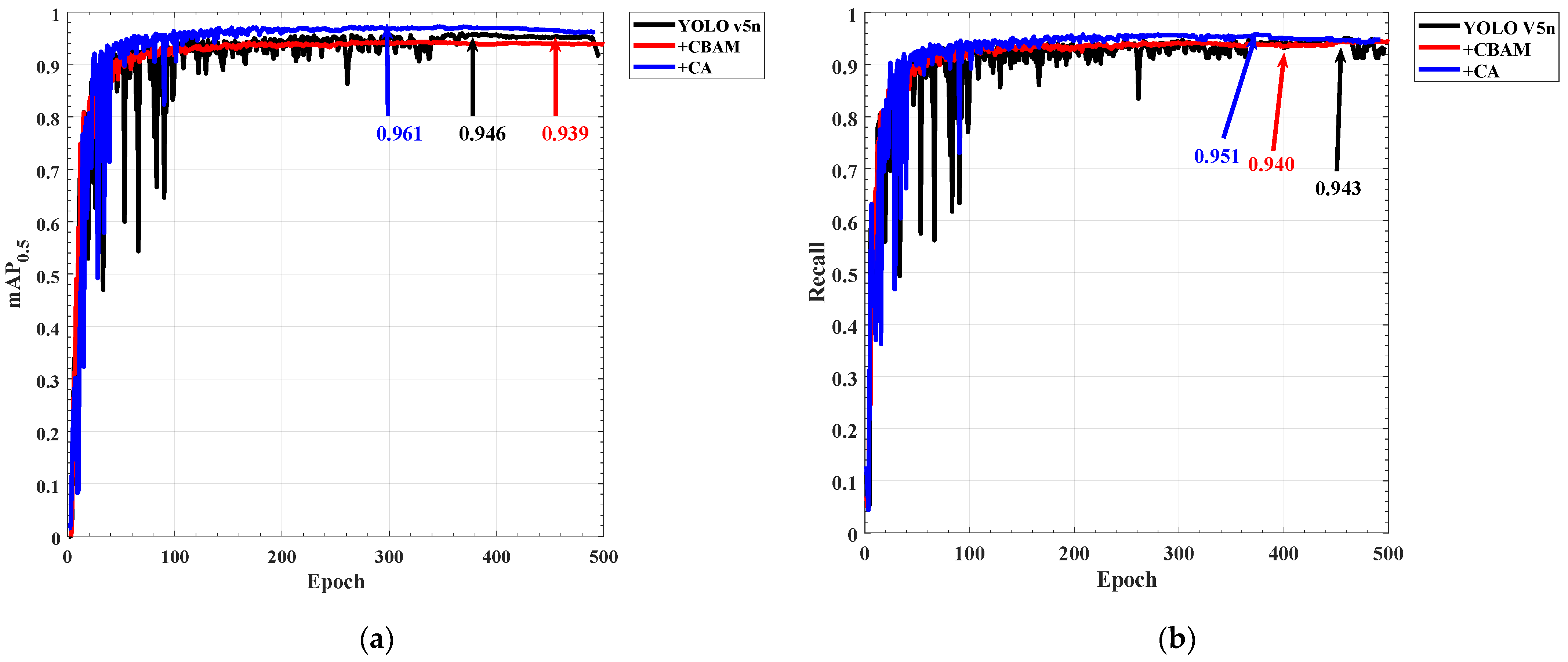

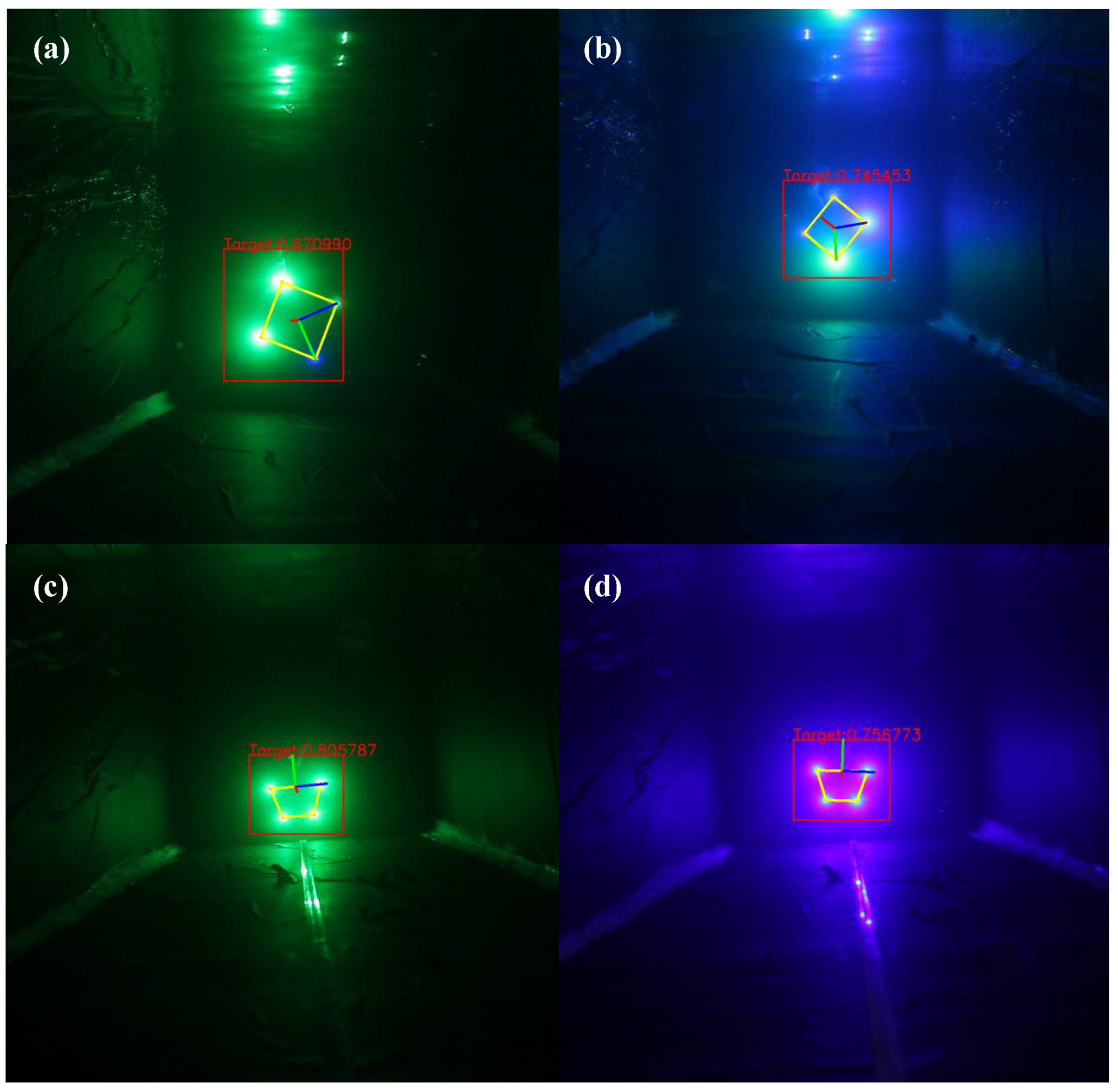

- To solve the problem of long-distance recognition of small optical beacons, YOLO V5 is used as the baseline network [20], Coordinate Attention (CA) [22] and the Convolutional Block Attention Module (CBAM) [21] are added for comparison, and the networks are trained on a self-made underwater optical beacon dataset. The results show that YOLO V5 with CA added achieves good detection accuracy for small optical beacons while the network depth remains relatively shallow, and that it overcomes the difficulty of extracting small optical beacons at 10 m underwater;
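To make the Coordinate Attention idea concrete, the following pure-Python sketch performs one CA forward pass in the spirit of Hou et al. [22]: the feature map is average-pooled along the width and along the height separately, the two pooled strips are concatenated and passed through a shared 1×1 convolution, split back, expanded by two direction-specific 1×1 convolutions, and turned into sigmoid gates that reweight every position by its row and column. It is a simplified sketch, not the paper's implementation: batch normalization is omitted, ReLU is assumed as the non-linearity, the weights are hypothetical, and where the module is placed inside YOLO V5 is not shown.

```python
import math


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def conv1x1(weights, feat):
    """1x1 convolution over an (in_ch x L) map: weights are (out_ch x in_ch), no bias."""
    length = len(feat[0])
    return [[sum(w * feat[i][j] for i, w in enumerate(row)) for j in range(length)]
            for row in weights]


def coordinate_attention(x, w_shared, w_h, w_w):
    """One simplified Coordinate Attention pass on a C x H x W feature map (nested lists).

    w_shared: (C/r x C) shared reduction conv; w_h, w_w: (C x C/r) expansion convs.
    Assumptions: no batch normalization, ReLU as the non-linearity.
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # Directional average pooling: one value per row (z_h) and one per column (z_w).
    z_h = [[sum(x[c][i]) / W for i in range(H)] for c in range(C)]
    z_w = [[sum(x[c][i][j] for i in range(H)) / H for j in range(W)] for c in range(C)]
    # Concatenate along the spatial axis -> C x (H + W), then shared 1x1 conv + ReLU.
    z = [z_h[c] + z_w[c] for c in range(C)]
    f = [[max(0.0, v) for v in row] for row in conv1x1(w_shared, z)]
    # Split back into height and width parts and expand each to C channels.
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, [r[:H] for r in f])]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, [r[H:] for r in f])]
    # Reweight each position by its row attention and its column attention.
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(W)]
             for i in range(H)] for c in range(C)]
```

Because the two gates keep separate row and column information, a bright spot only a few pixels wide can receive a high weight at its exact coordinates rather than a single channel-wide weight, which is the property that makes CA attractive for small targets.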

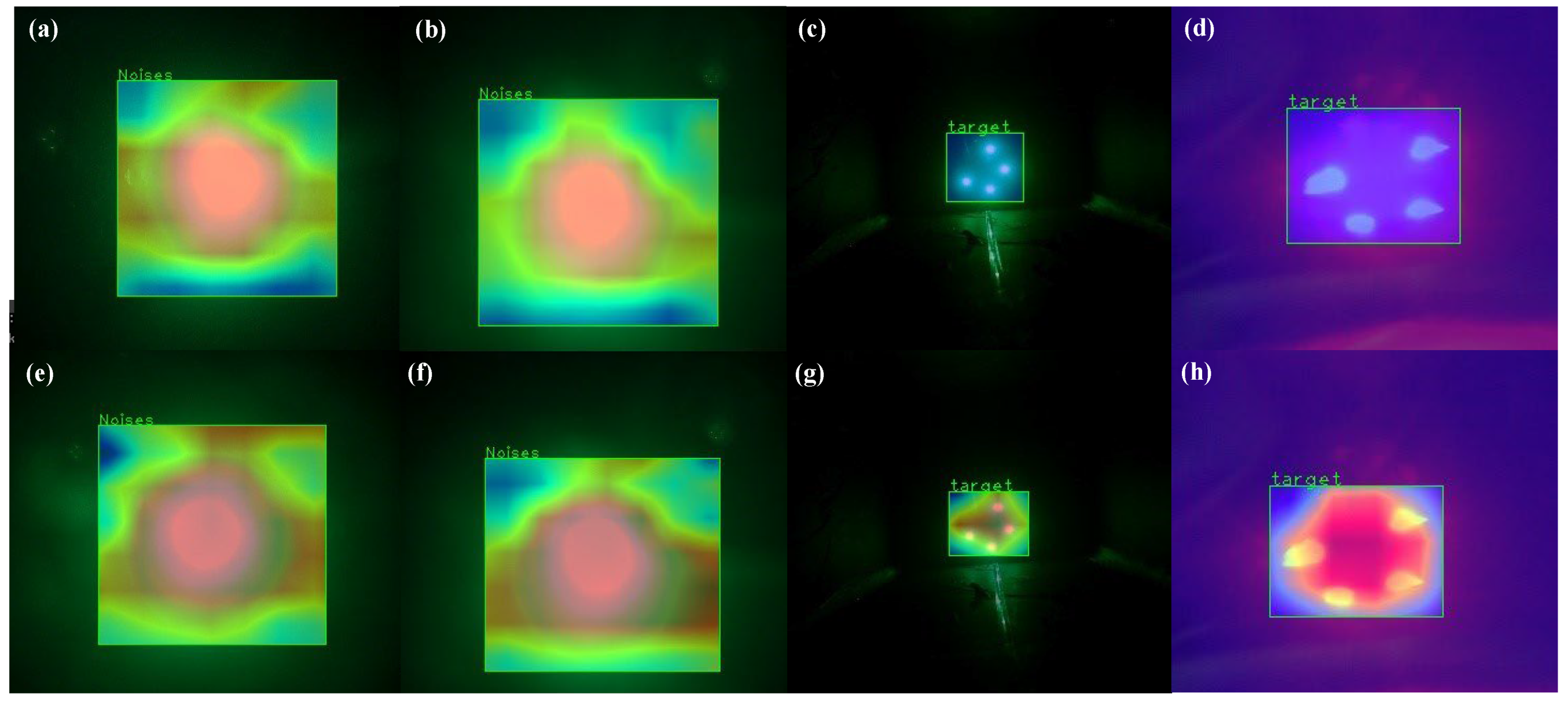

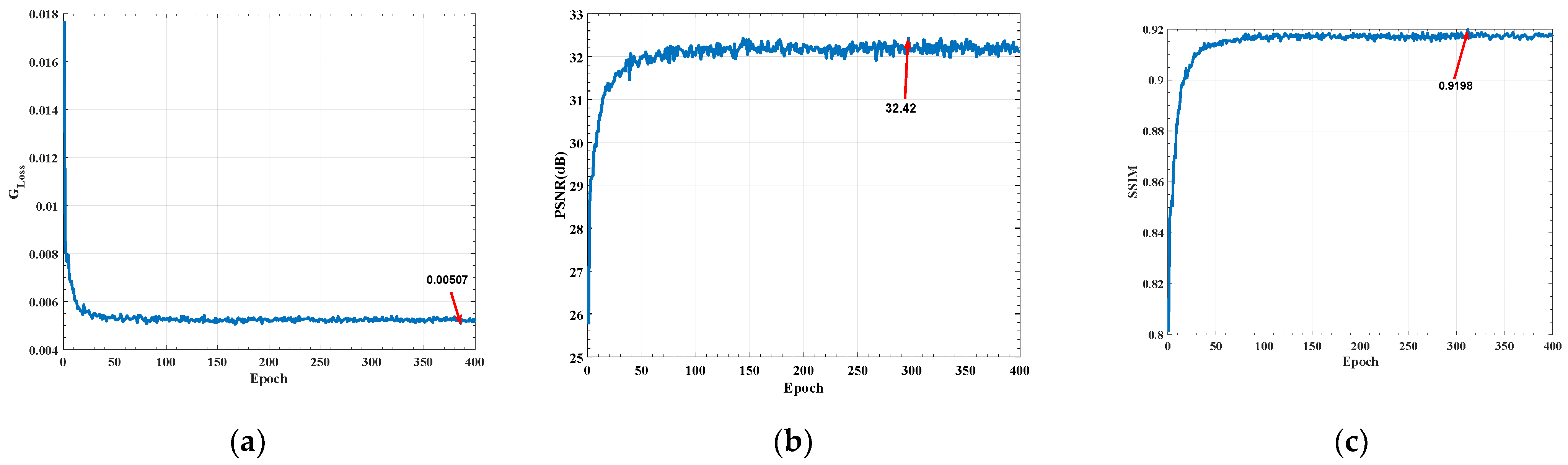

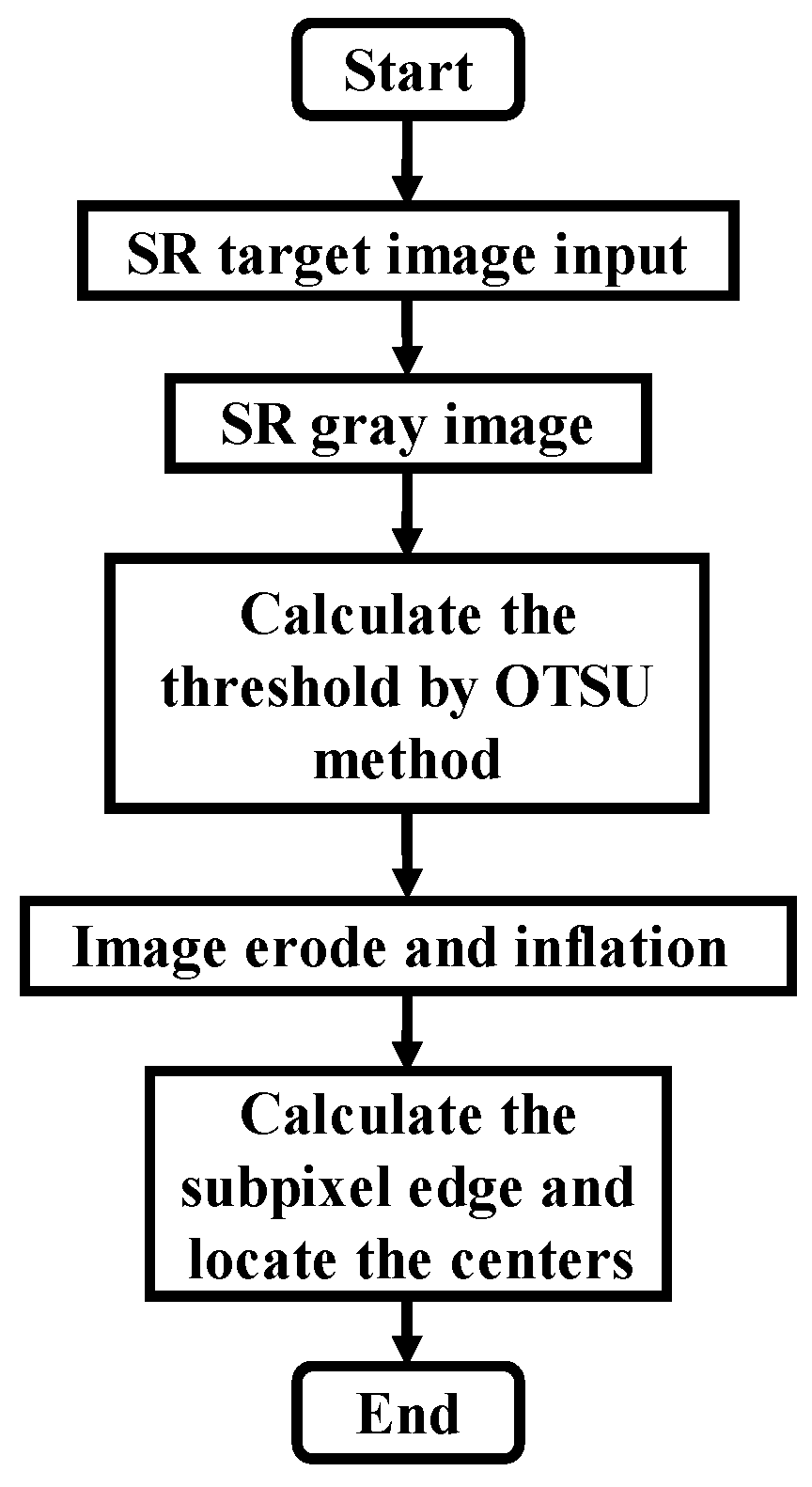

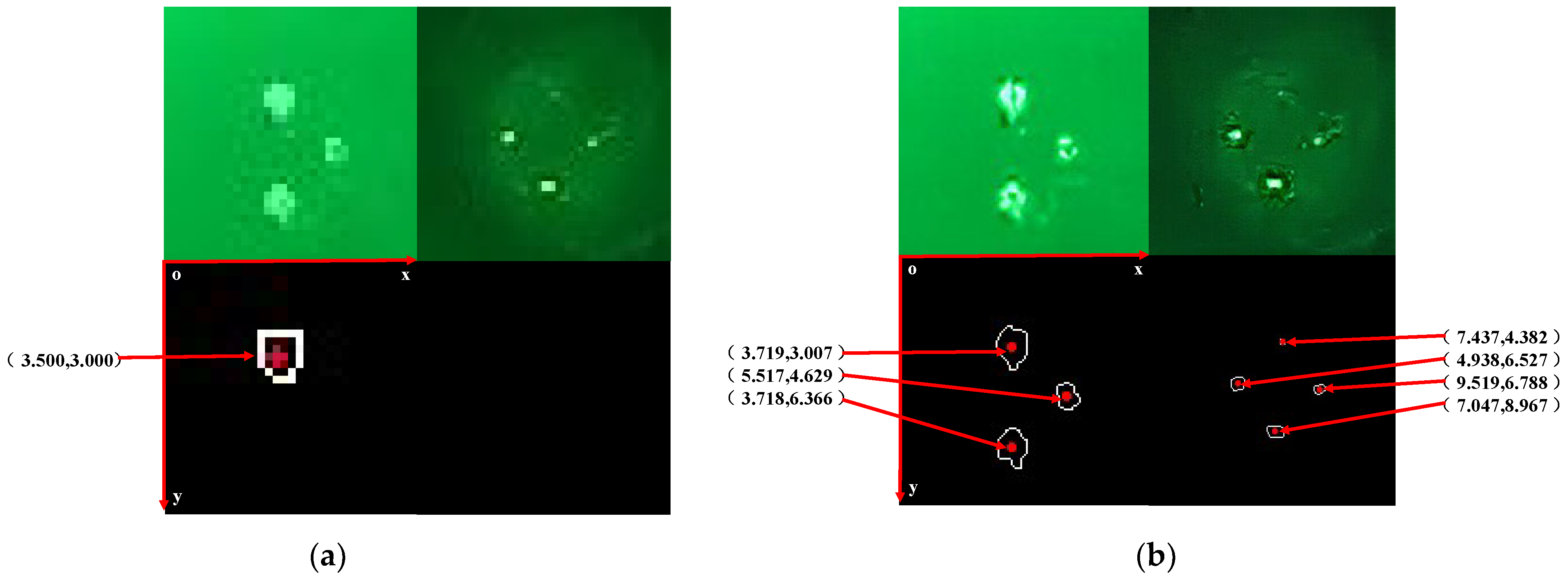

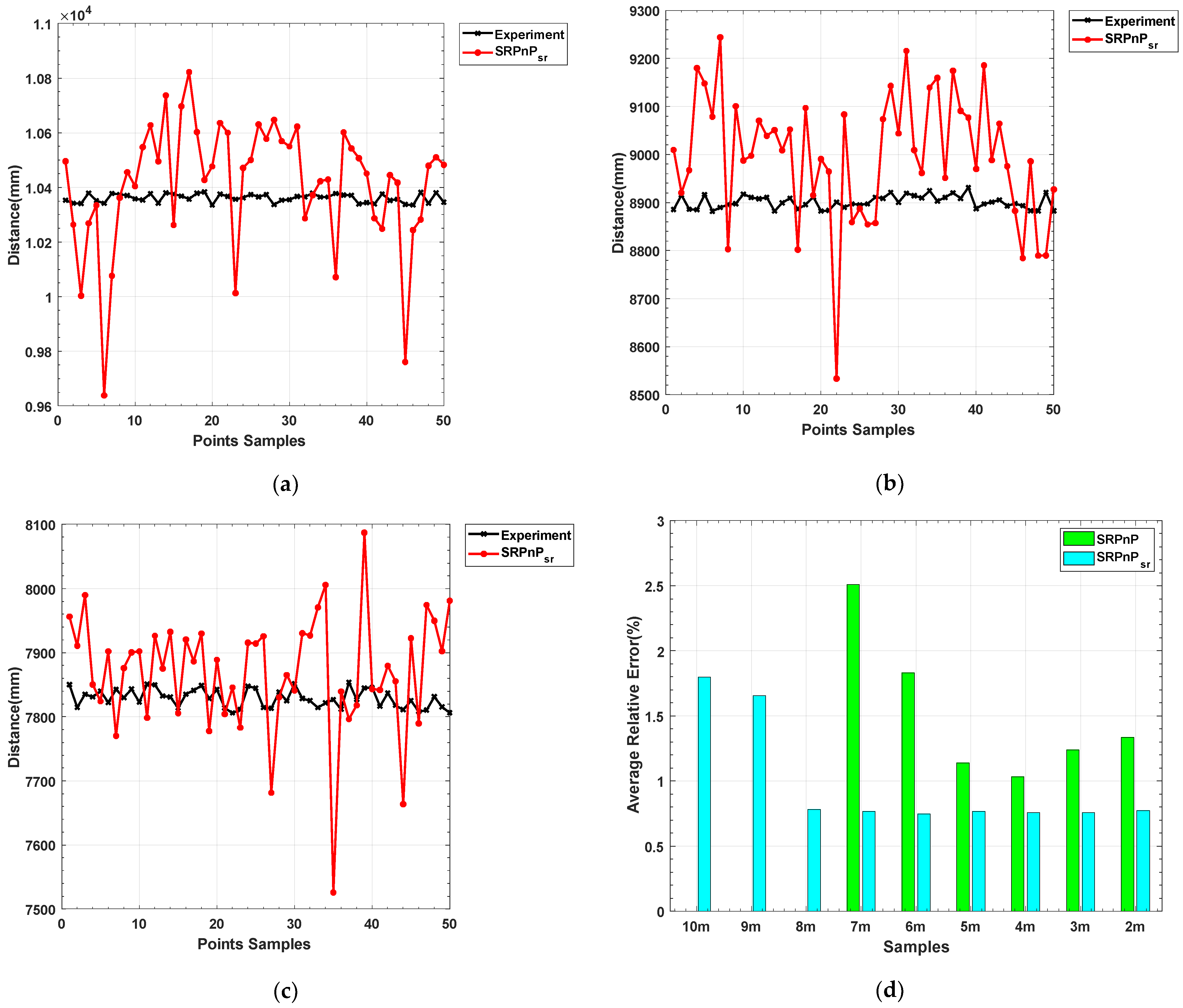

- To solve the difficulty of obtaining pixel coordinates when the feature points of small optical beacons occupy only a few pixels, a Super-Resolution Generative Adversarial Network (SRGAN) [23] is introduced into the detection process. The sub-pixel coordinates of the light source centroid are then obtained through Otsu adaptive threshold segmentation [25] and a sub-pixel centroid extraction algorithm based on Zernike moments [24]. The results show that combining super-resolution with sub-pixel extraction locates the pixel coordinates of the target light source well when the image is reconstructed with 4× upscaling;
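The segmentation step can be illustrated with Otsu's method, which picks the grey level that maximizes the between-class variance of the histogram. For the centroid, this sketch deliberately substitutes a simpler intensity-weighted (grey) centroid for the Zernike-moment sub-pixel method described above, and it does not include the SRGAN upscaling; it only shows how thresholding followed by a weighted average yields coordinates finer than one pixel. The test image is hypothetical.

```python
def otsu_threshold(image):
    """Otsu threshold t for an 8-bit grayscale image (list of rows); foreground is p > t."""
    hist = [0] * 256
    for row in image:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # intensity sum of those pixels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


def weighted_centroid(image, threshold):
    """Intensity-weighted centroid (x, y) of pixels above the threshold, in sub-pixel units."""
    total = sx = sy = 0.0
    for y, row in enumerate(image):
        for x, p in enumerate(row):
            if p > threshold:
                total += p
                sx += p * x
                sy += p * y
    if total == 0:
        raise ValueError("no foreground pixels above threshold")
    return sx / total, sy / total
```

On a hypothetical 5×5 patch with a background of 10 and two adjacent bright pixels of 200 (at x = 2) and 100 (at x = 3), Otsu selects t = 10 and the centroid lands at x ≈ 2.33, between the two pixels and closer to the brighter one.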

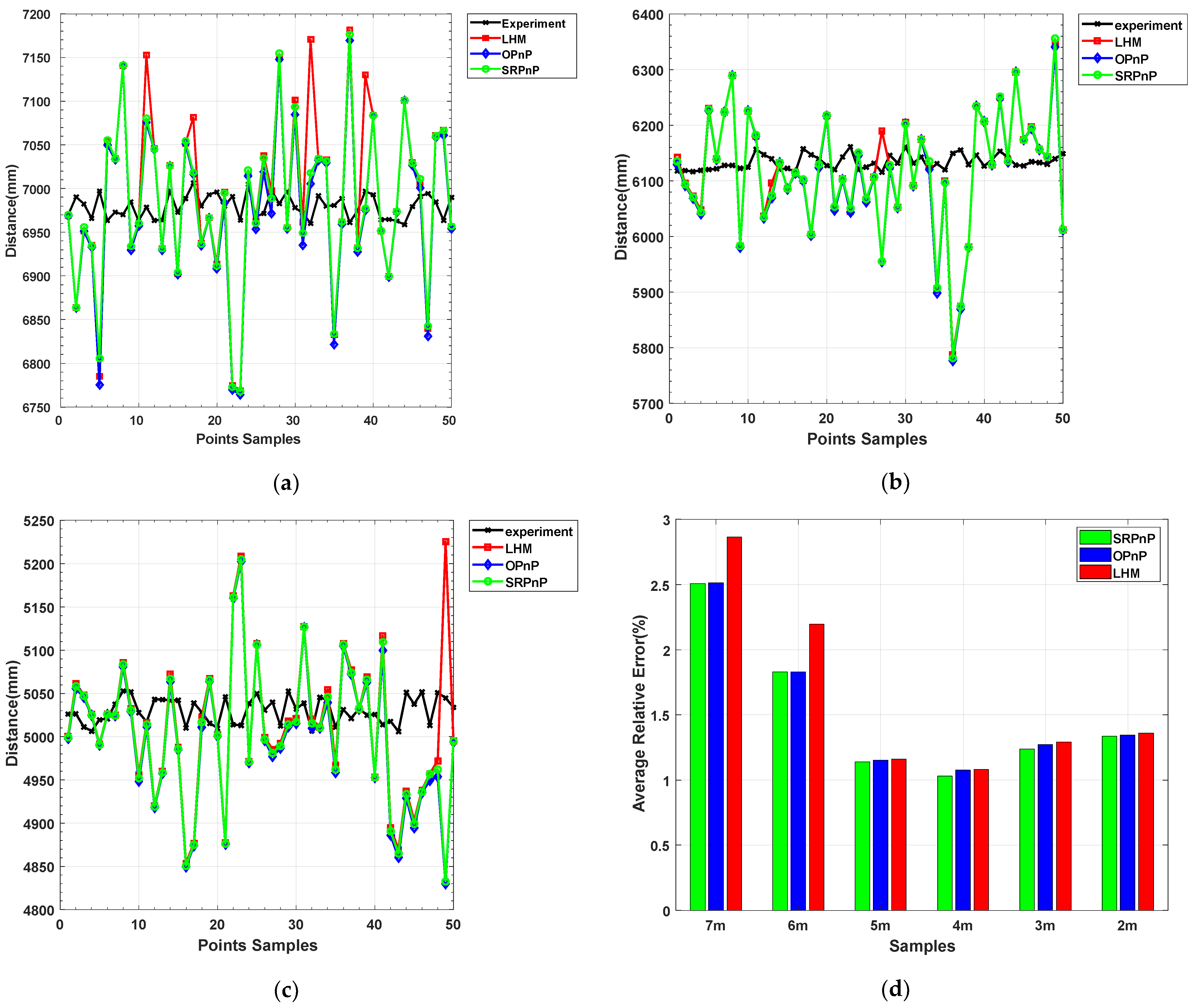

- To solve the problem of inaccurate pose calculation for small optical beacons, a simple and robust perspective-n-point algorithm (SRPnP) [28] is used as the pose solution method and compared with the optimal non-iterative PnP solution (OPnP) [27] and LHM [26], one of the best iterative methods, which is globally convergent in the ordinary case.
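SRPnP, OPnP and LHM are too involved for a short example, so the sketch below uses a simpler, classical stand-in to show what "solving the pose of a coplanar four-point beacon" produces: it estimates the homography between the beacon plane (Z = 0) and normalized image coordinates from exactly four correspondences, then decomposes it into rotation columns r1, r2, r3 and translation t, whose norm is the ranging distance. This is homography decomposition, not one of the three methods compared in the paper. The intrinsics fx, fy, cx, cy and the beacon geometry are hypothetical, and the data are assumed noise-free; with real detections r1 and r2 are not exactly orthonormal, so a practical solver would re-orthogonalize and refine the pose.

```python
import math


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def planar_pose(world_xy, image_uv, fx, fy, cx, cy):
    """Pose of a planar (Z = 0) target from exactly 4 correspondences via homography.

    Returns (r1, r2, r3, t): rotation columns and translation in world_xy units.
    """
    rows, rhs = [], []
    for (X, Y), (u, v) in zip(world_xy, image_uv):
        xn, yn = (u - cx) / fx, (v - cy) / fy  # normalized camera coordinates
        rows.append([X, Y, 1.0, 0.0, 0.0, 0.0, -xn * X, -xn * Y])
        rhs.append(xn)
        rows.append([0.0, 0.0, 0.0, X, Y, 1.0, -yn * X, -yn * Y])
        rhs.append(yn)
    h = solve_linear(rows, rhs) + [1.0]  # homography entries, h33 fixed to 1

    def column(k):
        return [h[k], h[3 + k], h[6 + k]]

    def norm(vec):
        return math.sqrt(sum(e * e for e in vec))

    g1, g2, g3 = column(0), column(1), column(2)
    # H = [r1 r2 t] / t_z, so the unit-norm rotation columns fix the scale (= depth t_z).
    scale = 2.0 / (norm(g1) + norm(g2))
    r1 = [scale * e for e in g1]
    r2 = [scale * e for e in g2]
    r3 = [r1[1] * r2[2] - r1[2] * r2[1],
          r1[2] * r2[0] - r1[0] * r2[2],
          r1[0] * r2[1] - r1[1] * r2[0]]  # r3 = r1 x r2
    t = [scale * e for e in g3]
    return r1, r2, r3, t
```

Fixing h33 = 1 implicitly assumes the beacon lies in front of the camera, which always holds for a docking target.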

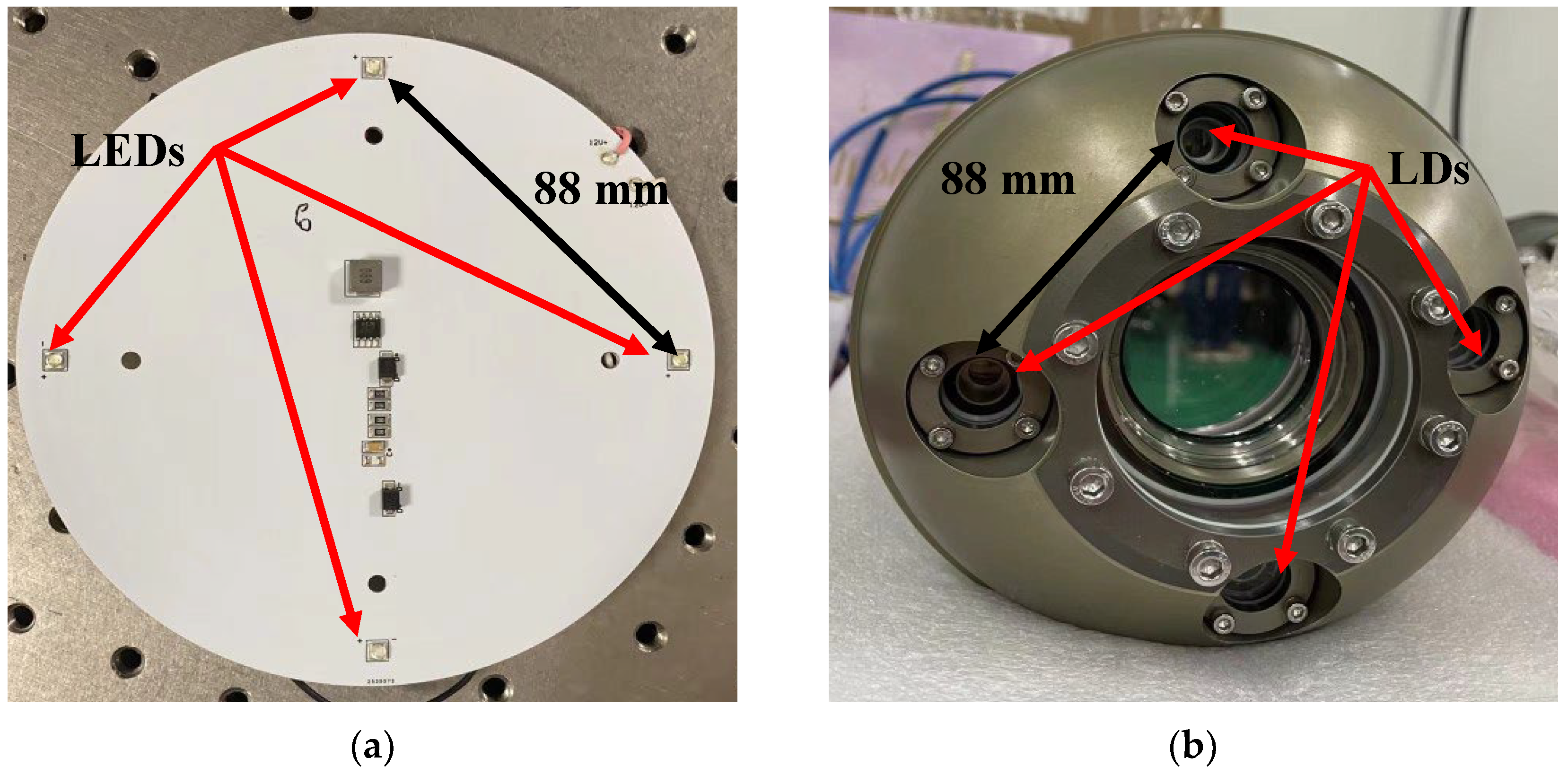

2. Experimental Equipment and Testing Devices

3. Underwater Optical Beacon Target Detection and Light Source Centroid Location Method

3.1. Underwater Target Detection Method Based on YOLO V5

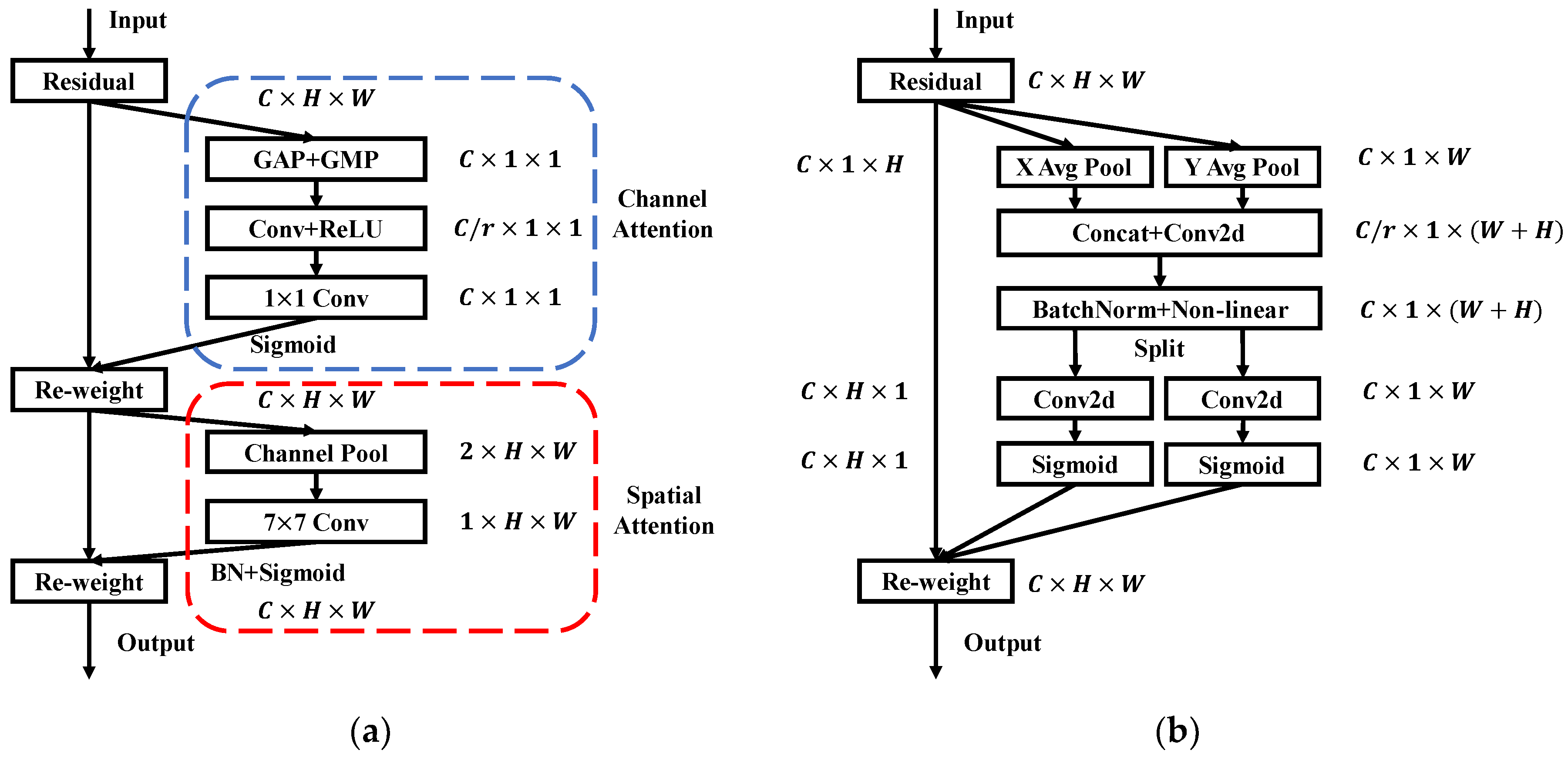

3.2. YOLO V5 with Attention Modules

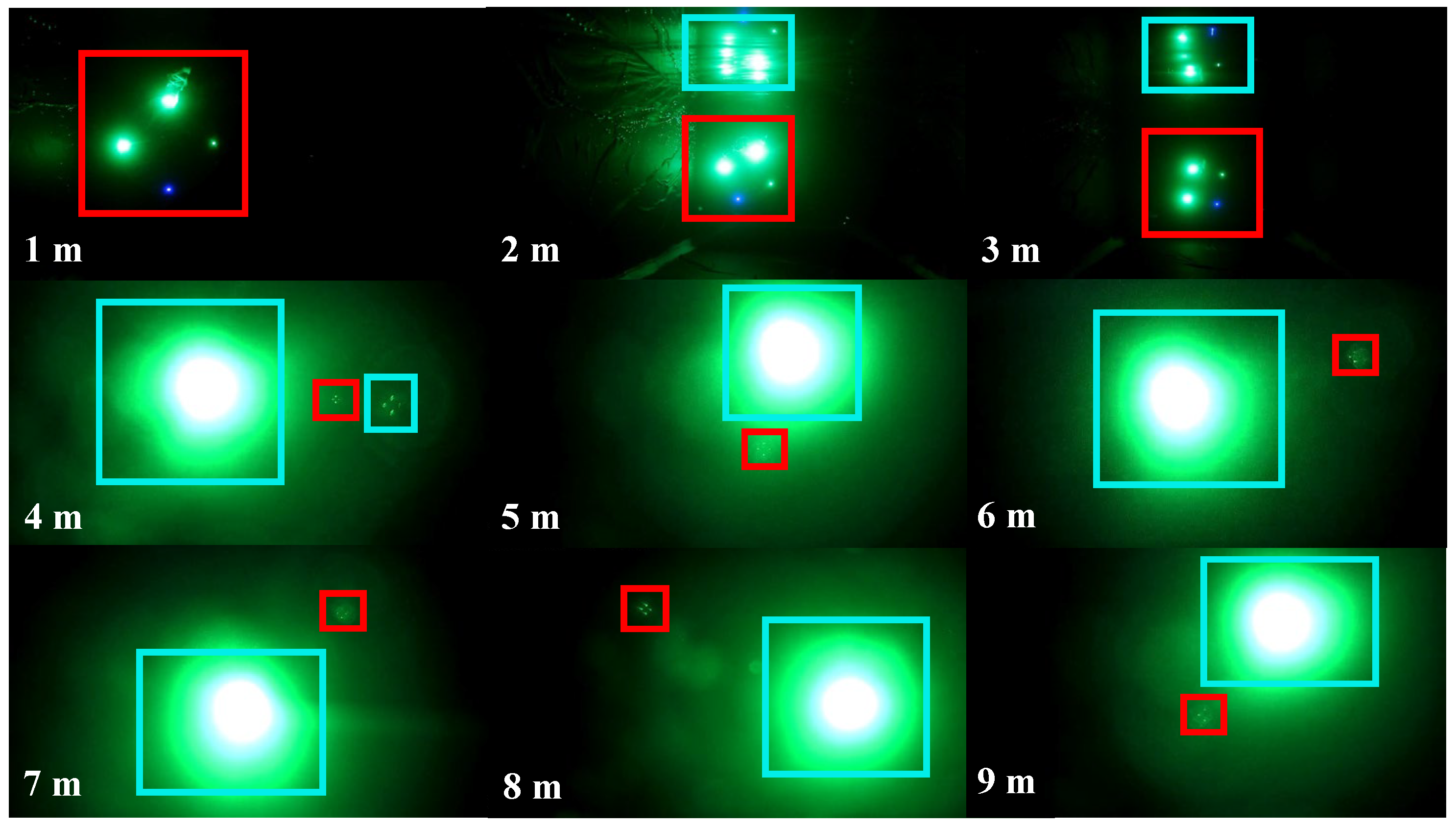

3.3. SRGAN and Zernike Moments-Based Sub-Pixel Optical Center Positioning Method

4. Experiments on Algorithm Accuracy and Performance

- Compare the translation distance errors obtained by the traditional PnP algorithms (OPnP and LHM) and by SRPnP when solving the pose of the coplanar four-point small optical beacon;

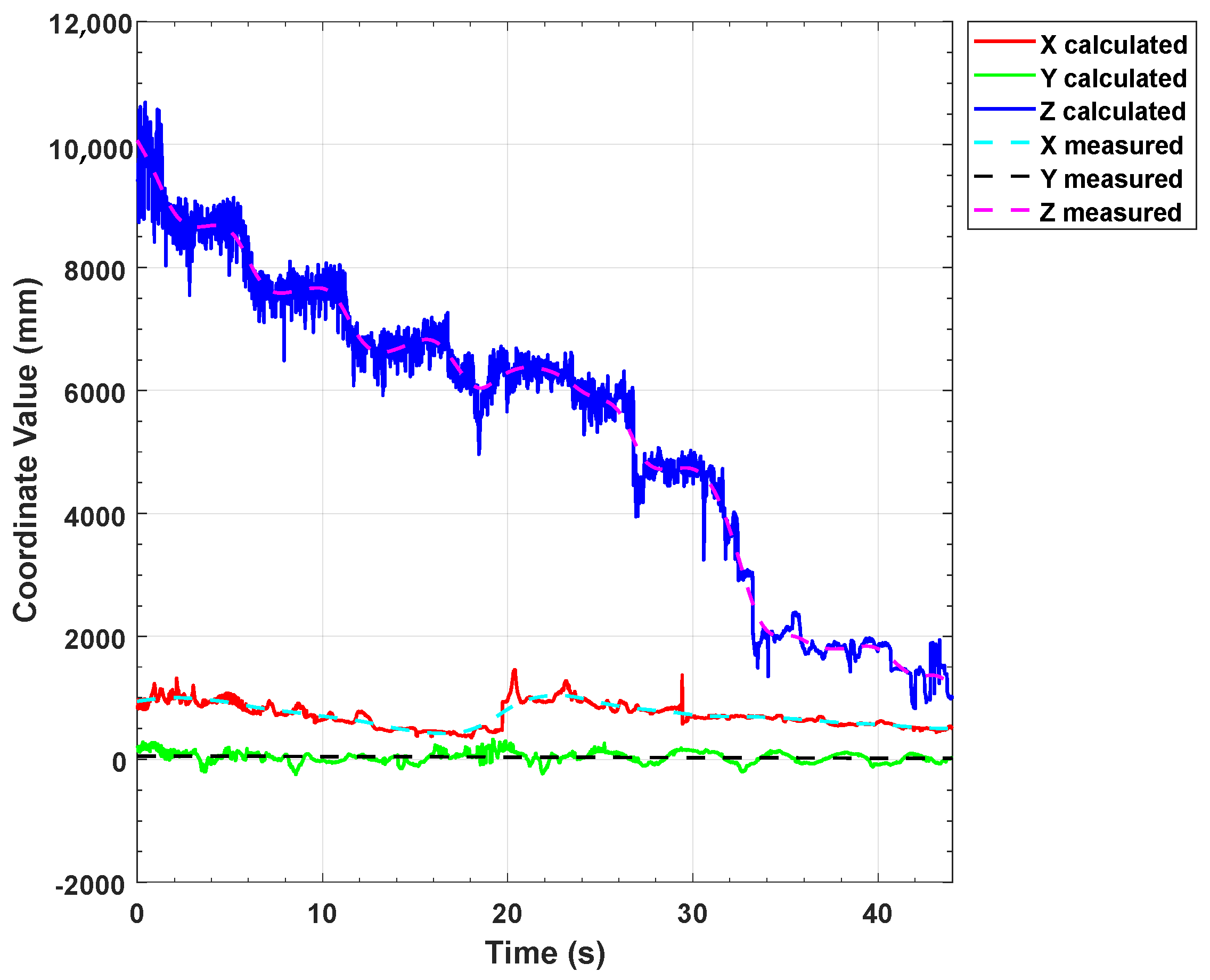

- Compare the accuracy of the traditional algorithm with that of the method described in Section 3;

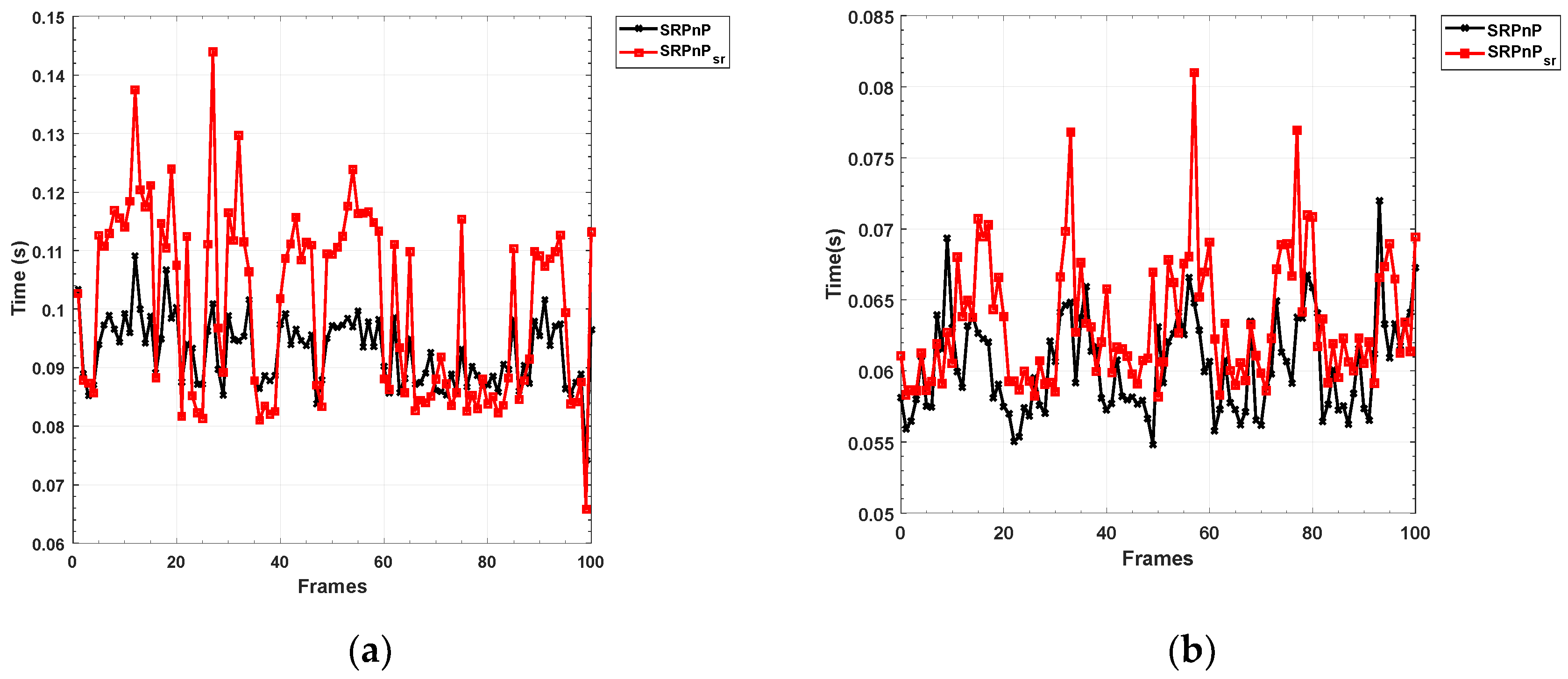

- Compare the running speed of the algorithm before and after adding the super-resolution enhancement.
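The translation distance error in the first comparison can be computed directly from the table of averaged results. The sketch below treats the "Average Experiment Results" column as the reference distance (an assumption, since the column is not defined in this section) and reports, for sample groups 4 to 9 (the only groups with values for all three methods), the signed error and relative error of LHM, OPnP and SRPnP, plus each method's mean absolute error. Because the inputs are averages, the sketch says nothing about per-frame spread.

```python
# Values copied from the averaged results table (mm):
# (sample group, reference distance, LHM, OPnP, SRPnP). Groups 1-3 have no PnP values.
ROWS = [
    (4, 6968.00, 7167.80, 7143.45, 7143.00),
    (5, 6122.00, 6256.45, 6234.07, 6234.07),
    (6, 5015.00, 5095.59, 5072.82, 5075.22),
    (7, 4082.00, 4126.44, 4126.27, 4124.44),
    (8, 3012.00, 3050.97, 3050.45, 3049.41),
    (9, 1987.00, 2014.62, 2014.34, 2014.14),
]
METHODS = ("LHM", "OPnP", "SRPnP")


def distance_errors(rows):
    """Signed error (mm) and relative error (%) of each method for every sample group."""
    errors = {}
    for group, reference, *estimates in rows:
        errors[group] = {
            method: (est - reference, 100.0 * (est - reference) / reference)
            for method, est in zip(METHODS, estimates)
        }
    return errors


def mean_abs_error(rows, method):
    """Mean absolute translation distance error (mm) of one method over all rows."""
    k = METHODS.index(method)
    return sum(abs(row[2 + k] - row[1]) for row in rows) / len(rows)


if __name__ == "__main__":
    for method in METHODS:
        print(f"{method}: mean |error| = {mean_abs_error(ROWS, method):.2f} mm")
```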

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Hsu, H.Y.; Toda, Y.; Yamashita, K.; Watanabe, K.; Sasano, M.; Okamoto, A.; Inaba, S.; Minami, M. Stereo-vision-based AUV navigation system for resetting the inertial navigation system error. Artif. Life Robot. 2022, 27, 165–178.

- Guo, Y.; Bian, C.; Zhang, Y.; Gao, J. An EPnP Based Extended Kalman Filtering Approach for Docking Pose Estimation of AUVs. In Proceedings of the International Conference on Autonomous Unmanned Systems (ICAUS 2021), Changsha, China, 24–26 September 2021; Springer Science and Business Media Deutschland GmbH: Changsha, China, 2022; pp. 2658–2667.

- Dong, H.; Wu, Z.; Wang, J.; Chen, D.; Tan, M.; Yu, J. Implementation of Autonomous Docking and Charging for a Supporting Robotic Fish. IEEE Trans. Ind. Electron. 2022, 1–9.

- Bosch, J.; Gracias, N.; Ridao, P.; Istenic, K.; Ribas, D. Close-Range Tracking of Underwater Vehicles Using Light Beacons. Sensors 2016, 16, 429.

- Wynn, R.B.; Huvenne, V.A.I.; Le Bas, T.P.; Murton, B.J.; Connelly, D.P.; Bett, B.J.; Ruhl, H.A.; Morris, K.J.; Peakall, J.; Parsons, D.R.; et al. Autonomous Underwater Vehicles (AUVs): Their past, present and future contributions to the advancement of marine geoscience. Mar. Geol. 2014, 352, 451–468.

- Jacobi, M. Autonomous inspection of underwater structures. Robot. Auton. Syst. 2015, 67, 80–86.

- Loebis, D.; Sutton, R.; Chudley, J.; Naeem, W. Adaptive tuning of a Kalman filter via fuzzy logic for an intelligent AUV navigation system. Control Eng. Pract. 2004, 12, 1531–1539.

- Sans-Muntadas, A.; Brekke, E.F.; Hegrenaes, O.; Pettersen, K.Y. Navigation and Probability Assessment for Successful AUV Docking Using USBL. In Proceedings of the 10th IFAC Conference on Manoeuvring and Control of Marine Craft, Copenhagen, Denmark, 24–26 August 2015; pp. 204–209.

- Kinsey, J.C.; Whitcomb, L.L. Preliminary field experience with the DVLNAV integrated navigation system for oceanographic submersibles. Control Eng. Pract. 2004, 12, 1541–1549.

- Marani, G.; Choi, S.K.; Yuh, J. Underwater autonomous manipulation for intervention missions AUVs. Ocean Eng. 2009, 36, 15–23.

- Nicosevici, T.; Garcia, R.; Carreras, M.; Villanueva, M. A review of sensor fusion techniques for underwater vehicle navigation. In Proceedings of the Oceans ’04 MTS/IEEE Techno-Ocean ’04 Conference, Kobe, Japan, 9–12 November 2004; pp. 1600–1605.

- Kondo, H.; Ura, T. Navigation of an AUV for investigation of underwater structures. Control Eng. Pract. 2004, 12, 1551–1559.

- Bonin-Font, F.; Massot-Campos, M.; Lluis Negre-Carrasco, P.; Oliver-Codina, G.; Beltran, J.P. Inertial Sensor Self-Calibration in a Visually-Aided Navigation Approach for a Micro-AUV. Sensors 2015, 15, 1825–1860.

- Li, Y.; Jiang, Y.; Cao, J.; Wang, B.; Li, Y. AUV docking experiments based on vision positioning using two cameras. Ocean Eng. 2015, 110, 163–173.

- Zhong, L.; Li, D.; Lin, M.; Lin, R.; Yang, C. A Fast Binocular Localisation Method for AUV Docking. Sensors 2019, 19, 1735.

- Liu, S.; Ozay, M.; Okatani, T.; Xu, H.; Sun, K.; Lin, Y. Detection and Pose Estimation for Short-Range Vision-Based Underwater Docking. IEEE Access 2019, 7, 2720–2749.

- Ren, R.; Zhang, L.; Liu, L.; Yuan, Y. Two AUVs Guidance Method for Self-Reconfiguration Mission Based on Monocular Vision. IEEE Sens. J. 2021, 21, 10082–10090.

- Venkatesh Alla, D.N.; Bala Naga Jyothi, V.; Venkataraman, H.; Ramadass, G.A. Vision-based Deep Learning algorithm for Underwater Object Detection and Tracking. In Proceedings of the OCEANS 2022-Chennai, Chennai, India, 21–24 February 2022; Institute of Electrical and Electronics Engineers Inc.: Chennai, India, 2022.

- Sun, K.; Han, Z. Autonomous underwater vehicle docking system for energy and data transmission in cabled ocean observatory networks. Front. Energy Res. 2022, 10, 1232.

- Jocher, G. YOLOv5 Release v6.0. Available online: https://github.com/ultralytics/yolov5/tree/v6.0 (accessed on 12 October 2021).

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 13708–13717.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4681–4690.

- Khotanzad, A.; Hong, Y.H. Invariant image recognition by Zernike moments. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 489–497.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Lu, C.-P.; Hager, G.D.; Mjolsness, E. Fast and globally convergent pose estimation from video images. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 610–622.

- Zheng, Y.; Kuang, Y.; Sugimoto, S.; Astrom, K.; Okutomi, M. Revisiting the PnP problem: A fast, general and optimal solution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2344–2351.

- Wang, P.; Xu, G.; Cheng, Y.; Yu, Q. A simple, robust and fast method for the perspective-n-point problem. Pattern Recognit. Lett. 2018, 108, 31–37.

- Baiden, G.; Bissiri, Y.; Masoti, A. Paving the way for a future underwater omni-directional wireless optical communication systems. Ocean Eng. 2009, 36, 633–640.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Yan, Z.; Gong, P.; Zhang, W.; Li, Z.; Teng, Y. Autonomous Underwater Vehicle Vision Guided Docking Experiments Based on L-Shaped Light Array. IEEE Access 2019, 7, 72567–72576.

| Sample Groups | Average Experiment Results (mm) | Average LHM Results (mm) | Average OPnP Results (mm) | Average SRPnP Results (mm) |
|---|---|---|---|---|
| 1 | 10,344.00 | None | None | None |
| 2 | 8892.00 | None | None | None |
| 3 | 7815.00 | None | None | None |
| 4 | 6968.00 | 7167.80 | 7143.45 | 7143.00 |
| 5 | 6122.00 | 6256.45 | 6234.07 | 6234.07 |
| 6 | 5015.00 | 5095.59 | 5072.82 | 5075.22 |
| 7 | 4082.00 | 4126.44 | 4126.27 | 4124.44 |
| 8 | 3012.00 | 3050.97 | 3050.45 | 3049.41 |
| 9 | 1987.00 | 2014.62 | 2014.34 | 2014.14 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, B.; Zhong, P.; Yang, F.; Zhou, T.; Shen, L. Fast Underwater Optical Beacon Finding and High Accuracy Visual Ranging Method Based on Deep Learning. Sensors 2022, 22, 7940. https://doi.org/10.3390/s22207940

Zhang B, Zhong P, Yang F, Zhou T, Shen L. Fast Underwater Optical Beacon Finding and High Accuracy Visual Ranging Method Based on Deep Learning. Sensors. 2022; 22(20):7940. https://doi.org/10.3390/s22207940

Chicago/Turabian Style: Zhang, Bo, Ping Zhong, Fu Yang, Tianhua Zhou, and Lingfei Shen. 2022. "Fast Underwater Optical Beacon Finding and High Accuracy Visual Ranging Method Based on Deep Learning" Sensors 22, no. 20: 7940. https://doi.org/10.3390/s22207940

APA Style: Zhang, B., Zhong, P., Yang, F., Zhou, T., & Shen, L. (2022). Fast Underwater Optical Beacon Finding and High Accuracy Visual Ranging Method Based on Deep Learning. Sensors, 22(20), 7940. https://doi.org/10.3390/s22207940