Abstract

Diabetic retinopathy (DR) is a major health problem that can lead to vision loss if not treated early. In this study, a three-step system for DR detection utilizing optical coherence tomography (OCT) is presented. First, the proposed system segments the retinal layers from the input OCT images. Second, 3D features are extracted from each retinal layer that include the first-order reflectivity and the 3D thickness of the individual OCT layers. Finally, backpropagation neural networks are used to classify OCT images. Experimental studies on 188 cases confirm the advantages of the proposed system over related methods, achieving an accuracy of 96.81%, using the leave-one-subject-out (LOSO) cross-validation. These outcomes show the potential of the suggested method for DR detection using OCT images.

1. Introduction

Numerous conditions, including age-related macular degeneration (AMD), glaucoma, and diabetic retinopathy (DR) can affect the eyes [1,2]. These diseases range from visual fragility to vision loss, if not detected and treated early. Unfortunately, these diseases go unreported in the beginning and can only be identified by routine follow-up eye tests. This paper studies DR, a serious cumulative vascular and neurodegenerative condition that damages retinal cells without impairing vision yet is first difficult to diagnose. This disease is caused due to diabetes mellitus (DM), which is defined by poor glucose metabolism due to insulin failure or resistance. This can result in hyperglycemia, which can lead to vascular and neuropathic consequences. Thus, all diabetics are at risk of developing DR, especially in the adult population [3,4]. DR first manifests as a moderate condition with no obvious visual symptoms, but it can progress to a widespread and severe state, and the disease’s progression can result in blindness. Consequently, early diagnosis can lower the expense of therapy and the chance of sight loss to 57 percent [5].

According to Teo et al. [6], adults with DR are estimated as 103.12 million globally as of 2020. The number is anticipated to reach 160.50 million by 2045. On the other side, it was estimated that 28.54 million people could lose their vision owing to DR. The figures will rise to 44.82 million in 2045 [6]. Therefore, disease control is very required. It is necessary to start with the clinical care of DR, which entails enhancing the systemic control of blood sugar and blood pressure, where the management of blood sugar and blood pressure are the primary therapies. Although these treatments are very successful in diminishing the ratio of vision loss, they do not treat DR. There are three main treatments that are efficient in decreasing the vision loss ratio from this disease and are represented as follows: laser surgery, vitrectomy, and antivascular endothelial growth factor drug injection. Only the most severe instances of proliferative vitreoretinopathy are candidates for a vitrectomy, due to a dense vitreous tractional retinal detachment or hemorrhages [7,8]. Caution should be used when undergoing laser surgery since it harms retinal tissue [9]. Practically, those who seek therapy before their retina suffers substantial damage from advanced retinopathy have a chance of keeping their vision [10].

Specialized medical imaging of the eyes is a very important tool in early diagnosis. Fluorescein angiography, B-scan ultrasonography, fundus photography, optical coherence tomography (OCT), and OCT angiography (OCTA) are the most important specialized medical imaging tools for the eyes that are relied upon to assist in early diagnosis [11]. Noteworthy is the fact that the OCT device is of high quality, which makes any harmful changes easily noticed. In addition, it can be used to image the layers of the retina [12]. Therefore, this study uses OCT for the automated diagnosis of DR.

1.1. Related Work

On DR detection and diagnosis, several researchers have worked using retinal fundus photography images. For example, Priya et al. [13] used a model to diagnose DR using fundus images. This system was composed of four steps. First, preprocessing was done using an adaptive histogram equalization, a grayscale conversion, a matched filter, and a discrete wavelet transform. Second, fuzzy C-means clustering was applied for segmenting the blood vessels. From the segmented blood vessels, six features were extracted, which were the diameter, arc length, area, center angle, radius, and half area. Finally, three different classifiers were applied, which were a probabilistic neural network (PNN), a Bayes classifier, and a support vector machine (SVM). This system obtained an accuracy of 89.6% using PNN, 94.4% using the Bayes classifier, and 97.6% using the SVM classifier. In another study, Foeady et al. [14] used an automated system for DR diagnosis using fundus images. Preprocessing was applied to the images using histogram equalization, optical disk removal, contrast enhancement, filtering, and green channel extraction. Then, GLCM was used to extract statistical features such as contrast, correlation, energy, entropy, and homogeneity. Finally, they performed classification using an SVM. This system achieved an accuracy of 82.35%. Another study [15] applied a deep computer-aided diagnostic (CAD) system. First, preprocessing was applied to remove noise, improve quality, and resize the retinal images. Second, the authors extracted features, such as the gray-level co-occurrence matrix, regions of interest (ROIs), and the number of bifurcation points of the blood vessels. A multilabel SVM classifier was further applied to achieve an accuracy of 95.1%, specificity of 86.8%, and sensitivity of 86.1%. Rajput et al. [16] used fundus images to identify macular hard exudates, presenting an automatic approach based on mathematical morphology. By taking the extended minima transform’s complement, the hard exudates were extracted. If the scan revealed one or more hard exudates, it was then classified as diabetic maculopathy. Finally, the algorithm assigned the diabetic maculopathy a clinical significance level (CSME) or clinically nonsignificance level (Non-CSME) depending on how much the fovea was affected by the hard exudates. The accuracy, recall, and area under the curve (AUC) of the system were, respectively, 86.67%, 100%, and 97.06%. A related study [17] used a CAD method to grade diabetic maculopathy and DR. The accuracy of grading DR was 94.33%, while the specificity and sensitivity of diagnosing diabetic maculopathy were 98.56% and 96.46%, respectively. Rahim et al. [18] developed another system using six extracted features. These features were individually supplied to four different classifiers. The system achieved an accuracy of 70%, sensitivity of 45.28%, and specificity of 97.87% in the case of an SVM using a polynomial kernel. Using a naïve Bayes classifier, they achieved an accuracy, sensitivity, and specificity equal to 75%, 60.38%, and 91.49%, respectively. In the case of a K-nearest neighbors (KNN) classifier and an SVM using a radial basis function kernel, they achieved accuracies equal to 93%, 93%, sensitivities equal to 86.79%, 92.45%, and specificities equal to 100%, 93.62%, respectively. Sánchez et al. [19] employed a database of retinal pictures that was freely accessible to create a thorough DR CAD. They used 1200 digital color fundus pictures, and they extracted various characteristics to represent the structure, color, potential shapes, and contrast. These features were then used to feed a KNN classifier. This system had a sensitivity of 92.2%, a specificity of 50%, and an AUC of 87.6%. Mansour et al. [20] examined a CAD system on a Kaggle fundus dataset. They employed a multilevel optimization strategy that included preprocessing, region segmentation using a Gaussian mixture model based on adaptive learning, ROI localization using a connected component analysis (PCA), and feature extraction utilizing a PCA and linear discriminant analysis. Then, they fed these characteristics into an AlexNet-based categorization. With AlexNet, they achieved an accuracy of 97.93%. In another study, Li et al. [21] identified DR utilizing a transfer-learning Inception-v3 model, using 19,233 fundus images. Their method had a 93.49% categorization accuracy rate. Without using any pre- or postprocessing techniques, Abbas et al. [22] created a method for grading the five DR severity levels using 750 fundus pictures. Using a semisupervised multilayer deep learning method, their system obtained a 92.4% AUC, 92.18% sensitivity, and 94.50% specificity. Khalifa et al. [23] used a dataset to train and evaluate different deep learning models, such as ResNet18, VGG16, AlexNet, GoogleNet, and SqueezeNet. Their system achieved the highest testing accuracy of 97.9% using the AlexNet model. In another study, Kassani et al. [24] used an altered Xception architecture to classify the severity of DR. This method achieved 83.09% accuracy, 88.24% sensitivity, and 87.00% specificity. Rahhal et al. [25] used a neural network to categorize each picture into one of the five phases of DR using a publicly accessible dataset of fundus images. They achieved an accuracy of 66.68% for guessing the correct label for the image.

On the other hand, extensive studies have been done using OCT to identify various retinal disorders. For example, Ibrahim et al. [26] used a modified VGG16 CNN model, fused with ROI handcrafted extracted features, to diagnose drusenoid disorders, diabetic maculopathy, and choroidal neovascularization (CNV) using OCT imaging. Ghazal et al. [27] used a CNN system to analyze OCT B-scans for DR detection. They segmented the retina into 12 different layers. After that, they extracted patches from both the temporal and nasal sides of the fovea. Then, the patches, extracted from images were used for CNN training. Finally, an SVM was used for giving the final classification of DR or normal. This system achieved an accuracy of 94%. ElTanboly et al. [28] used a system to detect DR for patients with type 2 diabetes, using OCT images. First, they segmented the original OCT scan into 12 different layers. Second, they extracted features of the segmented layers such as curvature, thickness, and reflectivity. Finally, they performed a classification of each image whether diabetic or normal. They utilized a deep-fusion classification network with a stack of autoencoders that were not negatively restricted to classify the extracted features. This study achieved an accuracy of 92%, sensitivity of 83%, and specificity of 100%. Sandhu et al. [29] utilized OCT for DR grading using 80 patients. They segmented the retina into 12 layers and determined the thickness, reflectivity, and curvature features of each layer. Using these features, a deep learning network was trained to classify images as either normal or having nonproliferative DR. Their system obtained an accuracy of 95%. Li et al. [30] used OCT on 4168 images collected from 155 patients, to detect early stage DR. This study used a deep network, called OCTD-Net. This network achieved an accuracy, sensitivity, and specificity of 92%, 90%, and 95%, respectively. Aldahami et al. [31] utilized a system for DR diagnosis. They used 214 OCT images, where 107 images were normal and 107 were DR. Four statistical features were utilized: standard deviation and the matrix mean, median, and mode. The classification was performed using an SVM and a KNN classifier. Sharafeldeen et al. [32] used 2D morphology and reflectivity models for early DR detection using 260 OCT images.

Additionally, retinal disorders can be identified with OCTA. OCTA can provide views of the eye’s blood vessels and the retina. For example, Eladawi et al. [33] utilized a system for DR detection using OCTA images. This system was based on different preprocessing techniques, such as homogeneity, an initial estimation of a threshold, and a Markov random field model. They applied a segmentation step to extract the avascular zone of the fovea and vessels. Features were represented in vessel density, the width of the avascular zone of the fovea, and blood vessels’ caliber. The features were estimated to learn the SVM classifier. Finally, they achieved an accuracy of 94.3%, a sensitivity of 97.9%, and a specificity of 87%. Another study [34] used an ensemble deep learning technique using 463 OCTA volumes for DR diagnosis. Data were used to build neural networks, and ResNet50, DenseNet, and VGG19 architectures, pretrained on ImageNet, were used to fine-tune them. The network, trained on the VGG19 architecture, obtained the best accuracy of 92%, compared to the other architectures. Le et al. [35] used a CNN architecture, VGG16, to diagnose DR using OCTA. The method’s accuracy, specificity, and sensitivity were 87.27%, 90.82%, and 83.76%, respectively.

Other research combined OCT and OCTA modalities to identify the different grades of DR. For example, Sandhu et al. [36] used a system for grading NPDR using both OCTA and OCT on 111 patients. For OCT, they segmented the retina into layers. Then, they extracted features from each layer, which were represented in thickness, reflectivity, and curvature. For OCTA, they extracted the density of the vessel, the size of the avascular zone of the fovea, blood vessels’ caliber, and the count of crossover points and bifurcations. Finally, a random forest classifier was used for the classification. This system obtained an accuracy of 96%, a sensitivity of 100%, and a specificity of 94%.

1.2. Limitation of the Existing Works and Proposed Method

The main limitations of the previous works on OCT data are the following:

- Most of the techniques do not consider the 3D characteristics of the images and are limited to the 2D image features.

- A significant quantity of data is needed to train and test deep learning models, which may not be available.

To afford a better accuracy and make full use of the 3D characteristics of the retinal layers, this paper presents a novel 3D method for DR detection. The main features/contributions of the proposed method are the following:

- The paper is the first of its kind to analyze the 3D retinal layer by using low-level (first-order reflectivity) and high-level (3D thickness) information.

- Backpropagated neural networks are optimized to combine low-level and high-level information to further improve performance.

- Comparing the suggested approach to related methods, it performs better.

2. Materials and Methods

2.1. Patient Data

Our developed CAD system was trained and tested on 3D-OCT volumes. Each OCT volume, which comprised five OCT image scans, was acquired for each eye (left and right). The data’s dimensions were equal to 1024 × 1024 × 5. This information was obtained using a Zeiss-Cirrus HD-OCT 5000 in the ophthalmology department of the University of Louisville (UofL) Hospital. The UofL Institutional Review Board (IRB) approved the acquisition and data collection. The study complied with the Helsinki Declaration. The dataset consisted of 188 cases (100 normal and 88 DR) with a minimum strength of signals of 7/10. Physicians with expertise in retinal diseases performed fundus exams to detect the disease.

2.2. Proposed Computer-Aided Diagnostic (CAD) System

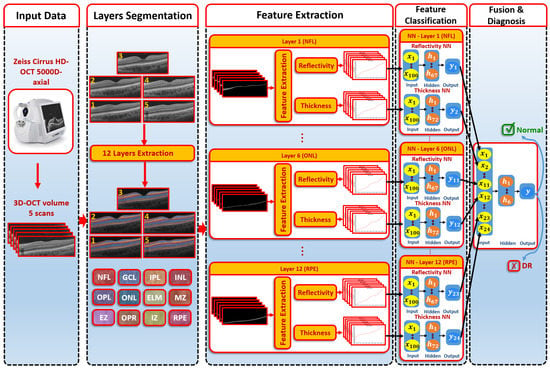

The CAD system was separated into three steps, as illustrated in Figure 1. First, we used the 3D appearance-based segmentation approach [37] in order to segment the OCT images into 12 layers, as shown in Figure 2. Second, the features from each segmented layer were extracted, including the 3D first-order reflectivity feature in addition to the 3D thickness feature. In the third phase, the classification method was applied using the 3D features, extracted from each layer individually to obtain the final diagnosis. More details of the segmentation method, feature extraction, and classification technique are presented in the next sections.

Figure 1.

An illustration of the proposed 3D-OCT technology for DR diagnosis.

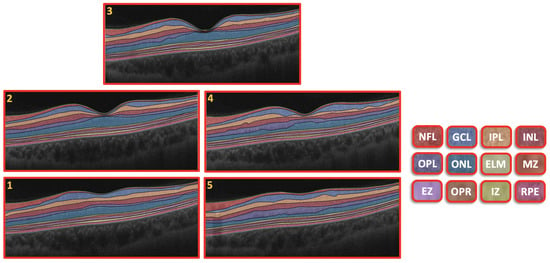

Figure 2.

Retinal segmented layers starting from NFL layer and ending with RPE layer.

2.2.1. Segmentation of 3D-OCT Images

Using this method, the slice of the central area of the macula (fovea) was segmented into 12 layers. First, a Markov–Gibbs random field (MGRF) was used to segment the area from the retinal fovea. Then, a shape prior was formed that depended on OCT scans of the patients. From the shape prior, we could segment the neighboring slices. To get the final segmentation of a 3D-OCT scan, the prior shape models were repeated. Accordingly, the process of segmentation took place in several stages, which are summarized in the following steps:

- The B-scan OCT was aligned to a shape data formed by an expert. These shape data contained manual segmentations of the area in the center of the macula (foveal) of normal and diseased retina shape priors.

- The central B-scan was divided into twelve distinct layers based on intensity, MGRF spatial interactions, and shape.

- A nonrigid deformation B-scan was used as a prior shape pattern in each segmented B-scan process.

- The models of shape prior were repeated in each slice to get the final 3D segmentation.

An example of the segmentation of the OCT layers is shown in Figure 2. More details about the 3D segmentation approach can be found in [37].

2.2.2. Feature Extraction

To describe the 3D segmented layers effectively, we extracted two features from each layer, namely, the first-order reflectivity and the 3D thickness.

The 3D first-order reflectivity was the first marker used in our approach. It expresses the light reflection intensity of a 3D individual layer. Because the reflectivity depends on some outer factors, it impacts on the quality of images, even in the condition where both eyes have no pathology and possess the same age, e.g., in the case of pupil dilation. Therefore, a normalization was applied on a given intensity of an OCT B-scan volume to control this problem as shown in Equation (1) [28].

where RV is the mean intensity of the vitreous layer and is the mean intensity of the retinal pigment epithelium layer (RPE).

3D thickness was the second marker used to describe the OCT image in our approach. By computing the 3D Euclidean distance between each pair of related voxels on the layer boundaries, the 3D thickness feature determines the 3D thickness of a layer. To avoid intensity variations problems in the B-scans of retinal layers, we applied a geometric technique to measure the thickness instead of utilizing image intensities. This feature was evaluated by solving the 3D Laplace equation on the 3D segmented B-scan layers. The 3D Laplace equation is defined as in Equation (2):

where is the harmonic function or scalar field that depicts the estimated electric field between the layer’s target and reference boundaries.

The Laplace equation was applied to colocate the corresponding voxels. The second-order iterative Jacobi method was used to calculate as in Equation (3).

where the predicted electric field at during the ith iteration is denoted by . , , and are the step lengths in the directions of x, y, and z, respectively. Using the 3D Laplace equation, we summarize the steps of voxel-wise correspondences as follows:

- Locate the surface of the target and reference objects.

- Initially: adjust the minimum and maximum potential at the corresponding reference surface and the target surface, respectively.

- Using Equation (3), between both isosurfaces, can be estimated.

- Repeat the third step until convergence is achieved (i.e., no change occurs in the evaluated values between the iterations).

More details about the 3D Laplace equation can be found in [38].

2.2.3. Classification System

After the extraction of the first-order reflectivity and 3D thickness, a classification system was applied based on two stages. First, two neural networks for each layer of the reflectivity and thickness features were built. Second, a fusing NN was used to fuse the first stage’s neural networks. The details of each network are as follows (see Figure 1):

- The first-order reflectivity feature of each layer was used to feed neural networks (NNs), each was composed of one hidden layer that involved 67 neurons. Each NN was applied to each layer individually. Through each NN, each layer gave a probability between 0 and 1

- The 3D thickness feature was fed to the NNs. Each NN was composed of one hidden layer containing 72 neurons and applied to each layer individually. The output of each NN was the probability of each layer.

- In the second stage, we fused the probabilities resulting from the previous first-stage NNs. The second-stage NN contained one hidden layer of 6 neurons. The output represented the final diagnosis.

The basic steps of NNs can be illustrated as follows:

- At first, the weights of NNs were initialized randomly.

- All outputs in hidden layers and output layers for neurons were calculated.

- The activation function was applied on each neuron of the outputs calculated in step 2.

- By using the backpropagation approach, the different weights were updated.

- Steps 2, 3, and 4 were replicated until the weights became stable.

2.2.4. System Evaluation

We used different metrics to evaluate our system and determine its quantitative efficiency, where our system had two classes (the negative class represents normal cases and the positive class represents DR cases). These metrics were sensitivity, specificity, and accuracy [39]. Many experiments were conducted to validate our system, which were the 5-fold, 10-fold, and leave-one-subject-out (LOSO) experiments.

3. Results

To evaluate the quality of the proposed method, we compared it against other systems that depended on one extracted feature and against the most popular existing machine learning (ML) and deep learning (DL) methods. To optimize the NN architectures, their hyperparameters were adjusted by using various experiments. Those parameters involved the number of hidden layers (i.e., search space: 1–5) and how many neurons were present in each hidden layer (i.e., search space: 4–200). In the case of activation functions, we tried different functions for the output and hidden layers (range: softmax, sigmoid, RLEU, rectified linear, and tanh activation functions). In our system, the configurations were applied to get the best results represented in one hidden layer for all NNs, and the numbers of neurons in the hidden layers were 67, 72, and 6 for the reflectivity, thickness, and fusion NNs, respectively. In the case of activation functions, we applied the softmax function at the output layer and the tanh function at the hidden layers.

3.1. Results in the Case of Using the Reflectivity Only

As expected, the LOSO cross-validation achieved the highest accuracy compared to other cross-validation settings, since the number of training subjects was the maximum in this setting (see Table 1). As shown in the table, using the reflectivity extracted from one layer, the best layer accuracy was 82.45% using the LOSO cross-validation. The other settings (i.e., 5 folds and 10 folds) achieved best layer accuracies of 77.22% ± 8.65% and 79.23% ± 8.08%, respectively. When fusing the reflectivity feature from the 12 layers, the system achieved better accuracies (85.11%, 80.29% ± 7.66%, and 78.70% ± 7.48% using LOSO, 5-fold, and 10-fold cross-validations, respectively). These results indicated that the fusing of the reflectivity features from different layers improved the accuracy. However, the reflectivity feature alone was not able to provide the required performance.

Table 1.

Comparison results between the proposed system and other one-feature-based systems.

3.2. Results in the Case of Using the Thickness Only

By the same analysis, using the thickness extracted from one layer, the best layer accuracy, using the LOSO cross-validation, was 89.36% (see Table 1). As illustrated in the table, the 5-fold cross-validation achieved an accuracy of 85.03% ± 7.84%, whereas the 10-fold cross-validation achieved an accuracy of 85.54% ± 10.16%. When fusing the thickness feature from the 12 layers, the system achieved an improved accuracy of 93.62% using the LOSO cross-validation, an accuracy of 91.40% ± 5.99% using the 5-fold cross-validation, and an accuracy of 90.34% ± 8.69% using the 10-fold cross-validation. These results indicated the importance of integrating the thickness of all layers to improve performance.

3.3. Results of the Proposed System

To integrate the differential effect of DR on each layer of the eye, the proposed system used a fusion neural network to fuse the layers’ features. This approach achieved improved results when compared to the other results presented (see Table 1). As demonstrated in the table, it achieved an accuracy of 96.81% using the LOSO cross-validation (the best setting accuracy, as expected), 94.12% ± 3.50% using the 5-fold cross-validation, and 94.67% ± 4.30% using the 10-fold cross-validation. These outcomes demonstrated the benefits of the suggested fusion system.

3.4. Comparison Results to Other ML Methods

To assess the advantages of the proposed fusion using NNs, we compared our proposed system with the most popular existing ML methods, such as SVM, KNN, binary decision tree (DT), and naive Bayes (NB) classifiers. The results of the comparison are illustrated in Table 2. As shown in Table 2, the proposed system achieved the highest accuracy. These outcomes demonstrated the benefits of the suggested neural-network-based fusion method.

Table 2.

Comparison between other ML-based classifications and the proposed system.

3.5. Comparison Results to DL Methods

We compared the proposed system with the most popular DL algorithms, including Google Net and Resnet-50 (see Table 3). For these tested DL algorithms, we applied transfer learning to avoid overfitting. GoogleNet achieved the best DL accuracies of 93.98% ± 9.31%, using 5 folds and of 94.43% ± 9.45%, using 10 folds. By comparing the outcomes of the proposed system with the tested DL algorithms, it was clear that the proposed system was comparable with DL algorithms.

Table 3.

Comparison between DL classifiers and the proposed system.

4. Discussion

One of the most serious diseases in the world is DR. Therefore, we proposed a CAD system that depended on retinal 3D-OCT image features to achieve an improved detection of DR. We used OCT images because OCT is one of the most common retinal examination techniques, which depicts the outstanding symptoms of DR. An OCT image shows the early symptoms of DR due to its ability to present retinal cross-sectional regions. The proposed framework depended on 3D-OCT images, which were segmented into 12 retinal layers using an automatic segmentation technique. The extracted retinal information from the segmentation process was collected and then passed to the proposed fused-NN model, which depended on the two 3D features extracted from the OCT scans. As illustrated in the result section, using each layer individually or using only one feature did not achieve a promising accuracy (see Table 1). One of the major aims of the proposed framework was to accurately diagnose DR. Thus, a fused-NN classification was proposed to integrate the information collected from all the layers and all the features. As reported in Table 2 and Table 3, the proposed framework obtained the highest diagnostic accuracy of 96.81%, compared to the most popular related ML and DL methods. The reasons behind the high accuracy of the proposed method were two folds: (i) using artificial intelligence (AI), especially the fusion NN, to fuse the reflectivity and thickness information, gathered from all the segmented layers, achieved an improved performance, and (ii) applying the method in 3D gave competing results, compared to other related works (see Table 4). Note that the comparison results of the related works in Table 4 were not fair, since all the compared methods were 2D techniques, whereas the data used in our study for evaluation were 3D (so they were not applicable to be evaluated on the same data). However, the results presented in Table 4 gave an insight into the advantages of using 3D-OCT data to improve diagnostic performance. To further investigate the advantages of the proposed 3D system, a comparison between using a 2D technique vs. a 3D technique on the same source data, using the same experimental setting, is shown in Table 5. As shown in Table 5, the proposed 3D technique on 3D data achieved an improved performance over the corresponding 2D technique on 2D data (using the slice on the center). This highlights the potential of using 3D information in order to obtain the fine details of the affected retinopathy parts in the OCT image, which improves the detection accuracy.

Table 4.

Reported related work accuracies using OCT data.

Table 5.

Comparison on 2D and 3D data using the proposed system.

5. Conclusions

A new CAD technique to identify DR was used in this study to achieve protection against vision loss by using 3D-OCT. To test the proposed system, the study included DR and normal images. The proposed system segmented the 3D-OCT retina layers automatically. Then, it extracted two different features from the segmented retinal layers, which were the first-order reflectivity and 3D thickness. Furthermore, these two features were fused to train and test an NN classifier. The proposed system achieved promising results compared to other ML and DL algorithms. These results confirmed the advantages of the proposed method. In the future, we will try to test the proposed method on more data in order to investigate its robustness. In addition, other ML and DL algorithms will be investigated.

Author Contributions

M.E., A.S., A.E. (Ahmed Elnakib), A.E. (Ahmed Elgarayhi), N.S.A., M.S. and A.E.-B.: conceptualization and formal analysis; M.E., A.S., A.E. (Ahmed Elnakib) and A.E.-B.: methodology; M.E. and A.S.: software development; M.E. and A.S.: validation and visualization; M.E., A.S., A.E. (Ahmed Elnakib) and A.E.-B.: initial draft. N.S.A. and A.E.-B.: resources, data collection, and data curation; M.E., A.S., A.E. (Ahmed Elnakib), A.E. (Ahmed Elgarayhi), N.S.A., M.S. and A.E.-B.: review and editing; A.E. (Ahmed Elgarayhi), N.S.A., M.S. and A.E.-B.: project administration. All authors have read and agreed to the published version of the manuscript.

Funding

Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R40), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of the University of Louisville.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are available upon reasonable request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Radomska-Leśniewska, D.M.; Osiecka-Iwan, A.; Hyc, A.; Góźdź, A.; Dąbrowska, A.M.; Skopiński, P. Therapeutic potential of curcumin in eye diseases. Cent. Eur. J. Immunol. 2019, 44, 181–189. [Google Scholar] [CrossRef] [PubMed]

- Cheung, N.; Wong, T.Y. Obesity and eye diseases. Surv. Ophthalmol. 2007, 52, 180–195. [Google Scholar] [CrossRef] [PubMed]

- Das, T.; Jayasudha, R.; Chakravarthy, S.; Prashanthi, G.S.; Bhargava, A.; Tyagi, M.; Rani, P.K.; Pappuru, R.R.; Sharma, S.; Shivaji, S. Alterations in the gut bacterial microbiome in people with type 2 diabetes mellitus and diabetic retinopathy. Sci. Rep. 2021, 11, 2738. [Google Scholar] [CrossRef]

- Peplow, P.; Martinez, B. MicroRNAs as biomarkers of diabetic retinopathy and disease progression. Neural Regen. Res. 2019, 14, 1858. [Google Scholar] [CrossRef]

- Oh, K.; Kang, H.M.; Leem, D.; Lee, H.; Seo, K.Y.; Yoon, S. Early detection of diabetic retinopathy based on deep learning and ultra-wide-field fundus images. Sci. Rep. 2021, 11, 1897. [Google Scholar] [CrossRef] [PubMed]

- Teo, Z.L.; Tham, Y.C.; Yu, M.; Chee, M.L.; Rim, T.H.; Cheung, N.; Bikbov, M.M.; Wang, Y.X.; Tang, Y.; Lu, Y.; et al. Global Prevalence of Diabetic Retinopathy and Projection of Burden through 2045. Ophthalmology 2021, 128, 1580–1591. [Google Scholar] [CrossRef] [PubMed]

- Mitchell, P.; Wong, T.Y. Management Paradigms for Diabetic Macular Edema. Am. J. Ophthalmol. 2014, 157, 505–513.e8. [Google Scholar] [CrossRef]

- Antoszyk, A.N.; Glassman, A.R.; Beaulieu, W.T.; Jampol, L.M.; Jhaveri, C.D.; Punjabi, O.S.; Salehi-Had, H.; Wells, J.A.; Maguire, M.G.; Stockdale, C.R.; et al. Effect of Intravitreous Aflibercept vs. Vitrectomy With Panretinal Photocoagulation on Visual Acuity in Patients With Vitreous Hemorrhage From Proliferative Diabetic Retinopathy. JAMA 2020, 324, 2383. [Google Scholar] [CrossRef]

- Everett, L.A.; Paulus, Y.M. Laser Therapy in the Treatment of Diabetic Retinopathy and Diabetic Macular Edema. Curr. Diabetes Rep. 2021, 21, 35. [Google Scholar] [CrossRef]

- Maniadakis, N.; Konstantakopoulou, E. Cost Effectiveness of Treatments for Diabetic Retinopathy: A Systematic Literature Review. Pharmacoeconomics 2019, 37, 995–1010. [Google Scholar] [CrossRef]

- Witkin, A.; Salz, D. Imaging in diabetic retinopathy. Middle East Afr. J. Ophthalmol. 2015, 22, 145. [Google Scholar] [CrossRef] [PubMed]

- Lains, I.; Wang, J.C.; Cui, Y.; Katz, R.; Vingopoulos, F.; Staurenghi, G.; Vavvas, D.G.; Miller, J.W.; Miller, J.B. Retinal applications of swept source optical coherence tomography (OCT) and optical coherence tomography angiography (OCTA). Prog. Retin. Eye Res. 2021, 84, 100951. [Google Scholar] [CrossRef] [PubMed]

- Priya, R.; Aruna, P. Diagnosis of diabetic retinopathy using machine learning techniques. ICTACT J. Soft Comput. 2013, 3, 563–575. [Google Scholar] [CrossRef]

- Foeady, A.Z.; Novitasari, D.C.R.; Asyhar, A.H.; Firmansjah, M. Automated Diagnosis System of Diabetic Retinopathy Using GLCM Method and SVM Classifier. In Proceedings of the 2018 5th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Malang, Indonesia, 16–18 October 2018. [Google Scholar] [CrossRef]

- Abdelmaksoud, E.; El-Sappagh, S.; Barakat, S.; Abuhmed, T.; Elmogy, M. Automatic Diabetic Retinopathy Grading System Based on Detecting Multiple Retinal Lesions. IEEE Access 2021, 9, 15939–15960. [Google Scholar] [CrossRef]

- Rajput, G.; Reshmi, B.; Rajesh, I. Automatic detection and grading of diabetic maculopathy using fundus images. Procedia Comput. Sci. 2020, 167, 57–66. [Google Scholar] [CrossRef]

- Zhou, W.; Wu, C.; Yu, X. Computer aided diagnosis for diabetic retinopathy based on fundus image. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 9214–9219. [Google Scholar]

- Rahim, S.S.; Palade, V.; Shuttleworth, J.; Jayne, C. Automatic screening and classification of diabetic retinopathy and maculopathy using fuzzy image processing. Brain Inform. 2016, 3, 249–267. [Google Scholar] [CrossRef]

- Sánchez, C.I.; Niemeijer, M.; Dumitrescu, A.V.; Suttorp-Schulten, M.S.; Abramoff, M.D.; van Ginneken, B. Evaluation of a computer-aided diagnosis system for diabetic retinopathy screening on public data. Investig. Ophthalmol. Vis. Sci. 2011, 52, 4866–4871. [Google Scholar] [CrossRef]

- Mansour, R.F. Deep-learning-based automatic computer-aided diagnosis system for diabetic retinopathy. Biomed. Eng. Lett. 2018, 8, 41–57. [Google Scholar] [CrossRef]

- Li, F.; Liu, Z.; Chen, H.; Jiang, M.; Zhang, X.; Wu, Z. Automatic detection of diabetic retinopathy in retinal fundus photographs based on deep learning algorithm. Transl. Vis. Sci. Technol. 2019, 8, 4. [Google Scholar] [CrossRef]

- Abbas, Q.; Fondon, I.; Sarmiento, A.; Jiménez, S.; Alemany, P. Automatic recognition of severity level for diagnosis of diabetic retinopathy using deep visual features. Med Biol. Eng. Comput. 2017, 55, 1959–1974. [Google Scholar] [CrossRef]

- Khalifa, N.E.M.; Loey, M.; Taha, M.H.N.; Mohamed, H.N.E.T. Deep transfer learning models for medical diabetic retinopathy detection. Acta Inform. Medica 2019, 27, 327. [Google Scholar] [CrossRef] [PubMed]

- Kassani, S.H.; Kassani, P.H.; Khazaeinezhad, R.; Wesolowski, M.J.; Schneider, K.A.; Deters, R. Diabetic retinopathy classification using a modified xception architecture. In Proceedings of the 2019 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Ajman, United Arab Emirates, 10–12 December 2019; pp. 1–6. [Google Scholar]

- Rahhal, D.; Alhamouri, R.; Albataineh, I.; Duwairi, R. Detection and Classification of Diabetic Retinopathy Using Artificial Intelligence Algorithms. In Proceedings of the 2022 13th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 21–23 June 2022; pp. 15–21. [Google Scholar]

- Ibrahim, M.R.; Fathalla, K.M.; M. Youssef, S. HyCAD-OCT: A hybrid computer-aided diagnosis of retinopathy by optical coherence tomography integrating machine learning and feature maps localization. Appl. Sci. 2020, 10, 4716. [Google Scholar] [CrossRef]

- Ghazal, M.; Ali, S.S.; Mahmoud, A.H.; Shalaby, A.M.; El-Baz, A. Accurate Detection of Non-Proliferative Diabetic Retinopathy in Optical Coherence Tomography Images Using Convolutional Neural Networks. IEEE Access 2020, 8, 34387–34397. [Google Scholar] [CrossRef]

- ElTanboly, A.; Ismail, M.; Shalaby, A.; Switala, A.; El-Baz, A.; Schaal, S.; Gimel’farb, G.; El-Azab, M. A computer-aided diagnostic system for detecting diabetic retinopathy in optical coherence tomography images. Med. Phys. 2017, 44, 914–923. [Google Scholar] [CrossRef] [PubMed]

- Sandhu, H.S.; Eltanboly, A.; Shalaby, A.; Keynton, R.S.; Schaal, S.; El-Baz, A. Automated Diagnosis and Grading of Diabetic Retinopathy Using Optical Coherence Tomography. Investig. Opthalmol. Vis. Sci. 2018, 59, 3155. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Shen, L.; Shen, M.; Tan, F.; Qiu, C.S. Deep learning based early stage diabetic retinopathy detection using optical coherence tomography. Neurocomputing 2019, 369, 134–144. [Google Scholar] [CrossRef]

- Aldahami, M.; Alqasemi, U. Classification of OCT Images for Detecting Diabetic Retinopathy Disease Using Machine Learning. 2020. Available online: https://assets.researchsquare.com/files/rs-47495/v1/bda4d133-29c5-4c2e-ae8d-f0d9cd084989.pdf?c=1642522911 (accessed on 19 September 2022).

- Sharafeldeen, A.; Elsharkawy, M.; Khalifa, F.; Soliman, A.; Ghazal, M.; AlHalabi, M.; Yaghi, M.; Alrahmawy, M.; Elmougy, S.; Sandhu, H.S.; et al. Precise higher-order reflectivity and morphology models for early diagnosis of diabetic retinopathy using OCT images. Sci. Rep. 2021, 11, 4730. [Google Scholar] [CrossRef]

- Eladawi, N.; Elmogy, M.; Khalifa, F.; Ghazal, M.; Ghazi, N.; Aboelfetouh, A.; Riad, A.; Sandhu, H.; Schaal, S.; El-Baz, A. Early diabetic retinopathy diagnosis based on local retinal blood vessel analysis in optical coherence tomography angiography (OCTA) images. Med. Phys. 2018, 45, 4582–4599. [Google Scholar] [CrossRef]

- Heisler, M.; Karst, S.; Lo, J.; Mammo, Z.; Yu, T.; Warner, S.; Maberley, D.; Beg, M.F.; Navajas, E.V.; Sarunic, M.V. Ensemble deep learning for diabetic retinopathy detection using optical coherence tomography angiography. Transl. Vis. Sci. Technol. 2020, 9, 20. [Google Scholar] [CrossRef]

- Le, D.; Alam, M.; Yao, C.K.; Lim, J.I.; Hsieh, Y.T.; Chan, R.V.; Toslak, D.; Yao, X. Transfer learning for automated OCTA detection of diabetic retinopathy. Transl. Vis. Sci. Technol. 2020, 9, 35. [Google Scholar] [CrossRef]

- Sandhu, H.S.; Elmogy, M.; Sharafeldeen, A.T.; Elsharkawy, M.; El-Adawy, N.; Eltanboly, A.; Shalaby, A.; Keynton, R.; El-Baz, A. Automated Diagnosis of Diabetic Retinopathy Using Clinical Biomarkers, Optical Coherence Tomography, and Optical Coherence Tomography Angiography. Am. J. Ophthalmol. 2020, 216, 201–206. [Google Scholar] [CrossRef] [PubMed]

- Sleman, A.A.; Soliman, A.; Elsharkawy, M.; Giridharan, G.; Ghazal, M.; Sandhu, H.; Schaal, S.; Keynton, R.; Elmaghraby, A.; El-Baz, A. A novel 3D segmentation approach for extracting retinal layers from optical coherence tomography images. Med. Phys. 2021, 48, 1584–1595. [Google Scholar] [CrossRef] [PubMed]

- Soliman, A.; Khalifa, F.; Dunlap, N.; Wang, B.; Abou El-Ghar, M.; El-Baz, A. An iso-surfaces based local deformation handling framework of lung tissues. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 1253–1259. [Google Scholar]

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061. [Google Scholar]

- Sandhu, H.S.; Eladawi, N.; Elmogy, M.; Keynton, R.; Helmy, O.; Schaal, S.; El-Baz, A. Automated diabetic retinopathy detection using optical coherence tomography angiography: A pilot study. Br. J. Ophthalmol. 2018, 102, 1564–1569. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).