Green Citrus Detection and Counting in Orchards Based on YOLOv5-CS and AI Edge System

Abstract

1. Introduction

2. Related Works

2.1. YOLO Models

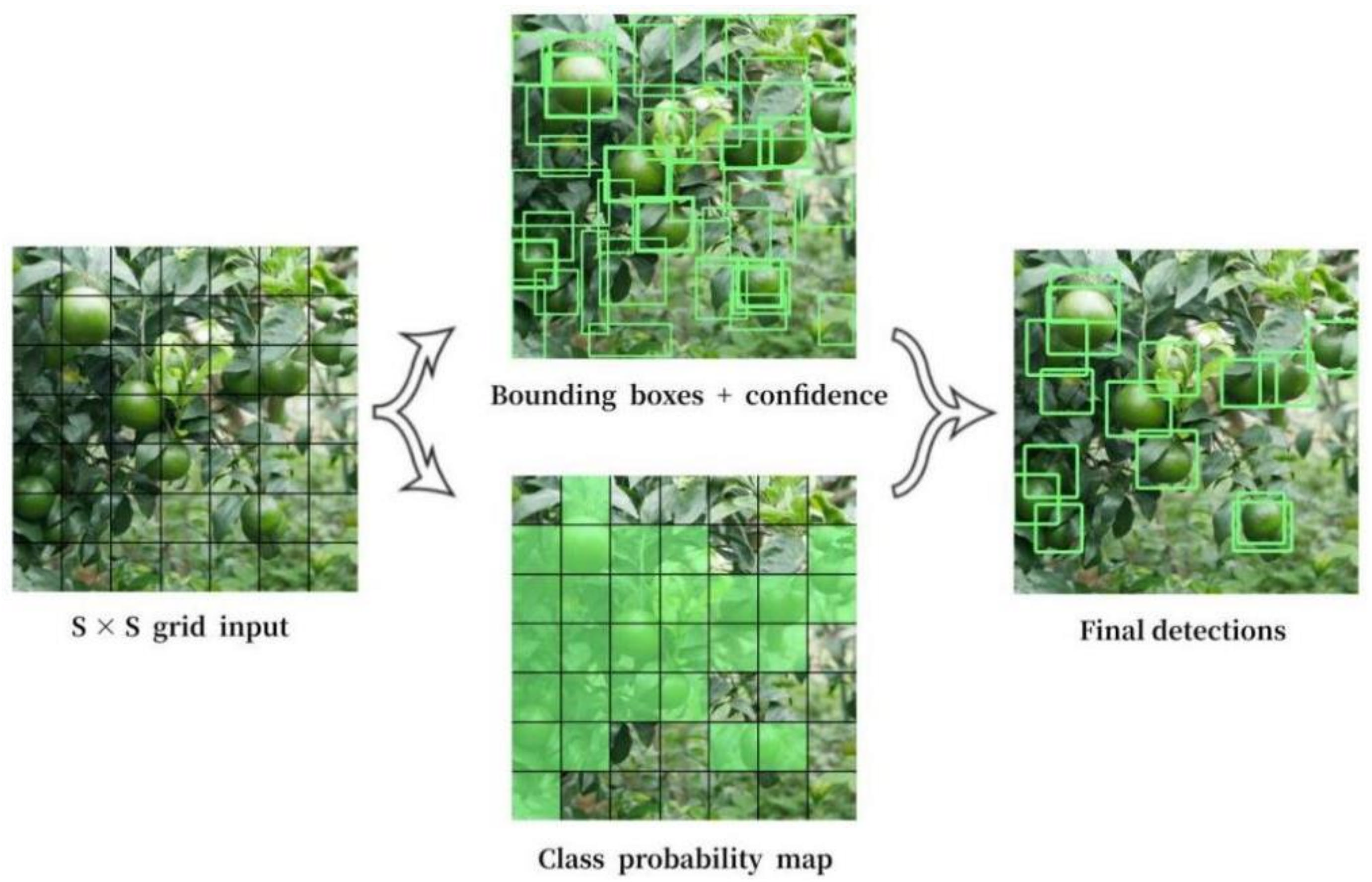

2.1.1. Input

2.1.2. Backbone

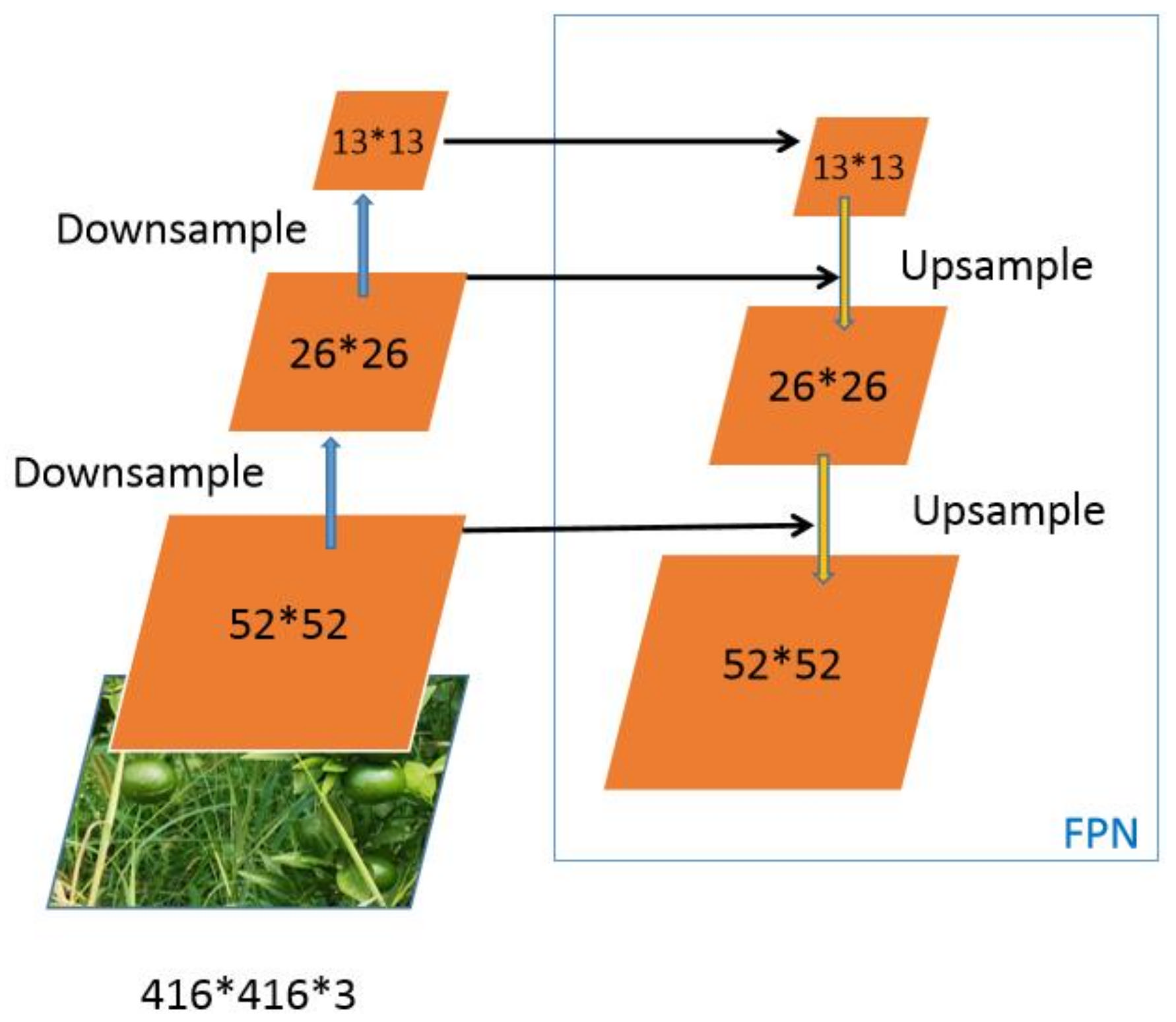

2.1.3. Neck

2.1.4. Output

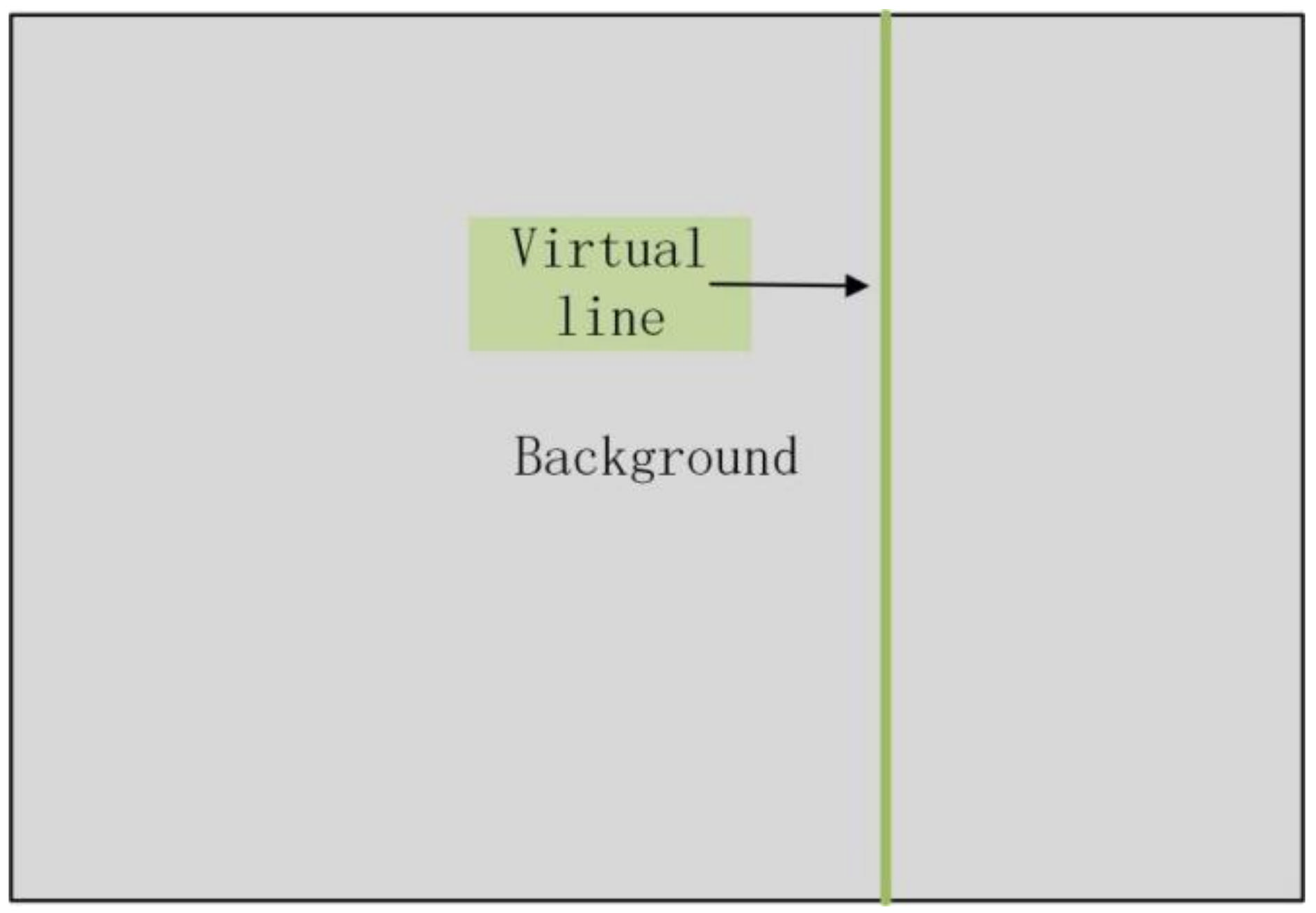

2.2. Object Tracking and Counting

3. Materials and Methods

3.1. Dataset Preprocessing

3.1.1. Data Acquisition

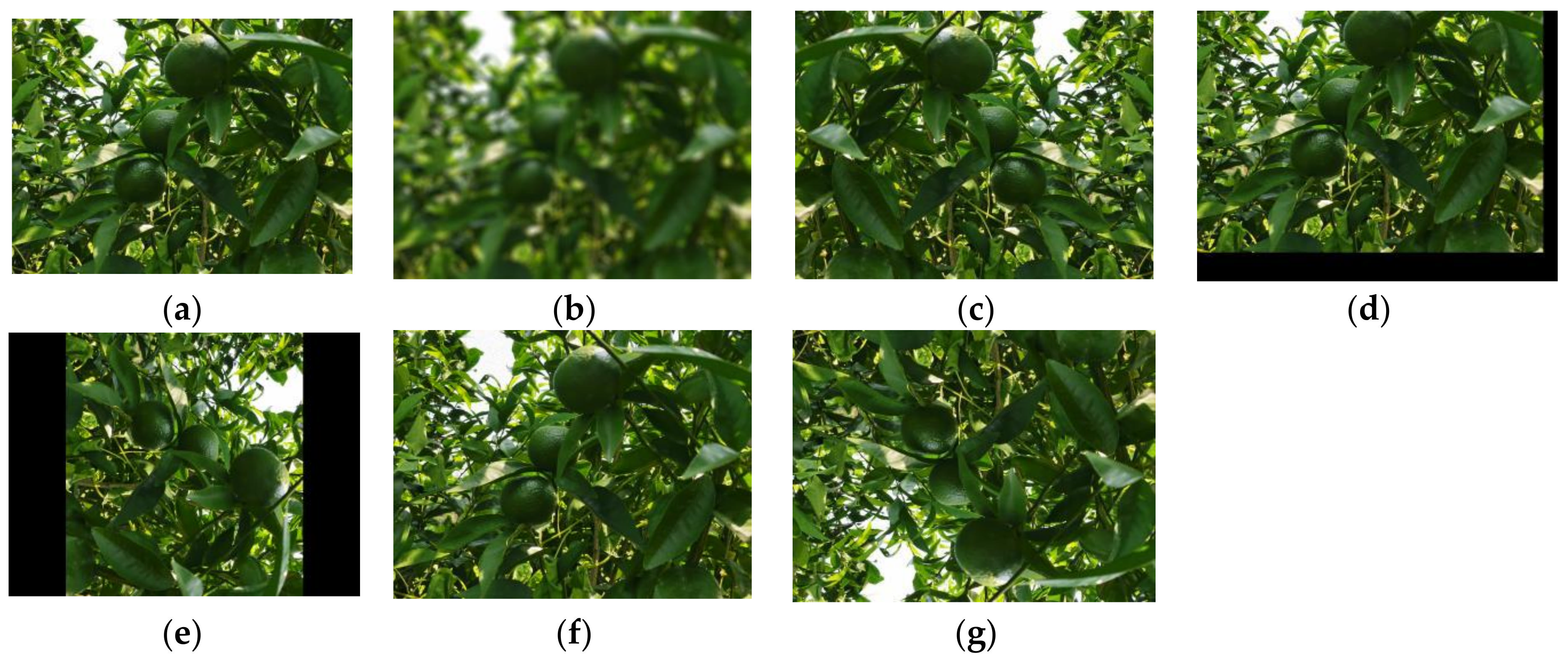

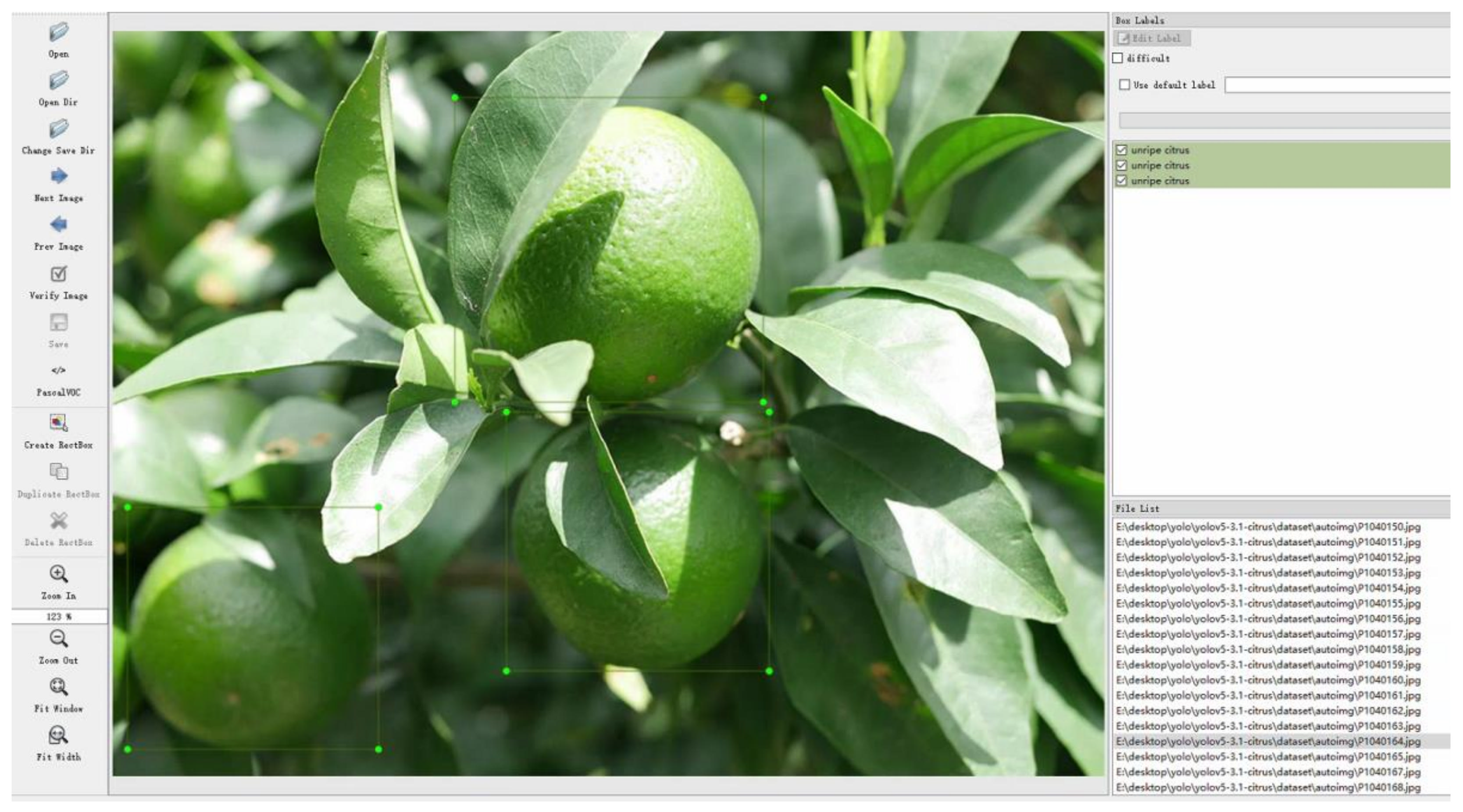

3.1.2. Data Augmentation and Labeling

3.2. YOLOv5-CS Model Design and Edge-Computing System Migration & Deployment

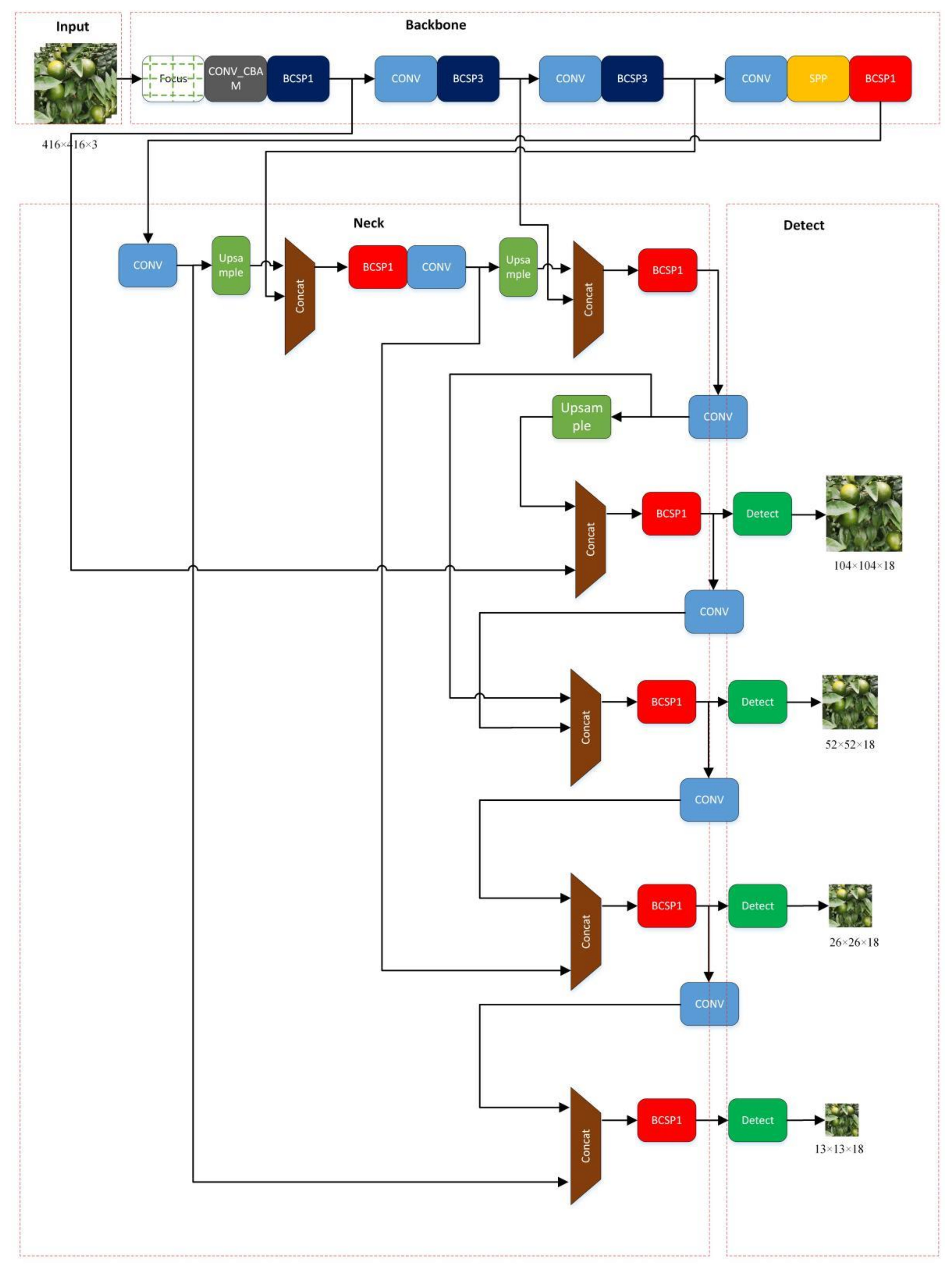

3.2.1. Model Design

3.2.2. Model Optimization

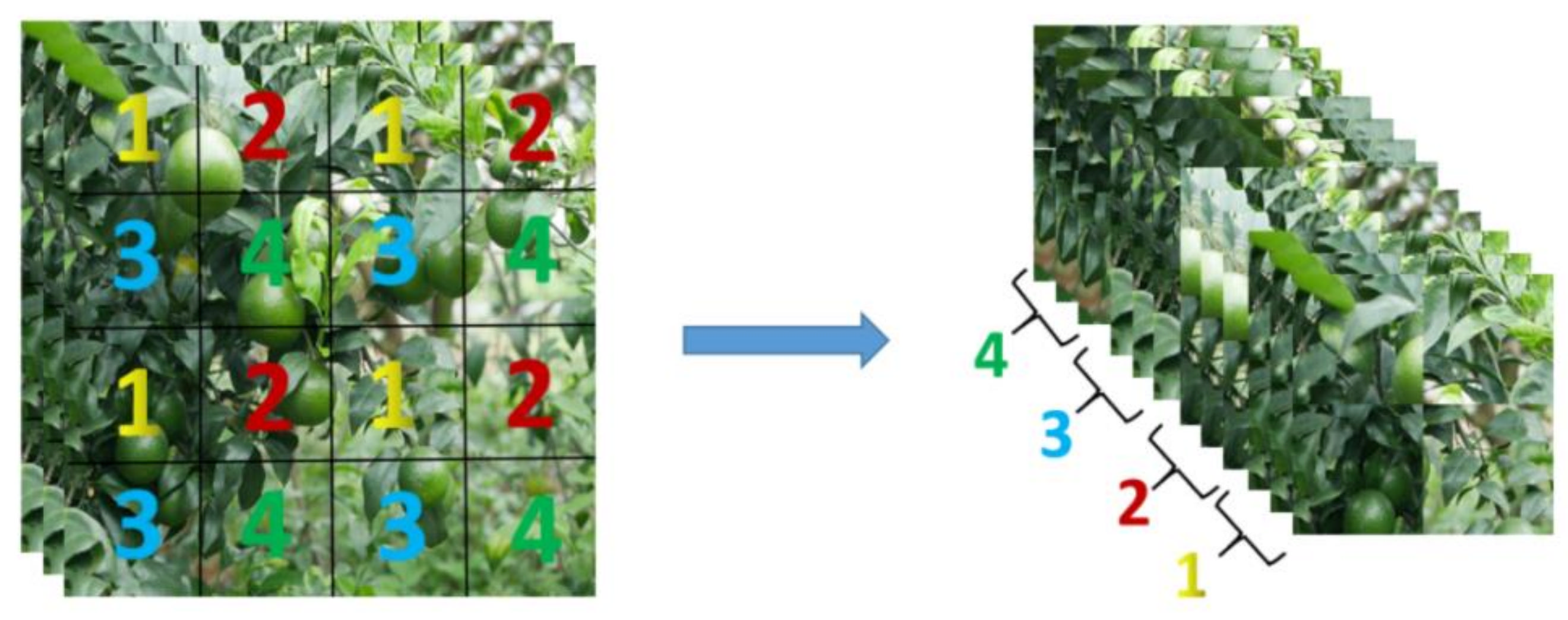

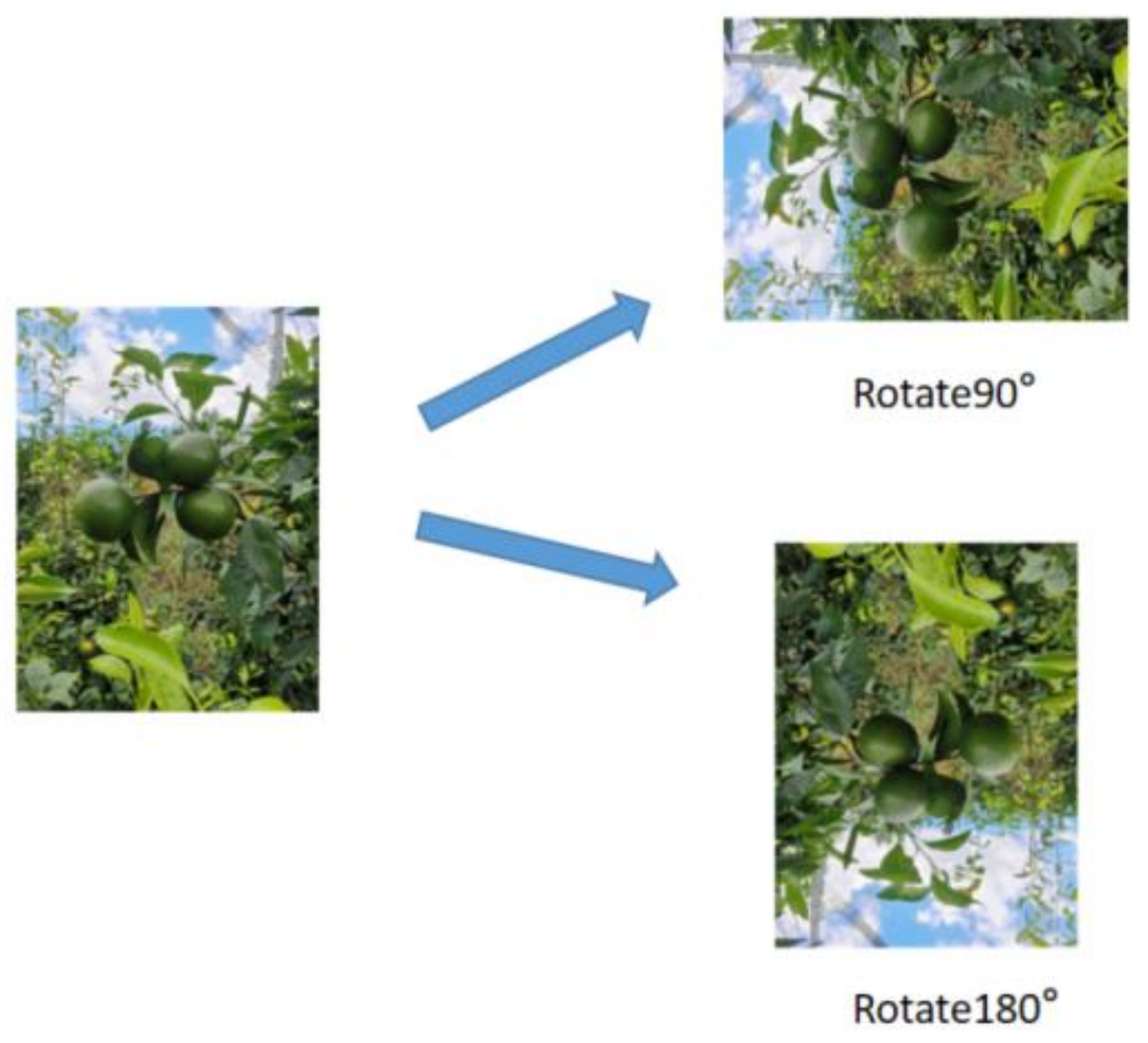

- Image Rotation

- Small Object Detection Layer

- CBAM

- Loss Function

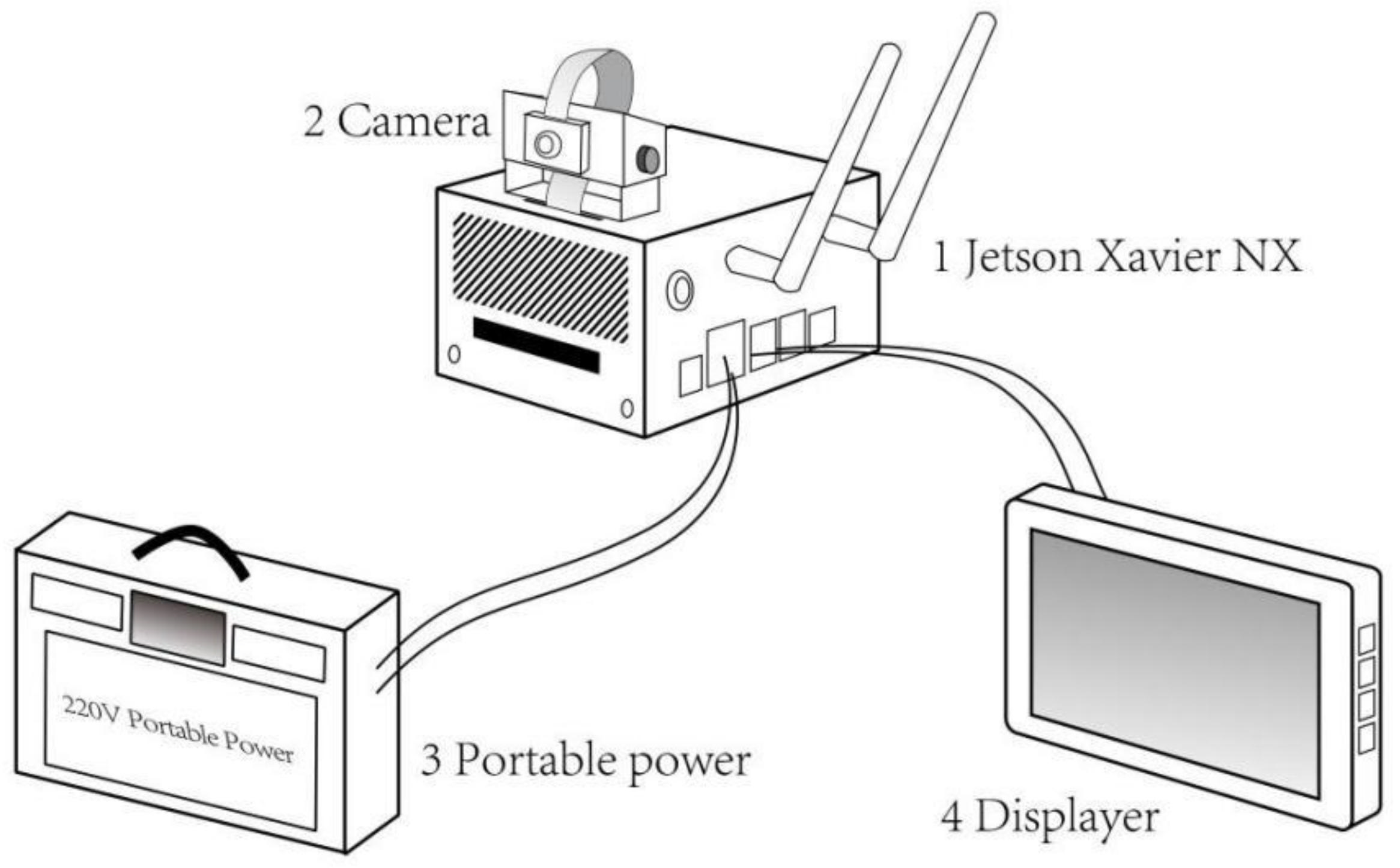

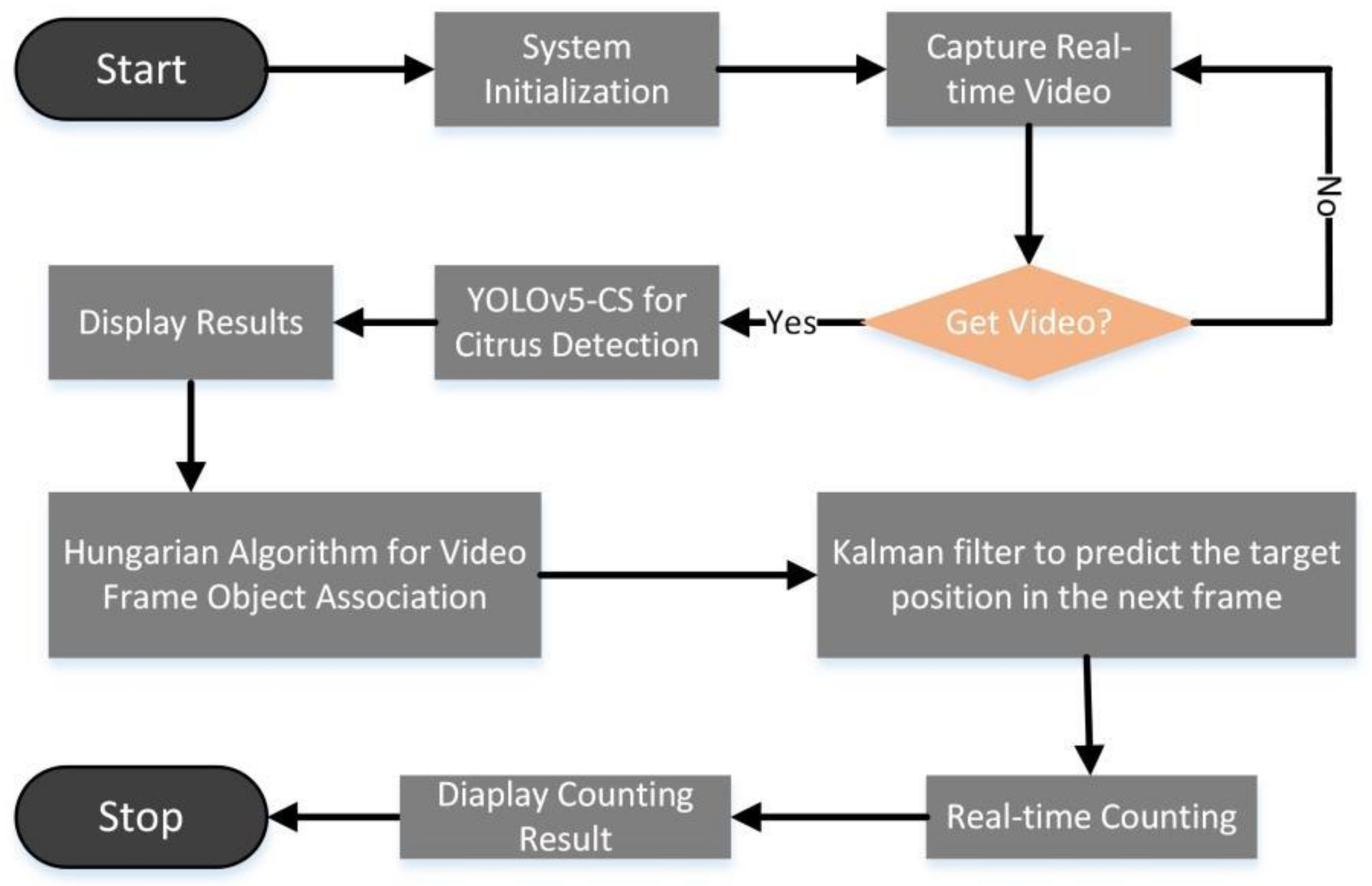

3.2.3. Edge-Computing System Migration & Deployment

3.3. Learning Rate

3.4. Experiment Platform

3.5. Evaluation Index

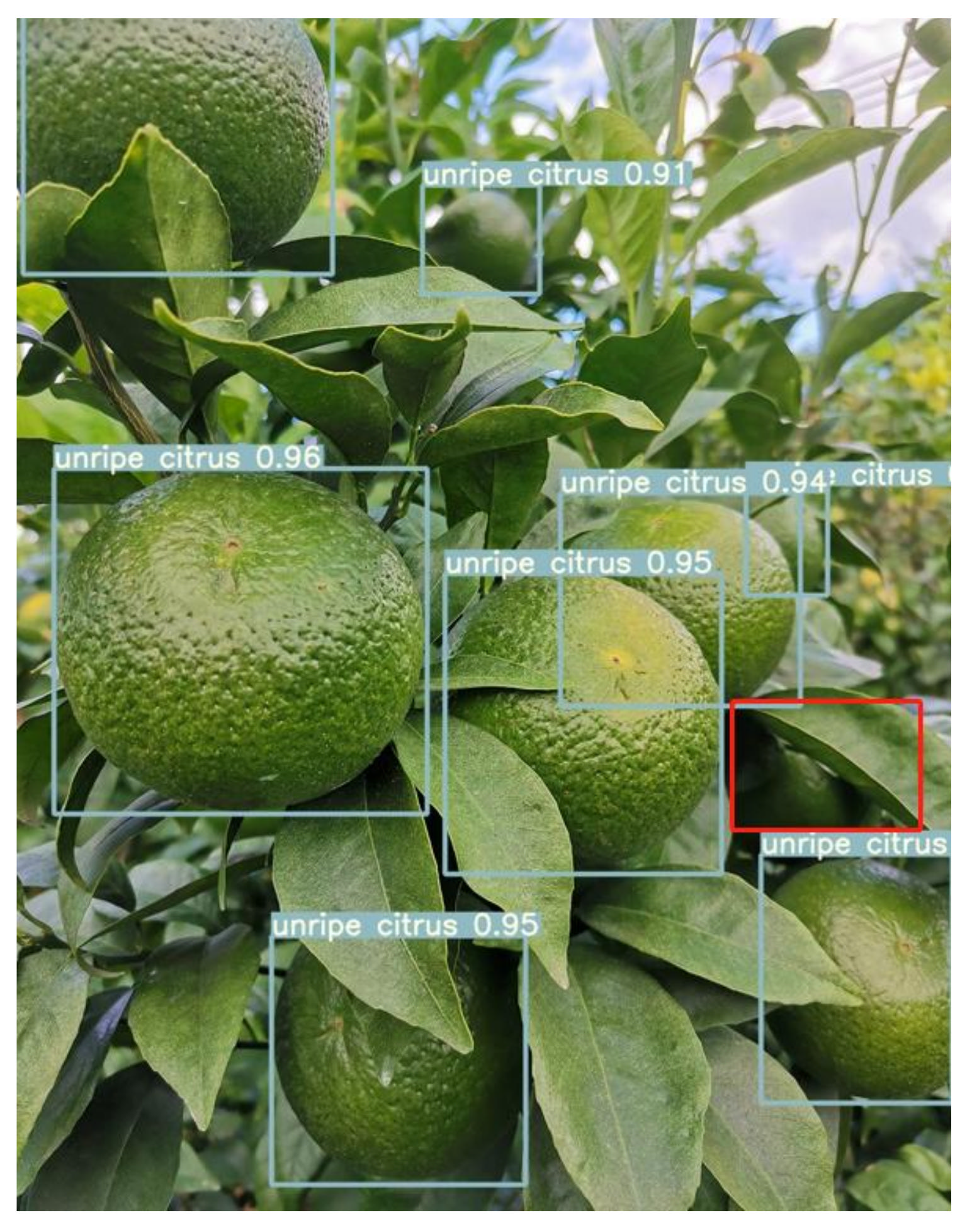

4. Experiment and Results

4.1. Ablation Experiment

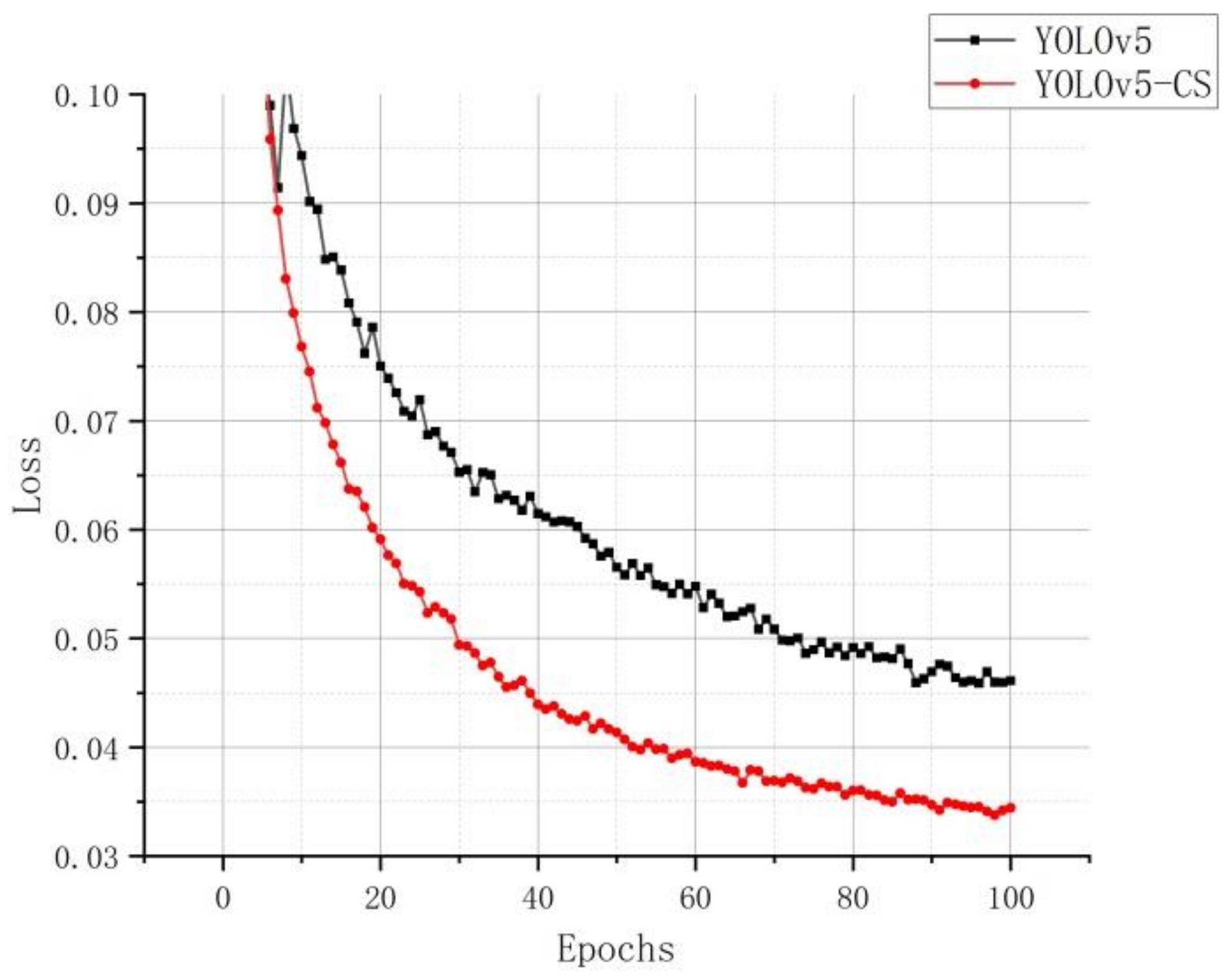

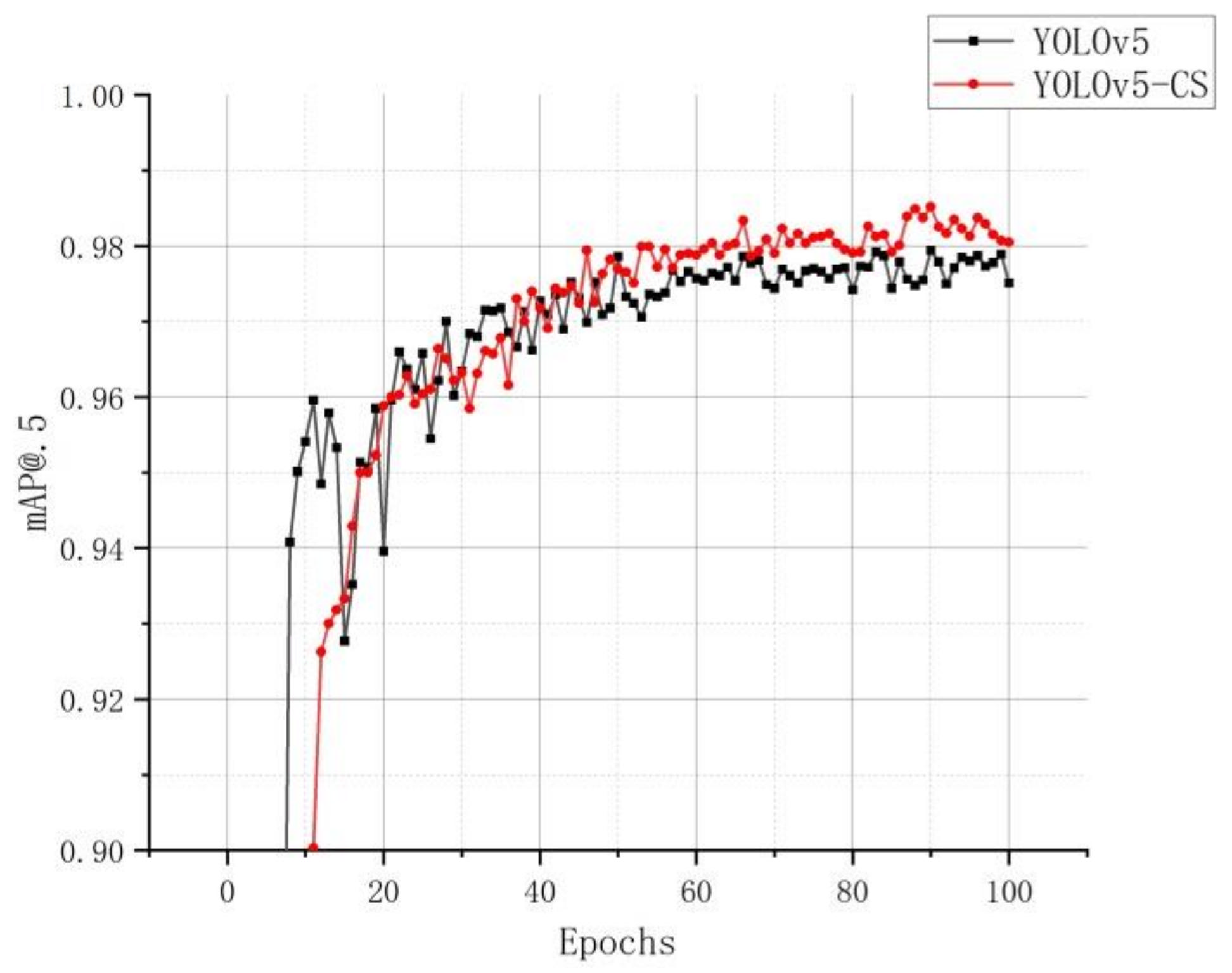

4.2. Training Result

4.3. Counting Result

5. Discussion

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Label | Training Set | Test Set | Total |
|---|---|---|---|---|
| green citrus | unripe citrus | 2211 | 620 | 2831 |

| Serial Number | From | Params | Module | Arguments |
|---|---|---|---|---|
| 0 | −1 | 3520 | Focus | [3, 32, 3] |
| 1 | −1 | 19,170 | Conv_CBAM | [32, 64, 3, 2] |
| 2 | −1 | 19,904 | BottleneckCSP | [64, 64, 1] |
| 3 | −1 | 73,984 | Conv | [64, 128, 3, 2] |
| 4 | −1 | 161,152 | BottleneckCSP | [128, 128, 3] |
| 5 | −1 | 295,424 | Conv | [128, 256, 3, 2] |
| 6 | −1 | 641,792 | BottleneckCSP | [256, 256, 3] |
| 7 | −1 | 1,180,672 | Conv | [256, 512, 3, 2] |
| 8 | −1 | 656,896 | SPP | [512, 512, [5, 9, 13]] |
| 9 | −1 | 1,248,768 | BottleneckCSP | [512, 512, 1, False] |
| 10 | −1 | 131,584 | Conv | [512, 256, 1, 1] |
| 11 | −1 | 0 | Upsample | [None, 2, 'nearest'] |
| 12 | [−1, 6] | 0 | Concat | [1] |
| 13 | −1 | 378,624 | BottleneckCSP | [512, 256, 1, False] |
| 14 | −1 | 66,048 | Conv | [256, 128, 1, 1] |
| 15 | −1 | 0 | Upsample | [None, 2, 'nearest'] |
| 16 | [−1, 4] | 0 | Concat | [1] |
| 17 | −1 | 345,856 | BottleneckCSP | [384, 256, 1, False] |
| 18 | −1 | 33,024 | Conv | [256, 128, 1, 1] |
| 19 | −1 | 0 | Upsample | [None, 2, 'nearest'] |
| 20 | −1 | 0 | Concat | [1] |
| 21 | −1 | 86,912 | BottleneckCSP | [192, 128, 1, False] |
| 22 | −1 | 147,712 | Conv | [128, 128, 3, 2] |
| 23 | −1 | 0 | Concat | [1] |
| 24 | −1 | 95,104 | BottleneckCSP | [256, 128, 1, False] |
| 25 | −1 | 147,712 | Conv | [128, 128, 3, 2] |
| 26 | [−1, 14] | 0 | Concat | [1] |
| 27 | −1 | 313,088 | BottleneckCSP | [256, 256, 1, False] |
| 28 | −1 | 590,336 | Conv | [256, 256, 3, 2] |
| 29 | [−1, 10] | 0 | Concat | [1] |
| 30 | −1 | 1,248,768 | BottleneckCSP | [512, 512, 1, False] |
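The Params column for the plain Conv rows in the network table above can be checked by hand. The following is a minimal sketch, assuming that the Conv module matches the public YOLOv5 implementation (a bias-free convolution followed by batch normalization with a learnable scale and shift) and that Focus stacks four pixel slices of the input before its convolution. The helper names `conv_bn_params` and `focus_params` are hypothetical and introduced only for illustration.

```python
def conv_bn_params(c_in: int, c_out: int, k: int) -> int:
    """Parameter count of a k x k convolution without bias plus BatchNorm.

    Assumption: this mirrors the YOLOv5 Conv module (Conv2d with bias=False
    followed by BatchNorm2d); the activation adds no parameters.
    """
    conv_weights = c_in * c_out * k * k
    bn_params = 2 * c_out  # BatchNorm scale (gamma) and shift (beta)
    return conv_weights + bn_params


def focus_params(c_in: int, c_out: int, k: int) -> int:
    """Parameter count of Focus, assuming it feeds 4 * c_in channels to a Conv."""
    return conv_bn_params(4 * c_in, c_out, k)


# (c_in, c_out, kernel) and the Params value listed in the table, keyed by serial number.
listed_rows = {
    3: ((64, 128, 3), 73_984),
    5: ((128, 256, 3), 295_424),
    7: ((256, 512, 3), 1_180_672),
    10: ((512, 256, 1), 131_584),
}

for serial, (args, listed) in listed_rows.items():
    computed = conv_bn_params(*args)
    print(f"layer {serial}: computed={computed}, listed={listed}, match={computed == listed}")

print(f"layer 0 (Focus): computed={focus_params(3, 32, 3)}, listed=3520")
```

The stride argument in the table does not appear in the helper because stride changes the output resolution, not the number of weights.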

| Data Augmentation | Small Object Detection Layer | CBAM | mAP@.5 | Recall | Epochs |
|---|---|---|---|---|---|
| | | | 96.66% | 92.74% | 100 |
| √ | | | 97.51% | 96.16% | 100 |
| √ | √ | | 97.59% | 96.09% | 100 |
| √ | √ | √ | 98.05% | 97.38% | 100 |

| Neural Network | Epochs | mAP@.5 | Image Size | Precision | Recall |
|---|---|---|---|---|---|
| First training | | | | | |
| YOLOv5 | 100 | 97.51% | 416 | 89.81% | 96.16% |
| YOLOv5-CS | 100 | 98.05% | 416 | 84.49% | 97.38% |
| Retraining | | | | | |
| YOLOv5 | 50 | 97.79% | 416 | 93.03% | 95.27% |
| YOLOv5-CS | 50 | 98.23% | 416 | 86.97% | 97.66% |

| Experiment No. | Actual Number | Counted Number | Relative Error | Average Relative Error (10 Runs) | FPS |
|---|---|---|---|---|---|
| 1 | 40 | 40 | 0% | 4.25% | 28 |
| 2 | 40 | 37 | 7.5% | | |
| 3 | 40 | 36 | 10% | | |
| 4 | 40 | 38 | 5% | | |
| 5 | 40 | 38 | 5% | | |
| 6 | 40 | 39 | 2.5% | | |
| 7 | 40 | 37 | 7.5% | | |
| 8 | 40 | 39 | 2.5% | | |
| 9 | 40 | 40 | 0% | | |
| 10 | 40 | 39 | 2.5% | | |

| Experiment No. | Actual Number | Counted Number | Relative Error | Average Relative Error (10 Runs) | FPS |
|---|---|---|---|---|---|
| 1 | 24 | 22 | 8.33% | 8.75% | 28 |
| 2 | 24 | 21 | 12.5% | | |
| 3 | 24 | 23 | 4.17% | | |
| 4 | 24 | 25 | 4.17% | | |
| 5 | 24 | 21 | 12.5% | | |
| 6 | 24 | 20 | 16.67% | | |
| 7 | 24 | 26 | 8.33% | | |
| 8 | 24 | 22 | 8.33% | | |
| 9 | 24 | 23 | 4.17% | | |
| 10 | 24 | 22 | 8.33% | | |
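The relative errors and ten-run averages in the two counting tables above follow from simple arithmetic. The sketch below reproduces them under the assumption that relative error is the absolute difference between the counted and actual fruit numbers divided by the actual number. The function names are hypothetical; the counts are copied from the tables.

```python
from typing import List, Sequence


def relative_errors(actual: int, counted: Sequence[int]) -> List[float]:
    """Relative counting error of each run, in percent."""
    return [100 * abs(c - actual) / actual for c in counted]


def average_relative_error(actual: int, counted: Sequence[int]) -> float:
    """Mean of the per-run relative errors, in percent."""
    errors = relative_errors(actual, counted)
    return sum(errors) / len(errors)


# Counted fruit numbers for the ten runs of each counting table.
first_table = (40, [40, 37, 36, 38, 38, 39, 37, 39, 40, 39])
second_table = (24, [22, 21, 23, 25, 21, 20, 26, 22, 23, 22])

for name, (actual, counted) in (("first", first_table), ("second", second_table)):
    per_run = [round(e, 2) for e in relative_errors(actual, counted)]
    average = round(average_relative_error(actual, counted), 2)
    print(f"{name} table: per-run errors (%) = {per_run}, average (%) = {average}")
```

Because the error uses an absolute difference, over-counting (for example 25 or 26 fruits against 24 actual) contributes to the average in the same way as under-counting.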

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lyu, S.; Li, R.; Zhao, Y.; Li, Z.; Fan, R.; Liu, S. Green Citrus Detection and Counting in Orchards Based on YOLOv5-CS and AI Edge System. Sensors 2022, 22, 576. https://doi.org/10.3390/s22020576