Comparison of RetinaNet-Based Single-Target Cascading and Multi-Target Detection Models for Administrative Regions in Network Map Pictures

Abstract

1. Introduction

2. Method

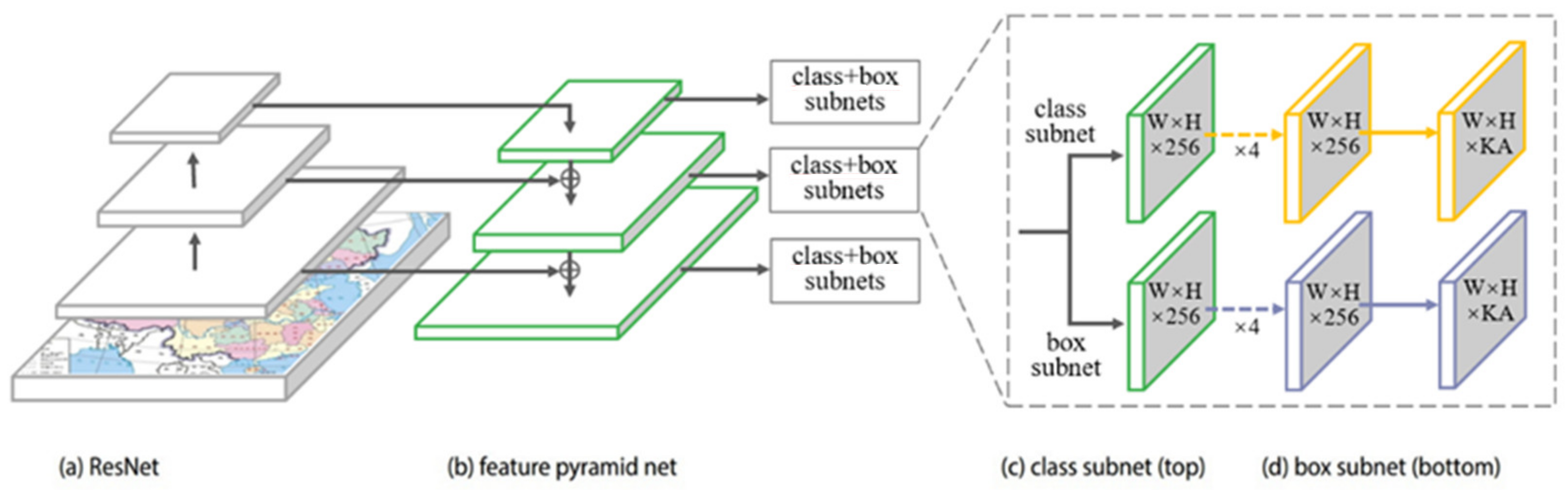

2.1. RetinaNet Model Structure

2.2. Focal Loss

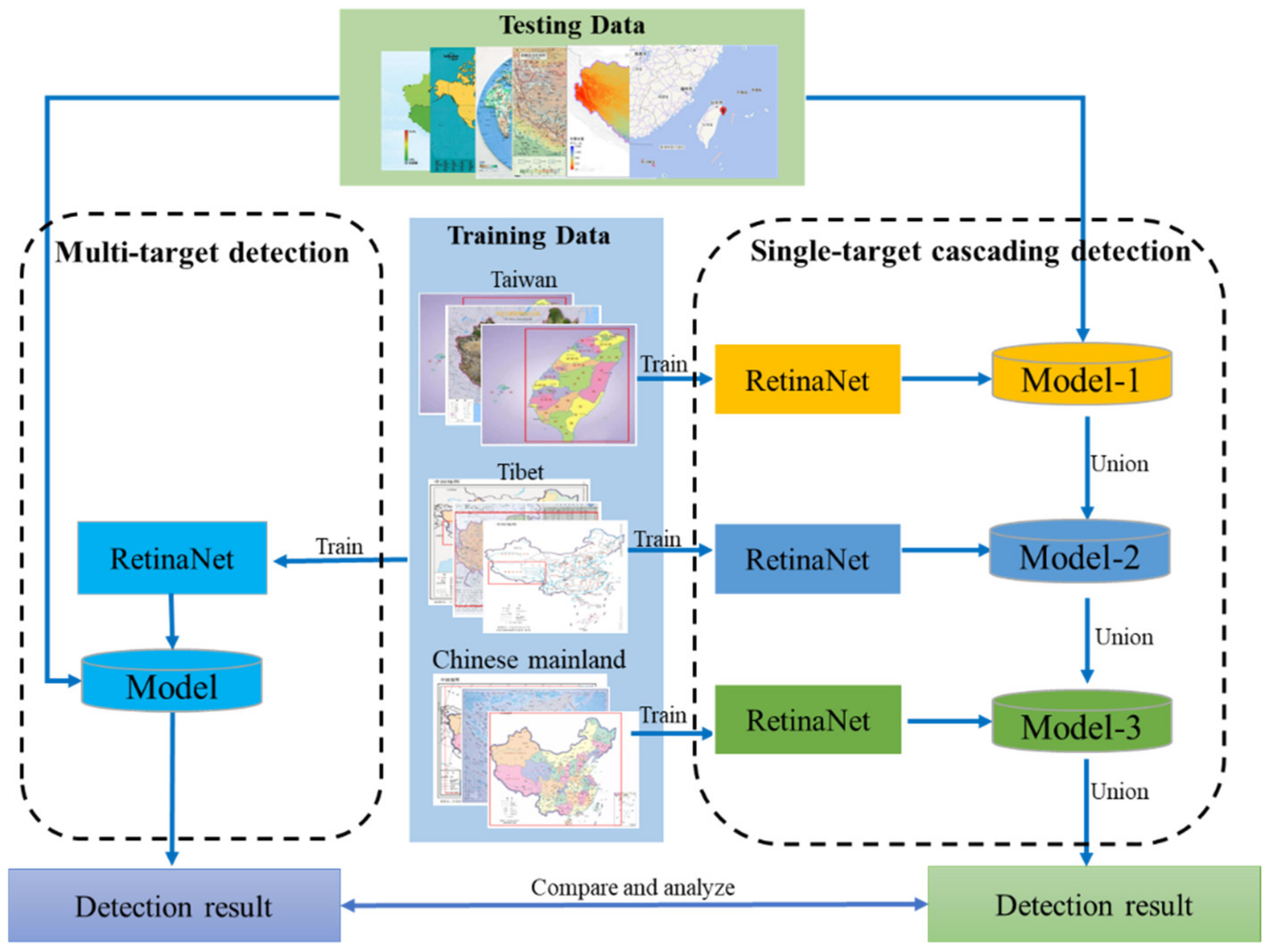

2.3. Single-Target Cascading and Multi-Target Detection Using RetinaNet Models

3. Experiments

3.1. Dataset

3.2. Evaluation Metrics

3.3. Implementation Details

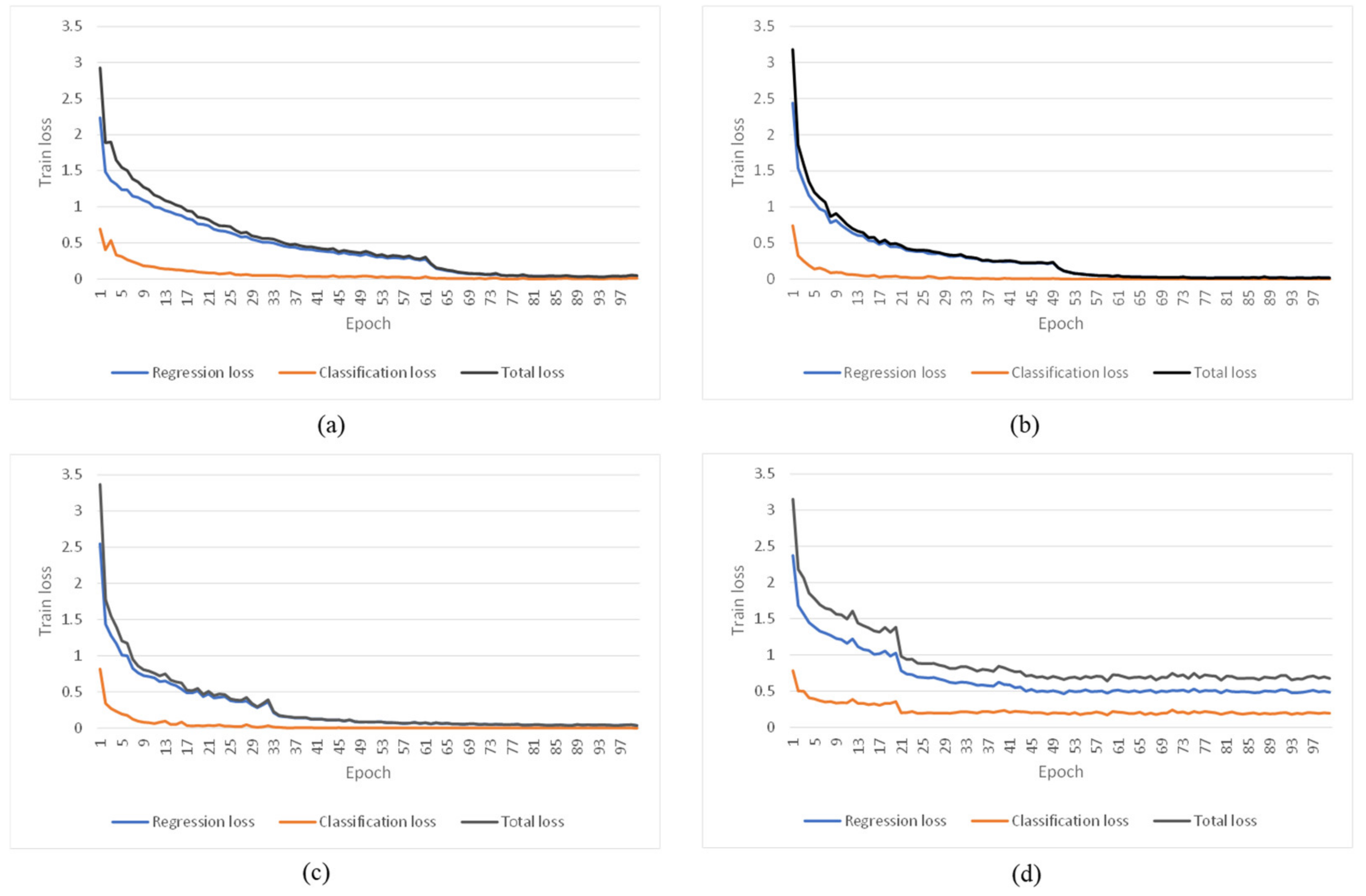

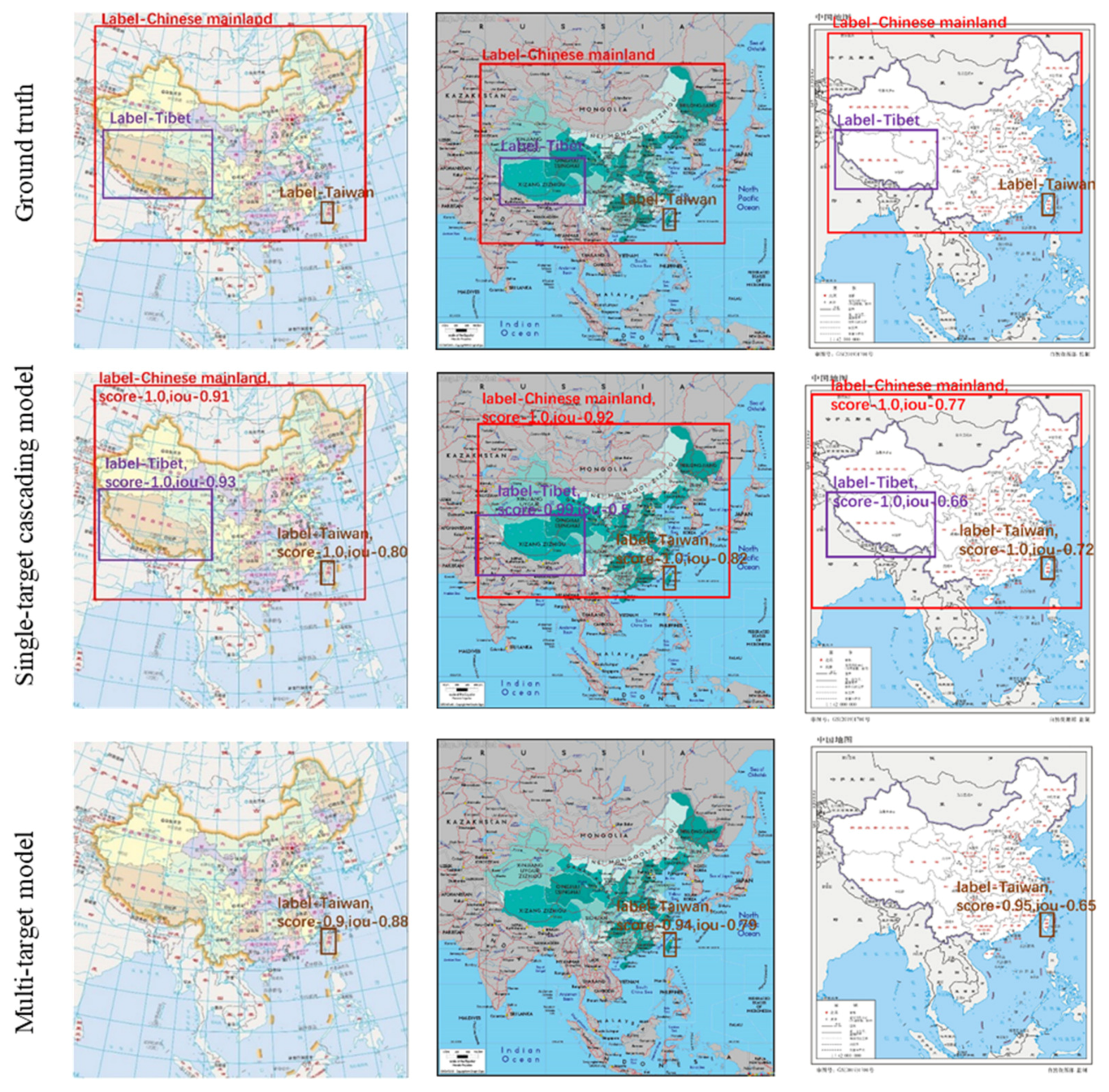

3.4. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


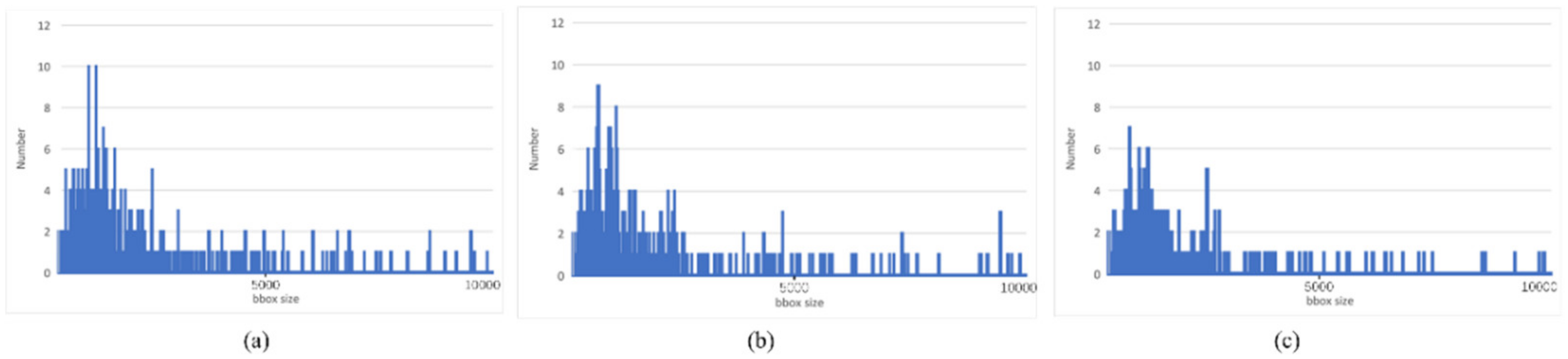

| Target | Region of Interest | Training Dataset | Test Dataset | Total |
|---|---|---|---|---|
| Target 1 | Taiwan | 2151 | 538 | 2689 |
| Target 2 | Tibet | 582 | 146 | 728 |
| Target 3 | Chinese mainland | 459 | 115 | 574 |
| Total | | 3192 | 799 | 3991 |
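The counts in the dataset table can be checked and summarized with a few lines of arithmetic. The following is a minimal sketch, not the authors' code: the dictionary `counts` is a hypothetical name, and its values are copied from the table above. It prints each region's share of all samples and the fraction of that region held out for testing.

```python
# Per-region (training, test) counts copied from the dataset table.
# The variable names are illustrative assumptions, not from the paper.
counts = {
    "Taiwan": (2151, 538),
    "Tibet": (582, 146),
    "Chinese mainland": (459, 115),
}

total_train = sum(train for train, _ in counts.values())
total_test = sum(test for _, test in counts.values())
total = total_train + total_test

for region, (train, test) in counts.items():
    n = train + test
    # Share of the whole dataset, and fraction of this region used for testing.
    print(f"{region}: share = {n / total:.1%}, test fraction = {test / n:.1%}")

print(f"train = {total_train}, test = {total_test}, total = {total}")
```

The totals agree with the last row of the table, and the per-region shares make the class imbalance between the three targets explicit.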

| No. | path_img_file | box_x | box_y | Width | Height | Label |
|---|---|---|---|---|---|---|
| 1 | dataset/image_0001.jpg | 890 | 659 | 944 | 743 | Taiwan |
| 2 | dataset/image_0002.jpg | 775 | 631 | 845 | 721 | Taiwan |
| 3 | dataset/image_0003.jpg | 36 | 57 | 762 | 535 | Xizang |
| 4 | dataset/image_0004.jpg | 5 | 51 | 536 | 316 | Xizang |
| 5 | dataset/image_0005.jpg | 5 | 2 | 341 | 289 | Chinese mainland |
| 6 | dataset/image_0006.jpg | 95 | 93 | 666 | 546 | Chinese mainland |
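Annotation rows of this shape are commonly stored as comma-separated text. The sketch below shows one way such rows might be loaded, assuming a header-less CSV with the columns in the order shown in the table. The `Annotation` class and `load_annotations` function are hypothetical names, and the four numeric fields are kept exactly as the table labels them, without interpreting them further.

```python
import csv
import io
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """One labeled box; field names mirror the table's column headers."""
    path_img_file: str
    box_x: int
    box_y: int
    width: int
    height: int
    label: str


def load_annotations(text: str) -> list[Annotation]:
    """Parse header-less CSV text (assumed layout) into Annotation records."""
    records = []
    for row in csv.reader(io.StringIO(text)):
        if not row:  # skip blank lines
            continue
        path, x, y, w, h, label = (field.strip() for field in row)
        records.append(Annotation(path, int(x), int(y), int(w), int(h), label))
    return records


# The six example rows from the table, written as CSV for illustration.
SAMPLE = """\
dataset/image_0001.jpg,890,659,944,743,Taiwan
dataset/image_0002.jpg,775,631,845,721,Taiwan
dataset/image_0003.jpg,36,57,762,535,Xizang
dataset/image_0004.jpg,5,51,536,316,Xizang
dataset/image_0005.jpg,5,2,341,289,Chinese mainland
dataset/image_0006.jpg,95,93,666,546,Chinese mainland
"""

annotations = load_annotations(SAMPLE)
label_counts = Counter(a.label for a in annotations)
print(label_counts)
```

Grouping by `label` in this way is also a convenient starting point for splitting one annotation file into per-target files, as a single-target setup would need.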

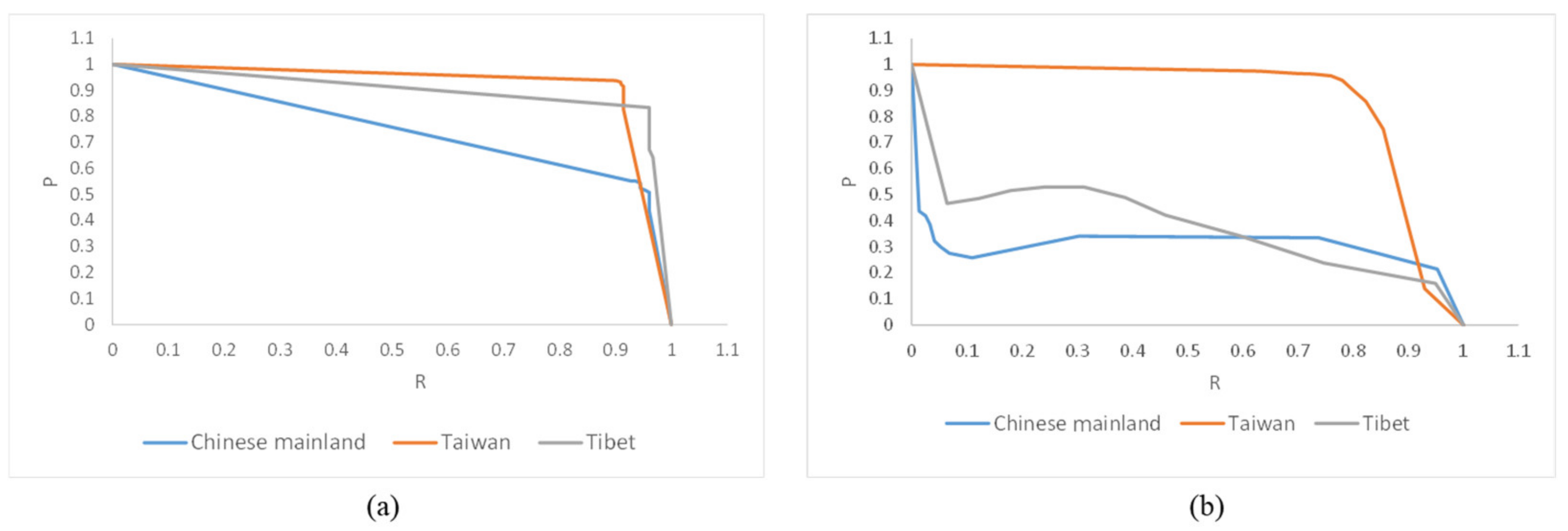

| Metric | Single-Target Model: Taiwan | Single-Target Model: Tibet | Single-Target Model: Chinese Mainland | Single-Target Cascading Detection Models: Taiwan, Tibet, and Chinese Mainland |
|---|---|---|---|---|
| Precision (P) | 0.92 | 0.77 | 0.52 | 0.80 |
| Recall (R) | 0.91 | 0.96 | 0.94 | 0.93 |
| F1 score | 0.92 | 0.86 | 0.67 | 0.86 |

| Metric | Multi-Target Model: Taiwan | Multi-Target Model: Tibet | Multi-Target Model: Chinese Mainland | Multi-Target Model: Taiwan, Tibet, and Chinese Mainland |
|---|---|---|---|---|
| Precision (P) | 0.94 | 0.53 | 0.3 | 0.77 |
| Recall (R) | 0.78 | 0.31 | 0.05 | 0.44 |
| F1 score | 0.85 | 0.39 | 0.10 | 0.56 |
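The F1 rows in the two result tables follow from the precision and recall rows through the standard harmonic-mean definition, F1 = 2PR / (P + R). As a minimal sketch (the function and dictionary names are illustrative, and the inputs are the rounded values from the combined columns), the reported figures can be recomputed as follows.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) from the combined three-target columns.
reported = {
    "single-target cascading": (0.80, 0.93, 0.86),
    "multi-target": (0.77, 0.44, 0.56),
}

for name, (p, r, f1_reported) in reported.items():
    f1 = f1_score(p, r)
    print(f"{name}: recomputed F1 = {f1:.2f}, reported F1 = {f1_reported:.2f}")
```

Because the tabulated precision and recall are already rounded to two decimals, recomputing some per-region entries this way can differ from the tabulated F1 in the last digit.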

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Du, K.; Che, X.; Wang, Y.; Liu, J.; Luo, A.; Ma, R.; Xu, S. Comparison of RetinaNet-Based Single-Target Cascading and Multi-Target Detection Models for Administrative Regions in Network Map Pictures. Sensors 2022, 22, 7594. https://doi.org/10.3390/s22197594