A Comparison of Machine Learning Algorithms and Feature Sets for Automatic Vocal Emotion Recognition in Speech

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Database

3.2. Feature Extraction

3.3. Classifiers

3.4. Statistical Analyses

4. Results

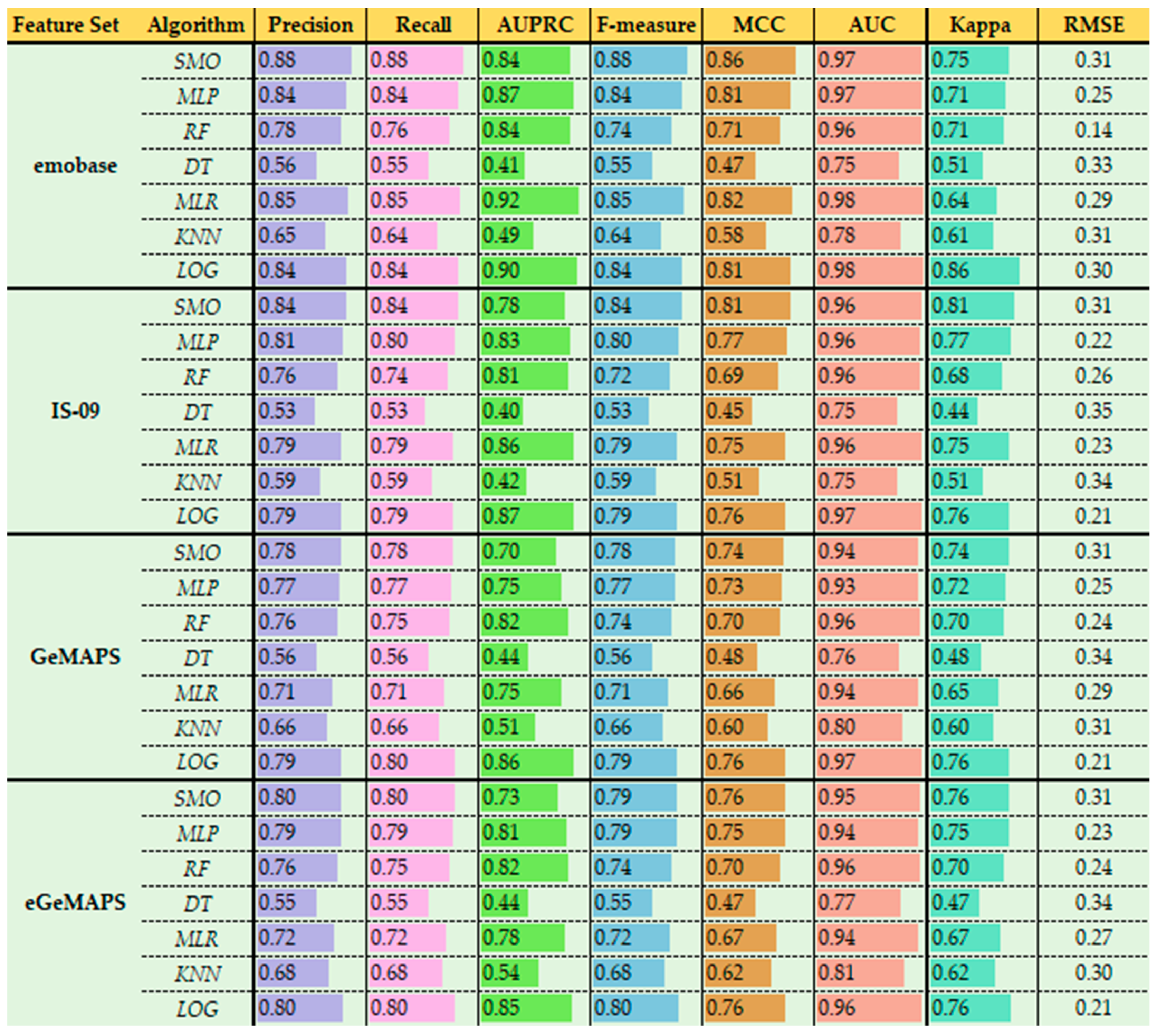

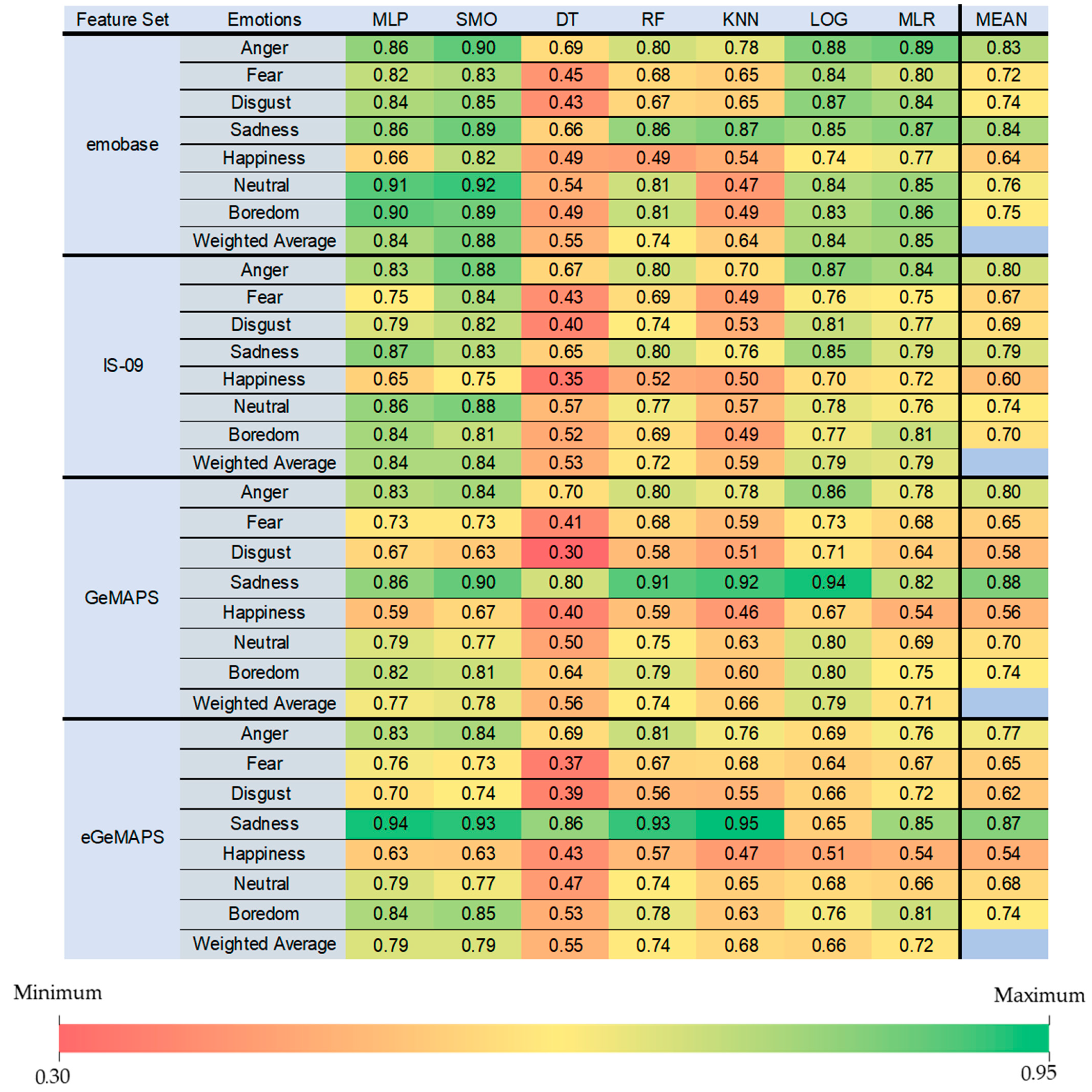

4.1. 10-Fold Cross-Validation

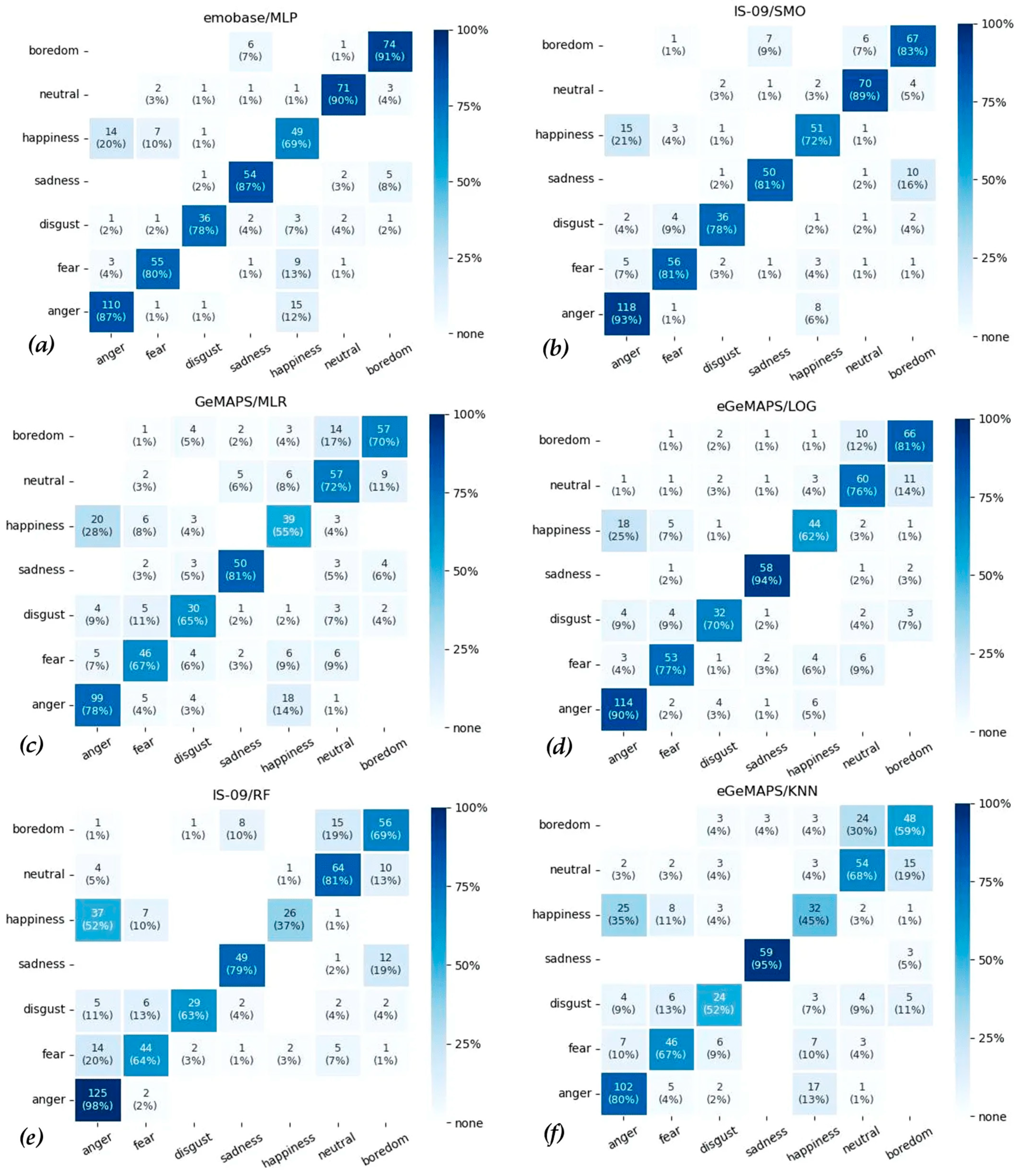

4.2. F-Measures of Class Predictions and Confusion Matrices
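
This section gives no formula for the F-measure. The sketch below assumes the usual per-class F1 score: the harmonic mean of precision and recall, read off a confusion matrix whose rows are true classes and whose columns are predicted classes. The helper `per_class_f1` and the 3-class matrix are hypothetical illustrations, not data from this study.

```python
def per_class_f1(confusion, labels):
    """Per-class F1 from a square confusion matrix.

    confusion[i][j] counts instances whose true class is labels[i]
    and whose predicted class is labels[j].
    """
    n = len(labels)
    scores = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])  # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        # Harmonic mean of precision and recall; 0 when both are 0.
        scores[label] = 2 * precision * recall / denom if denom else 0.0
    return scores


# Hypothetical 3-class example (not results from this study).
labels = ["Anger", "Fear", "Neutral"]
confusion = [
    [8, 1, 1],  # true Anger
    [2, 6, 2],  # true Fear
    [0, 1, 9],  # true Neutral
]
scores = per_class_f1(confusion, labels)
for label, f1 in scores.items():
    print(f"{label}: F1 = {f1:.3f}")
```

This prints 0.800 for Anger, 0.667 for Fear and 0.818 for Neutral. Fear scores lowest because both its recall (6/10) and its precision (6/8) are reduced.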

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Emotions | Number of Instances |
|---|---|
| Anger | 127 |
| Fear | 69 |
| Disgust | 46 |
| Sadness | 62 |
| Happiness | 71 |
| Boredom | 81 |
| Neutral | 79 |
| Total | 535 |
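
The results reported below use 10-fold cross-validation. The classes in the table above are imbalanced (127 Anger versus 46 Disgust utterances), so folds are commonly stratified to keep roughly the overall class proportions in each fold. This section does not describe the folding procedure, so stratification is an assumption here. The sketch below is a minimal, deterministic stratified split. `stratified_folds` is a hypothetical helper, and real toolkits usually shuffle the data with a random seed first.

```python
from collections import Counter

# Class counts copied from the table above (535 utterances in total).
CLASS_COUNTS = {
    "Anger": 127, "Fear": 69, "Disgust": 46, "Sadness": 62,
    "Happiness": 71, "Boredom": 81, "Neutral": 79,
}


def stratified_folds(labels, k=10):
    """Split instance indices into k folds with near-equal class proportions.

    Indices are grouped by class and dealt round-robin across the folds.
    The fold counter carries over between classes, so fold sizes differ
    by at most one. No shuffling is done, which keeps the sketch deterministic.
    """
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    folds = [[] for _ in range(k)]
    next_fold = 0
    for label in sorted(by_class):
        for idx in by_class[label]:
            folds[next_fold].append(idx)
            next_fold = (next_fold + 1) % k
    return folds


labels = [label for label, n in CLASS_COUNTS.items() for _ in range(n)]
folds = stratified_folds(labels, k=10)
for i, test_idx in enumerate(folds):
    held_out = set(test_idx)
    train_idx = [j for j in range(len(labels)) if j not in held_out]
    # A classifier would be trained on train_idx and scored on test_idx here.
    counts = Counter(labels[j] for j in test_idx)
    print(f"fold {i}: {len(test_idx)} test / {len(train_idx)} train, Anger = {counts['Anger']}")
```

With these counts, every fold holds 53 or 54 test utterances. For each emotion, the per-fold count differs by at most one between folds.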

| Feature Sets | Low-Level Descriptors | Functionals |
|---|---|---|
| emobase and IS-09 (Common Features) | F0, 12 MFCCs, ZCR, Probability of Voicing | Mean, Standard Deviation, Skewness, Kurtosis, Minimum and Maximum Value, Range, Slope, Offset, and Quadratic Error of the Linear Approximation |
| emobase | * Intensity, Loudness, F0 Envelope, 8 Line Spectral Frequencies | * 3 Inter-Quartile Ranges, Quartiles 1–3 |
| IS-09 | * (RMS) Energy | - |
| GeMAPS | F0, H1–H2 Harmonic Difference, H1–A3 Harmonic Difference (F0 − A3), Jitter, Formant 1–3 Frequencies, Formant 1 Bandwidth, Shimmer, Loudness, HNR, Alpha Ratio, Hammarberg Index, Spectral Slope 0–500 Hz and 500–1500 Hz, Formant 1–3 Relative Energy | Mean, Coefficient of Variation; (for Loudness and F0): 20th, 50th, and 80th Percentiles, Range of the 20th to 80th Percentile, Mean and Standard Deviation of the Slope of Rising/Falling Signal Parts; (6 Additional Temporal Features): Rate of Loudness Peaks, Mean Length and Standard Deviation of Regions with F0 > 0 and F0 = 0, Pseudo-Syllable Rate |
| eGeMAPS | ** MFCCs 1–4, Spectral Flux, Formant 2–3 Bandwidth | * Equivalent Sound Level; the inclusion of voiced and unvoiced regions varies across some LLDs |

| Feature Set (%) | MLP | SMO | DT | RF | KNN | LOG | MLR |
|---|---|---|---|---|---|---|---|
| emobase | 84.00 | 87.85 | 54.95 | 75.70 | 63.93 | 83.74 | 84.70 |
| IS-09 | 80.37 | 83.74 | 53.08 | 73.46 | 58.69 | 79.44 | 78.51 |
| GeMAPS | 76.63 | 78.32 | 56.10 | 75.00 | 66.00 | 79.63 | 70.65 |
| eGeMAPS | 79.25 | 79.63 | 55.14 | 74.77 | 68.22 | 79.81 | 71.96 |
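
Assuming these values are overall classification accuracies, they can be compared with the chance level implied by the class-distribution table above. Always predicting the largest class (Anger, 127 of 535 utterances) would give about 23.74%. Guessing uniformly over the seven classes would give about 14.29%. The short sketch below checks this arithmetic and locates the highest and lowest entries, using values copied from the two tables. The variable names are illustrative only.

```python
# Values copied from the accuracy table above (percent).
ACCURACY = {
    "emobase": {"MLP": 84.00, "SMO": 87.85, "DT": 54.95, "RF": 75.70,
                "KNN": 63.93, "LOG": 83.74, "MLR": 84.70},
    "IS-09":   {"MLP": 80.37, "SMO": 83.74, "DT": 53.08, "RF": 73.46,
                "KNN": 58.69, "LOG": 79.44, "MLR": 78.51},
    "GeMAPS":  {"MLP": 76.63, "SMO": 78.32, "DT": 56.10, "RF": 75.00,
                "KNN": 66.00, "LOG": 79.63, "MLR": 70.65},
    "eGeMAPS": {"MLP": 79.25, "SMO": 79.63, "DT": 55.14, "RF": 74.77,
                "KNN": 68.22, "LOG": 79.81, "MLR": 71.96},
}
# Class counts copied from the class-distribution table above.
CLASS_COUNTS = {"Anger": 127, "Fear": 69, "Disgust": 46, "Sadness": 62,
                "Happiness": 71, "Boredom": 81, "Neutral": 79}

total = sum(CLASS_COUNTS.values())                            # 535 utterances
majority_baseline = 100 * max(CLASS_COUNTS.values()) / total  # always predict Anger
uniform_baseline = 100 / len(CLASS_COUNTS)                    # random guess over 7 classes

# Flatten the table into (feature set, classifier, value) cells.
cells = [(fs, clf, acc) for fs, row in ACCURACY.items() for clf, acc in row.items()]
best = max(cells, key=lambda cell: cell[2])
worst = min(cells, key=lambda cell: cell[2])

print(f"majority baseline: {majority_baseline:.2f} %")  # 23.74 %
print(f"uniform baseline:  {uniform_baseline:.2f} %")   # 14.29 %
print(f"highest: {best[1]} on {best[0]} ({best[2]:.2f} %)")
print(f"lowest:  {worst[1]} on {worst[0]} ({worst[2]:.2f} %)")
```

The highest entry is SMO on emobase (87.85%). The lowest is DT on IS-09 (53.08%), which is still more than twice the majority-class baseline.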

| Feature Set | MLP | DT | RF | KNN | LOG | MLR |
|---|---|---|---|---|---|---|
| emobase | t = 4.79, p = 0.001 ** | t = 14.78, p < 0.001 ** | t = 12.21, p < 0.001 ** | t = 10.93, p < 0.001 ** | t = 4.56, p = 0.001 ** | t = 3.58, p = 0.005 * |
| IS-09 | t = 3.05, p = 0.001 ** | t = 10.00, p < 0.001 ** | t = 7.20, p < 0.001 ** | t = 10.77, p < 0.001 ** | t = 1.40, p = 0.198 | t = 1.74, p = 0.116 |
| GeMAPS | t = 2.45, p = 0.014 * | t = 17.24, p < 0.001 ** | t = 2.58, p = 0.030 * | t = 5.04, p = 0.001 ** | t = −0.49, p = 0.638 | t = 3.81, p = 0.004 * |
| eGeMAPS | t = 1.12, p = 0.292 | t = 12.50, p < 0.001 ** | t = 1.58, p = 0.150 | t = 5.36, p = 0.001 ** | t = 1.04, p = 0.328 | t = 1.22, p = 0.254 |
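
The t and p values above come from pairwise tests on cross-validation results. This section does not state which classifier each column is compared against, or the exact test variant. The sketch below assumes a standard paired t-test on the per-fold accuracies of two classifiers evaluated on the same 10 folds: t = mean(d) / (sd(d) / √n), with n − 1 degrees of freedom, where d are the per-fold differences. The fold accuracies are hypothetical, and the helper `paired_t` is illustrative.

```python
import math
from statistics import mean, stdev


def paired_t(acc_a, acc_b):
    """Paired t statistic for two classifiers scored on the same folds.

    Returns (t, degrees_of_freedom). A positive t means classifier A
    scored higher on average.
    """
    if len(acc_a) != len(acc_b) or len(acc_a) < 2:
        raise ValueError("need two equal-length lists with at least 2 folds")
    diffs = [a - b for a, b in zip(acc_a, acc_b)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("identical differences on every fold; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical per-fold accuracies (%) for two classifiers on 10 shared folds.
clf_a = [85.0, 86.0, 87.0, 88.0, 89.0, 85.0, 86.0, 87.0, 88.0, 89.0]
clf_b = [84.0] * 10
t, df = paired_t(clf_a, clf_b)
print(f"t({df}) = {t:.2f}")
```

This prints `t(9) = 6.36`. A two-sided p-value would then come from the t distribution with `df` degrees of freedom, for example `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available. Cross-validation training sets overlap, so corrected variants of this test are also in use. Which variant produced the values above is not stated here.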


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Doğdu, C.; Kessler, T.; Schneider, D.; Shadaydeh, M.; Schweinberger, S.R. A Comparison of Machine Learning Algorithms and Feature Sets for Automatic Vocal Emotion Recognition in Speech. Sensors 2022, 22, 7561. https://doi.org/10.3390/s22197561
