Denoising Single Images by Feature Ensemble Revisited

Abstract

:1. Introduction

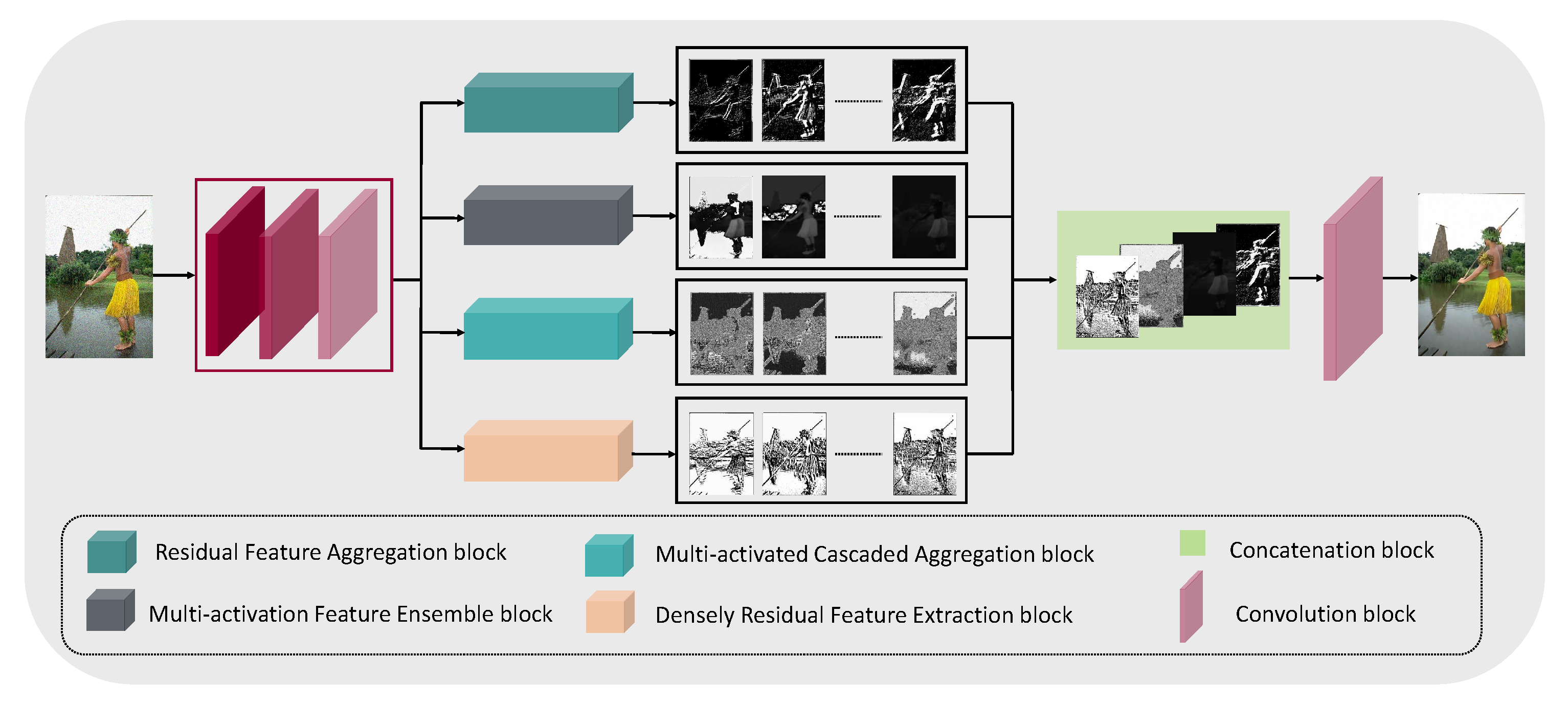

- We propose a shallow ensemble approach through feature concatenation to create a large array of feature combinations for low-level image recovery.

- Due to the ensemble of multiple modules, our model successfully returns fine details compared to previous data-driven studies.

- The parameter space is relatively small compared to the contemporary methods with a computationally fast inference time.

- Finally, the proposed study shows better performance with a different range of synthetic noise and real noise without the cartoonization and hallucination effect. See Figure 1.

2. Related Work

2.1. Filtering Based Schemes

2.2. Prior Based Schemes

2.3. Learning Based Schemes

3. Methodology

3.1. Baseline Supervised Architecture

3.2. Proposed Architecture

Initial Feature Block

3.3. Four Modules for Feature Refinement

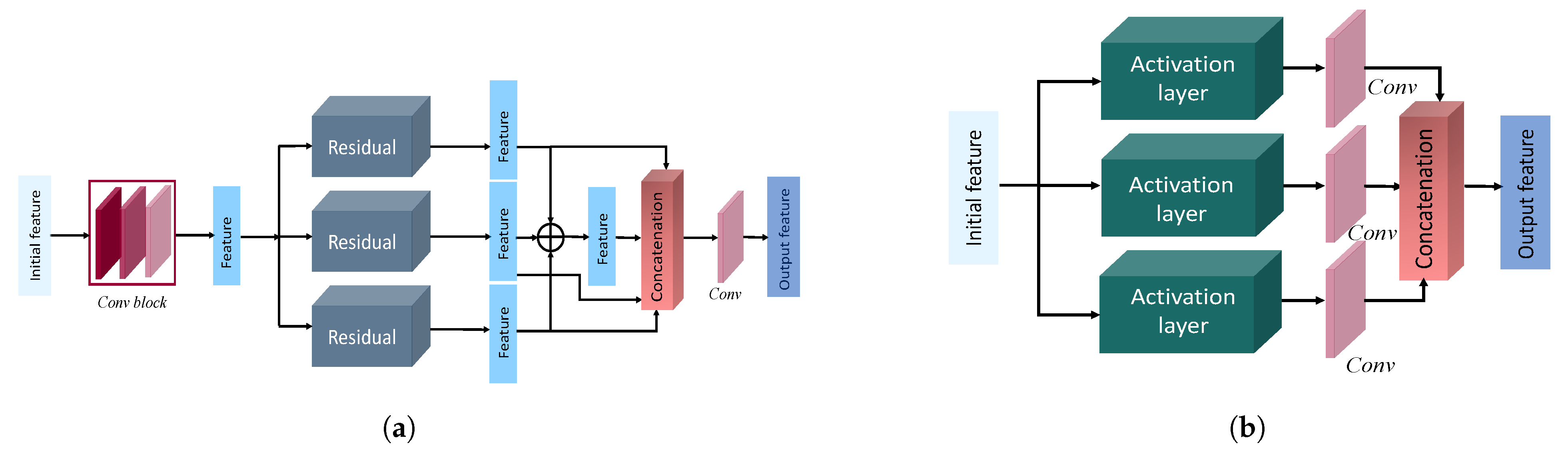

3.3.1. Residual Feature Aggregation Module

3.3.2. Multiactivation Feature Ensemble

3.3.3. Multiactivated Cascaded Aggregation

3.3.4. Densely Residual Feature Extraction

3.4. Loss Function

4. Experimental Results

4.1. Network Implementation and Training Set

4.2. Testing Set

- DND: DND [41] is a real-world image dataset consisting of 50 real-world noisy images. However, near noise-free counterparts are unavailable to the public. The corresponding server provides the PSNR/SSIM results for the uploaded denoised images.

- SIDD: SIDD [42] is another real-world noisy image dataset that provides 320 pairs of noisy images and near noise-free counterparts for training. This dataset follows a similar evaluation process as for the DND dataset.

- RN15: RN15 [26] dataset provides 15 real-world noisy images. Due to the unavailability of the ground truths, we only present the visual result of this dataset.

4.3. Denoising on Synthetic Noisy Images

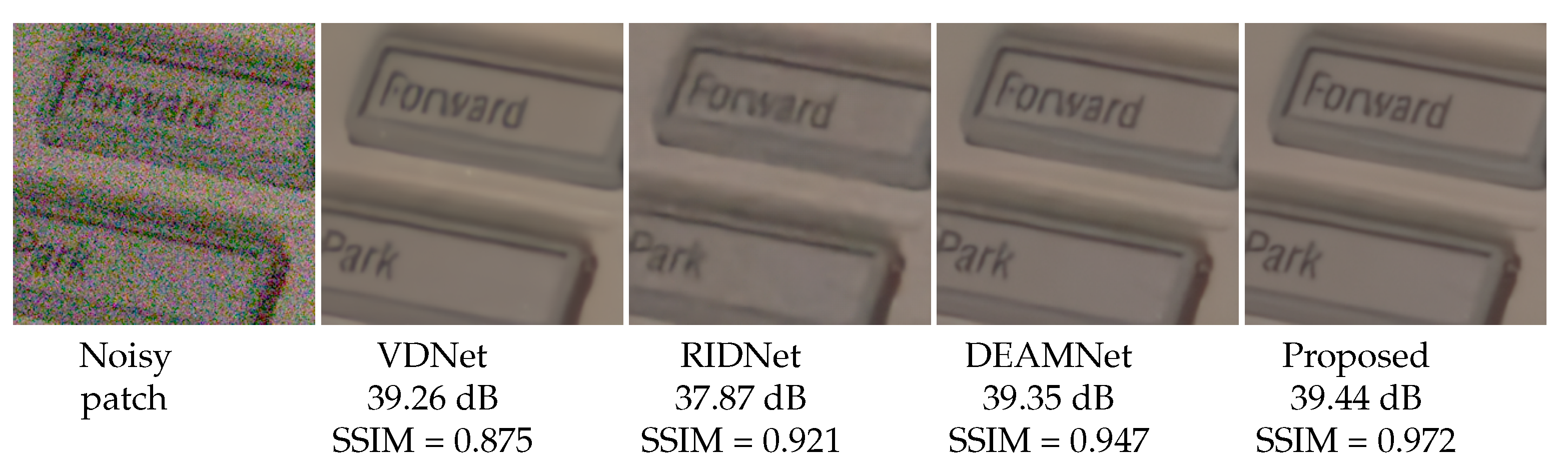

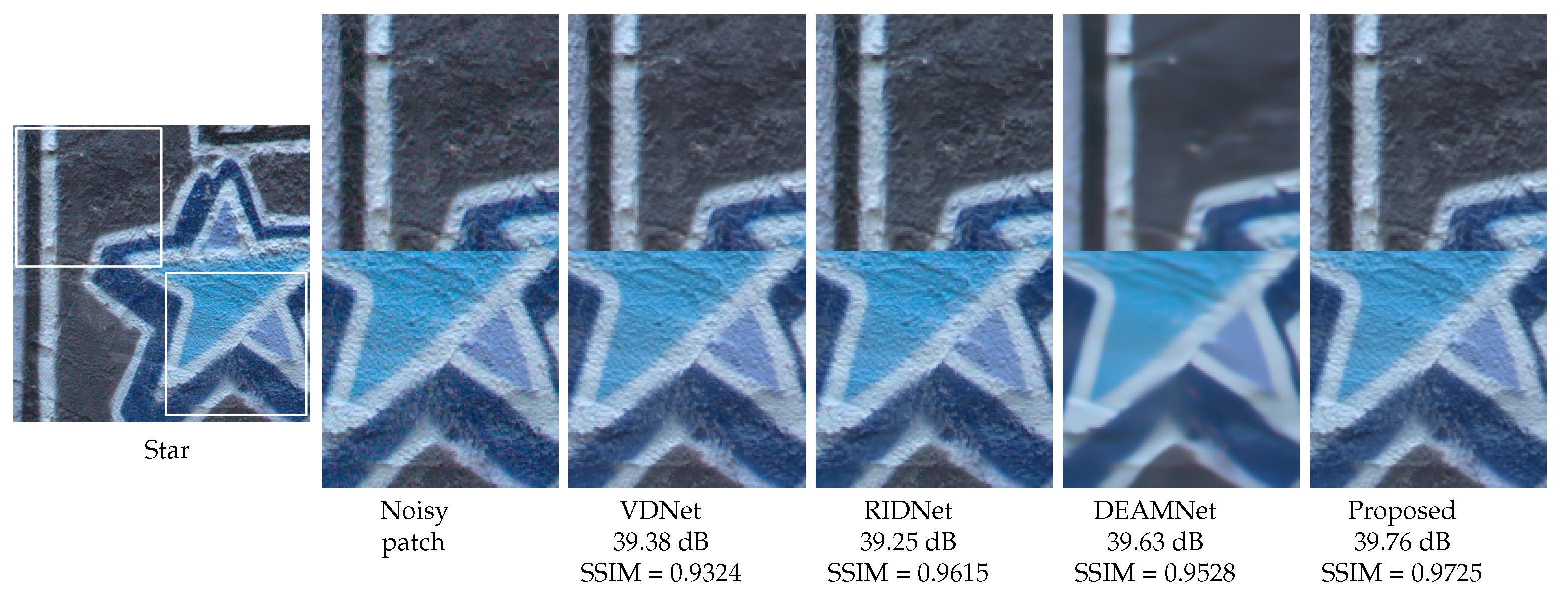

4.4. Denoising on Real-World Noisy Images

4.5. Computational Complexity

4.6. Ablation Study on Modules

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 60–65. [Google Scholar] [CrossRef]

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image Denoising by Sparse 3-D Transform-Domain Collaborative Filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095. [Google Scholar] [CrossRef] [PubMed]

- Chen, T.; Ma, K.K.; Chen, L.H. Tri-state median filter for image denoising. IEEE Trans. Image Process. 1999, 8, 1834–1838. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Benesty, J.; Huang, Y.; Doclo, S. New insights into the noise reduction Wiener filter. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1218–1234. [Google Scholar] [CrossRef]

- Anwar, S.; Barnes, N. Real Image Denoising with Feature Attention. In Proceedings of the IEEE International Conference on Computer Vision (ICCV-Oral), Seoul, Korea, 27 October–2 November 2019. [Google Scholar]

- Ouyang, J.; Adeli, E.; Pohl, K.M.; Zhao, Q.; Zaharchuk, G. Representation Disentanglement for Multi-modal MR Analysis. arXiv 2021, arXiv:2102.11456. [Google Scholar]

- Li, P.; Chen, B.; Ouyang, W.; Wang, D.; Yang, X.; Lu, H. GradNet: Gradient-guided network for visual object tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6162–6171. [Google Scholar]

- Ren, C.; He, X.; Wang, C.; Zhao, Z. Adaptive Consistency Prior Based Deep Network for Image Denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 8596–8606. [Google Scholar]

- Knaus, C.; Zwicker, M. Progressive Image Denoising. IEEE Trans. Image Process. 2014, 23, 3114–3125. [Google Scholar] [CrossRef]

- Xie, Q.; Zhao, Q.; Meng, D.; Xu, Z.; Gu, S.; Zuo, W.; Zhang, L. Multispectral Images Denoising by Intrinsic Tensor Sparsity Regularization. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1692–1700. [Google Scholar] [CrossRef]

- Elad, M.; Aharon, M. Image Denoising Via Sparse and Redundant Representations Over Learned Dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745. [Google Scholar] [CrossRef]

- Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Weighted Nuclear Norm Minimization with Application to Image Denoising. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869. [Google Scholar] [CrossRef]

- Xie, Y.; Gu, S.; Liu, Y.; Zuo, W.; Zhang, W.; Zhang, L. Weighted Schatten p-Norm Minimization for Image Denoising and Background Subtraction. IEEE Trans. Image Process. 2016, 25, 4842–4857. [Google Scholar] [CrossRef]

- Xie, T.; Li, S.; Sun, B. Hyperspectral Images Denoising via Nonconvex Regularized Low-Rank and Sparse Matrix Decomposition. IEEE Trans. Image Process. 2019, 29, 44–56. [Google Scholar] [CrossRef]

- Xu, J.; Zhang, L.; Zhang, D.; Feng, X. Multi-channel weighted nuclear norm minimization for real color image denoising. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1096–1104. [Google Scholar]

- Pang, J.; Cheung, G. Graph Laplacian Regularization for Image Denoising: Analysis in the Continuous Domain. IEEE Trans. Image Process. 2017, 26, 1770–1785. [Google Scholar] [CrossRef]

- Xu, L.; Lu, C.; Xu, Y.; Jia, J. Image Smoothing via L0 Gradient Minimization. ACM Trans. Graph. SIGGRAPH Asia 2011, 30, 1–12. [Google Scholar]

- Mahdaoui, A.E.; Ouahabi, A.; Moulay, M.S. Image Denoising Using a Compressive Sensing Approach Based on Regularization Constraints. Sensors 2022, 22, 2199. [Google Scholar] [CrossRef] [PubMed]

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155. [Google Scholar] [CrossRef] [PubMed]

- Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning Deep CNN Denoiser Prior for Image Restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3929–3938. [Google Scholar]

- Song, Y.; Zhu, Y.; Du, X. Dynamic Residual Dense Network for Image Denoising. Sensors 2019, 19, 3809. [Google Scholar] [CrossRef] [PubMed]

- Chen, Y.; Pock, T. Trainable nonlinear reaction diffusion: A flexible framework for fast and effective image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1256–1272. [Google Scholar] [CrossRef] [PubMed]

- Lefkimmiatis, S. Non-local color image denoising with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3587–3596. [Google Scholar]

- Bae, W.; Yoo, J.; Chul Ye, J. Beyond deep residual learning for image restoration: Persistent homology-guided manifold simplification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 145–153. [Google Scholar]

- Anwar, S.; Huynh, C.P.; Porikli, F. Chaining identity mapping modules for image denoising. arXiv 2017, arXiv:1712.02933. [Google Scholar]

- Lebrun, M.; Colom, M.; Morel, J.M. The noise clinic: A universal blind denoising algorithm. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 2674–2678. [Google Scholar] [CrossRef]

- Guo, S.; Yan, Z.; Zhang, K.; Zuo, W.; Zhang, L. Toward convolutional blind denoising of real photographs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1712–1722. [Google Scholar]

- Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a fast and flexible solution for CNN-based image denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622. [Google Scholar] [CrossRef]

- Chen, S.; Shi, D.; Sadiq, M.; Cheng, X. Image Denoising With Generative Adversarial Networks and its Application to Cell Image Enhancement. IEEE Access 2020, 8, 82819–82831. [Google Scholar] [CrossRef]

- Park, H.S.; Baek, J.; You, S.K.; Choi, J.K.; Seo, J.K. Unpaired image denoising using a generative adversarial network in X-ray CT. IEEE Access 2019, 7, 110414–110425. [Google Scholar] [CrossRef]

- Li, W.; Wang, J. Residual Learning of Cycle-GAN for Seismic Data Denoising. IEEE Access 2021, 9, 11585–11597. [Google Scholar] [CrossRef]

- Chen, S.; Xu, S.; Chen, X.; Li, F. Image Denoising Using a Novel Deep Generative Network with Multiple Target Images and Adaptive Termination Condition. Appl. Sci. 2021, 11, 4803. [Google Scholar] [CrossRef]

- Kim, Y.; Soh, J.W.; Park, G.Y.; Cho, N.I. Transfer learning from synthetic to real-noise denoising with adaptive instance normalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 3482–3492. [Google Scholar]

- Ramachandran, P.; Zoph, B.; Le, Q.V. Swish: A Self-Gated Activation Function. arXiv 2017, arXiv:1710.05941. [Google Scholar]

- Misra, D. Mish: A self regularized non-monotonic activation function. arXiv 2019, arXiv:1908.08681. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 1026–1034. [Google Scholar]

- Plotz, T.; Roth, S. Benchmarking denoising algorithms with real photographs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1586–1595. [Google Scholar]

- Abdelhamed, A.; Lin, S.; Brown, M.S. A High-Quality Denoising Dataset for Smartphone Cameras. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1692–1700. [Google Scholar] [CrossRef]

- Tian, C.; Xu, Y.; Li, Z.; Zuo, W.; Fei, L.; Liu, H. Attention-guided CNN for image denoising. Neural Netw. 2020, 124, 117–129. [Google Scholar] [CrossRef] [PubMed]

- Yue, Z.; Yong, H.; Zhao, Q.; Meng, D.; Zhang, L. Variational Denoising Network: Toward Blind Noise Modeling and Removal. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Vancouver, BC, Canada, 2019; pp. 1690–1701. [Google Scholar]

- Zhang, K.; Li, Y.; Zuo, W.; Zhang, L.; Van Gool, L.; Timofte, R. Plug-and-Play Image Restoration with Deep Denoiser Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6360–6376. [Google Scholar] [CrossRef] [PubMed]

| Method | Metrics | 15 | 25 | 50 | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| BSD68 | Kodak24 | Urban100 | BSD68 | Kodak24 | Urban100 | BSD68 | Kodak24 | Urban100 | ||

| BM3D [2] | PSNR | 32.37 | 31.07 | 32.35 | 29.97 | 28.57 | 29.70 | 26.72 | 25.62 | 25.95 |

| SSIM | 0.8952 | 0.8717 | 0.9220 | 0.8504 | 0.8013 | 0.8777 | 0.7676 | 0.6864 | 0.7791 | |

| WNNM [12] | PSNR | 32.70 | 31.37 | 32.97 | 30.28 | 28.83 | 30.39 | 27.05 | 25.87 | 26.83 |

| SSIM | 0.8982 | 0.8766 | 0.9271 | 0.8577 | 0.8087 | 0.8885 | 0.7775 | 0.6982 | 0.8047 | |

| DnCNN [19] | PSNR | 32.86 | 31.73 | 31.86 | 30.06 | 28.92 | 29.25 | 27.18 | 26.23 | 26.28 |

| SSIM | 0.9031 | 0.8907 | 0.9255 | 0.8622 | 0.8278 | 0.8797 | 0.7829 | 0.7189 | 0.7874 | |

| FFDNet [28] | PSNR | 32.75 | 31.63 | 32.43 | 30.43 | 29.19 | 29.92 | 27.32 | 26.29 | 26.28 |

| SSIM | 0.9027 | 0.8902 | 0.9273 | 0.8634 | 0.8289 | 0.8886 | 0.7903 | 0.7245 | 0.8057 | |

| IrCNN [20] | PSNR | 31.67 | 33.60 | 31.85 | 29.96 | 30.98 | 28.92 | 26.59 | 27.66 | 25.21 |

| SSIM | 0.9318 | 0.9247 | 0.9493 | 0.8859 | 0.8799 | 0.9101 | 0.7899 | 0.7914 | 0.8168 | |

| ADNet [43] | PSNR | 32.98 | 31.74 | 32.87 | 30.58 | 29.25 | 30.24 | 27.37 | 26.29 | 26.64 |

| SSIM | 0.9050 | 0.8916 | 0.9308 | 0.8654 | 0.8294 | 0.8923 | 0.7908 | 0.7216 | 0.8073 | |

| RIDNet [5] | PSNR | 32.91 | 31.81 | 33.11 | 30.60 | 29.34 | 30.49 | 27.43 | 26.40 | 26.73 |

| SSIM | 0.9059 | 0.8934 | 0.9339 | 0.8672 | 0.8331 | 0.8975 | 0.7932 | 0.7267 | 0.8132 | |

| VDN [44] | PSNR | 33.90 | 34.81 | 33.41 | 31.35 | 32.38 | 30.83 | 28.19 | 29.19 | 28.43 |

| SSIM | 0.9243 | 0.9251 | 0.9339 | 0.8713 | 0.8842 | 0.8361 | 0.8014 | 0.7213 | 0.8212 | |

| DEAMNet [8] | PSNR | 33.19 | 31.91 | 33.37 | 30.81 | 29.44 | 30.85 | 27.74 | 26.54 | 27.53 |

| SSIM | 0.9097 | 0.8957 | 0.9372 | 0.8717 | 0.8373 | 0.9048 | 0.8057 | 0.7368 | 0.8373 | |

| Proposed | PSNR | 33.85 | 32.90 | 33.97 | 31.32 | 30.67 | 31.52 | 29.02 | 28.12 | 28.25 |

| SSIM | 0.9603 | 0.9517 | 0.9621 | 0.9150 | 0.9246 | 0.9241 | 0.8831 | 0.8782 | 0.8755 |

| Dataset | Metrics | BM3D | DnCNN | FFDNet | VDN | RIDNet | DEAMNet | Proposed |

|---|---|---|---|---|---|---|---|---|

| SIDD [42] | PSNR | 25.65 | 23.66 | 29.30 | 39.26 | 37.87 | 39.35 | 39.55 |

| SSIM | 0.685 | 0.583 | 0.694 | 0.944 | 0.943 | 0.955 | 0.964 | |

| DnD [41] | PSNR | 34.51 | 32.43 | 37.61 | 39.38 | 39.25 | 39.63 | 39.76 |

| SSIM | 0.8507 | 0.7900 | 0.9115 | 0.9518 | 0.9528 | 0.9531 | 0.9617 |

| Method | Size | Size | Size | Parameters |

|---|---|---|---|---|

| BM3D [2] | 0.76 | 3.12 | 12.82 | - |

| WNNM [12] | 210.26 | 858.04 | 3603.68 | - |

| DnCNN [19] | 0.01 | 0.05 | 0.16 | 558 k |

| IrCNN [20] | 0.012 | 0.038 | 0.146 | - |

| FFDNet [28] | 0.01 | 0.05 | 0.11 | 490 k |

| AINDNet [33] | 0.05 | 0.03 | 0.80 | 13,764 k |

| ADNet [43] | 0.02 | 0.06 | 0.20 | 519 k |

| VDN [44] | 0.04 | 0.07 | 0.19 | 7817 k |

| RIDNet [5] | 0.07 | 0.21 | 0.84 | 1499 k |

| DEAMNet [8] | 0.05 | 0.19 | 0.73 | 2225 k |

| Proposed | 0.031 | 0.11 | 0.42 | 846 k |

| Dataset | PSNR and SSIM | RFA Module Removed | MFE Module Removed | MCA Module Removed | DRFE Module Removed |

|---|---|---|---|---|---|

| BSD68 | 33.85 | 30.65 | 28.66 | 29.30 | 30.26 |

| 0.9603 | 0.885 | 0.783 | 0.824 | 0.855 | |

| Kodak24 | 32.90 | 29.51 | 27.43 | 28.61 | 30.38 |

| 0.9517 | 0.7507 | 0.6900 | 0.8115 | 0.8518 | |

| Urban100 | 33.97 | 30.48 | 27.75 | 30.54 | 31.38 |

| 0.9621 | 0.7824 | 0.6192 | 0.7822 | 0.8766 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fahim, M.A.N.I.; Saqib, N.; Siam, S.K.; Jung, H.Y. Denoising Single Images by Feature Ensemble Revisited. Sensors 2022, 22, 7080. https://doi.org/10.3390/s22187080

Fahim MANI, Saqib N, Siam SK, Jung HY. Denoising Single Images by Feature Ensemble Revisited. Sensors. 2022; 22(18):7080. https://doi.org/10.3390/s22187080

Chicago/Turabian StyleFahim, Masud An Nur Islam, Nazmus Saqib, Shafkat Khan Siam, and Ho Yub Jung. 2022. "Denoising Single Images by Feature Ensemble Revisited" Sensors 22, no. 18: 7080. https://doi.org/10.3390/s22187080

APA StyleFahim, M. A. N. I., Saqib, N., Siam, S. K., & Jung, H. Y. (2022). Denoising Single Images by Feature Ensemble Revisited. Sensors, 22(18), 7080. https://doi.org/10.3390/s22187080