Abstract

An autonomous navigation method based on the fusion of INS (inertial navigation system) measurements with the line-of-sight (LOS) observations of space targets is presented for unmanned aircrafts. INS/GNSS (global navigation satellite system) integration is the conventional approach to achieving the long-term and high-precision navigation of unmanned aircrafts. However, the performance of INS/GNSS integrated navigation may be degraded gradually in a GNSS-denied environment. INS/CNS (celestial navigation system) integrated navigation has been developed as a supplement to the GNSS. A limitation of traditional INS/CNS integrated navigation is that the CNS is not efficient in suppressing the position error of the INS. To solve the abovementioned problems, we studied a novel integrated navigation method, where the position, velocity and attitude errors of the INS were corrected using a star camera mounted on the aircraft in order to observe the space targets whose absolute positions were available. Additionally, a QLEKF (Q-learning extended Kalman filter) is designed for the performance enhancement of the integrated navigation system. The effectiveness of the presented autonomous navigation method based on the star camera and the IMU (inertial measurement unit) is demonstrated via CRLB (Cramer–Rao lower bounds) analysis and numerical simulations.

1. Introduction

Recently, unmanned aircrafts flying at altitudes ranging from 20–100 km above sea level have received increasing interest in both the aeronautic and astronautic fields with respect to various applications [1,2,3]. As one of the key technologies of unmanned aircrafts, the precise navigation technique is essential for ensuring the feasibility and efficiency of the onboard guidance and control system [4,5,6].

Currently, the INS/GNSS integrated navigation system is widely used to provide information about the position, velocity and attitude of unmanned aircrafts, such as X-43A, X-51A and HTV-2. The INS/GNSS integration takes advantage of the consistently high accuracy of the GNSS and the short-term stability of the INS, which is promising for the achievement of precise navigation parameters [7,8,9]. However, the performance of INS/GNSS integrated navigation is easily affected by the disturbance of the GNSS signals. The use of INS alone is limited, as the navigation error increases with time, and it is sensitive to the initial errors [10,11].

To enhance the navigation performance, many researchers have focused on other autonomous navigation approaches [12,13,14,15,16]. A typical method is INS/CNS integration, where the accumulated errors of the gyroscopes in the IMU are compensated by the measurements of star sensors mounted on the aircraft [17,18,19]. Compared with the GNSS, one of the main characteristics of INS/CNS integrated navigation is that it relies on the optical signals from celestial bodies, such that the high-autonomous and anti-disturbance abilities of the navigation system are retained. The main limitation of traditional INS/CNS integrated navigation is that the position error accumulation caused by the bias of the accelerators in the IMU cannot be suppressed efficiently. Thus, it also suffers from an increasing number of errors over long flights. Achieving precise navigation information for unmanned aircrafts with a large flight envelope in the GNSS-denied environment remains a challenging problem.

In order to address this problem, this paper presents an INS/Vision integrated navigation method based on the star camera, which is mounted on the aircraft in order to measure the LOS vectors of space targets with the available position-related information, such as low-orbit satellites with a known ephemeris [20]. Different from the traditional star sensor, the star camera captures images of both the low-orbit satellites and the background stars in the field of view (FOV), and the LOS vectors of the satellites in the inertial frame can be extracted directly using the star catalogue and the least square (LS) algorithm. The current star cameras have the ability to observe low-orbit satellites and stars simultaneously during daytime when attached to unmanned aircrafts in near space. The measurements of the IMU and the star camera can be fused with a navigation filter in order to estimate the kinematic state of the aircraft.

The benefits of the presented navigation method are rooted in three aspects. Firstly, for unmanned aircraft in near space, the observation of the space targets, which are widely distributed within the celestial sphere, is less sensitive to the effect of clouds in comparison with landmark-based navigation [21,22]. Secondly, as it is easier to accurately measure the LOS vector of the space target than the direction vector of the Earth center using current technology, the presented method is promising for the achievement of a high rate of accuracy compared to navigation approaches based on the starlight and horizon reference [23,24]. Thirdly, both the position and the attitude information of the unmanned aircrafts can be measured with the star camera, which is able to observe the space target and the starlight simultaneously.

The main contributions of this work are as follows. Firstly, a novel autonomous navigation method is presented to determine the position, velocity and attitude of the unmanned aircrafts. Secondly, the theoretical accuracy of the presented method is analyzed through the calculation of the Cramer-Rao lower bounds (CRLB) based on the visibility analysis of the space targets. Thirdly, a Q-learning extended Kalman filter (QLEKF), which was designed to improve the navigation accuracy of space-target-based INS/Vision integration, is discussed.

This paper is divided into five sections. Following this introduction, the state and observation models of space-target-based INS/Vision integrated navigation are established in Section 2. Then, the method of the visibility analysis is outlined in Section 3. In addition, the QLEKF structure and an explanation of the learning process are given in Section 4. To demonstrate the performance of the presented method, a CRLB analysis and simulation study are described in Section 5. Finally, a brief conclusion is offered in Section 6.

2. System Model

2.1. Main Idea

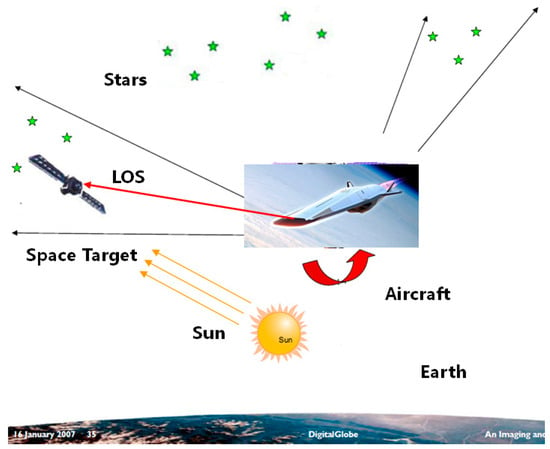

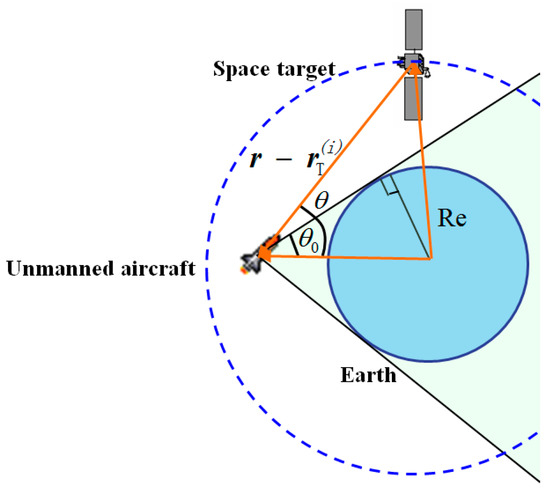

The presented navigation method is based on the observation of a space target LOS vector, as shown in Figure 1. The LOS vector of the space target in the inertial frame is acquired through the processing of the image captured by the star camera, which contains both the space targets with a known ephemeris and the background stars in the star catalog. As the observation of the space target LOS vector can be implemented by the star camera alone, it does not depend on the misalignments between the different instruments, such that the possible sources of error are reduced. The observations of the space target LOS vector and the starlight vector are used to determine the position and attitude of the aircraft. It should be mentioned that the starlight vectors of the stars in the inertial frame can be calculated with an accuracy of less than 1 mas. The number and magnitude of the observed stars depends on the FOV and the sensitivity of the star camera. Generally, a star camera with an FOV on the order of and a sensitivity of about 8 Mv is feasible for navigation.

Figure 1.

The measurement of the space target LOS vector.

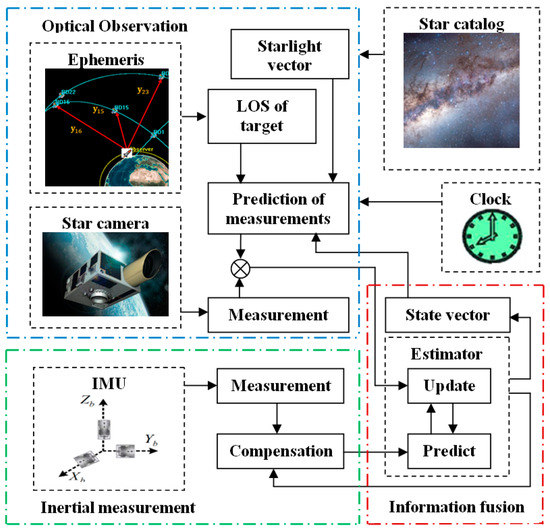

The space-target-based INS/Vision integrated navigation system is mainly composed of three parts: the star camera, the IMU and the navigation filtering algorithm, as shown in Figure 2. The IMU is composed of three orthogonal accelerometers and three orthogonal gyroscopes, which are used to measure the angular rate and the specific force of the unmanned aircraft.

Figure 2.

Space-target-based INS/Vision integrated navigation method.

The prediction of the aircraft’s position, velocity and attitude are obtained from the measurements taken by the gyroscopes and the accelerometers. The navigation filter, which is designed based on the state model and the observation model of the navigation system, is implemented in order to update the prediction of the position, velocity and attitude based on the measurements by the star camera. As the position, velocity and attitude of the unmanned aircraft are predicted using the IMU, with a high data update rate, the real-time accuracy can be guaranteed in the case when the exposure time of the star camera is recorded accurately. For the implementation of the navigation method, multiple space targets should be tracked by the ground stations, and the ephemeris of the space targets should be undated before the unmanned aircraft takes off. Then, the positions of the space targets can be obtained through orbital propagation during the flight of the aircraft.

2.2. Model for the Attitude Determination

The star camera and the gyroscopes are integrated in order to generate the attitude solution of unmanned aircrafts via the observation of the starlight so as to correct the attitude error and the gyroscope drift. The quaternion is used to describe the attitude of the aircraft [25,26]. For the attitude determination system composed of the star camera and the gyroscopes, the state vector is considered as:

where is the estimation error of the gyroscope drift, and are the gyroscope drift and its estimate, respectively, and the subscript k is the time label. is a part of the error quaternion , where . The operator represent the Euclidean norm. The error quaternion represents the difference between the estimated quaternion and the true quaternion , which is expressed as:

or

The operator represents the quaternion product, which is defined as:

with

where , is a cross-product matrix defined by

The discrete-time state model of the integrated navigation system for the attitude determination is expressed as:

with

where is the time step for the state prediction and is the estimated angular velocity, which can be obtained from the gyroscope measurements after the compensation for the drift. The denotation is formulated as:

The process noise is modeled to obtain zero-mean Gaussian white noise with the covariance matrix .

For simplicity, as the star sensor is mounted on the aircraft, the aircraft body frame and the star camera frame are not distinguished in this paper. For the attitude determination, the starlight vector on the aircraft body frame is observed using the star camera. The measurement is considered as:

where and () are the starlight vectors of the j-th star in the aircraft body frame and its estimate, while N is an integer, which denotes the number of visible stars in the FOV of the star camera.

To construct an observation model which relates the state vector to the measurements, the following function of the quaternion is introduced:

where is the starlight vector of the j-th star in the inertial frame, which can be calculated according to the star catalog, while is the attitude transformation matrix from the inertial frame to the aircraft body frame, which is formulated as:

Accordingly, is calculated as:

Substituting (3) into (12), we have:

Inserting (14) into (15) yields:

As the measurement accuracy of the star camera is on the order of a few arcsec, neglecting the high-order terms, the matrix is simplified as:

From (16) and (17), we obtain:

where

According to (11) and (18), for synchronously observed multiple stars, the measurement model is expressed as:

where

The measurement noise is modeled as Gaussian white noise with the zero mean and covariance matrix .

Using the system model shown in (8) and measurement model shown in (20), it is convenient to design a Kalman filter (KF) in order to estimate the state vector by means of the measurement . Then, the estimates of and are used to correct the attitude quaternion and gyroscope drift . The procedure of the attitude determination using the Kalman filtering algorithm is similar to that in [27], and it is omitted here for simplicity.

2.3. Model for the Position Estimation

In space-target-based INS/Vision integration, the space target LOS vectors observed using the star camera are used to correct the INS position and velocity errors in the inertial frame. The state vector for the position estimation consists of position vector , velocity vector and accelerometer bias , which is expressed as:

The state model is established to describe the dynamics of the state vector, which is represented as:

where is the process noise with the covariance matrix . The discretized nonlinear function is formulated as:

where is the time step for the state prediction, can be obtained through the method shown in the previous sub-section, is the output of the accelerometers in the aircraft body frame, and is a function used to describe the gravitational acceleration, which can be written as:

where is the gravitational constant of Earth and the function encapsulates the perturbation accelerations of the aircraft other than those that are cause by the two-body gravitational acceleration. The Jacobian matrix of the state model is derived as:

with

Let

For simplicity, only the two-body gravitational acceleration is taken into consideration. The elements in the matrix shown in (28) are written as:

The space target LOS vector in the inertial frame is used as the measurement for the position estimation. Accordingly, the measurement model is expressed as:

with

where is the measurement of the i-th space target LOS vector, is the position vector of the i-th space target, which can be calculated according to the known ephemeris and orbit propagation, and is the measurement noise with the covariance matrix . The corresponding Jacobian matrix is:

According to the state model shown in (23) and the measurement model shown in (38), the extended Kalman filter (EKF) can be designed to fuse the accelerometer and star camera data. Furthermore, an advanced filtering algorithm, which is presented in Section 4, can be used to enhance the navigation performance.

3. Principle of Visibility Analysis

The visibility analysis of the space targets is the basis for the performance evaluation of the integrated navigation method. In practice, only the visible space targets can be observed with the star camera in order to provide valuable navigation-related information. The user should confirm whether certain space targets are visible to the aircraft before the implementation of the navigation scheme. To facilitate the application, certain principles used to analyze the visibility of the space targets are described in this section.

First, for a space target to be visible, its apparent magnitude should be sufficient so as to be detected with the star camera, i.e.,

where is the apparent magnitude of the i-th space target and is a magnitude bound specified by the sensitivity of the star camera. To simplify the notation, the time label k is omitted in this section. The apparent magnitude can be calculated as:

where is the absolute magnitude of the space target, which is approximated by:

where is the apparent magnitude of Sun, is the observed body radius, is the body albedo of the space target and is an astronomical unit. is the position vector of Sun. is a function to describe the integration of the reflected light, which is approximated by:

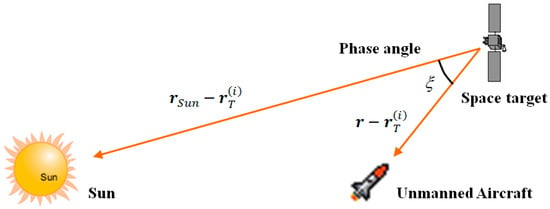

where is the sun–target–aircraft angle of the i-th space target, which is expressed as:

It describes the relative positions of the sun, the space target and the unmanned aircraft, as shown in Figure 3.

Figure 3.

Relative positions of the Sun, space target and unmanned aircraft.

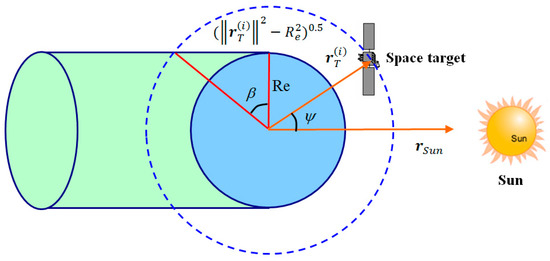

Secondly, a necessary condition for the visibility of the space target is that it is not hidden by the shadow of Earth, which can be judged with the following condition:

where is the Sun–Earth–target angle of the i-th target, which is expressed as:

From Figure 4, the angle bound is calculated as:

where is the Earth’s radius. The space target is illuminated by the Sun and can be seen as a candidate observation object when it is not within the shadow of the Earth.

Figure 4.

Earth’s shadow in the orbit of space target.

Thirdly, for the space target to be visible, it must not be occulted by celestial bodies, such as the Earth, Sun and Moon. A space target is believed to be occulted when its LOS vector points to a celestial body. Generally, the LOS vector of a space target cannot be measured effectively by the star camera in this case, as the images of the space target and the celestial body overlap. For example, the relative positions of the space target, the aircraft and Earth are shown in Figure 5.

Figure 5.

Relative positions of the Earth, aircraft and target.

From the figure, to judge whether the space target is occulted by the Earth, the following condition is given:

where is the Earth–aircraft–target angle, which is expressed as:

The angle bound is calculated as:

Similar conditions can be derived to judge whether the space target is occulted by the Sun or Moon.

4. Q-Learning Extended Kalman Filter

4.1. Q-Learning for the Filter Design

In this section, we describe an advanced navigation filter that was designed to estimate the position and velocity of the unmanned aircraft using the IMU and the star camera mounted on the aircraft in cases where the GNSS is unavailable. The EKF is a classic algorithm used to fuse the measurements of different sensors. An important procedure for the optimization of the EKF is the fine-tuning of the filtering parameters, such as the noise covariance matrices. However, the pre-determined noise covariance matrices based on the prior information may be not appropriate for aircrafts during flight, which will deteriorate the state estimation. In order to address this problem, we attempted to tune the process noise covariance with the measurement data online using the Q-learning approach so as to achieve a higher accuracy of the navigation system.

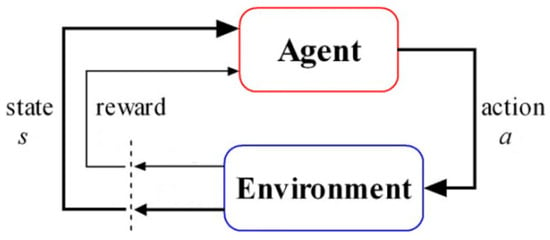

The Q-learning is a kind of reinforcement learning (RL) approach for solving sequential decision problems, where the agent interacts with an initially unknown environment and optimizes its behavior in order to maximize its cumulative reward [28,29,30,31]. Q-learning has received considerable attention, with many successful applications in various fields, such as gaming, robots, industrial process control and network management. Figure 6 illustrates the main components of Q-learning. An agent takes action in the environment described by the state , which may cause the change in the environment’s state (from to ), and receives an immediate reward from the environment as a feedback on the action. The learning objective is to modify the action selection policy such that the cumulative reward, which is represented by the Q function , is maximized. Q-learning has been studied as an important data-based approach for adaptive control and optimal filters and is preferred for practical systems with uncertain models [32,33,34].

Figure 6.

Main components of the iterative learning procedure.

In this study, to deal with the continuous state and action spaces, a Q-learning method with function approximation is adopted, where the Q function is expressed as:

with

where () are basis functions, () are weights and the subscript is the number of iterations. In this paper, the basis function is chosen as:

where , , and are the parameters of the basis function. It should be mentioned that the choice of the basis function is determined by the user, and a poor choice may lead to a poor filtering performance. In this study, the basis function is designed with the structure similar to that in [35], such that the appropriate action can be activated. The incremental weight update rule of Q-learning is given by:

where is the learning rate, is the discount factor and and are the tuning parameters. It is easy to observe from the previous equations that:

or

The convergence of the iterative Q function is studied in the following theorem, which can help readers to comprehend the key idea of the learning procedure.

Theorem 1:

Considering the iterative Q function sequence, if the initial Q functionsatisfies the following condition:

and there exist the constantsand, such that the following inequalities hold:

where

then the iterative Q functionconverges to the optimal Q functionas, i.e.,

where the functionis formulated as:

The proof of the theorem is provided in Appendix A. It shows that, under certain conditions, the iterative Q function is convergent to the optimal Q function , which represents the maximum cumulative reward. Generally, if the constants and are sufficiently large, and is sufficiently small, the conditions shown in (59) and (60) can be satisfied.

4.2. Filter Algorithm

Based on the system model for space-target-based INS/Vision integrated navigation, we designed the QLEKF algorithm, where the Q-learning is coupled with the EKF for the fine-tuning of the filter parameters with the objective of improving the estimation accuracy. The process noise covariance matrix was chosen for tuning, as it is crucial for calculating the filtering gain for the information contained in the system model and for the measurement to be utilized reasonably. In the Q-learning approach, the state is related to the current value of the process noise covariance matrix. For example, it can be assigned as 0 for the nominal noise covariance matrix. The action is related to the magnitude so as to enlarge or reduce the current process noise covariance matrix. It can be assigned as different numbers, such as 0, 1, 2 …, for different degrees of tuning. The reward is constructed based on the innovation of the EKF, which is a well-known indication of the estimation accuracy of the filter. Generally, a smaller innovation indicates a superior filtering performance, and vice versa.

For the system model shown in (23) and (38), the QLEKF algorithm is formulated in Algorithm 1. For simplicity, the subscript of the weight vector , representing the number of iterations, is omitted here.

| Algorithm1: Q-learning extended Kalman filter |

| 1: , Initialization |

| 2: k 0 |

| 3: |

| 4: for each period, do |

| 5: for all , do |

| 6: , |

| 7: for , do |

| 8: |

| 9: Benchmark filter |

| 10: Exploring filter |

| 11: Main filter |

| 12: |

| 13: |

| 14: end for |

| 15: Calculation of Reward |

| 16: Update of weight |

| 17: , Resetting of exploring filter |

| 18: end for |

| 19: Selection of the best action |

| 20: end for |

| 21: return and Result of state estimation |

In Algorithm 1, is the pre-determined action space to be explored, is a positive number representing the time interval for the calculation of the immediate reward , and are the state estimate and its corresponding error covariance matrix of the main filter, and is the innovation. Note that, in regard to the main filter, the process noise covariance matrix tuned through Q-learning is used instead of in the system model.

It can be seen from the algorithm that there are another two parallel filters in addition to the main filter, where the benchmark filter and the exploring filter are distinguished by the superscripts B and S. The difference between the benchmark filter and the exploring filter is that the process noise covariance matrix of the benchmark filter is obtained from the prior knowledge of the system, while that of the exploring filter is altered by enlarging or reducing .

The statistical values of the innovations obtained from the benchmark filter and the exploring filter are compared in order to construct the immediate reward , which indicates whether the action is preferable for improving the filtering performance. Note that the estimation of the exploring filter is reset after each update of the weight vector to avoid the impacts of historical data on the evaluation of the action. An appropriate action for tuning the process noise covariance matrix of the main filter is determined from the approximated Q function . The output of the QLEKF is the estimation result of the main filter.

For clarity, the subroutine of the EKF is shown in Algorithm 2.

| Algorithm 2: Extended Kalman filter |

| 1: function |

| Prediction |

| Update |

| 8: return |

| 9: end function |

In Algorithm 2, denotes the Kalman gain. It is easy to see from the algorithm that the EKF is designed based on the system model shown in Section 2.3. The innovation , as the output of Algorithm 2, is not used in the next iteration, but it is used to construct the immediate reward in Algorithm 1.

5. Simulations

5.1. Simulation Conditions

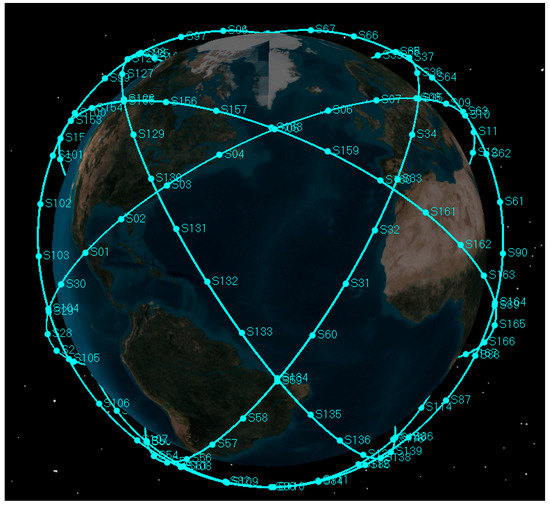

Simulations were performed to evaluate the performance of the space-target-based INS/Vision integrated navigation. An ideal trajectory was produced for the unmanned aircraft with an altitude of about 30 km and velocity of about 680 m/s, including the true position, velocity and attitude. The noises of the IMU and the star camera were added to the ideal values to obtain the simulated measurement data. For the data generation of the gyroscope, the angle random walk, the rate random walk and the bias were taken into consideration. The LEO satellites (low Earth orbit) with a semi-major axis of 6938 km and inclination of were seen as the observed space targets. The construction of the satellite constellation is shown in Figure 7.

Figure 7.

Satellite constellation in the simulation scenario.

The parameters for the integrated navigation simulation are listed in Table 1.

Table 1.

Basic simulation configuration.

It should be pointed out that the estimation performance and computational effort of the algorithm depend on the QLEKF parameters. In particular, it can be seen from the simulation results that the QLEKF parameters shown in Table 1 yield an improved performance, and the convenience of calculation is retained. Further works are planned in which we will perform a rigorous comparison analysis for the estimation performance using different QLEKF parameters. The absolute position, velocity and attitude of the aircraft are estimated with the method shown in Section 2 and Section 4 in cases where the positions of the space targets are known. The comparison between the state estimation and the ideal trajectory yields the navigation results.

5.2. CRLB Based on the Visibility Analysis

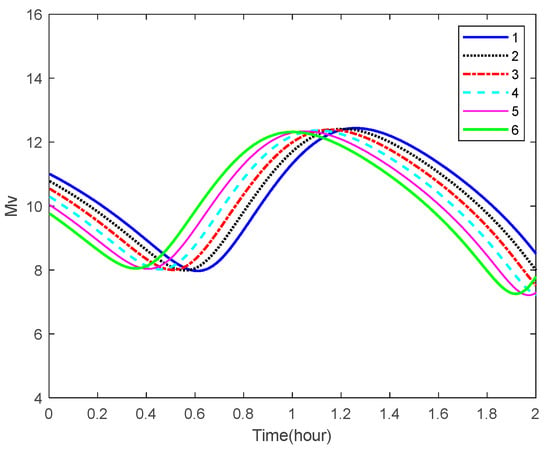

The visibility of the space targets for the star camera on the aircraft is analyzed using the principles shown in Section 3. The curves of the apparent magnitude of some space targets over time are shown in Figure 8. It can be seen from the figure that, as the specified sensitivity of the star camera in Algorithm 1 is 8 Mv, some space targets are bright enough to be observed in certain areas of the orbit.

Figure 8.

Apparent magnitudes of the space targets.

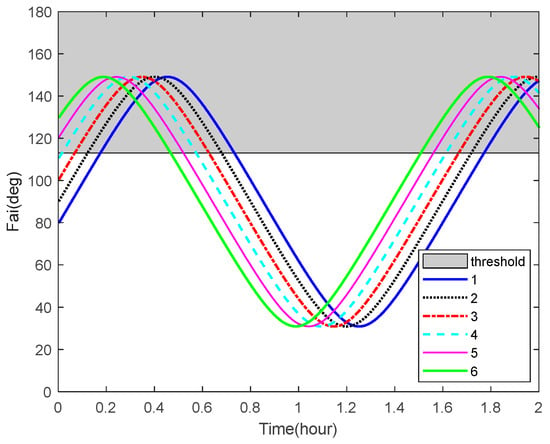

The curves of the Sun-Earth-target angles of some space targets over time are shown in Figure 9. The angle bound , representing Earth’s shadow, is plotted as the shadow in the figure. From this result, we can see that the space targets are not in Earth’s shadow for most of the time.

Figure 9.

Sun–Earth–target angles and the corresponding bounds.

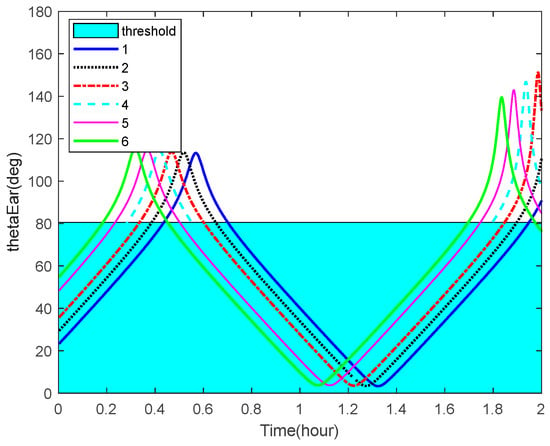

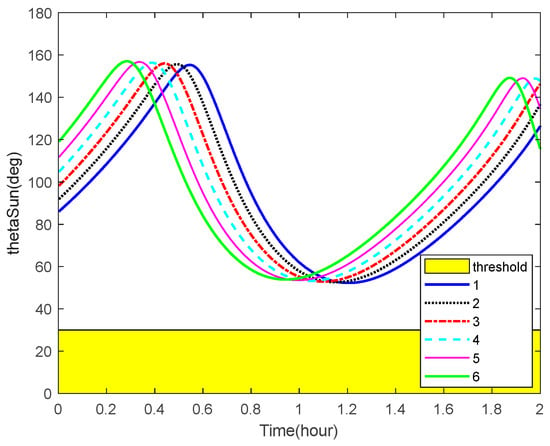

The curves of the Earth-aircraft-target angles, Sun-aircraft-target angles of some space targets over time and the corresponding angle bounds are shown in Figure 10 and Figure 11, respectively. The figures illustrate that, sometimes, the space targets are not occulted by the Earth or Sun.

Figure 10.

Earth-aircraft-target angles and the corresponding bounds.

Figure 11.

Sun-aircraft-target angles and the corresponding bounds.

Based on the previous analysis, the potential performance of the presented navigation method was evaluated through the CRLB, which was derived from the system model. The CRLB provides a theoretical bound on the achievable accuracy of the navigation system. Thus, it is useful to study the feasibility of a novel navigation scheme. The calculation procedure of the CRLB can be found in the literature [36,37].

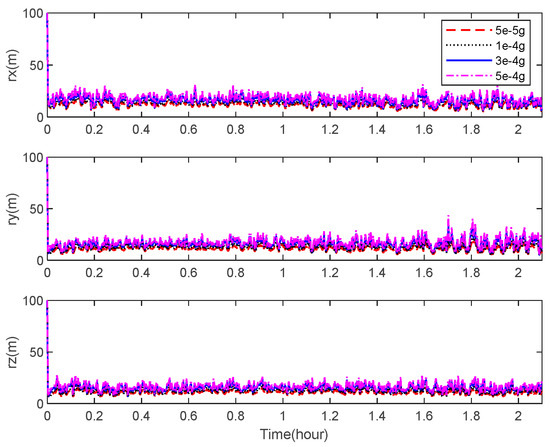

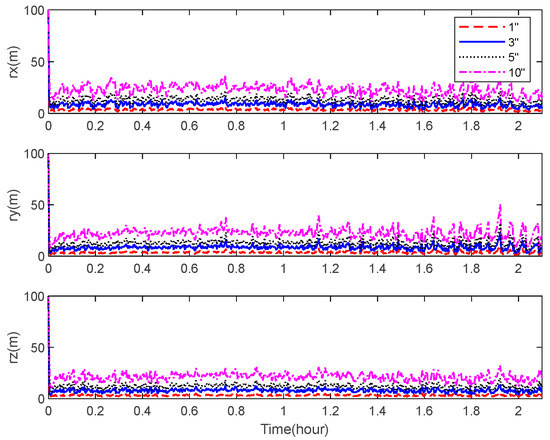

Figure 12 and Figure 13 describe the theoretical bounds of the three-axis position estimation errors of the integrated navigation system with different noise levels of the accelerometer and the star camera in cases where only the visible space targets are observed. It indicates that a high navigation performance is achievable using the state-of-the-art sensors by applying the space-target-based INS/Vision integrated navigation method.

Figure 12.

CRLB for the position estimation with different accelerometer noise levels.

Figure 13.

CRLB for the position estimation with different star camera noise levels.

5.3. Simulation Results of the Navigation Filter

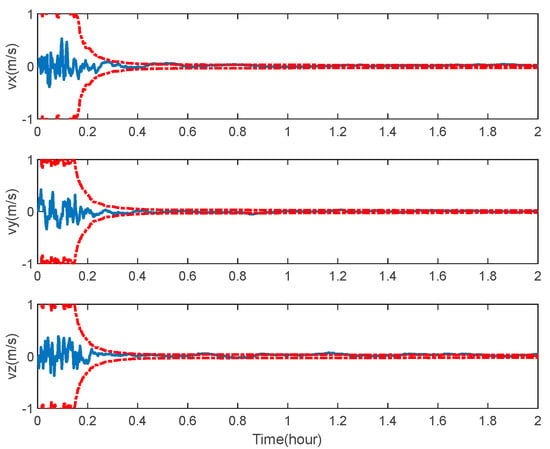

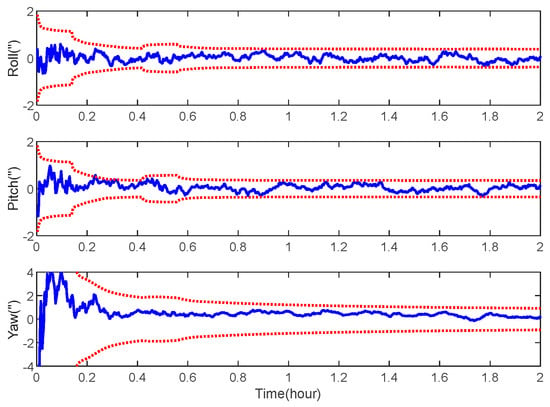

The navigation results of the space-target-based INS/Vision integration analysis obtained from the EKF are shown in Figure 14, Figure 15 and Figure 16, including the curves of the position, velocity and attitude errors, where the solid lines represent the estimation error and the dashed lines represent the bound, calculated from the error covariance matrix of the EKF.

Figure 14.

Position error of space-target-based INS/Vision integrated navigation.

Figure 15.

Velocity error of space-target-based INS/Vision integrated navigation.

Figure 16.

Attitude error of space-target-based INS/Vision integrated navigation.

As expected, the position, velocity and attitude can all be estimated correctly by the measurement of the LOS vectors of the space targets and starlight vectors. The mean estimation errors of the position, velocity and attitude of the space-target-based INS/Vision integration were 12.96 m, 0.32 m/s and 0.94″, respectively. This shows that the presented method has the potential to realize the absolute navigation of unmanned aircrafts with a high accuracy. For the implementation of the navigation method, the position error of the space target should be sufficiently less than the accuracy specification of the unmanned aircraft. In the simulation, only the measurements of the visible space targets are used to update the state vector. In the case where there are no visible space targets, the state vector is predicted by the IMU. The effect of the visibility is not distinct, as there are multiple space targets, as shown in Figure 7.

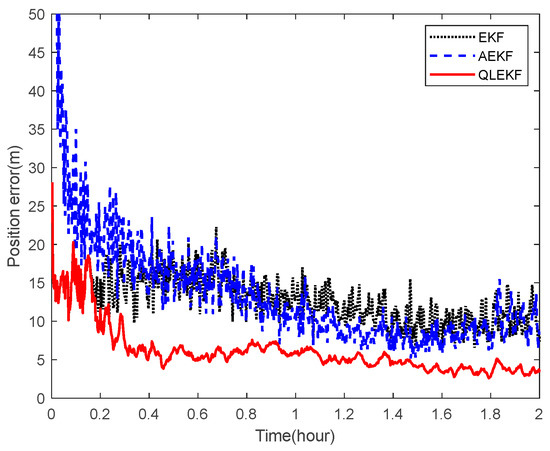

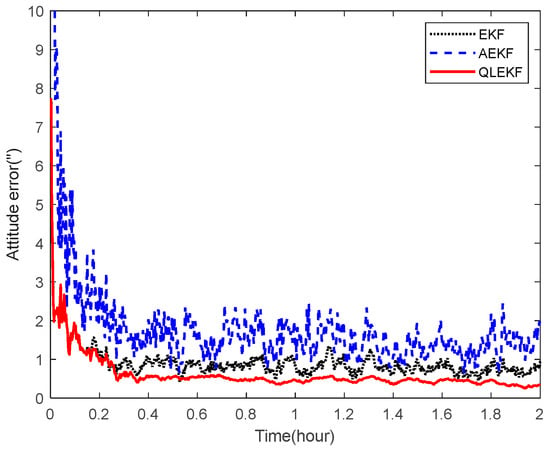

Next, the ability of the QLEKF to improve the navigation accuracy for aircraft navigation through space-target-based INS/Vision integration was assessed. Monte Carlo simulations were conducted to evaluate the performance of the QLEKF in comparison with the traditional EKF and adaptive extended Kalman filter (AEKF) [38]. The QLEKF aims to fine-tune the noise covariance matrix obtained from the prior knowledge, while the AEKF aims to estimate the noise covariance matrix together with the state vector. Figure 17 and Figure 18 show the norms of the root mean squared errors (RMSE) of the position and attitude estimates obtained from the EKF, the AEKF and the QLEKF.

Figure 17.

RMSE of the position estimation for EKF, AEKF and QLEKF.

Figure 18.

RMSE of the attitude estimation for EKF, AEKF and QLEKF.

According to the simulation results, the EKF provides similar results to the AEKF, while the QLEKF outperforms both the EKF and the AEKF with higher precision on average. These results demonstrate that the Q-learning approach is valuable for fine-tuning the critical parameters of the navigation filter.

6. Conclusions

This paper presents a novel navigation method that can be used to estimate the position, along with velocity and attitude, of unmanned aircrafts, using the star camera to observe the LOS vectors of the space targets with known positions. This method is especially suitable for aircrafts with long-term and high-accuracy autonomous navigation requirement. Based on the mathematical model and the visibility analysis, the CRLB of the integrated navigation system was calculated in order to validate the effectiveness of the presented method. In addition, the QLEKF was designed to enhance the navigation performance. The simulation results demonstrate the high performance of the space-target-based INS/Vision integrated navigation method. Further research will focus on the improvement of the navigation scheme in order to address the unfavorable effects of the space target position error and optical measurement error caused by thermal environment on the performance of the system.

Author Contributions

Conceptualization, K.X. and C.W.; methodology, K.X.; software, P.Z.; validation, K.X., P.Z. and C.W.; formal analysis, K.X.; writing—original draft preparation, K.X.; writing—review and editing, P.Z.; supervision, C.W.; project administration, K.X.; funding acquisition, C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Civil Aerospace Advance Research Project, grant number D020403, and the National Natural Science Foundation of China, grant number U21B6001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Theorem 1.

First, to facilitate the analysis of the learning property, consider a specific case. In the case, where , with the initial value , the iterative Q function shown in (57) can be written as:

The mathematical induction is adopted to prove the following statement:

For , from (A1) and the condition shown in (59), we have:

From (60), it can be verified that:

Substituting (61) into (A4) yields:

It is derived from (A3) and (A5) that:

Similarly, we can obtain:

According to (A6) and (A7), the inequality (A2) holds for . Assume that the inequality (A2) holds for , i.e.,

From (A1) and the condition shown in (59), we obtain:

From (60), it can be verified that:

Inserting (61) into (A10), we have:

It follows from (A9) and (A11) that:

Similarly, we can obtain:

From (A12) and (A13), the inequality (A2) holds for . Hence, for , the statement (A2) holds. The mathematical induction is complete.

As and , we have:

This leads to:

It is derived from (A2) and (A15) that:

Secondly, the following statement is proven by mathematical induction:

For , from (57) and the condition shown in (58), we get:

Thus, the statement (A17) holds for . Assume that the inequality (A17) holds for , i.e.,

It is derived from (57) and (A19) that:

It is seen from (A20) that the statement (A17) holds for . Hence, for , the statement (A17) holds. The mathematical induction is complete.

Thirdly, the mathematical induction is used to prove the following statement:

For , from (57), (A1) and the value range of the parameter , we have:

Thus, the statement (A21) holds for . Assume that the inequality (A21) holds for , i.e.,

It follows from (57) and (A23) that:

Thus, the statement (A21) holds for . According to (A22) and (A24), for , the statement (A21) holds. The mathematical induction is complete.

From (A17), (A21) and (A16), there exists a limit of . Let

Finally, we prove the following statement by contradiction:

Assume that (A26) is false. Define the function

Let be a small positive constant, such that the signs of and are the same. Considering (A25), there exists a positive integer , such that the following inequality holds for :

According to (57), the iterative Q function is written as:

Similarly, can be expressed as:

From (A28) and (A32), we obtain:

According to (A33), if , then . On the contrary, if , then . This is in contradiction with the fact that there exists a limit of , as . Thus, the assumption is false, and the equation (A26) holds. Note that the formulation of (A26) is essentially the same as that of (63). □

References

- Chen, K.; Zhou, J.; Shen, F.Q.; Sun, H.Y.; Fan, H. Hypersonic boost-glide vehicle strapdown inertial navigation system/global positioning system algorithm in a launch-centered earth-fixed frame. Aerosp. Sci. Technol. 2020, 98, 1–17. [Google Scholar] [CrossRef]

- Hu, G.; Gao, B.; Zhong, Y.; Ni, L.; Gu, C. Robust unscented kalman filtering with measurement error detection for tightly coupled INS/GNSS integration in hypersonic vehicle navigation. IEEE Access 2019, 7, 151409–151421. [Google Scholar] [CrossRef]

- Cheng, X.; Yang, Y.; Hao, Q. Analysis of the effects of thermal environment on optical systems for navigation guidance and control in supersonic aircraft based on empirical equations. Sensors 2016, 16, 1717. [Google Scholar] [CrossRef] [PubMed]

- Kahn, A.D.; Edwards, D.J. Navigation, guidance, and control of a micro unmanned aerial glider. J. Guid. Control Dyn. 2019, 42, 2474–2484. [Google Scholar] [CrossRef]

- Suna, J.; Bloma, H.A.P.; Ellerbroeka, J.; Hoekstraa, J.M. Particle filter for aircraft mass estimation and uncertainty modeling. Transp. Res. Part C 2019, 105, 145–162. [Google Scholar] [CrossRef]

- Hiba, A.; Gáti, A.; Manecy, A. Optical navigation sensor for runway relative positioning of aircraft during final approach. Sensors 2021, 21, 2203. [Google Scholar] [CrossRef]

- Hu, G.; Ni, L.; Gao, B.; Zhu, X.; Wang, W.; Zhong, Y. Model predictive based unscented Kalman filter for hypersonic vehicle navigation with INS/GNSS integration. IEEE Access 2020, 8, 4814–4823. [Google Scholar] [CrossRef]

- Pan, W.; Zhan, X.; Zhang, X. Fault exclusion method for ARAIM based on tight GNSS/INS integration to achieve CAT-I approach. IET Radar Sonar Navig. 2019, 13, 1909–1917. [Google Scholar] [CrossRef]

- Krasuski, K.; Cwiklak, J.; Jafernik, H. Aircraft positioning using PPP method in GLONASS system. Aircr. Eng. Aerosp. Technol. 2018, 90, 1413–1420. [Google Scholar] [CrossRef]

- Jurevicius, R.; Marcinkevicius, V.; Šeibokas, J. Robust GNSS-denied localization for UAV using particle filter and visual odometry. Mach. Vis. Appl. 2019, 30, 1181–1190. [Google Scholar] [CrossRef] [Green Version]

- Chen, D.; Neusypin, K.; Selezneva, M.; Mu, Z. New algorithms for autonomous inertial navigation systems correction with precession angle sensors in aircrafts. Sensors 2019, 19, 5016. [Google Scholar] [CrossRef]

- Lei, X.; Liu, X. A high performance altitude navigation system for small rotorcraft unmanned aircraft. Mechatronics 2019, 62, 102248. [Google Scholar] [CrossRef]

- Gui, M.; Zhao, D.; Ning, X.; Zhang, C.; Dai, M. A time delay/ star angle integrated navigation method based on the event-triggered implicit unscented Kalman filter. IEEE Trans. Instrum. Meas. 2021, 70, 1–10. [Google Scholar] [CrossRef]

- Zhang, H.; Jiao, R.; Xu, L.; Xu, C.; Mi, P. Formation of a satellite navigation system using X-ray pulsars. Publ. Astron. Soc. Pac. 2019, 131, 045002. [Google Scholar]

- Wang, T.; Somani, A.K. Aerial-DEM geolocalization for GPS-denied UAS navigation. Mach. Vis. Appl. 2020, 31, 3. [Google Scholar] [CrossRef]

- Ye, L.; Yang, Y.; Ma, J.; Deng, L.; Li, H. Research on an LEO constellation multi-aircraft collaborative navigation algorithm based on a dual-way asynchronous precision communication-time service measurement system (DWAPC-TSM). Sensors 2022, 22, 3213. [Google Scholar] [CrossRef]

- Wang, R.; Xiong, Z.; Liu, J.; Shi, L. A new tightly-coupled INS/CNS integrated navigation algorithm with weighted multi-stars observations. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2016, 230, 698–712. [Google Scholar] [CrossRef]

- Ning, X.; Yuan, W.; Liu, Y. A tightly coupled rotational SINS/CNS integrated navigation method for aircraft. J. Syst. Eng. Electron. 2019, 30, 770–782. [Google Scholar]

- Chen, H.; Gao, H.; Zhang, H. Integrated navigation approaches of vehicle aided by the strapdown celestial angles. Int. J. Adv. Robot. Syst. 2020, 5, 1–12. [Google Scholar] [CrossRef]

- Xiong, K.; Wei, C.; Liu, L. Autonomous navigation for a group of satellites with star sensors and inter-satellite links. Acta Astronaut. 2013, 86, 10–23. [Google Scholar]

- Huang, L.; Song, J.; Zhang, C.; Cai, G. Observable modes and absolute navigation capability for landmark-based IMU/Vision navigation system of UAV. Optik 2020, 202, 1–19. [Google Scholar] [CrossRef]

- Kim, Y.; Bang, H. Vision-based navigation for unmanned aircraft using ground feature points and terrain elevation data. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2018, 232, 1334–1346. [Google Scholar] [CrossRef]

- Christian, J.A. Optical navigation using planet’s centroid and apparent diameter in image. J. Guid. Control Dyn. 2015, 38, 192–204. [Google Scholar] [CrossRef]

- Chang, J.; Geng, Y.; Guo, J.; Fan, W. Calibration of satellite autonomous navigation based on attitude sensor. J. Guid. Control Dyn. 2017, 40, 185–191. [Google Scholar] [CrossRef]

- Lefferts, E.J.; Markley, F.L.; Shuster, M.D. Kalman filtering for spacecraft attitude estimation. J. Guid. Control Dyn. 1982, 5, 417–429. [Google Scholar] [CrossRef]

- Markley, F.L. Attitude error representations for Kalman filtering. J. Guid. Control Dyn. 2003, 63, 311–317. [Google Scholar] [CrossRef]

- Xiong, K.; Wei, C. Integrated celestial navigation for spacecraft using interferometer and earth sensor. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2020, 234, 2248–2262. [Google Scholar] [CrossRef]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; The MIT Press: London, UK, 2018. [Google Scholar]

- Gosavi, A. Reinforcement learning: A tutorial survey and recent advances. INFORMS J. Comput. 2009, 21, 178–192. [Google Scholar] [CrossRef]

- Xu, X.; Zuo, L.; Huang, Z. Reinforcement learning algorithms with function approximation: Recent advance and applications. Inf. Sci. 2014, 261, 1–31. [Google Scholar] [CrossRef]

- Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292. [Google Scholar] [CrossRef]

- Wei, Q.; Lewis, F.L.; Sun, Q.; Yan, P.; Song, R. Discrete-time deterministic Q-learning: A novel convergence analysis. IEEE Trans. Cybern. 2017, 47, 1224–1237. [Google Scholar] [CrossRef]

- Luo, B.; Wu, H.N.; Huang, T. Optimal output regulation for model-free quanser helicopter with multistep Q-learning. IEEE Trans. Ind. Electron. 2018, 65, 4953–4961. [Google Scholar] [CrossRef]

- Xiong, K.; Wei, C.; Zhang, H. Q-learning for noise covariance adaptation in extended Kalman filter. Asian J. Control 2021, 23, 1803–1816. [Google Scholar] [CrossRef]

- Ding, L.; Yang, Q. Research on air combat maneuver decision of UAVs based on reinforcement learning. Avion. Technol. 2018, 49, 29–35. [Google Scholar]

- Ristic, B.; Farina, A.; Benvenuti, D.; Arulampalam, M.S. Performance bounds and comparison of nonlinear filters for tracking a ballistic object on re-entry. IEE P-Radar Sonar Navig. 2003, 150, 65–70. [Google Scholar] [CrossRef]

- Lei, M.; Wyk, B.J.; Qi, Y. Online estimation of the approximate posterior cramer-rao lower bound for discrete-time nonlinear filtering. IEEE Trans. Aerosp. Electron. Syst. 2011, 47, 37–57. [Google Scholar] [CrossRef]

- Xiong, K.; Liu, L. Design of parallel adaptive extended Kalman filter for online estimation of noise covariance. Aircr. Eng. Aerosp. Technol. 2019, 91, 112–123. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).