Transportation Mode Detection Combining CNN and Vision Transformer with Sensors Recalibration Using Smartphone Built-In Sensors

Abstract

1. Introduction

2. Related Work

2.1. Machine Learning-Based Methods

2.2. Deep Learning-Based Methods

3. Motivation and Methodology

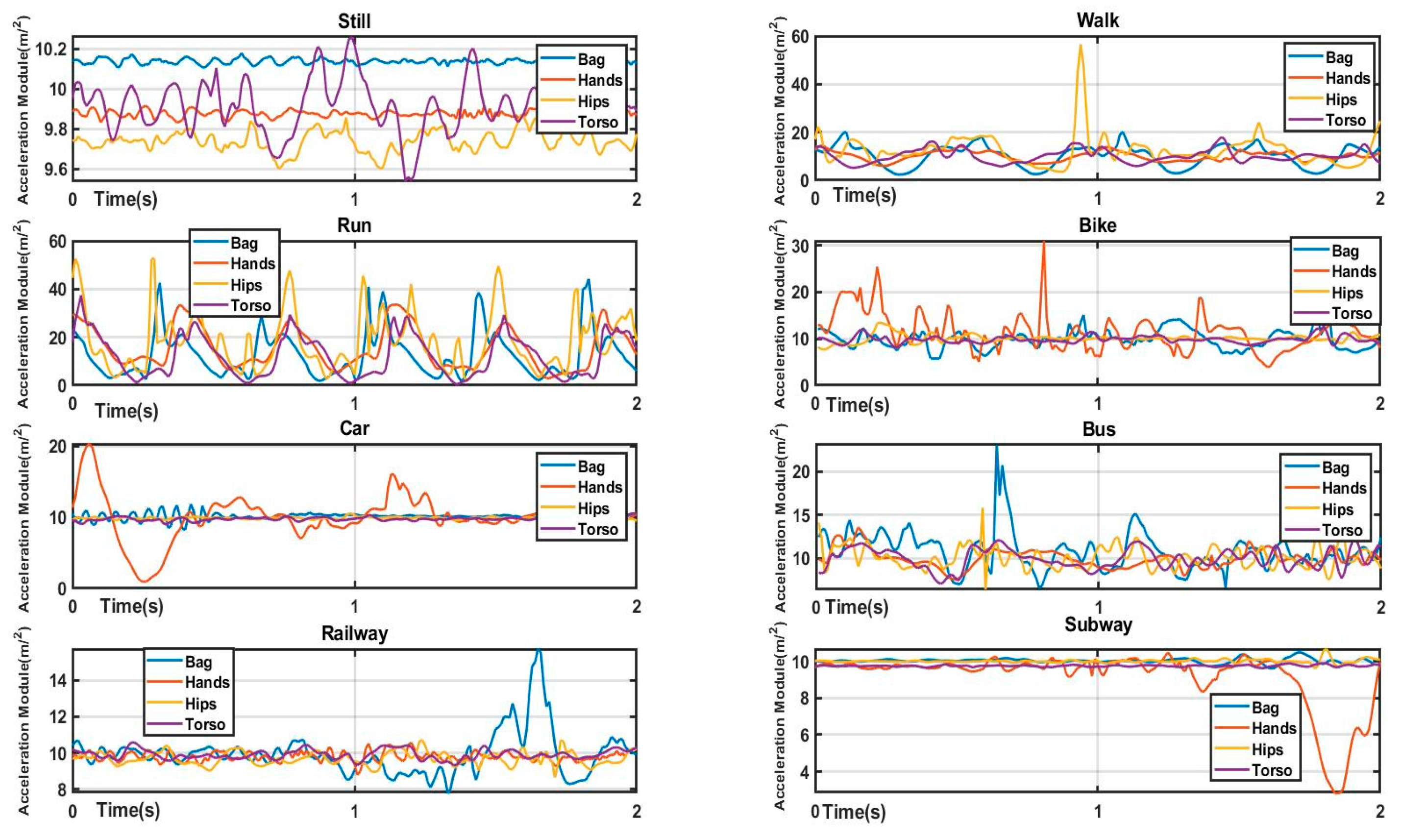

3.1. Data Input

3.2. Data Pre-Processing

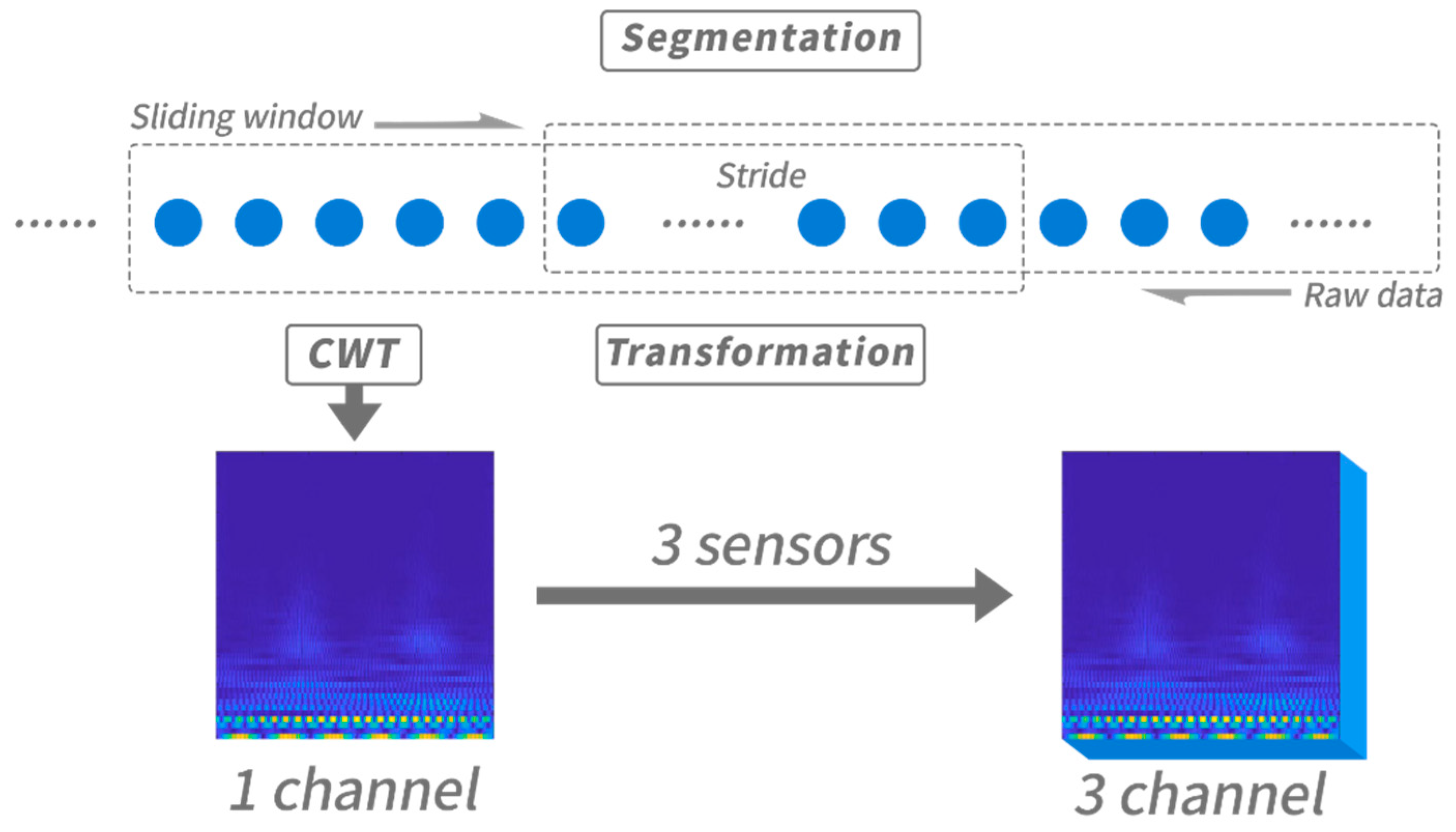

3.2.1. Motivation of Data Segmentation

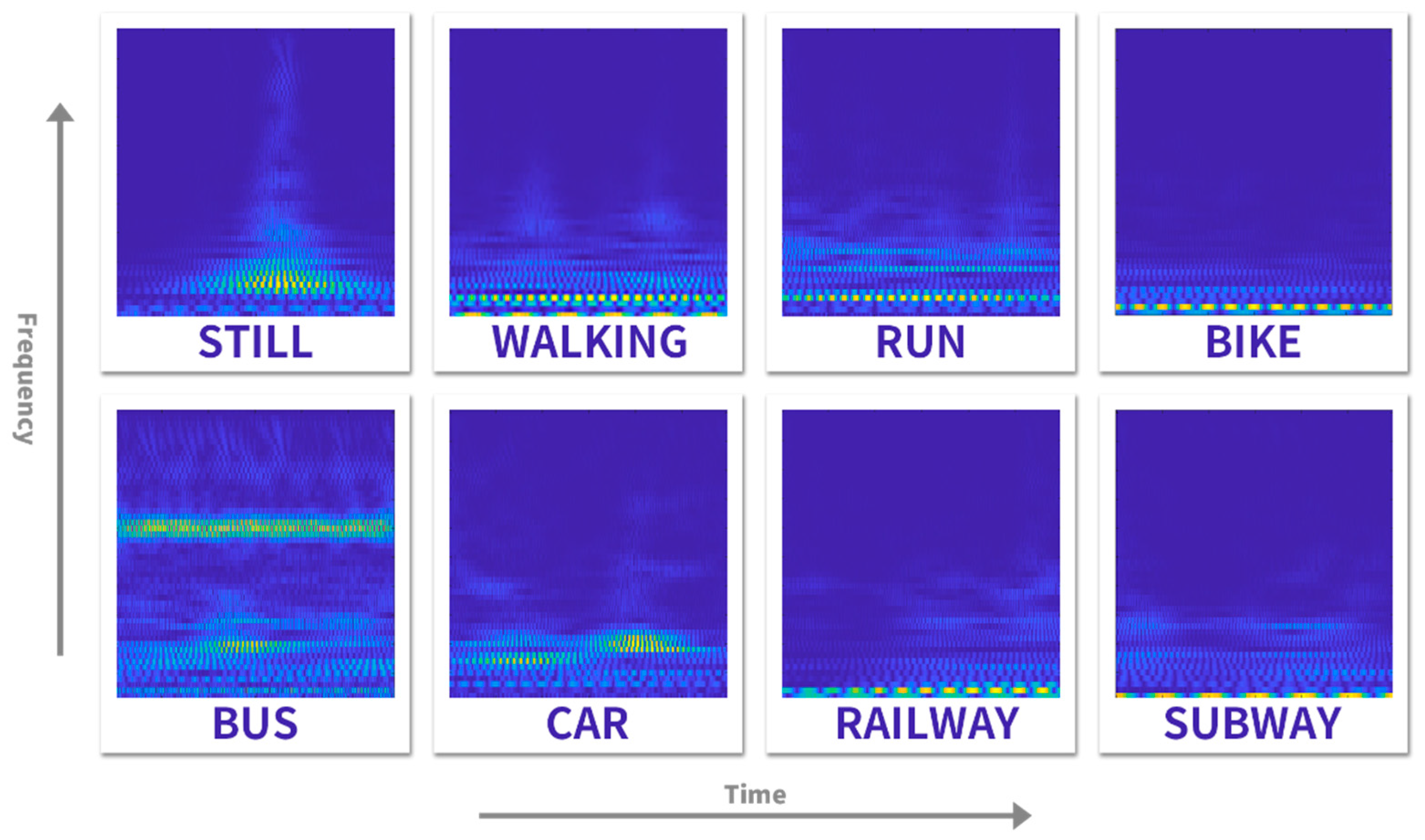

3.2.2. Motivation of Time-Frequency Transformation

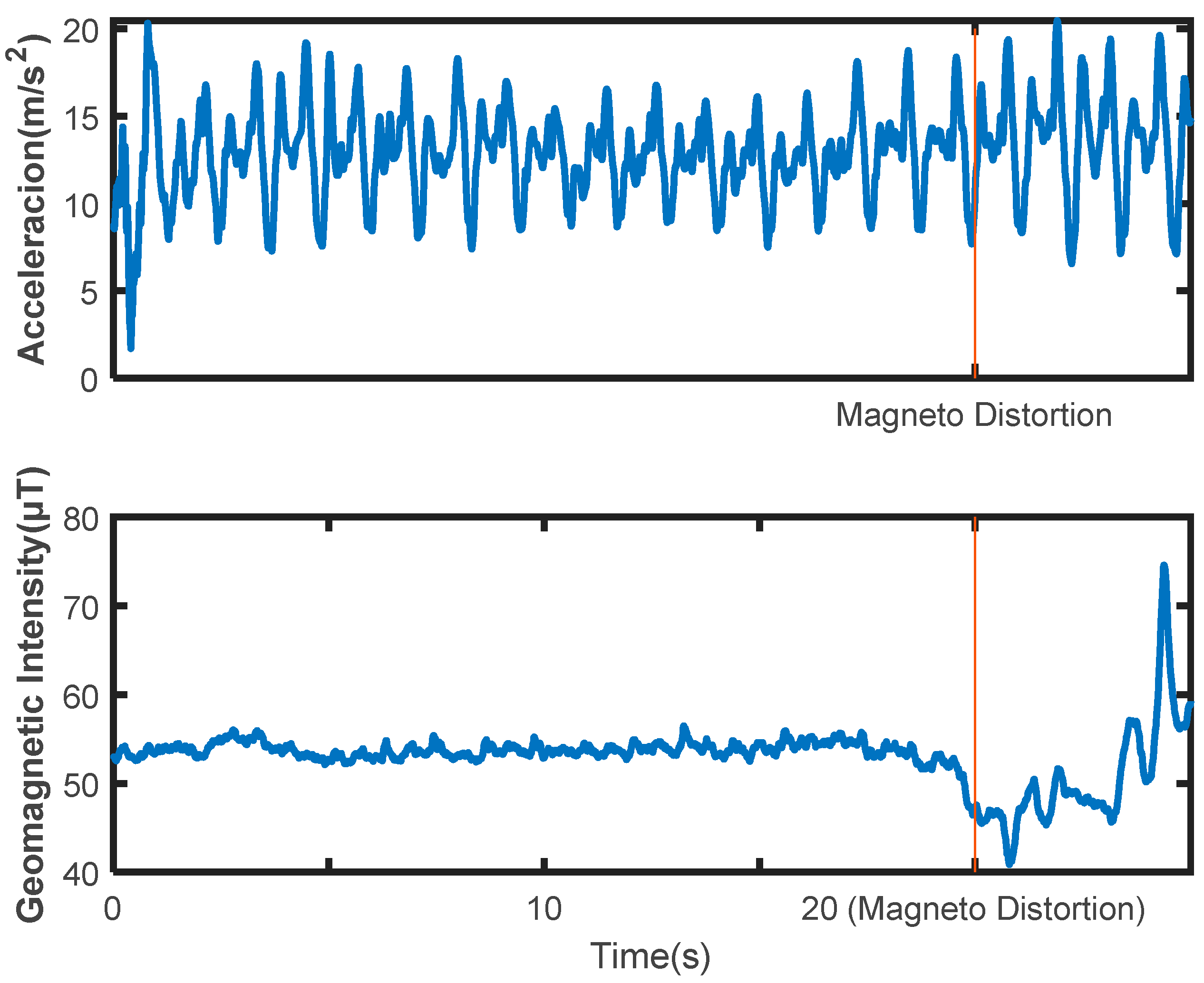
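
The time-frequency transformation can be sketched as a continuous wavelet transform (CWT) with a complex Morlet wavelet. The NumPy sketch below is a direct discretisation rather than the authors' code. It assumes that "Cmor3-3" in the preprocessing parameter table denotes the cmorB-C family with bandwidth B = 3 and centre frequency C = 3 (the naming PyWavelets uses). It also assumes that "scale 64" means integer scales 1 to 64 and that the sampling rate is 100 Hz. The function names `cmor` and `cwt_scalogram` are hypothetical, and PyWavelets' own `cwt` uses a different normalisation, so magnitudes may differ.

```python
import numpy as np


def cmor(t, bandwidth=3.0, center=3.0):
    """Complex Morlet wavelet, cmorB-C form: exp(-t^2/B) * exp(2j*pi*C*t) / sqrt(pi*B)."""
    return (np.exp(-t ** 2 / bandwidth) * np.exp(2j * np.pi * center * t)
            / np.sqrt(np.pi * bandwidth))


def cwt_scalogram(x, scales, bandwidth=3.0, center=3.0):
    """Magnitude of a directly discretised CWT, one row per scale (scale measured in samples)."""
    x = np.asarray(x, dtype=complex)
    n = x.size
    out = np.empty((len(scales), n))
    support = 4.0 * np.sqrt(bandwidth)  # envelope exp(-t^2/B) is ~1e-7 beyond this |t|
    for row, a in enumerate(scales):
        half = int(np.ceil(support * a))
        psi = cmor(np.arange(-half, half + 1) / a, bandwidth, center)
        # np.correlate conjugates its second argument, so "full" mode yields
        # sum_m x[i + m] * conj(psi(m / a)) at index i + half; slice back to length n.
        full = np.correlate(x, psi, mode="full")
        out[row] = np.abs(full[half:half + n]) / np.sqrt(a)
    return out


fs = 100  # assumed sampling rate (Hz)
t = np.arange(10 * fs) / fs
scalogram = cwt_scalogram(np.sin(2 * np.pi * 10 * t), scales=np.arange(1, 65))
print(scalogram.shape)  # (64, 1000)
```

For this wavelet a scale a corresponds to a pseudo-frequency of C · fs / a. In the example above, the 10 Hz sine therefore produces its strongest response near scale 3 · 100 / 10 = 30.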

3.3. Multiple Sensors Integration and Recalibration

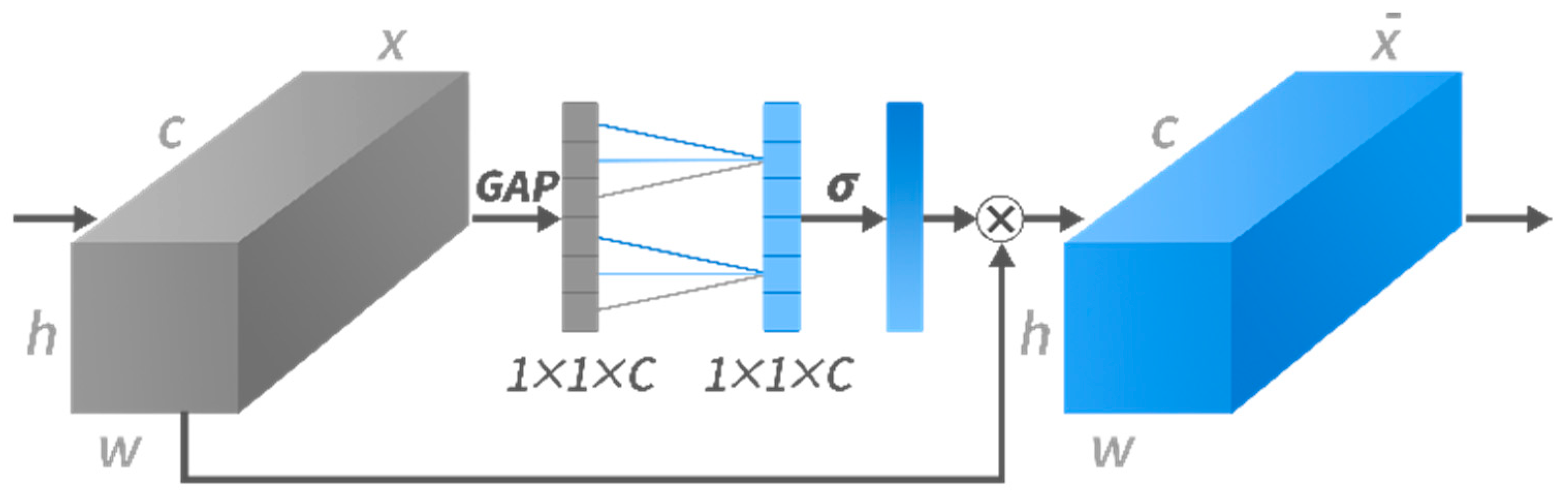

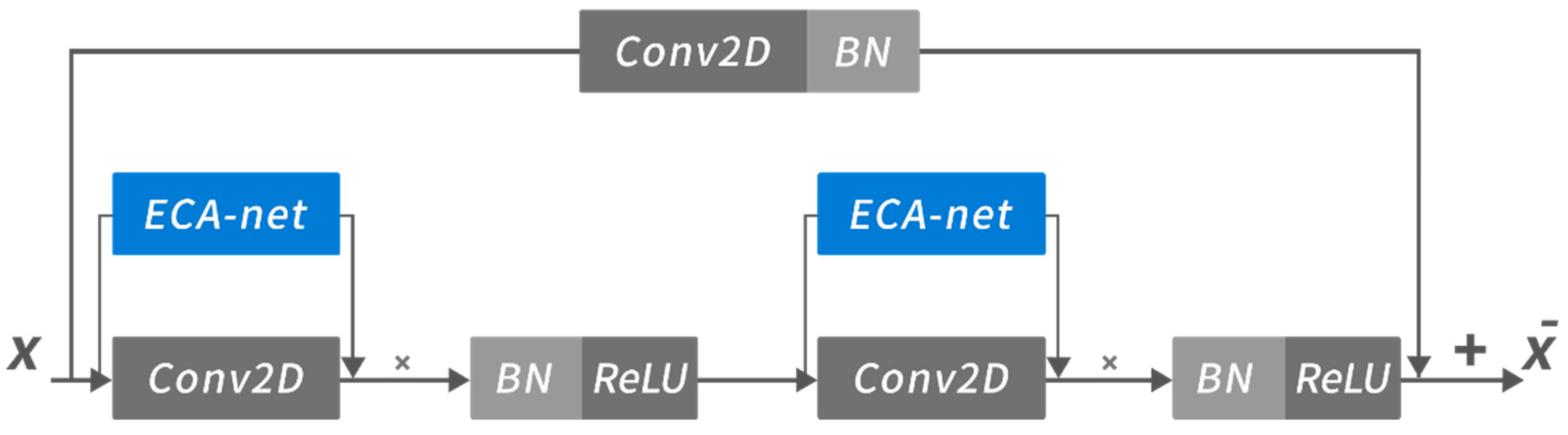

3.4. Feature Extraction and Fusion

4. Experiments and Analysis

4.1. Dataset

4.2. Preprocessing

4.3. Experimental Setup

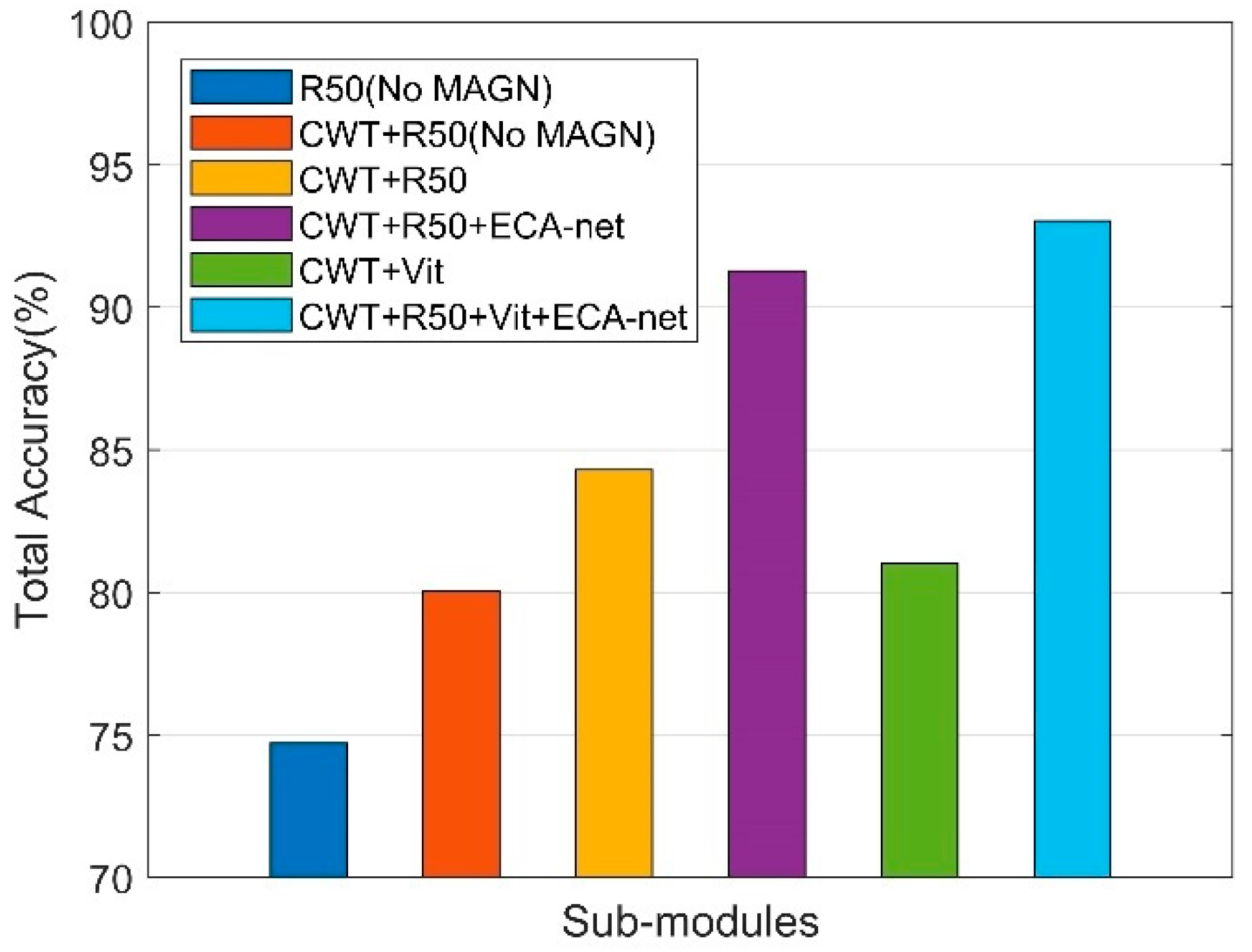

4.4. Recognition Performance of Sub-Module Integrations

- Each patch is flattened into a vector before it is fed to the ViT, so the network loses the spatial structure within the patch;

- ViT is data-hungry. Because of this flattening, ViT struggles to extract local information within each patch when training data are limited. A ViT trained on its own surpasses CNN-based models only when the dataset exceeds about 100 M, whereas our dataset contains only about 1 M.
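
The flattening described in the first point can be made concrete with a minimal NumPy sketch that uses a hypothetical function `patchify`. It splits a single-channel time-frequency image into the 4 × 20 patches listed for ViT in the parameter table and flattens each patch into an 80-value token. The 64 × 300 image size is an assumption: 64 wavelet scales, and a 3 s window at an assumed 100 Hz sampling rate. The sketch also assumes that the 4 runs along the scale axis and the 20 along time.

```python
import numpy as np


def patchify(image, patch_h=4, patch_w=20):
    """Split a 2-D time-frequency image into non-overlapping patches and flatten each.

    Returns an array of shape (num_patches, patch_h * patch_w); patches are ordered
    row by row (scale bands first, then time), as in a standard ViT patch embedding.
    """
    h, w = image.shape
    if h % patch_h or w % patch_w:
        raise ValueError("image height/width must be divisible by the patch size")
    return (image.reshape(h // patch_h, patch_h, w // patch_w, patch_w)
                 .transpose(0, 2, 1, 3)
                 .reshape(-1, patch_h * patch_w))


# Hypothetical scalogram: 64 wavelet scales x 300 time steps (3 s at an assumed 100 Hz).
scalogram = np.arange(64 * 300, dtype=float).reshape(64, 300)
tokens = patchify(scalogram)
print(tokens.shape)  # (240, 80): 16 x 15 patches, each flattened to 80 values
```

Within a token, `scalogram[0, 0]` and the value directly below it, `scalogram[1, 0]`, end up 20 positions apart (`tokens[0][0]` and `tokens[0][20]`). Their vertical adjacency survives only as a fixed offset that the linear projection has to learn, which is the loss of structure the first point refers to.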

4.5. Comparison with Baselines

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Veres, M.; Moussa, M. Deep Learning for Intelligent Transportation Systems: A Survey of Emerging Trends. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3152–3168.

- Yürür, Ö.; Liu, C.H.; Sheng, Z.; Leung, V.C.M.; Moreno, W.; Leung, K.K. Context-awareness for mobile sensing: A survey and future directions. IEEE Commun. Surv. Tutor. 2014, 18, 68–93.

- Bharti, P.; De, D.; Chellappan, S.; Das, S.K. HuMAn: Complex activity recognition with multi-modal multi-positional body sensing. IEEE Trans. Mob. Comput. 2018, 18, 857–870.

- Gu, Y.; Li, D.; Kamiya, Y.; Kamijo, S. Lifelog using Mobility Context Information in Urban City Area. In Proceedings of the ION 2019 Pacific PNT Meeting (PNT2019), Honolulu, HI, USA, 8–11 April 2019.

- Wang, L.; Gjoreski, H.; Ciliberto, M.; Mekki, S.; Valentin, S.; Roggen, D. Enabling reproducible research in sensor-based transportation mode recognition with the Sussex-Huawei dataset. IEEE Access 2019, 7, 10870–10891.

- Feng, T.; Timmermans, H.J.P. Transportation mode recognition using GPS and accelerometer data. Transp. Res. Part C Emerg. Technol. 2013, 37, 118–130.

- Chen, L.; Hoey, J.; Nugent, C.D.; Cook, D.J.; Yu, Z. Sensor-based activity recognition. IEEE Trans. Syst. Man Cybern. Part C 2012, 42, 790–808.

- Reddy, S.; Mun, M.; Burke, J.; Estrin, D.; Hansen, M.; Srivastava, M. Using mobile phones to determine transportation modes. ACM Trans. Sens. Netw. (TOSN) 2010, 6, 1–27.

- Xiao, Y.; Low, D.; Bandara, T.; Pathak, P.; Lim, H.B.; Goyal, D.; Santos, J.; Cottrill, C.; Pereira, F.; Zegras, C.; et al. Transportation activity analysis using smartphones. In Proceedings of the 2012 IEEE Consumer Communications and Networking Conference (CCNC), Las Vegas, NV, USA, 14–17 January 2012.

- Jahangiri, A.; Rakha, H.A. Applying machine learning techniques to transportation mode recognition using mobile phone sensor data. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2406–2417.

- Ashqar, H.I.; Almannaa, M.H.; Elhenawy, M.; Rakha, H.A.; House, L. Smartphone transportation mode recognition using a hierarchical machine learning classifier and pooled features from time and frequency domains. IEEE Trans. Intell. Transp. Syst. 2018, 20, 244–252.

- Li, D.; Gu, Y.; Kamijo, S. Pedestrian positioning in urban environment by integration of PDR and traffic mode detection. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017.

- Janko, V.; Lustrek, M.; Reščič, N.; Mlakar, M. A new frontier for activity recognition: The Sussex-Huawei locomotion challenge. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, Singapore, 8–12 October 2018.

- Zhao, H.; Zhang, C. An online-learning-based evolutionary many-objective algorithm. Inf. Sci. 2020, 509, 1–21.

- Gong, Y.; Fang, Z.; Shaomeng, C.; Haiyong, L. A convolutional neural networks-based transportation mode identification algorithm. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017.

- Liang, X.; Wang, G. A convolutional neural network for transportation mode detection based on smartphone platform. In Proceedings of the 2017 IEEE 14th international conference on mobile Ad Hoc and sensor systems (MASS), Orlando, FL, USA, 22–25 October 2017.

- Liu, H.; Lee, I. End-to-end trajectory transportation mode classification using Bi-LSTM recurrent neural network. In Proceedings of the 2017 12th International Conference on Intelligent Systems and Knowledge Engineering (ISKE), Nanjing, China, 24–26 November 2017.

- Chen, Z.; Zhang, L.; Jiang, C.; Cao, Z.; Cui, W. WiFi CSI based passive human activity recognition using attention based BLSTM. IEEE Trans. Mob. Comput. 2018, 18, 2714–2724.

- Saeed, A.; Ozcelebi, T.; Lukkien, J. Multi-task self-supervised learning for human activity detection. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–30.

- Gjoreski, M.; Gams, M.; Janko, V.; Reščič, N. Applying multiple knowledge to Sussex-Huawei locomotion challenge. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, Singapore, 8–12 October 2018.

- Ordóñez, F.J.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115.

- Zhang, C.; Zhu, Y.; Markos, C.; Yu, S.; Yu, J.J.Q. Toward Crowdsourced Transportation Mode Identification: A Semisupervised Federated Learning Approach. IEEE Internet Things J. 2022, 9, 11868–11882.

- Sharma, A.; Singh, S.K.; Udmale, S.S.; Singh, A.K.; Singh, R. Early Transportation Mode Detection Using Smartphone Sensing Data. IEEE Sens. J. 2021, 21, 15651–15659.

- Wang, C.; Luo, H.; Zhao, F.; Qin, Y. Combining residual and LSTM recurrent networks for transportation mode detection using multimodal sensors integrated in smartphones. IEEE Trans. Intell. Transp. Syst. 2020, 22, 5473–5485.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Richoz, S.; Wang, L.; Birch, P.; Roggen, D. Transportation mode recognition fusing wearable motion, sound, and vision sensors. IEEE Sens. J. 2020, 20, 9314–9328.

- Sinha, S.; Routh, P.S.; Anno, P.D.; Castagna, J.P. Spectral decomposition of seismic data with continuous-wavelet transform. Geophysics 2005, 70, P19–P25.

- Raghu, M.; Unterthiner, T.; Kornblith, S.; Zhang, C.; Dosovitskiy, A. Do vision transformers see like convolutional neural networks? Adv. Neural Inf. Process. Syst. 2021, 34, 12116–12128.

| Transportation Mode | Definition |
|---|---|
| Still | Still in the open area |
| Walk | Walking in the open area |
| Run | Running in the open area |
| Bike | Cycling in the open area |
| Car | Stationary (e.g., waiting at traffic lights) or moving |
| Bus | Stationary at a station or moving |
| Railway | Stationary at a station or moving |
| Subway | Stationary at a station or moving |

| Parameter Name | Parameter Setting |
|---|---|
| Sliding window length | 3 s |
| Sliding stride length | 1 s |
| Wavelet transform scale | 64 |
| Wavelet name | Cmor3-3 |
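
The window and stride settings above can be illustrated with a minimal NumPy sketch. It is not the authors' code. The 100 Hz sampling rate, the hypothetical function name `sliding_windows`, the integer label codes, and the rule that a window takes its most frequent per-sample label are all assumptions.

```python
import numpy as np


def sliding_windows(signal, labels, fs, window_s=3.0, stride_s=1.0):
    """Cut a (T, C) multichannel recording into overlapping fixed-length windows.

    signal : (T, C) array sampled at fs Hz
    labels : (T,) non-negative integer mode label per sample
    Returns windows of shape (N, window_len, C) and one label per window.
    Raises ValueError if the recording is shorter than one window.
    """
    win = int(round(window_s * fs))
    step = int(round(stride_s * fs))
    starts = range(0, signal.shape[0] - win + 1, step)
    if len(starts) == 0:
        raise ValueError("recording shorter than one window")
    windows = np.stack([signal[s:s + win] for s in starts])
    # Assumption: each window takes the most frequent per-sample label (majority vote).
    window_labels = np.array([np.bincount(labels[s:s + win]).argmax() for s in starts])
    return windows, window_labels


fs = 100  # assumed sampling rate (Hz)
rng = np.random.default_rng(0)
signal = rng.standard_normal((10 * fs, 3))  # 10 s of a hypothetical tri-axial sensor
labels = np.repeat([1, 4], 5 * fs)          # hypothetical codes: mode 1 for 5 s, then mode 4
windows, window_labels = sliding_windows(signal, labels, fs)
print(windows.shape, window_labels)
```

With a 3 s window and a 1 s stride, consecutive windows share 2 s of data. A 10 s recording therefore yields 8 windows instead of the 3 that non-overlapping segmentation would give.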

| Hardware and Software Environment | Configuration |
|---|---|
| CPU | AMD Ryzen 7 5800X 8-Core |
| Memory | 16 GB |
| GPU | NVIDIA GeForce RTX 3060 Ti 8 GB × 1 |
| Development Language | Python 3.9 |
| Framework | PyTorch 1.9 |

| Parameter Setting | ResNet | ViT | ECA-Net | MLP |
|---|---|---|---|---|
| Conv Layer 1 | | / | / | / |
| Conv Layer 2 | | / | / | / |
| Conv Layer 3 | | / | / | / |
| Conv Layer 4 | | / | / | / |
| Conv Layer 5 | | / | / | / |
| Patch Size | / | 4 × 20 | / | / |
| Head Number | / | 12 | / | / |
| Encoder Layer Number | / | 8 | / | / |
| Embedding Dropout | / | 0.1 | / | / |
| Dropout | / | 0.1 | / | / |
| Kernel Size | / | / | 2 | / |
| Linear Layer Size | / | / | / | |
| Dropout | / | / | / | 0.2 |
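
The ECA-Net kernel size of 2 listed above is the size of the 1-D convolution at the core of efficient channel attention (Wang et al.). Each channel is first summarised by global average pooling. A size-k 1-D convolution then mixes neighbouring channel descriptors, and a sigmoid turns the result into per-channel weights that rescale the input. The NumPy forward pass below is a sketch under stated assumptions. It treats each sensor axis's time-frequency map as one channel, and the 9-channel layout is hypothetical. It uses random, untrained weights. It also pads asymmetrically, because ECA-Net's adaptive rule for k always yields an odd size, whereas the table lists k = 2. The function name `eca_recalibrate` is illustrative.

```python
import numpy as np


def eca_recalibrate(x, kernel):
    """ECA-style channel recalibration of a (C, H, W) feature stack.

    x      : stacked per-channel time-frequency maps (assumed layout)
    kernel : 1-D convolution weights of length k, shared across channels
    Returns the reweighted stack and the per-channel attention weights.
    """
    channels = x.shape[0]
    k = kernel.shape[0]
    # 1) Squeeze: global average pooling gives one descriptor per channel.
    descriptor = x.mean(axis=(1, 2))
    # 2) Local cross-channel interaction: 1-D cross-correlation over neighbouring
    #    channels; asymmetric zero padding (assumption) keeps one output per channel.
    pad_left = (k - 1) // 2
    padded = np.pad(descriptor, (pad_left, k - 1 - pad_left))
    logits = np.array([padded[i:i + k] @ kernel for i in range(channels)])
    # 3) Excite: sigmoid gate in (0, 1), then rescale each channel.
    weights = 1.0 / (1.0 + np.exp(-logits))
    return x * weights[:, None, None], weights


rng = np.random.default_rng(0)
stack = rng.standard_normal((9, 64, 300))  # hypothetical: 3 tri-axial sensors x 3 axes
kernel = rng.standard_normal(2)            # untrained weights, illustration only
recalibrated, weights = eca_recalibrate(stack, kernel)
print(weights.round(3))
```

Because the convolution kernel is shared and only k weights wide, the block adds just k parameters. This matches ECA-Net's motivation of capturing local cross-channel interaction without the dimensionality-reducing fully connected layers of squeeze-and-excitation.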

| Sub-Modules | Metrics | Still | Walk | Run | Bike | Car | Bus | Railway | Subway |
|---|---|---|---|---|---|---|---|---|---|
| R50 (No MAGN) | Accuracy/% | 62.93 | 90.88 | 98.57 | 79.50 | 78.56 | 70.94 | 59.76 | 53.26 |
| | Recall/% | 66.34 | 90.45 | 98.40 | 85.46 | 83.13 | 65.86 | 59.02 | 48.00 |
| | F1-score/% | 64.59 | 90.66 | 98.49 | 82.37 | 80.78 | 68.31 | 59.38 | 50.49 |
| CWT + R50 (No MAGN) | Accuracy/% | 56.90 | 92.85 | 99.16 | 93.47 | 89.49 | 82.16 | 68.56 | 63.60 |
| | Recall/% | 80.14 | 90.28 | 97.79 | 93.04 | 91.35 | 73.33 | 58.45 | 55.35 |
| | F1-score/% | 66.55 | 91.55 | 98.47 | 93.25 | 90.41 | 77.49 | 63.10 | 59.19 |
| CWT + R50 | Accuracy/% | 78.01 | 95.89 | 99.58 | 91.34 | 96.99 | 81.52 | 73.49 | 65.26 |
| | Recall/% | 88.85 | 86.77 | 75.07 | 94.26 | 90.42 | 92.66 | 79.71 | 66.52 |
| | F1-score/% | 83.08 | 91.10 | 85.61 | 92.78 | 93.59 | 86.73 | 76.48 | 65.88 |
| CWT + R50 + ECA-Net | Accuracy/% | 86.87 | 96.83 | 98.54 | 95.15 | 94.94 | 94.48 | 80.69 | 83.35 |
| | Recall/% | 88.73 | 90.72 | 99.10 | 95.30 | 96.81 | 92.99 | 84.72 | 81.77 |
| | F1-score/% | 87.79 | 93.67 | 98.82 | 95.22 | 95.87 | 93.73 | 82.66 | 82.55 |
| CWT + ViT | Accuracy/% | 70.28 | 86.53 | 97.73 | 90.38 | 88.90 | 82.34 | 71.28 | 63.23 |
| | Recall/% | 89.88 | 86.61 | 97.79 | 86.13 | 85.60 | 78.34 | 55.60 | 67.81 |
| | F1-score/% | 78.88 | 86.57 | 97.76 | 88.21 | 87.22 | 80.29 | 62.47 | 65.44 |
| CWT + R50 + ViT + ECA-Net (Proposed Method) | Accuracy/% | 88.10 | 95.34 | 99.24 | 96.62 | 96.49 | 95.13 | 88.80 | 84.72 |
| | Recall/% | 91.30 | 93.68 | 98.96 | 95.91 | 97.34 | 94.41 | 85.10 | 87.42 |
| | F1-score/% | 89.67 | 94.50 | 99.11 | 96.26 | 96.91 | 94.77 | 86.91 | 86.05 |
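
A quick consistency check on the per-class metrics helps clarify how the rows relate. For the proposed method, and for the other rows we spot-checked, the reported F1-score equals, to within rounding, the harmonic mean F1 = 2PR / (P + R) of the "Accuracy" and "Recall" rows. This suggests that the per-class "Accuracy" values play the role of precision, TP / (TP + FP). The sketch below recomputes the F1 row for the proposed method from the two rows above it. The values are copied from the table, and the function name `harmonic_f1` is illustrative.

```python
# Per-class values for the proposed method, copied from the table above (percent).
classes = ["Still", "Walk", "Run", "Bike", "Car", "Bus", "Railway", "Subway"]
accuracy = [88.10, 95.34, 99.24, 96.62, 96.49, 95.13, 88.80, 84.72]  # "Accuracy/%" row
recall = [91.30, 93.68, 98.96, 95.91, 97.34, 94.41, 85.10, 87.42]    # "Recall/%" row
reported_f1 = [89.67, 94.50, 99.11, 96.26, 96.91, 94.77, 86.91, 86.05]


def harmonic_f1(precision, recall):
    """F1 as the harmonic mean of precision and recall (same units in and out)."""
    return 2 * precision * recall / (precision + recall)


for name, p, r, f in zip(classes, accuracy, recall, reported_f1):
    print(f"{name:8s} recomputed={harmonic_f1(p, r):6.2f} reported={f:6.2f}")
```

The largest gap is about 0.01 percentage points (Run), which is consistent with the inputs having been rounded to two decimals.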

| Baselines | Conv Layer | LSTM Layer | Attention Layer | Output Layer |
|---|---|---|---|---|
| LSTM | / | / | | |
| ABLSTM | / | tanh SoftMax | | |
| Deep-ConvLSTM | Sigmoid | | | |
| TPN | Dropout = 0.1 Maxpool(8) | / | / | |
| MSRLSTM | Res Maxpool(2) | SoftMax | | |

| Baselines | Metrics | Still | Walk | Run | Bike | Car | Bus | Railway | Subway |
|---|---|---|---|---|---|---|---|---|---|
| LSTM | Accuracy/% | 56.95 | 59.98 | 79.88 | 50.40 | 52.18 | 49.34 | 35.18 | 38.22 |
| | Recall/% | 60.50 | 56.86 | 83.14 | 56.33 | 55.18 | 43.63 | 28.12 | 41.60 |
| | F1-score/% | 58.67 | 58.37 | 81.47 | 53.20 | 53.64 | 46.31 | 31.25 | 39.84 |
| ABLSTM | Accuracy/% | 50.30 | 86.43 | 96.74 | 62.75 | 52.90 | 43.77 | 41.58 | 37.52 |
| | Recall/% | 77.01 | 73.06 | 96.04 | 60.37 | 52.34 | 29.47 | 33.43 | 45.13 |
| | F1-score/% | 60.86 | 79.19 | 96.39 | 61.54 | 52.62 | 35.22 | 37.06 | 40.97 |
| Deep-ConvLSTM | Accuracy/% | 78.14 | 92.71 | 99.38 | 88.03 | 87.72 | 77.66 | 67.55 | 61.52 |
| | Recall/% | 80.22 | 87.04 | 98.03 | 90.42 | 86.48 | 74.27 | 63.66 | 69.95 |
| | F1-score/% | 79.17 | 89.79 | 98.70 | 89.21 | 87.10 | 75.93 | 65.55 | 65.46 |
| TPN | Accuracy/% | 81.43 | 99.06 | 98.59 | 86.87 | 67.79 | 76.08 | 71.74 | 56.90 |
| | Recall/% | 74.34 | 61.26 | 97.35 | 84.58 | 95.83 | 78.16 | 59.65 | 70.39 |
| | F1-score/% | 77.72 | 75.70 | 97.97 | 85.71 | 79.40 | 77.10 | 65.14 | 62.93 |
| MSRLSTM | Accuracy/% | 75.26 | 91.94 | 99.37 | 90.27 | 88.34 | 82.24 | 71.89 | 68.36 |
| | Recall/% | 86.44 | 89.69 | 97.69 | 91.42 | 88.53 | 78.16 | 65.64 | 69.23 |
| | F1-score/% | 80.47 | 90.80 | 98.52 | 90.84 | 88.44 | 80.15 | 68.57 | 68.80 |
| Proposed Method | Accuracy/% | 88.10 | 95.34 | 99.24 | 96.62 | 96.49 | 95.13 | 88.80 | 84.72 |
| | Recall/% | 91.30 | 93.68 | 98.96 | 95.91 | 97.34 | 94.41 | 85.10 | 87.42 |
| | F1-score/% | 89.67 | 94.50 | 99.11 | 96.26 | 96.91 | 94.77 | 86.91 | 86.05 |
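
To summarise the per-class comparison above with one number per method, one can take the unweighted (macro) mean of the eight per-class F1-scores. The sketch below does this from the table values. It is not a metric reported in the table, and because per-class sample counts are not given here, it ignores class imbalance. The names `f1_by_method` and `macro_f1` are illustrative.

```python
# Per-class F1-scores (%) from the table above, in the order
# Still, Walk, Run, Bike, Car, Bus, Railway, Subway.
f1_by_method = {
    "LSTM": [58.67, 58.37, 81.47, 53.20, 53.64, 46.31, 31.25, 39.84],
    "ABLSTM": [60.86, 79.19, 96.39, 61.54, 52.62, 35.22, 37.06, 40.97],
    "Deep-ConvLSTM": [79.17, 89.79, 98.70, 89.21, 87.10, 75.93, 65.55, 65.46],
    "TPN": [77.72, 75.70, 97.97, 85.71, 79.40, 77.10, 65.14, 62.93],
    "MSRLSTM": [80.47, 90.80, 98.52, 90.84, 88.44, 80.15, 68.57, 68.80],
    "Proposed Method": [89.67, 94.50, 99.11, 96.26, 96.91, 94.77, 86.91, 86.05],
}


def macro_f1(per_class):
    """Unweighted mean over classes; class frequencies are not taken into account."""
    return sum(per_class) / len(per_class)


ranking = sorted(f1_by_method, key=lambda m: macro_f1(f1_by_method[m]), reverse=True)
for method in ranking:
    print(f"{method:16s} macro-F1 = {macro_f1(f1_by_method[method]):.2f}")
```

A support-weighted average would need the number of test windows per class. Weighting could shift the absolute values, particularly for classes such as Railway and Subway where the methods differ most.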

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tian, Y.; Hettiarachchi, D.; Kamijo, S. Transportation Mode Detection Combining CNN and Vision Transformer with Sensors Recalibration Using Smartphone Built-In Sensors. Sensors 2022, 22, 6453. https://doi.org/10.3390/s22176453
