Extended Reality in Neurosurgical Education: A Systematic Review

Abstract

1. Introduction

Rationale

- What kinds of cranial surgical procedures is recent research focused on?

- Is research on the topic localized in specific geographical areas of the world, or is it evenly distributed?

- Do users benefit from XR technologies in education, and do they find them faithful to real-life scenarios? In other words, do users consider the proposed applications useful and realistic?

- What metrics are used to assess the impact of these technologies on the performance, usability, and learning curves of test subjects? Are these metrics used across multiple studies, or are they tied to a specific experimental setup?

- What devices are used in recent research on the topic?

2. Methods

2.1. Eligibility Criteria

2.2. Types of Studies

2.3. Types of Population

2.4. Type of Intervention

2.5. Types of Comparators

2.6. Types of Outcome Measures

2.7. Databases and Search Strategy

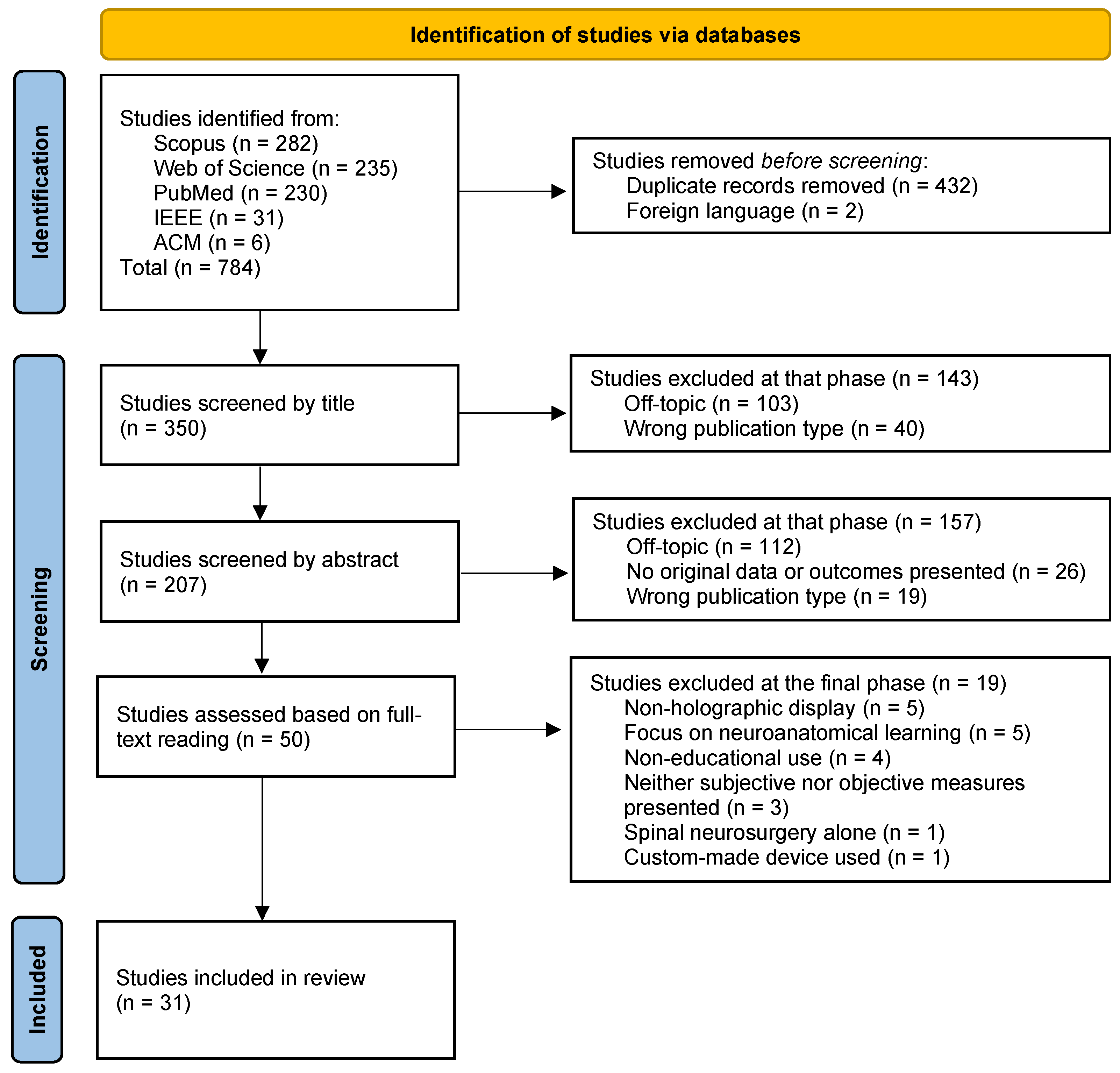

2.8. Study Selection

2.9. Data Extraction

2.10. Data Synthesis and Risk of Bias Assessment

3. Related Works

4. Results

4.1. Characteristics of the Included Studies

4.2. User Performance (UP)

4.3. User Experience (UX)

5. Discussion

5.1. Limitations

5.2. Future Perspective

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

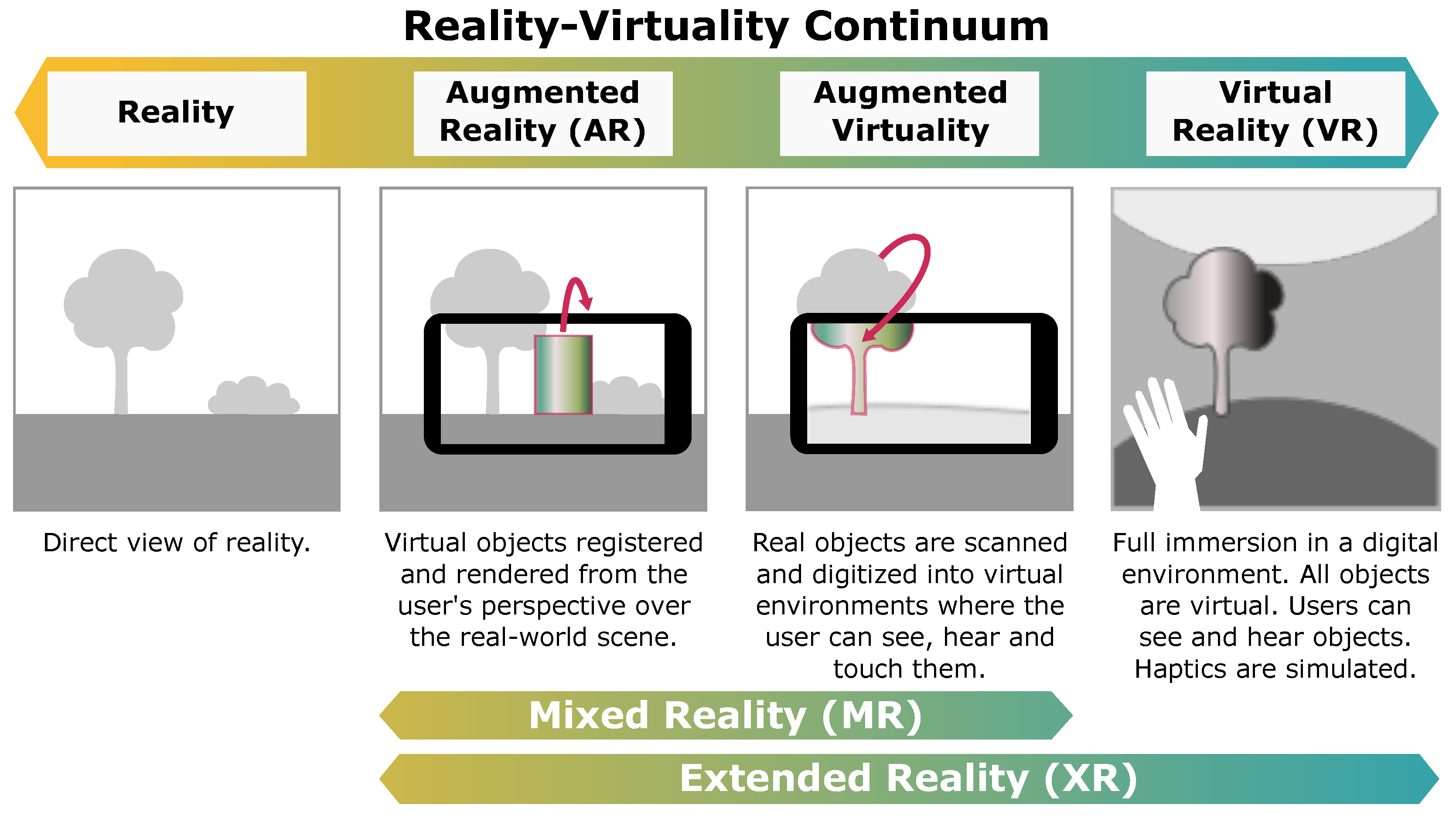

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| AR | Augmented reality |
| AV | Augmented virtuality |
| ETV | Endoscopic third ventriculostomy |
| HMD | Head-mounted display |
| MR | Mixed reality |
| NOS-E | Newcastle–Ottawa Scale-Education |
| OR | Operating room |
| PICO | Population, intervention, comparators, outcome |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PROSPERO | International Prospective Register of Systematic Reviews |
| SUS | System Usability Scale |
| TLX | Task load index |
| UP | User performance |
| UX | User experience |
| VR | Virtual reality |
| XR | Extended reality |

References

1. Kapur, N.; Parand, A.; Soukup, T.; Reader, T.; Sevdalis, N. Aviation and healthcare: A comparative review with implications for patient safety. JRSM Open 2015, 7, 2054270415616548.

2. Rehder, R.; Abd-El-Barr, M.; Hooten, K.; Weinstock, P.; Madsen, J.R.; Cohen, A.R. The Role of Simulation in Neurosurgery. Child’s Nerv. Syst. 2016, 32, 43–54.

3. Gnanakumar, S.; Kostusiak, M.; Budohoski, K.P.; Barone, D.; Pizzuti, V.; Kirollos, R.; Santarius, T.; Trivedi, R. Effectiveness of cadaveric simulation in neurosurgical training: A review of the literature. World Neurosurg. 2018, 118, 88–96.

4. Thiong’o, G.M.; Bernstein, M.; Drake, J.M. 3D printing in neurosurgery education: A review. 3D Print. Med. 2021, 7, S801–S809.

5. Chan, J.; Pangal, D.J.; Cardinal, T.; Kugener, G.; Zhu, Y.; Roshannai, A.; Markarian, N.; Sinha, A.; Anandkumar, A.; Hung, A.; et al. A systematic review of virtual reality for the assessment of technical skills in neurosurgery. Neurosurg. Focus 2021, 51, E15.

6. Bernardo, A. Virtual reality and simulation in neurosurgical training. World Neurosurg. 2017, 106, 1015–1029.

7. Vles, M.; Terng, N.; Zijlstra, K.; Mureau, M.; Corten, E. Virtual and augmented reality for preoperative planning in plastic surgical procedures: A systematic review. J. Plast. Reconstr. Aesthetic Surg. 2020, 73, 1951–1959.

8. Zheng, C.; Li, J.; Zeng, G.; Ye, W.; Sun, J.; Hong, J.; Li, C. Development of a virtual reality preoperative planning system for postlateral endoscopic lumbar discectomy surgery and its clinical application. World Neurosurg. 2019, 123, e1–e8.

9. Lai, M.; Skyrman, S.; Shan, C.; Babic, D.; Homan, R.; Edström, E.; Persson, O.; Burström, G.; Elmi-Terander, A.; Hendriks, B.H.; et al. Fusion of augmented reality imaging with the endoscopic view for endonasal skull base surgery; a novel application for surgical navigation based on intraoperative cone beam computed tomography and optical tracking. PLoS ONE 2020, 15, e0227312.

10. Frisk, H.; Lindqvist, E.; Persson, O.; Weinzierl, J.; Bruetzel, L.K.; Cewe, P.; Burström, G.; Edström, E.; Elmi-Terander, A. Feasibility and Accuracy of Thoracolumbar Pedicle Screw Placement Using an Augmented Reality Head Mounted Device. Sensors 2022, 22, 522.

11. Elmi-Terander, A.; Burström, G.; Nachabé, R.; Fagerlund, M.; Ståhl, F.; Charalampidis, A.; Edström, E.; Gerdhem, P. Augmented reality navigation with intraoperative 3D imaging vs fluoroscopy-assisted free-hand surgery for spine fixation surgery: A matched-control study comparing accuracy. Sci. Rep. 2020, 10, 707.

12. Voinescu, A.; Sui, J.; Stanton Fraser, D. Virtual Reality in Neurorehabilitation: An Umbrella Review of Meta-Analyses. J. Clin. Med. 2021, 10, 1478.

13. Kolbe, L.; Jaywant, A.; Gupta, A.; Vanderlind, W.M.; Jabbour, G. Use of virtual reality in the inpatient rehabilitation of COVID-19 patients. Gen. Hosp. Psychiatry 2021, 71, 76–81.

14. Slond, F.; Liesdek, O.C.; Suyker, W.J.; Weldam, S.W. The use of virtual reality in patient education related to medical somatic treatment: A scoping review. Patient Educ. Couns. 2021.

15. Louis, R.; Cagigas, J.; Brant-Zawadzki, M.; Ricks, M. Impact of neurosurgical consultation with 360-degree virtual reality technology on patient engagement and satisfaction. Neurosurg. Open 2020, 1, okaa004.

16. Perin, A.; Galbiati, T.F.; Ayadi, R.; Gambatesa, E.; Orena, E.F.; Riker, N.I.; Silberberg, H.; Sgubin, D.; Meling, T.R.; DiMeco, F. Informed consent through 3D virtual reality: A randomized clinical trial. Acta Neurochir. 2021, 163, 301–308.

17. Micko, A.; Knopp, K.; Knosp, E.; Wolfsberger, S. Microsurgical performance after sleep interruption: A NeuroTouch simulator study. World Neurosurg. 2017, 106, 92–101.

18. Piromchai, P.; Ioannou, I.; Wijewickrema, S.; Kasemsiri, P.; Lodge, J.; Kennedy, G.; O’Leary, S. Effects of anatomical variation on trainee performance in a virtual reality temporal bone surgery simulator. J. Laryngol. Otol. 2017, 131, S29–S35.

19. Yudkowsky, R.; Luciano, C.; Banerjee, P.; Schwartz, A.; Alaraj, A.; Lemole, G.M., Jr.; Charbel, F.; Smith, K.; Rizzi, S.; Byrne, R.; et al. Practice on an augmented reality/haptic simulator and library of virtual brains improves residents’ ability to perform a ventriculostomy. Simul. Healthc. 2013, 8, 25–31.

20. Salmimaa, M.; Kimmel, J.; Jokela, T.; Eskolin, P.; Järvenpää, T.; Piippo, P.; Müller, K.; Satopää, J. Live delivery of neurosurgical operating theater experience in virtual reality. J. Soc. Inf. Disp. 2018, 26, 98–104.

21. Higginbotham, G. Virtual connections: Improving global neurosurgery through immersive technologies. Front. Surg. 2021, 8, 25.

22. Davis, M.C.; Can, D.D.; Pindrik, J.; Rocque, B.G.; Johnston, J.M. Virtual interactive presence in global surgical education: International collaboration through augmented reality. World Neurosurg. 2016, 86, 103–111.

23. Zhao, J.; Xu, X.; Jiang, H.; Ding, Y. The effectiveness of virtual reality-based technology on anatomy teaching: A meta-analysis of randomized controlled studies. BMC Med. Educ. 2020, 20, 127.

24. Yamazaki, A.; Ito, T.; Sugimoto, M.; Yoshida, S.; Honda, K.; Kawashima, Y.; Fujikawa, T.; Fujii, Y.; Tsutsumi, T. Patient-specific virtual and mixed reality for immersive, experiential anatomy education and for surgical planning in temporal bone surgery. Auris Nasus Larynx 2021, 48, 1081–1091.

25. Chytas, D.; Nikolaou, V.S. Mixed reality for visualization of orthopedic surgical anatomy. World J. Orthop. 2021, 12, 727.

26. Zhang, Z.Y.; Duan, W.C.; Chen, R.K.; Zhang, F.J.; Yu, B.; Zhan, Y.B.; Li, K.; Zhao, H.B.; Sun, T.; Ji, Y.C.; et al. Preliminary application of mxed reality in neurosurgery: Development and evaluation of a new intraoperative procedure. J. Clin. Neurosci. 2019, 67, 234–238.

27. Sawaya, R.; Bugdadi, A.; Azarnoush, H.; Winkler-Schwartz, A.; Alotaibi, F.E.; Bajunaid, K.; AlZhrani, G.A.; Alsideiri, G.; Sabbagh, A.J.; Del Maestro, R.F. Virtual reality tumor resection: The force pyramid approach. Oper. Neurosurg. 2018, 14, 686–696.

28. Sawaya, R.; Alsideiri, G.; Bugdadi, A.; Winkler-Schwartz, A.; Azarnoush, H.; Bajunaid, K.; Sabbagh, A.J.; Del Maestro, R. Development of a Performance Model for Virtual Reality Tumor Resections. J. Neurosurg. 2019, 13, 192–200.

29. Siyar, S.; Azarnoush, H.; Rashidi, S.; Del Maestro, R.F. Tremor assessment during virtual reality brain tumor resection. J. Surg. Educ. 2020, 77, 643–651.

30. Roitberg, B.Z.; Kania, P.; Luciano, C.; Dharmavaram, N.; Banerjee, P. Evaluation of Sensory and Motor Skills in Neurosurgery Applicants Using a Virtual Reality Neurosurgical Simulator: The Sensory-Motor Quotient. J. Surg. Educ. 2015, 72, 1165–1171.

31. Winkler-Schwartz, A.; Bajunaid, K.; Mullah, M.A.; Marwa, I.; Alotaibi, F.E.; Fares, J.; Baggiani, M.; Azarnoush, H.; Al Zharni, G.; Christie, S.; et al. Bimanual psychomotor performance in neurosurgical resident applicants assessed using NeuroTouch, a virtual reality simulator. J. Surg. Educ. 2016, 73, 942–953.

32. Hooten, K.G.; Lister, J.R.; Lombard, G.; Lizdas, D.E.; Lampotang, S.; Rajon, D.A.; Bova, F.; Murad, G.J. Mixed reality ventriculostomy simulation: Experience in neurosurgical residency. Oper. Neurosurg. 2014, 10, 565–576.

33. Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

34. Albert, B.; Tullis, T. Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics; Newnes: Cambridge, MA, USA, 2013.

35. Jean, W.C. Virtual and Augmented Reality in Neurosurgery: The Evolution of its Application and Study Designs. World Neurosurg. 2022, 161, 459–464.

36. Ganju, A.; Aoun, S.G.; Daou, M.R.; El Ahmadieh, T.Y.; Chang, A.; Wang, L.; Batjer, H.H.; Bendok, B.R. The role of simulation in neurosurgical education: A survey of 99 United States neurosurgery program directors. World Neurosurg. 2013, 80, e1–e8.

37. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Int. J. Surg. 2021, 88, 105906.

38. Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A web and mobile app for systematic reviews. Syst. Rev. 2016, 5, 210.

39. Cook, D.A.; Levinson, A.J.; Garside, S.; Dupras, D.M.; Erwin, P.J.; Montori, V.M. Internet-Based Learning in the Health Professions: A Meta-analysis. JAMA 2008, 300, 1181–1196.

40. Kirkman, M.A.; Ahmed, M.; Albert, A.F.; Wilson, M.H.; Nandi, D.; Sevdalis, N. The use of simulation in neurosurgical education and training: A systematic review. J. Neurosurg. 2014, 121, 228–246.

41. Chawla, S.; Devi, S.; Calvachi, P.; Gormley, W.B.; Rueda-Esteban, R. Evaluation of Simulation Models in Neurosurgical Training According to Face, Content, and Construct Validity: A Systematic Review. Acta Neurochir. 2022, 164, 947–966.

42. Dadario, N.B.; Quinoa, T.; Khatri, D.; Boockvar, J.; Langer, D.; D’Amico, R.S. Examining the benefits of extended reality in neurosurgery: A systematic review. J. Clin. Neurosci. 2021, 94, 41–53.

43. Mazur, T.; Mansour, T.R.; Mugge, L.; Medhkour, A. Virtual Reality–Based Simulators for Cranial Tumor Surgery: A Systematic Review. World Neurosurg. 2018, 110, 414–422.

44. Barsom, E.Z.; Graafland, M.; Schijven, M.P. Systematic Review on the Effectiveness of Augmented Reality Applications in Medical Training. Surg. Endosc. 2016, 30, 4174–4183.

45. Innocente, C.; Ulrich, L.; Moos, S.; Vezzetti, E. Augmented Reality: Mapping Methods and Tools for Enhancing the Human Role in Healthcare HMI. Appl. Sci. 2022, 12, 4295.

46. Alaraj, A.; Luciano, C.J.; Bailey, D.P.; Elsenousi, A.; Roitberg, B.Z.; Bernardo, A.; Banerjee, P.P.; Charbel, F.T. Virtual Reality Cerebral Aneurysm Clipping Simulation with Real-Time Haptic Feedback. Oper. Neurosurg. 2015, 11, 52–58.

47. Alotaibi, F.E.; AlZhrani, G.A.; Mullah, M.A.; Sabbagh, A.J.; Azarnoush, H.; Winkler-Schwartz, A.; Del Maestro, R.F. Assessing Bimanual Performance in Brain Tumor Resection with NeuroTouch, a Virtual Reality Simulator. Neurosurgery 2015, 11, 89–98.

48. AlZhrani, G.; Alotaibi, F.; Azarnoush, H.; Winkler-Schwartz, A.; Sabbagh, A.; Bajunaid, K.; Lajoie, S.P.; Del Maestro, R.F. Proficiency Performance Benchmarks for Removal of Simulated Brain Tumors Using a Virtual Reality Simulator NeuroTouch. J. Surg. Educ. 2015, 72, 685–696.

49. Ansaripour, A.; Haddad, A.; Maratos, E.; Zebian, B. P56 Virtual reality simulation in neurosurgical training: A single blinded randomised controlled trial & review of all available training models. J. Neurol. Neurosurg. Psychiatry 2019, 90, e38.

50. Azarnoush, H.; Alzhrani, G.; Winkler-Schwartz, A.; Alotaibi, F.; Gelinas-Phaneuf, N.; Pazos, V.; Choudhury, N.; Fares, J.; DiRaddo, R.; Del Maestro, R.F. Neurosurgical Virtual Reality Simulation Metrics to Assess Psychomotor Skills during Brain Tumor Resection. Int. J. Comput. Assist. Radiol. Surg. 2015, 10, 603–618.

51. Azimi, E.; Molina, C.; Chang, A.; Huang, J.; Huang, C.M.; Kazanzides, P. Interactive training and operation ecosystem for surgical tasks in mixed reality. In OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis; Springer: Berlin/Heidelberg, Germany, 2018; pp. 20–29.

52. Breimer, G.E.; Haji, F.A.; Bodani, V.; Cunningham, M.S.; Lopez-Rios, A.L.; Okrainec, A.; Drake, J.M. Simulation-Based Education for Endoscopic Third Ventriculostomy: A Comparison between Virtual and Physical Training Models. Oper. Neurosurg. 2017, 13, 89–95.

53. Bugdadi, A.; Sawaya, R.; Olwi, D.; Al-Zhrani, G.; Azarnoush, H.; Sabbagh, A.J.; Alsideiri, G.; Bajunaid, K.; Alotaibi, F.E.; Winkler-Schwartz, A.; et al. Automaticity of Force Application during Simulated Brain Tumor Resection: Testing the Fitts and Posner Model. J. Surg. Educ. 2018, 75, 104–115.

54. Bugdadi, A.; Sawaya, R.; Bajunaid, K.; Olwi, D.; Winkler-Schwartz, A.; Ledwos, N.; Marwa, I.; Alsideiri, G.; Sabbagh, A.J.; Alotaibi, F.E.; et al. Is Virtual Reality Surgical Performance Influenced by Force Feedback Device Utilized? J. Surg. Educ. 2019, 76, 262–273.

55. Cutolo, F.; Meola, A.; Carbone, M.; Sinceri, S.; Cagnazzo, F.; Denaro, E.; Esposito, N.; Ferrari, M.; Ferrari, V. A New Head-Mounted Display-Based Augmented Reality System in Neurosurgical Oncology: A Study on Phantom. Comput. Assist. Surg. 2017, 22, 39–53.

56. Gasco, J.; Patel, A.; Luciano, C.; Holbrook, T.; Ortega-Barnett, J.; Kuo, Y.F.; Rizzi, S.; Kania, P.; Banerjee, P.; Roitberg, B.Z. A Novel Virtual Reality Simulation for Hemostasis in a Brain Surgical Cavity: Perceived Utility for Visuomotor Skills in Current and Aspiring Neurosurgery Residents. World Neurosurg. 2013, 80, 732–737.

57. Gelinas-Phaneuf, N.; Choudhury, N.; Al-Habib, A.R.; Cabral, A.; Nadeau, E.; Mora, V.; Pazos, V.; Debergue, P.; DiRaddo, R.; Del Maestro, R.; et al. Assessing Performance in Brain Tumor Resection Using a Novel Virtual Reality Simulator. Int. J. Comput. Assist. Radiol. Surg. 2014, 9, 1–9.

58. Holloway, T.; Lorsch, Z.S.; Chary, M.A.; Sobotka, S.; Moore, M.M.; Costa, A.B.; Del Maestro, R.F.; Bederson, J. Operator Experience Determines Performance in a Simulated Computer-Based Brain Tumor Resection Task. Int. J. Comput. Assist. Radiol. Surg. 2015, 10, 1853–1862.

59. Ledwos, N.; Mirchi, N.; Yilmaz, R.; Winkler-Schwartz, A.; Sawni, A.; Fazlollahi, A.M.; Bissonnette, V.; Bajunaid, K.; Sabbagh, A.J.; Del Maestro, R.F. Assessment of Learning Curves on a Simulated Neurosurgical Task Using Metrics Selected by Artificial Intelligence. J. Neurosurg. 2022, 1, 1–12.

60. Lin, W.; Zhu, Z.; He, B.; Liu, Y.; Hong, W.; Liao, Z. A Novel Virtual Reality Simulation Training System with Haptic Feedback for Improving Lateral Ventricle Puncture Skill. Virtual Real. 2021, 26, 399–411.

61. Patel, A.; Koshy, N.; Ortega-Barnett, J.; Chan, H.C.; Kuo, Y.F.; Luciano, C.; Rizzi, S.; Matulyauskas, M.; Kania, P.; Banerjee, P.; et al. Neurosurgical Tactile Discrimination Training with Haptic-Based Virtual Reality Simulation. Neurol. Res. 2014, 36, 1035–1039.

62. Perin, A.; Gambatesa, E.; Galbiati, T.F.; Fanizzi, C.; Carone, G.; Rui, C.B.; Ayadi, R.; Saladino, A.; Mattei, L.; Sop, F.Y.L.; et al. The “STARS-CASCADE” Study: Virtual Reality Simulation as a New Training Approach in Vascular Neurosurgery. World Neurosurg. 2021, 154, e130–e146.

63. Roh, T.H.; Oh, J.W.; Jang, C.K.; Choi, S.; Kim, E.H.; Hong, C.K.; Kim, S.H. Virtual Dissection of the Real Brain: Integration of Photographic 3D Models into Virtual Reality and Its Effect on Neurosurgical Resident Education. Neurosurg. Focus 2021, 51, E16.

64. Ros, M.; Debien, B.; Cyteval, C.; Molinari, N.; Gatto, F.; Lonjon, N. Applying an Immersive Tutorial in Virtual Reality to Learning a New Technique. Neuro-Chirurgie 2020, 66, 212–218.

65. Schirmer, C.M.; Elder, J.B.; Roitberg, B.; Lobel, D.A. Virtual Reality-Based Simulation Training for Ventriculostomy: An Evidence-Based Approach. Neurosurgery 2013, 73, 66–73.

66. Shakur, S.F.; Luciano, C.J.; Kania, P.; Roitberg, B.Z.; Banerjee, P.P.; Slavin, K.V.; Sorenson, J.; Charbel, F.T.; Alaraj, A. Usefulness of a Virtual Reality Percutaneous Trigeminal Rhizotomy Simulator in Neurosurgical Training. Oper. Neurosurg. 2015, 11, 420–425.

67. Si, W.X.; Liao, X.Y.; Qian, Y.L.; Sun, H.T.; Chen, X.D.; Wang, Q.; Heng, P.A. Assessing Performance of Augmented Reality-Based Neurosurgical Training. Vis. Comput. Ind. Biomed. Art 2019, 2, 6.

68. Teodoro-Vite, S.; Perez-Lomeli, J.S.; Dominguez-Velasco, C.F.; Hernández-Valencia, A.F.; Capurso-Garcia, M.A.; Padilla-Castaneda, M.A. A High-Fidelity Hybrid Virtual Reality Simulator of Aneurysm Clipping Repair with Brain Sylvian Fissure Exploration for Vascular Neurosurgery Training. Simul. Healthc. J. Soc. Simul. Healthc. 2021, 16, 285–294.

69. Thawani, J.P.; Ramayya, A.G.; Abdullah, K.G.; Hudgins, E.; Vaughan, K.; Piazza, M.; Madsen, P.J.; Buch, V.; Grady, M.S. Resident Simulation Training in Endoscopic Endonasal Surgery Utilizing Haptic Feedback Technology. J. Clin. Neurosci. 2016, 34, 112–116.

70. Winkler-Schwartz, A.; Yilmaz, R.; Mirchi, N.; Bissonnette, V.; Ledwos, N.; Siyar, S.; Azarnoush, H.; Karlik, B.; Del Maestro, R. Machine Learning Identification of Surgical and Operative Factors Associated with Surgical Expertise in Virtual Reality Simulation. JAMA Netw. Open 2019, 2, e198363.

71. Winkler-Schwartz, A.; Marwa, I.; Bajunaid, K.; Mullah, M.; Alotaibi, F.E.; Bugdadi, A.; Sawaya, R.; Sabbagh, A.J.; Del Maestro, R. A comparison of visual rating scales and simulated virtual reality metrics in neurosurgical training: A generalizability theory study. World Neurosurg. 2019, 127, e230–e235.

72. Brooke, J. SUS: A “quick and dirty” usability scale. Usability Eval. Ind. 1996, 189, 4–7.

73. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

74. Edmondson, D. Likert scales: A history. In Proceedings of the Conference on Historical Analysis and Research in Marketing, Tampa, FL, USA, 1 May 2005; Volume 12, pp. 127–133.

75. Joshi, A.; Kale, S.; Chandel, S.; Pal, D.K. Likert scale: Explored and explained. Br. J. Appl. Sci. Technol. 2015, 7, 396.

76. Barteit, S.; Lanfermann, L.; Bärnighausen, T.; Neuhann, F.; Beiersmann, C. Augmented, mixed, and virtual reality-based head-mounted devices for medical education: Systematic review. JMIR Serious Games 2021, 9, e29080.

77. Oke, A.E.; Arowoiya, V.A. Critical barriers to augmented reality technology adoption in developing countries: A case study of Nigeria. J. Eng. Des. Technol. 2021.

78. Herrington, J.; Reeves, T.C.; Oliver, R. Immersive learning technologies: Realism and online authentic learning. J. Comput. High. Educ. 2007, 19, 80–99.

79. Barrie, M.; Socha, J.J.; Mansour, L.; Patterson, E.S. Mixed reality in medical education: A narrative literature review. In Proceedings of the International Symposium on Human Factors and Ergonomics in Health Care, Chicago, IL, USA, 24–27 March 2019; SAGE Publications Sage CA: Los Angeles, CA, USA, 2019; Volume 8, pp. 28–32.

80. Hu, H.Z.; Feng, X.B.; Shao, Z.W.; Xie, M.; Xu, S.; Wu, X.H.; Ye, Z.W. Application and prospect of mixed reality technology in medical field. Curr. Med. Sci. 2019, 39, 1–6.

81. Chen, S.C.; Duh, H. Mixed reality in education: Recent developments and future trends. In Proceedings of the 2018 IEEE 18th International Conference on Advanced Learning Technologies (ICALT), Mumbai, India, 9–13 July 2018; pp. 367–371.

| Criterion | Inclusion | Exclusion |
|---|---|---|
| Study type | Empirical studies presenting quantitative data | Narrative or non-empirical studies (reviews, editorials, opinions) |
| Year of publication | 2013–2022 | Before 2013 |
| Language | English | All other languages |
| Population | n/a | n/a |
| Device | Stereoscopic, off-the-shelf displays | Mobile-based |
| Intervention | Procedural skill acquisition in cranial neurosurgery | Spinal neurosurgery, other medical specialties, and other application domains (e.g., patient education, surgical navigation, preoperative planning) |
| Comparator | n/a | n/a |
| Outcome | Performance metrics and/or user experience | n/a |
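The eligibility criteria above can be read as a single screening predicate that a record must satisfy in full. The sketch below is a minimal Python illustration under assumed field names: `Record`, `is_eligible`, and the example titles are hypothetical, the "n/a" rows (population, comparator) are deliberately not encoded, and this is not the authors' actual screening procedure.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One screened record; all field names are illustrative assumptions."""
    title: str
    year: int
    language: str
    empirical_quantitative: bool  # empirical study presenting quantitative data
    stereoscopic_display: bool    # stereoscopic, off-the-shelf display
    mobile_based: bool            # mobile-based device (excluded)
    cranial_skill_training: bool  # procedural skill acquisition in cranial neurosurgery
    reports_up_or_ux: bool        # performance metrics and/or user experience


def is_eligible(r: Record) -> bool:
    """True only if every inclusion criterion holds and no exclusion applies."""
    return (
        r.empirical_quantitative
        and 2013 <= r.year <= 2022
        and r.language == "English"
        and r.stereoscopic_display
        and not r.mobile_based
        and r.cranial_skill_training
        and r.reports_up_or_ux
    )


# Hypothetical records used only to exercise the predicate.
records = [
    Record("Hypothetical VR ventriculostomy trial", 2018, "English",
           True, True, False, True, True),
    Record("Hypothetical spinal AR study", 2020, "English",
           True, True, False, False, True),   # spinal neurosurgery: excluded
    Record("Hypothetical 2012 simulator study", 2012, "English",
           True, True, False, True, True),    # published before 2013: excluded
]
included = [r.title for r in records if is_eligible(r)]
print(included)
```

Encoding each criterion as a separate boolean keeps the exclusion reason traceable per record, which is what a PRISMA-style flow diagram needs when tallying why records were dropped.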

| ID | Country | Population | Domain | Procedure | Device | XR Type | Haptics | RoB |
|---|---|---|---|---|---|---|---|---|
| Alaraj 2015 [46] | USA | 17 R | Practice | Aneurysm clipping | ImmersiveTouch † | VR | YES | 1 |
| Alotaibi 2015 [47] | Canada | 6 JR, 6 SR, 6 E | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 3 |
| AlZhrani 2015 [48] | Canada | 9 JR, 7 SR, 17 E | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 4 |
| Ansaripour 2019 [49] | UK | 6 MS, 12 R, 4 E | Practice | Microsurgical tasks | NeuroVR † | VR | N/A | 4 |
| Azarnoush 2015 [50] | Canada | 1 JR, 1 E | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 3 |
| Azimi 2018 [51] | USA | 10 NP | Learning | Ventriculostomy | HoloLens ‡ | AR | NO | 2 |
| Breimer 2017 [52] | Canada | 23 R, 3 F | Practice | ETV | NeuroVR † | VR | YES | 2 |
| Bugdadi 2018 [53] | Canada | 10 SR, 8 JR | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 3 |
| Bugdadi 2019 [54] | Canada | 6 E | Practice | Subpial tumor resection | NeuroVR † | VR | YES | 2 |
| Cutolo 2017 [55] | Italy | 3 E | Practice | Surgical access, tumor detection | Sony HMZ-T2 ‡ | AR | NO | 2 |
| Gasco 2013 [56] | USA | 40 MS, 13 R | Learning | Bipolar hemostasis | ImmersiveTouch † | VR | YES | 2 |
| Gelinas-Phaneuf 2014 [57] | Canada | 10 MS, 18 JR, 44 SR | Skill assessment | Meningioma resection | NeuroVR † | VR | YES | 5 |
| Holloway 2015 [58] | USA | 71 MS, 6 JR, 6 SR | Learning | GBM resection | NeuroVR † | VR | YES | 3 |
| Ledwos 2022 [59] | Canada | 12 MS, 10 JR, 10 SR, 4 F, 13 E | Practice | Subpial tumor resection | NeuroVR † | VR | YES | 4 |
| Lin 2021 [60] | China | 30 I | Learning | Lateral ventricle puncture | HTC VIVE Pro ‡ | VR | YES | 5 |
| Patel 2014 [61] | USA | 20 MS | Learning | Detection of objects in brain cavity | ImmersiveTouch † | VR | YES | 5 |
| Perin 2021 [62] | Italy | 2 JR, 1 F, 4 E | Practice | Aneurysm clipping | Surgical Theater ‡ | VR | YES | 4 |
| Roh 2021 [63] | South Korea | 31 R | Learning | Cranial neurosurgical procedures of unspecified type | Oculus Quest 2 ‡ | AV | NO | 2 |
| Roitberg 2015 [30] | USA | 64 MS, 10 MS, 4 JR | Skill assessment | Cauterization and detection of objects in brain cavity | ImmersiveTouch † | VR | YES | 3 |
| Ros 2020 [64] | France | 1st exp. 176 MS, 2nd exp. 80 MS | Learning | EVD placement | Samsung Gear VR ‡ | VR | NO | 5 |
| Sawaya 2018 [27] | Canada | 14 R, 6 E | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 3 |
| Sawaya 2019 [28] | Canada | 6 MS, 6 JR, 6 SR, 6 E | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 4 |
| Schirmer 2013 [65] | USA | 10 R | Learning | Ventriculostomy | ImmersiveTouch † | VR | YES | 4 |
| Shakur 2015 [66] | USA | 44 JR, 27 SR | Skill assessment | Trigeminal rhizotomy | ImmersiveTouch † | VR | YES | 3 |
| Si 2019 [67] | China | 10 NP | Learning | Tumor resection | HoloLens ‡ | AR | YES | 2 |
| Teodoro-Vite 2021 [68] | Mexico | 6 R, 6 E | Practice | Aneurysm clipping | Unspecified ‡ | VR | YES | 3 |
| Thawani 2016 [69] | USA | 6 JR | Practice | Endoscopic surgery | NeuroVR † | VR | YES | 5 |
| Winkler-Schwartz 2016 [31] | Canada | 16 MS | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 3 |
| Winkler-Schwartz 2019 [70] | Canada | 12 MS, 10 JR, 10 SR, 4 F, 14 E | Skill assessment | Subpial tumor resection | NeuroVR † | VR | YES | 2 |
| Winkler-Schwartz 2019 [71] | Canada | 16 MS | Skill assessment | Tumor resection | NeuroVR † | VR | YES | 4 |
| Yudkowsky 2013 [19] | USA | 11 JR, 5 SR | Practice | Ventriculostomy | ImmersiveTouch † | AV | YES | 3 |
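The research question on geographical distribution, and the split by XR type, can be checked directly against the study-characteristics table. The sketch below transcribes only the Country and XR Type columns and tallies them with `collections.Counter`; the list name `STUDIES` is hypothetical, and the `[70]`/`[71]` suffixes are kept only so the two Winkler-Schwartz 2019 rows stay distinct.

```python
from collections import Counter

# (study, country, XR type), transcribed from the study-characteristics table.
STUDIES = [
    ("Alaraj 2015", "USA", "VR"),
    ("Alotaibi 2015", "Canada", "VR"),
    ("AlZhrani 2015", "Canada", "VR"),
    ("Ansaripour 2019", "UK", "VR"),
    ("Azarnoush 2015", "Canada", "VR"),
    ("Azimi 2018", "USA", "AR"),
    ("Breimer 2017", "Canada", "VR"),
    ("Bugdadi 2018", "Canada", "VR"),
    ("Bugdadi 2019", "Canada", "VR"),
    ("Cutolo 2017", "Italy", "AR"),
    ("Gasco 2013", "USA", "VR"),
    ("Gelinas-Phaneuf 2014", "Canada", "VR"),
    ("Holloway 2015", "USA", "VR"),
    ("Ledwos 2022", "Canada", "VR"),
    ("Lin 2021", "China", "VR"),
    ("Patel 2014", "USA", "VR"),
    ("Perin 2021", "Italy", "VR"),
    ("Roh 2021", "South Korea", "AV"),
    ("Roitberg 2015", "USA", "VR"),
    ("Ros 2020", "France", "VR"),
    ("Sawaya 2018", "Canada", "VR"),
    ("Sawaya 2019", "Canada", "VR"),
    ("Schirmer 2013", "USA", "VR"),
    ("Shakur 2015", "USA", "VR"),
    ("Si 2019", "China", "AR"),
    ("Teodoro-Vite 2021", "Mexico", "VR"),
    ("Thawani 2016", "USA", "VR"),
    ("Winkler-Schwartz 2016", "Canada", "VR"),
    ("Winkler-Schwartz 2019 [70]", "Canada", "VR"),
    ("Winkler-Schwartz 2019 [71]", "Canada", "VR"),
    ("Yudkowsky 2013", "USA", "AV"),
]

by_country = Counter(country for _, country, _ in STUDIES)
by_xr = Counter(xr for _, _, xr in STUDIES)

# Print each country with its absolute count and share of all studies.
for country, n in by_country.most_common():
    print(f"{country:12s} {n:2d} ({n / len(STUDIES):.0%})")
print(dict(by_xr))
```

On this transcription, Canada (13) and the USA (10) account for 23 of the 31 studies, and 26 of the 31 use VR, with 3 AR and 2 AV studies.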

| Study ID | Outcome | vs. No-XR | Longitudinal | Training Level Comparison |
|---|---|---|---|---|
| Alotaibi 2015 | UP and UX | NO | NO | YES |
| AlZhrani 2015 | UP | NO | NO | YES |
| Ansaripour 2019 | UP | NO | YES | NO |
| Azarnoush 2015 | UP | NO | NO | YES |
| Azimi 2018 | UP and UX | NO | YES | YES |
| Bugdadi 2018 | UP | NO | NO | YES |
| Bugdadi 2019 | UP and UX | NO | NO | NO |
| Cutolo 2017 | UP | NO | YES | NO |
| Gelinas-Phaneuf 2014 | UP and UX | NO | NO | YES |
| Holloway 2015 * | UP | YES | NO | YES |
| Ledwos 2022 * | UP | YES | NO | YES |
| Lin 2021 | UP and UX | NO | YES | NO |
| Patel 2014 | UP | NO | YES | NO |
| Perin 2021 | UP and UX | NO | YES | NO |
| Roitberg 2015 | UP | NO | NO | YES |
| Ros 2020 | UP | YES | YES | NO |
| Sawaya 2018 | UP | NO | NO | YES |
| Sawaya 2019 | UP | NO | NO | YES |
| Schirmer 2013 * | UP | YES | NO | YES |
| Shakur 2015 | UP | NO | NO | YES |
| Teodoro-Vite 2021 | UP and UX | NO | NO | YES |
| Thawani 2016 | UP | YES | YES | NO |
| Winkler-Schwartz 2016 | UP | NO | NO | YES |
| Winkler-Schwartz 2019 | UP | NO | NO | NO |
| Winkler-Schwartz 2019 | UP | NO | NO | NO |
| Yudkowsky 2013 * | UP and UX | YES | NO | YES |
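The study-design columns above are binary, so their frequencies can be counted directly. The sketch below encodes each YES/NO as a boolean, drops the asterisks, keeps the two Winkler-Schwartz 2019 rows as separate entries, and reports how many studies use each design feature and which use none; `ROWS`, `FEATURES`, `feature_counts`, and `no_design_feature` are hypothetical names.

```python
# (study, outcome, vs. no-XR, longitudinal, training-level comparison),
# transcribed from the study-design table (YES -> True, NO -> False).
ROWS = [
    ("Alotaibi 2015", "UP and UX", False, False, True),
    ("AlZhrani 2015", "UP", False, False, True),
    ("Ansaripour 2019", "UP", False, True, False),
    ("Azarnoush 2015", "UP", False, False, True),
    ("Azimi 2018", "UP and UX", False, True, True),
    ("Bugdadi 2018", "UP", False, False, True),
    ("Bugdadi 2019", "UP and UX", False, False, False),
    ("Cutolo 2017", "UP", False, True, False),
    ("Gelinas-Phaneuf 2014", "UP and UX", False, False, True),
    ("Holloway 2015", "UP", True, False, True),
    ("Ledwos 2022", "UP", True, False, True),
    ("Lin 2021", "UP and UX", False, True, False),
    ("Patel 2014", "UP", False, True, False),
    ("Perin 2021", "UP and UX", False, True, False),
    ("Roitberg 2015", "UP", False, False, True),
    ("Ros 2020", "UP", True, True, False),
    ("Sawaya 2018", "UP", False, False, True),
    ("Sawaya 2019", "UP", False, False, True),
    ("Schirmer 2013", "UP", True, False, True),
    ("Shakur 2015", "UP", False, False, True),
    ("Teodoro-Vite 2021", "UP and UX", False, False, True),
    ("Thawani 2016", "UP", True, True, False),
    ("Winkler-Schwartz 2016", "UP", False, False, True),
    ("Winkler-Schwartz 2019", "UP", False, False, False),
    ("Winkler-Schwartz 2019", "UP", False, False, False),
    ("Yudkowsky 2013", "UP and UX", True, False, True),
]

# Column index of each binary design feature within a row.
FEATURES = {"vs. No-XR": 2, "Longitudinal": 3, "Training level comparison": 4}


def feature_counts(rows):
    """Number of studies with each design feature (True counts as 1)."""
    return {name: sum(row[idx] for row in rows) for name, idx in FEATURES.items()}


def no_design_feature(rows):
    """Studies that report none of the three design features."""
    return [row[0] for row in rows if not any(row[idx] for idx in FEATURES.values())]


print(feature_counts(ROWS))
print(no_design_feature(ROWS))
```

On this transcription, 6 of the 26 studies compare against a no-XR condition, 8 are longitudinal, 16 compare training levels, 3 report none of these features, and 8 report both UP and UX.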

| Study ID | Outcome | Usefulness | Realism | Questionnaire items |
|---|---|---|---|---|
| Alaraj 2015 | UX | YES | YES | Binary questions + Likert scales |
| Alotaibi 2015 | UP and UX | YES | YES | Likert scales |
| Azimi 2018 | UP and UX | NO | NO | Likert scales |
| Breimer 2017 | UX | NO | YES | Likert scales + open comments |
| Bugdadi 2019 | UP and UX | NO | NO | Likert scales |
| Gasco 2013 | UX | YES | NO | Binary questions + Likert scales |
| Gelinas-Phaneuf 2014 | UP and UX | YES | YES | Likert scales + open comments |
| Lin 2021 | UP and UX | YES | NO | Binary questions + Likert scales + open comments |
| Perin 2021 | UP and UX | YES | YES | Binary questions + Likert scales + open comments |
| Roh 2021 | UX | YES | YES | Likert scales + open comments |
| Si 2019 | UX | YES | YES | Likert scales |
| Teodoro-Vite 2021 | UP and UX | NO | YES | Likert scales |
| Yudkowsky 2013 | UP and UX | YES | YES | Likert scales + open comments |
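Every study in the table above collected UX data through Likert-scale items. Two standardized instruments in the reference list, the SUS [72] and the NASA-TLX [73], have fixed scoring rules, which the sketch below implements: the standard SUS conversion of ten 1–5 responses to a 0–100 score, and the unweighted ("raw") TLX, which averages the six 0–100 subscale ratings. The weighted TLX variant, which derives subscale weights from 15 pairwise comparisons, is not shown. Function names are hypothetical, and this is not the analysis code of any included study.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """System Usability Scale score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects ten responses on a 1-5 scale")
    total = 0
    for i, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded: contribute (r - 1).
        # Even-numbered items are negatively worded: contribute (5 - r).
        total += (r - 1) if i % 2 == 1 else (5 - r)
    # The summed 0-40 contributions are rescaled to 0-100.
    return total * 2.5


TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: dict[str, float]) -> float:
    """Unweighted ('raw') NASA-TLX: mean of the six 0-100 subscale ratings."""
    missing = set(TLX_SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    return mean(ratings[s] for s in TLX_SUBSCALES)


print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2]))  # 80.0
```

Because SUS alternates positively and negatively worded items, reversing the even items before summing is essential; averaging raw responses instead would systematically pull scores toward the midpoint.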

| Technology | Advantages | Disadvantages |
|---|---|---|
| Fixed monitors | Better precision | Expensive |
| | Easier registration | Limited motion range |
| | More control over experiments | Not immersive |
| HMDs | Relatively affordable | Poor research coverage |
| | Enables AR | Calibration required |
| | 3 degrees of freedom | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Iop, A.; El-Hajj, V.G.; Gharios, M.; de Giorgio, A.; Monetti, F.M.; Edström, E.; Elmi-Terander, A.; Romero, M. Extended Reality in Neurosurgical Education: A Systematic Review. Sensors 2022, 22, 6067. https://doi.org/10.3390/s22166067
