Abstract

The development of synthetic biology has enabled massive progress in biotechnology and in approaching research questions from a brand-new perspective. In particular, the design and study of gene regulatory networks in vitro, in vivo, and in silico have played an increasingly indispensable role in understanding and controlling biological phenomena. Among them, it is of great interest to understand how associative learning is formed at the molecular circuit level. Mathematical models are increasingly used to predict the behaviours of molecular circuits. Fernando’s model, which is one of the first works in this line of research using the Hill equation, attempted to design a synthetic circuit that mimics Hebbian learning in a neural network architecture. In this article, we carry out indepth computational analysis of the model and demonstrate that the reinforcement effect can be achieved by choosing the proper parameter values. We also construct a novel circuit that can demonstrate forced dissociation, which was not observed in Fernando’s model. Our work can be readily used as reference for synthetic biologists who consider implementing circuits of this kind in biological systems.

1. Introduction

Synthetic biology is an emerging field that involves re-engineering existing biological systems or creating new ones that may solve real-world problems in medicine, agriculture, etc. [1,2,3,4,5,6,7,8,9]. Over the past few decades, synthetic biology has witnessed a rapid revolution in the biotechnology industry, and opened up enormous potential for next-generation research in biology due to the increasingly tremendous power of genetic engineering technology, and ever decreasing cost of synthesis and sequencing [10,11,12,13,14,15,16,17]. Particularly, increasing attention has been drawn to designing and testing synthetic biological circuits in vitro, in vivo and in silico in an attempt to better understand bioartificial intelligence at the cellular and molecular levels [18,19,20,21,22,23]. These artificial circuits can, therefore, function as fundamental units to modify existing cellular behaviours, and to perform a wide range of tasks of our own interest in programmable organisms [24,25,26,27,28]. Numerous synthetic circuits were developed for associative learning, decision making, and oscillators, and a brief summary is exhibited in Table 1 [29,30,31,32,33,34,35,36,37]. With the growing collaboration between theorists and experimentalists in almost every discipline, we also noticed a trend in synthetic biology that mathematical models are frequently used to acquire insight, inform troubleshooting, and perform predictions [38,39,40,41].

Associative learning occurs in many aspects of our life, and it is regarded as the basis of our understanding of other forms of behaviours and cognition in human and nonhuman animals [42,43,44,45,46]. The most classical experiment on associative learning is Pavlov’s dog, in which the dog associated the ring of a bell with the smell of food [47,48]. The dog learned to associate the conditioned stimulus (bell’s ring) with the unconditioned stimulus (smell), such that next time, in the presence of the bell’s ring alone, the dog knew that the food would be served soon, and the learned response (the saliva from its mouth) was observed. The historical viewpoint is that the mammalian nervous system plays a vital role in associative learning through neuronal signaling and reconfiguration [49,50,51,52].

Table 1.

Types of synthetic circuits.

Table 1.

Types of synthetic circuits.

| Associative Learning | Decision Making | Oscillator |

|---|---|---|

| Fernando et al., 2009 [53] | Nene et al., 2012 [30] | Stricker et al., 2008 [36] |

| Nesbeth et al., 2016 [18] | Filicheva et al., 2016 [31] | Tigges et al., 2009 [37] |

| Macia et al., 2017 [34] | Abrego and Zaikin, 2017 [32] | Borg et al., 2022 [35] |

However, some studies revealed the possibility that non-neural agents may also organise in a similar fashion [34,54,55]. Naturally, molecular circuits may display similar behaviours as molecular reactions form the building block of cellular activities. As a result, the design and investigation of molecular interactions that manifest associative learning have become active research topics. Although logic gates have been widely adopted in synthetic biology for emulating diverse biological behaviours [56,57,58,59,60], in this article, the focus is confined to the continuous models constructed by the Hill equation [61,62], since continuous models tend to generate more accurate results and facilitate the comprehension of fine details of the system. One of the first models is Fernando’s model, where the authors designed a genetic circuit that mimicked Hebbian learning in a neural network architecture [53]. That work stands as a well-organised interdisciplinary article in which a mathematical model was developed, and the biotechnological approach that can be implemented in Escherichia coli is thoroughly discussed. Nonetheless, because of its interdisciplinary nature, the work only demonstrates the fact that learning can be formed after conditioning, but fails to investigate other, more advanced behaviours, such as the reinforcement effect and forced dissociation. More specifically, it is natural to assume that the learned response becomes stronger with increasing times of conditioning. For illustrative brevity, we name the phenomenon reinforcement effect. It is also reasonable to suppose that the response becomes weaker with the repeated cuing of conditioned stimulus (with no unconditioned stimulus taking place at the same time) shortly after the formation of conditioning, and we call it forced dissociation. To the best of our knowledge, neither of them has been formally discussed in the previous literature. The potential importance of these two behaviours can be explained by two aspects. On the one hand, in Pavlov’s dog experiment, the former would be equivalent to the scenario that the repeated conditioning of the bell and the food would reinforce the dog’s belief that the bell’s ring is a reminder of food availability; the latter would be that the repeated bell’s ring alone shortly after the conditioning would stop the dog reckoning that the bell is related to the food’s availability. On the other hand, these properties may provide experimentalists with more flexibility over the control of some biological systems, as synthetic circuits are widely used to regulate them [4,6,63,64]. We show that Fernando’s model is able to manifest the reinforcement effect by choosing the proper parameters, but unable to manifest forced dissociation. This motivated us to design a new circuit that possesses the potential to display forced dissociation. The new circuit also involves fewer proteins and does not contain any feedback loop, which can potentially reduce the wiring complexity in practical implementation. In the meantime, we also study the robustness of respective models to the Hill coefficients, as this instructs experimentalists on the type of polymers that can be used to implement the circuit.

2. Models

2.1. Fernando’s Model

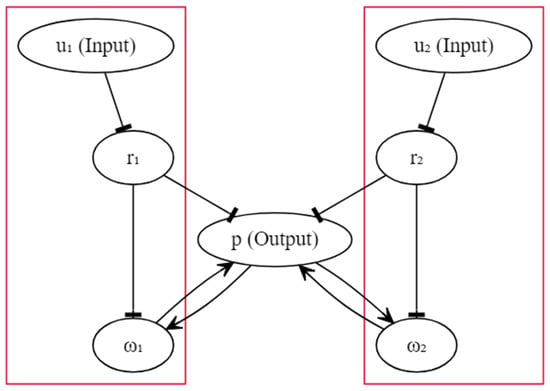

The circuit diagram of Fernando’s model is shown in Figure 1, and we assumed that the circuit could be implemented in a programmable cell. Unlike the schematic diagram given in [53], here we omit genes for illustrative simplicity. In the diagram, each oval box denotes a particular protein. The activation was drawn with an arrow, and the inhibition was drawn with a hammerhead. Except for inhibitions and , where the input molecules were directly bound to the repressors, all other activations and inhibitions were realised by transcription and translation. For instance, repressor inhibits the transcription of a particular gene, which guides the manufacture of molecule . The upstream gene of protein p has two available operator sites, one for , and another for , . A more detailed explanation can be found in [53]. Fernando’s model is characterised by the system as follows ():

Figure 1.

The schematic circuit of Fernando’s model.

In the equations above, and represent the respective concentrations of the unconditioned and conditioned stimuli, and they are given to the cell in a transient time window. and represent the respective concentrations of the weight molecules. and represent the respective concentrations of the repressor molecules. The concentration of the response molecule is denoted by p. , and denote the respective Hill constants for molecule , r and p that measure the concentrations of the transcription factors required for half occupancy. R denotes the repressor concentration in the absence of molecule u. a and b are Hill coefficients that measure the cooperativity of the respective transcriptional factors. In [53], the authors used and ; however, in this work, we study the impact of varying integer values of a and b on qualitative behaviours. denotes the basal grow rate, and we assumed that it was only nonzero for . v and denote the growth and degradation rate parameter, respectively, and the subscripts are used to signify the source of contribution. The architecture shows that the genetic circuit was structurally symmetric, and the left and the right halves were independent. The association was triggered by the feedback of response molecule p, and the inspiration came from Hebbian learning, which dictates information exchange between neurons [65].

In order for an association to be formed, we can simply render the concentration of the molecule abundant, and molecule insignificant before the start of the experiment. When only molecule was given to the cell, it was bound to repressor molecule and reduced the concentration of molecule . Therefore, the inhibition of the transcription with respect to the genes controlled by molecule was lifted. Eventually, sufficient molecule activated the transcription with respect to the gene associated with response molecule p and promoted the production of p. Conversely, when only molecule was given to the cell, we could not observe abundant molecule p due to the shortage of availability. However, at the time when molecule was paired with molecule , the production of molecule p (triggered by ) elevated the concentration of molecule because of the feedback loop, so that the next time, even when only molecule was present, there already existed a sufficient molecule for the production of p, which implies that the association was formed.

In order to analyse a system of differential equations, one often converts the system to a dimensionless scale as a first step. One generally reduces the volume of parameters and removes physical units from the system, which facilitates mathematical investigations and renders the model more flexible for experimentalists who wish to implement the system in vivo or in vitro, as the units are not specified. By using scaling , , , , , the dimensionless model becomes (overlines were dropped for simplicity):

where , , , , .

Now, we try to intuitively interpret whether the reinforcement effect can be realised by Fernando’s model or not. Suppose we carry out the conditioning twice in order to guarantee that the second learned response was more abundant than the first, the simplest way is to ensure that the initial concentration of is small, and the growth parameter is not too large, so that we could anticipate p to keep growing with the repeated conditioning according to Equation (2). Additionally, we needed to select a relatively small to guarantee that the memory that was reflected by the weight molecules did not disappear too rapidly. Next, we investigate how the two stimuli dissociate. Intuitively, in light of the design of Fernando’s model, the disappearance of the learned response was dictated by the time elapse. This is due to the fact that promotes the production of p, such that the response eventually disappears only if falls to 0. Additionally, the learned response does not attenuate if the time interval between the two successive stimuli is insufficient relative to the decay rate. In all, the dissociation is autonomous and is not dictated by the repeated cuing of the conditioned stimulus (alone). This can be circumvented by a different design, which is introduced next.

2.2. A Model with Forced Dissociation

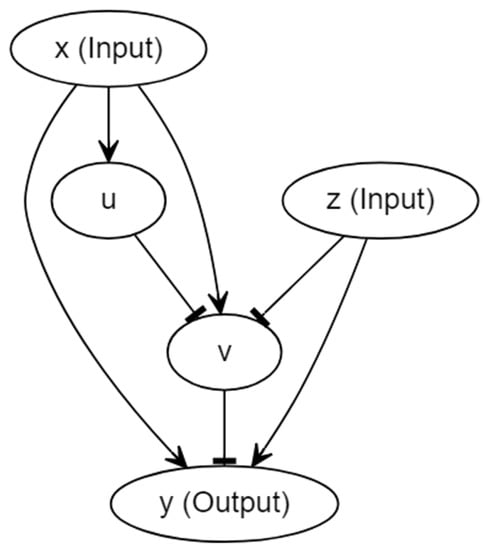

The circuit for the model proposed here was inspired by [18] and is shown in Figure 2. In the diagram, each oval box denotes a particular protein molecule, and we again omitted the genes for the illustrative brevity. More specifically, input x initiates the transcription of a particular gene which guides the manufacture of molecule u; the translation of molecule v is controlled by another gene, the expression of which is dictated by x, u and z together. Similarly, y is controlled by a third gene, the expression of which is dictated by x, v and z. The activation was drawn with an arrow, and the inhibition was drawn with a hammerhead. Input x plays the role of the conditioned stimulus, input z plays the role of the unconditioned stimulus, and y represents the response. However, the discrimination between the conditioned and unconditioned stimuli was downplayed in some sense; we explain this in the discussion. The design of this new architecture comes with at least two purposes. First, we intended to construct a simple circuit of which the mechanism was completely different from that of Fernando’s model, aiming for the components of the circuit to be more interactive with each other, and the association not to be reliant upon the participation of the feedback loops. These may lift a few restrictions on the synthetic implementation. Second, given that Fernando’s model is not capable of demonstrating forced dissociation, we hope to build a model that could successfully dissociate the two stimuli by repeating the cuing of the conditioned stimulus alone right after the conditioning. To fulfil the latter requirement, instead of placing a molecule ( in Fernando’s model) that promoted the transcription of the response protein molecule, we could actually consider introducing an inhibitor upstream of the response molecule. In this way, the consistent input of conditioned stimulus alone was expected to reduce the amount of the response molecule, so long as the stimulus promoted the expression of the inhibitor after the conditioning of the conditioned and unconditioned stimuli. This explains why we introduce the pathway in Figure 2. Regarding the other parts of the circuit, and guarantee that input z can always activate output y, ensures that there can exist a sufficient learned response upon the formation of associative learning, and u functions as a moderator, speeding up the consumption of v that renders the reinforcement effect more likely to occur. Here, we assumed that the Hill coefficients for all molecules were identical, and they are denoted by a.

Figure 2.

The schematic circuit of the model with forced dissociation.

By employing the Hill equation, the system can be described with the following equations:

Similarly to Fernando’s model, , , , and denote the Hill constants for molecules x, z, v, and u, respectively. The production and degradation rates are denoted by and , where the subscripts are used to signify the source of contribution. Then, we used a similar scaling approach to render the system dimensionless. The dimensionless system is shown below:

One of the drawbacks of this circuit is that the model is not as heuristic as Fernando’s model. Indeed, Fernando’s model borrows the architecture of Hebbian learning, whereas we built our model from scratch, tailored for the properties that we wanted to achieve. Intuitively, in order for the reinforcement effect to occur, we anticipated that, by properly choosing the parameter values, the presence of x alone could significantly elevate the amount of v, and the conditioning (whenever both x and z are present) could speed up the consumption of v. This ensured that the second learned response is more abundant than the first, and the response to the conditioned stimulus alone was simultaneously less abundant than that to the unconditioned stimulus. When it comes to forced dissociation, as we discussed previously, the repeated cuing of input x alone after the conditioning could elevate the concentration of v, which subsequently reduces output y as a result of . The numerical result is given in the next section.

Before closing this section, we briefly mention a potential adjustment to the current circuit that could simplify the system given in Equation (4). Our existing scheme allows for x, v, and z to bind at a single operator site. In fact, we can adjust the output part in a way such that z exploits operator sites that only restrict to z itself, which render it looking somehow analogous to the output part of Fernando’s model, in which the left half and the right half are unrelated. The dimensionless model arising from the adjustment can then be reduced to:

The adjustment provides an alternative with a simpler mathematical formulation (but likely with more biological complexity) for readers who wish to implement our circuit. We later demonstrate that this adjusted model can also display the same qualitative behaviours.

3. Results

The numerical simulations were conducted in MATLAB R2020b. As the ODE systems are too complex to be analysed in terms of the numerical stability [66,67], we first used the Runge–Kutta fourth-order method [68] to obtain a benchmark result for the models; for the purpose of efficiency, we used the forward Euler method with time step to carry out the entire analysis. We gradually reduced the time step from , and the result remained unchanged, which demonstrates that the numerical method was stable in this case.

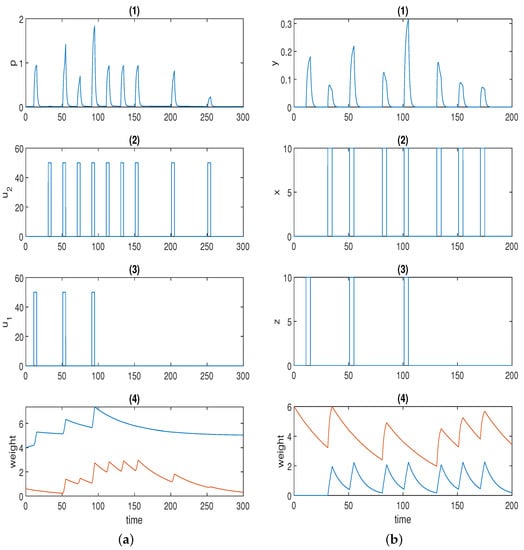

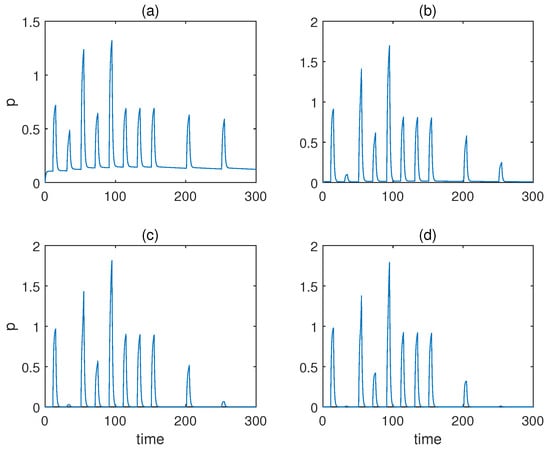

Figure 3a displays one simulation result for Fernando’s model (Equation (2)), and the parameter values used in the simulation are listed in Table 2. Here, we used the Hill coefficients recommended in [53], which are and .

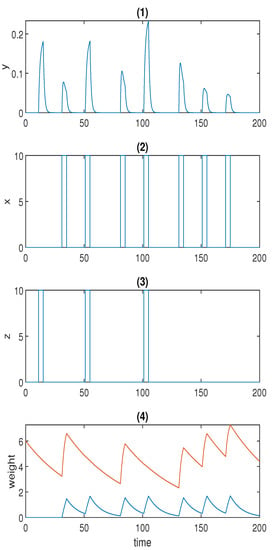

Figure 3.

(a) Time series for Fernando’s model: (1) response molecule p; (2), (3) conditioned stimulus and unconditioned stimulus ; (4) weight molecule (blue) and (red); . (b) Time series for the model with forced dissociation (1) response molecule y; (2), (3) conditioned stimulus x and unconditioned stimulus z; (4) weight molecule u (blue) and v (red); a = 2, αyx = 2, αyz = 4, αxyz = 4, αux = 0.6, αvx = 1.5, βu = 0.1, βv = 0.02. In (1), the first spike was stimulated by the unconditioned stimulus, and the second spike was triggered by the conditioned stimulus; the first and second conditionings were formed at the third and fifth spikes, respectively; the first and the second learned responses were reflected by the fourth and the sixth spikes; the remaining spikes demonstrate whether the forced dissociation could be realised or not.

Table 2.

Parameter values used in the simulation for Fernando’s model (Equation (2)).

The first spike in p was stimulated by unconditioned stimulus . The second (small) spike in response p was triggered by conditioned stimulus . Of course, response p could be adjusted to 0 only in the presence of by setting the initial concentration of to 0 just as in the original paper. Here, we highlight the fact that various levels of the conditioned response are available to be chosen. The first conditioning was formed at the third spike, and the second conditioning was formed at the fifth spike in p when both and were present. The fourth and the sixth spikes in p represent the first and the second learned responses, respectively, when solely is present. The learned response was reinforced after repeated conditioning. Next, we move on to the discussion of how the two stimuli dissociate, namely, how the learned response is attenuated in the presence of consecutive conditioned stimuli alone after the formation of conditioning. The conjecture in the previous section was validated by the last four spikes in response p in Figure 3a. As is apparent, the sixth, seventh, and eighth spikes were of the same amplitude, as the time intervals were not wide enough. Conversely, the response started to decrease (shown by the last two spikes) when the time interval was further widened. This could be deemed to be a limitation for the model because, in some applications (e.g., immune inflammation), we may hope to force the stimuli to dissociate by the repeated cuing of the conditioned stimulus alone in a short time window after the formation of associative learning, which is one of the motivations for our novel design.

Furthermore, we study whether the qualitative behaviours of associative learning that we previously introduced were preserved or not apart from using the Hill coefficients recommended in [53]. The values for the other parameters remained the same, as shown in Table 2.

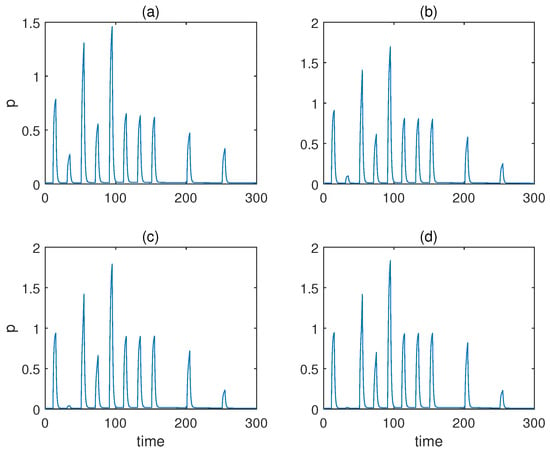

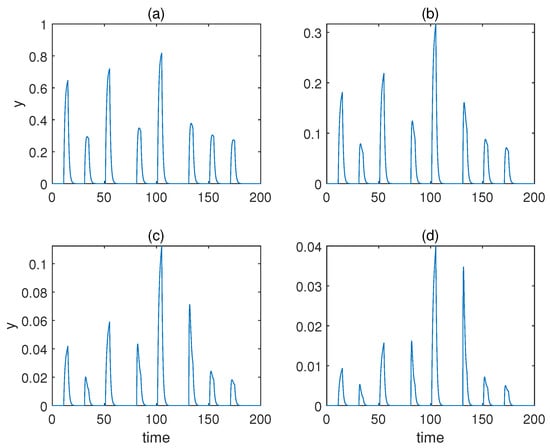

First, we fixed and altered the Hill coefficient for the weight molecules from to . The respective responses of molecule p are displayed in Figure 4. As is obvious from the figure, the qualitative behaviours barely changed irrespective of the value of a, apart from the fact gave rise to a relatively notable response when only conditioned stimulus was present prior to conditioning. However, the result of could still be classified as a valid associative learning in broad terms, as the response triggered by the conditioned stimulus alone was more significant after conditioning than before.

Figure 4.

The concentration of molecule p under various Hill coefficients a when the value of b is fixed for Fernando’s model. (a–d) Results when a = 1, 2, 3, 4. b = 2, α = 1, β = 0.8, θ = 0.02, τ = 0.1, S = 10, respectively.

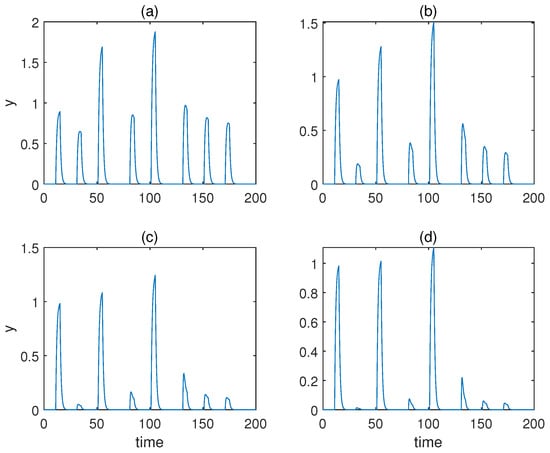

Then, we studied the case when , and we altered a from to . The respective responses of molecule p are displayed in Figure 5, which shows that only gave rise to undesirable behaviours, as the concentration of response p never came down to 0. This is because leads to a large transient growth rate of the weight for conditioned stimulus .

Figure 5.

The concentration of molecule p under various Hill coefficients when for Fernando’s model. (a–d) Results when a = 1, 2, 3, 4. α = 1, β = 0.8, θ = 0.02, τ = 0.1, S = 10, respectively.

In all, Fernando’s model is robust to the variation in Hill coefficients even without exploring the other parameters. It may offer more flexibility to synthetic biologists, since two dimers are not required to be bound cooperatively for weight molecules and . As we show in Figure 4, even and could produce desirable results, which may reduce the experimental complexity.

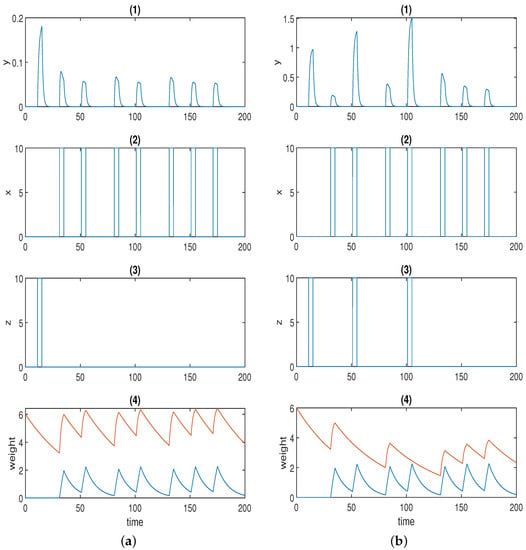

Figure 3b displays the simulation result for the model with forced dissociation (Equation (4)) using , and the other parameter values used in the simulation are listed in Table 3.

Table 3.

Parameter values used in the simulation for the model with forced dissociation (Equation (4)).

The first spike in response y was stimulated by unconditioned stimulus z, and the second spike was stimulated by conditioned stimulus x. The first conditioning was formed at the third spike, and the second conditioning was formed at the fifth spike in p when both z and x were present. The fourth and sixth spikes in p represent the first and the second learned responses, respectively. The learned response was reinforced after repeated conditioning. As opposed to Fernando’s model, this model could successfully repress the learned response to the preconditioned level by means of the repeated cuing of the conditioned stimulus within a short time window, which was corroborated by the last two spikes in response y in Figure 3b.

Considering that our model is not as heuristic as Fernando’s model, it was necessary to validate that the reinforcement effect was indeed the result of the conditioning. Therefore, we removed the second and the third unconditioned stimuli z from the system, and the result is shown in Figure 6a. As can be seen, the reinforcement effect no longer existed without the conditioning of x and z. The result also validates our conjecture in the previous section that the presence of x and z speeds up the degradation of u, which is a game changer for the formation of the reinforcement effect.

Figure 6.

(a): Time series for the model with forced dissociation without conditioning (1) response molecule y; (2), (3) conditioned stimulus x and unconditioned stimulus z; (4) weight molecule u (blue) and v (red); a = 2, αyx = 2, αyz = 4, αxyz = 4, αux = 0.6, αvx = 1.5, βu = 0.1, βv = 0.02. (b) Time series for the adjusted model with forced dissociation (1) response molecule y; (2), (3) conditioned stimulus x and unconditioned stimulus z; (4) weight molecule u (blue) and v (red); a = 2, αyx = 4, αyz = 1, αux = 0.6, αvx = 1, βu = 0.1, βv = 0.02.

Similarly to what we performed for Fernando’s model, we studied the behaviours of this model under various Hill coefficients a while keeping the other parameters specified in Table 3 unchanged.

From to , the respective responses of molecule y are displayed in Figure 7. As can be observed, only and yielded desirable associative learning behaviours. The effect of conditioning was not discriminative for , and for , the response during conditioning was not more significant than the one when only the unconditioned stimulus is present.

Figure 7.

The concentration of molecule y under various Hill coefficients a for the model with forced dissociation. (a–d) Results when . .

The previously introduced adjusted model (Equation (5)) could also display the qualitative behaviours that are shown in Figure 6b when using . The parameter values used in this simulation are listed in Table 4. The adjusted model could give rise to a more abundant response on the dimensionless scale compared to the original version (Figure 3b and Figure 6b).

Table 4.

Parameter values used in the simulation for the adjusted model with forced dissociation (Equation (5)).

Again, we studied the behaviours of the model with various Hill coefficients a without changing the other parameters specified in Table 4. As is apparent from Figure 8, the qualitative behaviours of the associative learning were largely preserved. However, the learned responses for cases and were less significant than that of .

Figure 8.

The concentration of molecule y under various Hill coefficients a for the adjusted model with forced dissociation. (a–d) Results when . .

4. Discussion

In this work, we presented a detailed analysis of two advanced behaviours (reinforcement effect and forced dissociation) in associative learning. Fernando’s model could successfully demonstrate the reinforcement effect if we properly chose the parameter values. However, the attenuation of the learned response only occurred when the time interval between the learned responses was large enough in the sense that there was no way to force the learned response to decrease within a short time window. The model introduced in Section 2.2 could manifest a reinforcement effect and forced dissociation with the parameter values listed in Table 3, which could potentially provide more possibilities for the biological and medical applications of synthetic biology.

Having highlighted the contribution of our model, we must point out that it comes with a few constraints of which synthetic biologists need to be aware. First, the overall qualitative behaviour of the system is not very robust to the parameters aside from the Hill coefficients. We found that a 25% change in parameter values could lead to less desirable behaviours. An example is given in Figure 9, where was changed from 0.6 to 0.45. The first spike was then of the same amplitude as that of the third in response y. However, the stringent constraint can be relaxed if we expect to implement only one of the two behaviours, either reinforcement effect or forced dissociation. Second, it is extremely difficult if not impossible to control the response to the conditioned stimulus prior to conditioning at an insignificant level (second spike in y in Figure 3b) while maintaining the behaviours of interest. Hence, the model proposed here may not be a suitable candidate to emulate the behaviours of Pavlov’s dog, but fits the context of associative learning in a broader sense where conditioning and learning are major concerns. Third, the model demands that v remains abundant in order for associative learning to happen. Therefore, v needs to be supplemented to a sufficient level before the start of each experiment. Otherwise, conditioned stimulus x alone could result in an overexpression of the response.

Figure 9.

An example to illustrate the effect of change in parameter values on the model with forced dissociation. .

Last but not least, we also want to mention several potential applications of our work in the field of synthetic biology and medicine. First, in the treatment for diabetes, Ye et al. [69] built a synthetic signaling cascade that enhances blood-glucose homeostasis. The reinforcement effect that we demonstrated in the models may pave the way for further adjustment to the circuit in the hope to attain the more efficient control of glucose level. Second, in the treatment of immune-mediated diseases, adoptive T-cell transfer technology shows immense promise in the treatment of immune-mediated disease such as cancer immunotherapy [70]. The feature of forced dissociation displayed in our model may provide more flexibility for experimentalists to shut off an excessive immune response once the tumours are eliminated. Lastly, some recent studies were focused on modelling the network of neurodegenerative markers [71,72]. The models that we discussed in this article may shed light on how to model these new findings at the genetic circuit level and build hierarchical neuronal architectures. The models may also play a supportive role in the existing technology that controls neurotransmitter release [73].

Author Contributions

Supervision: A.Z.; conceptualization: A.Z. and Z.L.; model derivation: A.F. and Z.L.; numerical simulation: Z.L.; writing: Z.L., A.Z., A.F. and P.T. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by CRUK Early Detection Committee Project Award C12077/A26223.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

Medical Research Council grant (MR/R02524X/1) is acknowledged by A.Z., Russian Science Foundation grant no. 22-12-00216 by A.Z., and CRUK Early Detection Committee Project Award C12077/A26223 by Z.L. and A.Z.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Benner, S.A.; Sismour, A.M. Synthetic biology. Nat. Rev. Genet. 2005, 6, 533–543. [Google Scholar] [CrossRef] [PubMed]

- Cameron, D.E.; Bashor, C.J.; Collins, J.J. A brief history of synthetic biology. Nat. Rev. Microbiol. 2014, 12, 381–390. [Google Scholar] [CrossRef] [PubMed]

- Khalil, A.S.; Collins, J.J. Synthetic biology: Applications come of age. Nat. Rev. Genet. 2010, 11, 367–379. [Google Scholar] [CrossRef] [PubMed]

- Ruder, W.C.; Lu, T.; Collins, J.J. Synthetic biology moving into the clinic. Science 2011, 333, 1248–1252. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bueso, Y.F.; Lehouritis, P.; Tangney, M. In situ biomolecule production by bacteria; a synthetic biology approach to medicine. J. Control. Release 2018, 275, 217–228. [Google Scholar] [CrossRef] [PubMed]

- Wu, M.R.; Jusiak, B.; Lu, T.K. Engineering advanced cancer therapies with synthetic biology. Nat. Rev. Cancer 2019, 19, 187–195. [Google Scholar] [CrossRef]

- Goold, H.D.; Wright, P.; Hailstones, D. Emerging opportunities for synthetic biology in agriculture. Genes 2018, 9, 341. [Google Scholar] [CrossRef] [Green Version]

- Wurtzel, E.T.; Vickers, C.E.; Hanson, A.D.; Millar, A.H.; Cooper, M.; Voss-Fels, K.P.; Nikel, P.I.; Erb, T.J. Revolutionizing agriculture with synthetic biology. Nat. Plants 2019, 5, 1207–1210. [Google Scholar] [CrossRef]

- Ke, J.; Wang, B.; Yoshikuni, Y. Microbiome engineering: Synthetic biology of plant-associated microbiomes in sustainable agriculture. Trends Biotechnol. 2021, 39, 244–261. [Google Scholar] [CrossRef]

- Bueso, Y.F.; Tangney, M. Synthetic biology in the driving seat of the bioeconomy. Trends Biotechnol. 2017, 35, 373–378. [Google Scholar] [CrossRef]

- Anderson, J.C.; Clarke, E.J.; Arkin, A.P.; Voigt, C.A. Environmentally controlled invasion of cancer cells by engineered bacteria. J. Mol. Biol. 2006, 355, 619–627. [Google Scholar] [CrossRef] [PubMed]

- Jinek, M.; Chylinski, K.; Fonfara, I.; Hauer, M.; Doudna, J.A.; Charpentier, E. A programmable dual-RNA–guided DNA endonuclease in adaptive bacterial immunity. Science 2012, 337, 816–821. [Google Scholar] [CrossRef] [PubMed]

- Xu, X.; Qi, L.S. A CRISPR–dCas toolbox for genetic engineering and synthetic biology. J. Mol. Biol. 2019, 431, 34–47. [Google Scholar] [CrossRef] [PubMed]

- Ren, J.; Lee, J.; Na, D. Recent advances in genetic engineering tools based on synthetic biology. J. Microbiol. 2020, 58, 1–10. [Google Scholar] [CrossRef]

- Jensen, M.K.; Keasling, J.D. Recent applications of synthetic biology tools for yeast metabolic engineering. FEMS Yeast Res. 2015, 15, 1–10. [Google Scholar] [CrossRef] [Green Version]

- Hughes, R.A.; Ellington, A.D. Synthetic DNA synthesis and assembly: Putting the synthetic in synthetic biology. Cold Spring Harb. Perspect. Biol. 2017, 9, a023812. [Google Scholar] [CrossRef] [Green Version]

- Borg, Y.; Grigonyte, A.M.; Boeing, P.; Wolfenden, B.; Smith, P.; Beaufoy, W.; Rose, S.; Ratisai, T.; Zaikin, A.; Nesbeth, D.N. Open source approaches to establishing Roseobacter clade bacteria as synthetic biology chassis for biogeoengineering. PeerJ 2016, 4, e2031. [Google Scholar] [CrossRef] [Green Version]

- Nesbeth, D.N.; Zaikin, A.; Saka, Y.; Romano, M.C.; Giuraniuc, C.V.; Kanakov, O.; Laptyeva, T. Synthetic biology routes to bio-artificial intelligence. Essays Biochem. 2016, 60, 381–391. [Google Scholar]

- Bianchini, F. Artificial intelligence and synthetic biology: A tri-temporal contribution. Biosystems 2016, 148, 32–39. [Google Scholar] [CrossRef]

- Abrego, L.; Zaikin, A. Integrated information as a measure of cognitive processes in coupled genetic repressilators. Entropy 2019, 21, 382. [Google Scholar] [CrossRef] [Green Version]

- Yang, J.; Lee, J.; Land, M.A.; Lai, S.; Igoshin, O.A.; St-Pierre, F. A synthetic circuit for buffering gene dosage variation between individual mammalian cells. Nat. Commun. 2021, 12, 1–3. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.; White, K.S.; Winfree, E. Construction of an in vitro bistable circuit from synthetic transcriptional switches. Mol. Syst. Biol. 2006, 2, 68. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bacchus, W.; Aubel, D.; Fussenegger, M. Biomedically relevant circuit-design strategies in mammalian synthetic biology. Mol. Syst. Biol. 2013, 9, 691. [Google Scholar] [CrossRef] [PubMed]

- Brophy, J.A.; Voigt, C.A. Principles of genetic circuit design. Nat. Methods 2014, 11, 508–520. [Google Scholar] [CrossRef] [Green Version]

- Kobayashi, H.; Kaern, M.; Araki, M.; Chung, K.; Gardner, T.S.; Cantor, C.R.; Collins, J.J. Programmable cells: Interfacing natural and engineered gene networks. Proc. Natl. Acad. Sci. USA 2004, 101, 8414–8419. [Google Scholar] [CrossRef] [Green Version]

- Xie, M.; Fussenegger, M. Designing cell function: Assembly of synthetic gene circuits for cell biology applications. Nat. Rev. Mol. Cell Biol. 2018, 19, 507–525. [Google Scholar] [CrossRef]

- Xia, P.F.; Ling, H.; Foo, J.L.; Chang, M.W. Synthetic genetic circuits for programmable biological functionalities. Biotechnol. Adv. 2019, 37, 107393. [Google Scholar] [CrossRef]

- Tolle, F.; Stücheli, P.; Fussenegger, M. Genetic circuitry for personalized human cell therapy. Curr. Opin. Biotechnol. 2019, 59, 31–38. [Google Scholar] [CrossRef]

- Prochazka, L.; Benenson, Y.; Zandstra, P.W. Synthetic gene circuits and cellular decision-making in human pluripotent stem cells. Curr. Opin. Syst. Biol. 2017, 5, 93–103. [Google Scholar] [CrossRef]

- Nene, N.R.; Garca-Ojalvo, J.; Zaikin, A. Speed-dependent cellular decision making in nonequilibrium genetic circuits. PLoS ONE 2012, 7, e32779. [Google Scholar] [CrossRef] [Green Version]

- Filicheva, S.; Zaikin, A.; Kanakov, O. Dynamical decision making in a genetic perceptron. Phys. D Nonlinear Phenom. 2016, 318, 112–115. [Google Scholar] [CrossRef]

- Abrego, L.; Zaikin, A. Decision making in an intracellular genetic classifier. Math. Model. Nat. Phenom. 2017, 12, 30–42. [Google Scholar] [CrossRef]

- Kanakov, O.; Kotelnikov, R.; Alsaedi, A.; Tsimring, L.; Huerta, R.; Zaikin, A.; Ivanchenko, M. Multi-input distributed classifiers for synthetic genetic circuits. PLoS ONE 2015, 10, e0125144. [Google Scholar] [CrossRef] [Green Version]

- Macia, J.; Vidiella, B.; Solé, R.V. Synthetic associative learning in engineered multicellular consortia. J. R. Soc. Interface 2017, 14, 20170158. [Google Scholar] [CrossRef] [PubMed]

- Borg, Y.; Alsford, S.; Pavlika, V.; Zaikin, A.; Nesbeth, D.N. Synthetic biology tools for engineering Goodwin oscillation in Trypanosoma brucei brucei. Heliyon 2022, 8, e08891. [Google Scholar] [CrossRef]

- Stricker, J.; Cookson, S.; Bennett, M.R.; Mather, W.H.; Tsimring, L.S.; Hasty, J. A fast, robust and tunable synthetic gene oscillator. Nature 2008, 456, 516–519. [Google Scholar] [CrossRef] [PubMed]

- Tigges, M.; Marquez-Lago, T.T.; Stelling, J.; Fussenegger, M. A tunable synthetic mammalian oscillator. Nature 2009, 457, 309–312. [Google Scholar] [CrossRef] [PubMed]

- Ullner, E.; Koseska, A.; Zaikin, A.; Volkov, E.; Kurths, J.; Garcia-Ojalvo, J. Dynamics of Multicellular Synthetic Gene Networks. In Handbook on Biological Networks; World Scientific: Singapore, 2009. [Google Scholar]

- Ullner, E.; Ares, S.; Morelli, L.G.; Oates, A.C.; Jülicher, F.; Nicola, E.; Heussen, R.; Whitmore, D.; Blyuss, K.; Fryett, M.; et al. Noise and oscillations in biological systems: Multidisciplinary approach between experimental biology, theoretical modelling and synthetic biology. Int. J. Mod. Phys. B 2012, 26, 1246009. [Google Scholar] [CrossRef] [Green Version]

- Ullner, E.; Zaikin, A.; Volkov, E.I.; García-Ojalvo, J. Multistability and clustering in a population of synthetic genetic oscillators via phase-repulsive cell-to-cell communication. Phys. Rev. Lett. 2007, 99, 148103. [Google Scholar] [CrossRef] [Green Version]

- Anesiadis, N.; Kobayashi, H.; Cluett, W.R.; Mahadevan, R. Analysis and design of a genetic circuit for dynamic metabolic engineering. ACS Synth. Biol. 2013, 2, 442–452. [Google Scholar] [CrossRef]

- Shanks, D.R. The Psychology of Associative Learning; Cambridge University Press: Cambridge, UK, 1995. [Google Scholar]

- Wasserman, E.A.; Miller, R.R. What’s elementary about associative learning? Annu. Rev. Psychol. 1997, 48, 573–607. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pearce, J.M.; Bouton, M.E. Theories of associative learning in animals. Annu. Rev. Psychol. 2001, 52, 111–139. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Behrens, T.E.; Hunt, L.T.; Woolrich, M.W.; Rushworth, M.F. Associative learning of social value. Nature 2008, 456, 245–249. [Google Scholar] [CrossRef] [PubMed]

- Mitchell, C.J.; De Houwer, J.; Lovibond, P.F. The propositional nature of human associative learning. Behav. Brain Sci. 2009, 32, 183–198. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pavlov, P.I. Conditioned reflexes: An investigation of the physiological activity of the cerebral cortex. Ann. Neurosci. 2010, 17, 136. [Google Scholar] [CrossRef] [Green Version]

- Ziegler, M.; Soni, R.; Patelczyk, T.; Ignatov, M.; Bartsch, T.; Meuffels, P.; Kohlstedt, H. An electronic version of Pavlov’s dog. Adv. Funct. Mater. 2012, 22, 2744–2749. [Google Scholar] [CrossRef]

- Fanselow, M.S.; Poulos, A.M. The neuroscience of mammalian associative learning. Annu. Rev. Psychol. 2005, 56, 207–234. [Google Scholar] [CrossRef] [Green Version]

- Uwano, T.; Nishijo, H.; Ono, T.; Tamura, R. Neuronal responsiveness to various sensory stimuli, and associative learning in the rat amygdala. Neuroscience 1995, 68, 339–361. [Google Scholar] [CrossRef]

- Faber, T.; Joerges, J.; Menzel, R. Associative learning modifies neural representations of odors in the insect brain. Nat. Neurosci. 1999, 2, 74–78. [Google Scholar] [CrossRef]

- Johansen, J.P.; Diaz-Mataix, L.; Hamanaka, H.; Ozawa, T.; Ycu, E.; Koivumaa, J.; Kumar, A.; Hou, M.; Deisseroth, K.; Boyden, E.S.; et al. Hebbian and neuromodulatory mechanisms interact to trigger associative memory formation. Proc. Natl. Acad. Sci. USA 2014, 111, E5584–E5592. [Google Scholar] [CrossRef] [Green Version]

- Fern, C.T.; Liekens, A.M.; Bingle, L.E.; Beck, C.; Lenser, T.; Stekel, D.J.; Rowe, J.E. Molecular circuits for associative learning in single-celled organisms. J. R. Soc. Interface 2009, 6, 463–469. [Google Scholar]

- Bray, D. Molecular networks: The top-down view. Science 2003, 301, 1864–1865. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jacob, E.B.; Becker, I.; Shapira, Y.; Levine, H. Bacterial linguistic communication and social intelligence. TRENDS Microbiol. 2004, 12, 366–372. [Google Scholar] [CrossRef] [PubMed]

- Morris, M.K.; Saez-Rodriguez, J.; Sorger, P.K.; Lauffenburger, D.A. Logic-based models for the analysis of cell signaling networks. Biochemistry 2010, 49, 3216–3224. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gardner, T.S.; Cantor, C.R.; Collins, J.J. Construction of a genetic toggle switch in Escherichia coli. Nature 2000, 403, 339–342. [Google Scholar] [CrossRef]

- Siuti, P.; Yazbek, J.; Lu, T.K. Synthetic circuits integrating logic and memory in living cells. Nat. Biotechnol. 2013, 31, 448–452. [Google Scholar] [CrossRef]

- Padirac, A.; Fujii, T.; Rondelez, Y. Bottom-up construction of in vitro switchable memories. Proc. Natl. Acad. Sci. USA 2012, 109, E3212–E3220. [Google Scholar] [CrossRef] [Green Version]

- Wang, B.; Kitney, R.I.; Joly, N.; Buck, M. Engineering modular and orthogonal genetic logic gates for robust digital-like synthetic biology. Nat. Commun. 2011, 2, 1–9. [Google Scholar] [CrossRef] [Green Version]

- Hill, A.V. The possible effects of the aggregation of the molecules of haemoglobin on its dissociation curves. J. Physiol. 1910, 40, 4–7. [Google Scholar]

- O’Brien, E.L.; Van Itallie, E.; Bennett, M.R. Modeling synthetic gene oscillators. Math. Biosci. 2012, 236, 1–5. [Google Scholar] [CrossRef]

- Zorzan, I.; López, A.R.; Malyshava, A.; Ellis, T.; Barberis, M. Synthetic designs regulating cellular transitions: Fine-tuning of switches and oscillators. Curr. Opin. Syst. Biol. 2021, 25, 11–26. [Google Scholar] [CrossRef]

- Fitzgerald, M.; Gibbs, C.; Shimpi, A.A.; Deans, T.L. Adoption of the Q transcriptional system for regulating gene expression in stem cells. ACS Synth. Biol. 2017, 6, 2014–2020. [Google Scholar] [CrossRef] [PubMed]

- Linsker, R. Local synaptic learning rules suffice to maximize mutual information in a linear network. Neural Comput. 1992, 4, 691–702. [Google Scholar] [CrossRef]

- Higham, N.J. Accuracy and Stability of Numerical Algorithms; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2002. [Google Scholar]

- Burden, R.L.; Faires, J.D.; Burden, A.M. Numerical Analysis; Cengage Learning: Boston, MA, USA, 2015. [Google Scholar]

- Dorm, J.R.; Prince, P.J. A family of embedded Runge-Kutta formulae. J. Comput. Appl. Math. 1980, 6, 19–26. [Google Scholar]

- Ye, H.; Baba, M.D.E.; Peng, R.W.; Fussenegger, M. A synthetic optogenetic transcription device enhances blood-glucose homeostasis in mice. Science 2011, 332, 1565–1568. [Google Scholar] [CrossRef]

- Kalos, M.; June, C.H. Adoptive T cell transfer for cancer immunotherapy in the era of synthetic biology. Immunity 2013, 39, 49–60. [Google Scholar] [CrossRef] [Green Version]

- Krivonosov, M.; Nazarenko, T.; Bacalini, M.G.; Zaikin, A.; Ivanchenko, M.; Franceschi, C. Network markers of DNA methylation in neurodegenerative diseases. In Proceedings of the 2020 4th Scientific School on Dynamics of Complex Networks and their Application in Intellectual Robotics (DCNAIR), Innopolis, Russia, 7–9 September 2020; pp. 138–139. [Google Scholar]

- Krivonosov, M.; Nazarenko, T.; Bacalini, M.G.; Franceschi, C.; Zaikin, A.; Ivanchenko, M. DNA methylation changes with age as a complex system: A parenclitic network approach to a family-based cohort of patients with Down Syndrome. bioRxiv 2020. [Google Scholar] [CrossRef] [Green Version]

- Rössger, K.; Charpin-El Hamri, G.; Fussenegger, M. Reward-based hypertension control by a synthetic brain–dopamine interface. Proc. Natl. Acad. Sci. USA 2013, 110, 18150–18155. [Google Scholar] [CrossRef] [Green Version]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).