Abstract

Multi-robot motion and observation generally have nonlinear characteristics; in response to the problem that the existing extended Kalman filter (EKF) algorithm used in robot position estimation only considers first-order expansion and ignores the higher-order information, this paper proposes a multi-robot formation trajectory based on the high-order Kalman filter method. The joint estimation method uses Taylor expansion of the state equation and observation equation and introduces remainder variables on this basis, which effectively improves the estimation accuracy. In addition, the truncation error and rounding error of the filtering algorithm before and after the introduction of remainder variables, respectively, are compared. Our analysis shows that the rounding error is much smaller than the truncation error, and the nonlinear estimation performance is greatly improved.

1. Introduction

As a typical complex system, swarm dynamics systems have been widely studied since first being was proposed. A swarm is composed of many simple intelligent individuals. The interaction between individuals based on basic mechanical rules can stimulate highly coordinated swarm behavior [1,2,3,4]. The dynamic behavior of swarming in nature shows amazing charm. The system composed of interconnected and constantly moving individuals emerges as colorful and highly coordinated swarming behavior, which provides a rich source of ideas for the cognition and optimization of industrial and social groups. It has considerable prospects in the fields of multi-agent collaboration [5], UAV formation [6], multi-robot collaboration [7], and intelligent grids [8].

In a multi-robot formation system, because the robot can detect the external environment it is a control system with pattern recognition, complex task execution and allocation, autonomous behavior decision-making, and other functions necessary to operate in a hostile environment and complete a variety of complex tasks. Therefore, a multi-robot formation system exhibits the characteristics of high consistency and collaboration with the outside world. In this relatively complex control system, it is necessary to obtain its precise state quantity first in order for control to be applied and to achieve the purpose of completing complex tasks. Therefore, it is necessary to perform more accurate state estimation for multi-robot motion.

Among the state estimation methods, in 1960 R. E. Kalman et al. [9,10] proposed a recursive state estimation method suitable for a system in which the model is linear and the noise obeys a Gaussian distribution. Kalman filtering (KF)is a time domain filtering method; when the system process noise and measurement noise are mutually independent Gaussian white noise with zero mean, a Kalman filter is the optimal unbiased estimator in the sense of minimum variance [11].

In general, the motion equation of a multi-robot formation and the measurement equation of its sensor are both nonlinear equations. The motion equation has weak nonlinear characteristics because only simple operations such as turning and going straight are available. The sensor observes the robot target, which shows strong nonlinear characteristics. For nonlinear state estimation problems, the traditional filtering methods are mainly extended Kalmanl filter (EKF), unscented Kalman filter (UKF) and cubature Kalman filter (CKF). EKF uses a Taylor series first-order approximation expansion of the nonlinear equations, which introduces linearization errors and has low estimation accuracy for estimating systems with strong nonlinearity [12]. Over time, Julier et al. [13] proposed another nonlinear filtering method, the unscented Kalman filter. The core of the UKF algorithm is the UT transformation, which uses an ensemble of sigma sampling points to approximate the nonlinear system model [14,15,16]. The UKF algorithm is more computationally intensive than the EKF algorithm, and its filtering accuracy is better, at least to the second-order accuracy of the Taylor expansion. However, the shortcomings of the UKF algorithm are obvious in that it can only be applied to Gaussian systems, and a problem with the non-positive definite error covariance matrix is caused by the emergence of negative weight in the implementation of the algorithm [17]. The frequency of this problem becomes greater as the order of the system increases and the nonlinearity grows stronger. In order to better solve the problem of UKF being non-positive definite when dealing with high-dimensional covariance matrices, resulting in divergent filtering results, Ienkaram proposed a weight-selective UKF filtering method called Cubature Kalman Filter (CKF) [18,19]. CKF optimizes the sigma point sampling method and weight distribution in UKF using a spherical integral and radial integral. Compared with traditional EKF, it has improved estimation accuracy and improved stability of filtering methods [20].

In recent years, in the treatment of nonlinear estimation problems, the structure of the extended Kalman filter (EKF) is used to design recursive estimators as a new idea to solve the problem.The main idea is to transform the linear error of the nonlinear equation after doing the first-order Taylor expansion into the form of a product of the scaling matrix associated with the problem solved and some time-varying positive definite matrix, then set the upper bound of the covariance matrix [21,22,23], and get the upper bound of the minimum covariance matrix by numerically solving the Ricatti equation, and finally use recursive filtering, which cannot be accurately resolved to get this scaling matrix, and then the process of solving the inverse matrix in the covariance matrix obtained by the Ricatti equation is more complicated, which greatly increases the computational burden in some high-dimensional occasions and cannot meet the real-time requirements [24]. The method cannot accurately analyze the scaling matrix, and the process of solving the inverse matrix in the covariance matrix obtained by the Ricatti equation is relatively complicated. The other is to borrow the Taylor expansion idea, expand the higher-order term with the original equation, design the new state and measurement equation, and use recursive filtering to get the required state estimate [25,26]. It can choose the expansion order reasonably according to the nonlinear strength and weakness of the actual problem, which is a good balance between the requirements of arithmetic power and estimation accuracy, and has a good real-time filtering feature [27,28]. At the same time, this method has also been extended to correlation entropy filtering [29], Kronecker product [30] and lithium battery life prediction [31], which all show good estimation performance.

In traditional state estimation for multi-robot operation, the nonlinear state estimation is performed separately for each robot using methods such as EKF, UKF, or CKF. In addition to the problems already mentioned in this paper, performing individual state estimation for each robot reduces the estimation accuracy [32,33,34]. In recent years, the use of trigger probability in the multi-robot formation problem as the connection relationship between robots has gradually become a mainstream idea [24]. The reason for real-time estimation is that at a certain moment the connection relationship between multiple robots is deterministic, and there is only a connection or no connection, which is equivalent to a binary connection.

On this basis, this paper addresses the multi-robot formation state estimation problem by proposing a centerless multi-robot state joint estimation method based on high-order extended Kalman filter (HEKF). This improves the original multi-robot connection relationship based on trigger probability and improves the multi-robot state estimation accuracy. The main algorithm of this paper is as follows. In view of the nonlinear characteristics of multi-robot motion and observation, Taylor’s higher-order expansion of the robot’s state equation and measurement equation is performed to solve the problem of different nonlinear degrees of the two nonlinear equations. The high-order Kalman filtering algorithm is improved by expanding the truncation error with the state for dimensional estimation and fusing the state information of neighboring node robots to improve the multi-robot state estimation accuracy. A numerical simulation experiment proves the effectiveness of the algorithm. Therefore, we choose the second method based on high-order Kalman filtering. The main work of this paper includes:

- After Taylor expansion of the nonlinear state equation and observation equation, the remainder variable is introduced and the original EKF algorithm and the high-order Kalman filtering algorithm are changed to discard the truncation error. Only the first-order and low-order items are retained, reducing the estimation error and improving the estimation accuracy.

- A dynamic model of the remainder variables is introduced into the state equation and observation equation as hidden variables, and the changed pseudo-linear state equation and observation equation are rewritten into higher-order linear forms through dimension expansion.

- The new high-order linear equation is used to obtain the state estimation value using the recursive filtering algorithm, and the effectiveness of the algorithm is analyzed.

The rest of this paper is organized as follows. Section 2 proposes state equations and observation equations for the multi-robot formation operating system and designs a recursive estimator based on the EKF structure, while Section 3 presents the recursive estimator form derived from the second section, introduces the remainder variables into the state equation and the observation equation, and presents a new high-order linear filter derived through dynamic modeling, then analyzes its performance. In Section 4, an indoor multi-robot simulation numerical experiment is used to verify the effectiveness of the algorithm proposed in this paper. Finally, conclusions are provided in Section 5. In addition, the important mathematical symbols in Appendix A.

2. Problem Description

The movement of the robot is generally carried out by operations such as going straight and turning, and the observation system is generally composed of radar or visual sensors. Therefore, both the robot’s motion and the observation system have nonlinear characteristics. In a multi-robot formation environment, there is a certain degree of motion consistency between the robots, and thus a certain coupling relationship. The dynamic equations can be described by the following N node coupling equations:

where and denote the state vector and the measurement vector, respectively, i and k denote the node index and the time instant, respectively, and are known nonlinear functions that are assumed to be continuously differentiable, is the overall coupling strength, and is the inner-coupling matrix. The process noise, ,and the measurement noise, , are assumed to be mutually uncorrelated zero-mean white Gaussian noise with the covariances and , respectively.

The EKF structure is used to design the recursive estimation of the multi-robot formation trajectories (1) and (2):

where and denote the predicted and updated estimates at time instant , respectively., and is the gain matrix to be determined

As in the EKF, we define the updated estimation error and the corresponding covariance as follows:

3. Algorithm Description

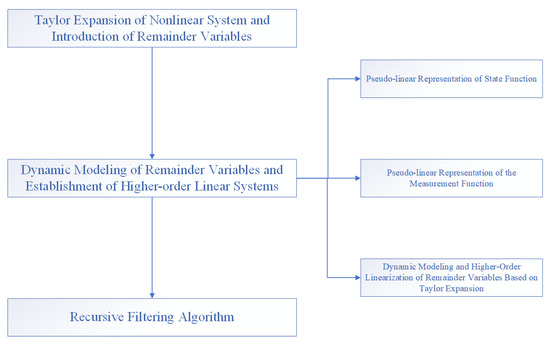

In the second section, we use the EKF structure to design the recursive filter. This idea generally expands the nonlinear function to obtain the first-order terms and truncation errors. The traditional EKF algorithm directly discards the truncation errors and keeps only the first-order terms. In the transfer function with strong non-linearity a large amount of information will be lost, which makes the estimation result inaccurate. In recent years, in the study of nonlinear filtering problems, the following two ideas have mainly been adopted in the design of recursive filters using the EKF structure. The first is to transform the truncation error into the form of a product of the scaling matrix and a time-varying positive definite matrix related to the problem, then set the upper bound of the covariance matrix, obtain the upper bound of the minimum covariance matrix by numerically solving the Ricatti equation, and finally use recursive filtering, which cannot accurately analyze the scaling matrix, before obtaining the covariance matrix through the Ricatti equation. The inverse matrix process is more complicated, and in occasions with high dimensions the computational burden is greatly increased to the point that real-time requirements cannot be met. The second is to use the idea of Taylor expansion to expand the high-order term while using the original equation to expand the dimension, design new state and measurement equations, use recursive filtering to obtain the required state estimation value, and avoid first above method. The “dimension disaster” caused by inversion in the dimensional situation, as well as the expansion order, can be reasonably selected according to the nonlinear strong and weak characteristics in the actual problem, which takes into account the requirements of computing power and estimation accuracy and has a good real-time filtering feature. A block diagram of the algorithm is shown in Figure 1.

Figure 1.

Algorithm block diagram.

3.1. Taylor Expansion of Nonlinear System and Introduction of Remainder Variables

Among the nonlinear filtering algorithms, the extended Kalman filter (EKF) algorithm is widely used. Its main idea is perform a first-order Taylor expansion of the nonlinear state equation around the filter estimated value. The main process is as follows.

Let ; then, is subjected to a first-order Taylor expansion at the filter estimate to obtain the following expression for :

where the state transition matrix is , and is the state remainder variables.

In the same way, the first-order Taylor expansion of the nonlinear observation function at the state prediction value can be obtained:

where the observation matrix is , and is the observation remainder.

From this, we can see that in the original extended Kalman filtering algorithm, which uses only the first-order term information of the nonlinear function, the high-order term information and the truncation error are directly discarded, which makes the estimation accuracy low in occasions with a high degree of nonlinearity and leads to inaccurate estimation results. In certain extreme cases, the filtering results may even directly diverge. Here, we introduce remainder variables into the state equation and observation equation, respectively, to compensate for the loss of high-order information in the original filtering algorithm.

3.2. Dynamic Modeling of Remainder Variables and Establishment of Higher-Order Linear Systems

The remainder variable is obtained by integrating the constant term with the truncation error after Taylor expansion of the nonlinear state function and the observation function around the filter estimation value and the one-step prediction value, respectively. By using the remainder variable information to improve the estimation accuracy, the original state equation and observation equation need to be expanded and rewritten to establish a high-order Kalman filter.

Lemma 1.

The n-order Taylor formula of the binary function at :

Assuming that is continuous in a neighborhood of and has continuous partial derivatives up to order; if is any point in this neighborhood, there are

where

Lemma 2.

Taylor expansion of a multivariate function at :

Assume that

where

3.2.1. Pseudo-Linear Representation of State Function

According to the above lemma, the state transition function in Formula (1) can be expressed as a polynomial form using Taylor expansion, as follows.

For simplicity, the following derivation takes two state variables, and :

where is a constant, , are the weighted sum of all l order tensor terms, is the weight of the corresponding term, and are the respective remainder variables of the state.

Theorem 1.

Let be a set of l-order hidden variables corresponding to the original system variable .

Theorem 2.

The weight vector corresponding to the l-order latent variable vector is then

We use the following two states as an example:

Donate

Then,

Remark 1.

Compared with the original state model, , the above equation is in a pseudo-linear form with higher-order latent variables and remainder variables . There is only a change in representation; as there is no essential difference, this is called pseudo-linearization.

3.2.2. Pseudolinear Representation of the Measurement Function

Similarly, the measurement function in formula (2) is expressed as a polynomial form by Taylor expansion, as follows:

where denotes the remainder variables of the measurement function:

3.2.3. Dynamic Modeling and Higher-Order Linearization of Remainder Variables Based on Taylor Expansion

In order to convert the pseudo-linearized model established above into a real high-order linearized form, it is necessary to expand the dimension and model using the high-order latent variables and remainder variables as new variables of the system.

In order to solve the problem in Remark 1, the high-order latent variable and the remainder variables and are used as time-varying parameters and the linear coupling relationship between the l-order latent variable and the u-order latent variable is established, along with the dynamic model relationship of the remainder variables and :

Then, the latent variables and remainder variables are used as the extension of the original state vector to realize the linear representation of the state model. Here, and can be identified according to the input information of the original state model; when there is no prior information, the settings are as follows:

Then,

Denote

The argmented state equation can be described as

Based on the above-mentioned argmented state variables, the linearized description model of the observation equation is similarly described as follows:

Denote ,

, where, .

Then, the linearized representation of the nonlinear measurement model equation is as follows:

3.3. Recursive Filtering Algorithm

Considering the linearized state model and measurement model Equations (30) and (32) shown above, its statistical characteristics are as follows:

Step 1: Set the initial values of the new system; then, according to the initial value of , the following formula is satisfied:

Then, satisfies the following characteristics:

where is a positive semi-definite matrix.

Step 2: Recursive filtering

Assuming that has been obtained, that is, and are known, the new higher-order Kalman filter is designed as follows:

The corresponding covariance matrix is as follows:

Step 3: Time Update

(1) Obtain the following based on and :

(2) Obtain based on and :

Step 4: Observe Update

(3) According to the relevant information of and the observed value, the gain matrix is obtained:

(4) A higher-order Kalman filter is obtained from the remainders of the actual and predicted observations of and :

(5) Calculate the update error covariance matrix:

is the obtained state estimate.

4. Performance Analysis

4.1. Projection Matrix Analysis

Rewrite the expanded state variables in Equation (46) as the original state variables and all higher-order variables and remainder variables in the following form:

where and are the gain matrices corresponding to the original variables and the remainder augmented-dimensional variables, respectively, and and are the observation matrices corresponding to the original variables and the remaining augmented-dimensional variables, respectively. Let the projection operator be

where and are matrices that match the dimensions of the original and remainder augmented variables, respectively.

Then,

It is found that after projection through the projection matrix, only the original system state estimation value is retained, which reduces the complexity of the algorithm and the actual computational burden, and includes more information from the model, improving the estimation accuracy.

4.2. Covariance Matrix Analysis

Bring Equation (48) into Equation (47) and at the same time divide the covariance matrix into blocks according to the original variables and the remaining dimension expansion variables, then write as follows:

where is the covariance matrix of the original variable,

is the covariance matrix of the original variable and the remainder variable, is the covariance matrix of the remainder variable and the original variable, and is the covariance matrix of the remainder variable.

Then, the original variable covariance matrix can be calculated by the projection matrix as follows:

In the experiment, it was found that is a positive-definite matrix. Compared with the original EKF algorithm, the posterior covariance matrix becomes smaller with the application of higher-order residual information, and the algorithm makes full use of the higher-order information of the model. Theoretically, the state estimation accuracy will be higher.

5. Simulation Experiments

For multi-robot formation systems, the state equation is as follows [24,35]:

where and represent the position and direction of the i-th robot, respectively, and and represent the linear velocity and angular velocity, respectively. Suppose that , , are Gaussian white noise with zero mean variance .

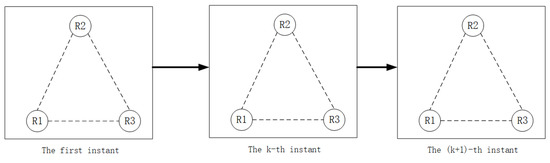

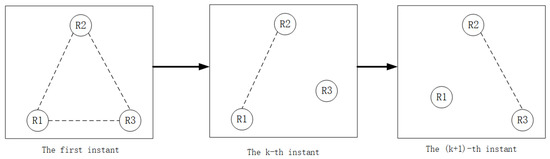

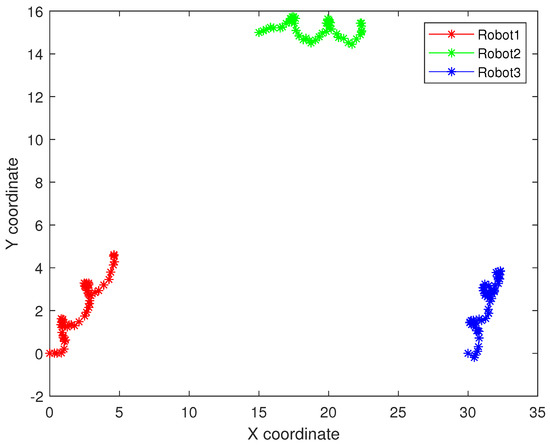

In a multi-robot formation, is a time-varying matrix related to the formation and is the formation; if is an upper triangular matrix, it means that the formation has a fixed movement formation, as shown in Figure 2. If , it means that the formation is time-varying and has a random switching topology, as shown in Figure 3. We use the extended Kalman filter (EKF) and high-order Kalman filter methods to estimate the second-order Kalman filter algorithm (SOKF), including the remainder extended Kalman filter algorithm (REKF), as well as the second-order remainder Kalman filter algorithm(SEORKF) for comparison.

Figure 2.

Multi-robot formation with fixed formation.

Figure 3.

Multi-robot formation in a random topology formation.

As can be seen from the above figure, in a robot formation with a fixed formation, the robot maintains the formation at the first moment and continues to move with a unique nonlinear model. However, in a formation with a random switching topology, at the first moment it maintains a fixed formation structure. With the constraints of different communication or task division, the formation at each moment will be different. There are arbitrary formations or individual formations, and they maintain their own nonlinear motion.

The visual measurement is provided by the following equation:

where defines the coordinates of the robot in the image plane, are its frame coordinates, is the distance from the optical center of the camera to the robot, and and are variable pixel magnification factors; for a visual tracking system, the feature points are placed on the ceiling, are the coordinates of the feature points in the world frame, and is the camera image coordinate principal point; is white Gaussian noise with zero mean variance .

In the simulation, the following parameters of the visual tracking system are adopted:

,,,,,,

The process noise covariance matrix is and the measurement noise covariance matrix is .

To illustrate the tracking performance of the proposed filter, we use the root mean square error (MSE) of the three robots. Over 50 Monte-Carlo runs were obtained, and the table below shows the mean squared error for each robot position:

where represents the position estimate of the i-th robot at the m-th Monte Carlo run.

5.1. Trajectory Estimation of Multi-Robot in Fixed Formation

In a multi-robot formation, is a time-varying matrix related to the formation, is the formation, and when is an upper triangular matrix, it means that the formation has a fixed movement formation to complete a certain task. Table 1 shows the multi-robot trajectory estimation MSE with fixed formation, where ’Improved’ refers to the improved estimation accuracy compared with EKF method.

Table 1.

MSE for multi-robot trajectory estimation in fixed formation.

The original nonlinear filtering algorithms based on Taylor expansion, such as extended Kalman filtering and high-order Kalman filtering methods, directly discard the truncation error. The estimation accuracy has a great influence. Table 2 shows the truncation errors generated by the EKF and second-order Kalman filtering methods for estimating the fixed formation trajectories of multi-robot. It can be seen from the table below that both methods have considerable truncation errors.

Table 2.

Truncation error of trajectory estimation in fixed-formation multi-robot.

In the high-order Kalman filtering method, the remainder variables are introduced into the algorithm to make full use of the nonlinear model information, which can effectively avoid the influence of the truncation error on the estimation results; however, there will always be rounding errors. Here, the rounding error results generated by the first-order remainder extended Kalman filtering algorithm and the second-order remainder Kalman filtering algorithm with a fixed formation of robots are compared and analyzed, as show in Table 3, with the result that the rounding error is much smaller than the truncation error.

Table 3.

Rounding error of trajectory estimation in fixed-formation multi-robot swarm.

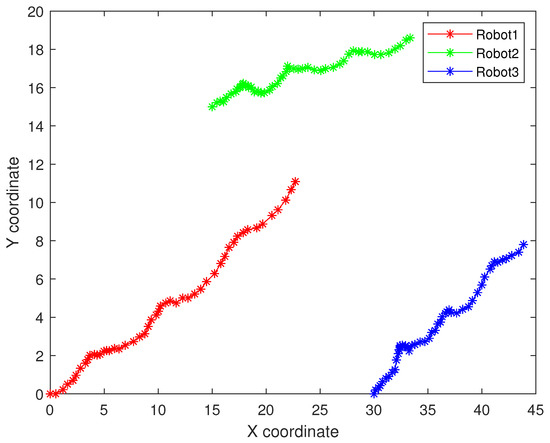

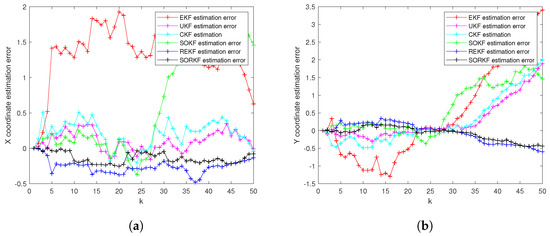

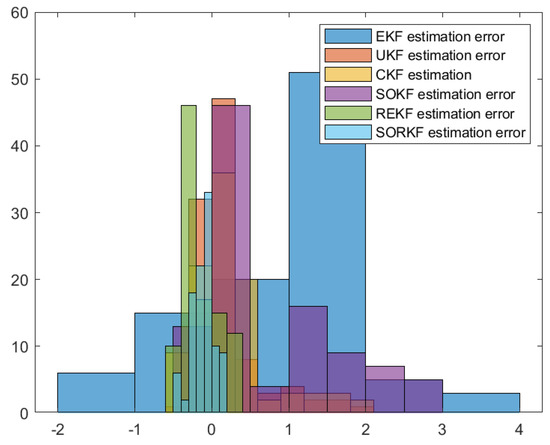

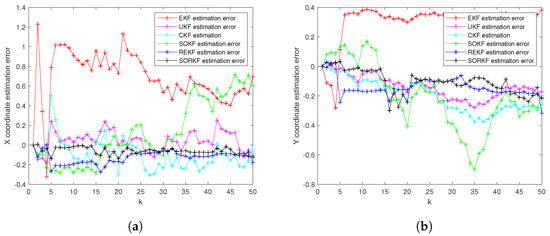

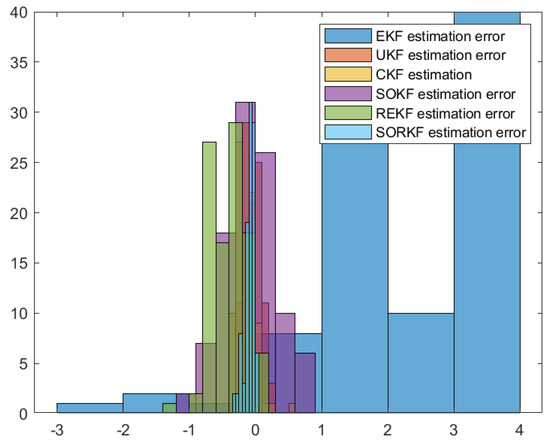

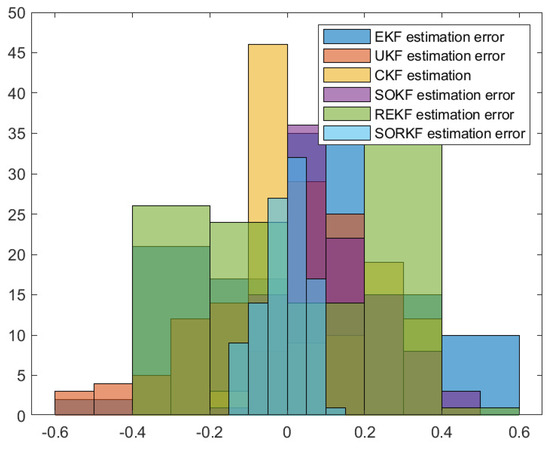

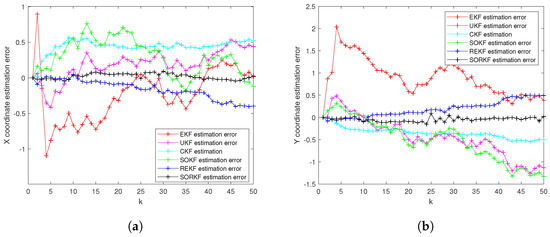

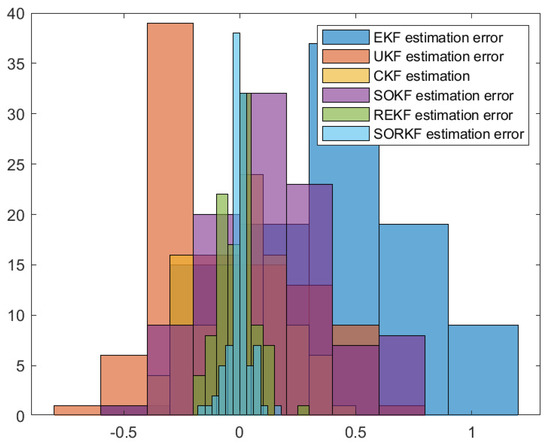

As follows, in the fixed formation, Figure 4 shows the real trajectory of the fixed multi-robot formation; Figure 5a,b shows the R1 estimation error in X-Coordinate and Y-Coordinate respectively; Figure 6 shows the histogram of R1 estimation error; Figure 7a,b shows the R2 estimation error in X-Coordinate and Y-Coordinate respectively; Figure 8 shows the histogram of R2 estimation error; Figure 9a,b shows the R3 estimation error in X-Coordinate and Y-Coordinate respectively; Figure 10 shows the histogram of R3 estimation error.

Figure 4.

The real trajectory of the fixed multi-robot formation.

Figure 5.

R1 estimation error of multi-robot in fixed formation. (a) X-Coordinate estimation error; (b) Y-Coordinate estimation error.

Figure 6.

Histogram of R1 positioning estimation error in fixed formation.

Figure 7.

R2 estimation error of multi-robot in fixed formation. (a) X-Coordinate estimation error; (b) Y-Coordinate estimation error.

Figure 8.

Histogram of R2 positioning estimation error in fixed formation.

Figure 9.

R3 estimation error of multi-robot in fixed formation. (a) X-Coordinate estimation error; (b) Y-Coordinate estimation error.

Figure 10.

Histogram of R3 positioning estimation error in fixed formation.

5.2. Multi-Robot Formation Trajectory Estimation with Random Topology Structure

In the multi-robot formation, is the time-varying matrix related to the formation, that is, , indicating that the formation has a random switching topology. Table 4 shows MSE for multi-robot trajectory estimation and Table 5 shows the truncation error of trajectory estimation. The truncation error of the trajectory estimation extended Kalman algorithm and the second-order Kalman filtering algorithm in Table 6 shows the rounding error when the trajectory estimation is performed by introducing the remainder variables into the above two algorithms, and the results are similar to the trajectory estimation under the fixed formation.

Table 4.

MSE for multi-robot trajectory estimation in random topology formation.

Table 5.

Truncation error of trajectory estimation in random topology formation.

Table 6.

Rounding error of trajectory estimation in random topology formation.

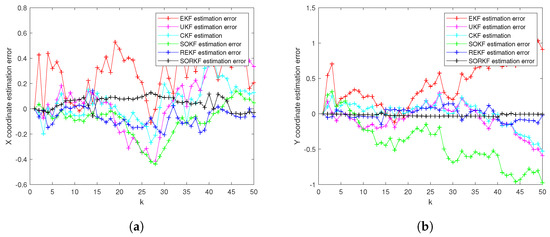

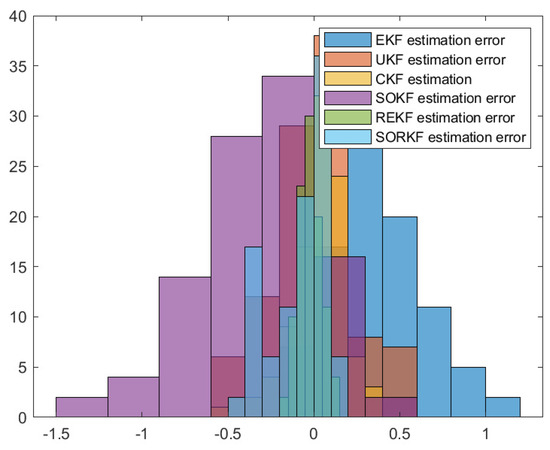

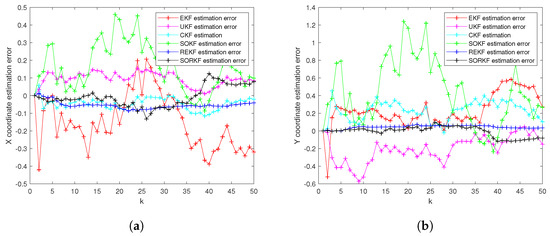

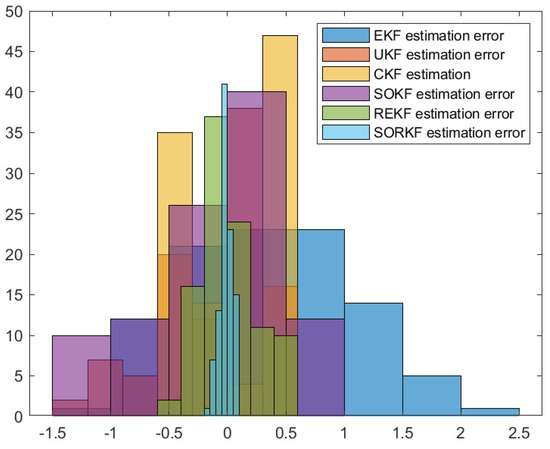

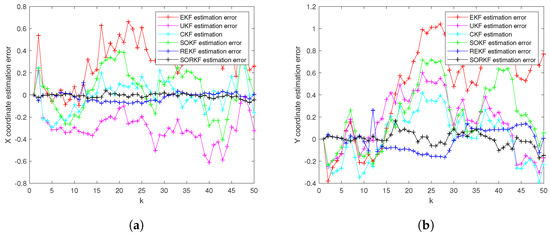

As follows, in random topology formation, Figure 11 shows the real trajectory; Figure 12a,b shows the R1 estimation error in X-Coordinate and Y-Coordinate respectively; Figure 13 shows the histogram of R1 estimation error; Figure 14a,b shows the R2 estimation error in X-Coordinate and Y-Coordinate respectively; Figure 15 shows the histogram of R2 estimation error; Figure 16a,b shows the R3 estimation error in X-Coordinate and Y-Coordinate respectively; Figure 17 shows the histogram of R3 estimation error.

Figure 11.

The real trajectory of the multi-robot swarm in random topology formation.

Figure 12.

R1 estimation error of multi-robot swarm in random topology formation. (a) X-Coordinate estimation error; (b) Y-Coordinate estimation error.

Figure 13.

Histogram of R1 positioning estimation error in random topology formation.

Figure 14.

R2 estimation error of multi-robot swarm in random topology formation. (a) X-Coordinate estimation error; (b) Y-Coordinate estimation error.

Figure 15.

Histogram of R2 positioning estimation error in random topology formation.

Figure 16.

R3 estimation error of multi-robot swarm in random topology formation. (a) X-Coordinate estimation error; (b) Y-Coordinate estimation error.

Figure 17.

Histogram of R3 positioning estimation error in random topology formation.

As shown in the figure above, we use a fixed formation formation and a random topology formation to respectively estimate the actual trajectories of the three robots, use the estimation error of each robot in the x–y direction of the two-dimensional plane as a measure, and use the EKF and the second-order Kalman filtering(SOKF) methods, respectively, to introduce the residual term variable in each of these two methods. From the results, it can be concluded that the estimation accuracy of EKF is the lowest, followed by the second-order Kalman filtering algorithm (SOKF), the remainder extended Kalman filtering Algorithm (REKF), and the remainder second-order Kalman filtering algorithm (SORKF). The other three filtering methods designed according to EKF structure, excepting EKF, significantly improve the estimation accuracy of EKF. Thus, we can see that for the robot in the motion model, the degree of non-linearity of the turning characteristics is relatively high. If first-order Taylor expansion is performed, the information contained in it cannot be completely extracted, which inevitably leads to a decrease in the estimation accuracy. The higher the degree of expansion performed, the higher the robot position estimation accuracy, and the more accurate the robot estimation estimation. In addition, we analyzed the truncation error of the EKF and second-order Kalman filtering(SOKF) algorithms. The truncation error has a great influence on the estimation accuracy. When the nonlinearity is strong, the model information cannot be fully utilized by directly discarding the truncation error, resulting in low estimation accuracy. After the variables are introduced, the rounding error of the estimation results is analyzed, and it is found that the rounding error is much smaller than the truncation error. The Taylor expansion of the nonlinear model and the use of remainder variables can make full use of the model information to improve the estimation accuracy and make the estimation more accurate.

6. Conclusions and Future Work

This paper studies the multi-robot trajectory estimation problem, and proposes a fusion estimation method based on the high-order Kalman filter algorithm. The extended Kalman filter (EKF) algorithm used in the existing robot position estimation only considers first-order expansion and ignores the high-order information. To solve the problem, a joint trajectory estimation method with a multi-robot formation based on the high-order Kalman filtering method was adopted, the Taylor expansion of the state equation and the observation equation was carried out, and the remainder variables were introduced on this basis, effectively improving the estimation accuracy. Through a simulation, we found that for robot fixed formations and random topology formations the introduction of remainder variables improves the accuracy of position estimation by more than 50%, and in certain cases even more, compared to the EKF algorithm. At the same time, the truncation error in the estimation process of the EKF and the high-order Kalman filter algorithms was analyzed and compared with the rounding error of the estimation algorithm after the introduction of the remainder variable. We found that the rounding error is much smaller than the truncation error. After the remainder variable is introduced during filtering, the algorithm makes full use of the model information, and the estimation accuracy is greatly improved.

In future research, we may encounter more complex models. If the state model has strong nonlinear characteristics, the observation model has super strong nonlinear characteristics, and the modeling error is non Gaussian white noise, our method will be difficult to achieve, and will be completed with the help of the characteristic function filtering method [36,37]; At the same time, for the neural network model, we can also introduce the high-order Kalman filtering method to update the real-time parameters [38]; Finally, for the state estimation of non-cooperative targets, there must be multiple targets and sensors. We can extend the method in this paper to distributed filtering. Similarly, for the distributed model of Federated learning, we will also applicable [39,40].

Author Contributions

Conceptualization, M.W., C.W. and W.L.; methodology, C.W.; software, M.W.; validation, M.W.; formal analysis, M.W.; investigation, M.W.; resources, M.W.; data curation, M.W.; writing—original draft preparation, M.W.; writing—review and editing, C.W. and W.L; visualization, C.W.; supervision, C.W. and W.L.; project administration, C.W.; funding acquisition, W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shaanxi Province key research and development program 2021GY-087.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Mathematical Symbols

The important mathematical symbols used in the text are described as follows:

Table A1.

Mathematical Symbols.

Table A1.

Mathematical Symbols.

| Mathematical Symbols | Description | |

|---|---|---|

| x | X | State vector |

| y | Y | Observation vector |

| State estimation | ||

| Observation estimation | ||

| Nonlinear continuous function | ||

| P | Covariance matrix | |

| K | Kalman gain | |

| A | State transfer matrix | |

| H | Observation matrix | |

| State remainder variable | ||

| Observation remainder variable | ||

References

- Liu, H.; Xu, X.; Lu, J.A.; Chen, G.; Zeng, Z. Optimizing pinning control of complex dynamical networks based on spectral properties of grounded Laplacian matrices. IEEE Trans. Syst. Man Cybern. Syst. 2018, 51, 786–796. [Google Scholar] [CrossRef] [Green Version]

- Qiu, H.X.; Duan, H.B. From collective flight in bird flocks to unmanned aerial vehicle autonomous swarm formation. Chin. J. Eng. 2017, 39, 317–322. [Google Scholar] [CrossRef]

- Cornejo, A.; Rubenstein, M.; Nagpal, R. Programmable self-assembly in a thousand-robot swarm. Science 2014, 345, 795–799. [Google Scholar]

- Vicsek, T.; Zafeiris, A. Collective motion. Phys. Rep. 2012, 517, 71–140. [Google Scholar] [CrossRef] [Green Version]

- Li, T.; Qiu, Q.; Zhao, C. A fully distributed protocol with an event-triggered communication strategy for second-order multi-agent systems consensus with nonlinear dynamics. Sensors 2021, 21, 4059. [Google Scholar] [CrossRef]

- Yan, J.; Yu, Y.; Wang, X. Distance-Based Formation Control for Fixed-Wing UAVs with Input Constraints: A Low Gain Method. Drones 2022, 6, 159. [Google Scholar] [CrossRef]

- Martinez, J.B.; Becerra, H.M.; Gomez-Gutierrez, D. Formation tracking control and obstacle avoidance of unicycle-type robots guaranteeing continuous velocities. Sensors 2021, 21, 4374. [Google Scholar] [CrossRef]

- Jiangfeng, Z.; Chunxiao, L.; Shuzhe, S.; Dadong, H.; Biao, W.; Jun, C.; Ying, T. Research on Decision-Making optimization for Operation System of Power Grid Framework under Smart Grid and Dijkstra Algorithm. In Proceedings of the 2021 IEEE International Conference on Data Science and Computer Application (ICDSCA), Dalian, China, 29–31 October 2021; pp. 378–382. [Google Scholar] [CrossRef]

- Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45. [Google Scholar] [CrossRef] [Green Version]

- Dean, G. An introduction to Kalman filters. Meas. Control 1986, 19, 69–73. [Google Scholar] [CrossRef]

- Lee, T.S. Theory and application of adaptive fading memory Kalman filters. IEEE Trans. Circuits Syst. 1988, 35, 474–477. [Google Scholar] [CrossRef]

- Meinhold, R.J.; Singpurwalla, N.D. Robustification of Kalman filter models. J. Am. Stat. Assoc. 1989, 84, 479–486. [Google Scholar] [CrossRef]

- Julier, S.J.; Uhlmann, J.K. Unscented filtering and nonlinear estimation. Proc. IEEE 2004, 92, 401–422. [Google Scholar] [CrossRef] [Green Version]

- Yang, F.; Zheng, L.T.; Wang, J.Q.; Pan, Q. Double Layer Unscented Kalman Filter. Acta Autom. Sin. 2019, 45, 1386–1391. [Google Scholar]

- Perea, L.; Elosegui, P. New state update equation for the unscented Kalman filter. J. Guid. Control Dyn. 2008, 31, 1500–1504. [Google Scholar] [CrossRef]

- Chandra, K.; Gu, D.W.; Postlethwaite, I. Square Root Cubature Information Filter. IEEE Sens. J. 2013, 13, 750–758. [Google Scholar] [CrossRef]

- Cui, T.; Sun, X.; Wen, C. A Novel Data Sampling Driven Kalman Filter Is Designed by Combining the Characteristic Sampling of UKF and the Random Sampling of EnKF. Sensors 2022, 22, 1343. [Google Scholar] [CrossRef]

- Arasaratnam, I.; Haykin, S. Cubature kalman filters. IEEE Trans. Autom. Control 2009, 54, 1254–1269. [Google Scholar] [CrossRef] [Green Version]

- Arasaratnam, I.; Haykin, S. Square-root quadrature Kalman filtering. IEEE Trans. Signal Process. 2008, 56, 2589–2593. [Google Scholar] [CrossRef]

- Rvg, A.; Pcpmp, A.; Hkk, B.; Mcz, C. Nonlinear filtering for sequential spacecraft attitude estimation with real data: Cubature Kalman Filter, Unscented Kalman Filter and Extended Kalman Filter - ScienceDirect. Adv. Sp. Res. 2019, 63, 1038–1050. [Google Scholar]

- Song, W.; Wang, J.; Zhao, S.; Shan, J. Event-triggered cooperative unscented Kalman filtering and its application in multi-UAV systems. Automatica 2019, 105, 264–273. [Google Scholar] [CrossRef]

- Li, W.; Wang, Z.; Ho, D.; Wei, G. On Boundedness of Error Covariances for Kalman Consensus Filtering Problems. IEEE Trans. Autom. Control 2019, 65, 2654–2661. [Google Scholar] [CrossRef]

- Hu, J.; Wang, Z.; Liu, S.; Gao, H. A variance-constrained approach to recursive state estimation for time-varying complex networks with missing measurements. Automatica 2016, 64, 155–162. [Google Scholar] [CrossRef] [Green Version]

- Li, W.; Jia, Y.; Du, J. State estimation for stochastic complex networks with switching topology. IEEE Trans. Autom. Control 2017, 62, 6377–6384. [Google Scholar] [CrossRef]

- Xiaohui, S.; Chenglin, W.; Tao, W. High-Order Extended Kalman Filter Design for a Class of Complex Dynamic Systems with Polynomial Nonlinearities. Chin. J. Electron. 2021, 30, 508–515. [Google Scholar] [CrossRef]

- Sun, X.; Wen, T.; Wen, C.; Cheng, X.; Yunkai, W.U. High-Order Extended Strong Tracking Filter. Chin. J. Electron. 2021, 30, 1152–1158. [Google Scholar]

- Xiaohui, S.; Chenglin, W.; Tao, W. A Novel Step-by-Step High-Order Extended Kalman Filter Design for a Class of Complex Systems with Multiple Basic Multipliers. Chin. J. Electron. 2021, 30, 313–321. [Google Scholar] [CrossRef]

- SUN, X.; WEN, C.; WEN, T. Maximum Correntropy High-Order Extended Kalman Filter. Chin. J. Electron. 2022, 31, 190–198. [Google Scholar]

- Wang, Q.; Sun, X.; Wen, C. Design method for a higher order extended Kalman filter based on maximum correlation entropy and a Taylor network system. Sensors 2021, 21, 5864. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Wen, C.; Sun, X. Design Method of High-Order Kalman Filter for Strong Nonlinear System Based on Kronecker Product Transform. Sensors 2022, 22, 653. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.; Wen, C. Real-Time Updating High-Order Extended Kalman Filtering Method Based on Fixed-Step Life Prediction for Vehicle Lithium-Ion Batteries. Sensors 2022, 22, 2574. [Google Scholar] [CrossRef] [PubMed]

- Chow, S.M.; Ferrer, E.; Nesselroade, J.R. An unscented Kalman filter approach to the estimation of nonlinear dynamical systems models. Multivar. Behav. Res. 2007, 42, 283–321. [Google Scholar] [CrossRef] [PubMed]

- Guo, L.; Wang, H. Minimum entropy filtering for multivariate stochastic systems with non-Gaussian noises. IEEE Trans. Autom. Control 2006, 51, 695–700. [Google Scholar]

- Zhou, J.; Wang, H.; Zhou, D. PDF tracking filter design using hybrid characteristic functions. In Proceedings of the 2008 American Control Conference, Seattle, WA, USA, 11–13 June 2008; pp. 3046–3051. [Google Scholar]

- Chen, X.; Jia, Y. Indoor localization for mobile robots using lampshade corners as landmarks: Visual system calibration, feature extraction and experiments. Inter. J. Control Autom. Syst. 2014, 12, 1313–1322. [Google Scholar] [CrossRef]

- Wen, C.; Cheng, X.; Xu, D.; Wen, C. Filter design based on characteristic functions for one class of multi-dimensional nonlinear non-Gaussian systems. Automatica 2017, 82, 171–180. [Google Scholar] [CrossRef]

- Wen, C.; Ge, Q.; Cheng, X.; Xu, D. Filters design based on multiple characteristic functions for the grinding process cylindrical workpieces. IEEE Trans. Ind. Electron. 2017, 64, 4671–4679. [Google Scholar] [CrossRef]

- Wen, T.; Xie, G.; Cao, Y.; Cai, B. A DNN-based channel model for network planning in train control systems. IEEE Trans. Intell. Transp. Syst. 2021, 23, 2392–2399. [Google Scholar] [CrossRef]

- Ma, X.; Wen, C.; Wen, T. An asynchronous and real-time update paradigm of federated learning for fault diagnosis. IEEE Trans. Ind. Inform. 2021, 17, 8531–8540. [Google Scholar] [CrossRef]

- Xue, M.; Chenglin, W. An Asynchronous Quasi-Cloud/Edge/Client Collaborative Federated Learning Mechanism for Fault Diagnosis. Chin. J. Electron. 2021, 30, 969–977. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).