A Timestamp-Independent Haptic–Visual Synchronization Method for Haptic-Based Interaction System

Abstract

1. Introduction

- i. Haptic–visual synchronization plays an important role in a haptic-based interaction system (HIS) and merits further investigation.

- ii. The traditional timestamp-dependent method used in an HIS has some shortcomings, so there is still room for research on haptic–visual synchronization methods.

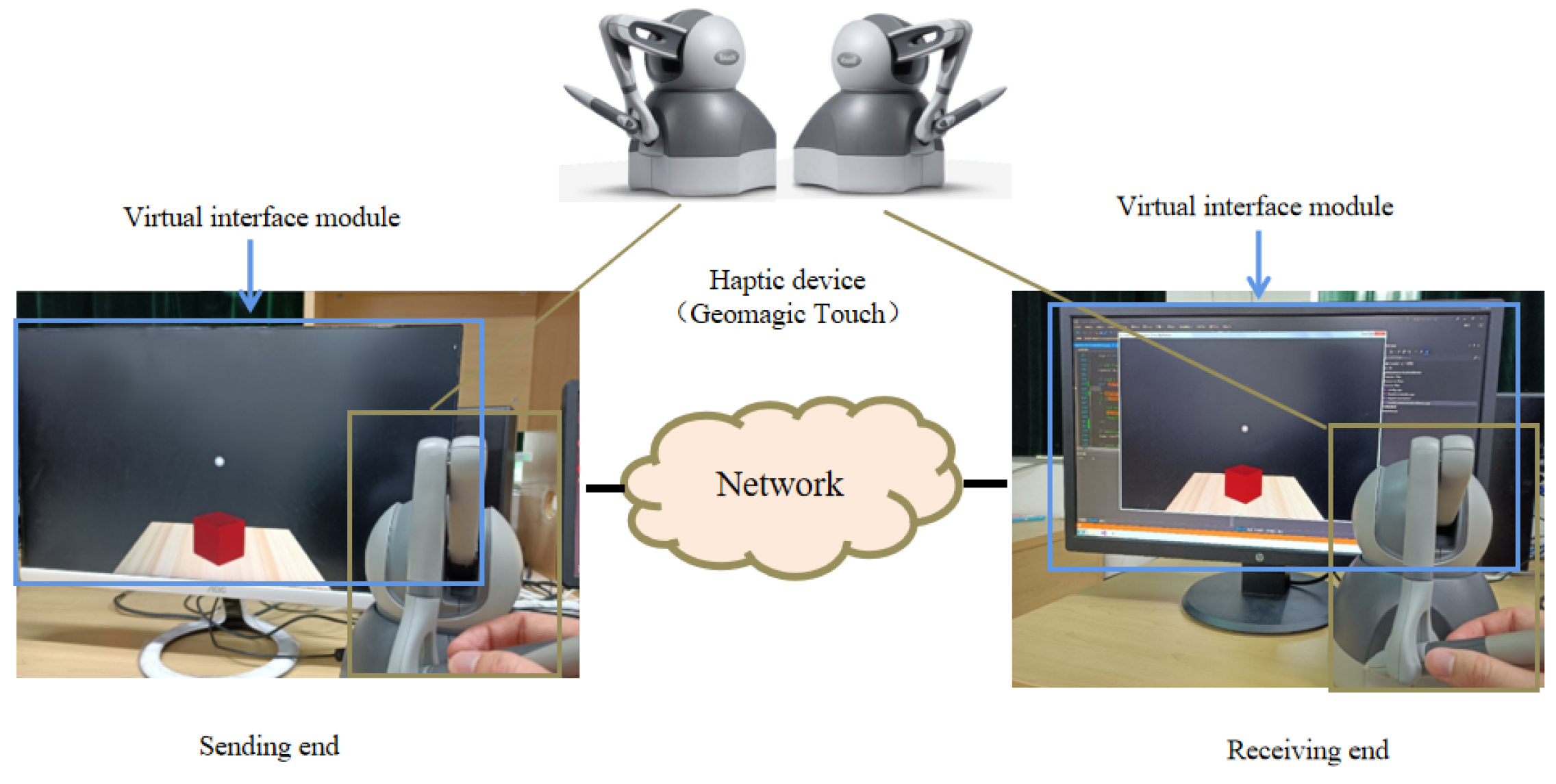

2. Motivation

3. Proposed Method

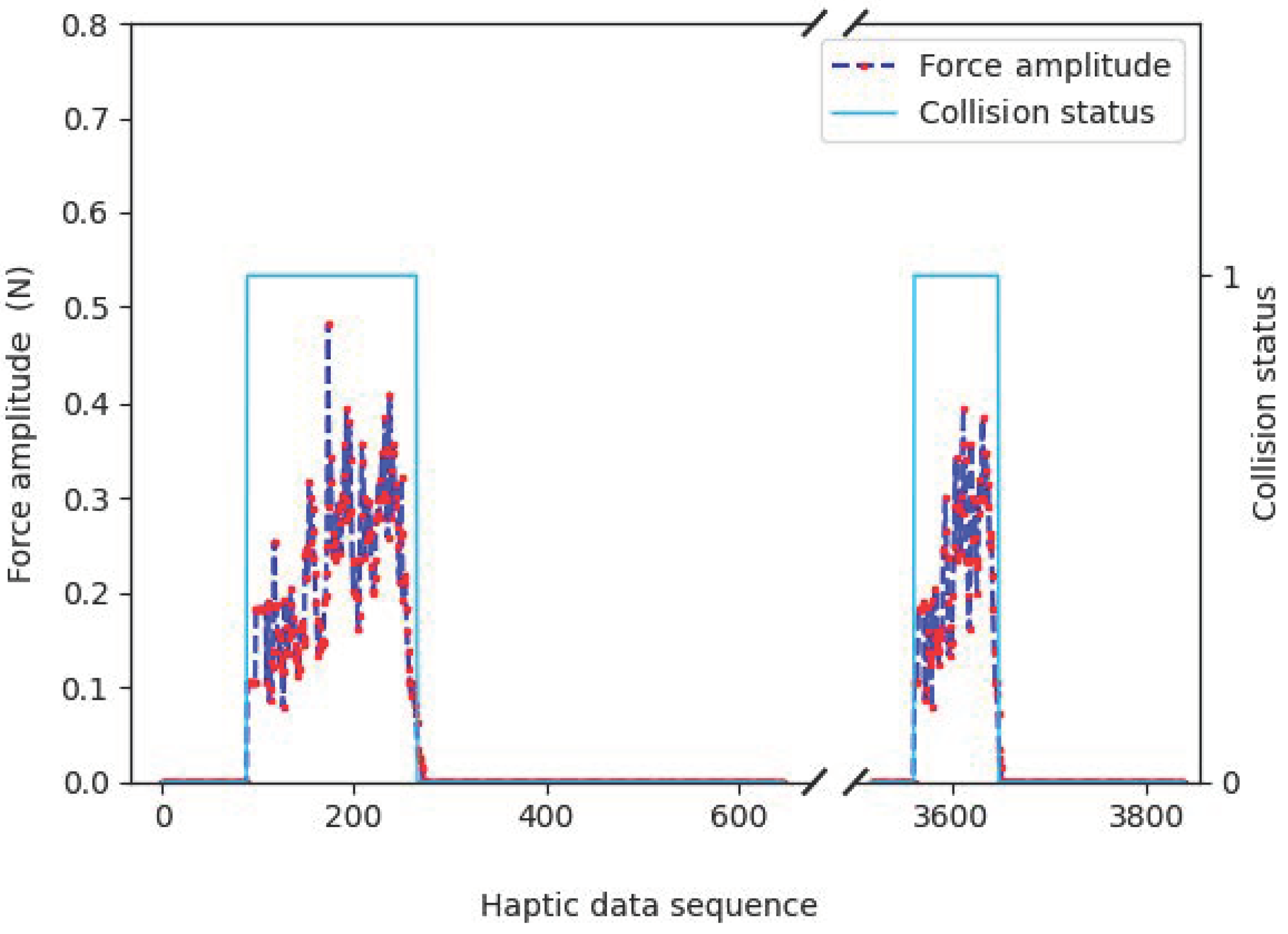

3.1. Key Sample Detection in the Haptic Signal

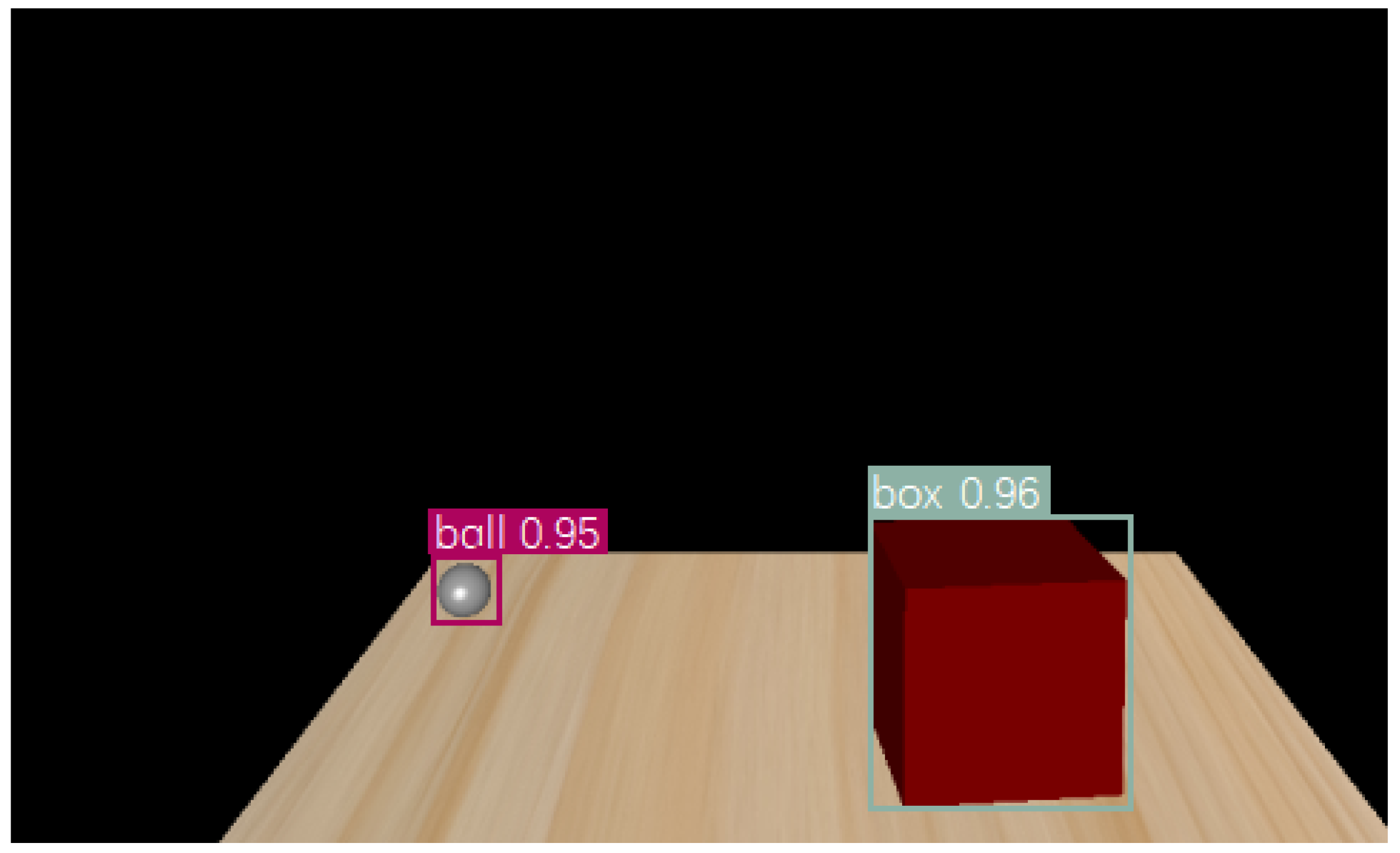

3.2. Key Frame Detection in the Visual Signal

3.2.1. Object Detection

3.2.2. Collision Detection

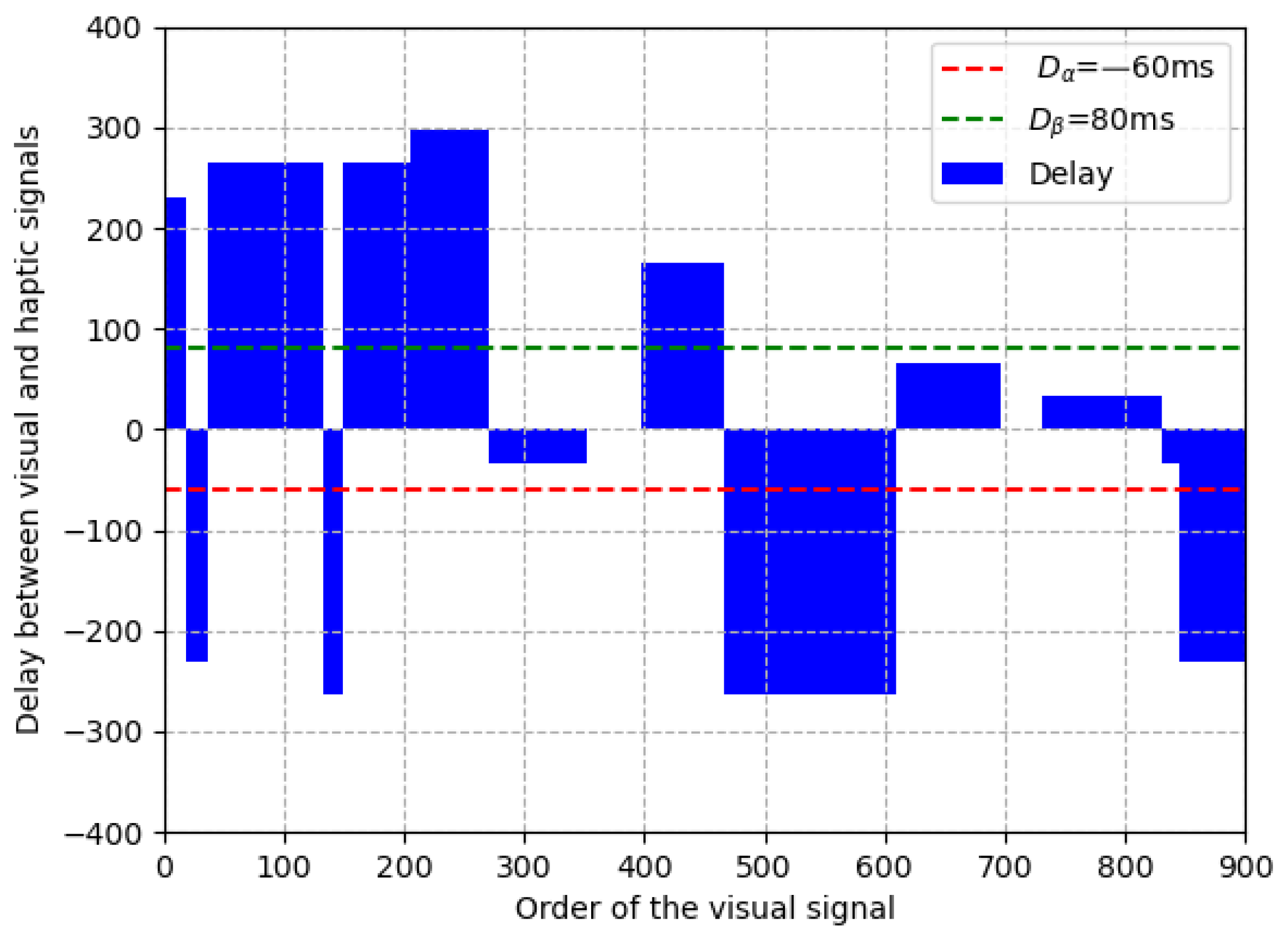

3.3. The Synchronization Threshold

3.4. Asynchronization Removal

3.5. The Overall Method

4. Experimental Results

4.1. Estimation Accuracy of Synchronization Delay

4.2. Effectiveness of the Haptic–Visual Synchronization

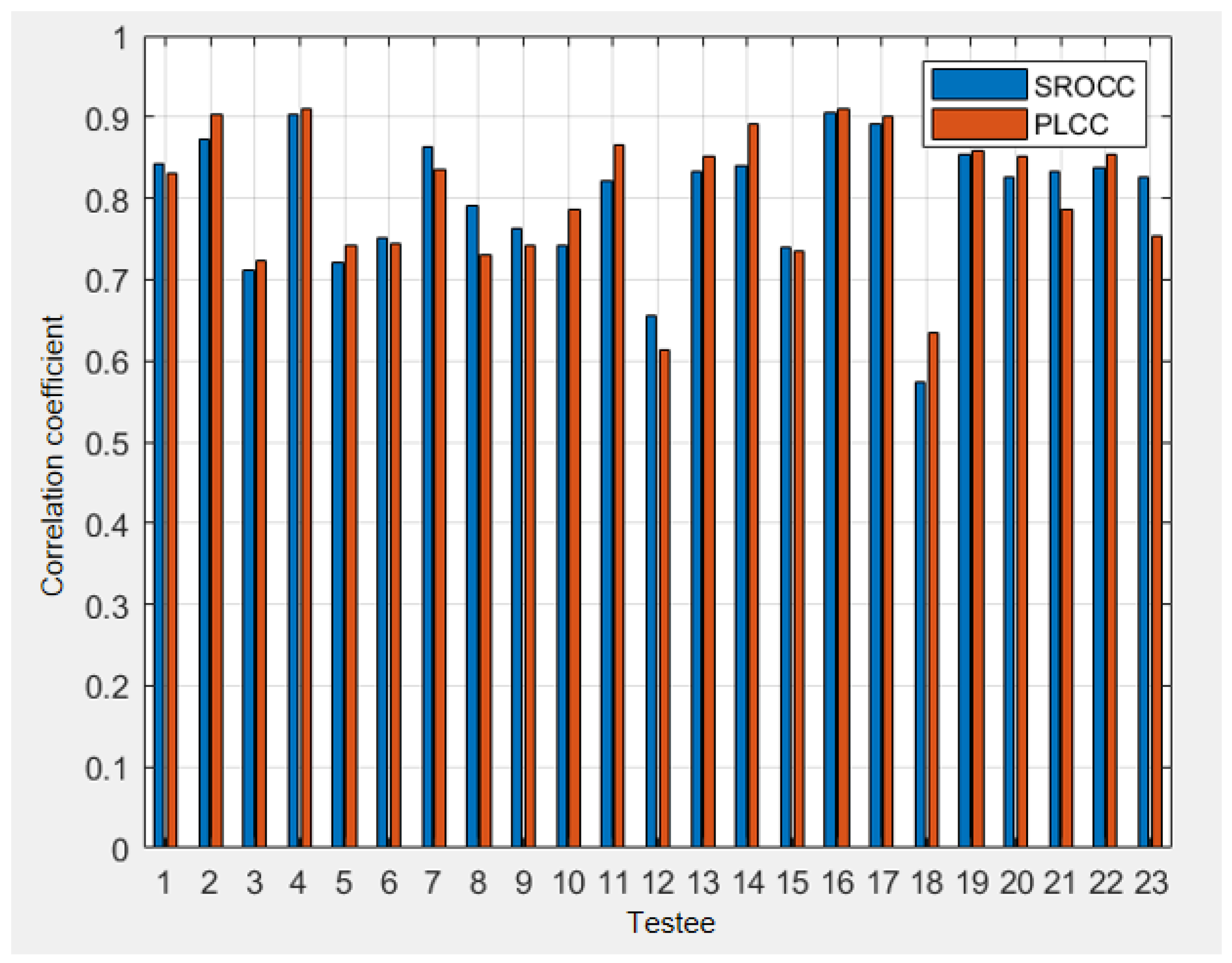

4.3. Subjective Test on User Experience

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Epoch | Batchsize | Learning Rate | | |
|---|---|---|---|---|
| 300 | 16 | 0.5 | 0.5 | cosine decay |

| Metrics | MAE (ms) | MaxAE (ms) |
|---|---|---|
| Results | 7.3 | 15 |
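A minimal sketch of how the two metrics in the table above could be computed, assuming that MAE and MaxAE denote the mean and the maximum absolute error between estimated and reference synchronization delays. The function name `delay_error_metrics` and the sample values are hypothetical and are not taken from the paper.

```python
from statistics import mean


def delay_error_metrics(estimated_ms, reference_ms):
    """Return (MAE, MaxAE) in milliseconds for paired delay values.

    Assumes MAE = mean absolute error and MaxAE = maximum absolute error.
    """
    if len(estimated_ms) != len(reference_ms) or not estimated_ms:
        raise ValueError("inputs must be non-empty and of equal length")
    # Absolute error of each estimated delay against its reference delay.
    abs_errors = [abs(e - r) for e, r in zip(estimated_ms, reference_ms)]
    return mean(abs_errors), max(abs_errors)


# Hypothetical delays in ms, for illustration only (not data from the paper).
estimated = [102.0, 48.0, 75.0, 20.0]
reference = [100.0, 50.0, 80.0, 20.0]
mae, max_ae = delay_error_metrics(estimated, reference)
print(f"MAE = {mae:.2f} ms, MaxAE = {max_ae:.2f} ms")
```

Reporting both values together is useful because the mean summarizes typical estimation error while the maximum bounds the worst case observed.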

| 7 | −7 | 8 | −8 | 8 | 9 | −1 | 0 | 5 | −8 | −8 | 2 | 0 | 1 | −1 | −7 | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 19 | 18 | 95 | 17 | 56 | 65 | 82 | 46 | 69 | 96 | 47 | 86 | 36 | 99 | 14 | 55 |

| | Without Our Method | With Our Method |
|---|---|---|
| Probabilities | 25.3% | 89.2% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, Y.; Huang, L.; Zhao, T.; Fang, Y.; Lin, L. A Timestamp-Independent Haptic–Visual Synchronization Method for Haptic-Based Interaction System. Sensors 2022, 22, 5502. https://doi.org/10.3390/s22155502
