Towards a Low-Cost Solution for Gait Analysis Using Millimeter Wave Sensor and Machine Learning

Abstract

1. Introduction

- The use of MMW radar and ML to recognize and classify different abnormal gait patterns commonly associated with frailty and disability, including walking with a limp, walking with a stooped posture, and walking with an assistive device.

- The use of a low-cost MMW radar prototype with competitive object localization and detection accuracy that can be used in both community and clinical settings.

- The use of micro-Doppler signatures and skeleton pose estimation techniques to achieve a gait pattern classification accuracy of 95.7% to 98.8%.

2. Background and Literature Review

- (1)

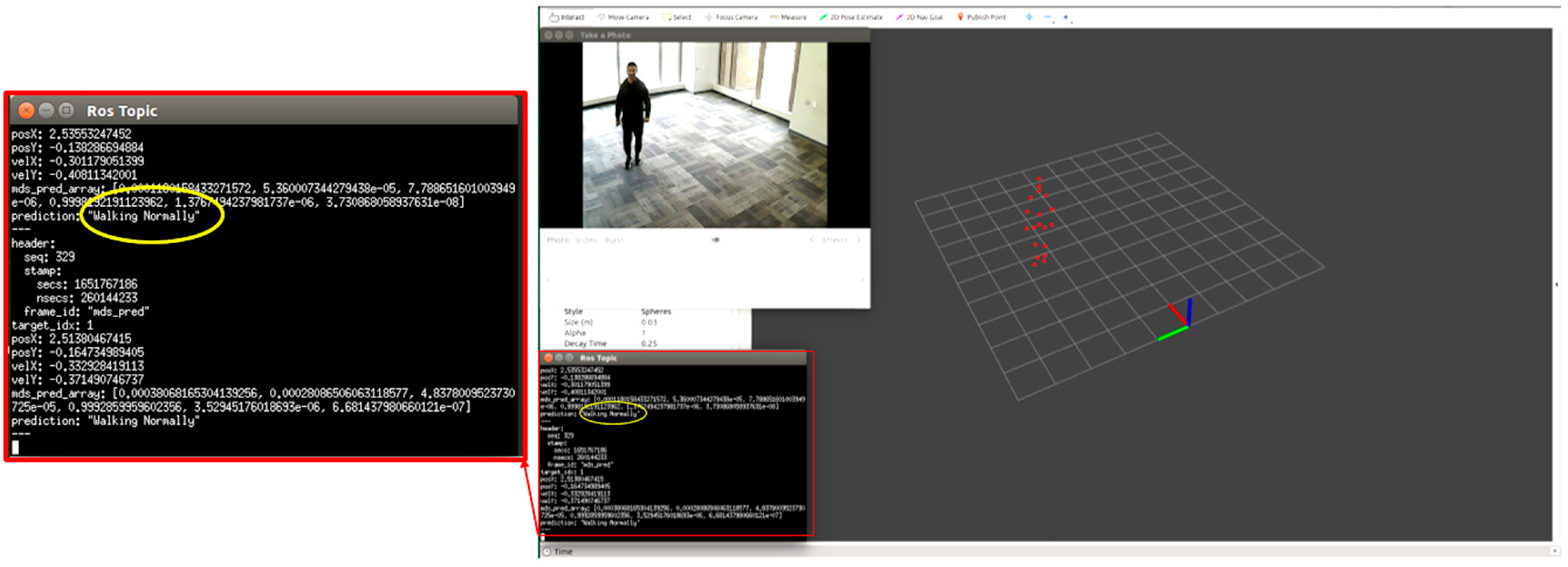

- Normal Gait—Walking normally with good posture and without an assistive device.

- (2)

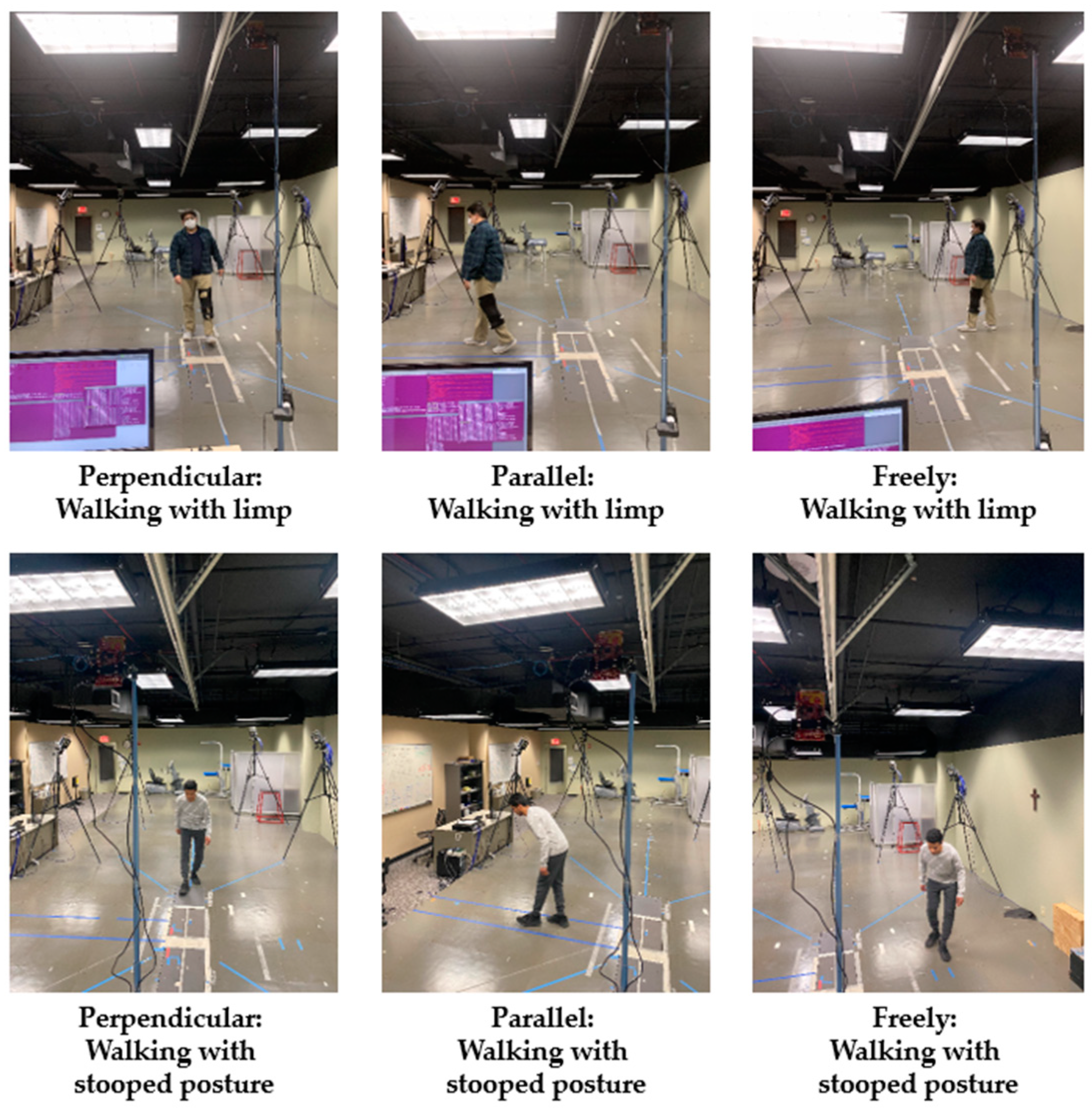

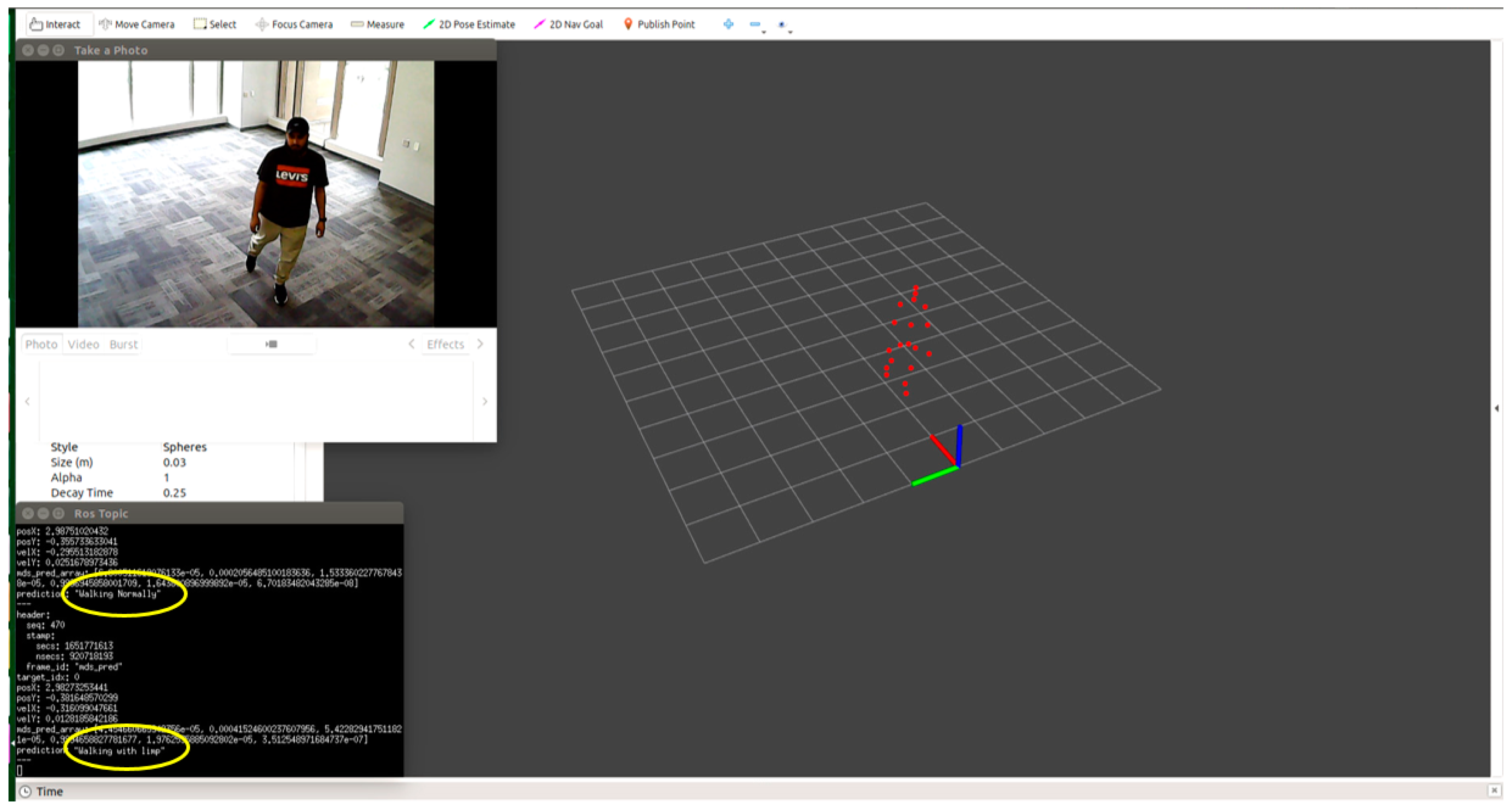

- Limping—Many gait deficits lead to asymmetry in movement; limping is a common gait disorder characterized by asymmetry in step/stride length and lateral trunk movement. A similar pattern can be seen in people with hemiparesis due to disorders such as stroke, Multiple Sclerosis (MS), and brain injury [38].

- (3)

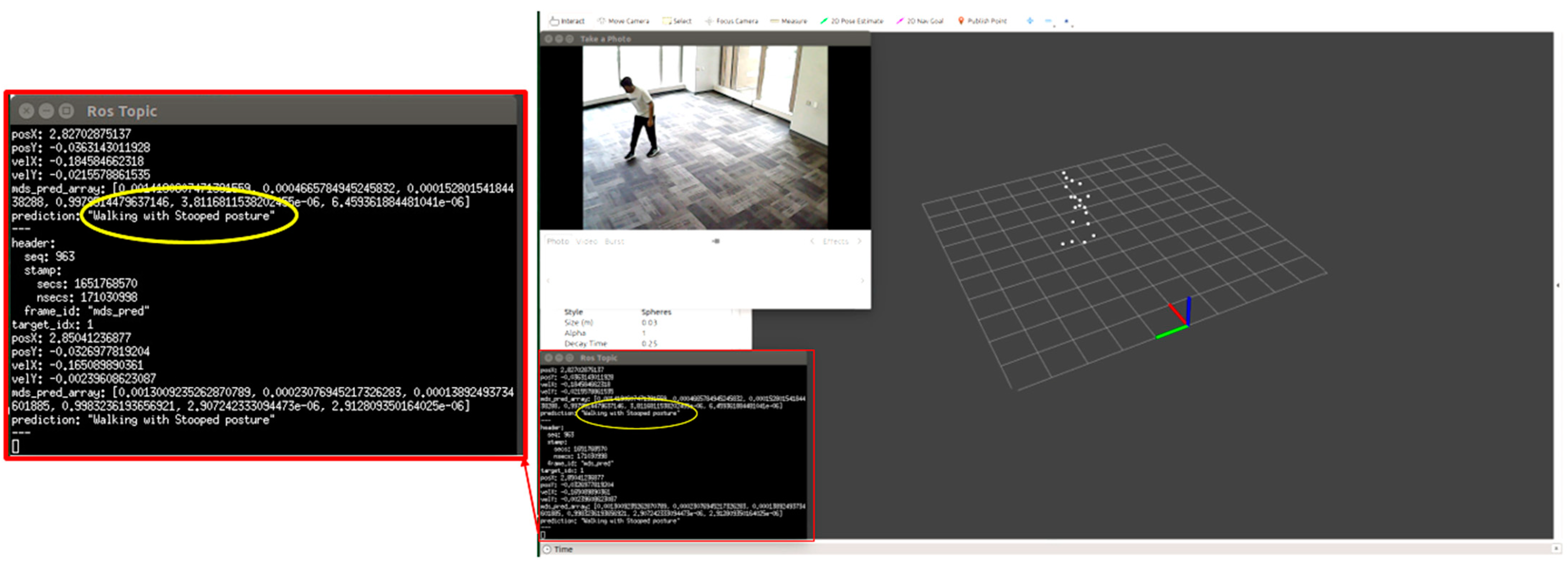

- Stooped posture—A stooped posture is common in frail persons who have difficulty overcoming the postural demands of gravity. A stooped posture is also common with various neurological disorders, such as dementia and Parkinson’s Disease [39].

- (4) Using a walker: Many people with gait and balance disorders use walkers. Detecting walker use can help determine whether a person is complying with the prescribed use, has adopted the device independently, or was using it at the time of a fall [40]. We used a front-wheeled rolling walker in this work because this type is very common in home and institutional settings.

- (5)

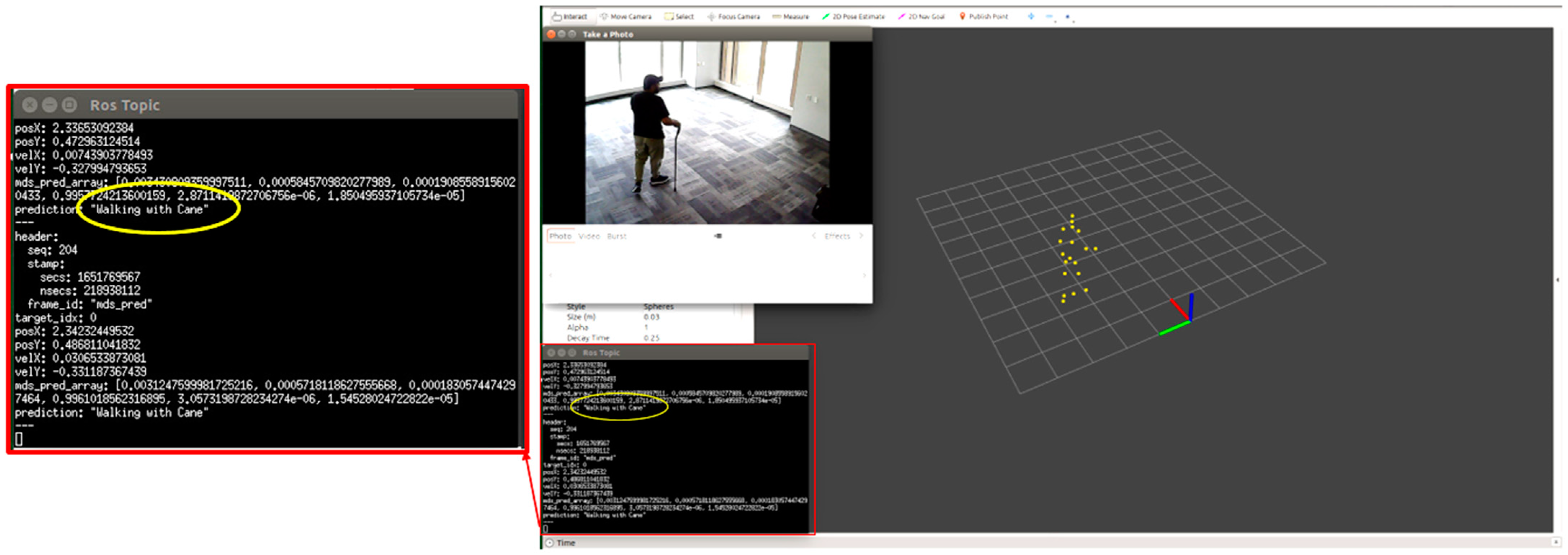

- Using a cane—Many people with gait and balance disorders use canes. The ability to detect whether someone is using a cane can help to determine whether they are complying with their prescribed use or have adopted the device independently [40].

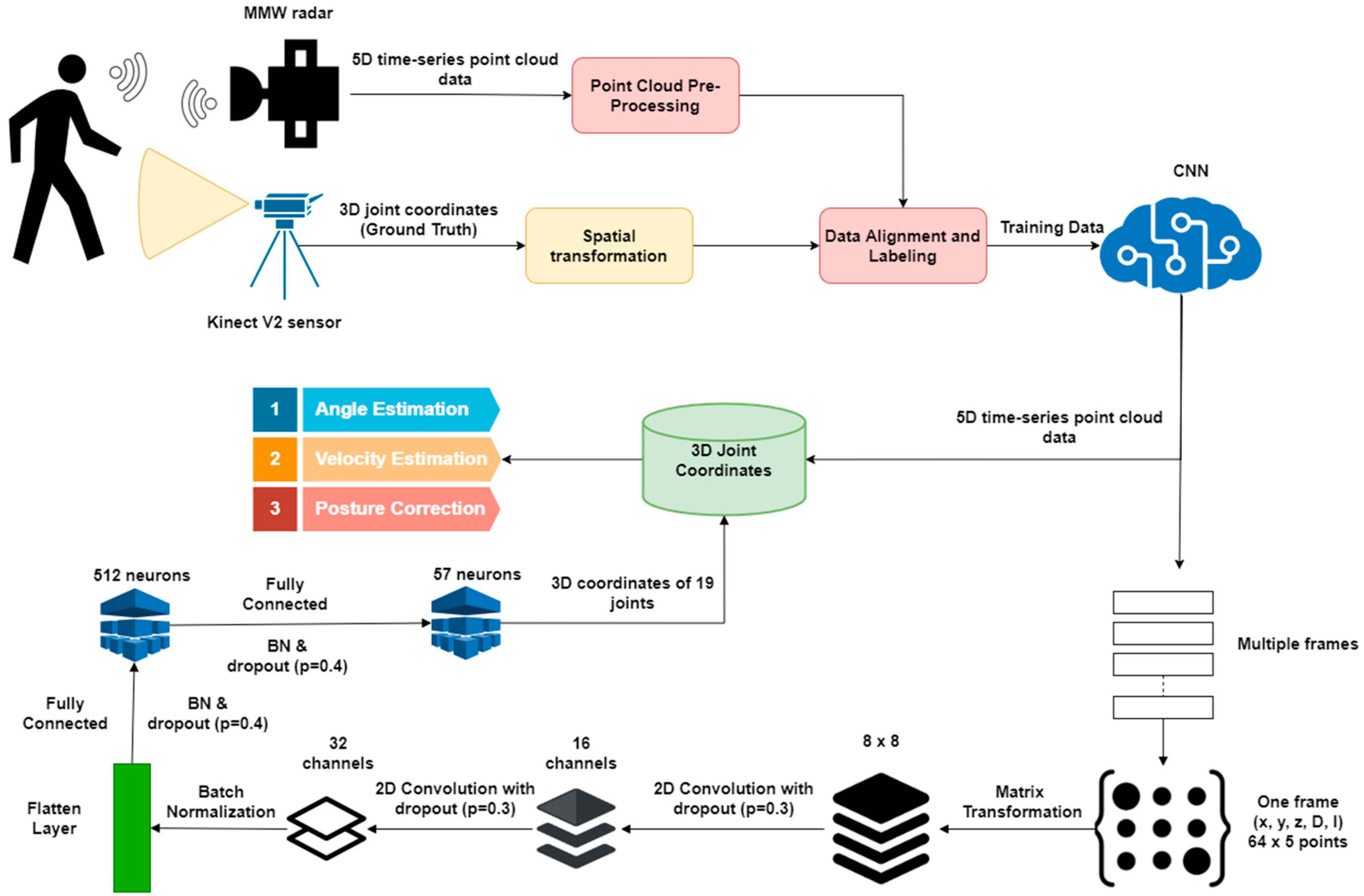

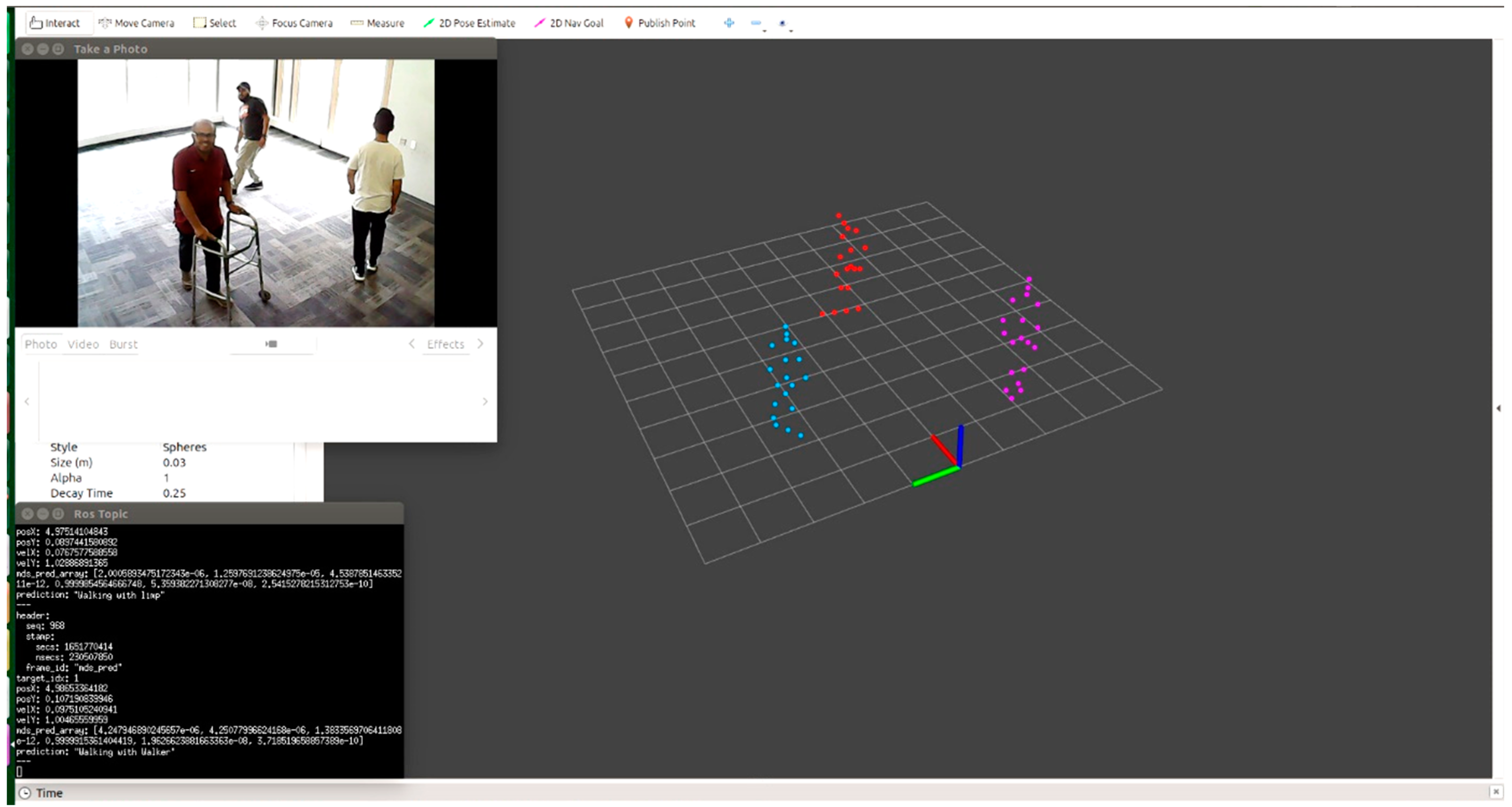

3. Proposed MMW Radar Gait System

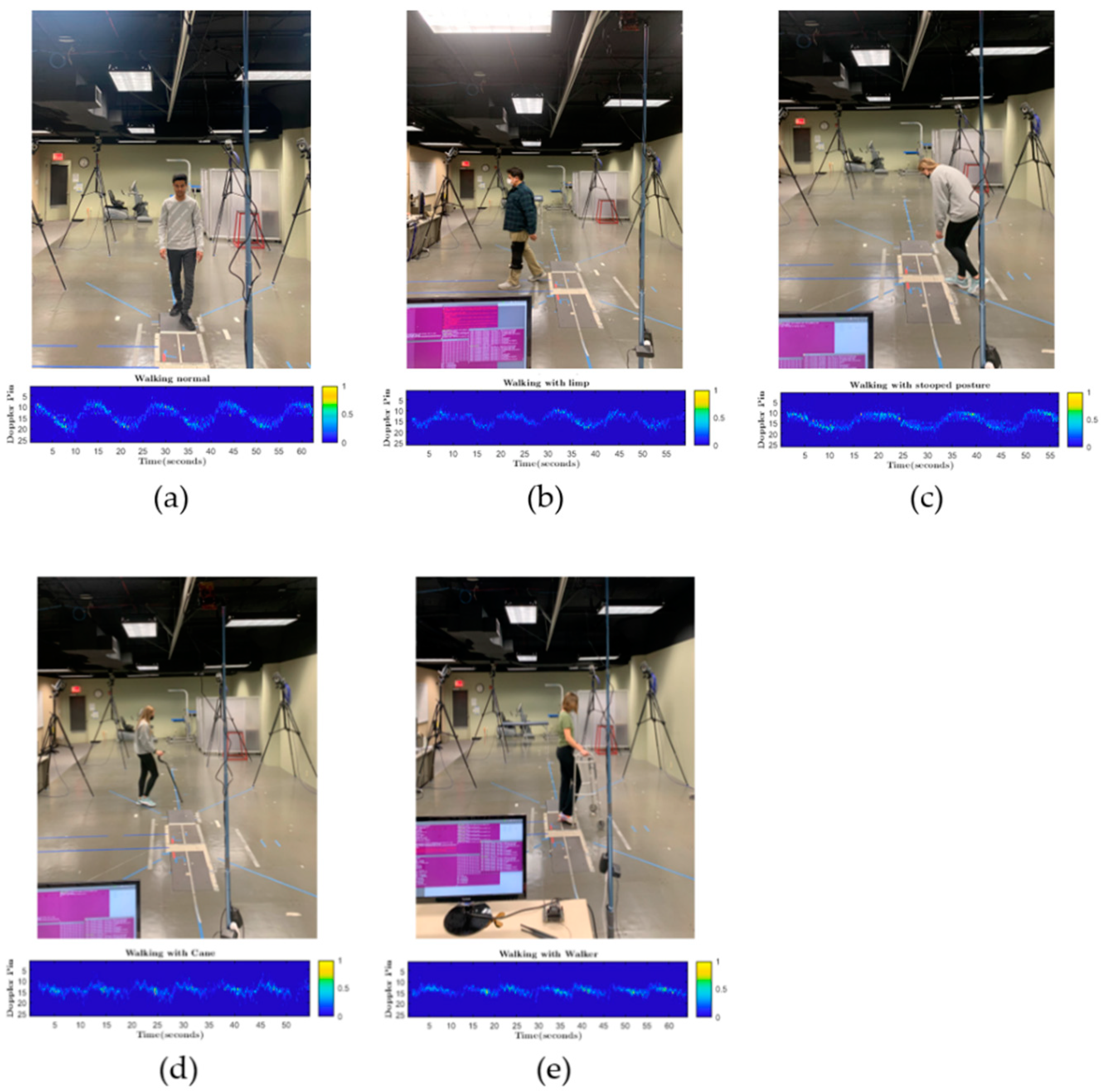

3.1. Detection Using Micro-Doppler Signatures

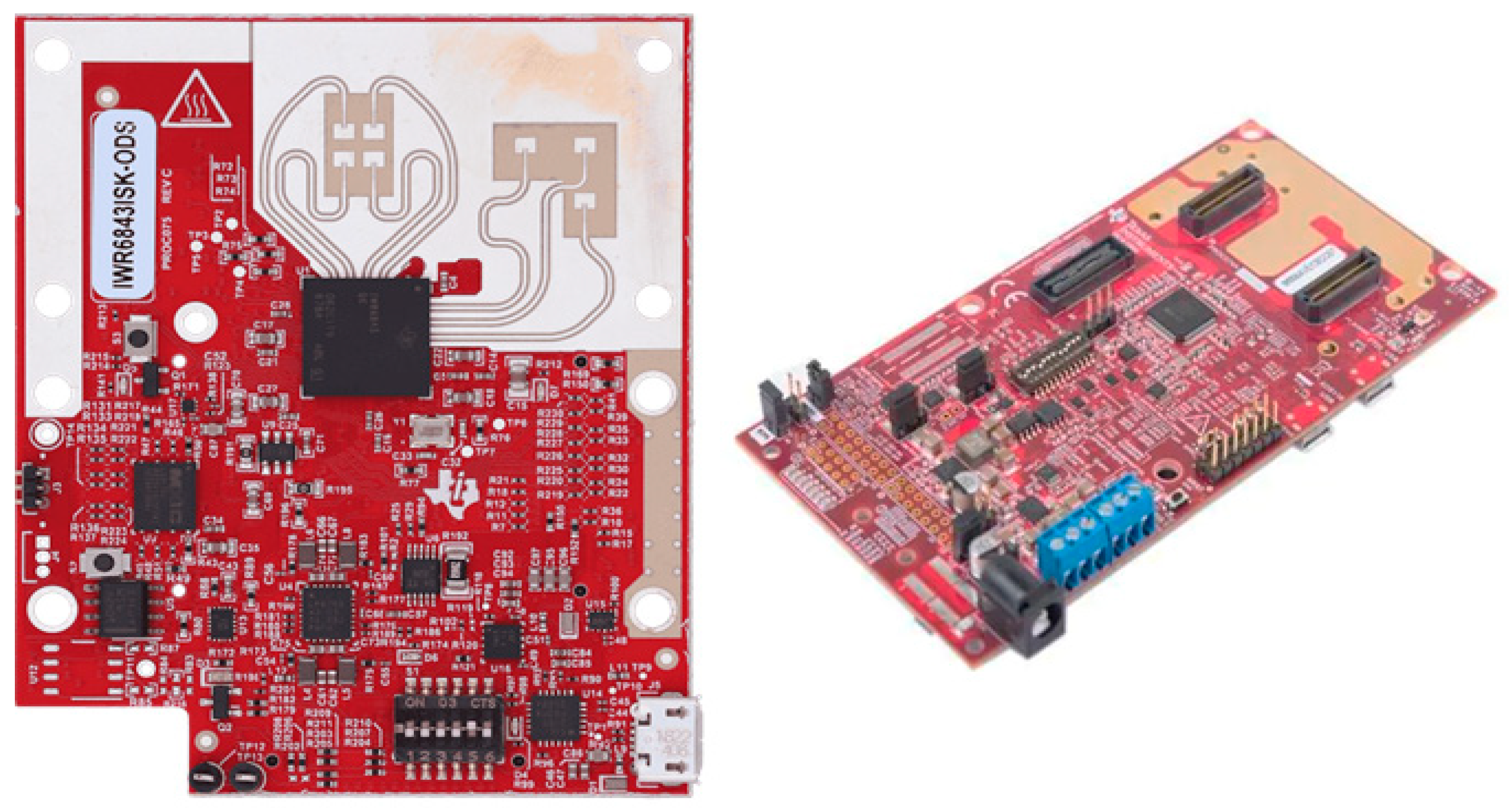

TI IWR6843ISK-ODS MMW Radar

- Range and velocity estimation is performed in the first step using the Fast Fourier Transform (FFT).

- Moving Target Indication (MTI) removes the static clutter points (surrounding reflections) from the data.

- The Constant False Alarm Rate (CFAR) detector picks out the target (moving) points from the noisy data in the fourth step.

- The fifth step includes angle estimation using the FFT.

- Finally, Density-Based Spatial Clustering of Applications with Noise (DBSCAN) is applied to separate the scattered points into different categories, such as regular walking or walking with a limp [42].
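The clutter-removal and detection steps in the list above can be illustrated with a minimal sketch. It is not the processing chain that runs on the radar: the FFT stages are replaced by a simple per-bin power sum, and the function names (`mti_filter`, `power_profile`, `ca_cfar`), the toy signal, and the guard/training/scale settings are all assumptions chosen for illustration.

```python
import cmath
import random
from statistics import fmean


def mti_filter(chirps):
    """MTI by mean subtraction: remove each range bin's average over all chirps.

    chirps: list of chirps, each a list of complex range-bin samples.
    A static reflector has the same value in every chirp, so it cancels.
    """
    n_bins = len(chirps[0])
    means = [sum(c[b] for c in chirps) / len(chirps) for b in range(n_bins)]
    return [[c[b] - means[b] for b in range(n_bins)] for c in chirps]


def power_profile(chirps):
    """Total power per range bin, summed over chirps (stand-in for the Doppler FFT)."""
    return [sum(abs(c[b]) ** 2 for c in chirps) for b in range(len(chirps[0]))]


def ca_cfar(power, guard=1, train=4, scale=5.0):
    """Cell-averaging CFAR on a 1-D power profile.

    A cell is a detection when its power exceeds `scale` times the mean of
    the training cells on both sides; guard cells next to it are skipped.
    Edge cells without a full window are not tested.
    """
    half = guard + train
    hits = []
    for i in range(half, len(power) - half):
        left = power[i - half : i - guard]
        right = power[i + guard + 1 : i + half + 1]
        if power[i] > scale * fmean(left + right):
            hits.append(i)
    return hits


def make_toy_chirps(n_chirps=16, n_bins=24, seed=0):
    """Hypothetical data: noise, a static reflector (bin 7), a moving target (bin 14)."""
    rng = random.Random(seed)
    chirps = []
    for k in range(n_chirps):
        row = [complex(rng.gauss(0, 0.1), rng.gauss(0, 0.1)) for _ in range(n_bins)]
        row[7] += 5.0                                      # same value in every chirp
        row[14] += 2.0 * cmath.exp(2j * cmath.pi * k / 4)  # phase rotates chirp to chirp
        chirps.append(row)
    return chirps


chirps = make_toy_chirps()
raw_hits = ca_cfar(power_profile(chirps))              # static and moving bins
mti_hits = ca_cfar(power_profile(mti_filter(chirps)))  # moving bin only
```

In this toy case the detector flags both the static reflector and the moving target on the raw data, and only the moving target once the MTI step has been applied.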

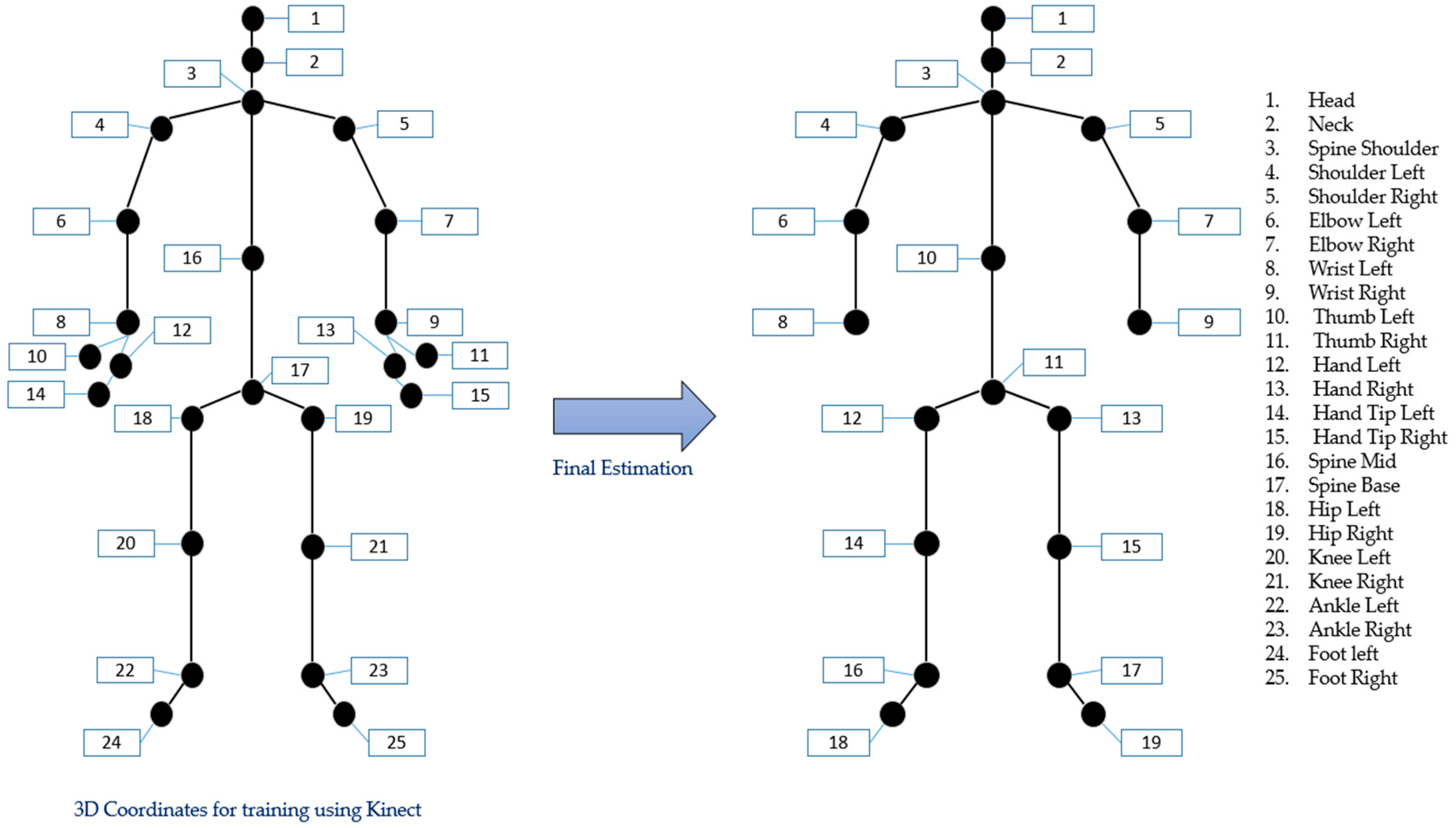

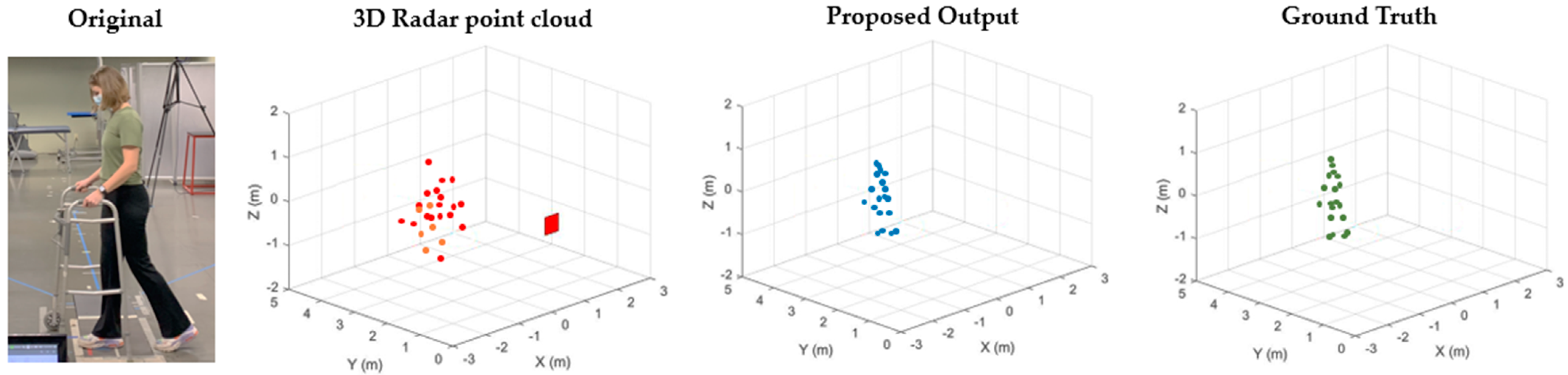

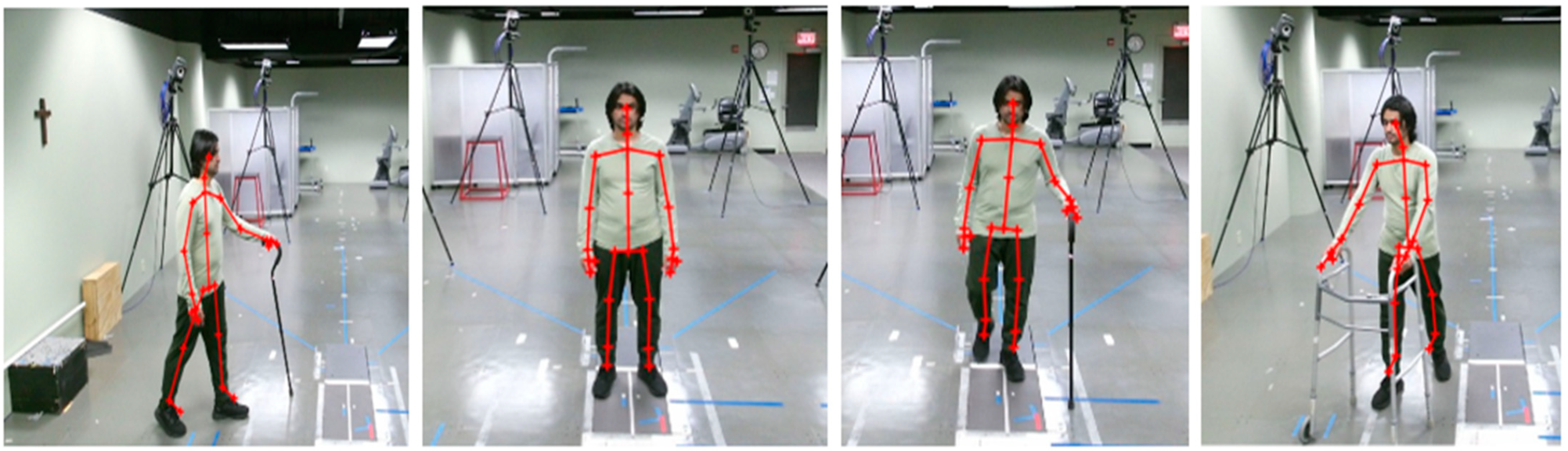
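The DBSCAN step named in the processing chain can be sketched in a few lines. This is a textbook version written for illustration, not the implementation used in the system; the point coordinates, `eps`, and `min_pts` are hypothetical values.

```python
from math import dist

NOISE = -1


def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or NOISE (-1)."""
    labels = [None] * len(points)

    def neighbours(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE          # may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster    # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbours(j)
            if len(nbrs) >= min_pts:   # core point: keep growing the cluster
                queue.extend(nbrs)
    return labels


# Hypothetical radar point cloud (x, y, z in metres): two groups and one stray point.
cloud = [
    (0.0, 2.0, 1.0), (0.1, 2.0, 1.1), (0.0, 2.1, 0.9), (0.1, 2.1, 1.0),
    (2.0, 4.0, 1.0), (2.1, 4.0, 1.0), (2.0, 4.1, 1.1), (1.9, 4.0, 0.9),
    (5.0, 0.5, 2.0),
]
labels = dbscan(cloud, eps=0.3, min_pts=3)
```

Here the two dense groups receive labels 0 and 1 and the isolated point is marked as noise; in practice `eps` and `min_pts` would need tuning to the density of the radar point cloud.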

3.2. Skeletal Pose Estimation Technique

3.3. MMW-Based HAR Algorithms

| Algorithm 1: The MMW-based HAR algorithm using the CNN. |
|---|
| Require: MMW |
| Ensure: Walking Posture |
| for do |
| read radar signal |
| estimate |
| estimate |
| estimate |
| end for |
| for do |
| read MMW and Kinect V2 data |
| extract coordinates |
| extract 5D points 19 points |
| extract 3D points 25 points |
| estimate skeleton joints total 19 points |
| estimate micro-Doppler Signatures |
| get prediction probabilities |
| end for |
| Read |
| Predict Walking Posture |
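Algorithm 1 moves from the 25 points of a Kinect V2 skeleton to 19 skeleton joints. A minimal sketch of that reduction follows; it assumes the six dropped joints are the hand, hand-tip, and thumb joints, which is inferred from the 19 joint names reported in the joint-error table rather than stated by the algorithm. The joint order is the standard Kinect V2 `JointType` enumeration; `select_joints` is a hypothetical helper name.

```python
# Kinect V2 joint names in JointType enumeration order (indices 0..24).
KINECT_V2_JOINTS = [
    "SpineBase", "SpineMid", "Neck", "Head",
    "ShoulderLeft", "ElbowLeft", "WristLeft", "HandLeft",
    "ShoulderRight", "ElbowRight", "WristRight", "HandRight",
    "HipLeft", "KneeLeft", "AnkleLeft", "FootLeft",
    "HipRight", "KneeRight", "AnkleRight", "FootRight",
    "SpineShoulder", "HandTipLeft", "ThumbLeft", "HandTipRight", "ThumbRight",
]

# Assumption: these six joints are the ones not used by the 19-joint skeleton.
DROPPED = {"HandLeft", "HandRight", "HandTipLeft", "ThumbLeft",
           "HandTipRight", "ThumbRight"}

KEPT_INDICES = [i for i, name in enumerate(KINECT_V2_JOINTS) if name not in DROPPED]
KEPT_NAMES = [KINECT_V2_JOINTS[i] for i in KEPT_INDICES]


def select_joints(frame):
    """Reduce one Kinect frame of 25 (x, y, z) tuples to the 19 retained joints."""
    if len(frame) != len(KINECT_V2_JOINTS):
        raise ValueError("expected 25 joints per Kinect V2 frame")
    return [frame[i] for i in KEPT_INDICES]


# Usage with a dummy frame whose x coordinate encodes the original joint index.
dummy_frame = [(float(i), 0.0, 0.0) for i in range(25)]
skeleton_19 = select_joints(dummy_frame)
```

The sketch keeps the Kinect ordering of the retained joints; any reordering to match a particular table or network input would be an additional step.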

- W1: normal walking.

- W2: walking with a stooped posture.

- W3: walking with a limp.

- W4: walking with a walker.

- W5: walking with a cane.
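The last two steps of Algorithm 1 (get prediction probabilities, then predict the walking posture) can be sketched as a softmax followed by an arg-max over the five classes. The sketch assumes the CNN emits one raw score per class in the order W1 to W5; that ordering, the example scores, and the helper names are illustrative assumptions.

```python
import math

# Class labels as listed above; the index order is an assumption.
LABELS = [
    "W1: normal walking",
    "W2: walking with a stooped posture",
    "W3: walking with a limp",
    "W4: walking with a walker",
    "W5: walking with a cane",
]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_posture(scores):
    """Return (label, probability) of the most likely walking posture."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


# Hypothetical network output for one observation window.
label, confidence = predict_posture([0.1, 2.0, 0.3, 0.0, -1.0])
```

Returning the probability alongside the label makes it possible to reject low-confidence windows instead of forcing a decision on every one.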

4. Experiment Setup

Data Collection and Labelling

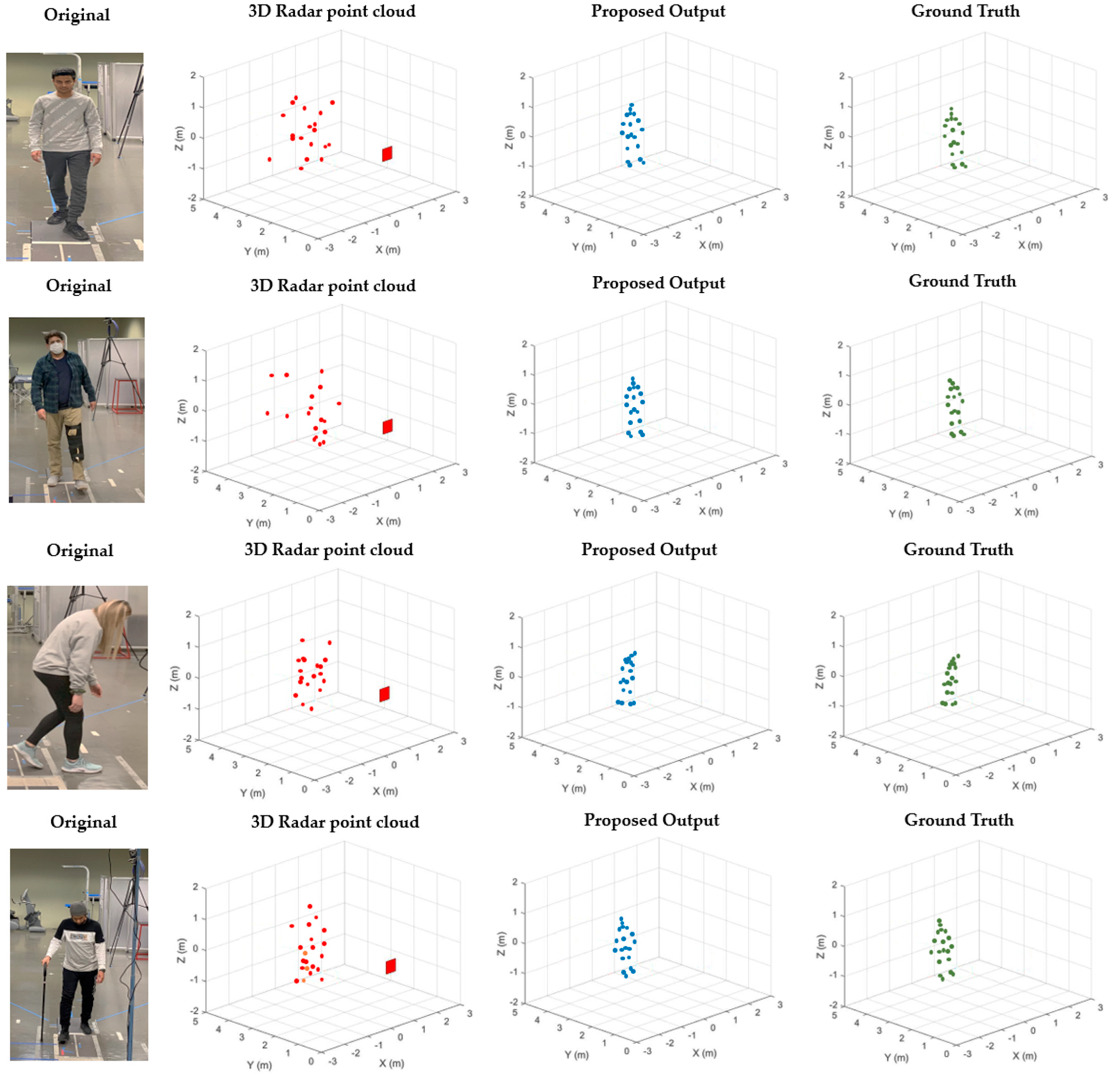

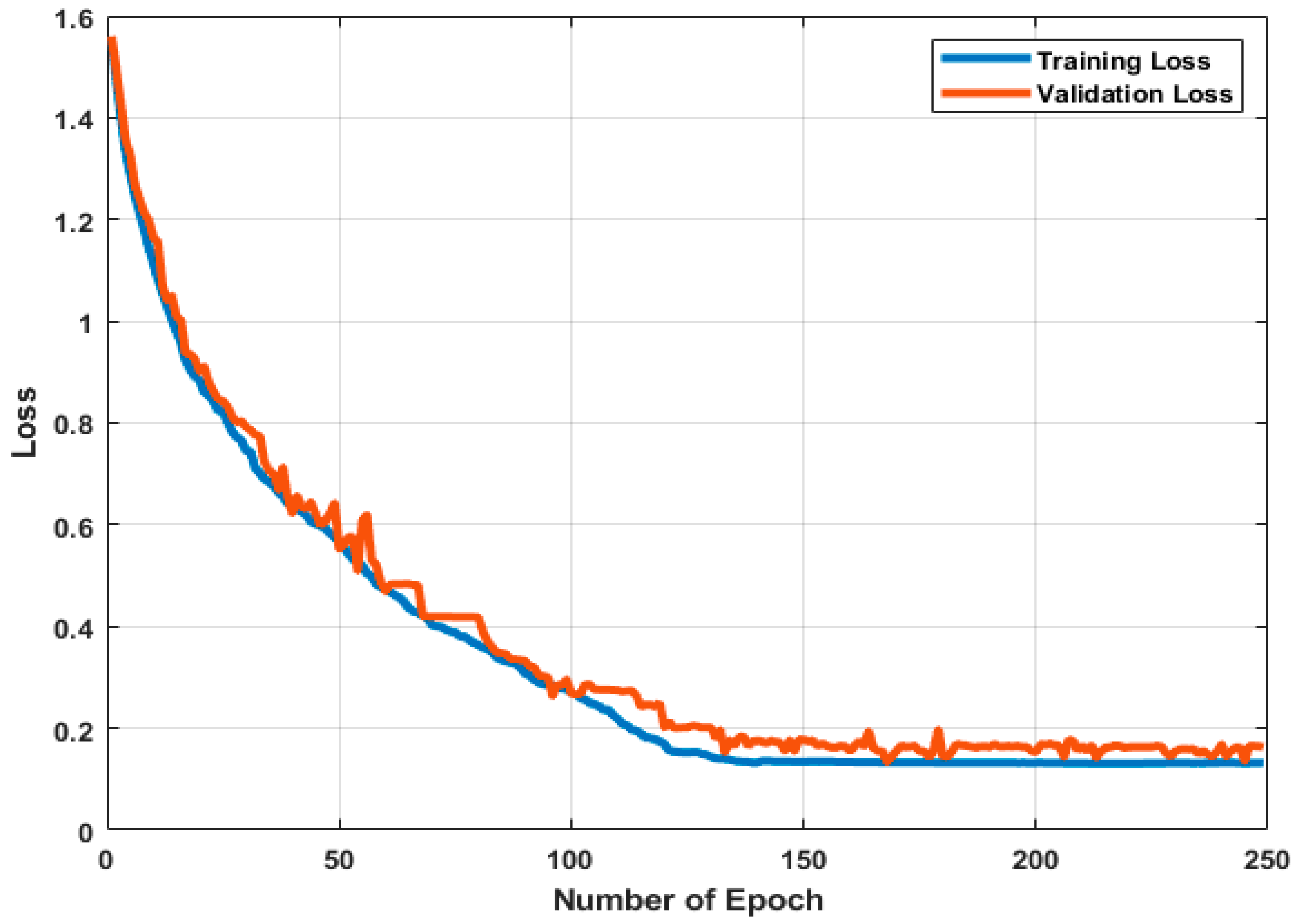

5. Results & Discussion

5.1. Monitoring Individual Activity

5.2. Monitoring Multiple Activities

5.3. Monitoring Different Subjects with Different Activities

5.3.1. The Accuracy Evaluation and Time Analysis

5.3.2. Prediction Accuracy

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mshali, H.; Lemlouma, T.; Moloney, M.; Magoni, D. A Survey on Health Monitoring Systems for Health Smart Homes. Int. J. Ind. Ergon. 2018, 66, 26–56.

2. Guan, C.; Niu, H. Frailty Assessment in Older Adults with Chronic Obstructive Respiratory Diseases. Clin. Interv. Aging 2018, 13, 1513–1524.

3. Saboor, A.; Ahmad, R.; Ahmed, W.; Kiani, A.K.; Moullec, Y.L.; Alam, M.M. On Research Challenges in Hybrid Medium-Access Control Protocols for IEEE 802.15.6 WBANs. IEEE Sens. J. 2019, 19, 8543–8555.

4. Saboor, A.; Mustafa, A.; Ahmad, R.; Khan, M.A.; Haris, M.; Hameed, R. Evolution of Wireless Standards for Health Monitoring. In Proceedings of the 2019 9th Annual Information Technology, Electromechanical Engineering and Microelectronics Conference (IEMECON), Jaipur, India, 13–15 March 2019; pp. 268–272.

5. Khan, M.A.; Saboor, A.; Kim, H.; Park, H. A Systematic Review of Location Aware Schemes in the Internet of Things. Sensors 2021, 21, 3228.

6. Agham, N.; Chaskar, U. Prevalent Approach of Learning Based Cuffless Blood Pressure Measurement System for Continuous Health-Care Monitoring. In Proceedings of the 2019 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Istanbul, Turkey, 26–28 June 2019; pp. 1–5.

7. Baig, M.M.; Afifi, S.; GholamHosseini, H.; Mirza, F. A Systematic Review of Wearable Sensors and IoT-Based Monitoring Applications for Older Adults—A Focus on Ageing Population and Independent Living. J. Med. Syst. 2019, 43, 233.

8. Alanazi, M.A.; Alhazmi, A.K.; Yakopcic, C.; Chodavarapu, V.P. Machine Learning Models for Human Fall Detection Using Millimeter Wave Sensor. In Proceedings of the 2021 55th Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 24 March 2021; pp. 1–5.

9. Yu, C.; Xu, Z.; Yan, K.; Chien, Y.-R.; Fang, S.-H.; Wu, H.-C. Noninvasive Human Activity Recognition Using Millimeter-Wave Radar. IEEE Syst. J. 2022, 16, 3036–3047.

10. di Biase, L.; Di Santo, A.; Caminiti, M.L.; De Liso, A.; Shah, S.A.; Ricci, L.; Di Lazzaro, V. Gait Analysis in Parkinson’s Disease: An Overview of the Most Accurate Markers for Diagnosis and Symptoms Monitoring. Sensors 2020, 20, 3529.

11. Usmani, S.; Saboor, A.; Haris, M.; Khan, M.A.; Park, H. Latest Research Trends in Fall Detection and Prevention Using Machine Learning: A Systematic Review. Sensors 2021, 21, 5134.

12. Mekruksavanich, S.; Jitpattanakul, A. LSTM Networks Using Smartphone Data for Sensor-Based Human Activity Recognition in Smart Homes. Sensors 2021, 21, 1636.

13. Alrashdi, I.; Siddiqi, M.H.; Alhwaiti, Y.; Alruwaili, M.; Azad, M. Maximum Entropy Markov Model for Human Activity Recognition Using Depth Camera. IEEE Access 2021, 9, 160635–160645.

14. Jia, Y.; Guo, Y.; Wang, G.; Song, R.; Cui, G.; Zhong, X. Multi-Frequency and Multi-Domain Human Activity Recognition Based on SFCW Radar Using Deep Learning. Neurocomputing 2021, 444, 274–287.

15. Reddy Maddikunta, P.K.; Srivastava, G.; Reddy Gadekallu, T.; Deepa, N.; Boopathy, P. Predictive Model for Battery Life in IoT Networks. IET Intell. Transp. Syst. 2020, 14, 1388–1395.

16. van Wamelen, D.J.; Sringean, J.; Trivedi, D.; Carroll, C.B.; Schrag, A.E.; Odin, P.; Antonini, A.; Bloem, B.R.; Bhidayasiri, R.; Chaudhuri, K.R. Digital Health Technology for Non-Motor Symptoms in People with Parkinson’s Disease: Futile or Future? Parkinsonism Relat. Disord. 2021, 89, 186–194.

17. Sengupta, A.; Jin, F.; Cao, S. NLP Based Skeletal Pose Estimation Using MmWave Radar Point-Cloud: A Simulation Approach. In Proceedings of the 2020 IEEE Radar Conference (RadarConf20), Florence, Italy, 21 September 2020; pp. 1–6.

18. Yang, X.; Liu, J.; Chen, Y.; Guo, X.; Xie, Y. MU-ID: Multi-User Identification Through Gaits Using Millimeter Wave Radios. In Proceedings of the IEEE INFOCOM 2020—IEEE Conference on Computer Communications, Toronto, ON, Canada, 6–9 July 2020; pp. 2589–2598.

19. Yang, Z.; Pathak, P.H.; Zeng, Y.; Liran, X.; Mohapatra, P. Monitoring Vital Signs Using Millimeter Wave. In Proceedings of the 17th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Paderborn, Germany, 5 July 2016; pp. 211–220.

20. Cen, S.H.; Newman, P. Precise Ego-Motion Estimation with Millimeter-Wave Radar Under Diverse and Challenging Conditions. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1–8.

21. Wonsetler, E.C.; Bowden, M.G. A Systematic Review of Mechanisms of Gait Speed Change Post-Stroke. Part 1: Spatiotemporal Parameters and Asymmetry Ratios. Top. Stroke Rehabil. 2017, 24, 435–446.

22. Mulas, I.; Putzu, V.; Asoni, G.; Viale, D.; Mameli, I.; Pau, M. Clinical Assessment of Gait and Functional Mobility in Italian Healthy and Cognitively Impaired Older Persons Using Wearable Inertial Sensors. Aging Clin. Exp. Res. 2021, 33, 1853–1864.

23. Saboor, A.; Kask, T.; Kuusik, A.; Alam, M.M.; Le Moullec, Y.; Niazi, I.K.; Zoha, A.; Ahmad, R. Latest Research Trends in Gait Analysis Using Wearable Sensors and Machine Learning: A Systematic Review. IEEE Access 2020, 8, 167830–167864.

24. Zebin, T.; Scully, P.J.; Ozanyan, K.B. Human Activity Recognition with Inertial Sensors Using a Deep Learning Approach. In Proceedings of the 2016 IEEE SENSORS, Orlando, FL, USA, 30 October–3 November 2016; pp. 1–3.

25. Muro-de-la-Herran, A.; Garcia-Zapirain, B.; Mendez-Zorrilla, A. Gait Analysis Methods: An Overview of Wearable and Non-Wearable Systems, Highlighting Clinical Applications. Sensors 2014, 14, 3362–3394.

26. Rescio, G.; Leone, A.; Siciliano, P. Supervised Machine Learning Scheme for Electromyography-Based Pre-Fall Detection System. Expert Syst. Appl. 2018, 100, 95–105.

27. Umair Bin Altaf, M.; Butko, T.; Juang, B.-H. Acoustic Gaits: Gait Analysis With Footstep Sounds. IEEE Trans. Biomed. Eng. 2015, 62, 2001–2011.

28. Chiang, T.-H.; Su, Y.-J.; Shiu, H.-R.; Tseng, Y.-C. 3D Gait Tracking by Acoustic Doppler Effects. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 3146–3149.

29. Huitema, R.B.; Hof, A.L.; Postema, K. Ultrasonic Motion Analysis System—Measurement of Temporal and Spatial Gait Parameters. J. Biomech. 2002, 35, 837–842.

30. Maki, H.; Ogawa, H.; Yonezawa, Y.; Hahn, A.W.; Caldwell, W.M. A New Ultrasonic Stride Length Measuring System. Biomed. Sci. Instrum. 2012, 48, 282–287.

31. Steinert, A.; Sattler, I.; Otte, K.; Röhling, H.; Mansow-Model, S.; Müller-Werdan, U. Using New Camera-Based Technologies for Gait Analysis in Older Adults in Comparison to the Established GAITRite System. Sensors 2019, 20, 125.

32. Yang, C.; Ugbolue, U.; Carse, B.; Stankovic, V.; Stankovic, L.; Rowe, P. Multiple Marker Tracking in a Single-Camera System for Gait Analysis. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 3128–3131.

33. Zhao, M.; Li, T.; Alsheikh, M.A.; Tian, Y.; Zhao, H.; Torralba, A.; Katabi, D. Through-Wall Human Pose Estimation Using Radio Signals. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7356–7365.

34. Cagliyan, B.; Gurbuz, S.Z. Micro-Doppler-Based Human Activity Classification Using the Mote-Scale BumbleBee Radar. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2135–2139.

35. Sengupta, A.; Cao, S. MmPose-NLP: A Natural Language Processing Approach to Precise Skeletal Pose Estimation Using MmWave Radars. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–12.

36. An, S.; Ogras, U.Y. MARS: MmWave-Based Assistive Rehabilitation System for Smart Healthcare. ACM Trans. Embed. Comput. Syst. 2021, 20, 1–22.

37. Seifert, A.-K.; Amin, M.G.; Zoubir, A.M. Toward Unobtrusive In-Home Gait Analysis Based on Radar Micro-Doppler Signatures. IEEE Trans. Biomed. Eng. 2019, 66, 2629–2640.

38. Sahraian, M.A.; Yadegari, S.; Azarpajouh, R.; Forughipour, M. Avascular Necrosis of the Femoral Head in Multiple Sclerosis: Report of Five Patients. Neurol. Sci. 2012, 33, 1443–1446.

39. Benatru, I.; Vaugoyeau, M.; Azulay, J.-P. Postural Disorders in Parkinson’s Disease. Neurophysiol. Clin. Neurophysiol. 2008, 38, 459–465.

40. Hobeika, C.P. Equilibrium and Balance in the Elderly. Ear Nose Throat J. 1999, 78, 558–566.

41. Texas Instruments. IWR6843 Intelligent MmWave Overhead Detection Sensor (ODS) Antenna Plug-in Module. Available online: https://www.ti.com/tool/IWR6843ISK-ODS (accessed on 28 June 2022).

42. Sander, J.; Ester, M.; Kriegel, H.-P.; Xu, X. Density-Based Clustering in Spatial Databases: The Algorithm GDBSCAN and Its Applications. Data Min. Knowl. Discov. 1998, 2, 169–194.

43. Anthimopoulos, M.; Christodoulidis, S.; Ebner, L.; Christe, A.; Mougiakakou, S. Lung Pattern Classification for Interstitial Lung Diseases Using a Deep Convolutional Neural Network. IEEE Trans. Med. Imaging 2016, 35, 1207–1216.

44. Jetson Nano Developer Kit. Available online: https://developer.nvidia.com/embedded/jetson-nano-developer-kit (accessed on 28 June 2022).

| Parameter | Value |
|---|---|
| Start frequency | 60.75 GHz |
| Number of TX | 3 TX |
| Number of RX | 4 RX |
| Number of samples per chirp | 96 |
| Number of chirps | 288 |
| Maximum velocity | 16.2 km/h |
| Velocity resolution | 0.324 km/h |
| Idle time | 30 µs |
| ADC valid start time | 25 µs |
| Sampling frequency (F_s) | 2.950 Msps |
| Central frequency (F_c) | 63.008 GHz |
| Valid sweep bandwidth (BW) | 1780.393 MHz |
| Frame duration | 55 ms |
| Maximum unambiguous range | 8.083 m |
| Range resolution (∆R) | 0.084 m |
| Maximum radial velocity | ±4.450 m/s |
| Doppler resolution (∆D) | 0.093 m/s |
| Range detection threshold | 15 dB |
| Doppler detection threshold | 15 dB |
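Several entries in the radar configuration table are linked by standard FMCW relations, and a short calculation shows how they fit together. The sketch rests on two assumptions that the table does not state: that the maximum range equals the number of samples per chirp times the range resolution (complex baseband sampling), and that the 288 chirps are shared among the 3 transmitters so that each frame has 96 Doppler bins.

```python
C = 299_792_458.0        # speed of light in m/s

# Values taken from the configuration table.
bandwidth_hz = 1780.393e6
samples_per_chirp = 96
chirps_per_frame = 288
num_tx = 3
v_max = 4.450            # maximum radial velocity in m/s (table lists +/- 4.450)

# Range resolution: dR = c / (2 * B), about 0.084 m.
range_res = C / (2 * bandwidth_hz)

# Assumption: one range bin per ADC sample, so R_max = N_samples * dR, about 8.08 m.
max_range = samples_per_chirp * range_res

# Assumption: chirps are time-multiplexed across the transmitters.
doppler_bins = chirps_per_frame // num_tx

# The velocity span of 2 * v_max is divided over the Doppler bins, about 0.093 m/s.
doppler_res = 2 * v_max / doppler_bins

print(f"range resolution   {range_res:.3f} m")
print(f"maximum range      {max_range:.3f} m")
print(f"Doppler resolution {doppler_res:.3f} m/s")
```

Under these assumptions the computed range resolution, maximum range, and Doppler resolution agree with the tabulated 0.084 m, 8.083 m, and 0.093 m/s to within rounding.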

| Parameter | Mean ± SD (Range) |
|---|---|
| Age (years) | 24 ± 7.36 (21–53) |
| Height (cm) | 170 ± 5.55 (160–185.42) |
| Weight (kg) | 75 ± 12.59 (55–115) |
| BMI | 25.47 ± 4.36 (19.26–40.75) |
| Gender (M/F) | 42/32 |

| No. | Joint | X (Horizontal) MAE (cm) | X (Horizontal) RMSE (cm) | Y (Depth) MAE (cm) | Y (Depth) RMSE (cm) | Z (Vertical) MAE (cm) | Z (Vertical) RMSE (cm) |
|---|---|---|---|---|---|---|---|
| 1 | Head | 6.35 | 9.46 | 2.59 | 3.59 | 7.10 | 9.58 |
| 2 | Neck | 5.68 | 8.71 | 2.47 | 3.23 | 6.47 | 8.87 |
| 3 | Spine Shoulder | 5.51 | 8.46 | 2.13 | 3.02 | 6.30 | 8.61 |
| 4 | Shoulder Left | 5.82 | 8.80 | 2.28 | 3.28 | 5.77 | 8.01 |
| 5 | Shoulder Right | 5.64 | 8.58 | 2.66 | 4.02 | 6.01 | 8.11 |
| 6 | Elbow Left | 6.41 | 9.12 | 3.26 | 5.01 | 7.08 | 9.61 |
| 7 | Elbow Right | 6.85 | 9.63 | 3.61 | 5.62 | 7.30 | 9.81 |
| 8 | Wrist Left | 9.23 | 12.66 | 4.02 | 5.71 | 12.45 | 16.23 |
| 9 | Wrist Right | 9.62 | 13.14 | 4.13 | 6.14 | 13.03 | 16.52 |
| 10 | Spine Mid | 5.05 | 7.81 | 2.02 | 2.86 | 5.71 | 7.85 |
| 11 | Spine Base | 4.55 | 7.12 | 2.45 | 3.87 | 4.87 | 6.72 |
| 12 | Hip Left | 4.54 | 7.02 | 2.45 | 3.87 | 4.72 | 6.56 |
| 13 | Hip Right | 4.45 | 7.02 | 2.56 | 4.04 | 4.82 | 6.67 |
| 14 | Knee Left | 4.46 | 7.01 | 3.07 | 4.52 | 2.14 | 3.43 |
| 15 | Knee Right | 5.10 | 7.42 | 3.27 | 4.72 | 2.50 | 4.21 |
| 16 | Ankle Left | 4.45 | 7.08 | 3.08 | 4.54 | 2.23 | 3.42 |
| 17 | Ankle Right | 5.81 | 8.32 | 3.34 | 5.09 | 1.65 | 4.26 |
| 18 | Foot Left | 5.48 | 8.25 | 3.72 | 6.02 | 2.05 | 4.12 |
| 19 | Foot Right | 6.27 | 8.87 | 3.46 | 5.42 | 2.10 | 4.69 |
| | Average over 19 points | 5.86 | 8.66 | 2.98 | 4.45 | 5.49 | 7.75 |
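The joint-error table reports the mean absolute error (MAE) and root-mean-square error (RMSE) per axis. A minimal sketch of how such per-axis figures can be computed for one joint is given below; it assumes the radar estimate is compared frame by frame against a reference skeleton (presumably the Kinect V2 data read in Algorithm 1), and the sample coordinates are made up for illustration.

```python
from math import sqrt


def mae(estimates, references):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(e - r) for e, r in zip(estimates, references)) / len(estimates)


def rmse(estimates, references):
    """Root-mean-square error between two equal-length sequences."""
    return sqrt(sum((e - r) ** 2 for e, r in zip(estimates, references)) / len(estimates))


def per_axis_errors(est_xyz, ref_xyz):
    """Return {"X": (MAE, RMSE), "Y": ..., "Z": ...} for one joint.

    est_xyz, ref_xyz: lists of (x, y, z) positions, one tuple per frame.
    """
    errors = {}
    for axis, name in enumerate("XYZ"):
        est = [p[axis] for p in est_xyz]
        ref = [p[axis] for p in ref_xyz]
        errors[name] = (mae(est, ref), rmse(est, ref))
    return errors


# Hypothetical positions of one joint over two frames, in cm.
estimated = [(1.0, 2.0, 3.0), (2.0, 2.0, 5.0)]
reference = [(0.0, 2.0, 3.0), (4.0, 2.0, 1.0)]
errors = per_axis_errors(estimated, reference)
```

Because RMSE squares each error before averaging, it is never smaller than MAE and grows faster when a few frames are far off, which matches the pattern in the table where every RMSE exceeds the corresponding MAE.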


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alanazi, M.A.; Alhazmi, A.K.; Alsattam, O.; Gnau, K.; Brown, M.; Thiel, S.; Jackson, K.; Chodavarapu, V.P. Towards a Low-Cost Solution for Gait Analysis Using Millimeter Wave Sensor and Machine Learning. Sensors 2022, 22, 5470. https://doi.org/10.3390/s22155470
