ScatterHough: Automatic Lane Detection from Noisy LiDAR Data

Abstract

1. Introduction

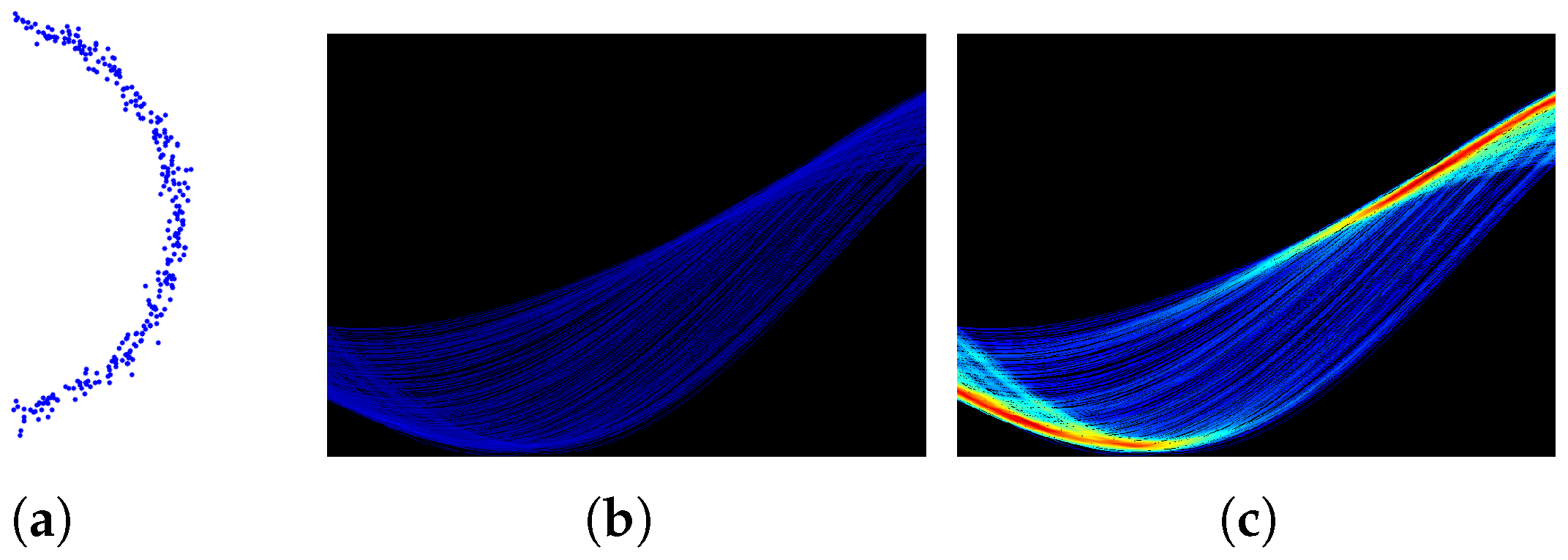

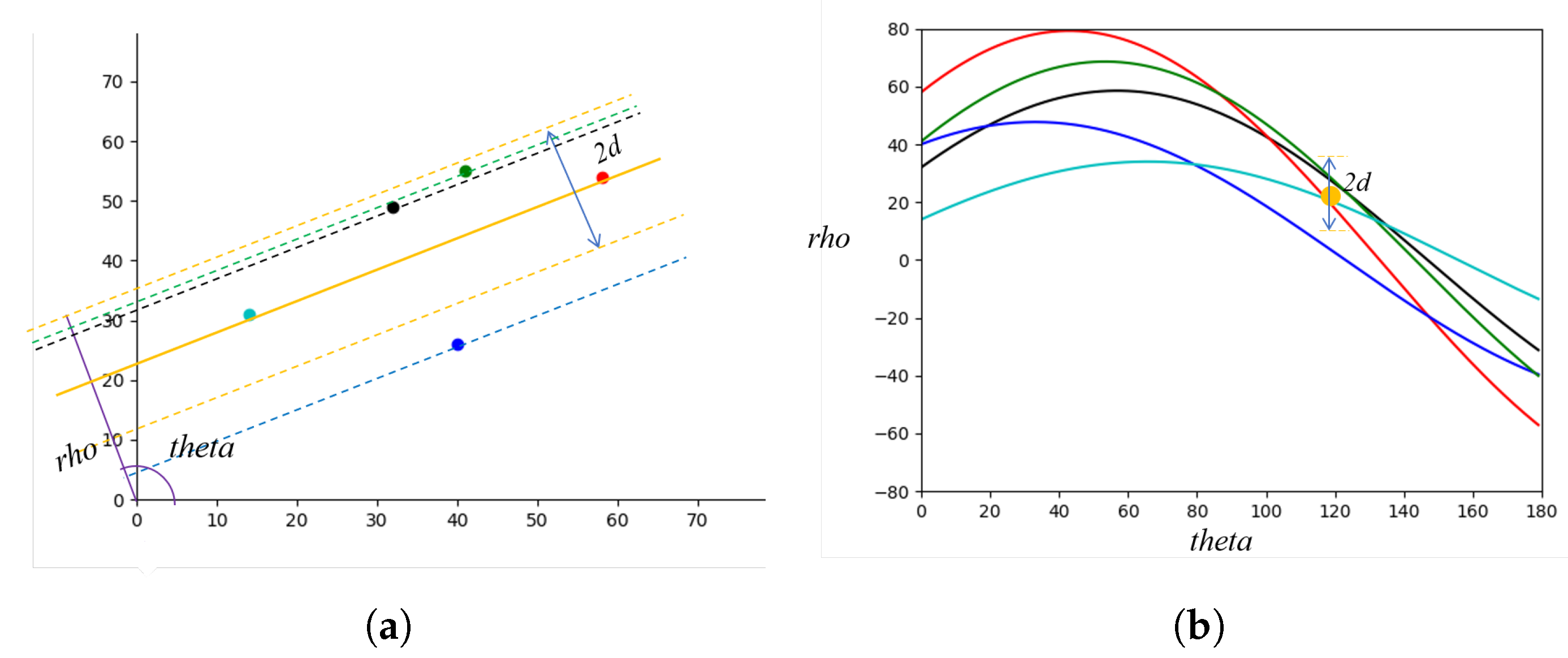

- A neighbor voting method is introduced into the Hough transform (HT) so that points in the neighborhood of the estimated value can also vote, tailored for scatter points;

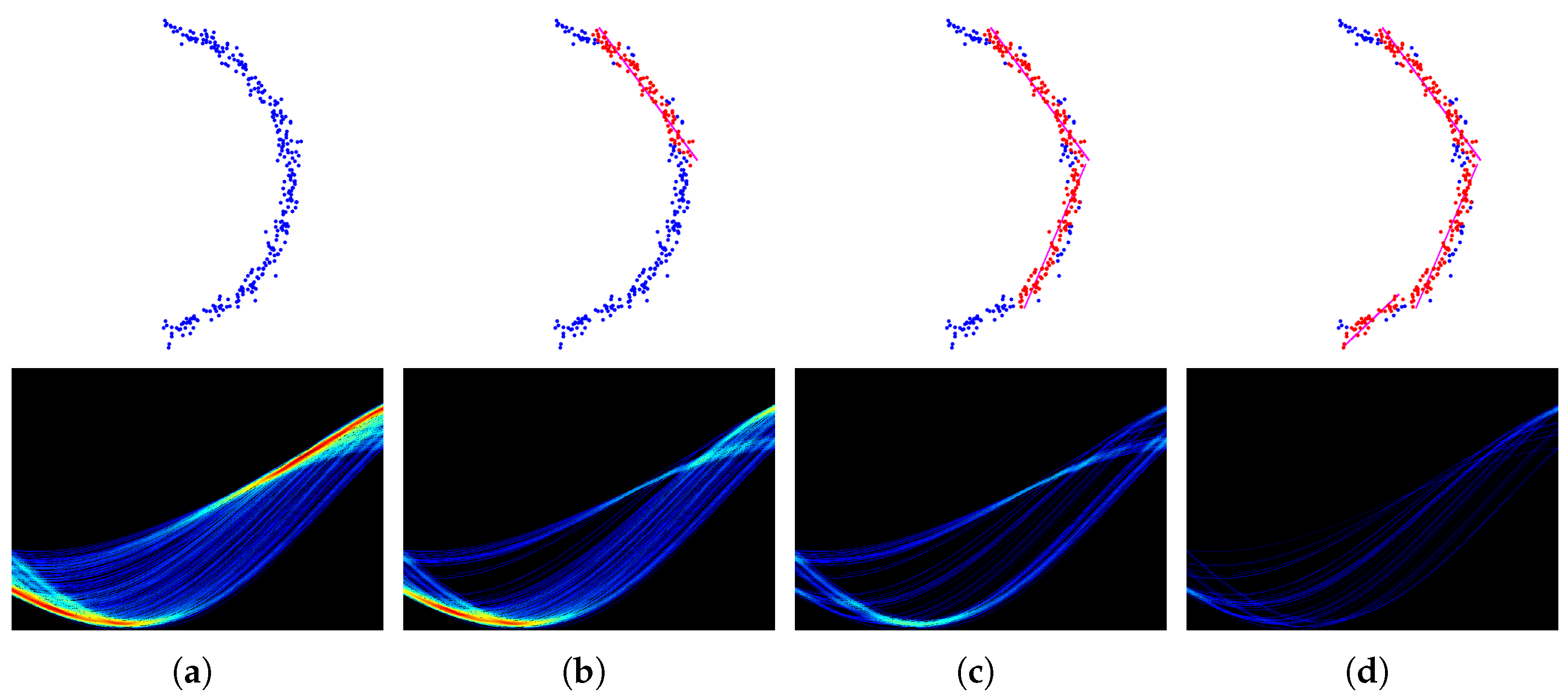

- A neighbor vote-reduction method is introduced into HT to drop votes that already contribute to existing fitted lines, which improves curve fitting;

- Experimental results on the PandaSet dataset show that our method outperforms other line-fitting approaches.
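As a rough illustration of the neighbor-voting idea in the first contribution, the following Python sketch lets each point vote for its own (θ, ρ) cell and for the adjacent ρ cells. It is a minimal sketch under stated assumptions, not the paper's Algorithm 1: the function name `hough_neighbor_voting`, the parameters `n_theta`, `rho_res`, and `radius`, and the choice to spread votes only along ρ are all hypothetical.

```python
import math


def hough_neighbor_voting(points, n_theta=180, rho_res=0.25, radius=1):
    """Hough accumulator in which each point also votes for nearby rho cells.

    points  : iterable of (x, y) pairs, e.g. LiDAR returns on the ground plane.
    radius  : hypothetical neighborhood size, in rho cells, around the
              estimated cell (radius=0 gives ordinary Hough voting).
    Returns the accumulator (list of rows, one per angle), the angle list,
    and rho_max, the offset used to map rho to a non-negative cell index.
    """
    points = list(points)
    thetas = [math.pi * k / n_theta for k in range(n_theta)]
    rho_max = max(math.hypot(x, y) for x, y in points)
    n_rho = int(math.ceil(2 * rho_max / rho_res)) + 1
    acc = [[0] * n_rho for _ in range(n_theta)]

    for x, y in points:
        for k, theta in enumerate(thetas):
            # Normal form of a line: rho = x cos(theta) + y sin(theta).
            rho = x * math.cos(theta) + y * math.sin(theta)
            centre = int(round((rho + rho_max) / rho_res))
            # Assumed neighbor rule: vote for the estimated cell and for
            # every cell within `radius` of it along the rho axis.
            for b in range(centre - radius, centre + radius + 1):
                if 0 <= b < n_rho:
                    acc[k][b] += 1
    return acc, thetas, rho_max


# Points scattered around the line y = 2 (alternating 2.1 and 1.9).
noisy = [(i, 2.1 if i % 2 == 0 else 1.9) for i in range(10)]
plain, _, _ = hough_neighbor_voting(noisy, radius=0)
spread, _, _ = hough_neighbor_voting(noisy, radius=1)
print(max(plain[90]), max(spread[90]))  # strongest cell at theta = 90 degrees
```

With `radius=0` the votes of the scattered points split across two adjacent cells at θ = 90°, whereas a radius of one cell lets them accumulate in a single cell, which is the effect the neighbor-voting contribution targets.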

2. Related Work

3. The Proposed ScatterHough

3.1. Neighbor Voting

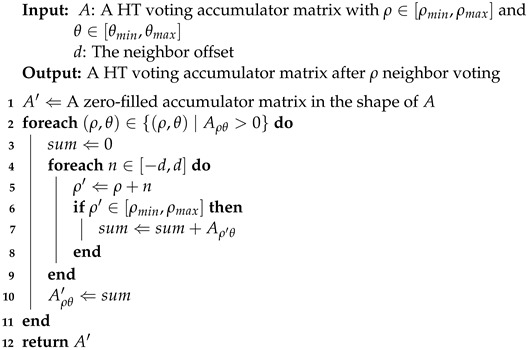

Algorithm 1: Neighbor Voting.

3.2. Neighbor Vote-Reduction

Algorithm 2: Neighbor Vote-reduction.
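The vote-reduction idea, dropping votes that already contribute to a fitted line before searching for the next one, can be sketched as follows. This is a hypothetical illustration rather than the paper's Algorithm 2: the helper names (`cast`, `peak`, `detect_lines`), the constants, and the rule that a point supports a line when its neighbor votes reached the peak cell are all assumptions.

```python
import math

# Hypothetical settings: angle steps, rho cell size, neighbor radius in cells.
N_THETA, RHO_RES, RADIUS = 180, 0.25, 1


def rho_cell(point, k, rho_max):
    """Index of the rho cell a point falls into at angle index k."""
    theta = math.pi * k / N_THETA
    rho = point[0] * math.cos(theta) + point[1] * math.sin(theta)
    return int(round((rho + rho_max) / RHO_RES))


def cast(acc, point, rho_max, sign):
    """Add (sign=+1) or withdraw (sign=-1) all neighbor votes of one point."""
    for k in range(N_THETA):
        centre = rho_cell(point, k, rho_max)
        for b in range(centre - RADIUS, centre + RADIUS + 1):
            if 0 <= b < len(acc[k]):
                acc[k][b] += sign


def peak(acc):
    """Return (votes, angle index, rho cell) of the strongest cell."""
    return max((v, k, b) for k, row in enumerate(acc) for b, v in enumerate(row))


def detect_lines(points, n_lines=2):
    """Extract up to n_lines lines as (theta, rho, supporting points)."""
    points = list(points)
    rho_max = max(math.hypot(x, y) for x, y in points)
    n_rho = int(math.ceil(2 * rho_max / RHO_RES)) + 1
    acc = [[0] * n_rho for _ in range(N_THETA)]
    for p in points:
        cast(acc, p, rho_max, +1)

    remaining, lines = points, []
    for _ in range(n_lines):
        votes, k, b = peak(acc)
        if votes <= 0:
            break
        # Points whose neighbor votes reached the peak cell support this line.
        support = [p for p in remaining if abs(rho_cell(p, k, rho_max) - b) <= RADIUS]
        # Vote reduction: withdraw every vote those points cast, so they
        # cannot influence the next peak.
        for p in support:
            cast(acc, p, rho_max, -1)
        remaining = [p for p in remaining if p not in support]
        lines.append((math.pi * k / N_THETA, b * RHO_RES - rho_max, support))
    return lines


# Two parallel rows of points: ten on y = 2 and six on y = 5.
rows = [(x, 2.0) for x in range(10)] + [(x, 5.0) for x in range(6)]
for theta, rho, support in detect_lines(rows):
    print(round(math.degrees(theta)), round(rho, 2), len(support))
```

Because the votes of the first line's points are withdrawn exactly, the second peak is determined only by the points that remain. Ties between equally strong cells are broken arbitrarily here, so the reported angle can be a few degrees off the true direction.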

4. Evaluation

4.1. Dataset

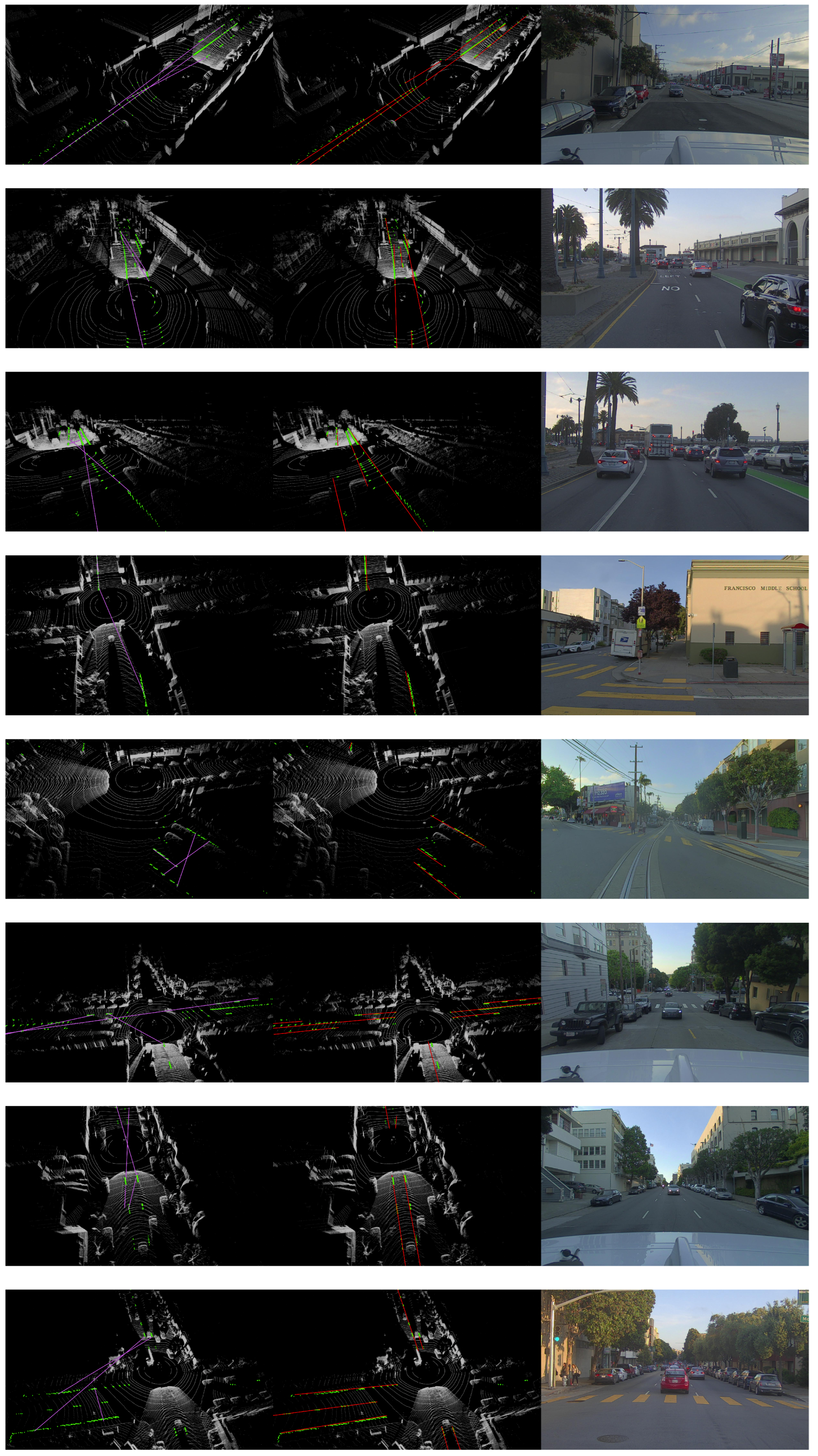

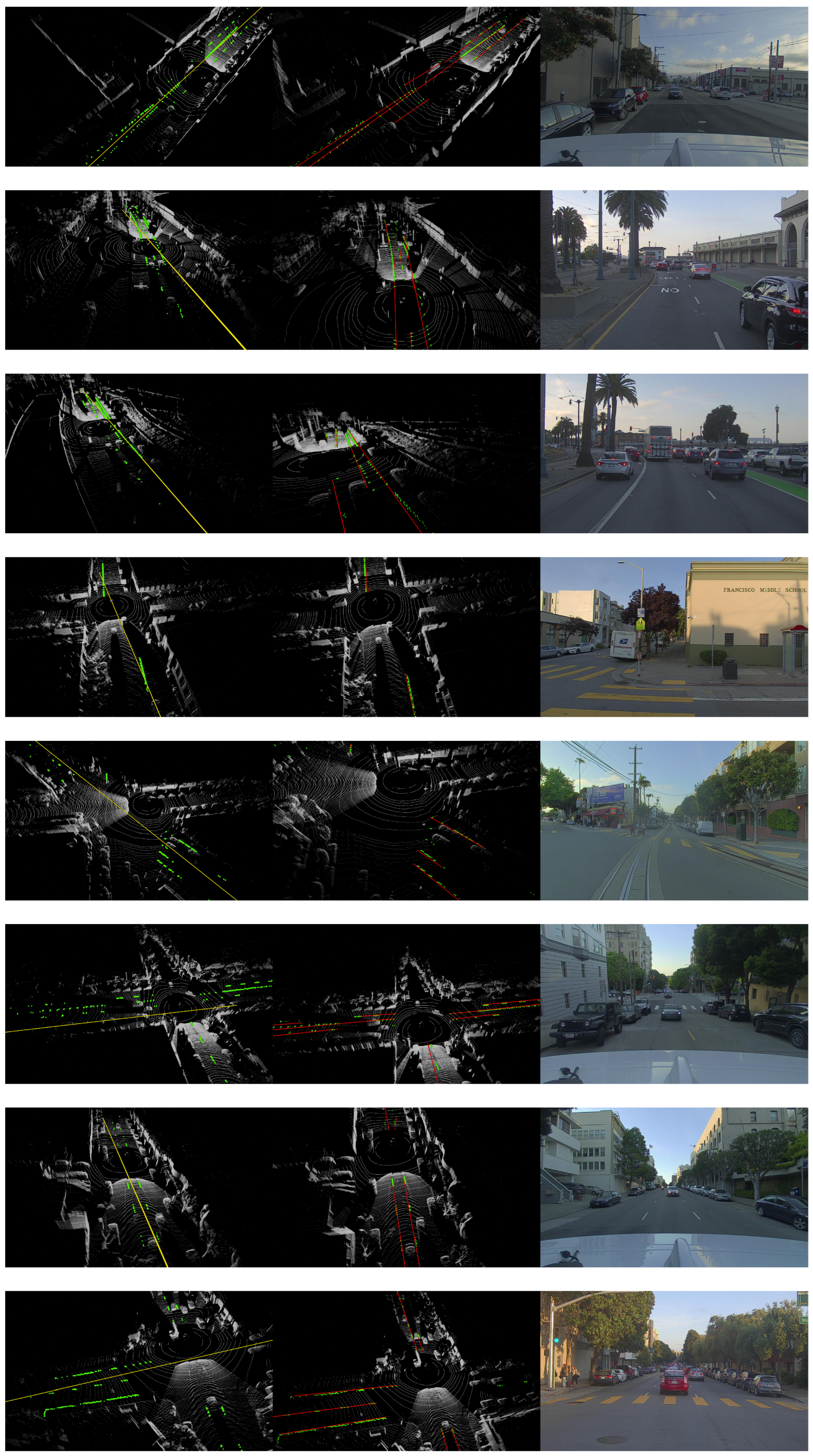

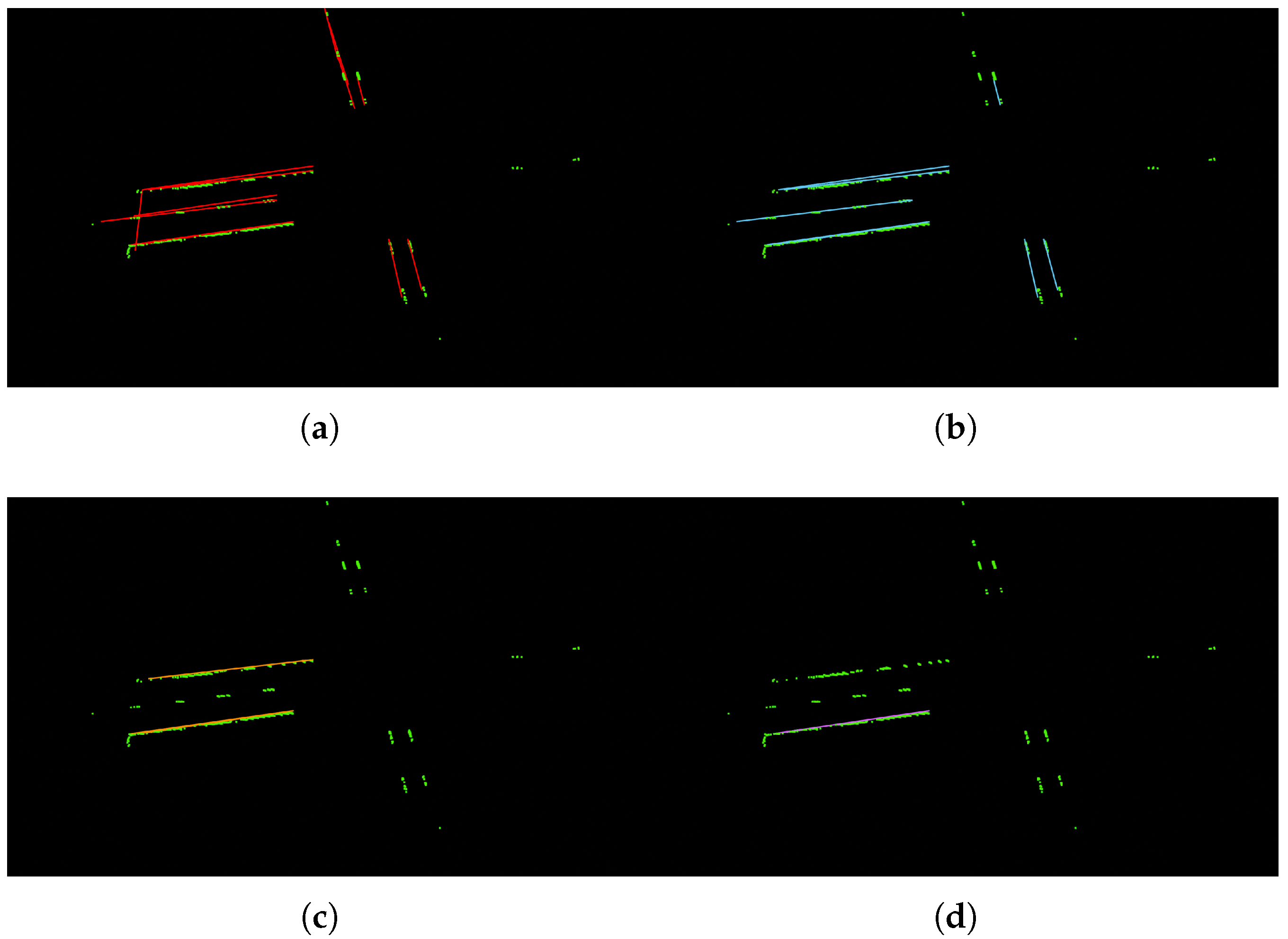

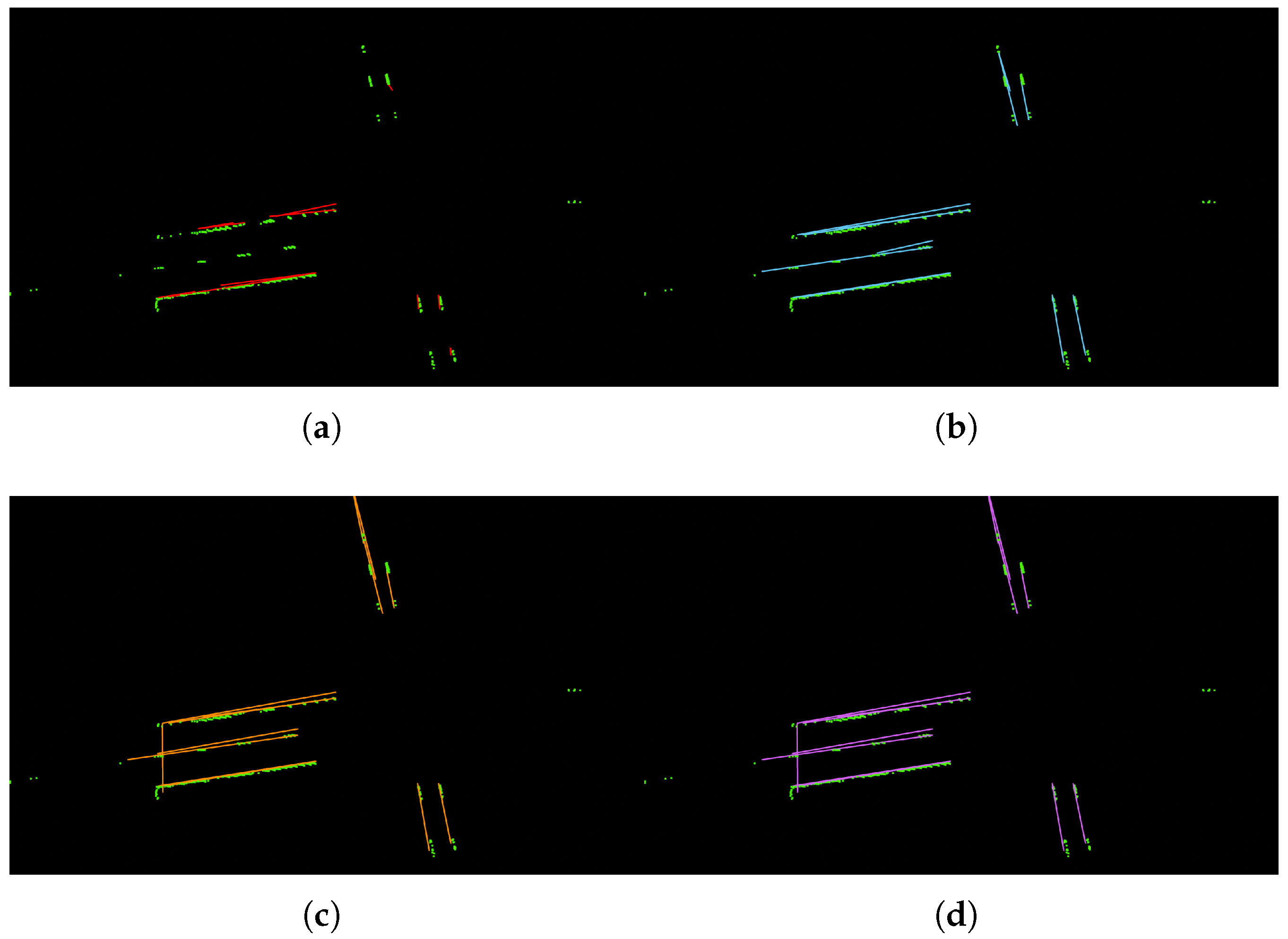

4.2. Experimental Results

4.3. Computational Efficiency

4.4. Hyper-Parameters Setting

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Scene | Method | Inline | Total | Accuracy |
|---|---|---|---|---|
| Straight dual-lane line | ScatterHough (Ours) | 665 | 821 | 0.8090 |
| Straight dual-lane line | Ransac | 533 | 821 | 0.6492 |
| Straight dual-lane line | Dsac | 280 | 821 | 0.3410 |
| Straight dual-lane line | multiRansac | 614 | 821 | 0.7479 |
| Straight dual-lane line | Poly | 216 | 821 | 0.2631 |
| 3-lane crossroad | ScatterHough (Ours) | 335 | 479 | 0.6994 |
| 3-lane crossroad | Ransac | 179 | 479 | 0.3737 |
| 3-lane crossroad | Dsac | 12 | 479 | 0.0251 |
| 3-lane crossroad | multiRansac | 31 | 479 | 0.0647 |
| 3-lane crossroad | Poly | 5 | 479 | 0.0104 |
| fork road | ScatterHough (Ours) | 90 | 178 | 0.5056 |
| fork road | Ransac | 50 | 178 | 0.2809 |
| fork road | Dsac | 3 | 178 | 0.0169 |
| fork road | multiRansac | 41 | 178 | 0.2303 |
| fork road | Poly | 0 | 178 | 0 |
| slope road | ScatterHough (Ours) | 594 | 734 | 0.8093 |
| slope road | Ransac | 55 | 734 | 0.0749 |
| slope road | Dsac | 125 | 734 | 0.1703 |
| slope road | multiRansac | 162 | 734 | 0.2207 |
| slope road | Poly | 52 | 734 | 0.0708 |

| Scene | Method | Inline | Total | Accuracy |
|---|---|---|---|---|
| double dashed line | ScatterHough (Ours) | 393 | 429 | 0.9161 |
| double dashed line | Ransac | 106 | 429 | 0.2471 |
| double dashed line | Dsac | 13 | 429 | 0.0303 |
| double dashed line | multiRansac | 305 | 429 | 0.7110 |
| double dashed line | Poly | 0 | 429 | 0 |
| curve line | ScatterHough (Ours) | 794 | 1335 | 0.5947 |
| curve line | Ransac | 688 | 1335 | 0.5154 |
| curve line | Dsac | 44 | 1335 | 0.0330 |
| curve line | multiRansac | 425 | 1335 | 0.3184 |
| curve line | Poly | 23 | 1335 | 0.0172 |
| Overall | ScatterHough (Ours) | 3926 | 5702 | 0.6885 |
| Overall | Ransac | 1983 | 5702 | 0.3477 |
| Overall | Dsac | 854 | 5702 | 0.1497 |
| Overall | multiRansac | 2124 | 5702 | 0.3725 |
| Overall | Poly | 356 | 5702 | 0.0624 |
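The accuracy figures in the table above appear to be the ratio of inline points to total points. The short Python check below recomputes the Overall rows on that assumption. The function name `accuracy` and the truncation to four decimals are inferred from the reported numbers rather than stated in the text, and some per-scene rows differ in the last digit, so the truncation rule should be treated as an assumption.

```python
def accuracy(inline, total, digits=4):
    """Ratio inline / total, truncated (not rounded) to `digits` decimals."""
    scale = 10 ** digits
    return (inline * scale // total) / scale


# Inline counts from the Overall rows; every method shares the same total.
OVERALL_TOTAL = 5702
overall_inline = {
    "ScatterHough (Ours)": 3926,
    "Ransac": 1983,
    "Dsac": 854,
    "multiRansac": 2124,
    "Poly": 356,
}

for method, inline in overall_inline.items():
    print(f"{method}: {accuracy(inline, OVERALL_TOTAL):.4f}")
```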

| Method | ScatterHough (Ours) | Ransac | Dsac | multiRansac | Poly |
|---|---|---|---|---|---|
| Frames per second (FPS) | 12 | 8 | 2 | 5 | 313 |

| Metric | d = 0.1 | d = 0.25 | d = 1 | d = 2 |
|---|---|---|---|---|
| inline | 769 | 802 | 129 | 32 |
| total | 821 | 821 | 821 | 821 |
| accuracy | 0.9367 | 0.9769 | 0.1571 | 0.0390 |

| Metric | = 10 | = 30 | = 60 | = 120 |
|---|---|---|---|---|
| inline | 403 | 409 | 73 | 12 |
| total | 429 | 429 | 429 | 429 |
| accuracy | 0.9394 | 0.9534 | 0.1702 | 0.0280 |

| Metric | = 5 | = 10 | = 15 | = 25 |
|---|---|---|---|---|
| inline | 344 | 409 | 412 | 412 |
| total | 429 | 429 | 429 | 429 |
| accuracy | 0.8019 | 0.9534 | 0.9604 | 0.9604 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zeng, H.; Jiang, S.; Cui, T.; Lu, Z.; Li, J.; Lee, B.-G.; Zhu, J.; Yang, X. ScatterHough: Automatic Lane Detection from Noisy LiDAR Data. Sensors 2022, 22, 5424. https://doi.org/10.3390/s22145424
