Rendering Immersive Haptic Force Feedback via Neuromuscular Electrical Stimulation

Abstract

1. Introduction

2. Haptic Force Feedback via Functional Electrical Stimulation for Virtual Reality

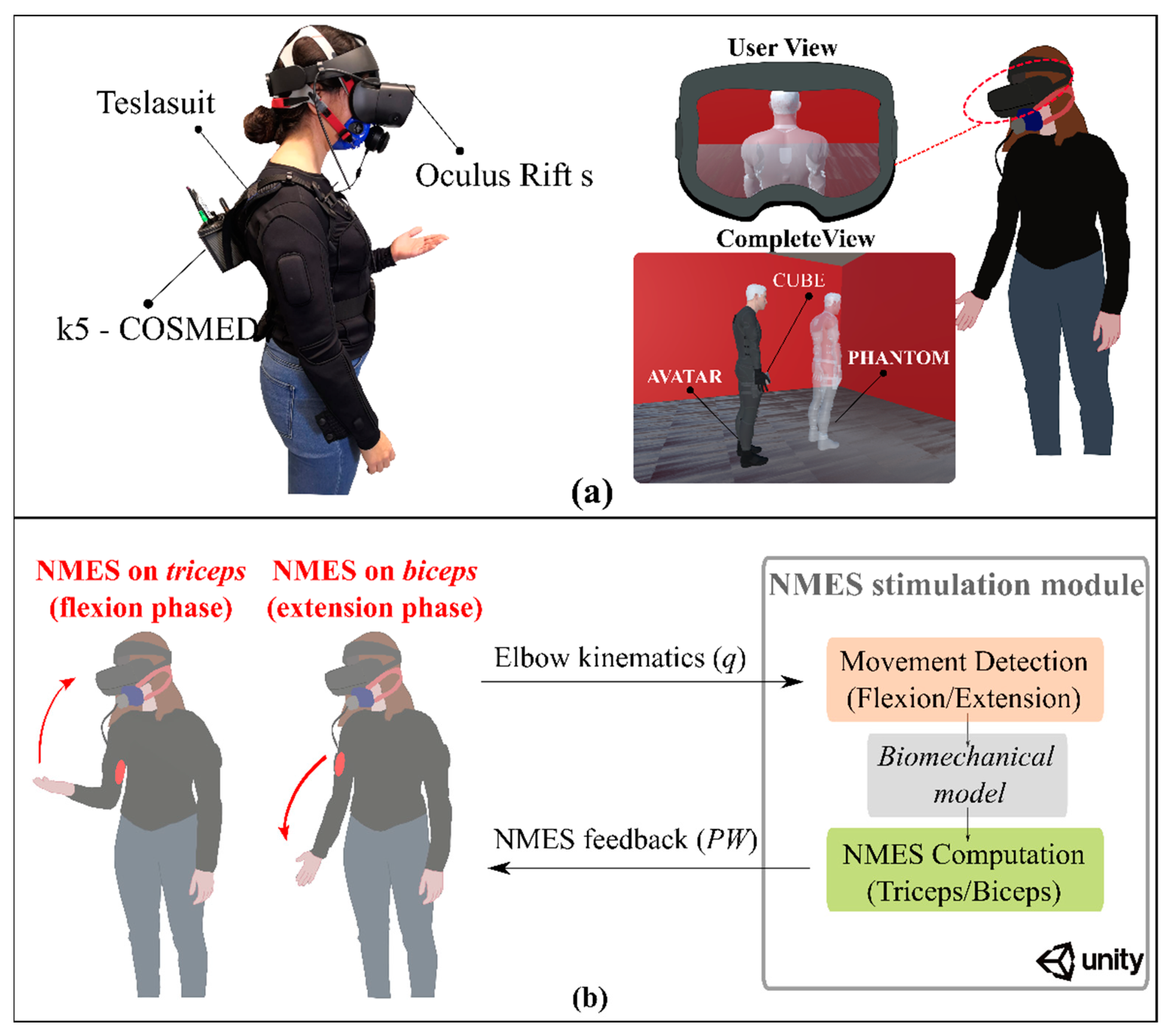

2.1. Experimental Setup and Task

- (1) Visual and Physical weight handled (0.5 kg) (Physical): the user received visual feedback from the virtual scenario combined with the haptic feedback of the handled physical weight;

- (2) Visual and NMES haptic feedback (NMES): the user received visual feedback from the virtual scenario combined with the haptic feedback provided by the NMES;

- (3) Visual feedback only (Visual): the user received only visual feedback from the virtual scenario without any haptic feedback.
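As a rough illustration of how the three conditions relate to a load torque, the sketch below encodes them and computes the static gravitational torque that a hand-held 0.5 kg mass would produce about a single joint. The lever arm, the joint-angle convention, the point-mass simplification, and the names `Condition`, `gravity_torque` and `reference_torque` are assumptions made for this example only; they are not taken from the study's biomechanical model.

```python
import math
from enum import Enum

G = 9.81  # gravitational acceleration, m/s^2


class Condition(Enum):
    """The three feedback conditions listed above."""
    PHYSICAL = "Visual and Physical weight handled (0.5 kg)"
    NMES = "Visual and NMES haptic feedback"
    VISUAL = "Visual feedback only"


def gravity_torque(mass_kg: float, lever_arm_m: float, angle_rad: float) -> float:
    """Static torque (N*m) of a point mass about a joint.

    angle_rad is the segment angle measured from the horizontal
    (an assumed convention): the torque is largest at 0 rad and
    vanishes when the segment is vertical.
    """
    return mass_kg * G * lever_arm_m * math.cos(angle_rad)


def reference_torque(condition: Condition, lever_arm_m: float,
                     angle_rad: float, mass_kg: float = 0.5) -> float:
    """Load torque associated with each condition (illustrative only).

    Assumption: the NMES condition aims to mimic the torque of the
    physical weight, while the Visual condition carries no load.
    """
    if condition is Condition.VISUAL:
        return 0.0
    return gravity_torque(mass_kg, lever_arm_m, angle_rad)


if __name__ == "__main__":
    lever_arm = 0.30  # hypothetical joint-to-hand distance, in metres
    for cond in Condition:
        tau = reference_torque(cond, lever_arm, angle_rad=0.0)
        print(f"{cond.name}: {tau:.3f} N*m")
```

With a different lever arm or angle convention the numbers change, so the values printed here are only indicative of the order of magnitude involved.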

2.2. Subjects

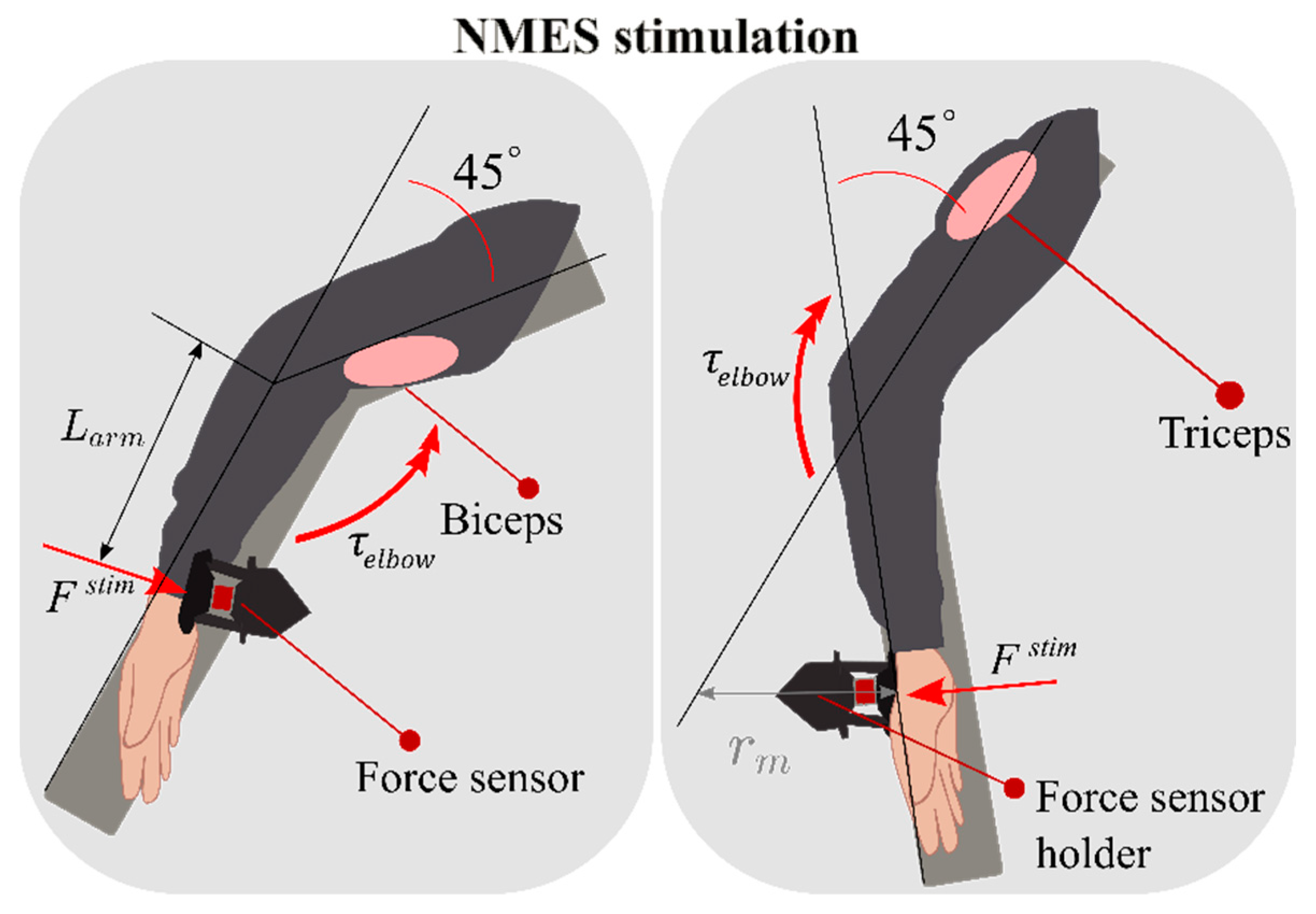

2.3. NMES Calibration and Biomechanical Model

2.4. Outcome Measures

2.5. Statistical Analysis

3. Results

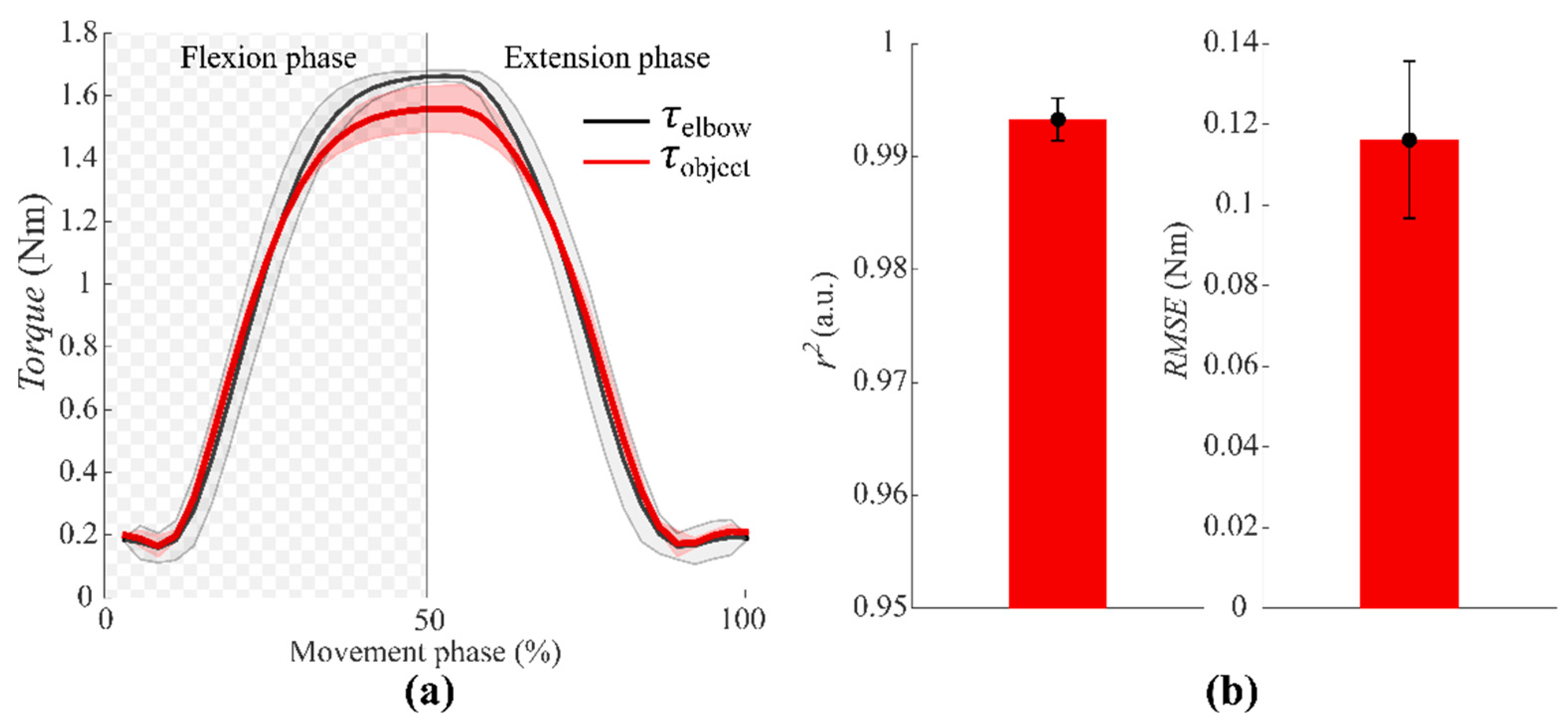

3.1. The NMES Feedback Is Comparable to the Physical in Terms of Torque

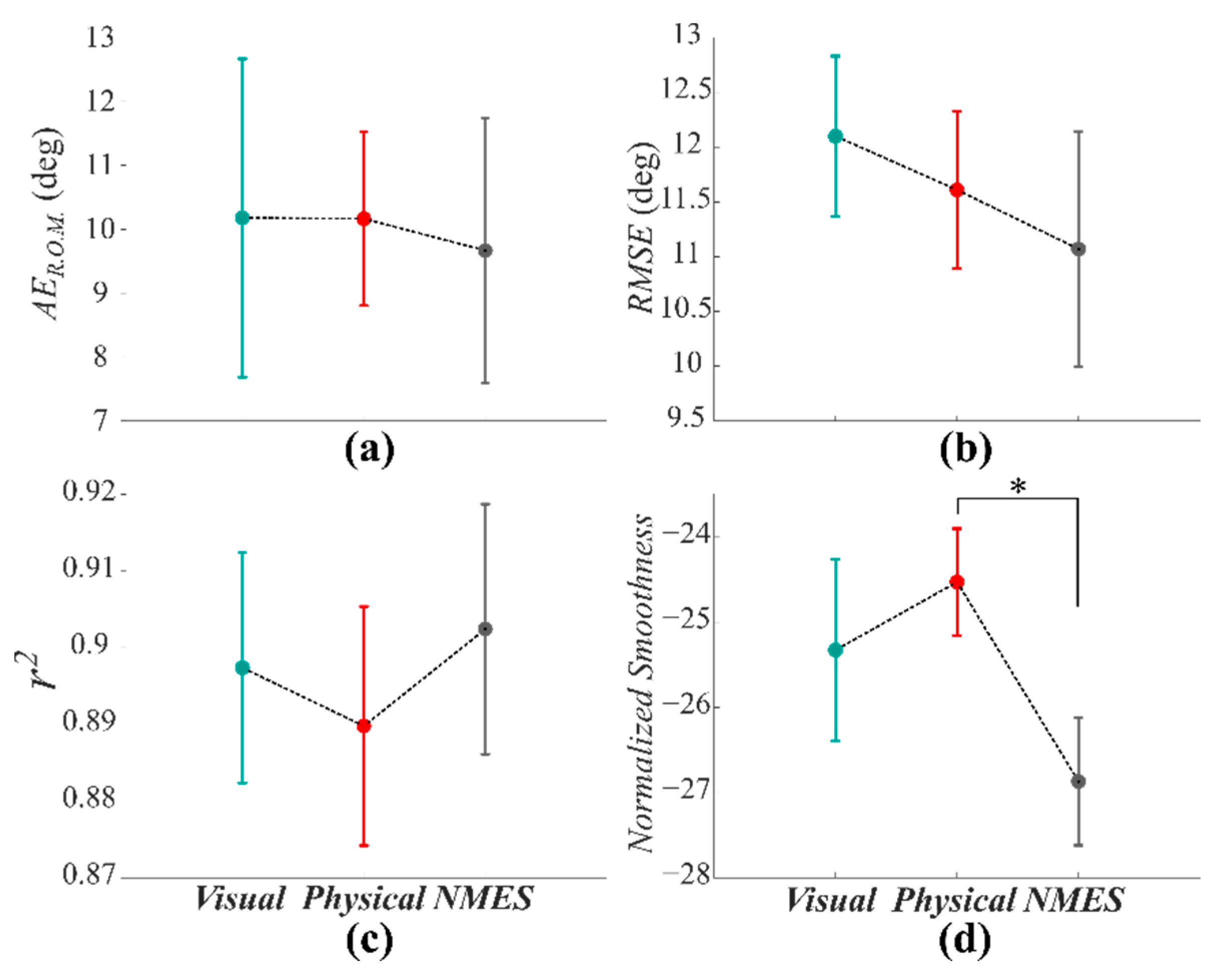

3.2. NMES Condition Does Not Influence the Kinematic Accuracy

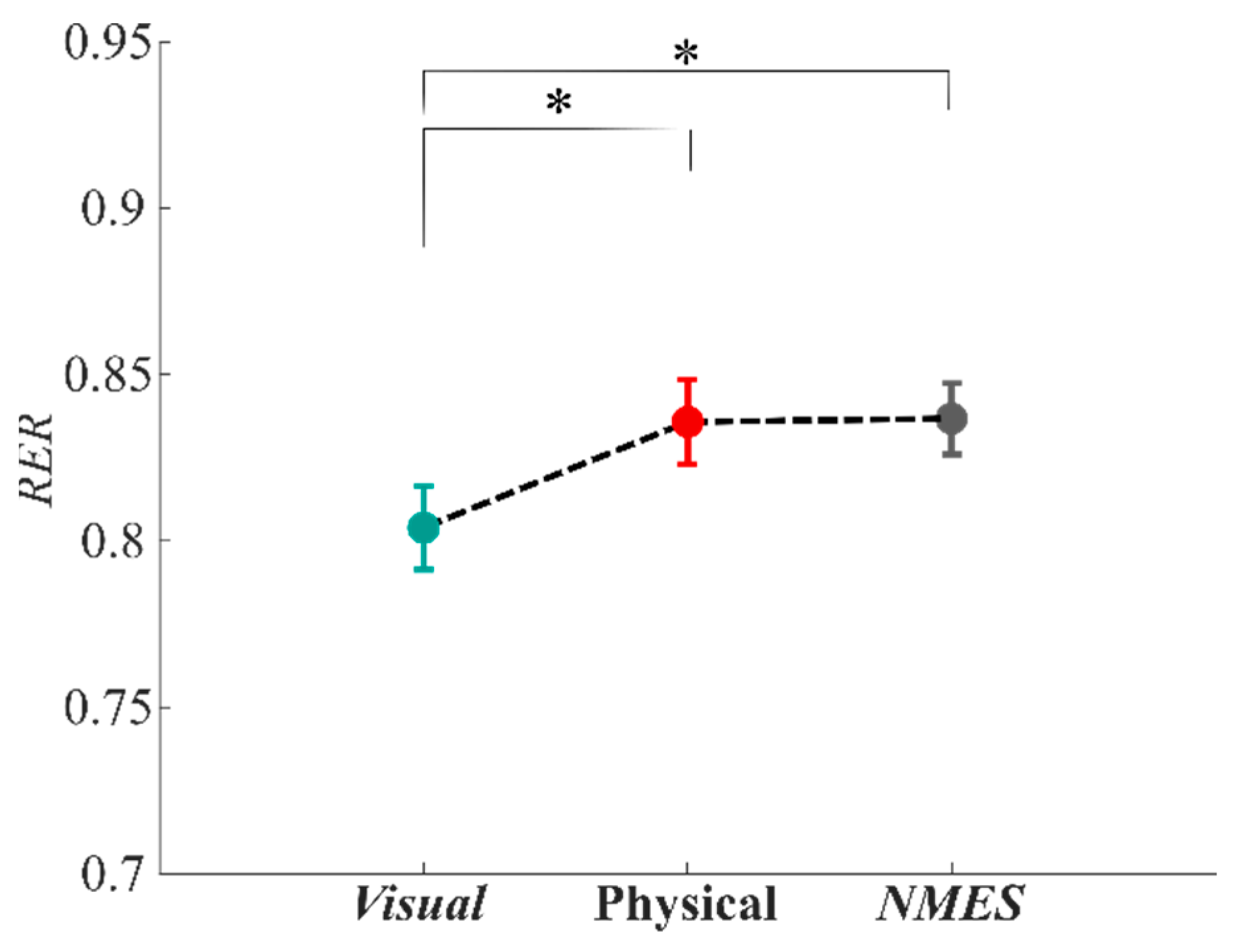

3.3. Metabolic Consumption during the NMES Condition Is Comparable with the Physical One

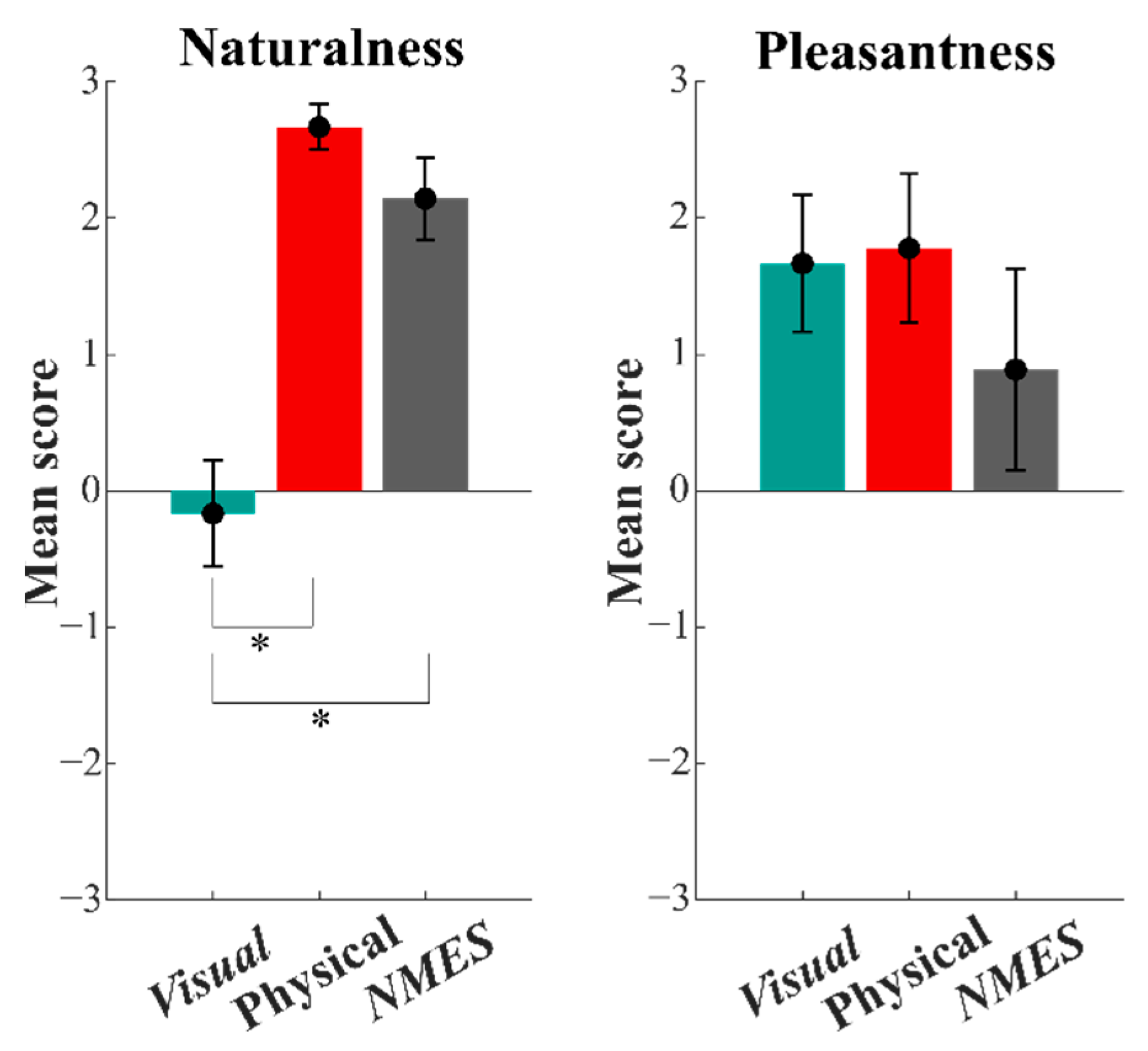

3.4. Naturalness and Pleasantness

4. Discussion

4.1. NMES Feedback Reliability and Its Quantitative Assessment

4.2. Integration of NMES-Based-Haptic Feedback in Virtual Scenarios

4.3. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Galofaro, E.; D’Antonio, E.; Lotti, N.; Masia, L. Rendering Immersive Haptic Force Feedback via Neuromuscular Electrical Stimulation. Sensors 2022, 22, 5069. https://doi.org/10.3390/s22145069
