1. Introduction

Mosquito-borne diseases remain a significant cause of morbidity and mortality across tropical regions, which makes mosquitoes a major threat to human health. Mosquitoes transmit infectious pathogens to humans through bites and serve as vectors of life-threatening diseases such as dengue, chikungunya, dirofilariasis, malaria, and Zika. In Singapore, the National Environment Agency (NEA) has identified open perimeter drains, covered perimeter drains, covered car parks, and faulty roadside drainage sites as the most common mosquito breeding habitats in public areas [1]. Hence, routine mosquito surveillance is essential for effective control of the mosquito population. According to the Integrated Mosquito Management (IMM) program, mosquito surveillance includes inspecting breeding sites, identifying mosquito types, and measuring the critical environment through mosquito population mapping. However, conventional surveillance relies on manual inspection to detect and classify mosquitoes, which is time-consuming, labor-intensive, and difficult to monitor. Thus, automating mosquito surveillance and breed classification should be a high priority.

Various human-assisted methods have been used for mosquito surveillance in the past decade. For example, thermal fogging trucks, pesticides, and electrical traps have been regularly and effectively used. Traps can be divided into active traps (visual, olfactory, or thermal cues) and passive traps (suction fans). In the literature, various mosquito trapping devices have been reported, such as the Biogents sentinel trap [2], the heavy-duty Encephalitis Vector Survey trap (EVS trap) [3], the Centers for Disease Control miniature light trap (CDC trap) [4], the Mosquito Magnet Patriot Mosquito trap (MM trap) [5], the MosquiTrap [6], and the Gravitrap [7]. These devices use various techniques, such as a fan, contrast light, scent dispenser, carbon dioxide, and magnets, to target mosquitoes and breeding sites. These methods are labor-intensive and time-consuming. Moreover, the efficiency of these trapping devices is strongly influenced by the device’s location, the time of deployment, the number of devices, and the extent and duration of the transmission. The contribution and efficiency of such devices are therefore quite uncertain, owing to the complex interplay of multiple factors. Lastly, mosquito surveillance and mosquito population mapping require a highly skilled workforce.

Recently, machine learning (ML) approaches have been widely used for mosquito detection and classification tasks. Artificial Neural Networks (ANNs), Decision Trees (DTs), Support Vector Machines (SVMs), and Convolutional Neural Networks (CNNs) are the most commonly used methods. Among these techniques, CNN-based frameworks are the most popular tool for mosquito detection and classification. In [8], Kittichai et al. presented a deep learning-based algorithm that simultaneously classifies and localizes mosquitoes in images to identify their species and gender. The authors reported that two concatenated YOLO V3 models were optimal in identifying the mosquitoes, with a mean average precision and sensitivity of 99% and 92.4%, respectively. In another study, Rustam et al. proposed a system to detect the presence of two critical disease-spreading classes of mosquitoes [9]. The authors introduced a hybrid feature selection method named RIFS, which integrates two feature selection techniques: Region of Interest (ROI)-based image filtering and wrapper-based Forward Feature Selection (FFS). The proposed approach outperformed all other models, providing 99.2% accuracy. Further, a lightweight deep learning approach was proposed by Yin et al. for mosquito species and gender classification from wingbeat audio signals [10]. A one-dimensional CNN was applied directly to a low-sample-rate raw audio signal, and the model achieved a classification accuracy of over 93%. In [11], the authors proposed a deep learning-based framework for mosquito species identification in which the Convolutional Neural Network comprised a multitiered ensemble model. The results demonstrated the model to be an accurate, scalable, and practical computer vision solution with 97.04 ± 0.87% classification accuracy. Motta et al. employed a Convolutional Neural Network to accomplish the automated morphological classification of mosquitoes [12]. The authors compared the performance of LeNet, GoogLeNet, and AlexNet and concluded that GoogLeNet outperformed the other models, with a detection accuracy of 76.2%. Lastly, Li-Pang, in [13], proposed an automatic framework for the classification of mosquitoes using edge computing and deep learning. The proposed system was implemented with the help of IoT-based devices, and the highest detection accuracy that the authors reported was 90.5% on test data.

Robot-assisted surveillance has become an attractive solution for performing various automated tasks. It has been widely used to bring a degree of quality and precision that human labor would be unable to maintain consistently for long periods. In the literature, various robot-assisted applications, such as crawl space inspection, tunnel inspection, drain inspection, and power transmission line fault detection, have been reported. In [14], the authors designed an insect monitoring robot to detect and identify Pyralidae insects. The contours of Asian Pyralidae insect characteristics were selected using the Hu moment feature, and the authors reported a recognition rate of 94.3%. In [15], Kim et al. proposed a deep learning-based automatic mosquito sensing and control system for urban mosquito habitats. The Fully Convolutional Network (FCN) and neural network-based regression demonstrated a classification accuracy of 84%.

In [16], the authors proposed the use of Unmanned Aerial Vehicles (UAVs) with high-resolution imagery for identifying the larval habitats and breeding sites of the malaria vector Nyssorhynchus darlingi. The results demonstrated that the sites where Nyssorhynchus darlingi is most likely to breed can be identified from high-resolution multispectral imagery with an overall accuracy of 86.73–96.98%. Another study, by Dias et al., proposed the autonomous detection of mosquito breeding habitats using a UAV [17]. Here, the authors used a random forest classifier and reported a detection accuracy of 99% on the test dataset.

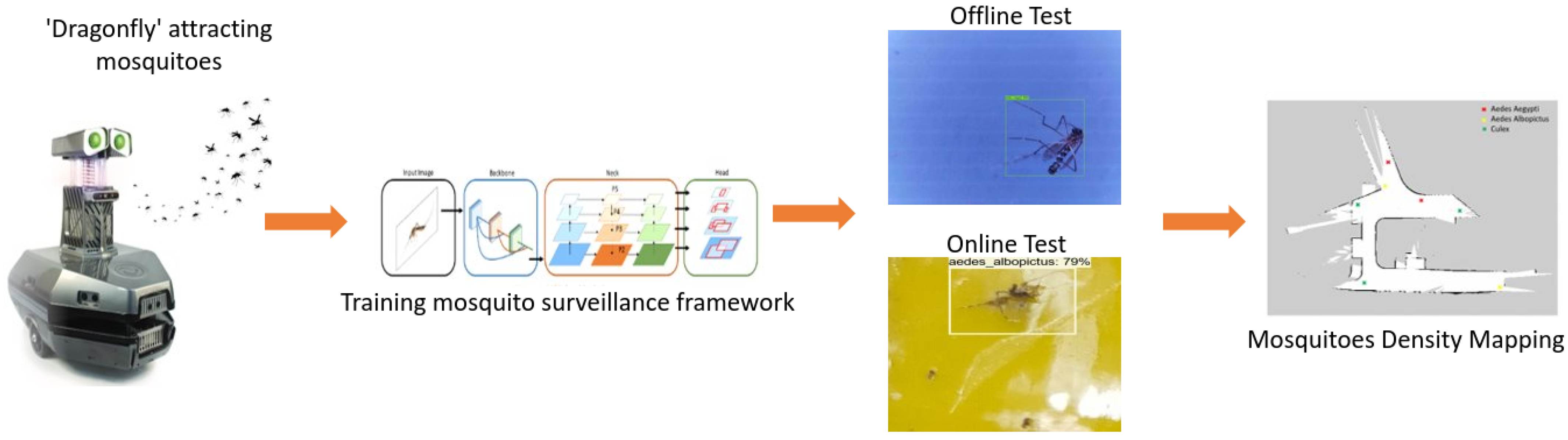

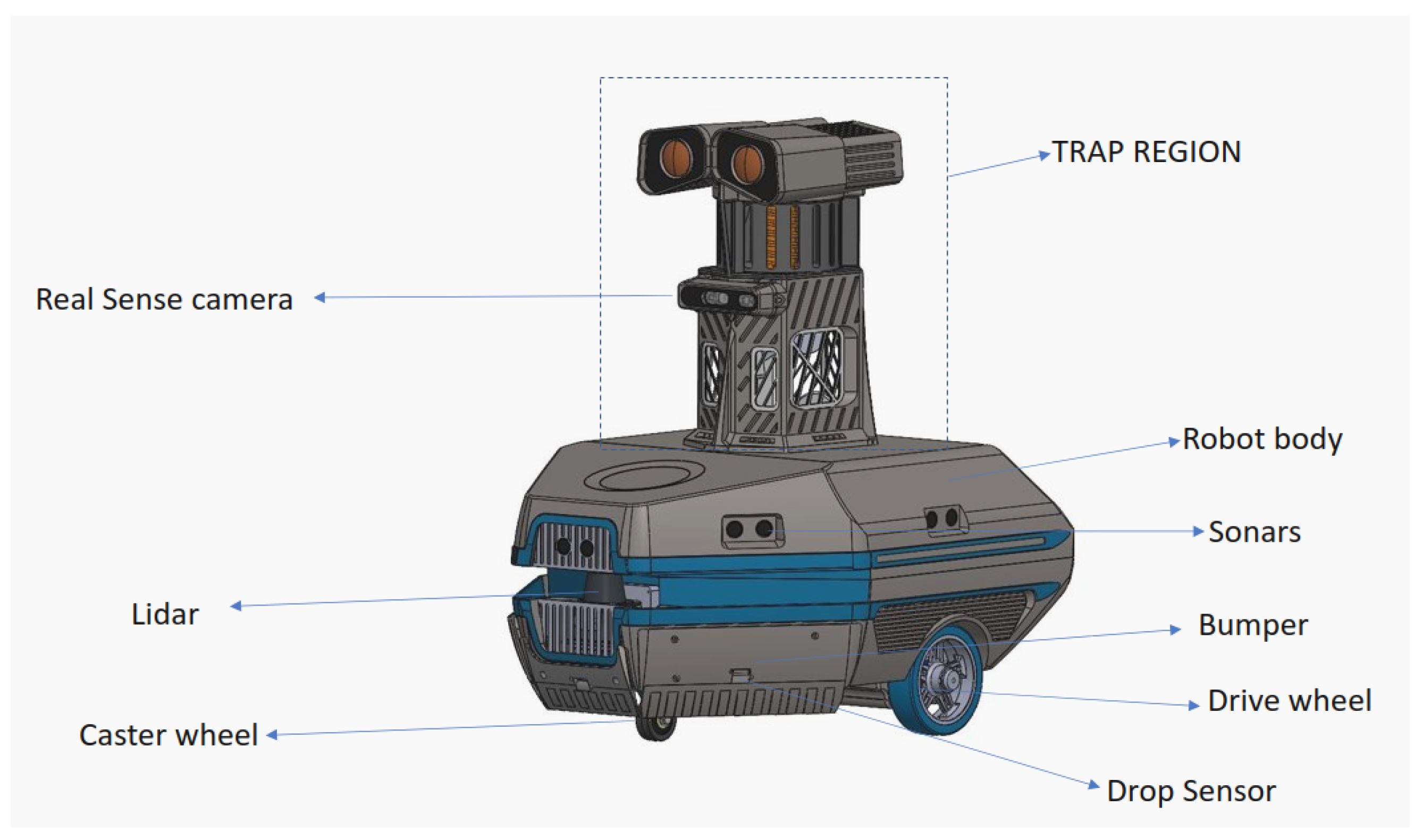

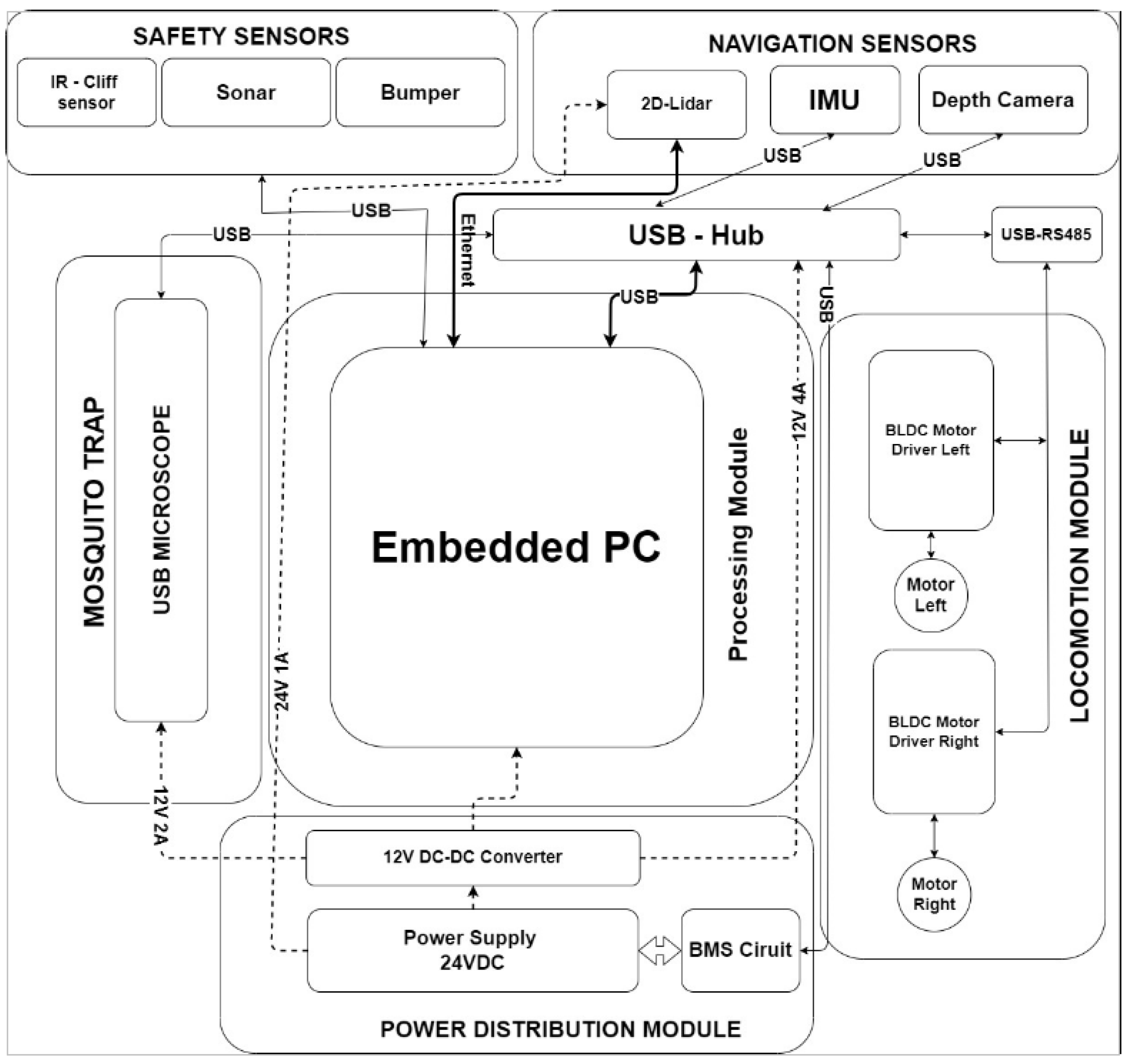

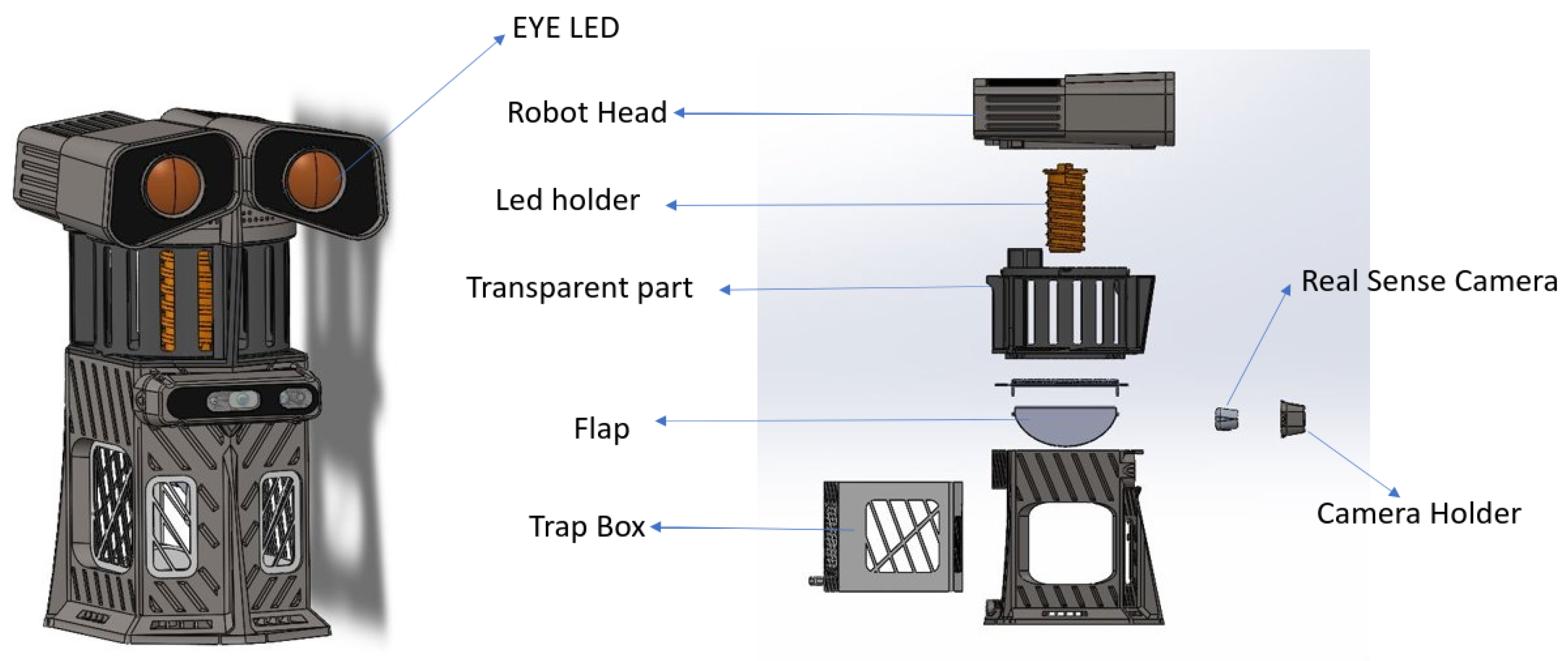

This work presents an AI-enabled mosquito surveillance and population mapping framework using our in-house-developed robot, Dragonfly. Our research studies the entomological characteristics of mosquitoes and obtains the information required to detect, classify, and map various breeds of mosquitoes in various environments. The main objective of the Dragonfly robot is to identify mosquito hotspots and to trap and kill mosquitoes by attracting them towards it. Moreover, from a pest control management perspective, it is crucial to identify the mosquito distribution in a given region in order to take countermeasures that restrict the infestation effectively. Public health experts can also study the mosquito population and deploy the necessary mosquito management programs. These programs help to control the mosquito population effectively and protect humans from life-threatening mosquito-borne diseases. Currently, a real-time deployable robot system for mosquito surveillance and infestation control is lacking, which makes our research valuable to the community.

This paper is organized as follows. Section 1 presents an introduction and literature review. Section 2 provides the methodology and an overview of the proposed system. The experimental setup, findings, and discussion are covered in Section 3. Finally, Section 4 concludes this research work.

3. Experimental Setup and Results

This section describes the experimental results of the mosquito surveillance and population mapping framework. The experiments were carried out in four phases: dataset preparation and training, evaluating the trained mosquito surveillance algorithm model on an offline test, a real-time field trial for mosquito population mapping, and comparing the trained mosquito framework with other models.

3.1. Dataset Preparation and Training

The mosquito surveillance framework’s training dataset consists of 500 images of Aedes aegypti mosquitoes [26], 500 images of Aedes albopictus mosquitoes [26], and 500 images of Culex mosquitoes [27]. The dataset combines images collected online with images collected in real time. The real-time mosquito images were collected using the Dragonfly robot with a mosquito glue trap in gardening regions, a marine dumping yard, and water body areas. Both the online collected and the real-time collected trap images were resized to a common pixel resolution.

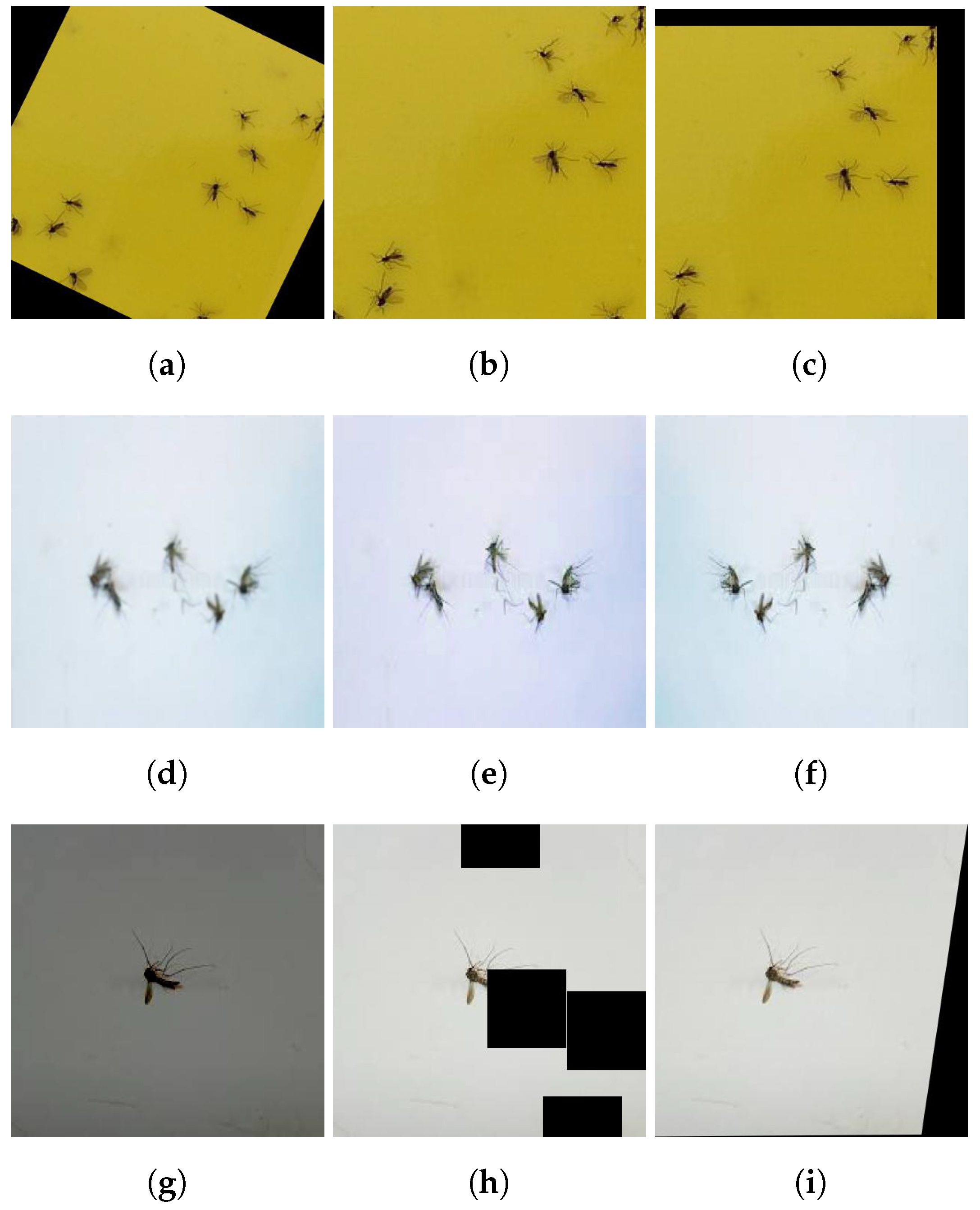

Generally, a mosquito can be trapped or glued on a glue trap in any orientation in the real-time scenario. Thus, data augmentation is applied to the training dataset to overcome the orientation issue. Data augmentation also helps to control over-fitting and class imbalance in the model training stage. Augmentation processes such as scaling, rotation, translation, horizontal flip, color enhancement, blurring, brightness, shearing, and cutout were applied to the collected images, giving a total of 15,000 images for training. Figure 6 shows an example of the data augmentation of one image, and Table 2 elaborates the settings of the various types of augmentation applied.
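
As a minimal sketch of three of the augmentations listed above (horizontal flip, rotation, and cutout), the following Python fragment operates on an image stored as a nested list of pixel values. The function names, the restriction to quarter-turn rotations, and the cutout size are illustrative assumptions and do not reproduce the settings of Table 2. For a detection dataset, the bounding-box labels would need the same geometric transforms, which is omitted here.

```python
import random


def horizontal_flip(img):
    """Mirror every row of a 2-D image (list of rows)."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def cutout(img, top, left, size, fill=0):
    """Blank a size x size square whose top-left corner is (top, left)."""
    out = [list(row) for row in img]
    for r in range(top, min(top + size, len(out))):
        for c in range(left, min(left + size, len(out[0]))):
            out[r][c] = fill
    return out


def augment(img, rng):
    """Apply a random flip, 0-3 quarter turns, and one cutout patch."""
    out = [list(row) for row in img]
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    for _ in range(rng.randrange(4)):
        out = rotate90(out)
    height, width = len(out), len(out[0])
    patch = max(1, min(height, width) // 4)  # hypothetical patch size
    return cutout(out, rng.randrange(height), rng.randrange(width), patch)
```

Calling `augment(image, random.Random(seed))` several times with different seeds yields several variants of one source image, which is how a small collection can be expanded into a larger training set.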

3.1.1. Training Hardware and Software Details

The mosquito surveillance algorithm, YOLO V4, was built using the Darknet library, and a pre-trained CSPDarknet53 was used as the feature extractor [22]. The CSPDarknet53 model was pre-trained on the MS COCO dataset, which consists of 80 classes. The Stochastic Gradient Descent (SGD) optimizer was used to train the YOLO V4 model. The hyper-parameters were a momentum of 0.949, an initial learning rate of 0.001, and a batch size of 64 with 16 subdivisions. The model was trained for a total of 3700 epochs before early stopping and validation of the model in real-time inference.
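
To make the relationship between batch size and subdivisions concrete, the short Python sketch below collects the quoted hyper-parameters and computes how many images are processed per forward pass. It assumes the usual Darknet convention, in which a batch is split into `subdivisions` equal chunks and the weights are updated once per full batch; the dictionary and function names are illustrative only.

```python
# Values quoted in the text; key names follow common Darknet .cfg naming.
TRAIN_CFG = {
    "momentum": 0.949,
    "learning_rate": 0.001,
    "batch": 64,
    "subdivisions": 16,
}


def images_per_forward_pass(cfg):
    """Images pushed through the network at once: batch / subdivisions."""
    batch, subdivisions = cfg["batch"], cfg["subdivisions"]
    if batch % subdivisions:
        raise ValueError("batch should be divisible by subdivisions")
    return batch // subdivisions


print(images_per_forward_pass(TRAIN_CFG))  # 4
```

Under this assumption, only four images need to reside in GPU memory at a time, while the gradient is still accumulated over 64 images per weight update.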

The model was trained and tested on the Lenovo ThinkStation P510. It consists of an Intel Xeon E5-1630V4 CPU running at 3.7 GHz, 64 GB Random Access Memory (RAM), and an Nvidia Quadro P4000 GPU (1792 Nvidia CUDA Cores and 8 GB GDDR5 memory size running at 192.3 GBps bandwidth).

K-fold cross-validation (here K = 10) was used to validate the dataset and the model’s training accuracy. In this evaluation, the dataset was divided into K subsets; K−1 subsets were used for training, and the remaining subset was used to evaluate the performance. This process was run K times to obtain the detection model’s mean accuracy and other quality metrics. The cross-validation was performed to verify that the reported results were accurate and not biased towards a specific dataset split, and the result images shown in this section were obtained from a model with good precision. In this analysis, the model scored 91.5% mean accuracy for K = 10, which indicates that the model was not biased towards a specific dataset split.
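
The K-fold procedure described above can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than the training pipeline used in this work: `train_and_score` is a hypothetical callback that trains a model on the training indices and returns its accuracy on the held-out indices, and the shuffling seed is arbitrary.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train, validation) index lists for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k near-equal subsets
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


def cross_validate(n_samples, k, train_and_score):
    """Run the callback once per fold and return (mean score, all scores)."""
    scores = [train_and_score(train, validation)
              for train, validation in k_fold_indices(n_samples, k)]
    return mean(scores), scores
```

Every sample is used for validation exactly once and for training K−1 times, so the mean score does not depend on one particular train/validation split.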

3.2. Offline Test

The offline test was carried out with augmented and non-augmented images collected using online sources and glue-trapped mosquito images collected via the Dragonfly robot. The model’s performance was evaluated using 50 images composed of three mosquito classes. These images were not used to train the mosquito surveillance framework.

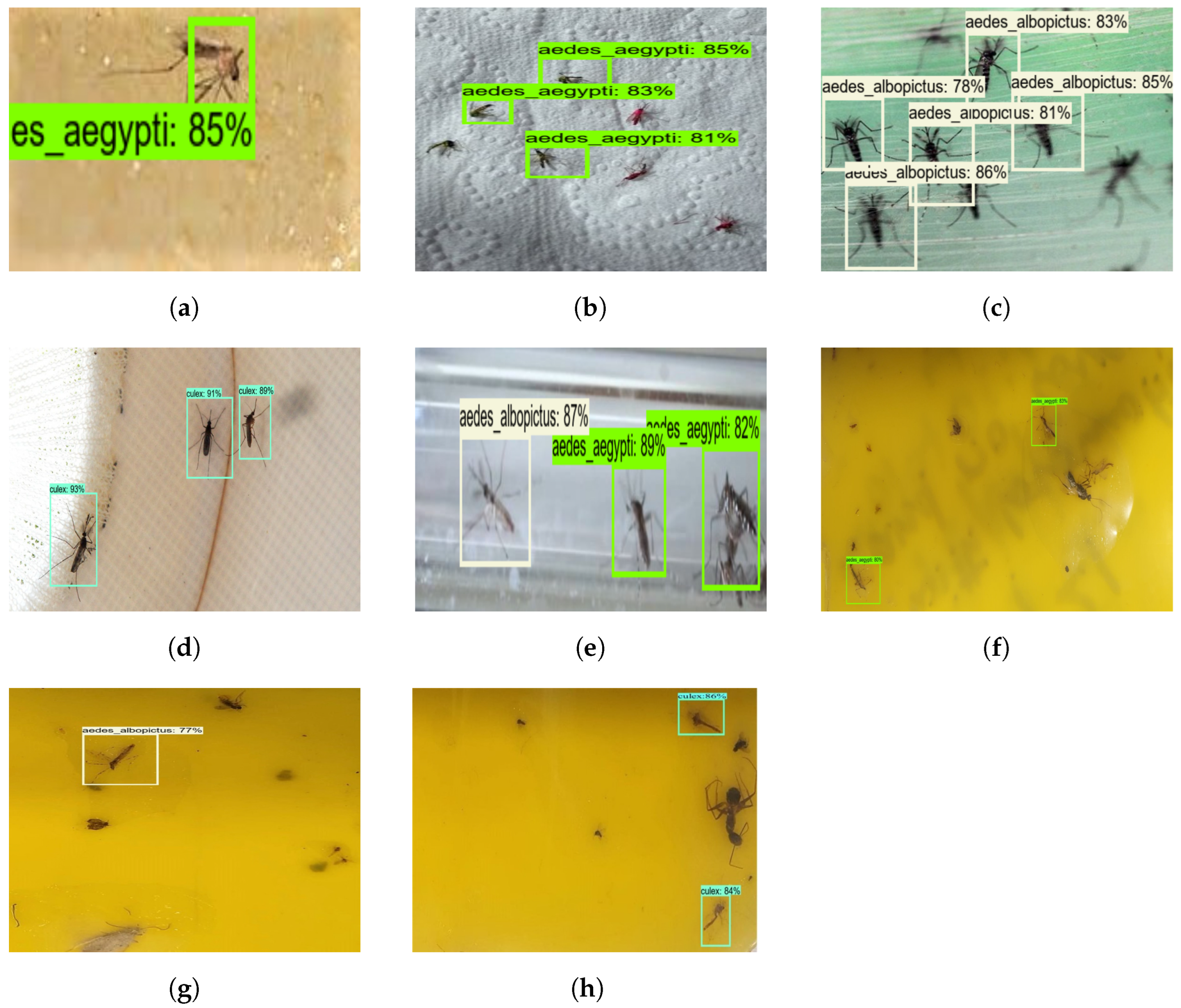

Figure 7 shows the mosquito surveillance framework’s experimental results in the offline test, and Table 3 indicates the confusion matrix-based performance analysis results of the offline test experiment.

Here, the algorithm detects mosquitoes with an average confidence level of 88%. Aedes aegypti, Aedes albopictus, and Culex mosquitoes were classified with an accuracy of 78.33%, 77.73%, and 77.81%, respectively, before augmentation. However, Aedes aegypti, Aedes albopictus, and Culex mosquitoes were classified with an accuracy of 93.61%, 90.70%, and 95.29%, respectively, after augmentation. Therefore, it can be concluded that the mosquito surveillance framework demonstrates higher classification accuracy after applying data augmentation. The framework was able to detect and classify most of the mosquitoes. However, the missed detection, false classification, and detection with lower confidence levels were due to partially occluded mosquitoes.
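
For reference, the confusion matrix-based measures can be computed per class as in the following Python sketch. It assumes a square matrix whose rows are true classes and whose columns are predicted classes, and it ignores missed detections (no background class). The counts in the example are made up for illustration and are not the values of Table 3.

```python
def per_class_metrics(cm, labels):
    """Return precision, recall, F1, and accuracy for every class."""
    total = sum(sum(row) for row in cm)
    results = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                  # true class i, predicted other
        fp = sum(row[i] for row in cm) - tp   # predicted i, true class other
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        accuracy = (tp + tn) / total if total else 0.0
        results[label] = {"precision": precision, "recall": recall,
                          "f1": f1, "accuracy": accuracy}
    return results


# Illustrative counts only (20 test images per class).
labels = ["Aedes aegypti", "Aedes albopictus", "Culex"]
example_cm = [[18, 1, 1],
              [2, 17, 1],
              [0, 1, 19]]
metrics = per_class_metrics(example_cm, labels)
```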

3.3. Real-Time Mosquito Surveillance and Mosquito Population Mapping Test

This section evaluates the mosquito surveillance framework’s performance in the real-time field trial. As per the literature survey and NEA Singapore guidelines, mosquitoes are more active at dusk, in the evening, at nighttime, and after rainfall, and in environments such as open perimeter drains, covered car parks, roadside drains, and garden landscapes [1,28,29]. Hence, the experiments were carried out during the night (6 p.m. to 10 p.m.) and early morning (4 a.m. to 8 a.m.) in potential breeding sites and cluttered environments at the SUTD campus and the Brightson ship maintenance facility. In this experiment, the mosquito glue trap was fixed inside the trap unit, which performed the mosquito trapping and surveillance function.

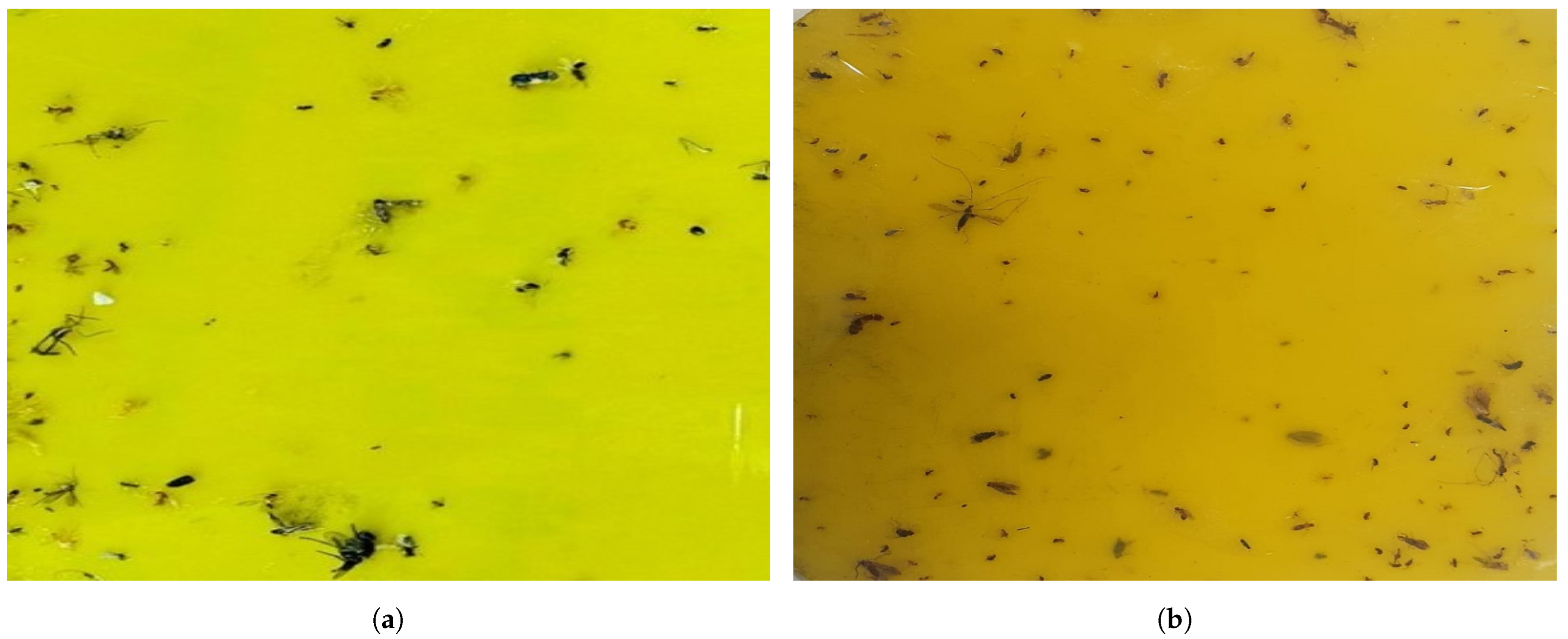

Figure 8 shows the Dragonfly robot performing experiments in different environments. The robot navigated autonomously to pre-defined waypoints in the region of operation over multiple cycles. It paused for 10 min at every waypoint, keeping its trap operational to gather more mosquitoes at the respective location. Once the robot completed its navigation to the final waypoint, it returned to the first waypoint and continued its inspection cycle.
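
The cyclic patrol described above can be illustrated with a small scheduling sketch in Python. Only the 10 min dwell time comes from the text; the constant travel time between waypoints, the waypoint names, and the function itself are hypothetical simplifications and do not describe the robot’s navigation stack.

```python
DWELL_MINUTES = 10  # pause at every waypoint, as stated in the text


def patrol_schedule(waypoints, window_minutes, travel_minutes):
    """Return (waypoint, arrive, depart) tuples that fit in the time window.

    After the last waypoint the robot wraps around to the first one.
    """
    if not waypoints:
        raise ValueError("at least one waypoint is required")
    schedule = []
    clock = 0.0
    visit = 0
    while clock + DWELL_MINUTES <= window_minutes:
        waypoint = waypoints[visit % len(waypoints)]
        schedule.append((waypoint, clock, clock + DWELL_MINUTES))
        clock += DWELL_MINUTES + travel_minutes
        visit += 1
    return schedule


# A 4 h session (e.g., 6 p.m. to 10 p.m.) with three hypothetical waypoints.
visits = patrol_schedule(["A", "B", "C"], window_minutes=240, travel_minutes=5)
```

With these assumed numbers the robot completes 16 waypoint visits, that is, five full cycles plus one further stop at the first waypoint.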

Figure 9 shows a sample of real-time collected mosquito glue trap images from test environments.

In this real-time analysis, the mosquito glue trap images were captured by a trap camera, and images were transferred to an onboard high-powered GPU-enabled Industrial PC (IPC) for mosquito surveillance and population mapping tasks.

Figure 10 shows the detection results of real-time field trial images, and Table 4 shows the statistical measure results of the mosquito surveillance framework.

The experimental results indicate that the surveillance algorithm detected the Aedes aegypti, Aedes albopictus, and Culex mosquito classes in the images captured by the Dragonfly robot with an 82% confidence level. The bounding regions are also accurate with respect to the ground truth. The statistical measures indicate that the framework detected the class of mosquito with a detection accuracy of 87.67% for Aedes aegypti, 86.68% for Aedes albopictus, and 89.62% for Culex mosquitoes. Further, the model’s miss rate is 5.61% in the real-time (online) tests. The missed detections are attributed to mosquito occlusion and to image blurring caused by jerking of the robot when it moves over uneven surfaces.

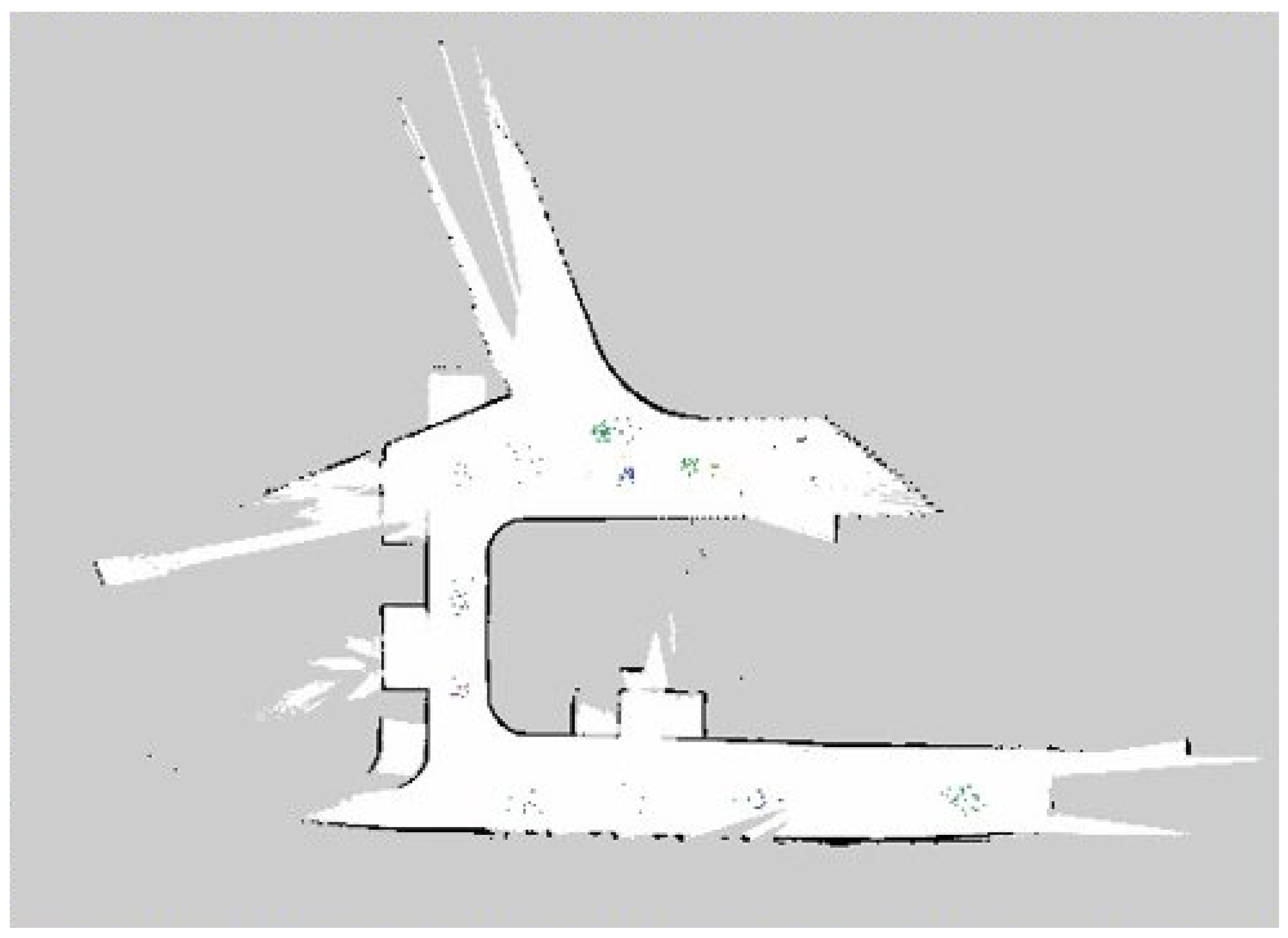

Figure 11 shows the mosquito population mapping results of the ten-day field trial. The population map was generated by fusing the trapped mosquito classes onto the robot navigation map using different color codes: Aedes aegypti is marked in green, Aedes albopictus in blue, and Culex in purple. Table 5 details the number of mosquitoes trapped in the field trial, calculated through the mosquito population mapping function.
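
A simplified view of the population mapping step is sketched below in Python: detections are grouped by map location and class, and each group becomes a colored marker. The color codes are those stated above; the data layout, the coordinates, and the function names are assumptions made for illustration and are not the implementation running on the robot.

```python
from collections import Counter, defaultdict

CLASS_COLORS = {
    "Aedes aegypti": "green",
    "Aedes albopictus": "blue",
    "Culex": "purple",
}


def build_population_map(detections):
    """Count detections per map location and class.

    detections: iterable of ((x, y), class_name) tuples.
    """
    counts = defaultdict(Counter)
    for location, class_name in detections:
        if class_name not in CLASS_COLORS:
            raise ValueError(f"unknown class: {class_name}")
        counts[location][class_name] += 1
    return {location: dict(c) for location, c in counts.items()}


def map_markers(population_map):
    """Flatten the map into (x, y, color, count) markers for plotting."""
    markers = []
    for (x, y), per_class in sorted(population_map.items()):
        for class_name, count in sorted(per_class.items()):
            markers.append((x, y, CLASS_COLORS[class_name], count))
    return markers
```

Summing the counts over all locations for one class gives per-class totals of the kind reported in a table of trapped mosquitoes.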

Table 5 indicates that Aedes aegypti and Aedes albopictus are more active during the morning, whereas Culex is primarily active at night. This variation in the number of trapped mosquitoes arises because Aedes aegypti and Aedes albopictus are more attracted to heated objects and body odor. However, in Singapore’s well-lit urban environment, Aedes mosquitoes may also be active at night, as they could adapt to artificial lighting [1].

3.4. Comparison with Other Existing Models

To evaluate the YOLO V4 model performance, a comparison analysis was performed with three different feature extractors on the YOLO V3 head and with SSD MobileNetv2. The training dataset and hardware were as described in Section 3.1 and Section 3.1.1. The hyper-parameters used for MobileNetv2 were a momentum of 0.9, an initial learning rate of 0.08, and a batch size of 128, with the images resized to a fixed input resolution. Meanwhile, the hyper-parameters used for ResNet101 were a momentum of 0.9, an initial learning rate of 0.04, and a batch size of 64, again with the images resized to a fixed input resolution.

For comparison, a combination of the real-time collected dataset and the online collected dataset was used. Fifty images of each class were obtained in real time and online, respectively, resulting in a total of 300 images. As in the online testing, images from the real-time collection were pre-processed by cropping them into fixed-size grids before inference.
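
The grid cropping step can be sketched as follows in Python. The tile size is left as a parameter because the value is not restated here, and both helper functions are illustrative assumptions: the first enumerates the crop boxes that cover an image, and the second shifts a detection box found inside one tile back into full-image coordinates.

```python
def grid_tiles(width, height, tile):
    """Yield (left, top, right, bottom) crop boxes covering the image.

    Tiles on the right and bottom edges are clipped to the image size.
    """
    if tile <= 0:
        raise ValueError("tile size must be positive")
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            yield (left, top, min(left + tile, width), min(top + tile, height))


def to_full_image(box, tile_origin):
    """Shift a box from tile coordinates to full-image coordinates."""
    x1, y1, x2, y2 = box
    ox, oy = tile_origin
    return (x1 + ox, y1 + oy, x2 + ox, y2 + oy)
```

A mosquito that straddles a tile border is split across two crops in this simple scheme; overlapping tiles are one common remedy, at the cost of having to merge duplicate detections.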

Table 6 shows the comparison between our proposed model and other object detection models.

Overall, the proposed model outperformed the other models in precision, recall, F1, and accuracy. The exceptions were YOLO V3 and ResNet101, which outperformed it on the Aedes aegypti class in terms of precision and F1 score. In terms of FPS, the proposed model achieved 57 FPS, ranking third. For the application on the Dragonfly robot, higher accuracy is preferred as long as a decent FPS is maintained.

3.5. Comparison with Other Existing Works

This section elaborates the comparative analysis of the proposed algorithm with other existing mosquito detection and classification studies reported in the literature. Table 7 states the accuracy of various inspection models and algorithms based on some similar classes.

The literature has reported various studies focusing on mosquito detection and classification. However, the implementations in these case studies cannot be directly compared to our work, since they employed different training datasets, CNN algorithms, and training parameters, and performed offline inspection. Further, although the accuracy of our proposed framework is comparatively low, the framework has the key feature of performing real-time mosquito surveillance and population mapping.