Lightweight Hot-Spot Fault Detection Model of Photovoltaic Panels in UAV Remote-Sensing Image

Abstract

1. Introduction

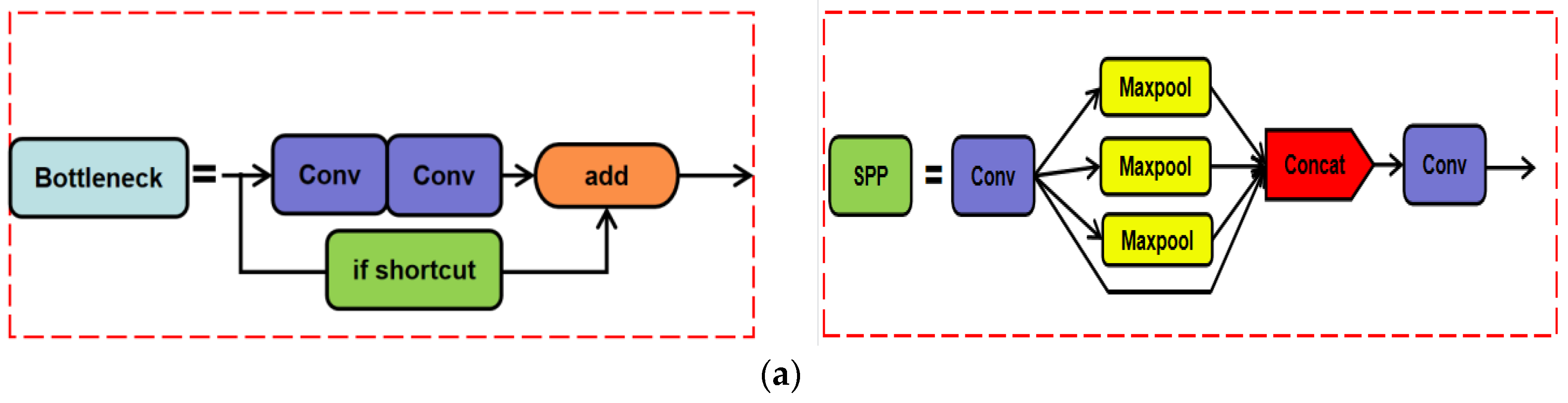

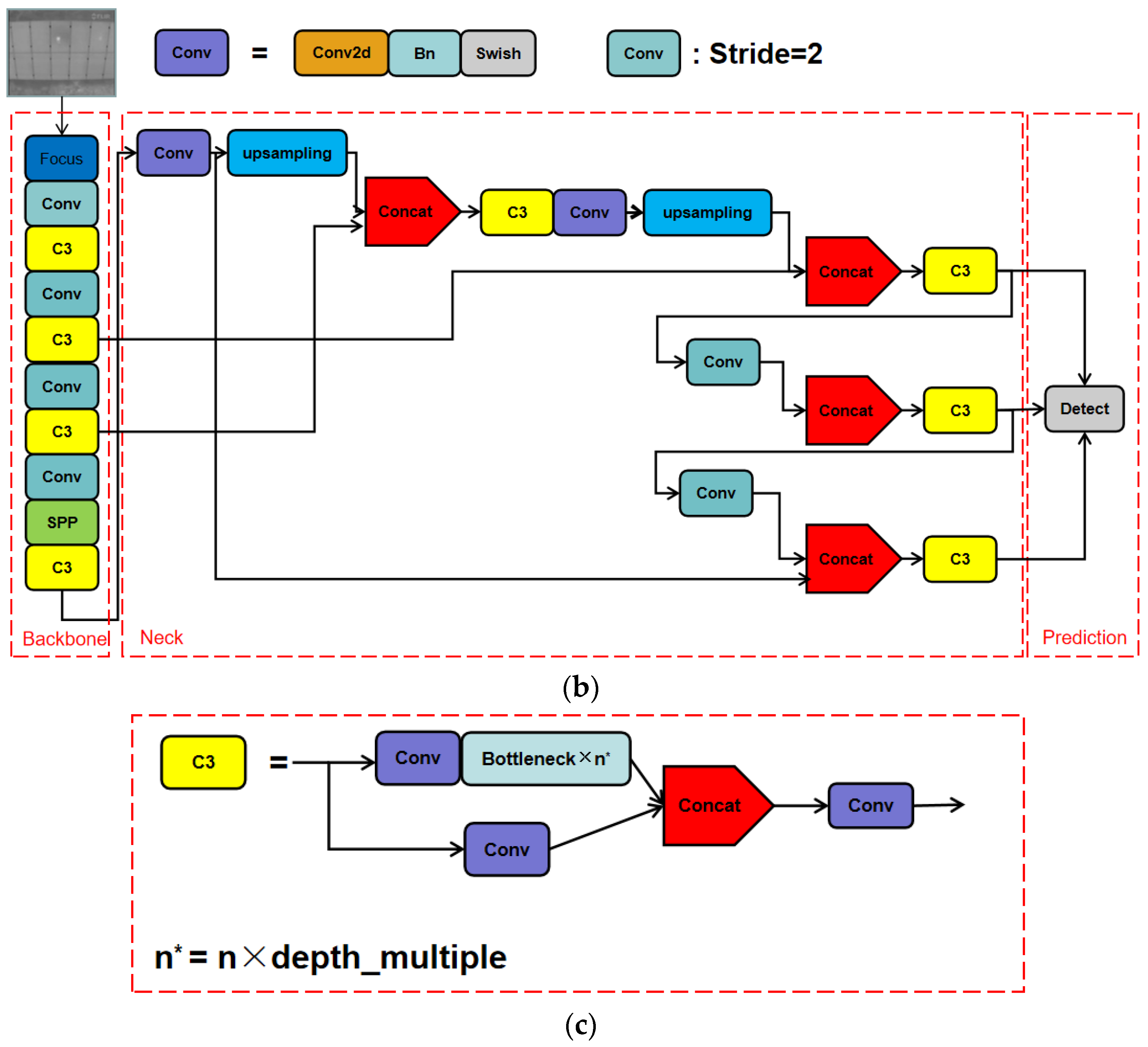

- (i)

- A new lightweight fault detection technique based on deep learning is proposed to solve and fill the gaps proposed in the literature survey.

- (ii)

- An infrared photovoltaic panel image dataset was made by integrating and processing the previous public data.

- (iii)

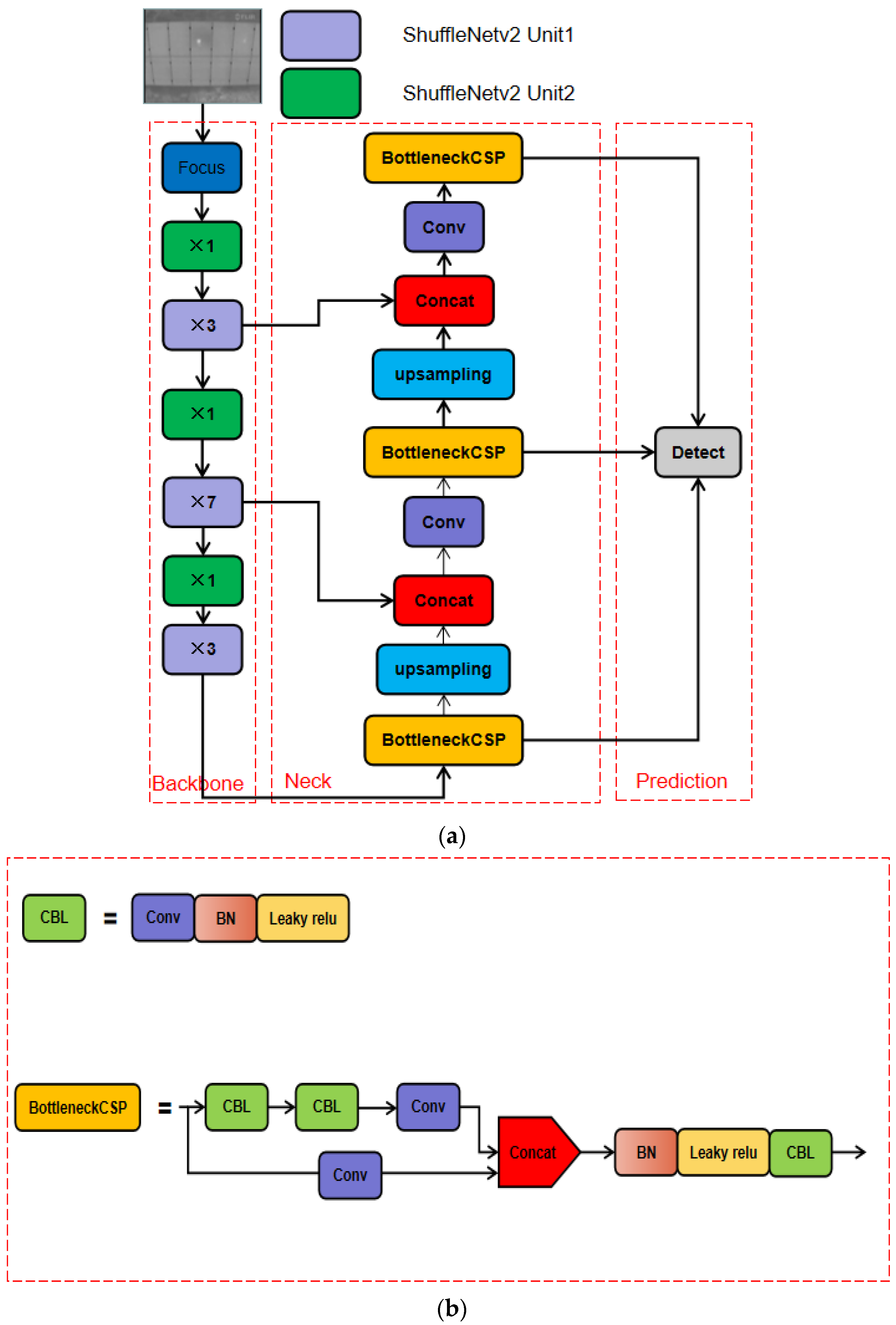

- The original feature extraction and feature fusion methods of the YOLOv5 were optimized to improve the detection accuracy of photovoltaic panels and hot spots. S-YOLOv5 has good performance in terms of detection accuracy, number of parameters, and detection speed.

2. Related Works

2.1. Hot-Spot Fault Detection Based on the Electrical Characteristics of Photovoltaic Panels

2.2. Hot-Spot Fault Detection Based on the Infrared Image Features of Photovoltaic Panels

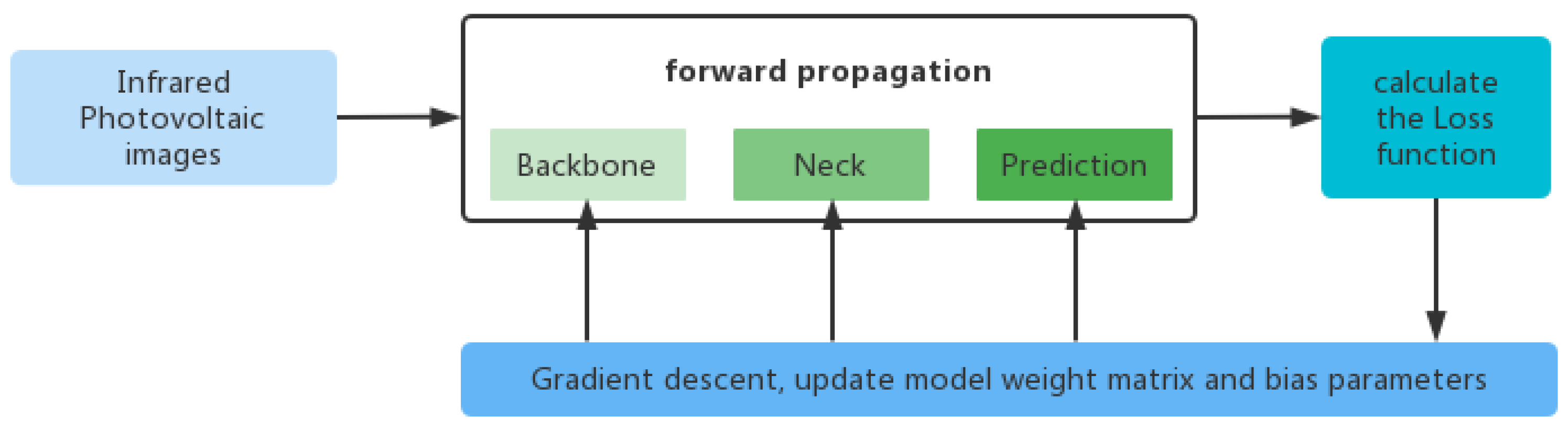

3. Proposed Method
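The ablation study lists Focus, ShuffleNetv2, and FPN as the components added to the YOLOv5 baseline. As a rough illustration of two of these building blocks, the sketch below shows the slicing step of a Focus layer and a ShuffleNet-style channel shuffle on plain Python lists. It assumes the standard definitions used in the public YOLOv5 and ShuffleNet code, not necessarily the authors' exact implementation; the function names `focus_slice` and `channel_shuffle` are hypothetical, and the convolution that normally follows the Focus slicing is omitted.

```python
def focus_slice(image):
    """Space-to-depth slicing as done by a Focus layer before its convolution.

    image: list of channels, each a list of rows (height and width must be even).
    Returns four times as many channels at half the height and half the width.
    """
    out = []
    # Slice order assumed from YOLOv5: (even row, even col), (odd row, even col),
    # (even row, odd col), (odd row, odd col), concatenated along the channel axis.
    for row_off, col_off in [(0, 0), (1, 0), (0, 1), (1, 1)]:
        for channel in image:
            out.append([row[col_off::2] for row in channel[row_off::2]])
    return out


def channel_shuffle(channels, groups):
    """Interleave channels across groups (ShuffleNet-style channel shuffle)."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    # Equivalent to reshaping to (groups, per_group), transposing, and flattening.
    return [channels[g * per_group + i]
            for i in range(per_group)
            for g in range(groups)]


# One 4x4 channel becomes four 2x2 channels.
image = [[[1, 2, 3, 4],
          [5, 6, 7, 8],
          [9, 10, 11, 12],
          [13, 14, 15, 16]]]
sliced = focus_slice(image)

# Six channels in two groups: [0, 1, 2 | 3, 4, 5] are interleaved.
shuffled = channel_shuffle([0, 1, 2, 3, 4, 5], groups=2)
```

The slicing keeps every pixel while halving the spatial resolution, and the shuffle lets information flow between channel groups at no parameter cost, which is consistent with the small parameter counts reported for the ShuffleNetv2 variants.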

4. Experiments

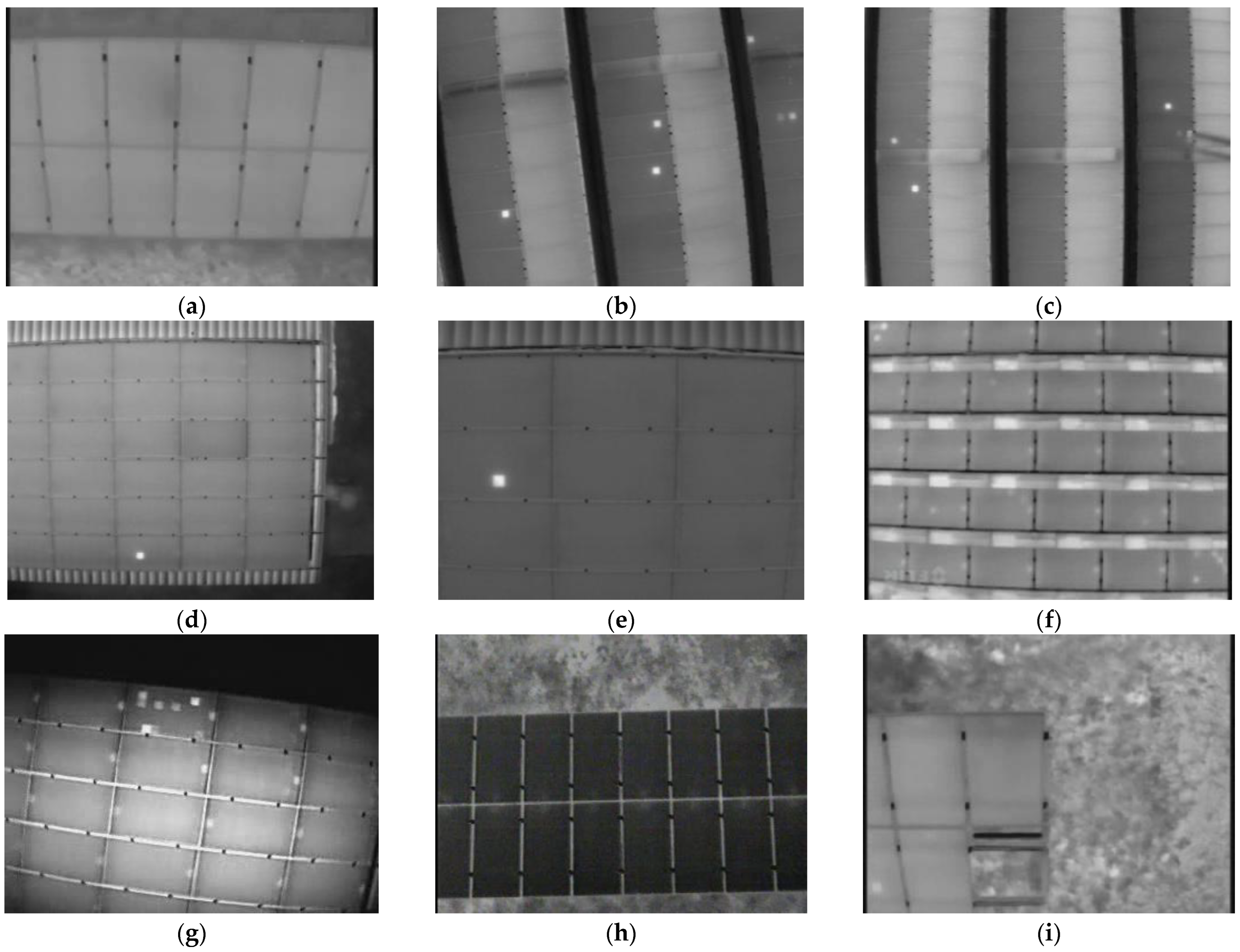

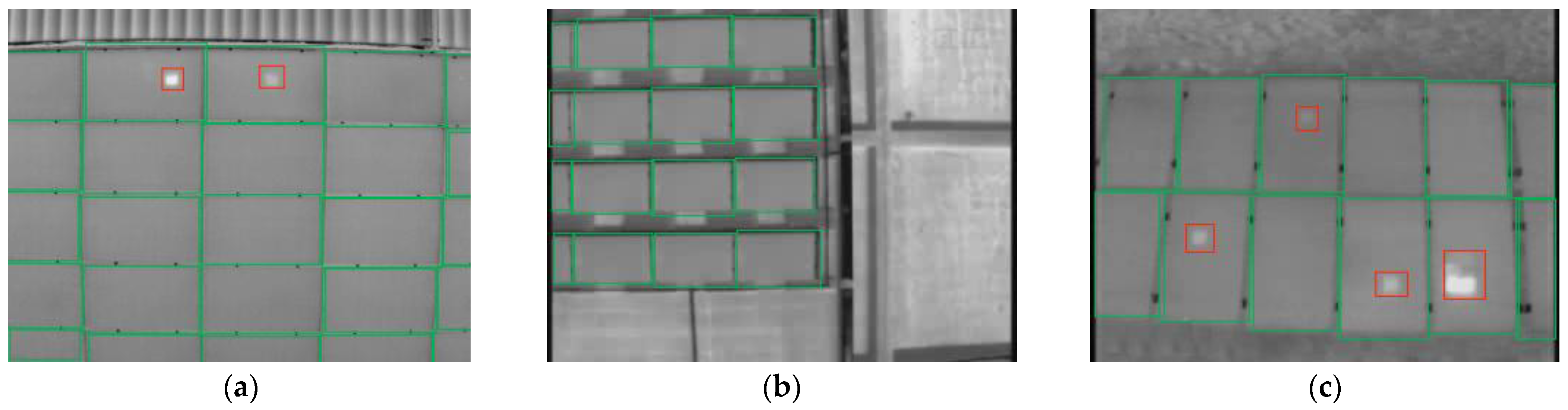

4.1. Infrared Photovoltaic Images Dataset

4.2. Evaluation Metrics

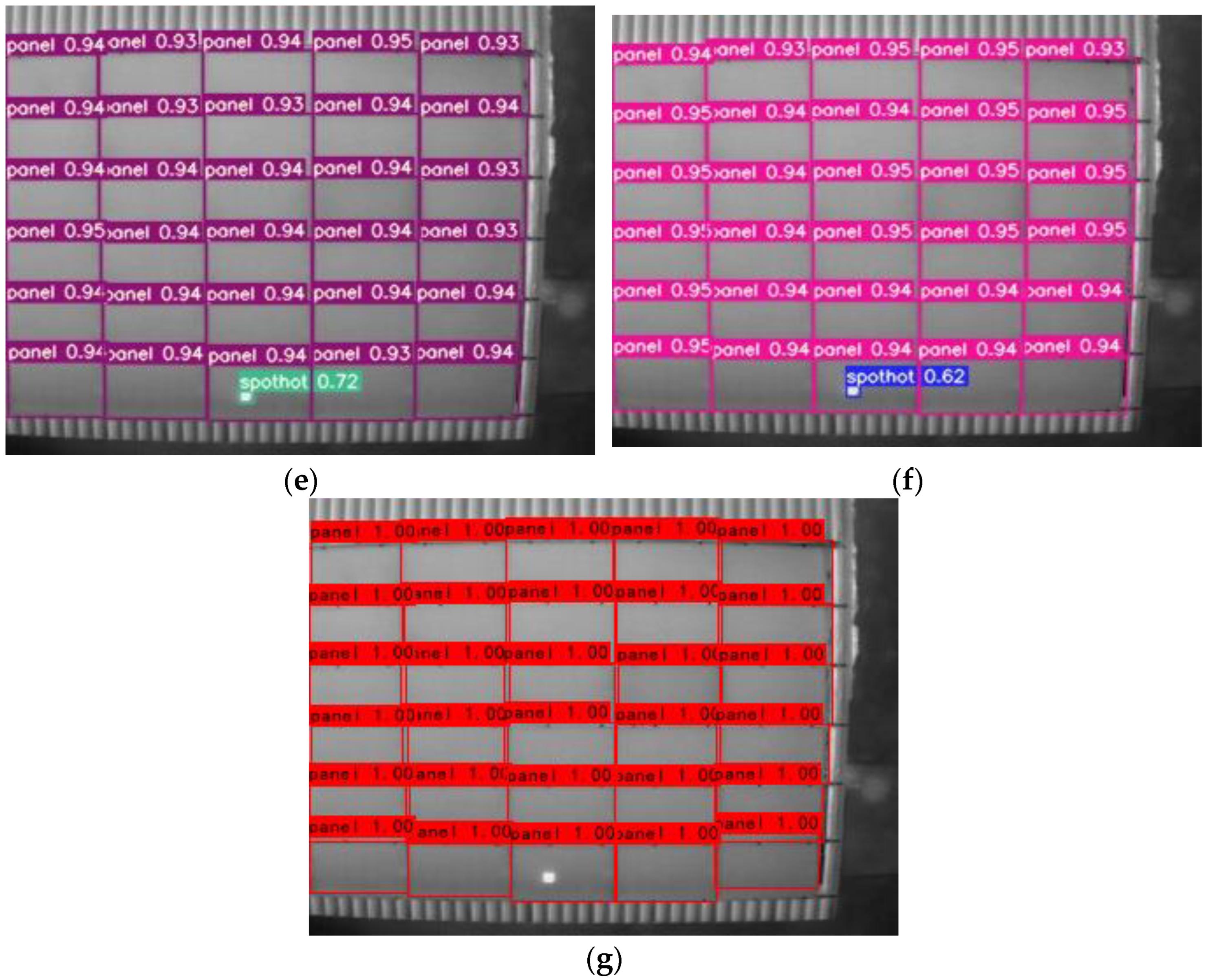
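For reference, the metrics reported in the result tables (precision, recall, and mAP) are commonly defined as follows. This is a sketch of the standard object-detection definitions and is assumed here rather than taken from the text: $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives, $P(R)$ is the precision-recall curve, and $N$ is the number of object classes (presumably two, panels and hot spots, given the dataset annotations).

```latex
\begin{align}
P   &= \frac{TP}{TP + FP}, &
R   &= \frac{TP}{TP + FN}, \\
AP  &= \int_{0}^{1} P(R)\,\mathrm{d}R, &
mAP &= \frac{1}{N}\sum_{i=1}^{N} AP_i
\end{align}
```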

4.3. Ablation Study

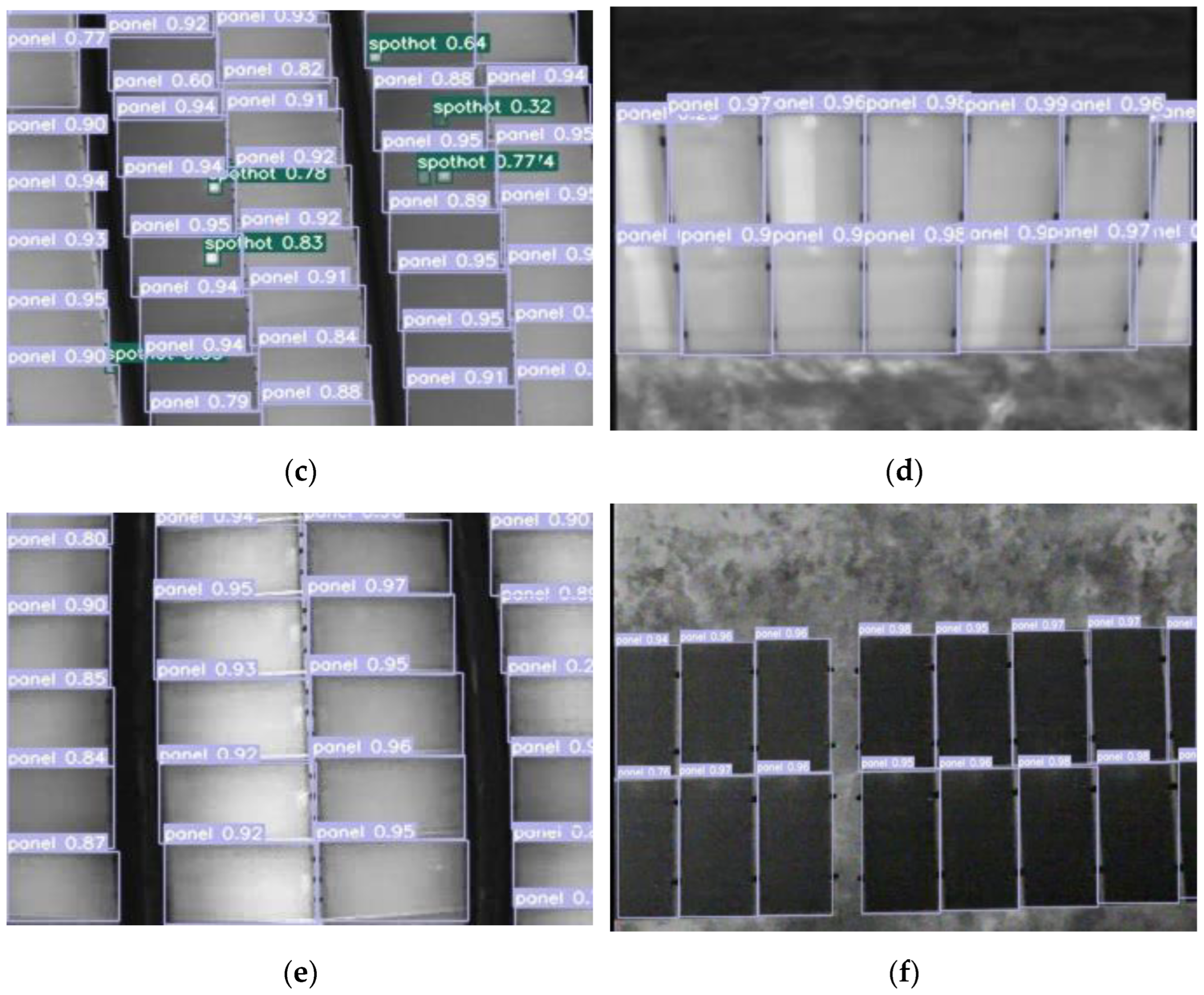

4.4. Comparative Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Pictures | Panels | Hot Spots |
|---|---|---|---|
| Training set | 3360 | 75,102 | 4990 |
| Validation set | 1120 | 24,760 | 1560 |
| Test set | 1120 | 24,860 | 1520 |
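The split proportions implied by the dataset table can be checked with a few lines of arithmetic. The sketch below only restates the counts from the table; the dictionary layout and variable names are illustrative assumptions.

```python
# Counts copied from the dataset table.
splits = {
    "Training set": {"pictures": 3360, "panels": 75102, "hot_spots": 4990},
    "Validation set": {"pictures": 1120, "panels": 24760, "hot_spots": 1560},
    "Test set": {"pictures": 1120, "panels": 24860, "hot_spots": 1520},
}

total_pictures = sum(s["pictures"] for s in splits.values())

# Fraction of all pictures that falls into each split.
shares = {name: s["pictures"] / total_pictures for name, s in splits.items()}

# Average number of annotated hot spots per picture in each split.
hot_spots_per_picture = {
    name: s["hot_spots"] / s["pictures"] for name, s in splits.items()
}

for name in splits:
    print(f"{name}: {shares[name]:.0%} of pictures, "
          f"{hot_spots_per_picture[name]:.2f} hot spots per picture")
```

The 5600 pictures are divided 60/20/20 between training, validation, and test, and each split averages between one and two annotated hot spots per picture.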

| Model | Precision (%) | Recall (%) | mAP (%) | Params (M) |
|---|---|---|---|---|
| Baseline | 93.7 | 92.0 | 94.4 | 7.06 |
| + ShuffleNetv2 | 92.5 | 89.9 | 93.0 | 0.43 |
| + Focus + ShuffleNetv2 | 95.2 | 94.5 | 97.0 | 0.44 |
| + FPN | 94.1 | 90.7 | 94.3 | 6.11 |
| + ShuffleNetv2 + FPN | 94.9 | 95.6 | 97.1 | 3.69 |
| + Focus + ShuffleNetv2 + FPN | 96.0 | 97.2 | 98.1 | 3.71 |
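To make the trade-offs in the ablation table easier to read, each variant can be expressed relative to the baseline. The following sketch does this with the values from the table; the helper name `relative_to_baseline` and the output layout are illustrative assumptions.

```python
# (model, precision %, recall %, mAP %, params in millions), from the ablation table.
ablation = [
    ("Baseline", 93.7, 92.0, 94.4, 7.06),
    ("+ ShuffleNetv2", 92.5, 89.9, 93.0, 0.43),
    ("+ Focus + ShuffleNetv2", 95.2, 94.5, 97.0, 0.44),
    ("+ FPN", 94.1, 90.7, 94.3, 6.11),
    ("+ ShuffleNetv2 + FPN", 94.9, 95.6, 97.1, 3.69),
    ("+ Focus + ShuffleNetv2 + FPN", 96.0, 97.2, 98.1, 3.71),
]

baseline = ablation[0]


def relative_to_baseline(row):
    """Return the mAP change (percentage points) and parameter reduction (%)."""
    name, _precision, _recall, m_ap, params = row
    return {
        "model": name,
        "map_gain": round(m_ap - baseline[3], 1),
        "param_reduction_pct": round(100 * (baseline[4] - params) / baseline[4], 1),
    }


summary = [relative_to_baseline(row) for row in ablation]
for entry in summary:
    print(entry)
```

Read this way, the full configuration gains 3.7 mAP points over the baseline while using roughly 47.5% fewer parameters, whereas ShuffleNetv2 alone removes about 94% of the parameters at a cost of 1.4 mAP points.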

| Model | mAP (%) | FPS | Params (M) |
|---|---|---|---|
| YOLOv3 | 96.4 | 34 | 61.50 |
| YOLOv4 | 82.4 | 44 | 63.94 |
| YOLOv5m | 95.6 | 54 | 21.04 |
| YOLOv5l | 97.1 | 46 | 46.60 |
| YOLOv5x | 96.5 | 37 | 87.25 |
| Faster RCNN | 66.6 | 7 | 28.29 |
| Our method | 98.1 | 49 | 3.71 |
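One way to summarize the comparison table is to ask which models are not beaten on all three criteria at once (higher mAP, higher FPS, fewer parameters). The sketch below computes this Pareto front from the table values; the dominance criterion is an analysis choice made here for illustration, not a procedure described in the paper.

```python
# (model, mAP %, FPS, params in millions), from the comparison table.
models = [
    ("YOLOv3", 96.4, 34, 61.50),
    ("YOLOv4", 82.4, 44, 63.94),
    ("YOLOv5m", 95.6, 54, 21.04),
    ("YOLOv5l", 97.1, 46, 46.60),
    ("YOLOv5x", 96.5, 37, 87.25),
    ("Faster RCNN", 66.6, 7, 28.29),
    ("Our method", 98.1, 49, 3.71),
]


def dominates(a, b):
    """True if a is no worse than b on every metric and strictly better on one."""
    _, map_a, fps_a, params_a = a
    _, map_b, fps_b, params_b = b
    no_worse = map_a >= map_b and fps_a >= fps_b and params_a <= params_b
    better = map_a > map_b or fps_a > fps_b or params_a < params_b
    return no_worse and better


# A model is on the Pareto front if no other model dominates it.
pareto_front = [
    m[0] for m in models if not any(dominates(other, m) for other in models)
]
print(pareto_front)
```

Only YOLOv5m and the proposed method remain on the front: YOLOv5m is faster (54 vs. 49 FPS), while the proposed method has the highest mAP and the fewest parameters, and every other model in the table is dominated by it.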

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, Q.; Ma, J.; Liu, M.; Liu, Y.; Li, Y.; Shi, G. Lightweight Hot-Spot Fault Detection Model of Photovoltaic Panels in UAV Remote-Sensing Image. Sensors 2022, 22, 4617. https://doi.org/10.3390/s22124617
