The State-of-the-Art Sensing Techniques in Human Activity Recognition: A Survey

Abstract

1. Introduction

1.1. Relevant Surveys

1.2. Paper Aims and Contribution

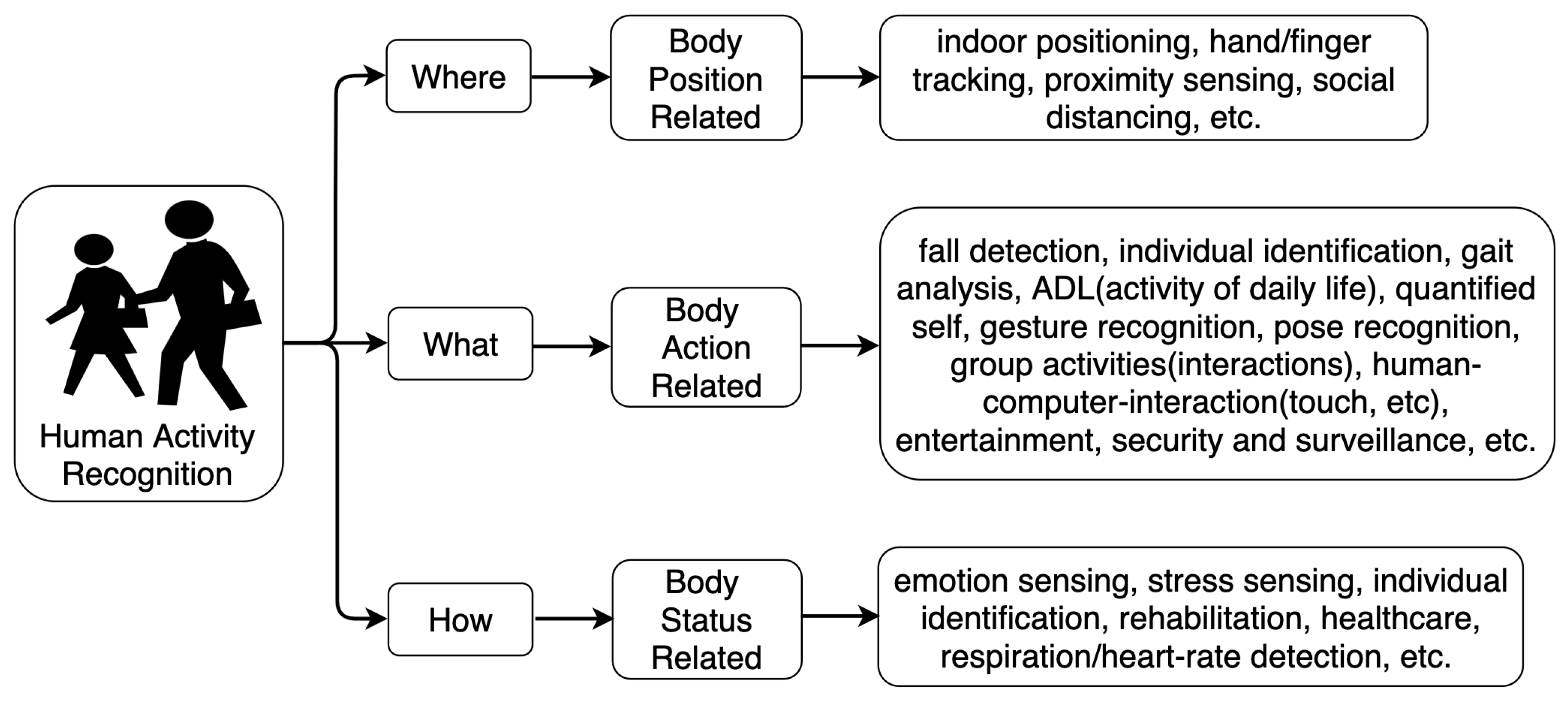

- For a clear overview of the multifaceted nature of HAR tasks, we first sorted human activities into three types: body position-related services (“where”), body action-related services (“what”), and body status-related services (“how”). This coarse but concise sorting introduces the end objective of each sensing technique and gives readers an elementary entry point to the sensing concepts.

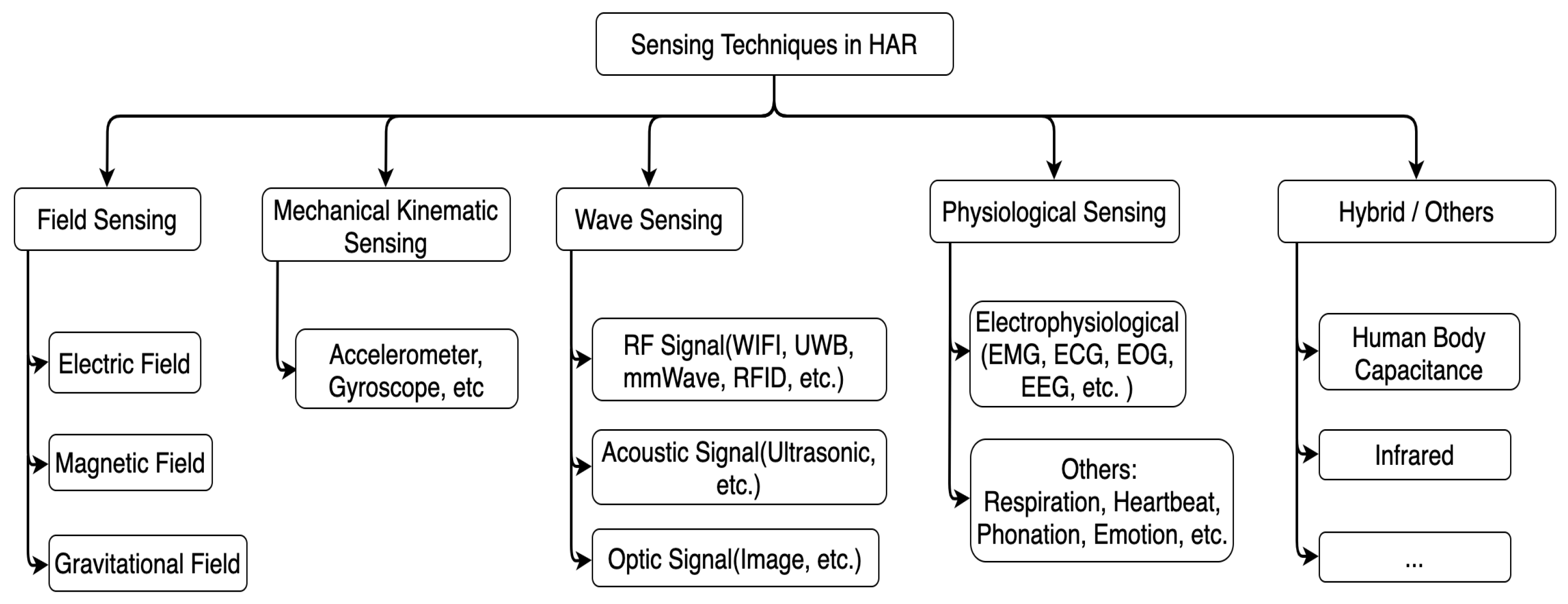

- We then categorized the sensing techniques used in HAR tasks into five classes based on the underlying physical principle: mechanical kinematic sensing, wave-based sensing, physiological sensing, field-based sensing, and hybrid or other sensing. Within each category, we broadly enumerated the adopted sensing modalities and provided an in-depth description of their underlying technical principles. This sensor-oriented categorization gives readers a deeper understanding of the distinct HAR tasks.

- We compared each sensing modality within and across classes using eight metrics, to clarify each modality’s limitations, dominant properties, and typical applications in HAR. Finally, we offered a few insights regarding future developments.

2. Background

2.1. Object of Human Activity Recognition (HAR)

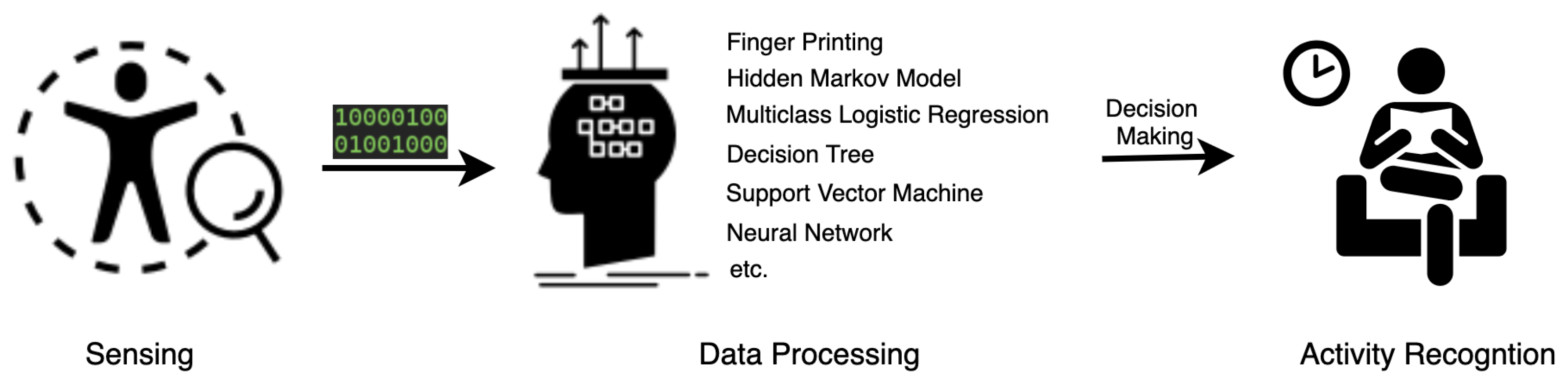

2.2. General Process of Human Activity Recognition

3. Sensing Techniques

- Cost. Low: less than 10 USD. High: hundreds to thousands of USD.

- Power efficiency, rated by power consumption. Low consumption: on the order of mW. High consumption: on the order of W.

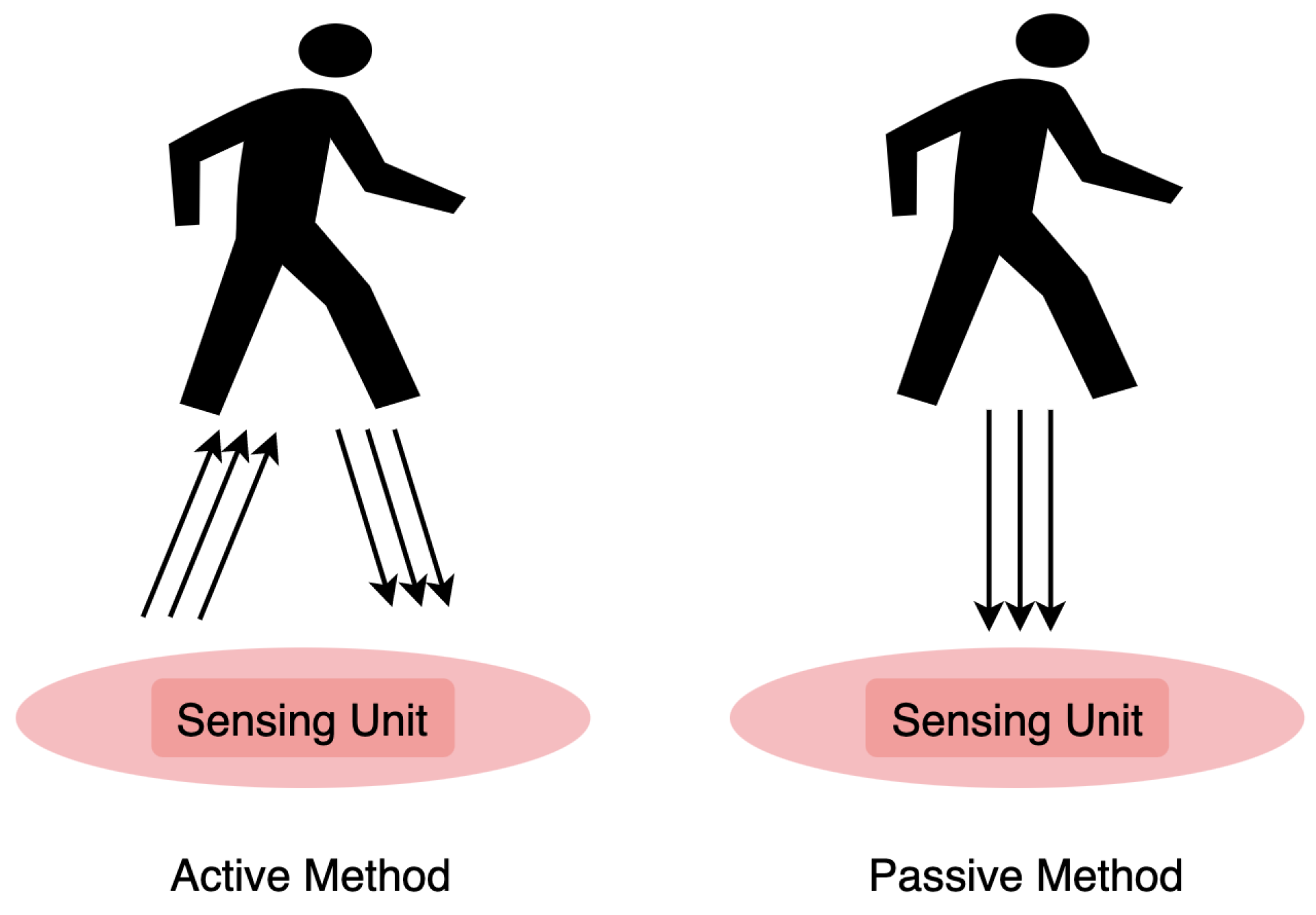

- Signal source. Active or passive, depending on whether the measured physical quantity is emitted by the sensing system (active) or occurs naturally (passive).

- Robustness. The ability to tolerate perturbations that might affect the performance of the sensor.

- Privacy concern. Whether the sensing approach records personal information beyond what the task requires.

- Computational load. The hardware resources required for successful decision-making.

- Typical application. A list of HAR tasks being addressed by the sensing approach.

- Other criteria. Additional factors such as installation and maintenance complexity, environmental dependency (e.g., line of sight), accuracy, and sensitivity.
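The eight metrics above can be captured as a small record type, which makes in-class and cross-class comparisons mechanical. The sketch below is a minimal illustration, not the survey's scoring procedure: the 100 USD cutoff for "high" cost, the "medium" band between 10 and 100 USD, the 1 W boundary between mW-level and W-level power, and the names `cost_band`, `power_band`, `ModalityProfile`, and `shortlist` are all assumptions.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical cost bands: "low" (< 10 USD) follows the text; the 100 USD
# cutoff for "high" and the "medium" band in between are assumptions.
def cost_band(unit_cost_usd: float) -> str:
    if unit_cost_usd < 10:
        return "low"
    if unit_cost_usd >= 100:
        return "high"
    return "medium"

# Order-of-magnitude power draw; the 1 W boundary is an assumption.
def power_band(power_w: float) -> str:
    return "mW" if power_w < 1.0 else "W"

@dataclass
class ModalityProfile:
    """One sensing modality described by the eight comparison metrics."""
    name: str
    cost: str                 # "low", "medium", or "high"
    power: str                # "mW" or "W"
    signal_source: str        # "active" or "passive"
    robustness: str           # qualitative rating, e.g. "low"/"medium"/"high"
    privacy_concern: bool     # records personal data beyond what the task needs
    computational_load: str   # qualitative rating
    typical_applications: List[str] = field(default_factory=list)
    other_criteria: List[str] = field(default_factory=list)

_COST_ORDER = {"low": 0, "medium": 1, "high": 2}

def shortlist(profiles, max_cost="medium", allow_privacy_concern=False):
    """Names of modalities within a cost ceiling, optionally excluding privacy-sensitive ones."""
    return [
        p.name
        for p in profiles
        if _COST_ORDER[p.cost] <= _COST_ORDER[max_cost]
        and (allow_privacy_concern or not p.privacy_concern)
    ]

if __name__ == "__main__":
    # Placeholder profiles for illustration only; these are not the survey's ratings.
    a = ModalityProfile("modality_a", cost_band(5), power_band(0.02), "passive",
                        "medium", False, "low", ["step counting"])
    b = ModalityProfile("modality_b", cost_band(400), power_band(3.0), "active",
                        "high", True, "high", ["pose estimation"])
    print(shortlist([a, b]))  # ['modality_a']
```

Keeping the qualitative ratings as strings mirrors the coarse low/high scales used in the comparison; mapping them to numeric scores would be a further assumption.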

3.1. Mechanical Kinematic Sensing

3.2. Wave Sensing

- (A)

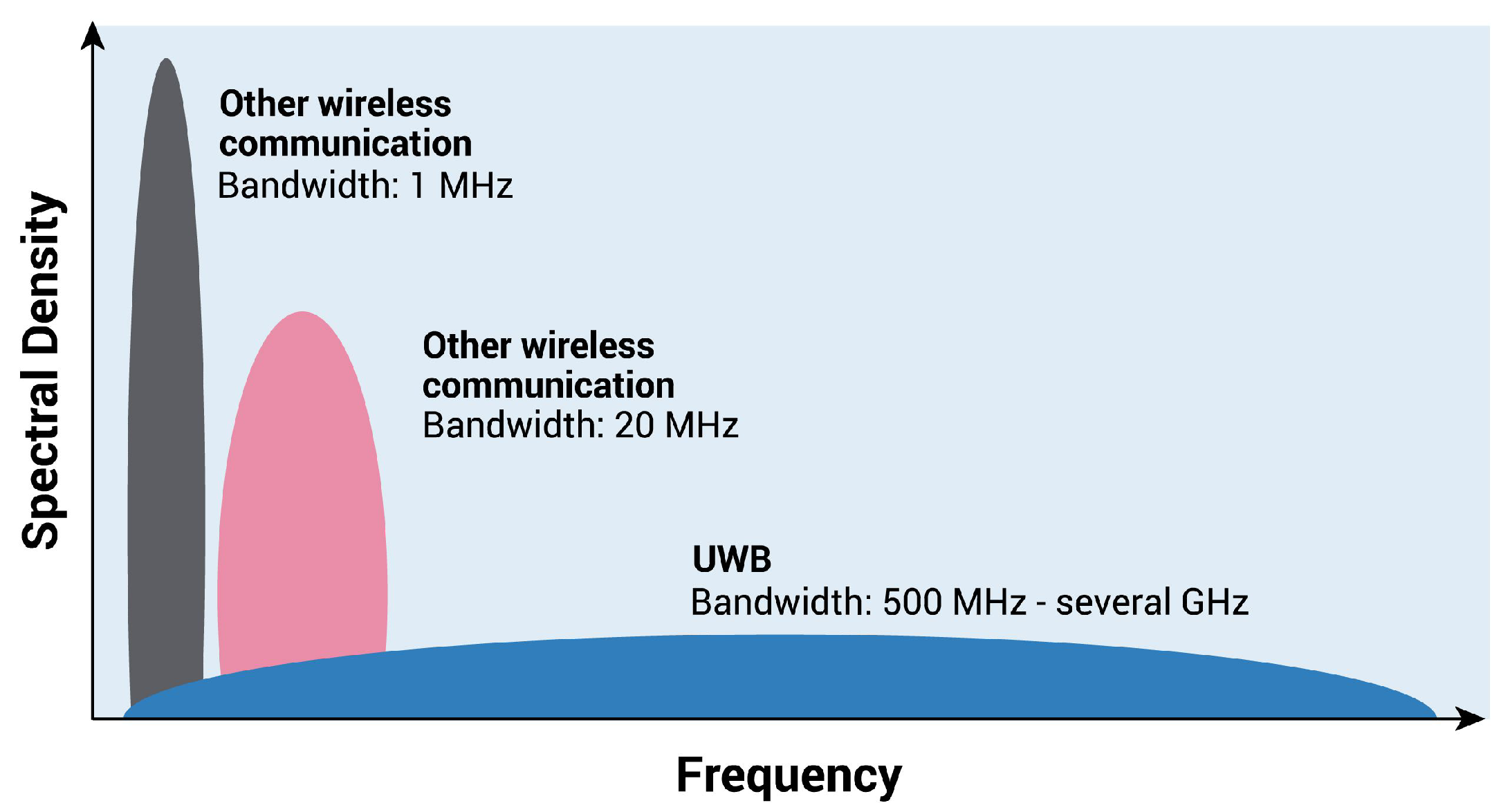

- RF Signal

- (B) Acoustic Signal

- (C) Optic Signal

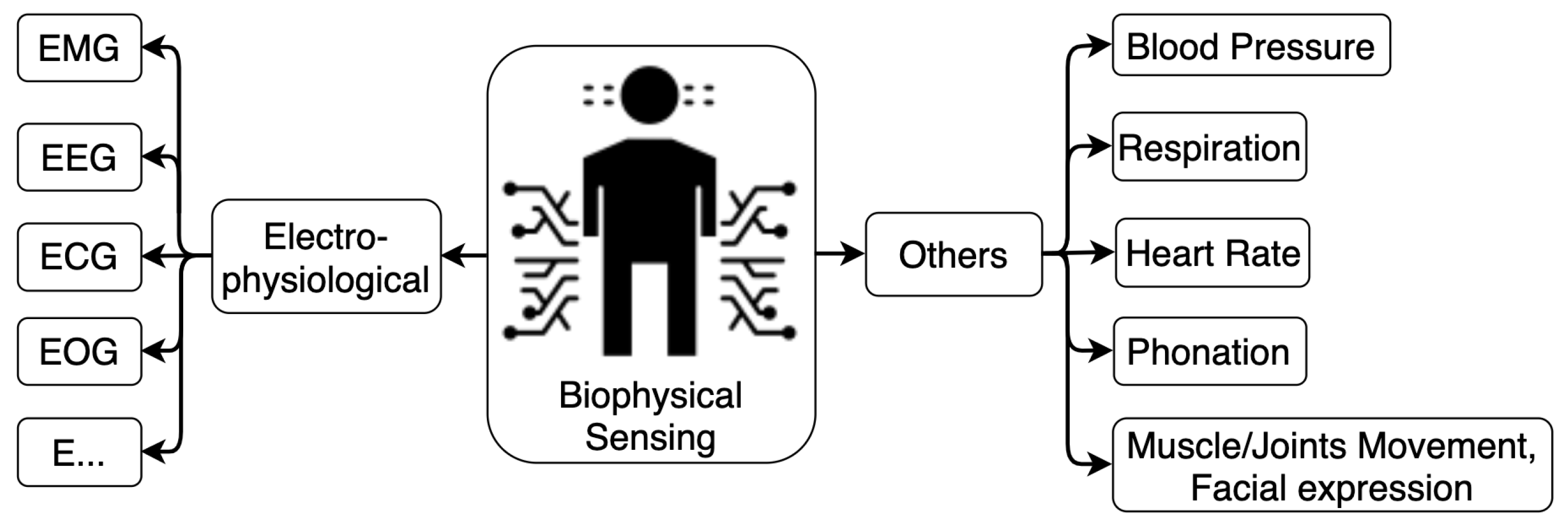

3.3. Physiological Sensing

- (A) Electrophysiological Signals

- (B) Other Physiological Signals

3.4. Field Sensing

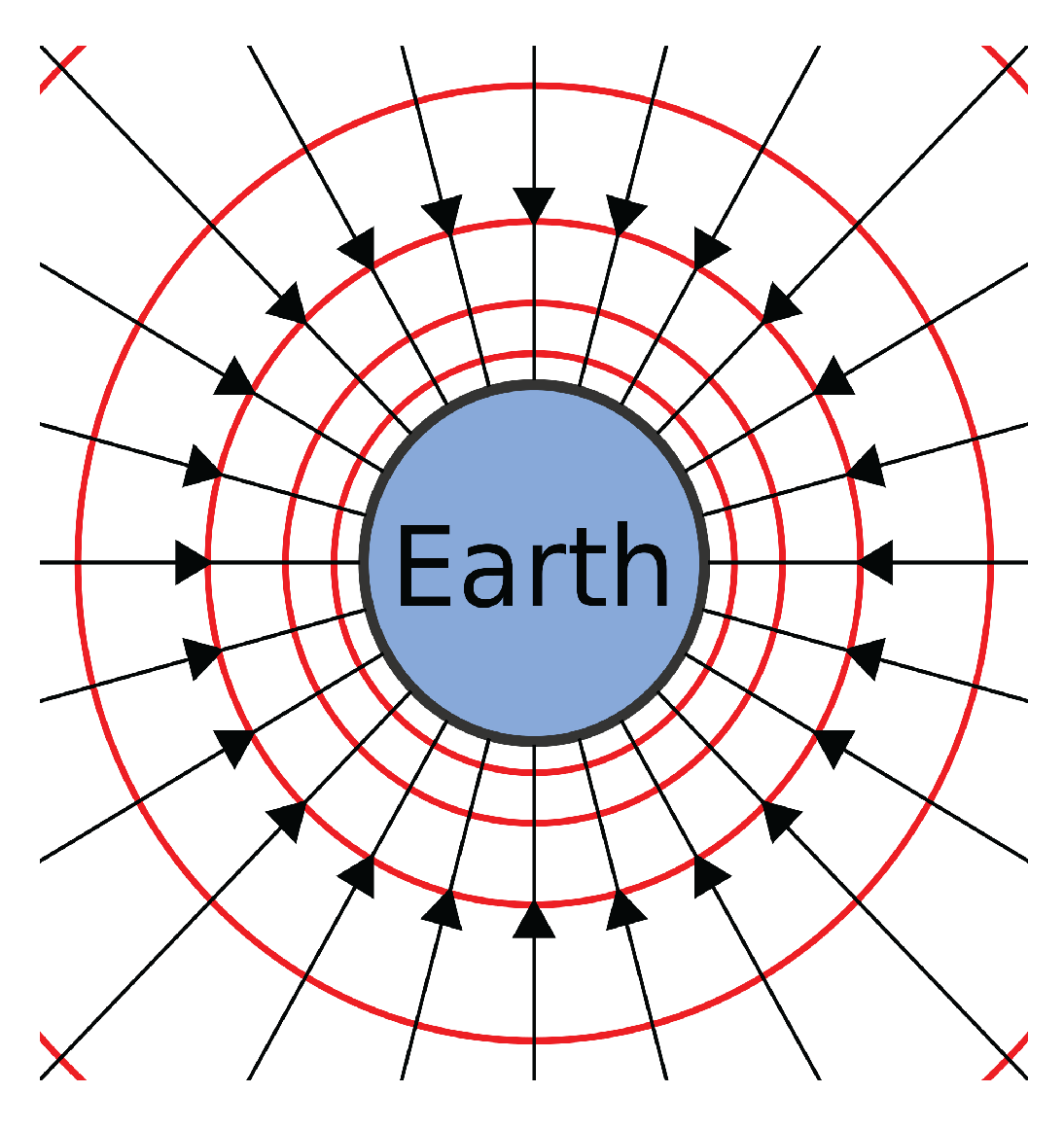

- (A)

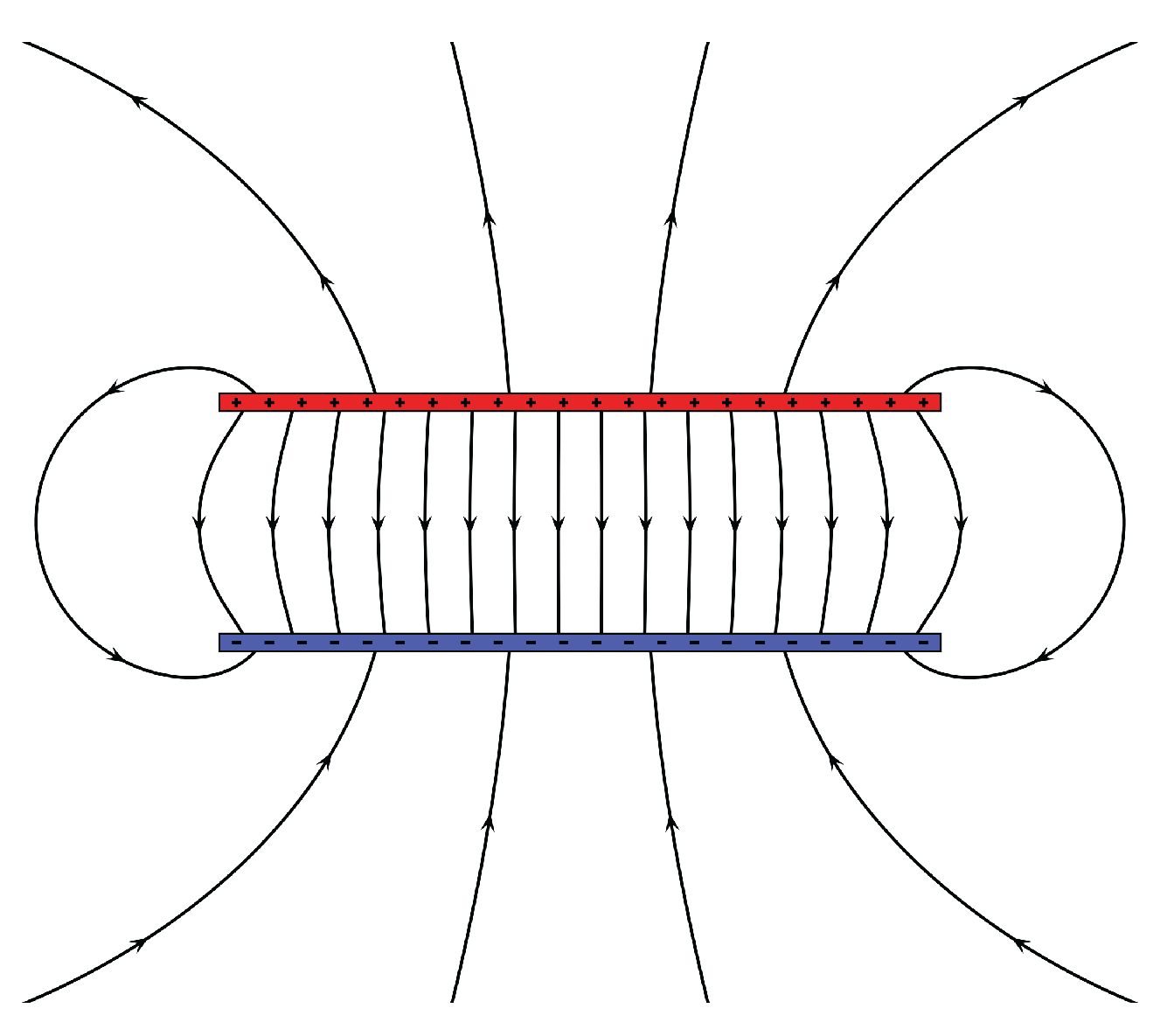

- Electric Field

- (B)

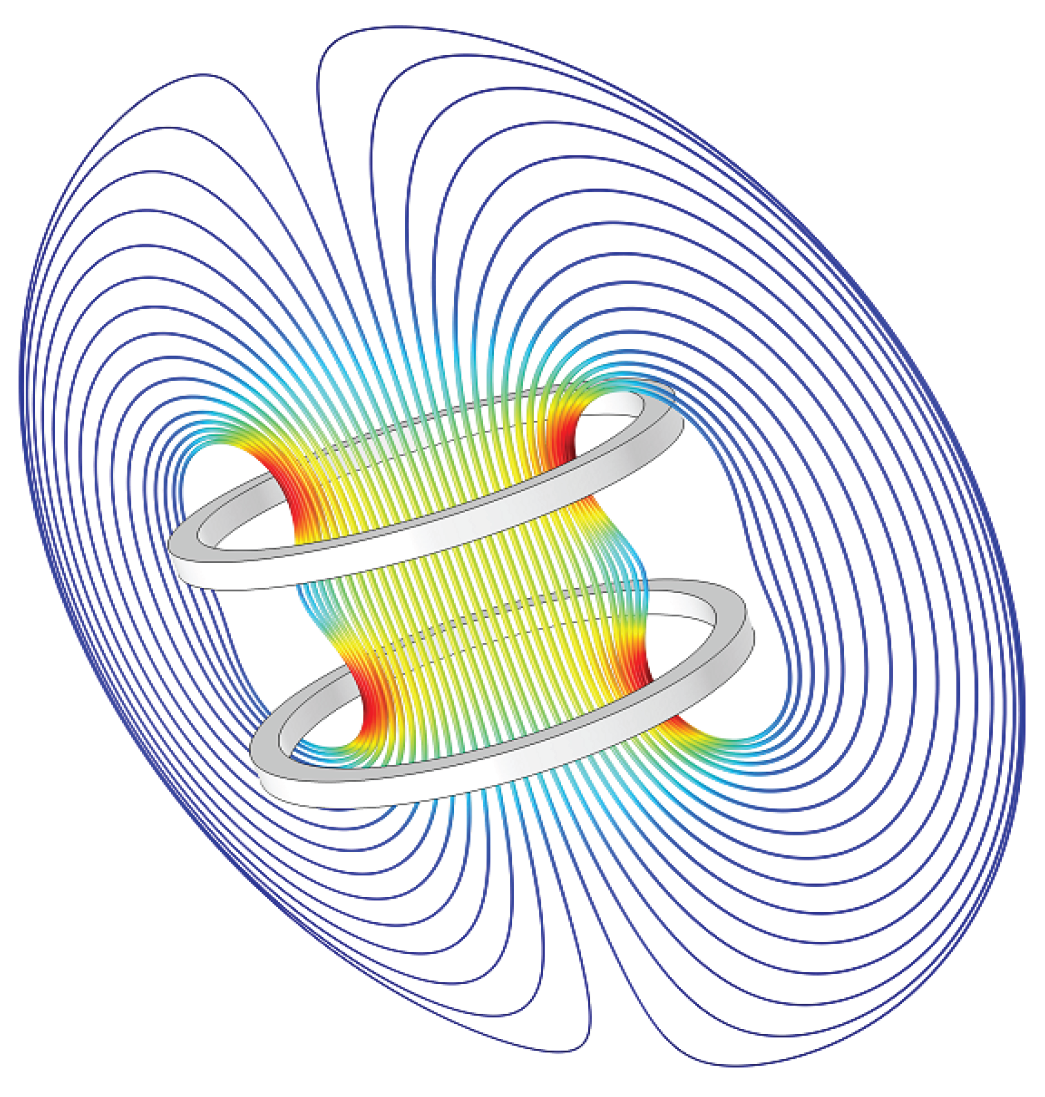

- Magnetic Field

- (C) Gravitational Field

3.5. Hybrid / Others

- (A) Human Body Capacitance

- (B) Infrared

3.6. Summary

4. Outlooks

- Sensor fusion: Sensor fusion has great potential to improve sensing robustness by combining data from different sensors. Each sensing modality has inherent strengths and weaknesses, and merging data from several modalities can yield a more robust data model. For example, long-term positioning with a high-rate IMU is degraded by accumulated integration errors, which can be corrected by a lower-rate sensor that provides absolute anchor points (such as visual features). Classic, efficient algorithms such as the Kalman filter [180] can be used for this kind of fusion. As another example, electric field sensors can directly perceive an individual’s proximity, but they are also sensitive to environmental variation, which makes it hard to attribute a signal change to a single source. By deploying motion sensors such as IMUs both on individuals and in the environment, the source of an electric field signal could be identified. Such fusion approaches can not only compensate for the weakness of a particular sensing modality but also provide a more holistic understanding of the monitored system.

- Smart sensors: Driven by pervasive practical use cases, power-efficient data processing techniques, and advances in chip manufacturing, sensors are clearly becoming smarter, with the ability to process signal data locally. Compared with conventional sensor systems, smart sensors take advantage of emerging machine learning algorithms and modern computing hardware to create sophisticated, intelligent systems tailored to specific sensing applications. In recent years, many smart sensors have been proposed for HAR tasks, such as IMUs with an integrated pedometer (BMI270) and sensors with integrated gesture recognition (PAJ7620). In such devices, recognition, classification, and decision-making are executed locally on the sensor instead of uploading raw data to the cloud for inference. As a result, user privacy is better protected, and the computing load on the central processing unit is significantly reduced.

- Novel sensors: Advances in materials and fabrication technology are producing novel sensing techniques and devices that broaden our ability to perceive the body and the environments in which people live. Novel sensors for HAR offer an alternative or complementary approach to existing solutions. More importantly, they can collect knowledge about the body or environment that current sensing techniques cannot. One example is a microelectrode-based biosensor proposed for long-term monitoring of sweat glucose levels [181]. This multi-function biosensor is fabricated on a flexible substrate, which offers greater wearing comfort than rigid sensors and thus enables long-term on-skin health monitoring.
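The IMU-plus-anchor example in the sensor-fusion item above can be made concrete with a Kalman filter. The following is a minimal sketch under stated assumptions, not the specific formulation of [180]: a 1-D subject standing still at 0 m, a constant-acceleration motion model driven by accelerometer readings, an uncorrected accelerometer bias of 0.1 m/s², and noise-free absolute position fixes at 1 Hz standing in for visual anchor points. The function name `fuse_imu_with_anchors` and all parameter values are hypothetical.

```python
def fuse_imu_with_anchors(accels, dt, anchors, accel_var=0.05, anchor_var=0.01):
    """1-D Kalman filter: high-rate IMU prediction plus low-rate absolute position updates.

    accels     -- accelerometer readings (m/s^2), one per time step
    dt         -- IMU sampling interval (s)
    anchors    -- {step_index: absolute_position_m} from a slower sensor
    accel_var  -- assumed acceleration noise variance driving the process noise
    anchor_var -- assumed variance of each absolute position fix
    Returns the position estimate after every step.
    """
    x = [0.0, 0.0]                  # state: [position, velocity], starting at rest at 0 m
    P = [[1.0, 0.0], [0.0, 1.0]]    # state covariance
    estimates = []
    for k, a in enumerate(accels):
        # Predict with F = [[1, dt], [0, 1]] and control input B = [dt^2/2, dt].
        x = [x[0] + dt * x[1] + 0.5 * dt * dt * a, x[1] + dt * a]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        # Add process noise Q = accel_var * B B^T.
        b0, b1 = 0.5 * dt * dt, dt
        P = [[p00 + accel_var * b0 * b0, p01 + accel_var * b0 * b1],
             [p10 + accel_var * b1 * b0, p11 + accel_var * b1 * b1]]
        if k in anchors:
            # Update with H = [1, 0]: only position is observed by the anchor sensor.
            s = P[0][0] + anchor_var               # innovation variance
            k0, k1 = P[0][0] / s, P[1][0] / s      # Kalman gain
            y = anchors[k] - x[0]                  # innovation
            x = [x[0] + k0 * y, x[1] + k1 * y]
            P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                 [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
        estimates.append(x[0])
    return estimates

if __name__ == "__main__":
    dt, n = 0.01, 1000                            # 100 Hz IMU for 10 s
    accels = [0.1] * n                            # stationary subject, 0.1 m/s^2 bias
    fixes = {k: 0.0 for k in range(0, n, 100)}    # 1 Hz fixes at the true position
    drift = fuse_imu_with_anchors(accels, dt, {})[-1]
    fused = fuse_imu_with_anchors(accels, dt, fixes)[-1]
    print(f"IMU only: {drift:.2f} m, fused: {fused:.2f} m")
```

With the IMU alone, the bias integrates into a position error of ½·b·t² = 5 m after 10 s. The periodic fixes keep the fused estimate bounded because each update corrects position directly and, through the position-velocity covariance term, corrects velocity as well.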
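To illustrate the local-processing idea in the smart-sensors item above, the sketch below turns raw accelerometer samples into a step count on the device and discards each sample immediately, so only the count would ever leave the sensor. It is a deliberately simple threshold-with-hysteresis detector, not the algorithm inside the BMI270, which is not described here; the class name `OnSensorStepCounter`, the 9.81 m/s² baseline, and the 1 m/s² rise threshold are assumptions.

```python
import math

class OnSensorStepCounter:
    """Hypothetical on-sensor step detector that keeps only a running count."""

    def __init__(self, baseline=9.81, rise=1.0):
        self.baseline = baseline          # resting acceleration magnitude, m/s^2 (assumed)
        self.threshold = baseline + rise  # a step is counted when magnitude rises above this
        self._armed = True                # re-armed once the signal falls back below baseline
        self._steps = 0

    def push(self, ax, ay, az):
        """Consume one raw accelerometer sample (m/s^2); the sample is not stored."""
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if self._armed and magnitude > self.threshold:
            self._steps += 1
            self._armed = False
        elif not self._armed and magnitude < self.baseline:
            self._armed = True

    @property
    def step_count(self):
        return self._steps

if __name__ == "__main__":
    # Synthetic 2 Hz gait: vertical acceleration oscillating around gravity for 10 s at 50 Hz.
    counter = OnSensorStepCounter()
    fs = 50
    for k in range(10 * fs):
        t = k / fs
        counter.push(0.0, 0.0, 9.81 + 2.0 * math.sin(2 * math.pi * 2.0 * t))
    print(counter.step_count)  # 20
```

Re-arming only after the signal falls back below the baseline keeps a single peak from being counted twice; practical pedometers add filtering and step-validity checks that this sketch omits.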

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ToF | Time of Flight |
| RSS | Received Signal Strength |
| LiDAR | Light Detection and Ranging |
| UWB | Ultra-wideband |
| RF | Radio Frequency |
| ID | Identification |
| MAE | Mean Absolute Error |
| IMU | Inertial Measurement Unit |
| PWM | Pulse-Width Modulation |
| AUV | Autonomous Underwater Vehicles |
| SLAM | Simultaneous Localization and Mapping |

References

- Sigg, S.; Shi, S.; Buesching, F.; Ji, Y.; Wolf, L. Leveraging RF-channel fluctuation for activity recognition: Active and passive systems, continuous and RSSI-based signal features. In Proceedings of the International Conference on Advances in Mobile Computing & Multimedia, Vienna, Austria, 2–4 December 2013; pp. 43–52.

- Ramos, R.G.; Domingo, J.D.; Zalama, E.; Gómez-García-Bermejo, J. Daily Human Activity Recognition Using Non-Intrusive Sensors. Sensors 2021, 21, 5270.

- Samadi, M.R.H.; Cooke, N. EEG signal processing for eye tracking. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 2030–2034.

- Hussain, Z.; Sheng, Q.Z.; Zhang, W.E. A review and categorization of techniques on device-free human activity recognition. J. Netw. Comput. Appl. 2020, 167, 102738.

- Yuan, G.; Wang, Z.; Meng, F.; Yan, Q.; Xia, S. An overview of human activity recognition based on smartphone. Sens. Rev. 2019, 39, 288–306.

- Li, Y.; Wang, S.; Jin, C.; Zhang, Y.; Jiang, T. A survey of underwater magnetic induction communications: Fundamental issues, recent advances, and challenges. IEEE Commun. Surv. Tutor. 2019, 21, 2466–2487.

- Dang, L.M.; Min, K.; Wang, H.; Piran, M.J.; Lee, C.H.; Moon, H. Sensor-based and vision-based human activity recognition: A comprehensive survey. Pattern Recognit. 2020, 108, 107561.

- Fu, B.; Damer, N.; Kirchbuchner, F.; Kuijper, A. Sensing technology for human activity recognition: A comprehensive survey. IEEE Access 2020, 8, 83791–83820.

- Raval, R.M.; Prajapati, H.B.; Dabhi, V.K. Survey and analysis of human activity recognition in surveillance videos. Intell. Decis. Technol. 2019, 13, 271–294.

- Mohamed, R.; Perumal, T.; Sulaiman, M.N.; Mustapha, N. Multi resident complex activity recognition in smart home: A literature review. Int. J. Smart Home 2017, 11, 21–32.

- Bux, A.; Angelov, P.; Habib, Z. Vision based human activity recognition: A review. Adv. Comput. Intell. Syst. 2017, 341–371.

- Ma, J.; Wang, H.; Zhang, D.; Wang, Y.; Wang, Y. A survey on wi-fi based contactless activity recognition. In Proceedings of the International Conference on Ubiquitous Intelligence & Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People and Smart World Congress (UIC/ATC/ScalCom/CBDCom/IoP/SmartWorld), Toulouse, France, 18–21 July 2016; pp. 1086–1091.

- Lioulemes, A.; Papakostas, M.; Gieser, S.N.; Toutountzi, T.; Abujelala, M.; Gupta, S.; Collander, C.; Mcmurrough, C.D.; Makedon, F. A survey of sensing modalities for human activity, behavior, and physiological monitoring. In Proceedings of the PETRA ’16: 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments, Corfu Island, Greece, 29 June–1 July 2016; pp. 1–8.

- Vrigkas, M.; Nikou, C.; Kakadiaris, I.A. A review of human activity recognition methods. Front. Robot. AI 2015, 2, 28.

- Mukhopadhyay, S.C. Wearable sensors for human activity monitoring: A review. IEEE Sens. J. 2014, 15, 1321–1330.

- Jalal, A.; Sarif, N.; Kim, J.T.; Kim, T.S. Human activity recognition via recognized body parts of human depth silhouettes for residents monitoring services at smart home. Indoor Built Environ. 2013, 22, 271–279.

- Javed, A.R.; Faheem, R.; Asim, M.; Baker, T.; Beg, M.O. A smartphone sensors-based personalized human activity recognition system for sustainable smart cities. Sustain. Cities Soc. 2021, 71, 102970.

- Li, R.; Chellappa, R.; Zhou, S.K. Learning multi-modal densities on discriminative temporal interaction manifold for group activity recognition. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2450–2457.

- Cho, N.G.; Kim, Y.J.; Park, U.; Park, J.S.; Lee, S.W. Group activity recognition with group interaction zone based on relative distance between human objects. Int. J. Pattern Recognit. Artif. Intell. 2015, 29, 1555007.

- Brena, R.F.; García-Vázquez, J.P.; Galván-Tejada, C.E.; Muñoz-Rodriguez, D.; Vargas-Rosales, C.; Fangmeyer, J. Evolution of indoor positioning technologies: A survey. J. Sens. 2017, 2017, 2630413.

- Fuchs, C.; Aschenbruck, N.; Martini, P.; Wieneke, M. Indoor tracking for mission critical scenarios: A survey. Pervasive Mob. Comput. 2011, 7, 1–15.

- Navarro, S.E.; Mühlbacher-Karrer, S.; Alagi, H.; Zangl, H.; Koyama, K.; Hein, B.; Duriez, C.; Smith, J.R. Proximity perception in human-centered robotics: A survey on sensing systems and applications. IEEE Trans. Robot. 2021, 38, 1599–1620.

- Mubashir, M.; Shao, L.; Seed, L. A survey on fall detection: Principles and approaches. Neurocomputing 2013, 100, 144–152.

- Akhtaruzzaman, M.; Shafie, A.A.; Khan, M.R. Gait analysis: Systems, technologies, and importance. J. Mech. Med. Biol. 2016, 16, 1630003.

- Verbunt, J.A.; Huijnen, I.P.; Köke, A. Assessment of physical activity in daily life in patients with musculoskeletal pain. Eur. J. Pain 2009, 13, 231–242.

- Wang, Z.; Ho, S.B.; Cambria, E. A review of emotion sensing: Categorization models and algorithms. Multimed. Tools Appl. 2020, 79, 35553–35582.

- Arakawa, T. A Review of Heartbeat Detection Systems for Automotive Applications. Sensors 2021, 21, 6112.

- Alemdar, H.; Ersoy, C. Wireless sensor networks for healthcare: A survey. Comput. Netw. 2010, 54, 2688–2710.

- Oguntala, G.A.; Abd-Alhameed, R.A.; Ali, N.T.; Hu, Y.F.; Noras, J.M.; Eya, N.N.; Elfergani, I.; Rodriguez, J. SmartWall: Novel RFID-enabled ambient human activity recognition using machine learning for unobtrusive health monitoring. IEEE Access 2019, 7, 68022–68033.

- Gaglio, S.; Re, G.L.; Morana, M. Human activity recognition process using 3-D posture data. IEEE Trans. Hum.-Mach. Syst. 2014, 45, 586–597.

- Jiang, S.; Gao, Q.; Liu, H.; Shull, P.B. A novel, co-located EMG-FMG-sensing wearable armband for hand gesture recognition. Sens. Actuators A Phys. 2020, 301, 111738.

- Elniema Abdrahman Abdalla, H. Hand Gesture Recognition Based on Time-of-Flight Sensors. Ph.D. Thesis, Politecnico di Torino, Turin, Italy, 2021.

- Nahler, C.; Plank, H.; Steger, C.; Druml, N. Resource-Constrained Human Presence Detection for Indirect Time-of-Flight Sensors. In Proceedings of the 2021 Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 29 November–1 December 2021; pp. 1–5.

- Hossen, M.A.; Zahir, E.; Ata-E-Rabbi, H.; Azam, M.A.; Rahman, M.H. Developing a Mobile Automated Medical Assistant for Hospitals in Bangladesh. In Proceedings of the 2021 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 10–13 May 2021; pp. 366–372.

- Lin, J.T.; Newquist, C.; Harnett, C. Multitouch Pressure Sensing with Soft Optical Time-of-Flight Sensors. IEEE Trans. Instrum. Meas. 2022, 71, 7000708.

- Bortolan, G.; Christov, I.; Simova, I. Potential of Rule-Based Methods and Deep Learning Architectures for ECG Diagnostics. Diagnostics 2021, 11, 1678.

- Ismail, M.I.M.; Dzyauddin, R.A.; Samsul, S.; Azmi, N.A.; Yamada, Y.; Yakub, M.F.M.; Salleh, N.A.B.A. An RSSI-based Wireless Sensor Node Localisation using Trilateration and Multilateration Methods for Outdoor Environment. arXiv 2019, arXiv:1912.07801.

- Chen, K.; Zhang, D.; Yao, L.; Guo, B.; Yu, Z.; Liu, Y. Deep learning for sensor-based human activity recognition: Overview, challenges, and opportunities. ACM Comput. Surv. (CSUR) 2021, 54, 1–40.

- Aggarwal, J.K.; Cai, Q. Human motion analysis: A review. Comput. Vis. Image Underst. 1999, 73, 428–440.

- Kellokumpu, V.; Pietikäinen, M.; Heikkilä, J. Human activity recognition using sequences of postures. In Proceedings of the MVA, Tsukuba, Japan, 16–18 May 2005; pp. 570–573.

- Münzner, S.; Schmidt, P.; Reiss, A.; Hanselmann, M.; Stiefelhagen, R.; Dürichen, R. CNN-based sensor fusion techniques for multimodal human activity recognition. In Proceedings of the 2017 ACM International Symposium on Wearable Computers, Maui, HI, USA, 11–15 September 2017; pp. 158–165.

- Yang, X.; Cao, R.; Zhou, M.; Xie, L. Temporal-frequency attention-based human activity recognition using commercial WiFi devices. IEEE Access 2020, 8, 137758–137769.

- Köping, L.; Shirahama, K.; Grzegorzek, M. A general framework for sensor-based human activity recognition. Comput. Biol. Med. 2018, 95, 248–260.

- Bustoni, I.A.; Hidayatulloh, I.; Ningtyas, A.; Purwaningsih, A.; Azhari, S. Classification methods performance on human activity recognition. J. Phys. Conf. Ser. 2020, 1456, 012027.

- Gjoreski, H.; Kiprijanovska, I.; Stankoski, S.; Kalabakov, S.; Broulidakis, J.; Nduka, C.; Gjoreski, M. Head-AR: Human Activity Recognition with Head-Mounted IMU Using Weighted Ensemble Learning. In Activity and Behavior Computing; Springer: Singapore, 2021; pp. 153–167.

- Röddiger, T.; Wolffram, D.; Laubenstein, D.; Budde, M.; Beigl, M. Towards respiration rate monitoring using an in-ear headphone inertial measurement unit. In Proceedings of the 1st International Workshop on Earable Computing, London, UK, 9 September 2019; pp. 48–53.

- Kim, M.; Cho, J.; Lee, S.; Jung, Y. IMU sensor-based hand gesture recognition for human-machine interfaces. Sensors 2019, 19, 3827.

- Georgi, M.; Amma, C.; Schultz, T. Recognizing Hand and Finger Gestures with IMU based Motion and EMG based Muscle Activity Sensing. In Biosignals; Citeseer: Princeton, NJ, USA, 2015; pp. 99–108.

- Mummadi, C.K.; Leo, F.P.P.; Verma, K.D.; Kasireddy, S.; Scholl, P.M.; Kempfle, J.; Laerhoven, K.V. Real-time and embedded detection of hand gestures with an IMU-based glove. Informatics 2018, 5, 28.

- Kang, S.W.; Choi, H.; Park, H.I.; Choi, B.G.; Im, H.; Shin, D.; Jung, Y.G.; Lee, J.Y.; Park, H.W.; Park, S.; et al. The development of an IMU integrated clothes for postural monitoring using conductive yarn and interconnecting technology. Sensors 2017, 17, 2560.

- Zhang, Q. Evaluation of a Wearable System for Motion Analysis during Different Exercises. Master’s Thesis, ING School of Industrial and Information Engineering, Milano, Italy, 2019. Available online: https://www.politesi.polimi.it/handle/10589/149029 (accessed on 30 April 2022).

- Wang, Q.; Timmermans, A.; Chen, W.; Jia, J.; Ding, L.; Xiong, L.; Rong, J.; Markopoulos, P. Stroke patients’ acceptance of a smart garment for supporting upper extremity rehabilitation. IEEE J. Transl. Eng. Health Med. 2018, 6, 1–9.

- Kim, H.; Kang, Y.; Valencia, D.R.; Kim, D. An Integrated System for Gait Analysis Using FSRs and an IMU. In Proceedings of the 2018 Second IEEE International Conference on Robotic Computing (IRC), Laguna Hills, CA, USA, 31 January–2 February 2018; pp. 347–351.

- Abdulrahim, K.; Hide, C.; Moore, T.; Hill, C. Aiding MEMS IMU with building heading for indoor pedestrian navigation. In Proceedings of the 2010 Ubiquitous Positioning Indoor Navigation and Location Based Service, Kirkkonummi, Finland, 14–15 October 2010; pp. 1–6.

- Wahjudi, F.; Lin, F.J. IMU-Based Walking Workouts Recognition. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019; pp. 251–256.

- Nagano, H.; Begg, R.K. Shoe-insole technology for injury prevention in walking. Sensors 2018, 18, 1468.

- Cesareo, A.; Previtali, Y.; Biffi, E.; Aliverti, A. Assessment of breathing parameters using an inertial measurement unit (IMU)-based system. Sensors 2019, 19, 88.

- Huang, Y.; Kaufmann, M.; Aksan, E.; Black, M.J.; Hilliges, O.; Pons-Moll, G. Deep inertial poser: Learning to reconstruct human pose from sparse inertial measurements in real time. ACM Trans. Graph. (TOG) 2018, 37, 1–15.

- Younas, J.; Margarito, H.; Bian, S.; Lukowicz, P. Finger Air Writing-Movement Reconstruction with Low-cost IMU Sensor. In Proceedings of the MobiQuitous 2020—17th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Online, 7–9 December 2020; pp. 69–75.

- Li, H.; He, X.; Chen, X.; Fang, Y.; Fang, Q. Wi-motion: A robust human activity recognition using WiFi signals. IEEE Access 2019, 7, 153287–153299.

- Liu, X.; Cao, J.; Tang, S.; Wen, J. Wi-sleep: Contactless sleep monitoring via wifi signals. In Proceedings of the 2014 IEEE Real-Time Systems Symposium, Rome, Italy, 2–5 December 2014; pp. 346–355.

- Wang, F.; Feng, J.; Zhao, Y.; Zhang, X.; Zhang, S.; Han, J. Joint activity recognition and indoor localization with WiFi fingerprints. IEEE Access 2019, 7, 80058–80068.

- Bahle, G.; Fortes Rey, V.; Bian, S.; Bello, H.; Lukowicz, P. Using privacy respecting sound analysis to improve bluetooth based proximity detection for COVID-19 exposure tracing and social distancing. Sensors 2021, 21, 5604.

- Hossain, A.M.; Soh, W.S. A comprehensive study of bluetooth signal parameters for localization. In Proceedings of the 2007 IEEE 18th International Symposium on Personal, Indoor and Mobile Radio Communications, Athens, Greece, 3–7 September 2007; pp. 1–5.

- Zhang, R.; Cao, S. Real-time human motion behavior detection via CNN using mmWave radar. IEEE Sens. Lett. 2018, 3, 1–4.

- Zhao, P.; Lu, C.X.; Wang, B.; Chen, C.; Xie, L.; Wang, M.; Trigoni, N.; Markham, A. Heart rate sensing with a robot mounted mmwave radar. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2812–2818.

- Sun, Y.; Hang, R.; Li, Z.; Jin, M.; Xu, K. Privacy-Preserving Fall Detection with Deep Learning on mmWave Radar Signal. In Proceedings of the 2019 IEEE Visual Communications and Image Processing (VCIP), Sydney, Australia, 1–4 December 2019; pp. 1–4.

- Li, Z.; Lei, Z.; Yan, A.; Solovey, E.; Pahlavan, K. ThuMouse: A micro-gesture cursor input through mmWave radar-based interaction. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 4–6 January 2020; pp. 1–9.

- Cheng, Y.; Zhou, T. UWB indoor positioning algorithm based on TDOA technology. In Proceedings of the 2019 10th International Conference on Information Technology in Medicine and Education (ITME), Qingdao, China, 23–25 August 2019; pp. 777–782.

- Lee, S.; Kim, J.; Moon, N. Random forest and WiFi fingerprint-based indoor location recognition system using smart watch. Hum.-Centric Comput. Inf. Sci. 2019, 9, 1–14.

- Porcino, D.; Hirt, W. Ultra-wideband radio technology: Potential and challenges ahead. IEEE Commun. Mag. 2003, 41, 66–74.

- Bouchard, K.; Maitre, J.; Bertuglia, C.; Gaboury, S. Activity recognition in smart homes using UWB radars. Procedia Comput. Sci. 2020, 170, 10–17.

- Ren, N.; Quan, X.; Cho, S.H. Algorithm for gesture recognition using an IR-UWB radar sensor. J. Comput. Commun. 2016, 4, 95–100.

- Piriyajitakonkij, M.; Warin, P.; Lakhan, P.; Leelaarporn, P.; Kumchaiseemak, N.; Suwajanakorn, S.; Pianpanit, T.; Niparnan, N.; Mukhopadhyay, S.C.; Wilaiprasitporn, T. SleepPoseNet: Multi-view learning for sleep postural transition recognition using UWB. IEEE J. Biomed. Health Inform. 2020, 25, 1305–1314.

- Bharadwaj, R.; Parini, C.; Koul, S.K.; Alomainy, A. Effect of Limb Movements on Compact UWB Wearable Antenna Radiation Performance for Healthcare Monitoring. Prog. Electromagn. Res. 2019, 91, 15–26.

- Qi, J.; Liu, G.P. A robust high-accuracy ultrasound indoor positioning system based on a wireless sensor network. Sensors 2017, 17, 2554.

- Hoeflinger, F.; Saphala, A.; Schott, D.J.; Reindl, L.M.; Schindelhauer, C. Passive indoor-localization using echoes of ultrasound signals. In Proceedings of the 2019 International Conference on Advanced Information Technologies (ICAIT), Yangon, Myanmar, 6–7 November 2019; pp. 60–65.

- Yang, X.; Sun, X.; Zhou, D.; Li, Y.; Liu, H. Towards wearable A-mode ultrasound sensing for real-time finger motion recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1199–1208.

- Mokhtari, G.; Zhang, Q.; Nourbakhsh, G.; Ball, S.; Karunanithi, M. BLUESOUND: A new resident identification sensor—Using ultrasound array and BLE technology for smart home platform. IEEE Sens. J. 2017, 17, 1503–1512.

- Rossi, M.; Feese, S.; Amft, O.; Braune, N.; Martis, S.; Tröster, G. AmbientSense: A real-time ambient sound recognition system for smartphones. In Proceedings of the 2013 IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops), San Diego, CA, USA, 18–22 March 2013; pp. 230–235.

- Garg, S.; Lim, K.M.; Lee, H.P. An averaging method for accurately calibrating smartphone microphones for environmental noise measurement. Appl. Acoust. 2019, 143, 222–228.

- Thiel, B.; Kloch, K.; Lukowicz, P. Sound-based proximity detection with mobile phones. In Proceedings of the Third International Workshop on Sensing Applications on Mobile Phones, Toronto, ON, Canada, 6 November 2012; pp. 1–4.

- Ward, J.A.; Lukowicz, P.; Tröster, G.; Starner, T.E. Activity recognition of assembly tasks using body-worn microphones and accelerometers. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1553–1567.

- Murata, S.; Yara, C.; Kaneta, K.; Ioroi, S.; Tanaka, H. Accurate indoor positioning system using near-ultrasonic sound from a smartphone. In Proceedings of the 2014 Eighth International Conference on Next Generation Mobile Apps, Services and Technologies, Oxford, UK, 10–12 September 2014; pp. 13–18.

- Rossi, M.; Seiter, J.; Amft, O.; Buchmeier, S.; Tröster, G. RoomSense: An indoor positioning system for smartphones using active sound probing. In Proceedings of the 4th Augmented Human International Conference, Stuttgart, Germany, 7–8 March 2013; pp. 89–95.

- Sathyamoorthy, A.J.; Patel, U.; Savle, Y.A.; Paul, M.; Manocha, D. COVID-robot: Monitoring social distancing constraints in crowded scenarios. arXiv 2020, arXiv:2008.06585.

- Lee, Y.H.; Medioni, G. Wearable RGBD indoor navigation system for the blind. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 493–508.

- Kim, K.; Kim, J.; Choi, J.; Kim, J.; Lee, S. Depth camera-based 3D hand gesture controls with immersive tactile feedback for natural mid-air gesture interactions. Sensors 2015, 15, 1022–1046.

- Nagarkoti, A.; Teotia, R.; Mahale, A.K.; Das, P.K. Realtime indoor workout analysis using machine learning & computer vision. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 1440–1443.

- Li, M.; Leung, H. Multi-view depth-based pairwise feature learning for person-person interaction recognition. Multimed. Tools Appl. 2019, 78, 5731–5749.

- Pancholi, S.; Agarwal, R. Development of low cost EMG data acquisition system for Arm Activities Recognition. In Proceedings of the 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 21–24 September 2016; pp. 2465–2469.

- Bangaru, S.S.; Wang, C.; Busam, S.A.; Aghazadeh, F. ANN-based automated scaffold builder activity recognition through wearable EMG and IMU sensors. Autom. Constr. 2021, 126, 103653.

- Zhang, X.; Chen, X.; Li, Y.; Lantz, V.; Wang, K.; Yang, J. A framework for hand gesture recognition based on accelerometer and EMG sensors. IEEE Trans. Syst. Man Cybern.-Part A Syst. Humans 2011, 41, 1064–1076.

- Kim, J.; Mastnik, S.; André, E. EMG-based hand gesture recognition for realtime biosignal interfacing. In Proceedings of the 13th International Conference on Intelligent User Interfaces, Gran Canaria, Spain, 2008; pp. 30–39.

- Benatti, S.; Farella, E.; Benini, L. Towards EMG control interface for smart garments. In Proceedings of the 2014 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 13–17 September 2014; pp. 163–170.

- Ahsan, M.R.; Ibrahimy, M.I.; Khalifa, O.O. EMG signal classification for human computer interaction: A review. Eur. J. Sci. Res. 2009, 33, 480–501.

- Jia, R.; Liu, B. Human daily activity recognition by fusing accelerometer and multi-lead ECG data. In Proceedings of the 2013 IEEE International Conference on Signal Processing, Communication and Computing (ICSPCC 2013), KunMing, China, 5–8 August 2013; pp. 1–4. [Google Scholar]

- Liu, J.; Chen, J.; Jiang, H.; Jia, W.; Lin, Q.; Wang, Z. Activity recognition in wearable ECG monitoring aided by accelerometer data. In Proceedings of the 2018 IEEE international symposium on circuits and systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–4. [Google Scholar]

- Zhang, X.; Yao, L.; Zhang, D.; Wang, X.; Sheng, Q.Z.; Gu, T. Multi-person brain activity recognition via comprehensive EEG signal analysis. In Proceedings of the 14th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Melbourne, Australia, 7–10 November 2017; pp. 28–37. [Google Scholar]

- Kaur, B.; Singh, D.; Roy, P.P. Eyes open and eyes close activity recognition using EEG signals. In Proceedings of the International Conference on Cognitive Computing and Information Processing, Bengaluru, India, 15–16 December 2017; pp. 3–9. [Google Scholar]

- Liu, Y.; Sourina, O.; Nguyen, M.K. Real-time EEG-based human emotion recognition and visualization. In Proceedings of the 2010 International Conference on Cyberworlds, Singapore, 20–22 October 2010; pp. 262–269. [Google Scholar]

- Ishimaru, S.; Kunze, K.; Uema, Y.; Kise, K.; Inami, M.; Tanaka, K. Smarter eyewear: Using commercial EOG glasses for activity recognition. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, Seattle, WA, USA, 13–17 September 2014; pp. 239–242. [Google Scholar]

- Lu, Y.; Zhang, C.; Zhou, B.Y.; Gao, X.P.; Lv, Z. A dual model approach to EOG-based human activity recognition. Biomed. Signal Process. Control 2018, 45, 50–57. [Google Scholar] [CrossRef]

- Palatini, P. Blood pressure behaviour during physical activity. Sport. Med. 1988, 5, 353–374. [Google Scholar] [CrossRef] [PubMed]

- Lu, K.; Yang, L.; Seoane, F.; Abtahi, F.; Forsman, M.; Lindecrantz, K. Fusion of heart rate, respiration and motion measurements from a wearable sensor system to enhance energy expenditure estimation. Sensors 2018, 18, 3092. [Google Scholar] [CrossRef] [Green Version]

- Brouwer, A.M.; van Dam, E.; Van Erp, J.B.; Spangler, D.P.; Brooks, J.R. Improving real-life estimates of emotion based on heart rate: A perspective on taking metabolic heart rate into account. Front. Hum. Neurosci. 2018, 12, 284. [Google Scholar] [CrossRef] [Green Version]

- Li, W.; Yang, X.; Dai, A.; Chen, K. Sleep and wake classification based on heart rate and respiration rate. IOP Conf. Ser. Mater. Sci. Eng. 2018, 428, 012017. [Google Scholar] [CrossRef]

- cheol Jeong, I.; Bychkov, D.; Searson, P.C. Wearable devices for precision medicine and health state monitoring. IEEE Trans. Biomed. Eng. 2018, 66, 1242–1258. [Google Scholar] [CrossRef]

- Tateno, S.; Guan, X.; Cao, R.; Qu, Z. Development of drowsiness detection system based on respiration changes using heart rate monitoring. In Proceedings of the 2018 57th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Nara, Japan, 11–14 September 2018; pp. 1664–1669.

- Lee, M. A Lip-reading Algorithm Using Optical Flow and Properties of Articulatory Phonation. J. Korea Multimed. Soc. 2018, 21, 745–754.

- Gomez-Vilda, P.; Palacios-Alonso, D.; Rodellar-Biarge, V.; Álvarez-Marquina, A.; Nieto-Lluis, V.; Martínez-Olalla, R. Parkinson’s disease monitoring by biomechanical instability of phonation. Neurocomputing 2017, 255, 3–16.

- Benalcázar, M.E.; Motoche, C.; Zea, J.A.; Jaramillo, A.G.; Anchundia, C.E.; Zambrano, P.; Segura, M.; Palacios, F.B.; Pérez, M. Real-time hand gesture recognition using the Myo armband and muscle activity detection. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, 16–20 October 2017; pp. 1–6.

- Li, X.; Hong, K.; Liu, G. Detection of physical stress using facial muscle activity. J. Opt. Technol. 2018, 85, 562–569.

- Caulcrick, C.; Russell, F.; Wilson, S.; Sawade, C.; Vaidyanathan, R. Unilateral Inertial and Muscle Activity Sensor Fusion for Gait Cycle Progress Estimation. In Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob), Enschede, The Netherlands, 26–29 August 2018; pp. 1151–1156.

- Haider, F.; Albert, P.; Luz, S. Automatic Recognition of Low-Back Chronic Pain Level and Protective Movement Behaviour using Physical and Muscle Activity Information. In Proceedings of the 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 834–838.

- Zhang, Y.; Yang, C.; Hudson, S.E.; Harrison, C.; Sample, A. Wall++: Room-scale interactive and context-aware sensing. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–15.

- Cheng, J.; Amft, O.; Lukowicz, P. Active capacitive sensing: Exploring a new wearable sensing modality for activity recognition. In Proceedings of the International Conference on Pervasive Computing, Helsinki, Finland, 17–20 May 2010; pp. 319–336.

- Zhang, Y.; Laput, G.; Harrison, C. Electrick: Low-cost touch sensing using electric field tomography. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1–14.

- Valtonen, M.; Maentausta, J.; Vanhala, J. TileTrack: Capacitive human tracking using floor tiles. In Proceedings of the 2009 IEEE International Conference on Pervasive Computing and Communications, Galveston, TX, USA, 9–13 March 2009; pp. 1–10.

- Noble, D.J.; MacDowell, C.J.; McKinnon, M.L.; Neblett, T.I.; Goolsby, W.N.; Hochman, S. Use of electric field sensors for recording respiration, heart rate, and stereotyped motor behaviors in the rodent home cage. J. Neurosci. Methods 2017, 277, 88–100.

- Wong, W.; Juwono, F.H.; Khoo, B.T.T. Multi-Features Capacitive Hand Gesture Recognition Sensor: A Machine Learning Approach. IEEE Sens. J. 2021, 21, 8441–8450.

- Chen, K.Y.; Lyons, K.; White, S.; Patel, S. uTrack: 3D input using two magnetic sensors. In Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology, St. Andrews, UK, 8–11 October 2013; pp. 237–244.

- Reyes, G.; Wu, J.; Juneja, N.; Goldshtein, M.; Edwards, W.K.; Abowd, G.D.; Starner, T. SynchroWatch: One-handed synchronous smartwatch gestures using correlation and magnetic sensing. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 1–26.

- Lyons, K. 2D input for virtual reality enclosures with magnetic field sensing. In Proceedings of the 2016 ACM International Symposium on Wearable Computers, Heidelberg, Germany, 12–16 September 2016; pp. 176–183.

- Pirkl, G.; Lukowicz, P. Robust, low cost indoor positioning using magnetic resonant coupling. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 431–440.

- Parizi, F.S.; Whitmire, E.; Patel, S. AuraRing: Precise electromagnetic finger tracking. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–28.

- Huang, J.; Mori, T.; Takashima, K.; Hashi, S.; Kitamura, Y. IM6D: Magnetic tracking system with 6-DOF passive markers for dexterous 3D interaction and motion. ACM Trans. Graph. (TOG) 2015, 34, 1–10.

- Bian, S.; Zhou, B.; Lukowicz, P. Social distance monitor with a wearable magnetic field proximity sensor. Sensors 2020, 20, 5101.

- Bian, S.; Zhou, B.; Bello, H.; Lukowicz, P. A wearable magnetic field based proximity sensing system for monitoring COVID-19 social distancing. In Proceedings of the 2020 International Symposium on Wearable Computers, Virtual Event, 12–16 September 2020; pp. 22–26.

- Amft, O.; González, L.I.L.; Lukowicz, P.; Bian, S.; Burggraf, P. Wearables to fight COVID-19: From symptom tracking to contact tracing. IEEE Pervasive Comput. 2020, 19, 53–60.

- Bian, S.; Hevesi, P.; Christensen, L.; Lukowicz, P. Induced Magnetic Field-Based Indoor Positioning System for Underwater Environments. Sensors 2021, 21, 2218.

- Kindratenko, V.V.; Sherman, W.R. Neural network-based calibration of electromagnetic tracking systems. Virtual Real. 2005, 9, 70–78.

- Shu, L.; Hua, T.; Wang, Y.; Li, Q.; Feng, D.D.; Tao, X. In-shoe plantar pressure measurement and analysis system based on fabric pressure sensing array. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 767–775.

- Zhou, B.; Sundholm, M.; Cheng, J.; Cruz, H.; Lukowicz, P. Measuring muscle activities during gym exercises with textile pressure mapping sensors. Pervasive Mob. Comput. 2017, 38, 331–345.

- Kaddoura, Y.; King, J.; Helal, A. Cost-precision tradeoffs in unencumbered floor-based indoor location tracking. In Proceedings of the Third International Conference on Smart Homes and Health Telematics (ICOST), Montreal, QC, Canada, 4–6 July 2005.

- Nakane, H.; Toyama, J.; Kudo, M. Fatigue detection using a pressure sensor chair. In Proceedings of the 2011 IEEE International Conference on Granular Computing, Taiwan, China, 8–10 November 2011; pp. 490–495.

- Goetschius, J.; Feger, M.A.; Hertel, J.; Hart, J.M. Validating center-of-pressure balance measurements using the MatScan® pressure mat. J. Sport Rehabil. 2018, 27, 1–14.

- Aliau Bonet, C.; Pallàs Areny, R. A fast method to estimate body capacitance to ground. In Proceedings of the XX IMEKO World Congress 2012, Busan, Korea, 9–14 September 2012; pp. 1–4.

- Aliau-Bonet, C.; Pallas-Areny, R. A novel method to estimate body capacitance to ground at mid frequencies. IEEE Trans. Instrum. Meas. 2013, 62, 2519–2525.

- Buller, W.; Wilson, B. Measurement and modeling mutual capacitance of electrical wiring and humans. IEEE Trans. Instrum. Meas. 2006, 55, 1519–1522.

- Bian, S.; Lukowicz, P. A Systematic Study of the Influence of Various User Specific and Environmental Factors on Wearable Human Body Capacitance Sensing. In Proceedings of the EAI International Conference on Body Area Networks, Virtual Event, 25–26 October 2021; pp. 247–274.

- Goad, N.; Gawkrodger, D. Ambient humidity and the skin: The impact of air humidity in healthy and diseased states. J. Eur. Acad. Dermatol. Venereol. 2016, 30, 1285–1294.

- Egawa, M.; Oguri, M.; Kuwahara, T.; Takahashi, M. Effect of exposure of human skin to a dry environment. Skin Res. Technol. 2002, 8, 212–218.

- Jonassen, N. Human body capacitance: Static or dynamic concept? [ESD]. In Proceedings of the Electrical Overstress/Electrostatic Discharge Symposium 1998, Reno, NV, USA, 6–8 October 1998; pp. 111–117.

- Bian, S.; Yuan, S.; Rey, V.F.; Lukowicz, P. Using human body capacitance sensing to monitor leg motion dominated activities with a wrist worn device. In Proceedings of the Sensor- and Video-Based Activity and Behavior Computing: 3rd International Conference on Activity and Behavior Computing (ABC 2021), Virtual Event, 20–22 October 2021; p. 81.

- Cohn, G.; Morris, D.; Patel, S.; Tan, D. Humantenna: Using the body as an antenna for real-time whole-body interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 1901–1910.

- Bian, S.; Rey, V.F.; Younas, J.; Lukowicz, P. Wrist-Worn Capacitive Sensor for Activity and Physical Collaboration Recognition. In Proceedings of the 2019 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kyoto, Japan, 11–15 March 2019; pp. 261–266.

- Bian, S.; Lukowicz, P. Capacitive Sensing Based On-board Hand Gesture Recognition with TinyML. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2021 ACM International Symposium on Wearable Computers, Virtual Event, 21–26 September 2021; pp. 4–5.

- Cohn, G.; Gupta, S.; Lee, T.J.; Morris, D.; Smith, J.R.; Reynolds, M.S.; Tan, D.S.; Patel, S.N. An ultra-low-power human body motion sensor using static electric field sensing. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 99–102.

- Pouryazdan, A.; Prance, R.J.; Prance, H.; Roggen, D. Wearable electric potential sensing: A new modality sensing hair touch and restless leg movement. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, Heidelberg, Germany, 12–16 September 2016; pp. 846–850.

- von Wilmsdorff, J.; Kirchbuchner, F.; Fu, B.; Braun, A.; Kuijper, A. An exploratory study on electric field sensing. In Proceedings of the European Conference on Ambient Intelligence, Malaga, Spain, 26–28 April 2017; pp. 247–262.

- Bian, S.; Rey, V.F.; Hevesi, P.; Lukowicz, P. Passive Capacitive based Approach for Full Body Gym Workout Recognition and Counting. In Proceedings of the 2019 IEEE International Conference on Pervasive Computing and Communications (PerCom), Kyoto, Japan, 11–15 March 2019; pp. 1–10.

- Yang, D.; Xu, B.; Rao, K.; Sheng, W. Passive infrared (PIR)-based indoor position tracking for smart homes using accessibility maps and a-star algorithm. Sensors 2018, 18, 332.

- Yang, B.; Luo, J.; Liu, Q. A novel low-cost and small-size human tracking system with pyroelectric infrared sensor mesh network. Infrared Phys. Technol. 2014, 63, 147–156.

- Kashimoto, Y.; Hata, K.; Suwa, H.; Fujimoto, M.; Arakawa, Y.; Shigezumi, T.; Komiya, K.; Konishi, K.; Yasumoto, K. Low-cost and device-free activity recognition system with energy harvesting PIR and door sensors. In Adjunct Proceedings of the 13th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Hiroshima, Japan, 28 November–1 December 2016; pp. 6–11.

- Kashimoto, Y.; Fujiwara, M.; Fujimoto, M.; Suwa, H.; Arakawa, Y.; Yasumoto, K. ALPAS: Analog-PIR-sensor-based activity recognition system in smarthome. In Proceedings of the 2017 IEEE 31st International Conference on Advanced Information Networking and Applications (AINA), Taiwan, China, 27–29 March 2017; pp. 880–885.

- Naik, K.; Pandit, T.; Naik, N.; Shah, P. Activity Recognition in Residential Spaces with Internet of Things Devices and Thermal Imaging. Sensors 2021, 21, 988.

- Hossen, J.; Jacobs, E.L.; Chowdhury, F.K. Activity recognition in thermal infrared video. In Proceedings of the SoutheastCon 2015, Fort Lauderdale, FL, USA, 9–12 April 2015; pp. 1–2.

- Chudecka, M.; Lubkowska, A.; Leźnicka, K.; Krupecki, K. The use of thermal imaging in the evaluation of the symmetry of muscle activity in various types of exercises (symmetrical and asymmetrical). J. Hum. Kinet. 2015, 49, 141.

- Al-Khalidi, F.; Saatchi, R.; Elphick, H.; Burke, D. An evaluation of thermal imaging based respiration rate monitoring in children. Am. J. Eng. Appl. Sci. 2011, 4, 586–597.

- Ruminski, J.; Kwasniewska, A. Evaluation of respiration rate using thermal imaging in mobile conditions. In Application of Infrared to Biomedical Sciences; Springer: Singapore, 2017; pp. 311–346.

- Uddin, M.Z.; Torresen, J. A deep learning-based human activity recognition in darkness. In Proceedings of the 2018 Colour and Visual Computing Symposium (CVCS), Gjovik, Norway, 19–20 September 2018; pp. 1–5.

- Alsaify, B.A.; Almazari, M.M.; Alazrai, R.; Daoud, M.I. A dataset for Wi-Fi-based human activity recognition in line-of-sight and non-line-of-sight indoor environments. Data Brief 2020, 33, 106534.

- Guo, L.; Wang, L.; Lin, C.; Liu, J.; Lu, B.; Fang, J.; Liu, Z.; Shan, Z.; Yang, J.; Guo, S. WiAR: A public dataset for WiFi-based activity recognition. IEEE Access 2019, 7, 154935–154945.

- Tian, J.; Yongkun, S.; Yongpeng, D.; Xikun, H.; Yongping, S.; Xiaolong, Z.; Zhifeng, Q. UWB-HA4D-1.0: An Ultra-wideband Radar Human Activity 4D Imaging Dataset. Lei Da Xue Bao 2022, 11, 27–39.

- Delamare, M.; Duval, F.; Boutteau, R. A new dataset of people flow in an industrial site with UWB and motion capture systems. Sensors 2020, 20, 4511.

- Ahmed, S.; Wang, D.; Park, J.; Cho, S.H. UWB-gestures, a public dataset of dynamic hand gestures acquired using impulse radar sensors. Sci. Data 2021, 8, 1–9.

- Singh, A.D.; Sandha, S.S.; Garcia, L.; Srivastava, M. RadHAR: Human activity recognition from point clouds generated through a millimeter-wave radar. In Proceedings of the 3rd ACM Workshop on Millimeter-wave Networks and Sensing Systems, Los Cabos, Mexico, 25 October 2019; pp. 51–56.

- Liu, H.; Zhou, A.; Dong, Z.; Sun, Y.; Zhang, J.; Liu, L.; Ma, H.; Liu, J.; Yang, N. M-gesture: Person-independent real-time in-air gesture recognition using commodity millimeter wave radar. IEEE Internet Things J. 2021, 9, 3397–3415.

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; et al. The Kinetics human action video dataset. arXiv 2017, arXiv:1705.06950.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

- Chaquet, J.M.; Carmona, E.J.; Fernández-Caballero, A. A survey of video datasets for human action and activity recognition. Comput. Vis. Image Underst. 2013, 117, 633–659.

- Mohino-Herranz, I.; Gil-Pita, R.; Rosa-Zurera, M.; Seoane, F. Activity recognition using wearable physiological measurements: Selection of features from a comprehensive literature study. Sensors 2019, 19, 5524.

- Casale, P.; Pujol, O.; Radeva, P. Personalization and user verification in wearable systems using biometric walking patterns. Pers. Ubiquitous Comput. 2012, 16, 563–580.

- Zhang, M.; Sawchuk, A.A. USC-HAD: A daily activity dataset for ubiquitous activity recognition using wearable sensors. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 1036–1043.

- Hanley, D.; Faustino, A.B.; Zelman, S.D.; Degenhardt, D.A.; Bretl, T. MagPIE: A dataset for indoor positioning with magnetic anomalies. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017; pp. 1–8.

- zhaxidelebsz. Gym Workouts Data Set. 2021. Available online: https://github.com/zhaxidele/Toolkit-for-HBC-sensing (accessed on 30 April 2022).

- Pouyan, M.B.; Birjandtalab, J.; Heydarzadeh, M.; Nourani, M.; Ostadabbas, S. A pressure map dataset for posture and subject analytics. In Proceedings of the 2017 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), Orlando, FL, USA, 16–19 February 2017; pp. 65–68.

- Chatzaki, C.; Skaramagkas, V.; Tachos, N.; Christodoulakis, G.; Maniadi, E.; Kefalopoulou, Z.; Fotiadis, D.I.; Tsiknakis, M. The smart-insole dataset: Gait analysis using wearable sensors with a focus on elderly and Parkinson’s patients. Sensors 2021, 21, 2821.

- Assa, A.; Janabi-Sharifi, F. A Kalman Filter-Based Framework for Enhanced Sensor Fusion. IEEE Sens. J. 2015, 15, 3281–3292.

- Han, J.; Li, M.; Li, H.; Li, C.; Ye, J.; Yang, B. Pt-poly(L-lactic acid) microelectrode-based microsensor for in situ glucose detection in sweat. Biosens. Bioelectron. 2020, 170, 112675.

- Cheng, S.; Gu, Z.; Zhou, L.; Hao, M.; An, H.; Song, K.; Wu, X.; Zhang, K.; Zhao, Z.; Dong, Y.; et al. Recent Progress in Intelligent Wearable Sensors for Health Monitoring and Wound Healing Based on Biofluids. Front. Bioeng. Biotechnol. 2021, 9, 765987.

| Focused Subject | Ref | Year | Contribution |
|---|---|---|---|
| Device-free sensors | [4] | 2020 | |
| Full-stack (sensors and algorithms) | [7] | 2020 | |
| Overall sensors | [8] | 2020 | |
| Smartphone sensors | [5] | 2019 | |
| Surveillance video | [9] | 2019 | |
| Radar sensors | [6] | 2019 | |
| Bespoke sensors in smart home | [10] | 2017 | |
| Vision-based | [11] | 2017 | |
| WiFi-based | [12] | 2016 | |
| Non-invasive sensors | [13] | 2016 | |
| Vision-based | [14] | 2015 | |
| Wearable sensors | [15] | 2014 | |

| Modality | Cost (USD) | Power Level | Active/Passive | Privacy Concern | Compute Load | Robustness | Target | Typical Application | Comment | Accessible Dataset |
|---|---|---|---|---|---|---|---|---|---|---|
| WiFi | tens | ≈tens of W | active | no | medium | low | where, what | positioning, ADL, ambient intelligence | pervasiveness, environmental sensitivity | [163,164] |
| UWB | tens | ≈mW | active | no | low | low | where, what | positioning, proximity, ADL, gesture recognition, ambient intelligence | multipath-resistant, high accuracy, costly for massive consumer usage | [165,166,167] |
| mmWave | tens | ≈W | active | no | medium | low | where, what, how | positioning, proximity, ADL, gesture recognition, health monitoring, ambient intelligence | high accuracy, low power efficiency for massive consumer usage | [168,169] |
| Ultrasonic | hundreds | ≈mW to W | active | no | low | low | where, what | positioning, proximity, ambient intelligence | high accuracy, weak robustness | - |
| Optic | tens of hundreds | ≈W and above | passive | yes | high | medium | where, what, how | positioning, proximity, ADL, gait analysis, gesture recognition, surveillance | comprehensive approach, high resource consumption | [170,171,172] |
| ExG | hundreds | ≈tens of mW | passive | no | medium | high | how, what | sports, healthcare monitoring, ADL | high resolution, noise sensitive | [173] |
| IMU | a few | ≈mW | passive | no | high | medium | where, what | positioning, ADL, gesture recognition, healthcare monitoring, gait analysis, sports | dominant sensing modality, accumulated bias | [174,175] |
| Magnetic Field (AC) | tens | ≈hundreds of mW | active | no | low | high | where, what | positioning, proximity | high robustness, limited detection range | - |
| Magnetic Field (DC) | a few | ≈mW | passive | no | low | high | what | proximity, gesture recognition | high accuracy, short detection range | [176] |
| Electric Field (active) | tens | ≈mW | active | no | low | low | where, what | positioning, proximity, ambient intelligence | high sensitivity, noise sensitive | - |
| Electric Field (passive) | a few | sub-mW | passive | no | low | low | where, what | positioning, proximity, sports, gait analysis, ambient intelligence | high sensitivity, noise sensitive | [177] |
| Gravitational Field | tens of hundreds | area dependent | passive | no | depends | high | where, what | positioning, sports, gait analysis, ambient intelligence | versatility/customizability, costly maintenance | [178,179] |
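The modality comparison table lends itself to a simple rule-based shortlist when choosing sensors for a new HAR task. The following Python sketch is a minimal illustration under stated assumptions, not a method proposed in this survey: it transcribes only four of the table's columns (signal source, privacy concern, robustness, and target) and filters rows by user-chosen constraints. The `Modality` record, the `row` and `shortlist` helpers, and the ordinal ranking low < medium < high for robustness are hypothetical choices made for this example.

```python
from dataclasses import dataclass

# Assumed ordinal ranking of the table's qualitative robustness levels.
LEVEL = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class Modality:
    """One row of the comparison table, reduced to the columns used for filtering."""
    name: str
    active: bool           # True if the sensing system emits the measured signal
    privacy_concern: bool  # True if more personal information than needed is recorded
    robustness: str        # "low", "medium" or "high"
    targets: frozenset     # subset of {"where", "what", "how"}


def row(name, signal, privacy, robustness, *targets):
    """Hypothetical helper: build a Modality from the table's textual cell values."""
    return Modality(name, signal == "active", privacy == "yes", robustness, frozenset(targets))


# Transcription of the Active/Passive, Privacy Concern, Robustness and Target columns.
TABLE = [
    row("WiFi", "active", "no", "low", "where", "what"),
    row("UWB", "active", "no", "low", "where", "what"),
    row("mmWave", "active", "no", "low", "where", "what", "how"),
    row("Ultrasonic", "active", "no", "low", "where", "what"),
    row("Optic", "passive", "yes", "medium", "where", "what", "how"),
    row("ExG", "passive", "no", "high", "how", "what"),
    row("IMU", "passive", "no", "medium", "where", "what"),
    row("Magnetic Field (AC)", "active", "no", "high", "where", "what"),
    row("Magnetic Field (DC)", "passive", "no", "high", "what"),
    row("Electric Field (active)", "active", "no", "low", "where", "what"),
    row("Electric Field (passive)", "passive", "no", "low", "where", "what"),
    row("Gravitational Field", "passive", "no", "high", "where", "what"),
]


def shortlist(table, target, passive_only=False, no_privacy_concern=False, min_robustness="low"):
    """Return, in table order, the names of modalities that serve `target` and meet the constraints."""
    return [
        m.name
        for m in table
        if target in m.targets
        and (not passive_only or not m.active)
        and (not no_privacy_concern or not m.privacy_concern)
        and LEVEL[m.robustness] >= LEVEL[min_robustness]
    ]
```

For example, `shortlist(TABLE, "how")` returns `["mmWave", "Optic", "ExG"]`, while restricting the "what" target to passive, privacy-preserving, highly robust modalities with `shortlist(TABLE, "what", passive_only=True, no_privacy_concern=True, min_robustness="high")` returns `["ExG", "Magnetic Field (DC)", "Gravitational Field"]`. Columns such as cost or power level could be added in the same way, but they would first need an explicit ordering of their qualitative values.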

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bian, S.; Liu, M.; Zhou, B.; Lukowicz, P. The State-of-the-Art Sensing Techniques in Human Activity Recognition: A Survey. Sensors 2022, 22, 4596. https://doi.org/10.3390/s22124596
