Predicting Bulk Average Velocity with Rigid Vegetation in Open Channels Using Tree-Based Machine Learning: A Novel Approach Using Explainable Artificial Intelligence

Abstract

:1. Introduction

2. Explainable Artificial Intelligence (XAI)

SHAP (Shapley Additive Explanations)

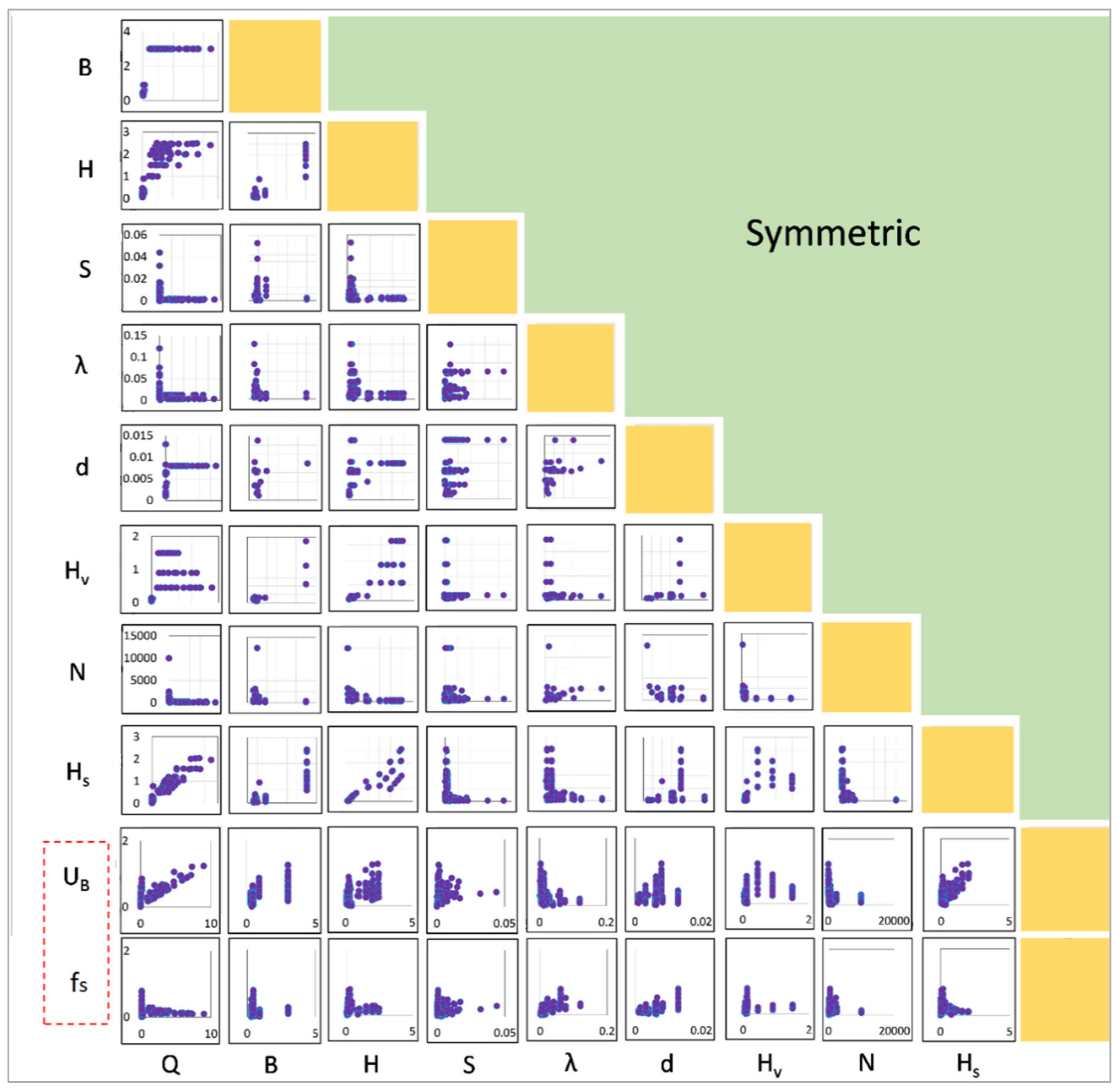

3. Data Description

4. Machine Learning Models

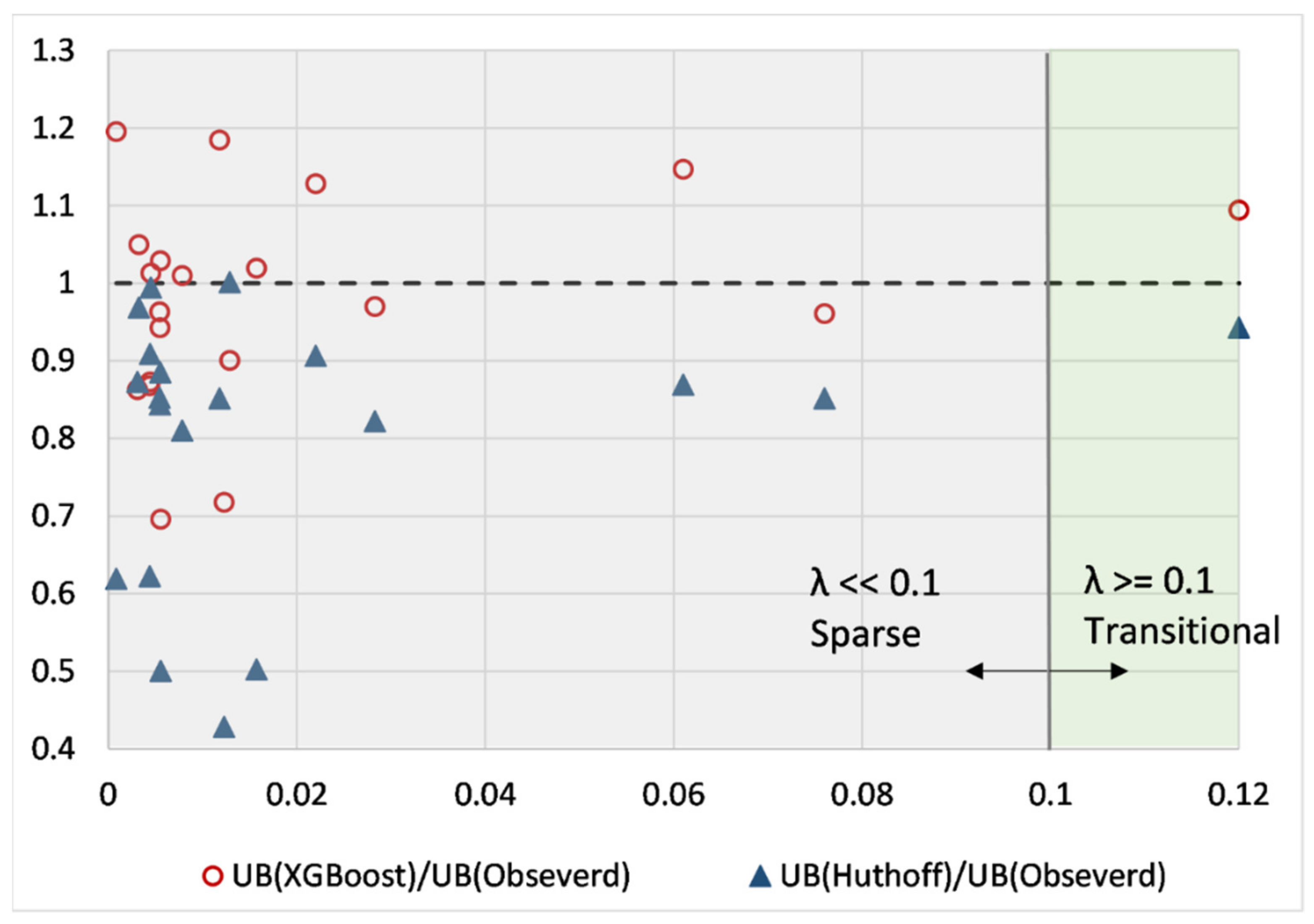

4.1. Decision Tree Regressor

4.2. Extra Tree Regressor

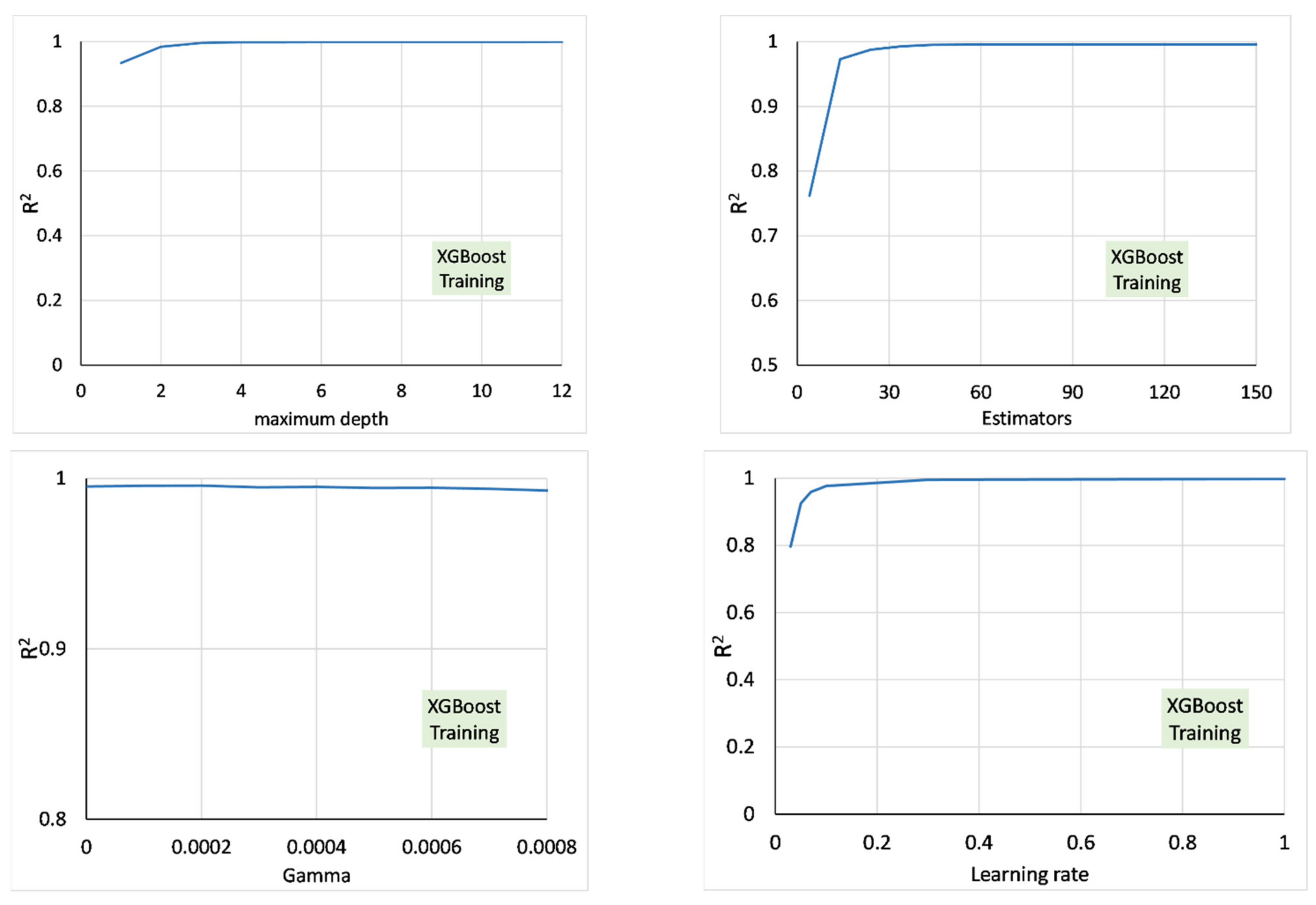

4.3. Extreme Gradient Boosting Regressor (XGBoost)

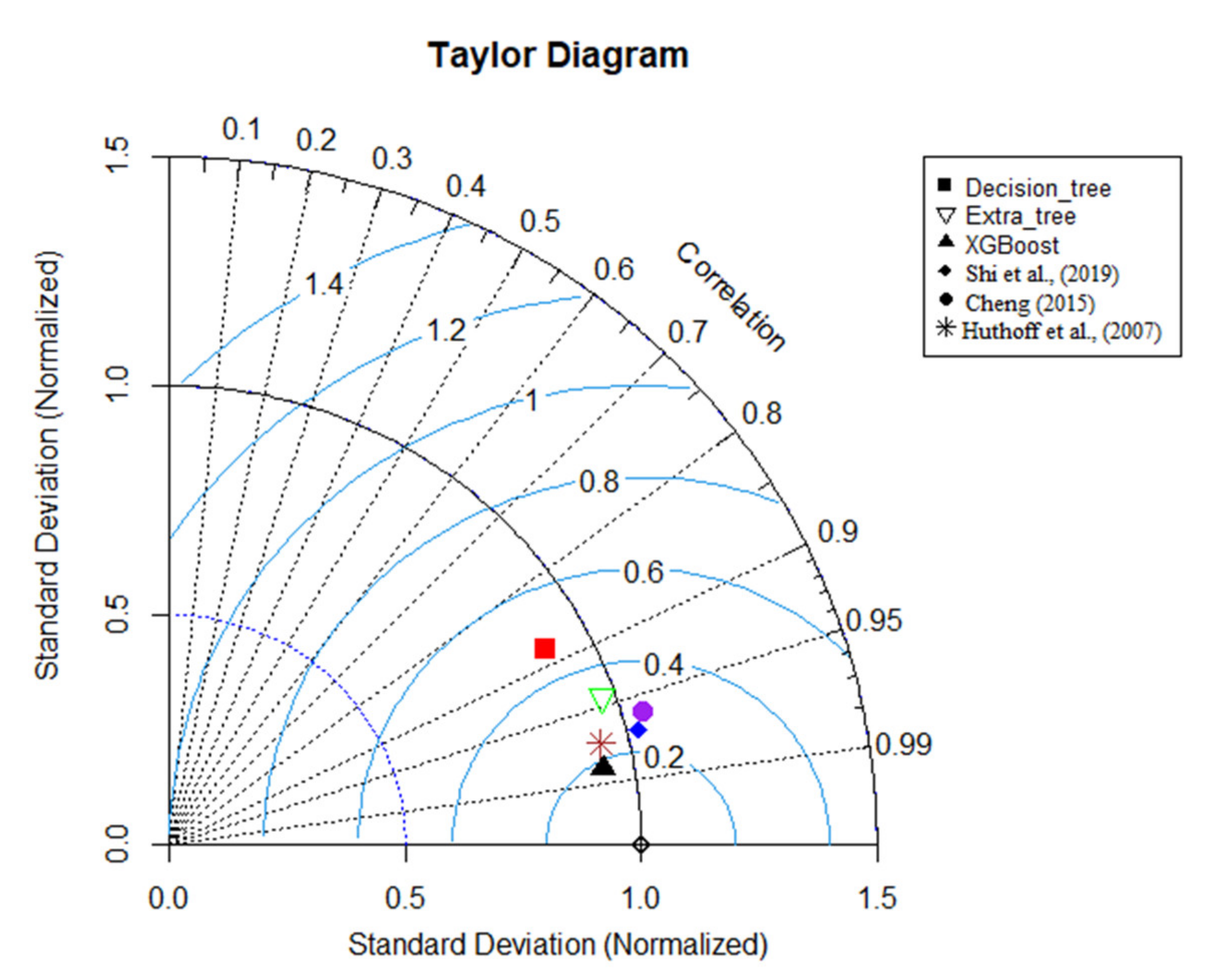

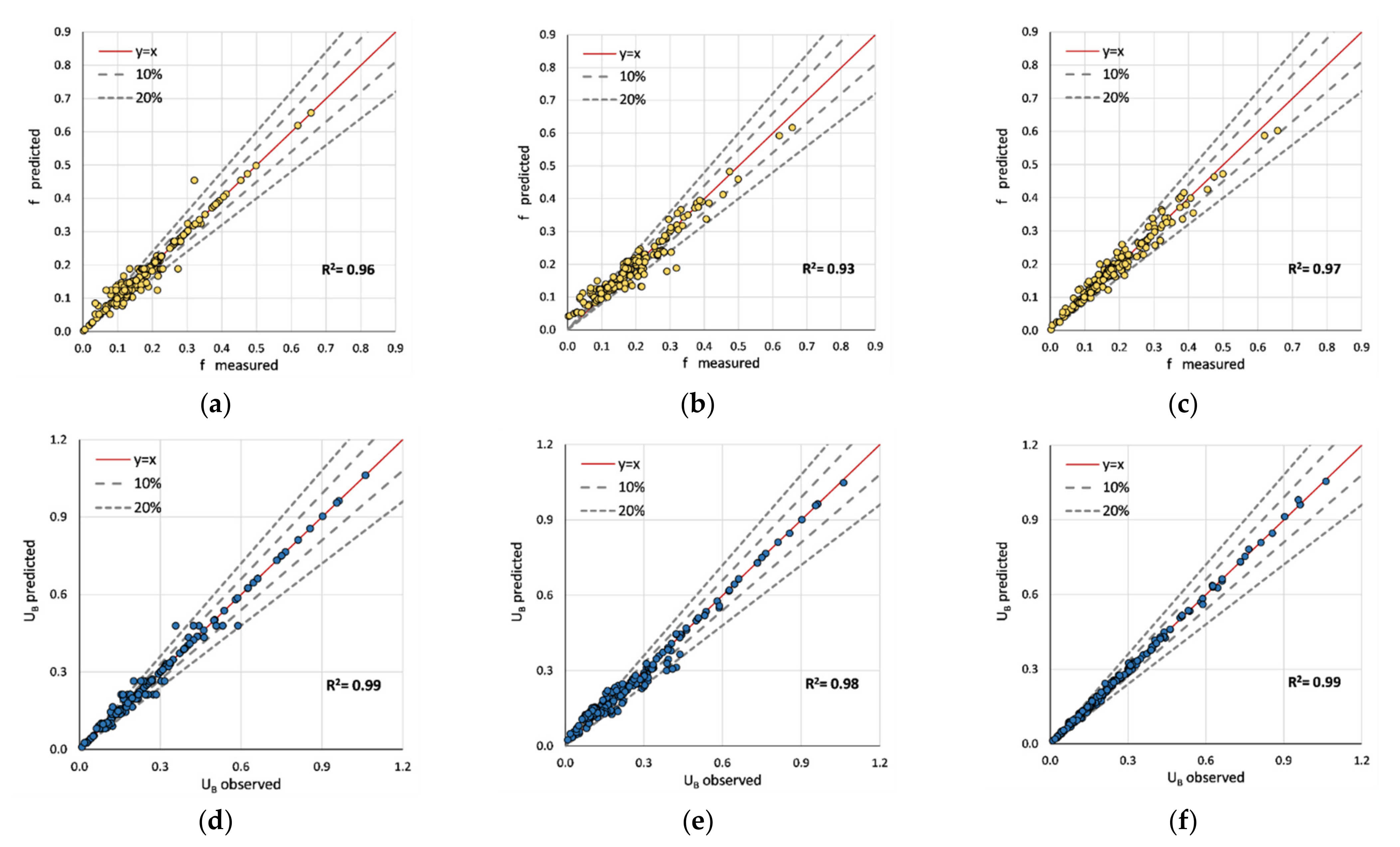

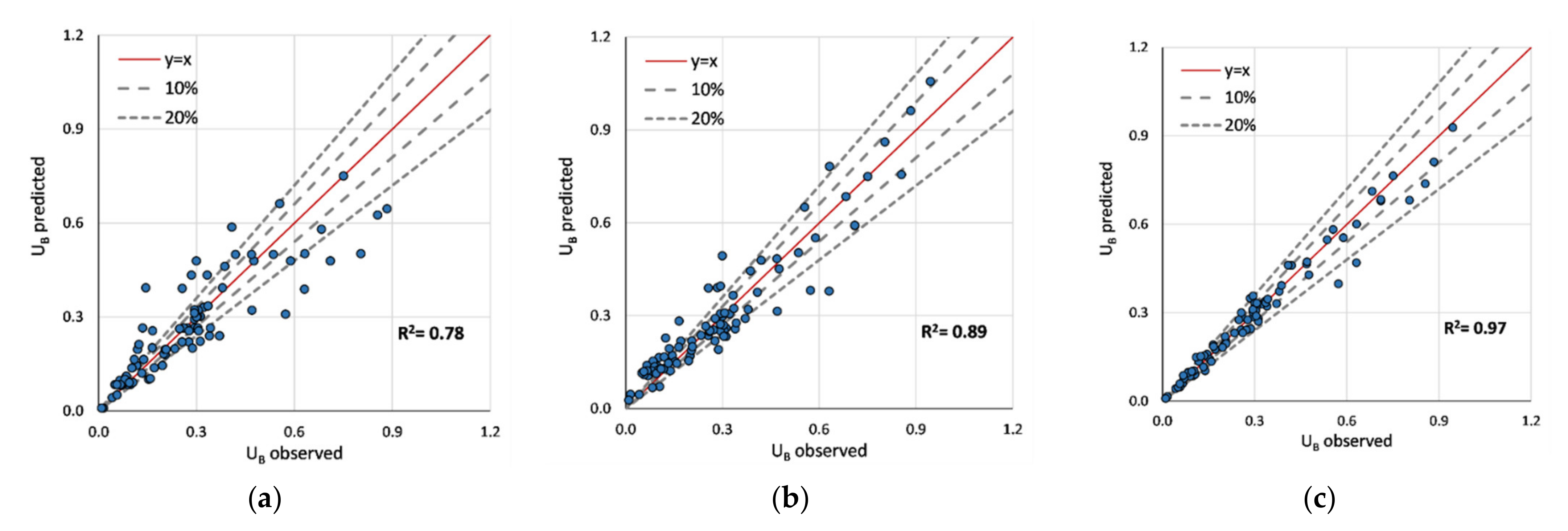

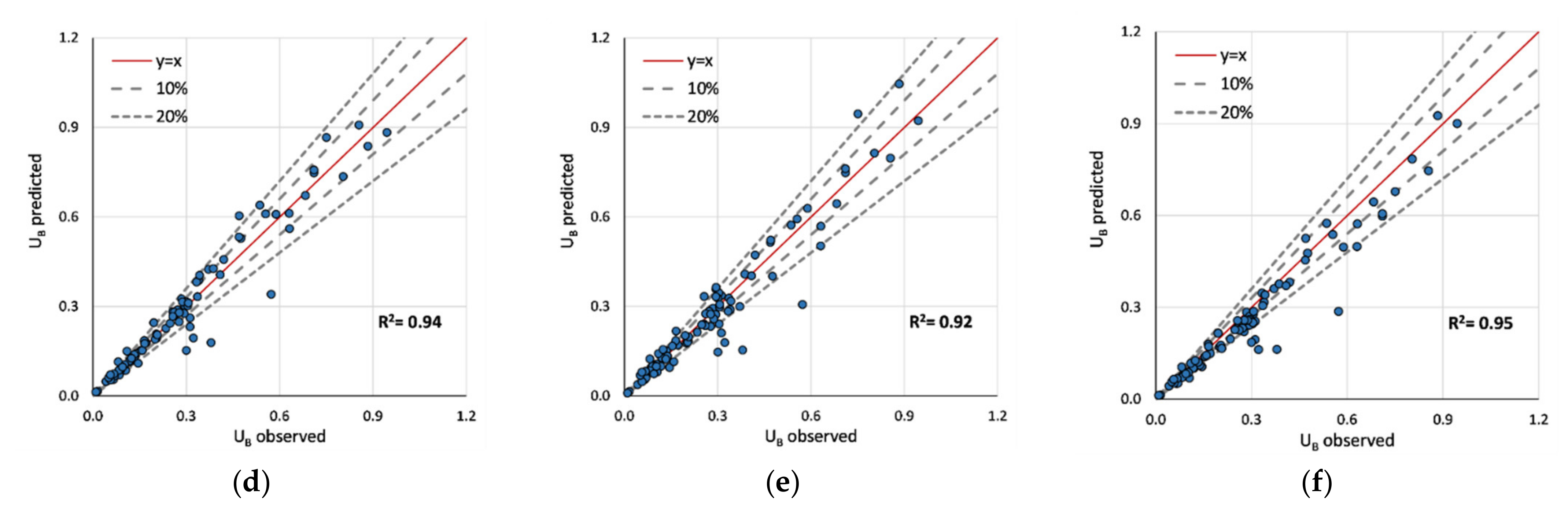

5. Performance Evaluation of Tree-Based Models

6. Application of XAI for Model Predictions

6.1. Intrinsic Model Explanation

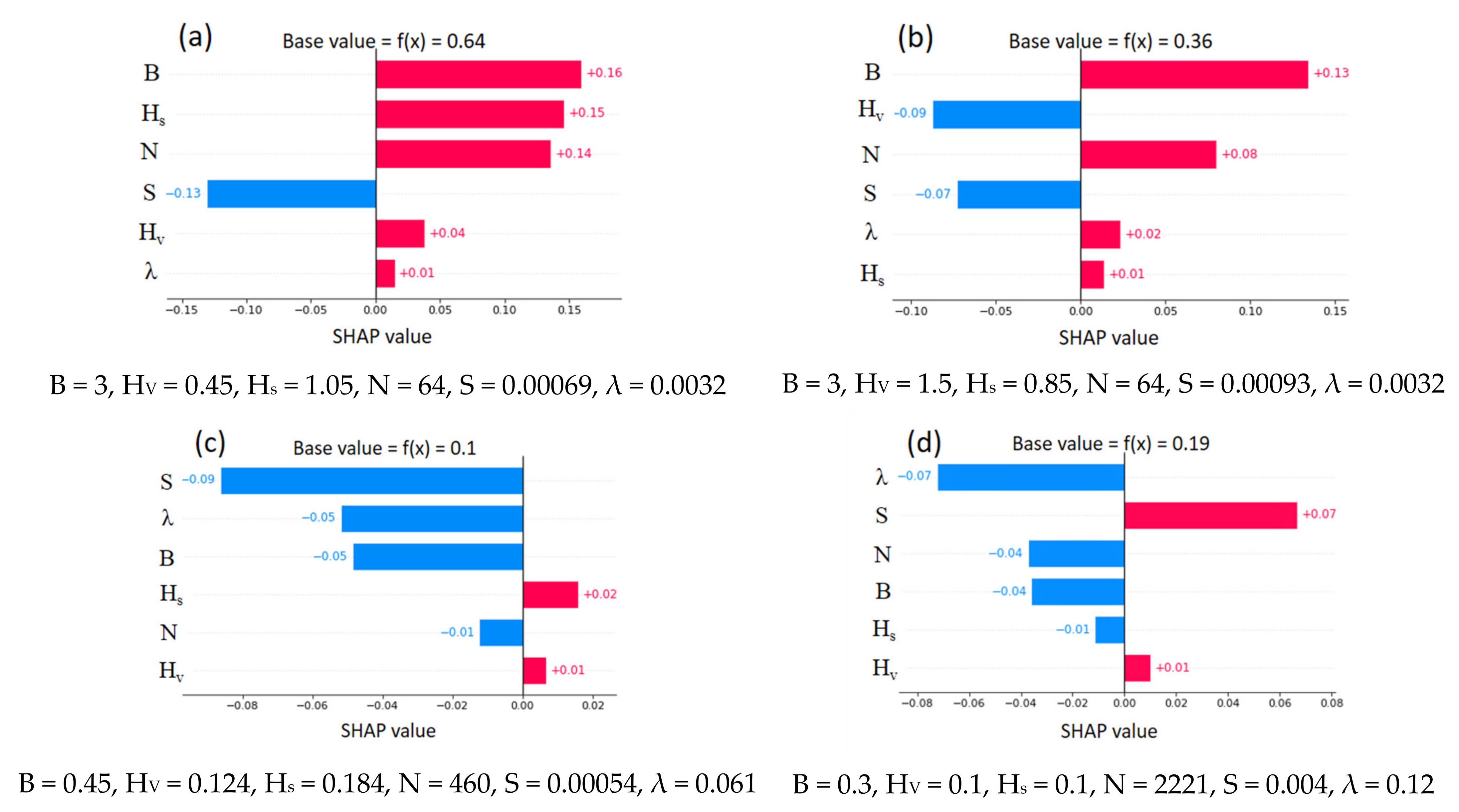

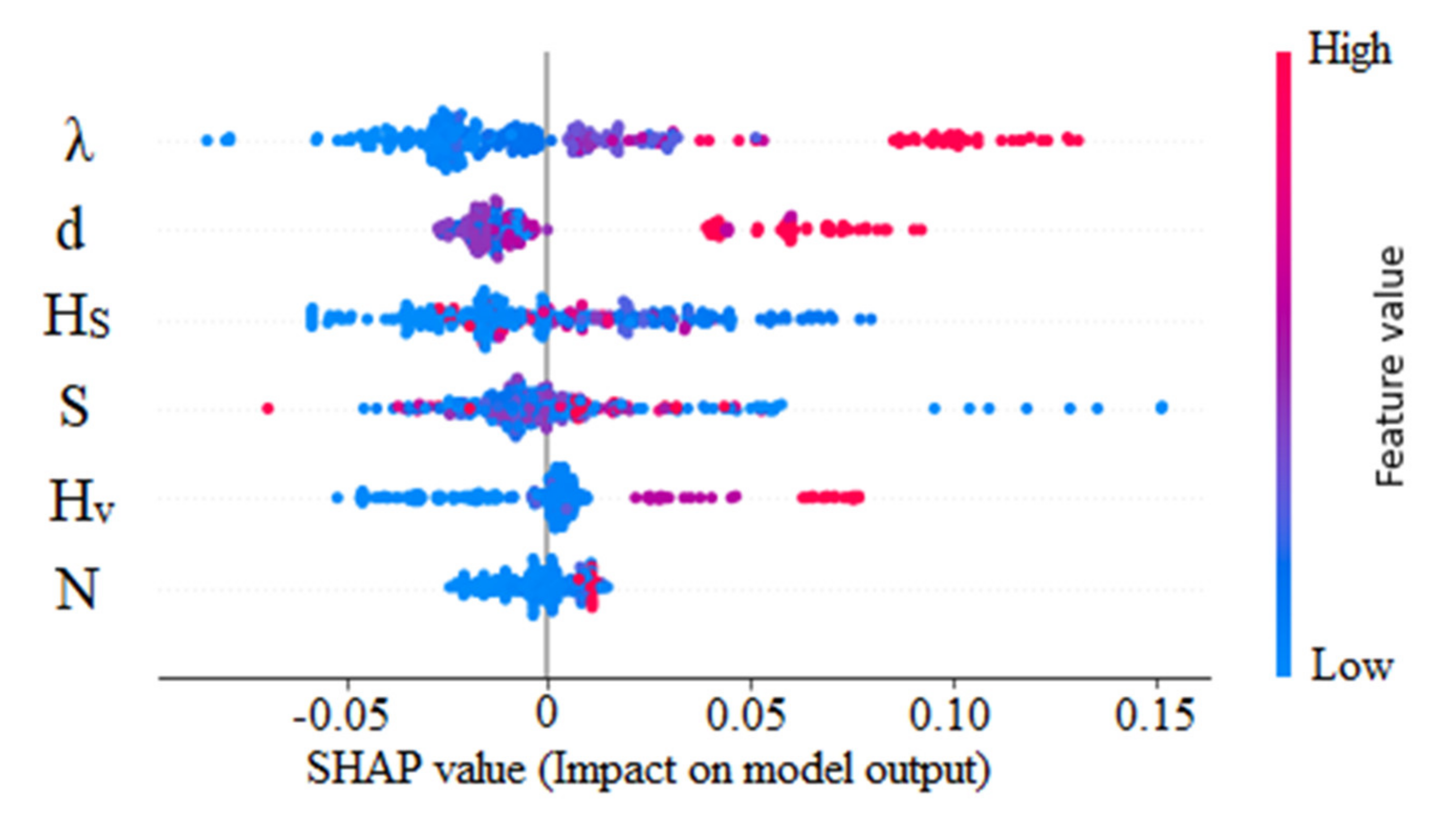

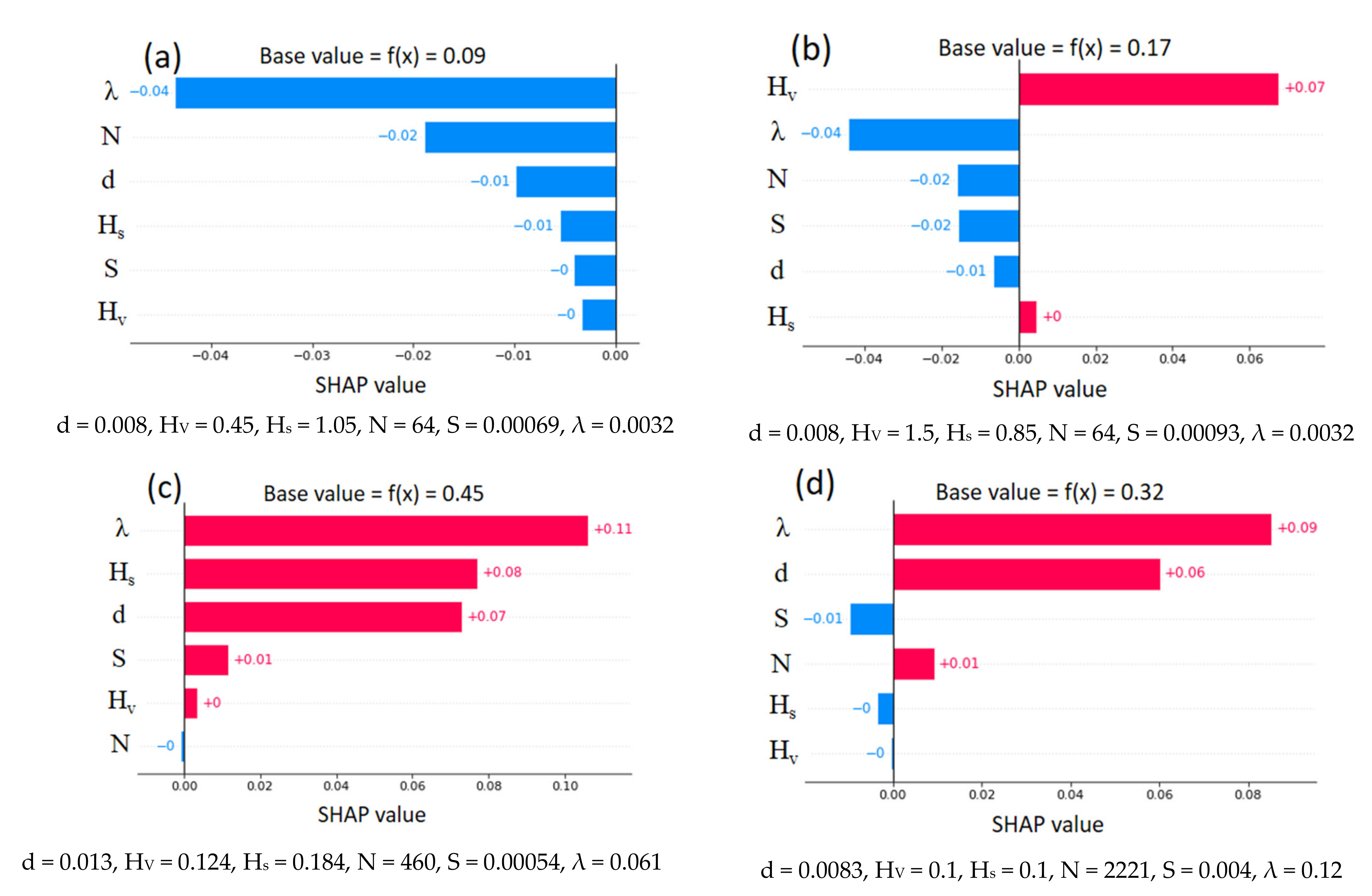

6.2. Post-Hoc Explanation

7. Conclusions

- Ordinary (decision tree) and ensemble tree-based models (extra tree and XGBoost) are accurate in predicting the bulk average velocity (UB). However, XGBoost showcased a superior performance, even when compared to existing regression models (R = 0.984). Further, the XGBoost model is accurate in predicting the friction coefficient of the surface layer (fS) with an accuracy of R = 0.92. Compared to existing regression models, XGBoost provides consistent predictions under sparse vegetation conditions (λ << 0.1). However, as a result of the complex tree structure, a post-hoc explanation was required to elucidate the XGBoost predictions.

- SHAP revealed the inner-working of the XGBoost model and the underlying reasoning behind respective predictions. Explanations present the contribution of each feature in a model in whole and instances, identifying the dominant parameters. SHAP provides the causality of predictions compared to existing complex regression models without sacrificing either the accuracy or complexity of ML models. Knowledge obtained through SHAP can be used to validate models using experimental data. For example, SHAP explanations adhere to what is generally observed in complex flow with rigid vegetation. Therefore, we believe that it will improve end-users’ and ”domain experts’” trust in implementing ML in hydrology-related studies.

8. Limitation and Future Work

- The work proposed was focused on open channel flow with rigid vegetation. However, results do not rule out methods to be used with flexible vegetation. A separate study can be carried out using experimental data and explainable ML. It provides a great opportunity to explain the underlying reasoning behind complex applications. Further, the ability of XAI and ML can be explored in hydrology-related applications.

- We suggested tree-based ordinary and ensemble methods as the optimization is more convenient. Further, these models follow a deterministic and human-comprehensible process compared to a neural network. However, several researchers have already used ANN models for hydrology-related studies. Therefore, we suggest examining the performance of advanced ML architectures, such as deep neural networks, generative adversarial networks (GAN), and artificial neural networks (ANN), for the proposed work. These studies can be combined with XAI to obtain the inner workings of the model to improve end-users’ and domain experts’ trust in these advanced ML models.

- It is important to evaluate different explanation models other than SHAP. For example, Moradi and Samweld [69] reported that the explanation process of LIME is markedly different from that of SHAP. The knowledge of different explanation (post-hoc) methods will assist in comparing a set of obtained predictions (feature importance).

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Hyperpatameter Tuning

| Decision Tree | Extra Tree | XGBoost | |||

|---|---|---|---|---|---|

| Hyperparameter | Optimized/Assigned Value | Hyperparameter | Optimized/Assigned Value | Hyperparameter | Optimized/Assigned Value |

| criterion | Mean square error | criterion | Mean square error | Maximum depth | 3 |

| splitter | Best | Maximum depth | 8 | Gamma | 0.0002 |

| Maximum depth | 8 | Minimum samples leaf | 2 | Learning rate | 0.3 |

| Minimum samples leaf | 2 | Minimum sample split | 2 | Number of Estimators | 50 |

| Minimum sample split | 2 | Number of Estimators | 50 | Random state | 154 |

| Maximum Features | 5 | Bootstrap | FALSE | Reg_Alpha | 0.0001 |

| Minimum impurity decrease | 0 | Minimum impurity decrease | 0 | Base score | 0.5 |

| Random state | 5464 | Random state | 5464 | ||

| CC alpha | 0 | Number of jobs | none | ||

Appendix B

References

- Huai, W.X.; Zeng, Y.H.; Xu, Z.G.; Yang, Z.H. Three-layer model for vertical velocity distribution in open channel flow with submerged rigid vegetation. Adv. Water Resour. 2009, 32, 487–492. [Google Scholar] [CrossRef]

- Nikora, N.; Nikora, V.; O’Donoghue, T. Velocity Profiles in Vegetated Open-Channel Flows: Combined Effects of Multiple Mechanisms. J. Hydraul. Eng. 2013, 139, 1021–1032. [Google Scholar] [CrossRef]

- Tang, H.; Tian, Z.; Yan, J.; Yuan, S. Determining drag coefficients and their application in modelling of turbulent flow with submerged vegetation. Adv. Water Resour. 2014, 69, 134–145. [Google Scholar] [CrossRef]

- Shi, H.; Liang, X.; Huai, W.; Wang, Y. Predicting the bulk average velocity of open-channel flow with submerged rigid vegetation. J. Hydrol. 2019, 572, 213–225. [Google Scholar] [CrossRef]

- Cheng, N.-S. Single-Layer Model for Average Flow Velocity with Submerged Rigid Cylinders. J. Hydraul. Eng. 2015, 141, 06015012. [Google Scholar] [CrossRef]

- Tinoco, R.O.; Goldstein, E.B.; Coco, G. A data-driven approach to develop physically sound predictors: Application to depth-averaged velocities on flows through submerged arrays of rigid cylinders. Water Resour. Res. 2015, 51, 1247–1263. [Google Scholar] [CrossRef]

- Gualtieri, P.; De Felice, S.; Pasquino, V.; Doria, G.P. Use of conventional flow resistance equations and a model for the Nikuradse roughness in vegetated flows at high submergence. J. Hydrol. Hydromech. 2018, 66, 107–120. [Google Scholar] [CrossRef]

- Huthoff, F.; Augustijn, D.C.M.; Hulscher, S.J.M.H. Analytical solution of the depth-averaged flow velocity in case of submerged rigid cylindrical vegetation. Water Resour. Res. 2007, 43, w06413. [Google Scholar] [CrossRef]

- Baptist, M.; Babovic, V.; Uthurburu, J.R.; Keijzer, M.; Uittenbogaard, R.; Mynett, A.; Verwey, A. On inducing equations for vegetation resistance. J. Hydraul. Res. 2007, 45, 435–450. [Google Scholar] [CrossRef]

- Cheng, N.-S. Representative roughness height of submerged vegetation. Water Resour. Res. 2011, 47. [Google Scholar] [CrossRef]

- Stone, B.M.; Shen, H.T. Hydraulic Resistance of Flow in Channels with Cylindrical Roughness. J. Hydraul. Eng. 2002, 128, 500–506. [Google Scholar] [CrossRef]

- Yang, W.; Choi, S.-U. A two-layer approach for depth-limited open-channel flows with submerged vegetation. J. Hydraul. Res. 2010, 48, 466–475. [Google Scholar] [CrossRef]

- Gioia, G.; Bombardelli, F.A. Scaling and Similarity in Rough Channel Flows. Phys. Rev. Lett. 2002, 88, 014501. [Google Scholar] [CrossRef]

- Augustijn, D.C.M.; Huthoff, F.; van Velzen, E.H. Comparison of vegetation roughness descriptions. In Proceedings of the River Flow 2008-Fourth International Conference on Fluvial Hydraulics, Çeşme, Turkey, 3–5 September 2008; pp. 343–350. Available online: https://research.utwente.nl/en/publications/comparison-of-vegetation-roughness-descriptions (accessed on 21 February 2022).

- Nepf, H.M. Flow and Transport in Regions with Aquatic Vegetation. Annu. Rev. Fluid Mech. 2012, 44, 123–142. [Google Scholar] [CrossRef]

- Pasquino, V.; Gualtieri, P.; Doria, G.P. On Evaluating Flow Resistance of Rigid Vegetation Using Classic Hydraulic Roughness at High Submergence Levels: An Experimental Work. In Hydrodynamic and Mass Transport at Freshwater Aquatic Interfaces; Springer: Cham, Switzerland, 2016; pp. 269–277. [Google Scholar] [CrossRef]

- Belcher, S.E.; Jerram, N.; Hunt, J.C.R. Adjustment of a turbulent boundary layer to a canopy of roughness elements. J. Fluid Mech. 2003, 488, 369–398. [Google Scholar] [CrossRef]

- Govindaraju, R.S. Artificial Neural Networks in Hydrology. II: Hydrologic Applications. J. Hydrol. Eng. 2000, 5, 124–137. [Google Scholar] [CrossRef]

- Rajaee, T.; Khani, S.; Ravansalar, M. Artificial intelligence-based single and hybrid models for prediction of water quality in rivers: A review. Chemom. Intell. Lab. Syst. 2020, 200, 103978. [Google Scholar] [CrossRef]

- Zounemat-Kermani, M.; Batelaan, O.; Fadaee, M.; Hinkelmann, R. Ensemble machine learning paradigms in hydrology: A review. J. Hydrol. 2021, 598, 126266. [Google Scholar] [CrossRef]

- Zounemat-Kermani, M.; Scholz, M. Computing Air Demand Using the Takagi–Sugeno Model for Dam Outlets. Water 2013, 5, 1441–1456. [Google Scholar] [CrossRef]

- Shin, J.; Yoon, S.; Cha, Y. Prediction of cyanobacteria blooms in the lower Han River (South Korea) using ensemble learning algorithms. Desalin. Water Treat. 2017, 84, 31–39. [Google Scholar] [CrossRef]

- Singh, G.; Panda, R.K. Bootstrap-based artificial neural network analysis for estimation of daily sediment yield from a small agricultural watershed. Int. J. Hydrol. Sci. Technol. 2015, 5, 333. [Google Scholar] [CrossRef]

- Sun, W.; Lv, Y.; Li, G.; Chen, Y. Modeling River Ice Breakup Dates by k-Nearest Neighbor Ensemble. Water 2020, 12, 220. [Google Scholar] [CrossRef]

- Cannon, A.J.; Whitfield, P.H. Downscaling recent streamflow conditions in British Columbia, Canada using ensemble neural network models. J. Hydrol. 2002, 259, 136–151. [Google Scholar] [CrossRef]

- Diks, C.G.H.; Vrugt, J.A. Comparison of point forecast accuracy of model averaging methods in hydrologic applications. Stoch. Environ. Res. Risk Assess. 2010, 24, 809–820. [Google Scholar] [CrossRef]

- Li, P.-H.; Kwon, H.-H.; Sun, L.; Lall, U.; Kao, J.-J. A modified support vector machine based prediction model on streamflow at the Shihmen Reservoir, Taiwan. Int. J. Climatol. 2009, 30, 1256–1268. [Google Scholar] [CrossRef]

- Tiwari, M.K.; Chatterjee, C. A new wavelet–bootstrap–ANN hybrid model for daily discharge forecasting. J. Hydroinform. 2010, 13, 500–519. [Google Scholar] [CrossRef]

- Erdal, H.I.; Karakurt, O. Advancing monthly streamflow prediction accuracy of CART models using ensemble learning paradigms. J. Hydrol. 2013, 477, 119–128. [Google Scholar] [CrossRef]

- Kim, D.; Yu, H.; Lee, H.; Beighley, E.; Durand, M.; Alsdorf, D.E.; Hwang, E. Ensemble learning regression for estimating river discharges using satellite altimetry data: Central Congo River as a Test-bed. Remote Sens. Environ. 2019, 221, 741–755. [Google Scholar] [CrossRef]

- Schick, S.; Rössler, O.; Weingartner, R. Monthly streamflow forecasting at varying spatial scales in the Rhine basin. Hydrol. Earth Syst. Sci. 2018, 22, 929–942. [Google Scholar] [CrossRef]

- Turco, M.; Ceglar, A.; Prodhomme, C.; Soret, A.; Toreti, A.; Francisco, J.D.-R. Summer drought predictability over Europe: Empirical versus dynamical forecasts. Environ. Res. Lett. 2017, 12, 084006. [Google Scholar] [CrossRef]

- Arabameri, A.; Saha, S.; Chen, W.; Roy, J.; Pradhan, B.; Bui, D.T. Flash flood susceptibility modelling using functional tree and hybrid ensemble techniques. J. Hydrol. 2020, 587, 125007. [Google Scholar] [CrossRef]

- Li, H.; Wen, G.; Yu, Z.; Zhou, T. Random subspace evidence classifier. Neurocomputing 2013, 110, 62–69. [Google Scholar] [CrossRef]

- Pham, B.T.; Jaafari, A.; Van Phong, T.; Yen, H.P.H.; Tuyen, T.T.; Van Luong, V.; Nguyen, H.D.; Van Le, H.; Foong, L.K. Improved flood susceptibility mapping using a best first decision tree integrated with ensemble learning techniques. Geosci. Front. 2021, 12, 101105. [Google Scholar] [CrossRef]

- Shu, C.; Burn, D.H. Artificial neural network ensembles and their application in pooled flood frequency analysis. Water Resour. Res. 2004, 40, W09301. [Google Scholar] [CrossRef]

- Araghinejad, S.; Azmi, M.; Kholghi, M. Application of artificial neural network ensembles in probabilistic hydrological forecasting. J. Hydrol. 2011, 407, 94–104. [Google Scholar] [CrossRef]

- Lee, S.; Kim, J.-C.; Jung, H.-S.; Lee, M.J.; Lee, S. Spatial prediction of flood susceptibility using random-forest and boosted-tree models in Seoul metropolitan city, Korea. Geomat. Nat. Hazards Risk 2017, 8, 1185–1203. [Google Scholar] [CrossRef]

- Singh, K.P.; Gupta, S.; Mohan, D. Evaluating influences of seasonal variations and anthropogenic activities on alluvial groundwater hydrochemistry using ensemble learning approaches. J. Hydrol. 2014, 511, 254–266. [Google Scholar] [CrossRef]

- Barzegar, R.; Fijani, E.; Moghaddam, A.A.; Tziritis, E. Forecasting of groundwater level fluctuations using ensemble hybrid multi-wavelet neural network-based models. Sci. Total Environ. 2017, 599–600, 20–31. [Google Scholar] [CrossRef]

- Avand, M.; Janizadeh, S.; Tien Bui, D.; Pham, V.H.; Ngo, P.T.T.; Nhu, V.-H. A tree-based intelligence ensemble approach for spatial prediction of potential groundwater. Int. J. Digit. Earth 2020, 13, 1408–1429. [Google Scholar] [CrossRef]

- Chen, W.; Zhao, X.; Tsangaratos, P.; Shahabi, H.; Ilia, I.; Xue, W.; Wang, X.; Bin Ahmad, B. Evaluating the usage of tree-based ensemble methods in groundwater spring potential mapping. J. Hydrol. 2020, 583, 124602. [Google Scholar] [CrossRef]

- Belle, V.; Papantonis, I. Principles and Practice of Explainable Machine Learning. Front. Big Data 2021, 4, 688969. [Google Scholar] [CrossRef] [PubMed]

- Roscher, R.; Bohn, B.; Duarte, M.F.; Garcke, J. Explainable Machine Learning for Scientific Insights and Discoveries. IEEE Access 2020, 8, 42200–42216. [Google Scholar] [CrossRef]

- Xu, F.; Uszkoreit, H.; Du, Y.; Fan, W.; Zhao, D.; Zhu, J. Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges. In Natural Language Processing and Chinese Computing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 563–574. [Google Scholar] [CrossRef]

- Hu, X.; Shi, L.; Lin, G.; Lin, L. Comparison of physical-based, data-driven and hybrid modeling approaches for evapotranspiration estimation. J. Hydrol. 2021, 601, 126592. [Google Scholar] [CrossRef]

- Wang, S.; Peng, H.; Liang, S. Prediction of estuarine water quality using interpretable machine learning approach. J. Hydrol. 2022, 605, 127320. [Google Scholar] [CrossRef]

- Ahmad, M.A.; Eckert, C.; Teredesai, A. Interpretable Machine Learning in Healthcare. In Proceedings of the 2018 ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics, New York, NY, USA, 29 August–1 September 2018; pp. 559–560. [Google Scholar] [CrossRef]

- Sagi, O.; Rokach, L. Explainable decision forest: Transforming a decision forest into an interpretable tree. Inf. Fusion 2020, 61, 124–138. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 4–9 December 2017; pp. 4768–4777. [Google Scholar]

- Liang, Y.; Li, S.; Yan, C.; Li, M.; Jiang, C. Explaining the black-box model: A survey of local interpretation methods for deep neural networks. Neurocomputing 2021, 419, 168–182. [Google Scholar] [CrossRef]

- Patro, B.N.; Lunayach, M.; Patel, S.; Namboodiri, V.P. U-CAM: Visual Explanation Using Uncertainty Based Class Activation Maps. 2019, pp. 7444–7453. Available online: https://openaccess.thecvf.com/content_ICCV_2019/html/Patro_U-CAM_Visual_Explanation_Using_Uncertainty_Based_Class_Activation_Maps_ICCV_2019_paper.html (accessed on 17 June 2021).

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar] [CrossRef]

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929. [Google Scholar]

- Ross, A.; Doshi-Velez, F. Improving the adversarial robustness and interpretability of deep neural networks by regularizing their input gradients. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Computer Vision–ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 818–833. [Google Scholar] [CrossRef]

- Binder, A.; Montavon, G.; Lapuschkin, S.; Müller, K.R.; Samek, W. Layer-wise relevance propagation for neural networks with local renormalization layers. In Artificial Neural Networks and Machine Learning–ICANN 2016; Springer: Cham, Switzerland, 2016; pp. 63–71. [Google Scholar] [CrossRef]

- Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; Volume 70, pp. 3319–3328. [Google Scholar]

- Zhang, J.; Bargal, S.A.; Lin, Z.; Brandt, J.; Shen, X.; Sclaroff, S. Top-Down Neural Attention by Excitation Backprop. Int. J. Comput. Vis. 2018, 126, 1084–1102. [Google Scholar] [CrossRef]

- Zhang, Q.; Wu, Y.N.; Zhu, S.-C. Interpretable Convolutional Neural Networks. 2018, pp. 8827–8836. Available online: https://openaccess.thecvf.com/content_cvpr_2018/html/Zhang_Interpretable_Convolutional_Neural_CVPR_2018_paper.html (accessed on 17 June 2021).

- Ghorbani, A.; Wexler, J.; Zou, J.; Kim, B. Towards Automatic Concept-based Explanations. arXiv 2019, arXiv:190203129. Available online: http://arxiv.org/abs/1902.03129 (accessed on 17 June 2021).

- Zhou, B.; Sun, Y.; Bau, D.; Torralba, A. Interpretable Basis Decomposition for Visual Explanation. In Computer Vision–ECCV 2018; Springer: Cham, Switzerland, 2018; pp. 122–138. [Google Scholar] [CrossRef]

- Etmann, C.; Lunz, S.; Maass, P.; Schoenlieb, C. On the Connection between Adversarial Robustness and Saliency Map Interpretability. In Proceedings of the 36th International Conference on Machine Learning, May 2019; pp. 1823–1832. Available online: http://proceedings.mlr.press/v97/etmann19a.html (accessed on 17 June 2021).

- Tao, G.; Ma, S.; Liu, Y.; Zhang, X. Attacks Meet Interpretability: Attribute-steered Detection of Adversarial Samples. arXiv 2018, arXiv:181011580. Available online: http://arxiv.org/abs/1810.11580 (accessed on 17 June 2021).

- Aydin, Y.; Dizdaroğlu, B. Blotch Detection in Archive Films Based on Visual Saliency Map. Complexity 2020, 2020, 5965387. [Google Scholar] [CrossRef]

- Fong, R.C.; Vedaldi, A. Interpretable Explanations of Black Boxes by Meaningful Perturbation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3449–3457. [Google Scholar]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?” Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar] [CrossRef]

- Petsiuk, V.; Das, A.; Saenko, K. RISE: Randomized Input Sampling for Explanation of Black-box Models. arXiv 2018, arXiv:180607421. Available online: http://arxiv.org/abs/1806.07421 (accessed on 11 April 2021).

- Moradi, M.; Samwald, M. Post-hoc explanation of black-box classifiers using confident itemsets. Expert Syst. Appl. 2021, 165, 113941. [Google Scholar] [CrossRef]

- Baptist, M.J. Modelling Floodplain Biogeomorphology. 2005. Available online: https://repository.tudelft.nl/islandora/object/uuid%3Ab2739720-e2f6-40e2-b55f-1560f434cbee (accessed on 23 February 2022).

- Dunn, C.; Lopez, F.; Garcia, M.H. Mean Flow and Turbulence in a Laboratory Channel with Simulated Vegatation (HES 51). October 1996. Available online: https://www.ideals.illinois.edu/handle/2142/12229 (accessed on 23 February 2022).

- Liu, D.; Diplas, P.; Fairbanks, J.D.; Hodges, C.C. An experimental study of flow through rigid vegetation. J. Geophys. Res. Earth Surf. 2008, 113, F04015. [Google Scholar] [CrossRef]

- Meijer, D.G.; van Velzen, E.H. Prototype-Scale Flume Experiments on Hydraulic Roughness of Submerged Vegetation. In Proceedings of the 28th IAHR Congress, Graz, Austria, 22–27 August 1999. [Google Scholar]

- Murphy, E.; Ghisalberti, M.; Nepf, H. Model and laboratory study of dispersion in flows with submerged vegetation. Water Resour. Res. 2007, 43, W05438. [Google Scholar] [CrossRef]

- Poggi, D.; Porporato, A.; Ridolfi, L.; Albertson, J.D.; Katul, G. The Effect of Vegetation Density on Canopy Sub-Layer Turbulence. Bound. Layer Meteorol. 2004, 111, 565–587. [Google Scholar] [CrossRef]

- Shimizu, Y.; Tsujimoto, T.; Nakagawa, H.; Kitamura, T. Experimental study on flow over rigid vegetation simulated by cylinders with equi-spacing. Doboku Gakkai Ronbunshu 1991, 1991, 31–40. [Google Scholar] [CrossRef]

- Yang, W. Experimental Study of Turbulent Open-channel Flows with Submerged Vegetation. Ph.D. Thesis, Yonsei University, Seoul, Korea, 2008. [Google Scholar]

- Xu, M.; Watanachaturaporn, P.; Varshney, P.K.; Arora, M.K. Decision tree regression for soft classification of remote sensing data. Remote Sens. Environ. 2005, 97, 322–336. [Google Scholar] [CrossRef]

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Chapman and Hall/CRC: Boca Raton, FL, USA, 1984. [Google Scholar]

- Ahmad, M.W.; Reynolds, J.; Rezgui, Y. Predictive modelling for solar thermal energy systems: A comparison of support vector regression, random forest, extra trees and regression trees. J. Clean. Prod. 2018, 203, 810–821. [Google Scholar] [CrossRef]

- Rodriguez-Galiano, V.; Sanchez-Castillo, M.; Chica-Olmo, M.; Chica-Rivas, M.J.O.G.R. Machine learning predictive models for mineral prospectivity: An evaluation of neural networks, random forest, regression trees and support vector machines. Ore Geol. Rev. 2015, 71, 804–818. [Google Scholar] [CrossRef]

- Maree, R.; Geurts, P.; Piater, J.; Wehenkel, L. A Generic Approach for Image Classification Based on Decision Tree Ensembles and Local Sub-Windows. In Proceedings of the 6th Asian Conference on Computer Vision, Jeju, Korea, 27–30 January 2004; pp. 860–865. [Google Scholar]

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42. [Google Scholar] [CrossRef]

- Xu, C.; Liu, X.; Wang, H.; Li, Y.; Jia, W.; Qian, W.; Quan, Q.; Zhang, H.; Xue, F. A study of predicting irradiation-induced transition temperature shift for RPV steels with XGBoost modeling. Nucl. Eng. Technol. 2021, 53, 2610–2615. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef]

| Description | Mean | Maximum | Minimum | Standard Deviation | Kurtosis | Skewness | |

|---|---|---|---|---|---|---|---|

| Q | Measured flow (m3/s) | 0.58 | 8.98 | 0.00 | 1.45 | 10.11 | 3.11 |

| B | Channal width (m) | 0.89 | 3.00 | 0.38 | 0.96 | 1.08 | 1.72 |

| H | Flow depth (m) | 0.52 | 2.50 | 0.47 | 0.69 | 2.08 | 1.90 |

| S | Energy slope | 0.003 | 0.044 | 0.000 | 0.004 | 40.88 | 5.25 |

| λ | Vegetation density (fraction of bed area with stemps) | 0.020 | 0.120 | 0.020 | 0.022 | 5.22 | 2.16 |

| d | Characteristic diameter of vegetation (m) | 0.007 | 0.013 | 0.006 | 0.004 | −0.67 | 0.33 |

| HV | Height of vegetation layer (m) | 0.24 | 1.50 | 0.14 | 0.36 | 5.77 | 2.61 |

| N | Stems per unit bed area (m−2) | 1210 | 9995 | 625 | 2468 | 8.4 | 3.1 |

| Hs | Height of surface layer (m) | 0.28 | 2.04 | 0.33 | 0.40 | 5.63 | 2.40 |

| UB | Bulk average flow (m/s) | 0.28 | 1.24 | 0.03 | 0.22 | 2.82 | 1.57 |

| Prediction | Model | R | R2 | MAE | RMSE | Fractional Bias |

|---|---|---|---|---|---|---|

| UB | Decision Tree | 0.882 | 0.78 | 0.067 | 0.102 | −0.037 |

| Extra tree | 0.944 | 0.89 | 0.053 | 0.071 | 0.010 | |

| XGBoost | 0.984 | 0.97 | 0.025 | 0.040 | −0.019 | |

| Shi et al., (2019) [4] | 0.970 | 0.94 | 0.032 | 0.053 | 0.006 | |

| Cheng, (2015) [5] | 0.960 | 0.92 | 0.040 | 0.063 | −0.023 | |

| Huthoff et al., (2007) [8] | 0.972 | 0.95 | 0.038 | 0.060 | −0.116 | |

| fS | Decision Tree | 0.761 | 0.58 | 0.060 | 0.096 | −0.061 |

| Extra tree | 0.798 | 0.64 | 0.055 | 0.092 | −0.057 | |

| XGBoost | 0.920 | 0.85 | 0.042 | 0.060 | −0.035 | |

| Shi et al., (2019) [4] | 0.676 | 0.46 | 0.069 | 0.110 | −0.113 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Meddage, D.P.P.; Ekanayake, I.U.; Herath, S.; Gobirahavan, R.; Muttil, N.; Rathnayake, U. Predicting Bulk Average Velocity with Rigid Vegetation in Open Channels Using Tree-Based Machine Learning: A Novel Approach Using Explainable Artificial Intelligence. Sensors 2022, 22, 4398. https://doi.org/10.3390/s22124398

Meddage DPP, Ekanayake IU, Herath S, Gobirahavan R, Muttil N, Rathnayake U. Predicting Bulk Average Velocity with Rigid Vegetation in Open Channels Using Tree-Based Machine Learning: A Novel Approach Using Explainable Artificial Intelligence. Sensors. 2022; 22(12):4398. https://doi.org/10.3390/s22124398

Chicago/Turabian StyleMeddage, D. P. P., I. U. Ekanayake, Sumudu Herath, R. Gobirahavan, Nitin Muttil, and Upaka Rathnayake. 2022. "Predicting Bulk Average Velocity with Rigid Vegetation in Open Channels Using Tree-Based Machine Learning: A Novel Approach Using Explainable Artificial Intelligence" Sensors 22, no. 12: 4398. https://doi.org/10.3390/s22124398

APA StyleMeddage, D. P. P., Ekanayake, I. U., Herath, S., Gobirahavan, R., Muttil, N., & Rathnayake, U. (2022). Predicting Bulk Average Velocity with Rigid Vegetation in Open Channels Using Tree-Based Machine Learning: A Novel Approach Using Explainable Artificial Intelligence. Sensors, 22(12), 4398. https://doi.org/10.3390/s22124398