Sensoring the Neck: Classifying Movements and Actions with a Neck-Mounted Wearable Device

Abstract

1. Introduction

2. Related Work

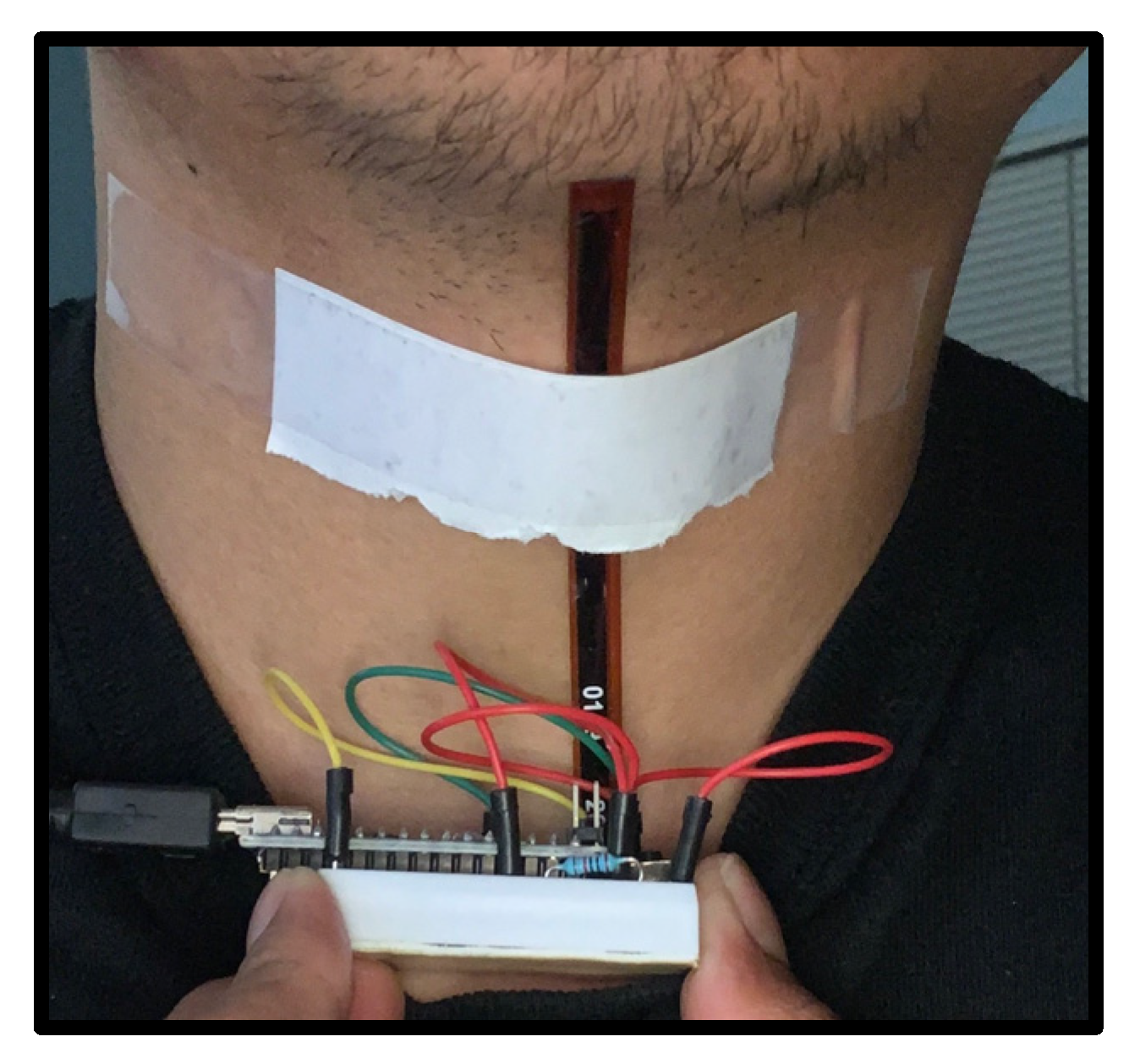

3. Prototype

4. Head Tilt Detection

4.1. Flex Sensor Types and Placement

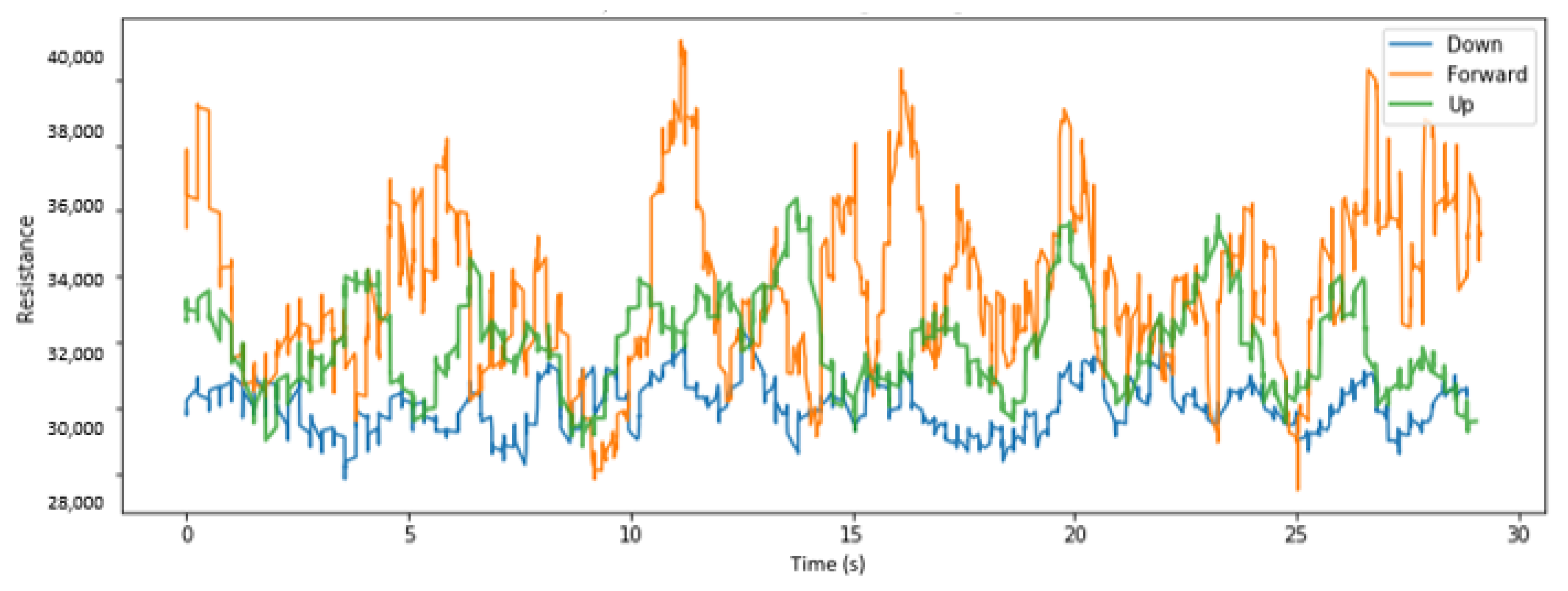

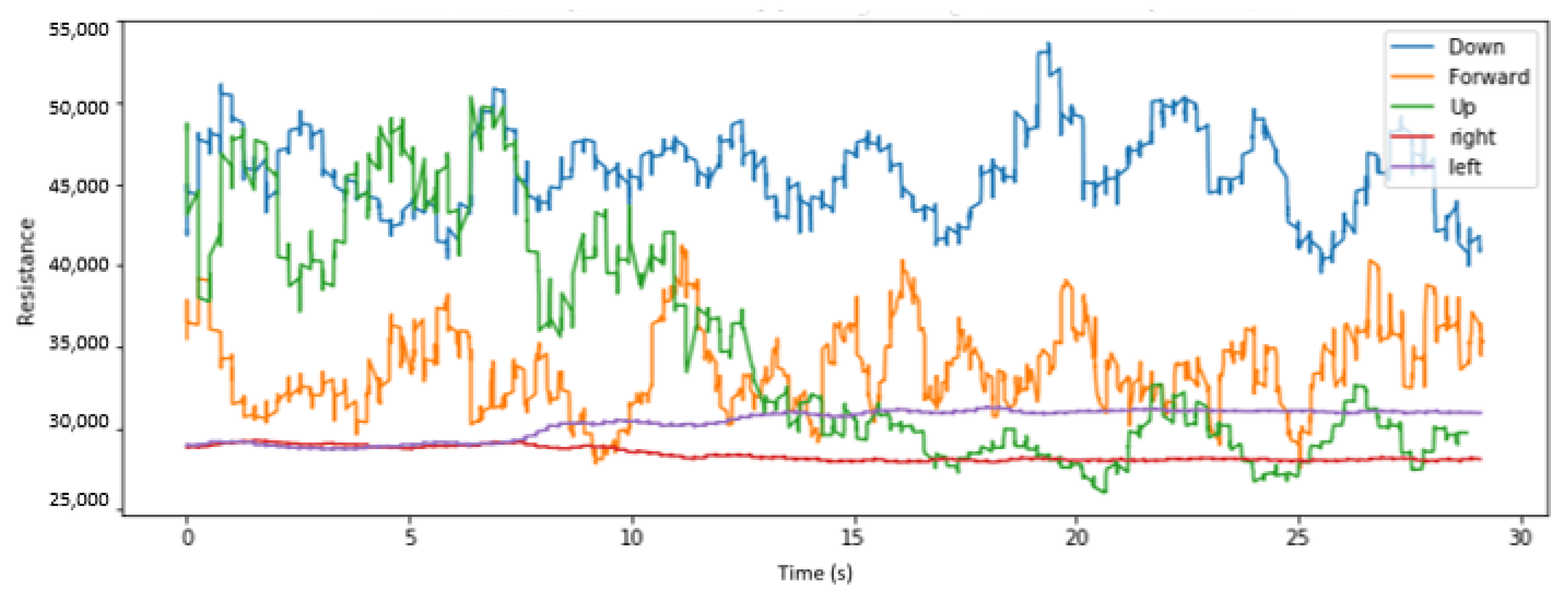

4.2. Data Visualization

4.3. Head Tilt Detection Machine Learning Results

5. Speech and Mouth Movement Detection

5.1. Speech Detection

5.2. Mouth Movement Classification

5.3. Speech Classification

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Model | Split | Short Sensor Low Placement | Short Sensor Center Placement | Short Sensor High Placement | Long Sensor |
|---|---|---|---|---|---|
| Logistic Regression | Train | 0.744 | 0.379 | 0.629 | 0.603 |
| | Validate | 0.74 | 0.379 | 0.622 | 0.602 |
| | Test | 0.76 | 0.349 | 0.589 | 0.608 |
| SVM | Train | 0.825 | 0.594 | 0.648 | 0.891 |
| | Validate | 0.809 | 0.547 | 0.612 | 0.881 |
| | Test | 0.824 | 0.555 | 0.575 | 0.891 |
| Random Forest | Train | 0.955 | 0.918 | 0.854 | 0.989 |
| | Validate | 0.821 | 0.665 | 0.694 | 0.945 |
| | Test | 0.834 | 0.669 | 0.671 | 0.960 |

| Model | Split | Short Sensor Low Placement | Long Sensor |
|---|---|---|---|
| Logistic Regression | Train | 0.734 | 0.337 |
| | Validate | 0.733 | 0.338 |
| | Test | 0.755 | 0.363 |
| SVM | Train | 0.756 | 0.869 |
| | Validate | 0.741 | 0.812 |
| | Test | 0.76 | 0.818 |
| Random Forest | Train | 0.956 | 0.977 |
| | Validate | 0.824 | 0.915 |
| | Test | 0.828 | 0.91 |

| Random Forest: Actual (rows) vs. Predicted (columns) | Down | Forward | Up | Right | Left |
|---|---|---|---|---|---|
| Down | 259 | 0 | 10 | 0 | 0 |
| Forward | 1 | 285 | 40 | 1 | 3 |
| Up | 25 | 47 | 65 | 15 | 5 |
| Right | 0 | 0 | 8 | 185 | 18 |
| Left | 0 | 0 | 3 | 29 | 194 |

| Random Forest: Actual (rows) vs. Predicted (columns) | Down | Forward | Up | Right | Left |
|---|---|---|---|---|---|
| Down | 202 | 3 | 11 | 0 | 0 |
| Forward | 0 | 494 | 2 | 0 | 0 |
| Up | 17 | 0 | 182 | 0 | 0 |
| Right | 0 | 0 | 0 | 204 | 49 |
| Left | 0 | 0 | 0 | 36 | 166 |
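
As a quick consistency check, the overall accuracy implied by each of the two Random Forest confusion matrices above can be recomputed from the counts. The sketch below is illustrative only and is not the authors' code; the names `FIRST_MATRIX`, `SECOND_MATRIX`, and `overall_accuracy` are hypothetical. The results come out at roughly 0.828 and 0.914, which agree with the Random Forest test accuracies of 0.828 (short sensor, low placement) and 0.91 (long sensor) in the preceding results table. This suggests, although the tables themselves do not say so, that the first matrix belongs to the short sensor and the second to the long sensor.

```python
# Counts copied from the two Random Forest confusion matrices above.
# Rows are actual classes and columns are predicted classes,
# both in the order Down, Forward, Up, Right, Left.
# All names here are hypothetical and used for illustration only.
FIRST_MATRIX = [
    [259, 0, 10, 0, 0],
    [1, 285, 40, 1, 3],
    [25, 47, 65, 15, 5],
    [0, 0, 8, 185, 18],
    [0, 0, 3, 29, 194],
]

SECOND_MATRIX = [
    [202, 3, 11, 0, 0],
    [0, 494, 2, 0, 0],
    [17, 0, 182, 0, 0],
    [0, 0, 0, 204, 49],
    [0, 0, 0, 36, 166],
]


def overall_accuracy(matrix):
    """Return the share of samples that lie on the main diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


if __name__ == "__main__":
    for name, matrix in (("first", FIRST_MATRIX), ("second", SECOND_MATRIX)):
        print(f"{name} matrix accuracy: {overall_accuracy(matrix):.3f}")
```

In both matrices most of the off-diagonal mass sits between Right and Left, and in the first matrix also between Up and Forward, so the overall figure hides which head positions are hardest to separate.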

| Actual (rows) vs. Predicted (columns) | Static Breathing | Talking |
|---|---|---|
| Static Breathing | 23 | 7 |
| Talking | 3 | 27 |

| Actual (rows) vs. Predicted (columns) | Breathing | One Cycle | Two Cycles | Three Cycles |
|---|---|---|---|---|
| Breathing | 2 | 3 | 3 | 12 |
| One Cycle | 0 | 19 | 1 | 0 |
| Two Cycles | 0 | 7 | 13 | 0 |
| Three Cycles | 0 | 0 | 0 | 20 |

| Actual (rows) vs. Predicted (columns) | “I Am …” | “This Is …” | “Who …” | “Can You …” |
|---|---|---|---|---|
| “I am a user who is talking right now.” | 0 | 9 | 1 | 0 |
| “This is me talking with a sensor attached.” | 0 | 10 | 0 | 0 |
| “Who am I talking to at this very moment?” | 0 | 4 | 6 | 0 |
| “Can you recognize what I am saying while attached to a sensor?” | 0 | 0 | 1 | 9 |

| Actual (rows) vs. Predicted (columns) | “A Blessing in Disguise” | “Cut Somebody Some Slack” | “Better Late than Never” | “A Dime a Dozen” |
|---|---|---|---|---|
| “A blessing in disguise” | 0 | 0 | 14 | 6 |
| “Cut somebody some slack” | 0 | 2 | 1 | 17 |
| “Better late than never” | 0 | 0 | 19 | 1 |
| “A dime a dozen” | 0 | 0 | 15 | 5 |
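
The two speech classification matrices above are easier to read through per-class recall and the number of predictions each class received. The following is a minimal sketch under the assumption that rows are actual phrases and columns are predicted phrases, in the order the tables list them. The names `SENTENCES`, `IDIOMS`, `per_class_recall`, and `predicted_counts` are hypothetical and do not come from the paper.

```python
# Counts copied from the two speech classification confusion matrices above.
# Rows are actual phrases, columns are predicted phrases, in table order.
# All names here are hypothetical and used for illustration only.
SENTENCES = [
    [0, 9, 1, 0],
    [0, 10, 0, 0],
    [0, 4, 6, 0],
    [0, 0, 1, 9],
]

IDIOMS = [
    [0, 0, 14, 6],
    [0, 2, 1, 17],
    [0, 0, 19, 1],
    [0, 0, 15, 5],
]


def per_class_recall(matrix):
    """Return, for each actual class, the fraction of its samples predicted correctly."""
    return [
        row[i] / sum(row) if sum(row) else 0.0
        for i, row in enumerate(matrix)
    ]


def predicted_counts(matrix):
    """Return how many samples were assigned to each predicted class (column sums)."""
    return [sum(column) for column in zip(*matrix)]


if __name__ == "__main__":
    for name, matrix in (("sentences", SENTENCES), ("idioms", IDIOMS)):
        print(name, "recall per class:", per_class_recall(matrix))
        print(name, "predictions per class:", predicted_counts(matrix))
```

In both matrices the first class is never predicted, so its recall is zero. In the idiom matrix, 78 of the 80 samples are assigned to the last two classes.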

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lacanlale, J.; Isayan, P.; Mkrtchyan, K.; Nahapetian, A. Sensoring the Neck: Classifying Movements and Actions with a Neck-Mounted Wearable Device. Sensors 2022, 22, 4313. https://doi.org/10.3390/s22124313