WISP, Wearable Inertial Sensor for Online Wheelchair Propulsion Detection

Abstract

1. Introduction

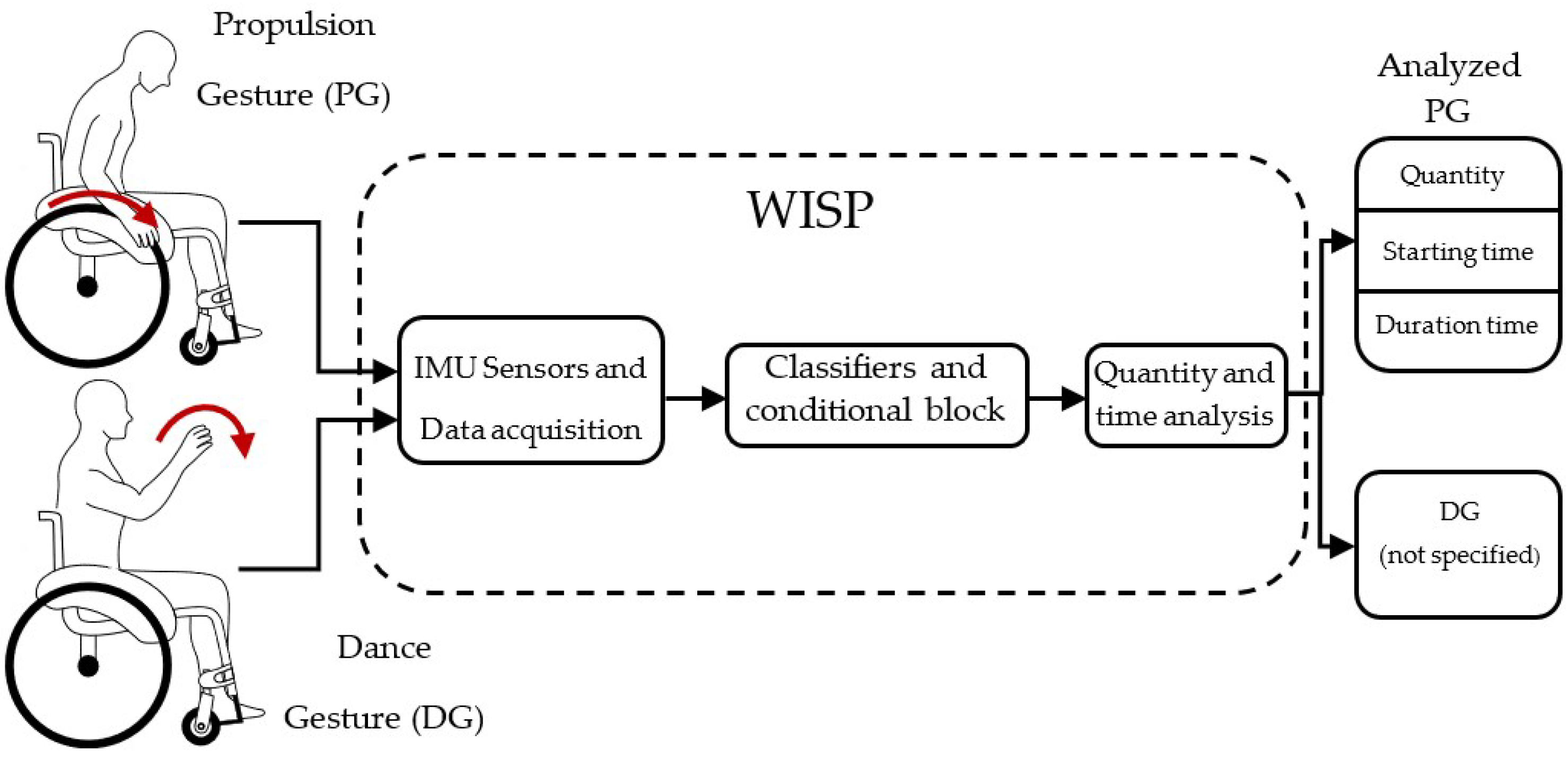

2. WISP

2.1. Proposition

2.2. Hardware Design

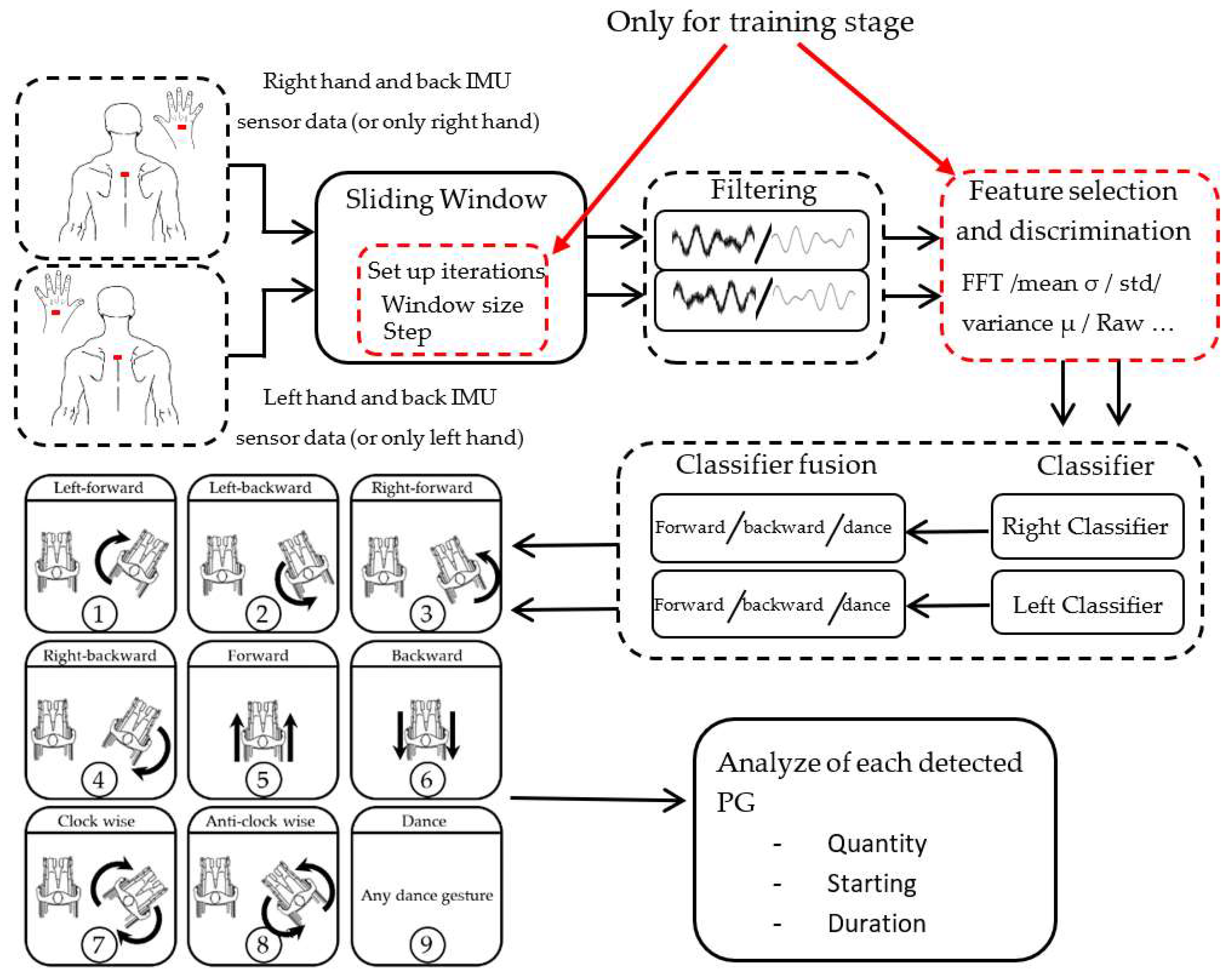

2.3. Algorithm Structure

3. Data Acquisition

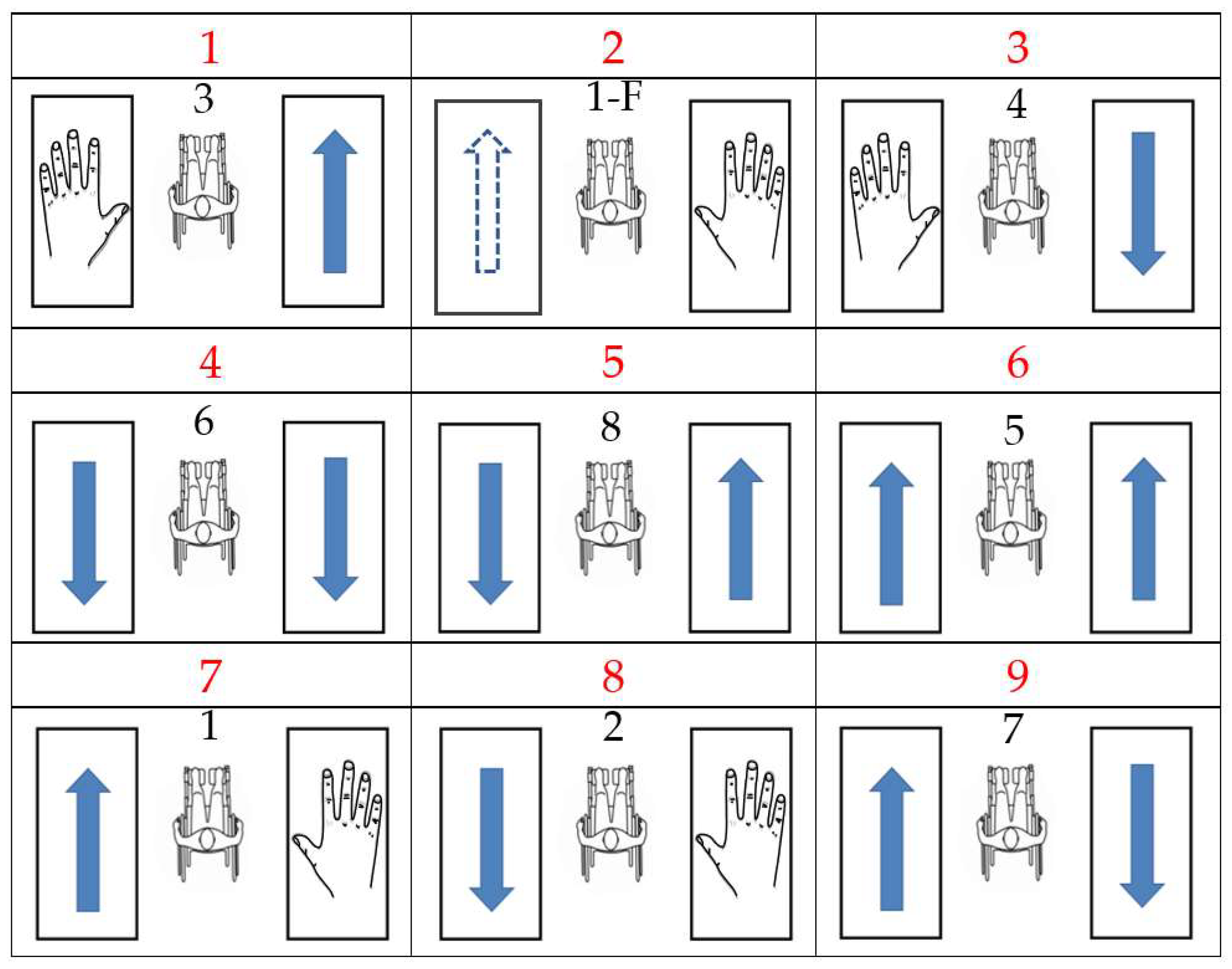

3.1. Detected Gestures

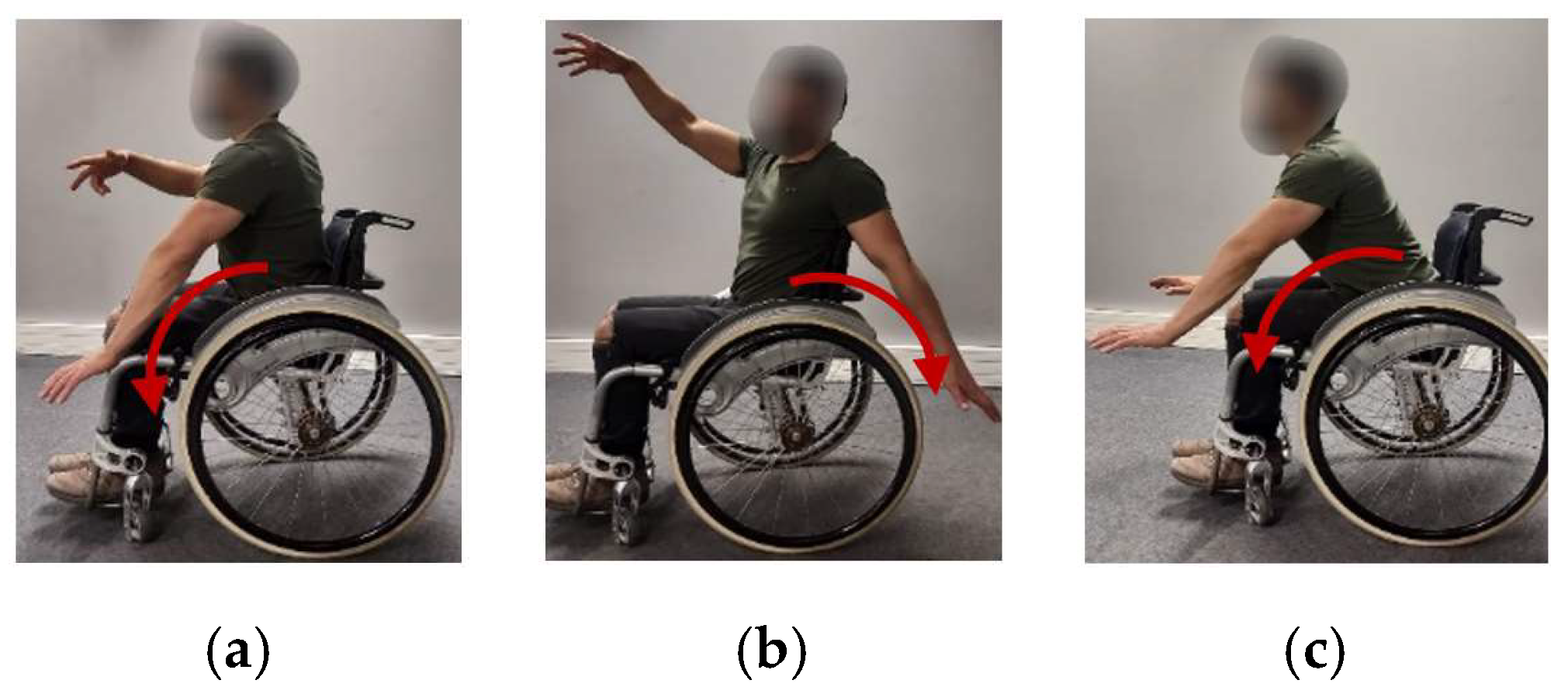

3.1.1. Propulsion Gestures

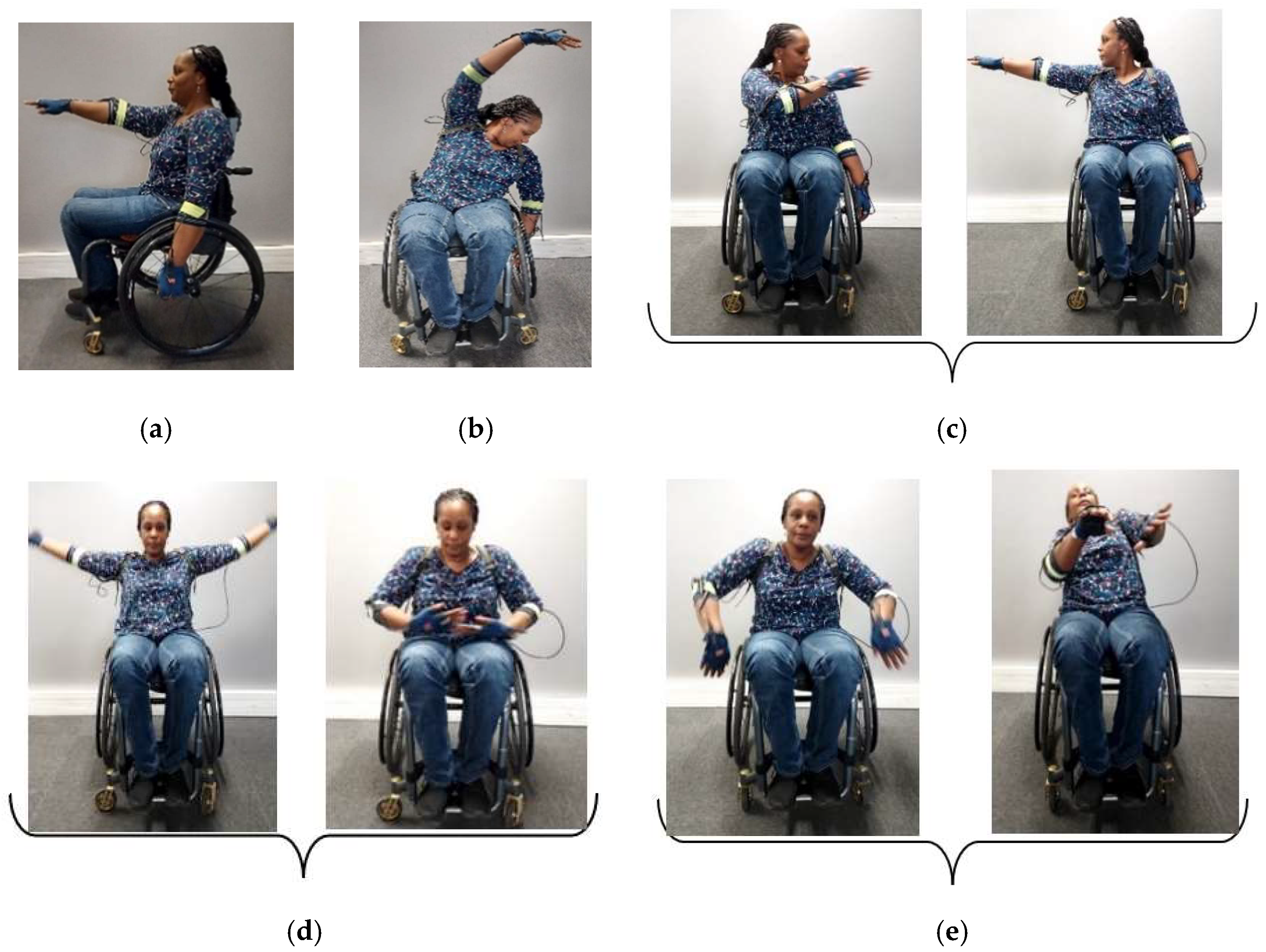

3.1.2. Dance Gestures

3.1.3. Fake Propulsion Gestures

3.2. Wheelchair Dance Choreography

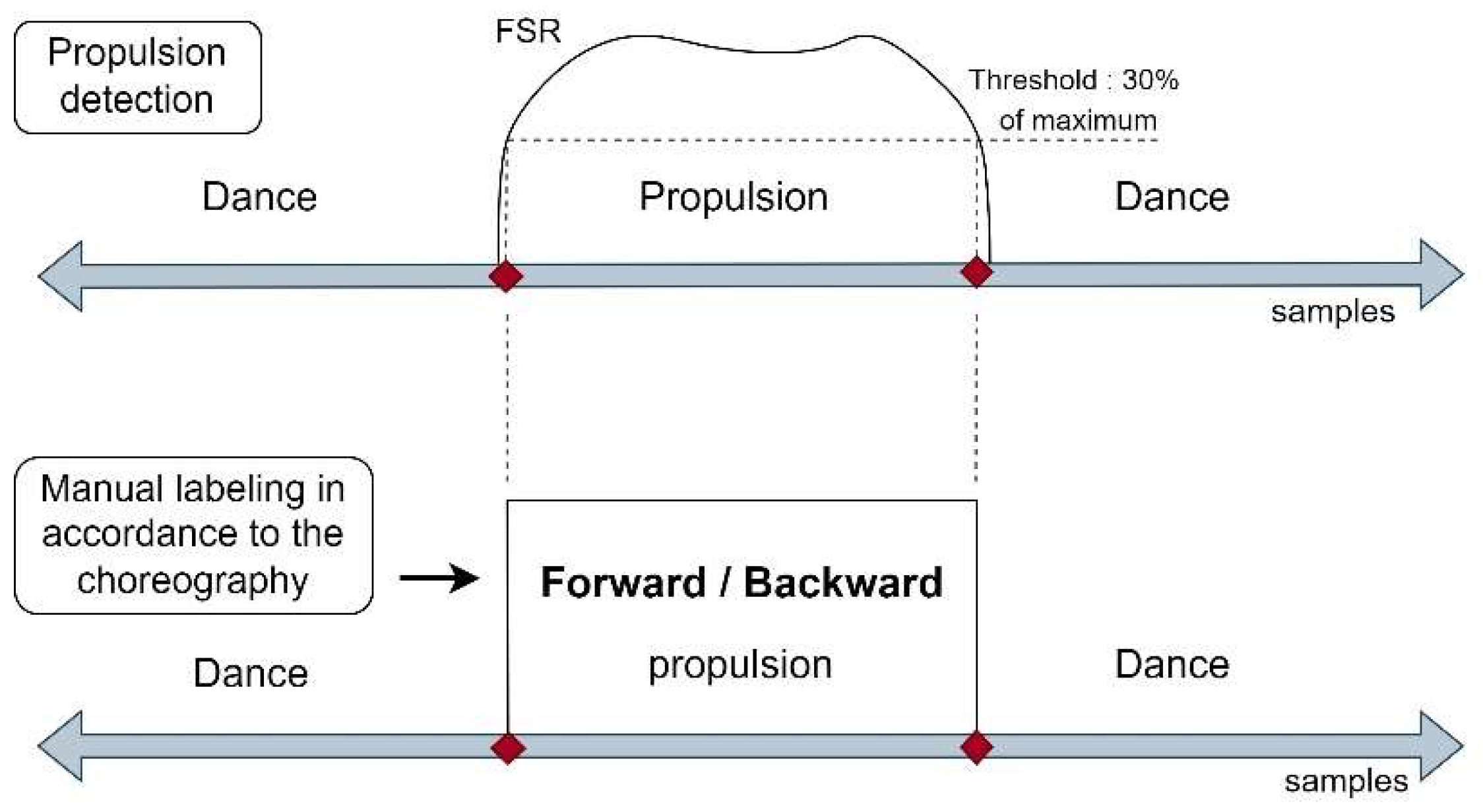

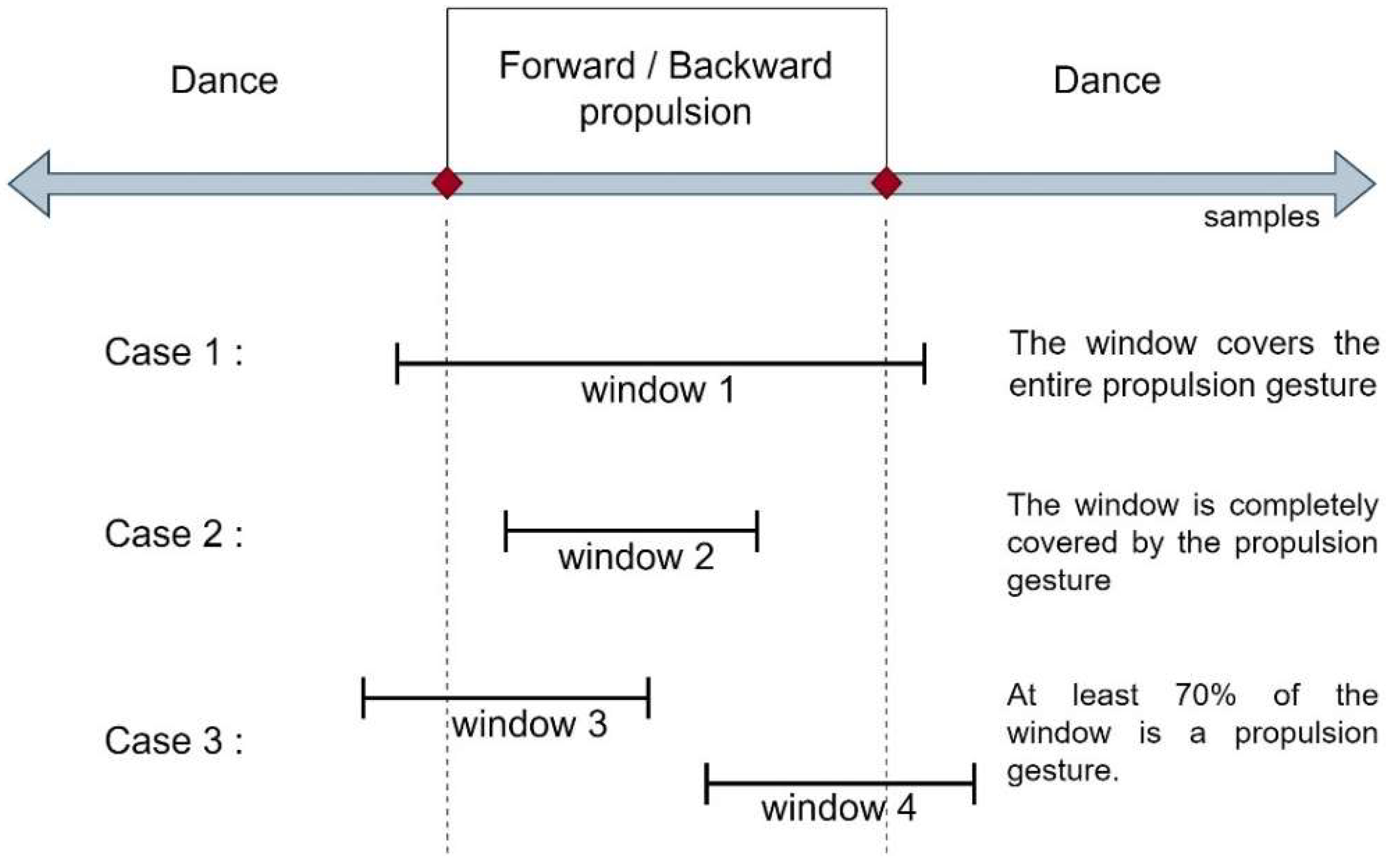

3.3. Semi-Automatic Labelization

4. Algorithm Development

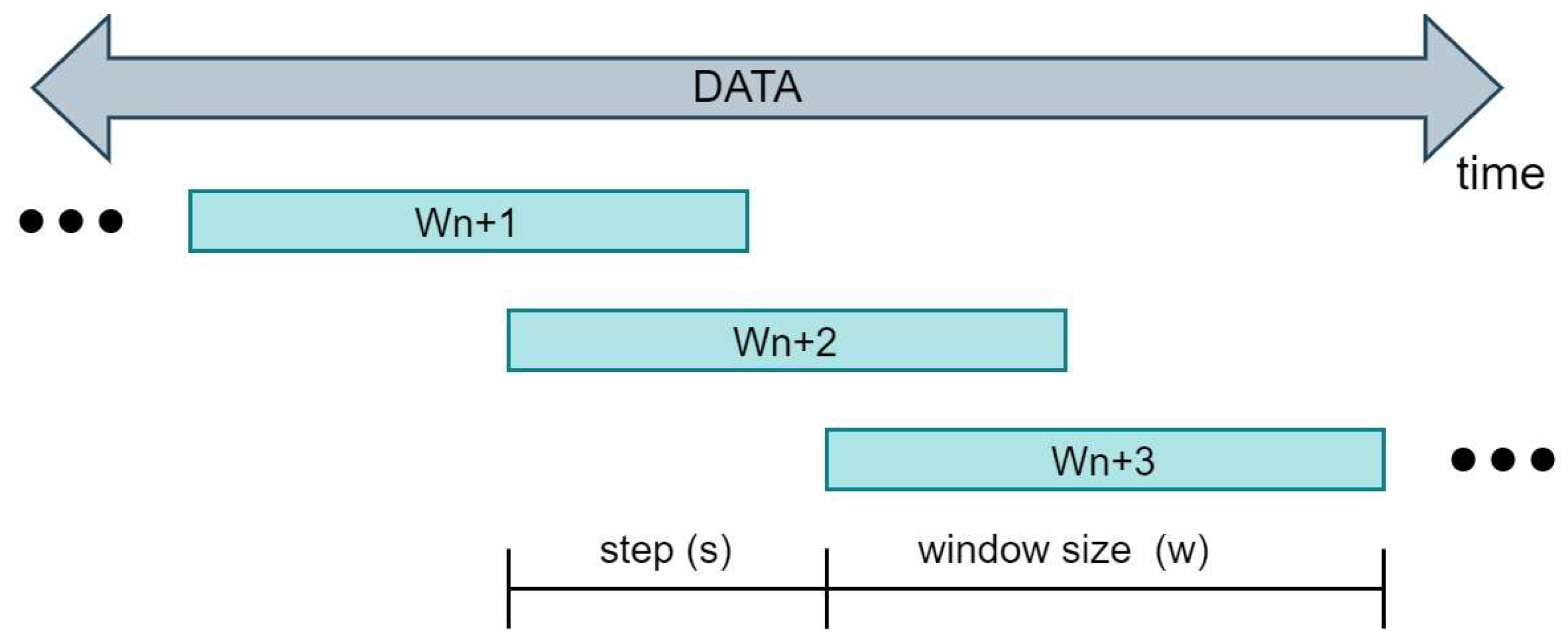

4.1. Sliding Window Processing

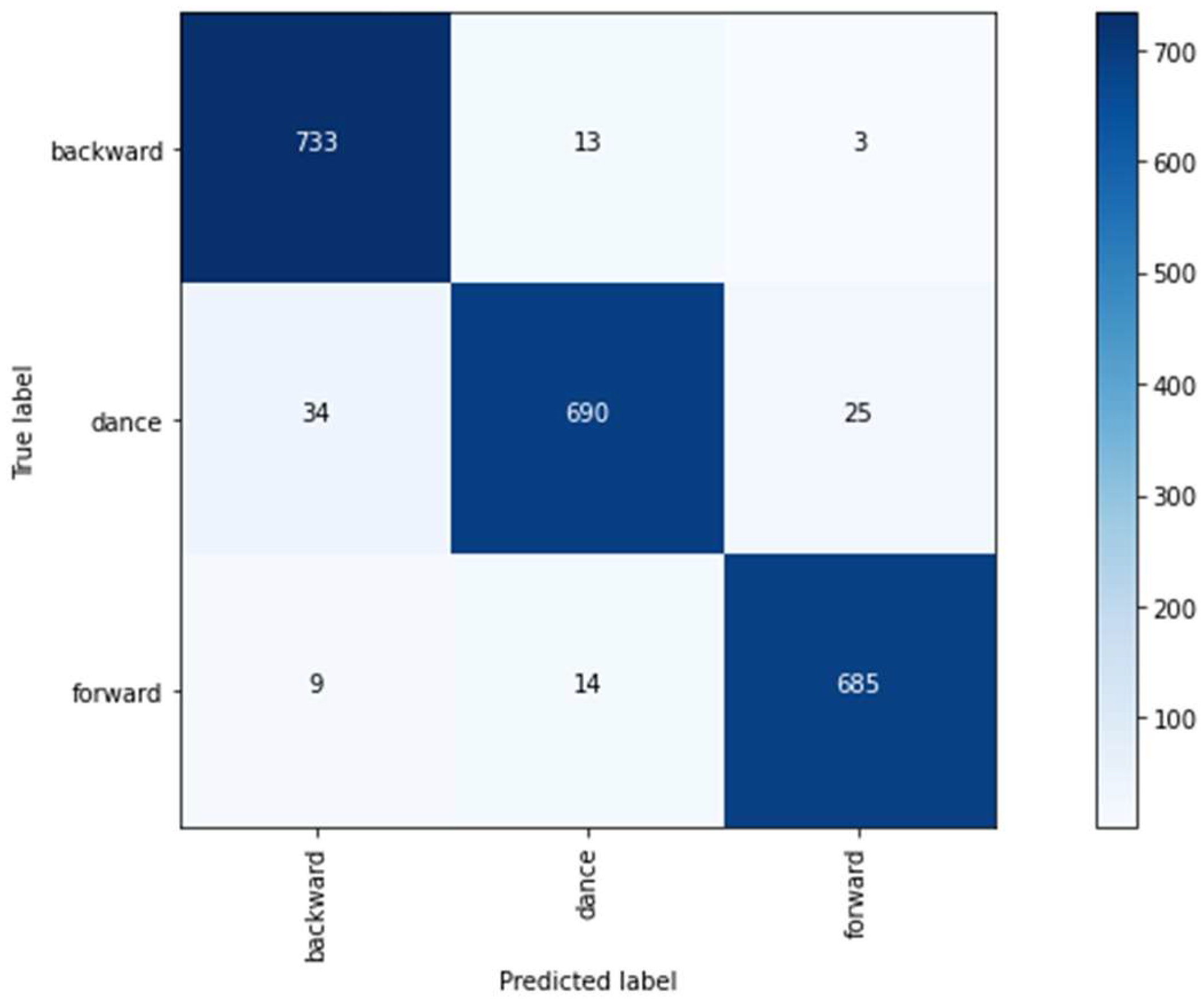

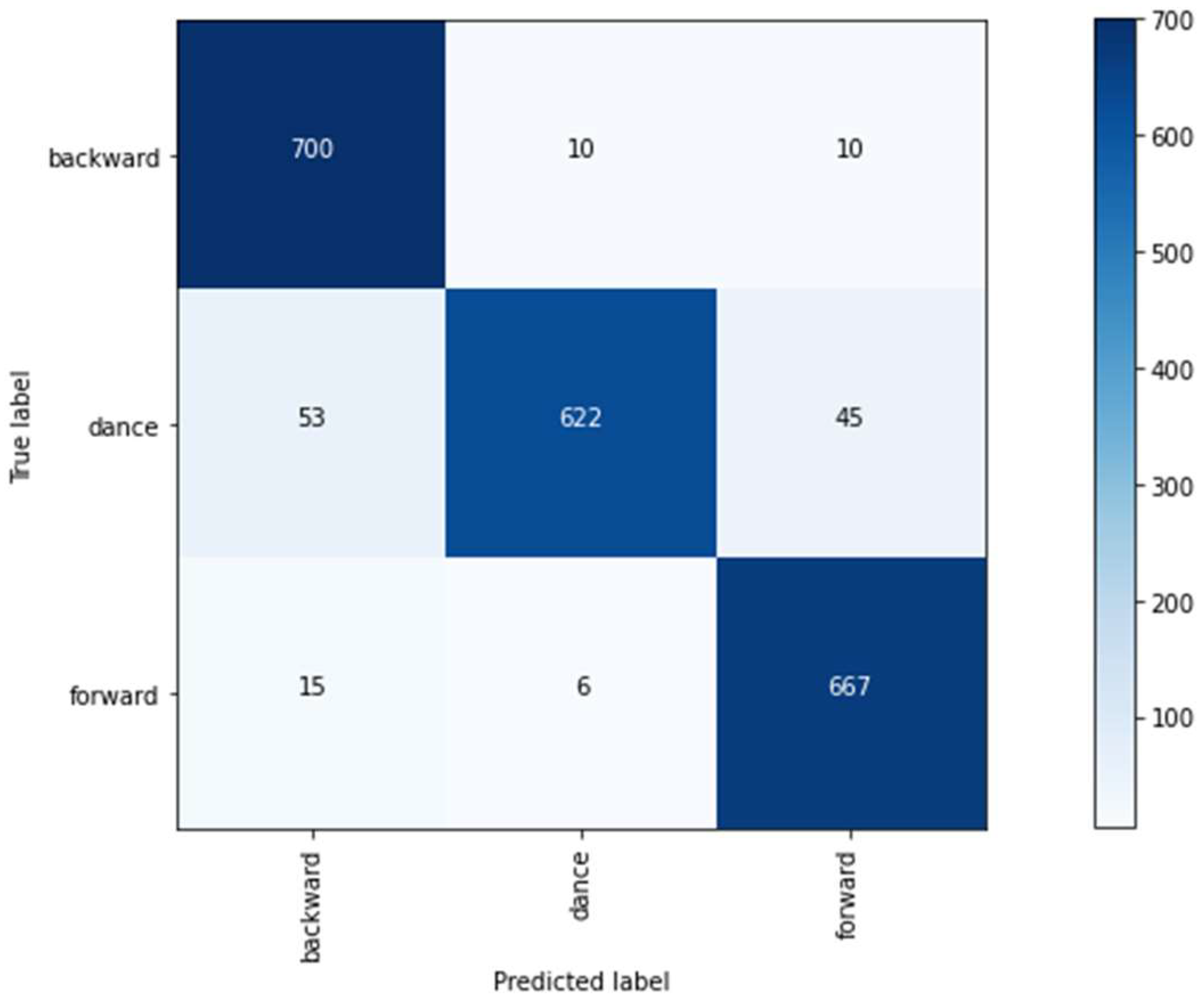

4.2. Classifiers and Features

4.3. Parameters Selection and Training

4.3.1. Classifiers and Their Parameters

4.3.2. Maximum Number of Features

4.3.3. Sliding Window Parameters Selection

4.4. Algorithm and Parameters Selection Results

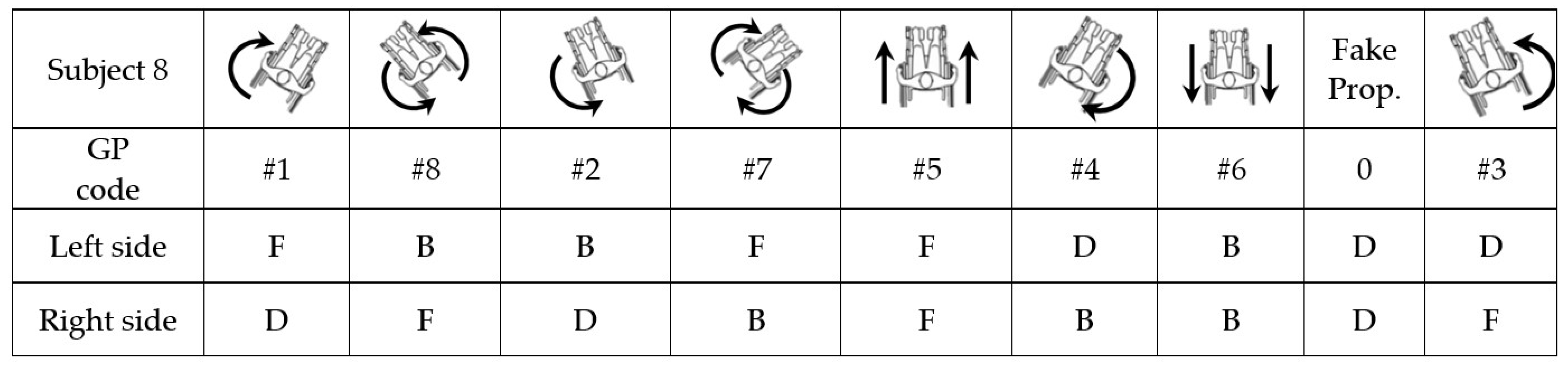

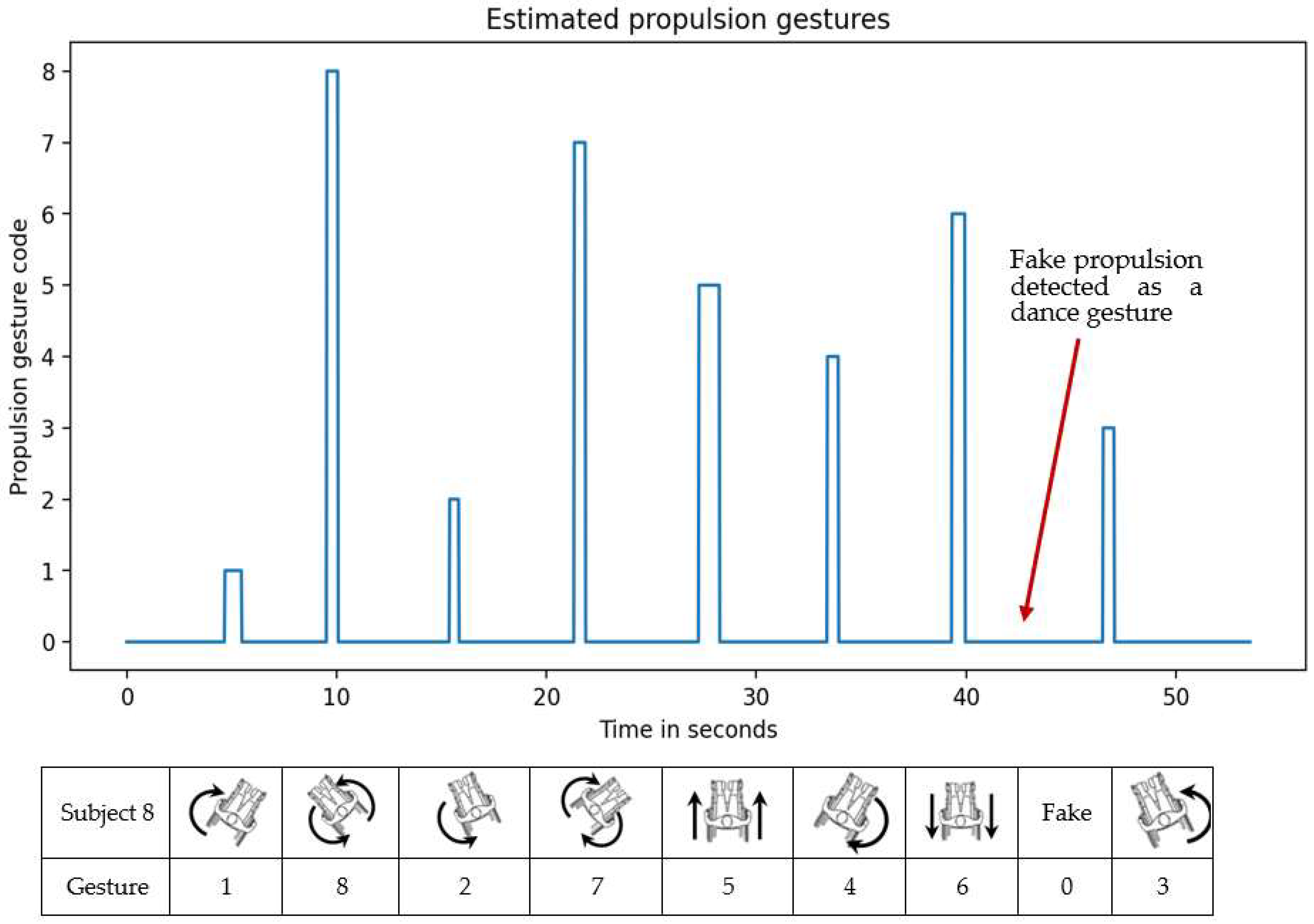

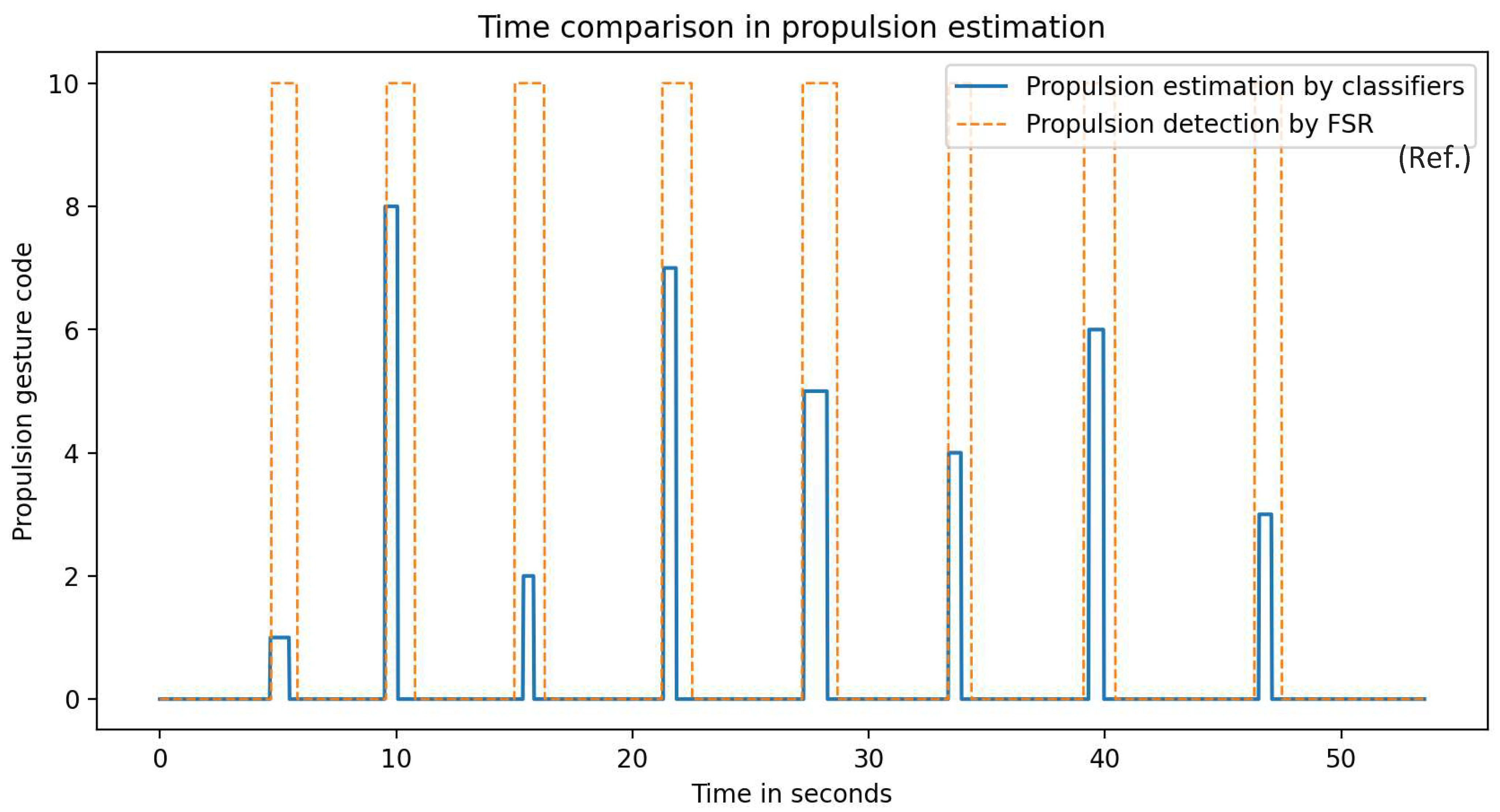

4.5. Estimation of Propulsion Gestures

5. Application of WISP in Wheelchair Dance Teaching

5.1. Issues Addressed in Wheelchair Dance Teaching

5.1.1. Number of Propulsions

5.1.2. Propulsion Starting Time

5.1.3. Propulsion Duration Time

6. Discussion

7. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Variables

| Device | Axis |
|---|---|
| Trunk accelerometer | Z |
| Trunk gyroscope | Y |
| Left-hand accelerometer | X |
| Left-hand accelerometer | Y |
| Left-hand accelerometer | Z |
| Left-hand gyroscope | X |
| Left-hand gyroscope | Y |
| Left-hand gyroscope | Z |
| Right-hand accelerometer | X |
| Right-hand accelerometer | Y |
| Right-hand accelerometer | Z |
| Right-hand gyroscope | X |
| Right-hand gyroscope | Y |
| Right-hand gyroscope | Z |
| Sampling time | - |
| Left-hand force sensor 1 | - |
| Right-hand force sensor 1 | - |

| Classifier Side | Three-Sensor Mode: Device | Three-Sensor Mode: Axis | Two-Sensor Mode: Device | Two-Sensor Mode: Axis |
|---|---|---|---|---|
| Left classifier | Trunk accelerometer | Z | - | - |
| Left classifier | Trunk gyroscope | X | - | - |
| Left classifier | Left-hand accelerometer | X, Y, Z | Left-hand accelerometer | X, Y, Z |
| Left classifier | Left-hand gyroscope | X, Y, Z | Left-hand gyroscope | X, Y, Z |
| Left classifier | Left-hand accelerometer norm | | Left-hand accelerometer norm | |
| Left classifier | Left-hand gyroscope norm | | Left-hand gyroscope norm | |
| Right classifier | Trunk accelerometer | Z | - | - |
| Right classifier | Trunk gyroscope | X | - | - |
| Right classifier | Right-hand accelerometer | X, Y, Z | Right-hand accelerometer | X, Y, Z |
| Right classifier | Right-hand gyroscope | X, Y, Z | Right-hand gyroscope | X, Y, Z |
| Right classifier | Right-hand accelerometer norm | | Right-hand accelerometer norm | |
| Right classifier | Right-hand gyroscope norm | | Right-hand gyroscope norm | |
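The "norm" rows in the table above can be illustrated with a short sketch. It assumes that "norm" means the Euclidean norm of the three axes of one sensor; the function name `sensor_norm` is hypothetical and not taken from the paper.

```python
import math


def sensor_norm(x, y, z):
    """Euclidean norm of one three-axis sample (assumed meaning of 'norm')."""
    return math.sqrt(x * x + y * y + z * z)


# Example: one hand-accelerometer sample with hypothetical axis values.
print(sensor_norm(3.0, 4.0, 0.0))
```

Under this assumption the norm gives each hand classifier one orientation-independent magnitude channel per sensor, alongside the raw X, Y and Z channels.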

| Domain | Feature |
|---|---|
| Time | Mean |
| Time | RMS |
| Time | Variance |
| Time | Standard deviation |
| Time | Median |
| Time | Maximum |
| Time | Minimum |
| Time | Zero crossing |
| Time | Number of peaks |
| Time | 25th percentile |
| Time | 75th percentile |
| Time | Kurtosis |
| Time | Skew |
| Frequency | Number of peaks |
| Frequency | PSD mean |
| Frequency | PSD RMS |
| Frequency | PSD median |
| Frequency | PSD standard deviation |
| Frequency | PSD entropy |
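As an illustration of how such features could be extracted, the sketch below cuts a one-channel signal into sliding windows and computes a subset of the time-domain features listed above. It is not the authors' implementation: the function names are hypothetical, population (not sample) variance is an assumption, and reading W and S in the results tables as window width and step in samples is also an assumption. Frequency-domain (PSD) features are left out.

```python
import math
import statistics


def sliding_windows(signal, width, step):
    """Yield consecutive windows of `width` samples, advancing by `step`."""
    for start in range(0, len(signal) - width + 1, step):
        yield signal[start:start + width]


def time_features(window):
    """Compute a subset of the time-domain features for one window."""
    # A zero crossing is counted whenever consecutive samples change sign.
    zero_crossings = sum(
        1 for a, b in zip(window, window[1:]) if (a < 0) != (b < 0)
    )
    # A peak is a sample strictly greater than both of its neighbours.
    n_peaks = sum(
        1 for a, b, c in zip(window, window[1:], window[2:]) if b > a and b > c
    )
    return {
        "mean": statistics.fmean(window),
        "rms": math.sqrt(sum(x * x for x in window) / len(window)),
        "variance": statistics.pvariance(window),  # population variance (assumed)
        "std": statistics.pstdev(window),
        "median": statistics.median(window),
        "max": max(window),
        "min": min(window),
        "zero_crossings": zero_crossings,
        "n_peaks": n_peaks,
    }


# Hypothetical one-channel signal; one feature dict per window.
signal = [0.0, 1.0, 0.0, -1.0] * 4
feature_rows = [time_features(w) for w in sliding_windows(signal, width=10, step=3)]
```

Each window yields one feature row per channel; concatenating the rows of all channels would give the input vector for a classifier.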

| Algorithm | Parameter | Grid Search Values |
|---|---|---|
| SVM | Kernel | Linear, RBF |
| SVM | C | 0.1, 0.3, 0.6, 1.0, 3, 6, 10 |
| K-neighbors | Number of neighbors | 3, 5, 10, 15, 20, 40 |
| K-neighbors | Weights | Uniform, distance |
| K-neighbors | Algorithm | auto, ball tree, kd tree, brute |
| Random forest | Number of estimators | 50, 100, 200 |
| Random forest | Criterion | Gini, Entropy |
| Random forest | Max depth | 5, 8, 11, 14 |
| Random forest | Max features | Auto, Sqrt, Log2 |
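The grid above can be written out as plain Python dictionaries to see how many candidate configurations each classifier has. The values are copied from the table; the dictionary keys are assumed to correspond to scikit-learn parameter names (`kernel`, `C`, `n_neighbors`, `weights`, `algorithm`, `n_estimators`, `criterion`, `max_depth`, `max_features`), and `expand_grid` is a hypothetical helper, not part of any library.

```python
from itertools import product

# Values copied from the grid-search table; keys are assumed scikit-learn names.
PARAM_GRIDS = {
    "SVM": {
        "kernel": ["linear", "rbf"],
        "C": [0.1, 0.3, 0.6, 1.0, 3, 6, 10],
    },
    "K-neighbors": {
        "n_neighbors": [3, 5, 10, 15, 20, 40],
        "weights": ["uniform", "distance"],
        "algorithm": ["auto", "ball_tree", "kd_tree", "brute"],
    },
    "Random forest": {
        "n_estimators": [50, 100, 200],
        "criterion": ["gini", "entropy"],
        "max_depth": [5, 8, 11, 14],
        "max_features": ["auto", "sqrt", "log2"],
    },
}


def expand_grid(grid):
    """Return every parameter combination of a grid as a list of dicts."""
    names = list(grid)
    return [
        dict(zip(names, values))
        for values in product(*(grid[name] for name in names))
    ]


for name, grid in PARAM_GRIDS.items():
    print(name, len(expand_grid(grid)))
```

This enumerates 14 SVM, 48 K-neighbors and 72 random forest configurations. Dictionaries of this shape are what a grid-search utility such as scikit-learn's `GridSearchCV` takes as `param_grid`; note that newer scikit-learn releases may reject `max_features="auto"`.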

Results with Hand Sensor

| Algorithm | W = 10, S = 3 | W = 10, S = 5 | W = 20, S = 3 | W = 20, S = 5 | W = 30, S = 3 | W = 30, S = 5 |
|---|---|---|---|---|---|---|
| SVM | 0.9393 | 0.9396 | 0.9499 | 0.9472 | 0.9600 | 0.9438 |
| K-neighbors | 0.9302 | 0.9259 | 0.9347 | 0.9254 | 0.9415 | 0.9138 |
| Random forest | 0.9518 | 0.9423 | 0.9537 | 0.9518 | 0.9572 | 0.9614 |

Results with Hand and Back Sensors

| Algorithm | W = 10, S = 3 | W = 10, S = 5 | W = 20, S = 3 | W = 20, S = 5 | W = 30, S = 3 | W = 30, S = 5 |
|---|---|---|---|---|---|---|
| SVM | 0.9457 | 0.9410 | 0.9558 | 0.9502 | 0.9652 | 0.9511 |
| K-neighbors | 0.9378 | 0.9165 | 0.9446 | 0.9376 | 0.9492 | 0.9354 |
| Random forest | 0.9515 | 0.9500 | 0.9614 | 0.9556 | 0.9743 | 0.9578 |

| Left Classifier (Detected Gesture) | Right Classifier (Detected Gesture) | # | Estimated Propulsion Gesture |
|---|---|---|---|
| Forward | Dance | #1 | Left-forward |
| Backward | Dance | #2 | Left-backward |
| Dance | Forward | #3 | Right-forward |
| Dance | Backward | #4 | Right-backward |
| Forward | Forward | #5 | Forward |
| Backward | Backward | #6 | Backward |
| Forward | Backward | #7 | Clockwise |
| Backward | Forward | #8 | Anti-clockwise |
| Dance | Dance | #9 | Any dance gesture (including FPG) |
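The mapping in the table above amounts to a lookup on the pair of labels returned by the left and right classifiers. A minimal sketch follows; the dictionary and function names are hypothetical, and only the label strings come from the table.

```python
# (left classifier label, right classifier label) -> (gesture number, estimated gesture)
ESTIMATED_GESTURE = {
    ("Forward", "Dance"): (1, "Left-forward"),
    ("Backward", "Dance"): (2, "Left-backward"),
    ("Dance", "Forward"): (3, "Right-forward"),
    ("Dance", "Backward"): (4, "Right-backward"),
    ("Forward", "Forward"): (5, "Forward"),
    ("Backward", "Backward"): (6, "Backward"),
    ("Forward", "Backward"): (7, "Clockwise"),
    ("Backward", "Forward"): (8, "Anti-clockwise"),
    ("Dance", "Dance"): (9, "Any dance gesture (including FPG)"),
}


def estimate_propulsion(left_label, right_label):
    """Combine the two per-hand detections into one estimated propulsion gesture."""
    return ESTIMATED_GESTURE[(left_label, right_label)]


print(estimate_propulsion("Forward", "Backward"))
```

Because each classifier outputs one of three labels, the nine entries cover every possible pair, so the lookup never misses for valid inputs.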

Propulsion Starting Time

| Gesture | FSR (ms) | Classifiers (ms) | Error (ms) | MAE (ms) |
|---|---|---|---|---|
| 1 | 4740 | 4680 | −60 | 123.75 |
| 8 | 9600 | 9540 | −60 | |
| 2 | 15,030 | 15,390 | 360 | |
| 7 | 21,270 | 21,330 | 60 | |
| 5 | 27,210 | 27,270 | 60 | |
| 4 | 33,390 | 33,390 | 0 | |
| 6 | 39,120 | 39,330 | 210 | |
| 3 | 46,350 | 46,530 | 180 | |
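The MAE in the table can be reproduced from the listed start times. The sketch below assumes the error is the classifier start time minus the FSR start time, which matches the signs in the Error column; the variable names are hypothetical.

```python
# Start times in ms, in table order (gestures 1, 8, 2, 7, 5, 4, 6, 3).
FSR_START_MS = [4740, 9600, 15030, 21270, 27210, 33390, 39120, 46350]
CLF_START_MS = [4680, 9540, 15390, 21330, 27270, 33390, 39330, 46530]

# Signed error per gesture: classifier time minus FSR time.
errors_ms = [clf - fsr for fsr, clf in zip(FSR_START_MS, CLF_START_MS)]

# Mean absolute error over the eight gestures.
mae_ms = sum(abs(e) for e in errors_ms) / len(errors_ms)

print(errors_ms)
print(mae_ms)
```

The absolute errors sum to 990 ms over eight gestures, which gives the 123.75 ms reported in the table.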

Propulsion Duration Time

| Gesture | FSR (ms) | Classifiers (ms) | Error (%) | Mean Error (%) |
|---|---|---|---|---|
| 1 | 1080 | 810 | 25.00 | 47.84 |
| 8 | 1200 | 540 | 55.00 | |
| 2 | 1260 | 450 | 64.28 | |
| 7 | 1260 | 540 | 57.14 | |
| 5 | 1470 | 990 | 32.65 | |
| 4 | 960 | 540 | 43.75 | |
| 6 | 1320 | 630 | 52.27 | |
| 3 | 1140 | 540 | 52.63 | |
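The duration errors appear to be relative to the FSR duration. The sketch below assumes the formula |FSR − classifier| / FSR × 100, which reproduces the tabulated values; the function and variable names are hypothetical.

```python
# Durations in ms, in table order (gestures 1, 8, 2, 7, 5, 4, 6, 3).
FSR_DURATION_MS = [1080, 1200, 1260, 1260, 1470, 960, 1320, 1140]
CLF_DURATION_MS = [810, 540, 450, 540, 990, 540, 630, 540]


def duration_error_percent(reference_ms, detected_ms):
    """Relative duration error in percent, with the FSR value as reference."""
    return abs(reference_ms - detected_ms) / reference_ms * 100.0


duration_errors = [
    duration_error_percent(fsr, clf)
    for fsr, clf in zip(FSR_DURATION_MS, CLF_DURATION_MS)
]
mean_duration_error = sum(duration_errors) / len(duration_errors)

print([round(e, 2) for e in duration_errors])
print(round(mean_duration_error, 2))
```

Under this assumption the mean comes out at 47.84%, as in the table. The error for gesture 2 rounds to 64.29%, so the tabulated 64.28 looks truncated rather than rounded.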

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Callupe Luna, J.; Martinez Rocha, J.; Monacelli, E.; Foggea, G.; Hirata, Y.; Delaplace, S. WISP, Wearable Inertial Sensor for Online Wheelchair Propulsion Detection. Sensors 2022, 22, 4221. https://doi.org/10.3390/s22114221
