Abstract

The most effective methods of preventing COVID-19 infection include maintaining physical distance and wearing a face mask when in close contact with people in public places. However, densely populated areas have a higher incidence of COVID-19 transmission, driven by people who do not comply with standard operating procedures (SOPs). This paper presents a prototype called PADDIE-C19 (Physical Distancing Device with Edge Computing for COVID-19) that implements physical distancing monitoring on a low-cost edge computing device. PADDIE-C19 provides real-time results and responses, as well as notifications and warnings to anyone who violates the 1-m physical distance rule. In addition, PADDIE-C19 includes temperature screening with an MLX90614 thermometer and uses ultrasonic sensors to limit the number of people on specified premises. The Neural Network Processor (KPU) in the Grove Artificial Intelligence Hardware Attached on Top (AI HAT), an edge computing unit, accelerates the person detection neural network model to achieve up to 18 frames per second (FPS). The results show that person detection with the Grove AI HAT achieves an accuracy of 74.65% and that the mean absolute error between measured and actual physical distance is 8.95 cm. Furthermore, readings from the MLX90614 thermometer differ by less than 0.5 °C from those of the more common Fluke 59 thermometer. Experiments comparing edge and cloud computing also show that the Grove AI HAT achieves an average of 18 FPS for the person detector (kmodel), with an average execution time of 56 ms across different networks, regardless of the network connection type or speed.

1. Introduction

Public health and the global economy are under threat from the COVID-19 pandemic. As of 15 November 2021, there were 251 million confirmed cases and 5 million deaths from the COVID-19 outbreak [1]. Currently, the most effective infection prevention methods are physical distancing, wearing a face mask, and frequent handwashing [2]. The Malaysian government’s early response to the outbreak was to implement the Movement Control Order (MCO) at the national level to restrict the movement and gathering of people throughout the country, including social, cultural, and religious activities [3]. In addition, the government and private sectors have cooperated in body temperature inspection and quarantine enforcement operations in all locations to prevent the spread of COVID-19. However, the critical issue is that strict and effective control measures are difficult to enforce on people, who still need to obtain food outside their homes, work to cover living costs, and socialize with other people and family members. The Ministry of Health Malaysia is concerned that individuals do not take compliance with the standard operating procedures (SOPs) seriously and lack understanding of how COVID-19 is transmitted [4]. In this context, intelligent and automated systems capable of operating 24 h a day to combat pandemic transmission are critical for long-term economic and public health interests.

Edge computing is a concept that has been widely adopted in the healthcare industry to minimize cost, energy, and the workload of medical personnel [5,6]. Many types of Internet of Things (IoT) components have been proposed for physical distancing monitoring via edge computing, including Radio Frequency Identification (RFID), Bluetooth, magnetic field, infrared, camera, and lidar sensors [7,8,9,10,11,12,13]. At present, physical distancing monitoring is based primarily on three technologies: wireless communication, electromagnetics, and computer vision. In [8], Bluetooth-based wireless communication was used to estimate the distance between individuals from the Received Signal Strength Indicator (RSSI). Singapore’s “TraceTogether” application uses Bluetooth technology to locate close contacts of COVID-19 patients [14]. Its limitation is that users must download the application and keep Bluetooth switched on at all times in public places. In addition, the most popular application of deep learning in this context is detecting physical distance with a camera [12]. However, such detection techniques depend on the camera location, computing power, and image processing capabilities.
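To make RSSI-based ranging concrete, the sketch below inverts the widely used log-distance path loss model, RSSI = P₁ₘ − 10·n·log₁₀(d). It is only an illustration under assumed parameters: the 1 m reference power `tx_power_dbm` and the path loss exponent `n` are hypothetical defaults that would need calibration in each environment, and this is not necessarily the specific method of [8]. PADDIE-C19 itself uses a camera rather than RSSI.

```python
def rssi_to_distance(rssi_dbm: float, tx_power_dbm: float = -59.0, n: float = 2.0) -> float:
    """Estimate distance in meters by inverting the log-distance path loss model.

    tx_power_dbm: RSSI expected at 1 m (hypothetical calibration value).
    n: path loss exponent (about 2 in free space; usually higher indoors).
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * n))


def too_close(rssi_dbm: float, threshold_m: float = 1.0,
              tx_power_dbm: float = -59.0, n: float = 2.0) -> bool:
    """Flag a contact whose estimated distance is below the 1 m rule."""
    return rssi_to_distance(rssi_dbm, tx_power_dbm, n) < threshold_m
```

With these assumed defaults, an RSSI of −59 dBm maps to 1 m and −79 dBm to 10 m. Because RSSI typically fluctuates indoors, such estimates are usually averaged over several readings before a decision is made.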

Prevention is better than cure when it comes to breaking the chain of infection and combating the COVID-19 pandemic. The purpose of this study is to build a Physical Distancing Device with Edge Computing for COVID-19 (PADDIE-C19) system that helps prevent infection using the concept of edge computing. The three main functions of PADDIE-C19 are: (i) to identify and monitor physical distances using computer vision, (ii) to check forehead temperature, and (iii) to limit the number of people in each room or enclosed area through a counter system that detects people entering or leaving the premises. The edge computing unit collects image and temperature data from sensors and processes them at the edge without relying on an internet connection. Furthermore, the edge computing system provides real-time results and responses, as well as notifications and warnings to anyone who violates the 1-m physical distance rule or has a forehead temperature greater than 37.2 °C.

The following are the main contributions of this paper:

- A PADDIE-C19 prototype based on the Raspberry Pi and the Grove Artificial Intelligence Hardware Attached on Top (Grove AI HAT), with edge computing capability to recognize and classify humans through image processing. The performance of the person detector on the Grove AI HAT, Raspberry Pi 4, and Google Colab platforms was evaluated on different mobile networks in terms of frames per second (FPS) and execution time, to compare edge and cloud computing approaches.

- A physical distance monitoring algorithm and implementation technique that runs on low-energy edge computing devices and provides physical distancing guidance to the public.

- An accurate sensor platform design for forehead temperature measurement and person counting to manage the flow of visitors in public spaces.

The remainder of this paper is organized as follows. Section 2 presents the problem background, and Section 3 discusses related works. Section 4 describes the PADDIE-C19 prototype, its physical distance monitoring algorithm, and its edge computing implementation, together with the dataset used for training and testing. Section 5 presents a detailed discussion and analysis of the FPS comparison between edge and cloud, execution time in different networks, the performance of the person detector, the distance test, the comparison between the MLX90614 and Fluke 59 thermometers, and the person counter. Section 6 provides concluding remarks and potential future research plans.

2. Problem Background

Many individuals are unaware of, or lack accurate knowledge about, the seriousness of COVID-19 for individual health and its impact on society. In a cross-sectional survey measuring the awareness, views, and behaviours of the Malaysian public toward COVID-19, only 51.2% of participants reported wearing face masks while out in public [15]. Inconsistent instructions and guidelines from the authorities also create panic and emotional stress, further reducing society’s adherence to SOPs for pandemic prevention and physical distancing. Aside from psychological reasons, cultural, economic, and geographical factors can complicate the enforcement of movement control orders against public violations. For example, in less than a week in December 2020, the police in Selangor, Malaysia, issued 606 fines to people who failed to comply with the SOPs [16].

COVID-19 has changed people’s lives since the MCO was implemented [15]. The government cannot permanently restrict people’s movement because doing so significantly affects people’s way of life and the country’s economy. Although some freedom of movement is allowed under the new norms, the public often fails to adhere to SOPs such as physical distancing, wearing a face mask, and registering when entering premises. In addition, the MCO has placed high economic pressure on the low-income group, who require employment income to meet basic needs such as food. Furthermore, working from home is not feasible in the manufacturing and service sectors. Moreover, cancelling large-scale gatherings such as religious activities and weddings can have serious cultural consequences.

To fight the spread of COVID-19, the Malaysian government has created an application, “MySejahtera”, that records the entry of visitors into a facility [14]. However, a small percentage of citizens, particularly the elderly, do not own a smartphone; because the application relies on QR codes as user input, it is unusable for them. The application’s functionality is also limited to areas with consistent internet coverage. Furthermore, the government and employers face a shortage of officers and staff responsible for ensuring that people in public places are physically separated by at least one meter at all times.

This article aims to create a COVID-19 monitoring system based on the concepts of edge computing, computer vision, and the Internet of Things. An automated monitoring system called PADDIE-C19 has been designed to maintain the recommended safe physical distance between people in crowded factories, schools, restaurants, and ceremonies, limiting the spread of COVID-19. A person detection model is trained using transfer learning and used to measure physical distances from camera images. Meanwhile, an infrared thermometer measures each individual’s forehead temperature at the entrance to identify people with fever, a symptom of COVID-19 infection. Finally, the number of people in any room or enclosed location is limited by a visitor counting system based on ultrasonic sensors.

3. Related Works

The COVID-19 pandemic has affected hospitals worldwide, delaying many non-emergency services and treatments, such as those for cancer, hypertension, and diabetes [17]. This is due to a shortage of healthcare workers, many of whom are needed to handle COVID-19 patients. Medical equipment with edge computing capability for patient monitoring can help reduce the load on medical systems. Several studies in Table 1 demonstrate the role of edge computing in healthcare and in the COVID-19 pandemic.

Table 1.

Studies on edge computing in the area of healthcare.

According to current studies on edge computing, physical distancing solutions fall into three types of technology: wireless communication, electromagnetics, and computer vision. Accordingly, sensors such as RFID, Bluetooth, magnetic field sensors, infrared sensors, cameras, and lidars are used in existing systems for people detection and physical distancing detection. The strengths and weaknesses of each technology are summarized in Table 2.

Table 2.

Comparison of existing physical distancing solutions.

Object detection based on deep neural networks is the basis of computer vision algorithms that detect people in an image or video frame. Computer vision with RGB cameras, infrared cameras, and lidar sensors is widely used in physical distancing monitoring. Table 3 lists examples of computer vision approaches to physical distancing monitoring.

Table 3.

Physical distancing solutions based on computer vision.

4. Methodology

4.1. PADDIE-C19 System’s Flow Chart

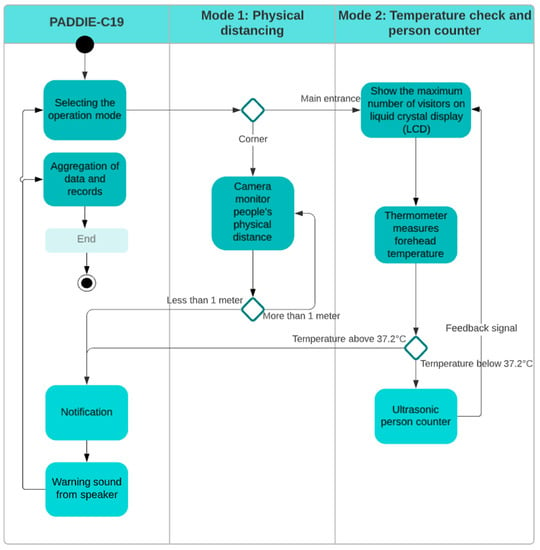

Figure 1 illustrates the flow of PADDIE-C19 operations at the edge, where computation and data storage are located close to the primary user. PADDIE-C19 operates in two modes: (i) physical distancing monitoring, and (ii) temperature measurement with a person counter. PADDIE-C19 operates in the first mode when installed at a corner with a view of the monitored area. In this mode, the Grove AI HAT, equipped with an RGB camera, monitors physical distancing compliance, and a loudspeaker delivers a warning sound when individuals fail to maintain a physical distance of at least one meter. In the second mode, the infrared thermometer takes each individual’s forehead temperature without contact before they are allowed to enter the building or enclosed area. Simultaneously, the ultrasonic sensors detect anyone passing through the main door. The Raspberry Pi records and processes the temperature and person counter data, and both measured values are then displayed on the LCD, along with the maximum number of people permitted in the room or area.

Figure 1.

The proposed PADDIE-C19 system’s flow chart.

4.2. PADDIE-C19 Block Diagram

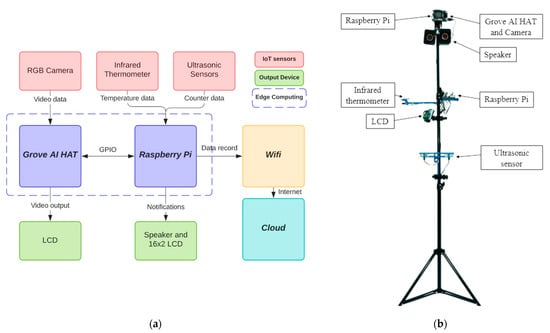

The PADDIE-C19 prototype is illustrated in Figure 2b. A 2-megapixel OV2640 RGB camera provides video data to the Grove AI HAT, the edge computing unit for people tracking and physical distance monitoring. An LCD with a resolution of 320 × 240 displays the detection results while the program runs. The OV2640 camera and LCD connect to the Grove AI HAT via a 24-pin connector using a serial communication protocol. Two ultrasonic sensors and one infrared sensor are connected to the Raspberry Pi single-board computer. A speaker is connected to the analog audio output of the Raspberry Pi to provide a warning sound when individuals do not comply with the physical distance rules. The Grove AI HAT and Raspberry Pi can be powered by a 5 V/2 A power adapter via a USB connector. The Raspberry Pi connects to the internet, and thus to the cloud, via Wi-Fi, and can be controlled remotely with the VNC Connect software. PADDIE-C19 is designed for installation at indoor public locations such as shops, offices, and factories. All connections are shown in Figure 2a.

Figure 2.

(a) Block diagram of PADDIE-C19 system and (b) PADDIE-C19 system prototype.

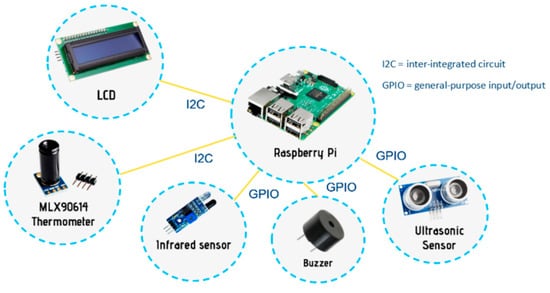

Figure 3 shows the setup of the Raspberry Pi with an MLX90614 infrared thermometer, which measures forehead temperature whenever a user places their head in front of it. An infrared sensor measures the distance between the forehead and the thermometer. This is necessary because each type of thermometer has a specific distance-to-spot ratio; for the MLX90614, temperature is therefore taken only at a distance of 3 to 5 cm to obtain an accurate forehead reading. The thermometer connects to the Raspberry Pi via the I2C protocol, and a buzzer emits a signal sound when the temperature has been measured successfully. Furthermore, two ultrasonic sensors detect people moving in and out of the premises from a distance. If the first sensor detects a person before the second, the recorded number of people is increased by one; if the second sensor detects the person first, the number is reduced by one. Algorithm 1 describes the physical distancing monitoring system, which consists of two functions.

Algorithm 1. Physical Distancing Monitoring.

Input: V_N, a video V containing N frames of size 160 × 160 or 224 × 224, with 0, 1, or 2 people in view (0P, 1P, 2P)
Output: D, the vector of safe and unsafe distances between pairs of detected people
Initialize: Distance_Threshold = 100 cm; Temp_Threshold = 37.2 °C; Visitor_Count = 0; Max_Visitor = 15

Function 1: Physical distancing
  for each frame in V_N:  // person detection with the human detection framework
    for each pair of detected people:  // only when more than one person is detected
      d = √((x₂ − x₁)² + (y₂ − y₁)²)  // pixel distance between bounding-box centroids
      D = k × d  // k = actual distance (cm) / pixel distance
      if D ≤ Distance_Threshold:  // closer than 1 m
        send notification  // warning sound from the speaker
      end if
    end for
  end for
end Function 1

Function 2: Temperature check and person counter
  while Visitor_Count ≤ Max_Visitor:
    show Visitor_Count and Max_Visitor on the LCD
    if the proximity sensor detects an object at 3 cm:
      measure forehead temperature T
      if T ≤ Temp_Threshold: pass
      else: display “FEVER > NO ENTRY”
      end if
    end if
  end while
end Function 2

Figure 3.

Connection of the Raspberry Pi to sensors and output devices.
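Function 2 of Algorithm 1 can be sketched in Python as a single decision per visitor. This is a minimal sketch, not the device firmware: the helper name `screen_visitor`, its inputs (a proximity reading in centimeters and a forehead temperature in °C), and the `"NO ENTRY"` message for a full premises are hypothetical. The thresholds are those of Algorithm 1, and the fever rule follows the text (entry is refused above 37.2 °C).

```python
from typing import Optional

# Parameters taken from Algorithm 1 and Section 5.5.
TEMP_THRESHOLD_C = 37.2     # fever threshold
MAX_VISITOR = 15            # maximum number of visitors on the premises
TRIGGER_DISTANCE_CM = 3.0   # proximity at which a forehead reading is taken


def screen_visitor(visitor_count: int, proximity_cm: float,
                   forehead_temp_c: float) -> Optional[str]:
    """Return the LCD message for one visitor, or None if no reading is taken yet.

    Hypothetical helper mirroring Function 2 of Algorithm 1.
    """
    if visitor_count > MAX_VISITOR:
        return "NO ENTRY"               # premises full: count exceeds the limit
    if proximity_cm > TRIGGER_DISTANCE_CM:
        return None                     # forehead not yet close enough to measure
    if forehead_temp_c > TEMP_THRESHOLD_C:
        return "FEVER > NO ENTRY"       # message shown in Figure 10b
    return "PASS"
```

Returning `None` while the forehead is still too far away lets a polling loop keep reading the proximity sensor until a measurement is possible.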

4.3. Physical Distancing Implementation Steps

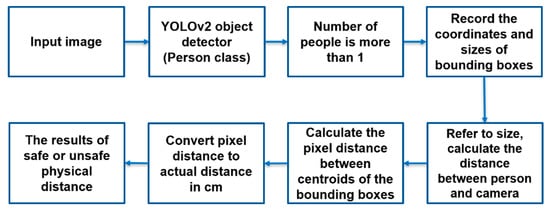

The Grove AI HAT is based on the Sipeed Maix M1 AI module and the Kendryte K210 processor, which is capable of running person detection models for physical distancing applications. The general-purpose neural network processor (KPU) inside the Grove AI HAT accelerates convolutional neural network (CNN) computations with minimal energy. The K210 KPU only runs models in the kmodel format; the kmodel used here is based on the YOLOv2 object detector. The basic steps of person detection and physical distance measurement on the Grove AI HAT are shown in Figure 4.

Figure 4.

Physical distancing flow chart.

In this paper, the person detector, based on the YOLOv2 model [24] with a MobileNet backbone, was trained using transfer learning with the TensorFlow framework on an Ubuntu 18.04 machine equipped with an Nvidia GTX 1060. A total of 5632 images were collected from Kaggle, CUHK Person, and Google Images; details are summarized in Table 4. All images were then converted to JPEG format at 224 × 224 resolution and manually labelled with LabelImg, drawing a bounding box around each object of interest in the “Person” class. The original MobileNet weights file was loaded onto the Ubuntu machine, along with the processed dataset, and the model was trained until the validation loss stopped improving. Once training was complete, a TensorFlow Lite (tflite) file was generated along with the final weights file. The TensorFlow Lite file was then converted to the kmodel format with the NNCase converter tool so that the trained network could run on the KPU of the Grove AI HAT for person detection.
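The labelling step can be illustrated by reading one annotation and rescaling its boxes to the 224 × 224 training resolution. The sketch assumes the annotations were saved in LabelImg’s Pascal VOC XML format and that images were resized by stretching (not cropped or letterboxed); the function name `load_person_boxes` is hypothetical, and this is not the authors’ training script.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

TARGET_SIZE = 224  # training resolution reported for the person detector

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


def load_person_boxes(voc_xml: str, target: int = TARGET_SIZE) -> List[Box]:
    """Read a Pascal VOC annotation and rescale its 'person' boxes to target x target.

    Assumes the image is stretched to the target size, so x and y scale independently.
    """
    root = ET.fromstring(voc_xml)
    width = float(root.findtext("size/width"))
    height = float(root.findtext("size/height"))
    sx, sy = target / width, target / height
    boxes: List[Box] = []
    for obj in root.iter("object"):
        if obj.findtext("name", "").strip().lower() != "person":
            continue  # only the single "Person" class is used for training
        bb = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(bb.findtext(t)) for t in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((xmin * sx, ymin * sy, xmax * sx, ymax * sy))
    return boxes
```

Matching the class name case-insensitively tolerates both “Person” and “person” labels across annotation batches.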

Table 4.

Datasets description.

The YOLOv2-based person detection model was used to detect people in images captured by the OV2640 camera. The KPU of the Grove AI HAT then returns the size and coordinates of the bounding box of each detected person. The distance between two detected persons was calculated from the centroids of their bounding boxes, and the physical distance between individuals was estimated from the pixel distance on the LCD. Equation (1) gives the pixel distance d between the centroids (x₁, y₁) and (x₂, y₂) using Pythagoras’ theorem, d = √((x₂ − x₁)² + (y₂ − y₁)²). If a detected person is less than one meter from another person, their bounding box turns red.
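Equation (1) and the red-box rule can be sketched as follows. The sketch assumes each detection is given as `(x, y, w, h)`, the top-left corner plus width and height in pixels, and takes the constant `k` of Equation (2) as an input; the helper names are hypothetical. Like Algorithm 1, it treats a pair at or below 100 cm as unsafe.

```python
import math
from itertools import combinations
from typing import List, Tuple

BBox = Tuple[int, int, int, int]  # (x, y, w, h): assumed top-left corner and size in pixels


def centroid(box: BBox) -> Tuple[float, float]:
    """Center point of a bounding box."""
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def pixel_distance(a: BBox, b: BBox) -> float:
    """Equation (1): Euclidean distance between two bounding-box centroids."""
    (x1, y1), (x2, y2) = centroid(a), centroid(b)
    return math.hypot(x2 - x1, y2 - y1)


def red_boxes(boxes: List[BBox], k_cm_per_px: float,
              threshold_cm: float = 100.0) -> List[bool]:
    """Return one flag per box: True if that person is within threshold_cm of anyone."""
    flags = [False] * len(boxes)
    for i, j in combinations(range(len(boxes)), 2):
        if k_cm_per_px * pixel_distance(boxes[i], boxes[j]) <= threshold_cm:
            flags[i] = flags[j] = True
    return flags
```

Checking every pair costs O(n²) comparisons, which is negligible for the handful of people visible in a 224 × 224 frame.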

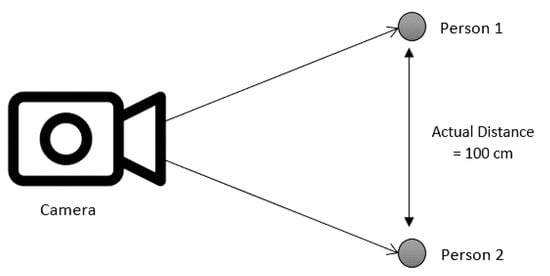

Following [22] and Figure 5, the actual distance in centimeters is calculated from the number of pixels spanned by 1 m, using the proportionality constant k in Equation (2), where k is the actual distance (cm) divided by the pixel distance. Although the pixel distance is directly proportional to the actual distance, the value of k depends on the distance between the camera and the people, so this method requires calibration for a fixed camera distance. To improve the accuracy of distance determination, three constants were determined by placing two people 100 cm apart and testing at camera distances of 200 cm, 300 cm, and 400 cm. Since the camera is installed at a fixed location to monitor a small area, the distance between the camera and the people is considered constant. Multiple PADDIE-C19 devices can be deployed in different places to cover a larger crowd.
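The calibration can be written as a small worked example. The pixel spans in `calibration_px` are made-up numbers for illustration only (the measured constants are those in Table 9), and `k_for_installation`, which picks the constant calibrated closest to the installed camera distance, is a hypothetical helper.

```python
REFERENCE_CM = 100.0  # two people stood 100 cm apart during calibration


def calibrate_k(pixel_distance_at_reference: float) -> float:
    """Equation (2): k = actual distance (cm) / pixel distance."""
    return REFERENCE_CM / pixel_distance_at_reference


def to_cm(pixel_distance: float, k: float) -> float:
    """Convert a pixel distance d into centimeters: D = k * d."""
    return k * pixel_distance


# Hypothetical pixel spans of the 100 cm reference at each camera distance (cm).
calibration_px = {200: 80.0, 300: 50.0, 400: 40.0}
k_by_camera_cm = {cam: calibrate_k(px) for cam, px in calibration_px.items()}


def k_for_installation(camera_distance_cm: float) -> float:
    """Pick the constant calibrated at the camera distance closest to the installation."""
    nearest = min(k_by_camera_cm, key=lambda cam: abs(cam - camera_distance_cm))
    return k_by_camera_cm[nearest]
```

Because 1 m spans fewer pixels as the camera moves away, k grows with camera distance, which is why a single constant is only valid for one mounting position.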

Figure 5.

Determination of actual distance from the pixel distance at a fixed camera distance.

4.4. System Evaluation Metrics

In this study, execution time, in seconds, is used as a metric of edge computing performance in real-time implementations. Execution times over Wi-Fi, 4G, 3G, and 2G networks were compared across the Raspberry Pi 4, Grove AI HAT, and Google Colab platforms. In addition, the speed of the object detection model is measured by the FPS of the output video on the LCD. To examine the benefits of deploying object detection and recognition with edge computing rather than cloud computing, a YOLOv4 model [25] was trained in Google Colab on the same dataset used for the Grove AI HAT. The YOLOv4 model was then run on the Raspberry Pi 4 and Google Colab platforms to compare FPS and execution time with the Grove AI HAT running the kmodel; the models are compared in Table 5. The confusion matrix is used to compute the accuracy of the trained person detection model. Finally, the accuracy of the physical distance algorithm is tested by having people stand in front of the camera and comparing the distance measured from the pixels with the actual distance measured with a tape measure.
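Both speed metrics can be collected with a simple timing loop. In this sketch, `step` is a hypothetical stand-in for one iteration of the detection script (capture, detect, display), and FPS is taken as the reciprocal of the mean time per iteration, which assumes one frame is processed per iteration. Under that assumption, a 56 ms iteration corresponds to about 1/0.056 ≈ 17.9 FPS.

```python
import time
from typing import Callable, List, Tuple


def benchmark(step: Callable[[], None], iterations: int = 50) -> Tuple[float, float]:
    """Run `step` repeatedly and return (mean seconds per iteration, equivalent FPS)."""
    durations: List[float] = []
    for _ in range(iterations):
        start = time.perf_counter()  # monotonic, high-resolution clock
        step()
        durations.append(time.perf_counter() - start)
    mean_s = sum(durations) / len(durations)
    fps = 1.0 / mean_s if mean_s > 0 else float("inf")
    return mean_s, fps
```

Averaging over many iterations smooths out one-off delays such as the first call, which often includes model loading or cache warm-up.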

Table 5.

Comparison of detection models between edge and cloud platforms.

5. Results and Discussions

5.1. FPS Comparison between Edge and Cloud

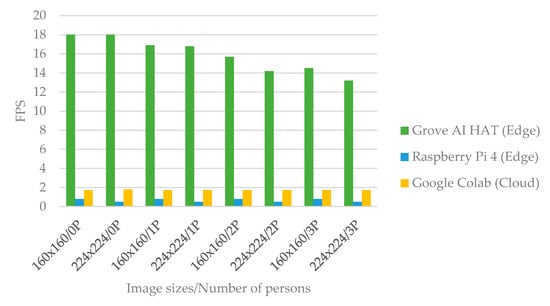

The object detection model is evaluated in terms of FPS to determine how fast video is processed on the Grove AI HAT to detect the object of interest. The central processing unit (CPU) contributes significantly to the frame rate. To examine the differences between edge computing and cloud computing, a YOLOv2-based person detection model was run on the Grove AI HAT, and a YOLOv4-based person detection model was run on the Raspberry Pi and Google Colab. Because of hardware and software limitations, the YOLOv4 model could not be run on the Grove AI HAT, whose KPU only runs kmodel detection models. Figure 6 compares the FPS of the three platforms for different input image sizes and numbers of people. The Grove AI HAT achieves a maximum of 18 FPS on the LCD, but its frame rate degrades as the number of people standing in front of the camera increases. For the same input image size, Google Colab and the Raspberry Pi cannot exceed 2 FPS. Although Google Colab is GPU-enabled, GPU detection resulted in a considerable live-streaming delay due to the limited bandwidth of the chosen networks. Overall, the Grove AI HAT at the edge produces smoother video than both the Raspberry Pi at the edge and Google Colab in the cloud.

Figure 6.

FPS comparison of three platforms based on image size and the number of people.

5.2. Execution Time in Different Networks

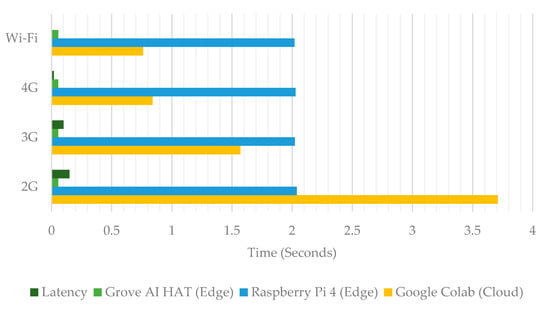

The execution time for a Python script to complete one iteration was evaluated under four network conditions, whose internet profiles are listed in Table 6. Chrome DevTools was used to define the Wi-Fi, 4G, 3G, and 2G network profiles and to monitor and control network activity. Figure 7 shows that the time spent by Google Colab increases as the internet speed decreases from 4G to 2G. In contrast, the execution time of the Python scripts on the edge computing devices remains the same, because their video processing does not rely on the internet. Google Colab, by comparison, runs the object detection model in the cloud, so its performance and stability depend heavily on the speed and stability of the network: a large amount of bandwidth is required to upload video data before person detection can be carried out in the cloud.
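The dependence on bandwidth can be made concrete with a back-of-the-envelope model of one cloud iteration: upload time (frame size divided by uplink bandwidth) plus network round-trip time plus inference time. All inputs are assumptions chosen for illustration, not the Chrome DevTools profiles of Table 6; on the edge device the two network terms vanish and only the inference time remains.

```python
def cloud_iteration_s(frame_bytes: int, uplink_kbps: float,
                      rtt_ms: float, inference_s: float) -> float:
    """Rough time for one cloud-processed frame: upload + round trip + inference.

    Returning the (small) detection result is folded into the round-trip term.
    """
    upload_s = frame_bytes * 8 / (uplink_kbps * 1000)  # bits / (bits per second)
    return upload_s + rtt_ms / 1000 + inference_s
```

For a hypothetical 20 kB frame and 50 ms of inference, this gives about 0.09 s on a 10 000 kbit/s uplink with a 20 ms round trip, but about 0.99 s on a 250 kbit/s uplink with a 300 ms round trip, so the network terms quickly dominate on slow links.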

Table 6.

Internet profiles, based on several network technologies.

Figure 7.

Experimental program execution time in different networks.

5.3. Performance of Person Detector

The confusion matrix was used to evaluate the accuracy of the person detection model on the Grove AI HAT and Google Colab. The detection model was trained to predict the “person” class and draw a bounding box around each detected person on the LCD. According to Tables 7 and 8, Google Colab achieves 95.45% accuracy in person classification, whereas the Grove AI HAT achieves only 74.65%. The Grove AI HAT does not detect people at a far distance because of memory constraints: its KPU only accepts a 224 × 224 input resolution from the camera. Moreover, the kmodel neural network file loaded into the Grove AI HAT is 1.8 MB, much smaller than the 202 MB weights file used for YOLOv4. Because of their low hardware requirements, small neural networks are ideal for edge devices, but they tend to produce lower accuracy.
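For reference, the standard metrics derived from a detection confusion matrix are sketched below. The counts used to exercise it are invented; how the true-negative cell is defined for a single-class detector is an assumption here (it is often zero), so these formulas are not necessarily the exact computation behind Tables 7 and 8.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int      # person present and detected
    fp: int      # detection where there is no person
    fn: int      # person present but missed
    tn: int = 0  # no person and no detection (often 0 for object detection)

    def accuracy(self) -> float:
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.tn) / total if total else 0.0

    def precision(self) -> float:
        denom = self.tp + self.fp
        return self.tp / denom if denom else 0.0

    def recall(self) -> float:
        denom = self.tp + self.fn
        return self.tp / denom if denom else 0.0
```

Reporting precision and recall alongside accuracy separates false alarms from missed people, which matter differently for a distancing warning system.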

Table 7.

Accuracy of the person detector with Grove AI HAT.

Table 8.

Accuracy of the person detector with Google Colab.

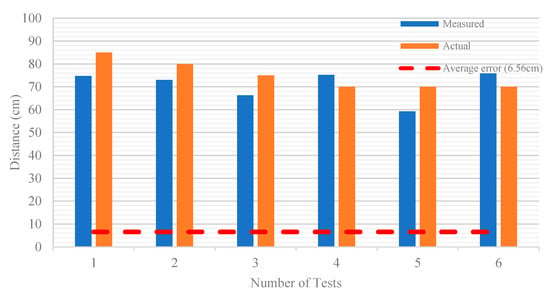

5.4. Distance Test

The size and coordinates of the bounding box generated by the KPU of the Grove AI HAT for each detected person can be used to calculate the physical distance between people. The distance between two people is measured on the Grove AI HAT by first calculating the pixel distance d between them in the image using Equation (1) and then converting it into centimeters using the constant k in Equation (2). Figure 8 shows an example of physical distance measured from the LCD, and Table 9 lists the constant k values for each camera distance. Figure 9 compares the distances measured from the pixels with the actual distances measured with a measuring tape. The average error between measured and actual values is shown as a red dotted line, and the mean absolute error (MAE) between them is 8.95 cm. However, a disadvantage of this method is that the body size and height of the detected people affect the accuracy of the distance measurement between them. Additionally, Grove AI HAT operations such as person detection and physical distance monitoring can only be performed from a frontal view, and the 224 × 224 input resolution is insufficient for high-quality person detection at long range. The main limitation is that the KPU of the Grove AI HAT has limited memory, making it incapable of handling camera input resolutions greater than 224 × 224.
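The two error summaries mentioned above can be computed as follows: `mean_error` is the signed average, which shows whether distances are systematically over- or underestimated, and `mean_absolute_error` is the MAE. This is a generic sketch; the sample distances used to exercise it are hypothetical, not the measurements in Figure 9.

```python
from typing import Sequence


def _check(measured: Sequence[float], actual: Sequence[float]) -> None:
    if len(measured) != len(actual) or len(measured) == 0:
        raise ValueError("need two non-empty sequences of equal length")


def mean_error(measured: Sequence[float], actual: Sequence[float]) -> float:
    """Signed average of (measured - actual); positive means overestimation."""
    _check(measured, actual)
    return sum(m - a for m, a in zip(measured, actual)) / len(measured)


def mean_absolute_error(measured: Sequence[float], actual: Sequence[float]) -> float:
    """MAE: average magnitude of the error, in the same unit as the inputs (cm)."""
    _check(measured, actual)
    return sum(abs(m - a) for m, a in zip(measured, actual)) / len(measured)
```

The signed mean can be close to zero even when individual errors are large, because over- and underestimates cancel; the MAE does not hide this.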

Figure 8.

Pixel distance measured from the LCD of Grove AI HAT.

Table 9.

Constant values determined at different camera distances.

Figure 9.

Measured versus actual distance of two people and the average error.

5.5. Comparison between MLX90614 and Fluke 59 Thermometer

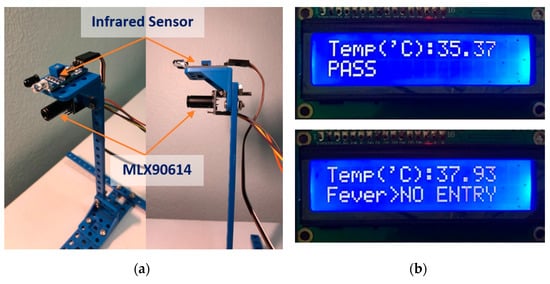

To test the performance of the MLX90614 infrared thermometer, it was compared with the manual Fluke 59 thermometer, which is widely available to consumers. The forehead temperature measurement prototype consists of an infrared sensor for obstacle detection and an MLX90614 thermometer (Figure 10a). When the infrared sensor detects an object within 3 cm of the MLX90614 thermometer, the thermometer collects temperature data. The MLX90614 converts the infrared radiation collected from the forehead into an electrical signal, which the Raspberry Pi processes before displaying the temperature value on the LCD. When a person’s temperature is above 37.2 °C, the message “FEVER > NO ENTRY” appears on the LCD immediately, as shown in Figure 10b.

Figure 10.

(a) Forehead MLX90614 thermometer prototype and (b) LCD displays either pass or no entry based on detected temperature with a limit of 37 °C.

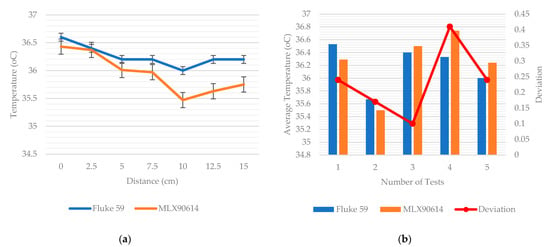

The graph in Figure 11a shows the forehead temperatures obtained with the Fluke 59 and MLX90614 at distances from 0 cm to 15 cm. The temperature reading of the MLX90614 thermometer gradually decreases beyond 5 cm because of its lower distance-to-spot ratio compared with the Fluke 59 thermometer. According to the product specifications, the MLX90614 has a distance-to-spot ratio of 1.25, whereas the Fluke 59 has a ratio of 8. In addition, the standard deviation of the MLX90614 readings is 0.3346, higher than the 0.1761 of the Fluke 59. Therefore, MLX90614 readings become less accurate when the measuring distance exceeds 5 cm.
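A short calculation illustrates why distance matters. For an infrared thermometer, the diameter of the measured spot is roughly the measuring distance divided by the distance-to-spot ratio, so with the ratios quoted above the MLX90614’s spot grows much faster than the Fluke 59’s. The sketch only illustrates this geometry; attributing the drop in readings to cooler surroundings entering a larger spot is a plausible interpretation, not a result measured here.

```python
# Distance-to-spot ratios quoted from the product specifications above.
DS_RATIO = {"MLX90614": 1.25, "Fluke 59": 8.0}


def spot_diameter_cm(distance_cm: float, ds_ratio: float) -> float:
    """Approximate diameter of the measured area: distance / (distance-to-spot ratio)."""
    return distance_cm / ds_ratio


for d in (3, 5, 15):
    print(d, {name: spot_diameter_cm(d, r) for name, r in DS_RATIO.items()})
```

At 3 cm the MLX90614 spot is about 2.4 cm across, at 5 cm about 4 cm, and at 15 cm about 12 cm, whereas the Fluke 59 spot at 15 cm is under 2 cm.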

Figure 11.

(a) The temperature of the forehead from various distances using Fluke 59 and MLX90614; (b) average temperature with Fluke 59 and MLX90614 fixed at a distance of 3 cm.

However, the MLX90614 performs well when measuring temperature from a distance of 3 cm. To obtain the deviation between the two types of temperature sensors, measurements were repeated three times on each of five people. Figure 11b shows the forehead temperatures obtained at a distance of 3 cm with the Fluke 59 and MLX90614 thermometers; the difference between the two thermometers was only 0.1 to 0.4 °C.

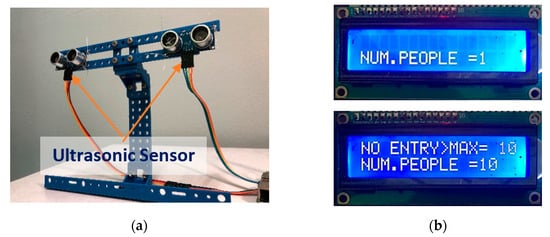

5.6. Person Counter

The person counter prototype, shown in Figure 12a, consists of two ultrasonic sensors, which add confidence to the readings. It is designed to be installed at the main entrance of the premises and counts people moving in both directions. However, the current system can only count one person passing the sensors at a time. The refresh rate of the two ultrasonic sensors in detecting passing people is 9.8 Hz. If someone passes from left to right, the count on the LCD increases by one; if someone passes from right to left, it decreases by one. If the count exceeds the maximum limit, the LCD indicates no entry, as shown in Figure 12b. The maximum limit can be reconfigured according to the requirements of the premises.
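The bidirectional counting rule can be sketched as a small state machine that remembers which sensor fired first. This sketch assumes sensor `"L"` is on the left (outside) and `"R"` on the right (inside), that one person passes at a time as in the current prototype, and that the count is clamped at zero; the class name and these conventions are hypothetical. Entry is refused once the count exceeds the configurable limit, here defaulting to the 10 people shown in Figure 12b.

```python
from typing import Optional


class PersonCounter:
    """Count people with two sensors; direction is given by which sensor fires first."""

    def __init__(self, max_people: int = 10) -> None:
        self.count = 0
        self.max_people = max_people        # reconfigurable per premises
        self._first: Optional[str] = None   # sensor that fired first in this pass

    def on_trigger(self, sensor: str) -> None:
        if sensor not in ("L", "R"):
            raise ValueError("sensor must be 'L' or 'R'")
        if self._first is None:
            self._first = sensor            # a pass has started
        elif sensor != self._first:
            # Second sensor fired: left-to-right is an entry, right-to-left an exit.
            self.count += 1 if self._first == "L" else -1
            self.count = max(self.count, 0)  # never report negative occupancy
            self._first = None
        # Repeated echoes from the same sensor within one pass are ignored.

    def entry_allowed(self) -> bool:
        """False once the count exceeds the maximum limit (LCD shows no entry)."""
        return self.count <= self.max_people
```

A real deployment would also need a timeout that resets the pending pass if a person turns back after triggering only one sensor; that is omitted here for brevity.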

Figure 12.

(a) Person counter prototype and (b) LCD showing a count of 1, and the no-entry indication displayed when there are more than 10 individuals.

5.7. Summary of PADDIE-C19 Performance

To achieve the study’s objective, the PADDIE-C19 prototype has been developed to the alpha stage, i.e., early design testing. Its features, the evaluation metrics employed, and the experimental results are summarized in Table 10. Poor detection accuracy has been identified as an issue, and the design will be refined with upgraded hardware to meet the needs of real-world applications. The systematic error of 0.5 °C accounts for the balance between the two temperatures captured (forehead and environment).

Table 10.

Summary of the performance of various devices in PADDIE-C19.

6. Conclusions

This study proposed an edge computing prototype that monitors physical distancing, measures forehead temperature, and keeps track of the person count to manage the flow of visitors in public spaces. The PADDIE-C19 prototype has a small and portable design for temperature screening and person counting applications. The Grove AI HAT edge computing device in PADDIE-C19 was shown to achieve a higher frame rate than the cloud-based Google Colab and the Raspberry Pi, but its person detection accuracy is relatively low. While the Grove AI HAT can perform all computation at the edge, the main advantage of the Raspberry Pi-based system is that it can be controlled remotely over the internet using VNC software. All hardware requires only a 5 V power supply, which offers an energy-saving benefit compared with other commercial devices. Future work could address PADDIE-C19’s shortcomings, such as frontal view-only detection, by replacing the Grove AI HAT with edge computing devices that have more memory and higher computing capability, such as the Nvidia Jetson Nano and LattePanda Alpha. In this way, the accuracy of the person detector can be increased by running larger neural network models on the edge computing device. The second recommendation is to install higher-resolution cameras to improve the accuracy of person detection at long distances. Finally, the PADDIE-C19 system can be improved by including a global positioning system (GPS) for outdoor use, or Wi-Fi/RFID/Bluetooth-based localization for indoor use, to determine the exact location of each PADDIE-C19 system across potential deployment sites for public health monitoring in the new normal.

Author Contributions

Conceptualization and methodology, C.H.L., M.S.A., R.N., N.F.A. and A.A.-S.; Execution of experiments, C.H.L. and R.N.; Data analysis and investigation, C.H.L., M.S.A., R.N., N.F.A. and A.A.-S.; Writing—review and editing, C.H.L., M.S.A., R.N., N.F.A. and A.A.-S. All authors have read and agreed to the published version of the manuscript.

Funding

We acknowledge financial support from the Air Force Office of Scientific Research (AFOSR) under grant number FA2386-20-1-4045 (UKM reference: KK-2020-007) for research-related expenses and the open access fee for this paper.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in our GitHub repository (https://github.com/Ya-abba/COVID-19-Physical-Distancing-Monitoring-Device-with-the-Edge-Computing.git) and on Google Drive (https://drive.google.com/file/d/1zcESizKClTiKIyl64w7D0SbIr3_7vf2g/view?usp=sharing) (accessed on 7 October 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Law, T. 2 Million People Have Died From COVID-19 Worldwide. Time, 15 January 2021.

- WHO. Coronavirus Disease (COVID-19) Advice for the Public; WHO: Geneva, Switzerland, 2021.

- Shah, A.U.M.; Safri, S.N.A.; Thevadas, R.; Noordin, N.K.; Rahman, A.A.; Sekawi, Z.; Ideris, A.; Sultan, M.T.H. COVID-19 outbreak in Malaysia: Actions taken by the Malaysian government. Int. J. Infect. Dis. 2020, 97, 108–116.

- Abdali, T.-A.N.; Hassan, R.; Aman, A.H.M. A new feature in mysejahtera application to monitoring the spread of COVID-19 using fog computing. In Proceedings of the 2021 3rd International Cyber Resilience Conference (CRC), Virtual Conference, 29–31 January 2021; IEEE: New York, NY, USA, 2021; pp. 1–4.

- Albayati, A.; Abdullah, N.F.; Abu-Samah, A.; Mutlag, A.H.; Nordin, R. A Serverless Advanced Metering Infrastructure Based on Fog-Edge Computing for a Smart Grid: A Comparison Study for Energy Sector in Iraq. Energies 2020, 13, 5460.

- Abdali, T.-A.N.; Hassan, R.; Aman, A.H.M.; Nguyen, Q.N. Fog Computing Advancement: Concept, Architecture, Applications, Advantages, and Open Issues. IEEE Access 2021, 9, 75961–75980.

- Garg, L.; Chukwu, E.; Nasser, N.; Chakraborty, C.; Garg, G. Anonymity Preserving IoT-Based COVID-19 and Other Infectious Disease Contact Tracing Model. IEEE Access 2020, 8, 159402–159414.

- Ng, P.C.; Spachos, P.; Plataniotis, K.N. COVID-19 and Your Smartphone: BLE-based Smart Contact Tracing. IEEE Syst. J. 2021, 15, 5367–5378.

- Bian, S.; Zhou, B.; Lukowicz, P. Social distance monitor with a wearable magnetic field proximity sensor. Sensors 2020, 20, 5101.

- Nadikattu, R.R.; Mohammad, S.M.; Whig, P. Novel economical social distancing smart device for covid19. Int. J. Electr. Eng. Technol. 2020, 11, 204–217.

- Sathyamoorthy, A.J.; Patel, U.; Savle, Y.A.; Paul, M.; Manocha, D. COVID-Robot: Monitoring social distancing constraints in crowded scenarios. arXiv 2020, arXiv:2008.06585.

- Rezaei, M.; Azarmi, M. Deepsocial: Social distancing monitoring and infection risk assessment in covid-19 pandemic. Appl. Sci. 2020, 10, 7514.

- Nguyen, C.T.; Saputra, Y.M.; Van Huynh, N.; Nguyen, N.T.; Khoa, T.V.; Tuan, B.M.; Nguyen, D.N.; Hoang, D.T.; Vu, T.X.; Dutkiewicz, E.; et al. A Comprehensive Survey of Enabling and Emerging Technologies for Social Distancing—Part I: Fundamentals and Enabling Technologies. IEEE Access 2020, 8, 153479–153507.

- Goggin, G. COVID-19 apps in Singapore and Australia: Reimagining healthy nations with digital technology. Media Int. Aust. 2020, 177, 61–75.

- Azlan, A.A.; Hamzah, M.R.; Sern, T.J.; Ayub, S.H.; Mohamad, E. Public knowledge, attitudes and practices towards COVID-19: A cross-sectional study in Malaysia. PLoS ONE 2020, 15, e0233668.

- Idris, M.N.M. 606 Kompaun Langgar SOP di Selangor. Utusan Malaysia, 10 December 2020.

- WHO. COVID-19 Significantly Impacts Health Services for Noncommunicable Diseases; WHO: Geneva, Switzerland, 2020.

- Mohsin, J.; Saleh, F.H.; Al-muqarm, A.M.A. Real-time Surveillance System to detect and analyzers the Suspects of COVID-19 patients by using IoT under edge computing techniques (RS-SYS). In Proceedings of the 2020 2nd Al-Noor International Conference for Science and Technology (NICST), Baku, Azerbaijan, 28–30 August 2020; IEEE: New York, NY, USA, 2020.

- Ranaweera, P.S.; Liyanage, M.; Jurcut, A.D. Novel MEC based Approaches for Smart Hospitals to Combat COVID-19 Pandemic. IEEE Consum. Electron. Mag. 2020, 10, 80–91.

- Hegde, C.; Jiang, Z.; Suresha, P.B.; Zelko, J.; Seyedi, S.; Smith, M.; Wright, D.; Kamaleswaran, R.; Reyna, M.; Clifford, G. AutoTriage—An Open Source Edge Computing Raspberry Pi-based Clinical Screening System. medRxiv 2020, 1–13.

- Saponara, S.; Elhanashi, A.; Gagliardi, A. Implementing a real-time, AI-based, people detection and social distancing measuring system for Covid-19. J. Real-Time Image Process. 2021, 18, 1937–1947.

- Rahim, A.; Maqbool, A.; Rana, T. Monitoring social distancing under various low light conditions with deep learning and a single motionless time of flight camera. PLoS ONE 2021, 16, e0247440.

- Shen, Y.; Guo, D.; Long, F.; Mateos, L.A.; Ding, H.; Xiu, Z.; Hellman, R.B.; King, A.; Chen, S.; Zhang, C.; et al. Robots under COVID-19 Pandemic: A Comprehensive Survey. IEEE Access 2021, 9, 1590–1615.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).