Abstract

Cable-stayed bridges are damaged by multiple factors such as natural disasters, weather, and vehicle loads. In particular, if a stayed cable, which is an essential yet vulnerable component of a cable-stayed bridge, is damaged, it may adversely affect the adjacent cables and worsen the condition of the bridge structure. Therefore, we must accurately determine the condition of the cables with a technology-based evaluation strategy. In this paper, we propose a deep learning model that locates the damaged cable and estimates its cross-sectional area. To obtain the data required for training, we use cable tension data of bridge models with reduced cable areas, simulated in the Practical Advanced Analysis Program (PAAP), a robust structural analysis program. We represent the sensor data of the damaged cable-stayed bridge as a graph composed of vertices and edges using the tension and spatial information of the sensors: the tension data are mapped to the graph vertices, and the connection relationships between sensors are mapped to the graph edges. We employ a Graph Neural Network (GNN) to use this graph representation of the sensor data directly. GNNs, which have been actively studied in recent years, achieve state-of-the-art performance on many tasks involving graph-structured data. We train a GNN framework, the Message Passing Neural Network (MPNN), to perform two tasks: identifying the damaged cable and estimating its cross-sectional area. We adopt a multi-task learning method for more efficient optimization. We show that the proposed technique achieves high performance on the cable-stayed bridge data generated from PAAP.

1. Introduction

Cable-stayed bridges, essential transportation infrastructure in modern society, are damaged and corroded by external environmental factors such as natural disasters, climate, ambient vibrations, and vehicle loads. As damage accumulates, the condition of the structure deteriorates and the bridge loses its function. Damaged bridges can even collapse, causing severe problems such as human injury and economic loss. In particular, the stayed cable is an essential but vulnerable primary component of cable-stayed bridges [1]. When a cable starts to be damaged, its stiffness and cross-sectional area decrease [2]. Since the cable has a small cross-sectional area, it may be lost owing to its low resistance against accidental lateral loads. The loss of a cable may overload the bridge and adversely affect adjacent cables [3]. Therefore, we must thoroughly inspect the cable conditions. However, raw data collected from the sensors on the bridge, such as cable tensions, do not directly reveal which cable is damaged or what its cross-sectional area is. Furthermore, if the damage is minor, it may be difficult to determine visually whether damage has occurred, unlike in crack detection. Manually checking all cables one by one is very inefficient and increases maintenance costs. Therefore, to ensure the safety and durability of the bridge, we need a technology-based evaluation strategy that can also accurately capture small changes in the cable area.

The importance of Structural Health Monitoring (SHM) has been emphasized for assessing damage such as corrosion, defects, cracks, and material changes in structures. As SHM techniques, researchers have introduced deep learning models as well as statistical analysis and machine learning to determine damaged cable locations [2] or detect stiffness reduction [4]. With advances in device fabrication, artificial intelligence meets the need for fast and accurate problem solving using the vast amounts of data collected from sensor devices [5]. Deep learning models learn high-level representations of data and complex nonlinear correlations, and they are frequently preferred as automatic damage pattern prediction tools. In many civil engineering studies, deep learning models have achieved high performance as data-driven SHM techniques. Deep learning contributes to the advancement of SHM analysis because it effectively processes both unstructured data, such as images, and structured data, such as time series. For SHM, many researchers have proposed architectures such as the Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), Deep Autoencoder (DAE), and Generative Adversarial Network (GAN) [6]. Pathirage et al. [7] trained an autoencoder neural network to perform dimensionality reduction and estimated the element stiffness of a steel frame structure from modal information. Gu et al. [8] calculated the Euclidean distance between the target data and the output of a multilayer Artificial Neural Network (ANN) trained with data from the undamaged structure, and proposed an unsupervised learning approach that locates damage from the increase in this Euclidean distance. Truong et al. [9] introduced deep feedforward neural networks (DFNN) to detect damage in truss structures. They simulated damaged structures by reducing the elastic modulus of individual elements and verified the performance of the proposed DFNN. Chang et al. [10] estimated damage locations and severity by training neural networks with modal properties after reducing stiffness to create damage patterns. Abdeljaber et al. [11] proposed a one-dimensional CNN that extracts features from raw accelerometer signals and classifies damage. With the development of computer vision, 2D CNNs have been successfully used as vision-based SHM techniques [12]. CNNs trained with structure images successfully classify surface damage such as concrete cracks and spalling [13,14,15,16].

In this paper, we propose a Graph Neural Network (GNN) to evaluate the reduction in cable cross-sectional area caused by corrosion or fracture. The proposed method incorporates the overall structure and geometric features of the cable-stayed bridge. Deep learning-based damage detection requires sufficient data covering various damaged states for training. However, it is almost impossible to obtain data on damaged bridges in operation for safety reasons. Besides, training a classifier to detect the damage location requires data for each damage location. Moreover, balanced data for all damage cases cannot be obtained, because damage scenarios are very rare in the real world: a bridge must remain safe throughout a long service life [6]. Therefore, it is difficult to train a damage detection model due to the difficulty of collecting data and the class imbalance problem. To resolve these limitations, there is a growing need for research on applying SHM technology to digital twin models. Therefore, we use the Practical Advanced Analysis Program (PAAP) [17,18,19,20] to generate the GNN training data. PAAP is very efficient because it can capture the material nonlinearities of space structures. In addition, the reliability of PAAP has been evaluated for cable-stayed bridges [20,21] and suspension bridges [22,23]. Therefore, it is possible to simulate cable-stayed bridges under various conditions, such as material properties and loads, similar to those of real-world bridges. Furthermore, we can extract data on various damage states of cable-stayed bridges that cannot be obtained from real-world bridges and use them to train deep learning models. The real-world bridge state can then be predicted by feeding real SHM data into the trained model. In this work, we employ PAAP to analyze the cable tensions of cable-stayed bridge models with reduced cable areas.
Moreover, we represent the sensor data as a graph composed of vertices and edges using the generated tension data and spatial information. Studies using point clouds produced by laser scanning have been proposed to evaluate the structural state with respect to the entire bridge structure and the 3D spatial information between sensors [24,25,26]. These previous studies indicate that the 3D spatial information of bridges can provide helpful information about the structural state. However, point cloud data can be used for structural health evaluation only for visible damage such as cracks and spalling [27,28], and they are not directly related to the loss of stiffness or strength, since point clouds do not adequately capture depth due to occlusion [29]. Because GNNs can learn from graph-structured data, they overcome a limitation of CNNs, which accept only grid-structured data. With the rapid development of GNNs for graph prediction, deep learning is increasingly used in various domains such as traffic forecasting [30,31], recommendation systems [32,33], molecular property prediction [34], and natural language processing [35,36]. Recently, a study using GNN for cable-stayed bridge monitoring has been proposed: Li et al. [37] explored the spatiotemporal correlation of the sensor network in a cable-stayed bridge using a graph convolutional network and a one-dimensional convolutional neural network, and showed that their method effectively detects sensor faults and structural variation. We expect GNNs to be actively examined as an SHM technology in the future. In this study, we use the Message Passing Neural Network (MPNN), a representative architecture designed to process graph data. Gilmer et al. [34] proposed MPNN, a GNN framework that represents the message transfer between the vertices of the graph as a learnable function.
MPNN learns a representation of the graph by repeatedly updating each vertex with messages received from neighboring vertices. We create a graph from the connection relationships between the cable-stayed bridge nodes and use the node and element data as the vertex features and edge features of the graph, respectively. We train MPNN to identify damaged cables from the graph-structured sensor data. To reveal a detailed bridge condition, we both identify the damaged cable location and estimate the cross-sectional area of the damaged cable. We adopt a multi-task learning method to ensure that our deep learning model predicts the two tasks effectively. Multi-task learning benefits from learning related tasks together [38]. Since locating a damaged cable and estimating its cross-sectional area are not independent tasks, the deep learning model can be optimized efficiently by learning both tasks simultaneously.

2. Background

Structural health conditions of cable-stayed bridges are generally monitored based on cable tension changes related to cable area parameters. The tensile forces on cables inevitably change when one or more cables are damaged. A machine learning model is one of the damage detection techniques that identify damage location and degree. This section presents the fundamentals of the cable-stayed bridge model and our proposed approach to damage detection. A robust structural analysis program, the Practical Advanced Analysis Program (PAAP), is introduced, followed by our cable-stayed bridge model. We then introduce the deep learning background needed to understand the Message Passing Neural Network (MPNN) adopted as the damage detection technique in this work.

2.1. Practical Advanced Analysis Program (PAAP)

The PAAP efficiently captures the geometric and material nonlinearities of space structures using both the stability function and the refined plastic hinge concept. The Generalized Displacement Control (GDC) technique is adopted to solve the nonlinear equilibrium equations with an incremental-iterative scheme. This algorithm accurately traces the equilibrium path of nonlinear problems with multiple limit points and snap-back points. The details of the GDC are presented in [17,39]. In many studies of cable-stayed bridges [21,40], cables have been modeled as truss elements, while pylons, girders, and cross-beams have been modeled as plastic-hinge beam-column elements. The plastic-hinge beam-column elements use stability functions [41] to predict second-order effects. The inelastic behavior of the elements is also captured with the refined plastic hinge model [42,43]. To model the realistic behavior of cable structures correctly, the catenary cable element is employed in the PAAP owing to its precise numerical expressions [40]. The advantage of the PAAP is that nonlinear structural responses are accurately obtained with only one or two elements per structural member, leading to low computational costs [17,21]. Thus, the PAAP is employed to analyze and determine the cable tensions in our cable-stayed bridge model.

2.2. Cable-Stayed Bridge Model

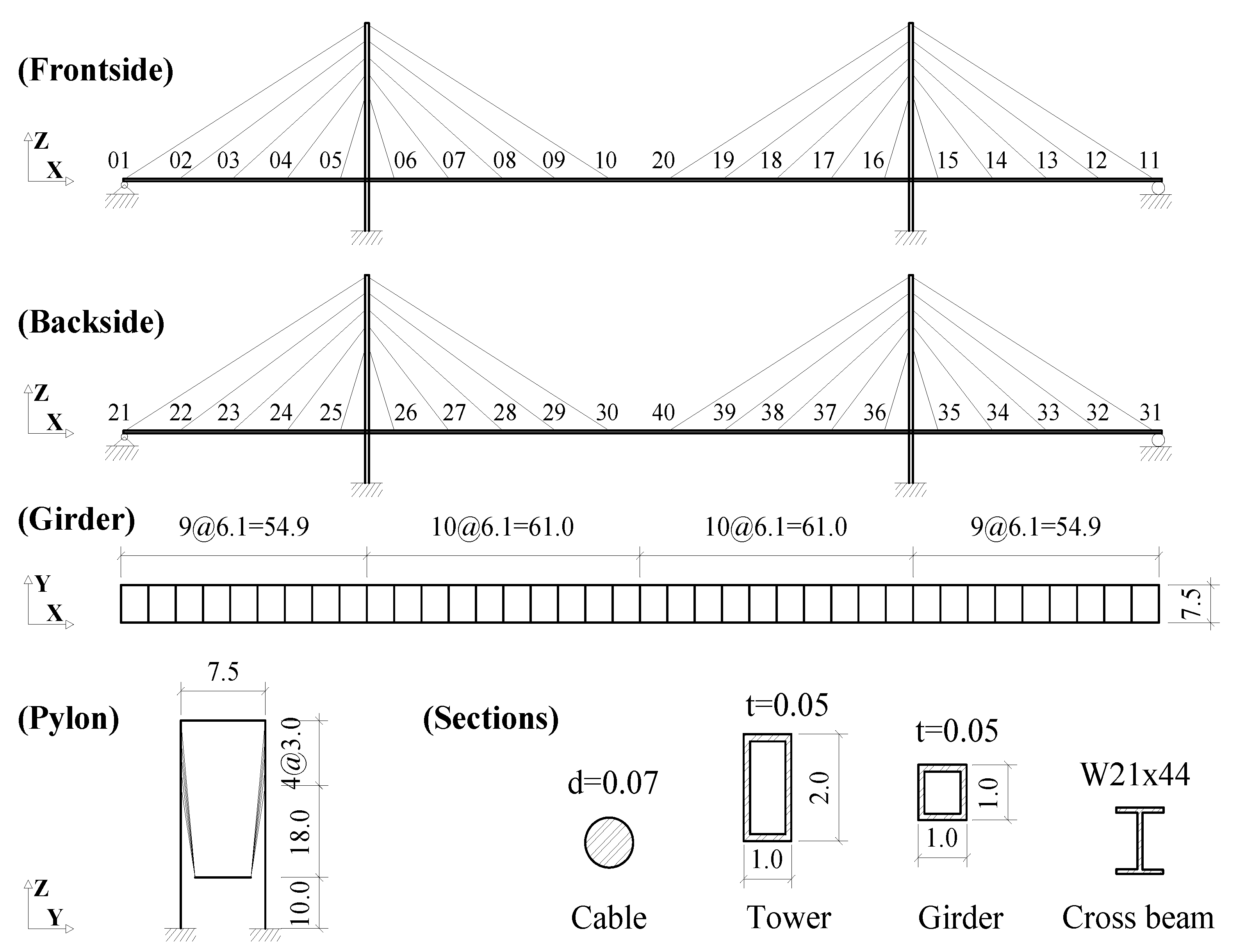

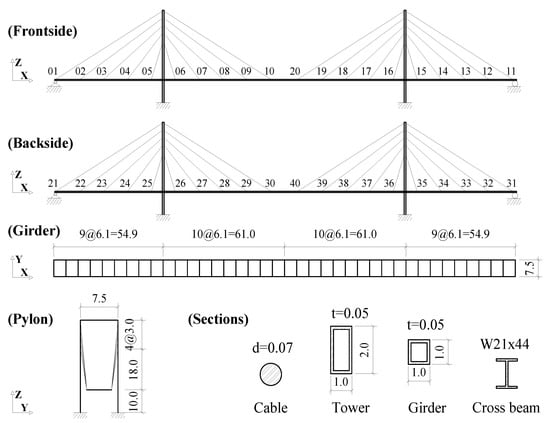

A semi-harp cable-stayed bridge model is proposed, as shown in Figure 1. The bridge has a center span of 122 m and two side spans of 54.9 m. Two 30 m-high towers support two traffic lanes with an overall width of 7.5 m. The pylons, girders, and cross beams are made of steel with a specific weight of 76.82 kN/m³. The specific weight of the stayed cables is 60.5 kN/m³. In the PAAP, the girders, pylons, and cross beams are modeled as plastic-hinge beam-column elements, and the stayed cables are modeled as catenary elements. For simplicity in determining cable damage, only the dead load induced by the self-weight of the bridge is considered.

Figure 1.

Cable-stayed bridge model in this study (unit: m).

2.3. Multilayer Perceptron

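The most straightforward neural network, the Multilayer Perceptron (MLP), contains multiple hidden layers between the input layer and the output layer. In each fully connected hidden layer, an activation function is applied to an affine transformation of the previous layer's output as follows.

$$\mathbf{h}^{(l)} = \phi\left(\mathbf{h}^{(l-1)}\mathbf{W}^{(l)} + \mathbf{b}^{(l)}\right)$$

where $\mathbf{W}^{(l)}$ and $\mathbf{b}^{(l)}$ represent the weight and bias of the $l$th hidden layer, respectively, and $\mathbf{h}^{(0)}$ is the input. $\phi$ is an activation function that enables nonlinear learning. Various activation functions exist; the Rectified Linear Unit (ReLU), hyperbolic tangent (tanh), and sigmoid functions defined below are applied most frequently.

$$\mathrm{ReLU}(x) = \max(0, x), \qquad \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}, \qquad \sigma(x) = \frac{1}{1 + e^{-x}}$$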

Dropout is applied to the hidden layers to prevent overfitting of the neural network. During training, dropout disconnects randomly selected hidden units with a given probability, called the dropout rate. The network thus becomes more robust because its output does not depend on any specific unit.
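As a minimal illustration of a fully connected hidden layer with dropout, the NumPy sketch below applies one ReLU layer followed by inverted dropout, the common variant that rescales surviving units during training so that nothing changes at inference time. The layer sizes, initialization, and dropout rate are hypothetical and are not the tuned values used in this study.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def dense(x, W, b, activation=relu):
    """Fully connected hidden layer: activation(x W + b) for a batch of row vectors."""
    return activation(x @ W + b)

def dropout(h, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during training
    and rescale the survivors by 1 / (1 - rate); identity at inference time."""
    if not training or rate == 0.0:
        return h
    keep = rng.random(h.shape) >= rate
    return h * keep / (1.0 - rate)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 10))                   # hypothetical batch: 4 samples x 10 tensions
W1 = rng.normal(scale=0.1, size=(10, 32))      # hypothetical hidden layer with 32 units
b1 = np.zeros(32)
h = dropout(dense(x, W1, b1), rate=0.2, rng=rng)
h_tanh = dense(x, W1, b1, activation=np.tanh)  # tanh is available directly as np.tanh
```

Stacking several `dense` plus `dropout` pairs gives an MLP like the four-hidden-layer baseline used later for comparison.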

2.4. Recurrent Neural Network

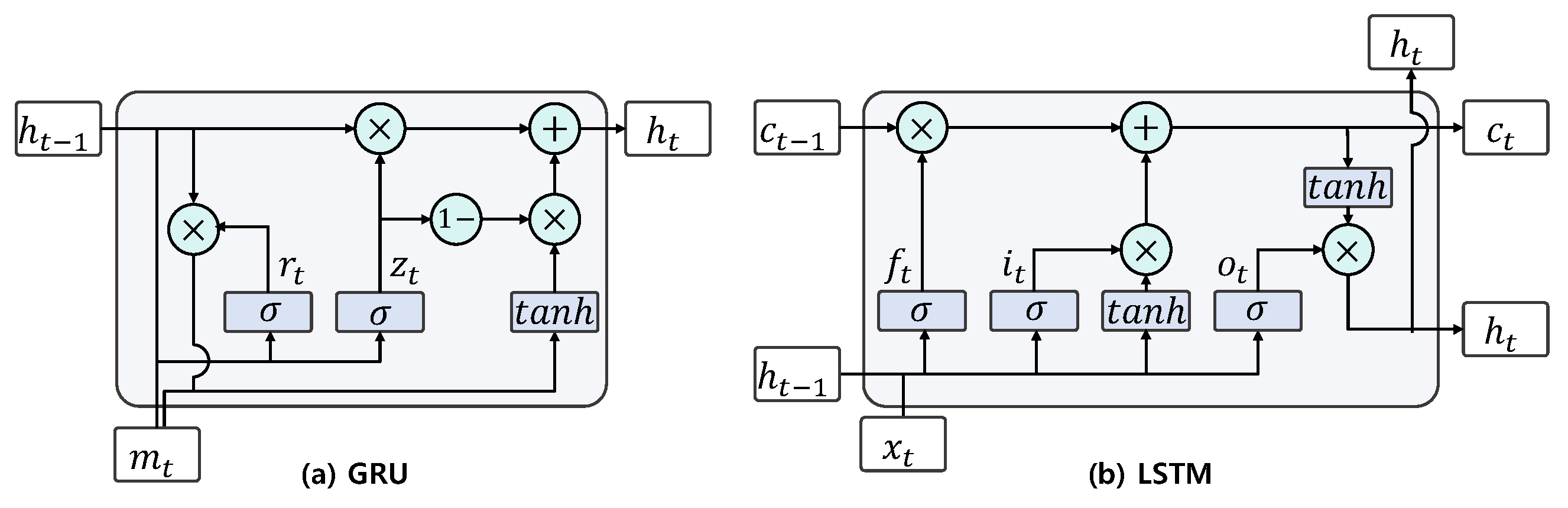

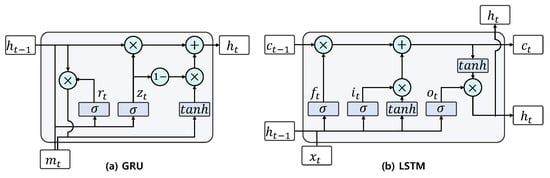

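A Recurrent Neural Network (RNN) generates its output from the current input and a hidden state that represents past information of the sequence data. Typical RNN structures, the Gated Recurrent Unit (GRU) [44] and Long Short-Term Memory (LSTM), gate the hidden state to control the information flow. Figure 2a shows how the hidden state is calculated in a GRU. For an input $\mathbf{x}_t \in \mathbb{R}^{1 \times d}$ in the sequence, where $d$ is the dimension of $\mathbf{x}_t$, the GRU computes the reset gate $\mathbf{r}_t \in \mathbb{R}^{1 \times k}$, which controls how much of the previous memory is used, and the update gate $\mathbf{z}_t \in \mathbb{R}^{1 \times k}$, where $k$ is the dimension of the hidden state, which controls how similar the new state is to the old state. The GRU combines these gates to compute the candidate hidden state $\tilde{\mathbf{h}}_t$ and the hidden state $\mathbf{h}_t$. The equations of the GRU are as follows.

$$\begin{aligned}
\mathbf{r}_t &= \sigma(\mathbf{x}_t\mathbf{W}_{xr} + \mathbf{h}_{t-1}\mathbf{W}_{hr} + \mathbf{b}_r)\\
\mathbf{z}_t &= \sigma(\mathbf{x}_t\mathbf{W}_{xz} + \mathbf{h}_{t-1}\mathbf{W}_{hz} + \mathbf{b}_z)\\
\tilde{\mathbf{h}}_t &= \tanh\left(\mathbf{x}_t\mathbf{W}_{xh} + (\mathbf{r}_t \odot \mathbf{h}_{t-1})\mathbf{W}_{hh} + \mathbf{b}_h\right)\\
\mathbf{h}_t &= \mathbf{z}_t \odot \mathbf{h}_{t-1} + (1 - \mathbf{z}_t) \odot \tilde{\mathbf{h}}_t
\end{aligned}$$

where $\odot$ and $\sigma$ denote the Hadamard product and the sigmoid function, respectively. $\mathbf{W}_{xr}, \mathbf{W}_{xz}, \mathbf{W}_{xh} \in \mathbb{R}^{d \times k}$ and $\mathbf{W}_{hr}, \mathbf{W}_{hz}, \mathbf{W}_{hh} \in \mathbb{R}^{k \times k}$ are weight parameters, and $\mathbf{b}_r, \mathbf{b}_z, \mathbf{b}_h \in \mathbb{R}^{1 \times k}$ are biases.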
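The GRU step can be sketched in NumPy as follows, assuming the standard formulation: reset gate r = σ(x W_xr + h W_hr + b_r), update gate z = σ(x W_xz + h W_hz + b_z), candidate h̃ = tanh(x W_xh + (r ⊙ h) W_hh + b_h), and new state z ⊙ h + (1 − z) ⊙ h̃. The parameter dictionary layout, sizes, and random initialization are hypothetical choices for illustration only.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_cell(x_t, h_prev, p):
    """One GRU step; p holds W_x* (d x k), W_h* (k x k), and biases b_* (k,)."""
    r = sigmoid(x_t @ p["W_xr"] + h_prev @ p["W_hr"] + p["b_r"])              # reset gate
    z = sigmoid(x_t @ p["W_xz"] + h_prev @ p["W_hz"] + p["b_z"])              # update gate
    h_tilde = np.tanh(x_t @ p["W_xh"] + (r * h_prev) @ p["W_hh"] + p["b_h"])  # candidate
    return z * h_prev + (1.0 - z) * h_tilde                                   # new state

def init_gru(d, k, rng):
    p = {}
    for g in ("r", "z", "h"):
        p[f"W_x{g}"] = rng.normal(scale=0.1, size=(d, k))
        p[f"W_h{g}"] = rng.normal(scale=0.1, size=(k, k))
        p[f"b_{g}"] = np.zeros(k)
    return p

rng = np.random.default_rng(0)
d, k = 3, 5
params = init_gru(d, k, rng)
h = np.zeros(k)
for x_t in rng.normal(size=(7, d)):   # hypothetical length-7 input sequence
    h = gru_cell(x_t, h, params)
```

When the update gate saturates at 1, the cell simply copies the previous state, which is how the GRU retains long-range information.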

Figure 2.

(a) GRU structure and (b) LSTM structure.

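Figure 2b shows how the hidden state is computed in an LSTM. For input data $\mathbf{x}_t$, the cell state $\mathbf{c}_t$ and the hidden state $\mathbf{h}_t$ are computed with the input gate $\mathbf{i}_t$, forget gate $\mathbf{f}_t$, and output gate $\mathbf{o}_t$ as follows.

$$\begin{aligned}
\mathbf{i}_t &= \sigma(\mathbf{x}_t\mathbf{W}_{xi} + \mathbf{h}_{t-1}\mathbf{W}_{hi} + \mathbf{b}_i)\\
\mathbf{f}_t &= \sigma(\mathbf{x}_t\mathbf{W}_{xf} + \mathbf{h}_{t-1}\mathbf{W}_{hf} + \mathbf{b}_f)\\
\mathbf{o}_t &= \sigma(\mathbf{x}_t\mathbf{W}_{xo} + \mathbf{h}_{t-1}\mathbf{W}_{ho} + \mathbf{b}_o)\\
\tilde{\mathbf{c}}_t &= \tanh(\mathbf{x}_t\mathbf{W}_{xc} + \mathbf{h}_{t-1}\mathbf{W}_{hc} + \mathbf{b}_c)\\
\mathbf{c}_t &= \mathbf{f}_t \odot \mathbf{c}_{t-1} + \mathbf{i}_t \odot \tilde{\mathbf{c}}_t\\
\mathbf{h}_t &= \mathbf{o}_t \odot \tanh(\mathbf{c}_t)
\end{aligned}$$

where $\mathbf{W}_{xi}, \mathbf{W}_{xf}, \mathbf{W}_{xo}, \mathbf{W}_{xc} \in \mathbb{R}^{d \times k}$ and $\mathbf{W}_{hi}, \mathbf{W}_{hf}, \mathbf{W}_{ho}, \mathbf{W}_{hc} \in \mathbb{R}^{k \times k}$ are weight parameters, and $\mathbf{b}_i, \mathbf{b}_f, \mathbf{b}_o, \mathbf{b}_c \in \mathbb{R}^{1 \times k}$ are biases.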
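A corresponding NumPy sketch of one LSTM step is given below, assuming the standard gate equations (sigmoid input, forget, and output gates, a tanh candidate cell, c = f ⊙ c_prev + i ⊙ c̃, and h = o ⊙ tanh(c)). The parameter names, sizes, and initialization are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_cell(x_t, h_prev, c_prev, p):
    """One LSTM step in the row-vector convention x W + h U + b."""
    i = sigmoid(x_t @ p["W_xi"] + h_prev @ p["W_hi"] + p["b_i"])        # input gate
    f = sigmoid(x_t @ p["W_xf"] + h_prev @ p["W_hf"] + p["b_f"])        # forget gate
    o = sigmoid(x_t @ p["W_xo"] + h_prev @ p["W_ho"] + p["b_o"])        # output gate
    c_tilde = np.tanh(x_t @ p["W_xc"] + h_prev @ p["W_hc"] + p["b_c"])  # candidate cell
    c = f * c_prev + i * c_tilde                                        # new cell state
    h = o * np.tanh(c)                                                  # new hidden state
    return h, c

def init_lstm(d, k, rng):
    p = {}
    for g in ("i", "f", "o", "c"):
        p[f"W_x{g}"] = rng.normal(scale=0.1, size=(d, k))
        p[f"W_h{g}"] = rng.normal(scale=0.1, size=(k, k))
        p[f"b_{g}"] = np.zeros(k)
    return p

rng = np.random.default_rng(0)
d, k = 3, 5
params = init_lstm(d, k, rng)
h, c = np.zeros(k), np.zeros(k)
for x_t in rng.normal(size=(7, d)):   # hypothetical length-7 input sequence
    h, c = lstm_cell(x_t, h, c, params)
```

Unlike the GRU, the LSTM keeps a separate cell state; with the forget gate open and the input gate closed, the cell state passes through unchanged.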

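The set2set model [45] uses an attention mechanism to produce an output that is invariant to the order (permutation) of its input set. At each step $t$, it computes

$$\begin{aligned}
\mathbf{q}_t &= \mathrm{LSTM}(\mathbf{q}^*_{t-1})\\
e_{i,t} &= f(\mathbf{m}_i, \mathbf{q}_t)\\
a_{i,t} &= \frac{\exp(e_{i,t})}{\sum_j \exp(e_{j,t})}\\
\mathbf{r}_t &= \sum_i a_{i,t}\,\mathbf{m}_i\\
\mathbf{q}^*_t &= [\mathbf{q}_t, \mathbf{r}_t]
\end{aligned}$$

where $\mathbf{m}_i$ is the memory vector of the $i$th input element, $\mathbf{q}_t$ is the query vector, $f$ is the dot product, and $[\cdot,\cdot]$ denotes concatenation.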
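The set2set readout can be sketched in NumPy as below, following the Vinyals et al. formulation: an LSTM evolves the query from the previous output, dot-product attention over the memory vectors yields a weighted read-out, and the query and read-out are concatenated. This sketch uses a single compact LSTM layer with hypothetical random weights; the study tunes the number of LSTM layers and processing steps, so it is not the configuration actually used.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_cell(x, h, c, W, U, b):
    """Compact LSTM: W (2D x 4D), U (D x 4D), b (4D,); gate blocks ordered i, f, o, g."""
    i, f, o, g = np.split(x @ W + h @ U + b, 4)
    c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    h = sigmoid(o) * np.tanh(c)
    return h, c

def set2set(memory, steps, W, U, b):
    """memory: (n, D) vertex states m_i; returns the (2D,) graph embedding q*_T."""
    n, D = memory.shape
    h, c = np.zeros(D), np.zeros(D)
    q_star = np.zeros(2 * D)
    for _ in range(steps):
        h, c = lstm_cell(q_star, h, c, W, U, b)   # q_t = LSTM(q*_{t-1})
        e = memory @ h                             # e_i = f(m_i, q_t), a dot product
        a = np.exp(e - e.max())
        a /= a.sum()                               # softmax attention weights a_i
        r = a @ memory                             # r_t = sum_i a_i m_i
        q_star = np.concatenate([h, r])            # q*_t = [q_t, r_t]
    return q_star

rng = np.random.default_rng(0)
D = 4
memory = rng.normal(size=(10, D))                  # e.g., final states of 10 vertices
W = rng.normal(scale=0.1, size=(2 * D, 4 * D))
U = rng.normal(scale=0.1, size=(D, 4 * D))
b = np.zeros(4 * D)
emb = set2set(memory, steps=3, W=W, U=U, b=b)
emb_shuffled = set2set(memory[rng.permutation(10)], steps=3, W=W, U=U, b=b)
```

Shuffling the memory rows leaves the embedding unchanged (up to floating-point rounding), because the softmax and the weighted sum do not depend on element order.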

2.5. Message Passing Neural Network

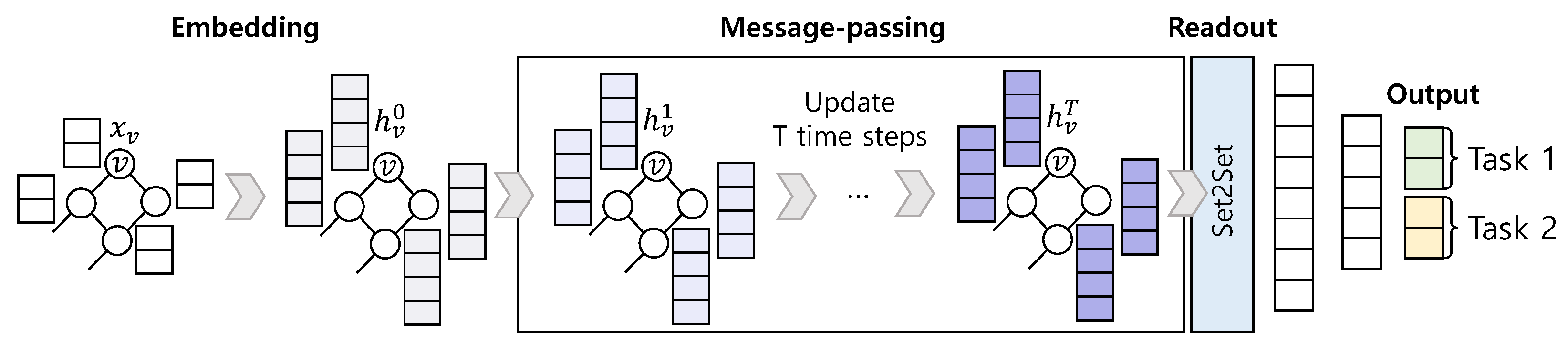

We assess the damage of the bridge structure using a Graph Neural Network (GNN) to exploit the sensor network topology. A GNN is a powerful deep learning model that processes graph-structured data, and it has recently been adopted in various domains. A GNN updates the hidden state of each vertex with information from its neighbors and thereby captures the hidden patterns of the graph, which allows it to analyze and make inferences about the graph effectively. MPNN [34] is a general GNN framework. It has been employed to predict chemical properties by representing 3D molecular geometry as a graph.

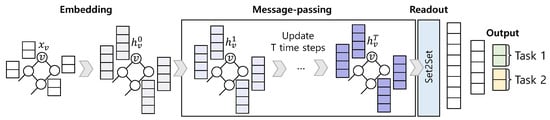

A graph $G$ consists of a vertex set $V$ and an edge set $E$. We denote the feature of vertex $v \in V$ as $\mathbf{x}_v$ and the feature of edge $(v, u) \in E$ as $\mathbf{e}_{vu}$. As shown in Figure 3, MPNN processes the embedded vertices in a message-passing step followed by a readout step.

Figure 3.

MPNN architecture.

In the message-passing step, each vertex receives a message aggregated from its adjacent vertices along the edges with the message function $M_t$. The hidden state of each vertex is then updated from the received message and its previous state with the update function $U_t$. The message-passing step is repeated $T$ times so that messages are delivered over a wider range of the graph. In this study, the message function and the update function are defined as follows.

$$\mathbf{m}_v^{t+1} = \mathrm{ReLU}\Big(\sum_{u \in N(v)} A(\mathbf{e}_{vu})\,\mathbf{h}_u^{t}\Big) \tag{19}$$

$$U_t(\mathbf{h}_v^{t}, \mathbf{m}_v^{t+1}) = \mathrm{GRU}(\mathbf{h}_v^{t}, \mathbf{m}_v^{t+1}) \tag{21}$$

where ReLU is the ReLU activation function and $A$ is a two-layer neural network that generates a $k \times k$ matrix from the edge feature $\mathbf{e}_{vu}$, with $k$ the hidden state dimension; it consists of a layer with ReLU activation followed by a layer with $k \times k$ neurons. The neighbors of a vertex $v$, $N(v)$, are the adjacent vertices connected to $v$ through edges. $\mathbf{h}_v^t$ and $\mathbf{h}_u^t$ are the hidden states of vertices $v$ and $u$, respectively. The initial hidden state $\mathbf{h}_v^0$ is the embedding of vertex $v$ obtained by passing $\mathbf{x}_v$ through a differentiable function. In Equation (21), we define the update function as the GRU described in Section 2.4, which integrates the state of the vertex itself with the message $\mathbf{m}_v^{t+1}$ received from adjacent vertices. Finally, the updated hidden state of vertex $v$ is

$$\mathbf{h}_v^{t+1} = U_t(\mathbf{h}_v^{t}, \mathbf{m}_v^{t+1})$$
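The NumPy sketch below runs a few message-passing iterations on a toy graph, assuming an edge-network message m_v = ReLU(Σ_u A(e_vu) h_u), where a small two-layer network A maps each edge feature to a k × k matrix, and a GRU update that takes the message as input and the vertex state as the hidden state. It is not the DGL implementation used in this study: the graph, edge feature value, layer sizes, and random weights are hypothetical, and the bias term that library implementations often add to the aggregated message is omitted.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def edge_network(e, p, k):
    """A(e): two-layer network mapping an edge feature vector to a k x k matrix."""
    hidden = relu(e @ p["W1"] + p["b1"])
    return (hidden @ p["W2"] + p["b2"]).reshape(k, k)

def message(h, edges, edge_feats, p):
    """m_v = ReLU(sum over neighbors u of A(e_vu) h_u); edges are directed (v, u) pairs."""
    n, k = h.shape
    m = np.zeros((n, k))
    for (v, u), e in zip(edges, edge_feats):
        m[v] += edge_network(e, p, k) @ h[u]
    return relu(m)

def gru_update(h, m, g):
    """U_t as a GRU: the message is the input and the vertex state is the hidden state."""
    r = sigmoid(m @ g["Wr"] + h @ g["Ur"] + g["br"])
    z = sigmoid(m @ g["Wz"] + h @ g["Uz"] + g["bz"])
    h_tilde = np.tanh(m @ g["Wh"] + (r * h) @ g["Uh"] + g["bh"])
    return z * h + (1.0 - z) * h_tilde

rng = np.random.default_rng(0)
k, e_dim, hid = 4, 1, 8                     # hypothetical sizes
edge_p = {"W1": rng.normal(scale=0.3, size=(e_dim, hid)), "b1": np.zeros(hid),
          "W2": rng.normal(scale=0.3, size=(hid, k * k)), "b2": np.zeros(k * k)}
gru_p = {}
for gate in ("r", "z", "h"):
    gru_p[f"W{gate}"] = rng.normal(scale=0.3, size=(k, k))
    gru_p[f"U{gate}"] = rng.normal(scale=0.3, size=(k, k))
    gru_p[f"b{gate}"] = np.zeros(k)

# Hypothetical 4-vertex graph: path 0-1-2 (both directions), vertex 3 isolated.
edges = [(0, 1), (1, 0), (1, 2), (2, 1)]
edge_feats = [np.array([0.37])] * 4
h = rng.normal(size=(4, k))                 # stands in for the embedded states h^0
for _ in range(3):                          # T = 3 message-passing iterations
    m = message(h, edges, edge_feats, edge_p)
    h = gru_update(h, m, gru_p)
```

Because A depends on the edge feature, the same neighbor state contributes differently along edges of different lengths, which is how the sensor geometry enters the vertex updates.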

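The readout step aggregates the final hidden states $\mathbf{h}_v^T$ after the message-passing step has been iterated $T$ times. The prediction $\hat{\mathbf{y}}$ for the target data is calculated with the readout function $R$ as follows.

$$\hat{\mathbf{y}} = R\left(\{\mathbf{h}_v^T \mid v \in G\}\right)$$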

We define the readout function $R$ as the set2set model presented in Section 2.4. Since set2set is invariant to the order of the vertices, it produces the same output for isomorphic graphs and effectively integrates the vertices of the graph into a graph-level embedding.

3. Data Generating Procedure

In this section, we describe how the cable-stayed bridge data used for MPNN training are generated. The cable damage model is first presented based on the elemental area reduction parameter, and the measured cables are then specified. Finally, structural analyses of the proposed model are performed to produce the reliable datasets that are essential for constructing the machine learning model.

3.1. Cable Damage Model

During the service life of cable-stayed bridges, cables are the most critical load-bearing components [46,47]. Thus, potential cable damage should be identified early to prevent catastrophic failures [48,49]. In this study, the damage of cable-stayed bridges is assumed to be caused solely by cable damage. In the cable-stayed bridge model, there are a total of 40 cables corresponding to the 40 catenary elements, numbered as shown in Figure 1. The cable element is assumed to be perfectly flexible [40], with its self-weight distributed along its length. It has a uniform cross-sectional area of 3846.5 mm² in the intact state of the bridge. The cable damage is expressed through a scalar area reduction variable with a value between 0 and 1 as follows:

$$A_d = (1 - \alpha)\,A_0$$

where $A_0$ represents the cross-sectional area of the cable in the intact state and $A_d$ denotes the cross-sectional area of the cable in the damaged state. $\alpha$ is the elemental area reduction parameter to be identified. Note that $\alpha = 1$ indicates a destroyed cable and $\alpha = 0$ indicates an intact cable.
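As a worked example of the damage model, the sketch below assumes the linear relation A_d = (1 − α) A_0, which is consistent with the area ratio 1 − α used as the regression target, together with the intact area of 3846.5 mm² stated above. The function name is hypothetical.

```python
A0_MM2 = 3846.5  # intact cable cross-sectional area (mm^2), from the bridge model

def damaged_area(alpha, a0=A0_MM2):
    """Damaged cross-sectional area A_d = (1 - alpha) * A_0.

    alpha = 0 -> intact cable; alpha = 1 -> destroyed cable.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * a0

area_quarter_loss = damaged_area(0.25)   # 25 % area loss -> 2884.875 mm^2
```

Because the relation is linear, the network can equivalently be trained on the dimensionless ratio A_d / A_0 = 1 − α, which is what the targets in Section 5 use.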

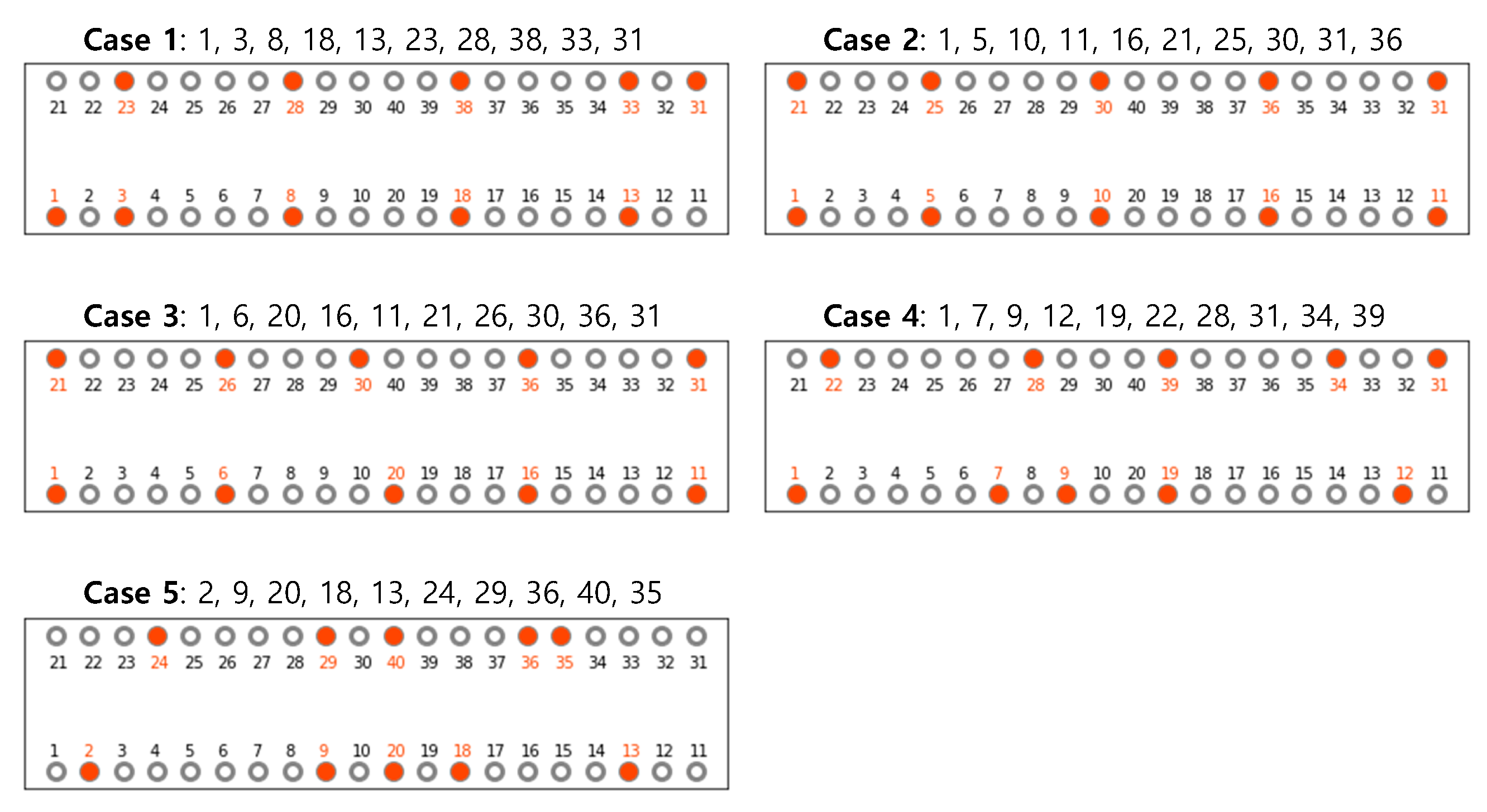

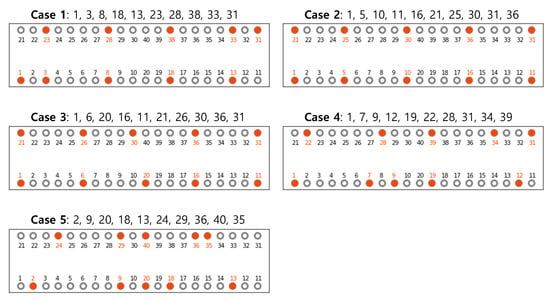

3.2. Observed Cables

In most structural health monitoring systems of cable-stayed bridges, sensors are installed on specific cables only, for cost-effectiveness. The number of surveyed cables depends on the scale and complexity of the bridge and the monitoring objectives [47,50]. At the surveyed locations, cable sensitivity and safety degrees are evaluated. The measured data are automatically recorded and stored as essential sources for later use during the monitoring period. In this study, 10 of the 40 cables are surveyed: 5 cables on the front side and 5 cables on the back side. We examine the five sensor layout cases shown in Figure 4. However, optimal sensor placement (OSP) is outside the scope of this study; since we do not apply OSP, the sensors are evenly arranged. We analyze multiple cases to avoid biasing the experimental results toward a specific layout. The tensile forces in these cables are determined by simulating the proposed model in the PAAP. Using the GDC method [20,51] to solve the nonlinear problem, the PAAP divides the dead load into many incremental steps. The results of the structural system at each incremental step, including internal forces, deformations, and displacements, are exported and stored in data files. However, only the cable tensions at the step corresponding to the bridge self-weight are considered as measured data.

Figure 4.

5 sensor layout cases.

3.3. Generating Data

Different cable-stayed bridge models are constructed and analyzed using the PAAP. The geometry of the bridge girders, pylons, and cross beams is kept constant, while only the cable cross-sectional areas vary. The output is the tensile force in the observed cables specified in Section 3.2. The complete procedure for generating data is presented in Table 1. For single-cable damage, 4000 data samples (40 cables × 100 damage levels) are generated as the elemental area reduction parameter varies from 0.01 to 1 in steps of 0.01. To evaluate cable system failure from the simulation results, the prediction model solves an inverse problem: the tensile forces of the 10 observed cables are used as the input, while the predefined elemental area reduction parameters are used as the target data. These input and target data are used to train and validate the proposed damage detection model for the cable-stayed bridge. Once trained, the model predicts the damaged cable and its cross-sectional area from the 10 input cable tensile forces.
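The enumeration of single-cable damage scenarios can be sketched as below. The grid of area reduction levels (0.01 to 1.00 in steps of 0.01) is inferred from the 4000-sample count and the 0.0 to 0.99 target range; the PAAP analysis that would turn each scenario into cable tensions is not reproduced here, and all names are hypothetical.

```python
N_CABLES = 40
# Area reduction levels 0.01, 0.02, ..., 1.00 (inferred: 4000 = 40 cables x 100 levels).
ALPHAS = [round(0.01 * s, 2) for s in range(1, 101)]

def single_damage_scenarios(n_cables=N_CABLES, alphas=ALPHAS):
    """Yield (damaged_cable, area_ratios) for every single-cable damage case.

    area_ratios[j] is A_d / A_0 for cable j + 1: 1.0 for intact cables and
    1 - alpha for the damaged one. Each scenario would then be analyzed in
    PAAP (not shown) to obtain the tensions of the 10 observed cables.
    """
    for cable in range(1, n_cables + 1):
        for alpha in alphas:
            ratios = [1.0] * n_cables
            ratios[cable - 1] = round(1.0 - alpha, 2)
            yield cable, ratios

scenarios = list(single_damage_scenarios())
```

Each scenario's damaged-cable index and area ratio become the classification and regression targets, while the PAAP tensions of the observed cables become the input.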

Table 1.

Data generating procedure.

4. Proposed Method for Damage Assessment

In this section, we describe MPNN for damage assessment of the cable-stayed bridge. We present the specific MPNN configuration and show how to apply the proposed multi-task learning to identify the location of the damaged cable created in Section 3 and the cross-sectional area of the corresponding cable.

4.1. Configuration of the Proposed Network

We define the graph vertex feature $\mathbf{x}_v$ as the tensile force of each of the 10 observed cables. The edge feature is defined with the thresholded Gaussian kernel [52], as in the equation below, using the XYZ coordinates of the girder nodes connected to the 10 cables.

$$e_{vu} = \begin{cases} \exp\left(-\dfrac{\mathrm{dist}(v,u)^2}{\sigma^2}\right), & \text{if } \exp\left(-\dfrac{\mathrm{dist}(v,u)^2}{\sigma^2}\right) \ge \kappa \\ 0, & \text{otherwise} \end{cases}$$

where $\mathrm{dist}(v,u)$ is the distance between nodes $v$ and $u$, the threshold $\kappa$ is set to 0.1, and $\sigma$ is the standard deviation of the distances. Since vertices and edges carry only tension and distance, respectively, the vertex and edge features are both one-dimensional. We embed the vertex features representing the tension with a single fully connected hidden layer with ReLU activation. The embedded vertex state is updated with the message function in Equation (19) and the update function in Equation (21). The hyperparameters tuned in the message-passing step are the vertex embedding dimension, the number of message-passing iterations, and the hidden state dimension. We also tune the number of LSTM layers and the number of computation steps of the set2set model used as the readout function $R$ for global pooling. After the readout, we add a fully connected hidden layer with ReLU activation and the same number of neurons as the vertex embedding. The predictions for the target data are generated by two output layers, each with 40 linear units (one per cable). We describe the two outputs in the next section.
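The thresholded Gaussian kernel can be sketched in NumPy as follows. Two details are assumptions not fixed by the text: σ is taken as the standard deviation over distinct node pairs, and self-loops are removed. The coordinates are hypothetical (five nodes 20 m apart along the girder axis), chosen so that the result is easy to check by hand: σ = 20 m, adjacent nodes get weight e⁻¹ ≈ 0.37, and nodes 40 m apart fall below the 0.1 threshold.

```python
import numpy as np

def gaussian_kernel_edges(xyz, kappa=0.1):
    """Thresholded Gaussian kernel edge weights between sensor nodes.

    xyz: (n, 3) array of girder-node coordinates.
    Returns an (n, n) weight matrix; weights below kappa are set to 0.
    """
    n = len(xyz)
    diff = xyz[:, None, :] - xyz[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    sigma = dist[np.triu_indices(n, k=1)].std()   # assumption: std over distinct pairs
    w = np.exp(-(dist / sigma) ** 2)
    w[w < kappa] = 0.0
    np.fill_diagonal(w, 0.0)                      # assumption: no self-loops
    return w

# Hypothetical coordinates (m): five nodes spaced 20 m apart along the girder axis.
xyz = np.array([[20.0 * i, 0.0, 0.0] for i in range(5)])
w = gaussian_kernel_edges(xyz)
edges = np.argwhere(w > 0)          # directed (v, u) pairs that receive an edge
edge_feats = w[w > 0]               # one-dimensional edge features
```

The threshold keeps the graph sparse, so each sensor exchanges messages mainly with its spatial neighbors.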

4.2. Multi-Task Learning on MPNN

The target data for determining the cable health of the cable-stayed bridge are the damaged cable location and the damage degree (i.e., the cross-sectional area ratio $1-\alpha$). Therefore, we adopt multi-task learning so that MPNN learns both tasks effectively. The advantage of multi-task learning is that related tasks can be learned more efficiently by predicting them simultaneously. Therefore, learning to predict the cross-sectional area of the damaged cable while learning to classify the damaged cable improves learning efficiency. As shown in Figure 3, the proposed MPNN has outputs for task1 and task2, which are the classification of the damaged cable and the prediction of its cross-sectional area, respectively. The first task is classification, and the second is prediction of a continuous value (i.e., regression). Therefore, we use the cross-entropy loss for task1 and the mean absolute error loss for task2, defined as follows.

$$\mathcal{L}_1 = -\sum_{i=1}^{40} y_i \log \hat{y}_i \tag{26}$$

$$\mathcal{L}_2 = \left| a - \mathbf{mask} \cdot \hat{\mathbf{a}} \right| \tag{27}$$

where $y_i$ is the target for the $i$th label, equal to 1 if the $i$th cable is damaged and 0 otherwise, and $\hat{y}_i$ is the predicted probability for the $i$th cable. $a$ is the target cross-sectional area of the single damaged cable, in the range 0.0 to 0.99. $\hat{\mathbf{a}}$ is the vector output by the network for the second task. We define $\mathbf{mask}$ as a vector in which the element corresponding to the index of the damaged cable is 1 and all others are 0. In the training phase, the position of 1 in the mask is the true index of the damaged cable, so $\mathcal{L}_2$ is the error between the target area $a$ and the dot product of $\mathbf{mask}$ and $\hat{\mathbf{a}}$; that is, the task2 loss is computed only on the damaged cable. In the test phase, the mask is created from the network's classification output, so $\mathbf{mask} \cdot \hat{\mathbf{a}}$ is the estimated cross-sectional area of the cable that the network classified as damaged. We define the total loss by combining $\mathcal{L}_1$ and $\mathcal{L}_2$ as follows.

The total loss is the sum of the task1 (classification) and task2 (regression) losses, scaled by the L1-norm of the mask.
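A NumPy sketch of the masked multi-task loss is given below: a softmax cross-entropy for the 40-way classification plus an absolute error on the area output selected by the one-hot mask of the damaged cable. How the L1-norm scaling combines with batching is not fully specified, so this sketch assumes the regression errors are summed over the batch and divided by the total L1-norm of the masks (which, for one-hot masks, equals a batch mean). The batch values are hypothetical.

```python
import numpy as np

def log_softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def multitask_loss(class_logits, area_out, damaged_idx, area_target):
    """class_logits, area_out: (N, 40); damaged_idx: (N,) 0-based; area_target: (N,)."""
    n_cables = class_logits.shape[1]
    mask = np.eye(n_cables)[damaged_idx]                               # one-hot masks
    loss_cls = -(mask * log_softmax(class_logits)).sum(axis=1).mean()  # cross-entropy
    pred_area = (mask * area_out).sum(axis=1)                          # mask . a_hat
    # Assumption: summed absolute errors divided by the total L1-norm of the masks.
    loss_reg = np.abs(area_target - pred_area).sum() / np.abs(mask).sum()
    return loss_cls + loss_reg

def predict(class_logits, area_out):
    """Test time: the mask is the one-hot vector of the predicted class."""
    idx = class_logits.argmax(axis=1)
    return idx, area_out[np.arange(len(idx)), idx]

rng = np.random.default_rng(0)
idx = np.array([3, 17])                    # hypothetical damaged cables (0-based)
area = np.array([0.42, 0.88])              # hypothetical target area ratios
area_out = rng.uniform(size=(2, 40))
area_out[np.arange(2), idx] = area         # perfect area prediction on damaged cables
logits = np.zeros((2, 40))
logits[np.arange(2), idx] = 100.0          # confident, correct classification
loss = multitask_loss(logits, area_out, idx, area)
```

Because the mask zeroes every area output except the damaged cable's, the regression head is never penalized for its predictions on intact cables.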

5. Performance Evaluation

To evaluate the proposed model introduced in Section 4, we use the data on the cable-stayed bridge with damaged cables generated with the PAAP as described in Section 3. We preprocess the generated data for model training and optimize the MPNN model. Then, we train the proposed MPNN and validate its predictions. We also train an MLP and compare its results with those of the MPNN model. The input of the MLP consists only of the ten cable tension values. The MLP has four hidden layers with ReLU activation, each followed by a dropout layer. As in the MPNN, the MLP output layer generates 80 predictions for the two tasks. Additionally, we compare the results with those of XGBoost, a gradient-boosted decision tree method. We also compare multi-task learning with networks that perform only one task. For the damaged cable classification (task1), the single-task network has 40 outputs, and the loss function is the cross-entropy in Equation (26). For the area estimation of the damaged cable (task2), the single-task network has 1 output, and the loss function is the mean absolute error in Equation (27).

5.1. Data Preprocessing and Optimization

As mentioned in Section 3, we generate data for 4000 cases. The input data are the cable-stayed bridge data represented as a graph, as described in Section 4, and the target data consist of the index of the damaged cable, labeled from 1 to 40, and its cross-sectional area. The cross-sectional area of the damaged cable is $1 - \alpha$, which lies between 0.0 (broken state) and 0.99, where $\alpha$ is the elemental area reduction parameter defined in Section 3. We scale the vertex feature values, i.e., the tensile forces, to between 0 and 1 as follows.

$$\tilde{x} = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x_{\min}$ and $x_{\max}$ are the minimum and maximum tensile forces.

We split the data in a 6:1:3 ratio into 2400 training, 400 validation, and 1200 test samples.
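The preprocessing can be sketched as follows: a shuffled 6:1:3 split of the 4000 samples and min-max scaling of the tensile forces. The text does not say whether the minimum and maximum are taken per cable or globally, or from which split; this sketch assumes a single global minimum and maximum computed on the training split, to avoid leaking test statistics. The tension values are random placeholders, not PAAP output.

```python
import numpy as np

def split_indices(n, ratios=(6, 1, 3), seed=0):
    """Shuffle sample indices and split them into train/validation/test by ratio."""
    perm = np.random.default_rng(seed).permutation(n)
    total = sum(ratios)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    return perm[:n_train], perm[n_train:n_train + n_val], perm[n_train + n_val:]

def minmax_scale(x, x_min, x_max):
    """Map tensile forces to [0, 1] given reference minimum and maximum values."""
    return (x - x_min) / (x_max - x_min)

rng = np.random.default_rng(1)
tensions = rng.uniform(1000.0, 3000.0, size=(4000, 10))      # placeholder tensions (kN)
train, val, test = split_indices(len(tensions))
t_min, t_max = tensions[train].min(), tensions[train].max()  # training-split statistics
scaled = minmax_scale(tensions, t_min, t_max)
```

With training-split statistics, a few validation or test values may fall slightly outside [0, 1], which is usually harmless for the network.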

Table 2 presents the ranges of hyperparameters and selected optimal values for each model. We select the best hyperparameters in the validation set using Tree-structured Parzen Estimators (TPE) [53] with 20 trials. Moreover, we terminate trials with poor performance using Asynchronous Successive Halving Algorithm (ASHA) [54]. We specify the hyperparameters of MPNN in Section 4.1. The hyperparameters of MLP are the number of hidden neurons in each layer and the dropout rate. We optimize the hyperparameters that determine the network structure, batch size, and learning rate. We perform the hyperparameter optimization individually for each of the 5 cases and models.

Table 2.

Hyperparameter optimization. The optimal values for multitask learning, regression learning, and classification learning are separated by commas and appear in order.

We use the Adam optimizer [55] and train the MPNN model to minimize the loss function defined in Equation (28). We set the number of epochs to 1000. The learning rate selected by hyperparameter optimization is decayed by multiplying it by 0.995 after every epoch. We use PyTorch and the Deep Graph Library (DGL) on a single NVIDIA GeForce RTX 2080 Ti GPU for network implementation and optimization. We train the MLP model with the same settings as the MPNN model.
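The per-epoch decay amounts to an exponential schedule, lr_e = lr_0 · 0.995^e. The plain-Python sketch below shows its effect over the 1000 epochs; the initial learning rate is a hypothetical value, since the tuned rates are in Table 2. In PyTorch, the same schedule is obtained with `torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.995)` stepped once per epoch.

```python
def decayed_lr(lr0, epoch, gamma=0.995):
    """Learning rate after `epoch` epochs when it is multiplied by gamma once per epoch."""
    return lr0 * gamma ** epoch

lr0 = 1e-3                                   # hypothetical tuned initial learning rate
schedule = [decayed_lr(lr0, e) for e in range(1000)]
final_ratio = decayed_lr(lr0, 1000) / lr0    # about 0.0067 after 1000 epochs
```

In other words, by the end of training the learning rate has shrunk to well under 1% of its initial value, which lets the optimizer settle into a minimum.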

5.2. Results

In this section, we report the results of the deep learning networks on the test set. We use accuracy to evaluate the damaged cable classification performance. We use the mean absolute error (MAE), the root mean squared error (RMSE), and the correlation coefficient between the target and output data to compare the cross-sectional area predictions. MAE, RMSE, and the correlation coefficient are defined as follows.

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$r = \frac{\sum_{i=1}^{n}(y_i - \bar{y})(\hat{y}_i - \bar{\hat{y}})}{\sqrt{\sum_{i=1}^{n}(y_i - \bar{y})^2}\,\sqrt{\sum_{i=1}^{n}(\hat{y}_i - \bar{\hat{y}})^2}}$$

where $n$ is the number of samples, and $y_i$, $\hat{y}_i$, $\bar{y}$, and $\bar{\hat{y}}$ are the target, the output, the average of the targets, and the average of the outputs, respectively. Lower MAE and RMSE and a higher correlation coefficient indicate better performance.
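For reference, the three regression metrics can be computed with plain Python as sketched below; the target and prediction lists are hypothetical toy values, not results from this study. In the toy example the predictions are an exact linear function of the targets, so the correlation is 1 even though MAE and RMSE are nonzero, which illustrates why error and correlation are reported together.

```python
import math

def mae(y, y_hat):
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)

def rmse(y, y_hat):
    """Root mean squared error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y))

def pearson_r(y, y_hat):
    """Pearson correlation coefficient between targets and outputs."""
    my, mp = sum(y) / len(y), sum(y_hat) / len(y_hat)
    cov = sum((a - my) * (b - mp) for a, b in zip(y, y_hat))
    sy = math.sqrt(sum((a - my) ** 2 for a in y))
    sp = math.sqrt(sum((b - mp) ** 2 for b in y_hat))
    return cov / (sy * sp)

y = [0.1, 0.5, 0.9]        # hypothetical target area ratios
y_hat = [0.2, 0.5, 0.8]    # hypothetical predictions (0.75 * y + 0.125)
```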

Table 3 summarizes the results of MPNN, MLP, and XGBoost. Compared with MLP and XGBoost, MPNN always achieves higher classification accuracy and correlation and lower error in cross-sectional area estimation. In addition, the classification accuracy of MLP drops to 93.58% depending on the sensor layout, whereas MPNN is more stable, with an accuracy of at least 98.33% in all 5 cases. For cross-sectional area prediction, MPNN is also more accurate and more stable than MLP and XGBoost. Meanwhile, the performance of multi-task learning is similar to that of single-task learning, in which each task is trained individually. However, multi-task learning requires training the network only once, whereas single-task learning multiplies the training time by the number of tasks. Therefore, multi-task learning is efficient because it learns multiple tasks simultaneously while achieving performance similar to single-task learning.

Table 3.

Results of MLP and MPNN on the test set for 5 cases. The best value for each case is noted in bold. (MTL: multi-task learning, Single: single-task learning, Class: classification, Reg: regression).

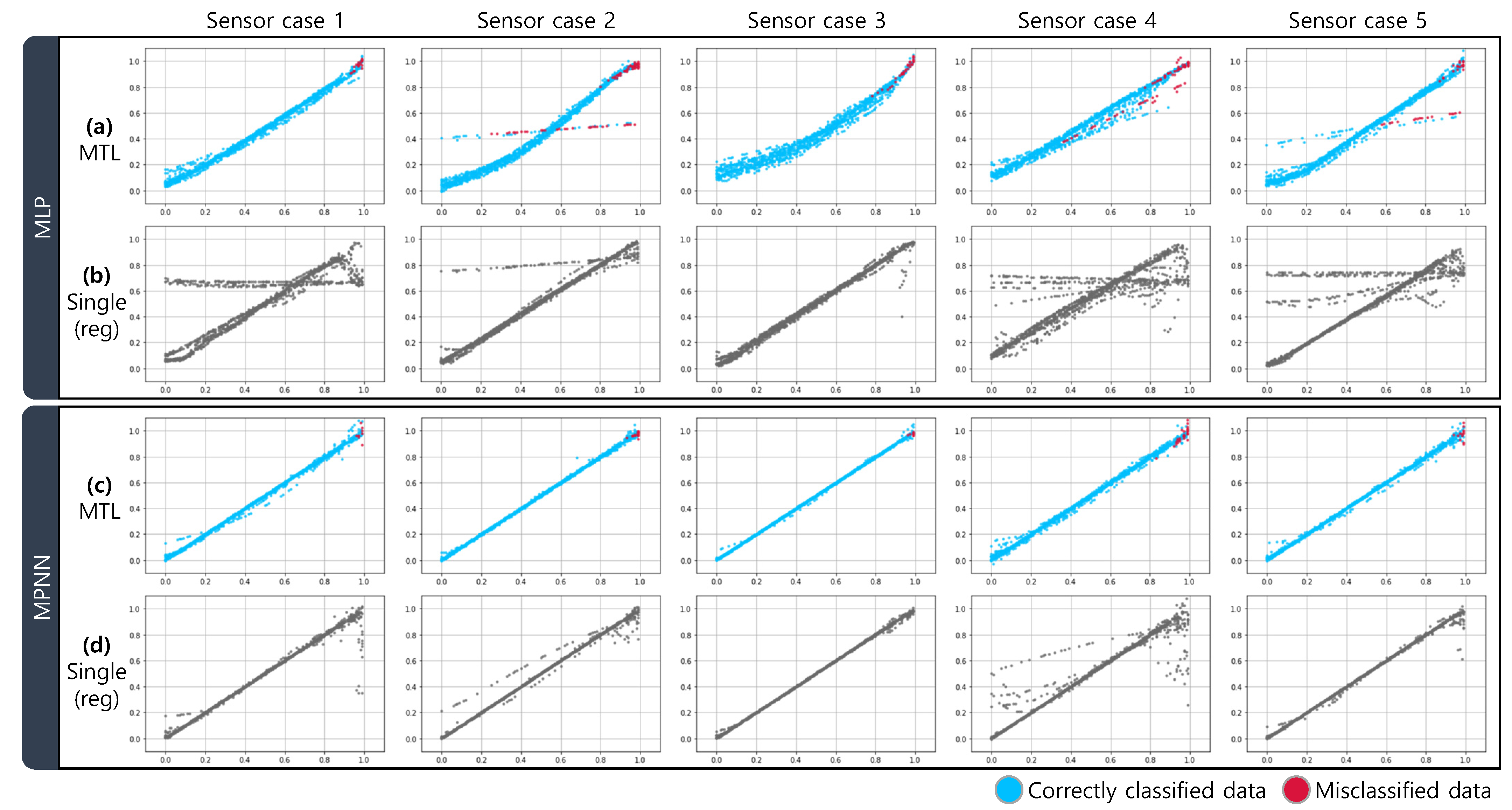

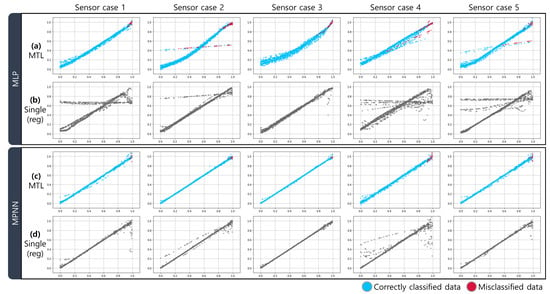

Figure 5 shows scatter plots of the predicted versus actual values for the cross-sectional area estimation of the damaged cables. In the MLP results (Figure 5a,b), many points deviate substantially from the straight line, especially in cases 2, 4, and 5, which confirms that the errors in cross-sectional area prediction are considerable. In contrast, in the MPNN scatter plots (Figure 5c,d), the data points lie closer to the straight line than those of MLP in all cases. For classification, the multi-task learning results in Figure 5a,c show that the red points, which are misclassified data, are concentrated where the cross-sectional area is close to 1. This suggests that the smaller the damage, the more likely the damaged cable is to be misclassified.

Figure 5.

Scatter plots of MLP and MPNN on the test set for estimating the cross-sectional areas of damaged cables in 5 cases. The x-axis is the actual cross-sectional area (target data), and the y-axis is the predicted cross-sectional area. Correctly classified data are indicated as blue points, and incorrectly classified data are shown as red points in multi-task learning. (a) MLP with multi-task learning, (b) MLP with single-task learning (regression), (c) MPNN with multi-task learning, (d) MPNN with single-task learning (regression).

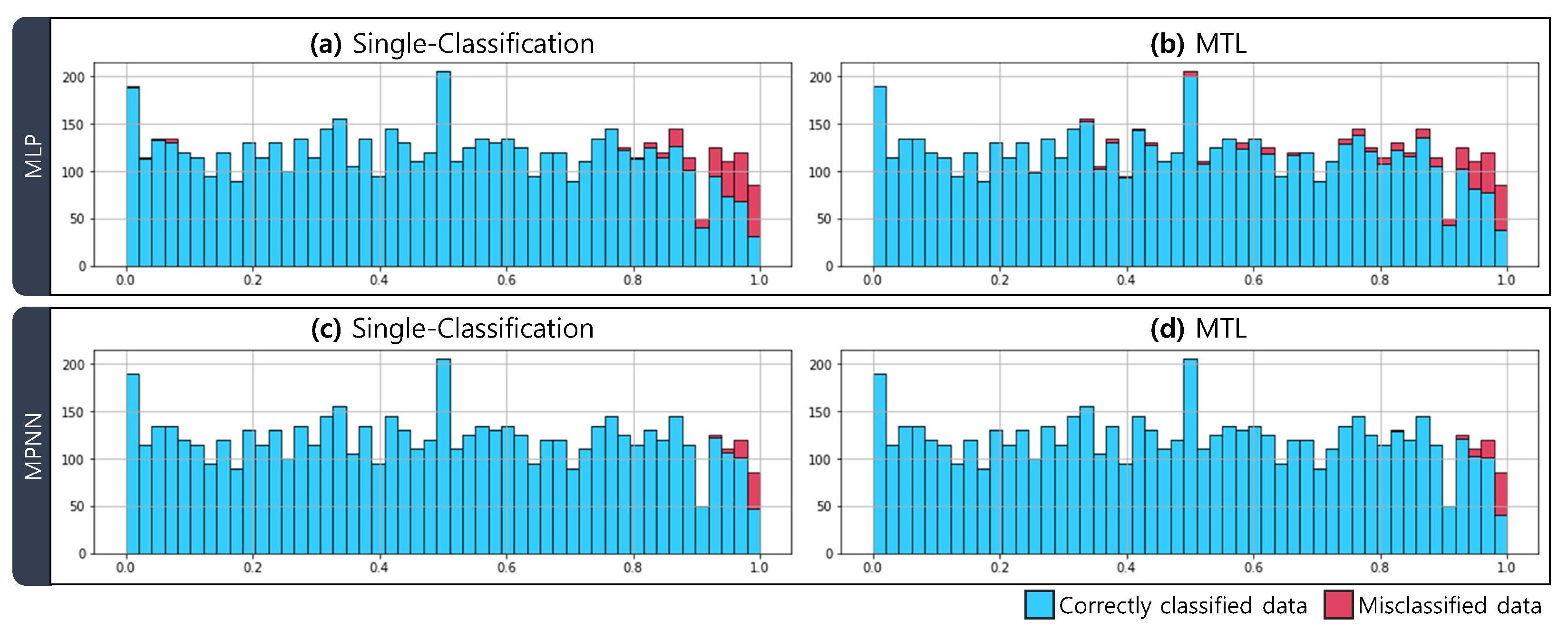

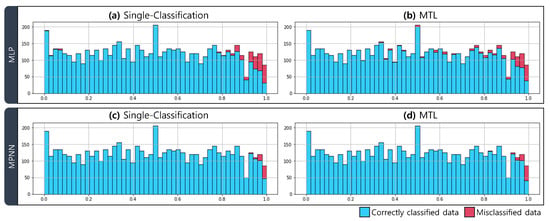

Figure 6 shows histograms of the correctly classified and misclassified data over the range of cross-sectional areas. For all four networks, the correctly classified data (blue) are generally distributed evenly, whereas the misclassified data (red) are skewed toward cross-sectional areas close to 1. For a more precise verification, we divide the cross-sectional area range into intervals of width 0.1 and calculate the classification accuracy of the data in each interval.
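The interval-wise accuracy described above can be sketched as follows. This is a minimal NumPy illustration, assuming normalized areas in [0, 1] (0 = fully broken, 1 = intact) and a boolean flag marking whether the damaged cable was located correctly; the function name and sample values are hypothetical.

```python
import numpy as np

def accuracy_by_area(areas, correct, width=0.1):
    """Classification accuracy of the samples falling in each area interval.

    areas:   normalized cross-sectional area of the damaged cable per sample
             (assumed: 0 = fully broken, 1 = intact).
    correct: True where the network located the damaged cable correctly.
    Returns (lower edge, upper edge, accuracy, sample count) for non-empty bins.
    """
    areas = np.asarray(areas, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    n_bins = int(round(1.0 / width))
    # Round before flooring so that values such as 0.3 / 0.1 = 2.999... land in bin 3;
    # an area of exactly 1.0 is folded into the last bin by the clip.
    idx = np.floor(np.round(areas / width, 9)).astype(int)
    idx = np.clip(idx, 0, n_bins - 1)
    table = []
    for b in range(n_bins):
        in_bin = idx == b
        if in_bin.any():
            table.append((round(b * width, 10), round((b + 1) * width, 10),
                          float(correct[in_bin].mean()), int(in_bin.sum())))
    return table

# Hypothetical test-set values, not the paper's data.
areas = [0.05, 0.15, 0.92, 0.95, 0.97]
correct = [True, True, True, False, False]
for lower, upper, acc, n in accuracy_by_area(areas, correct):
    print(f"[{lower:.1f}, {upper:.1f}): accuracy {acc:.2%} over {n} samples")
```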

Figure 6.

Histograms of correctly classified data (blue) and misclassified data (red) according to the damaged cable cross-sectional areas. (a) MLP with single-task learning (classification), (b) MLP with multi-task learning, (c) MPNN with single-task learning (classification), (d) MPNN with multi-task learning are presented.

Table 4 presents the classification accuracies by cross-sectional area. When the cross-sectional area is less than 0.9, the accuracies of MLP and XGBoost range from 81% to 100%. When the cross-sectional area is 0.9 or more, the classification accuracy of MLP drops to as low as 50%, and the best accuracy is only 79.59% (case 5). For MPNN, in contrast, the accuracy exceeds 99.2% when the cross-sectional area is less than 0.9 and is almost 100% in all cases for both multi-task and single-task learning. When the cross-sectional area is 0.9 or more, the accuracy of MPNN ranges from 73.47% to 91.84%. Thus, although the accuracy of MPNN decreases slightly when the loss of cable cross-sectional area is small, MPNN classifies damaged cables more reliably than MLP and XGBoost. In addition, for each cross-sectional area range, neither multi-task learning nor single-task learning consistently outperforms the other across all cases.

Table 4.

Classification accuracies by cross-sectional areas of damaged cable for 5 cases. For each cross-sectional area, blue indicates the most accurate, and red denotes the least accurate.

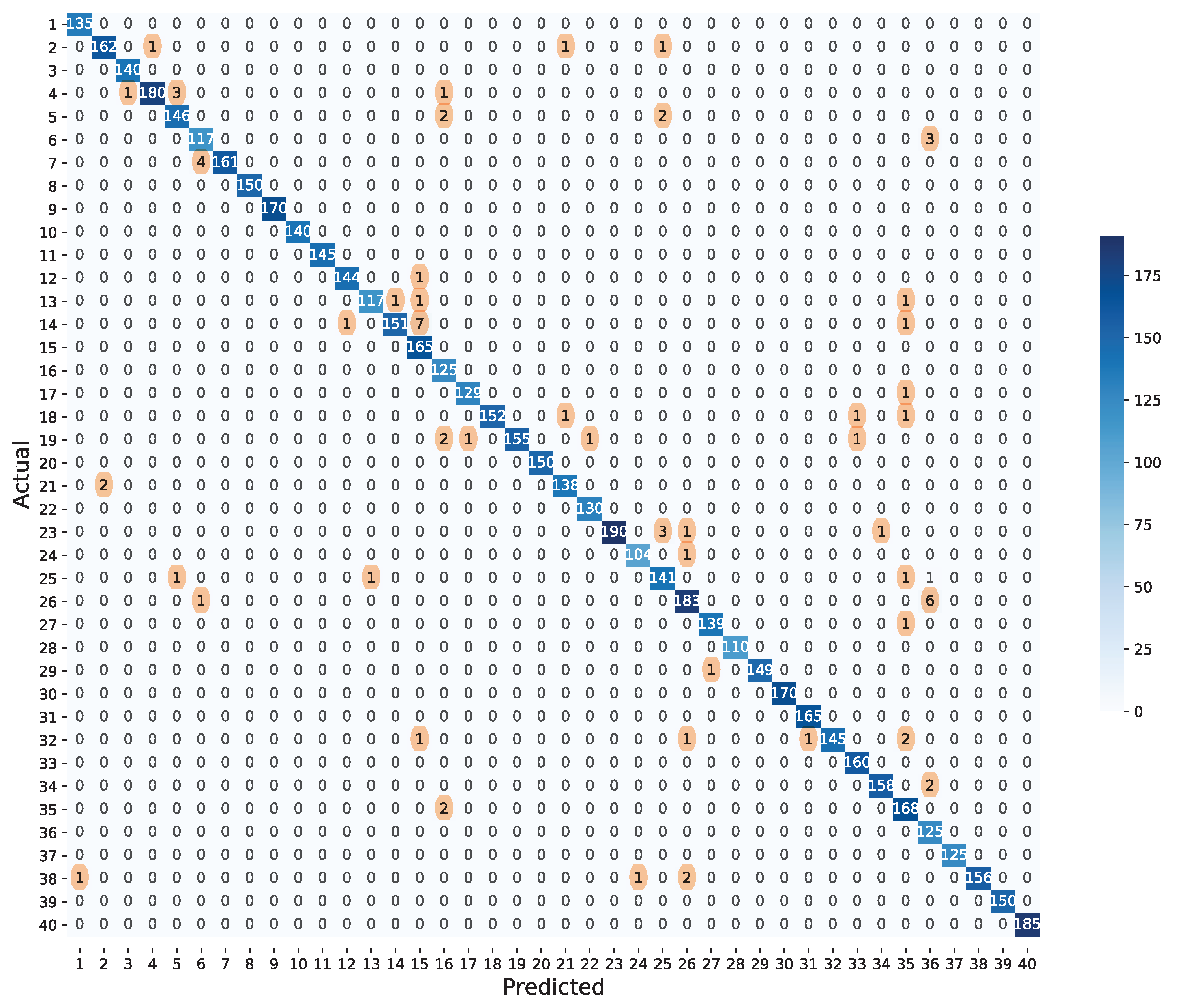

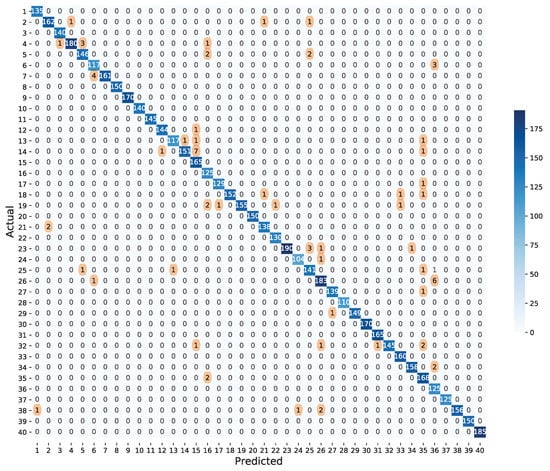

Figure 7 shows the confusion matrix of MPNN combining all 5 cases. Because there are only a few misclassified samples, we highlight them with orange shading. We observe that a misclassified cable tends to be located close to the actual damaged cable. For example, when the actual labels are 4, 7, 13, and 14, the predicted labels include 3, 6, 14, 16, and 15. These cables are located next to each other.
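A confusion matrix of this kind, together with the off-diagonal cells that are shaded in orange, can be produced with a few lines of NumPy. The sketch below assumes 0-based cable indices; the function names and labels are hypothetical. scikit-learn's `sklearn.metrics.confusion_matrix` with `labels=range(40)` would give the same matrix.

```python
import numpy as np

def confusion_matrix(true_label, pred_label, n_cables=40):
    """cm[i, j] counts samples whose damaged cable is i and whose prediction is j."""
    cm = np.zeros((n_cables, n_cables), dtype=int)
    # np.add.at accumulates correctly even when the same (i, j) pair occurs repeatedly
    np.add.at(cm, (np.asarray(true_label), np.asarray(pred_label)), 1)
    return cm

def misclassified_cells(cm):
    """(actual, predicted, count) for every non-zero off-diagonal cell."""
    rows, cols = np.nonzero(cm)
    return [(int(r), int(c), int(cm[r, c])) for r, c in zip(rows, cols) if r != c]

# Hypothetical labels, not the paper's data.
cm = confusion_matrix([4, 4, 7, 13, 20], [4, 3, 6, 14, 20])
print(misclassified_cells(cm))
```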

Figure 7.

Confusion matrix of MPNN combining all 5 cases.

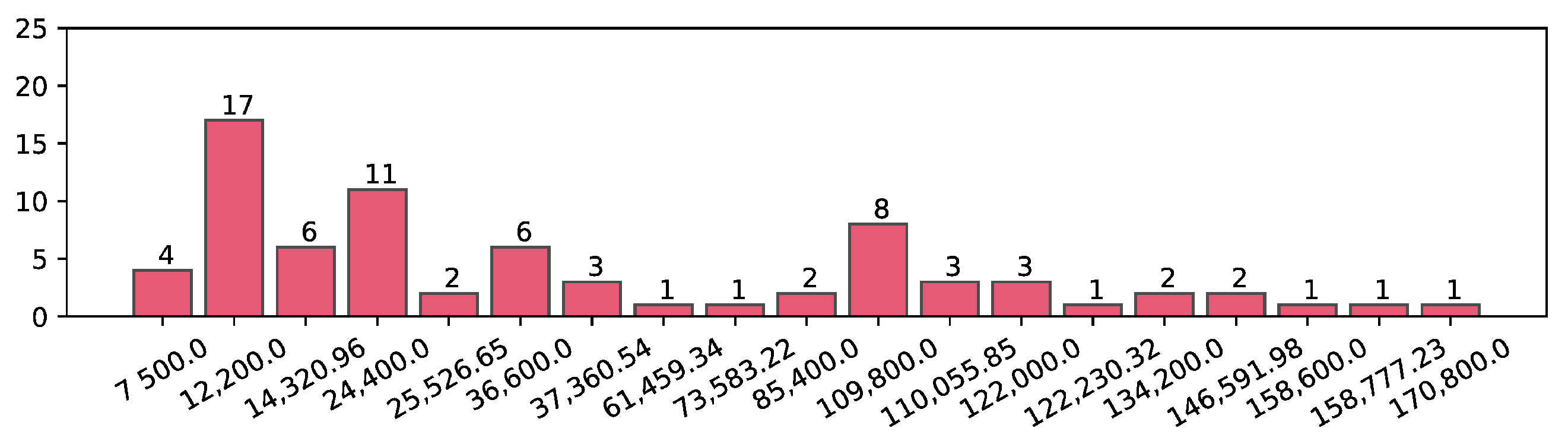

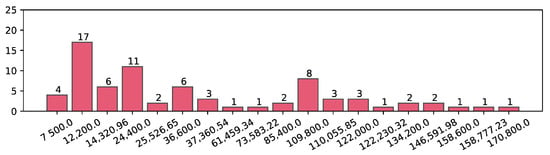

To illustrate the spatial relationship between the actual and predicted labels in more detail, Figure 8 shows, for all 5 cases, a histogram of the distances between the actual damaged cable and the cable incorrectly predicted by the network. Of the 75 misclassified samples, 17 have a distance of only 12,200 between the actual and predicted damaged cables, which is the spacing between adjacent cables. Therefore, if the proposed method is applied to an actual bridge, we recommend also inspecting the cables on both sides of the predicted damaged cable to prevent more significant damage.
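The distance histogram can be sketched as below, given coordinates for every cable. The geometry here is purely hypothetical: 40 cables on a single axis spaced 12,200 apart, which reproduces only the adjacent-cable spacing quoted in the text, not the layout of the simulated bridge. The function name and labels are also illustrative.

```python
import numpy as np

def misclassification_distances(true_label, pred_label, positions):
    """Euclidean distance between the actual and predicted damaged cables
    for every misclassified sample.

    positions: (n_cables, d) array of cable coordinates.
    """
    true_label = np.asarray(true_label)
    pred_label = np.asarray(pred_label)
    wrong = true_label != pred_label
    diff = positions[true_label[wrong]] - positions[pred_label[wrong]]
    return np.linalg.norm(diff, axis=1)

# Hypothetical geometry: 40 cables on one axis, 12,200 apart.
positions = 12200.0 * np.arange(40, dtype=float).reshape(-1, 1)

dist = misclassification_distances([0, 5, 10, 10], [1, 5, 12, 9], positions)
values, counts = np.unique(dist, return_counts=True)   # histogram over exact distances
adjacent_share = np.mean(np.isclose(dist, 12200.0))     # fraction off by one cable
print(dict(zip(values.tolist(), counts.tolist())), adjacent_share)
```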

Figure 8.

Histogram of the distance between the actual damaged cable and the misclassified cable for the misclassified data.

The tensions used as input data are measured on only ten cables, whereas the proposed technique assesses damage to all 40 cables. Therefore, we compare the predictions when one of the ten cables with tension data is damaged and when one of the 30 cables without tension data is damaged. Table 5 shows the results for damaged cables with and without sensors in all cases.

Table 5.

MLP and MPNN results for a damaged cable with a sensor (Y) and a damaged cable without a sensor (N). Bold indicates the better of the two results.

The performance gap between MPNN and both MLP and XGBoost is most pronounced when a cable without a sensor is damaged. The classification accuracy is higher when a cable with a sensor is damaged than when a cable without a sensor is damaged. The regression performance shows a similar pattern, except in cases 1, 2, and 3, where, on the contrary, both MLP and MPNN give better results when a cable without a sensor is damaged. This appears to be related to the sensor positions. As shown in Figure 4, unlike in cases 4 and 5, the sensors in cases 1, 2, and 3 are always fewer than five cables apart. Because the sensors are evenly distributed in these cases, no performance degradation is observed when a cable without a sensor is damaged, particularly in the regression task. For more detailed results, Table 6 shows, for case 3, the classification results (accuracy, precision, recall, and F1 score) and the regression results (MAE, RMSE, and correlation coefficient) for each cable when that cable is damaged.
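The sensor/no-sensor split in Table 5 amounts to grouping test samples by whether the actual damaged cable carries a tension sensor. The sketch below shows the classification side of that grouping; the sensor set, the 0-based labels, and the function name are hypothetical and do not correspond to any of the paper's five layouts.

```python
import numpy as np

def accuracy_by_sensor(true_label, pred_label, sensor_cables):
    """Classification accuracy split by whether the damaged cable carries a
    tension sensor (Y) or not (N)."""
    true_label = np.asarray(true_label)
    correct = true_label == np.asarray(pred_label)
    has_sensor = np.isin(true_label, list(sensor_cables))

    def acc(mask):
        # NaN when a group is empty, so it is not mistaken for 0% accuracy
        return float(correct[mask].mean()) if mask.any() else float("nan")

    return {"Y": acc(has_sensor), "N": acc(~has_sensor)}

# Hypothetical layout with ten instrumented cables.
sensor_cables = {0, 4, 8, 12, 16, 20, 24, 28, 32, 36}
result = accuracy_by_sensor([0, 4, 1, 2, 3], [0, 4, 1, 5, 3], sensor_cables)
print(result)
```

The same grouping mask can be applied to the regression errors to obtain the MAE and RMSE columns for each group.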

Table 6.

Results of MPNN for each cable in case 3. The rows shaded in green indicate the ten cables with tension data. Values in the lower 5% of performance appear in red, and values in the upper 5% appear in blue.

The accuracy, precision, recall, and F1 score of each cable are calculated as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP},$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},$$

where TN, TP, FN, and FP are the numbers of true negatives, true positives, false negatives, and false positives, respectively. For the ten cables with tension data (shaded in green in Table 6), all four classification measures (accuracy, precision, recall, and F1 score) are 1.00. We observe that cable 25 and cable 33 have the lowest precisions, which can be interpreted as a relatively high probability of misclassification among the samples for which MPNN in multi-task learning predicts that cable 15 or cable 24 is damaged. Cable 14 has the lowest recall in both multi-task and single-task learning, which means that when cable 14 is damaged, MPNN is relatively likely to predict that another cable is damaged. In multi-task learning, cable 14 and cable 15 also have the lowest accuracy and F1 score, and cable 14 shows lower classification metrics in both multi-task and single-task learning. All the cables mentioned so far lack sensors in case 3. Unlike classification, the cross-sectional area prediction appears to be largely unrelated to the availability of tension data. For example, the regression performance is excellent even for damaged cables 9 and 17, which have no tension data. Therefore, estimating the cross-sectional area of a single damaged cable depends less on the positions of the tension sensors than classification does.
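The accuracy, precision, recall, and F1 definitions used for Table 6 can be evaluated directly on a confusion matrix by treating each cable as a one-vs-rest binary problem. This NumPy sketch assumes that reading; the name `per_cable_metrics` and the 3-cable toy matrix are hypothetical.

```python
import numpy as np

def per_cable_metrics(cm):
    """One-vs-rest accuracy, precision, recall, and F1 for every cable.

    cm[i, j] counts samples whose damaged cable is i and whose prediction is j.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as cable k, but another cable was damaged
    fn = cm.sum(axis=1) - tp   # cable k was damaged, but another cable was predicted
    tn = total - tp - fp - fn
    with np.errstate(divide="ignore", invalid="ignore"):
        # Undefined ratios (no predictions / no samples for a cable) are set to 0
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    accuracy = (tp + tn) / total
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Toy 3-cable confusion matrix (hypothetical counts).
m = per_cable_metrics([[3, 1, 0],
                       [0, 2, 0],
                       [0, 0, 4]])
print({k: v.round(3).tolist() for k, v in m.items()})
```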

5.3. Discussion

We have shown that MPNN can successfully assess cable damage and outperforms MLP. When the cable cross-sectional area is reduced below 0.9, MPNN always classifies the damaged cable more accurately than MLP. However, when the damage is minor, with a cross-sectional area of 0.9 or more, the classification accuracy deteriorates slightly. If the deep learning network is improved to handle bridge structure data with minor damage more accurately, we expect the overall accuracy to approach 100%. Cables misclassified by MPNN are often located right next to the actual damaged cables, and these misclassification trends could be used to update the algorithm and the training process. Nevertheless, since MPNN already achieves an accuracy of 98% or higher, we believe that it has potential as an SHM technology. In addition, the performance of multi-task learning is similar to that of separate single-task learning, which shows that a single network trained with multi-task learning can efficiently replace two networks that each learn one task. Therefore, bridge conditions can be evaluated in several ways with only one network, and adding tasks other than predicting the cross-sectional area of the cable is worth exploring in the future.

5.3.1. Contribution

We confirmed that MPNN achieves higher overall performance than MLP and XGBoost. In particular, MPNN significantly outperforms MLP and XGBoost when a cable without a sensor is damaged. Because MPNN can process spatial information between sensors, it appears to estimate damage to cables without sensors more successfully. Through message passing, MPNN propagates information along the connectivity between sensors. In addition, its readout function turns node information into a graph-level output, which makes it possible to predict the states of all 40 cables effectively. In this paper, we captured spatial correlation by incorporating the sensor geometry into MPNN. Moreover, we showed that two tasks (classifying damaged cables and estimating cross-sectional areas) can be trained efficiently with multi-task learning. Finally, we proposed a loss function using a mask so that the damaged cable can be estimated more successfully.
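The two properties credited to MPNN here, message passing along sensor connections and a readout that turns vertex states into a graph-level output covering all 40 cables, can be illustrated with a toy forward pass. This NumPy sketch is a deliberate simplification: the general MPNN framework of Gilmer et al. allows learned edge networks, GRU updates, and a set2set readout, whereas here a single weight matrix produces messages, a tanh layer updates the states, and the readout is a plain sum. All weights, sizes, and the chain-shaped adjacency are hypothetical, and the model is untrained.

```python
import numpy as np

def init_params(n_feat, hidden, n_cables, rng):
    """Random placeholder weights for a toy, untrained model."""
    s = 0.1
    return {
        "embed": s * rng.standard_normal((n_feat, hidden)),
        "msg": s * rng.standard_normal((hidden, hidden)),
        "self": s * rng.standard_normal((hidden, hidden)),
        "cls": s * rng.standard_normal((hidden, n_cables)),
        "reg": s * rng.standard_normal((hidden, n_cables)),
    }

def mpnn_forward(x, adj, params, steps=3):
    """Message passing over the sensor graph followed by a graph-level readout.

    x:   (n_sensors, n_feat) vertex features, e.g. measured cable tensions.
    adj: (n_sensors, n_sensors) edge weights between sensors (0 = no edge).
    """
    h = np.tanh(x @ params["embed"])              # initial vertex states
    for _ in range(steps):
        m = adj @ (h @ params["msg"])             # each vertex sums its neighbours' messages
        h = np.tanh(h @ params["self"] + m)       # update vertex states
    g = h.sum(axis=0)                             # readout: order-independent graph vector
    logits = g @ params["cls"]                    # one score per cable (which one is damaged)
    areas = g @ params["reg"]                     # one area estimate per cable
    return logits, areas

rng = np.random.default_rng(0)
n_sensors, n_cables = 10, 40
params = init_params(1, 16, n_cables, rng)
x = rng.standard_normal((n_sensors, 1))
# Hypothetical chain of sensors: each sensor is connected to its neighbours.
adj = np.eye(n_sensors, k=1) + np.eye(n_sensors, k=-1)
logits, areas = mpnn_forward(x, adj, params)
print(logits.shape, areas.shape)
```

Because the readout sums over vertices, relabeling the sensors (permuting `x` and the rows and columns of `adj` consistently) leaves both outputs unchanged.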

5.3.2. Limitation

In this study, we represented the sensor geometry as a graph but did not consider mechanical properties such as the material type or Young’s modulus of the structure, because it is difficult to define the relationship between two sensors when several types of materials lie between them. Moreover, it may be challenging to learn the behavior of the entire structure with the proposed method, because the entire topology of the bridge cannot be deduced from only a few sensors. Capturing it may require a sufficiently large number of sensors, which is not always feasible because of cost constraints. Therefore, to understand the condition of the entire bridge with only a small number of sensors, we need to fundamentally examine the influence of the sensor locations and use the findings to enhance the model.

5.3.3. Extension To Multiple Damaged Cables

In this study, we assess the GNN-based SHM technology under the assumption that only one cable is damaged. To generate more realistic data similar to a real-world bridge, we need to simulate several damaged cables. The proposed technique can still be applied, even when the number of damaged cables is unknown, by converting the single-label classification problem into a multi-label classification problem. A straightforward approach is to replace the cross-entropy loss function with a loss function for multi-label classification, such as binary cross-entropy. However, the threshold that decides which cables are classified as damaged is an essential component and must be set appropriately. If the target data, which indicate the cross-sectional areas of the actual damaged cables, are represented as a vector of dimension w, where w is the number of damaged cables, we can use the mask as in Equation (27) to compute the loss for the regression task. Specifically, multiplying the 40 outputs of the network for task 2 by a mask matrix whose columns are one-hot vectors indicating the locations of the damaged cables yields the cross-sectional areas of the damaged cables. Similarly, the mask is created from the actual labels in the training step and from the predicted labels in the test step. The total loss combines the classification loss with the regression loss scaled by the L1-norm of the mask. This scaling prevents the regression task from being weighted more heavily than the classification task as the number of damaged cables increases. Therefore, the proposed multi-task learning approach remains applicable even with multiple damaged cables. As discussed above, we will also examine the conditions under which the cable-stayed bridge model resembles a real-world bridge and improve our technique so that it can be applied to real-world bridges.
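The multi-label extension outlined above can be sketched concretely. The NumPy code below assumes binary cross-entropy for the classification task and a squared-error regression loss, and it reads "L1-norm of the mask" as the entrywise sum of absolute values, which equals w. Equation (27) itself is not reproduced here, so the authors' exact regression loss may differ. All function names and the 0.5 threshold are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def damage_mask(cable_ids, n_cables=40):
    """n_cables x w mask whose j-th column is the one-hot vector of the j-th damaged cable."""
    cable_ids = np.asarray(cable_ids, dtype=int)
    mask = np.zeros((n_cables, cable_ids.size))
    mask[cable_ids, np.arange(cable_ids.size)] = 1.0
    return mask

def multi_damage_loss(logits, pred_areas, damaged_ids, target_areas):
    n_cables = logits.shape[0]
    # Task 1: multi-label classification with binary cross-entropy.
    y = np.zeros(n_cables)
    y[damaged_ids] = 1.0
    p = np.clip(sigmoid(logits), 1e-7, 1 - 1e-7)
    bce = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))
    # Task 2: the mask picks out the predicted areas of the damaged cables only.
    mask = damage_mask(damaged_ids, n_cables)
    selected = mask.T @ pred_areas                      # shape (w,)
    sq_err = np.sum((selected - np.asarray(target_areas)) ** 2)
    # Divide by the L1-norm of the mask (= w) so the regression term does not
    # grow with the number of damaged cables; max() guards the w = 0 case.
    return bce + sq_err / max(np.abs(mask).sum(), 1.0)

def predict_damaged(logits, threshold=0.5):
    """Test-time labels: every cable whose probability exceeds the threshold."""
    return np.flatnonzero(sigmoid(logits) > threshold)

# Hypothetical network outputs for one sample with two damaged cables.
logits = np.zeros(40)
pred_areas = np.zeros(40)
pred_areas[[3, 10]] = [0.5, 0.2]
loss = multi_damage_loss(logits, pred_areas, [3, 10], [0.5, 0.4])
print(loss)
```

During training the mask is built from the actual damaged cables (`damaged_ids`); at test time, the indices returned by `predict_damaged` would take their place, for example `pred_areas[predict_damaged(logits)]`, and the threshold becomes a hyperparameter to tune.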

6. Conclusions

In this paper, we represented the sensor data as a graph composed of vertex and edge features and proposed a damage assessment method for cable-stayed bridges that applies this graph representation to MPNN. To increase the practicality of the experiment, we used tension data from only 10 cables. Although it is challenging to assess the conditions of all cables with only a limited number of sensors, MPNN successfully estimated the damage to the cable-stayed bridge. We adopted multi-task learning so that MPNN could efficiently learn two tasks: locating damaged cables and predicting the cable areas. MPNN outperformed the MLP trained for comparison, classifying damaged cables more reliably not only when the cable is completely broken and has zero area but also when the damage is relatively small. Therefore, we expect that MPNN can detect damage at an early stage for structural maintenance. Furthermore, MPNN can be applied to actual bridge data when material information about the structural components is available. For example, we can train MPNN with cable-stayed bridge data simulated in PAAP under the same conditions as a real bridge and then use the pre-trained MPNN directly for prediction on real bridge data. Additionally, although we simulated only one damaged cable in this study, we will generate data with multiple damaged cables to train the network for more general real-world bridge cases. We also introduced, as future work, an approach to damage localization and severity assessment with the proposed method when several cables are damaged. Our model could be extended with additional vertex features, such as node displacements and xyz coordinates. Moreover, the study can be further expanded by training MPNNs to predict other structural damage, such as decreased stiffness, in addition to cable conditions.

Author Contributions

Conceptualization, Y.J.; data curation, V.-T.P. and S.-E.K.; formal analysis, H.S.; methodology, H.S.; project administration, Y.J.; software, H.S.; supervision, Y.J. and S.-E.K.; validation, H.S. and V.-T.P.; writing (original draft), H.S.; writing (review and editing), Y.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Basic Research Program through the National Research Foundation of Korea (NRF) funded by the MSIT under Grant 2019R1A4A1021702, and in part by the Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2019-0-00242, Development of a Big Data Augmented Analysis Profiling Platform for Maximizing Reliability and Utilization of Big Data).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, S.; Li, H.; Liu, Y.; Lan, C.; Zhou, W.; Ou, J. SMC structural health monitoring benchmark problem using monitored data from an actual cable-stayed bridge. Struct. Control Health Monit. 2014, 21, 156–172.

- Kordestani, H.; Xiang, Y.Q.; Ye, X.W.; Yun, C.B.; Shadabfar, M. Localization of damaged cable in a tied-arch bridge using Arias intensity of seismic acceleration response. Struct. Control Health Monit. 2020, 27, e2491.

- Das, R.; Pandey, S.A.; Mahesh, M.; Saini, P.; Anvesh, S. Effect of dynamic unloading of cables in collapse progression through a cable stayed bridge. Asian J. Civ. Eng. 2016, 17, 397–416.

- Santos, J.P.; Crémona, C.; Orcesi, A.D.; Silveira, P. Multivariate statistical analysis for early damage detection. Eng. Struct. 2013, 56, 273–285.

- Khan, S.; Yairi, T. A review on the application of deep learning in system health management. Mech. Syst. Signal Process. 2018, 107, 241–265.

- Sun, L.; Shang, Z.; Xia, Y.; Bhowmick, S.; Nagarajaiah, S. Review of bridge structural health monitoring aided by big data and artificial intelligence: From condition assessment to damage detection. J. Struct. Eng. 2020, 146, 04020073.

- Pathirage, C.S.N.; Li, J.; Li, L.; Hao, H.; Liu, W.; Ni, P. Structural damage identification based on autoencoder neural networks and deep learning. Eng. Struct. 2018, 172, 13–28.

- Gu, J.; Gul, M.; Wu, X. Damage detection under varying temperature using artificial neural networks. Struct. Control Health Monit. 2017, 24, e1998.

- Truong, T.T.; Dinh-Cong, D.; Lee, J.; Nguyen-Thoi, T. An effective deep feedforward neural networks (DFNN) method for damage identification of truss structures using noisy incomplete modal data. J. Build. Eng. 2020, 30, 101244.

- Chang, C.M.; Lin, T.K.; Chang, C.W. Applications of neural network models for structural health monitoring based on derived modal properties. Measurement 2018, 129, 457–470.

- Abdeljaber, O.; Avci, O.; Kiranyaz, S.; Gabbouj, M.; Inman, D.J. Real-time vibration-based structural damage detection using one-dimensional convolutional neural networks. J. Sound Vib. 2017, 388, 154–170.

- Azimi, M.; Eslamlou, A.D.; Pekcan, G. Data-Driven Structural Health Monitoring and Damage Detection through Deep Learning: State-of-the-Art Review. Sensors 2020, 20, 2778.

- Cha, Y.J.; Choi, W.; Büyüköztürk, O. Deep learning-based crack damage detection using convolutional neural networks. Comput.-Aided Civ. Infrastruct. Eng. 2017, 32, 361–378.

- Modarres, C.; Astorga, N.; Droguett, E.L.; Meruane, V. Convolutional neural networks for automated damage recognition and damage type identification. Struct. Control Health Monit. 2018, 25, e2230.

- Gao, Y.; Mosalam, K.M. Deep transfer learning for image-based structural damage recognition. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 748–768.

- Kim, B.; Cho, S. Image-based concrete crack assessment using mask and region-based convolutional neural network. Struct. Control Health Monit. 2019, 26, e2381.

- Thai, H.T.; Kim, S.E. Practical advanced analysis software for nonlinear inelastic analysis of space steel structures. Adv. Eng. Softw. 2009, 40, 786–797.

- Ngo-Huu, C.; Nguyen, P.C.; Kim, S.E. Second-order plastic-hinge analysis of space semi-rigid steel frames. Thin-Walled Struct. 2012, 60, 98–104.

- Nguyen, P.C.; Kim, S.E. Nonlinear inelastic time-history analysis of three-dimensional semi-rigid steel frames. J. Constr. Steel Res. 2014, 101, 192–206.

- Truong, V.; Kim, S.E. An efficient method for reliability-based design optimization of nonlinear inelastic steel space frames. Struct. Multidiscip. Optim. 2017, 56, 331–351.

- Thai, H.T.; Kim, S.E. Second-order inelastic analysis of cable-stayed bridges. Finite Elem. Anal. Des. 2012, 53, 48–55.

- Kim, S.E.; Thai, H.T. Nonlinear inelastic dynamic analysis of suspension bridges. Eng. Struct. 2010, 32, 3845–3856.

- Kim, S.E.; Thai, H.T. Second-order inelastic analysis of steel suspension bridges. Finite Elem. Anal. Des. 2011, 47, 351–359.

- Dai, K.; Li, A.; Zhang, H.; Chen, S.E.; Pan, Y. Surface damage quantification of postearthquake building based on terrestrial laser scan data. Struct. Control Health Monit. 2018, 25, e2210.

- Farahani, B.V.; Barros, F.; Sousa, P.J.; Cacciari, P.P.; Tavares, P.J.; Futai, M.M.; Moreira, P. A coupled 3D laser scanning and digital image correlation system for geometry acquisition and deformation monitoring of a railway tunnel. Tunn. Undergr. Space Technol. 2019, 91, 102995.

- Sajedi, S.O.; Liang, X. Vibration-based semantic damage segmentation for large-scale structural health monitoring. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 579–596.

- Olsen, M.J.; Kuester, F.; Chang, B.J.; Hutchinson, T.C. Terrestrial laser scanning-based structural damage assessment. J. Comput. Civ. Eng. 2010, 24, 264–272.

- Suchocki, C.; Jagoda, M.; Obuchovski, R.; Šlikas, D.; Sužiedelytė-Visockienė, J. The properties of terrestrial laser system intensity in measurements of technical conditions of architectural structures. Metrol. Meas. Syst. 2018, 25, 779–792.

- Song, M.; Yousefianmoghadam, S.; Mohammadi, M.E.; Moaveni, B.; Stavridis, A.; Wood, R.L. An application of finite element model updating for damage assessment of a two-story reinforced concrete building and comparison with lidar. Struct. Health Monit. 2018, 17, 1129–1150.

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

- Yu, B.; Yin, H.; Zhu, Z. Spatio-Temporal Graph Convolutional Networks: A Deep Learning Framework for Traffic Forecasting. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI 2018, Stockholm, Sweden, 13–19 July 2018; Lang, J., Ed.; pp. 3634–3640.

- Monti, F.; Bronstein, M.; Bresson, X. Geometric matrix completion with recurrent multi-graph neural networks. Adv. Neural Inf. Process. Syst. 2017. Available online: https://arxiv.org/abs/1704.06803 (accessed on 1 April 2021).

- Ying, R.; He, R.; Chen, K.; Eksombatchai, P.; Hamilton, W.L.; Leskovec, J. Graph Convolutional Neural Networks for Web-Scale Recommender Systems. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD 2018, London, UK, 19–23 August 2018; Guo, Y., Farooq, F., Eds.; ACM: New York, NY, USA, 2018; pp. 974–983.

- Gilmer, J.; Schoenholz, S.S.; Riley, P.F.; Vinyals, O.; Dahl, G.E. Neural Message Passing for Quantum Chemistry. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, Australia, 6–11 August 2017; Precup, D., Teh, Y.W., Eds.; Volume 70, pp. 1263–1272.

- Marcheggiani, D.; Titov, I. Encoding Sentences with Graph Convolutional Networks for Semantic Role Labeling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, EMNLP 2017, Copenhagen, Denmark, 9–11 September 2017; Palmer, M., Hwa, R., Riedel, S., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 1506–1515.

- Peng, H.; Li, J.; He, Y.; Liu, Y.; Bao, M.; Wang, L.; Song, Y.; Yang, Q. Large-scale hierarchical text classification with recursively regularized deep graph-cnn. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 1063–1072.

- Li, S.; Niu, J.; Li, Z. Novelty detection of cable-stayed bridges based on cable force correlation exploration using spatiotemporal graph convolutional networks. Struct. Health Monit. 2021.

- Caruana, R. Multitask learning. Mach. Learn. 1997, 28, 41–75.

- Yang, Y.B.; Shieh, M.S. Solution method for nonlinear problems with multiple critical points. AIAA J. 1990, 28, 2110–2116.

- Thai, H.T.; Kim, S.E. Nonlinear static and dynamic analysis of cable structures. Finite Elem. Anal. Des. 2011, 47, 237–246.

- Rumpf, H. The characteristics of systems and their changes of state disperse. In Particle Technology, Chapman and Hall; Springer: Berlin/Heidelberg, Germany, 1990; pp. 8–54.

- Chen, W.F.; Kim, S.E. LRFD Steel Design Using Advanced Analysis; CRC Press: Boca Raton, FL, USA, 1997; Volume 13.

- Kim, S.E.; Kim, M.K.; Chen, W.F. Improved refined plastic hinge analysis accounting for strain reversal. Eng. Struct. 2000, 22, 15–25.

- Cho, K.; van Merrienboer, B.; Gülçehre, Ç.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, EMNLP 2014, Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

- Vinyals, O.; Bengio, S.; Kudlur, M. Order Matters: Sequence to sequence for sets. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, 2–4 May 2016; Conference Track Proceedings. Bengio, Y., LeCun, Y., Eds.; 2016.

- Nazarian, E.; Ansari, F.; Zhang, X.; Taylor, T. Detection of tension loss in cables of cable-stayed bridges by distributed monitoring of bridge deck strains. J. Struct. Eng. 2016, 142, 04016018.

- Zhang, L.; Qiu, G.; Chen, Z. Structural health monitoring methods of cables in cable-stayed bridge: A review. Measurement 2021, 168, 108343.

- Zhang, J.; Au, F. Effect of baseline calibration on assessment of long-term performance of cable-stayed bridges. Eng. Fail. Anal. 2013, 35, 234–246.

- Ho, H.N.; Kim, K.D.; Park, Y.S.; Lee, J.J. An efficient image-based damage detection for cable surface in cable-stayed bridges. NDT E Int. 2013, 58, 18–23.

- Hassona, F.; Hashem, M.D.; Abdelmalak, R.I.; Hakeem, B.M. Bumps at bridge approaches: Two case studies for bridges at El-Minia Governorate, Egypt. In International Congress and Exhibition “Sustainable Civil Infrastructures: Innovative Infrastructure Geotechnology”; Springer: Berlin/Heidelberg, Germany, 2017; pp. 265–280.

- Thai, H.T.; Kim, S.E. Large deflection inelastic analysis of space trusses using generalized displacement control method. J. Constr. Steel Res. 2009, 65, 1987–1994.

- Shuman, D.I.; Narang, S.K.; Frossard, P.; Ortega, A.; Vandergheynst, P. The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains. IEEE Signal Process. Mag. 2013, 30, 83–98.

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for hyper-parameter optimization. In Proceedings of the 25th annual conference on neural information processing systems (NIPS 2011), Neural Information Processing Systems Foundation, Granada, Spain, 12–14 December 2011; Volume 24.

- Li, L.; Jamieson, K.G.; Rostamizadeh, A.; Gonina, E.; Ben-tzur, J.; Hardt, M.; Recht, B.; Talwalkar, A. A System for Massively Parallel Hyperparameter Tuning. In Proceedings of the Machine Learning and Systems 2020, MLSys 2020, Austin, TX, USA, 2–4 March 2020.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).