A Supervised Video Hashing Method Based on a Deep 3D Convolutional Neural Network for Large-Scale Video Retrieval

Abstract

1. Introduction

- (1)

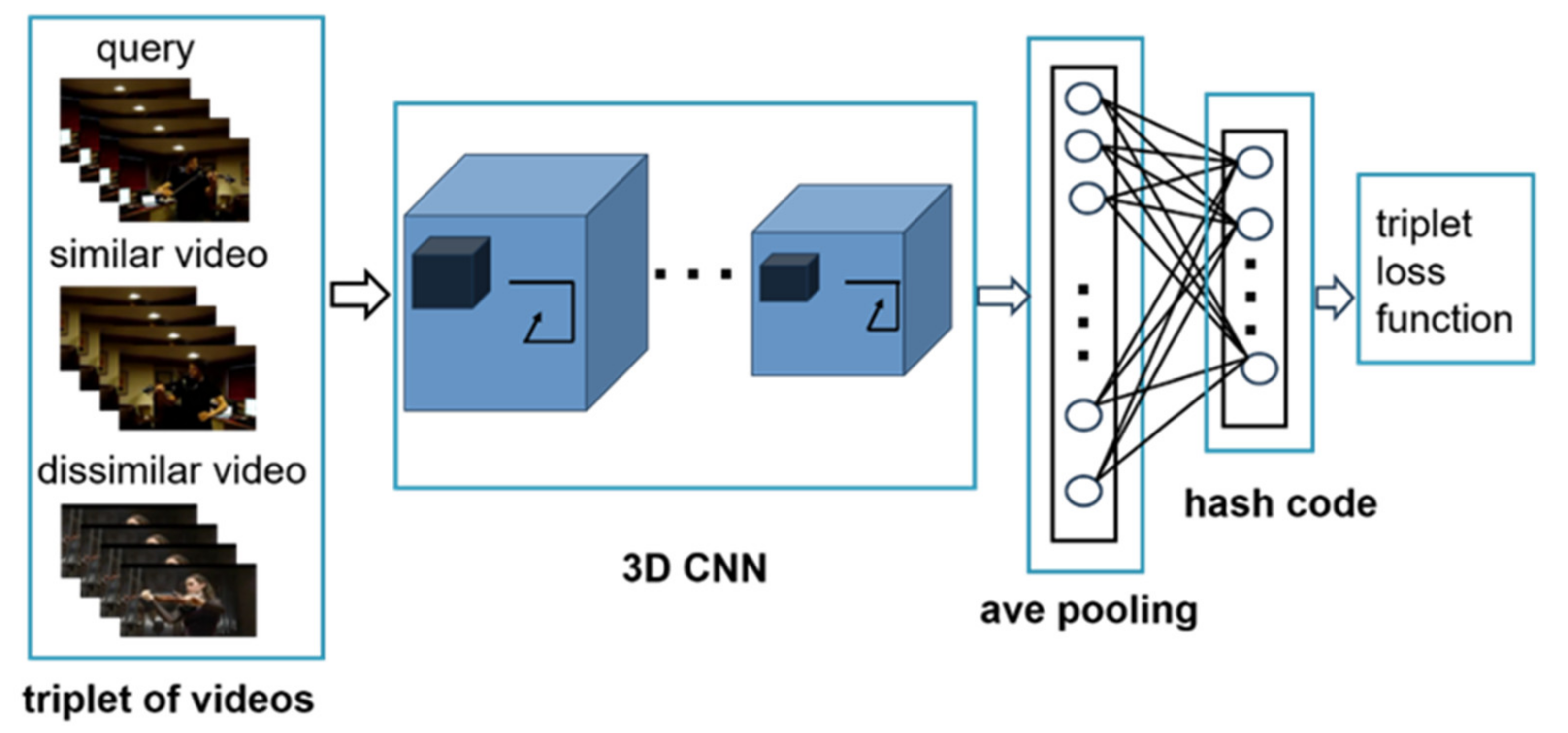

- We design an end-to-end framework for fast video retrieval using the idea of a Deep Supervised Video Hashing. By learning a set of hash functions to transfer video features extract by 3D CNN to a binary space.

- (2)

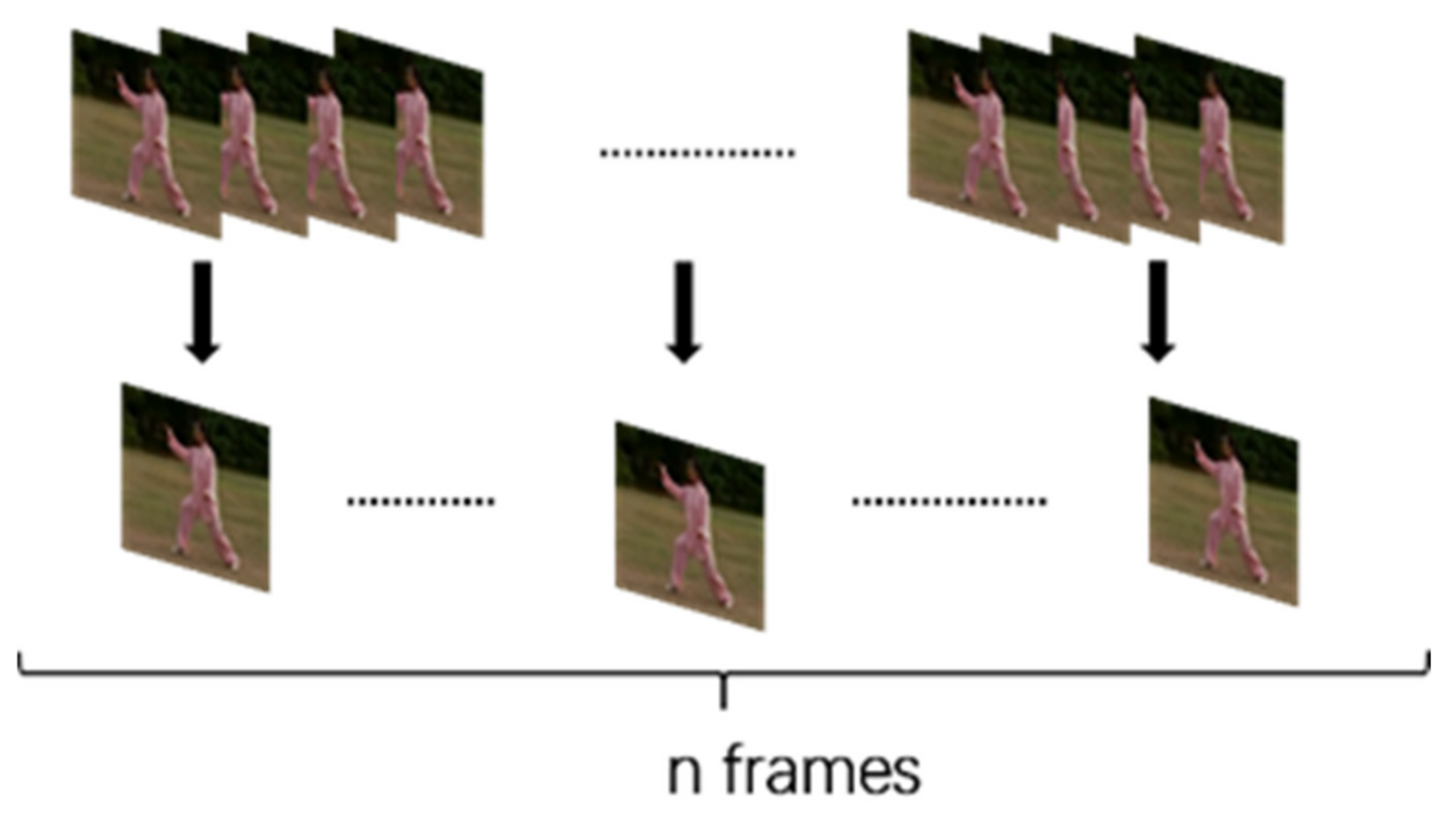

- We choose a fixed number of frames for each video to represent the characteristics of the entire video, which can greatly reduce the computation.

- (3)

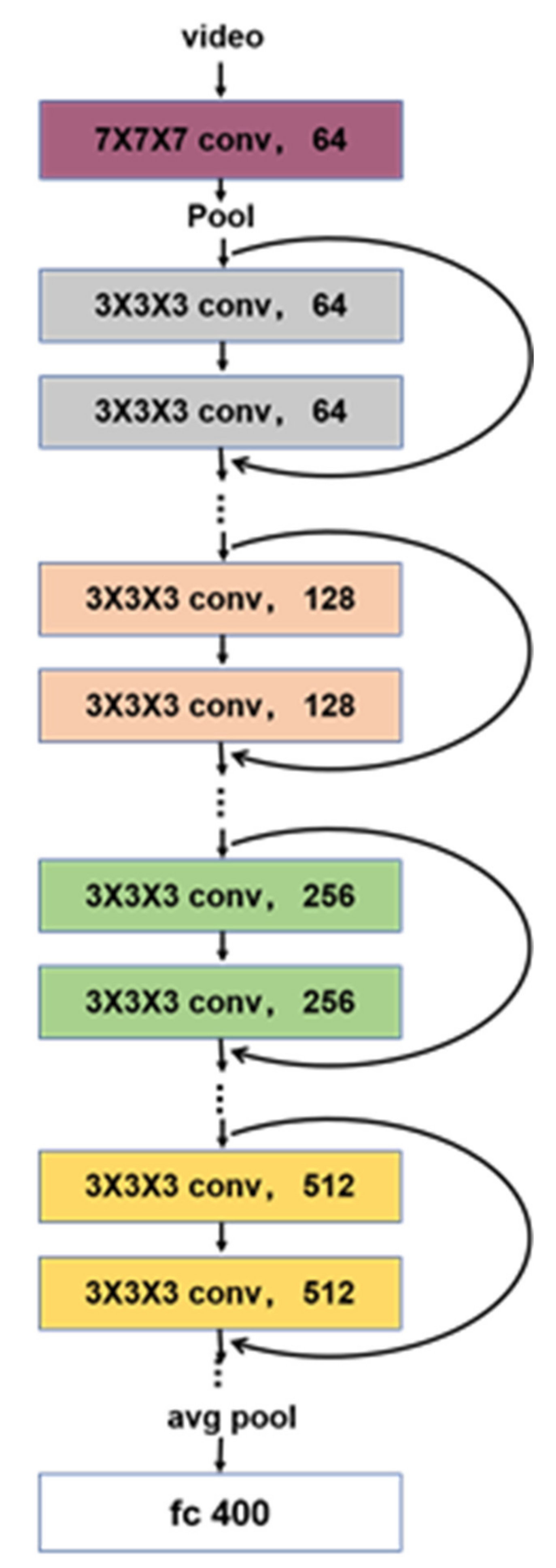

- We apply the idea of transfer learning and use a 3D CNN model with residual links pre-trained on large-scale video dataset to obtain the spatial-temporal features in videos.

- (4)

- We conduct a great quantity of experiments over three datasets to demonstrate that the proposed method outperforms many state-of-the-art methods.
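Contribution (1) relies on the fact that binary codes can be compared with cheap bitwise operations. The sketch below is a minimal illustration, not the authors' code: it packs ±1 hash codes into Python integers and ranks a database by Hamming distance using XOR and a bit count. The function names `pack_code`, `hamming`, and `rank_by_hamming` are hypothetical.

```python
from typing import List, Sequence


def pack_code(code: Sequence[int]) -> int:
    """Pack a code of +1/-1 values into an int, one bit per element (bit set for +1)."""
    value = 0
    for i, c in enumerate(code):
        if c > 0:
            value |= 1 << i
    return value


def hamming(a: int, b: int) -> int:
    """Hamming distance between two packed codes: the number of differing bits."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query: int, database: List[int]) -> List[int]:
    """Database indices sorted by Hamming distance to the query (stable, so ties keep index order)."""
    return sorted(range(len(database)), key=lambda i: hamming(query, database[i]))


database = [pack_code([1, 1, -1, -1]), pack_code([-1, 1, -1, 1]), pack_code([1, 1, -1, 1])]
query = pack_code([1, 1, -1, -1])
print(rank_by_hamming(query, database))  # [0, 2, 1]
```

The 16- to 256-bit codes evaluated in the experiments fit in at most four 64-bit words, so each comparison costs only a few XOR and population-count operations; on Python 3.10+ `int.bit_count()` can replace the `bin(...).count("1")` idiom.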

2. Related Works

2.1. 2D Convolutional Neural Network

2.2. 3D Convolutional Neural Network

2.3. Hashing

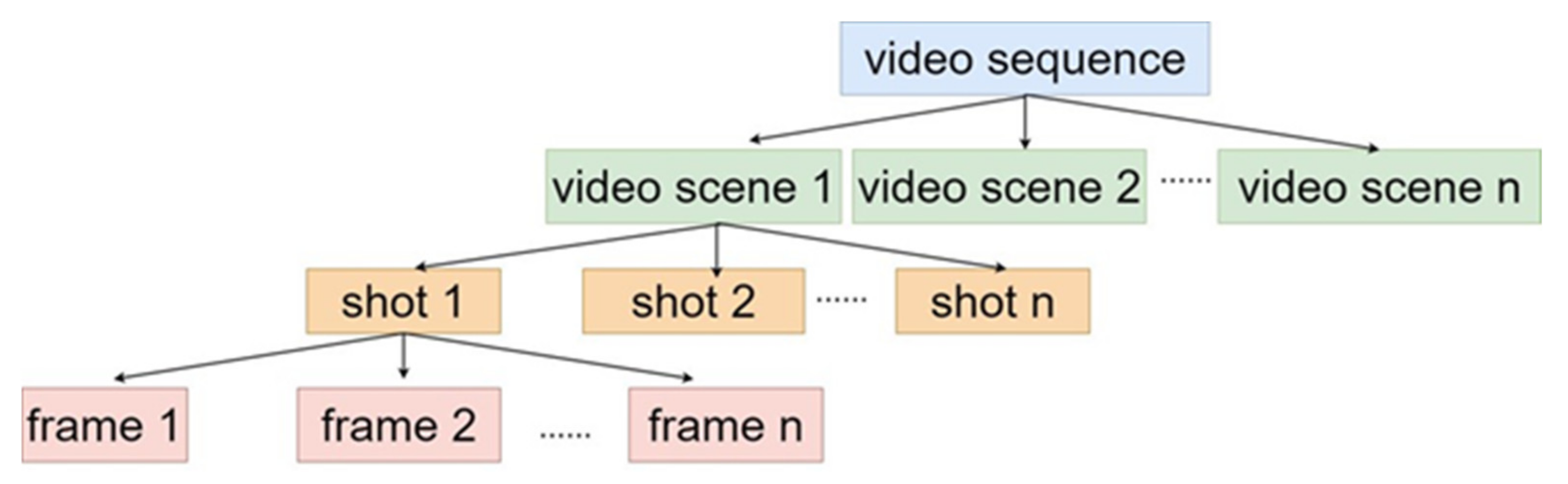

2.4. Video Retrieval

3. Proposed Approach

3.1. Feature Extraction

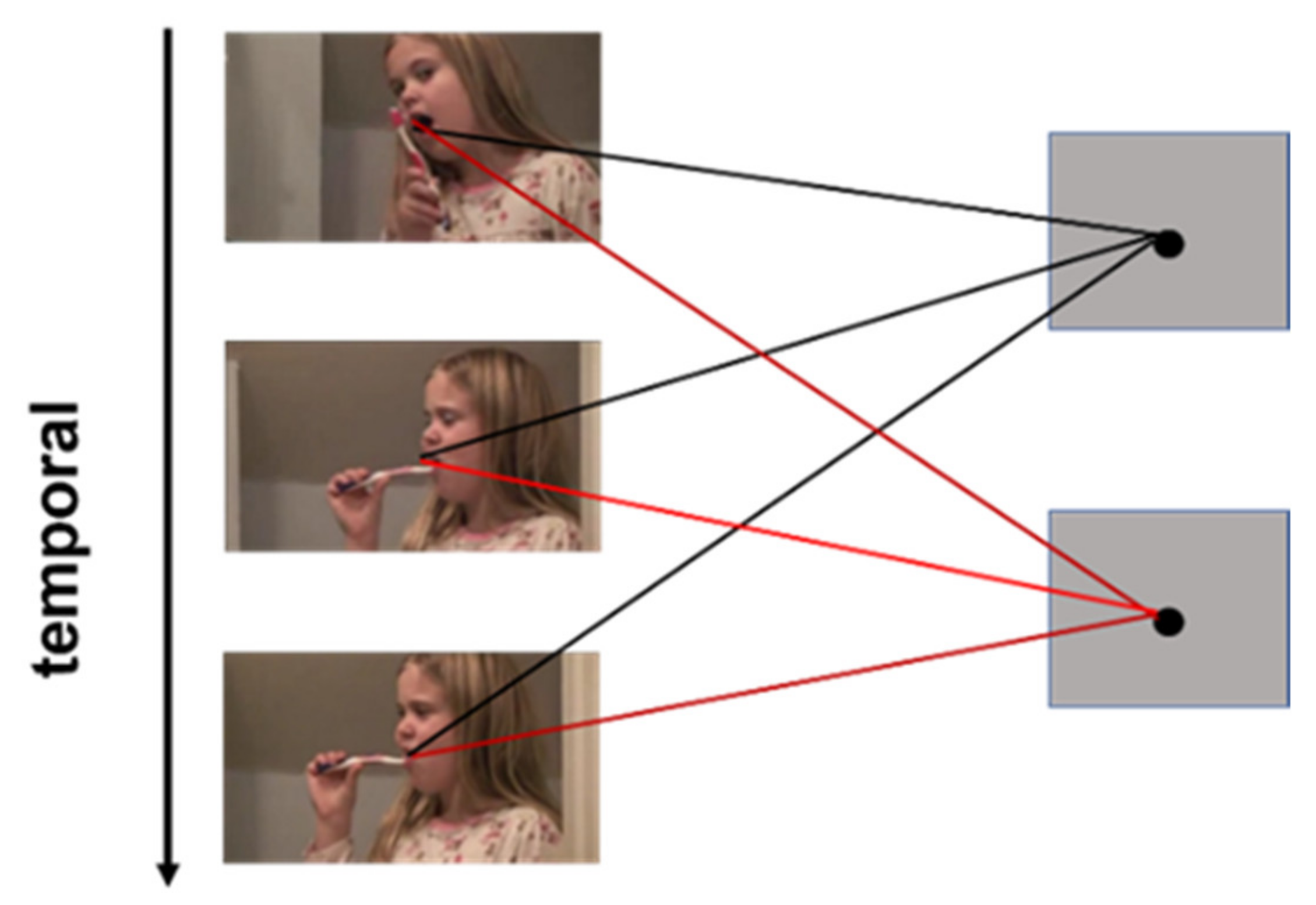

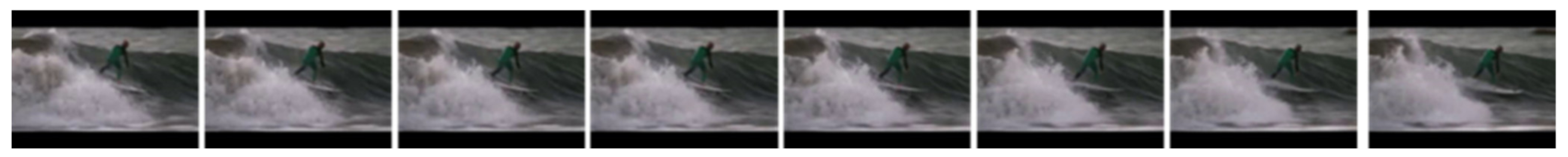

3.1.1. Frame Selection
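Contribution (2) represents each video by a fixed number of frames, and the HMDB-51 results compare 12, 16, and 20 frames, with 16 giving the highest mAP. The exact selection rule is not given here, so the sketch below assumes uniform temporal sampling at segment centres, a common choice; `select_frame_indices` is a hypothetical helper.

```python
from typing import List


def select_frame_indices(num_frames: int, clip_len: int = 16) -> List[int]:
    """Pick clip_len frame indices spread uniformly over a video of num_frames frames.

    Assumption: the video is split into clip_len equal segments and the centre
    frame of each segment is taken; short videos repeat frames so that every
    clip has exactly clip_len entries.
    """
    if num_frames <= 0:
        raise ValueError("video must contain at least one frame")
    step = num_frames / clip_len
    return [min(int(step * i + step / 2), num_frames - 1) for i in range(clip_len)]


print(select_frame_indices(160))  # centres of 16 segments of 10 frames each
print(select_frame_indices(8))    # a short video: each frame is used twice
```

Fixing `clip_len` gives every video the same input shape for the 3D CNN, which is what keeps the cost per video constant regardless of its length.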

3.1.2. Feature Extraction
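The 3D ResNet layer table lists Conv1 with stride 1 in time and 2 in space, a stride-2 max pool, stages Conv2_x to Conv5_x, and a final average pool with a 400-d fc layer and softmax. The arithmetic below traces how the feature map shrinks through these stages. It assumes the kernel sizes and padding of the 3D ResNet of Hara et al. [52] (7×7×7 Conv1 with padding 3, 3×3×3 max pool with padding 1, and a stride-2 3×3×3 convolution with padding 1 at the start of Conv3_x to Conv5_x) and a 16-frame 112×112 input; none of these values is stated in this section.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int, pad: int) -> int:
    """Standard output-size formula for a convolution or pooling window along one axis."""
    return (size + 2 * pad - kernel) // stride + 1


def trace_3d_resnet(t: int = 16, xy: int = 112) -> List[Tuple[str, int, int]]:
    """Return (stage, temporal size, spatial size) after each stage, under the stated assumptions."""
    shapes = []
    # Conv1: 7x7x7 kernel, stride 1 (T) and 2 (XY), padding 3 (assumed)
    t, xy = conv_out(t, 7, 1, 3), conv_out(xy, 7, 2, 3)
    shapes.append(("conv1", t, xy))
    # Max pool: 3x3x3 window, stride 2, padding 1 (assumed); Conv2_x keeps this size
    t, xy = conv_out(t, 3, 2, 1), conv_out(xy, 3, 2, 1)
    shapes.append(("conv2_x", t, xy))
    # Conv3_x to Conv5_x each open with a stride-2 3x3x3 convolution, padding 1 (assumed)
    for stage in ("conv3_x", "conv4_x", "conv5_x"):
        t, xy = conv_out(t, 3, 2, 1), conv_out(xy, 3, 2, 1)
        shapes.append((stage, t, xy))
    return shapes


for name, t, xy in trace_3d_resnet():
    print(f"{name}: {t} x {xy} x {xy}")
```

Under these assumptions Conv5_x outputs a 1 × 4 × 4 map (time × height × width), which the average pool reduces to a single feature vector per clip; that vector is what a hash layer would consume.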

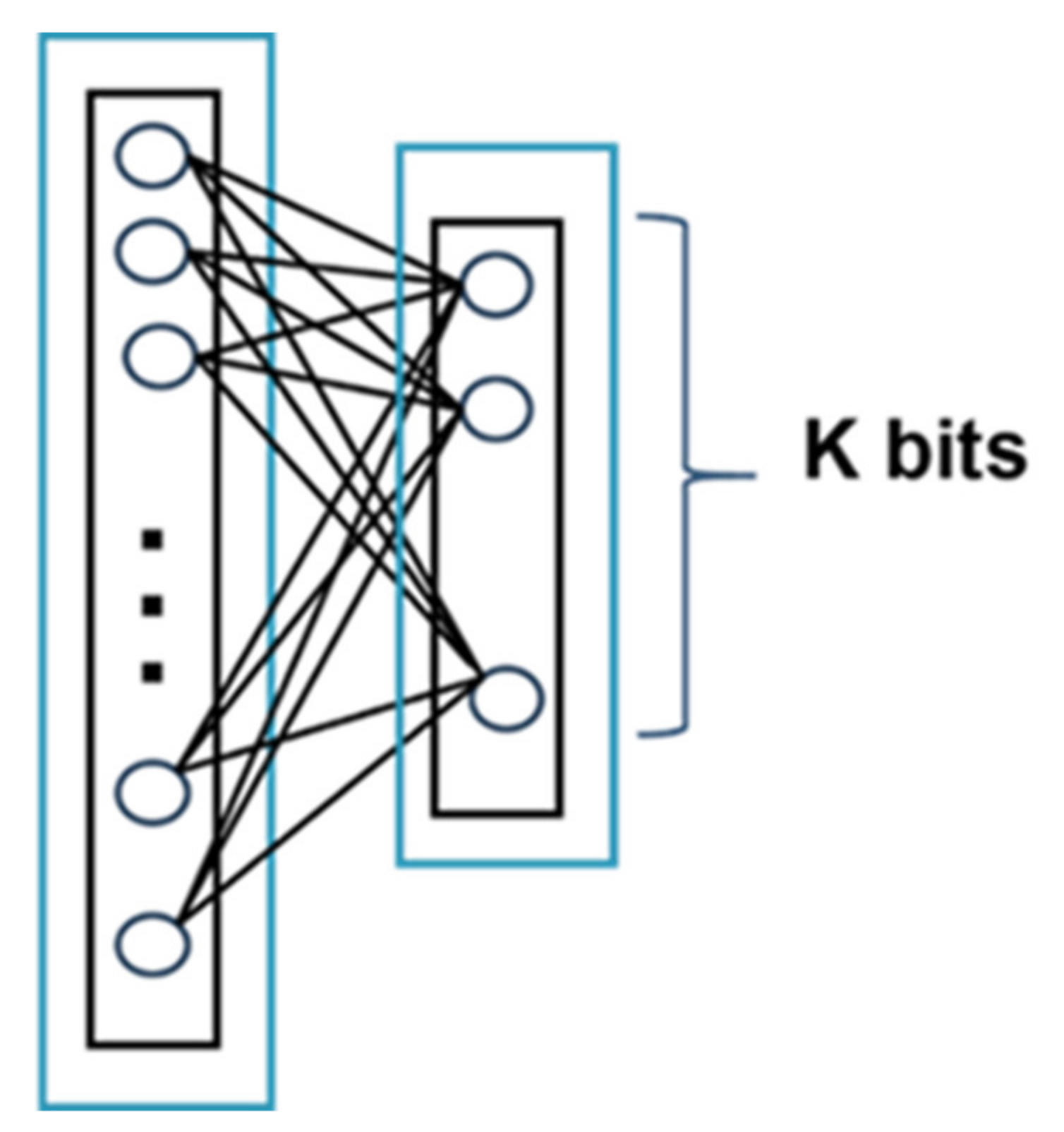

3.2. Hash Layer
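As a minimal sketch of what a hash layer computes, the code below assumes a design common in deep hashing rather than the confirmed DSVH layer: a fully connected projection followed by tanh during training (a differentiable relaxation of the sign function), and the sign function at retrieval time to produce codes in {-1, +1}. The 512-d input (the final channel width of a 3D ResNet-18/34), the random weights, and the names `hash_layer` and `binarize` are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence


def hash_layer(feature: Sequence[float], weights: List[List[float]], bias: Sequence[float]) -> List[float]:
    """Relaxed codes in (-1, 1): tanh(W x + b), one output per hash bit."""
    return [math.tanh(sum(w * x for w, x in zip(row, feature)) + b)
            for row, b in zip(weights, bias)]


def binarize(relaxed: Sequence[float]) -> List[int]:
    """Binary code in {-1, +1}; zero maps to +1 by convention (an assumption)."""
    return [1 if v >= 0 else -1 for v in relaxed]


random.seed(0)
feat_dim, n_bits = 512, 64  # assumed 512-d pooled feature, 64-bit code
W = [[random.gauss(0.0, 0.05) for _ in range(feat_dim)] for _ in range(n_bits)]
b = [0.0] * n_bits
feature = [random.random() for _ in range(feat_dim)]
code = binarize(hash_layer(feature, W, b))
print(len(code), set(code) <= {-1, 1})  # 64 True
```

Training on the tanh outputs keeps gradients usable, while thresholding only at retrieval time yields the compact binary codes compared by Hamming distance.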

3.3. Loss Function
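Because the loss is built on triplets (Section 3.3.1), a standard margin-based triplet loss over relaxed hash codes is sketched below. This is an assumption for illustration: the exact distance, margin value, and any additional quantization term used by DSVH may differ, and the default `margin=1.0` is hypothetical.

```python
from typing import Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two (relaxed) codes."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 1.0) -> float:
    """Hinge loss: zero once the negative is farther from the anchor than the positive by at least margin."""
    d_ap = squared_distance(anchor, positive)
    d_an = squared_distance(anchor, negative)
    return max(0.0, d_ap - d_an + margin)


print(triplet_loss([1, 1], [1, 0.5], [-1, 1]))  # 0.0: already separated
print(triplet_loss([1, 1], [-1, 1], [1, 0.5]))  # 4.75: the negative is too close
```

For codes in {-1, +1}^K the squared Euclidean distance equals four times the Hamming distance, so pushing relaxed codes apart in Euclidean space also separates the final binary codes in Hamming space.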

3.3.1. Triplet Selection
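As a hedged illustration of triplet selection, the sketch below enumerates label-consistent (anchor, positive, negative) triplets within a mini-batch and keeps only those that still violate the margin, so that training focuses on informative triplets. This is one common online strategy, not necessarily the one used by DSVH; `select_triplets` and the default margin are hypothetical.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two codes."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def select_triplets(codes: List[Sequence[float]], labels: List[int],
                    margin: float = 1.0) -> List[Tuple[int, int, int]]:
    """Return (anchor, positive, negative) index triplets whose hinge loss is non-zero."""
    triplets = []
    for a, p in permutations(range(len(codes)), 2):
        if labels[a] != labels[p]:
            continue  # the positive must share the anchor's class label
        d_ap = sq_dist(codes[a], codes[p])
        for n in range(len(codes)):
            if labels[n] == labels[a]:
                continue  # the negative must come from a different class
            if d_ap - sq_dist(codes[a], codes[n]) + margin > 0:
                triplets.append((a, p, n))
    return triplets


# Well-separated classes produce no violating triplets.
print(select_triplets([[1, 1], [1, 1], [-1, -1], [-1, -1]], [0, 0, 1, 1]))  # []
```

The exhaustive loop is cubic in the batch size, which is acceptable for small mini-batches; larger batches usually keep only the hardest negative per anchor-positive pair.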

4. Experiments

4.1. Datasets and Pre-Trained Model

- UCF-101 Dataset [54]

- JHMDB Dataset [55]

- HMDB-51 Dataset [56]

- Pre-trained model

4.2. Experimental Settings and Evaluation Metrics

4.2.1. Experimental Settings

4.2.2. Evaluation Metrics

- Mean Average Precision (mAP)

- Precision@N
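Both metrics can be computed from the ranked list returned for each query, treating a retrieved video as relevant when it shares the query's class label (the usual convention for single-label action datasets, assumed here). The sketch below computes average precision (AP) over a ranked list and the precision of the top N results; mAP is the mean AP over all queries. AP is normalized by the number of relevant items found in the list, which equals the total number of relevant items when the whole database is ranked.

```python
from typing import List, Sequence


def average_precision(relevant: Sequence[bool]) -> float:
    """AP of one ranked list: mean of precision@k over the ranks k that hold a relevant item."""
    hits, precision_sum = 0, 0.0
    for k, rel in enumerate(relevant, start=1):
        if rel:
            hits += 1
            precision_sum += hits / k
    return precision_sum / hits if hits else 0.0


def precision_at_n(relevant: Sequence[bool], n: int) -> float:
    """Fraction of the top-n results that are relevant."""
    return sum(relevant[:n]) / n


def mean_average_precision(rankings: List[Sequence[bool]]) -> float:
    """mAP: mean of the per-query average precision."""
    return sum(average_precision(r) for r in rankings) / len(rankings)


ranking = [True, False, True, False]
print(average_precision(ranking))  # (1/1 + 2/3) / 2
print(precision_at_n(ranking, 2))  # 0.5
```

Precision@N looks only at the head of the ranking (N = 10, 20, 30, 50, or 100 in the experiments), whereas mAP also rewards placing the remaining relevant videos early.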

4.3. Experimental Results

4.3.1. Experimental Results on UCF-101

4.3.2. Experimental Results on JHMDB

4.3.3. Experimental Results on HMDB-51

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Douze, M.; Jégou, H.; Schmid, C. An image-based approach to video copy detection with spatio-temporal post-filtering. IEEE Trans. Multimed. 2010, 12, 257–266.

- [2] Deldjoo, Y.; Elahi, M.; Cremonesi, P.; Garzotto, F.; Piazzolla, P.; Quadrana, M. Content-based video recommendation system based on stylistic visual features. J. Data Semant. 2016, 5, 99–113.

- [3] Dong, Y.; Li, J. Video Retrieval based on Deep Convolutional Neural Network. In Proceedings of the 3rd International Conference on Multimedia Systems and Signal Processing (ICMSSP), Shenzhen, China, 28 April 2018; pp. 12–16.

- [4] Muranoi, R.; Zhao, J.; Hayasaka, R.; Ito, M.; Matsushita, Y. Video retrieval method using shotID for copyright protection systems. In Proceedings of the Multimedia Storage and Archiving Systems III, Boston, MA, USA, 5 October 1998; pp. 245–252.

- [5] Shang, L.; Yang, L.; Wang, F.; Chan, K.P.; Hua, X.S. Real-Time Large Scale Near-Duplicate Web Video Retrieval. In Proceedings of the 18th ACM International Conference on Multimedia (ACM MM), Firenze, Italy, 25–29 October 2010; pp. 531–540.

- [6] Wu, X.; Hauptmann, A.G.; Ngo, C.W. Practical Elimination of Near-Duplicates from Web Video Search. In Proceedings of the 15th ACM International Conference on Multimedia (ACM MM), Augsburg, Bavaria, Germany, 24–29 September 2007; pp. 218–227.

- [7] Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- [8] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- [9] Xie, L.; Lee, F.; Liu, L.; Yin, Z.; Chen, Q. Hierarchical coding of convolutional features for scene recognition. IEEE Trans. Multimed. 2020, 22, 1182–1192.

- [10] Chen, L.; Bo, K.; Lee, F.; Chen, Q. Advanced feature fusion algorithm based on multiple convolutional neural network for scene recognition. Comput. Model. Eng. Sci. 2020, 122, 505–523.

- [11] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montreal, Canada, 11–12 December 2015; pp. 91–99.

- [12] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- [13] Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 4690–4699.

- [14] Xia, R.; Pan, Y.; Lai, H.; Liu, C.; Yan, S. Supervised Hashing for Image Retrieval via Image Representation Learning. In Proceedings of the 28th AAAI Conference on Artificial Intelligence, Quebec City, QC, Canada, 27–31 July 2014.

- [15] Zhao, F.; Huang, Y.; Wang, L.; Tan, T. Deep Semantic Ranking based Hashing for Multi-Label Image Retrieval. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1556–1564.

- [16] Lu, C.; Lee, F.; Chen, L.; Huang, S.; Chen, Q. IDSH: An improved deep supervised hashing method for image retrieval. Comput. Model. Eng. Sci. 2019, 121, 593–608.

- [17] Tan, H.K.; Ngo, C.W.; Hong, R.; Chua, T.S. Scalable Detection of Partial Near-Duplicate Videos by Visual-Temporal Consistency. In Proceedings of the 17th ACM International Conference on Multimedia (ACM MM), Beijing, China, 19–24 October 2009; pp. 145–154.

- [18] Douze, M.; Jégou, H.; Schmid, C.; Pérez, P. Compact Video Description for Copy Detection with Precise Temporal Alignment. In Proceedings of the European Conference on Computer Vision (ECCV), Crete, Greece, 5–11 September 2010; pp. 522–535.

- [19] Baraldi, L.; Douze, M.; Cucchiara, R.; Jégou, H. LAMV: Learning to align and match videos with kernelized temporal layers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7804–7813.

- [20] Kordopatis-Zilos, G.; Papadopoulos, S.; Patras, I.; Kompatsiaris, I. ViSiL: Fine-Grained Spatio-Temporal Video Similarity Learning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 6351–6360.

- [21] Hu, Y.; Lu, X. Learning spatial-temporal features for video copy detection by the combination of CNN and RNN. J. Vis. Commun. Image Represent. 2018, 55, 21–29.

- [22] Lin, K.; Yang, H.F.; Hsiao, J.H.; Chen, C.S. Deep Learning of Binary Hash Codes for Fast Image Retrieval. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 27–35.

- [23] Lai, H.; Pan, Y.; Liu, Y.; Yan, S. Simultaneous Feature Learning and Hash Coding with Deep Neural Networks. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3270–3278.

- [24] Wang, X.; Lee, F.; Chen, Q. Similarity-preserving hashing based on deep neural networks for large-scale image retrieval. J. Vis. Commun. Image Represent. 2019, 61, 260–271.

- [25] Wang, L.; Qian, X.; Zhang, Y.; Shen, J.; Cao, X. Enhancing sketch-based image retrieval by CNN semantic re-ranking. IEEE Trans. Cybern. 2019, 50, 3330–3342.

- [26] Song, J.; Yang, Y.; Huang, Z.; Shen, H.T.; Hong, R. Multiple Feature Hashing for Real-Time Large Scale Near-Duplicate Video Retrieval. In Proceedings of the 19th ACM International Conference on Multimedia (ACM MM), Scottsdale, AZ, USA, 28 November–1 December 2011; pp. 423–432.

- [27] Shen, L.; Hong, R.; Zhang, H.; Tian, X.; Wang, M. Video retrieval with similarity-preserving deep temporal hashing. ACM Trans. Multimed. Comput. Commun. Appl. 2019, 15, 1–16.

- [28] Anuranji, R.; Srimathi, H. A supervised deep convolutional based bidirectional long short term memory video hashing for large scale video retrieval applications. Digit. Signal Process. 2020, 102, 102729.

- [29] Jiang, Y.G.; Wang, J. Partial copy detection in videos: A benchmark and an evaluation of popular methods. IEEE Trans. Big Data 2016, 2, 32–42.

- [30] Wang, L.; Bao, Y.; Li, H.; Fan, X.; Luo, Z. Compact CNN based Video Representation for Efficient Video Copy Detection. In Proceedings of the International Conference on Multimedia Modeling (MMM), Reykjavik, Iceland, 4–6 January 2017; pp. 576–587.

- [31] Kordopatis-Zilos, G.; Papadopoulos, S.; Patras, I.; Kompatsiaris, Y. Near-Duplicate Video Retrieval with Deep Metric Learning. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 347–356.

- [32] Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231.

- [33] Huang, X.; Shan, J.; Vaidya, V. Lung Nodule Detection in CT Using 3D Convolutional Neural Networks. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI), Melbourne, VIC, Australia, 18–21 April 2017; pp. 379–383.

- [34] Molchanov, P.; Gupta, S.; Kim, K.; Kautz, J. Hand Gesture Recognition with 3D Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 1–7.

- [35] Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 13–16 December 2015; pp. 4489–4497.

- [36] Qiu, Z.; Yao, T.; Mei, T. Learning Spatio-Temporal Representation with Pseudo-3D Residual Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5533–5541.

- [37] Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

- [38] Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A Closer Look at Spatiotemporal Convolutions for Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6450–6459.

- [39] Liu, W.; Wang, J.; Ji, R.; Jiang, Y.G.; Chang, S.F. Supervised hashing with kernels. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 2074–2081.

- [40] Norouzi, M.; Fleet, D.J. Minimal Loss Hashing for Compact Binary Codes. In Proceedings of the 28th International Conference on Machine Learning (ICML), Bellevue, WA, USA, 28 June–2 July 2011; pp. 353–360.

- [41] Gionis, A.; Indyk, P.; Motwani, R. Similarity Search in High Dimensions via Hashing. In Proceedings of the 25th International Conference on Very Large Data Bases (VLDB), Edinburgh, Scotland, UK, 7–10 September 1999; pp. 518–529.

- [42] Gong, Y.; Lazebnik, S.; Gordo, A.; Perronnin, F. Iterative quantization: A procrustean approach to learning binary codes for large-scale image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2916–2929.

- [43] Zhu, L.; Shen, J.; Xie, L.; Cheng, Z. Unsupervised topic hypergraph hashing for efficient mobile image retrieval. IEEE Trans. Cybern. 2016, 47, 3941–3954.

- [44] Zhu, L.; Shen, J.; Xie, L.; Cheng, Z. Unsupervised visual hashing with semantic assistant for content-based image retrieval. IEEE Trans. Knowl. Data Eng. 2016, 29, 472–486.

- [45] Song, J.; Zhang, H.; Li, X.; Gao, L.; Wang, M.; Hong, R. Self-supervised video hashing with hierarchical binary auto-encoder. IEEE Trans. Image Process. 2018, 27, 3210–3221.

- [46] Wu, G.; Han, J.; Guo, Y.; Liu, L.; Ding, G.; Ni, Q.; Shao, L. Unsupervised deep video hashing via balanced code for large-scale video retrieval. IEEE Trans. Image Process. 2018, 28, 1993–2007.

- [47] Galanopoulos, D.; Mezaris, V. Attention Mechanisms, Signal Encodings and Fusion Strategies for Improved Ad-Hoc Video Search with Dual Encoding Networks. In Proceedings of the 2020 International Conference on Multimedia Retrieval (ICMR), Dublin, Ireland, 8–11 June 2020; pp. 336–340.

- [48] Prathiba, T.; Kumari, R.S.S. Content based video retrieval system based on multimodal feature grouping by KFCM clustering algorithm to promote human–computer interaction. J. Ambient Intell. Humaniz. Comput. 2020, 1–15.

- [49] Li, S.; Chen, Z.; Lu, J.; Li, X.; Zhou, J. Neighborhood Preserving Hashing for Scalable Video Retrieval. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 8212–8221.

- [50] Kumar, V.; Tripathi, V.; Pant, B. Learning compact spatio-temporal features for fast content based video retrieval. Int. J. Innov. Tech. Explor. Eng. 2019, 9, 2402–2409.

- [51] Yuan, L.; Wang, T.; Zhang, X.; Tay, F.E.; Jie, Z.; Liu, W.; Feng, J. Central Similarity Quantization for Efficient Image and Video Retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3083–3092.

- [52] Hara, K.; Kataoka, H.; Satoh, Y. Learning Spatio-Temporal Features with 3D Residual Networks for Action Recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 3154–3160.

- [53] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [54] Soomro, K.; Zamir, A.R.; Shah, M. A dataset of 101 human action classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

- [55] Jhuang, H.; Gall, J.; Zuffi, S.; Schmid, C.; Black, M.J. Towards Understanding Action Recognition. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013; pp. 3192–3199.

- [56] Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A Large Video Database for Human Motion Recognition. In Proceedings of the 2011 International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2556–2563.

- [57] Liong, V.E.; Lu, J.; Tan, Y.P.; Zhou, J. Deep video hashing. IEEE Trans. Multimed. 2017, 19, 1209–1219.

| Layer Name | Architecture |
|---|---|
| Conv1 | stride 1 (T), 2 (XY) |
| | max pool, stride 2 |
| Conv2_x | |
| Conv3_x | |
| Conv4_x | |
| Conv5_x | |
| | Average pool, 400-d fc, softmax |

| Datasets | Training Set | Test Set | Gallery Set |
|---|---|---|---|
| UCF-101 | 9537 | 3783 | - |
| JHMDB | 210 | 210 | 410 |
| HMDB-51 | 3570 | 1530 | - |

(a) Comparison Results of mAP on UCF-101 Dataset

| Methods | 64 Bits | 128 Bits | 256 Bits |
|---|---|---|---|
| ITQ | 0.294 | 0.305 | 0.306 |
| DVH | 0.705 | 0.712 | 0.729 |
| DCNNH | 0.747 | 0.783 | 0.796 |
| SPDTH | 0.741 | 0.750 | 0.762 |
| DSVH (ours) | 0.798 | 0.801 | 0.806 |

(b) Comparison Results of Precision@N (N = 30) on UCF-101 Dataset

| Methods | 64 Bits | 128 Bits | 256 Bits |
|---|---|---|---|
| ITQ | 0.389 | 0.419 | 0.432 |
| DVH | 0.734 | 0.744 | 0.756 |
| DCNNH | - | - | - |
| SPDTH | 0.751 | 0.757 | 0.765 |
| DSVH (ours) | 0.781 | 0.774 | 0.784 |

(c) Comparison Results of mAP with Different Network Depths (UCF-101 Dataset)

| Networks | 64 Bits | 128 Bits | 256 Bits |
|---|---|---|---|
| 3D ResNet-18 | 0.735 | 0.756 | 0.757 |
| 3D ResNet-34 | 0.798 | 0.801 | 0.806 |

(d) Influence of Hash Layer on mAP (UCF-101 Dataset)

| Networks | 64 Bits | 128 Bits | 256 Bits |
|---|---|---|---|
| With Hash Layer | 0.798 | 0.801 | 0.806 |
| Without Hash Layer | 0.752 | 0.787 | 0.785 |

(a) Comparison Results of mAP on JHMDB Dataset

| Methods | 16 Bits | 32 Bits | 64 Bits |
|---|---|---|---|
| DVH | 0.332 | 0.368 | 0.369 |
| ITQ | 0.132 | 0.145 | 0.151 |
| SPDTH | 0.386 | 0.438 | 0.464 |
| BIDLSTM | 0.410 | 0.455 | 0.482 |
| DSVH (ours) | 0.430 | 0.458 | 0.494 |

(b) Comparison Results of Precision@N (N = 20) on JHMDB Dataset

| Methods | 16 Bits | 32 Bits | 64 Bits |
|---|---|---|---|
| DVH | 0.342 | 0.368 | 0.382 |
| ITQ | 0.152 | 0.175 | 0.191 |
| SPDTH | 0.375 | 0.408 | 0.438 |
| BIDLSTM | - | - | - |
| DSVH (ours) | 0.397 | 0.430 | 0.469 |

(c) Comparison Results of mAP with Different Network Depths (JHMDB Dataset)

| Networks | 16 Bits | 32 Bits | 64 Bits |
|---|---|---|---|
| 3D ResNet-18 | 0.371 | 0.411 | 0.444 |
| 3D ResNet-34 | 0.397 | 0.430 | 0.469 |

(d) Influence of Hash Layer on mAP (JHMDB Dataset)

| Networks | 16 Bits | 32 Bits | 64 Bits |
|---|---|---|---|
| With Hash Layer | 0.397 | 0.430 | 0.469 |
| Without Hash Layer | 0.320 | 0.384 | 0.416 |

(a) Comparison Results of mAP on HMDB-51 Dataset

| Methods | 64 Bits | 128 Bits | 256 Bits |
|---|---|---|---|
| ITQ | 0.408 | 0.416 | 0.436 |
| DBH | 0.389 | 0.391 | 0.386 |
| DNNH | 0.487 | 0.503 | 0.493 |
| DCNNH | 0.458 | 0.451 | 0.467 |
| DSVH (ours) | 0.562 | 0.565 | 0.575 |

(b) Comparison of Precision@N for Different N on HMDB-51 Dataset

| Metric | 64 Bits | 128 Bits | 256 Bits |
|---|---|---|---|
| Precision@10 | 0.522 | 0.531 | 0.537 |
| Precision@50 | 0.513 | 0.519 | 0.530 |
| Precision@100 | 0.405 | 0.408 | 0.413 |

(c) Comparison of mAP with Different Numbers of Frames on HMDB-51 Dataset

| Frames | 64 Bits | 128 Bits | 256 Bits |
|---|---|---|---|
| 12 frames | 0.490 | 0.516 | 0.539 |
| 16 frames | 0.562 | 0.565 | 0.575 |
| 20 frames | 0.527 | 0.550 | 0.556 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, H.; Hu, C.; Lee, F.; Lin, C.; Yao, W.; Chen, L.; Chen, Q. A Supervised Video Hashing Method Based on a Deep 3D Convolutional Neural Network for Large-Scale Video Retrieval. Sensors 2021, 21, 3094. https://doi.org/10.3390/s21093094
